AFFPN: Attention Fusion Feature Pyramid Network for Small Infrared Target Detection

Abstract

1. Introduction

- (1) We propose AFFPN for single-frame small infrared target detection. On the publicly available SIRST dataset it outperforms existing methods, segments fine target details effectively, and is more robust against complex backgrounds.

- (2) We propose an attention fusion module that attends to the channel and spatial location information of different layers and uses global contextual information to fuse features. The module helps the network focus on the semantic and detailed information of small infrared targets and dynamically perceives small-target features at different network layers.

- (3) We deploy the proposed algorithm on an NVIDIA Jetson AGX Xavier development board and achieve real-time detection on 256 × 256-pixel images.

2. Related Work

2.1. Small Infrared Target Detection

2.2. Attention and Feature Fusion

3. Proposed Method

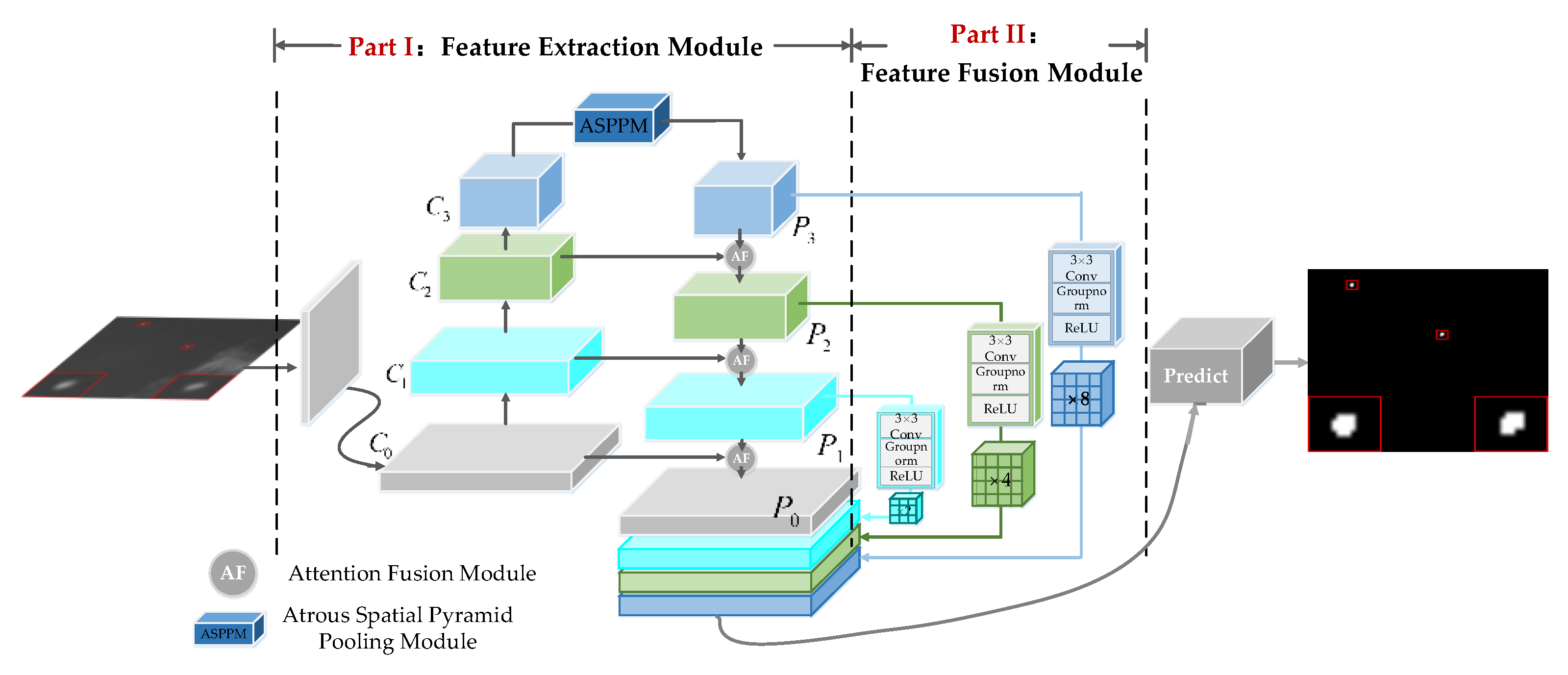

3.1. Network Architecture

3.2. Feature Extraction Module

3.2.1. ASPPM

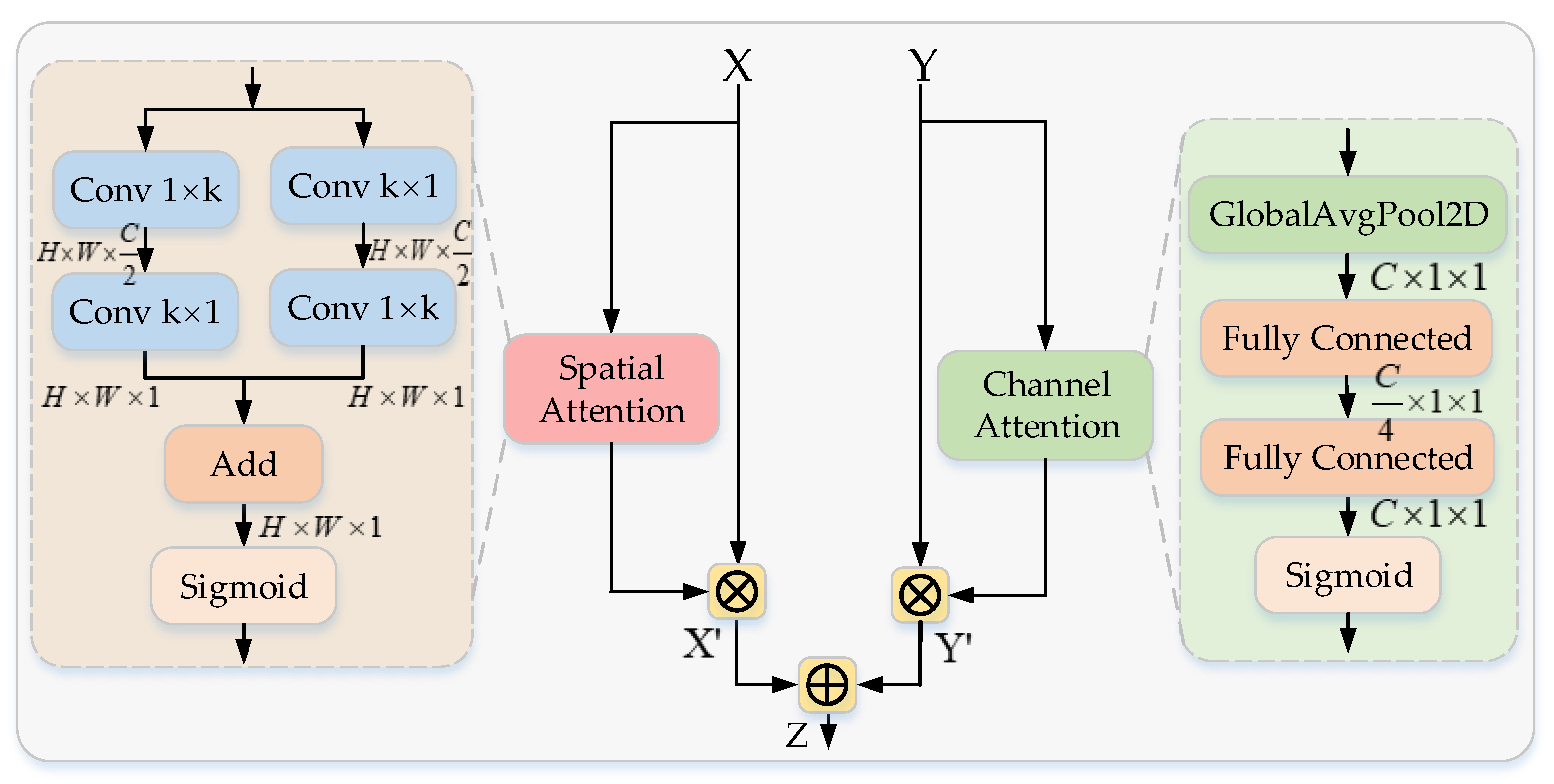

3.2.2. Attention Fusion Module

3.3. Feature Fusion Module

4. Experimental Evaluation

4.1. Evaluation Metrics

- (1)

- Mean intersection over union (mIoU): mIoU is the classical pixel-level semantic segmentation evaluation metric used to characterize the contour description capability of an algorithm. It is defined as the ratio of the intersection and concatenation area between predictions and labels, as follows:

- (2)

- Normalized IoU (nIoU): nIoU is an evaluation metric designed by [11] for small infrared target detection to better measure the segmentation performance of small targets and prevent the impact of the segmentation results of large targets on the overall evaluation metric. It is defined as follows, where TP, T, and P denote true positive, true, and positive, respectively:

- (3)

- F-measure: The F-measure is used to measure the relationship between precision and recall. Precision, recall, and the F-measure are defined as follows, where , and FP and FN denote the numbers of false positives and false negatives, respectively:

- (4)

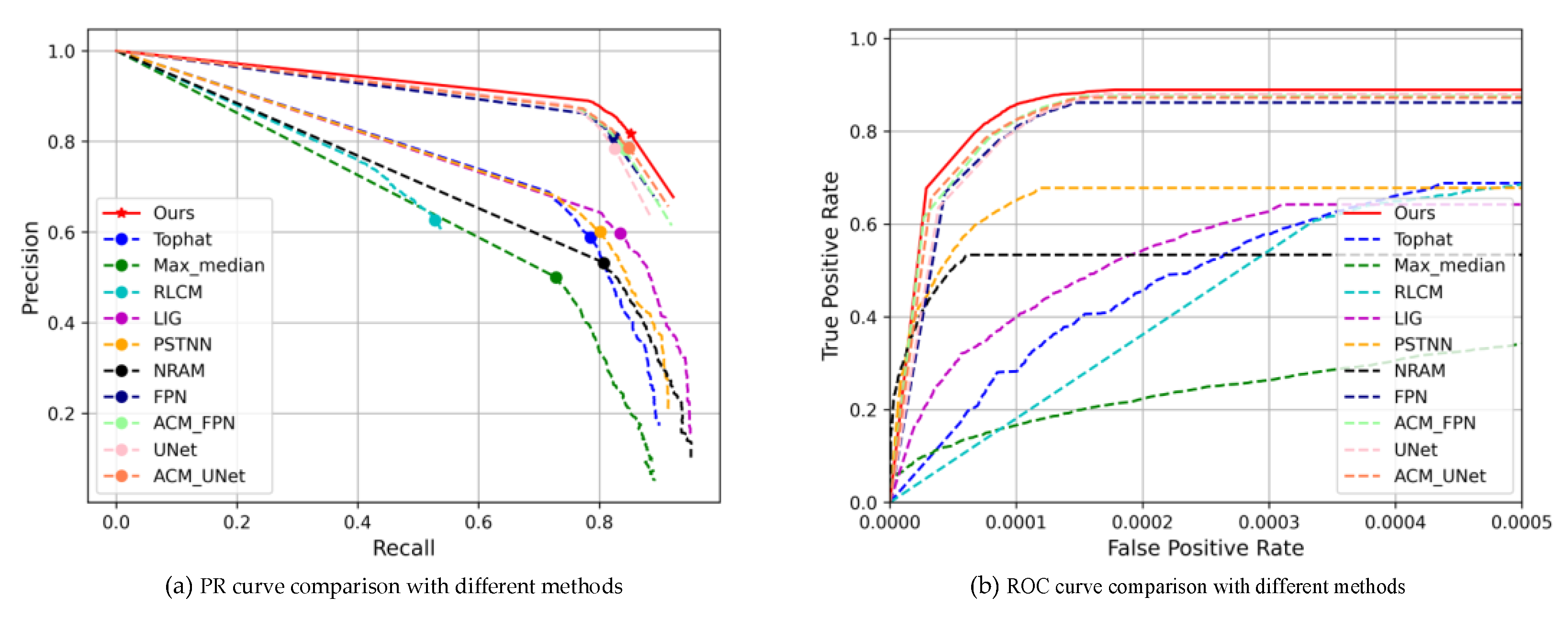

- PR curve: The PR curve is used to characterize the dynamic change between precision and recall; the closer the curve is to the upper right, the better the performance. Average precision (AP) is used to accurately evaluate the PR curve, as defined as follows, where P is precision and R is recall:

- (5)

- ROC: The dynamic relationship between true positive rate (TPR) and false positive rate (FPR) is described by the ROC. The TPR and FPR are defined as follows, where FN denotes the number of false negatives:
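The pixel-level metrics above can be computed from binary masks as in the following NumPy sketch. It is not the authors' evaluation code, and it rests on three assumptions:

- mIoU accumulates intersection and union areas over the whole test set.
- nIoU skips images whose union is empty.
- A general F_β with `beta=1.0` (the balanced F1) stands in for the F-measure, because the weighting used in the paper is not recoverable from this text.

All function names are hypothetical.

```python
import numpy as np


def confusion_counts(pred, label):
    """Pixel-level TP, FP and FN for one pair of binary masks."""
    pred = np.asarray(pred, dtype=bool)
    label = np.asarray(label, dtype=bool)
    tp = int(np.logical_and(pred, label).sum())
    fp = int(np.logical_and(pred, ~label).sum())
    fn = int(np.logical_and(~pred, label).sum())
    return tp, fp, fn


def miou(preds, labels):
    """Total intersection area divided by total union area over all images."""
    inter = union = 0
    for p, t in zip(preds, labels):
        tp, fp, fn = confusion_counts(p, t)
        inter += tp
        union += tp + fp + fn  # |pred OR label|
    return inter / union if union else 0.0


def niou(preds, labels):
    """Per-image TP / (T + P - TP), averaged over images (empty unions skipped)."""
    scores = []
    for p, t in zip(preds, labels):
        tp, fp, fn = confusion_counts(p, t)
        T, P = tp + fn, tp + fp  # ground-truth and predicted target pixels
        if T + P - tp:
            scores.append(tp / (T + P - tp))
    return float(np.mean(scores)) if scores else 0.0


def f_measure(preds, labels, beta=1.0):
    """Dataset-level precision, recall and F_beta (beta=1.0 is an assumption)."""
    tp = fp = fn = 0
    for p, t in zip(preds, labels):
        a, b, c = confusion_counts(p, t)
        tp, fp, fn = tp + a, fp + b, fn + c
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    b2 = beta ** 2
    denom = b2 * precision + recall
    f = (1 + b2) * precision * recall / denom if denom else 0.0
    return precision, recall, f


# Two toy 2 x 3 masks: one partial hit, one exact match.
preds = [np.array([[1, 1, 0], [0, 0, 0]]), np.array([[0, 1, 0], [0, 0, 0]])]
labels = [np.array([[1, 0, 0], [0, 1, 0]]), np.array([[0, 1, 0], [0, 0, 0]])]
print(miou(preds, labels), niou(preds, labels), f_measure(preds, labels))
```

On these toy masks, mIoU is 0.5 while nIoU is about 0.67. nIoU weights every image equally, so the exact match on the second image counts as much as the partial hit on the first, regardless of how many pixels each target covers.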
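The PR and ROC curves come from sweeping a threshold over the continuous output map. The sketch below makes the following choices:

- Each distinct pixel score is used as one threshold.
- AP is the step-wise sum of precision over recall increments.
- The ROC AUC uses the trapezoidal rule with FPR = FP/(FP + TN).

These discretization choices are assumptions, since the paper's exact integration scheme is not given here. `pr_roc_summary` is a hypothetical name.

```python
import numpy as np


def pr_roc_summary(scores, labels):
    """Return (AP, ROC AUC) for pixel scores against binary pixel labels."""
    s = np.asarray(scores, dtype=float).ravel()
    y = np.asarray(labels, dtype=bool).ravel()
    n_pos = int(y.sum())
    n_neg = y.size - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("need both target and background pixels")

    order = np.argsort(-s, kind="stable")   # highest score first
    s, y = s[order], y[order]
    # Last index of each run of tied scores: one threshold per distinct score.
    cut = np.r_[np.flatnonzero(np.diff(s)), y.size - 1]
    tp = np.cumsum(y)[cut]
    fp = np.cumsum(~y)[cut]

    precision = tp / (tp + fp)
    recall = tp / n_pos
    ap = float(np.sum(np.diff(np.r_[0.0, recall]) * precision))

    tpr = np.r_[0.0, recall]
    fpr = np.r_[0.0, fp / n_neg]
    auc = float(np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2))
    return ap, auc


print(pr_roc_summary([0.9, 0.8, 0.7, 0.1], [1, 0, 1, 0]))
```

On the toy input the AUC is 0.75. This equals the fraction of (target, background) pixel pairs in which the target pixel scores higher (3 of 4), which is a useful sanity check for any ROC implementation.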

4.2. Implementation Details

4.3. Ablation Study

- (1)

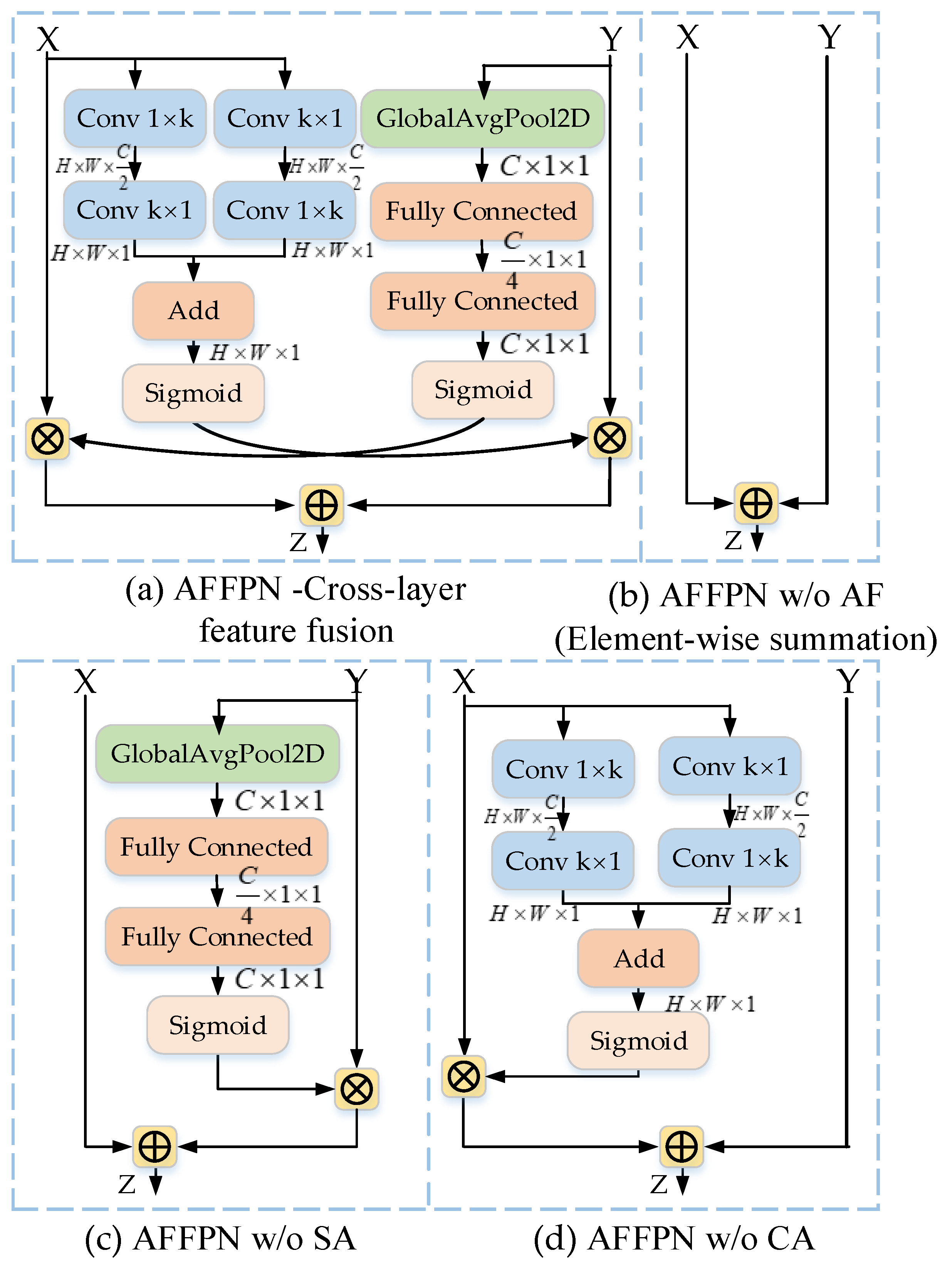

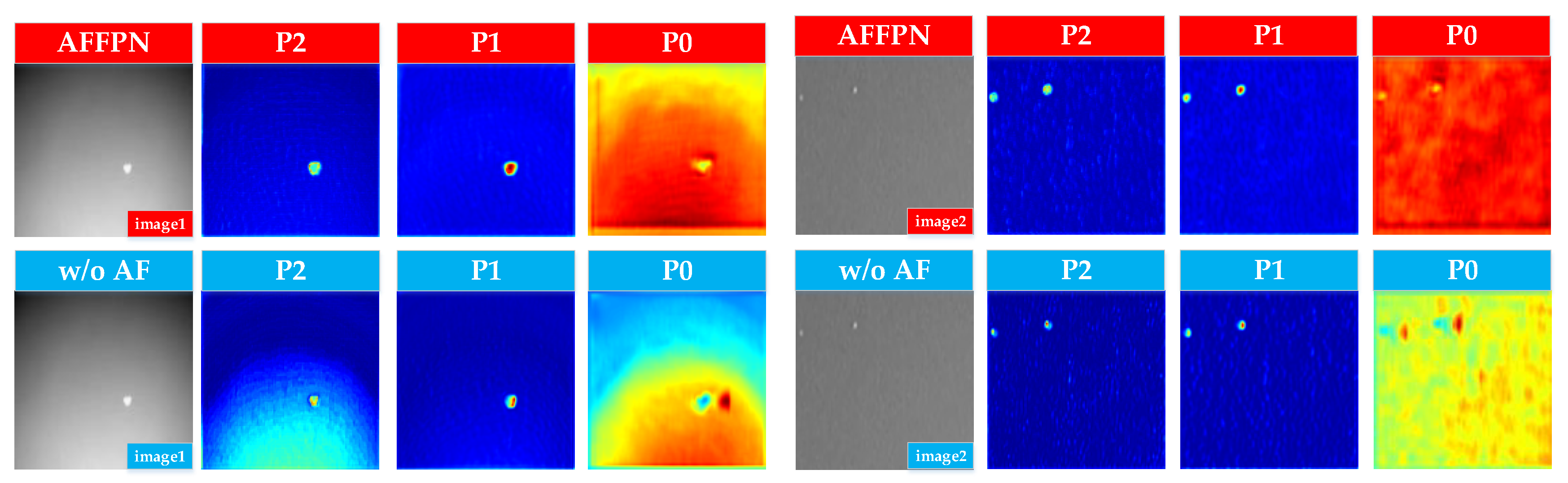

- Ablation study for the attention fusion module: The attention fusion module adaptively enhances shallow spatial location features and deep semantic features, filtering redundant features while focusing on the valuable information of the target in different layers to achieve better feature fusion. We compared AFFPN with four variants to demonstrate the effectiveness of the designed attention fusion module.

- AFFPN-cross-layer feature fusion: This variant applies cross-layer feature modulation between different feature layers, changing the feature layers that CA and SA focus on. Specifically, the shallow-layer features are dynamically weighted and modulated by SA and the deep-layer features, and the deep-layer features are weighted and modulated by CA and the shallow-layer features. Finally, the two modulated features are summed, as shown in Figure 5a.

- AFFPN w/o AF (element-wise summation): This variant removes the CA and SA modules and fuses features from different layers by plain element-wise summation instead of the AF module, to test the effectiveness of the AF module, as shown in Figure 5b.

- AFFPN w/o SA: This variant retains only CA and removes SA to investigate the contribution of SA, as shown in Figure 5c.

- AFFPN w/o CA: This variant retains only SA and removes CA to evaluate the contribution of CA, as shown in Figure 5d.

- (2) Ablation study for ASPPM and multiscale feature fusion: The ASPPM enhances the global prior information of a target and reduces the loss of contextual information. Multiscale feature fusion concatenates deep features carrying semantic information with shallow features carrying spatial location details, producing globally robust feature maps that improve small-target detection. We compared AFFPN with two variants to demonstrate the effectiveness of ASPPM and multiscale feature fusion.

- AFFPN w/o ASPPM: We removed the ASPPM from this variant to assess its contribution.

- AFFPN w/o multilayer concatenation: This variant removes the multilayer feature fusion module and uses only the last layer of the feature extraction module to predict the targets, in order to explore the effectiveness of multiscale feature fusion.
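The attention fusion variants in item (1) differ only in which attention weights are applied and which layer produces them. The NumPy sketch below makes that concrete under several assumptions that the text does not confirm:

- CA is SE-style: global average pooling, two fully connected layers, and a sigmoid.
- SA is a simplified CBAM-style map that mixes the channel-wise mean and max with two scalars instead of a convolution.
- The deep feature has already been upsampled to the shallow feature's shape.
- The default wiring (SA driven by the shallow layer, CA driven by the deep layer) is only one reading of "enhances shallow spatial location features and deep semantic features".

All names and parameters are hypothetical.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def channel_attention(x, w1, w2):
    """SE-style channel weights for x of shape (C, H, W); returns (C, 1, 1)."""
    z = x.mean(axis=(1, 2))          # squeeze: global average pool -> (C,)
    h = np.maximum(w1 @ z, 0.0)      # FC + ReLU -> (C // r,)
    return sigmoid(w2 @ h)[:, None, None]


def spatial_attention(x, a, b):
    """Simplified CBAM-style spatial weights for x of shape (C, H, W); returns (1, H, W)."""
    return sigmoid(a * x.mean(axis=0) + b * x.max(axis=0))[None]


def attention_fuse(shallow, deep, params, sa_from="shallow", ca_from="deep",
                   use_sa=True, use_ca=True):
    """Weight the shallow map spatially and the deep map channel-wise, then sum.

    sa_from / ca_from choose which layer drives each attention map
    (swap them for the cross-layer variant); use_sa / use_ca drop one
    branch (w/o SA, w/o CA); disabling both gives plain summation (w/o AF).
    """
    feats = {"shallow": shallow, "deep": deep}
    sa = spatial_attention(feats[sa_from], *params["sa"]) if use_sa else 1.0
    ca = channel_attention(feats[ca_from], *params["ca"]) if use_ca else 1.0
    return shallow * sa + deep * ca


rng = np.random.default_rng(0)
C, H, W, r = 8, 16, 16, 4
shallow = rng.standard_normal((C, H, W))
deep = rng.standard_normal((C, H, W))      # assumed already upsampled to (C, H, W)
params = {"ca": (rng.standard_normal((C // r, C)), rng.standard_normal((C, C // r))),
          "sa": (1.0, 1.0)}
fused = attention_fuse(shallow, deep, params)
cross = attention_fuse(shallow, deep, params, sa_from="deep", ca_from="shallow")
plain = attention_fuse(shallow, deep, params, use_sa=False, use_ca=False)
```

Expressing the variants as switches on one function mirrors how the ablation isolates a single change at a time. The fused output keeps the shallow feature's shape in every configuration.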
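Multilayer concatenation (item (2)) requires bringing every feature map to a common resolution first. The sketch below assumes nearest-neighbour upsampling by integer factors followed by concatenation along the channel axis. AFFPN's actual upsampling operator and any channel-reducing convolutions are not specified in this text, and `multilayer_concat` is a hypothetical helper.

```python
import numpy as np


def upsample_nearest(x, factor):
    """Nearest-neighbour upsampling of a (C, H, W) map by an integer factor."""
    return x.repeat(factor, axis=1).repeat(factor, axis=2)


def multilayer_concat(features):
    """Upsample each (C_i, H_i, W_i) map to the largest H x W and stack channels."""
    H = max(f.shape[1] for f in features)
    W = max(f.shape[2] for f in features)
    aligned = []
    for f in features:
        factor = H // f.shape[1]
        if f.shape[1] * factor != H or f.shape[2] * factor != W:
            raise ValueError("maps must differ in size by one integer factor")
        aligned.append(upsample_nearest(f, factor))
    return np.concatenate(aligned, axis=0)


# Shallow, middle and deep maps at decreasing resolution.
feats = [np.ones((4, 8, 8)), np.full((8, 4, 4), 2.0), np.full((16, 2, 2), 3.0)]
print(multilayer_concat(feats).shape)   # (28, 8, 8)
```

In the "w/o multilayer concatenation" variant, only the last (deepest) map would reach the prediction head, so the shallow spatial detail in the first 4 channels here would be absent.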
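The internal design of the ASPPM is not described in this text. To illustrate how pooling at several grid sizes injects global prior information, the sketch below uses PSPNet-style pyramid pooling instead. This is a related, swapped-in technique, not necessarily the ASPPM's design. The bin sizes (1, 2, 3, 6) follow PSPNet's common setting. PSPNet's 1 × 1 convolutions and bilinear upsampling are replaced here by piecewise-constant copying, and the function names are hypothetical.

```python
import numpy as np


def adaptive_avg_pool(x, bins):
    """Average-pool a (C, H, W) map into a bins x bins grid of near-equal cells."""
    rows = np.array_split(np.arange(x.shape[1]), bins)
    cols = np.array_split(np.arange(x.shape[2]), bins)
    pooled = np.empty((x.shape[0], bins, bins))
    for i, r in enumerate(rows):
        for j, c in enumerate(cols):
            pooled[:, i, j] = x[:, r[0]:r[-1] + 1, c[0]:c[-1] + 1].mean(axis=(1, 2))
    return pooled, [len(r) for r in rows], [len(c) for c in cols]


def pyramid_pool(x, bin_sizes=(1, 2, 3, 6)):
    """Concatenate x with pooled context maps copied back to x's resolution."""
    if min(x.shape[1:]) < max(bin_sizes):
        raise ValueError("feature map smaller than the finest pooling grid")
    branches = [x]
    for b in bin_sizes:
        pooled, row_len, col_len = adaptive_avg_pool(x, b)
        # Repeat each cell over the pixels it summarizes (piecewise-constant upsampling).
        branches.append(np.repeat(np.repeat(pooled, row_len, axis=1), col_len, axis=2))
    return np.concatenate(branches, axis=0)


x = np.random.default_rng(1).standard_normal((2, 12, 12))
y = pyramid_pool(x)
print(y.shape)   # (10, 12, 12): 2 input channels + 4 branches x 2 channels
```

The 1 × 1 bin branch gives every pixel access to the global mean of its channel, which is the kind of image-level context the ASPPM is said to provide.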

4.4. Comparison with State-of-the-Art Methods

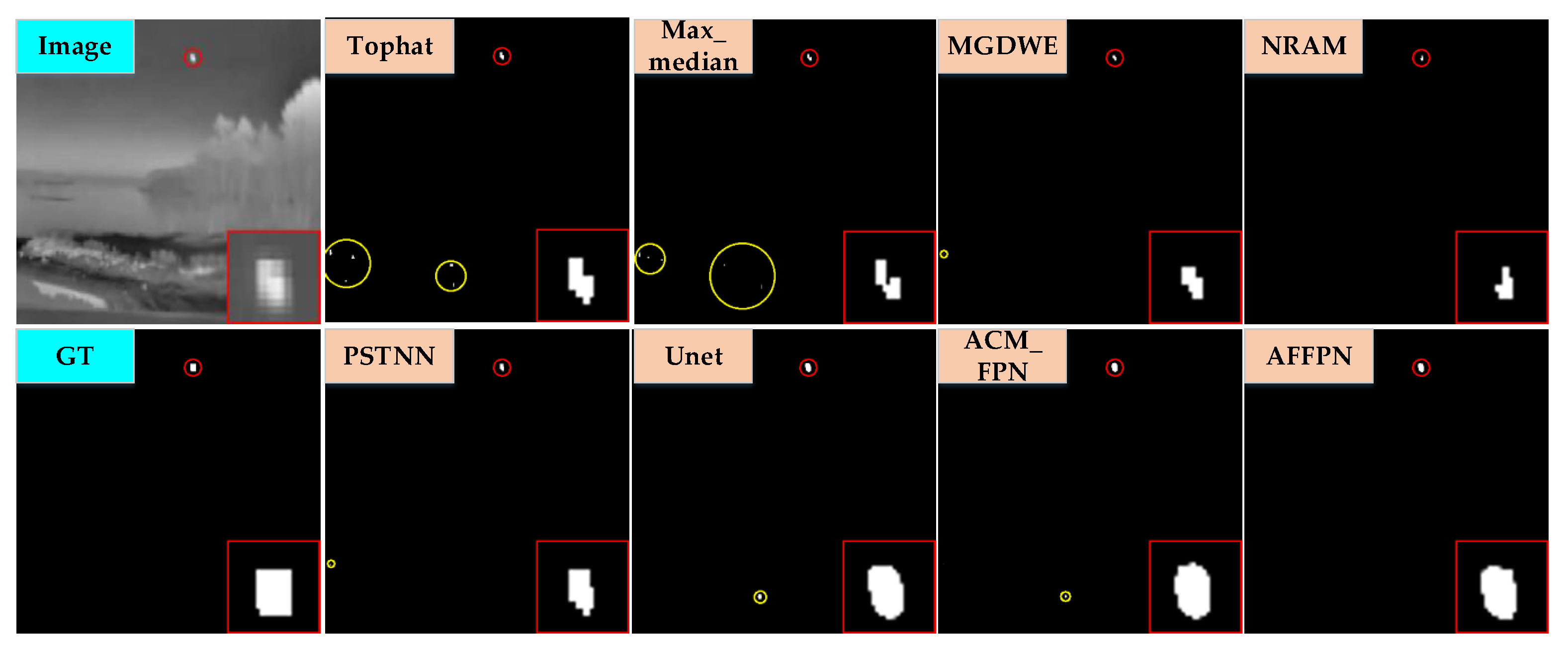

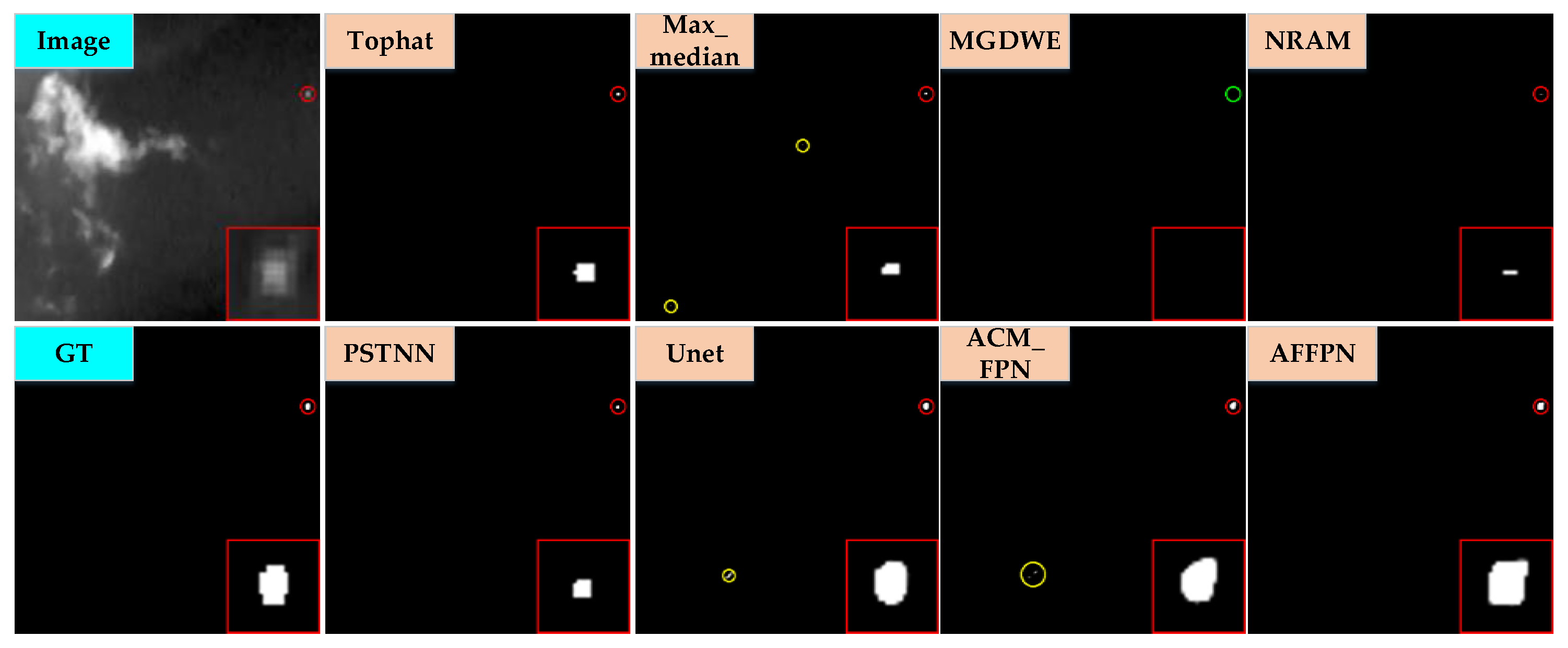

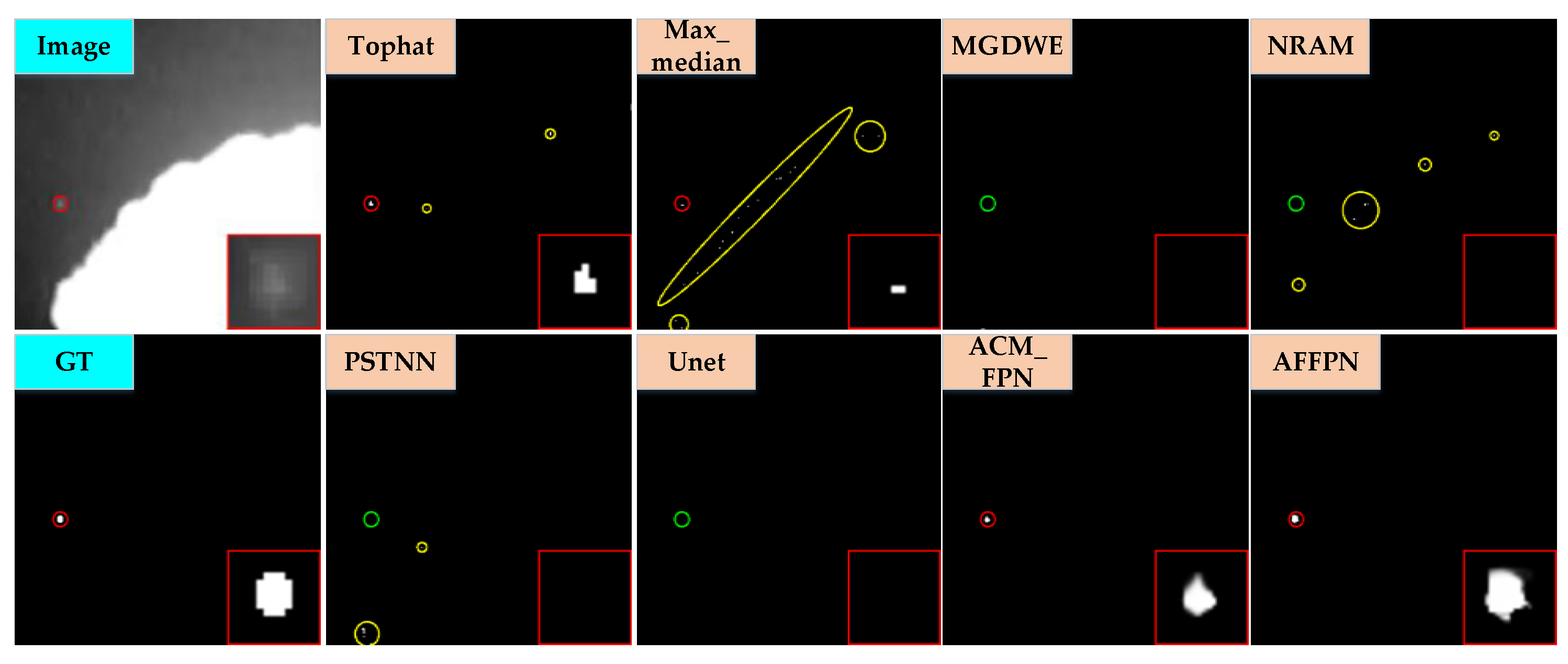

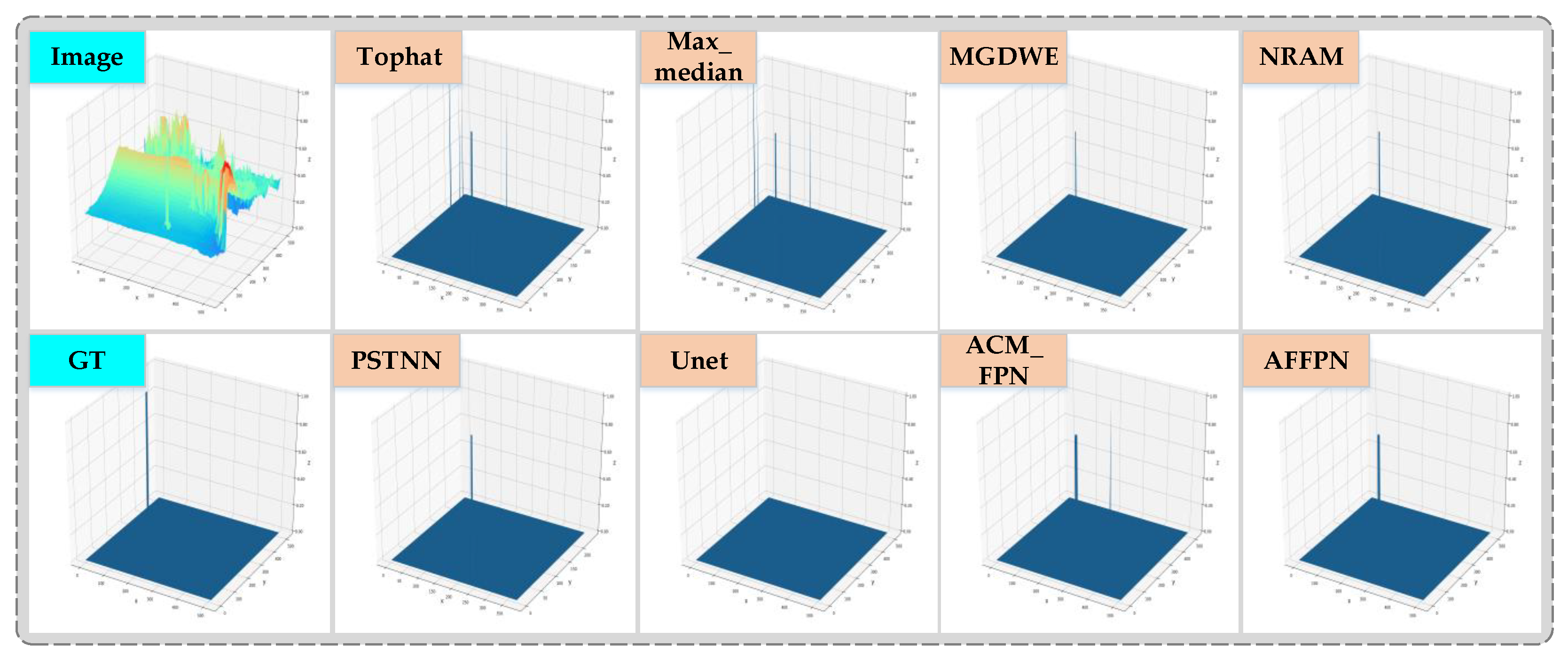

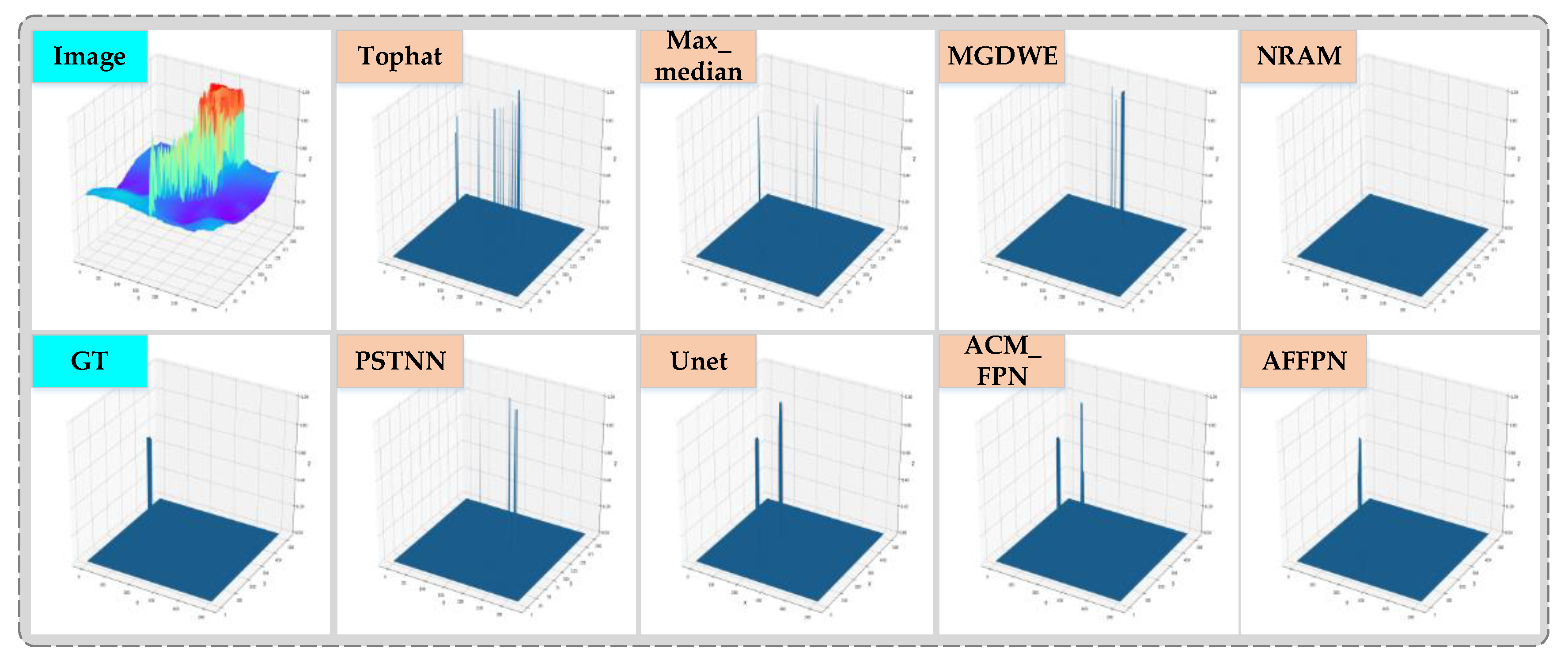

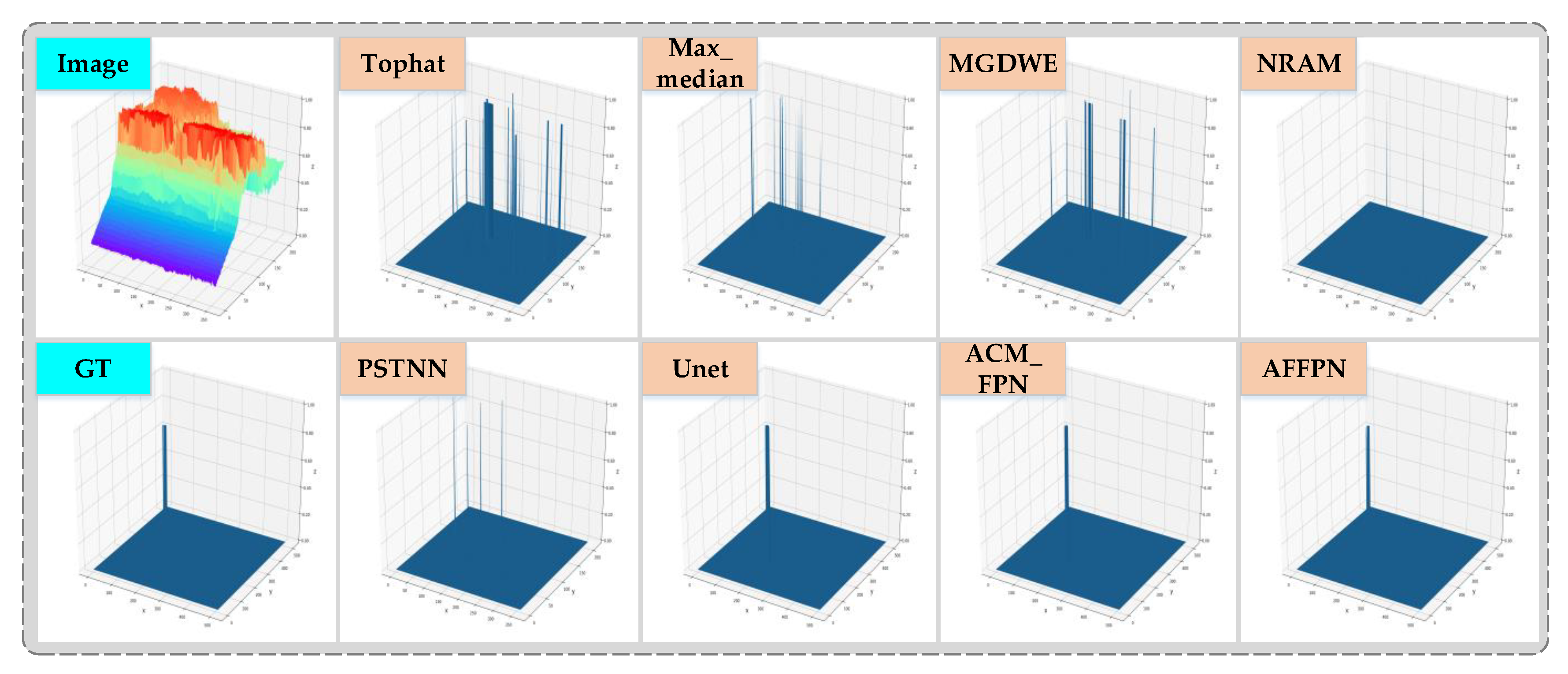

- (1)

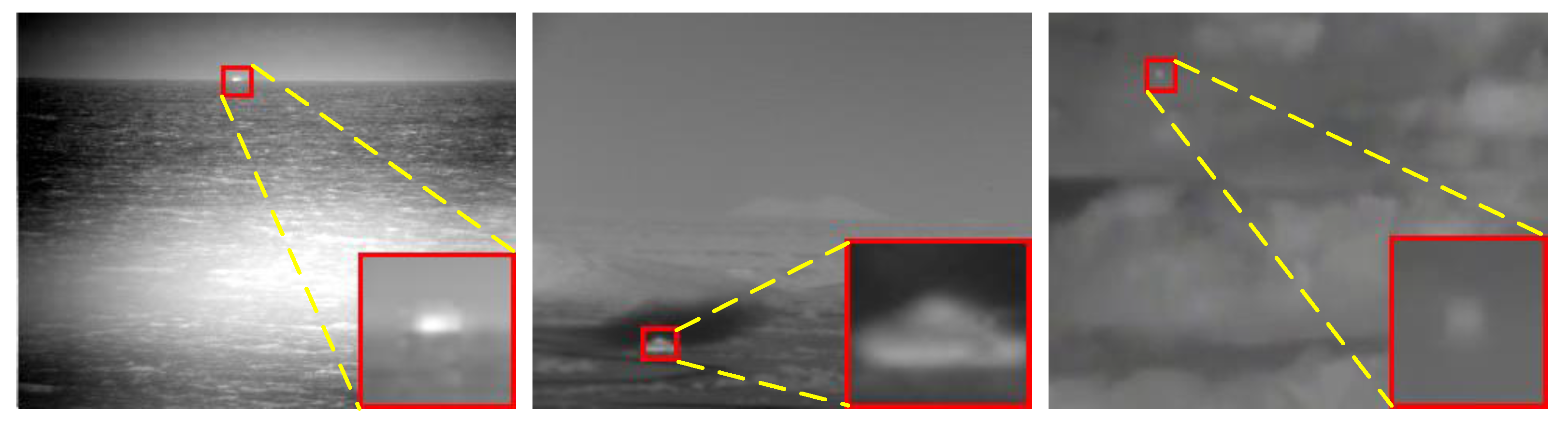

- Qualitative comparison. Figure 7, Figure 8 and Figure 9 compare the detection results of the eight methods on three typical scenes of small infrared targets, where the detection methods are labeled in the top-left corner of each image. The target area is magnified in the lower-right corner to show the results of fine segmentation more visually. We used red, yellow, and green circles to indicate correctly detected targets, false positives, and missed detections, respectively.

- (2) Numerical quantitative comparison. For all traditional model-driven methods, we first obtained the predicted values. We then eliminated low-response regions with an adaptive threshold, computed from the maximum and average values of the output, to suppress noise. All data-driven methods used the experimental parameters of the original authors.

- (3) Comparison of the inference performance. Inference performance is key to the practical deployment of detection algorithms on unmanned platforms. The NVIDIA Jetson AGX Xavier development board is widely used on such platforms because of its high-performance computing capabilities. We deployed AFFPN on a stationary high-performance computer to compare its inference performance with that of other methods. We also implemented it on the Jetson AGX Xavier board to bring AFFPN closer to real-world application.
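The noise-suppression step for the model-driven baselines in item (2) can be sketched as follows. Beyond the fact that the threshold uses the maximum and the average of each output map, the formula is not recoverable from this text. The weighted sum `alpha * max + beta * mean` below and the caller-supplied `alpha` and `beta` are therefore assumptions, not the paper's values. Responses at or below the threshold are set to zero so that the surviving scores can still feed the PR and ROC computations.

```python
import numpy as np


def suppress_low_response(response, alpha, beta):
    """Zero out responses not above T = alpha * max + beta * mean (assumed form).

    alpha and beta are deliberately required arguments: the coefficients used
    in the paper are not given here.
    """
    r = np.asarray(response, dtype=float)
    threshold = alpha * r.max() + beta * r.mean()
    return np.where(r > threshold, r, 0.0), threshold


# Illustrative coefficients only.
out, t = suppress_low_response([[0.0, 1.0], [2.0, 4.0]], alpha=0.5, beta=0.0)
print(t, out.tolist())   # 2.0 [[0.0, 0.0], [0.0, 4.0]]
```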
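Inference-time comparisons such as those in item (3) depend on how the clock is read. A minimal, framework-agnostic harness is sketched below. It discards a few warm-up runs and reports the average wall-clock time per image, that is, batch time divided by batch size. Note that this text does not state whether the reported times are per image or per batch. The `infer` and `make_batch` callables are hypothetical stand-ins for a real model and data loader. On a GPU, queued asynchronous work must be flushed before each clock read (for example, with `torch.cuda.synchronize()` in PyTorch), which the optional `sync` argument allows.

```python
import time


def per_image_latency(infer, make_batch, batch_size, warmup=3, runs=10, sync=None):
    """Average wall-clock seconds per image for `infer` at a given batch size.

    `sync` is an optional callable (e.g. a GPU synchronize) invoked before each
    clock read so that queued asynchronous work is included in the measurement.
    """
    batch = make_batch(batch_size)
    for _ in range(warmup):           # first calls often pay one-off setup costs
        infer(batch)
    if sync:
        sync()
    start = time.perf_counter()
    for _ in range(runs):
        infer(batch)
    if sync:
        sync()
    elapsed = time.perf_counter() - start
    return elapsed / (runs * batch_size)


# Toy stand-ins: a "model" that sums 256 x 256 images.
def make_batch(n):
    return [[0.0] * (256 * 256) for _ in range(n)]


def infer(batch):
    return [sum(img) for img in batch]


for bs in (1, 4, 16):
    print(bs, per_image_latency(infer, make_batch, bs))
```

Sweeping the batch size in this way is how a per-image time can fall as the batch grows: fixed per-call overheads are amortized over more images until the hardware saturates.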

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Rawat, S.; Verma, S.K.; Kumar, Y. Review on recent development in infrared small target detection algorithms. Procedia Comput. Sci. 2020, 167, 2496–2505.

2. Tom, V.T.; Peli, T.; Leung, M.; Bondaryk, J.E. Morphology-based algorithm for point target detection in infrared backgrounds. In Proceedings of the Signal and Data Processing of Small Targets, Orlando, FL, USA, 22 October 1993; pp. 2–11.

3. Deshpande, S.D.; Er, M.H.; Venkateswarlu, R.; Chan, P. Max-Mean and Max-Median Filters for Detection of Small Targets; SPIE: Bellingham, WA, USA, 1999.

4. Chen, C.; Li, H.; Wei, Y.; Xia, T.; Tang, Y. A Local Contrast Method for Small Infrared Target Detection. IEEE Trans. Geosci. Remote Sens. 2014, 52, 574–581.

5. Han, J.; Ma, Y.; Zhou, B.; Fan, F.; Liang, K.; Fang, Y. A Robust Infrared Small Target Detection Algorithm Based on Human Visual System. IEEE Geosci. Remote Sens. Lett. 2014, 11, 2168–2172.

6. Han, J.; Moradi, S.; Faramarzi, I.; Liu, C.; Zhang, H.; Zhao, Q. A Local Contrast Method for Infrared Small-Target Detection Utilizing a Tri-Layer Window. IEEE Geosci. Remote Sens. Lett. 2020, 17, 1822–1826.

7. Han, J.; Moradi, S.; Faramarzi, I.; Zhang, H.; Zhao, Q.; Zhang, X.; Li, N. Infrared Small Target Detection Based on the Weighted Strengthened Local Contrast Measure. IEEE Geosci. Remote Sens. Lett. 2020, 18, 1670–1674.

8. Gao, C.; Meng, D.; Yang, Y.; Wang, Y.; Zhou, X.; Hauptmann, A. Infrared Patch-Image Model for Small Target Detection in a Single Image. IEEE Trans. Image Process. 2013, 22, 4996–5009.

9. Lin, Z.; Ganesh, A.; Wright, J.; Wu, L.; Chen, M.; Ma, Y. Fast Convex Optimization Algorithms for Exact Recovery of a Corrupted Low-Rank Matrix. Available online: https://hdl.handle.net/2142/74352 (accessed on 15 August 2009).

10. Wang, X.; Peng, Z.; Kong, D.; Zhang, P.; He, Y. Infrared dim target detection based on total variation regularization and principal component pursuit. Image Vis. Comput. 2017, 63, 1–9.

11. Dai, Y.; Wu, Y.; Zhou, F.; Barnard, K. Attentional Local Contrast Networks for Infrared Small Target Detection. IEEE Trans. Geosci. Remote Sens. 2021, 59, 9813–9824.

12. Wang, H.; Zhou, L.; Wang, L. Miss Detection vs. False Alarm: Adversarial Learning for Small Object Segmentation in Infrared Images. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 8508–8517.

13. Gao, Z.; Dai, J.; Xie, C. Dim and small target detection based on feature mapping neural networks. J. Vis. Commun. Image Represent. 2019, 62, 206–216.

14. McIntosh, B.; Venkataramanan, S.; Mahalanobis, A. Infrared Target Detection in Cluttered Environments by Maximization of a Target to Clutter Ratio (TCR) Metric Using a Convolutional Neural Network. IEEE Trans. Aerosp. Electron. Syst. 2021, 57, 485–496.

15. Du, J.; Lu, H.; Hu, M.; Zhang, L.; Shen, X. CNN-based infrared dim small target detection algorithm using target-oriented shallow-deep features and effective small anchor. IET Image Process. 2020, 15, 1–15.

16. Zhao, B.; Wang, C.-P.; Fu, Q.; Han, Z.-S. A Novel Pattern for Infrared Small Target Detection with Generative Adversarial Network. IEEE Trans. Geosci. Remote Sens. 2020, 59, 4481–4492.

17. Dai, Y.; Wu, Y.; Zhou, F.; Barnard, K. Asymmetric Contextual Modulation for Infrared Small Target Detection. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2021; pp. 949–958.

18. Chen, F.; Gao, C.; Liu, F.; Zhao, Y.; Zhou, Y.; Meng, D.; Zuo, W. Local Patch Network with Global Attention for Infrared Small Target Detection. In IEEE Transactions on Aerospace and Electronic Systems; IEEE: New York, NY, USA, 2022.

19. Hou, Q.; Wang, Z.; Tan, F.-J.; Zhao, Y.; Zheng, H.; Zhang, W. RISTDnet: Robust Infrared Small Target Detection Network. IEEE Geosci. Remote Sens. Lett. 2022, 19, 7000805.

20. Hou, Q.; Zhang, L.; Tan, F.-J.; Xi, Y.; Zheng, H.; Li, N. ISTDU-Net: Infrared Small-Target Detection U-Net. IEEE Geosci. Remote Sens. Lett. 2022, 19, 7506205.

21. Ma, T.; Yang, Z.; Wang, J.; Sun, S.; Ren, X.; Ahmad, U. Infrared Small Target Detection Network With Generate Label and Feature Mapping. IEEE Geosci. Remote Sens. Lett. 2022, 19, 6505405.

22. Wang, K.; Du, S.; Liu, C.; Cao, Z. Interior Attention-Aware Network for Infrared Small Target Detection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5002013.

23. Wang, A.; Li, W.; Wu, X.; Huang, Z.; Tao, R. MPANet: Multi-Patch Attention for Infrared Small Target object Detection. arXiv 2022, arXiv:2206.02120.

24. Chen, Y.; Li, L.; Liu, X.; Su, X.; Chen, F. A Multi-task Framework for Infrared Small Target Detection and Segmentation. arXiv 2022, arXiv:2206.06923.

25. Zhou, H.; Tian, C.; Zhang, Z.; Li, C.; Xie, Y.; Li, Z. PixelGame: Infrared small target segmentation as a Nash equilibrium. arXiv 2022, arXiv:2205.13124.

26. Hu, J.; Shen, L.; Albanie, S.; Sun, G.; Wu, E. Squeeze-and-Excitation Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 2011–2023.

27. Li, B.; Xiao, C.; Wang, L.; Wang, Y.; Lin, Z.; Li, M.; An, W.; Guo, Y. Dense Nested Attention Network for Infrared Small Target Detection. arXiv 2021, arXiv:2106.00487.

28. Zhao, T.; Wu, X. Pyramid Feature Attention Network for Saliency Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3080–3089.

29. Bai, X.; Zhou, F. Analysis of new top-hat transformation and the application for infrared dim small target detection. Pattern Recognit. 2010, 43, 2145–2156.

30. Qin, Y.; Bruzzone, L.; Gao, C.; Li, B. Infrared Small Target Detection Based on Facet Kernel and Random Walker. IEEE Trans. Geosci. Remote Sens. 2019, 57, 7104–7118.

31. Liu, J.; He, Z.; Chen, Z.; Shao, L. Tiny and Dim Infrared Target Detection Based on Weighted Local Contrast. IEEE Geosci. Remote Sens. Lett. 2018, 15, 1780–1784.

32. Deng, Q.; Lu, H.; Tao, H.; Hu, M.; Zhao, F. Multi-Scale Convolutional Neural Networks for Space Infrared Point Objects Discrimination. IEEE Access 2019, 7, 28113–28123.

33. Shi, M.; Wang, H. Infrared Dim and Small Target Detection Based on Denoising Autoencoder Network. Mob. Netw. Appl. 2020, 25, 1469–1483.

34. Wang, K.; Li, S.; Niu, S.; Zhang, K. Detection of Infrared Small Targets Using Feature Fusion Convolutional Network. IEEE Access 2019, 7, 146081–146092.

35. Zhao, M.; Cheng, L.; Yang, X.; Feng, P.; Liu, L.; Wu, N. TBC-Net: A real-time detector for infrared small target detection using semantic constraint. arXiv 2020, arXiv:2001.05852.

36. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Medical Image Computing and Computer-Assisted Intervention 2015; Navab, N., Hornegger, J., Wells, W.M., Frangi, A.F., Eds.; Springer International Publishing: Cham, Switzerland, 2015; pp. 234–241.

37. Zhang, T.; Cao, S.; Pu, T.; Peng, Z. AGPCNet: Attention-Guided Pyramid Context Networks for Infrared Small Target Detection. arXiv 2021, arXiv:2111.03580.

38. Zhang, H.; Goodfellow, I.J.; Metaxas, D.N.; Odena, A. Self-Attention Generative Adversarial Networks. In Proceedings of the International Conference on Machine Learning; PMLR: New York City, NY, USA, 2019.

39. Liu, H.; Chen, T.; Guo, P.; Shen, Q.; Cao, X.; Wang, Y.; Ma, Z. Non-local Attention Optimized Deep Image Compression. arXiv 2019, arXiv:1904.09757.

40. Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.-S. CBAM: Convolutional Block Attention Module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018.

41. Fu, J.; Liu, J.; Tian, H.; Fang, Z.; Lu, H. Dual Attention Network for Scene Segmentation. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 3141–3149.

42. Park, J.; Woo, S.; Lee, J.-Y.; Kweon, I.-S. BAM: Bottleneck Attention Module. arXiv 2018, arXiv:1807.06514.

43. Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient Channel Attention for Deep Convolutional Neural Networks. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 11531–11539.

44. Zhang, H.; Zu, K.; Lu, J.; Zou, Y.; Meng, D. EPSANet: An Efficient Pyramid Split Attention Block on Convolutional Neural Network. arXiv 2021, arXiv:2105.14447.

45. Zhang, Q.-L.; Yang, Y. SA-Net: Shuffle Attention for Deep Convolutional Neural Networks. In Proceedings of the ICASSP 2021–2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 2235–2239.

46. Lin, T.-Y.; Dollár, P.; Girshick, R.B.; He, K.; Hariharan, B.; Belongie, S.J. Feature Pyramid Networks for Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 936–944.

47. Zhang, Y.; Hsieh, J.-W.; Lee, C.-C.; Fan, K.-C. SFPN: Synthetic FPN for Object Detection. arXiv 2022, arXiv:2203.02445.

48. Gong, Y.; Yu, X.; Ding, Y.; Peng, X.; Zhao, J.; Han, Z. Effective Fusion Factor in FPN for Tiny Object Detection. In Proceedings of the 2021 IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 3–8 January 2021; pp. 1159–1167.

49. Tong, X.; Sun, B.; Wei, J.; Zuo, Z.; Su, S. EAAU-Net: Enhanced Asymmetric Attention U-Net for Infrared Small Target Detection. Remote Sens. 2021, 13, 3200.

50. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

51. Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid Scene Parsing Network. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2881–2890.

52. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.E.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

53. Fang, H.; Xia, M.; Zhou, G.; Chang, Y.; Yan, L. Infrared Small UAV Target Detection Based on Residual Image Prediction via Global and Local Dilated Residual Networks. IEEE Geosci. Remote Sens. Lett. 2021, 19, 7002305.

54. Duchi, J.C.; Hazan, E.; Singer, Y. Adaptive Subgradient Methods for Online Learning and Stochastic Optimization. J. Mach. Learn. Res. 2011, 12, 2121–2159.

55. Rahman, M.A.; Wang, Y. Optimizing Intersection-Over-Union in Deep Neural Networks for Image Segmentation. In Advances in Visual Computing, Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2016.

56. Deng, H.; Sun, X.; Liu, M.; Ye, C.; Zhou, X. Small Infrared Target Detection Based on Weighted Local Difference Measure. IEEE Trans. Geosci. Remote Sens. 2016, 54, 4204–4214.

57. Zhang, H.; Zhang, L.; Yuan, D.; Chen, H. Infrared small target detection based on local intensity and gradient properties. Infrared Phys. Technol. 2018, 89, 88–96.

58. Deng, H.; Sun, X.; Liu, M.; Ye, C.; Zhou, X. Infrared small-target detection using multiscale gray difference weighted image entropy. IEEE Trans. Aerosp. Electron. Syst. 2016, 52, 60–72.

59. Zhang, L.; Peng, L.; Zhang, T.; Cao, S.; Peng, Z. Infrared Small Target Detection via Non-Convex Rank Approximation Minimization Joint l2,1 Norm. Remote Sens. 2018, 10, 1821.

60. Zhang, L.; Peng, Z. Infrared Small Target Detection Based on Partial Sum of the Tensor Nuclear Norm. Remote Sens. 2019, 11, 382.

| Stage | Output | Backbone |
|---|---|---|
| 480 × 480 | | |
| 480 × 480 | | |
| 240 × 240 | | |
| 120 × 120 | | |

| Model | Params (M) | mIoU (×10⁻²) | nIoU (×10⁻²) | F-Measure (×10⁻²) |
|---|---|---|---|---|
| AFFPN–cross-layer feature fusion | 7.40 | 75.89 | 74.21 | 82.50 |
| AFFPN w/o AF (element-wise summation) | 7.17 | 75.80 | 74.63 | 82.48 |
| AFFPN w/o SA | 7.18 | 76.26 | 74.43 | 82.01 |
| AFFPN w/o CA | 7.39 | 75.58 | 74.15 | 82.93 |
| AFFPN (Ours) | 7.40 | 78.14 | 75.91 | 83.63 |

| Model | Params (M) | mIoU (×10⁻²) | nIoU (×10⁻²) | F-Measure (×10⁻²) |
|---|---|---|---|---|
| AFFPN with SE | 7.40 | 76.71 | 75.06 | 82.60 |
| AFFPN with CBAM | 7.28 | 75.97 | 74.02 | 82.90 |
| AFFPN with Shuffle Attention | 7.46 | 74.47 | 73.11 | 82.56 |
| AFFPN (Ours) | 7.40 | 78.14 | 75.91 | 83.63 |

| Model | Params (M) | mIoU (×10⁻²) | nIoU (×10⁻²) | F-Measure (×10⁻²) | AP (×10⁻²) | AUC (×10⁻²) |
|---|---|---|---|---|---|---|
| AFFPN w/o ASPPM | 7.63 | 76.32 | 74.59 | 83.29 | 79.17 | 94.44 |
| AFFPN w/o multilayer concatenation | 7.68 | 74.25 | 74.55 | 83.42 | 78.53 | 93.67 |
| AFFPN (Ours) | 7.40 | 78.14 | 75.91 | 83.53 | 80.61 | 94.52 |

| Methods | Parameter Settings |
|---|---|
| Top-hat | Structure size = 3 × 3 |
| Max-median | Patch size = 3 × 3 |
| RLCM | Size: 8 × 8, Slide step: 4, threshold factor: k = 1 |
| MPCM | L = 9, window size: 3 × 3, 5 × 5, 7 × 7 |
| LIGP | k = 0.2, Local window size = 11 × 11 |
| MGDWE | r = 2, Local window size = 7 × 7 |
| NRAM | Patch size: 50 × 50, Slide step: 10, |
| PSTNN | Patch size: 40 × 40, Slide step: 40, , |

| Methods | mIoU (×10⁻²) | nIoU (×10⁻²) | F-Measure (×10⁻²) | AP (×10⁻²) | AUC (×10⁻²) |
|---|---|---|---|---|---|
| Top-hat | 28.75 | 42.95 | 69.29 | 58.49 | 84.40 |
| Max-median | 15.65 | 25.43 | 62.40 | 41.50 | 74.96 |
| RLCM | 28.56 | 34.44 | 46.94 | 39.95 | 87.97 |
| MPCM | 21.35 | 24.54 | 65.98 | 39.73 | 72.65 |
| LIGP | 31.01 | 40.62 | 72.56 | 58.83 | 82.11 |
| MGDWE | 16.22 | 23.06 | 50.60 | 20.30 | 61.85 |
| NRAM | 24.99 | 32.39 | 67.82 | 48.38 | 76.69 |
| PSTNN | 39.68 | 48.16 | 71.73 | 59.31 | 83.89 |
| FPN | 72.18 | 70.41 | 80.39 | 75.90 | 93.10 |
| U-Net | 73.64 | 72.35 | 80.81 | 76.11 | 94.01 |
| TBC-Net | 73.40 | 71.30 | N/A | N/A | N/A |
| ACM-FPN | 73.65 | 72.22 | 81.60 | 78.33 | 93.79 |
| ACM-U-Net | 74.45 | 72.70 | 81.68 | 78.08 | 93.63 |
| AFFPN (Ours) | 78.14 | 75.91 | 83.53 | 80.61 | 94.52 |

| Methods | Top-Hat | Max-Median | RLCM | MPCM | LIGP | MGDWE | NRAM |
|---|---|---|---|---|---|---|---|
| Time (s) | 0.006 | 0.007 | 6.850 | 0.347 | 0.877 | 1.670 | 0.971 |

| Methods | TBC-Net | U-Net | ACM-FPN | ACM-U-Net | Ours (C) | Ours (G) | Ours (B) |
|---|---|---|---|---|---|---|---|
| Time (s) | 0.049 | 0.144 | 0.067 | 0.156 | 0.218 | 0.008 | 0.059 |

| Power Mode (W) | Time (s), Batch Size = 1 | Batch Size = 2 | Batch Size = 4 | Batch Size = 8 | Batch Size = 16 | Batch Size = 32 |
|---|---|---|---|---|---|---|
| 10 | 0.1203 | 0.0815 | 0.0624 | 0.0521 | 0.0472 | 0.0479 |
| 15 | 0.1190 | 0.0804 | 0.0612 | 0.0514 | 0.0459 | 0.0461 |
| 30 | 0.0593 | 0.0391 | 0.0299 | 0.0249 | 0.0227 | 0.0236 |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zuo, Z.; Tong, X.; Wei, J.; Su, S.; Wu, P.; Guo, R.; Sun, B. AFFPN: Attention Fusion Feature Pyramid Network for Small Infrared Target Detection. Remote Sens. 2022, 14, 3412. https://doi.org/10.3390/rs14143412
