Real-Time Integration of Segmentation Techniques for Reduction of False Positive Rates in Fire Plume Detection Systems during Forest Fires

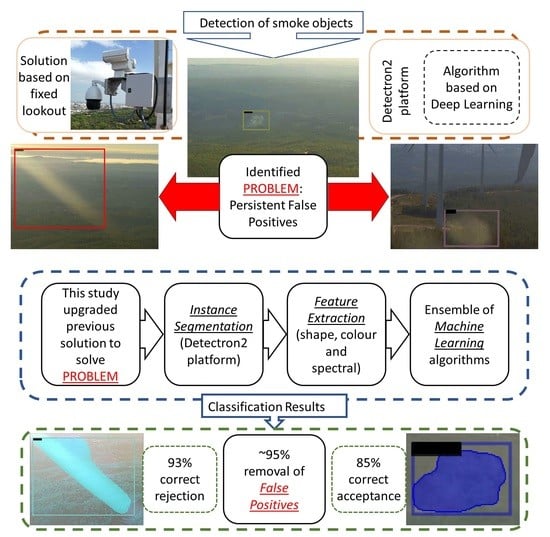

Abstract

1. Introduction

2. Related Work

3. Materials and Methods

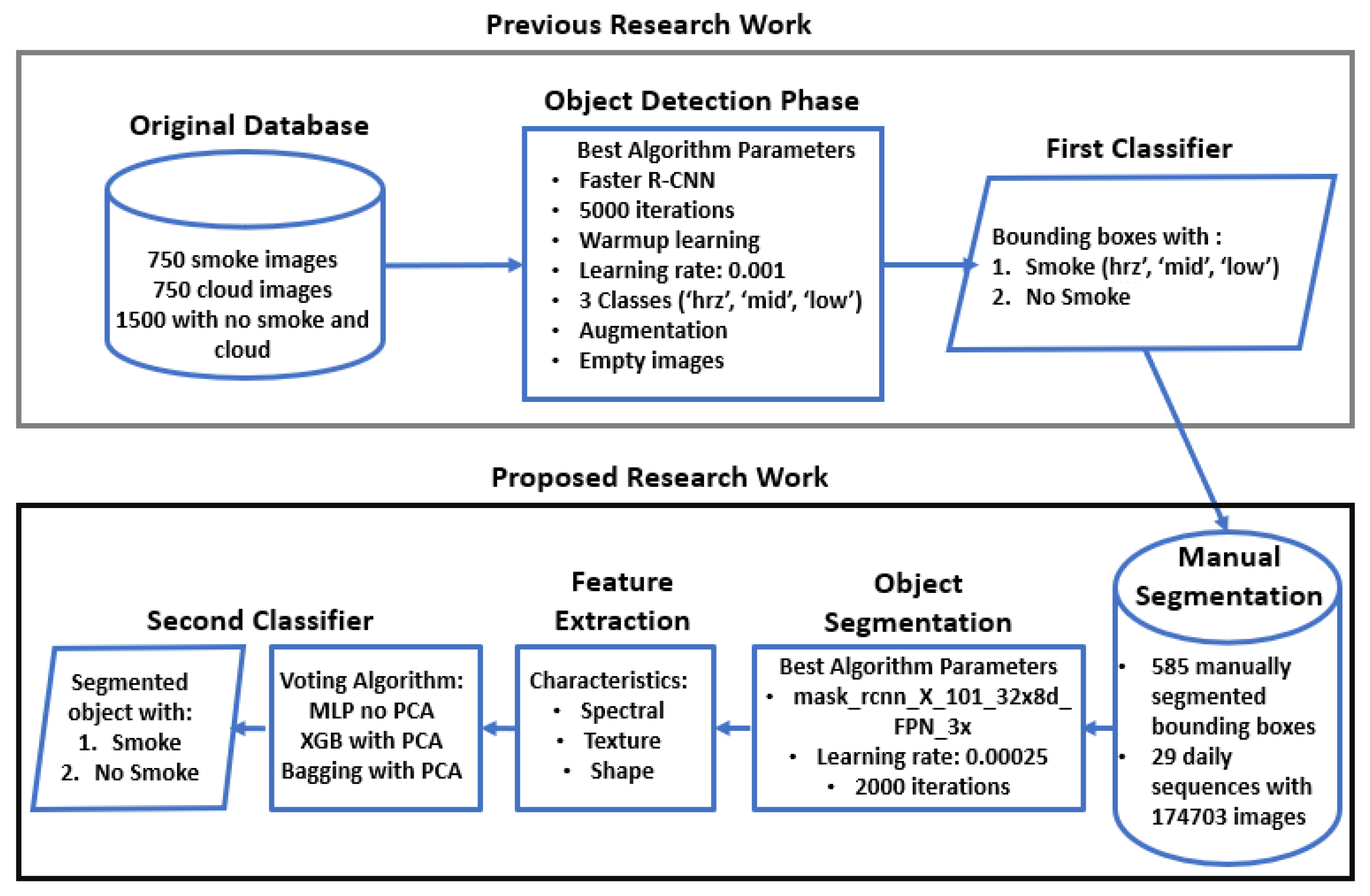

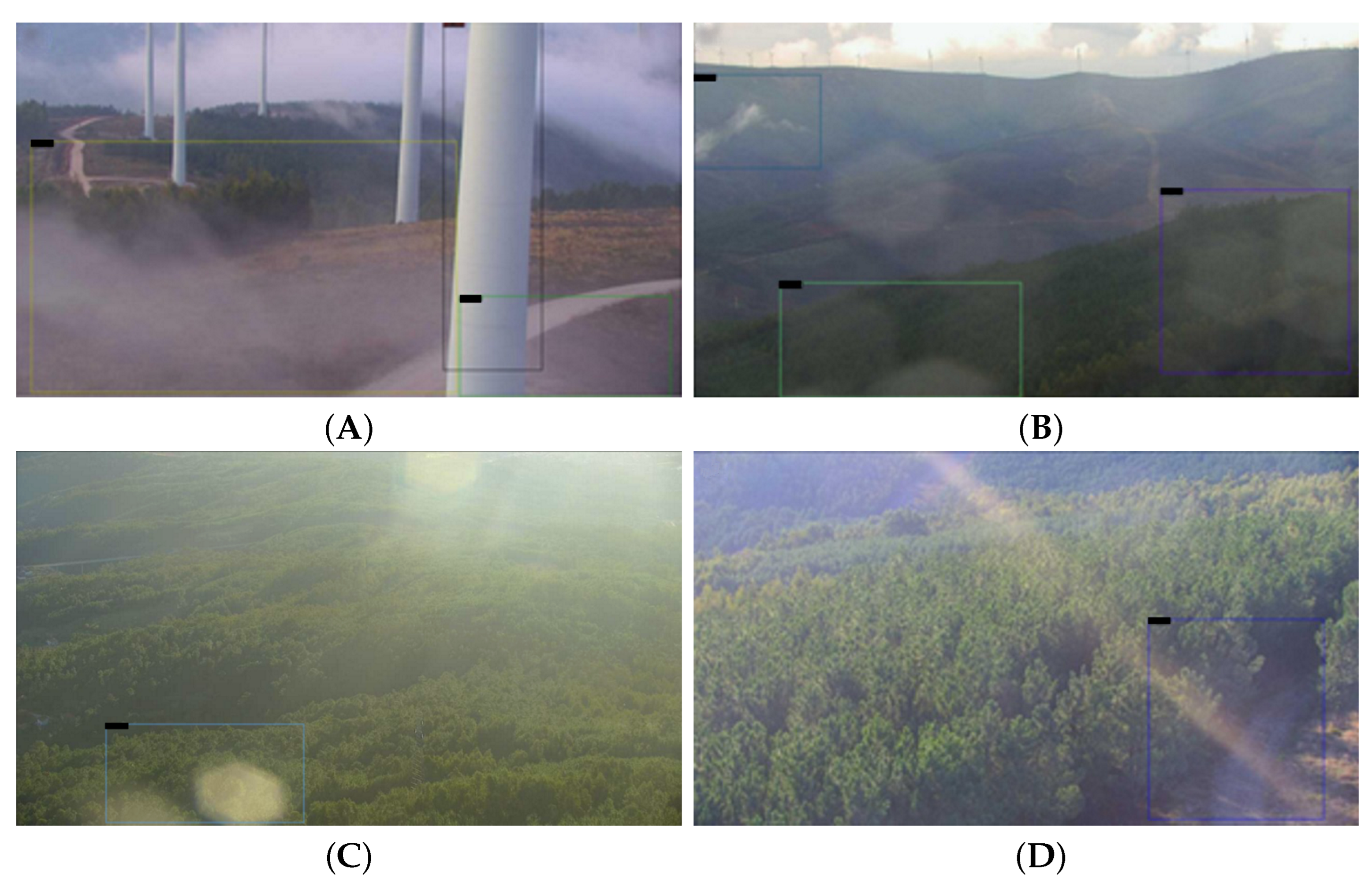

3.1. Workflow

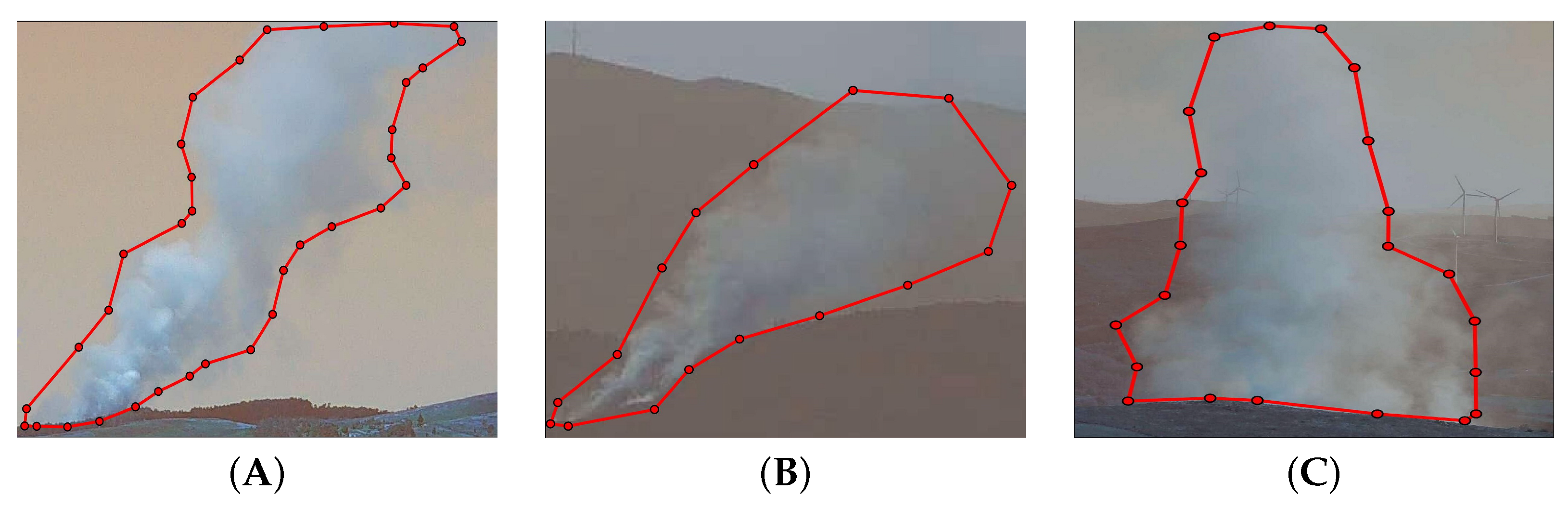

3.2. Dataset

3.3. Algorithms

3.3.1. Smoke Segmentation with Deep Learning Algorithms

3.3.2. Feature Extraction

3.3.3. Smoke Classification with Machine Learning Algorithms

3.4. Performance Evaluation

4. Results and Discussion

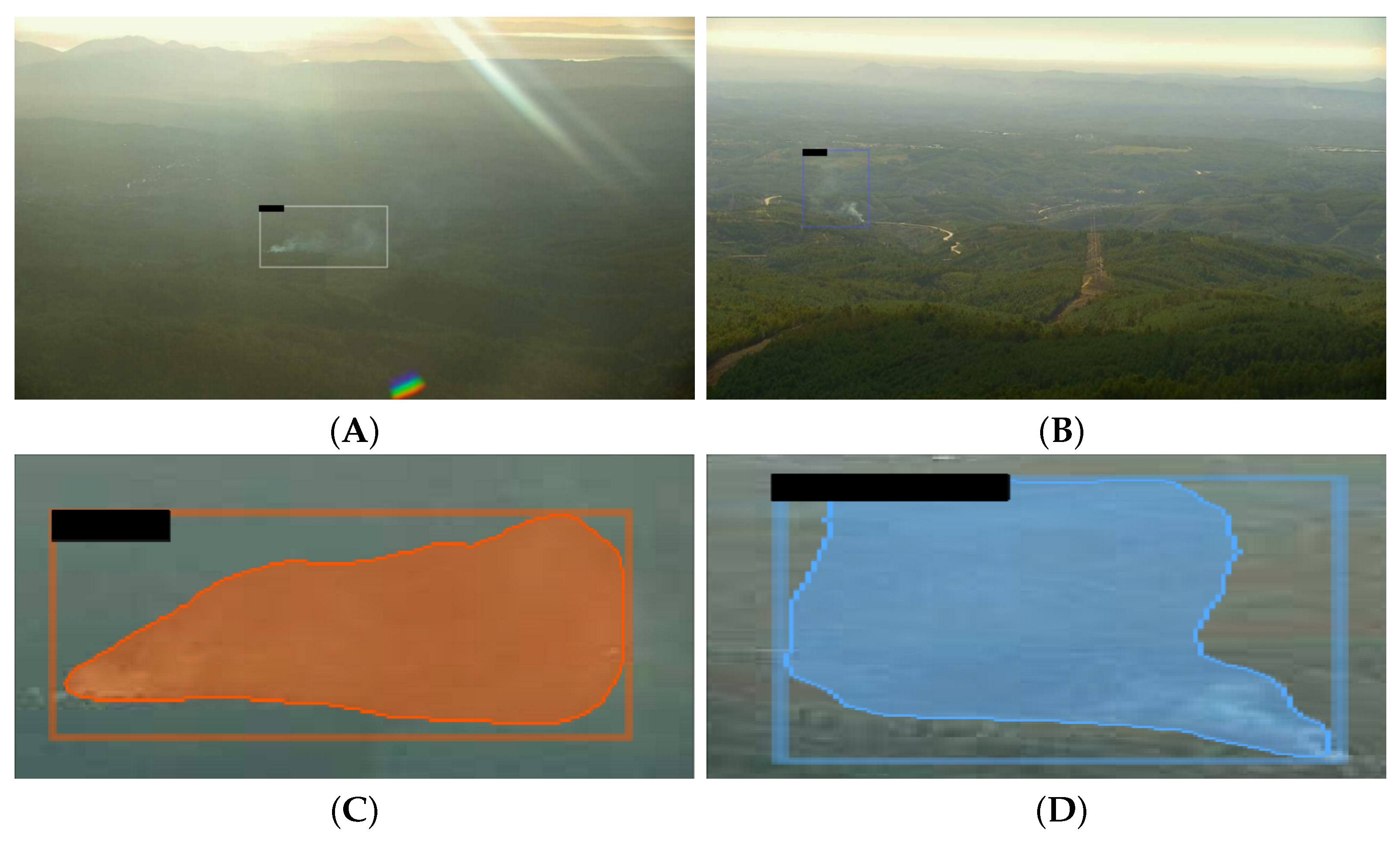

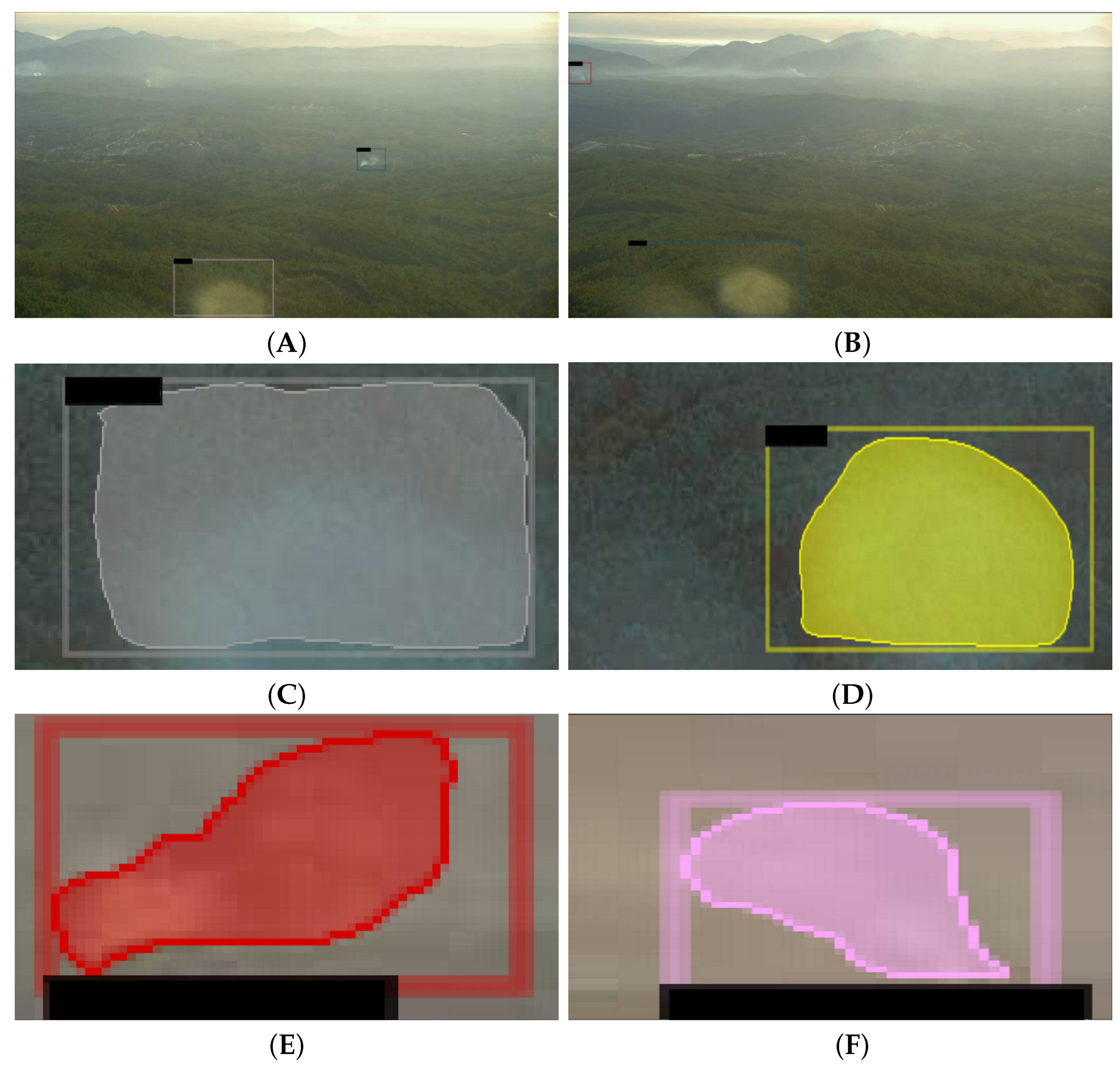

4.1. Smoke Segmentation

4.2. Smoke Classifier Based on Machine Learning

4.3. Temporal Evaluation of the Classification Algorithm

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. San-Miguel-Ayanz, J.; Durrant, T.; Boca, R.; Maianti, P.; Libertà, G.; Vivancos, T.A.; Oom, D.; Branco, A.; Rigo, D.T.; Ferrari, D.; et al. Forest Fires in Europe, Middle East and North Africa 2020; European Commission Joint Research Centre: Ispra, Italy, 2021.
2. Giglio, L.; Boschetti, L.; Roy, D.P.; Humber, M.L.; Justice, C.O. The Collection 6 MODIS burned area mapping algorithm and product. Remote Sens. Environ. 2018, 217, 72–85.
3. Haikerwal, A.; Reisen, F.; Sim, M.R.; Abramson, M.J.; Meyer, C.P.; Johnston, F.H.; Dennekamp, M. Impact of smoke from prescribed burning: Is it a public health concern? J. Air Waste Manag. Assoc. 2015, 65, 592–598.
4. Milne, M.; Clayton, H.; Dovers, S.; Cary, G.J. Evaluating benefits and costs of wildland fires: Critical review and future applications. Environ. Hazards 2014, 13, 114–132.
5. Oliveira, M.; Delerue-Matos, C.; Pereira, M.C.; Morais, S. Environmental particulate matter levels during 2017 large forest fires and megafires in the center region of Portugal: A public health concern? Int. J. Environ. Res. Public Health 2020, 17, 1032.
6. Jaffe, D.A.; Wigder, N.L. Ozone production from wildfires: A critical review. Atmos. Environ. 2012, 51, 1–10.
7. Finlay, S.E.; Moffat, A.; Gazzard, R.; Baker, D.; Murray, V. Health Impacts of Wildfires. PLoS Curr. 2012, 4, e4f959951cce2c.
8. Abedi, R. Forest fires (investigation of causes, damages and benefits). New Sci. Technol. 2020, 2, 183–187.
9. Alkhatib, A.A.A. A Review on Forest Fire Detection Techniques. Int. J. Distrib. Sens. Netw. 2014, 10, 597368.
10. Barmpoutis, P.; Papaioannou, P.; Dimitropoulos, K.; Grammalidis, N. A review on early forest fire detection systems using optical remote sensing. Sensors 2020, 20, 6442.
11. Jain, P.; Coogan, S.C.; Subramanian, S.G.; Crowley, M.; Taylor, S.; Flannigan, M.D. A review of machine learning applications in wildfire science and management. Environ. Rev. 2020, 28, 478–505.
12. Martyn, I.; Petrov, Y.; Stepanov, S.; Sidorenko, A.; Vagizov, M. Monitoring forest fires and their consequences using MODIS spectroradiometer data. IOP Conf. Ser. Earth Environ. Sci. 2020, 507, 12019.
13. Ba, R.; Chen, C.; Yuan, J.; Song, W.; Lo, S. SmokeNet: Satellite Smoke Scene Detection Using Convolutional Neural Network with Spatial and Channel-Wise Attention. Remote Sens. 2019, 11, 1702.
14. Priya, R.S.; Vani, K. Deep learning based forest fire classification and detection in satellite images. In Proceedings of the 2019 11th International Conference on Advanced Computing (ICoAC), Chennai, India, 18–20 December 2019; pp. 61–65.
15. Diez, Y.; Kentsch, S.; Fukuda, M.; Caceres, M.L.L.; Moritake, K.; Cabezas, M. Deep Learning in Forestry Using UAV-Acquired RGB Data: A Practical Review. Remote Sens. 2021, 13, 2837.
16. Kinaneva, D.; Hristov, G.; Raychev, J.; Zahariev, P. Early forest fire detection using drones and artificial intelligence. In Proceedings of the 2019 42nd International Convention on Information and Communication Technology, Electronics and Microelectronics (MIPRO), Opatija, Croatia, 20–24 May 2019; pp. 1060–1065.
17. Almeida, R.V.D.; Vieira, P. Forest Fire Finder—DOAS application to long-range forest fire detection. Atmos. Meas. Tech. 2017, 10, 2299–2311.
18. de Almeida, R.V.; Crivellaro, F.; Narciso, M.; Sousa, A.; Vieira, P. Bee2Fire: A Deep Learning Powered Forest Fire Detection System; SCITEPRESS-Science and Technology Publications: Setúbal, Portugal, 2020; Volume 2, pp. 603–609.
19. Guede-Fernández, F.; Martins, L.; de Almeida, R.V.; Gamboa, H.; Vieira, P. A Deep Learning Based Object Identification System for Forest Fire Detection. Fire 2021, 4, 75.
20. Peng, Y.; Wang, Y. Real-time forest smoke detection using hand-designed features and deep learning. Comput. Electron. Agric. 2019, 167, 105029.
21. Hough, G. ForestWatch—A long-range outdoor wildfire detection system. In Proceedings of the Resúmenes de las Comunicaciones de la IV Conferencia Internacional Sobre Incendios Forestales, Seville, Spain, 13–18 May 2007.
22. AlcheraX, I. FireScout | Wildfire Detection That Never Sleeps. Available online: https://firescout.ai/ (accessed on 1 April 2022).
23. Çetin, A.E.; Dimitropoulos, K.; Gouverneur, B.; Grammalidis, N.; Günay, O.; Habiboǧlu, Y.H.; Töreyin, B.U.; Verstockt, S. Video fire detection—Review. Digit. Signal Process. A Rev. J. 2013, 23, 1827–1843.
24. Chaturvedi, S.; Khanna, P.; Ojha, A. A survey on vision-based outdoor smoke detection techniques for environmental safety. ISPRS J. Photogramm. Remote Sens. 2022, 185, 158–187.
25. Kim, B.; Lee, J. A Bayesian Network-Based Information Fusion Combined with DNNs for Robust Video Fire Detection. Appl. Sci. 2021, 11, 7624.
26. Cazzolato, M.T.; Avalhais, L.P.; Chino, D.Y.; Ramos, J.S.; de Souza, J.A.; Rodrigues, J.F., Jr.; Traina, A.J. FiSmo: A Compilation of Datasets from Emergency Situations for Fire and Smoke Analysis. In Brazilian Symposium on Databases-SBBD; SBC: Uberlândia, Brazil, 2017.
27. Lee, Y.; Shim, J. False Positive Decremented Research for Fire and Smoke Detection in Surveillance Camera using Spatial and Temporal Features Based on Deep Learning. Electronics 2019, 8, 1167.
28. Wang, Y.; Dang, L.; Ren, J. Forest fire image recognition based on convolutional neural network. J. Algorithms Comput. Technol. 2019, 13, 1748302619887689.
29. Wu, X.; Lu, X.; Leung, H. An adaptive threshold deep learning method for fire and smoke detection. In Proceedings of the 2017 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Banff, AB, Canada, 5–8 October 2017; pp. 1954–1959.
30. Cao, Y.; Yang, F.; Tang, Q.; Lu, X. An attention enhanced bidirectional LSTM for early forest fire smoke recognition. IEEE Access 2019, 7, 154732–154742.
31. Ryu, J.; Kwak, D. Flame Detection Using Appearance-Based Pre-Processing and Convolutional Neural Network. Appl. Sci. 2021, 11, 5138.
32. Jiao, Z.; Zhang, Y.; Xin, J.; Mu, L.; Yi, Y.; Liu, H.; Liu, D. A deep learning based forest fire detection approach using UAV and YOLOv3. In Proceedings of the 2019 1st International Conference on Industrial Artificial Intelligence (IAI), Shenyang, China, 23–27 July 2019; pp. 1–5.
33. Wu, Y.; Kirillov, A.; Massa, F.; Lo, W.Y.; Girshick, R. Detectron2. 2019. Available online: https://github.com/facebookresearch/detectron2 (accessed on 2 March 2022).
34. Noor, S.; Waqas, M.; Saleem, M.I.; Minhas, H.N. Automatic Object Tracking and Segmentation Using Unsupervised SiamMask. IEEE Access 2021, 9, 106550–106559.
35. Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 936–944.
36. Wong, K.H. OpenLabeler. 2020. Available online: https://github.com/kinhong/OpenLabeler (accessed on 12 February 2022).
37. Ma, L.; Fu, T.; Blaschke, T.; Li, M.; Tiede, D.; Zhou, Z.; Ma, X.; Chen, D. Evaluation of feature selection methods for object-based land cover mapping of unmanned aerial vehicle imagery using random forest and support vector machine classifiers. ISPRS Int. J. Geo-Inf. 2017, 6, 51.
38. Haralick, R.M.; Shanmugam, K.; Dinstein, I. Textural Features for Image Classification. IEEE Trans. Syst. Man Cybern. 1973, SMC-3, 610–621.
39. van der Walt, S.; Schönberger, J.L.; Nunez-Iglesias, J.; Boulogne, F.; Warner, J.D.; Yager, N.; Gouillart, E.; Yu, T. Scikit-Image: Image processing in Python. PeerJ 2014, 2, e453.
40. Mingqiang, Y.; Kidiyo, K.; Joseph, R. A survey of shape feature extraction techniques. Pattern Recognit. 2008, 15, 43–90.
41. Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-Learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.
42. Maxwell, A.E.; Warner, T.A.; Fang, F. Implementation of machine-learning classification in remote sensing: An applied review. Int. J. Remote Sens. 2018, 39, 2784–2817.
43. Chen, M.; Wang, Q.; Li, X. Discriminant Analysis with Graph Learning for Hyperspectral Image Classification. Remote Sens. 2018, 10, 836.
44. Du, P.; Xia, J.; Zhang, W.; Tan, K.; Liu, Y.; Liu, S. Multiple classifier system for remote sensing image classification: A review. Sensors 2012, 12, 4764–4792.
45. Pan, H.; Badawi, D.; Cetin, A.E. Computationally efficient wildfire detection method using a deep convolutional network pruned via Fourier analysis. Sensors 2020, 20, 2891.
46. Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.
47. High Performance Wireless Research and Education Network (HPWREN), University of California San Diego. HPWREN Dataset. 2019. Available online: http://hpwren.ucsd.edu/HPWREN-FIgLib/ (accessed on 10 January 2022).

| Algorithms | Searched Parameters | Values or Variables |
|---|---|---|
| KNN | Number of Neighbors | 1, 2, 3, 4, 5, 6 |
| | Power Parameter | 1, 2 |
| DT | Criterion | gini, entropy |
| LDA | Solver | svd, lsqr, eigen |
| NB | Smoothing Variable | 0.00000001, 0.000000001, 0.00000001 |
| RF | Number of Estimators | 10, 50, 100, 500 |
| | Maximum Depth | 4, 6, 8, 10, 12, 14 |
| AdaBoost | Number of Estimators | 10, 50, 100 |
| | Learning Rate | 0.0001, 0.001, 0.01, 0.1, 1.0 |
| XGBoost | Default Parameters Used | Not applicable |
| Bagging | Number of Estimators | 10, 50, 100, 500 |
| MLP | Size of the Hidden Layer | (150, 100, 50), (120, 80, 40), (100, 50, 30) |
| | Max Number of Iterations | 100, 500, 1000 |
| | Activation Function | tanh, relu |
| | Solver Method | sgd, adam |
| | Alpha | 0.0001, 0.05 |
| | Learning Rate | constant, adaptive |
| | Learning Rate Initial Value | 0.001, 0.0005 |
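The parameter labels in the table correspond closely to scikit-learn [41] constructor arguments. The sketch below encodes the searched values as `GridSearchCV` grids. It assumes that mapping (Number of Neighbors → `n_neighbors`, Power Parameter → `p`, Smoothing Variable → `var_smoothing` of a Gaussian naïve Bayes, Learning Rate Initial Value → `learning_rate_init`), an exhaustive search, 5-fold cross-validation, and accuracy scoring; the text above confirms none of these choices. `SEARCH_SPACES`, `tune`, and `n_candidates` are hypothetical names.

```python
from sklearn.datasets import make_classification
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.ensemble import AdaBoostClassifier, BaggingClassifier, RandomForestClassifier
from sklearn.model_selection import GridSearchCV, ParameterGrid
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.neural_network import MLPClassifier
from sklearn.tree import DecisionTreeClassifier

# Hypothetical mapping of the table rows onto scikit-learn parameter names.
SEARCH_SPACES = {
    "KNN": (KNeighborsClassifier(), {"n_neighbors": [1, 2, 3, 4, 5, 6], "p": [1, 2]}),
    "DT": (DecisionTreeClassifier(random_state=0), {"criterion": ["gini", "entropy"]}),
    "LDA": (LinearDiscriminantAnalysis(), {"solver": ["svd", "lsqr", "eigen"]}),
    # The table lists 1e-8 twice; the duplicate value is dropped here.
    "NB": (GaussianNB(), {"var_smoothing": [1e-8, 1e-9]}),
    "RF": (RandomForestClassifier(random_state=0),
           {"n_estimators": [10, 50, 100, 500], "max_depth": [4, 6, 8, 10, 12, 14]}),
    "AdaBoost": (AdaBoostClassifier(random_state=0),
                 {"n_estimators": [10, 50, 100],
                  "learning_rate": [0.0001, 0.001, 0.01, 0.1, 1.0]}),
    "Bagging": (BaggingClassifier(random_state=0), {"n_estimators": [10, 50, 100, 500]}),
    "MLP": (MLPClassifier(random_state=0), {
        "hidden_layer_sizes": [(150, 100, 50), (120, 80, 40), (100, 50, 30)],
        "max_iter": [100, 500, 1000],
        "activation": ["tanh", "relu"],
        "solver": ["sgd", "adam"],
        "alpha": [0.0001, 0.05],
        "learning_rate": ["constant", "adaptive"],  # only used by the sgd solver
        "learning_rate_init": [0.001, 0.0005],
    }),
}
# XGBoost was run with default parameters, so it has no grid here.


def n_candidates(name):
    """Number of parameter combinations the grid for `name` expands to."""
    return len(ParameterGrid(SEARCH_SPACES[name][1]))


def tune(name, X, y, cv=5):
    """Exhaustively search one algorithm's grid and return the fitted search."""
    estimator, grid = SEARCH_SPACES[name]
    search = GridSearchCV(estimator, grid, cv=cv, scoring="accuracy")
    return search.fit(X, y)


if __name__ == "__main__":
    # Synthetic stand-in for the extracted smoke features.
    X, y = make_classification(n_samples=300, n_features=12, random_state=0)
    result = tune("KNN", X, y)
    print(result.best_params_, round(result.best_score_, 3))
    print({name: n_candidates(name) for name in SEARCH_SPACES})
```

The MLP grid alone expands to 288 combinations, so in practice it dominates the search time; a randomized search would be a cheaper alternative, though it would no longer be exhaustive.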

| Metric | Case 20 | Case 06 | Case 07 | Case 16 |
|---|---|---|---|---|
| bbAP | 48.88 | 48.06 | 21.48 | 25.41 |
| bbAP50 | 89.75 | 93.22 | 62.78 | 70.57 |
| bbAP75 | 34.67 | 26.62 | 2.44 | 5.77 |
| segAP | 38.63 | 37.61 | 17.14 | 20.12 |
| segAP50 | 83.22 | 84.36 | 57.74 | 67.57 |
| segAP75 | 34.67 | 26.62 | 2.44 | 5.77 |
| segAR | 47.60 | 45.50 | 33.40 | 36.40 |
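The suffixes in the metric names refer to intersection-over-union (IoU) thresholds: under the COCO convention a prediction counts as correct for AP50 or AP75 when its IoU with the ground truth reaches 0.5 or 0.75, and the unsuffixed AP averages over thresholds 0.50 to 0.95 in steps of 0.05; "bb" scores bounding boxes and "seg" scores masks. The table does not state its evaluation protocol, so the sketch assumes these COCO conventions (as reported by Detectron2 [33]). It only computes the IoU values that feed AP; `mask_iou`, `box_iou`, and `passes_thresholds` are hypothetical helpers.

```python
import numpy as np

# COCO-style IoU thresholds 0.50, 0.55, ..., 0.95 used for the unsuffixed AP.
COCO_IOU_THRESHOLDS = np.linspace(0.5, 0.95, 10)


def mask_iou(pred: np.ndarray, gt: np.ndarray) -> float:
    """IoU of two binary masks of identical shape (used for segAP)."""
    pred, gt = pred.astype(bool), gt.astype(bool)
    union = np.logical_or(pred, gt).sum()
    if union == 0:
        return 0.0
    return float(np.logical_and(pred, gt).sum() / union)


def box_iou(a, b) -> float:
    """IoU of two boxes given as (x1, y1, x2, y2) (used for bbAP)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def passes_thresholds(iou: float):
    """For each COCO threshold, whether a match with this IoU counts as a hit."""
    return [iou >= t for t in COCO_IOU_THRESHOLDS]


if __name__ == "__main__":
    pred = np.zeros((10, 10), dtype=bool)
    gt = np.zeros((10, 10), dtype=bool)
    pred[0:4, 0:4] = True
    gt[2:6, 2:6] = True
    iou = mask_iou(pred, gt)  # 4 shared pixels / 28 pixels in the union
    print(f"IoU={iou:.3f}, hit at 0.5: {iou >= 0.5}, hit at 0.75: {iou >= 0.75}")
```

A detection with IoU 0.62 would count toward AP50 but not AP75, which is why AP75 drops sharply for smoke, whose diffuse boundaries make tight overlaps hard to achieve.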

| Algorithms | No PCA | With PCA |
|---|---|---|
| MLP | 84.3 | 82.9 |
| Random Forests | 81.3 | 82.9 |
| Bagging | 81.45 | 82.9 |
| LDA | 73.9 | 69.8 |
| Naïve Bayes | 60.13 | 61.8 |
| Decision Trees | 74.2 | 78.0 |
| k-Nearest Neighbor | 57.2 | 56.4 |
| AdaBoost | 76.3 | 78.1 |
| XGBoost | 81.4 | 84.5 |
| Voting Algorithm | 84.6 | |
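A minimal sketch of how a "No PCA" versus "With PCA" comparison and a voting ensemble can be set up with scikit-learn [41]. Everything specific here is an assumption, not the authors' configuration: standardization before PCA, PCA retaining 95% of the variance, 5-fold cross-validated accuracy, and a soft-voting ensemble of MLP, Random Forests, and Bagging. XGBoost is left out only to stay within scikit-learn. `make_classification` supplies synthetic data in place of the smoke features, and `build`, `voting_ensemble`, and `compare` are hypothetical names.

```python
from sklearn.datasets import make_classification
from sklearn.decomposition import PCA
from sklearn.ensemble import BaggingClassifier, RandomForestClassifier, VotingClassifier
from sklearn.model_selection import cross_val_score
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler


def build(model, use_pca, variance=0.95):
    """Scale features, optionally project onto principal components, then classify."""
    steps = [StandardScaler()]
    if use_pca:
        # A float in (0, 1) keeps as many components as needed for that variance.
        steps.append(PCA(n_components=variance))
    steps.append(model)
    return make_pipeline(*steps)


def voting_ensemble():
    """Soft voting averages the members' predicted class probabilities."""
    return VotingClassifier(
        estimators=[
            ("mlp", MLPClassifier(max_iter=1000, random_state=0)),
            ("rf", RandomForestClassifier(random_state=0)),
            ("bag", BaggingClassifier(random_state=0)),
        ],
        voting="soft",
    )


def compare(X, y, models, cv=5):
    """Mean cross-validated accuracy of each model without and with PCA."""
    results = {}
    for name, model in models.items():
        # cross_val_score clones the pipeline, so reusing `model` twice is safe.
        no_pca = cross_val_score(build(model, False), X, y, cv=cv).mean()
        with_pca = cross_val_score(build(model, True), X, y, cv=cv).mean()
        results[name] = (no_pca, with_pca)
    return results


if __name__ == "__main__":
    X, y = make_classification(n_samples=400, n_features=20, n_informative=8, random_state=0)
    models = {"Random Forests": RandomForestClassifier(random_state=0),
              "Voting": voting_ensemble()}
    for name, (plain, reduced) in compare(X, y, models).items():
        print(f"{name}: no PCA {plain:.3f}, with PCA {reduced:.3f}")
```

Fitting PCA inside the pipeline means the projection is learned on each training fold only, so the "With PCA" scores are not inflated by information from the held-out fold.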

Total Detections: 10,333. Total Images: 174,703.

| | After First Classifier | After Second Classifier |
|---|---|---|
| TP | 145 | 104 |
| FP | 10,188 | 411 |
| TN | 0 | 9777 |
| FN | 0 | 41 |
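Each column of the confusion counts sums to the 10,333 total detections, which suggests that the counts are taken over the first stage's detections rather than over all 174,703 images. Under that assumption, recall here is relative to what the first classifier detected. The sketch derives the usual rates from the reported counts; `Confusion` and `fp_reduction` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Confusion:
    """Confusion-matrix counts for one stage of the detection pipeline."""
    tp: int
    fp: int
    tn: int
    fn: int

    @property
    def total(self) -> int:
        return self.tp + self.fp + self.tn + self.fn

    def precision(self) -> float:
        return self.tp / (self.tp + self.fp)

    def recall(self) -> float:
        return self.tp / (self.tp + self.fn)

    def specificity(self) -> float:
        negatives = self.tn + self.fp
        return self.tn / negatives if negatives else 0.0

    def accuracy(self) -> float:
        return (self.tp + self.tn) / self.total


# Counts transcribed from the table above.
FIRST = Confusion(tp=145, fp=10_188, tn=0, fn=0)
SECOND = Confusion(tp=104, fp=411, tn=9_777, fn=41)


def fp_reduction(before: Confusion, after: Confusion) -> float:
    """Fraction of the earlier stage's false positives that the later stage removes."""
    return 1 - after.fp / before.fp


if __name__ == "__main__":
    for label, c in (("first", FIRST), ("second", SECOND)):
        print(f"{label}: n={c.total} precision={c.precision():.3f} "
              f"recall={c.recall():.3f} accuracy={c.accuracy():.3f}")
    print(f"false positives removed: {fp_reduction(FIRST, SECOND):.1%}")
```

With these counts, the second classifier keeps 411 of the 10,188 false positives (about 96% removed) and 104 of the 145 true detections (recall of about 0.72). Precision rises from about 0.014 to about 0.20.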

| Video Name | Time Elapsed, Method [45] (min) | Time Elapsed, Proposed Classifier (min) | Time Elapsed, First Classifier [19] (min) |
|---|---|---|---|
| Lyons Fire | 8 | 5 | 5 |
| Holy Fire East View | 11 | 3 | 2 |
| Holy Fire South View | 9 | 2 | 1 |
| Palisades Fire | 3 | 7 | 5 |
| Palomar Mountain Fire | 13 | 18 | 10 |
| Highway Fire | 2 | 4 | 2 |
| Tomahawk Fire | 5 | 5 | 3 |
| DeLuz Fire | 11 | 22 | 16 |
| 20190529_94Fire_lp-s-mobo-c | N.A. | 3 | 3 |
| 20190610_FIRE_bh-w-mobo-c | N.A. | 33 | 5 |
| 20190716_FIRE_bl-s-mobo-c | N.A. | 18 | 18 |
| 20190924_FIRE_sm-n-mobo-c | N.A. | 8 | 7 |
| 20200611_skyline_lp-n-mobo-c | N.A. | 6 | 4 |
| 20200806_SpringsFire_lp-w-mobo-c | N.A. | 1 | 1 |
| 20200822_BrattonFire_lp-e-mobo-c | N.A. | 28 | 5 |
| 20200905_ValleyFire_lp-n-mobo-c | N.A. | 14 | 3 |
| 20160722_FIRE_mw-e-mobo-c | N.A. | N.D. | 5 |
| 20170520_FIRE_lp-s-iqeye | N.A. | 10 | 2 |
| 20170625_BBM_bm-n-mobo | N.A. | N.D. | 21 |
| 20170708_Whittier_syp-n-mobo-c | N.A. | 9 | 5 |
| 20170722_FIRE_so-s-mobo-c | N.A. | 16 | 13 |
| 20180504_FIRE_smer-tcs8-mobo-c | N.A. | 16 | 9 |
| 20180504_FIRE_smer-tcs8-mobo-c | N.A. | 3 | 3 |
| 20180809_FIRE_mg-w-mobo-c | N.A. | 8 | 2 |
| 20200822_BrattonFire_lp-s-mobo-c | N.A. | 3 | 3 |
| 20200905_ValleyFire_pi-w-mobo-c | N.A. | 6 | 6 |
| 20200930_BoundaryFire_wc-e-mobo-c | N.A. | 1 | 1 |
| 0200930_inMexico_lp-s-mobo-c | N.A. | 10 | 10 |
| 20200808_OliveFire_wc-e-mobo-c | N.A. | 5 | 5 |
| 0200905_ValleyFire_sm-e-mobo-c | N.A. | 10 | 10 |
| 20200813_Ranch2Fire_wilson-e-mobo-c | N.A. | 4 | 4 |
| 20200930_inMexico_om-e-mobo-c | N.A. | 10 | 3 |
| Mean ± sd for 1–8 | 7.8 ± 3.8 | 8.3 ± 5.5 | 5.5 ± 3.8 |
| Mean ± sd for 1–24 | N.A. | 10.9 ± 6.3 | 6.3 ± 4.2 |
| Mean ± sd for 1–32 | N.A. | 9.6 ± 6.0 | 6.0 ± 3.8 |
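The summary rows can be checked against the per-video times. The sketch transcribes the three columns in row order and recomputes the means. It assumes that N.A. and N.D. cells are excluded, which is the only way the proposed classifier's 1–24 mean of 10.9 is reproduced (239 min over 22 detected videos). The table does not say which standard-deviation convention it uses, so the spread is not recomputed. `mean_detected` and the list names are hypothetical.

```python
# Detection times (min) transcribed from the table, in row order.
# None marks an N.A. or N.D. cell; such cells are skipped when averaging.
METHOD_45 = [8, 11, 9, 3, 13, 2, 5, 11]  # reported only for rows 1-8
PROPOSED = [5, 3, 2, 7, 18, 4, 5, 22,
            3, 33, 18, 8, 6, 1, 28, 14,
            None, 10, None, 9, 16, 16, 3, 8,
            3, 6, 1, 10, 5, 10, 4, 10]
FIRST_CLASSIFIER = [5, 2, 1, 5, 10, 2, 3, 16,
                    3, 5, 18, 7, 4, 1, 5, 3,
                    5, 2, 21, 5, 13, 9, 3, 2,
                    3, 6, 1, 10, 5, 10, 4, 3]


def mean_detected(times, n_rows):
    """Mean over the first n_rows entries, ignoring cells without a value."""
    values = [t for t in times[:n_rows] if t is not None]
    return sum(values) / len(values)


if __name__ == "__main__":
    print(f"rows 1-8, method [45]: {mean_detected(METHOD_45, 8):.2f}")
    for n in (8, 24, 32):
        print(f"rows 1-{n}: proposed {mean_detected(PROPOSED, n):.2f}, "
              f"first classifier {mean_detected(FIRST_CLASSIFIER, n):.2f}")
```

The recomputed means are 7.75, 8.25, and 5.50 for rows 1–8; 10.86 and 6.25 for rows 1–24; and 9.60 and 6.00 for rows 1–32. Each agrees with the table after rounding half up to one decimal.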

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Martins, L.; Guede-Fernández, F.; Valente de Almeida, R.; Gamboa, H.; Vieira, P. Real-Time Integration of Segmentation Techniques for Reduction of False Positive Rates in Fire Plume Detection Systems during Forest Fires. Remote Sens. 2022, 14, 2701. https://doi.org/10.3390/rs14112701
