Hyperspectral UAV Images at Different Altitudes for Monitoring the Leaf Nitrogen Content in Cotton Crops

Abstract

1. Introduction

2. Materials and Methods

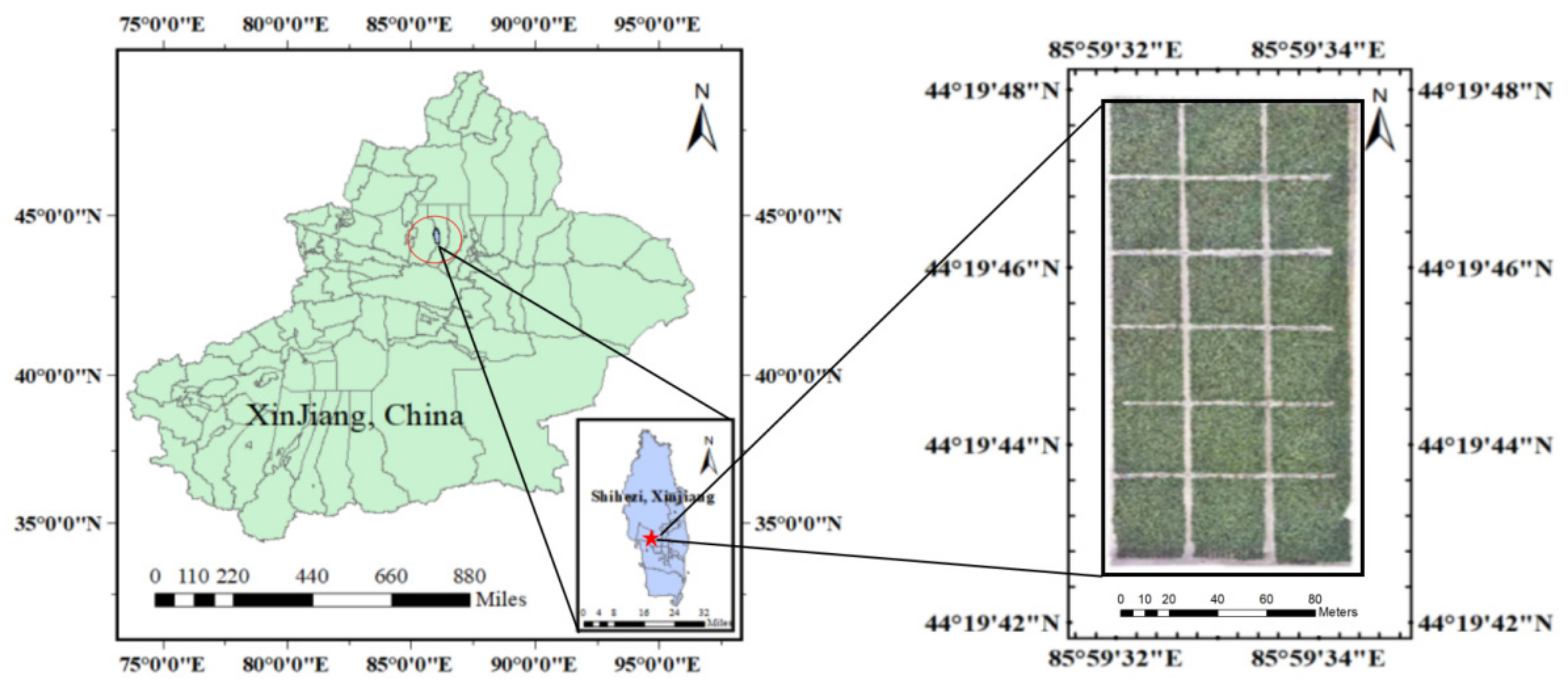

2.1. Experiment Management and Data Extraction

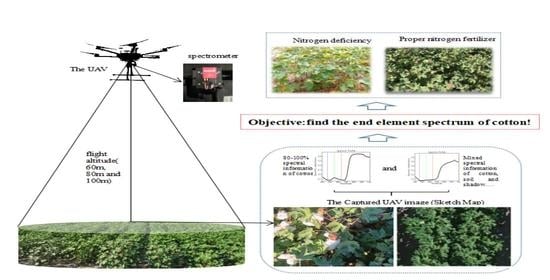

2.1.1. UAV Platforms and Airborne Sensors

2.1.2. Hyperspectral Reflectance Extraction of UAV

2.2. Collection of Leaf Samples and Laboratory Measurement of the Leaf Nitrogen Content (LNC)

2.3. Spectral Pretreatment Method

2.4. Build and Validate Models

- ① Multiple linear regression (MLR).
- ② Partial least-squares regression (PLSR).
- ③ Principal component regression (PCR).
- ④ Support vector regression (SVR).

2.5. Evaluation of Model Performance

2.6. The Software

3. Results

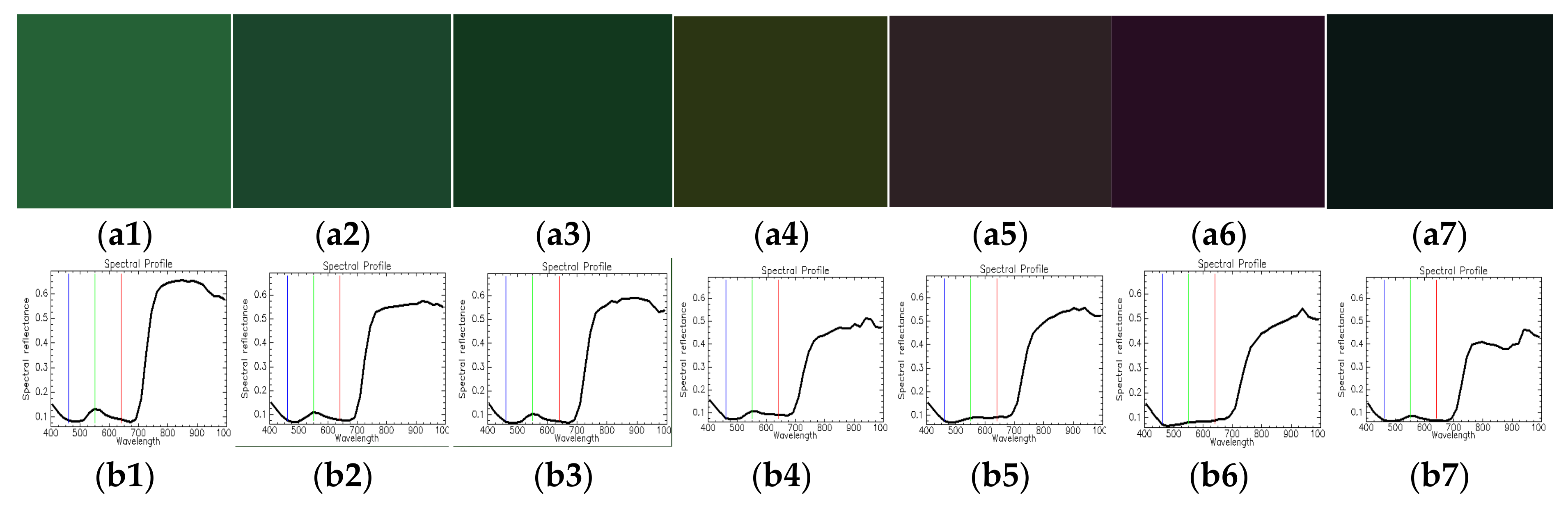

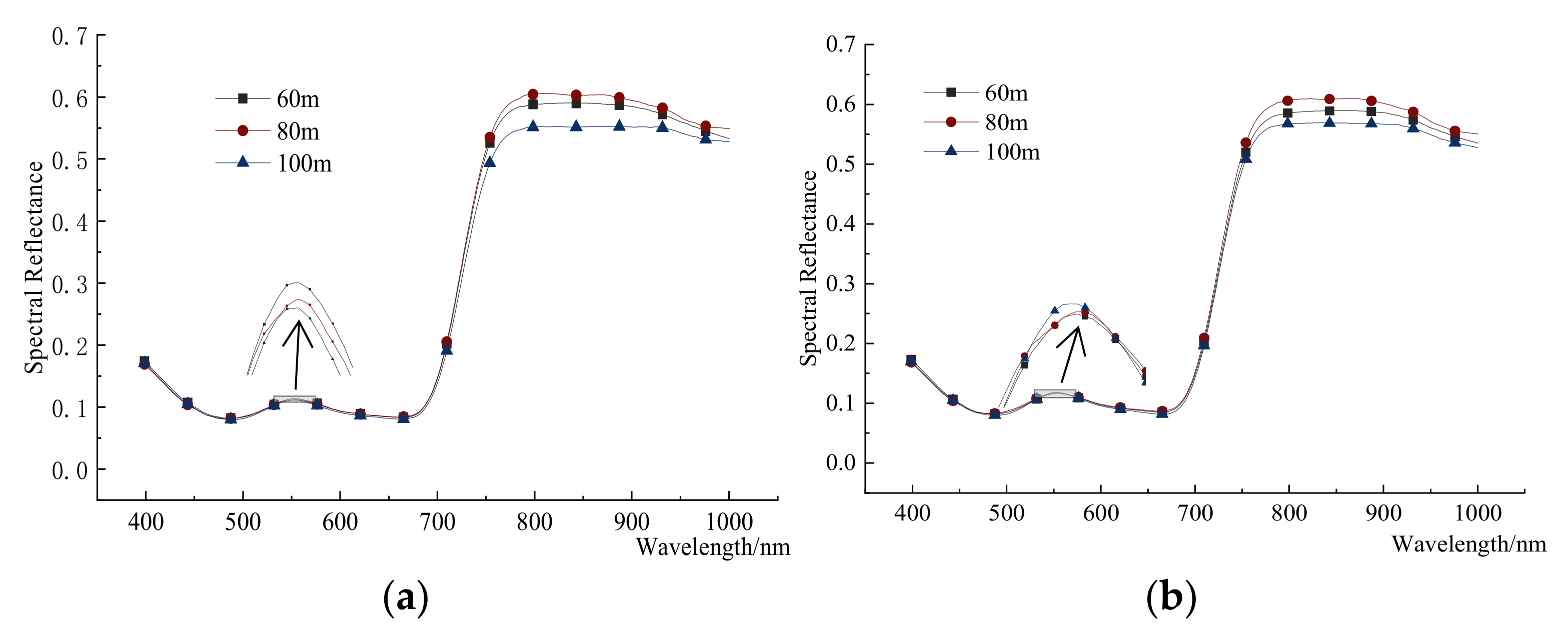

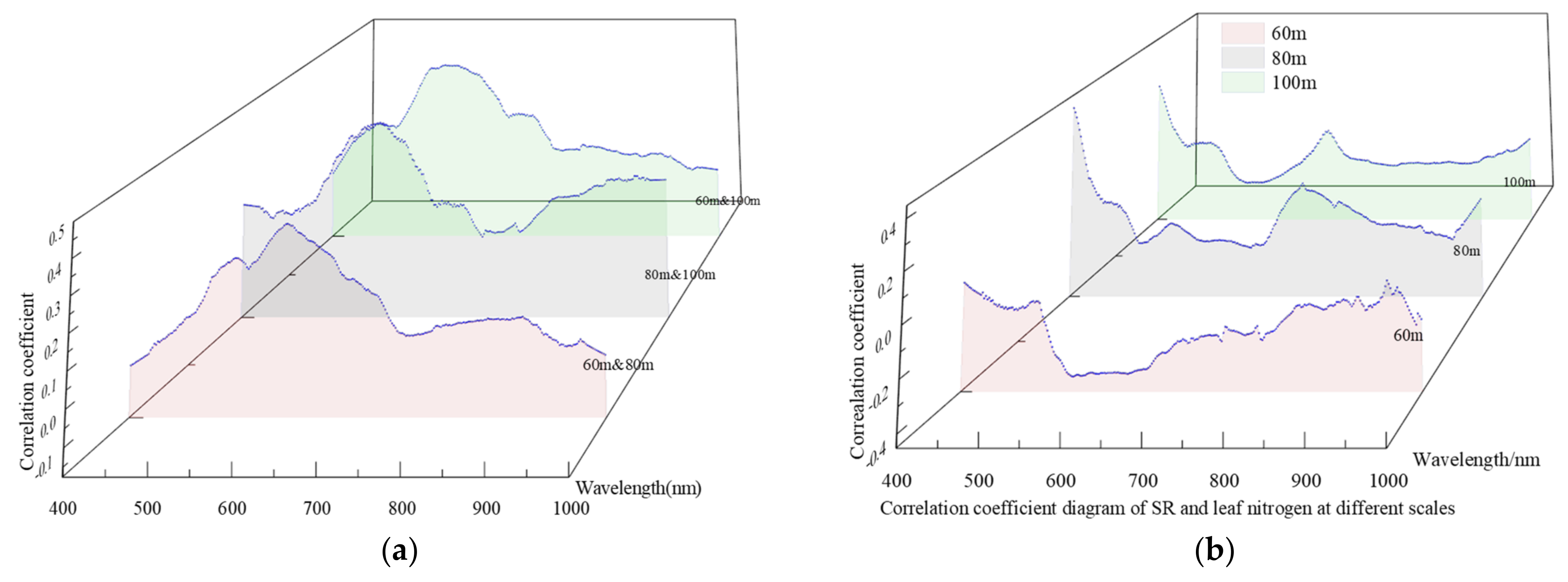

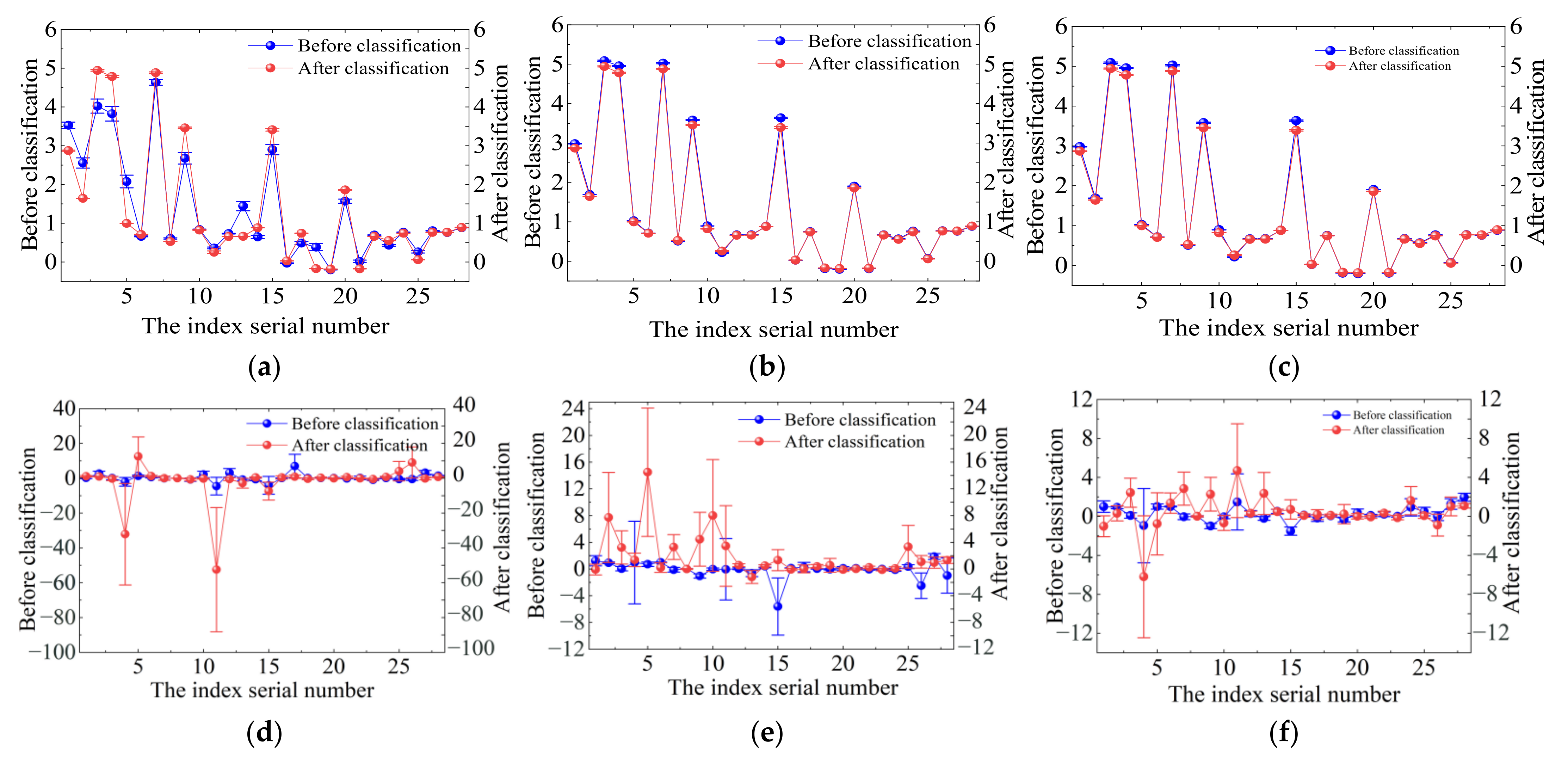

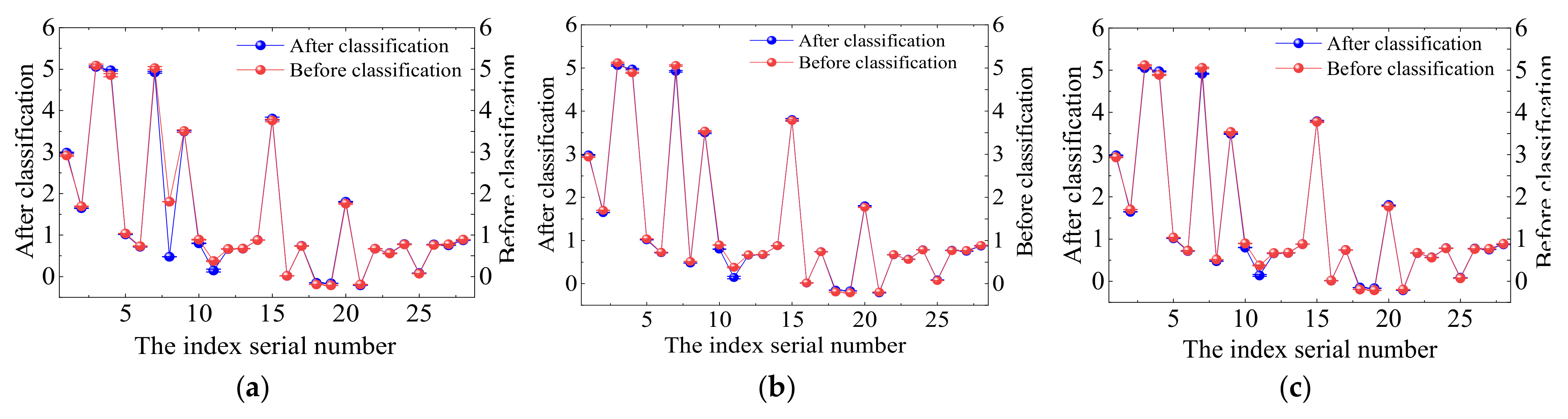

3.1. The Regular SR Multiple Scales

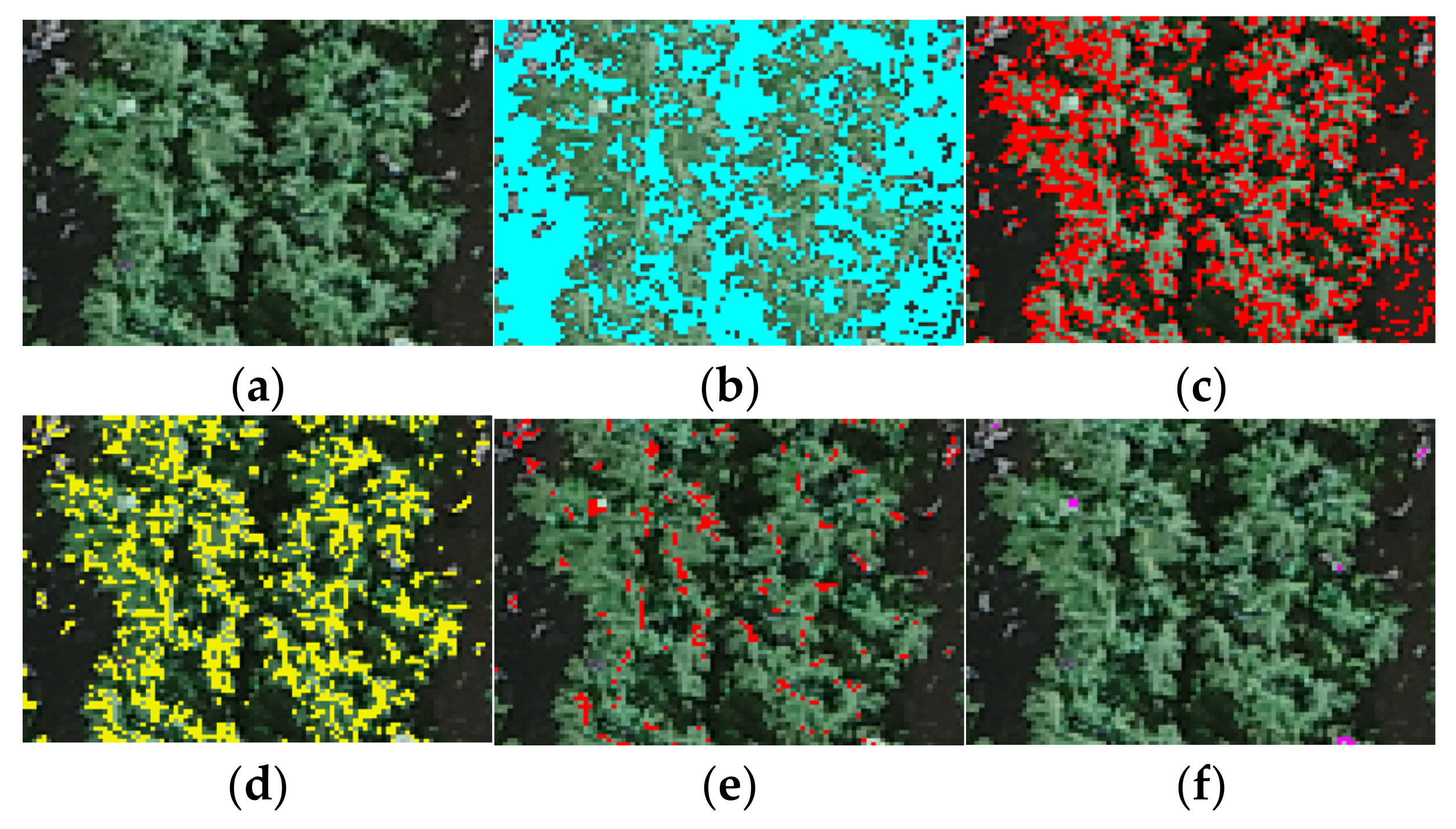

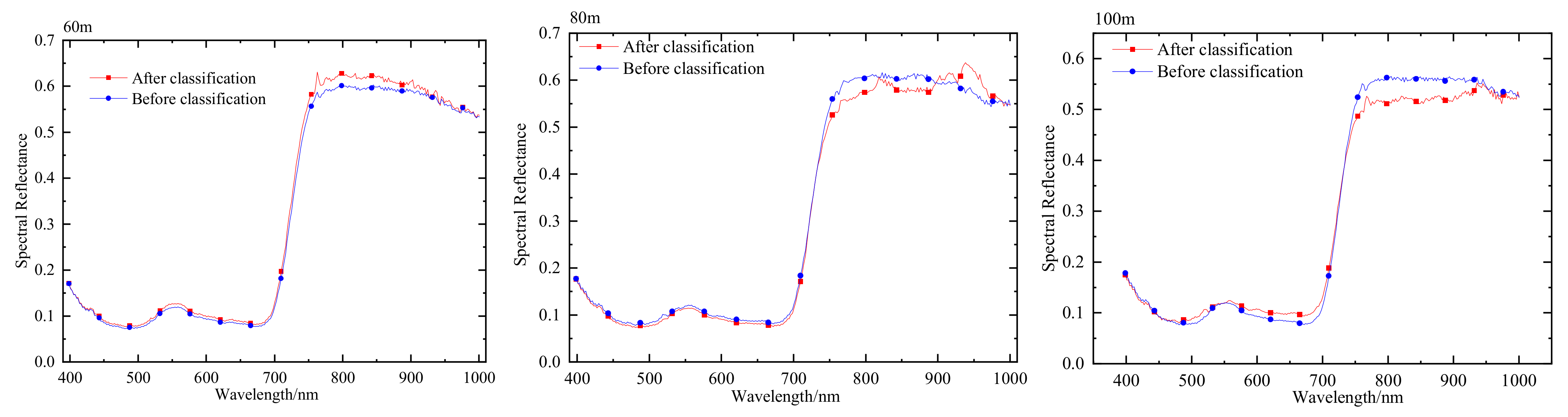

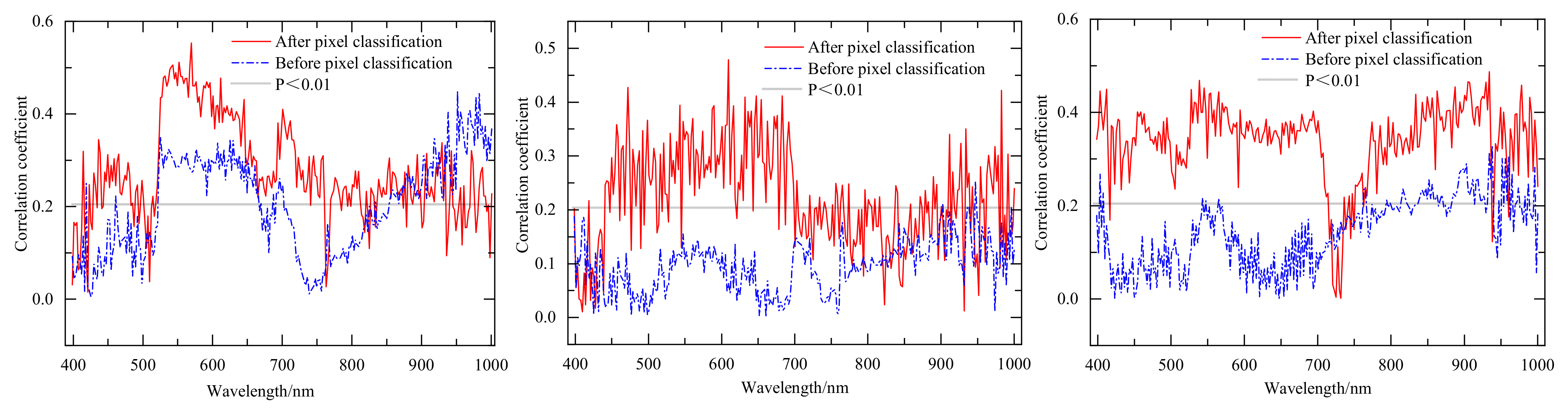

3.2. Research on Anti-Jamming Algorithm of UAV Hyperspectral Image

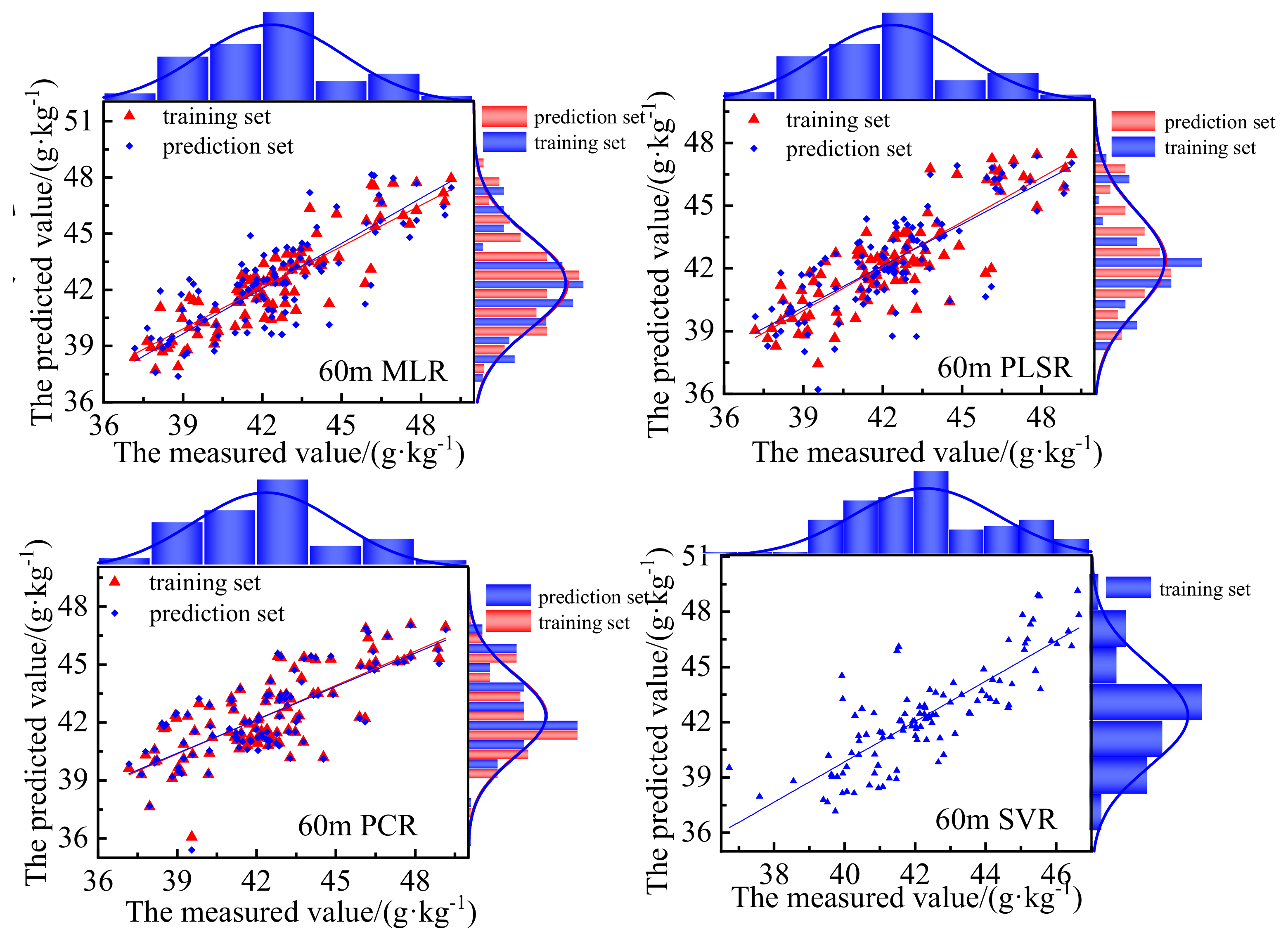

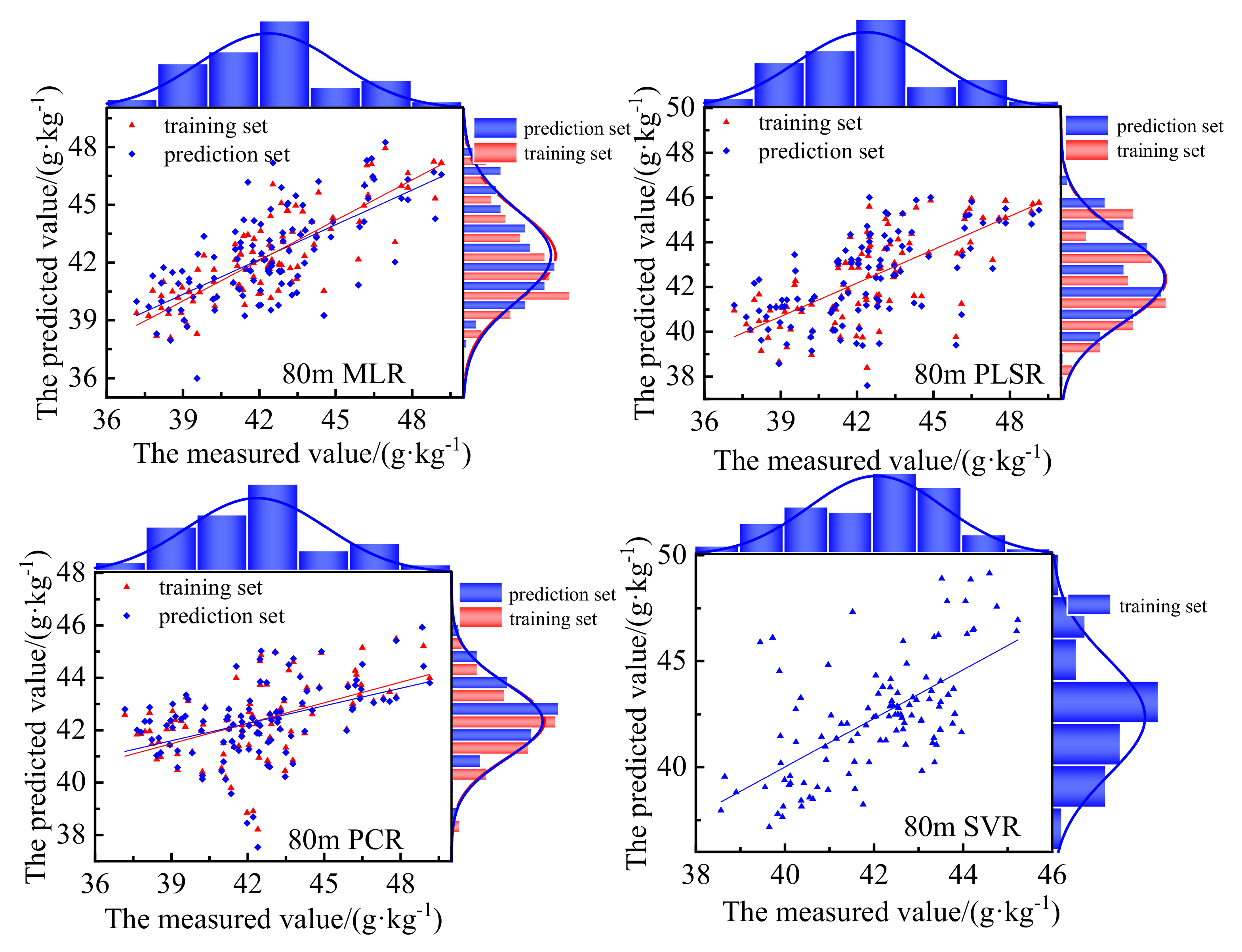

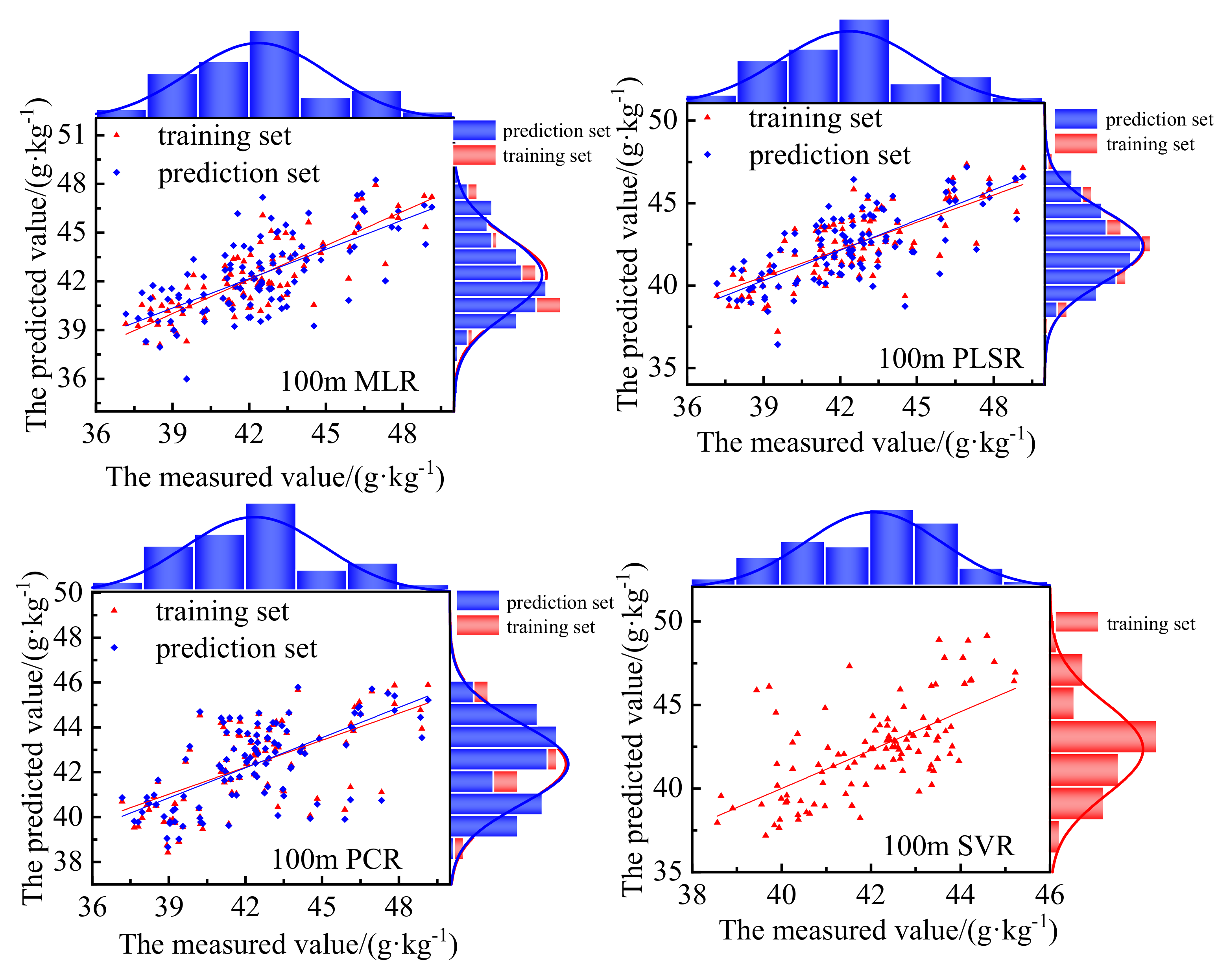

3.3. The Model for Cotton LNC

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Base Fertilizer (30%) | N Application Stage (70%; 330 × 0.7 = 231 kg ha⁻¹) | | |
|---|---|---|---|
| | Date | Proportion | Detailed Fertilizer Usage (kg ha⁻¹) |
| 99 kg ha⁻¹ | 13 June | 12% | 27.72 |
| | 23 June | 12% | 27.72 |
| | 14 July | 15% | 34.65 |
| | 25 July | 17% | 39.27 |
| | 5 August | 20% | 46.2 |
| | 16 August | 24% | 55.44 |
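The arithmetic behind the fertilizer table can be reproduced with a short sketch. It assumes that the seasonal total is 330 kg ha⁻¹ (inferred from the "330 × 0.7" entry), that 30% is applied as base fertilizer, and that the listed percentages are shares of the remaining 231 kg ha⁻¹. The function and variable names are hypothetical and are not taken from the paper.

```python
# Sketch of the N split shown in the fertilizer table.
# Assumption: percentages per date are shares of the topdressed amount,
# which matches the table (e.g. 231 * 12 / 100 = 27.72).

# Topdressing dates and their share (in percent) of the topdressed N.
TOPDRESS_PERCENT = {
    "13 June": 12,
    "23 June": 12,
    "14 July": 15,
    "25 July": 17,
    "5 August": 20,
    "16 August": 24,
}


def split_nitrogen(total_kg_ha, base_percent, topdress_percent):
    """Return (base, topdress_total, per-date doses), all in kg/ha."""
    if sum(topdress_percent.values()) != 100:
        raise ValueError("topdressing shares must add up to 100%")
    base = total_kg_ha * base_percent / 100
    topdress_total = total_kg_ha - base
    doses = {
        date: topdress_total * percent / 100
        for date, percent in topdress_percent.items()
    }
    return base, topdress_total, doses


if __name__ == "__main__":
    base, topdress_total, doses = split_nitrogen(330, 30, TOPDRESS_PERCENT)
    print(f"base: {base:.2f} kg/ha, topdressed: {topdress_total:.2f} kg/ha")
    for date, dose in doses.items():
        print(f"{date}: {dose:.2f} kg/ha")
```

Keeping the shares as integer percentages makes the check that they add up to 100% exact, so a mistyped share is caught before any dose is computed.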

| Parameter | Value |
|---|---|
| Leverage limit | 2.0 |
| Sample outlier limit, calibration | 3.0 |
| Individual value outlier, calibration | 3.0 |
| Individual value outlier, validation | 3.0 |
| Variable outlier limit, calibration | 2.0 |
| Variable outlier limit, validation | 3.0 |
| Total explained variance (%) | 20 |
| Ratio of calibrated to validated residual variance | 0.5 |
| Ratio of validated to calibrated residual variance | 0.70 |
| Residual variance increase limit (%) | 6.0 |

| UAV Flight Altitude | Modeling Method | Test Set R² | Test Set RMSE | Test Set MAE | Validation Set R² | Validation Set RMSE | Validation Set MAE |
|---|---|---|---|---|---|---|---|
| 60 m | MLR | 0.80 | 0.41 | 0.95 | 0.63 | 1.66 | 1.31 |
| 60 m | PLSR | 0.71 | 1.43 | 1.30 | 0.61 | 1.74 | 1.30 |
| 60 m | SVR | 0.67 | 1.77 | 1.17 | 0.62 | 1.68 | 1.31 |
| 60 m | PCR | 0.59 | 1.74 | 1.39 | 1.56 | 1.82 | 1.46 |
| 80 m | MLR | 0.72 | 1.67 | 1.16 | 1.47 | 1.99 | 1.61 |
| 80 m | PLSR | 0.49 | 1.92 | 1.56 | 0.35 | 2.19 | 1.79 |
| 80 m | SVR | 0.44 | 2.09 | 1.63 | 0.27 | 2.33 | 1.77 |
| 80 m | PCR | 0.26 | 2.34 | 1.89 | 0.19 | 2.47 | 2.00 |
| 100 m | MLR | 0.69 | 1.75 | 1.18 | 0.46 | 2.06 | 1.62 |
| 100 m | PLSR | 0.61 | 1.69 | 1.35 | 0.47 | 1.97 | 1.56 |
| 100 m | PCR | 0.45 | 2.02 | 1.57 | 0.41 | 2.16 | 1.66 |
| 100 m | SVR | 0.40 | 1.67 | 1.59 | 0.29 | 2.31 | 1.16 |
| 60, 80, and 100 m | MLR | 0.96 | 1.12 | 1.57 | 0.47 | 2.43 | 1.57 |
| 60, 80, and 100 m | SVR | 0.71 | 1.48 | 1.08 | 0.66 | 1.59 | 1.19 |
| 60, 80, and 100 m | PLSR | 0.63 | 1.66 | 1.19 | 0.58 | 1.77 | 1.36 |
| 60, 80, and 100 m | PCR | 0.59 | 1.74 | 1.36 | 0.54 | 1.86 | 1.45 |
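The model performance table reports R², RMSE, and MAE. This section does not give the formulas, so the sketch below assumes the standard definitions: the coefficient of determination, the root-mean-square error, and the mean absolute error between measured and predicted LNC. The function name and the sample values are hypothetical and are not data from the study.

```python
import math


def regression_metrics(measured, predicted):
    """Return (R2, RMSE, MAE) for paired measured and predicted values.

    Assumes the standard definitions:
      R2   = 1 - SS_res / SS_tot
      RMSE = sqrt(SS_res / n)
      MAE  = mean(|measured - predicted|)
    """
    n = len(measured)
    if n == 0 or n != len(predicted):
        raise ValueError("inputs must be non-empty and of equal length")

    residuals = [m - p for m, p in zip(measured, predicted)]
    mean_measured = sum(measured) / n

    ss_res = sum(r * r for r in residuals)
    ss_tot = sum((m - mean_measured) ** 2 for m in measured)
    if ss_tot == 0:
        raise ValueError("R2 is undefined when all measured values are equal")

    r2 = 1 - ss_res / ss_tot
    rmse = math.sqrt(ss_res / n)
    mae = sum(abs(r) for r in residuals) / n
    return r2, rmse, mae


if __name__ == "__main__":
    # Hypothetical LNC values, for illustration only.
    measured = [30.0, 32.0, 34.0, 36.0]
    predicted = [31.0, 31.0, 35.0, 35.0]
    r2, rmse, mae = regression_metrics(measured, predicted)
    print(f"R2={r2:.2f} RMSE={rmse:.2f} MAE={mae:.2f}")
```

Under these definitions R² cannot exceed 1, and RMSE is never smaller than MAE for the same set of residuals, which gives two quick sanity checks on reported metrics.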

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yin, C.; Lv, X.; Zhang, L.; Ma, L.; Wang, H.; Zhang, L.; Zhang, Z. Hyperspectral UAV Images at Different Altitudes for Monitoring the Leaf Nitrogen Content in Cotton Crops. Remote Sens. 2022, 14, 2576. https://doi.org/10.3390/rs14112576
