Multi-Hand Gesture Recognition Using Automotive FMCW Radar Sensor

Abstract

1. Introduction

2. Radar Signal Processing

2.1. IF Signal of FMCW Radar

2.2. Theory of Parameters Estimation

2.2.1. Range Estimation

2.2.2. Doppler Estimation

2.2.3. Angle Estimation

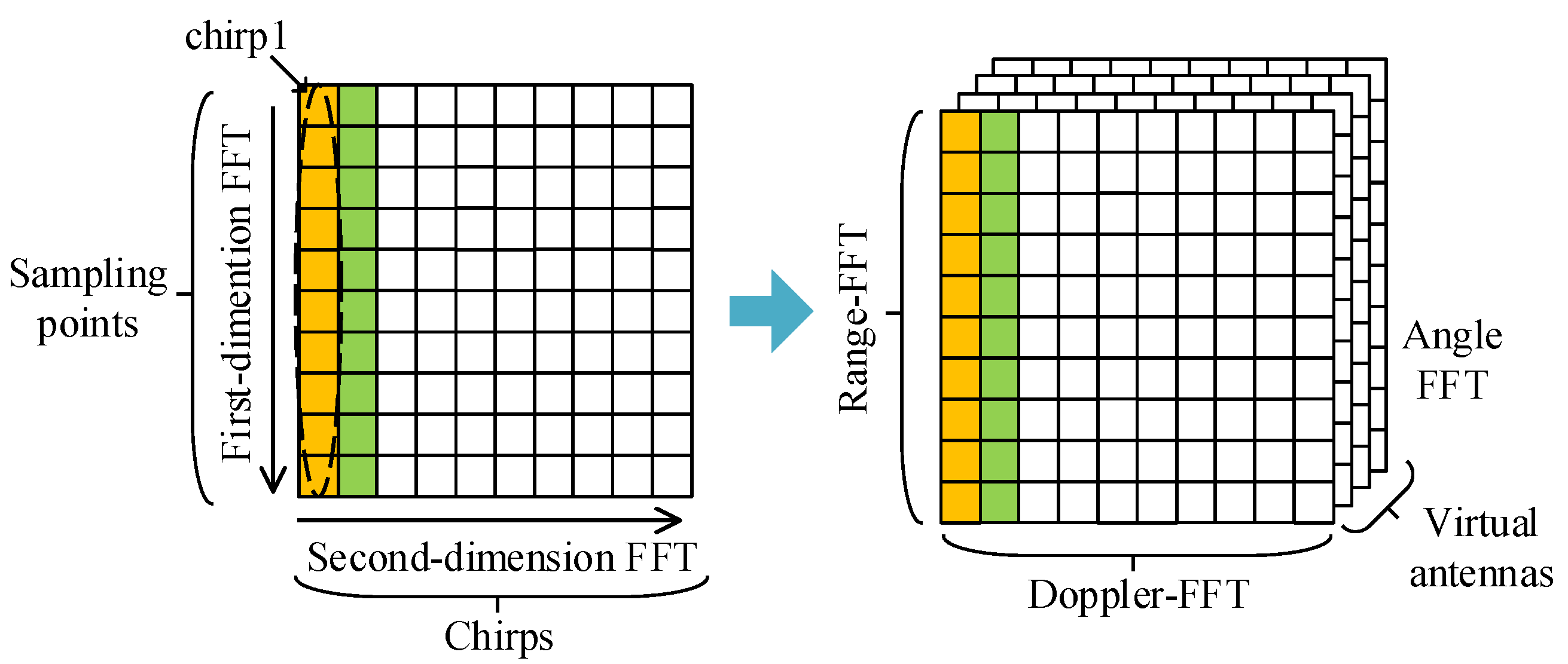
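The three estimators named in Section 2.2 can be illustrated with the standard FMCW relations. The sketch below is a generic illustration under stated assumptions, not a reproduction of the paper's own derivation: the function names and the argument names (`slope_hz_per_s`, `t_chirp_s`, `spacing_m`) are hypothetical, and a single point target with unambiguous phase is assumed.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def range_from_beat(f_beat_hz, slope_hz_per_s):
    """Range from the IF (beat) frequency: R = c * f_b / (2 * S)."""
    return C * f_beat_hz / (2.0 * slope_hz_per_s)


def velocity_from_phase(delta_phi_rad, wavelength_m, t_chirp_s):
    """Radial velocity from the phase change between consecutive chirps:
    v = lambda * delta_phi / (4 * pi * T_c)."""
    return wavelength_m * delta_phi_rad / (4.0 * math.pi * t_chirp_s)


def angle_from_phase(delta_phi_rad, wavelength_m, spacing_m):
    """Angle of arrival from the phase difference between adjacent receive
    antennas: theta = asin(lambda * delta_phi / (2 * pi * d))."""
    return math.asin(wavelength_m * delta_phi_rad / (2.0 * math.pi * spacing_m))


if __name__ == "__main__":
    # Illustrative inputs only: a 1 MHz beat tone with a 105.202 MHz/us slope,
    # and a quarter-cycle phase step across antennas spaced half a wavelength apart.
    print(range_from_beat(1e6, 105.202e12))
    print(math.degrees(angle_from_phase(math.pi / 2, 3.9e-3, 1.95e-3)))
```

All three relations share the same structure: a measured frequency or phase difference is scaled by a known system constant, which is why the same FFT machinery can serve range, Doppler, and angle.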

2.3. 3D-FFT-Based RDM and RAM Construction

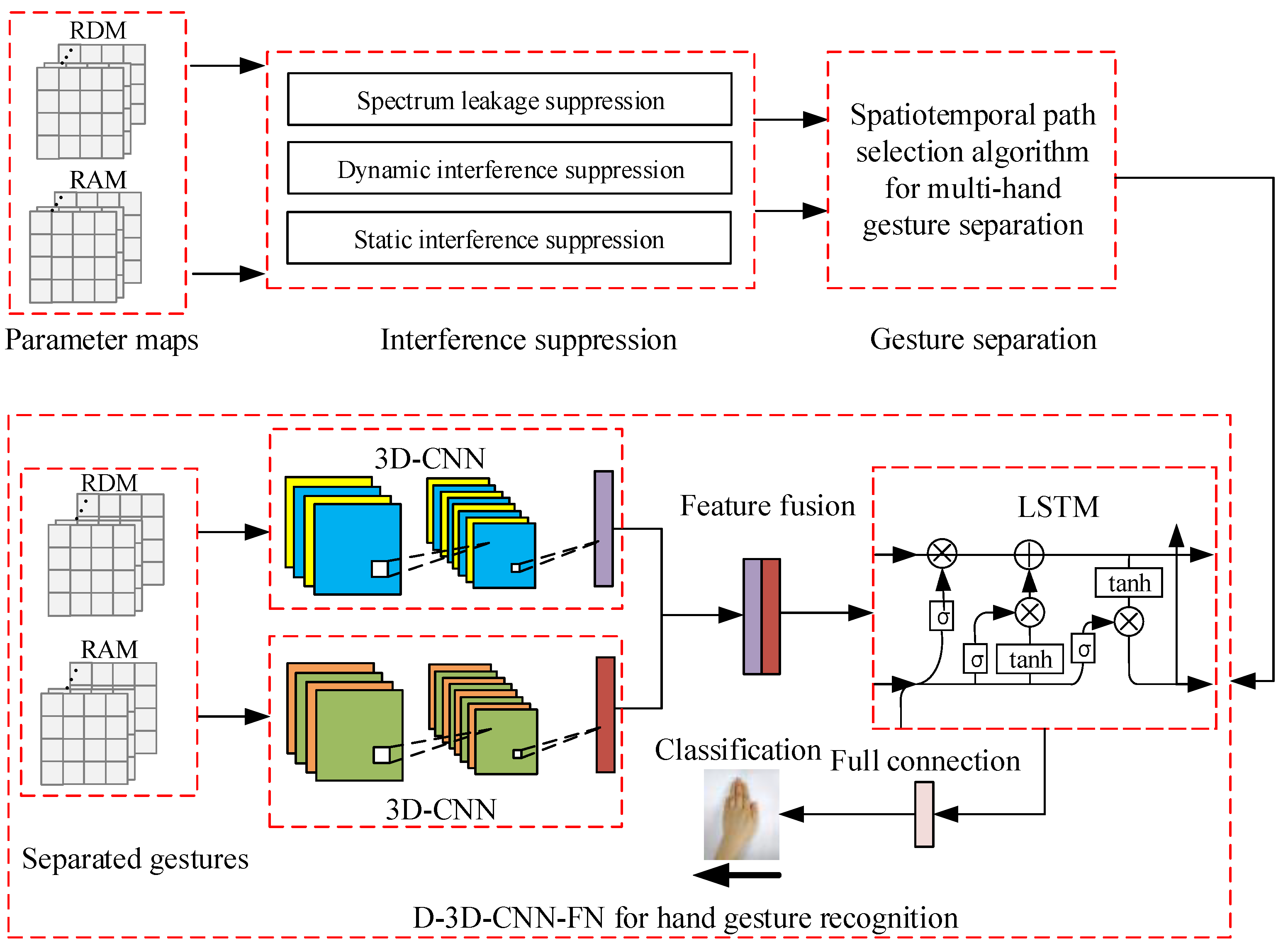

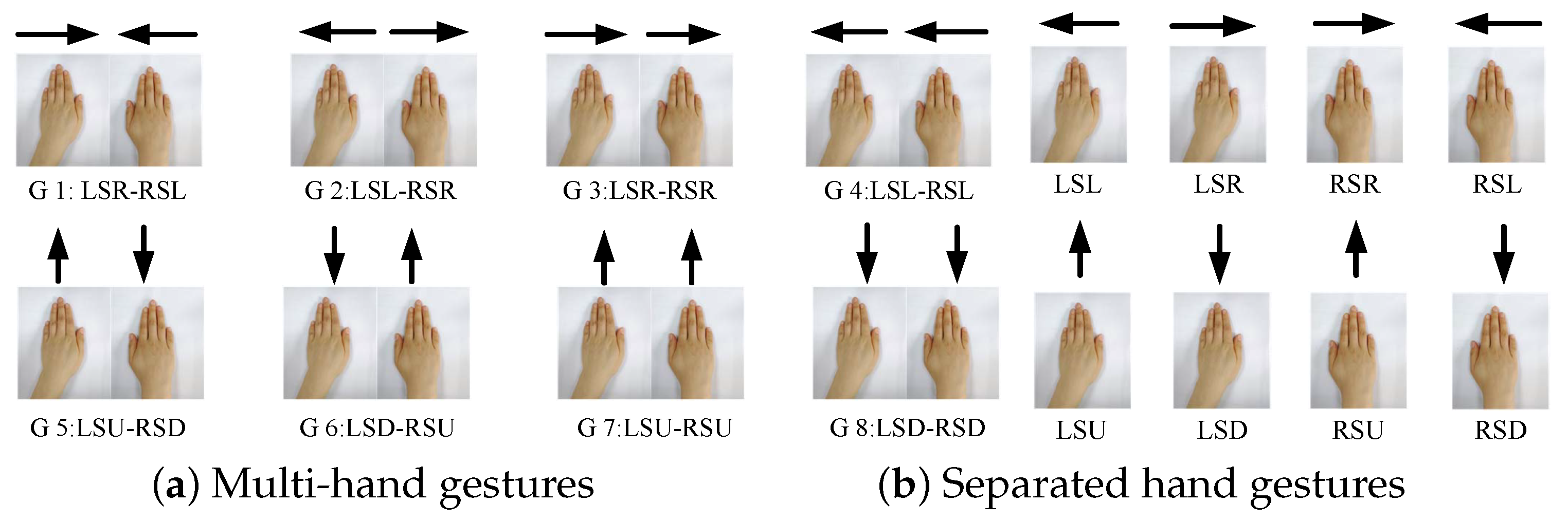
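As a rough illustration of how a 3D FFT can yield a range-Doppler map (RDM) and a range-angle map (RAM), the following minimal NumPy sketch may help. It is an assumption-laden example rather than the authors' pipeline: the function name `build_rdm_ram`, the cube layout (samples × chirps × receive antennas), the Hann windows, the zero-padded angle FFT size `n_angle`, and the non-coherent summation are all choices made here for illustration.

```python
import numpy as np


def build_rdm_ram(cube, n_angle=64):
    """Build an RDM and a RAM from one frame of raw IF data.

    cube: complex array of shape (n_samples, n_chirps, n_rx).
    Returns (rdm, ram) with shapes (n_samples, n_chirps) and (n_samples, n_angle).
    """
    n_samples, n_chirps, _ = cube.shape
    # Hann windows along fast time and slow time to limit spectral leakage.
    win_r = np.hanning(n_samples)[:, None, None]
    win_d = np.hanning(n_chirps)[None, :, None]
    # Range FFT over fast time (axis 0).
    range_fft = np.fft.fft(cube * win_r, axis=0)
    # Doppler FFT over slow time (axis 1), shifted so zero velocity is centred.
    rd = np.fft.fftshift(np.fft.fft(range_fft * win_d, axis=1), axes=1)
    # Angle FFT over the antenna axis (axis 2), zero-padded to n_angle bins.
    rda = np.fft.fftshift(np.fft.fft(rd, n=n_angle, axis=2), axes=2)
    rdm = np.abs(rd).sum(axis=2)   # sum magnitudes over antennas
    ram = np.abs(rda).sum(axis=1)  # sum magnitudes over Doppler bins
    return rdm, ram


if __name__ == "__main__":
    # Synthetic point target: range bin 10, Doppler bin +5, broadside (0 degrees).
    n, m, _ = np.meshgrid(np.arange(64), np.arange(128), np.arange(4), indexing="ij")
    cube = np.exp(2j * np.pi * (10 * n / 64 + 5 * m / 128))
    rdm, ram = build_rdm_ram(cube)
    print(np.unravel_index(np.argmax(rdm), rdm.shape))
    print(np.unravel_index(np.argmax(ram), ram.shape))
```

Stacking the per-frame RDM and RAM over time would give the kind of spatiotemporal input that a 3D-CNN can consume, although the exact stacking used in the paper is not specified in this outline.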

3. Proposed Multi-Hand Gesture Recognition System

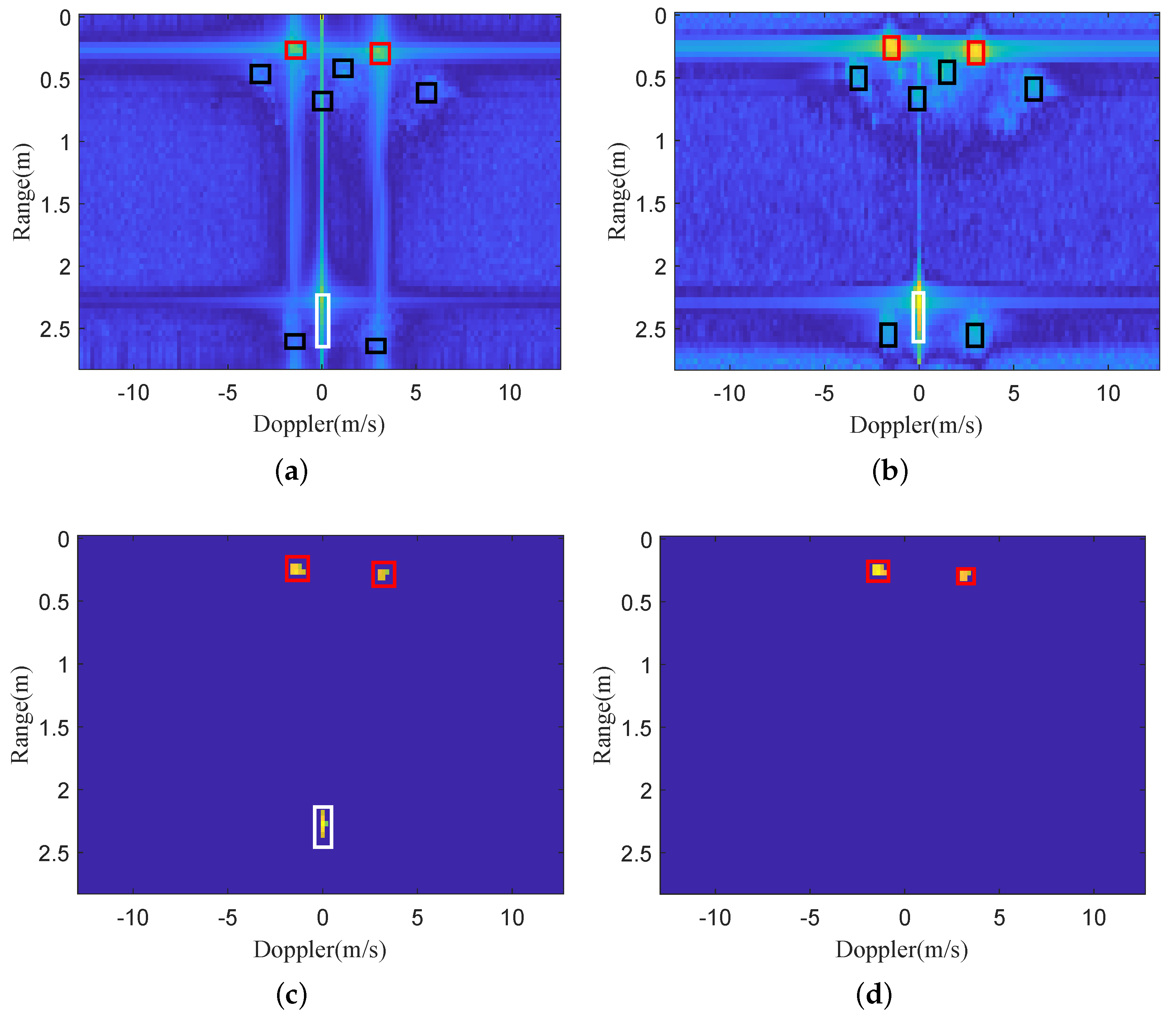

3.1. Interference Suppression

3.1.1. Spectral Leakage Cancellation
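Spectral leakage arises when a target's beat frequency falls between FFT bins, so that its energy spreads into distant bins. The sketch below, which assumes a Hann window as one common remedy (the specific window used in the paper is not stated in this outline), compares the leaked energy with and without windowing. The helper name `leakage_ratio` and the `guard` width are hypothetical.

```python
import numpy as np


def leakage_ratio(n_samples, tone_bin, window, guard=4):
    """Fraction of spectral energy more than `guard` bins away from the tone."""
    t = np.arange(n_samples)
    x = np.exp(2j * np.pi * tone_bin * t / n_samples) * window
    power = np.abs(np.fft.fft(x)) ** 2
    bins = np.arange(n_samples)
    # Circular distance of every FFT bin from the (possibly fractional) tone bin.
    dist = np.abs((bins - tone_bin + n_samples / 2) % n_samples - n_samples / 2)
    return power[dist > guard].sum() / power.sum()


if __name__ == "__main__":
    n = 64
    # A tone halfway between bins 10 and 11 is the worst case for leakage.
    print("rectangular:", leakage_ratio(n, 10.5, np.ones(n)))
    print("hann:       ", leakage_ratio(n, 10.5, np.hanning(n)))
```

The window trades a slightly wider main lobe for much lower sidelobes, which matters when a weak hand echo sits a few bins away from a strong one.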

3.1.2. Dynamic Interference Suppression

3.1.3. Static Interference Suppression

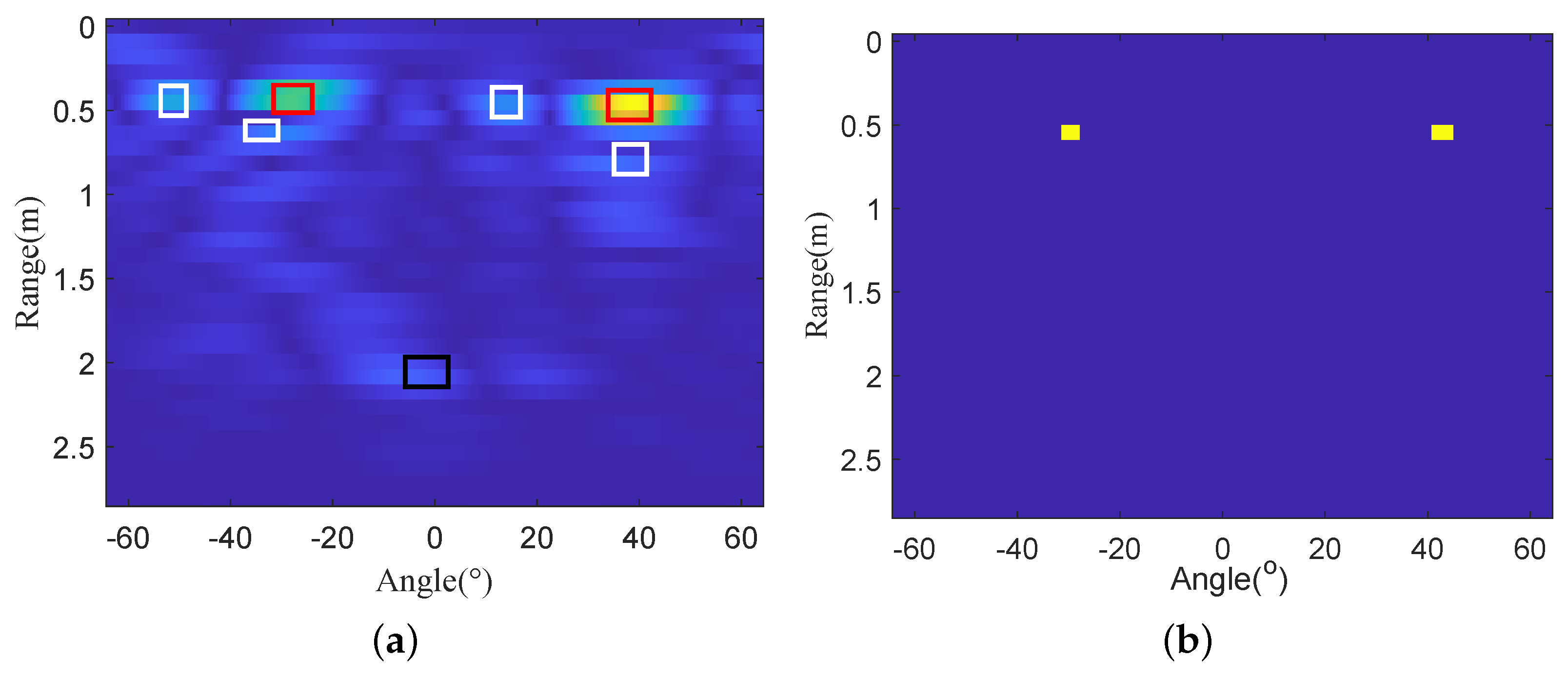
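Static interference (for example, reflections from the torso or furniture) has zero Doppler, so one widely used way to suppress it is to subtract the slow-time mean from each range sample. The sketch below shows that generic technique only; it is an assumption that something of this kind applies here, and the function name `remove_static_clutter` and the cube layout (samples × chirps × receive antennas) are hypothetical.

```python
import numpy as np


def remove_static_clutter(cube):
    """Subtract the mean over chirps so zero-Doppler (static) returns cancel.

    cube: complex array of shape (n_samples, n_chirps, n_rx).
    """
    return cube - cube.mean(axis=1, keepdims=True)


if __name__ == "__main__":
    n = np.arange(64)[:, None, None]
    m = np.arange(128)[None, :, None]
    # Strong static reflector at range bin 10 (identical in every chirp).
    static = 3.0 * np.exp(2j * np.pi * 10 * n / 64) * np.ones((1, 128, 4))
    # Weaker moving target at range bin 20 with a Doppler shift of 5 bins.
    moving = np.exp(2j * np.pi * (20 * n / 64 + 5 * m / 128)) * np.ones((1, 1, 4))
    cleaned = remove_static_clutter(static + moving)
    print(np.allclose(cleaned, moving))
```

Mean subtraction removes only the exactly stationary component; slowly moving interferers leave a residue and need a different treatment.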

3.2. Spatiotemporal Path Selection Algorithm

Algorithm 1. Spatiotemporal path selection algorithm for multi-hand gesture separation.

3.3. D-3D-CNN-FN for HGR

3.3.1. 3D-CNN-Based Feature Extraction

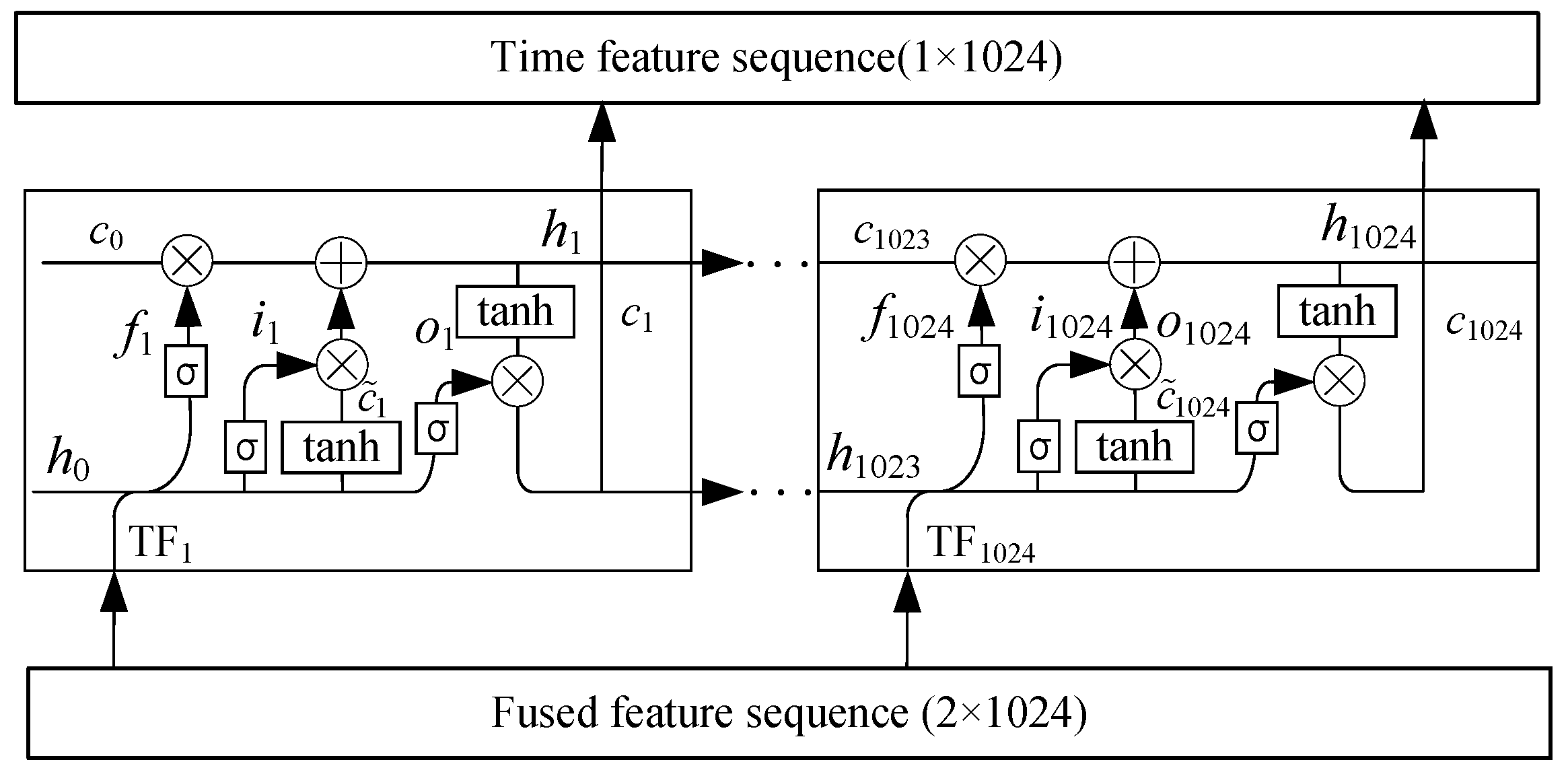

3.3.2. LSTM-Based Time Sequential Feature Extraction

3.3.3. Gesture Classification

4. Experiments and Analysis

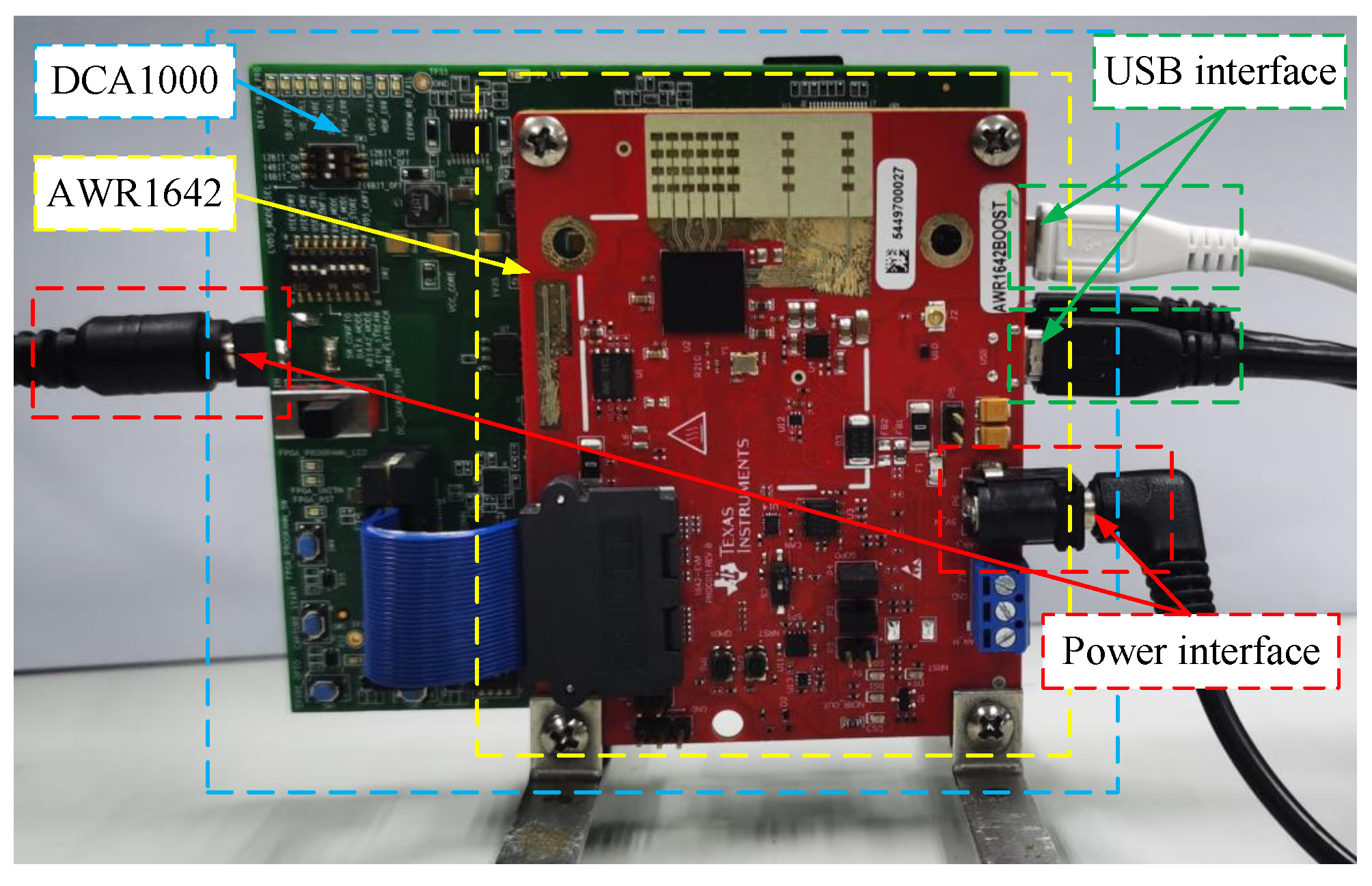

4.1. Experimental Setup

4.2. Results and Analysis

4.2.1. Effect of Interference Suppression

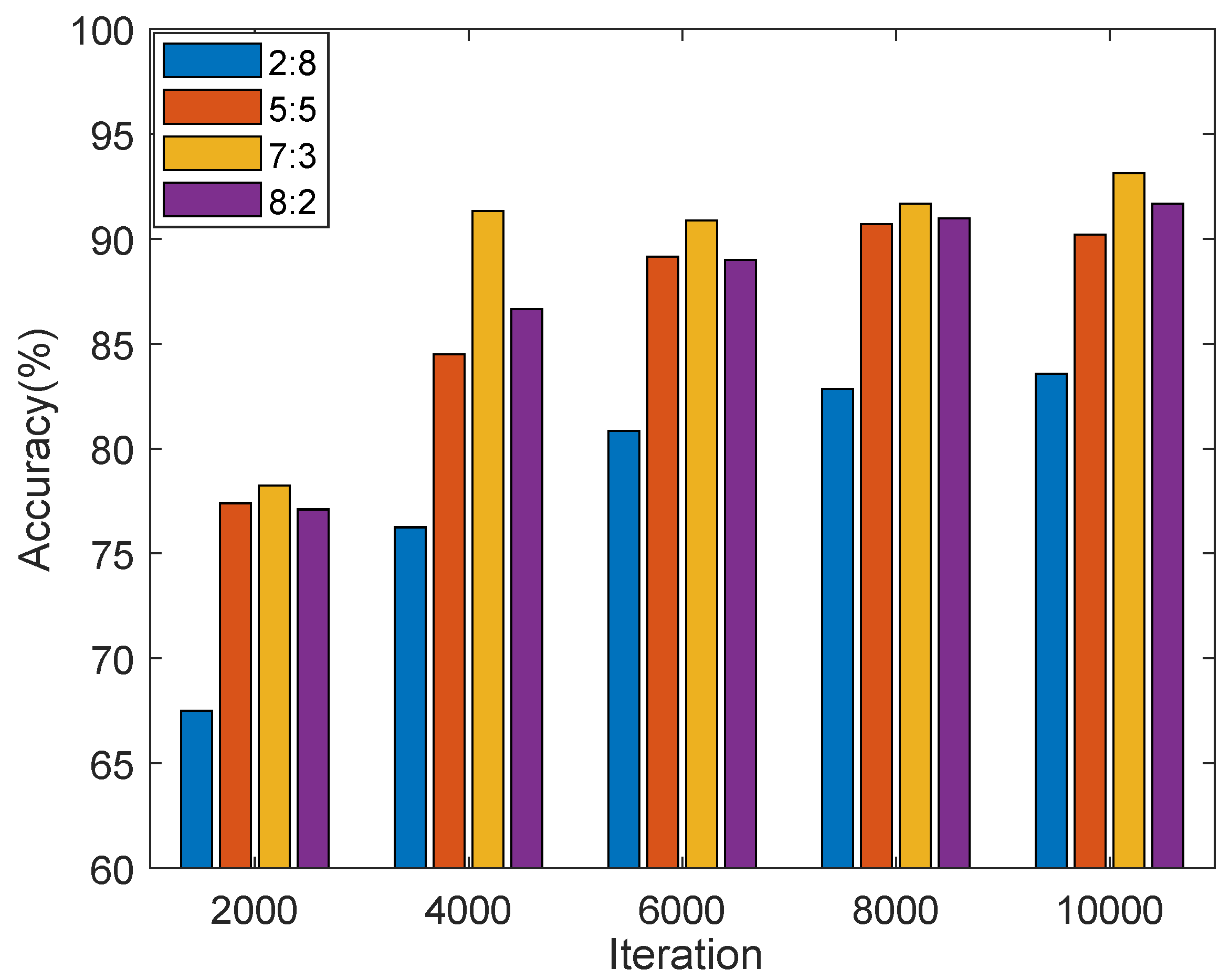

4.2.2. Impact of Training Dataset Size

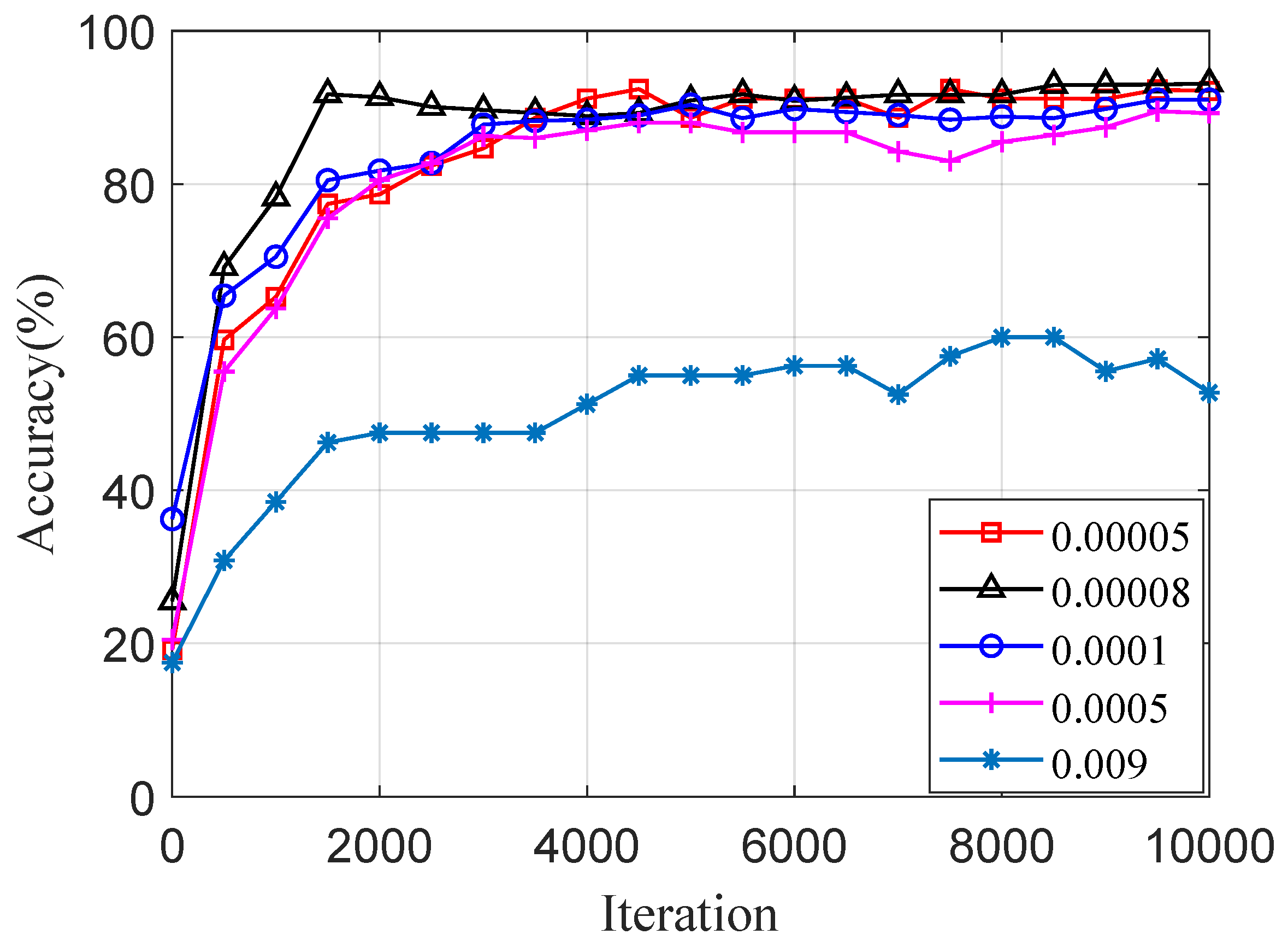

4.2.3. Impact of Learning Rate

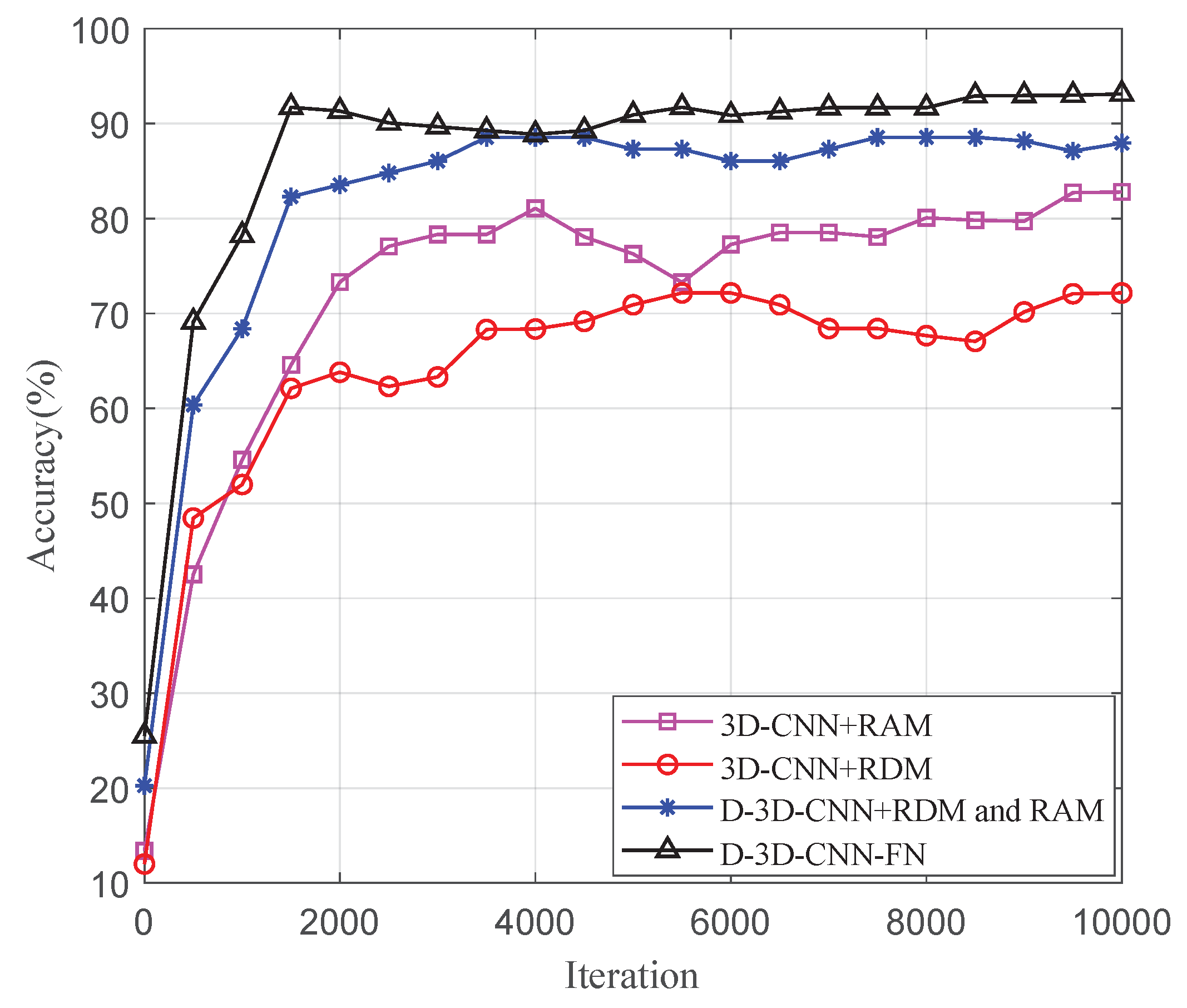

4.2.4. Recognition Accuracy Comparison

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Parameters | Values | Parameters | Values |
|---|---|---|---|
| | 77 GHz | | 105.202 MHz/μs |
| B | 3.997 GHz | | 3.9 mm |
| | 64 | | 128 |
| | 0.0446 m | | 38 μs |
| | 2.8531 m | | 0.4006 m/s |
| | 2 MHz | | 25.6366 m/s |
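Most parameter labels in the table above did not survive extraction, but the values are mutually consistent under the standard FMCW relations. The sketch below is a consistency check under explicit assumptions, not a statement of the authors' configuration. It assumes that 77 GHz is the carrier (start) frequency, 105.202 MHz/μs the chirp slope, 64 the samples per chirp, 128 the chirps per frame, and 2 MHz the ADC sampling rate. It also assumes a rounded speed of light of 3 × 10^8 m/s. All variable names are hypothetical.

```python
C = 3.0e8  # rounded speed of light in m/s (assumption)

# Values read from the table; the role assigned to each is an assumption.
freq_hz = 77e9
slope_hz_per_s = 105.202e12   # 105.202 MHz/us
bandwidth_hz = 3.997e9        # B
n_samples = 64
n_chirps = 128
sample_rate_hz = 2e6

wavelength_m = C / freq_hz
chirp_time_s = bandwidth_hz / slope_hz_per_s
# Only the part of the sweep covered by the ADC samples sets the resolution.
sampled_bw_hz = slope_hz_per_s * n_samples / sample_rate_hz
range_res_m = C / (2 * sampled_bw_hz)
max_range_m = range_res_m * n_samples
max_velocity_m_s = wavelength_m / (4 * chirp_time_s)
velocity_res_m_s = 2 * max_velocity_m_s / n_chirps

if __name__ == "__main__":
    print(f"wavelength          {wavelength_m * 1e3:.4f} mm")
    print(f"chirp duration      {chirp_time_s * 1e6:.4f} us")
    print(f"range resolution    {range_res_m:.4f} m")
    print(f"maximum range       {max_range_m:.4f} m")
    print(f"velocity resolution {velocity_res_m_s:.4f} m/s")
    print(f"maximum velocity    {max_velocity_m_s:.4f} m/s")
```

Under these assumptions, the derived quantities come out close to the remaining tabulated values (3.9 mm, 0.0446 m, 2.8531 m, 0.4006 m/s, and 25.6366 m/s). The tabulated 38 matches a chirp duration in microseconds.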

| Parameter | 3D-CNN | 3D-CNN | D-3D-CNN | D-3D-CNN-FN |
|---|---|---|---|---|
| Dataset | RDM | RAM | RDM+RAM | RDM+RAM |
| LSL | 73.33 | 86.67 | 89.67 | 94.33 |
| LSR | 71.67 | 83.33 | 87.67 | 96.67 |
| LSU | 69.00 | 81.67 | 86.33 | 90.67 |
| LSD | 74.67 | 82.00 | 83.00 | 91.33 |
| RSL | 72.33 | 79.67 | 90.00 | 95.00 |
| RSR | 75.00 | 83.33 | 86.67 | 93.33 |
| RSU | 70.00 | 86.67 | 84.33 | 91.67 |
| RSD | 71.33 | 86.67 | 88.00 | 92.00 |
| Ave. | 72.16 | 82.79 | 86.95 | 93.12 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, Y.; Wang, D.; Fu, Y.; Yao, D.; Xie, L.; Zhou, M. Multi-Hand Gesture Recognition Using Automotive FMCW Radar Sensor. Remote Sens. 2022, 14, 2374. https://doi.org/10.3390/rs14102374
