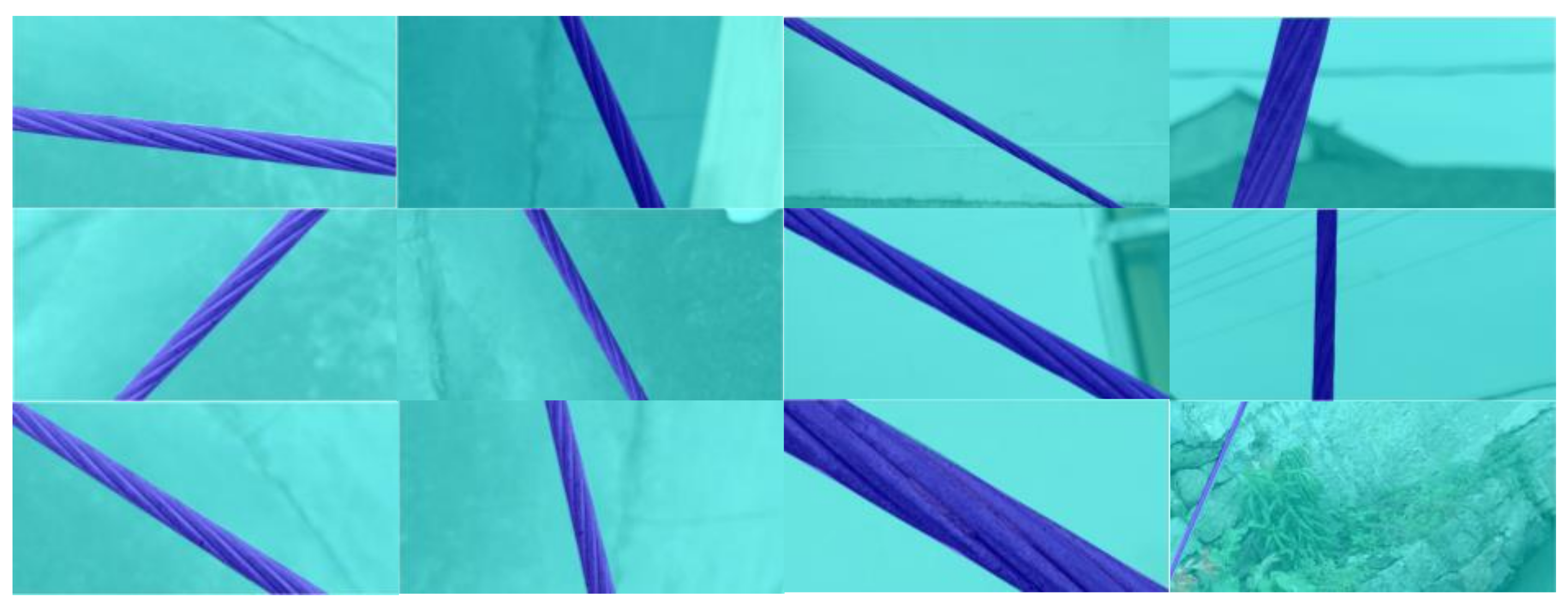

Figure 1.

Discrete images for the training and validation of convolutional neural network (CNN) models.

Figure 2.

Screenshots of the continuous videos with different cable pixel-to-total pixel (C-T) ratios (left to right: 0.12, 0.08, 0.04, 0.02, and 0.01).
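
The C-T ratio is the fraction of an image's pixels that belong to the cable. A minimal sketch, assuming a binary mask in which 1 marks cable pixels (the mask format and the function names `ct_ratio` and `cable_to_background` are illustrative, not taken from the study), also shows the related cable-to-background ratio r / (1 − r):

```python
import numpy as np

def ct_ratio(mask: np.ndarray) -> float:
    """Cable pixel-to-total pixel (C-T) ratio of a binary mask (1 = cable)."""
    mask = np.asarray(mask, dtype=bool)
    return float(mask.sum()) / mask.size

def cable_to_background(mask: np.ndarray) -> float:
    """Cable pixels divided by background pixels, i.e. r / (1 - r) for C-T ratio r."""
    mask = np.asarray(mask, dtype=bool)
    cable = int(mask.sum())
    return cable / (mask.size - cable)

# Synthetic 100 x 100 frame with a vertical cable band 12 pixels wide
frame = np.zeros((100, 100), dtype=np.uint8)
frame[:, 44:56] = 1
print(ct_ratio(frame))             # 1200 / 10000 = 0.12
print(cable_to_background(frame))  # 1200 / 8800, about 0.136
```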

Figure 3.

Time-history and frequency-domain diagrams derived from the Z-axis acceleration sampled by the acceleration sensor.

Figure 4.

Annotated discrete images in the training and validation sets.

Figure 5.

C-T ratios of the images in the validation set.

Figure 6.

Schematic of the relative scale, the training, validation, and testing durations, and the precision of the three feature extractors.

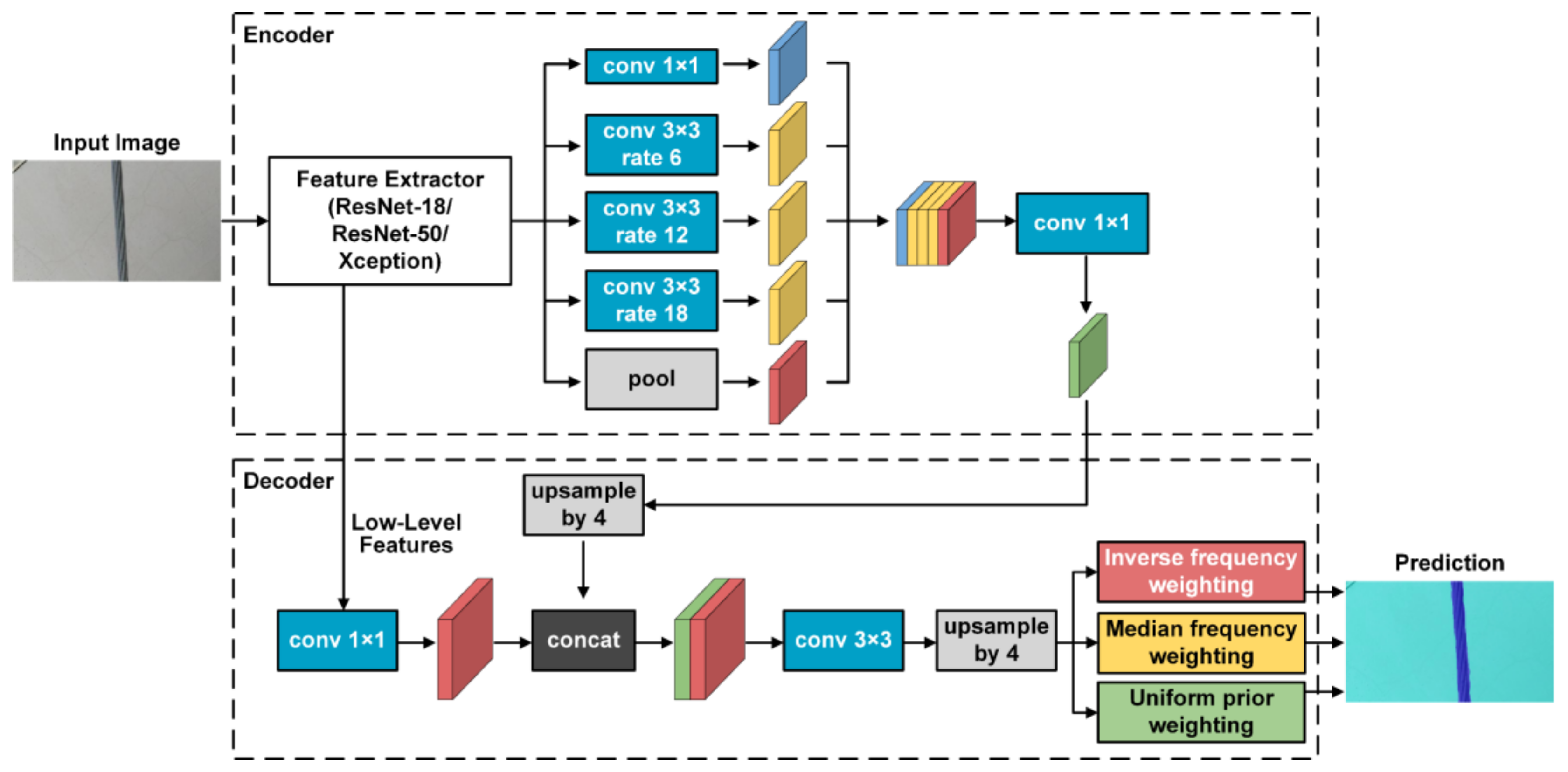

Figure 7.

Detailed structure of the base framework underlying the modified CNN frameworks.

Figure 8.

Detailed structure of the ResNet-18 feature extractor (adopted in Net-I, Net-II, and Net-III).

Figure 9.

Detailed structure of the ResNet-50 feature extractor (adopted in Net-IV, Net-V, and Net-VI).

Figure 10.

Detailed structure of the Xception feature extractor (adopted in Net-VII, Net-VIII, and Net-IX).

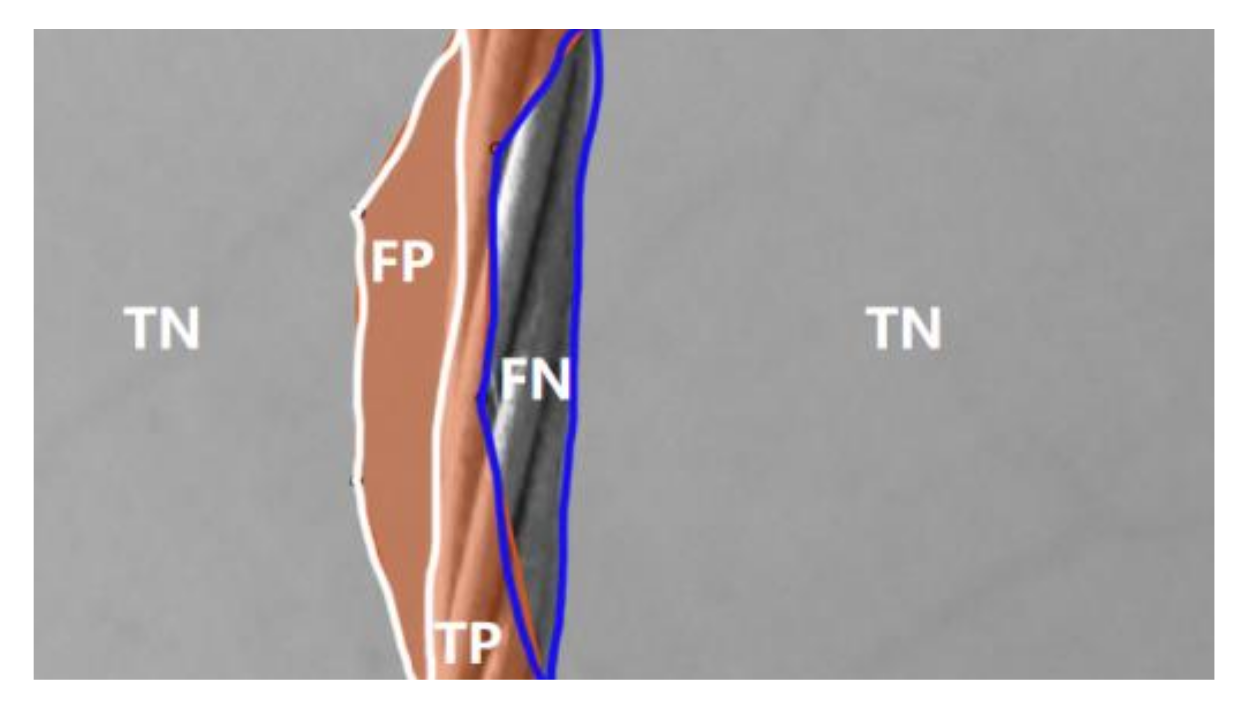

Figure 11.

Schematic diagram of the confusion-matrix metrics for pixel-wise cable-versus-background classification.

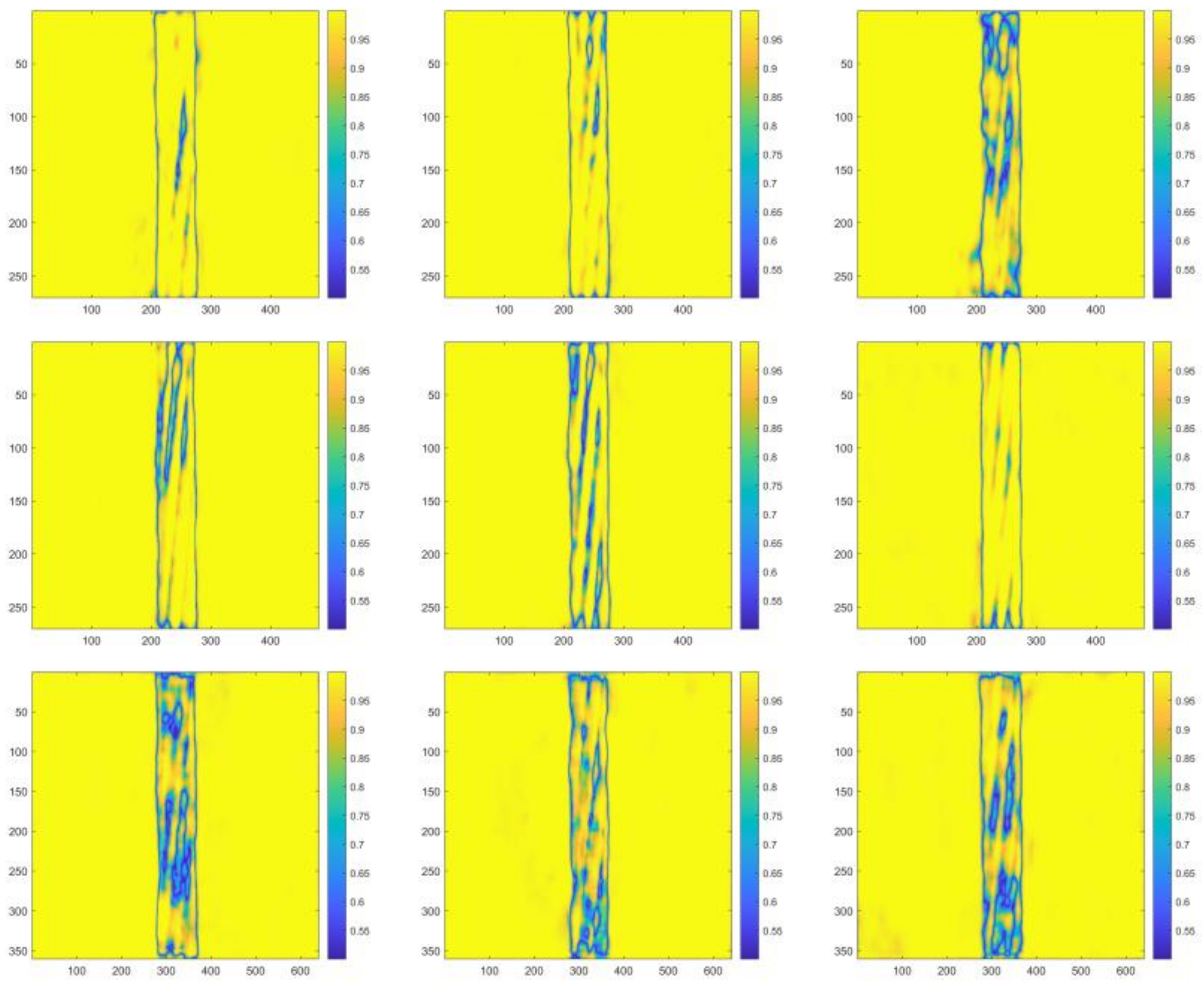

Figure 12.

Segmentation results of the same representative testing image with a C-T ratio of 0.12, visualized as heat maps (left to right, top to bottom: Net-I to Net-IX; the same layout applies hereinafter).

Figure 13.

Segmentation results of the same representative testing image with a C-T ratio of 0.08, visualized as heat maps.

Figure 14.

Segmentation results of the same representative testing image with a C-T ratio of 0.04, visualized as heat maps.

Figure 15.

Segmentation results of the same representative testing image with a C-T ratio of 0.02, visualized as heat maps.

Figure 16.

Segmentation results of the same representative testing image with a C-T ratio of 0.01, visualized as heat maps.

Figure 17.

Time-history and frequency-domain diagrams of the vibrating cable in the five continuous videos (top to bottom: C-T ratios of 0.01, 0.02, 0.04, 0.08, and 0.12).
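
The frequency-domain diagrams are obtained from displacement time histories by spectral analysis. A minimal NumPy sketch of FFT peak picking, assuming a uniformly sampled per-frame cable displacement signal (how the displacement is extracted from the segmentation masks is not shown, and `dominant_frequencies` is an illustrative name); a synthetic two-tone signal stands in for measured data:

```python
import numpy as np

def dominant_frequencies(signal, fs, k=2):
    """Return the k strongest spectral peaks (Hz) of a uniformly sampled signal, sorted."""
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()                      # remove the static (DC) offset
    x = x * np.hanning(len(x))            # Hann window to limit spectral leakage
    mag = np.abs(np.fft.rfft(x))
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    # Interior bins that are strict local maxima of the magnitude spectrum
    is_peak = (mag[1:-1] > mag[:-2]) & (mag[1:-1] > mag[2:])
    peaks = np.nonzero(is_peak)[0] + 1
    strongest = peaks[np.argsort(mag[peaks])[::-1][:k]]
    return np.sort(freqs[strongest])

fs = 60.0                                 # frames per second
t = np.arange(3600) / fs                  # 60 s record, so 1/60 Hz bin spacing
y = np.sin(2 * np.pi * 5.6 * t) + 0.5 * np.sin(2 * np.pi * 11.07 * t)
print(dominant_frequencies(y, fs))        # close to [5.6, 11.07]
```

The frequency resolution is 1/T for a record of length T, so longer videos give finer frequency estimates regardless of frame rate.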

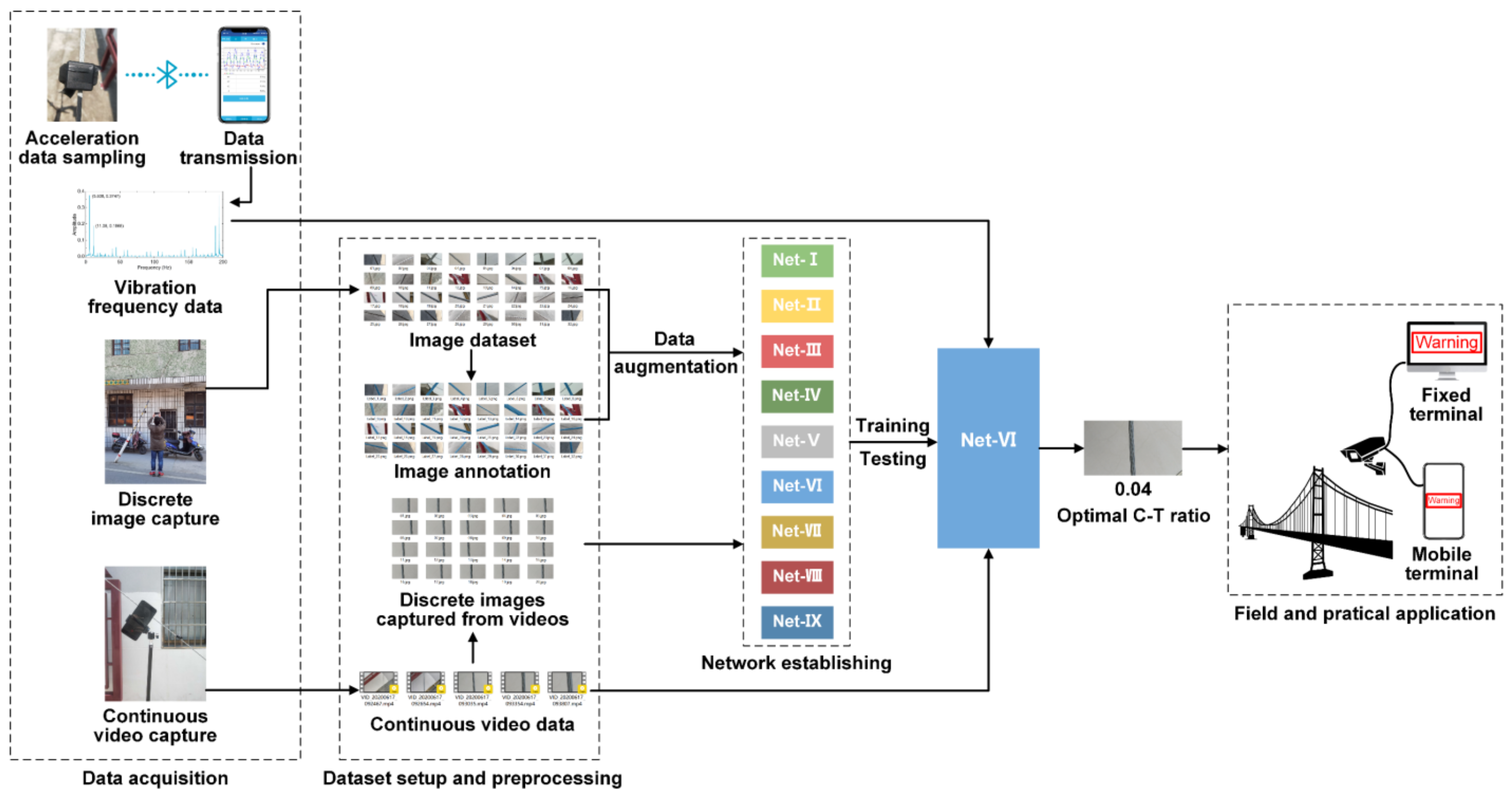

Figure 18.

Workflow and research process of the present study.

Figure 19.

Frequency-domain diagrams of the vibrating cable in the five videos sampled at 30 Hz (top to bottom, left to right: C-T ratios of 0.01, 0.02, 0.04, 0.08, and 0.12).

Table 1.

Assessments of eight existing cable tension measuring methods.

| Method | Device Applicability | Device Portability | Cost-Effectiveness | Precision |
|---|---|---|---|---|
| Pressure sensor testing method | Poor | Poor | Poor | High |
| Pressure gauge testing method | Poor | Poor | Poor | High |
| Vibration wave method | Good | Good | Good | Low |
| Static profile method | Poor | Good | Good | Low |
| Resistance strain gauge method | Poor | Good | Poor | High |
| Elongation method | Good | Good | Good | High |
| Electromagnetic method | Poor | Poor | Poor | High |
| Anchoring removed method | Good | Good | Poor | High |

Table 2.

Video photography-related parameters.

| C-T Ratio | Photography Distance and Digital Zoom Multiple (Range) |
|---|---|
| 0.12 | [1.8 m, 1×] – [3.6 m, 8×] |
| 0.08 | [1.4 m, 1×] – [3.2 m, 8×] |
| 0.04 | [1.1 m, 1×] – [2.7 m, 8×] |
| 0.02 | [0.8 m, 1×] – [2.2 m, 8×] |
| 0.01 | [0.4 m, 1×] – [1.8 m, 8×] |

Table 3.

Frequencies sampled by the acceleration sensor and their average values.

| Order | Original (Hz) | Average (Hz) |
|---|---|---|
| First-order frequency | 5.608, 5.6, 5.61 | 5.606 |
| Second-order frequency | 11.06, 11.06, 11.08 | 11.07 |

Table 4.

Recognizing–matching strategy and end-to-end multi-target tracking strategy.

| Strategy | Feature Extracting Algorithm | Matching Algorithm |
|---|---|---|
| Conventional computer vision solution | Conventional computer vision | Conventional computer vision |
| Recognizing–matching strategy | Deep learning: CNN target recognition frameworks | Conventional computer vision |
| Recognizing–matching strategy | Deep learning: advanced 3D target recognition frameworks | Conventional computer vision |
| End-to-end multi-target tracking strategy | Deep learning (the two stages are merged) | Deep learning (the two stages are merged) |

Table 5.

Important properties of ResNet-18, ResNet-50, and Xception.

| Feature Extractor | Depth | Layers | Connections | Parameters | Image Input Size (pixels) |
|---|---|---|---|---|---|
| ResNet-18 | 18 | 71 | 78 | 11.7 million | 224 × 224 |
| ResNet-50 | 50 | 177 | 192 | 25.6 million | 224 × 224 |
| Xception | 71 | 170 | 181 | 22.9 million | 299 × 299 |

Table 6.

Cable pixel-to-background pixel ratio of the training and validation images, originally and after weighting.

| Weighting | Cable Pixel-to-Background Pixel Ratio (Cable : Background) |
|---|---|
| Original | 0.0612 : 1 |
| Inverse frequency weighting | 16.3346 : 1 |
| Median frequency weighting | 16.3347 : 1 |
| Uniform prior weighting | 16.3281 : 1 |
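
Class weighting counteracts the strong background dominance in the original ratio. A minimal sketch of two of the three schemes, assuming integer label maps with 0 = background and 1 = cable: inverse frequency weighting (w = 1 / f over all pixels) and median frequency weighting in its common per-image-frequency form (w = median(f) / f, where f counts only images containing the class). The study's toolkit may define these slightly differently, uniform prior weighting is omitted because its definition varies between implementations, and all function names are illustrative. With inverse frequency weighting, the cable-to-background weight ratio equals the inverse of the pixel ratio, which appears to be what the weighted entries above report (1 / 0.0612 ≈ 16.3).

```python
import numpy as np

def pixel_statistics(label_maps, num_classes=2):
    """Per-class pixel counts and total pixels of the images containing each class."""
    pixel_count = np.zeros(num_classes, dtype=np.int64)
    image_pixel_count = np.zeros(num_classes, dtype=np.int64)
    for labels in label_maps:
        arr = np.asarray(labels)
        counts = np.bincount(arr.ravel(), minlength=num_classes)
        pixel_count += counts
        image_pixel_count[counts > 0] += arr.size  # only images where the class occurs
    return pixel_count, image_pixel_count

def inverse_frequency_weights(pixel_count):
    freq = pixel_count / pixel_count.sum()   # assumes every class occurs at least once
    return 1.0 / freq

def median_frequency_weights(pixel_count, image_pixel_count):
    freq = pixel_count / image_pixel_count   # per-image frequency of each class
    return np.median(freq) / freq

img1 = np.zeros((100, 100), dtype=np.int64)
img1[:, 47:53] = 1                           # 600 cable pixels
img2 = np.zeros((100, 100), dtype=np.int64)  # frame without any cable
pc, ipc = pixel_statistics([img1, img2])
w_inv = inverse_frequency_weights(pc)
w_med = median_frequency_weights(pc, ipc)
print(w_inv[1] / w_inv[0])   # 19400 / 600, about 32.33
print(w_med[1] / w_med[0])   # 0.97 / 0.06, about 16.17
```

The two schemes only diverge when some images lack a class, as the cable-free frame shows here.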

Table 7.

Modifications of the nine CNN frameworks.

| Model | Feature Extractor | Weighting Algorithm |
|---|---|---|
| Net-I | ResNet-18 | Inverse frequency weighting |
| Net-II | ResNet-18 | Median frequency weighting |
| Net-III | ResNet-18 | Uniform prior weighting |
| Net-IV | ResNet-50 | Inverse frequency weighting |
| Net-V | ResNet-50 | Median frequency weighting |
| Net-VI | ResNet-50 | Uniform prior weighting |
| Net-VII | Xception | Inverse frequency weighting |
| Net-VIII | Xception | Median frequency weighting |
| Net-IX | Xception | Uniform prior weighting |

Table 8.

Search sets of training hyperparameters to be optimized.

| Training Hyperparameter | Set of Search Values |
|---|---|
| Initial learning rate | {0.01, 0.001, 0.0001} |
| Batch size | {10, 20, 30, 40, 50} |
| Epoch | {2, 3, 4, 5} |
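
The search sets above span 3 × 5 × 4 = 60 combinations per model. A minimal exhaustive grid-search sketch, assuming every combination is tried and validation accuracy is the selection criterion; `evaluate` is a hypothetical callback standing in for training and validating one model, and the toy scoring function exists only to make the example runnable:

```python
import itertools

# Search sets from the table above
LEARNING_RATES = [0.01, 0.001, 0.0001]
BATCH_SIZES = [10, 20, 30, 40, 50]
EPOCHS = [2, 3, 4, 5]

def grid_search(evaluate):
    """Evaluate every combination and return (best_config, best_score).

    `evaluate(lr, batch_size, epochs)` is a hypothetical callback that trains
    a model with those settings and returns its validation accuracy.
    """
    best_score, best_config = float("-inf"), None
    for lr, bs, ep in itertools.product(LEARNING_RATES, BATCH_SIZES, EPOCHS):
        score = evaluate(lr, bs, ep)
        if score > best_score:   # strict '>' keeps the first of any tied configs
            best_score, best_config = score, (lr, bs, ep)
    return best_config, best_score

def toy_evaluate(lr, bs, ep):
    """Stand-in objective peaking at (0.001, 30, 3); not a real training run."""
    return -abs(lr - 0.001) - abs(bs - 30) / 100 - abs(ep - 3) / 10

best, score = grid_search(toy_evaluate)
print(best)   # (0.001, 30, 3)
```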

Table 9.

Fixed training hyperparameters and their options.

| Training Hyperparameter | Option |
|---|---|
| Optimizer | Stochastic gradient descent with momentum (SGDM) |
| Momentum | 0.9 |
| Validation frequency | 2 |
| Validation patience | 4 |
| Shuffle | Every epoch |
| L2 regularization | 0.005 |
| Downsampling factor | 16 |
| Execution environment | CPU |
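
The optimizer settings above combine momentum with an L2 penalty. A minimal NumPy sketch of one common formulation of the SGDM update (velocity form, with the L2 term folded into the gradient), using the momentum 0.9 and L2 factor 0.005 listed above; deep-learning toolkits differ in details, so this illustrates the update rule rather than reproducing the study's toolbox, and `sgdm_step` is an illustrative name:

```python
import numpy as np

def sgdm_step(w, grad, velocity, lr, momentum=0.9, l2=0.005):
    """One SGD-with-momentum update with L2 regularization.

    The L2 penalty 0.5 * l2 * ||w||^2 contributes l2 * w to the gradient;
    the velocity accumulates a momentum-weighted history of past steps.
    """
    g = grad + l2 * w
    velocity = momentum * velocity - lr * g
    return w + velocity, velocity

# Minimize the toy loss 0.5 * ||w||^2, whose gradient is simply w
w = np.array([1.0, -2.0])
v = np.zeros_like(w)
for _ in range(500):
    w, v = sgdm_step(w, w, v, lr=0.01)
print(np.linalg.norm(w))   # very close to 0
```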

Table 10.

Optimal training hyperparameters for the nine CNN models.

| Hyperparameter | Net-I | Net-II | Net-III | Net-IV | Net-V | Net-VI | Net-VII | Net-VIII | Net-IX |
|---|---|---|---|---|---|---|---|---|---|
| Initial learning rate | 0.001 | 0.01 | 0.01 | 0.001 | 0.0001 | 0.01 | 0.001 | 0.0001 | 0.01 |
| Batch size | 30 | 40 | 30 | 40 | 30 | 40 | 30 | 50 | 30 |
| Epoch | 3 | 3 | 3 | 3 | 3 | 3 | 3 | 3 | 3 |

Table 11.

Training and validation accuracies of the nine CNN models.

| Metric | Net-I | Net-II | Net-III | Net-IV | Net-V | Net-VI | Net-VII | Net-VIII | Net-IX |
|---|---|---|---|---|---|---|---|---|---|
| Training accuracy (%) | 94.59 | 95.46 | 94.57 | 95.23 | 94.00 | 94.77 | 94.58 | 94.70 | 94.03 |
| Validation accuracy (%) | 95.21 | 95.45 | 94.72 | 94.77 | 94.11 | 94.66 | 93.75 | 94.27 | 93.84 |

Table 12.

Confusion matrix for binary classification.

| Prediction \ Ground Truth | Positive | Negative |
|---|---|---|
| Positive | TP | FP |
| Negative | FN | TN |
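
Each cell of the confusion matrix is a pixel count, with the cable class as positive. A minimal sketch of the standard metrics built from those counts, assuming binary masks in which True marks cable pixels; the metric names are the conventional ones (recall is sometimes reported as per-class accuracy) and are not necessarily the labels used for the unlabeled rows in the evaluation tables:

```python
import numpy as np

def binary_confusion(pred, truth):
    """Return (TP, FP, FN, TN) pixel counts with cable as the positive class."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = int(np.sum(pred & truth))     # predicted cable, truly cable
    fp = int(np.sum(pred & ~truth))    # predicted cable, truly background
    fn = int(np.sum(~pred & truth))    # predicted background, truly cable
    tn = int(np.sum(~pred & ~truth))   # predicted background, truly background
    return tp, fp, fn, tn

def cable_metrics(pred, truth):
    """Assumes at least one predicted and one ground-truth cable pixel."""
    tp, fp, fn, tn = binary_confusion(pred, truth)
    return {
        "precision": tp / (tp + fp),
        "recall": tp / (tp + fn),
        "iou": tp / (tp + fp + fn),
        "global_accuracy": (tp + tn) / (tp + fp + fn + tn),
    }

truth = np.zeros((10, 10), dtype=bool)
truth[:, 4:6] = True      # 20 ground-truth cable pixels
pred = np.zeros((10, 10), dtype=bool)
pred[:, 5:7] = True       # prediction shifted by one column
m = cable_metrics(pred, truth)
print(m)   # precision 0.5, recall 0.5, IoU 10/30, global accuracy 0.8
```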

Table 13.

Evaluation of the validation set with aggregate dataset metrics.

| | Net-I | Net-II | Net-III | Net-IV | Net-V | Net-VI | Net-VII | Net-VIII | Net-IX |
|---|---|---|---|---|---|---|---|---|---|
| | 0.9718 | 0.9789 | 0.9552 | 0.9663 | 0.9759 | 0.9873 | 0.9654 | 0.9605 | 0.9555 |
| | 0.8489 | 0.8948 | 0.8492 | 0.8883 | 0.8888 | 0.9141 | 0.8811 | 0.8894 | 0.8397 |
| | 0.8995 | 0.8980 | 0.8741 | 0.8786 | 0.8779 | 0.9015 | 0.8771 | 0.8846 | 0.8614 |
| | 0.9772 | 0.9770 | 0.9732 | 0.9756 | 0.9731 | 0.9758 | 0.9727 | 0.9754 | 0.9697 |

Table 14.

Evaluation of the validation set with class metrics of the cable class.

| | Net-I | Net-II | Net-III | Net-IV | Net-V | Net-VI | Net-VII | Net-VIII | Net-IX |
|---|---|---|---|---|---|---|---|---|---|
| Precision | 0.9464 | 0.9611 | 0.9137 | 0.9348 | 0.9564 | 0.9795 | 0.9355 | 0.9242 | 0.9161 |
| | 0.7998 | 0.8808 | 0.8058 | 0.8557 | 0.8940 | 0.9360 | 0.8904 | 0.8681 | 0.8315 |
| IoU | 0.8126 | 0.8097 | 0.7637 | 0.7706 | 0.7719 | 0.8185 | 0.7706 | 0.7833 | 0.7409 |

Table 15.

Class metrics of the cable class for testing images with a C-T ratio of 0.12.

| | Net-I | Net-II | Net-III | Net-IV | Net-V | Net-VI | Net-VII | Net-VIII | Net-IX |
|---|---|---|---|---|---|---|---|---|---|
| Precision | 0.9905 | 0.9812 | 0.9195 | 0.9192 | 0.9424 | 0.9866 | 0.9100 | 0.9330 | 0.8857 |
| | 0.8514 | 0.8039 | 0.6711 | 0.5963 | 0.6485 | 0.8639 | 0.6432 | 0.6682 | 0.5757 |
| IoU | 0.9054 | 0.8821 | 0.7958 | 0.7852 | 0.7997 | 0.8969 | 0.7861 | 0.8361 | 0.7579 |

Table 16.

Class metrics of the cable class for testing images with a C-T ratio of 0.08.

| | Net-I | Net-II | Net-III | Net-IV | Net-V | Net-VI | Net-VII | Net-VIII | Net-IX |
|---|---|---|---|---|---|---|---|---|---|
| Precision | 0.9835 | 0.9924 | 0.9954 | 0.9957 | 0.9995 | 0.9996 | 0.9765 | 0.9755 | 0.9562 |
| | 0.8928 | 0.9221 | 0.9443 | 0.9480 | 0.9719 | 0.9597 | 0.8545 | 0.8836 | 0.7760 |
| IoU | 0.8466 | 0.8498 | 0.8661 | 0.8757 | 0.8751 | 0.8668 | 0.8449 | 0.8660 | 0.8042 |

Table 17.

Class metrics of the cable class for testing images with a C-T ratio of 0.04.

| | Net-I | Net-II | Net-III | Net-IV | Net-V | Net-VI | Net-VII | Net-VIII | Net-IX |
|---|---|---|---|---|---|---|---|---|---|
| Precision | 0.9769 | 0.9391 | 0.8378 | 0.9324 | 0.9908 | 0.9973 | 0.9782 | 0.9505 | 0.9689 |
| | 0.9033 | 0.8401 | 0.7598 | 0.8312 | 0.9422 | 0.9685 | 0.9446 | 0.9170 | 0.9329 |
| IoU | 0.8059 | 0.7430 | 0.6628 | 0.7503 | 0.8152 | 0.8226 | 0.8229 | 0.8076 | 0.8124 |

Table 18.

Class metrics of the cable class for testing images with a C-T ratio of 0.02.

| | Net-I | Net-II | Net-III | Net-IV | Net-V | Net-VI | Net-VII | Net-VIII | Net-IX |
|---|---|---|---|---|---|---|---|---|---|
| Precision | 0.8661 | 0.9178 | 0.8178 | 0.9392 | 0.9639 | 0.9697 | 0.9314 | 0.9641 | 0.9864 |
| | 0.9950 | 0.9965 | 0.9898 | 0.9905 | 0.9948 | 0.9961 | 0.9746 | 0.9449 | 0.9140 |
| IoU | 0.4633 | 0.4818 | 0.4535 | 0.5261 | 0.5397 | 0.5372 | 0.5763 | 0.5887 | 0.5853 |

Table 19.

Class metrics of the cable class for testing images with a C-T ratio of 0.01.

| | Net-I | Net-II | Net-III | Net-IV | Net-V | Net-VI | Net-VII | Net-VIII | Net-IX |
|---|---|---|---|---|---|---|---|---|---|
| Precision | 0.1017 | 0.2478 | 0.1044 | 0.3593 | 0.5688 | 0.5690 | 0.5679 | 0.3159 | 0.6964 |
| | 0.4696 | 0.7017 | 0.5432 | 0.8515 | 0.8870 | 0.9139 | 0.9773 | 0.8608 | 0.9476 |
| IoU | 0.0792 | 0.1677 | 0.0851 | 0.2087 | 0.2897 | 0.2878 | 0.2685 | 0.1894 | 0.3356 |

Table 20.

Comparison of the cable vibration frequencies sampled by the acceleration sensor with those derived from the videos.

| C-T Ratio | First-Order: Video (Hz) | First-Order: Acceleration Sensor (Hz) | First-Order: Percentage Error (%) | Second-Order: Video (Hz) | Second-Order: Acceleration Sensor (Hz) | Second-Order: Percentage Error (%) |
|---|---|---|---|---|---|---|
| 0.01 | 5.629 | 5.606 | 0.41 | 11.15 | 11.07 | 0.72 |
| 0.02 | 5.669 | 5.606 | 1.12 | 11.13 | 11.07 | 0.54 |
| 0.04 | 5.583 | 5.606 | −0.41 | 11.03 | 11.07 | −0.36 |
| 0.08 | 5.506 | 5.606 | −1.78 | 10.90 | 11.07 | −1.54 |
| 0.12 | 5.506 | 5.606 | −1.78 | 10.88 | 11.07 | −1.71 |
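
The percentage errors are signed and measured relative to the acceleration-sensor averages (5.606 Hz and 11.07 Hz). A minimal sketch recomputing them from the tabulated frequencies; `percentage_error` is an illustrative name. Recomputing from the rounded values matches the listed errors to within about 0.01 percentage points (for example, −1.72 versus −1.71 for the second order at a C-T ratio of 0.12), a difference attributable to rounding of the tabulated frequencies.

```python
SENSOR_HZ = {"first": 5.606, "second": 11.07}   # acceleration-sensor averages

VIDEO_HZ = {   # video-derived (first, second) frequencies keyed by C-T ratio
    0.01: (5.629, 11.15),
    0.02: (5.669, 11.13),
    0.04: (5.583, 11.03),
    0.08: (5.506, 10.90),
    0.12: (5.506, 10.88),
}

def percentage_error(measured, reference):
    """Signed error of `measured` relative to `reference`, in percent."""
    return 100.0 * (measured - reference) / reference

for ratio, (f1, f2) in VIDEO_HZ.items():
    e1 = percentage_error(f1, SENSOR_HZ["first"])
    e2 = percentage_error(f2, SENSOR_HZ["second"])
    print(f"C-T {ratio:.2f}: first {e1:+.2f}%, second {e2:+.2f}%")
```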

Table 21.

Comparative study of CableNet, the existing acceleration sensor-based method, and the methods proposed in the references.

| Method | Device | Algorithm | Efficiency | Cost-Effectiveness | Applicability to Varying Backgrounds | Error ¹ |
|---|---|---|---|---|---|---|
| CableNet | Camera | Semantic segmentation | Real time (25 FPS) | Good | Good | 0.41% |
| Reference [13] | Camera | Background differencing | Real time | Good | Poor | ±0.5% |
| Reference [16] | Camera | Digital image processing | Real time | Good | Poor | 0.5%–3.5% |
| Reference [20] | Mobile phone | Hough transform | Real time | Good | Poor | 0.44%–0.96% |
| Reference [51] | Mobile phone | – | Real time | Poor | – | 0.04%–0.38% |
| Acceleration sensor | Acceleration sensor | – | Real time | Poor | – | – |

Table 22.

First- and second-order frequencies derived from videos with sampling frequencies of 30 Hz and 60 Hz.

| C-T Ratio | First-Order Frequency, 30 Hz Sampling (Hz) | First-Order Frequency, 60 Hz Sampling (Hz) | Second-Order Frequency, 30 Hz Sampling (Hz) | Second-Order Frequency, 60 Hz Sampling (Hz) |
|---|---|---|---|---|
| 0.01 | 5.628 | 5.629 | 11.14 | 11.15 |
| 0.02 | 5.667 | 5.669 | 11.12 | 11.13 |
| 0.04 | 5.583 | 5.583 | 11.03 | 11.03 |
| 0.08 | 5.506 | 5.506 | 10.90 | 10.90 |
| 0.12 | 5.506 | 5.506 | 10.88 | 10.88 |
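
Both sampling frequencies exceed twice the second-order frequency (2 × 11.07 Hz = 22.14 Hz < 30 Hz), so neither of the first two modes lies above the Nyquist limit and both are captured without aliasing. A minimal sketch of the folding rule, with `apparent_frequency` as an illustrative name; the 16.8 Hz component is hypothetical and only shows what a higher mode would look like at 30 Hz:

```python
def apparent_frequency(f, fs):
    """Frequency (Hz) at which a tone of f Hz appears when sampled at fs Hz.

    Components above the Nyquist limit fs / 2 fold back into [0, fs / 2].
    """
    return abs(f - fs * round(f / fs))

for fs in (30.0, 60.0):
    print(fs, [apparent_frequency(f, fs) for f in (5.606, 11.07)])  # unchanged

# A hypothetical 16.8 Hz component would alias at 30 Hz but not at 60 Hz
print(apparent_frequency(16.8, 30.0))   # about 13.2
print(apparent_frequency(16.8, 60.0))   # 16.8
```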