Abstract

Low-rank representation with hypergraph regularization has achieved great success in hyperspectral imagery because it explores global structure while also incorporating local information. Existing hypergraph learning methods either construct the hypergraph from a fixed similarity matrix or optimize it adaptively in the original feature space; they do not update the hypergraph in the low-dimensional subspace. In addition, the clustering performance of existing k-means-based methods is unstable because k-means is sensitive to the initialization of the cluster centers. To address these issues, we propose a novel unified low-rank subspace clustering method with a dynamic hypergraph for hyperspectral images (HSIs). In our method, the hypergraph is adaptively learned from the low-rank subspace feature, which can capture a more complex manifold structure effectively. In addition, we introduce a rotation matrix to learn continuous and discrete clustering labels simultaneously, without the information loss caused by relaxation. The unified model jointly learns the hypergraph and the discrete clustering labels, and the subspace feature is adaptively learned by considering the optimal dynamic hypergraph, which gives the model a self-taught property. Experimental results on real HSIs show that the proposed methods achieve better performance than eight state-of-the-art clustering methods.

1. Introduction

Hyperspectral image (HSI) classification is an important problem in the remote sensing community. Extensive prior literature addresses it in the framework of supervised classification [1,2,3], in which the training of the classifier relies on labeled data (with ground-truth information). However, labeled datasets are scarce and, in some applications, impossible to obtain manually [4]. To exploit unlabeled remote sensing data, unsupervised classification methods are necessary; these segment the dataset into several groups without any prior label information.

According to the existing literature, clustering methods are divided into several categories [5]. The two most popular categories suited to the characteristics of HSIs are centroid-based methods and spectral-based methods. Among the centroid-based clustering methods, k-means [6] and fuzzy c-means (FCM) [7] have received the most attention owing to their computational efficiency and simplicity; they group pixels by iteratively minimizing the distance between each pixel and the cluster centroids. Recently, spectral-based clustering methods have become highly popular and have been widely used for hyperspectral data clustering. In general, these methods first construct a similarity matrix from the original data and then apply a centroid-based method to the eigenspace of the Laplacian matrix to segment pixels. Specifically, locally spectral clustering (LSC) [8] and globally spectral clustering (GSC) [9] use the local and global neighbors of each pixel, respectively, to construct the similarity matrix that represents the relationship between pairs of pixels, and then apply k-means to the eigenspace of the Laplacian matrix; however, they cannot distinguish which subspace each pixel should belong to. Moreover, the large spectral variability results in a uniform distribution of feature points, which increases the difficulty of HSI clustering [5]. The recently developed sparse subspace clustering (SSC) [10,11] and low-rank subspace clustering (LRSC) [12,13] methods use the sparse and low-rank representation coefficients, respectively, to define the adjacency matrix, and apply spectral clustering to obtain the segmentation result. Compared with SSC, LRSC is better at exploring global structure information because it finds the lowest-rank representation of all the data jointly. Nevertheless, the original LRSC model cannot explore the local latent structure of the data while exploiting the corresponding subspaces.

Inspired by the theory of manifold learning in image processing [14], Lu et al. [15] proposed the graph-embedded low-rank representation (GLRR) by incorporating graph regularization into the low-rank representation objective function. However, the general graph regularization model only uses the pairwise relationship between two pixels and therefore cannot capture the complex high-order relationships among pixels. In fact, the relationship between the hyperspectral pixels we are interested in is not just a pairwise relationship between two pixels, but a plural or even more complex relationship. Instead of considering pairwise relations, the hypergraph, first proposed by Berge [16], models the data manifold structure by exploring the high-order relations among data points. Zhou et al. [17] extended the methodology of spectral clustering from undirected graphs to hypergraphs. Since then, hypergraphs have been widely used in feature extraction [18], band selection [19], dimension reduction [20], and noise reduction [21] for hyperspectral images. According to the prior literature, hypergraph methods based on representation learning fall into two categories. The first uses the representation coefficients as hyperedge weights to construct the hypergraph; for example, [2,22] regard the sparse and low-rank coefficients, respectively, as new features to measure the similarity of the pixels and adaptively select neighbors for constructing the hypergraph. The second uses the hypergraph as a regularizer to optimize the representation coefficients by capturing the intrinsic geometrical structure. Gao et al. [23] first introduced the hypergraph into sparse coding, in which the hypergraph explores the similarity among the pixels within the same hyperedge and simultaneously encourages their sparse representation coefficients to be similar to each other. Motivated by this idea, hypergraph regularization was subsequently introduced into non-negative matrix factorization (NMF) [24], sparse NMF [25], and low-rank representation [26,27].

It is noteworthy that there are two main problems in existing hypergraph-based representation learning methods. First, the pre-constructed hypergraph is usually learned from the original data with a certain distance measurement and is not optimized dynamically. To address this, Zhang et al. [28] proposed a unified framework for data structure estimation and feature selection, which updates the hypergraph weights during the hypergraph learning process. In Reference [29], a dynamic hypergraph structure learning method was proposed, in which the incidence matrix of the hypergraph is learned by considering the data correlation in both the label space and the feature space. In addition, the data in the original feature space may contain various kinds of noise, which can degrade performance since these methods depend heavily on the constructed hypergraph. Zhu et al. [30,31] proposed an unsupervised sparse feature selection method by embedding a hypergraph Laplacian regularizer, in which the hypergraph was learned dynamically from the optimized sparse subspace feature. In other work, the hypergraph was adaptively learned from the latent representation space, which can robustly characterize the intrinsic data structure [32,33]. Second, the clustering performance obtained by the existing k-means-based methods is unstable because the initialization of the cluster centers has a strong impact on the performance of k-means. Therefore, it is necessary to construct a unified framework that directly generates discrete clustering labels [34,35,36]. However, existing unified clustering frameworks are based on the general graph structure, which may lead to significant information loss and reduce the performance of the clustering algorithm.

To address the issues, we propose a novel unified dynamic hypergraph low-rank subspace clustering method for hyperspectral images, known as UDHLR. First, we develop a dynamic hypergraph low-rank subspace clustering method, known as DHLR, where the hypergraph regularization is used to preserve the local complex structure of the low-dimensional data. Meanwhile, the hypergraph is adaptively learned from the low-rank subspace feature. However, the DHLR algorithm works in two separate steps: learning the low-rank coefficient matrix as similarity graph; generating the discrete clustering label by the k-means method. Therefore, we integrate these two subtasks into a unified framework, in which low-rank representation coefficient, hypergraph structure and discrete clustering label are optimized by using the results of the others to get an overall optimal result. The main contributions of our methods are summarized as follows:

- (1) Instead of pre-constructing fixed hypergraph incidence and weight matrices, the hypergraph is adaptively learned from the low-rank subspace feature. The dynamically constructed hypergraph is well structured and theoretically suitable for clustering.

- (2) The proposed method simultaneously optimizes continuous labels and discrete cluster labels through a rotation matrix, without the information loss caused by relaxation.

- (3) It jointly learns the similarity hypergraph from the learned low-rank subspace data and the discrete clustering labels by solving a unified optimization problem, in which the low-rank subspace feature and the hypergraph are adaptively learned by considering the clustering performance, and the continuous clustering labels serve only as intermediate products.

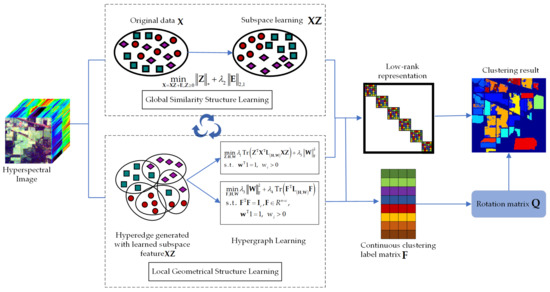

The remainder of this paper is organized as follows: Section 2 revisits the low-rank representation and the hypergraph. Section 3 describes the proposed DHLR and UDHLR models. Section 4 presents the experimental setting and experimental results. Section 5 discusses the computational complexity. Finally, conclusions are presented in Section 6. The framework of the proposed methods is shown in Figure 1.

Figure 1.

Illustration of the proposed method.

2. Related Work

The important notations in the paper are summarized in Table 1.

Table 1.

Important notation used in this paper.

2.1. Low-Rank Representation

Let $X = [x_1, x_2, \ldots, x_n] \in \mathbb{R}^{d \times n}$ denote a hyperspectral image with n samples, where $x_i \in \mathbb{R}^{d}$ represents the i-th pixel with d spectral bands. Low-rank representation (LRR) seeks the lowest-rank representation for clustering by solving the following objective function:

where $Z \in \mathbb{R}^{n \times n}$ denotes the lowest-rank representation matrix under a self-expressive dictionary [13], $\operatorname{rank}(Z)$ is the rank of matrix Z, and N is a sparse matrix of outliers. However, the rank minimization problem is NP-hard and difficult to optimize, so the nuclear norm is adopted as a surrogate, yielding the following optimization [13]:

where $\|Z\|_*$ is the nuclear norm of matrix Z, calculated as $\|Z\|_* = \sum_i \sigma_i$, and $\sigma_i$ is the i-th singular value of matrix Z. The representation matrix Z can be obtained by solving the above problem with the inexact augmented Lagrange multiplier (ALM) method [37]. Finally, the adjacency matrix, whose entries serve as edge weights, is constructed from the obtained low-rank coefficient matrix, and the clustering result is obtained by applying k-means to the eigenspace of the Laplacian matrix induced by the adjacency matrix.

2.2. Hypergraph

The relationship between the pixels we are interested in is not just a pairwise relationship between two pixels, but a plural or even more complex relationship. Simply compressing the multivariate relationship into a pairwise relationship between two pixels inevitably loses a lot of useful information, which affects the accuracy of feature learning to a certain extent [19].

Let $G = (V, E, W)$ denote a hypergraph, where $V$ and $E$ are the sets of vertices and hyperedges, respectively. The dataset $X$ makes up the set of vertices $V$, and $W$ denotes the weight matrix of the hyperedges. For simplicity, we only consider the case where every hyperedge contains the same number of vertices. For a given edge , the hyperedge weight can be constructed as , in which is the set of the nearest neighbors to , and is the kernel parameter. An incidence matrix $H$ denotes the relationship between vertices and hyperedges, with entries defined as:

The degree of each vertex $v$ is defined as $d(v) = \sum_{e \in E} w(e)\, h(v, e)$, and the degree of hyperedge $e$ is defined as $\delta(e) = \sum_{v \in V} h(v, e)$. Then, $D_v$ and $D_e$ are the diagonal vertex-degree matrix and hyperedge-degree matrix, respectively. Finally, the normalized hypergraph Laplacian matrix is

Thus, the hypergraph can represent the local structure information and the complex relationships between pixels well. It is worth noting that the quality of depends on and ; we use to represent the hypergraph Laplacian hereunder.
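As a concrete illustration of the quantities defined in this subsection, the sketch below assembles a normalized hypergraph Laplacian from an incidence matrix and a vector of hyperedge weights. It assumes the standard normalized form of Zhou et al. [17], $I - D_v^{-1/2} H W D_e^{-1} H^{\top} D_v^{-1/2}$; the function name `hypergraph_laplacian` and the toy inputs `H_toy` and `w_toy` are hypothetical and are not taken from the paper.

```python
import numpy as np

def hypergraph_laplacian(H, w):
    """Normalized hypergraph Laplacian, assuming the form of Zhou et al. [17]."""
    H = np.asarray(H, dtype=float)      # (n_vertices, n_edges) incidence matrix
    w = np.asarray(w, dtype=float)      # (n_edges,) positive hyperedge weights
    d_v = H @ w                         # vertex degrees: sum_e w(e) h(v, e)
    d_e = H.sum(axis=0)                 # hyperedge degrees: sum_v h(v, e)
    dv_inv_sqrt = 1.0 / np.sqrt(d_v)    # every vertex must lie in some hyperedge
    # Theta = Dv^{-1/2} H W De^{-1} H^T Dv^{-1/2}
    theta = (dv_inv_sqrt[:, None] * H) @ np.diag(w / d_e) @ (H.T * dv_inv_sqrt[None, :])
    return np.eye(H.shape[0]) - theta

# Toy hypergraph: 4 vertices, 2 hyperedges {0, 1, 2} and {1, 2, 3}.
H_toy = np.array([[1, 0], [1, 1], [1, 1], [0, 1]], dtype=float)
w_toy = np.array([1.0, 2.0])
L_toy = hypergraph_laplacian(H_toy, w_toy)
```

Under this assumed form the matrix is symmetric and positive semidefinite, and the vector of square-rooted vertex degrees lies in its null space, which is a convenient sanity check when the incidence matrix is rebuilt at every iteration.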

3. Materials and Methods

The conventional hypergraph construction is based only on the original features and is independent of the features learned in the low-rank subspace. There is no guarantee that such a hypergraph is optimal for modeling the pixelwise relationships among the subspace features. Consequently, a suboptimal hypergraph structure can lead to a suboptimal solution in the process of learning the incidence matrix. To address these problems, we propose to learn a dynamic hypergraph that explores the intrinsic complex local structure of pixels in their low-dimensional feature space. In addition, hypergraph-based manifold regularization helps the low-rank representation coefficients capture the global structure information of the hyperspectral data. Finally, the proposed model learns a rotation matrix to obtain continuous labels and discrete cluster labels simultaneously in one step.

3.1. Dynamic Hypergraph-Based Low-Rank Subspace Clustering

Based on Section 2.2, a hypergraph structure can be used to maintain the local relationship of the original data [17,28]. First, we propose to preserve the local complex structure of the low-dimensional data by the hypergraph regularization. To do this, we design the following objective function:

Obviously, Equation (5) is equivalent to:

where is the hypergraph Laplacian, and and are two tuning parameters. However, is pre-constructed from the original data and is usually not learned dynamically. In this paper, we propose to update the hypergraph based on the low-dimensional subspace information and, furthermore, to couple it with the learning of in a unified framework. To achieve this, we design the final objective function as follows:

where , the two-norm regularization on the weight matrix is used to avoid overfitting. On the one hand, can preserve the global structures via the low-rank constraint to conduct subspace learning. On the other hand, it can also preserve the local structures via the second term of Equation (7) to select the informative features. The proposed dynamic hypergraph low-rank subspace clustering is known as DHLR.

3.2. Optimization Algorithm for Solving Problem (7)

In order to solve problem (7), the variable is introduced to make (7) separable for optimization as follows:

The optimization problem (8) can be solved with ADMM algorithm by minimizing the following augmented Lagrangian formulation:

where , and are Lagrange multipliers, is a positive penalty parameter. The variables . and Lagrange multipliers can be obtained by alternately solving each variable of (9) with other variables fixed. The detailed solution steps are as follows:

Update: Fixing variables , we can obtain the solution of by solving the following problem:

By introducing the singular value thresholding (SVT) operator [38], the solution of is given as:

where denotes the SVT operator.
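The SVT operator [38] soft-thresholds the singular values of its argument and is the proximal operator of the nuclear norm. A minimal NumPy sketch follows; the function name `svt` and the threshold argument `tau` are illustrative choices, and the particular matrix and threshold that enter the update above are not reproduced here.

```python
import numpy as np

def svt(A, tau):
    """Singular value thresholding: shrink each singular value of A by tau."""
    U, s, Vt = np.linalg.svd(A, full_matrices=False)
    s_shrunk = np.maximum(s - tau, 0.0)   # soft-threshold the spectrum
    return (U * s_shrunk) @ Vt            # rebuild with the shrunken singular values

# Singular values 3 and 1 become 1 and 0 after thresholding at tau = 2.
A_demo = np.diag([3.0, 1.0])
A_shrunk = svt(A_demo, 2.0)
```

Because singular values below the threshold are set to zero, each application can lower the rank of the iterate, which is how the low-rank structure emerges during the ADMM iterations.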

Update: Fixing variables , we can obtain the objective function about as follows:

Problem (12) has a closed-form solution as a quadratic minimization problem, which is:

Update: Fixing variables , we can obtain the solution of by solving the following problem:

The objective function on the variable can be rewritten as:

In which , the i-th column of is

where and are the i-th column of matrices and , respectively.

Update and : According to the definition of the hypergraph in Section 2.2, the hyperedges are constructed from the original data, so noise in the data may reduce the accuracy of the hypergraph. To tackle this problem, we use the noise-free low-dimensional subspace feature to learn the hyperedges. Following [30], the set of hyperedges is then constructed as follows:

in which is the near neighbor pixels. In this work, is the top K similarity neighbors of except for itself. After producing the incidence matrix , it is easy to work out by
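The neighbor-based hyperedge construction can be sketched as follows. This is an illustration under stated assumptions rather than the authors' exact rule: similarity is measured by Euclidean distance between the columns of the subspace feature, each pixel generates one hyperedge containing itself and its K nearest neighbors, and the names `knn_incidence`, `Z_toy` and `H_knn` are hypothetical.

```python
import numpy as np

def knn_incidence(Z, k):
    """Incidence matrix with one hyperedge per pixel (assumed construction).

    Z : (m, n) array whose columns are the per-pixel subspace features.
    Column j of the result marks pixel j and its k nearest neighbors.
    """
    n = Z.shape[1]
    sq = np.sum(Z ** 2, axis=0)
    dist = sq[:, None] + sq[None, :] - 2.0 * (Z.T @ Z)   # squared Euclidean distances
    np.fill_diagonal(dist, np.inf)       # a pixel is not its own neighbor
    H = np.zeros((n, n))
    for j in range(n):
        neighbors = np.argsort(dist[j])[:k]
        H[neighbors, j] = 1.0
        H[j, j] = 1.0                    # the generating pixel joins its hyperedge
    return H

# Two well-separated groups of three one-dimensional features.
Z_toy = np.array([[0.0, 0.1, 0.2, 5.0, 5.1, 5.2]])
H_knn = knn_incidence(Z_toy, 2)
```

In this toy case no hyperedge mixes pixels from the two groups, so the incidence matrix is block diagonal; recomputing it from the current subspace feature at each iteration is what makes the hypergraph dynamic.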

Update: Fixing variables , we can obtain the objective function about as follows:

in which . By letting , and . Equation (19) can be rewritten as the following form:

Then Equation (20) can be rewritten as the following form:

According to the Karush–Kuhn–Tucker conditions, the closed-form solution for is:

Then we further obtain and

Update the Lagrange multipliers , and penalty parameter by

The entire procedure for solving DHLR method is summarized in Algorithm 1.

Algorithm 1.

The DHLR algorithm for HSI clustering.

3.3. Unified Dynamic Hypergraph-Based Low-Rank Subspace Clustering

Most existing hypergraph-based clustering methods contain two independent processes: hypergraph construction and clustering. They use the hypergraph to construct a similarity matrix and then apply spectral clustering or k-means to produce the final clustering labels [39]. Although this approach is very popular in clustering applications, it may produce very unstable performance because the initialization of the cluster centers has a strong impact on the performance of k-means [8]. To address this problem, we propose a unified framework that exploits the correlation between the similarity hypergraph and the discrete cluster labels for the clustering task. It updates the dynamic hypergraph with an optimal low-rank subspace feature and then directly generates the discrete cluster labels by introducing a rotation matrix. As a result, the proposed model can not only make use of the optimal dynamic hypergraph and the global low-dimensional feature information but also obtain the discrete clustering labels. To this end, the objective function can be written as

where and are penalty parameters. In general, (s.t. ) is the cluster indicator matrix in the spectral clustering method. To avoid the NP-hard problem caused by the discrete constraint on , is relaxed into the continuous domain, and the orthogonal constraint is adopted to make it computationally tractable. To achieve an ideal clustering structure, [40] proposed imposing a rank constraint on the hypergraph Laplacian matrix induced by the representation matrix , . Under this constraint, we can directly partition the data into clusters. The rank-constrained problem is equivalent to minimizing [41]. According to Ky Fan’s theorem [42], . To generate the discrete clustering labels, we introduce a rotation matrix . According to the spectral solution invariance property [43], the last term finds a proper orthonormal that makes approximate the real discrete clustering labels, where is the discrete label matrix. Equation (25) is not a simple concatenation of terms; it exploits the relationship between the dynamic hypergraph matrix and the clustering labels. Ideally, we have if and only if pixels i and j are in the same cluster, equivalently . The converse also holds. Therefore, the inferred labels and the similarity hypergraph matrix provide feedback to each other. From this point of view, our clustering framework has the self-taught property.
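Ky Fan's theorem states that the sum of the c smallest eigenvalues of a symmetric matrix equals the minimum of the trace of $F^{\top} L F$ over all F with orthonormal columns, and that the minimum is attained by the corresponding eigenvectors. The sketch below checks this numerically. For simplicity it uses an ordinary graph Laplacian of two disconnected triangles rather than a hypergraph Laplacian; `ky_fan_minimizer`, `L_demo` and `Q_rand` are hypothetical names.

```python
import numpy as np

def ky_fan_minimizer(L, c):
    """Return F (eigenvectors of the c smallest eigenvalues) and their sum."""
    vals, vecs = np.linalg.eigh(L)       # eigenvalues in ascending order
    return vecs[:, :c], vals[:c].sum()

# Graph with two connected components (two triangles).
A = np.zeros((6, 6))
for i, j in [(0, 1), (1, 2), (0, 2), (3, 4), (4, 5), (3, 5)]:
    A[i, j] = A[j, i] = 1.0
L_demo = np.diag(A.sum(axis=1)) - A

F_opt, value = ky_fan_minimizer(L_demo, 2)

# Any other orthonormal basis gives a trace at least as large.
rng = np.random.default_rng(0)
Q_rand, _ = np.linalg.qr(rng.standard_normal((6, 2)))
trace_rand = np.trace(Q_rand.T @ L_demo @ Q_rand)
```

With two connected components the Laplacian has exactly two zero eigenvalues, so the minimum is zero. This is the sense in which driving the trace term towards zero encourages a structure with exactly c clusters.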

3.4. Optimization Algorithm for Solving Problem (25)

In order to solve problem (25), the variable is introduced to make (25) separable for optimization as follows:

Then, (26) can be rewritten into the following augmented Lagrangian formulation:

The steps to update and are similar to those of DHLR except for updating , , and .

Update: Fixing variables , we can obtain the solution of by solving the following problem:

By letting , , and , since is diagonal matrix, Equation (28) can be rewritten as the following form:

Similar to the solution of problem (20), the closed-form solution for is:

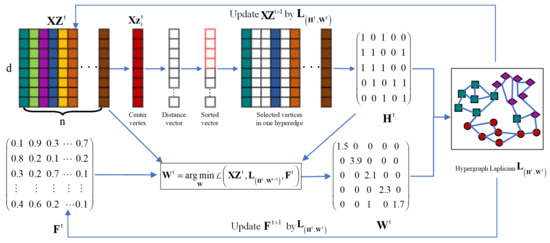

We further obtain , and via the same formulation as Equation (23). The diagram about the iterative optimization of and in the dynamic hypergraph is shown in Figure 2.

Figure 2.

Illustration of updating the dynamic hypergraph.

Update: with other variables fixed, it is equivalent to solving

The solution of variable can be efficiently obtained via the algorithm proposed by [44].

Update: By fixing other variables, we have

It has a closed-form solution as an orthogonal Procrustes problem [45]. The solution is , where and are left and right parts of the SVD decomposition of .
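The closed-form solution of an orthogonal Procrustes problem can be sketched in a few lines. The sketch assumes the subproblem has the form of minimizing $\|Y - FQ\|_F^2$ subject to $Q^{\top} Q = I$, in which case the minimizer is the product of the left and right singular vectors of $F^{\top} Y$; the function name `procrustes_rotation` and the demo variables are hypothetical.

```python
import numpy as np

def procrustes_rotation(F, Y):
    """Orthogonal Q minimizing ||Y - F Q||_F^2 (assumed form of the subproblem)."""
    U, _, Vt = np.linalg.svd(F.T @ Y)
    return U @ Vt

# Recover a known rotation from noiseless data.
rng = np.random.default_rng(0)
F_demo = rng.standard_normal((6, 2))
angle = 0.7
Q_true = np.array([[np.cos(angle), -np.sin(angle)],
                   [np.sin(angle),  np.cos(angle)]])
Q_hat = procrustes_rotation(F_demo, F_demo @ Q_true)
```

Since only a c-by-c matrix is decomposed, this step is inexpensive compared with the other updates.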

Update: with other variables fixed, the problem becomes

Note that , so the above subproblem can be rewritten as below:

The optimal solution of the variable is:
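A common way to obtain a discrete label matrix from rotated continuous labels is to place a single 1 in each row at the position of the largest entry. The sketch below assumes this row-wise argmax rule, which is the usual solution for subproblems of this type; it is not a transcription of the paper's expression, and the names `discretize` and `FQ_demo` are hypothetical.

```python
import numpy as np

def discretize(FQ):
    """One-hot label matrix: a 1 at the largest entry of each row (assumed rule)."""
    n, c = FQ.shape
    Y = np.zeros((n, c))
    Y[np.arange(n), np.argmax(FQ, axis=1)] = 1.0
    return Y

# Rotated continuous labels for four pixels and two clusters.
FQ_demo = np.array([[0.9, 0.1],
                    [0.2, 0.7],
                    [0.6, 0.5],
                    [-0.1, 0.3]])
Y_demo = discretize(FQ_demo)
labels_demo = Y_demo.argmax(axis=1)
```

Each row of the result contains exactly one nonzero entry, so the cluster assignment can be read off directly without a separate k-means step.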

Update the Lagrange multipliers and penalty parameter like DHLR in Equation (24). The details of the UDHLR algorithm optimization are summarized in Algorithm 2.

Algorithm 2.

The UDHLR algorithm for HSI clustering.

4. Results

4.1. Experimental Datasets

To validate the effectiveness of the proposed methods, we conduct experiments on three real-world hyperspectral datasets, namely Indian Pines, Salinas-A, and Jasper Ridge. Table 2 summarizes the detailed information of these three datasets.

Table 2.

Summary of the three hyperspectral datasets.

4.1.1. Indian Pines

The Indian Pines dataset was collected by an Airborne Visible/Infrared Imaging Spectrometer (AVIRIS) sensor over Northwestern Indiana in 1992. The image has a spatial resolution of 20 m and 220 spectral bands ranging from 0.4 to 2.5 μm. In our experiments, 20 spectral bands (104–108, 150–163, and 220) are removed because of water absorption and noise [46]. The size of this image is . There are originally 16 classes in total. Following, e.g., [47], nine main classes were used in our experiment: corn-no-till, corn-minimum-till, grass-pasture, grass-trees, hay-windrowed, soybean-no-till, soybean-minimum-till, soybean-clean, and woods.

4.1.2. Salinas-A

The original dataset is the Salinas Valley scene, which was acquired by the AVIRIS sensor over the Salinas Valley, California, in 1998. The size of this image is and contains 224 spectral bands with a spatial resolution of 3.7 m per pixel. There are originally 16 classes in Salinas Valley. Following, e.g., [48], a subset of the Salinas Valley scene, denoted as Salinas-A hereinafter, is adopted, which contains pixels with 6 classes; 204 bands remain after removing noisy bands. The subset is located at [591–678] × [158–240] of the Salinas Valley scene.

4.1.3. Jasper Ridge

There are pixels and 224 spectral bands in the Jasper Ridge dataset. After removing the spectral bands 1–3, 108–112, 154–166 and 220–224, which are affected by water vapor and atmospheric effects, we obtained 198 spectral bands. Since the ground truth of the full hyperspectral image is too complex to obtain, we consider a sub-image containing pixels with four classes. The first pixel of the sub-image corresponds to the (105,269)-th pixel in the original image.

4.2. Experimental Setup

4.2.1. Evaluation Metrics

In the experiments, the normalized mutual information (NMI) is employed to gauge the clustering performance quantitatively; it measures the overlap between the experimentally obtained labels and the ground-truth labels. Given two variables and , NMI is defined as [49]:

where is the mutual information between and , and respectively denote the entropies of and . Obviously, if is identical with , will be equal to 1; if is independent from , will become 0.
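A small, dependency-free sketch of NMI is given below. It assumes the geometric-mean normalization, that is, the mutual information divided by the square root of the product of the two entropies; other normalizations appear in the literature and the one used in [49] may differ. Returning 0 when either labeling has zero entropy is a convention chosen here, and the function names are hypothetical.

```python
from collections import Counter
from math import log, sqrt

def entropy(labels):
    """Shannon entropy (natural logarithm) of a label sequence."""
    n = len(labels)
    return -sum((c / n) * log(c / n) for c in Counter(labels).values())

def mutual_information(a, b):
    """Mutual information between two label sequences of equal length."""
    n = len(a)
    count_a, count_b, count_ab = Counter(a), Counter(b), Counter(zip(a, b))
    return sum((c / n) * log(c * n / (count_a[x] * count_b[y]))
               for (x, y), c in count_ab.items())

def nmi(a, b):
    """NMI with geometric-mean normalization (an assumption of this sketch)."""
    h_a, h_b = entropy(a), entropy(b)
    if h_a == 0.0 or h_b == 0.0:
        return 0.0                      # convention for a constant labeling
    return mutual_information(a, b) / sqrt(h_a * h_b)

same_partition = nmi([0, 0, 1, 1], [1, 1, 0, 0])   # identical up to renaming
independent = nmi([0, 0, 1, 1], [0, 1, 0, 1])      # statistically independent
```

Because NMI depends only on the partition and not on the label names, no alignment between cluster labels and ground-truth classes is needed before computing it.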

In addition, we evaluate the clustering performance by measuring the user’s accuracy, producer’s accuracy, overall accuracy (OA), average accuracy (AA), and kappa coefficient. For a dataset with n pixels, is the clustering label of pixel obtained by the clustering method, and is the ground-truth label of . The OA is obtained by

where , if ; , otherwise. is the optimal mapping function that permutes the clustering labels to match the ground-truth labels. The best mapping can be found by using the Kuhn–Munkres algorithm [50]. The average accuracy (AA) is the mean over classes of the per-class accuracy, i.e., the ratio between the number of correctly assigned pixels of each class and the total number of pixels of that class. For clustering tasks, the clustering labels obtained in the experiment must be aligned with the class labels of the ground truth. To this end, a simple exhaustive search over all permutations of the cluster labels is used to maximize the resulting OA, as was done in [51]. We note that this alignment is the most favorable for maximizing OA; alternative alignments may be more favorable for maximizing AA or the kappa coefficient [52].
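The exhaustive alignment described above can be sketched with the standard library alone. The function name `overall_accuracy` is hypothetical, and the sketch assumes that the number of clusters does not exceed the number of ground-truth classes; for many classes the Kuhn–Munkres algorithm is the practical choice, since the number of permutations grows factorially.

```python
from itertools import permutations

def overall_accuracy(pred, truth):
    """OA after the best one-to-one relabeling of clusters (exhaustive search)."""
    pred_ids = sorted(set(pred))
    true_ids = sorted(set(truth))
    best_hits = 0
    # Try every injective assignment of cluster ids to class ids.
    for perm in permutations(true_ids, len(pred_ids)):
        mapping = dict(zip(pred_ids, perm))
        hits = sum(mapping[p] == t for p, t in zip(pred, truth))
        best_hits = max(best_hits, hits)
    return best_hits / len(truth)

oa_perfect = overall_accuracy([1, 1, 0, 0, 2], [0, 0, 1, 1, 2])   # relabeling only
oa_partial = overall_accuracy([0, 0, 0, 1], [1, 1, 0, 0])         # one pixel wrong
```

In the second example the best mapping sends cluster 0 to class 1 and cluster 1 to class 0, which matches three of the four pixels.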

4.2.2. Compared Methods

To evaluate the clustering performance of the proposed DHLR and UDHLR algorithms, eight clustering methods are selected for comparison. The first category comprises two centroid-based methods, namely k-means [6] and fuzzy c-means (FCM) [7]. The k-means method is run for 200 iterations in our experiments, and the fuzziness exponent in FCM is set to 2. For the second category, we compare against classical spectral-based clustering approaches using both a globally connected graph (GSC [8]) and a locally connected graph (LSC [9]). The graph weights are constructed by a Gaussian kernel. The third category comprises four subspace-based spectral clustering methods, including SSC [10,11], LRSC [12,13], GLRSC [15], and the hypergraph-regularized LRSC (HGLRSC) as described in [52].

For SSC and LRSC, the regularization parameter is set via an exhaustive search over the set , choosing the value that yields the best OA. The same is done for the parameter pair and in both GLRSC and HGLRSC as well as in (4), using an exhaustive search over the values . These parameters are used for the compared algorithms throughout the remainder of the experiments.

4.3. Parameters Tuning

There are five parameters , , , , and in UDHLR, and three parameters , , in DHLR. In this section, we evaluate the parameter sensitivity of the proposed methods on the three datasets and investigate different parameter settings. In the experiments, we tune the parameters , , , , and in the range of . We observe the variation of OA with different values of each parameter.

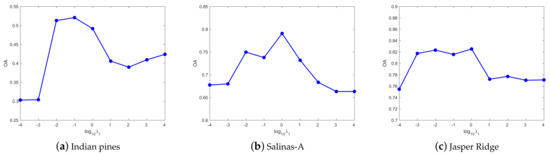

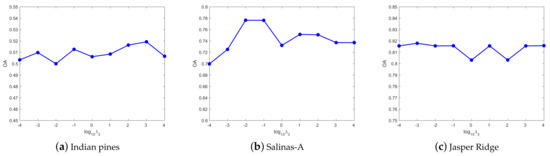

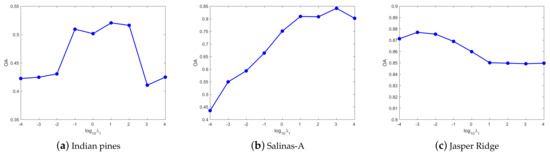

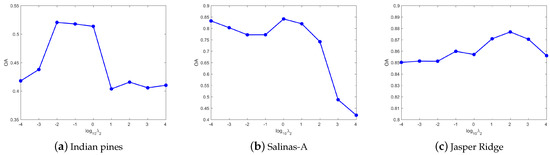

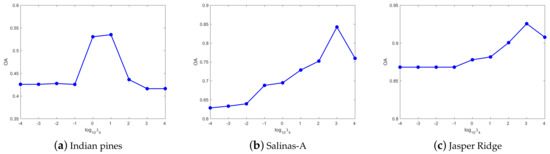

(1) Parameter analysis in DHLR: In DHLR, is the manifold regularization parameter, is the noise regularization parameter, and is the penalty parameter of the hyperedge weight . Figure 3 shows the OA of DHLR with respect to the parameter . For the Indian Pines dataset, OA peaks when . For the Salinas-A dataset, we set to obtain the best result in the experiments. For the Jasper Ridge dataset, the clustering results are better when we set . Figure 4 shows the OA of DHLR with respect to the parameter . For the Indian Pines dataset, OA peaks when . For the Salinas-A dataset, we set to obtain the best result in the experiments. For the Jasper Ridge dataset, the clustering results are better when we set . Figure 5 shows the OA of DHLR with respect to the parameter . According to Figure 5, the proposed method achieves better performance with set to 1000, 0.01, and 0.001 for the Indian Pines, Salinas-A, and Jasper Ridge datasets, respectively.

Figure 3.

The OA of DHLR with different on three datasets.

Figure 4.

The OA of DHLR with different on three datasets.

Figure 5.

The OA of DHLR with different on three datasets.

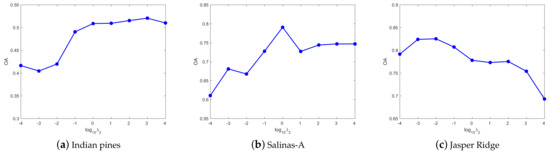

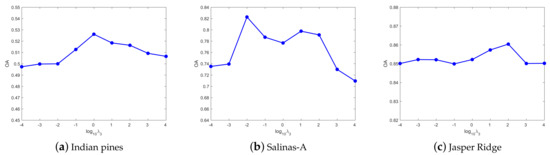

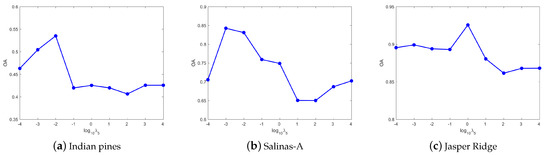

(2) Parameter analysis in UDHLR: In addition to the three parameters , , and shared with DHLR, is the parameter of the label feature manifold regularization, and is conducive to discrete label learning. Figure 6 shows the OA of UDHLR with respect to the parameter . For the Indian Pines dataset, the best results are achieved when . For the Salinas-A dataset, the clustering results are better when we set . For the Jasper Ridge dataset, we set to obtain the best result in the experiments. Figure 7 shows the OA of UDHLR with respect to the parameter . For the Indian Pines dataset, the best results are achieved when . For the Salinas-A dataset, the clustering results are better when we set . For the Jasper Ridge dataset, we set to obtain the best result in the experiments. Figure 8 shows the OA of UDHLR with respect to the parameter . UDHLR performs well when is set to 1, 0.01, and 100 for the Indian Pines, Salinas-A, and Jasper Ridge datasets, respectively. In UDHLR, and play a vital role in clustering performance. Figure 9 shows the OA values on the three datasets when tuning while keeping the other parameters fixed. As can be seen, the best result is achieved when for the Indian Pines dataset. For the Salinas-A dataset, we set in our experiments. The results in Figure 9c show that UDHLR performs well when for the Jasper Ridge dataset. Figure 10 shows the OA of UDHLR with respect to the parameter . For the Indian Pines dataset, OA peaks when . For the Salinas-A dataset, we set to obtain the best result in the experiments. For the Jasper Ridge dataset, the clustering results are better when we set .

Figure 6.

The OA of UDHLR with different on three datasets.

Figure 7.

The OA of UDHLR with different on three datasets.

Figure 8.

The OA of UDHLR with different on three datasets.

Figure 9.

The OA of UDHLR with different on three datasets.

Figure 10.

The OA of UDHLR with different on three datasets.

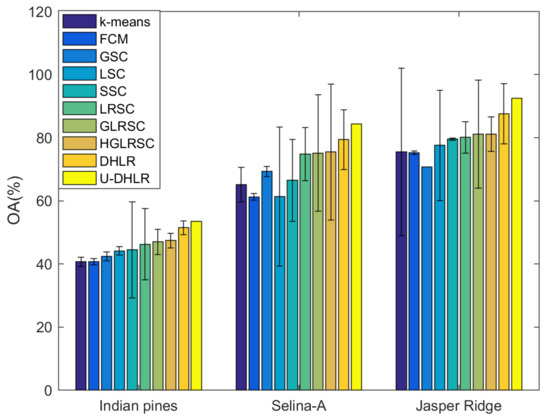

4.4. Investigation of Clustering Performance

Both the clustering maps and the quantitative evaluation results are given in this section. The presented results show that DHLR and UDHLR outperform the other methods on the three datasets. We run all the methods 100 times independently and report the mean clustering results in the corresponding tables for the three datasets. In addition, the corresponding variances of the methods on the three datasets are shown in Figure 11.

Figure 11.

Histogram of the clustering accuracy with variance.

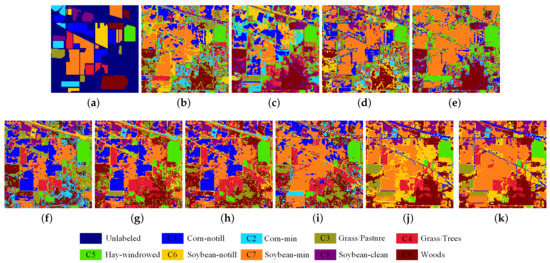

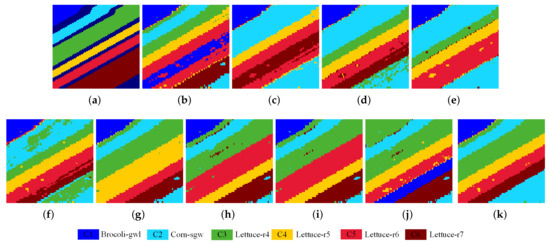

(1) Indian Pines: Figure 12 shows the clustering maps of the Indian Pines dataset, and Table 3 gives the quantitative clustering results. In general, the graph-based methods perform better than the methods that use no graph. Specifically, the k-means and FCM methods perform poorly, with many misclassifications in the cluster map, because they do not explore the local geometrical structure of the data. Compared with k-means and FCM, the GSC and LSC methods improve the clustering results by applying k-means to the eigenspace of the Laplacian matrices. The subspace clustering methods obtain better performance still by using subspace learning to model the complex inherent structure of HSI data. Compared with k-means, SSC and LRSC increase the OA by 3.82% and 5.58%, respectively. However, the learned representation coefficient matrix cannot capture the essential geometric structure information, so the clustering accuracy remains limited. GLRSC and HGLRSC improve the clustering performance of LRSC by optimizing the low-rank representation coefficient with graph and hypergraph regularization, which shows the advantage of incorporating the latent geometric structure information. Unfortunately, the hypergraph in these methods is fixed: it is constructed from the original data and is not optimized adaptively. According to the OA values reported in Figure 12, the proposed DHLR algorithm improves the OA by 5.21% compared with the classical LRSC (51.45% versus 46.24%) and by 4.07% compared with HGLRSC. Furthermore, the proposed UDHLR method obtains the best results, with a further improvement of 2.07% over DHLR.

Figure 12.

Indian Pines dataset. (a) Ground-truth. (b) k-means, 40.66%. (c) FCM, 40.70%. (d) GSC, 42.33%. (e) LSC, 44.13%. (f) SSC, 44.48%. (g) LRSC, 46.24%. (h) GLRSC, 46.86%. (i) HGLRSC, 47.38%. (j) DHLR, 51.45%. (k) UDHLR, 53.52%.

Table 3.

Performance of Indian Pines dataset.

(2) Salinas-A: Figure 13 shows the clustering maps of the Salinas-A dataset, and Table 4 gives the corresponding quantitative clustering results. Among the comparison algorithms, GLRSC and HGLRSC combine graph theory and representation learning for HSI clustering, whereas SSC and LRSC use only representation learning to obtain the new feature, and GSC and LSC use only graph theory. Table 4 shows that the clustering accuracy of GSC, LSC, SSC, and LRSC is lower than that of GLRSC and HGLRSC, which indicates that learning with the local geometric structure information noticeably improves HSI clustering. In addition, K-means and FCM generally perform worse than the spectral-based methods. Compared with the aforementioned methods, the proposed DHLR and UDHLR effectively improve the clustering performance by adaptively optimizing the hypergraph. As shown in Table 4, UDHLR achieves the highest OA of all the methods. The proposed DHLR and UDHLR algorithms effectively preserve the detailed structure information and show an obvious advantage over the other clustering methods.

Figure 13.

Salinas-A dataset. (a) Ground-truth. (b) k-means, 65.12%. (c) FCM, 61.21%. (d) GSC, 69.27%. (e) LSC, 61.36%. (f) SSC, 66.49%. (g) LRSC, 74.79%. (h) GLRSC, 75.15%. (i) HGLRSC, 75.45%. (j) DHLR, 79.37%. (k) UDHLR, 84.31%.

Table 4.

Performance of Salinas-A dataset.

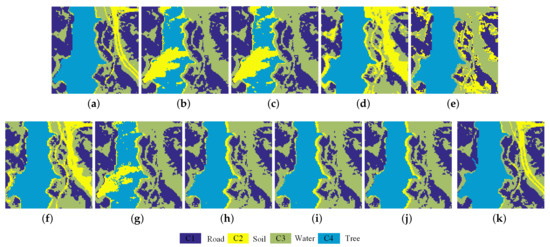

(3) Jasper Ridge: Figure 14 and Table 5 show the visual and quantitative clustering results for the Jasper Ridge dataset, respectively. They show that the centroid-based and spectral-based clustering methods (K-means, FCM, GSC, LSC, SSC, and LRSC) achieve poorer clustering performance than the methods that combine low-rank representation with graph or hypergraph regularization. GLRSC obtains a higher clustering accuracy than LRSC, and HGLRSC obtains a higher accuracy than both LRSC and GLRSC. The proposed DHLR and UDHLR algorithms outperform the other state-of-the-art clustering methods. Among them, UDHLR achieves the best clustering result, with an OA of 92.56%, which again demonstrates the advantage of the proposed algorithm.

Figure 14.

Jasper Ridge dataset. (a) Ground-truth. (b) k-means, 75.56%. (c) FCM, 75.28%. (d) GSC, 70.75%. (e) LSC, 77.55%. (f) SSC, 79.52%. (g) LRSC, 80.12%. (h) GLRSC, 81.09%. (i) HGLRSC, 81.16%. (j) DHLR, 82.89%. (k) UDHLR, 92.56%.

Table 5.

Performance of Jasper Ridge dataset.

5. Discussion

In this section, we discuss the computational complexity of the proposed DHLR and UDHLR methods. The main computational cost of the DHLR algorithm lies in updating , , , each of which has a complexity of about . As noted in [13], r is the rank of the dictionary obtained with the orthogonal basis of the dictionary data. Updating requires constructing an matrix, whose time complexity is . The complexity of updating is . In addition, updating the Lagrange multipliers has a complexity of , which is small enough to be neglected. Apart from the variables , , , , which are updated as in DHLR, the complexity of the UDHLR algorithm comes from updating , , . The complexity of updating is . Solving for involves an SVD, and its complexity is . To update , we need . Therefore, the total complexity of UDHLR is . Although the number of clusters c is small, the computational complexity of the proposed methods is much higher than that of the original LRSC algorithm because they involve matrix inversion and SVD. In the future, we will consider parallel computing to increase the running speed.
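The SVD-based update mentioned above is typical of rotation-matrix steps in discrete spectral clustering. As a minimal sketch, assume that the rotation subproblem takes the orthogonal Procrustes form, maximizing tr(QᵀFᵀY) subject to QᵀQ = I; the exact subproblem of the proposed model is not reproduced here. Under that assumption, the update reduces to the SVD of a c × c matrix, followed by a row-wise argmax to obtain the discrete labels. The names `F`, `Y`, `Q`, `update_rotation`, and `update_discrete_labels` are illustrative, and the toy data are synthetic.

```python
import numpy as np


def update_rotation(F, Y):
    """Solve max_Q tr(Q^T F^T Y) s.t. Q^T Q = I via SVD (orthogonal Procrustes)."""
    U, _, Vt = np.linalg.svd(F.T @ Y)  # F.T @ Y is only c x c
    return U @ Vt


def update_discrete_labels(F, Q):
    """Set Y to the one-hot indicator closest to the rotated embedding F @ Q."""
    rotated = F @ Q
    Y = np.zeros_like(rotated)
    Y[np.arange(rotated.shape[0]), rotated.argmax(axis=1)] = 1.0
    return Y


rng = np.random.default_rng(0)
n, c = 12, 3
labels = np.repeat(np.arange(c), n // c)
Y_true = np.eye(c)[labels]                        # synthetic one-hot indicator
R = np.linalg.qr(rng.standard_normal((c, c)))[0]  # arbitrary orthogonal matrix
F = Y_true @ R.T                                  # continuous labels: rotated indicator

# One pass of the two updates; a full solver would alternate them until convergence.
Q = update_rotation(F, Y_true)
Y = update_discrete_labels(F, Q)
print(np.allclose(Q.T @ Q, np.eye(c)), np.array_equal(Y, Y_true))
```

In this sketch, forming `F.T @ Y` costs O(nc²) and the SVD costs O(c³), so the rotation step stays cheap when the number of clusters c is small; these figures describe the sketch only, not the complexity terms of the proposed algorithms.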

6. Conclusions

In this paper, we propose a novel unified adaptive hypergraph-regularized low-rank subspace learning method for hyperspectral clustering. In the proposed framework, the low-rank and hypergraph terms are used to explore the global and local structure information of the data, and the last two terms are used to learn the continuous and discrete labels. Specifically, the hypergraph is adaptively learned from the low-rank subspace feature without relying on a fixed incidence matrix, which is theoretically optimal for clustering. In addition, the proposed model learns a rotation matrix to obtain the continuous labels and the discrete cluster labels simultaneously, which avoids the information loss caused by relaxation in many existing spectral clustering methods. It jointly learns the similarity hypergraph from the learned low-rank subspace data and the discrete clustering labels by solving a single optimization problem, in which the subspace feature is adaptively learned by considering the clustering performance and the continuous clustering labels serve only as intermediate products. The experimental results demonstrate that the proposed DHLR and UDHLR outperform the existing clustering methods. However, the computational complexity of each iteration of the proposed methods is very high, and the running time should be reduced. In the future, we will reduce the complexity of the proposed method and extend the hypergraph learning to large-scale hyperspectral image clustering.

Author Contributions

Conceptualization, J.X. and L.X.; methodology, L.X.; software, J.X.; validation, J.X., and L.X.; formal analysis, L.X. and J.Y.; writing—original draft preparation, J.X.; writing—review and editing, L.X. and J.Y.; visualization, J.X.; supervision, L.X. and J.Y.; project administration, L.X.; funding acquisition, L.X. All authors have read and agreed to the published version of the manuscript.

Funding

This work has been supported by the National Natural Science Foundation of China (Grant Nos. 61871226 and 61571230), the Jiangsu Provincial Social Developing Project (Grant No. BE2018727), and the National Major Research Plan of China (Grant No. 2016YFF0103604).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data reported in this paper are available at https://rslab.ut.ac.ir/data (accessed on 15 February 2021).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Wang, Q.; He, X.; Li, X. Locality and structure regularized low rank representation for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2018, 57, 911–923.

- Liu, J.; Xiao, Z.; Chen, Y.; Yang, J. Spatial-spectral graph regularized kernel sparse representation for hyperspectral image classification. ISPRS Int. J. Geo-Inform. 2017, 6, 258.

- Liu, J.; Wu, Z.; Xiao, Z.; Yang, J. Classification of hyperspectral images using kernel fully constrained least squares. ISPRS Int. J. Geo-Inform. 2017, 6, 344.

- Shen, Y.; Xiao, L.; Chen, J.; Pan, D. A Spectral-Spatial Domain-Specific Convolutional Deep Extreme Learning Machine for Supervised Hyperspectral Image Classification. IEEE Access 2019, 7, 132240–132252.

- Zhang, H.; Zhai, H.; Zhang, L.; Li, P. Spectral-spatial sparse subspace clustering for hyperspectral remote sensing images. IEEE Trans. Geosci. Remote Sens. 2016, 54, 3672–3684.

- Lloyd, S. Least squares quantization in PCM. IEEE Trans. Inform. Theory 1982, 28, 129–137.

- Peizhuang, W. Pattern recognition with fuzzy objective function algorithms (James C. Bezdek). SIAM Rev. 1983, 25, 442.

- Ng, A.Y.; Jordan, M.I.; Weiss, Y. On spectral clustering: Analysis and an algorithm. In Advances in Neural Information Processing Systems; MIT Press: Vancouver, BC, Canada, 2002; pp. 849–856.

- Belkin, M.; Niyogi, P. Laplacian eigenmaps for dimensionality reduction and data representation. Neur. Comput. 2003, 15, 1373–1396.

- Elhamifar, E.; Vidal, R. Sparse subspace clustering. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Los Alamitos, CA, USA, 20–25 June 2009; pp. 2790–2797.

- Elhamifar, E.; Vidal, R. Sparse subspace clustering: Algorithm, theory, and applications. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 2765–2781.

- Vidal, R.; Favaro, P. Low rank subspace clustering (LRSC). Pattern Recognit. Lett. 2014, 43, 47–61.

- Liu, G.; Lin, Z.; Yan, S.; Sun, J.; Yu, Y.; Ma, Y. Robust recovery of subspace structures by low-rank representation. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 35, 171–184.

- Zheng, M.; Bu, J.; Chen, C.; Wang, C.; Zhang, L.; Qiu, G.; Cai, D. Graph regularized sparse coding for image representation. IEEE Trans. Image Process. 2010, 20, 1327–1336.

- Lu, X.; Wang, Y.; Yuan, Y. Graph-regularized low-rank representation for destriping of hyperspectral images. IEEE Trans. Geosci. Remote Sens. 2013, 51, 4009–4018.

- Berge, C. Hypergraphs; North-Holland: Amsterdam, The Netherlands, 1989.

- Zhou, D.; Huang, J.; Schölkopf, B. Learning with hypergraphs: Clustering, classification, and embedding. Adv. Neural Inf. Process. Syst. 2006, 19, 1601–1608.

- Yuan, H.; Tang, Y.Y. Learning with hypergraph for hyperspectral image feature extraction. IEEE Geosci. Remote Sens. Lett. 2015, 12, 1695–1699.

- Bai, X.; Guo, Z.; Wang, Y.; Zhang, Z.; Zhou, J. Semisupervised hyperspectral band selection via spectral-spatial hypergraph model. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 2774–2783.

- Du, W.; Qiang, W.; Lv, M.; Hou, Q.; Zhen, L.; Jing, L. Semi-supervised dimension reduction based on hypergraph embedding for hyperspectral images. Int. J. Remote Sens. 2018, 39, 1696–1712.

- Chang, Y.; Yan, L.; Zhong, S. Hyper-laplacian regularized unidirectional low-rank tensor recovery for multispectral image denoising. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4260–4268.

- Huang, H.; Chen, M.; Duan, Y. Dimensionality reduction of hyperspectral image using spatial-spectral regularized sparse hypergraph embedding. Remote Sens. 2019, 11, 1039.

- Gao, S.; Tsang, I.W.H.; Chia, L.T. Laplacian sparse coding, hypergraph laplacian sparse coding, and applications. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 35, 92–104.

- Zeng, K.; Yu, J.; Li, C.; You, J.; Jin, T. Image clustering by hyper-graph regularized non-negative matrix factorization. Neurocomputing 2014, 138, 209–217.

- Wang, W.; Qian, Y.; Tang, Y.Y. Hypergraph-regularized sparse NMF for hyperspectral unmixing. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 681–694.

- Yin, M.; Gao, J.; Lin, Z. Laplacian regularized low-rank representation and its applications. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 38, 504–517.

- Zeng, M.; Ning, B.; Hu, C.; Gu, Q.; Cai, Y.; Li, S. Hyper-Graph Regularized Kernel Subspace Clustering for Band Selection of Hyperspectral Image. IEEE Access 2020, 8, 135920–135932.

- Zhang, Z.; Bai, L.; Liang, Y.; Hancock, E. Joint hypergraph learning and sparse regression for feature selection. Pattern Recognit. 2017, 63, 291–309.

- Zhang, Z.; Lin, H.; Gao, Y.; BNRist, K. Dynamic Hypergraph Structure Learning. In Proceedings of the International Joint Conferences on Artificial Intelligence Organization, Stockholm, Sweden, 13–19 June 2018; pp. 3162–3169.

- Zhu, X.; Zhu, Y.; Zhang, S.; Hu, R.; He, W. Adaptive Hypergraph Learning for Unsupervised Feature Selection. In Proceedings of the Twenty-Seventh International Joint Conference on Artificial Intelligence IJCAI, Melbourne, VIC, Australia, 19–25 August 2017; pp. 3581–3587.

- Zhu, X.; Zhang, S.; Zhu, Y.; Zhu, P.; Gao, Y. Unsupervised Spectral Feature Selection with Dynamic Hyper-graph Learning. IEEE Trans. Knowl. Data Eng. 2020.

- Tang, C.; Liu, X.; Wang, P.; Zhang, C.; Li, M.; Wang, L. Adaptive hypergraph embedded semi-supervised multi-label image annotation. IEEE Trans. Multimed. 2019, 21, 2837–2849.

- Ding, D.; Yang, X.; Xia, F.; Ma, T.; Liu, H.; Tang, C. Unsupervised feature selection via adaptive hypergraph regularized latent representation learning. Neurocomputing 2020, 378, 79–97.

- Kang, Z.; Peng, C.; Cheng, Q.; Xu, Z. Unified Spectral Clustering with Optimal Graph. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence (AAAI-17), San Francisco, CA, USA, 4–9 February 2017.

- Han, Y.; Zhu, L.; Cheng, Z.; Li, L.; Liu, X. Discrete Optimal Graph Clustering. IEEE Trans. Cybernet. 2020, 50, 1697–1710.

- Yang, Y.; Shen, F.; Huang, Z.; Shen, H.T. A Unified Framework for Discrete Spectral Clustering. In Proceedings of the Twenty-Fifth International Joint Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016; pp. 2273–2279.

- Lin, Z.; Chen, M.; Ma, Y. The Augmented Lagrange Multiplier Method for Exact Recovery of Corrupted Low-Rank Matrices. arXiv 2010, arXiv:1009.5055.

- Liu, G.; Yan, S. Latent Low-Rank Representation for subspace segmentation and feature extraction. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 1615–1622.

- Boley, D.; Chen, Y.; Bi, J.; Wang, J.Z.; Huang, J.; Nie, F.; Huang, H.; Rahimi, A.; Recht, B. Spectral Rotation versus K-Means in Spectral Clustering. In Proceedings of the Twenty-Seventh AAAI Conference on Artificial Intelligence, Bellevue, WA, USA, 14–18 July 2013.

- Kang, Z.; Peng, C.; Cheng, Q. Twin Learning for Similarity and Clustering: A Unified Kernel Approach. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence (AAAI-17), San Francisco, CA, USA, 4–9 February 2017.

- Mohar, B. The Laplacian spectrum of graphs. In Graph Theory, Combinatorics, and Applications; Wiley: Hoboken, NJ, USA, 1991; pp. 871–898.

- Fan, K. On a Theorem of Weyl Concerning Eigenvalues of Linear Transformations I. Proc. Natl. Acad. Sci. USA 1949, 35, 652–655.

- Yu, S.X.; Shi, J. Multiclass Spectral Clustering. In Proceedings of the IEEE International Conference on Computer Vision, Nice, France, 13–16 October 2003.

- Wen, Z.; Yin, W. A feasible method for optimization with orthogonality constraints. Math. Programm. 2013, 142, 397–434.

- Nie, F.; Zhang, R.; Li, X. A generalized power iteration method for solving quadratic problem on the Stiefel manifold. Sci. China Inf. Sci. 2017, 60, 112101.

- Zhong, Y.; Zhang, L.; Gong, W. Unsupervised remote sensing image classification using an artificial immune network. Int. J. Remote Sens. 2011, 32, 5461–5483.

- Ji, R.; Gao, Y.; Hong, R.; Liu, Q. Spectral-Spatial Constraint Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2014, 3, 1811–1824.

- Ul Haq, Q.S.; Tao, L.; Sun, F.; Yang, S. A Fast and Robust Sparse Approach for Hyperspectral Data Classification Using a Few Labeled Samples. Geosci. Remote Sens. IEEE Trans. 2012, 50, 2287–2302.

- Strehl, A.; Ghosh, J. Cluster Ensembles – A Knowledge Reuse Framework for Combining Multiple Partitions. J. Mach. Learn. Res. 2002, 3, 583–617.

- Lovasz, L.; Plummer, M.D. Matching Theory; AMS Chelsea Publishing: Amsterdam, North Holland, 1986.

- Murphy, J.M.; Maggioni, M. Unsupervised Clustering and Active Learning of Hyperspectral Images with Nonlinear Diffusion. IEEE Trans. Geosci. Remote Sens. 2019, 57, 1829–1845.

- Xu, J.; Fowler, J.E.; Xiao, L. Hypergraph-Regularized Low-Rank Subspace Clustering Using Superpixels for Unsupervised Spatial-Spectral Hyperspectral Classification. IEEE Geosci. Remote Sens. Lett. 2020, 1–5.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).