Transformer-Based Decoder Designs for Semantic Segmentation on Remotely Sensed Images

Abstract

:1. Introduction

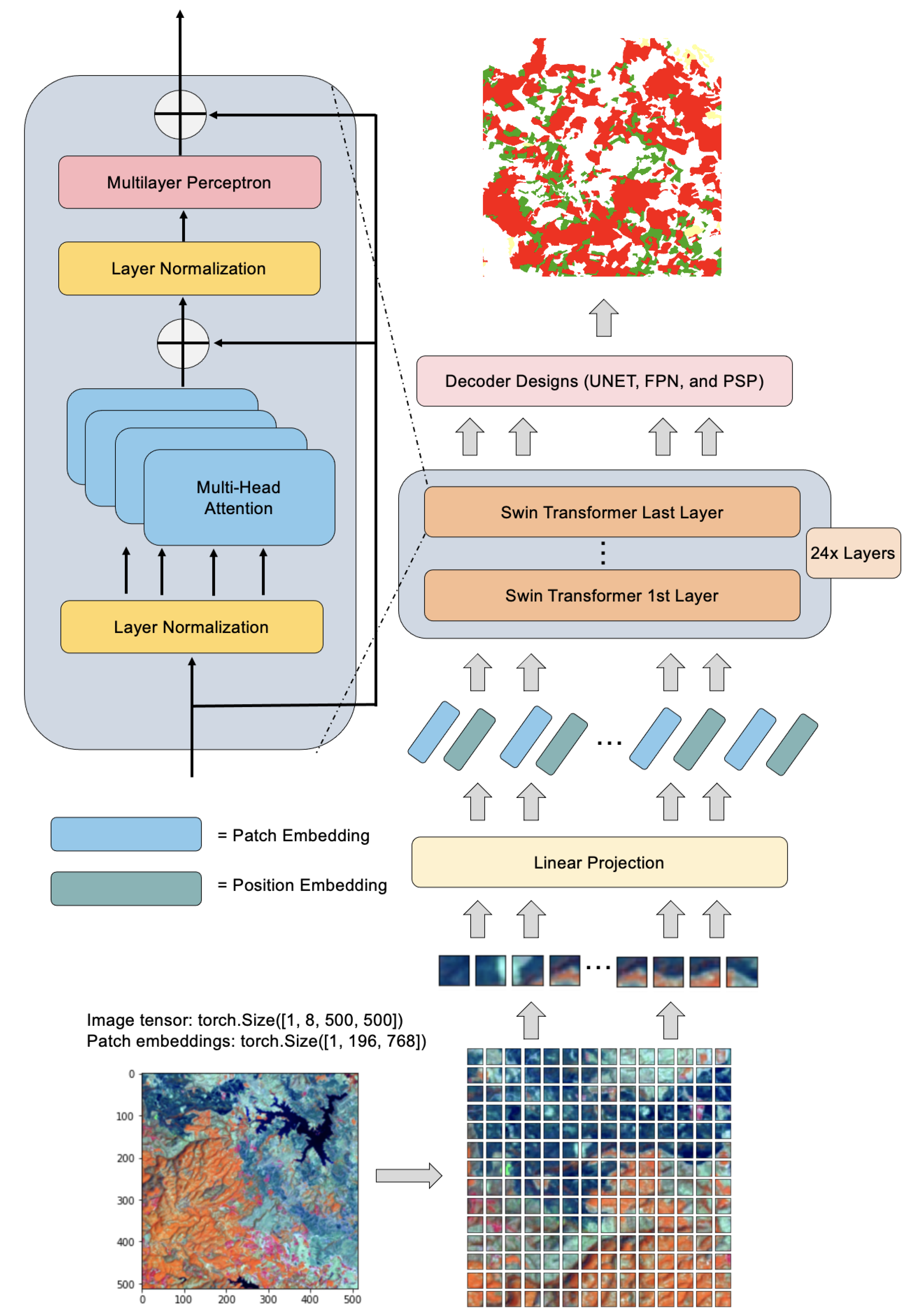

- Utilizing a pre-training ViT to retrieve the virtual visual tokens based on the vision patches from aerial and satellite images: we immediately fine-tune the model weights on downstream responsibilities by appropriating pre-training SwinTF under ViT, as a backbone, by appending responsibility layers and superimposing the pretrained encoder.

2. Material and Methods

2.1. Transformer Model

2.1.1. Transformer Based Semantic Segmentation

2.1.2. Decoder Designs

2.1.3. Environment and Deep Learning Configurations

2.2. Aerial and Satellite Imagery

| Data Set | Total Images | Training Set | Validation Set | Testing Set |

|---|---|---|---|---|

| TH-Isan Landsat-8 Corpus | 1420 | 1000 | 300 | 120 |

| TH-North Landsat-8 Corpus | 1600 | 1000 | 400 | 200 |

| ISPRS Vaihingen Corpus | 16 (Patches) | 10 | 2 | 4 |

2.2.1. North East (Isan) and North of Thailand Landsat-8 Corpora

2.2.2. ISPRS Vaihingen Corpus

2.2.3. Evaluation Metrics

3. Results

3.1. Results for TH-Isan Landsat-8 Corpus

3.1.1. Effect of Swin Transformer and Pretrained Models

3.1.2. Effect of Transformer with Decoder Designs

| Pretrained | Backbone | Model | |||||

|---|---|---|---|---|---|---|---|

| Baseline | Yes | - | DeepLab V3 [8] | 0.7547 | 0.7483 | 0.7515 | 0.6019 |

| Yes | - | UNet [29] | 0.7353 | 0.7340 | 0.7346 | 0.5806 | |

| Yes | - | PSP [30] | 0.7783 | 0.7592 | 0.7686 | 0.6242 | |

| Yes | - | FPN [31] | 0.7633 | 0.7688 | 0.7660 | 0.6208 | |

| Yes | Res152 | GCN-A-FF-DA [36] | 0.7946 | 0.7883 | 0.7909 | 0.6549 | |

| Yes | RestNest-K50-GELU | GCN-A-FF-DA [36,43] | 0.8397 | 0.8285 | 0.8339 | 0.7154 | |

| No | ViT | SwinTF [12,13,37] | 0.8778 | 0.8148 | 0.8430 | 0.7319 | |

| Yes | ViT | SwinTF [12,13,37] | 0.8925 | 0.8637 | 0.8774 | 0.7824 | |

| Proposed Method | Yes | ViT | SwinTF-UNet | 0.8746 | 0.8955 | 0.8830 | 0.7936 |

| Yes | ViT | SwinTF-PSP | 0.8939 | 0.9022 | 0.8980 | 0.8151 | |

| Yes | ViT | SwinTF-FPN | 0.8966 | 0.8842 | 0.8895 | 0.8025 |

| Pretrained | Backbone | Model | Corn | Pineapple | Para Rubber | |

|---|---|---|---|---|---|---|

| Baseline | Yes | - | DeepLab V3 [8] | 0.6334 | 0.8306 | 0.7801 |

| Yes | - | UNet [29] | 0.6210 | 0.8129 | 0.7927 | |

| Yes | - | PSP [30] | 0.6430 | 0.8170 | 0.8199 | |

| Yes | - | FPN [31] | 0.6571 | 0.8541 | 0.8191 | |

| Yes | Res152 | GCN-A-FF-DA [36] | 0.6834 | 0.8706 | 0.8301 | |

| Yes | RestNest-K50-GELU | GCN-A-FF-DA [36,43] | 0.8982 | 0.9561 | 0.8657 | |

| No | ViT | SwinTF [12,13,37] | 0.7021 | 0.9179 | 0.8859 | |

| Yes | ViT | SwinTF [12,13,37] | 0.9003 | 0.9572 | 0.8763 | |

| Proposed Method | Yes | ViT | SwinTF-UNet | 0.9139 | 0.9652 | 0.8876 |

| Yes | ViT | SwinTF-PSP | 0.9386 | 0.9632 | 0.8985 | |

| Yes | ViT | SwinTF-FPN | 0.9234 | 0.9619 | 0.8886 |

3.2. Results for TH-North Landsat-8 Corpus

3.2.1. Effect of Swin Transformer and Pretrained Models

3.2.2. Effect of Transformer with our Decoder Designs

| Pretrained | Backbone | Model | |||||

|---|---|---|---|---|---|---|---|

| Baseline | Yes | - | DeepLab V3 [8] | 0.5019 | 0.5323 | 0.5166 | 0.3483 |

| Yes | - | UNet [29] | 0.4836 | 0.5334 | 0.5073 | 0.3398 | |

| Yes | - | PSP [30] | 0.4949 | 0.5456 | 0.5190 | 0.3505 | |

| Yes | - | FPN [31] | 0.5112 | 0.5273 | 0.5192 | 0.3506 | |

| Yes | Res152 | GCN-A-FF-DA [36] | 0.5418 | 0.5722 | 0.5559 | 0.3857 | |

| Yes | RestNest-K50-GELU | GCN-A-FF-DA [36,43] | 0.6029 | 0.5977 | 0.5977 | 0.4289 | |

| No | ViT | SwinTF [12,13,37] | 0.6076 | 0.5809 | 0.5940 | 0.4225 | |

| Yes | ViT | SwinTF [12,13,37] | 0.6233 | 0.5883 | 0.6047 | 0.4340 | |

| Proposed Method | Yes | ViT | SwinTF-UNet | 0.6273 | 0.6177 | 0.6224 | 0.4519 |

| Yes | ViT | SwinTF-PSP | 0.6384 | 0.6245 | 0.6312 | 0.4613 | |

| Yes | ViT | SwinTF-FPN | 0.6324 | 0.6289 | 0.6306 | 0.4606 |

| Pretrained | Backbone | Model | Corn | Pineapple | Para Rubber | |

|---|---|---|---|---|---|---|

| Baseline | Yes | - | DeepLab V3 [8] | 0.4369 | 0.8639 | 0.8177 |

| Yes | - | UNet [29] | 0.4135 | 0.8418 | 0.7721 | |

| Yes | - | PSP [30] | 0.4413 | 0.8702 | 0.8032 | |

| Yes | - | FPN [31] | 0.4470 | 0.8743 | 0.8064 | |

| Yes | Res152 | GCN-A-FF-DA [36] | 0.4669 | 0.9039 | 0.8177 | |

| Yes | RestNest-K50-GELU | GCN-A-FF-DA [36,43] | 0.5151 | 0.9394 | 0.8442 | |

| No | ViT | SwinTF [12,13,37] | 0.5375 | 0.9302 | 0.8628 | |

| Yes | ViT | SwinTF [12,13,37] | 0.5592 | 0.9527 | 0.8873 | |

| Proposed Method | Yes | ViT | SwinTF -UNet | 0.5850 | 0.9703 | 0.9117 |

| Yes | ViT | SwinTF-PSP | 0.6008 | 0.9877 | 0.9296 | |

| Yes | ViT | SwinTF-FPN | 0.6006 | 0.9857 | 0.9245 |

3.3. Results for ISPRS Vaihingen Corpus

3.3.1. Effect of Swin Transformer and Pretrained Models

3.3.2. Effect of Transformer with Our Decoder Designs

| Pretrained | Backbone | Model | |||||

|---|---|---|---|---|---|---|---|

| Baseline | Yes | - | DeepLab V3 [8] | 0.8672 | 0.8672 | 0.8672 | 0.7656 |

| Yes | - | UNet [29] | 0.8472 | 0.8572 | 0.8522 | 0.7425 | |

| Yes | - | PSP [30] | 0.8614 | 0.8799 | 0.8706 | 0.7708 | |

| Yes | - | FPN [31] | 0.8701 | 0.8812 | 0.8756 | 0.7787 | |

| Yes | Res152 | GCN-A-FF-DA [36] | 0.8716 | 0.8685 | 0.8694 | 0.8197 | |

| Yes | RestNest-K50-GELU | GCN-A-FF-DA [36,43] | 0.9044 | 0.9088 | 0.9063 | 0.8292 | |

| No | ViT | SwinTF [12,13,37] | 0.8537 | 0.9356 | 0.8770 | 0.7701 | |

| Yes | ViT | SwinTF [12,13,37] | 0.9756 | 0.8949 | 0.9221 | 0.8753 | |

| Proposed Method | Yes | ViT | SwinTF-UNet | 0.9203 | 0.9732 | 0.9438 | 0.8977 |

| Yes | ViT | SwinTF-PSP | 0.9271 | 0.9820 | 0.9483 | 0.9098 | |

| Yes | ViT | SwinTF-FPN | 0.9296 | 0.9756 | 0.9494 | 0.9086 |

| Model | IS | Buildings | LV | Tree | Car | |

|---|---|---|---|---|---|---|

| Baseline | DeepLab V3 [8] | 0.8289 | 0.8026 | 0.8257 | 0.7985 | 0.6735 |

| UNet [29] | 0.8189 | 0.7826 | 0.7857 | 0.7845 | 0.6373 | |

| PSP [30] | 0.8273 | 0.8072 | 0.8059 | 0.8050 | 0.6781 | |

| FPN [31] | 0.8327 | 0.8111 | 0.8127 | 0.8117 | 0.6896 | |

| GCN-A-FF-DA [36] | 0.8431 | 0.8336 | 0.8362 | 0.8312 | 0.7014 | |

| GCN-A-FF-DA [36,43] | 0.9005 | 0.9076 | 0.8942 | 0.8877 | 0.8233 | |

| SwinTF [12,13,37] | 0.8811 | 0.8934 | 0.8878 | 0.8734 | 0.7866 | |

| Pretrained SwinTF [12,13,37] | 0.9137 | 0.9139 | 0.8803 | 0.8922 | 0.8118 | |

| Proposed Method | Pretrained SwinTF-UNet | 0.9139 | 0.9101 | 0.8870 | 0.9035 | 0.9006 |

| Pretrained SwinTF-PSP | 0.9259 | 0.9195 | 0.8790 | 0.9093 | 0.9019 | |

| Pretrained SwinTF-FPN | 0.9356 | 0.9157 | 0.8746 | 0.9169 | 0.9057 |

4. Discussion

Limitations and Outlook

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Li, R.; Zheng, S.; Zhang, C.; Duan, C.; Su, J.; Wang, L.; Atkinson, P.M. Multiattention network for semantic segmentation of fine-resolution remote sensing images. IEEE Trans. Geosci. Remote. Sens. 2021. [Google Scholar] [CrossRef]

- Yang, N.; Tang, H. Semantic Segmentation of Satellite Images: A Deep Learning Approach Integrated with Geospatial Hash Codes. Remote Sens. 2021, 13, 2723. [Google Scholar] [CrossRef]

- Li, H.; Qiu, K.; Chen, L.; Mei, X.; Hong, L.; Tao, C. SCAttNet: Semantic segmentation network with spatial and channel attention mechanism for high-resolution remote sensing images. IEEE Geosci. Remote Sens. Lett. 2020, 18, 905–909. [Google Scholar] [CrossRef]

- Li, X.; Xu, F.; Lyu, X.; Gao, H.; Tong, Y.; Cai, S.; Li, S.; Liu, D. Dual attention deep fusion semantic segmentation networks of large-scale satellite remote-sensing images. Int. J. Remote Sens. 2021, 42, 3583–3610. [Google Scholar] [CrossRef]

- Chen, Z.; Li, D.; Fan, W.; Guan, H.; Wang, C.; Li, J. Self-attention in reconstruction bias U-Net for semantic segmentation of building rooftops in optical remote sensing images. Remote Sens. 2021, 13, 2524. [Google Scholar] [CrossRef]

- Tasar, O.; Giros, A.; Tarabalka, Y.; Alliez, P.; Clerc, S. Daugnet: Unsupervised, multisource, multitarget, and life-long domain adaptation for semantic segmentation of satellite images. IEEE Trans. Geosci. Remote Sens. 2020, 59, 1067–1081. [Google Scholar] [CrossRef]

- Peng, C.; Zhang, X.; Yu, G.; Luo, G.; Sun, J. Large kernel matters—Improve semantic segmentation by global convolutional network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4353–4361. [Google Scholar]

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848. [Google Scholar] [CrossRef]

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask r-cnn. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969. [Google Scholar]

- Yu, C.; Wang, J.; Peng, C.; Gao, C.; Yu, G.; Sang, N. Bisenet: Bilateral segmentation network for real-time semantic segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 325–341. [Google Scholar]

- Huang, Z.; Wang, X.; Huang, L.; Huang, C.; Wei, Y.; Liu, W. Ccnet: Criss-cross attention for semantic segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27–28 October 2019; pp. 603–612. [Google Scholar]

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. arXiv 2021, arXiv:2103.14030. [Google Scholar]

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929. [Google Scholar]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 5998–6008. [Google Scholar]

- He, X.; Chen, Y.; Lin, Z. Spatial-Spectral Transformer for Hyperspectral Image Classification. Remote Sens. 2021, 13, 498. [Google Scholar] [CrossRef]

- Qing, Y.; Liu, W.; Feng, L.; Gao, W. Improved Transformer Net for Hyperspectral Image Classification. Remote Sens. 2021, 13, 2216. [Google Scholar] [CrossRef]

- Sun, Z.; Cao, S.; Yang, Y.; Kitani, K.M. Rethinking transformer-based set prediction for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Nashville, TN, USA, 19–25 June 2021; pp. 3611–3620. [Google Scholar]

- Yang, F.; Zhai, Q.; Li, X.; Huang, R.; Luo, A.; Cheng, H.; Fan, D.P. Uncertainty-Guided Transformer Reasoning for Camouflaged Object Detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Nashville, TN, USA, 19–25 June 2021; pp. 4146–4155. [Google Scholar]

- Wang, L.; Li, R.; Wang, D.; Duan, C.; Wang, T.; Meng, X. Transformer Meets Convolution: A Bilateral Awareness Network for Semantic Segmentation of Very Fine Resolution Urban Scene Images. Remote Sens. 2021, 13, 3065. [Google Scholar] [CrossRef]

- Jin, Y.; Han, D.; Ko, H. TrSeg: Transformer for semantic segmentation. Pattern Recognit. Lett. 2021, 148, 29–35. [Google Scholar] [CrossRef]

- Chen, H.; Wang, Y.; Guo, T.; Xu, C.; Deng, Y.; Liu, Z.; Ma, S.; Xu, C.; Xu, C.; Gao, W. Pre-trained image processing transformer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 12299–12310. [Google Scholar]

- Ranftl, R.; Bochkovskiy, A.; Koltun, V. Vision transformers for dense prediction. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Nashville, TN, USA, 19–25 June 2021; pp. 12179–12188. [Google Scholar]

- Srinivas, A.; Lin, T.Y.; Parmar, N.; Shlens, J.; Abbeel, P.; Vaswani, A. Bottleneck transformers for visual recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 16519–16529. [Google Scholar]

- Kim, K.; Wu, B.; Dai, X.; Zhang, P.; Yan, Z.; Vajda, P.; Kim, S.J. Rethinking the Self-Attention in Vision Transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 3071–3075. [Google Scholar]

- Salvador, A.; Gundogdu, E.; Bazzani, L.; Donoser, M. Revamping Cross-Modal Recipe Retrieval with Hierarchical Transformers and Self-supervised Learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 15475–15484. [Google Scholar]

- Touvron, H.; Cord, M.; Douze, M.; Massa, F.; Sablayrolles, A.; Jégou, H. Training data-efficient image transformers & distillation through attention. In Proceedings of the International Conference on Machine Learning, PMLR, Online, 18–24 July 2021; pp. 10347–10357. [Google Scholar]

- Lin, A.; Chen, B.; Xu, J.; Zhang, Z.; Lu, G. DS-TransUNet: Dual Swin Transformer U-Net for Medical Image Segmentation. arXiv 2021, arXiv:2106.06716. [Google Scholar]

- Wang, W.; Xie, E.; Li, X.; Fan, D.P.; Song, K.; Liang, D.; Lu, T.; Luo, P.; Shao, L. Pyramid vision transformer: A versatile backbone for dense prediction without convolutions. arXiv 2021, arXiv:2102.12122. [Google Scholar]

- Li, X.; Chen, H.; Qi, X.; Dou, Q.; Fu, C.W.; Heng, P.A. H-DenseUNet: Hybrid densely connected UNet for liver and tumor segmentation from CT volumes. IEEE Trans. Med Imaging 2018, 37, 2663–2674. [Google Scholar] [CrossRef] [Green Version]

- Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid scene parsing network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2881–2890. [Google Scholar]

- Kim, S.W.; Kook, H.K.; Sun, J.Y.; Kang, M.C.; Ko, S.J. Parallel feature pyramid network for object detection. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 234–250. [Google Scholar]

- International Society for Photogrammetry and Remote Sensing. 2D Semantic Labeling Challenge. Available online: http://www2.isprs.org/commissions/comm3/wg4/semantic-labeling.html (accessed on 9 September 2018).

- Badrinarayanan, V.; Handa, A.; Cipolla, R. Segnet: A deep convolutional encoder–decoder architecture for robust semantic pixel-wise labelling. arXiv 2015, arXiv:1505.07293. [Google Scholar]

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. Segnet: A deep convolutional encoder–decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495. [Google Scholar] [CrossRef]

- Kendall, A.; Badrinarayanan, V.; Cipolla, R. Bayesian segnet: Model uncertainty in deep convolutional encoder–decoder architectures for scene understanding. arXiv 2015, arXiv:1511.02680. [Google Scholar]

- Panboonyuen, T.; Jitkajornwanich, K.; Lawawirojwong, S.; Srestasathiern, P.; Vateekul, P. Semantic Labeling in Remote Sensing Corpora Using Feature Fusion-Based Enhanced Global Convolutional Network with High-Resolution Representations and Depthwise Atrous Convolution. Remote Sens. 2020, 12, 1233. [Google Scholar] [CrossRef] [Green Version]

- Zheng, S.; Lu, J.; Zhao, H.; Zhu, X.; Luo, Z.; Wang, Y.; Fu, Y.; Feng, J.; Xiang, T.; Torr, P.H.; et al. Rethinking semantic segmentation from a sequence-to-sequence perspective with transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2021; pp. 6881–6890. [Google Scholar]

- Purkait, P.; Zhao, C.; Zach, C. SPP-Net: Deep absolute pose regression with synthetic views. arXiv 2017, arXiv:1712.03452. [Google Scholar]

- Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258. [Google Scholar]

- Qin, Z.; Zhang, Z.; Chen, X.; Wang, C.; Peng, Y. Fd-mobilenet: Improved mobilenet with a fast downsampling strategy. In Proceedings of the 2018 25th IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018; pp. 1363–1367. [Google Scholar]

- Cheng, B.; Wei, Y.; Shi, H.; Feris, R.; Xiong, J.; Huang, T. Revisiting rcnn: On awakening the classification power of faster rcnn. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 453–468. [Google Scholar]

- Abadi, M.; Barham, P.; Chen, J.; Chen, Z.; Davis, A.; Dean, J.; Devin, M.; Ghemawat, S.; Irving, G.; Isard, M.; et al. Tensorflow: A system for large-scale machine learning. In Proceedings of the OSDI, Savannah, GA, USA, 2–4 November 2016; Volume 16, pp. 265–283. [Google Scholar]

- Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated residual transformations for deep neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1492–1500. [Google Scholar]

| Acronym | Representation |

|---|---|

| DL | Deep Learning |

| FPN | Feature Pyramid Network |

| LR | Learning Rate |

| PSP | Pyramid Scene Parsing Network |

| ResNet152 | 152-layer ResNet |

| SwinTF | Swin Transformer |

| SwinTF-FPN | Swin Transformer with FPN Decoder Design |

| SwinTF-PSP | Swin Transformer with PSP Decoder Design |

| SwinTF-UNet | Swin Transformer with U-Net Decoder Design |

| TH-Isan Landsat-8 corpus | North East Thailand Landsat-8 data set |

| TH-North Landsat-8 corpus | North Thailand Landsat-8 data set |

| ViT | Vision Transformer |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Panboonyuen, T.; Jitkajornwanich, K.; Lawawirojwong, S.; Srestasathiern, P.; Vateekul, P. Transformer-Based Decoder Designs for Semantic Segmentation on Remotely Sensed Images. Remote Sens. 2021, 13, 5100. https://doi.org/10.3390/rs13245100

Panboonyuen T, Jitkajornwanich K, Lawawirojwong S, Srestasathiern P, Vateekul P. Transformer-Based Decoder Designs for Semantic Segmentation on Remotely Sensed Images. Remote Sensing. 2021; 13(24):5100. https://doi.org/10.3390/rs13245100

Chicago/Turabian StylePanboonyuen, Teerapong, Kulsawasd Jitkajornwanich, Siam Lawawirojwong, Panu Srestasathiern, and Peerapon Vateekul. 2021. "Transformer-Based Decoder Designs for Semantic Segmentation on Remotely Sensed Images" Remote Sensing 13, no. 24: 5100. https://doi.org/10.3390/rs13245100

APA StylePanboonyuen, T., Jitkajornwanich, K., Lawawirojwong, S., Srestasathiern, P., & Vateekul, P. (2021). Transformer-Based Decoder Designs for Semantic Segmentation on Remotely Sensed Images. Remote Sensing, 13(24), 5100. https://doi.org/10.3390/rs13245100