Abstract

Weather radar data can capture large-scale bird migration information, helping solve a series of migratory ecological problems. However, extracting and identifying bird information from weather radar data remains one of the challenges of radar aeroecology. In recent years, deep learning has been applied to radar data processing and has proved to be an effective strategy. This paper describes a deep learning method for extracting biological target echoes from weather radar images. The model uses a two-stream CNN architecture (Atrous-Gated CNN) to generate fine-scale predictions by combining key modules such as squeeze-and-excitation (SE) and atrous spatial pyramid pooling (ASPP). The SE block enhances attention on the feature map, while the ASPP block expands the receptive field, helping the network understand global shape information. Experiments show that, on typical historical data from the China next generation weather radar (CINRAD) network, the precision of the network in identifying biological targets reaches 99.6%. Our network can cope with complex weather conditions, enabling long-term, automated monitoring of weather radar data to extract biological target information and providing feasible technical support for bird migration research.

1. Introduction

Billions of birds migrate between remote breeding and wintering grounds each year, through areas increasingly transformed by humans [1,2,3]. Studying animal migration behavior can help us understand habitat distribution, virus transmission, and climate change, as well as reduce aviation safety risks [4,5]. For example, collisions between birds and aircraft can seriously damage the aircraft, potentially even causing it to crash [6]; therefore, predicting the flight trajectories of bird flocks in time and guiding flights around them can effectively prevent air traffic accidents.

Since the 1960s, radar systems have been shown to be able to monitor and quantify the movement of birds in the atmosphere [7,8]. As an effective tool for studying animal migration patterns, they play an increasingly important role in protecting endangered species [9]. Insect radar can obtain the fine physical characteristics of flying animals [10] and is widely used in aerial biological monitoring. However, weather radar covers a wider area and can also effectively receive biological echoes in the scanning area, including those of birds [11], bats [12], and insects [13], thereby helping to answer a series of questions in migration ecology [14]. Nevertheless, due to the limitations of the radar system, weather environment, and biological properties [15], extracting and identifying biological information from the huge volume of weather radar data remains a challenge for radar biology [16]. Solving these problems can aid the sustainable development of radar aeroecology [17,18].

Early studies demonstrated how to detect and quantify bird movements [11,19,20], but required substantial manual effort. Deep learning makes it possible to automatically obtain useful information from massive amounts of data, and a growing number of studies use it as a weather radar echo classification strategy. Lakshmanan et al. (2007) [21] introduced an elastic network to distinguish precipitation from nonprecipitation echoes in WSR-88D data, and improved it in a follow-up study [22], which separated the two types of echoes at the pixel level for the first time. Dokter et al. (2011) [23] developed an algorithm to automatically separate precipitation from biology in European C-band radars, which was later extended to U.S. dual-polarized S-band radar data [16], but it was unable to completely separate precipitation and biology in historical data. In subsequent work, RoyChowdhury et al. (2016) [24] trained multiple network models and focused on the performance impact of fine-tuning the network, achieving 94.4% accuracy on more than 10,000 historical WSR-88D scans. A recent advance is MistNet [14], which makes fine-scale predictions in the weather radar image segmentation task. On historical and contemporary WSR-88D data, MistNet could identify at least 95.9% of all biomass, with a false discovery rate of 1.3%, but it adapted poorly to historical data containing more precipitation events.

Prior work showed that using deep learning to extract biological information from radar images is efficient and can be applied to datasets of millions of radar scans to generate fine-grained predictions. However, we note that existing research has preferred dual-polarization radar data, since single-polarization radar cannot obtain polarization measurements of the target, which makes echo extraction more difficult [25]. As China has not yet deployed a large-scale dual-polarization upgrade of its weather radars [26], we focus on how to extract biological echoes from single-polarization radar data. In addition, prominent biological targets on weather radars can produce characteristic values of spectral width, which aid in identifying kinds of flying animals and discriminating them from other kinds of scatterers [27]. However, spectral width has been largely ignored in existing research.

In this paper, we develop Atrous-Gated CNN, a deep convolutional neural network model using weather radar reflectivity and spectral width data. Our goal is to discriminate precipitation from biology in single-polarization radar scans using deep learning technology, and adapt to different weather environments, so as to accurately mine valuable information.

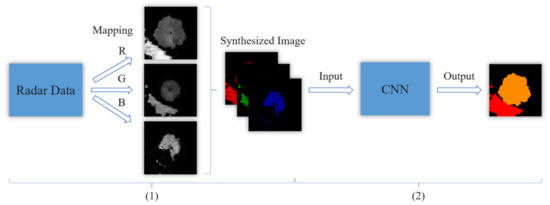

The overall process of the task consists of two main parts (Figure 1). We first map and render the radar data to generate an RGB image as the input of the convolutional neural network. The network is then trained to segment the image and extract the biological echo. The rest of the paper is organized as follows. Section 2 introduces the reasons and specific methods for rendering raw radar data into images. Section 3 presents the architecture of our proposed network. Section 4 details the performance of our model and compares it with previous studies. Section 5 discusses the results and future work. The summary is provided in Section 6.

Figure 1.

Biological echo extraction processing. It is composed of two main parts: (1) data mapping and rendering, (2) image segmentation using convolutional neural network (CNN).

2. Materials

The China next generation weather radar (CINRAD) network consists of more than 200 sites, covering the central and eastern regions of China. S-band radar is used in the eastern region, and C-band radar is used in the central region. The network plays an important role in the monitoring, early warning, and forecasting of typhoons and heavy rains, as well as severe convective precipitation. In this section, we introduce in turn the scanning strategy and data products of the CINRAD radars, the radar image rendering strategy, and the geometric characteristics of different echo types.

2.1. Scanning Strategy and Data Products

Radars in the CINRAD network are horizontally polarized with a beam width of 1°. Volume scan mode is adopted to sample the airspace within hundreds of kilometers around the radar, and each volume scan takes about 6 min. During one sweep, the antenna rotates around the vertical axis at a fixed elevation angle to sample the corresponding conical region of airspace. A typical scanning strategy during clear air conditions includes nine sweeps at elevation angles from 0.5° to 19.5°. Data products mainly include reflectivity factor, radial velocity, and spectral width, and are stored as binary files. Reflectivity is a measure of the fraction of radiation reflected by a given target, which is usually recorded in decibels. Radial velocity represents the component of motion of a target toward or away from the radar, and spectral width is the standard deviation of the Doppler velocity spectrum. The main parameters of radar data are shown in Table 1.

Table 1.

Main parameters of weather radar base data format.

2.2. Data Rendering

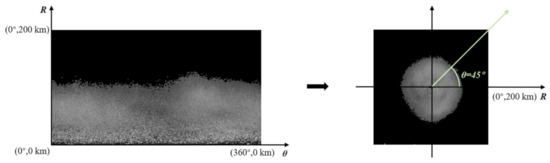

To extract biological information from weather radar images, we first render and map the original radar data into rectangular coordinates, so that the difference between biological and precipitation echoes can be identified manually from their geometric shape. Specifically, raw radar data products are stored in polar coordinates, indexed by range bin and azimuth. Considering that bird migration occurs at lower altitudes, typically between 0.4 and 2 km [11,24], we select the first 200 range bins, which correspond to an altitude detection range of 0–2 km, to generate an image in polar coordinates. The image is then mapped to rectangular coordinates by Equation (1), and a radar image with a resolution of 320 × 320 is obtained through linear interpolation. In our radar image, the X-axis represents the east–west direction, the Y-axis represents the north–south direction, and the radar is located in the center of the image. Figure 2 shows the process of mapping radar reflectivity data from polar coordinates to rectangular coordinates.

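$$
\begin{cases}
x = r\cos\theta \\
y = r\sin\theta
\end{cases}
\tag{1}
$$

where $r$ and $\theta$ denote the detection range and azimuth angle, respectively. When $\theta = 0$, it stands for the east direction. $x$ and $y$ represent the detection range in rectangular coordinates.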

Figure 2.

Process of mapping radar reflectivity data from polar coordinates to rectangular coordinates. We map the image in polar coordinates to rectangular coordinates through coordinate transformation. In rectangular coordinates, the resolution of the image is 320 × 320, which corresponds to an actual detection range of 400 × 400 km. The X and Y axes represent the east–west and north–south directions, respectively.
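The mapping step can be sketched as an inverse lookup: for every pixel of the 320 × 320 output grid, recover its polar position by inverting Equation (1), then interpolate between the neighbouring polar samples. The following is a minimal sketch, not the authors' implementation. It assumes evenly spaced azimuth rays, evenly spaced range bins out to 200 km, and an azimuth of 0 pointing east and increasing counterclockwise; the function name `polar_to_cartesian` and its parameters are hypothetical.

```python
import numpy as np

def polar_to_cartesian(polar, out_size=320, max_range_km=200.0):
    """Resample a (n_azimuth, n_range) polar sweep onto a square Cartesian grid.

    Assumptions (not stated in the paper): azimuth rays are evenly spaced over
    360 degrees, range bins are evenly spaced out to max_range_km, and
    theta = 0 points east with theta increasing counterclockwise.
    """
    n_az, n_rng = polar.shape

    # Pixel centres in km, with the radar at the image centre.
    coords = np.linspace(-max_range_km, max_range_km, out_size)
    x, y = np.meshgrid(coords, coords[::-1])  # row 0 is the northern edge

    # Invert x = r cos(theta), y = r sin(theta) for every pixel.
    r = np.hypot(x, y)
    theta = np.mod(np.arctan2(y, x), 2.0 * np.pi)

    # Fractional indices into the polar array.
    az_idx = theta / (2.0 * np.pi) * n_az
    rng_idx = r / max_range_km * (n_rng - 1)

    az0 = np.floor(az_idx).astype(int) % n_az
    az1 = (az0 + 1) % n_az                      # azimuth wraps around
    rg0 = np.clip(np.floor(rng_idx).astype(int), 0, n_rng - 1)
    rg1 = np.clip(rg0 + 1, 0, n_rng - 1)
    wa = az_idx - np.floor(az_idx)
    wr = np.clip(rng_idx - rg0, 0.0, 1.0)

    # Bilinear interpolation between the four neighbouring polar samples.
    near = (1.0 - wa) * polar[az0, rg0] + wa * polar[az1, rg0]
    far = (1.0 - wa) * polar[az0, rg1] + wa * polar[az1, rg1]
    image = (1.0 - wr) * near + wr * far

    # Pixels beyond the maximum range carry no data.
    image[r > max_range_km] = np.nan
    return image
```

Looking up each output pixel in the polar array, rather than scattering polar samples onto the grid, avoids holes at long range where adjacent rays are farther apart than one pixel.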

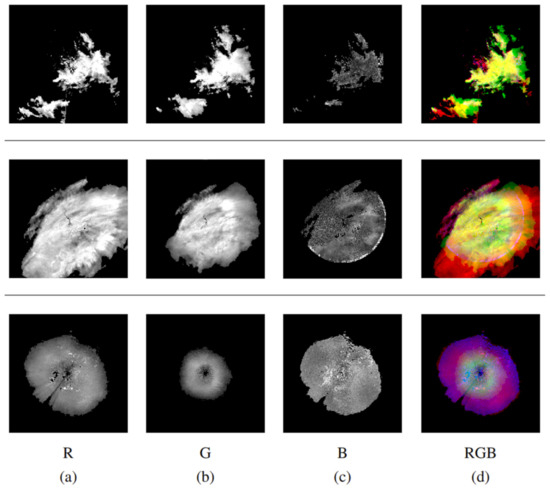

We choose the reflectivity and spectral width parameters to generate the rendered radar image because they clearly characterize precipitation and biological targets and help tell them apart, as follows:

- Significant differences exist between the reflectivity of biological and precipitation echoes. Biological targets have a smaller reflection area than precipitation, which results in an echo intensity much lower than that of precipitation. The intensity of precipitation echo can exceed 50 dBZ, whereas that of biological echo is usually lower than 20 dBZ [11,28].

- Biological targets and precipitation targets have different airspeeds. Doppler velocity parameters are usually used to distinguish different types of target scatterers (birds, bats, insects, raindrops, and other particles) [11,29]. Most birds fly at speeds greater than 8 m/s, while air speeds are generally lower [30]. Daytime fair weather without birds produces weather signals that have the smallest volumetric median values of spectral width (i.e., <2 m/s), whereas echoes contaminated by birds usually have a higher spectral width (i.e., >12 m/s) [11,31,32].

Bird migration occurs at lower altitudes, typically between 0.4 and 2 km [11,24], and birds tend to migrate in groups, meaning most aerial animals concentrate at a few heights. According to the radar ray distance-height equation, under standard atmospheric conditions, the 0.5° radar beam can reach about 2500 m and the 1.45° radar beam can reach about 5000 m, meaning radar measurements at the 0.5° and 1.45° elevation angles provide more information on animal echoes than measurements at higher elevation angles. Thus, as mentioned in the introduction, we select the radar reflectivity factor products at the 0.5° and 1.45° elevation angles, together with the spectral width at the 0.5° elevation angle, as the R, G, and B channels of a rendered radar image. The image size is 320 × 320 × 3, corresponding to the scanning area within 200 km. Renderings of the scans as images are shown in Figure 3.
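The text does not spell out which form of the distance-height equation is used. A common choice under standard atmospheric refraction is the 4/3 effective Earth radius model, sketched below as an assumption rather than as the authors' formula. The function name `beam_height_km` and the constants are illustrative.

```python
import numpy as np

EARTH_RADIUS_KM = 6371.0   # mean Earth radius
K_E = 4.0 / 3.0            # effective-radius factor for standard refraction

def beam_height_km(slant_range_km, elevation_deg, antenna_height_km=0.0):
    """Height of the beam centre above the radar site (4/3 Earth model).

    h = sqrt(r^2 + a_e^2 + 2 r a_e sin(elevation)) - a_e + h_antenna,
    where a_e = K_E * EARTH_RADIUS_KM is the effective Earth radius.
    """
    r = np.asarray(slant_range_km, dtype=float)
    a_e = K_E * EARTH_RADIUS_KM
    sin_el = np.sin(np.deg2rad(elevation_deg))
    return np.sqrt(r**2 + a_e**2 + 2.0 * r * a_e * sin_el) - a_e + antenna_height_km
```

Evaluating the function over an array of slant ranges for each elevation angle shows how quickly the higher sweeps climb above the layer in which most migration takes place.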

Figure 3.

Radar scanning rendering. We use reflectivity data Z and spectral width W to generate a rendered image (d). The red channel (a) and green channel (b) correspond to the reflectivity images at elevation angles of 0.5° and 1.45°, respectively; the blue channel (c) corresponds to the spectral width image at an elevation angle of 0.5°.
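As an illustration of the channel assignment, the sketch below stacks three already-gridded 320 × 320 fields into one RGB array. The value ranges used for scaling (0 to 70 dBZ for reflectivity, 0 to 16 m/s for spectral width) and the treatment of missing data as 0 are assumptions made for this example only; the paper does not state the scaling it uses. The names `scale_to_uint8` and `render_rgb` are hypothetical.

```python
import numpy as np

def scale_to_uint8(field, vmin, vmax):
    """Linearly map [vmin, vmax] to [0, 255]; missing values (NaN) become 0."""
    filled = np.nan_to_num(field, nan=vmin)
    scaled = (filled - vmin) / (vmax - vmin)
    return (np.clip(scaled, 0.0, 1.0) * 255).round().astype(np.uint8)

def render_rgb(z_05, z_145, w_05, z_range=(0.0, 70.0), w_range=(0.0, 16.0)):
    """Build an H x W x 3 image from three Cartesian radar fields.

    R: reflectivity at 0.5 deg, G: reflectivity at 1.45 deg,
    B: spectral width at 0.5 deg. The scaling ranges are assumed values.
    """
    r = scale_to_uint8(z_05, *z_range)
    g = scale_to_uint8(z_145, *z_range)
    b = scale_to_uint8(w_05, *w_range)
    return np.stack([r, g, b], axis=-1)
```

With this layout, an echo present only in the lowest sweep and with high spectral width appears in red and blue, while a deep echo with low spectral width appears in red and green.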

2.3. Data Annotations

Accurately classifying and labeling different categories of images manually is hard work. As shown in Figure 3, the significant difference between the rendered radar image and a visual image makes it difficult to distinguish the echo type in the radar scanning area, especially when precipitation and biological echoes overlap. Existing strategies for manual classification of rendered images include using bird radar to assist identification and verification [14], or recruiting experienced radar ornithology experts as annotators [24,33]. However, since our data cover a wide geographic area, these methods have limitations. Thus, using ecological knowledge, we summarize the characteristics of precipitation and biological echoes in a weather radar image to guide manual labeling.

2.3.1. Precipitation Echo

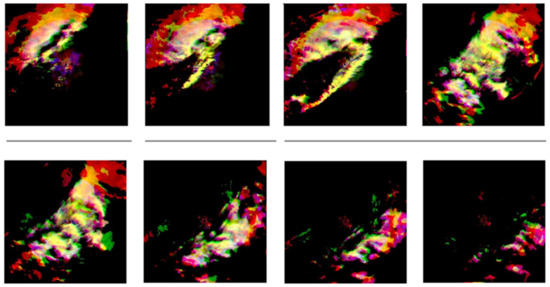

The main types of meteorological echoes are backscattering of electromagnetic waves by clouds in the atmosphere and by various water vapor condensates in precipitation, and precipitation echo caused by drastic changes in the atmospheric environment. Generally, the shapes of precipitation echo include sheet echo, block echo, etc. [34]. Based on these mechanisms of the precipitation echo and the weather radar scanning method, we conclude that the precipitation echo has the following three typical characteristics. Figure 4 shows the continuous time variation of a precipitation echo rendering.

Figure 4.

Rendering of Changzhou weather radar station from 13:00 to 16:30 UTC on 2 April 2012, with 30 min intervals.

- Primarily visible in the red and green channel. Precipitation echo can extend to several kilometers in the vertical direction [34] while being scanned by weather radar at all elevation angles, and the echo intensity can exceed 50 dBZ [11,28]; thus, the red and green channels of the rendered image have strong gray values. In addition, in environments without biological echo pollution, including isolated tornado storms, the echo spectrum width is usually lower than 2 m/s [31,32], meaning the third channel tends to have a black background.

- Echo shape is irregular especially at the edge, and the texture is coarser. Precipitation echo is greatly affected by the underlying surface and can change widely within the radar detection area [35], resulting in more irregular shapes and textures.

- Significant displacement changes in time and space.

2.3.2. Biological Echo

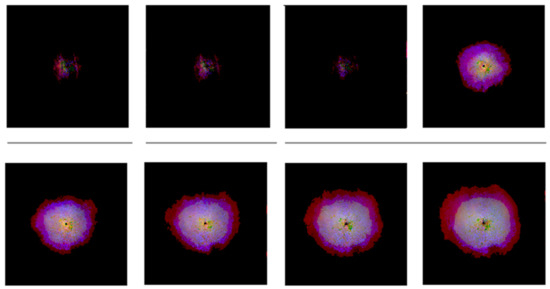

There are essential differences between the origins of biological echo and precipitation echo. Biological echo mainly comes from the reflection of radar waves by aerial animals, which differ greatly in size and medium from the spherical water droplets in the Rayleigh scattering region. In addition, compared with precipitation, aerial animals are more susceptible to physical environmental factors; thus, the migration height is usually concentrated at a fixed altitude, corresponding to the lower scanning elevation angles of the radar. Based on the formation mechanism and behavior characteristics of biological echo, we conclude that biological echoes have the following three typical characteristics in the rendering. A rendering of a typical biological echo is shown in Figure 5.

Figure 5.

Rendering of Changzhou weather radar station from 08:30 to 12:00 UTC on 17 October 2012, with 30 min intervals.

- Primarily visible in the red and blue channel. The migration altitude of birds usually occurs below 3000 m [11] and can be almost completely covered by weather radar’s 0.5° elevation beam at 200 km. Higher elevation angles cannot effectively illuminate biological echoes in a large-scale range [26,35]. In addition, the migration of biological groups, especially when migrating at different radial speeds, will cause a higher spectral width [31,32].

- Echo shape is approximately circular, and the texture and edges are smoother. Animal migration is usually concentrated at a certain height, with obvious layered characteristics [36], meaning biological populations are more likely evenly distributed relative to precipitation [35], and the maximum migration height remains basically unchanged. As a result, under the observation of polar coordinates, the farthest detection range of different azimuth angles remains basically unchanged (Figure 2), thus the echo is approximately circular in rectangular coordinate.

- Fast gathering in time and space. Large-scale migration of aerial creatures usually occurs during the evening [11], causing a sudden increase in the number of scattered targets in the airspace detected by the weather radar, which will result in a sudden increase in the intensity of the echo.

3. Methods

Deep networks transform the input matrix (such as a two-dimensional image) into one or more output values through a series of linear and nonlinear combinations, and are widely used in regression and classification tasks [14]. Increasingly advanced scene understanding tasks such as image segmentation have been effectively solved with the introduction and application of network architectures such as FCN, which show higher accuracy and even higher efficiency than other algorithms. Thus, we cast the biological target echo extraction process as a semantic segmentation task, assigning a label to each pixel.

As mentioned before, biological echoes and precipitation echoes in rendered images show significant differences in features such as color, texture, and especially shape. For example, the shape of the precipitation echo tends to be irregular and fragmented, while the biological echo is usually concentrated in the center of the image and forms a regular circle (Figure 4 and Figure 5). We hypothesize that the ability to effectively extract these features is the key to classifying the two types of echoes.

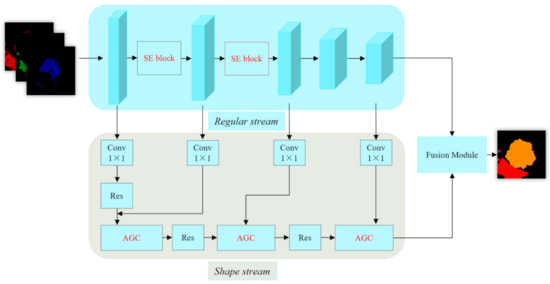

Gated-SCNN [37] is an advanced semantic segmentation network model that consists of two main streams, i.e., the regular stream and the shape stream. The architecture can produce sharper predictions around object boundaries. Our model draws on this network structure and makes the following improvements:

- We incorporate a Squeeze-and-Excitation (SE) [38] block into the regular stream. For our rendered radar images, channel R and channel G correspond to the reflectivity images at elevation angles of 0.5° and 1.45°, respectively. Channel R is mainly dominated by biological migration events, while channel G is mainly dominated by precipitation events. We suppose that the two events are independent, and that the SE block can reduce channel interdependence as well as enhance the attention on the current feature map.

- We add atrous spatial pyramid pooling (ASPP) [39] block to build Atrous Gated Convolution (AGC) before Gated Convolutional Layers [37] in the original network. For our rendered radar images, it is normal that biological and precipitation targets occupy large-scale spatial areas, resulting in wide coverage in PPI scans. ASPP block can expand the receptive field, helping the network understand the global shape information.

The modified network is referred to as Atrous-Gated CNN, shown in Figure 6, with key structures illustrated in Figure 7 and Figure 8.

Figure 6.

Atrous-Gated CNN for the weather radar image segmentation task (red color represents our modifications). The architecture consists of two main streams, the regular stream and the shape stream. The regular stream is a Wide Residual Network combined with the SE module. The shape stream consists of a set of residual blocks and Atrous Gated Convolution (AGC).

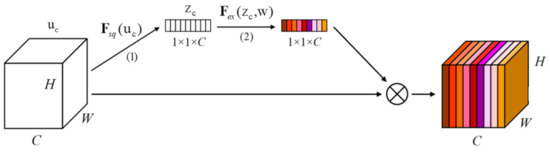

Figure 7.

Squeeze-and-Excitation block generates attention metrics for each feature channel. It consists of two main steps: (1) a squeeze procedure to embed global spatial information into a channel descriptor; (2) an excitation procedure to capture channel-wise dependencies.

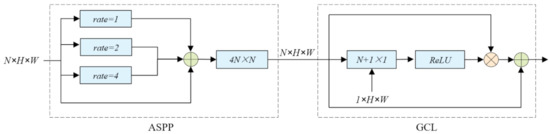

Figure 8.

Atrous Gated Convolution module. We integrate ASPP module to expand receptive field before GCL module of original network. Sampling rates are set to 1, 2, and 4.

3.1. Gated-SCNN

Gated-SCNN uses a dual-stream structure, consisting of a regular stream and a shape stream, to process information in parallel. The regular stream is a semantic segmentation network based on Wide Residual Networks, so basic features such as the color and texture of the target can be extracted. The shape stream is composed of a few residual blocks and Gated Convolutional Layers (GCL). GCL ensures that the shape stream only processes boundary-relevant information and increases the extraction efficiency of shape features. GCL obtains an attention map $\alpha_t \in \mathbb{R}^{H \times W}$ (H and W are the length and width of the attention map) by concatenating $r_t$ and $s_t$, followed by a normalized $1 \times 1$ convolution layer $C_{1 \times 1}$ and a sigmoid function $\sigma$:

$$
\alpha_t = \sigma\left(C_{1 \times 1}\left(s_t \,\|\, r_t\right)\right)
$$

where $r_t$ and $s_t$ represent the t-th convolutional layer of the regular stream and the shape stream respectively, and $\|$ denotes concatenation of feature maps. Given the attention map $\alpha_t$, GCL applies the element-wise product $\odot$ to $s_t$ with $\alpha_t$, followed by a residual connection and channel-wise weighting with kernel $w_t$ at each pixel $(i, j)$:

$$
\hat{s}_t^{(i,j)} = \left(s_t * w_t\right)_{(i,j)} = \left(\left(s_t^{(i,j)} \odot \alpha_t^{(i,j)}\right) + s_t^{(i,j)}\right)^{T} w_t
$$
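The gating described above can be illustrated with a small NumPy sketch: a 1 × 1 convolution over the concatenated streams yields a single-channel attention map, which then gates the shape-stream features before a residual connection and a channel-wise weighting. This is a simplified illustration, not the Gated-SCNN implementation: the normalization step is omitted, the weights are passed in by hand, and the names `gated_conv_layer`, `w_gate`, and `w_out` are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def gated_conv_layer(s, r, w_gate, w_out):
    """Simplified gated convolutional layer.

    s:      (Cs, H, W) shape-stream features
    r:      (Cr, H, W) regular-stream features
    w_gate: (Cs + Cr,) weights of the 1x1 convolution producing the gate
    w_out:  (Cout, Cs) channel-wise weighting kernel
    Normalization inside the gate is omitted for brevity.
    """
    stacked = np.concatenate([s, r], axis=0)                 # concatenate the two streams
    alpha = sigmoid(np.tensordot(w_gate, stacked, axes=1))   # attention map, shape (H, W)
    gated = s * alpha[None, :, :] + s                        # element-wise gate plus residual
    s_hat = np.tensordot(w_out, gated, axes=1)               # 1x1 channel mixing, (Cout, H, W)
    return s_hat, alpha
```

Because the attention map has a single channel, every shape-stream channel at a given pixel is scaled by the same gate value, which is what lets the regular stream suppress locations that are not boundary-relevant.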

Based on dual-channel structure, Gated-SCNN can produce sharp predictions around object boundaries. We suppose that Gated-SCNN network can show good performance in weather radar image segmentation tasks, especially in the recognition of precipitation target, for the precipitation echo tends to have complex edges (Figure 4).

3.2. SE Block

The Squeeze-and-Excitation (SE) block is a computational unit that adaptively recalibrates channel-wise features by explicitly modelling interdependencies between channels. A typical SE block consists of two steps: squeeze and excitation. Squeeze performs average pooling along the spatial dimensions to compress global spatial information into a channel descriptor, whose dimension matches the number of input feature channels. Excitation learns to generate the weight of each feature channel, thereby fully capturing channel-wise dependencies.

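$$
z_c = \frac{1}{H \times W}\sum_{i=1}^{H}\sum_{j=1}^{W} u_c(i, j), \qquad s = \sigma\left(\mathbf{W}_2\,\delta\left(\mathbf{W}_1 z\right)\right)
$$

where $\sigma$ and $\delta$ refer to the sigmoid and ReLU functions. Given the feature map of the convolutional neural network $U \in \mathbb{R}^{C \times H \times W}$, where C, H, W represent the depth, height, and width of the feature map respectively, $u_c$ is its c-th channel, and $z \in \mathbb{R}^{C}$ is the channel descriptor produced by the squeeze step, the output weight parameter $s \in \mathbb{R}^{C}$ is obtained. $\mathbf{W}_1$ and $\mathbf{W}_2$ represent the weight parameters of the fully connected layers; their dimensions are $\frac{C}{r} \times C$ and $C \times \frac{C}{r}$, respectively, where r is a manually set constant representing dimension reduction.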
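The two steps can be written in a few lines of NumPy. This is a minimal sketch of a generic SE block for a single feature map, with weights passed in explicitly and biases omitted; the function name `se_block` is hypothetical and the code is not taken from the authors' network.

```python
import numpy as np

def se_block(u, w1, w2):
    """Squeeze-and-excitation on one feature map.

    u:  (C, H, W) input feature map
    w1: (C // r, C) weights of the reduction layer
    w2: (C, C // r) weights of the expansion layer
    Returns the recalibrated feature map and the per-channel weights.
    """
    z = u.mean(axis=(1, 2))                    # squeeze: global average pooling, shape (C,)
    hidden = np.maximum(w1 @ z, 0.0)           # excitation, first layer with ReLU
    s = 1.0 / (1.0 + np.exp(-(w2 @ hidden)))   # excitation, second layer with sigmoid
    return u * s[:, None, None], s             # rescale each channel by its weight
```

For an input with C = 16 channels and a reduction ratio r = 4, `w1` has shape (4, 16) and `w2` has shape (16, 4), so the block adds only 128 weights.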

We incorporate the SE block (Figure 7) into the regular stream as an attention mechanism to generate attention metrics for each feature channel, thereby reducing channel interdependence. For our input rendered images, different channels represent radar information acquired at different altitude levels. Bird migration usually occurs below 3000 m [11], which can be almost completely covered by the weather radar’s 0.5° elevation beam at 200 km, while precipitation can be covered by multiple elevation angles. Thus, channel R of a rendered image mainly includes biological migration events, and channel G mainly includes precipitation events. We propose that both are independent natural phenomena and have no significant correlation. Therefore, for the task of meteorological data classification and regression, it is more important to enhance the network’s attention to the current events.

3.3. ASPP Block

The original network is specially designed for the Cityscapes dataset (a dataset jointly provided by three German companies including Daimler, which contains stereo vision data of more than 50 cities), in which the target usually occupies only a local part of the scene. However, for weather radar images, it is normal that biological and precipitation targets occupy large-scale spatial areas, resulting in wide coverage in PPI scans. Therefore, local observation, that is, a small receptive field, cannot effectively extract shape information, which limits the network performance. To this end, we introduce atrous spatial pyramid pooling (ASPP) into the shape stream to improve network performance.

ASPP block is known as a main contribution of DeepLab neural network [39]. It uses atrous convolution [40] with multiple sampling rates to probe an incoming feature layer in parallel, thus capturing objects as well as image context at multiple scales. Atrous convolution is an improved operation to solve the limitation that convolutional and pooling operations will reduce image resolution to obtain large receptive field. By introducing a parameter called “dilation rate” into the convolution layer, it allows us to effectively enlarge the field of view of filters to incorporate larger context without increasing the amount of computation. Considering one-dimensional signals, the general atrous convolution operation is defined as follows:

$$
y[i] = \sum_{k=1}^{K} x[i + r \cdot k]\, w[k]
$$

Here, $y[i]$ is the output for a given input $x[i]$ with a filter $w[k]$ of length K. The rate parameter r represents the sampling stride for the input signal. In particular, atrous convolution is equivalent to standard convolution when $r = 1$. The operation is similar in 2D. In our work, the sampling rates of the ASPP block are 1, 2, and 4.
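The one-dimensional operation is easy to check numerically. The sketch below implements it with zero-based indexing (so the taps are at offsets 0, r, 2r, ... rather than r, 2r, ..., which only shifts the output by a constant) and keeps only the positions where the dilated filter fits entirely inside the input. The function name `atrous_conv1d` is hypothetical.

```python
import numpy as np

def atrous_conv1d(x, w, rate=1):
    """1-D atrous (dilated) convolution without padding.

    Computes y[i] = sum_k x[i + rate * k] * w[k] for k = 0 .. K-1.
    """
    x = np.asarray(x, dtype=float)
    w = np.asarray(w, dtype=float)
    K = len(w)
    span = rate * (K - 1)          # distance between the first and last tap
    n_out = len(x) - span
    if n_out <= 0:
        raise ValueError("input is shorter than the dilated filter")
    y = np.zeros(n_out)
    for k in range(K):
        # Each tap reads the input shifted by k * rate samples.
        y += w[k] * x[k * rate : k * rate + n_out]
    return y
```

A filter of length K applied at rate r covers r(K - 1) + 1 input samples while still using only K weights, which is how the ASPP branches at rates 1, 2, and 4 see progressively larger context at the same cost per output.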

We integrate ASPP block based on GCL module to build Atrous Gated Convolution (AGC) module, helping the network understand the global shape information of the target. The proposed module is shown in Figure 8.

4. Results

4.1. Dataset

We used a total of 5,752,042 radar scans from the China Meteorological Administration. The scans contain historical data for all months from more than 200 stations from 2008 to 2019 (data from 2013 to 2016 are missing). For each radar station, we selected scans in spring (March, April, and May) and autumn (August, September, and October), resulting in an experimental set of 11,795 scans after rendering and labeling as described in Section 2. For training, the data are randomly split into training and evaluation subsets (8000 images for training and 3795 images for evaluation).

4.2. Evaluation and Experimental Settings

We use four quantitative measures to evaluate the performance of our model: precision, recall, F-score, IoU (Intersection over Union). They are defined as follows:

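$$
\mathrm{Precision} = \frac{TP}{TP + FP}, \qquad
\mathrm{Recall} = \frac{TP}{TP + FN}
$$

$$
F\text{-}\mathrm{score} = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}, \qquad
\mathrm{IoU} = \frac{TP}{TP + FP + FN}
$$

where TP (True Positive) and FN (False Negative) represent the number of positive pixels correctly classified and incorrectly classified respectively, and FP (False Positive) is the number of negative pixels classified incorrectly. For a segmentation task with a total of k + 1 classes (from $L_0$ to $L_k$ including background; in our task, k = 2), we assume that $p_{ij}$ is the number of pixels of class i inferred to belong to class j. For class i, the specific calculation formulas of TP, FP, and FN are as follows:

$$
TP = p_{ii}, \qquad
FP = \sum_{j=0,\, j \neq i}^{k} p_{ji}, \qquad
FN = \sum_{j=0,\, j \neq i}^{k} p_{ij}
$$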

Among these metrics, F-score is the harmonic mean of precision and recall, which can comprehensively evaluate the network performance. IoU is the most commonly used metric; it computes the ratio between the intersection and the union of two sets, i.e., the predicted pixel set and the label pixel set.
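All four measures follow from a per-class confusion matrix whose entry (i, j) counts pixels of class i predicted as class j. The sketch below is a minimal illustration with a made-up 3 × 3 matrix in the usage note; the function name `per_class_metrics` is hypothetical, and the numbers are not results from the paper.

```python
import numpy as np

def per_class_metrics(conf):
    """Per-class precision, recall, F-score and IoU from a confusion matrix.

    conf[i, j] is the number of pixels of class i predicted as class j.
    A class with no true or predicted pixels yields NaN for the affected ratios.
    """
    conf = np.asarray(conf, dtype=float)
    tp = np.diag(conf)
    fn = conf.sum(axis=1) - tp     # pixels of class i predicted as another class
    fp = conf.sum(axis=0) - tp     # pixels of other classes predicted as class i
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f_score = 2.0 * precision * recall / (precision + recall)
    iou = tp / (tp + fp + fn)
    return precision, recall, f_score, iou
```

For example, calling the function on `[[50, 2, 3], [5, 30, 5], [0, 10, 40]]` gives, for the middle class, TP = 30, FP = 12, and FN = 10, hence a precision of 30/42, a recall of 30/40, and an IoU of 30/52. IoU counts both kinds of error in its denominator, so it can never exceed either precision or recall.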

The training parameters are set as follows: we apply adaptive moment estimation (Adam) as the optimizer with a momentum of 0.9 and a weight decay of 0.0001. The learning rate is 0.0015. Experiments are implemented in the Torch framework, using a workstation with two Intel 14-core Xeon E5 CPUs and two Nvidia GTX-1080Ti GPUs.

4.3. CNN Performance

In preliminary experiments, the proposed Atrous-Gated CNN is compared with original model (Gated-SCNN) and other previous work (MistNet, DeepLab V3+) [14,26]. Our results show that: (1) in historical CINRAD data, the precision of the model’s segmentation of biological and precipitation targets exceeds 99%; (2) the improved network increased IoU in precipitation echo and biological echo by 1.6% and 1.7%, respectively; (3) our model achieves higher F-score and IoU than previous work in the segmentation precision of biological targets.

4.3.1. Quantitative Evaluation

Table 2 compares the performance of Atrous-Gated CNN and the original network; note that our results are averaged over multiple training runs. The results show that our structure achieves good performance in the weather radar image segmentation task, with precision and recall for biological echo of 99.6% and 98.9% respectively, improvements of 1.4% and 0.4% over the original network. In addition, we pay more attention to the impact of the network modification on the IoU metric, because IoU is more sensitive and representative. Compared with Gated-SCNN, Atrous-Gated CNN’s IoU for precipitation and biological echo increases by 1.6% and 1.7%, respectively, which shows that introducing the SE and ASPP modules improves the performance of the network in weather radar image segmentation tasks. Atrous-Gated CNN performs worse in the background case, which means that the improvement in the segmentation metrics for biology and precipitation is mainly due to more accurate classification between the two (biology and precipitation). In other words, the SE and ASPP modules help the network distinguish biological echo from precipitation echo. The reason why Atrous-Gated CNN performs worse in the background case is explained in detail in the discussion.

Table 2.

Performance comparison of Atrous-Gated CNN and Gated-SCNN.

We conduct ablation experiments on the dataset to verify the effectiveness of SE and ASPP, and compare our method with other previous work (MistNet, DeepLab V3+) [14,26]. Because our focus is biology, we pay more attention to the performance of the network on biological echo. Table 3 compares the performance of these networks in biological echo segmentation, sorted by the IoU metric (because the networks use different input formats, the results of MistNet are taken from the original paper). The fifth and sixth rows correspond to adding only SE and only ASPP, respectively, to Gated-SCNN, and we can conclude that both SE and ASPP improve the segmentation performance. Our method achieves the best performance by using them simultaneously. However, the improvement over adding SE or ASPP alone is not significant because the accuracy is close to the upper limit. The recall of MistNet is close to or even higher than that of our network, and we assume that this is because MistNet tends to classify pixels as biological echo, so as to retain more biological information. Only echoes with obvious characteristics of precipitation targets are classified as precipitation. This decision rule seldom loses biological information but leads to a large number of pixels being incorrectly classified as biological, and therefore yields a lower precision of 93.5%. In contrast, the precision of Atrous-Gated CNN is 6.1% higher, and the biology is still retained to the maximum extent, leading to a better F-score and IoU.

Table 3.

Performance comparison of Atrous-Gated CNN with other networks in biological echo segmentation.

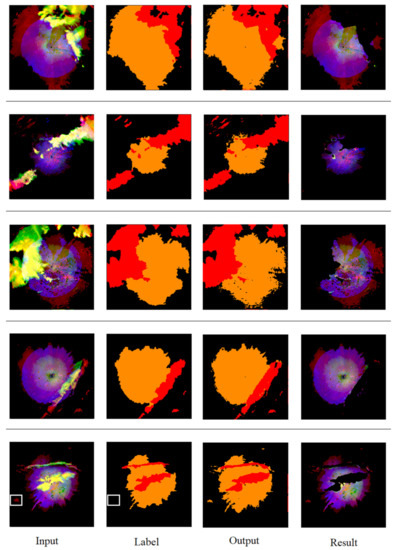

4.3.2. Qualitative Evaluation

Figure 9 shows several examples of predictions made by Atrous-Gated CNN compared to the ground-truth human annotations. In the real world, the biological echo and precipitation echo in the rendered image are likely to overlap, which makes accurate segmentation with a CNN challenging. Therefore, we selected several rendered images with overlapping echoes as examples to show that the network can handle this issue. It is unrealistic to label large amounts of data with complete accuracy; as shown in Figure 9, there are many errors in the manual labeling process. For example, echoes with fewer pixels may be labeled as background (white box areas in Figure 9). However, our method can still correctly classify these pixels, indicating that the network generalizes well.

Figure 9.

Segmentation results (red: precipitation, orange: biology, black: background) predicted by Atrous-Gated CNN are shown along with human annotations. For each example, input is a rendered image, output is label image obtained by semantic segmentation of CNN models, and result is biological target extracted from segmentation prediction. White box represents incorrectly labeled pixel areas.

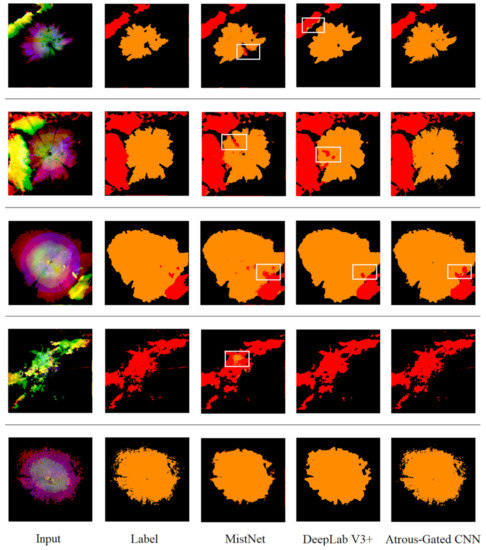
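The "result" images described in the caption of Figure 9 can be thought of as the rendered input masked by the predicted label image. The following is a minimal sketch of that masking step, assuming hypothetical class indices and a black fill for removed pixels; it is not the authors' implementation.

```python
# Hypothetical class indices (assumption, not taken from the paper).
BACKGROUND, BIOLOGY, PRECIPITATION = 0, 1, 2


def extract_biology(image, label_map, fill=(0, 0, 0)):
    """Keep pixels predicted as biology; replace all others with `fill`."""
    return [
        [pixel if cls == BIOLOGY else fill for pixel, cls in zip(img_row, lab_row)]
        for img_row, lab_row in zip(image, label_map)
    ]


# Toy 2 x 3 RGB image and its predicted label image.
image = [
    [(10, 20, 30), (40, 50, 60), (70, 80, 90)],
    [(15, 25, 35), (45, 55, 65), (75, 85, 95)],
]
label_map = [
    [BIOLOGY, PRECIPITATION, BACKGROUND],
    [BIOLOGY, BIOLOGY, PRECIPITATION],
]

result = extract_biology(image, label_map)
for row in result:
    print(row)
```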

In addition, we qualitatively evaluate the performance of different networks by comparing their segmentation results on the same images. Figure 10 compares the predictions of MistNet, DeepLab V3+, and our method on several samples, where the white boxes mark pixel areas with obvious segmentation errors. Because MistNet takes a 15-channel image as input, which differs from our input format, and because its basic framework is FCN, we use the predictions of FCN-8s to represent MistNet. The results of Gated-SCNN are similar to those of Atrous-Gated CNN and are not shown here. Atrous-Gated CNN makes fewer prediction errors on the five examples, which supports the effectiveness of the proposed method. In the fourth and fifth samples (rows 4 and 5) of Figure 10, the edges of the echoes are rough and complicated, which makes accurate prediction challenging. MistNet and DeepLab V3+ produce fuzzy predictions on these edges, so their predicted edges are smoother than the true ones. In contrast, Atrous-Gated CNN predicts these edges more accurately, and its prediction is almost the same as the label image, which indicates that the shape stream of the network can effectively extract the shape features of the echoes.

Figure 10.

Segmentation results (red: precipitation, orange: biology, black: background) predicted by MistNet, DeepLab V3+ and Atrous-Gated CNN. First column shows input rendered image, second column shows label image, last three columns show segmentation results predicted by MistNet, DeepLab V3+ and Atrous-Gated CNN, respectively. Basic framework of MistNet is FCN, and we use prediction results of FCN-8s to represent MistNet. White box represents pixel areas with obvious segmentation errors.

4.3.3. Computational Complexity

We calculate the number of parameters and FLOPs (floating point operations) of different networks for an input image resolution of 320 × 320, as shown in Table 4. Compared with MistNet and DeepLab V3+, our method is more computationally expensive but achieves a significant performance improvement; such gains are especially hard to obtain once the IoU exceeds 90%. In addition, compared with Gated-SCNN, our method increases the IoU of precipitation and biological echoes by 1.6% and 1.7%, respectively, while its parameters and FLOPs are almost unchanged, indicating that our modification is efficient.

Table 4.

Computational complexity of different networks.
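As a rough illustration of how parameter and operation counts such as those in Table 4 arise, the sketch below counts the weights and multiply-accumulate operations of a single convolution layer at a 320 × 320 output resolution. The layer shape (3 × 3 kernel, 64 input and 64 output channels) is hypothetical, one multiply-accumulate is counted as one operation, and bias additions are ignored; conventions differ between tools, so the numbers are not comparable to Table 4. The second function shows the standard relation for the span of an atrous (dilated) kernel, with dilation rates chosen only as examples.

```python
def conv2d_cost(c_in, c_out, kernel, out_h, out_w, bias=True):
    """Parameters and multiply-accumulates (MACs) of one 2D convolution layer."""
    weights = kernel * kernel * c_in * c_out
    params = weights + (c_out if bias else 0)
    macs = weights * out_h * out_w      # one MAC per weight per output position
    return params, macs


def effective_kernel(kernel, dilation):
    """Spatial span covered by a dilated kernel; the weight count is unchanged."""
    return kernel + (kernel - 1) * (dilation - 1)


# Hypothetical layer: 3 x 3 convolution, 64 -> 64 channels, 320 x 320 output.
params, macs = conv2d_cost(c_in=64, c_out=64, kernel=3, out_h=320, out_w=320)
print(f"parameters: {params}, MACs: {macs}")

# Example dilation rates (assumption); the span grows while parameters stay fixed.
for rate in (1, 6, 12, 18):
    print(f"dilation {rate}: effective kernel {effective_kernel(3, rate)}")
```

Because dilation changes only where the kernel samples its input, an atrous convolution enlarges the receptive field without changing the cost of the layer itself; any extra cost of an ASPP block comes from its additional parallel branches.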

5. Discussion

The China next generation weather radar (CINRAD) network comprises more than 200 weather surveillance radars, covering the central and eastern regions of China. Although designed for meteorological applications, these radars also receive signals reflected by various airborne targets, including birds, insects, and bats. Successfully extracting and identifying biological information from the recorded data can help quantify bird movements over a large coverage area and answer a series of important migration ecology questions.

In this study, based on the formation mechanisms of different echoes, we summarize the typical distinctions between biological and precipitation echoes in a rendered image, which can help judge different echo types intuitively. We also note that in other works [14,24], the biological and precipitation echoes in a rendered image show similar characteristics, even though the rendering methods are different.

In the results section, Table 2 details the performance comparison between Gated-SCNN and Atrous-Gated CNN. Our network performs better in biological and precipitation echo segmentation. However, compared with Gated-SCNN, the modified network performs worse on the background class. As mentioned in Section 3, the SE module helps the network distinguish precipitation from biological echoes, while the ASPP module helps the network better extract the shape features of biological and precipitation echoes. We suppose that the SE and ASPP modules guide the network to pay more attention to the classification of precipitation and biological echoes, which is also our purpose in integrating them into Gated-SCNN. However, this reduces the network’s attention to the background class, resulting in worse background performance. The average IoU of the proposed method is still 0.8% higher than that of Gated-SCNN, suggesting that the modification is beneficial.
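The channel re-weighting performed by an SE block can be sketched in a few lines. The example below follows the general squeeze-and-excitation idea (global average pooling, two fully connected layers, sigmoid gating); the weights are hypothetical toy values, biases are omitted for brevity, and the code is not the configuration used in Atrous-Gated CNN.

```python
import math


def se_block(feature_map, w1, w2):
    """Squeeze-and-excitation over a feature map given as C channels of H x W values.

    w1 has shape (C // r) x C and w2 has shape C x (C // r); biases are omitted.
    """
    # Squeeze: global average pooling gives one descriptor per channel.
    squeezed = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0]))
                for ch in feature_map]
    # Excitation: bottleneck layer with ReLU, then a sigmoid gate per channel.
    hidden = [max(0.0, sum(w * s for w, s in zip(row, squeezed))) for row in w1]
    gates = [1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
             for row in w2]
    # Scale: every channel is multiplied by its gate.
    scaled = [[[v * g for v in row] for row in ch]
              for ch, g in zip(feature_map, gates)]
    return scaled, gates


# Toy input: two 2 x 2 channels and hypothetical weights (reduction to one unit).
feature_map = [
    [[1.0, 1.0], [1.0, 1.0]],
    [[0.5, 0.5], [0.5, 0.5]],
]
w1 = [[1.0, -1.0]]
w2 = [[2.0], [-2.0]]

scaled, gates = se_block(feature_map, w1, w2)
print("gates:", [round(g, 3) for g in gates])
```

With these toy weights the first channel receives a gate above 0.5 and the second a gate below 0.5, so the block emphasizes one channel relative to the other based on global statistics of the feature map.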

In addition, we note that the misclassification of precipitation echoes may be the main factor limiting the performance of CNNs. Compared with biological echoes, precipitation echoes generally have more complex edges and are often scattered into many small echoes (Figure 4), which are difficult for a CNN to classify. In the MistNet work, Lin et al. (2019) classified the historical data into ‘clear’ and ‘weather’ types according to whether there is precipitation echo within 37.5 km of the radar center, in order to test the performance of the network in precipitation weather. Following this methodology, we obtain a ‘Historical (weather)’ subset containing 2000 historical scans. On the ‘Historical (weather)’ dataset, the IoU of MistNet and DeepLab V3+ decreases to 70.5% and 88.5% (Table 5), respectively, meaning that precipitation has a significant negative impact on their performance. Benefiting from the two-stream architecture, Gated-SCNN and Atrous-Gated CNN produce sharper predictions around object boundaries and perform better on thinner and smaller objects, thus obtaining higher IoU scores and showing significant advantages in weather radar image segmentation.

Table 5.

Performance comparison of different networks on ‘Historical (weather)’ dataset.
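The ‘clear’/‘weather’ split described above can be sketched as a simple distance test on a label image. The example assumes that the radar sits at the image center, that pixels are evenly spaced in kilometers, and that precipitation has a hypothetical class index of 2; only the 37.5 km radius comes from the text.

```python
import math

PRECIPITATION = 2  # hypothetical class index (assumption)


def scan_type(label_map, km_per_pixel, radius_km=37.5):
    """Return 'weather' if any precipitation pixel lies within radius_km of the center."""
    rows, cols = len(label_map), len(label_map[0])
    cy, cx = (rows - 1) / 2, (cols - 1) / 2   # radar assumed at the image center
    for y, row in enumerate(label_map):
        for x, cls in enumerate(row):
            distance_km = math.hypot(y - cy, x - cx) * km_per_pixel
            if cls == PRECIPITATION and distance_km <= radius_km:
                return "weather"
    return "clear"


# Toy 5 x 5 label images with 20 km pixels (hypothetical resolution).
far_rain = [[0] * 5 for _ in range(5)]
far_rain[0][0] = PRECIPITATION     # about 56.6 km from the center

near_rain = [[0] * 5 for _ in range(5)]
near_rain[2][3] = PRECIPITATION    # 20 km from the center

print(scan_type(far_rain, km_per_pixel=20.0))
print(scan_type(near_rain, km_per_pixel=20.0))
```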

Our results show that deep learning is an effective tool in biological echo extraction tasks in CINRAD, and is likely to be applied to other radar data processing tasks. In future work, new deep learning technologies and combination with other optimization algorithms are needed to further improve the accuracy of biological echo extraction.

6. Conclusions

Extracting and identifying biological information has been a long-term challenge in radar aeroecology that substantially limits the study of bird migration patterns. In this paper, we propose a method to extract biological information from weather radar data. It consists of two main modules: (1) rendering and mapping raw radar data into radar images, and (2) training a CNN to obtain label images of different echo types for biological echo extraction. By analyzing the CINRAD historical data, we reveal the typical color and shape features of biological and precipitation echoes in a rendered image. Based on this knowledge, we integrate SE and ASPP blocks into Gated-SCNN to adapt it to our segmentation task: the SE block models channel interdependence and enhances the attention on the feature map, and the ASPP block expands the receptive field, helping the network understand global shape information. Finally, our experiments show that the precision of the proposed network in identifying biological targets reaches 99.6%, a significant improvement over MistNet and DeepLab V3+. In the future, our model will be used to mine valuable biological information from Chinese weather radar network data, enabling long-term measurements of migration systems across the whole continent.

Author Contributions

Conceptualization, S.W. and C.H.; methodology, C.H. and K.C.; software, S.W. and H.M.; validation, S.W., K.C. and H.M.; formal analysis, K.C.; investigation, K.C.; data curation, K.C. and D.W.; writing—original draft preparation, S.W.; writing—review and editing, C.H.; visualization, C.H. and R.W.; supervision, C.H.; project administration, C.H.; funding acquisition, C.H. and R.W. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (Grant No. 31727901) and the Major Scientific and Technological Innovation Project (Grant No. 2020CXGC010802).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Hahn, S.; Bauer, S.; Liechti, F. The natural link between Europe and Africa–2.1 billion birds on migration. Oikos 2009, 118, 624–626.

2. Dokter, A.M.; Farnsworth, A.; Fin, D. Seasonal abundance and survival of North America’s migratory avifauna determined by weather radar. Nat. Ecol. Evol. 2018, 2, 1603–1609.

3. Van, D.B.M.; Horton, K.G. A continental system for forecasting bird migration. Science 2018, 361, 1115–1118.

4. Tsiodras, S.; Kelesidis, T.; Kelesidis, I. Human infections associated with wild birds. J. Infect. 2008, 56, 83–98.

5. Zakrajsek, E.J.; Bissonette, J.A. Ranking the risk of wildlife species hazardous to military aircraft. Wildl. Soc. Bull. 2005, 33, 258–264.

6. Haykin, S.; Deng, C. Classification of radar clutter using neural networks. IEEE Trans. Neural Netw. 1991, 2, 589–600.

7. Brooks, M. Electronics as a possible aid in the study of bird flight and migration. Science 1945, 101, 329.

8. Lack, D.; Varley, G.C. Detection of birds by radar. Nature 1945, 156, 446.

9. Gauthreaux, J.S.A.; Belser, C.G. Radar ornithology and biological conservation. AUK 2003, 120, 266–277.

10. Li, W.; Hu, C.; Wang, R. Comprehensive analysis of polarimetric radar cross-section parameters for insect body width and length estimation. Sci. China Inf. Sci. 2021, 64, 122302.

11. Gauthreaux, S.A.; Belser, C.G. Displays of bird movements on the WSR-88D: Patterns and quantification. Weather Forecast. 1998, 13, 453–464.

12. Stepanian, P.M.; Wainwright, C.E. Ongoing changes in migration phenology and winter residency at Bracken Bat Cave. Glob. Change Biol. 2018, 24, 3266–3275.

13. Rennie, S.J. Common orientation and layering of migrating insects in southeastern Australia observed with a Doppler weather radar. Meteorol. Appl. 2014, 21, 218–229.

14. Lin, T.Y.; Winner, K.; Bernstein, G. MistNet: Measuring historical bird migration in the US using archived weather radar data and convolutional neural networks. Methods Ecol. Evol. 2019, 10, 1908–1922.

15. Schmaljohann, H.; Liechti, F.; Bächler, E. Quantification of bird migration by radar—A detection probability problem. Ibis 2008, 150, 342–355.

16. Dokter, A.M.; Desmet, P.; Spaaks, J.H. bioRad: Biological analysis and visualization of weather radar data. Ecography 2019, 42, 852–860.

17. Gauthreaux, S.; Diehl, R. Discrimination of biological scatterers in polarimetric weather radar data: Opportunities and challenges. Remote Sens. 2020, 12, 545.

18. Hu, C.; Li, S.; Wang, R. Extracting animal migration pattern from weather radar observation based on deep convolutional neural networks. J. Eng. Technol. 2019, 2019, 6541–6545.

19. Gauthreaux, S.A.; Livingston, J.W.; Belser, C.G. Detection and discrimination of fauna in the aerosphere using Doppler weather surveillance radar. Integr. Comp. Biol. 2008, 48, 12–23.

20. O’Neal, B.J.; Stafford, J.D.; Larkin, R.P. Waterfowl on weather radar: Applying ground-truth to classify and quantify bird movements. J. Field Ornithol. 2010, 81, 71–82.

21. Lakshmanan, V.; Fritz, A.; Smith, T. An automated technique to quality control radar reflectivity data. J. Appl. Meteorol. Climatol. 2007, 46, 288–305.

22. Lakshmanan, V.; Zhang, J.; Howard, K. A technique to censor biological echoes in radar reflectivity data. J. Appl. Meteorol. Climatol. 2010, 49, 453–462.

23. Dokter, A.M.; Liechti, F.; Stark, H. Bird migration flight altitudes studied by a network of operational weather radars. J. R. Soc. Interface 2011, 8, 30–43.

24. RoyChowdhury, A.; Sheldon, D.; Maji, S. Distinguishing weather phenomena from bird migration patterns in radar imagery. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Las Vegas, NV, USA, 19 December 2016; pp. 10–17.

25. Stepanian, P.M.; Horton, K.G.; Melnikov, V.M. Dual-polarization radar products for biological applications. Ecosphere 2016, 7, e01539.

26. Cui, K.; Hu, C.; Wang, R. Deep-learning-based extraction of the animal migration patterns from weather radar images. Sci. China Inf. Sci. 2020, 63, 140304.

27. Larkin, R.P.; Diehl, R.H. Spectrum width of flying animals on Doppler radar. In Proceedings of the 15th Conference on Biometeorology/Aerobiology and 16th International Congress of Biometeorology, American Meteorological Society, Kansas City, MI, USA, 30 October 2002; p. 9.

28. Rennie, S.J.; Curtis, M.; Peter, J. Bayesian echo classification for Australian single-polarization weather radar with application to assimilation of radial velocity observations. J. Atmos. Ocean. Technol. 2015, 32, 1341–1355.

29. Gauthreaux, S.A.; Belser, C.G. Bird movements on Doppler weather surveillance radar. Birding 2003, 35, 616–628.

30. Bruderer, B.; Boldt, A. Flight characteristics of birds: I. Radar measurements of speeds. Ibis 2001, 143, 178–204.

31. Feng, H.; Wu, K.; Cheng, D. Spring migration and summer dispersal of Loxostege sticticalis (Lepidoptera: Pyralidae) and other insects observed with radar in northern China. Environ. Entomol. 2004, 33, 1253–1265.

32. Fang, M.; Doviak, R.J.; Melnikov, V. Spectrum width measured by WSR-88D: Error sources and statistics of various weather phenomena. J. Atmos. Ocean. Technol. 2004, 21, 888–904.

33. Rosa, I.M.; Marques, A.T.; Palminha, G. Classification success of six machine learning algorithms in radar ornithology. Ibis 2016, 158, 28–42.

34. Lamkin, W.E. Radar Signature Analysis of Weather Phenomena. Ann. N. Y. Acad. Sci. 1969, 163, 171–186.

35. Zhang, P.; Liu, S.; Xu, Q. Identifying Doppler velocity contamination caused by migrating birds. Part I: Feature extraction and quantification. J. Atmos. Ocean. Technol. 2005, 22, 1105–1113.

36. Hu, G.; Lim, K.S.; Horvitz, N. Mass seasonal bioflows of high-flying insect migrants. Science 2016, 354, 1584–1587.

37. Takikawa, T.; Acuna, D.; Jampani, V. Gated-scnn: Gated shape cnns for semantic segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 5229–5238.

38. Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

39. Chen, L.C.; Papandreou, G.; Kokkinos, I. Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848.

40. Yu, F.; Koltun, V. Multi-scale context aggregation by dilated convolutions. arXiv 2016, arXiv:1511.07122v3.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).