1. Introduction

Nowadays, there is a wide range of agricultural activities where geospatial technologies can support management and decision-making by collecting geographic data, 2D/3D modelling, and mapping. Some of these technologies are global navigation satellite systems (GNSS), remote sensing and photogrammetry, airborne laser scanning (ALS), sensors (in-situ or mobile), and spatial analysis tools for raster/vector within a geographic information systems (GIS) environment.

Geospatial technologies have contributed to precision agriculture (PA), making the management, measurement, and monitoring of agricultural production more efficient and accurate. In practice, this covers tasks ranging from land preparation, sowing, and harvesting, to measuring soil changes, to the ongoing monitoring of crop health and growth. For example, remote sensing and photogrammetry enable the fast identification of crop areas that need more care or water, detect possible irrigation deficiencies across different sites, and help optimise resources. As a result, nutrients, fertilisers, and water can be distributed more effectively and without waste, increasing agricultural production or preventing its loss.

GNSS, satellite imagery, and GIS were the first technological contributions to the emergence of PA in the 1980s. Over the last two decades, the use of remotely sensed data in agricultural studies has increased significantly, namely, for land use and crop classification and for monitoring soil and vegetation health. According to [1], many of these studies have focused on hyperspectral sensors, followed by multispectral and RGB sensors. The application of various types of satellite sensors in PA is summarised in [2], together with vegetation indices for the classification, mapping, and analysis of spatial variability. However, the spatial and temporal resolution of satellite imagery has been shown to be unsuitable for many PA applications [2]. In addition, its cost is still high for monitoring some PA tasks.

In view of these limitations of satellite imagery for PA, interest in aerial imagery has increased significantly in the last five years [1], focusing on unmanned aerial vehicles (UAVs), also called unmanned aerial systems (UASs). UAVs have demonstrated greater flexibility for acquiring images with very high spatial resolution (a few centimetres) and high temporal resolution (daily). Furthermore, they are easy to use, fast, and cost-effective for acquiring 2D/3D data when compared with other surveying methods (GNSS, large-format digital cameras, and ALS). However, UAVs have some operational limitations: they cover only small areas because of their short battery autonomy, and they should not be flown in strong wind, rain, or extreme temperatures (below 0 °C or above 40 °C).

The miniaturisation of sensors for UAVs has contributed to a significant increase in agricultural applications, using thermal [3,4], multispectral [5,6], hyperspectral [7,8], and LiDAR sensors [9,10].

The most common UAV applications in PA are related to managing and monitoring cropping systems, such as growth monitoring [11]; detecting weeds and healthy or diseased crops [12,13]; and monitoring plant water stress, including the detection of sectors under full and deficit irrigation across the cropping system [4,14]. Radoglou-Grammatikis et al. [15] summarised a set of UAV applications related to monitoring processes in agriculture. Furthermore, several authors have reviewed the potential and limitations of UAV-based photogrammetry in PA [1,7,16].

The type of phenomenon or feature to be measured and the level of detail required for its study, 2D (area) or 3D (height and volume), are decisive in choosing the sensor and technique. As indicated in [16], 68.5% of agricultural studies on weed mapping and growth monitoring used photogrammetric techniques based on RGB and multispectral imagery.

High-resolution 3D UAV data derived from photogrammetric techniques have been less explored in PA applications, such as the use of high-resolution DSMs to study topographic changes, drainage, soil properties, and soil moisture [1]. However, they have been used in erosion studies [17,18,19]; to calculate stockpile volume [20] and quarry volume [21]; to monitor the movement of tailings impoundments in the decimetre range and calculate volumes [22]; to monitor shallow landslides and erosion [23]; and to monitor erosion bridges [24]. These studies have demonstrated that 3D data extracted from UAV imagery can monitor and measure changes in the soil surface with an accuracy of a few centimetres.

Soil management is essential at all stages of agricultural production, and evaluating its effect on crops is also a critical control task in the PA context [6]. After each management decision, changes in the cropped field can be measured with a geospatial technology that makes multi-temporal data collection more straightforward.

Land preparation is the second agricultural operation within the various stages of agricultural production [1], and at this stage it is essential to evaluate the effects of tillage practices (conservation tillage, conventional tillage, or no-tillage) as a soil management activity [6].

Soil tillage mobilises the soil and does not contribute to sustainable agriculture, because it always destroys biological processes, causes the loss of organic matter, and contributes to runoff and soil erosion. Conventional tillage consists of preparing the soil for sowing, removing weeds, destroying plant debris, exposing pests to sunlight for control, and levelling the ground [25].

The effects of soil tillage can be studied by analysing soil properties, drainage, and topographic mapping before and after soil mobilisation. UAV data can make a significant contribution here, namely, through the production of 3D surface models (digital terrain model (DTM), digital surface model (DSM), and slope), orthoimages, and mapping within a 2D/3D multi-temporal environment. In particular, after tillage, quantifying the mobilised soil volume is an essential task for monitoring the effects of tillage. This volume can be estimated from the difference between two DTMs (derived by modelling UAV dense point clouds), called the differential DTM. Although acquiring multi-temporal DTMs has become more accessible and frequent with UAV surveys, comparing DTMs is still a challenge, especially when the data are spatially heterogeneous and include positional errors. Previous studies have addressed how to produce a reliable differential DTM by correcting the misalignment between DTMs with a robust and straightforward co-registration method. Cucchiaro et al. [26] tested several algorithms for the co-registration of multi-temporal 3D point clouds and developed a DTM co-registration tool to correct minor inaccuracies in the alignment of gridded data; other authors adjusted DTMs using a mathematical model that includes the elevation differences and the derivatives of slope and aspect [27], or employed statistical multiple regression techniques to adjust DSMs (obtained from satellite images) based on easting, northing, aspect, slope, and elevation [28,29].

Some authors have compared the effects of different cropping systems (conventional tillage and no-tillage) on agricultural production based on UAV multi-temporal data to (1) extract differences in plant height, volume, and canopy cover [30]; and (2) evaluate the effects of tillage and no-tillage on nutritional status and crop productivity over two consecutive years [6]. Additionally, [31] showed the effects of a cropping system in vineyards, estimating the mobilised soil volume over a given period while taking into account erosion and deposition rates [32].

Despite the demonstrated potential of UAVs for managing and monitoring the different stages of agricultural production, according to [16], the most significant difficulty is their adoption by farmers, because it requires technical qualifications or experts to assist with image processing. Implementing standardised workflows to support some agricultural applications could be a solution in the future.

It is also essential to highlight that the ongoing evolution of geospatial technologies and progress in big data analysis, artificial intelligence, robotics, and geoinformatics can enable solutions that contribute to a new, sustainable precision agriculture and to a new environmental paradigm recently discussed in [33].

The main objectives of this work are to (1) demonstrate the potential of unmanned aerial vehicle (UAV) technology for acquiring 3D data to measure soil tillage; (2) propose a methodology to reliably quantify changes in mobilised soil volume, in which multi-temporal DTMs require a co-registration method to be comparable; and (3) assess the positional accuracy of the SfM processing and of the DTMs produced, which is relevant to the quality of the estimated total mobilised soil volume.

Finally, we demonstrate how co-registration between two DTMs improves the differential DTM and thus the estimation of mobilised soil volume. For this purpose, an unchanged-area-matching method was used to estimate regression-based elevation values for a DTM, based on statistical multiple regression analysis [28] and a set of June elevation profiles from unchanged areas.

The land preparation based on conventional tillage has resulted in topographic changes, which should be quantified to better predict their effects and, at the same time, contribute to more effective soil management. This work is therefore innovative in its use of UAV data to quantify the mobilised soil volume after a soil tillage operation. It aims to contribute to sustainable precision agriculture through regular and continuous monitoring and mapping of tilled soil, minimising the negative environmental impacts of this type of cropping system on soil and crop productivity and detecting possible areas undergoing an ongoing erosion process. It also aims to promote the adoption of UAV technology among farmers, for whom knowledge of the positional accuracy of the generated products should always be a concern. Finally, this work should help improve the estimation of tillage operation costs by calculating the total mobilised soil volume instead of the tilled area.

2. Materials and Methods

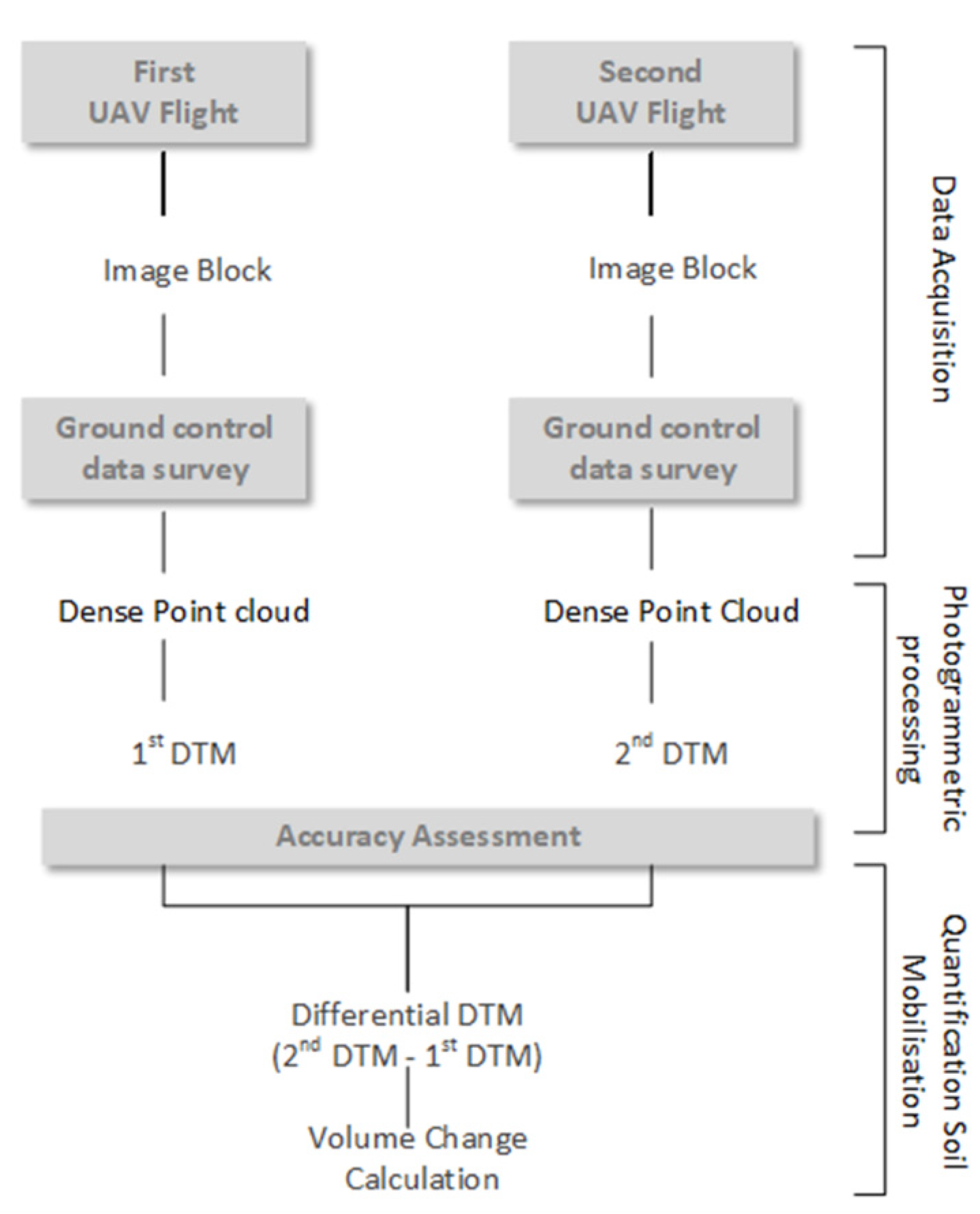

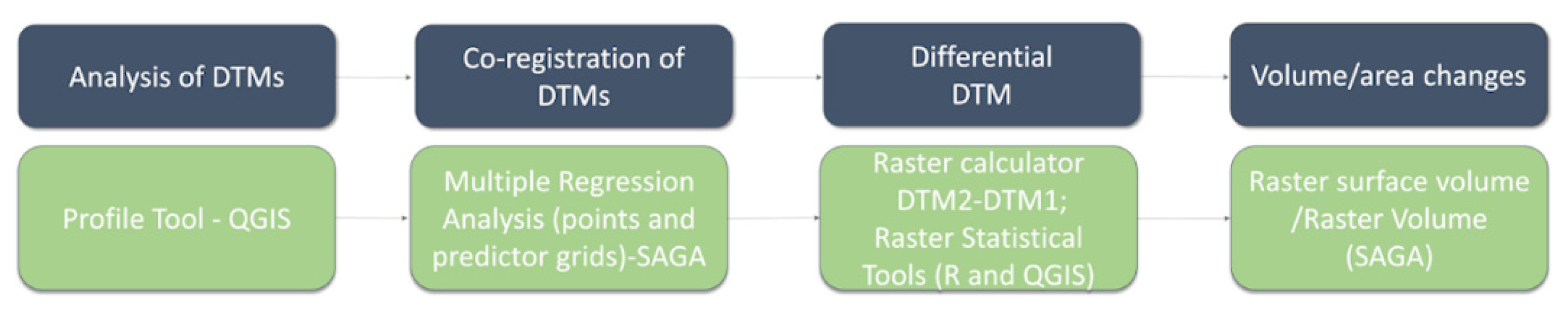

This study proposes a methodology (Figure 1) for the quantification of mobilised soil volume following soil tillage. This methodology is composed of three stages: (1) data acquisition, (2) photogrammetric processing, and (3) quantification of soil tillage/mobilisation.

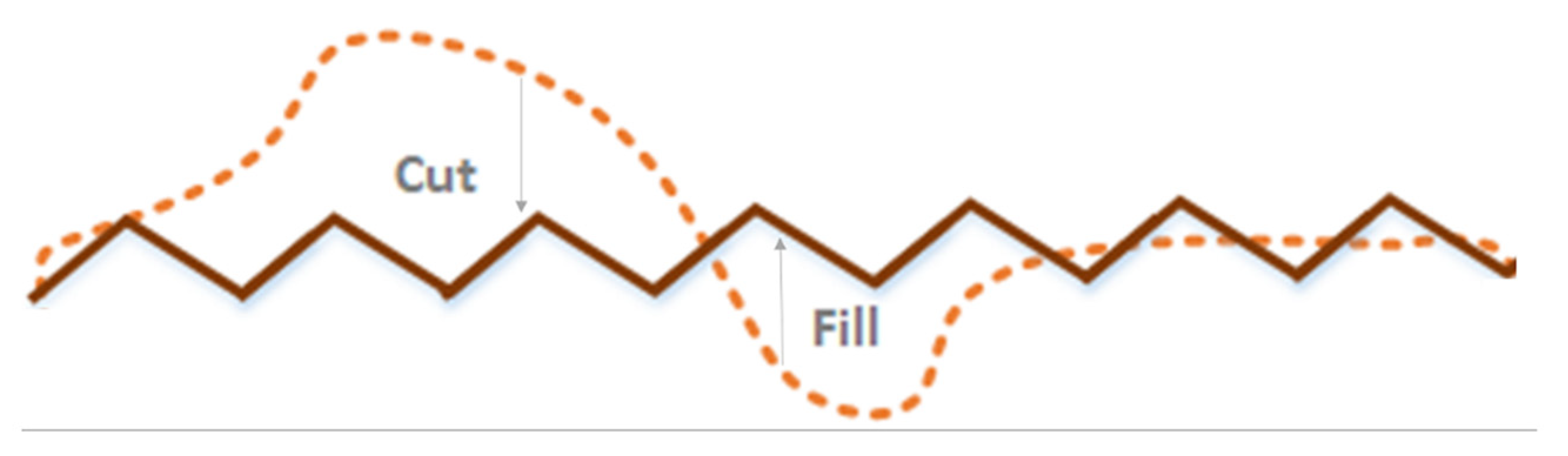

The first stage covers the acquisition of UAV imagery and the ground control survey, which is essential to support and improve the positional accuracy of the next stage. The second stage consists of georeferencing each image block with a photogrammetric SfM workflow, followed by an accuracy assessment. Finally, the last stage comprises three essential tasks within a volume change workflow: (1) production of a differential DTM grid containing, for every pixel, the elevation difference caused by the change; (2) correction of the differential DTM with an unchanged-area-matching method; and (3) calculation of the mobilised soil volume from the differential DTM, including the quantification of fill and cut areas (a digital volume model was produced).

The accuracy assessment should be performed at the end of photogrammetric processing and for the 2D/3D products. Both are essential to ensure the horizontal and vertical accuracy of the results. Positional accuracy should be on the order of one centimetre, given the magnitude of the soil volume mobilisation expected to be estimated. Quality control of data and processes is also a concern throughout the methodology.

The computing framework was based on Agisoft Metashape for SfM processing, together with free and open-source raster and statistical analysis tools from SAGA, GRASS GIS, and QGIS for co-registration, mapping changed areas, and calculating volume. Using these tools within a Free and Open Source Software (FOSS) GIS environment increases reproducibility and reduces operational costs, since they are available for free use, modification, and distribution.

2.1. Study Area

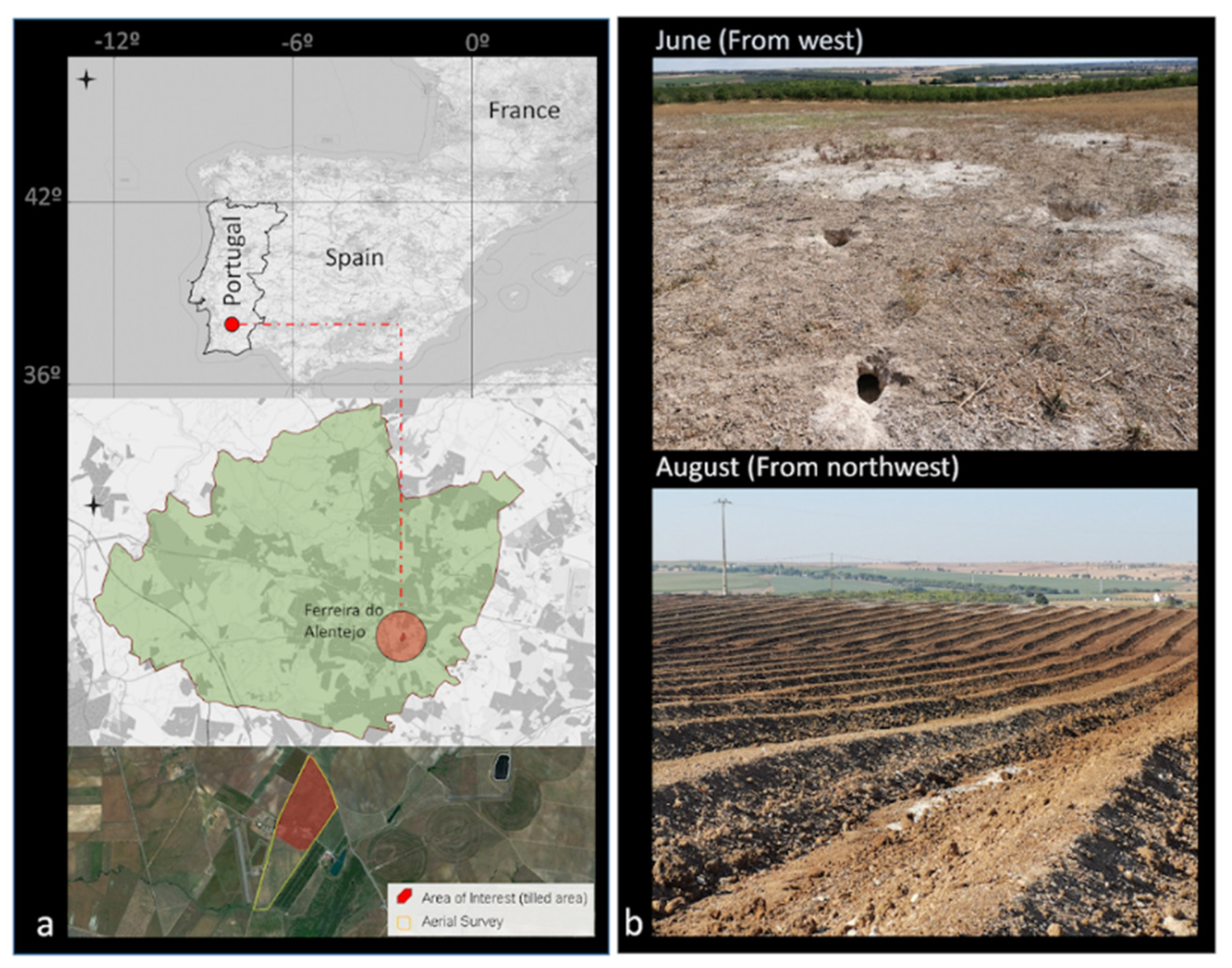

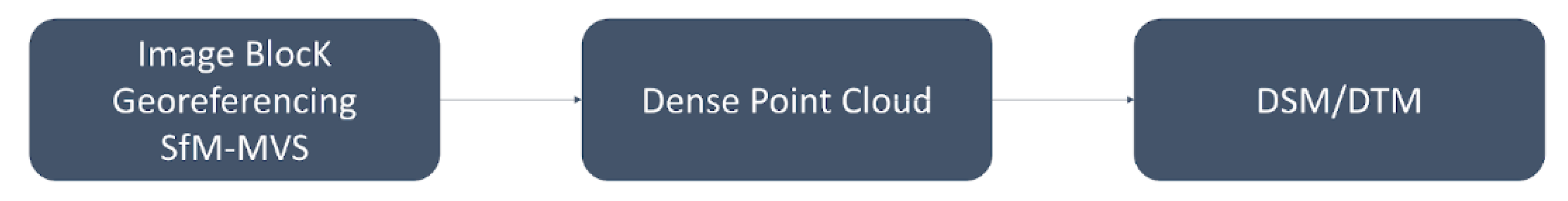

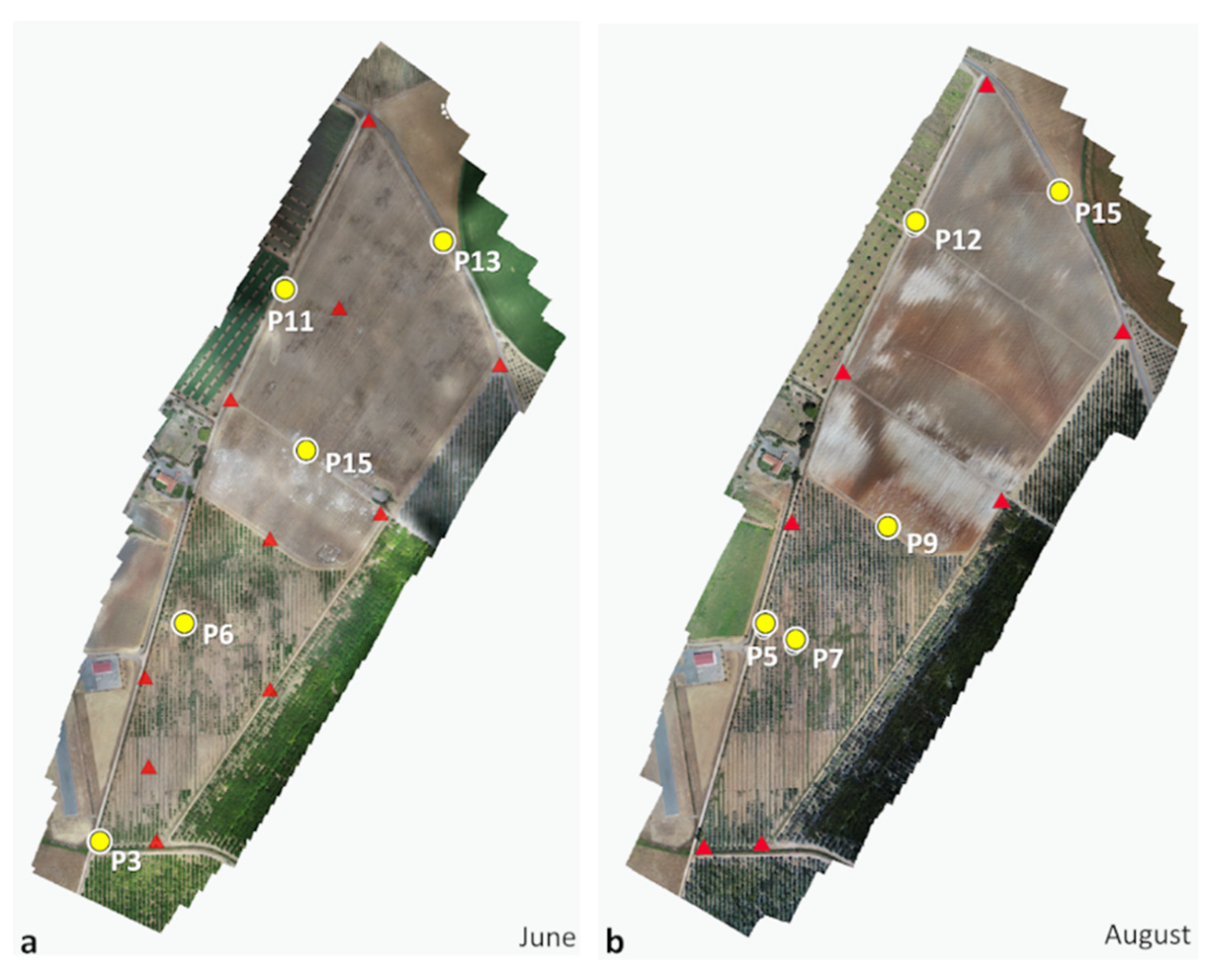

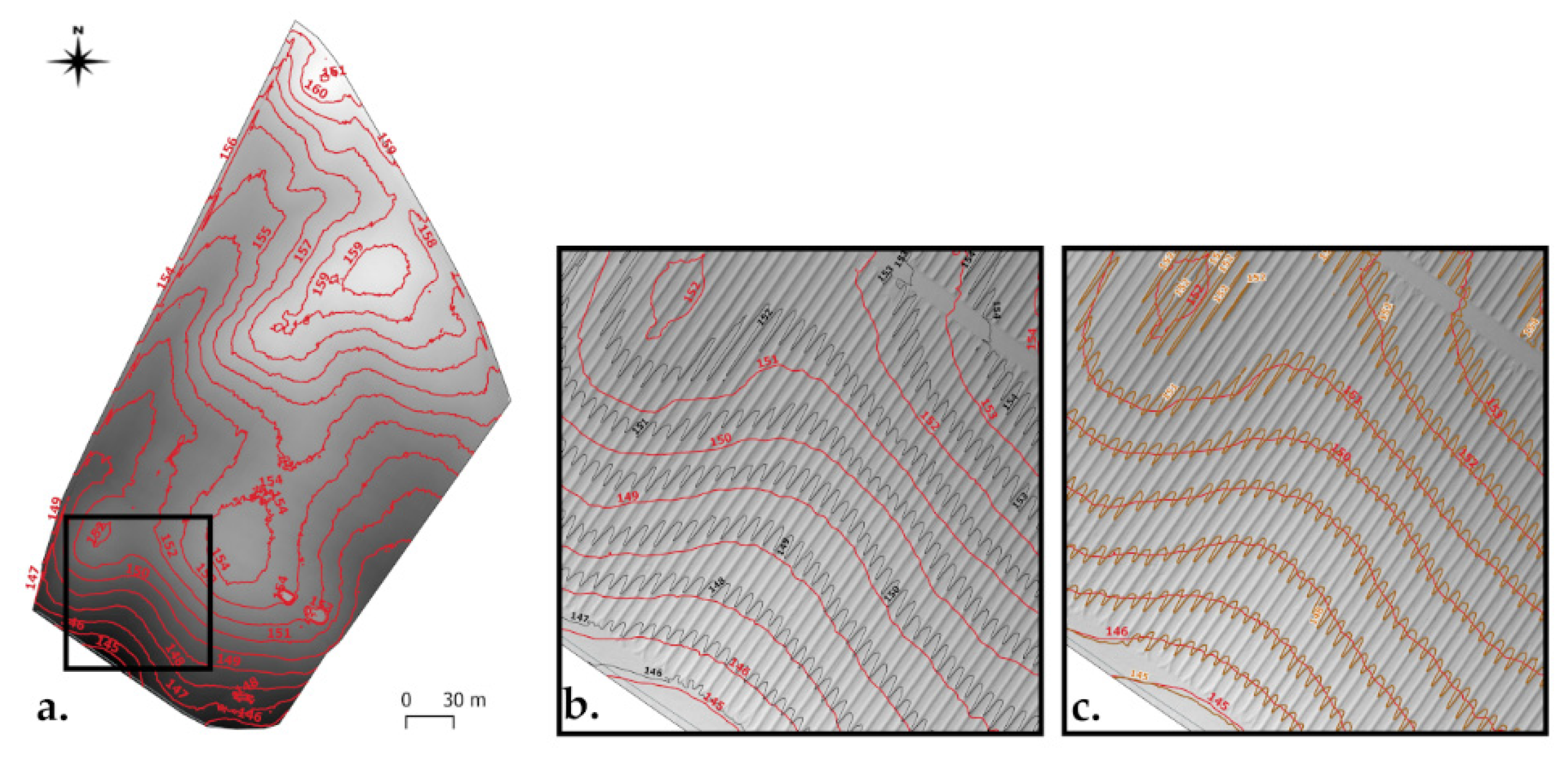

The study area is a rural area located in the small village of Ferreira do Alentejo in the district of Beja, approximately 150 km south of Lisbon, Portugal (Figure 2). The total area is approximately 0.55 square kilometres, extending about 650 m from North to South and 400 m from East to West. This rural area can be divided into two parts: the southern part, covered by walnut trees and some herbaceous vegetation, and the northern part, where a soil tillage operation took place in August 2019 for olive tree cultivation. The tilled area is 187,000 square metres, about 35% of the total area surveyed by UAV.

Collecting UAV data in this area in June and August was facilitated by the fact that it is not a very windy area and that the climate in this part of Portugal is temperate, with rainy winters and dry, warm summers. Topographically, the terrain slopes gently from North to South, with slopes below 10 degrees over 80% of the area of interest.

2.2. UAV Data Acquisition

UAV image acquisition requires a series of tasks to be completed before flying: (1) choosing the sensor and UAV equipment; (2) dealing with administrative procedures, such as flight permissions, pilot licences, and data collection authorisations; (3) analysing weather conditions (wind and cloudiness); (4) analysing terrain characteristics (vegetation and topography); and (5) planning the flight and defining its parameters. In [34], the authors provide a checklist to help users with UAV flight planning and also suggest metadata for aerial surveys.

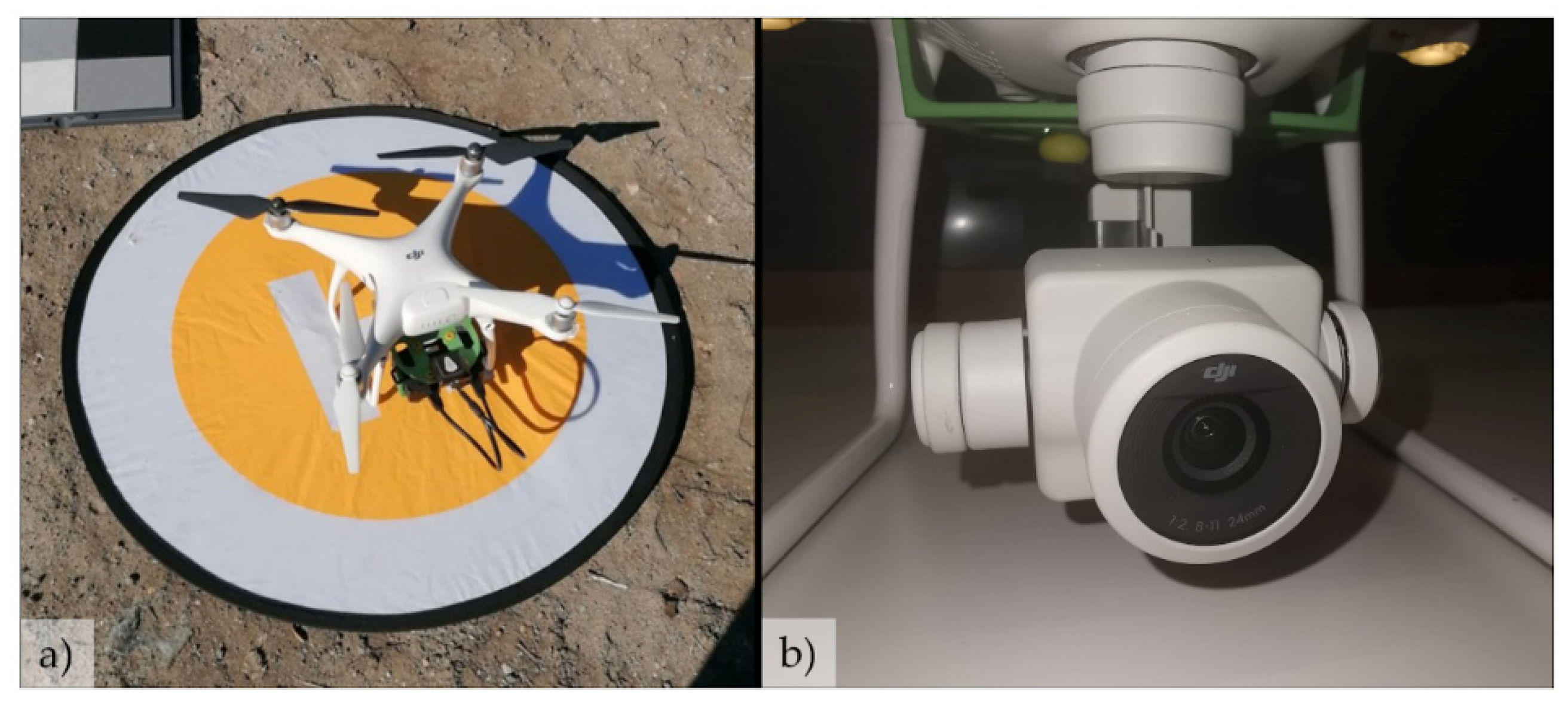

The UAV integrates a direct georeferencing system [35] that includes a GNSS receiver and an inertial navigation system (INS). In practice, this means that the absolute position and orientation parameters of each aerial image are known. The DJI Phantom 4 Pro [36] used in this work weighs about 1.4 kg and has a battery autonomy of approximately 20 min (Figure 3), which enabled the study area to be covered in a single flight. The horizontal and vertical positioning accuracies of the onboard GNSS/INS are ±1.5 m and ±0.5 m, respectively [36].

The UAV was equipped with an FC6310 optical sensor (Figure 3b) for RGB data collection, which has a nominal focal length (f) of 8.8 mm and a physical size of 13.2 mm by 8.8 mm. This sensor has a resolution of 20 MPixel (5472 × 3648 pixels) and a pixel size of 2.41 µm. Given these sensor characteristics and flight heights between 100 m and 350 m, the ground sampling distance (GSD) varies from approximately 3 to 10 cm, respectively. The GSD is given by the following equation [37]:

$$\mathrm{GSD} = \frac{H \cdot SS}{f \cdot IS} \qquad (1)$$

where

H: flight height,

f: focal length,

SS: sensor size (e.g., sensor width in mm),

and IS: image size in pixels along the same sensor dimension.
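As a quick check of Equation (1), GSD = H·SS/(f·IS), the short Python sketch below evaluates it with the FC6310 values quoted above (f = 8.8 mm, sensor width 13.2 mm, 5472 pixels across) and inverts it to obtain the flight height for a target GSD. Treating SS as the sensor width in millimetres and IS as the image width in pixels is an assumption that makes SS/IS the physical pixel size; the function names are illustrative.

```python
def gsd(flight_height_m: float,
        focal_length_mm: float = 8.8,
        sensor_width_mm: float = 13.2,
        image_width_px: int = 5472) -> float:
    """Ground sampling distance in metres per pixel: GSD = H * SS / (f * IS)."""
    return flight_height_m * sensor_width_mm / (focal_length_mm * image_width_px)


def flight_height_for_gsd(target_gsd_m: float,
                          focal_length_mm: float = 8.8,
                          sensor_width_mm: float = 13.2,
                          image_width_px: int = 5472) -> float:
    """Equation (1) solved for H: the flight height that yields a given GSD."""
    return target_gsd_m * focal_length_mm * image_width_px / sensor_width_mm


if __name__ == "__main__":
    for h in (100, 350):
        print(f"H = {h} m -> GSD = {gsd(h) * 100:.1f} cm")
    print(f"GSD = 10 cm -> H = {flight_height_for_gsd(0.10):.0f} m")
```

With these inputs, 100 m gives about 2.7 cm and 350 m about 9.6 cm, consistent with the 3 to 10 cm range stated above.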

The number of UAV flights required to cover a site depends on the size of the area, image overlap, flight line configuration, GSD, and UAV battery autonomy. For instance, a smaller GSD requires a lower flight height, so each image covers a smaller ground footprint and longer flight times are needed to cover the entire area of interest.

The study area was covered by two aerial photogrammetric surveys using the same UAV. The first aerial survey was carried out in June 2019 and the second in August 2019, two months later. Very high spatial resolution (a few centimetres) and high image overlap were defined for the UAV flight missions, taking into account the objectives of this work.

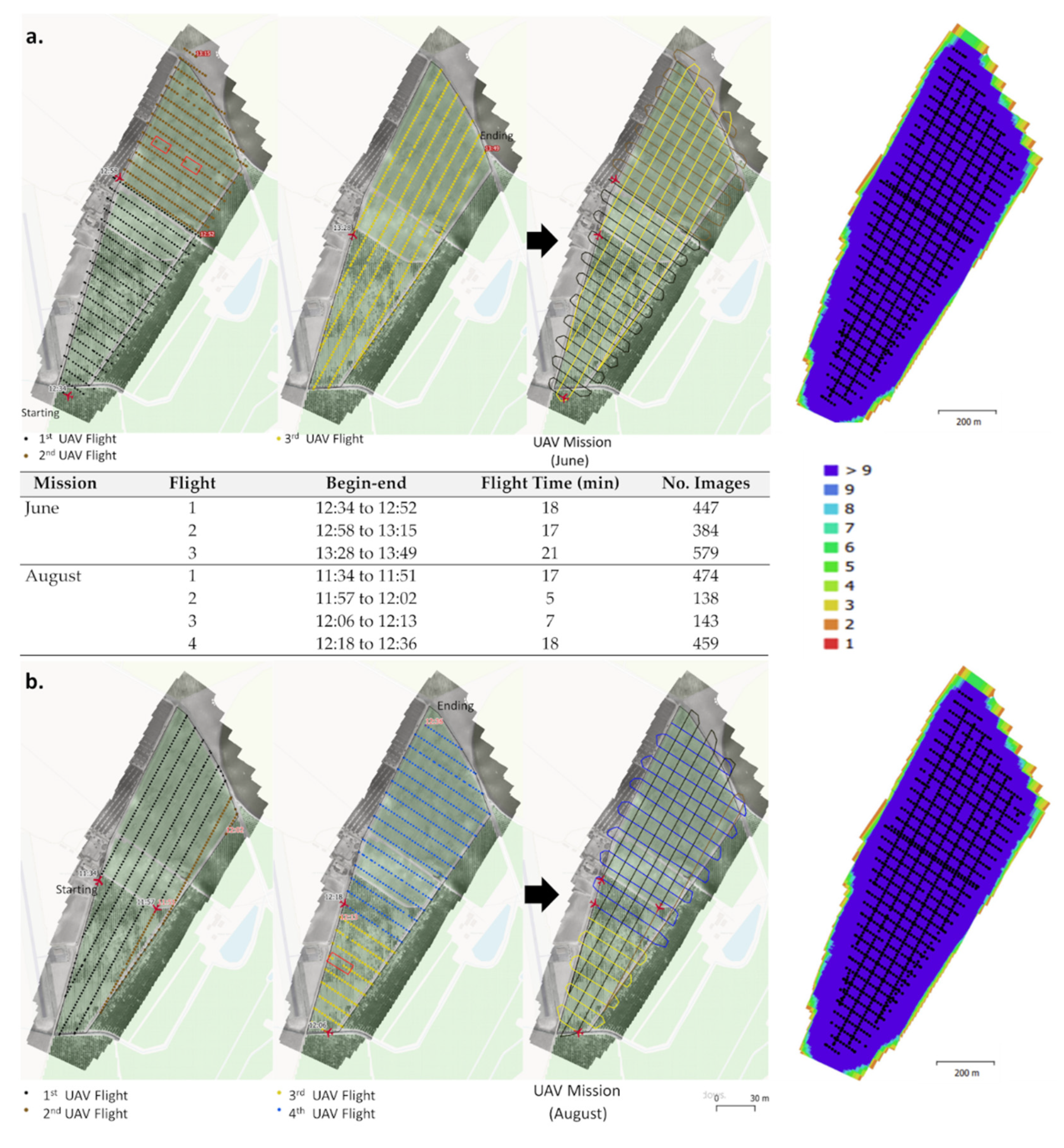

Under suitable battery and stable weather conditions with little wind, the study area can be covered by one UAV flight in the North–South direction. However, the June and August missions were planned to ensure full coverage of the area, thus reducing the impact of possible camera triggering issues. Therefore, two UAV flights were carried out for each mission (Figure 4): one in the North–South direction and a second in the perpendicular direction (West–East). This cross-flight pattern increased the density of the point cloud and provided more detail of the topographic surface. Moreover, cross-flight patterns improve camera calibration during image block processing [38] and enhance vertical accuracy in flat and homogeneous terrain [39].

All flights were performed with a forward overlap (between two consecutive images) of about 90% and a side overlap (between two adjacent flight lines) of about 70% (Figure 4).
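The overlap percentages translate directly into the distance between exposures and between flight lines. The sketch below assumes, for illustration, that the long image side (5472 px) is oriented across the flight direction and the short side (3648 px) along it; the actual orientation depends on the flight planning app and is not stated in the text. The 3 cm GSD in the example is a hypothetical input.

```python
def image_footprint_m(gsd_m: float, across_px: int = 5472, along_px: int = 3648):
    """Ground footprint of one image (across-track, along-track) in metres."""
    return gsd_m * across_px, gsd_m * along_px


def exposure_and_line_spacing_m(gsd_m: float,
                                forward_overlap: float = 0.90,
                                side_overlap: float = 0.70,
                                across_px: int = 5472,
                                along_px: int = 3648):
    """Distance between consecutive exposures (air base) and between adjacent lines."""
    across, along = image_footprint_m(gsd_m, across_px, along_px)
    air_base = along * (1.0 - forward_overlap)    # only the non-overlapping part is new ground
    line_spacing = across * (1.0 - side_overlap)  # adjacent strips share side_overlap
    return air_base, line_spacing


if __name__ == "__main__":
    base, spacing = exposure_and_line_spacing_m(0.03)
    print(f"air base = {base:.1f} m, line spacing = {spacing:.1f} m")
```

Under these assumptions, a 3 cm GSD gives roughly 10.9 m between exposures and 49.2 m between lines, which shows that the 90% forward overlap mainly increases the number of images per line.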

The slight variations in the camera exposure positions that occurred in a few areas of image coverage did not produce gaps in the point cloud (Figure 4), owing to the high overlap. These variations usually result from local disturbances of the UAV system due to wind, radio interference, electro-mechanical failure, etc. [37].

Collecting UAV imagery with this high overlap resulted in at least nine images per object point, without stereoscopic failures, as illustrated in Figure 4. Furthermore, the high image overlap, with multiple viewpoints and angles, provides a high level of redundancy and reduces systematic errors [40] in the photogrammetric processing of the image block. In other words, dense multi-image matching increases the positional quality and point density of the 3D point cloud [41].

The software used to plan and monitor each UAV mission was Map Pilot for DJI. Flight planning involved defining a set of flight parameters, including several of those mentioned above. The characteristics of the UAV flight missions are given in Table 1.

Each UAV flight mission (June and August) took approximately one hour, including the flights shown in Figure 4 and summarised in Table 1.

For aerial flights, solar altitude is also a relevant factor for the radiometric quality of the imagery. Low sun angles (<30°) must therefore be avoided to reduce long shadows that obscure details in the images [42]. In this work, the flights were made with the sun more than 55 degrees above the horizon.
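Whether a flight window satisfies the sun-angle criterion can be checked before the mission. The sketch below uses a simplified textbook model (Cooper's approximation for solar declination and a 15° per hour hour angle), works in local solar time rather than clock time, and ignores the equation of time and atmospheric refraction. The latitude of about 38° N for the study area and the function names are assumptions for illustration.

```python
import math


def solar_elevation_deg(latitude_deg: float, day_of_year: int, solar_hour: float) -> float:
    """Approximate solar elevation above the horizon, in degrees.

    Uses Cooper's declination approximation and local solar time;
    ignores the equation of time and atmospheric refraction.
    """
    declination = 23.45 * math.sin(math.radians(360.0 * (284 + day_of_year) / 365.0))
    hour_angle = 15.0 * (solar_hour - 12.0)  # degrees, zero at solar noon
    lat, dec, ha = (math.radians(v) for v in (latitude_deg, declination, hour_angle))
    sin_elev = math.sin(lat) * math.sin(dec) + math.cos(lat) * math.cos(dec) * math.cos(ha)
    sin_elev = max(-1.0, min(1.0, sin_elev))  # guard against rounding outside [-1, 1]
    return math.degrees(math.asin(sin_elev))


def window_above(latitude_deg: float, day_of_year: int, min_elev_deg: float):
    """First and last solar hour (5-min steps, 06:00-18:00) with elevation above the threshold."""
    hours = [m / 60.0 for m in range(6 * 60, 18 * 60 + 1, 5)]
    ok = [h for h in hours if solar_elevation_deg(latitude_deg, day_of_year, h) > min_elev_deg]
    return (ok[0], ok[-1]) if ok else None


if __name__ == "__main__":
    LATITUDE = 38.0  # assumed approximate latitude of the study area
    for label, doy in (("mid-June", 166), ("mid-August", 227)):
        print(label, window_above(LATITUDE, doy, 55.0))
```

Converting the solar-time window to local clock time would additionally require the longitude, time zone, and equation of time, which this sketch deliberately leaves out.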

2.3. Ground Control Data Survey

Typically, direct georeferencing (onboard GNSS/IMU) has limited accuracy and does not ensure the required positional quality [35]. Therefore, well-defined points, namely ground control points (GCPs), must be measured on the images for photogrammetric processing to raise the positional accuracy of the image block to the centimetre level [43,44]. Despite some advances in direct georeferencing with onboard RTK (real-time kinematic) or PPK (post-processed kinematic) positioning, the use of some GCPs is still recommended [42,45].

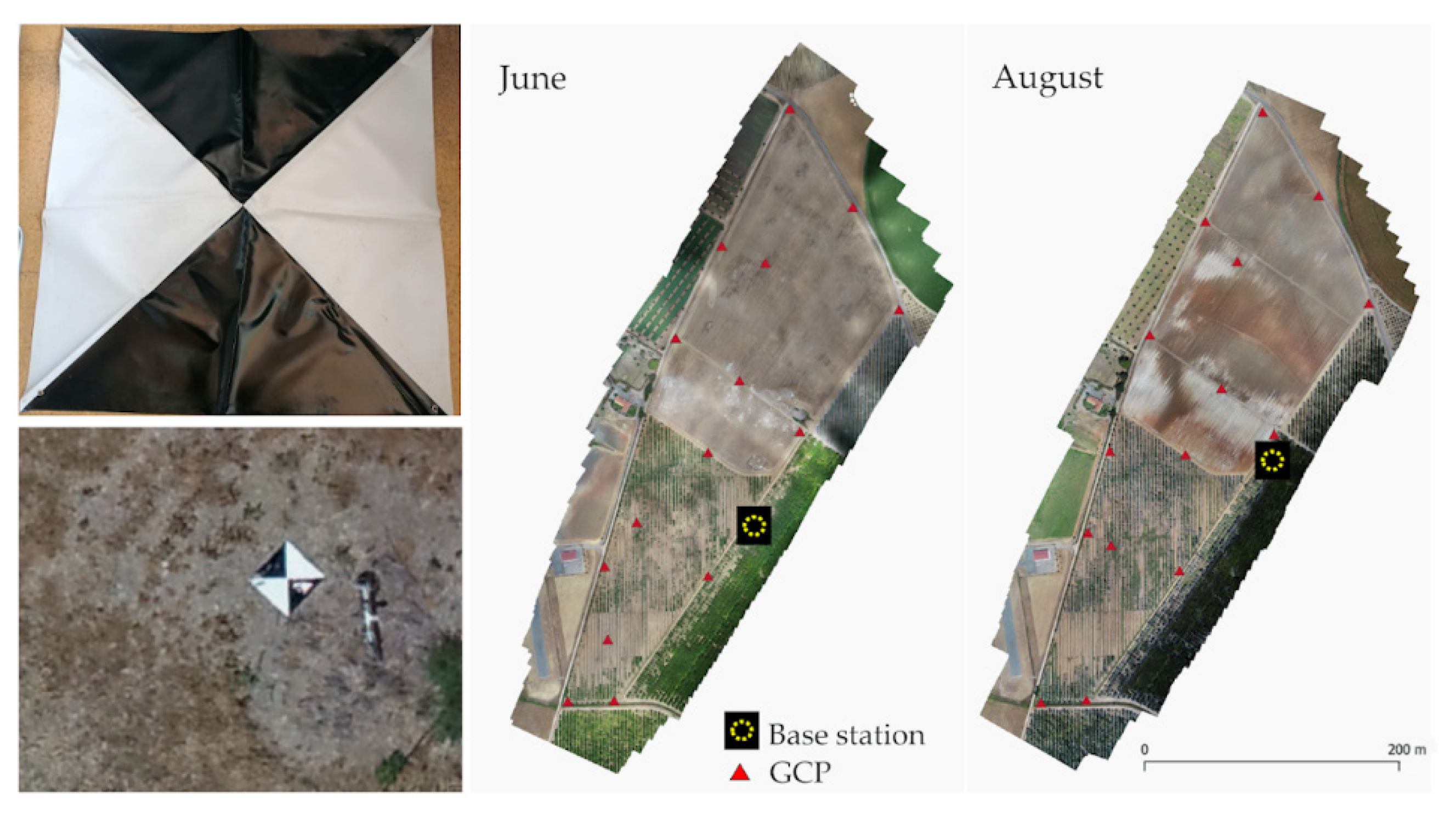

Usually, man-made or natural features in the area covered by the aerial images can be used as GCPs. However, well-defined points were difficult to find in this study area because it is rural. Therefore, artificial targets of 1 by 1 metre were placed in the area (Figure 5) in June and August. The targets were plastic bands with a black and white triangular pattern and contrasting colours in the centre. They were also fixed to the ground with hooks to prevent any shift during the flights.

Note that the GCP network established and measured, including the base station, was not the same for the two time periods.

These points must be well defined in the images so that their image coordinates can be measured accurately during photogrammetric processing; otherwise, the positional accuracy of the DSM/DTM would be affected. Although the smallest identifiable object in an image is related to the GSD, these targets should be several times larger than the GSD to ensure their clear identification and measurement in the images. Assmann et al. [46] recommended an overall side length of 7–10 times the GSD for square targets of this type. Therefore, considering the GSD of the imagery (about 3 cm), the artificial targets should not be smaller than 21 cm. Additionally, these 1 by 1 m square targets enable accurate measurement of the image coordinates of their centre marks for a GSD of up to 10 cm, i.e., a maximum flight height of about 360 m (Equation (1)).
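The target-size rule of thumb from [46] can be written as two one-line checks. The sketch below computes the recommended side length for a given GSD and, conversely, the largest GSD a target supports, then converts that GSD to a flight height with Equation (1) using the FC6310 values. Using the upper factor (10) for the inverse check is an assumption that reproduces the 10 cm and roughly 360 m figures quoted above; the function names are illustrative.

```python
def recommended_target_side_m(gsd_m: float, min_factor: float = 7.0, max_factor: float = 10.0):
    """Side length range (m) for square targets, 7 to 10 times the GSD."""
    return min_factor * gsd_m, max_factor * gsd_m


def max_gsd_for_target_m(side_m: float, factor: float = 10.0) -> float:
    """Largest GSD (m) for which a target of this side still spans `factor` pixels."""
    return side_m / factor


def flight_height_for_gsd_m(gsd_m: float, focal_mm: float = 8.8,
                            sensor_width_mm: float = 13.2, image_width_px: int = 5472) -> float:
    """Equation (1) solved for H, with FC6310 defaults."""
    return gsd_m * focal_mm * image_width_px / sensor_width_mm


if __name__ == "__main__":
    print(recommended_target_side_m(0.03))
    g = max_gsd_for_target_m(1.0)
    print(g, flight_height_for_gsd_m(g))
```

For a 3 cm GSD the recommended side is 0.21 to 0.30 m, and a 1 m target supports a GSD of up to 0.10 m, i.e., a flight height of about 365 m with these sensor values.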

The accuracy of the field measurements of these targets is also critical for the positional quality of the DTM. The centres of the 15 targets, evenly distributed over the area of interest (Figure 5), were accurately measured with a low-cost GNSS system, the Emlid Reach RS+ GNSS receiver. According to the manufacturer's specifications, its accuracy is ±0.007 m + 1 ppm horizontally and ±0.014 m + 2 ppm vertically for a single baseline of up to 10 km.

The RTK performance of the Emlid receiver is adequate for this work provided that the requirements for centimetre-level measurements are met, viz. (1) the baseline length (distance between the rover and the base station) should not exceed 10 km, which is essential because the Emlid system only receives satellite signals on one frequency (L1), so positional accuracy degrades at a rate of 1 mm/km [47]; and (2) a fixed position solution must be obtained, which takes more time with a single-frequency receiver such as the Emlid. More details about the usage, accuracy, and limitations of this low-cost device can be found in [48].

The coordinate measurements were performed in RTK mode for both surveys, with a distance between the base station and the rover of no more than 1 km. Some of these artificial targets were used as independent check points (ICPs) for the accuracy assessment of the photogrammetric end products, which means that they were not included in the processing of the UAV data.
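The manufacturer's specification is a constant term plus a distance-dependent term (1 ppm corresponds to 1 mm per km of baseline). The sketch below evaluates it for the 1 km baseline used here and the 10 km limit; it only reproduces the nominal specification and says nothing about multipath, fix quality, or setup errors. The dictionary name is illustrative.

```python
def rtk_spec_accuracy_m(baseline_km: float, constant_mm: float, ppm: float) -> float:
    """Nominal accuracy = constant + ppm * baseline (1 ppm = 1 mm/km), in metres."""
    return (constant_mm + ppm * baseline_km) / 1000.0


# (constant in mm, ppm) as quoted from the manufacturer's specification above
EMLID_RS_PLUS = {"horizontal": (7.0, 1.0), "vertical": (14.0, 2.0)}

if __name__ == "__main__":
    for km in (1.0, 10.0):
        h = rtk_spec_accuracy_m(km, *EMLID_RS_PLUS["horizontal"])
        v = rtk_spec_accuracy_m(km, *EMLID_RS_PLUS["vertical"])
        print(f"{km:>4.0f} km: horizontal ±{h * 1000:.0f} mm, vertical ±{v * 1000:.0f} mm")
```

At a 1 km baseline the nominal values are about 8 mm horizontally and 16 mm vertically, rising to 17 mm and 34 mm at 10 km.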

It is important to highlight that the need for artificial ground targets should be assessed before the UAV flight. Another solution is to install permanently marked GCPs outside the tilled area, so that the same GCPs can be used to monitor the area more easily in the future.

2.4. Photogrammetric Processing

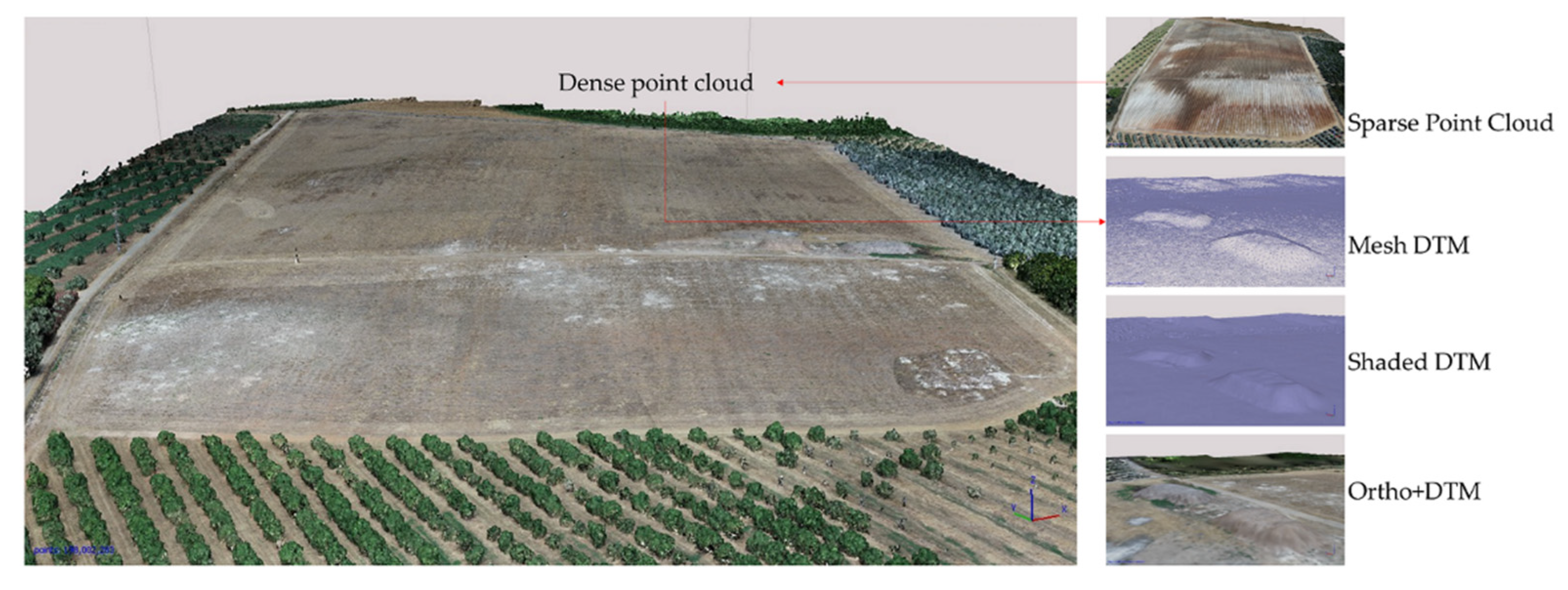

Photogrammetric processing is an automated image-based reconstruction that combines computer vision and photogrammetric techniques. The photogrammetric mapping includes a range of 2D/3D products, such as dense point clouds, 3D surface models (DSM, DTM), and orthoimages.

Several commercial software products and FOSS photogrammetric solutions have been developed for the automatic processing of UAV imagery. In this study, 3D data were generated with Agisoft Metashape, a commercial package that provides a photogrammetric workflow based on Structure from Motion–Multi-View Stereo (SfM–MVS) for image block processing.

SfM is a photogrammetric technique that reconstructs 3D geometry from overlapping images. SfM has revolutionised traditional photogrammetry [40] because it enables the identification and matching of pixels in images at different scales and orientations, includes camera self-calibration, and can produce a georeferenced 3D point cloud without GCPs (using the direct georeferencing information in the mathematical model).

The three main steps of the photogrammetric processing used to produce the DSM/DTM are (1) image block georeferencing, (2) dense point cloud generation, and (3) DTM derivation by filtering the DSM (Figure 6).

Georeferencing is the reconstruction of the image block geometry at the time of exposure, following the basic principles of aerial triangulation, in which camera self-calibration and bundle block adjustment (BBA) are performed within an SfM photogrammetric workflow [40,49].

At the beginning of processing, an initial “alignment” of image pairs is performed using a scale-invariant feature transform (SIFT) approach [50], in which key points are automatically extracted from overlapping images and matched (tie points). The SfM then performs bundle adjustment to compute the camera interior orientation (IO) parameters (including lens distortion), the six exterior orientation parameters (the position and orientation of each image), and a sparse 3D point cloud (the set of tie points). During the BBA step, the image coordinates of a set of GCPs (evenly distributed over the study area) are measured in all images in which they appear. In practice, this task only requires refining the position of these points in the images, because the block has already been initially aligned using the onboard navigation data. We should also stress that, to ensure the positional accuracy of the georeferenced block and derived products, it is crucial to use a reasonable number of well-defined GCPs with an optimal spatial distribution near the image block borders, together with some additional GCPs in the middle [42,44,51,52].

Camera self-calibration is also performed in this processing, with the initial correction of the IO parameters and the addition of distortion parameters; Agisoft Metashape uses Brown's distortion model [53].
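For reference, the sketch below applies a Brown-type distortion model (radial terms K1 to K3 and decentring terms P1, P2) to normalised image coordinates and converts the result to pixels, with the principal point given as an offset from the image centre. It follows the general form of the Brown model; the exact parameterisation in Agisoft Metashape (for example, the ordering of P1/P2, an extra K4 term, and the affinity/skew terms B1, B2) should be checked in the software manual, and conventions differ between packages such as OpenCV. The focal length in pixels (8.8 mm / 2.41 µm, about 3651 px) and the distortion coefficients in the example are illustrative.

```python
def brown_distort(x, y, k1=0.0, k2=0.0, k3=0.0, p1=0.0, p2=0.0):
    """Apply radial (k1..k3) and decentring (p1, p2) distortion to
    normalised image coordinates x = Xc/Zc, y = Yc/Zc."""
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
    xd = x * radial + p1 * (r2 + 2.0 * x * x) + 2.0 * p2 * x * y
    yd = y * radial + p2 * (r2 + 2.0 * y * y) + 2.0 * p1 * x * y
    return xd, yd


def to_pixels(xd, yd, f_px, width_px=5472, height_px=3648, cx=0.0, cy=0.0):
    """Map distorted normalised coordinates to pixel coordinates; (cx, cy) is the
    principal point offset from the image centre, in pixels."""
    u = 0.5 * width_px + cx + f_px * xd
    v = 0.5 * height_px + cy + f_px * yd
    return u, v


if __name__ == "__main__":
    f_px = 8.8 / 0.00241  # focal length in pixels (8.8 mm / 2.41 µm)
    xd, yd = brown_distort(0.2, 0.1, k1=-0.01, p1=1e-4)  # hypothetical coefficients
    print(to_pixels(xd, yd, f_px))
```

In self-calibration, the bundle adjustment estimates these coefficients together with f and (cx, cy) so that projected tie points match their measured pixel positions.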

Every task involved in image processing contributes to the accuracy of the end product. For instance, sub-pixel image matching accuracy, an accurate set of GCPs (centimetre-level accuracy) measured in the images, and exact camera calibration together yield a positional accuracy of a few centimetres.

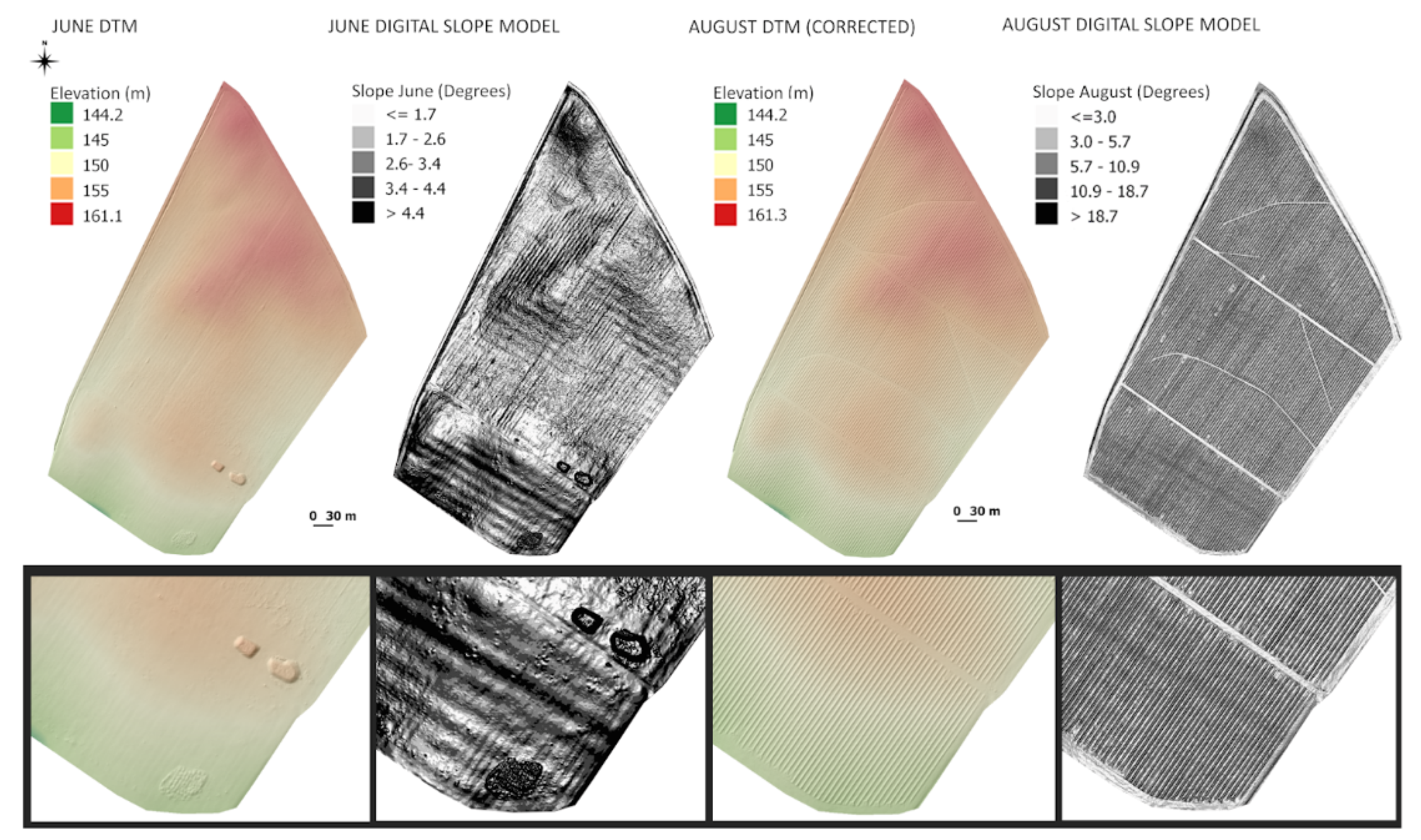

The georeferenced sparse point cloud is densified using an MVS dense matching algorithm. The result is a dense point cloud with RGB information derived from the input images. Finally, the dense point cloud was classified into ground and non-ground points, and the ground points were interpolated into a DTM. The grid resolution of the DTM should not be smaller than the point spacing, which is given by the square root of the inverse of the point density (pts/m²).
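The rule linking point density to the smallest sensible DTM cell size can be written directly. The sketch below computes the mean point spacing as the square root of the inverse density and checks a candidate cell size against it; the density of 400 pts/m² in the example is hypothetical, not a figure from this study.

```python
import math


def mean_point_spacing_m(density_pts_per_m2: float) -> float:
    """Mean point spacing (m) = sqrt(1 / density)."""
    if density_pts_per_m2 <= 0:
        raise ValueError("point density must be positive")
    return math.sqrt(1.0 / density_pts_per_m2)


def cell_size_is_supported(cell_size_m: float, density_pts_per_m2: float) -> bool:
    """True if the DTM cell is not smaller than the mean point spacing."""
    return cell_size_m >= mean_point_spacing_m(density_pts_per_m2)


if __name__ == "__main__":
    density = 400.0  # hypothetical dense cloud density, pts/m²
    print(mean_point_spacing_m(density))
    print(cell_size_is_supported(0.10, density), cell_size_is_supported(0.02, density))
```

At 400 pts/m² the mean spacing is 5 cm, so a 10 cm DTM cell is supported while a 2 cm cell would mostly interpolate between points.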

The DTM represents the elevation of the bare earth, excluding both artificial and natural objects. The filtering technique applied for point cloud classification is essential to obtain an accurate terrain model free of errors and of points that do not belong to the terrain [54], since these influence the estimation of volume changes [55]. Agisoft [53] implements ground point filtering with a threshold parameter for the maximum angle between the terrain model and the line connecting the point under test to a point already classified as ground (15 degrees by default), and a maximum distance parameter relative to a set of surrounding points. Higher values of the angle parameter may improve results in steeper areas. The accuracy of DSMs/DTMs produced with this software is detailed in [43].
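Metashape's classifier is not reproduced here. As a much simpler stand-in that illustrates the idea of separating ground from non-ground points, the sketch below keeps a point as ground when it lies within a height tolerance of the lowest point in its grid cell. This lowest-point-per-cell filter is a swapped-in technique, not the algorithm used in this work; it misclassifies ground on slopes that rise more than the tolerance within one cell, which is exactly what angle-based filters address. The cell size and tolerance are hypothetical parameters.

```python
import numpy as np


def lowest_point_ground_mask(points, cell_size_m: float, max_dz_m: float) -> np.ndarray:
    """Boolean mask: True where a point is within max_dz_m of the lowest point in its cell.

    points: (N, 3) array-like of x, y, z coordinates.
    """
    pts = np.asarray(points, dtype=float)
    ix = np.floor((pts[:, 0] - pts[:, 0].min()) / cell_size_m).astype(int)
    iy = np.floor((pts[:, 1] - pts[:, 1].min()) / cell_size_m).astype(int)
    cell_min = np.full((ix.max() + 1, iy.max() + 1), np.inf)
    np.minimum.at(cell_min, (ix, iy), pts[:, 2])  # lowest z per occupied cell
    return pts[:, 2] - cell_min[ix, iy] <= max_dz_m


if __name__ == "__main__":
    g = np.arange(0.0, 10.0, 0.5)
    gx, gy = np.meshgrid(g, g)
    ground = np.column_stack([gx.ravel(), gy.ravel(), np.zeros(gx.size)])
    canopy = np.array([[1.2, 1.3, 3.0], [4.1, 5.2, 2.5]])  # synthetic above-ground points
    mask = lowest_point_ground_mask(np.vstack([ground, canopy]), 2.0, 0.3)
    print(mask.sum(), "ground points of", mask.size)
```

Only the points flagged as ground would then be interpolated into the DTM.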

In this work, an orthoimage mosaic was also produced using a high-resolution DTM and georeferenced image block. This product was obtained to support the visual inspection of the study area.

2.5. Positional Accuracy

Positional accuracy is a mandatory consideration in the UAV mapping of any geospatial product [51]. As one of the measures used to assess spatial data quality [56], it reflects how close the position of an element represented in a system is to its “true position” or a more accurate position. This discrepancy is the positional error ($e_i$). The positional accuracy of spatial data can therefore be evaluated with the root mean square error (RMSE) statistic [51]. The horizontal accuracy is assessed by the planar $\mathrm{RMSE}_{XY}$, combining the x and y directions (Equation (2)), and the vertical accuracy of 3D data by $\mathrm{RMSE}_{Z}$ in the z-direction (Equation (3)):

$$\mathrm{RMSE}_{XY} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(e_{x_i}^{2} + e_{y_i}^{2}\right)} \qquad (2)$$

$$\mathrm{RMSE}_{Z} = \sqrt{\frac{1}{n}\sum_{i=1}^{n} e_{z_i}^{2}} \qquad (3)$$

where

$n$: number of independent check points used;

$e_{x_i}$, $e_{y_i}$, and $e_{z_i}$: positional errors in the x, y, and z directions, respectively.

Each error is the difference between the coordinate estimated by SfM and the coordinate obtained from an independent source of higher accuracy (GNSS) for the same ground point.
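Equations (2) and (3) can be evaluated directly from the check-point errors, defined as SfM-estimated minus GNSS-measured coordinates. The sketch below does so; the function names and the check-point values in the example are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def check_point_errors(estimated: Sequence[Point], reference: Sequence[Point]) -> List[Point]:
    """Errors e_i = SfM-estimated minus GNSS-measured coordinates, per check point."""
    if len(estimated) != len(reference) or len(estimated) == 0:
        raise ValueError("need the same, non-zero number of estimated and reference points")
    return [(ex - rx, ey - ry, ez - rz)
            for (ex, ey, ez), (rx, ry, rz) in zip(estimated, reference)]


def rmse_xy(errors: Sequence[Point]) -> float:
    """Planar RMSE, Equation (2)."""
    return math.sqrt(sum(dx * dx + dy * dy for dx, dy, _ in errors) / len(errors))


def rmse_z(errors: Sequence[Point]) -> float:
    """Vertical RMSE, Equation (3)."""
    return math.sqrt(sum(dz * dz for _, _, dz in errors) / len(errors))


if __name__ == "__main__":
    sfm = [(0.03, 0.04, 0.02), (9.96, 10.03, 9.98)]   # hypothetical SfM coordinates (m)
    gnss = [(0.0, 0.0, 0.0), (10.0, 10.0, 10.0)]       # hypothetical GNSS coordinates (m)
    errs = check_point_errors(sfm, gnss)
    print(f"RMSE_XY = {rmse_xy(errs):.3f} m, RMSE_Z = {rmse_z(errs):.3f} m")
```

Keeping the errors signed also allows a systematic bias (a non-zero mean error) to be reported alongside the RMSE.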

In practice, the assessment follows the traditional method of comparing estimated and measured ground coordinates for GCPs or ICPs. The ICPs were used to assess the quality of the georeferenced dense point cloud and the DTM at the end of UAV imagery processing. The accuracy assessment of the georeferenced image blocks and the DTMs will be discussed in Section 3.2.

2.6. Quantification of Mobilised Soil Volume

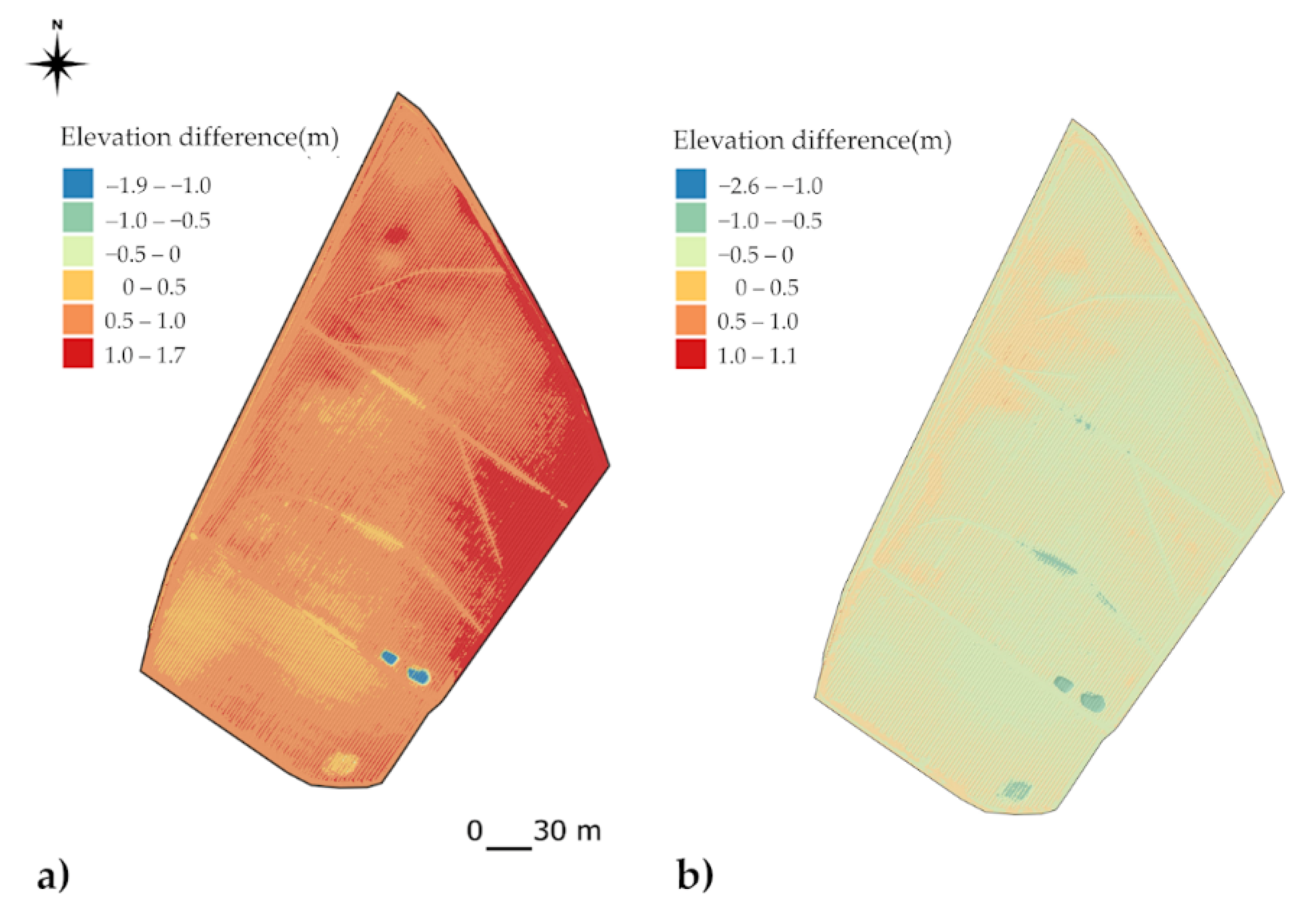

Measuring the amount of soil mobilised by tillage implies calculating volume changes over the time frame studied (before and after soil tillage). Among the various methods available for mapping volume changes, the differential DTM was chosen, given that the 3D data were derived from UAV-based RGB imagery.

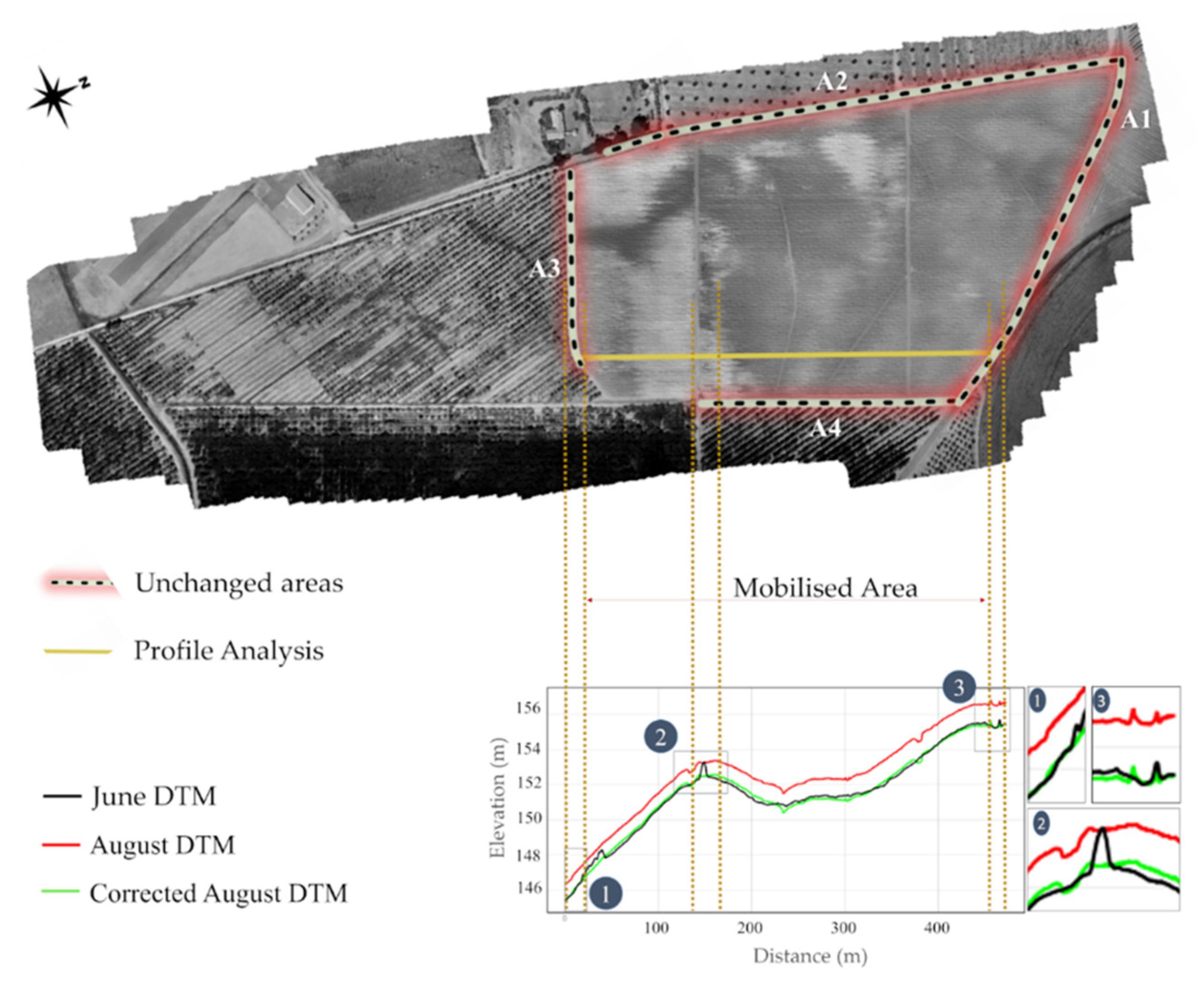

The quantification of mobilised soil volume from the two previously obtained DTMs involves the following steps: (1) analysis of the DTMs; (2) co-registration of the DTMs, to reduce the deviation between them; (3) generation of the differential DTM; and (4) estimation of the volume/area changes that occurred after soil tillage (Figure 7).

Analytical methods that support the analysis of multiple DTMs are essential, such as traditional terrain profiles and the swath profile method [57,58]. In this study, visual checking of DTM errors and analysis of deviations between the two DTMs were performed with terrain profiles using the QGIS Profile Tool plugin. Additionally, raster statistics tools from GRASS GIS (r.stats) and QGIS (Raster layer statistics) were used to compare the range of elevation values in the two DTMs.

The differential DTM should be derived from the difference between two DTMs with the same resolution, position, and extent. This 3D model contains the elevation difference for each grid cell.

The differential DTM provides reliable results if the input DTMs meet two conditions [55]: (1) their pixel size, height ranges, and corner coordinates are the same; and (2) the number and location of their free-cut areas match.
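The differencing step and the cut/fill bookkeeping can be sketched with NumPy, assuming both DTMs are already on the same grid (same cell size, origin, and extent), which the shape check below only partly verifies. The later DTM minus the earlier one is taken as the differential DTM, so positive cells are fill and negative cells are cut, and NoData cells (NaN) are ignored. The optional threshold `min_change_m` is a hypothetical parameter, shown because differences within the DTM uncertainty are often excluded; it is not part of the method described here.

```python
import numpy as np


def differential_dtm(dtm_before, dtm_after) -> np.ndarray:
    """Differential DTM = later DTM minus earlier DTM, cell by cell."""
    before = np.asarray(dtm_before, dtype=float)
    after = np.asarray(dtm_after, dtype=float)
    if before.shape != after.shape:
        raise ValueError("DTMs must share the same grid (shapes differ)")
    return after - before


def cut_fill_summary(dod, cell_size_m: float, min_change_m: float = 0.0) -> dict:
    """Fill/cut volumes (m³) and areas (m²) from a differential DTM."""
    dod = np.asarray(dod, dtype=float)
    cell_area = cell_size_m ** 2
    finite = np.isfinite(dod)
    d = np.where(finite, dod, 0.0)  # NoData cells contribute nothing
    significant = finite & (np.abs(d) > min_change_m)
    fill_mask = significant & (d > 0)
    cut_mask = significant & (d < 0)
    fill = float(d[fill_mask].sum() * cell_area)
    cut = float(-d[cut_mask].sum() * cell_area)
    return {
        "fill_volume_m3": fill,
        "cut_volume_m3": cut,
        "net_volume_m3": fill - cut,
        "fill_area_m2": float(fill_mask.sum() * cell_area),
        "cut_area_m2": float(cut_mask.sum() * cell_area),
    }


if __name__ == "__main__":
    before = np.zeros((3, 3))
    after = np.array([[0.1, 0.0, -0.2], [0.0, 0.0, 0.0], [0.3, 0.0, np.nan]])  # synthetic grid
    print(cut_fill_summary(differential_dtm(before, after), cell_size_m=0.5))
```

Reporting cut and fill separately, rather than only the net value, keeps soil that was merely redistributed within the field visible in the totals.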

The main difficulties in estimating accurate volume changes are usually (1) the uncertainties of the input DTMs, related to the quality of point cloud georeferencing and point cloud classification; and (2) the relative co-registration quality of the different DTMs (ensuring that the terrain profiles in unchanged areas are almost coincident). A differential DTM cannot reflect the actual changes if these issues are not controlled. Several methods have been used to ensure (or improve) the quality of mapping relative changes or volume changes by correcting the relative position between multi-temporal 3D data at the point cloud or DTM level, such as (1) co-registering point clouds using stable areas, such as roads (with the CloudCompare software), before generating the two DSMs [22]; (2) using SIFT key-point detection and matching functions (in OpenCV) to co-register raster DSMs [59]; (3) aligning dense point clouds by fitting a reference plane in CloudCompare [31]; and (4) estimating a mean depth value for differential DTMs, applied to estimate the volume of a landslide [60] in areas of difficult and restricted access.

It is important to highlight that most studies on the measurement of erosion and landslides use the same ground control network for image processing and for the accuracy assessment of the DTMs [19,23,24], which reduces uncertainties and avoids the need to align the DTMs. In our study, however, the two DTMs were not produced with the same GCPs, because soil mobilisation made it impossible to keep these points materialised on the ground. This limitation contributes to a misalignment error between the DTMs.

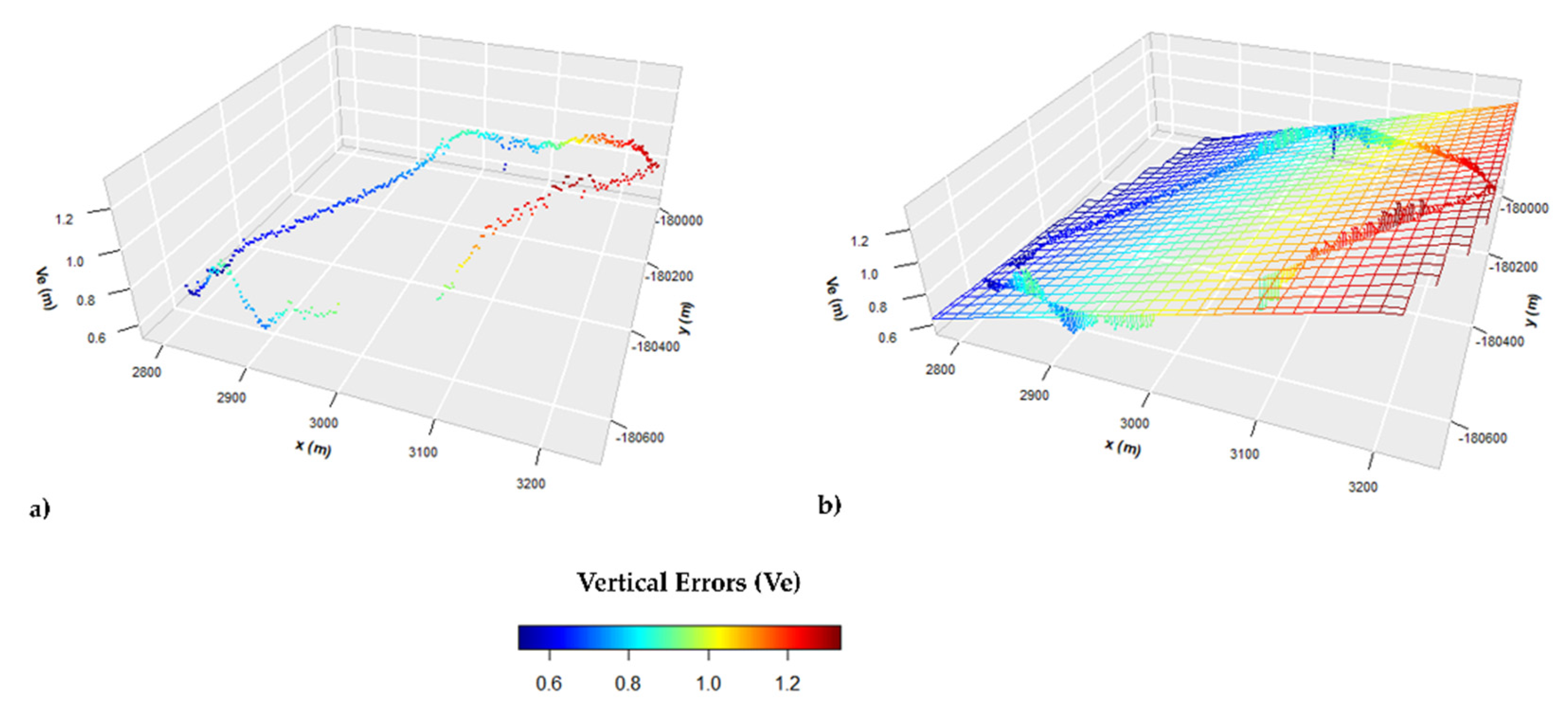

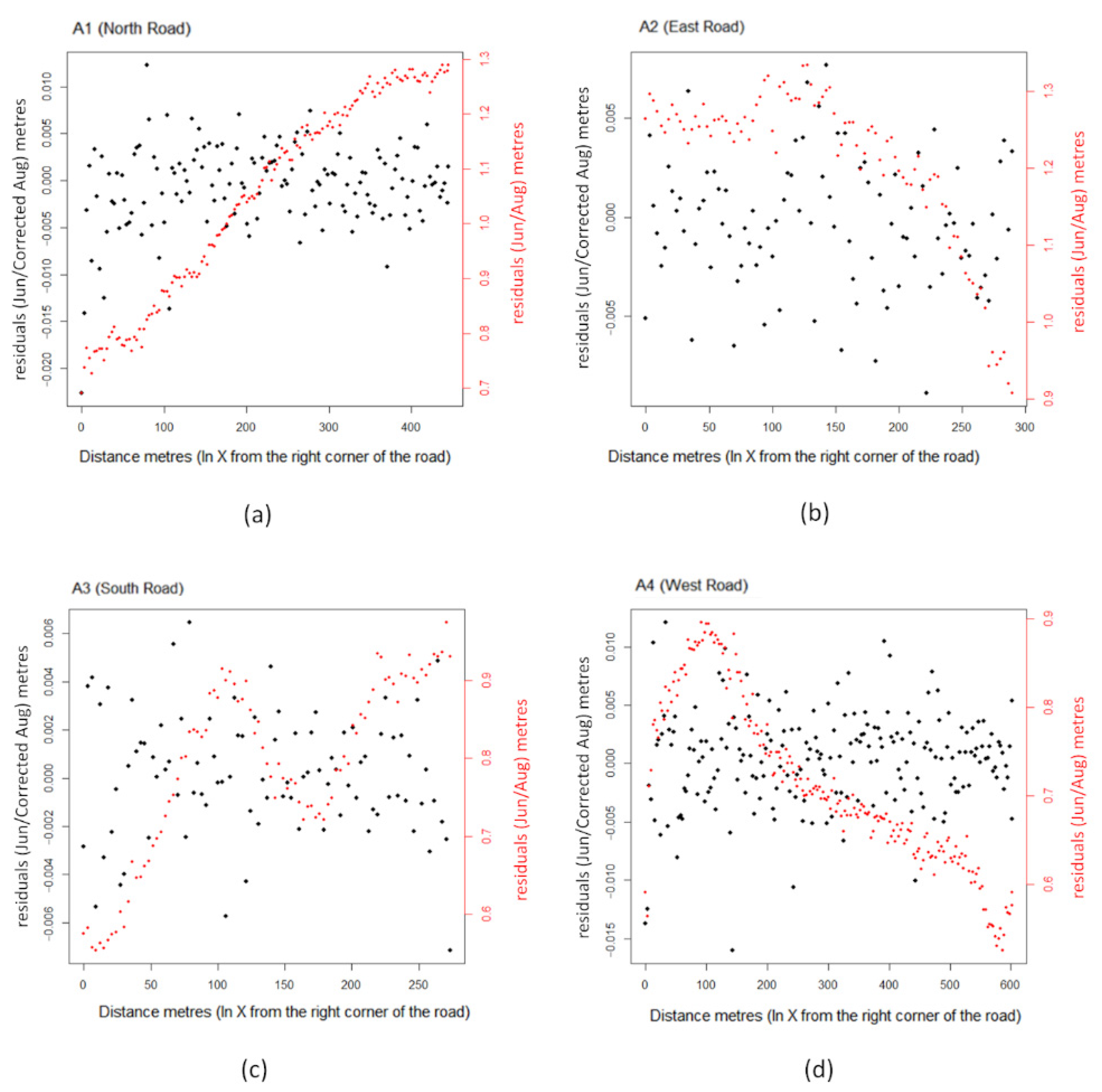

In this work, before the differential DTM was generated, the relative position between the two DTMs was improved using the unchanged-area-matching method. This method is similar to the co-registration techniques described in previous studies [28,29,61]. Ideally, the DTMs should coincide in areas where no changes occurred between the time frames studied. The proposed method therefore uses a multiple regression model to reduce the elevation differences at corresponding points of the two DTMs in unchanged areas. The model is based on a set of June elevation values in unchanged areas, with the August DTM (grid) as the dependent variable. The multiple regression model with three independent variables is given by the following equation:

$$Z_{Aug} = \beta_0 + \beta_1 Z_{Jun} + \beta_2 X + \beta_3 Y + \varepsilon$$

where

$Z_{Aug}$: dependent variable, the elevation of the August DTM,

$Z_{Jun}$: predictor variable related to the elevation data of the June profiles,

$X$, $Y$: predictor variables related to the horizontal coordinates,

$\beta_0$: value of $Z_{Aug}$ when all the other parameters are set to zero (the intercept),

$\beta_1$, $\beta_2$, $\beta_3$: regression coefficients of the corresponding independent variables,

and $\varepsilon$: model error (residuals).
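The regression model above predicts August elevation from June elevation and the horizontal coordinates. It can be illustrated with an ordinary least-squares fit in NumPy. This is a swapped-in sketch of the same linear model, not the SAGA module used in the study. The sample points are hypothetical, and the August values are generated from known coefficients so that the fit can be checked.

```python
import numpy as np


def fit_unchanged_area_model(z_jun, x, y, z_aug):
    """Least-squares fit of Z_Aug = b0 + b1*Z_Jun + b2*X + b3*Y + e on unchanged-area points.

    Returns the coefficients [b0, b1, b2, b3] and the residuals e (observed minus estimated).
    """
    z_jun, x, y, z_aug = (np.asarray(v, dtype=float) for v in (z_jun, x, y, z_aug))
    design = np.column_stack([np.ones_like(z_jun), z_jun, x, y])
    coeffs, *_ = np.linalg.lstsq(design, z_aug, rcond=None)
    residuals = z_aug - design @ coeffs
    return coeffs, residuals


# Hypothetical unchanged-area sample: local coordinates (m) and June elevations (m).
x = np.array([0.0, 10.0, 20.0, 0.0, 10.0, 20.0])
y = np.array([0.0, 0.0, 0.0, 10.0, 10.0, 10.0])
z_jun = np.array([100.0, 100.3, 100.1, 100.5, 100.2, 100.4])
# Synthetic August values with a known offset and tilt, so the recovered coefficients can be checked.
z_aug = 0.05 + 1.0 * z_jun + 0.001 * x - 0.002 * y

coeffs, residuals = fit_unchanged_area_model(z_jun, x, y, z_aug)
print(coeffs)
```

With real projected coordinates, which can reach hundreds of thousands of metres, centring X and Y before fitting improves numerical conditioning. This changes only the interpretation of the intercept, not the other coefficients.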

The residual, represented in the regression equation by the model error, is the difference between the observed and the estimated August elevation values.

This regression analysis was performed with the Multiple Regression Analysis (Points and Predictor Grids) module implemented in SAGA [62]. First, the regression coefficients were estimated from the June elevation profiles of unchanged areas and the August DTM, including the horizontal coordinates. Second, a new August DTM was produced using the estimated regression model, with the residuals interpolated onto a regular grid using the bicubic spline interpolation method. The DTM resampled with regression-based values is hereafter called the corrected August DTM. As expected, in unchanged areas the elevation values of the corrected August DTM should be closer to the June elevation values, that is, the residuals should be closer to zero.
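The expectation that residuals approach zero in unchanged areas can be verified by comparing each August DTM with the June DTM over a stable-area mask. The sketch below reports the mean difference and RMSE before and after correction. The grids, mask, and offsets are hypothetical. The "corrected" grid is a simple constant-shift stand-in, not the output of the regression model.

```python
import numpy as np


def stable_area_stats(z_other, z_june, stable_mask):
    """Mean and RMSE of (other - June) over unchanged cells; both should approach zero after correction."""
    d = (np.asarray(z_other, float) - np.asarray(z_june, float))[stable_mask]
    d = d[~np.isnan(d)]  # ignore no-data cells
    return float(d.mean()), float(np.sqrt(np.mean(d ** 2)))


june = np.array([[100.0, 100.2, 100.4],
                 [100.1, 100.3, 100.5]])
stable = np.array([[True, True, False],
                   [True, True, False]])  # right-hand column = tilled (changed) area
aug_raw = june + 0.08                      # hypothetical systematic misalignment
aug_raw[:, 2] += 0.30                      # hypothetical real soil change in the tilled area
aug_corrected = aug_raw - 0.08             # stand-in for the regression-corrected DTM

print("raw      ", stable_area_stats(aug_raw, june, stable))
print("corrected", stable_area_stats(aug_corrected, june, stable))
```

Only stable cells enter the statistics. The real change in the tilled column therefore does not inflate the misalignment estimate.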

The unchanged areas chosen in the DTMs should be roads, or areas where objects above the surface were accurately removed by point cloud filtering. To reduce the contribution of inaccurate filtering, crop areas and dense vegetation should not be selected, particularly when the time between the two DTMs is long.

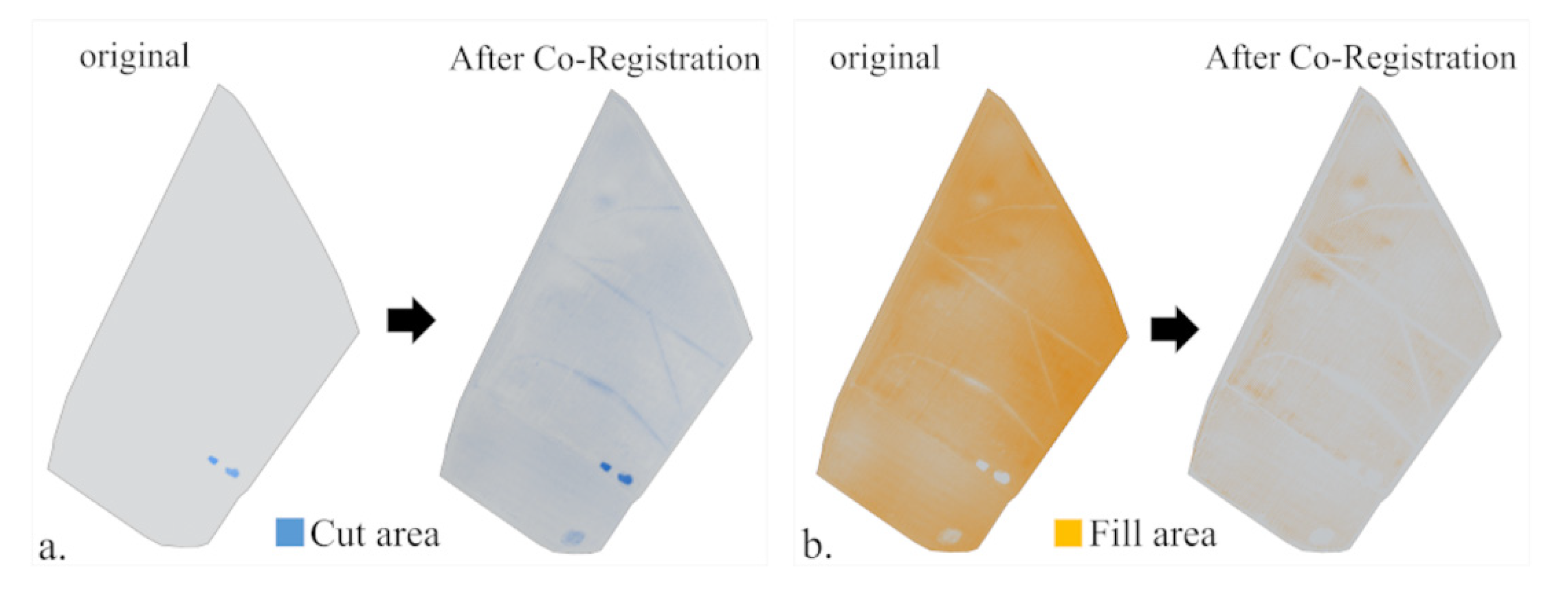

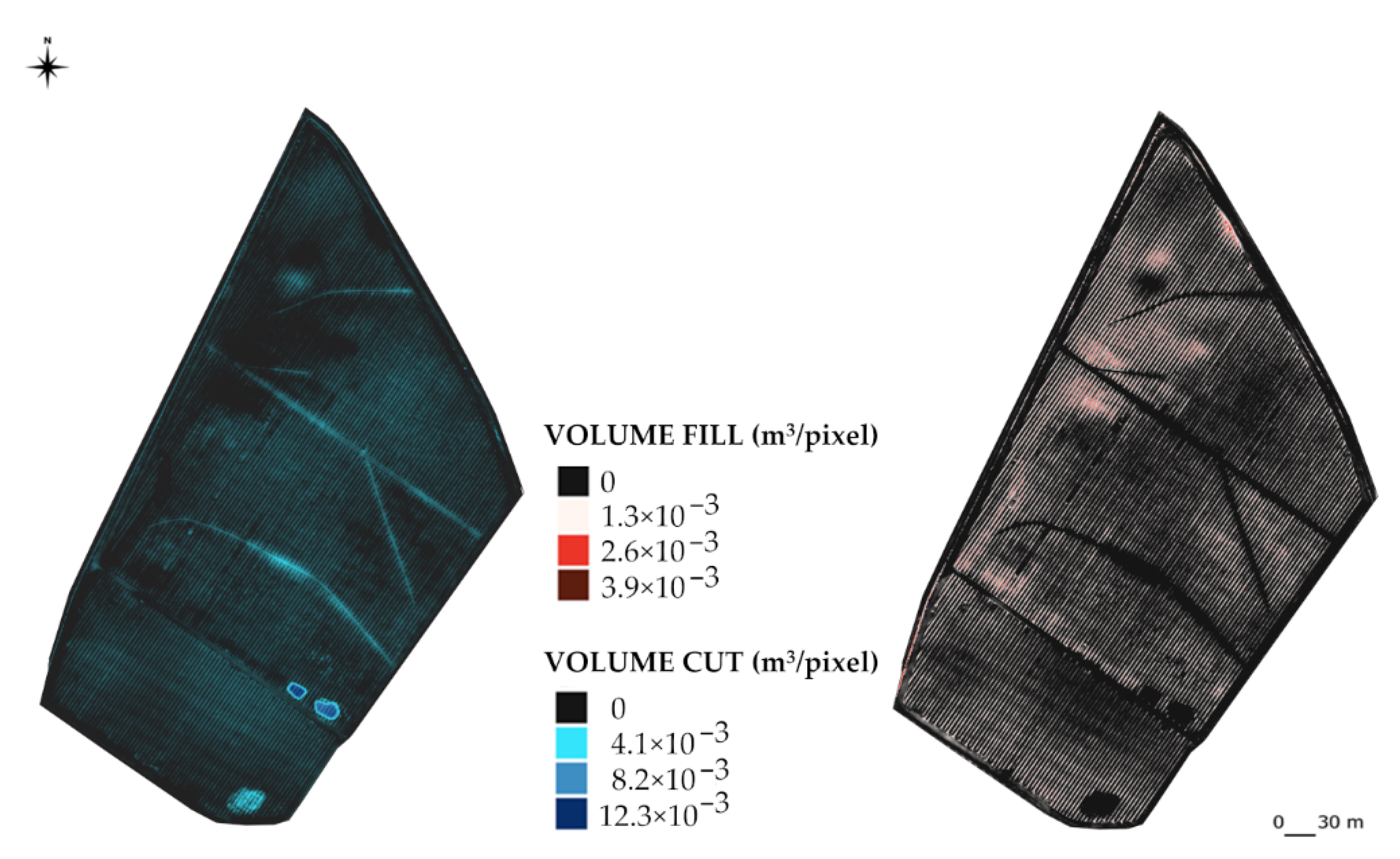

The differential DTM was obtained by subtracting the June DTM from the corrected August DTM using the Raster Calculator tool in QGIS. This 3D model enabled the identification of areas where soil was extracted (cut) or accumulated (fill) during the soil tillage operation (Figure 8). Positive values in the differential DTM grid mean that the terrain elevation increased (fill) by August, and negative values indicate that it decreased (cut).

The total mobilised soil volume ($V_T$) was obtained by calculating the volume of every pixel in the fill and cut areas and summing them. In practice, it corresponds to the difference between the fill and cut volumes. The volume change, calculated from every differential DTM pixel, is given by the following equation:

$$V_T = GR^2 \sum_{i=1}^{n} h_i \;-\; GR^2 \sum_{j=1}^{m} \lvert h_j \rvert$$

where

$n$, $m$: number of cells in the fill and cut areas, respectively,

$GR^2$: area of each cell, where $GR$ is the grid resolution of the differential DTM,

and $h$: elevation difference ($h_i > 0$ for fill cells, $h_j < 0$ for cut cells).
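The fill, cut, and net volumes can be computed from a differential DTM array as a worked example. The sketch assumes square cells of side `cell_size` (the grid resolution) and NaN for no-data cells. The example values are hypothetical.

```python
import numpy as np


def cut_fill_volumes(dh, cell_size):
    """Fill, cut and net volumes (m^3) from a differential DTM dh (m) with square cells.

    fill = GR^2 * sum(h_i) over the n cells with h > 0
    cut  = GR^2 * sum(|h_j|) over the m cells with h < 0
    net  = fill - cut (the total mobilised volume V_T)
    """
    h = np.asarray(dh, dtype=float)
    h = h[~np.isnan(h)]           # drop no-data cells
    cell_area = cell_size ** 2    # GR^2
    v_fill = cell_area * h[h > 0].sum()
    v_cut = cell_area * np.abs(h[h < 0]).sum()
    return float(v_fill), float(v_cut), float(v_fill - v_cut)


dh = np.array([[0.10, -0.05],
               [0.20, -0.15]])    # hypothetical elevation differences (m)
print(cut_fill_volumes(dh, cell_size=0.5))
```

With a 0.5 m grid each cell covers 0.25 m². The fill is 0.30 m × 0.25 m² = 0.075 m³, the cut is 0.20 m × 0.25 m² = 0.05 m³, and the net volume is their difference.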

The total volume of soil transferred during the soil tillage operation is given by the sum of the fill and cut volumes. A digital volume change model was also generated in this work, in which each grid cell holds a volume change value. It is obtained by multiplying each differential DTM cell value by the cell's area on the ground.
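The digital volume change model and the total transferred volume follow directly from the differential DTM. The sketch below multiplies each cell by the cell area and then sums absolute values to obtain fill plus cut. The grid is hypothetical, with NaN marking a no-data cell that should stay no-data in the output.

```python
import numpy as np


def volume_change_model(dh, cell_size):
    """Per-cell volume change (m^3): each differential DTM value times the cell area GR^2."""
    return np.asarray(dh, dtype=float) * cell_size ** 2


def transferred_volume(dh, cell_size):
    """Total soil moved: fill plus cut, i.e. the sum of absolute per-cell volumes."""
    return float(np.nansum(np.abs(volume_change_model(dh, cell_size))))


dh = np.array([[0.10, -0.05],
               [0.20, np.nan]])   # hypothetical differences (m); NaN = no data
vc = volume_change_model(dh, cell_size=0.5)
print(vc)
print("transferred:", transferred_volume(dh, 0.5), "net:", float(np.nansum(vc)))
```

Summing the signed grid gives the net volume, while summing its absolute values gives the transferred volume. Both figures come from the same volume change model.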

The differential DTM, the digital volume change model, and the statistical values were produced with a set of QGIS algorithms dedicated to raster surface analysis and statistics, such as raster volume and raster surface volume.

4. Conclusions

This study showed the potential of UAV data for estimating soil volume change in a soil tillage operation. To this end, a low-cost and fast methodology was proposed to manage and monitor land preparation. It includes estimating fill/cut volumes and volume changes after soil tillage with an accuracy of a few centimetres. It also integrates a positional accuracy assessment of the georeferenced UAV blocks and DTMs, since this work required an accuracy of a few centimetres. Given the importance of data quality assessment for estimating volume change, the study also demonstrated the impact of co-registering the DTMs with the unchanged-area-matching method before computing the differential DTM.

Some improvements must be considered for the proposed methodology: (1) using a UAV system with integrated GNSS RTK or PPK to reduce the fieldwork needed to measure GCPs; and (2) establishing a materialised GCP network outside the tilled area. Such a network would support the monitoring of future tasks in the same area and reduce (or avoid) the vertical error between multi-temporal DTMs/DSMs.

This work contributes to monitoring soil changes with a methodology that allows multi-temporal DTMs obtained from different UAV surveys to be combined. Furthermore, the use of multi-temporal UAV data will become more common and will challenge data users, increasing the demand for solutions that allow 3D data to be compared. From a farmer's point of view, the methodology is helpful for monitoring runoff conditions. Moreover, it can support an economic model in which the costs of soil tillage operations are based on volume (3D data) instead of area (2D data).

UAV technology and robust methodologies for the acquisition of 3D models that can be easily implemented and used by farmers in land preparation will bring many advantages when managing and monitoring the effects of traditional and conventional tillage systems, such as reducing soil compaction caused by machinery. Furthermore, it will contribute to sustainable precision agriculture with the usage of efficient geospatial tools, ensuring the data quality of a 2D/3D product.