Abstract

Semantic segmentation of very fine resolution (VFR) urban scene images plays a significant role in several application scenarios, including autonomous driving, land cover classification, and urban planning. However, the tremendous detail contained in VFR images, especially the considerable variations in the scale and appearance of objects, severely limits the potential of existing deep learning approaches. Addressing such issues represents a promising research field in the remote sensing community, which paves the way for scene-level landscape pattern analysis and decision making. In this paper, we propose a Bilateral Awareness Network (BANet), which contains a dependency path and a texture path to fully capture the long-range relationships and fine-grained details in VFR images. Specifically, the dependency path is built on ResT, a novel Transformer backbone with memory-efficient multi-head self-attention, while the texture path is built on stacked convolution operations. In addition, a feature aggregation module based on the linear attention mechanism is designed to effectively fuse the dependency features and texture features. Extensive experiments conducted on three large-scale urban scene image segmentation datasets, i.e., the ISPRS Vaihingen dataset, the ISPRS Potsdam dataset, and the UAVid dataset, demonstrate the effectiveness of our BANet. In particular, BANet achieves a 64.6% mIoU on the UAVid dataset.

1. Introduction

Semantic segmentation of very fine resolution (VFR) urban scene images is a hot topic in the remote sensing community [1,2,3,4,5,6]. It plays a crucial role in various urban applications, such as urban planning [7], vehicle monitoring [8], land cover mapping [9], change detection [10], and building and road extraction [11,12], as well as other practical applications [13,14,15]. The goal of semantic segmentation is to assign each pixel to a certain category. Since geo-objects in urban areas are commonly characterized by large within-class variance and small between-class variance, semantic segmentation of very fine resolution RGB imagery remains a challenging issue [16,17]. For example, urban buildings made of diverse materials show different spectral signatures, while buildings and roads made of the same material (e.g., cement) exhibit similar textural information in RGB images.

Owing to their strength in local texture extraction, deep convolutional neural networks (DCNNs) have been widely used to investigate the challenging urban scene segmentation task [18,19]. In particular, methods based on the fully convolutional neural network (FCN) [20], which can be trained end-to-end, have achieved great breakthroughs in urban scene labelling [21]. In comparison with traditional machine learning methods, such as the support vector machine (SVM) [22], random forest [23], and conditional random field (CRF) [24], FCN-based methods have demonstrated remarkable generalization capability and high efficiency [25,26]. Therefore, numerous specially designed FCN-based networks have been developed for urban scene segmentation, including UNet and its variants [4,16,27,28], multi-scale context aggregation networks [29,30], multi-level feature fusion networks [5], attention-based networks [3,31,32], and lightweight networks [33]. For example, Sherrah [21] introduced the FCN to semantically label remote sensing images. Kampffmeyer et al. [34] quantified the uncertainty in urban remote sensing images at the pixel level, thereby enhancing the accuracy for relatively small objects (e.g., Cars). Maggiori et al. [35] designed an auxiliary CNN to learn feature fusion schemes. Audebert et al. [36] further utilized multi-modal data in their V-FuseNet to enhance segmentation performance. However, if either modality is unavailable in the test phase due to sensor corruption or thick cloud cover [37], such a multi-modal data fusion scheme becomes invalid. Kampffmeyer et al. [38], therefore, proposed a hallucination network that replaces missing modalities during testing. In addition, enhancing segmentation accuracy by optimizing object boundaries is another burgeoning research area [39,40].

Although encouraging, the accuracy of FCN-based networks remains insufficient for VFR segmentation. The reason is that almost all FCN-based networks are built on DCNNs, which are designed for extracting local patterns and inherently lack the ability to model global context [41]. Since long-range dependency is vital for segmenting confusing man-made objects in urban areas, extensive investigations have been devoted to addressing this issue. Typical methods include dilated convolutional networks, which are designed to enlarge the receptive field [42,43], and attentional networks, which are proposed to capture long-range relational semantic content in feature maps [31,44]. Nevertheless, both types of networks still depend on the convolution operation, which impairs the effectiveness of long-range information extraction.

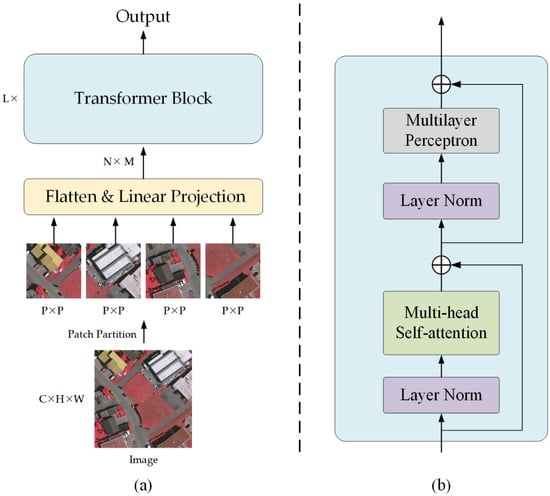

Most recently, owing to its strong ability in long-range dependency capture and sequence-based image modelling, an entirely novel architecture named Transformer [45] has become prominent in various computer vision tasks, such as image classification [46], object detection [47], and semantic segmentation [48]. The schematic flowchart of the Transformer is illustrated in Figure 1a. First, the Transformer deploys a patch partition to split the 2D input image into non-overlapping image patches, where (H, W) and C denote the resolution and the channel dimension of the input image, respectively, and (P, P) is the resolution of each image patch. Then, a flatten operation and a linear projection are employed to produce a 1D sequence of length N = (H × W)/P², where M is the output dimension of the linear projection. Finally, the sequence is fed into stacked transformer blocks to extract features with long-range dependencies. As shown in Figure 1b, a standard transformer block is composed of multi-head self-attention (MHSA) [45], layer norm (LN) [49], a multilayer perceptron (MLP), and two addition operations. L represents the number of transformer blocks. Benefiting from its non-convolutional structure and attention mechanism, the Transformer can capture long-range dependencies more effectively [50].
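The patch partition and flatten steps described above can be sketched in plain Python. This is a minimal illustration that assumes the image is stored as a nested list indexed `[row][col][channel]` and that H and W are divisible by P. The linear projection to dimension M is omitted, and the function name `patchify` is hypothetical.

```python
from typing import List

Image = List[List[List[float]]]  # indexed as image[row][col][channel]


def patchify(image: Image, p: int) -> List[List[float]]:
    """Split an H x W x C image into non-overlapping P x P patches and
    flatten each patch into a 1D vector of length P * P * C."""
    h, w = len(image), len(image[0])
    if h % p or w % p:
        raise ValueError("H and W must be divisible by the patch size P")
    sequence = []
    # Visit patches in row-major order over the (H/P) x (W/P) patch grid.
    for top in range(0, h, p):
        for left in range(0, w, p):
            patch = []
            for r in range(top, top + p):
                for c in range(left, left + p):
                    patch.extend(image[r][c])  # append all C channels of this pixel
            sequence.append(patch)
    return sequence


# Example: a 4 x 6 image with 3 channels split into 2 x 2 patches.
H, W, C, P = 4, 6, 3, 2
img = [[[float(r * W + c)] * C for c in range(W)] for r in range(H)]
seq = patchify(img, P)
print(len(seq), len(seq[0]))  # 6 12: N = (H * W) / P**2 patches of length P * P * C
```

Each element of `seq` corresponds to one token before the linear projection. The sequence length grows as (H × W)/P², and this length is what makes full self-attention expensive for VFR images.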

Figure 1.

(a) Illustration of the schematic flowchart of the Transformer. (b) Illustration of a standard transformer block.

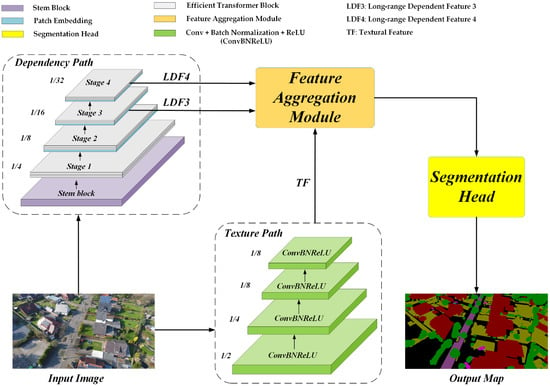

Inspired by the advancement of the Transformer, in this paper, we propose a Bilateral Awareness Network (BANet) for accurate semantic segmentation of VFR urban scene images. Unlike traditional single-path convolutional neural networks, BANet addresses the challenging urban scene segmentation task by constructing two feature extraction paths, as illustrated in Figure 2. Specifically, a texture path using stacked convolution layers is developed to extract the textural feature. Meanwhile, a dependency path using Transformer blocks is established to capture the long-range dependent feature. To leverage the benefits provided by the two features, we design a feature aggregation module (FAM), which introduces the linear attention mechanism to reduce the fitting residual of fused features, thereby strengthening the generalization capability of the network. Experimental results on three large-scale urban scene image segmentation datasets demonstrate the effectiveness of our BANet. In addition, the bilateral structure could provide a unified solution for semantic segmentation, object detection, and change detection, which could further benefit deep learning techniques in the remote sensing domain. To sum up, the main contributions of this paper are as follows:

Figure 2.

The overall architecture of Bilateral Awareness Network (BANet).

- (1) A novel bilateral structure composed of convolution layers and transformer blocks is proposed for understanding and labelling very fine resolution urban scene images. It provides a new perspective for capturing textural information and long-range dependencies simultaneously in a single network.

- (2) A feature aggregation module is developed to fuse the textural feature and long-range dependent feature extracted by the bilateral structure. It employs linear attention to reduce the fitting residual and greatly improves the generalization of fused features.

The remainder of this paper is organized as follows. The architecture of BANet and its components are detailed in Section 2. Experimental comparisons on three semantic segmentation datasets (UAVid, ISPRS Vaihingen, and Potsdam) are provided in Section 3. A comprehensive discussion is presented in Section 4. Finally, conclusions are drawn in Section 5.

2. Bilateral Awareness Network

2.1. Overview

The overall architecture of the Bilateral Awareness Network (BANet) is exhibited in Figure 2, where the input image is fed into the dependency path and texture path simultaneously.

The dependency path employs a stem block and four transformer stages (i.e., Stage 1–4) to extract long-range dependent features. Each stage consists of two efficient transformer blocks (ETB). In particular, Stage 2, Stage 3, and Stage 4 additionally involve patch embedding (PE) operations. The dependency path generates two long-range dependent features (i.e., LDF3 and LDF4).

The texture path deploys four convolution layers to capture the textural feature (TF), while each convolutional layer is equipped with batch normalization (BN) [51] and ReLU activation function [52]. The downsampling factor is set as 8 for the texture path to preserve spatial details.

Since the outputs of the dependency path and the texture path lie in disparate domains, the FAM is proposed to merge them effectively. Afterwards, a segmentation head module is attached to convert the fused feature into a segmentation map.

2.2. Dependency Path

The dependency path is constructed with ResT-Lite [53] pretrained on ImageNet. As an efficient vision transformer, ResT-Lite is suitable for urban scene interpretation due to its balanced trade-off between segmentation accuracy and computational complexity. The basic modules of ResT-Lite are the stem block, the patch embedding, and the efficient transformer block.

Stem block: The stem block aims to shrink the height and width dimensions and expand the channel dimension. To capture low-level information effectively, it introduces three 3 × 3 convolution layers with strides of [2, 1, 2]. The first two convolution layers are followed by BN and ReLU. After the stem block, the spatial resolution is downscaled by a factor of 4, and the channel dimension is extended from 3 to 64.

Patch embedding: The patch embedding aims to downsample the feature map for hierarchical feature representation. The output for each patch embedding can be formalized as

where represents a convolution layer with a kernel size of s+1 and a stride of s. Here, s is set as 2. DWConv denotes a 3 × 3 depth-wise convolution [54] with a stride of 1.
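The downsampling arithmetic of the stem block and the patch embeddings can be checked with the standard convolution output-size formula, floor((H + 2p − k)/s) + 1. This is a minimal sketch under stated assumptions. The padding of 1 for the 3 × 3 kernels is assumed, because the text does not give it. Stages 2–4 are assumed to each begin with one patch embedding (kernel s+1 = 3, stride s = 2). The stride-1 depth-wise convolution and the transformer blocks are treated as size-preserving.

```python
def conv_out(size: int, kernel: int, stride: int, padding: int) -> int:
    """Output length of a convolution along one spatial axis."""
    return (size + 2 * padding - kernel) // stride + 1


def dependency_path_sizes(h: int) -> list:
    """Spatial size after the stem block (Stage 1) and after Stages 2-4."""
    # Stem: three 3x3 convolutions with strides [2, 1, 2] (padding 1 assumed).
    for stride in (2, 1, 2):
        h = conv_out(h, kernel=3, stride=stride, padding=1)
    sizes = [h]  # Stage 1 has no patch embedding, so it keeps the stem resolution
    # Stages 2-4: patch embedding with kernel s + 1 and stride s, where s = 2.
    s = 2
    for _ in range(3):
        h = conv_out(h, kernel=s + 1, stride=s, padding=1)
        sizes.append(h)
    return sizes


print(dependency_path_sizes(512))  # [128, 64, 32, 16] -> 1/4, 1/8, 1/16, 1/32
```

With a 512 × 512 input, the stage outputs sit at 1/4, 1/8, 1/16, and 1/32 of the input resolution. This makes the scale factor of 2 between the Stage 3 and Stage 4 outputs explicit.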

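Efficient transformer block: Each efficient transformer block is composed of efficient multi-head self-attention (EMSA) [53], an MLP, and LN. The output of each efficient transformer block can be formalized as

$$ \hat{x} = x + \mathrm{EMSA}(\mathrm{LN}(x)) $$
$$ y = \hat{x} + \mathrm{MLP}(\mathrm{LN}(\hat{x})) $$

where x and y denote the input and output of the block, respectively.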

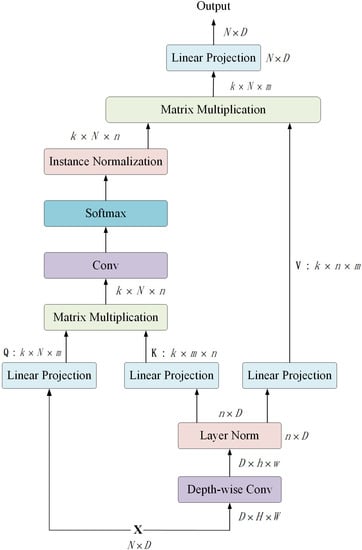
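The two residual updates of an efficient transformer block can be sketched in plain Python on a list of token vectors. This is a minimal sketch that assumes a pre-norm arrangement (LN before each sub-layer). The EMSA and MLP sub-layers are passed in as hypothetical callables, and the layer norm omits its learnable scale and shift.

```python
import math
from typing import Callable, List

Tokens = List[List[float]]  # n tokens, each a vector of c channels


def layer_norm(x: Tokens, eps: float = 1e-6) -> Tokens:
    """Normalize every token to zero mean and unit variance (no affine terms)."""
    out = []
    for tok in x:
        mean = sum(tok) / len(tok)
        var = sum((v - mean) ** 2 for v in tok) / len(tok)
        out.append([(v - mean) / math.sqrt(var + eps) for v in tok])
    return out


def add(a: Tokens, b: Tokens) -> Tokens:
    """Element-wise residual addition."""
    return [[u + v for u, v in zip(ra, rb)] for ra, rb in zip(a, b)]


def efficient_transformer_block(x: Tokens,
                                emsa: Callable[[Tokens], Tokens],
                                mlp: Callable[[Tokens], Tokens]) -> Tokens:
    """Pre-norm block: x' = x + EMSA(LN(x)); y = x' + MLP(LN(x'))."""
    x_mid = add(x, emsa(layer_norm(x)))
    return add(x_mid, mlp(layer_norm(x_mid)))


# Usage with placeholder sub-layers: identity "attention" and a doubling "MLP".
tokens = [[1.0, 2.0, 3.0], [0.0, 0.0, 6.0]]
y = efficient_transformer_block(tokens,
                                emsa=lambda t: t,
                                mlp=lambda t: [[2 * v for v in r] for r in t])
```

The residual connections keep the token shape fixed. If both sub-layers output zeros, the block reduces to the identity.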

The EMSA, a revised self-attention module for computer vision based on MHSA, is the main module of ETB. As illustrated in Figure 3, the detailed steps of EMSA are as follows:

Figure 3.

The flowchart of efficient multi-head self-attention.

- (1) EMSA obtains three vectors, Q, K, and V, from the input vector x. Unlike standard multi-head self-attention, EMSA first deploys a depth-wise convolution with a kernel size of r+1 and a stride of r to decrease the resolution of K and V, thereby reducing computation and memory. For the four transformer stages, r is set as 8, 4, 2, and 1, respectively.

- (2) To be specific, the input vector $x \in \mathbb{R}^{n \times c}$ is reshaped to a new vector with a shape of $c \times h \times w$, where $n = h \times w$. After the depth-wise convolution, the new vector is reshaped to $c \times h' \times w'$. Here, $h' = h/r$ and $w' = w/r$. Then, the new vector is recovered to $n' \times c$ as the input of LN, where $n' = h' \times w'$. Thus, the initial shape of K and V is $n' \times c$, while the initial shape of Q is $n \times c$.

- (3) The three vectors are fed into three linear projections and reshaped to $k \times n \times m$, $k \times m \times n'$, and $k \times n' \times m$, respectively. Here, k denotes the number of heads, m denotes the head dimension, and $m = c/k$.

- (4) A matrix multiplication operation is applied on Q and K to generate an attention map with the shape of $k \times n \times n'$.

- (5) The attention map is further processed by a convolution layer, a Softmax activation function, and an Instance Normalization [55] operation.

- (6) A matrix multiplication operation is applied on the processed attention map and V. Finally, a linear projection is utilized to generate the output vector. The formalization of EMSA is given in Equation (5):

$$ \mathrm{EMSA}(Q, K, V) = \mathrm{LP}\left(\mathrm{IN}\left(\mathrm{Softmax}\left(\mathrm{Conv}\left(\frac{QK^{T}}{\sqrt{m}}\right)\right)\right)V\right) \tag{5} $$

Here, Conv is a standard 1 × 1 convolution with a stride of 1. IN denotes an instance normalization operation. LP represents a linear projection that keeps the channel dimension unchanged.
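The effect of the reduction ratio r on the attention map can be illustrated with a small shape calculator that follows steps (1)–(4). This is a sketch under stated assumptions. The spatial size is assumed divisible by r, and the channel dimension c divisible by the number of heads k. The channel width and head count in the usage example are hypothetical values, not the ResT-Lite configuration.

```python
from dataclasses import dataclass


@dataclass
class EmsaShapes:
    q: tuple          # (k, n, m)
    k: tuple          # (k, m, n')
    v: tuple          # (k, n', m)
    attention: tuple  # (k, n, n')


def emsa_shapes(h: int, w: int, c: int, heads: int, r: int) -> EmsaShapes:
    """Tensor shapes inside EMSA for an h x w feature map with c channels."""
    if h % r or w % r or c % heads:
        raise ValueError("expects h, w divisible by r and c divisible by heads")
    n = h * w                      # number of query tokens
    n_red = (h // r) * (w // r)    # tokens left for K and V after the stride-r DWConv
    m = c // heads                 # head dimension
    return EmsaShapes(q=(heads, n, m), k=(heads, m, n_red),
                      v=(heads, n_red, m), attention=(heads, n, n_red))


# Hypothetical setting: a 128 x 128 token grid, 64 channels, 1 head, r = 8.
s = emsa_shapes(128, 128, 64, heads=1, r=8)
full = (128 * 128) ** 2                      # entries of an unreduced n x n map
reduced = s.attention[1] * s.attention[2]    # entries of the n x n' map
print(s.attention, full // reduced)          # (1, 16384, 256) 64
```

The attention map shrinks by a factor of r² per head. For r = 8, that is 64 times fewer entries than full self-attention over the same tokens.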

2.3. Texture Path

The texture path is a lightweight convolutional network consisting of four convolutional layers that capture textural information. The output of the texture path can be formalized as

$$ \mathrm{TF} = T_4(T_3(T_2(T_1(I)))) $$

Here, I denotes the input image, and T represents a combined function consisting of a convolutional layer, a batch normalization operation, and a ReLU activation. The convolutional layer of $T_1$ has a kernel size of 7 and a stride of 2, which expands the channel dimension from 3 to 64. For $T_2$ and $T_3$, the kernel size and stride are 3 and 2, respectively, and the channel dimension is kept as 64. For $T_4$, the convolutional layer is a standard 1 × 1 convolution with a stride of 1, expanding the channel dimension from 64 to 128. Thus, the output textural feature is downscaled 8 times and has a channel dimension of 128.
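The output size and channel width of the texture path can be traced layer by layer. This sketch assumes "same"-style paddings (3 for the 7 × 7 kernel, 1 for the 3 × 3 kernels, 0 for the 1 × 1 kernel), which the text does not state. The layer table mirrors T1–T4 as described above.

```python
# (kernel, stride, padding, out_channels) for T1-T4; the paddings are assumptions.
TEXTURE_LAYERS = [
    (7, 2, 3, 64),   # T1: 7x7, stride 2, 3 -> 64 channels
    (3, 2, 1, 64),   # T2: 3x3, stride 2, channels kept at 64
    (3, 2, 1, 64),   # T3: 3x3, stride 2, channels kept at 64
    (1, 1, 0, 128),  # T4: 1x1, stride 1, 64 -> 128 channels
]


def texture_path_shape(h: int, w: int, c: int = 3):
    """Return (channels, height, width) of TF = T4(T3(T2(T1(I))))."""
    for kernel, stride, pad, out_c in TEXTURE_LAYERS:
        # BN and ReLU keep the shape, so only the convolution changes it.
        h = (h + 2 * pad - kernel) // stride + 1
        w = (w + 2 * pad - kernel) // stride + 1
        c = out_c
    return c, h, w


print(texture_path_shape(512, 512))  # (128, 64, 64): 8x downsampling, 128 channels
```

Because only T1–T3 use stride 2, the texture path stops at 1/8 resolution. This is coarser than the stem but finer than the Stage 3 and Stage 4 outputs of the dependency path.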

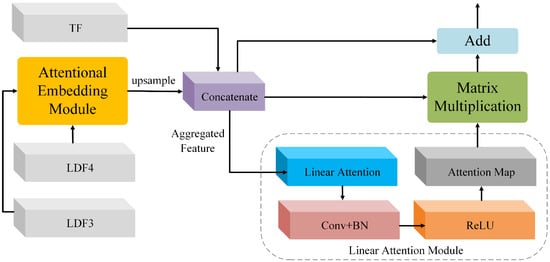

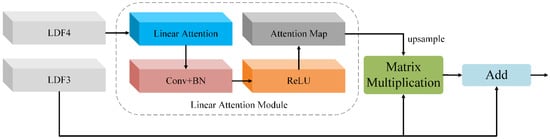

2.4. Feature Aggregation Module

The FAM aims to comprehensively leverage the benefits of the dependent features and texture features for powerful feature representation. As shown in Figure 4, the inputs of the FAM are LDF3, LDF4, and TF. To fuse these features, we first employ an attentional embedding module (AEM) to merge LDF3 and LDF4. Thereafter, the merged feature is upsampled and concatenated with TF to obtain the aggregated feature (AF). Finally, the linear attention module (LAM) is deployed to reduce the fitting residual of AF. The pipeline of FAM can be denoted as

$$ \mathrm{AF} = C\left(U\left(\mathrm{AEM}(\mathrm{LDF3}, \mathrm{LDF4})\right), \mathrm{TF}\right) $$
$$ \mathrm{FAM}(\mathrm{LDF3}, \mathrm{LDF4}, \mathrm{TF}) = \mathrm{LAM}(\mathrm{AF}) $$

Figure 4.

The feature aggregation module.

Here, C represents the concatenation function, and U denotes an upsampling operation with a scale factor of 2. The details of the LAM and AEM are as follows.
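The U and C operations in the FAM pipeline can be illustrated on feature maps stored as nested lists indexed `[channel][row][col]`. This is a minimal sketch with two assumptions: U uses nearest-neighbour upsampling (the mode stated for the AEM), and C concatenates along the channel axis. The function names are hypothetical.

```python
from typing import List

FeatureMap = List[List[List[float]]]  # indexed as fmap[channel][row][col]


def upsample_nearest_x2(fmap: FeatureMap) -> FeatureMap:
    """U: repeat every pixel twice along both spatial axes."""
    out = []
    for channel in fmap:
        rows = []
        for row in channel:
            wide = [v for v in row for _ in range(2)]  # duplicate each column
            rows.append(wide)
            rows.append(list(wide))                    # duplicate each row
        out.append(rows)
    return out


def concat_channels(a: FeatureMap, b: FeatureMap) -> FeatureMap:
    """C: stack two maps with equal height and width along the channel axis."""
    if (len(a[0]), len(a[0][0])) != (len(b[0]), len(b[0][0])):
        raise ValueError("spatial sizes must match before concatenation")
    return a + b


# A 1-channel 2x2 merged map (1/16 scale) joins a 2-channel 4x4 map (1/8 scale).
merged = [[[1.0, 2.0], [3.0, 4.0]]]
tf = [[[0.0] * 4 for _ in range(4)] for _ in range(2)]
af = concat_channels(upsample_nearest_x2(merged), tf)
print(len(af), len(af[0]), len(af[0][0]))  # 3 4 4
```

The scale factor of 2 is what aligns the merged 1/16-scale feature with the 1/8-scale textural feature before concatenation.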

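Linear attention module: The conventional dot-product attention mechanism can be defined as

$$ D(Q, K, V) = \rho\left(QK^{T}\right)V \tag{9} $$

where the query matrix Q, the key matrix K, and the value matrix V are generated by the corresponding standard 1 × 1 convolutional layers with a stride of 1, and $\rho(\cdot)$ indicates applying the softmax function along each row of the matrix $QK^{T}$. The term $\rho(QK^{T})$ models the similarities between each pair of pixels of the input, thoroughly extracting the global contextual information contained in the features. However, as $Q \in \mathbb{R}^{N \times D_k}$ and $K^{T} \in \mathbb{R}^{D_k \times N}$ (N being the number of pixels and $D_k$ the query/key dimension), the product between Q and $K^{T}$ belongs to $\mathbb{R}^{N \times N}$, which leads to $O(N^2)$ memory and computation complexity. Therefore, the high resource demand of dot-product attention crucially limits its application to large inputs. Under softmax normalization, the i-th row of the result matrix generated by the dot-product attention module according to Equation (9) can be written as

$$ D(Q, K, V)_i = \frac{\sum_{j=1}^{N} e^{q_i^{T}k_j}\, v_j}{\sum_{j=1}^{N} e^{q_i^{T}k_j}} $$

where $q_i$ is the i-th row of Q, and $k_j$ and $v_j$ are the j-th rows of K and V, written as column vectors.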
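The dot-product attention described above can be computed row by row in plain Python. The sketch is illustrative only: Q, K, and V are small hypothetical lists of row vectors. It makes the N × N cost explicit, since every query is scored against every key.

```python
import math
from typing import List

Matrix = List[List[float]]  # a list of row vectors


def dot(a: List[float], b: List[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """D(Q, K, V) = rho(Q K^T) V with a row-wise softmax rho."""
    out = []
    for q_i in q:                                  # N queries ...
        scores = [dot(q_i, k_j) for k_j in k]      # ... each scored against N keys
        peak = max(scores)                         # subtract the max for numerical stability
        weights = [math.exp(s - peak) for s in scores]
        total = sum(weights)
        out.append([sum(w * v_j[d] for w, v_j in zip(weights, v)) / total
                    for d in range(len(v[0]))])
    return out


Q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
V = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
print(softmax_attention(Q, K, V))
```

Each output row is a convex combination of the rows of V. The inner loop over all keys for every query is the O(N²) cost that the linear attention below avoids.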

In our previous work on the linear attention (LA) mechanism [3], we design the LA based on first-order approximation of Taylor expansion on Equation (11):

where norm is utilized to ensure . Then, Equation (11) can be rewritten as

and be simplified as

The above equation can be transformed in a vectorized form as

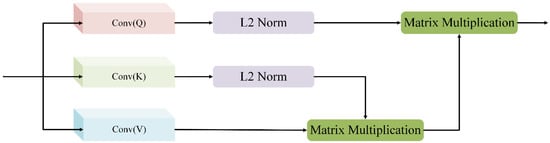

As and can be calculated and reused for every query, time and memory complexity of the proposed LA based on Equation (15) is , while the illustration can be seen in Figure 5.
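A plain-Python sketch of the linear attention (LA) computation follows. It assumes Q, K, and V are given as lists of row vectors and that no query or key is the zero vector. `linear_attention` computes the shared sums once, as in the vectorized form. `taylor_attention_quadratic` evaluates the approximated weights 1 + q̂ᵢᵀk̂ⱼ pair by pair, so the two can be compared. Both function names are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]  # a list of row vectors


def l2_normalize(rows: Matrix) -> Matrix:
    # Assumes no row is the zero vector.
    return [[x / math.sqrt(sum(y * y for y in r)) for x in r] for r in rows]


def linear_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """O(N) form: (sum_j v_j + q_i^T (K^T V)) / (N + q_i^T sum_j k_j)."""
    qn, kn = l2_normalize(q), l2_normalize(k)
    n, dk, dv = len(kn), len(kn[0]), len(v[0])
    # Shared terms, computed once and reused for every query.
    v_sum = [sum(v[j][c] for j in range(n)) for c in range(dv)]
    k_sum = [sum(kn[j][a] for j in range(n)) for a in range(dk)]
    ktv = [[sum(kn[j][a] * v[j][c] for j in range(n)) for c in range(dv)]
           for a in range(dk)]  # the dk x dv matrix K^T V
    out = []
    for q_i in qn:
        denom = n + sum(q_i[a] * k_sum[a] for a in range(dk))
        out.append([(v_sum[c] + sum(q_i[a] * ktv[a][c] for a in range(dk))) / denom
                    for c in range(dv)])
    return out


def taylor_attention_quadratic(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Reference O(N^2) form with weights 1 + q_i^T k_j on normalized vectors."""
    qn, kn = l2_normalize(q), l2_normalize(k)
    out = []
    for q_i in qn:
        w = [1.0 + sum(a * b for a, b in zip(q_i, k_j)) for k_j in kn]  # each >= 0
        out.append([sum(w_j * v_j[c] for w_j, v_j in zip(w, v)) / sum(w)
                    for c in range(len(v[0]))])
    return out


Q = [[1.0, 0.0], [0.0, 2.0], [1.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.5]]
V = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
fast, slow = linear_attention(Q, K, V), taylor_attention_quadratic(Q, K, V)
```

The two functions return the same values up to floating-point error. Only the linear version avoids forming the N × N weight matrix: its cost grows with N · D_k · D_v rather than N².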

Figure 5.

The linear attention.

In the FAM, we first employ LA to enhance the spatial relationships of AF, thereby suppressing the fitting residual. Then, a convolutional layer with BN and ReLU is deployed to obtain the attention map. Finally, we apply a matrix multiplication operation between AF and the attention map to obtain the attentional AF. The pipeline of LAM is defined as

Here, Conv represents a standard convolution with a stride of 1.

Attentional embedding module: The AEM adopts the LAM to enhance the spatial relationships of LDF4. Then, we apply a matrix multiplication operation between the upsampled attention map of LDF4 and LDF3 to produce the attentional LDF3. Finally, we use an addition operation to fuse the original LDF3 and the attentional LDF3. The pipeline of AEM is illustrated in Figure 6 and can be formalized as

where U denotes the nearest upsampling operation with a scale factor of 2.

Figure 6.

The attentional embedding module.

Capitalizing on the benefits of feature fusion, the final segmentation feature is rich in both long-range dependency and textural information for precise semantic segmentation of urban scene images. In addition, the linear attention reduces the fitting residual, strengthening the generalization of the network.

3. Experiments

In this section, experiments are conducted on three publicly available datasets to evaluate the effectiveness of the proposed BANet. On the ISPRS Vaihingen and Potsdam datasets (http://www2.isprs.org/commissions/comm3/wg4/semantic-labeling.html, accessed on 20 October 2020), we compare our model not only with state-of-the-art models designed for remote sensing images but also with those proposed for natural images. Further, the UAVid dataset [56] is utilized to demonstrate the advantages of our method. Please note that, since the backbone of the dependency path of our BANet is ResT-Lite with 10.49 M parameters, ResNet-18 with 11.7 M parameters is correspondingly selected as the backbone for the comparative methods to ensure a fair comparison.

3.1. Experiments on the ISPRS Vaihingen and Potsdam Datasets

3.1.1. Datasets

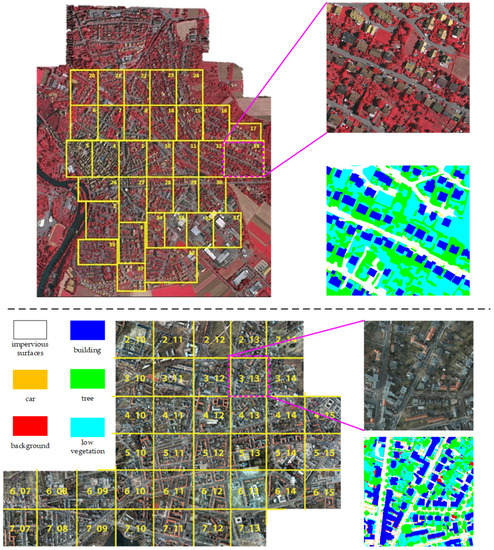

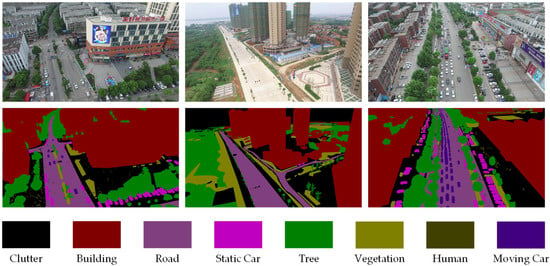

Vaihingen: The Vaihingen dataset contains 33 VFR images with an average size of 2494 × 2064 pixels. The ground sampling distance (GSD) of the tiles is 9 cm. We use tiles 2, 4, 6, 8, 10, 12, 14, 16, 20, 22, 24, 27, 29, 31, 33, 35, and 38 for testing, tile 30 for validation, and the remaining 15 images for training. Please note that we use only the near-infrared, red, and green channels in our experiments. Example images and labels can be seen in the top part of Figure 7.

Figure 7.

Example images and labels from the ISPRS Vaihingen dataset (top part) and Potsdam dataset (bottom part).

Potsdam: The Potsdam dataset contains 38 fine-resolution images of urban scenes, each 6000 × 6000 pixels in size with a 5 cm GSD. We use IDs 2_13, 2_14, 3_13, 3_14, 4_13, 4_14, 4_15, 5_13, 5_14, 5_15, 6_13, 6_14, 6_15, and 7_13 for testing, ID 2_10 for validation, and the remaining 22 images for training, excluding image 7_10, which has erroneous annotations. Only the red, green, and blue channels are used in our experiments. Example images and labels can be seen in the bottom part of Figure 7.

3.1.2. Training Setting

For optimizing the network, Adam is used as the optimizer with a learning rate of 0.0003 and a batch size of 8. The images and their corresponding labels are cropped into 512 × 512 patches and augmented by rotating, resizing, and flipping during training. All experiments are implemented on a single NVIDIA RTX 3090 GPU with 24 GB of memory. The cross-entropy loss function is utilized to measure the disparity between the predicted segmentation maps and the ground reference. Training is stopped if the OA on the validation set does not increase for more than 10 epochs, with a maximum of 100 epochs.
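The stopping rule can be sketched as a small function over per-epoch validation OA values. This is an illustrative sketch only. It reads "does not increase for more than 10 epochs" as stopping once more than 10 consecutive epochs pass without a new best OA; the exact off-by-one convention of the original training code is an assumption. The OA curve in the example is synthetic.

```python
from typing import Iterable


def epochs_trained(val_oa: Iterable[float], patience: int = 10, max_epochs: int = 100) -> int:
    """Number of epochs run before early stopping on validation OA.

    Training stops once the validation OA has not improved on its best value
    for more than `patience` consecutive epochs, or after `max_epochs` epochs.
    """
    best = float("-inf")
    since_best = 0
    epoch = 0
    for epoch, oa in enumerate(val_oa, start=1):
        if oa > best:
            best, since_best = oa, 0
        else:
            since_best += 1
        if since_best > patience or epoch >= max_epochs:
            break
    return epoch


# Synthetic curve: OA improves for 5 epochs, then plateaus.
curve = [0.80, 0.84, 0.86, 0.87, 0.88] + [0.879] * 50
print(epochs_trained(curve))  # 16: best at epoch 5, then 11 epochs without improvement
```

Tracking the best value seen so far, rather than the previous epoch's value, keeps small oscillations below the peak from resetting the counter.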

3.1.3. Evaluation Metrics

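The performance of BANet on the ISPRS Vaihingen and Potsdam datasets is evaluated using the overall accuracy (OA), the mean Intersection over Union (mIoU), and the F1 score (F1), which are computed on the accumulated confusion matrix:

$$ \mathrm{OA} = \frac{\sum_{k=1}^{K} TP_k}{\sum_{k=1}^{K} \left(TP_k + FN_k\right)} $$
$$ \mathrm{mIoU} = \frac{1}{K}\sum_{k=1}^{K} \frac{TP_k}{TP_k + FP_k + FN_k} $$
$$ \mathrm{precision}_k = \frac{TP_k}{TP_k + FP_k}, \quad \mathrm{recall}_k = \frac{TP_k}{TP_k + FN_k} $$
$$ F1_k = \frac{2 \times \mathrm{precision}_k \times \mathrm{recall}_k}{\mathrm{precision}_k + \mathrm{recall}_k} $$

where $TP_k$, $FP_k$, and $FN_k$ indicate the true positives, false positives, and false negatives, respectively, for the object class indexed as k, and K is the number of classes. OA is calculated for all categories including the background.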
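The metrics can be computed from an accumulated confusion matrix as sketched below. This is a minimal plain-Python sketch. It assumes rows index the ground-reference class, columns index the predicted class, and every class occurs at least once. The 3-class matrix in the example is made up for illustration.

```python
from typing import Dict, List


def segmentation_metrics(cm: List[List[int]]) -> Dict[str, float]:
    """OA, mIoU, and mean F1 from a K x K confusion matrix.

    Rows index the ground-reference class and columns the predicted class;
    every class is assumed to occur at least once (no zero denominators).
    """
    k = len(cm)
    total = sum(sum(row) for row in cm)
    tp = [cm[i][i] for i in range(k)]
    fp = [sum(cm[r][i] for r in range(k)) - tp[i] for i in range(k)]  # predicted as i, but wrong
    fn = [sum(cm[i]) - tp[i] for i in range(k)]                       # reference i, missed
    iou = [tp[i] / (tp[i] + fp[i] + fn[i]) for i in range(k)]
    # 2PR / (P + R) simplifies to 2TP / (2TP + FP + FN).
    f1 = [2 * tp[i] / (2 * tp[i] + fp[i] + fn[i]) for i in range(k)]
    return {"OA": sum(tp) / total, "mIoU": sum(iou) / k, "mF1": sum(f1) / k}


# Made-up 3-class confusion matrix accumulated over all test pixels.
cm = [[50, 2, 3],
      [4, 30, 1],
      [0, 5, 5]]
m = segmentation_metrics(cm)
print(m["OA"])  # 0.85
```

Accumulating the confusion matrix over the whole test set before computing the ratios, instead of averaging per-image scores, keeps small images from being over-weighted.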

3.1.4. Experimental Results

A detailed comparison between our BANet and other architectures, including BiSeNet [57], FANet [58], MAResU-Net [3], EaNet [40], SwiftNet [59], and ShelfNet [60], is given in Table 1 and Table 2. The comparison covers the F1-score for each category, the mean F1-score, the OA, and the mIoU on the Vaihingen and Potsdam test sets. As can be observed from the tables, the proposed BANet outperforms the comparative methods, achieving the highest OA of 90.48% and mIoU of 81.35% on the Vaihingen dataset, while the figures for the Potsdam dataset are 91.06% and 86.25%, respectively. Specifically, on the Vaihingen dataset, the proposed BANet brings an improvement of more than 0.4% in OA and 1.7% in mIoU compared with the second-best method, while the improvements on the Potsdam dataset are more than 1.1% and 1.8%, respectively. In particular, cars are difficult to recognize in the Vaihingen dataset because they are relatively small objects. Even so, the proposed BANet achieves an 86.76% F1-score for the Car class, exceeding the second-best method by more than 5.5%.

Table 1.

The experimental results on the Vaihingen dataset.

Table 2.

The experimental results on the Potsdam dataset.

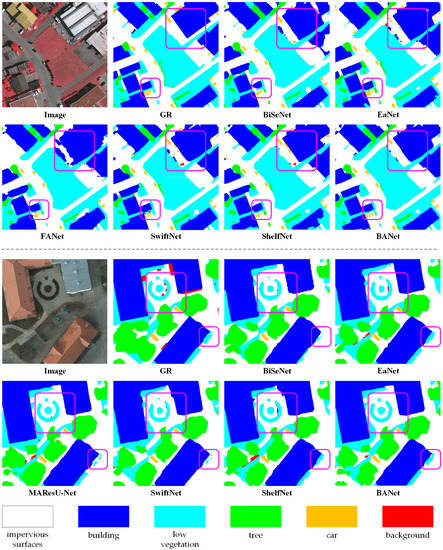

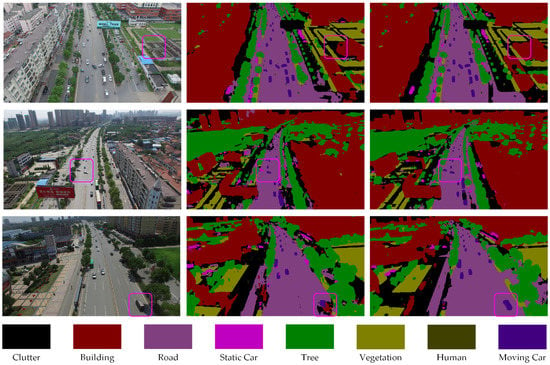

To qualitatively validate the effectiveness, we visualize the segmentation maps generated by our BANet and the comparative methods in Figure 8. Due to their limited receptive fields, BiSeNet, EaNet, and SwiftNet classify a specific pixel by considering only a few adjacent areas, leading to fragmented maps and confusion between objects. The direct utilization of the attention mechanism (i.e., MAResU-Net) and the structure of multiple encoder-decoders (i.e., ShelfNet) bring certain improvements. However, the issue of the receptive field is still not entirely resolved. By contrast, we construct the dependency path of our BANet on an attention-based backbone, i.e., ResT, to capture long-range global relations, thereby tackling the limitation of the receptive field. Furthermore, our BANet is equipped with a texture path built on convolution operations to utilize the spatial detail information in feature maps. As shown in Figure 8, the complex circular contour of the Low vegetation is preserved completely by our BANet. In addition, the outlines of the Building class generated by our BANet are smoother than those obtained by the comparative methods.

Figure 8.

The experimental results on the ISPRS Vaihingen dataset (top part) and Potsdam dataset (bottom part). GR represents Ground Reference.

3.2. Experiments on the UAVid Dataset

3.2.1. Dataset

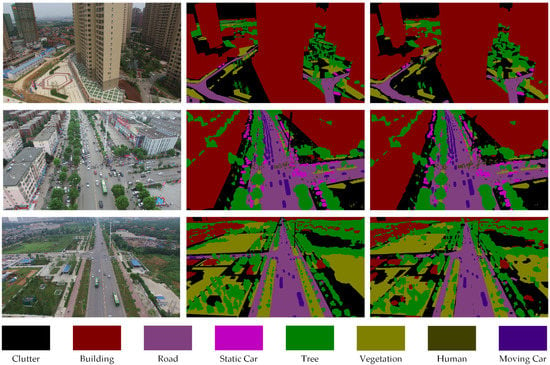

As a fine-resolution Unmanned Aerial Vehicle (UAV) semantic segmentation dataset, the UAVid dataset (https://uavid.nl/, accessed on 10 May 2021) focuses on urban street scenes with a 3840 × 2160 resolution. UAVid is a challenging benchmark due to the high image resolution, the large-scale variation, and the complexity of the scenes. To be specific, the dataset contains 420 images, of which 200 are for training, 70 for validation, and the remaining 150 for testing. Example images and labels can be seen in Figure 9.

Figure 9.

Example images and labels from the UAVid dataset.

We adopt the same hyperparameters and data augmentation as those used for the ISPRS datasets, except that the batch size is set to 4 and the patch size to 1024 × 1024 during training.

3.2.2. Evaluation Metrics

For the UAVid dataset, the performance is assessed by the official server based on the intersection-over-union (IoU) metric:

$$ \mathrm{IoU}_k = \frac{TP_k}{TP_k + FP_k + FN_k} $$

where $TP_k$, $FP_k$, and $FN_k$ indicate the true positives, false positives, and false negatives, respectively, for the object class indexed as k.

3.2.3. Experimental Results

Quantitative comparisons with MSD [56], Fast-SCNN [61], BiSeNet, SwiftNet, and ShelfNet are reported in Table 3. As can be seen, the proposed BANet achieves the best IoU score on five out of eight classes and the best mIoU, with a 3% gain over the second-best BiSeNet. Qualitative results on the UAVid validation set and test set are shown in Figure 10 and Figure 11, respectively. Compared with the benchmark MSD, which exhibits obvious local and global inconsistencies, the proposed BANet can effectively capture the cues to scene semantics. For example, in the second row of Figure 11, the cars in the pink box are all moving on the road. However, MSD identifies the left car, which is crossing the street, as a static car. In contrast, our BANet successfully recognizes all moving cars.

Table 3.

The experimental results on the UAVid dataset.

Figure 10.

The experimental results on the UAVid validation set. The first column illustrates the input RGB images; the second column depicts the ground reference, and the third column shows the predictions of our BANet.

Figure 11.

The experimental results on the UAVid test set. The first column illustrates the input RGB images; the second column depicts the outputs of MSD, and the third column shows the predictions of our BANet.

4. Discussion

4.1. Ablation Study

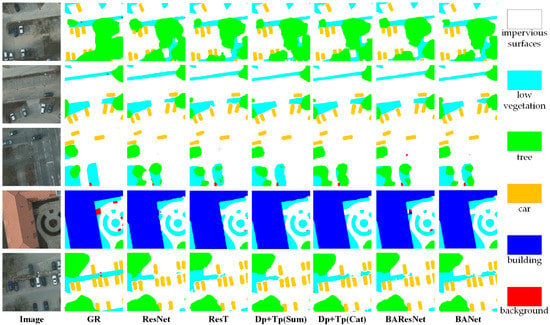

In this part, we conduct extensive ablation experiments on the ISPRS Potsdam dataset to verify the effectiveness of the components in the proposed BANet. The experimental settings and quantitative comparisons are listed in Table 4. The results are reported as the average value and the corresponding deviation over three-fold experiments. Qualitative comparisons for the ablation study can be seen in Figure 12.

Table 4.

The experimental results of the ablation study.

Figure 12.

The ablation study on the ISPRS Potsdam dataset. GR represents Ground Reference.

Baseline: We select two baselines for the ablation experiments: the dependency path with ResNet-18 (denoted as ResNet) as the backbone and the dependency path with ResT-Lite (denoted as ResT) as the backbone. The feature maps generated by the dependency path are directly upsampled to restore the input shape for the final segmentation.

Ablation for the texture path: As rich spatial details are important for segmentation, the texture path built on the convolution operation is designed in our BANet to preserve spatial texture. Table 4 shows that even simple fusion schemes such as summation (denoted as Dp+Tp(Sum)) and concatenation (denoted as Dp+Tp(Cat)) for merging the texture information can improve OA by at least 0.2%.

Ablation for feature aggregation module: Given that the features obtained by the dependency path and the texture path lie in different domains, neither summation nor concatenation is the optimal feature fusion scheme. As shown in Table 4, the improvement of more than 0.5% in OA achieved by our BANet over Dp+Tp(Sum) and Dp+Tp(Cat) demonstrates the validity of the proposed feature aggregation module.

Ablation for ResT-Lite: Since a novel transformer-based backbone, i.e., ResT, is introduced in our BANet, it is valuable to compare the accuracy of ResNet and ResT. As illustrated in Table 4, replacing the backbone in the dependency path brings an improvement of more than 1% in OA. In addition, we substitute the backbone in our BANet with ResNet-18 (denoted as BAResNet) to further evaluate the performance. As can be seen in Table 4, the 1.2% gap in OA demonstrates the effectiveness of ResT-Lite. Note that the number of parameters for BAResNet is 14.77 million (59.0 MB weights file), while the figure for BANet is 15.44 million (56.4 MB weights file). The inference speed of BAResNet is 73.2 FPS on a single mid-range GPU card, i.e., a 1660Ti, for 512 × 512 input images, while the speed of BANet is 33.2 FPS; both satisfy the requirement of real-time (≥30 FPS) scenarios. Please note that NVIDIA GPUs provide specialized optimization for CNNs, whereas comparable optimization for Transformers is not yet available. Therefore, the speed comparison is not completely fair.

4.2. Application Scenarios

The main application scenario of our method is urban scene segmentation using remotely sensed images captured by satellite sensors, aerial sensors, and UAV drones. The proposed Bilateral Awareness Network, which consists of a texture path, a dependency path, and a feature aggregation module, provides a unified framework for semantic segmentation, object detection, and change detection. Moreover, our model considers both accuracy and complexity, showing considerable potential for illegal land use detection, real-time traffic monitoring, and urban environmental assessment.

In the future, we will continue to study the hybrid structure of convolution and Transformer and apply it to a wider range of urban applications.

5. Conclusions

This paper proposes a Bilateral Awareness Network for semantic segmentation of very fine resolution urban scene images. Specifically, our BANet has two branches: a dependency path built on the Transformer backbone to capture the long-range relationships, and a texture path constructed on the convolution operation to exploit the fine-grained details in VFR images. In particular, we further design an attentional feature aggregation module to fuse the global relationship information captured by the dependency path and the spatial texture information generated by the texture path. Extensive experiments on the ISPRS Vaihingen dataset, ISPRS Potsdam dataset, and UAVid dataset demonstrate the effectiveness of the proposed BANet. As a novel exploration of combining the Transformer and convolution in a bilateral structure, we hope this work could inspire practitioners and researchers engaged in this area to explore more possibilities of the Transformer in the remote sensing domain.

Author Contributions

This work was conducted in collaboration with all authors. D.W. and T.W. defined the research theme. X.M. supervised the research work and provided experimental facilities. L.W. and R.L. designed the semantic segmentation model and conducted the experiments. C.D. checked the experimental results. This manuscript was written by L.W. and R.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (NSFC) under grant number 41971352 and the National Key Research and Development Program of China under grant number 2018YFB0505003.

Data Availability Statement

We are grateful to ISPRS for providing the open benchmarks for 2D remote sensing image semantic segmentation. The data used in this paper can be obtained through the following links:

- Potsdam: https://www2.isprs.org/commissions/comm2/wg4/benchmark/2d-sem-label-potsdam/, accessed on 20 October 2020

- Vaihingen: https://www2.isprs.org/commissions/comm2/wg4/benchmark/2d-sem-label-vaihingen/, accessed on 20 October 2020

- UAVid: https://uavid.nl/, accessed on 10 May 2021

Code is available at https://github.com/lironui/BANet, accessed on 25 June 2021.

Acknowledgments

The authors are very grateful to the many people who helped to comment on the article, to the Large Scale Environment Remote Sensing Platform (Facility No. 16000009, 16000011, 16000012) provided by Wuhan University, and for the support provided by the Surveying and Mapping Institute Lands and Resource Department of Guangdong Province, Guangzhou. Special thanks to the editors and reviewers for providing valuable insight into this article.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| VFR | Very Fine Resolution |
| DCNNs | Deep Convolutional Neural Networks |
| FCN | Fully Convolutional Neural Network |
| SVM | Support Vector Machine |
| RF | Random Forest |
| CRF | Conditional Random Field |
| MHSA | Multi-Head Self-Attention |
| MLP | Multilayer Perceptron |
| FAM | Feature Aggregation Module |
| BANet | Bilateral Awareness Network |
| TF | Textural Features |
| AF | Aggregated Feature |
| LDF | Long-range Dependent Features |
| BN | Batch Normalization |
| GSD | Ground Sampling Distance |
| UAV | Unmanned Aerial Vehicle |
| LA | Linear Attention |
| AEM | Attentional Embedding Module |
| EMSA | Efficient Multi-Head Self-Attention |

References

1. Zhang, C.; Atkinson, P.M.; George, C.; Wen, Z.; Diazgranados, M.; Gerard, F. Identifying and mapping individual plants in a highly diverse high-elevation ecosystem using UAV imagery and deep learning. ISPRS J. Photogramm. Remote Sens. 2020, 169, 280–291.
2. Zhang, C.; Harrison, P.A.; Pan, X.; Li, H.; Sargent, I.; Atkinson, P.M. Scale sequence joint deep learning (SS-JDL) for land use and land cover classification. Remote Sens. Environ. 2020, 237, 111593.
3. Li, R.; Zheng, S.; Duan, C.; Su, J.; Zhang, C. Multistage attention ResU-Net for semantic segmentation of fine-resolution remote sensing images. IEEE Geosci. Remote Sens. Lett. 2021.
4. Li, R.; Duan, C.; Zheng, S.; Zhang, C.; Atkinson, P.M. MACU-Net for semantic segmentation of fine-resolution remotely sensed images. IEEE Geosci. Remote Sens. Lett. 2021.
5. Wang, L.; Fang, S.; Zhang, C.; Li, R.; Duan, C.; Meng, X.; Atkinson, P.M. SaNet: Scale-aware neural network for semantic labelling of multiple spatial resolution aerial images. arXiv 2021, arXiv:2103.07935.
6. Huang, Z.; Wei, Y.; Wang, X.; Shi, H.; Liu, W.; Huang, T.S. AlignSeg: Feature-aligned segmentation networks. IEEE Trans. Pattern Anal. Mach. Intell. 2021.
7. Yao, H.; Qin, R.; Chen, X. Unmanned aerial vehicle for remote sensing applications—A review. Remote Sens. 2019, 11, 1443.
8. Audebert, N.; Le Saux, B.; Lefèvre, S. Segment-before-Detect: Vehicle detection and classification through semantic segmentation of aerial images. Remote Sens. 2017, 9, 368.
9. Matikainen, L.; Karila, K. Segment-based land cover mapping of a suburban area—Comparison of high-resolution remotely sensed datasets using classification trees and test field points. Remote Sens. 2011, 3, 1777–1804.
10. Zhang, Q.; Seto, K.C. Mapping urbanization dynamics at regional and global scales using multi-temporal DMSP/OLS nighttime light data. Remote Sens. Environ. 2011, 115, 2320–2329.
11. Wei, Y.; Wang, Z.; Xu, M. Road structure refined CNN for road extraction in aerial image. IEEE Geosci. Remote Sens. Lett. 2017, 14, 709–713.
12. Li, E.; Femiani, J.; Xu, S.; Zhang, X.; Wonka, P. Robust rooftop extraction from visible band images using higher order CRF. IEEE Trans. Geosci. Remote Sens. 2015, 53, 4483–4495.
13. Zhang, Y.; Wang, C.; Ji, Y.; Chen, J.; Deng, Y.; Chen, J.; Jie, Y. Combining segmentation network and nonsubsampled contourlet transform for automatic marine raft aquaculture area extraction from Sentinel-1 images. Remote Sens. 2020, 12, 4182.
14. Maxwell, A.E.; Bester, M.S.; Guillen, L.A.; Ramezan, C.A.; Carpinello, D.J.; Fan, Y.; Hartley, F.M.; Maynard, S.M.; Pyron, J.L. Semantic segmentation deep learning for extracting surface mine extents from historic topographic maps. Remote Sens. 2020, 12, 4145.
15. Kalajdjieski, J.; Zdravevski, E.; Corizzo, R.; Lameski, P.; Kalajdziski, S.; Pires, I.M.; Garcia, N.M.; Trajkovik, V. Air pollution prediction with multi-modal data and deep neural networks. Remote Sens. 2020, 12, 4142.
16. Diakogiannis, F.I.; Waldner, F.; Caccetta, P.; Wu, C. ResUNet-a: A deep learning framework for semantic segmentation of remotely sensed data. ISPRS J. Photogramm. Remote Sens. 2020, 162, 94–114.
17. Li, R.; Duan, C. ABCNet: Attentive bilateral contextual network for efficient semantic segmentation of fine-resolution remote sensing images. arXiv 2021, arXiv:2102.02531.
18. Zhang, C.; Sargent, I.; Pan, X.; Li, H.; Gardiner, A.; Hare, J.; Atkinson, P.M. Joint deep learning for land cover and land use classification. Remote Sens. Environ. 2019, 221, 173–187.
19. Zhang, C.; Sargent, I.; Pan, X.; Li, H.; Gardiner, A.; Hare, J.; Atkinson, P.M. An object-based convolutional neural network (OCNN) for urban land use classification. Remote Sens. Environ. 2018, 216, 57–70.
20. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.
21. Sherrah, J. Fully convolutional networks for dense semantic labelling of high-resolution aerial imagery. arXiv 2016, arXiv:1606.02585.
22. Guo, Y.; Jia, X.; Paull, D. Effective sequential classifier training for SVM-based multitemporal remote sensing image classification. IEEE Trans. Image Process. 2018, 27, 3036–3048.
23. Pal, M. Random forest classifier for remote sensing classification. Int. J. Remote Sens. 2005, 26, 217–222.
24. Krähenbühl, P.; Koltun, V. Efficient inference in fully connected CRFs with Gaussian edge potentials. Adv. Neural Inf. Process. Syst. 2011, 24, 109–117.
25. Ma, L.; Liu, Y.; Zhang, X.; Ye, Y.; Yin, G.; Johnson, B.A. Deep learning in remote sensing applications: A meta-analysis and review. ISPRS J. Photogramm. Remote Sens. 2019, 152, 166–177.
26. Marcos, D.; Volpi, M.; Kellenberger, B.; Tuia, D. Land cover mapping at very high resolution with rotation equivariant CNNs: Towards small yet accurate models. ISPRS J. Photogramm. Remote Sens. 2018, 145, 96–107.
27. Yue, K.; Yang, L.; Li, R.; Hu, W.; Zhang, F.; Li, W. TreeUNet: Adaptive tree convolutional neural networks for subdecimeter aerial image segmentation. ISPRS J. Photogramm. Remote Sens. 2019, 156, 1–13.
28. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015, Munich, Germany, 5–9 October 2015; pp. 234–241.
29. Liu, Y.; Fan, B.; Wang, L.; Bai, J.; Xiang, S.; Pan, C. Semantic labeling in very high resolution images via a self-cascaded convolutional neural network. ISPRS J. Photogramm. Remote Sens. 2018, 145, 78–95.
30. Yang, M.Y.; Kumaar, S.; Lyu, Y.; Nex, F. Real-time semantic segmentation with context aggregation network. ISPRS J. Photogramm. Remote Sens. 2021, 178, 124–134.
31. Fu, J.; Liu, J.; Tian, H.; Li, Y.; Bao, Y.; Fang, Z.; Lu, H. Dual attention network for scene segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 3146–3154.
32. Li, R.; Zheng, S.; Zhang, C.; Duan, C.; Su, J.; Wang, L.; Atkinson, P.M. Multiattention network for semantic segmentation of fine-resolution remote sensing images. IEEE Trans. Geosci. Remote Sens. 2021.
33. Zhao, H.; Qi, X.; Shen, X.; Shi, J.; Jia, J. ICNet for real-time semantic segmentation on high-resolution images. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 405–420.
34. Kampffmeyer, M.; Salberg, A.-B.; Jenssen, R. Semantic segmentation of small objects and modeling of uncertainty in urban remote sensing images using deep convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 1–9.
35. Maggiori, E.; Tarabalka, Y.; Charpiat, G.; Alliez, P. High-resolution aerial image labeling with convolutional neural networks. IEEE Trans. Geosci. Remote Sens. 2017, 55, 7092–7103.
36. Audebert, N.; Le Saux, B.; Lefèvre, S. Beyond RGB: Very high resolution urban remote sensing with multimodal deep networks. ISPRS J. Photogramm. Remote Sens. 2018, 140, 20–32.
37. Duan, C.; Pan, J.; Li, R. Thick cloud removal of remote sensing images using temporal smoothness and sparsity regularized tensor optimization. Remote Sens. 2020, 12, 3446.
38. Kampffmeyer, M.; Salberg, A.-B.; Jenssen, R. Urban land cover classification with missing data modalities using deep convolutional neural networks. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 1758–1768.
39. Marmanis, D.; Schindler, K.; Wegner, J.D.; Galliani, S.; Datcu, M.; Stilla, U. Classification with an edge: Improving semantic image segmentation with boundary detection. ISPRS J. Photogramm. Remote Sens. 2018, 135, 158–172.
40. Zheng, X.; Huan, L.; Xia, G.-S.; Gong, J. Parsing very high resolution urban scene images by learning deep ConvNets with edge-aware loss. ISPRS J. Photogramm. Remote Sens. 2020, 170, 15–28.
41. Wang, X.; Girshick, R.; Gupta, A.; He, K. Non-local neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7794–7803.
42. Yu, F.; Koltun, V. Multi-scale context aggregation by dilated convolutions. arXiv 2015, arXiv:1511.07122.
43. Liu, Q.; Kampffmeyer, M.; Jenssen, R.; Salberg, A.-B. Dense dilated convolutions’ merging network for land cover classification. IEEE Trans. Geosci. Remote Sens. 2020, 58, 6309–6320.
44. Huang, Z.; Wang, X.; Wei, Y.; Huang, L.; Shi, H.; Liu, W.; Huang, T.S. CCNet: Criss-cross attention for semantic segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2020.
45. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. arXiv 2017, arXiv:1706.03762.
46. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S. An image is worth 16 × 16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.
47. Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable DETR: Deformable transformers for end-to-end object detection. arXiv 2020, arXiv:2010.04159.
48. Wang, L.; Li, R.; Duan, C.; Fang, S. Transformer meets DCFAM: A novel semantic segmentation scheme for fine-resolution remote sensing images. arXiv 2021, arXiv:2104.12137.
49. Ba, J.L.; Kiros, J.R.; Hinton, G.E. Layer normalization. arXiv 2016, arXiv:1607.06450.
50. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical vision transformer using shifted windows. arXiv 2021, arXiv:2103.14030.
51. Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In Proceedings of the International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 448–456.
52. Nair, V.; Hinton, G.E. Rectified linear units improve restricted Boltzmann machines. In Proceedings of the International Conference on Machine Learning, Haifa, Israel, 21–24 June 2010; pp. 807–814.
53. Zhang, Q.; Yang, Y. ResT: An efficient transformer for visual recognition. arXiv 2021, arXiv:2105.13677.
54. Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.
55. Ulyanov, D.; Vedaldi, A.; Lempitsky, V. Instance normalization: The missing ingredient for fast stylization. arXiv 2016, arXiv:1607.08022v3.
56. Lyu, Y.; Vosselman, G.; Xia, G.-S.; Yilmaz, A.; Yang, M.Y. UAVid: A semantic segmentation dataset for UAV imagery. ISPRS J. Photogramm. Remote Sens. 2020, 165, 108–119.
57. Yu, C.; Wang, J.; Peng, C.; Gao, C.; Yu, G.; Sang, N. BiSeNet: Bilateral segmentation network for real-time semantic segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 325–341.
58. Hu, P.; Perazzi, F.; Heilbron, F.C.; Wang, O.; Lin, Z.; Saenko, K.; Sclaroff, S. Real-time semantic segmentation with fast attention. IEEE Robot. Autom. Lett. 2021, 6, 263–270.
59. Oršić, M.; Šegvić, S. Efficient semantic segmentation with pyramidal fusion. Pattern Recognit. 2021, 110, 107611.
60. Zhuang, J.; Yang, J.; Gu, L.; Dvornek, N. ShelfNet for fast semantic segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Korea, 27–28 October 2019; pp. 847–856.
61. Poudel, R.P.K.; Liwicki, S.; Cipolla, R. Fast-SCNN: Fast semantic segmentation network. arXiv 2019, arXiv:1902.04502.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).