On-Site Global Calibration of Mobile Vision Measurement System Based on Virtual Omnidirectional Camera Model

Abstract

1. Introduction

2. Materials and Methods

2.1. Coordinate System Notation

2.2. Method Overview

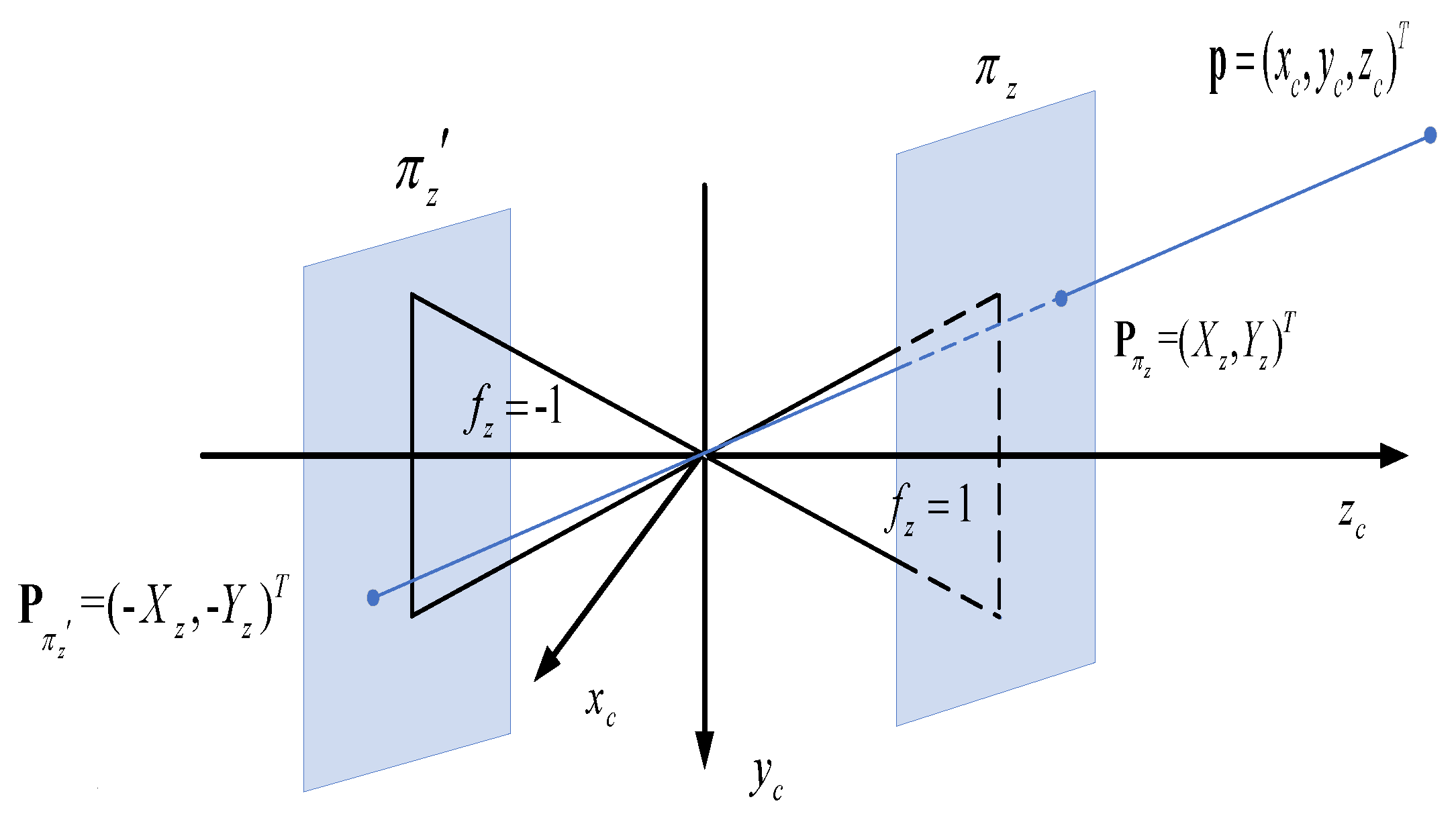

2.3. Virtual Omnidirectional Camera Model

2.4. Expanded Factorial Linear Transform

2.4.1. Direct Linear Transform of Single Image Plane

2.4.2. Factorial Linear Transform of Virtual Camera Model of Virtual Single Image Plane

- The measurement matrix of the projection matrix is derived from the virtual single image plane camera model. It is decomposed into two factorial matrices:

- An intermediate variable is introduced to build a new measurement matrix:

- Under the condition of , the least-squares solution of the equation set is computed:
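The final step, a least-squares solution of a linear equation set under a constraint, can be illustrated with the classical direct linear transform of Section 2.4.1. The sketch below is not the factorial decomposition itself. It assumes the common unit-norm constraint on the stacked entries of the projection matrix and solves the homogeneous system by singular value decomposition. The names `dlt_projection_matrix` and `project`, as well as the synthetic camera, are hypothetical and chosen only for illustration.

```python
import numpy as np

def dlt_projection_matrix(points_3d, points_2d):
    """Estimate a 3x4 projection matrix P from n >= 6 point correspondences.

    Solves A p = 0 subject to ||p|| = 1 (an assumed constraint) by taking the
    right singular vector of A that belongs to the smallest singular value.
    """
    points_3d = np.asarray(points_3d, dtype=float)
    points_2d = np.asarray(points_2d, dtype=float)
    n = points_3d.shape[0]
    X = np.hstack([points_3d, np.ones((n, 1))])  # homogeneous 3D points
    A = np.zeros((2 * n, 12))                    # measurement matrix
    for i in range(n):
        u, v = points_2d[i]
        A[2 * i, 0:4] = X[i]            # row 1 of P dotted with X
        A[2 * i, 8:12] = -u * X[i]      # minus u times (row 3 of P dotted with X)
        A[2 * i + 1, 4:8] = X[i]        # row 2 of P dotted with X
        A[2 * i + 1, 8:12] = -v * X[i]  # minus v times (row 3 of P dotted with X)
    _, _, vt = np.linalg.svd(A)
    return vt[-1].reshape(3, 4)

def project(P, points_3d):
    """Project 3D points with P and return inhomogeneous image coordinates."""
    points_3d = np.asarray(points_3d, dtype=float)
    X = np.hstack([points_3d, np.ones((len(points_3d), 1))])
    x = X @ P.T
    return x[:, :2] / x[:, 2:3]

# Synthetic, noise-free check with a hypothetical pinhole camera.
rng = np.random.default_rng(0)
K = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
t = np.array([[0.1], [-0.2], [5.0]])
P_true = K @ np.hstack([np.eye(3), t])
pts = rng.uniform(-1.0, 1.0, size=(10, 3))
uv = project(P_true, pts)
P_est = dlt_projection_matrix(pts, uv)
reproj_error = np.abs(project(P_est, pts) - uv).max()
```

The estimate is defined only up to scale and sign, so the check compares reprojected image points rather than matrix entries. With noisy observations, normalizing the coordinates before building `A` usually improves conditioning.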

2.4.3. Expanded Factorial Linear Transform of Virtual Omnidirectional Camera Model

2.5. Linear Solution of Location and Attitude

1. Solution of the camera location C

2. Solution of and
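For the first step, a standard way to obtain the camera location from a 3×4 projection matrix is to find the point that the matrix maps to zero. The sketch below assumes the usual form P = [M | p4] with an invertible 3×3 block M, so that C = −M⁻¹p4. The function name `camera_center` and the example camera are hypothetical, and this is not necessarily the exact formulation used in this section.

```python
import numpy as np

def camera_center(P):
    """Return the 3D point C that satisfies P @ [C, 1] = 0 for a 3x4 matrix P."""
    P = np.asarray(P, dtype=float)
    M, p4 = P[:, :3], P[:, 3]
    # Solve M C = -p4 instead of forming the inverse explicitly.
    return -np.linalg.solve(M, p4)

# Hypothetical example: rotation about the z axis and a known camera location.
theta = np.deg2rad(30.0)
R = np.array([[np.cos(theta), -np.sin(theta), 0.0],
              [np.sin(theta),  np.cos(theta), 0.0],
              [0.0,            0.0,           1.0]])
C_true = np.array([2.0, -1.0, 0.5])
P = np.hstack([R, (-R @ C_true).reshape(3, 1)])

# The result does not depend on the overall scale of P.
C_est = camera_center(3.7 * P)
```

Because a linearly estimated projection matrix is only defined up to scale, it is convenient that the recovered location is unaffected by that scale.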

2.6. Nonlinear Optimization

2.7. Location and Attitude Transformation

1. Computation of geodetic coordinates from 3D coordinates

2. Rotation to the ENU coordinate system
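The two steps can be sketched as follows, assuming that the 3D coordinates are Earth-centered, Earth-fixed (ECEF) coordinates on the WGS-84 ellipsoid. The source does not name the datum, so the ellipsoid constants are an assumption, and all function names are hypothetical. The geodetic latitude is found by a simple fixed-point iteration, and the ENU axes follow from the latitude and longitude of the chosen origin.

```python
import math
import numpy as np

# WGS-84 ellipsoid constants (assumed datum; not stated in the source).
WGS84_A = 6378137.0
WGS84_F = 1.0 / 298.257223563
WGS84_E2 = WGS84_F * (2.0 - WGS84_F)

def prime_vertical_radius(lat):
    """Radius of curvature in the prime vertical at geodetic latitude lat (rad)."""
    return WGS84_A / math.sqrt(1.0 - WGS84_E2 * math.sin(lat) ** 2)

def ecef_to_geodetic(x, y, z, iterations=10):
    """Convert ECEF metres to (lat, lon, height), with angles in radians.

    Not valid at the poles, where cos(lat) vanishes.
    """
    lon = math.atan2(y, x)
    p = math.hypot(x, y)
    lat = math.atan2(z, p * (1.0 - WGS84_E2))  # exact for zero height
    for _ in range(iterations):
        n = prime_vertical_radius(lat)
        h = p / math.cos(lat) - n
        lat = math.atan2(z, p * (1.0 - WGS84_E2 * n / (n + h)))
    h = p / math.cos(lat) - prime_vertical_radius(lat)
    return lat, lon, h

def geodetic_to_ecef(lat, lon, h):
    """Inverse conversion, used here only to check the iteration."""
    n = prime_vertical_radius(lat)
    return np.array([(n + h) * math.cos(lat) * math.cos(lon),
                     (n + h) * math.cos(lat) * math.sin(lon),
                     (n * (1.0 - WGS84_E2) + h) * math.sin(lat)])

def ecef_to_enu_rotation(lat, lon):
    """Rotation whose rows are the east, north and up axes expressed in ECEF."""
    sl, cl = math.sin(lat), math.cos(lat)
    so, co = math.sin(lon), math.cos(lon)
    return np.array([[-so,       co,      0.0],
                     [-sl * co, -sl * so, cl],
                     [cl * co,   cl * so, sl]])

def ecef_to_enu(point, origin):
    """Express an ECEF point in the ENU frame attached to an ECEF origin."""
    lat, lon, _ = ecef_to_geodetic(*origin)
    offset = np.asarray(point, dtype=float) - np.asarray(origin, dtype=float)
    return ecef_to_enu_rotation(lat, lon) @ offset

# Control points 1 and 2 from the outdoor experiment table, treated as ECEF.
origin = np.array([-2111731.43, 4650038.09, 3808082.93])
target = np.array([-2111623.25, 4650128.17, 3808035.33])
lat, lon, h = ecef_to_geodetic(*origin)
enu = ecef_to_enu(target, origin)
```

The rotation is orthonormal, so the ENU vector has the same length as the ECEF offset between the two points, which gives a quick sanity check.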

3. Simulation of Synthetic Data and Outdoor Experiment

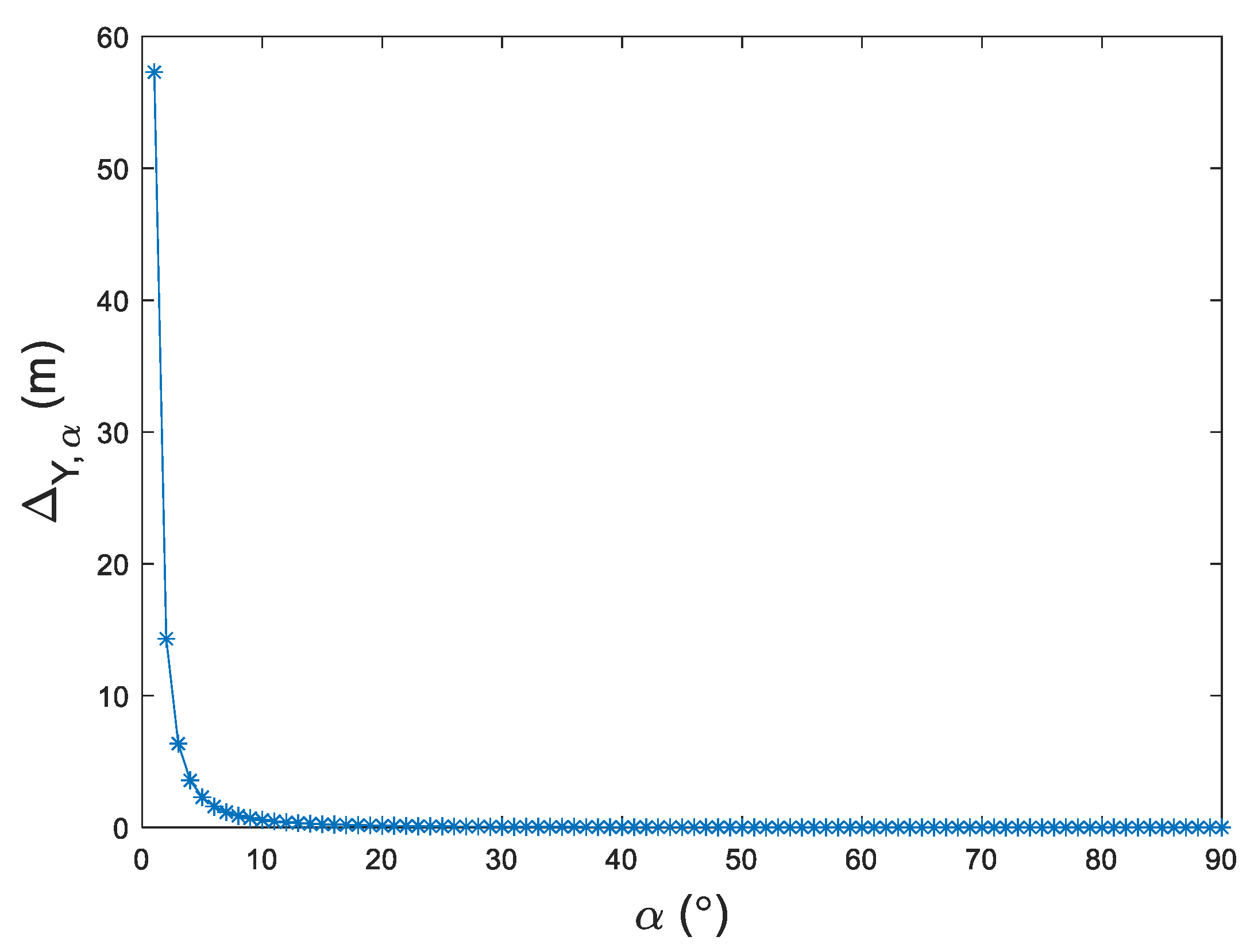

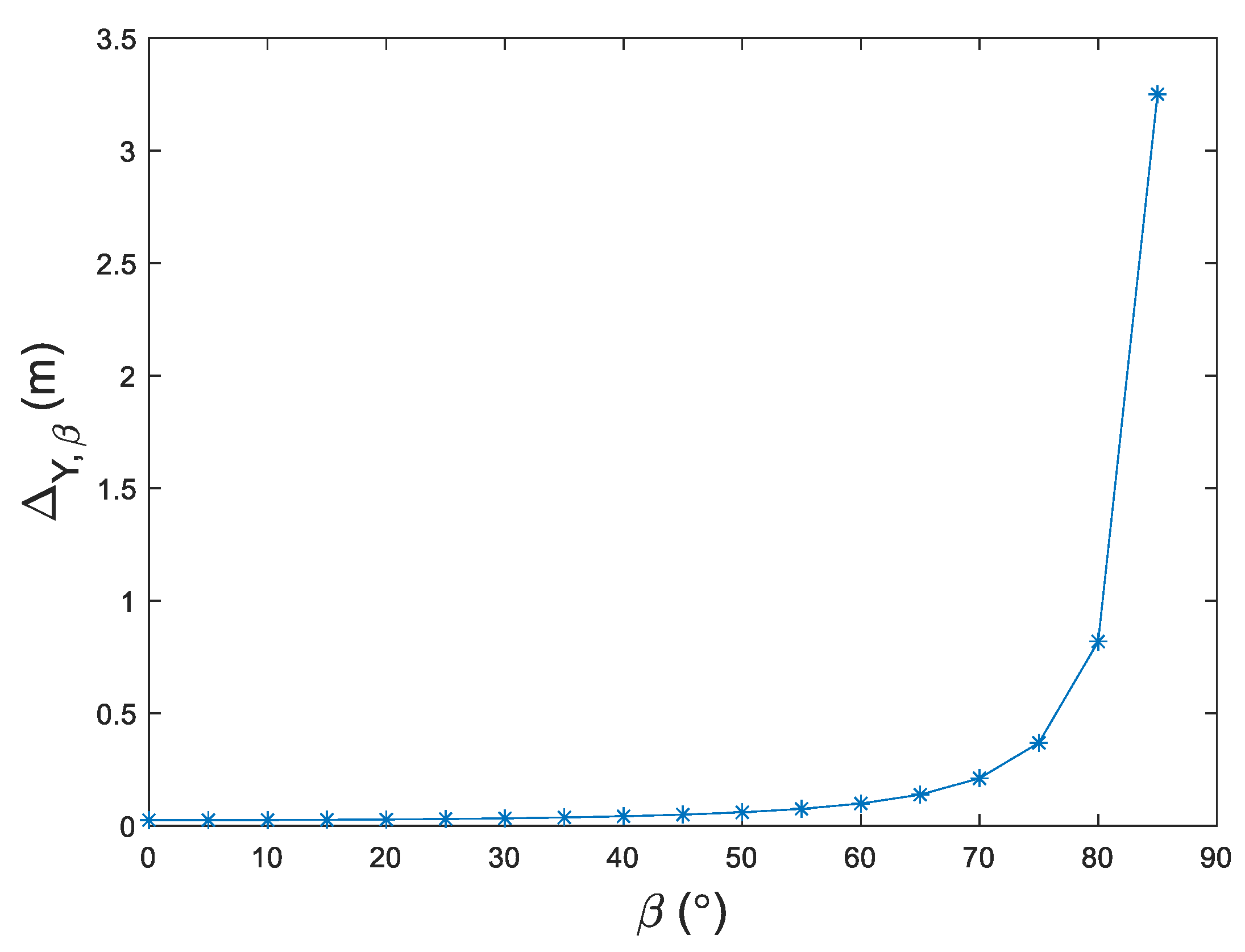

3.1. Error Analysis of Imaging in Virtual Single Image Camera Model

3.2. Simulations

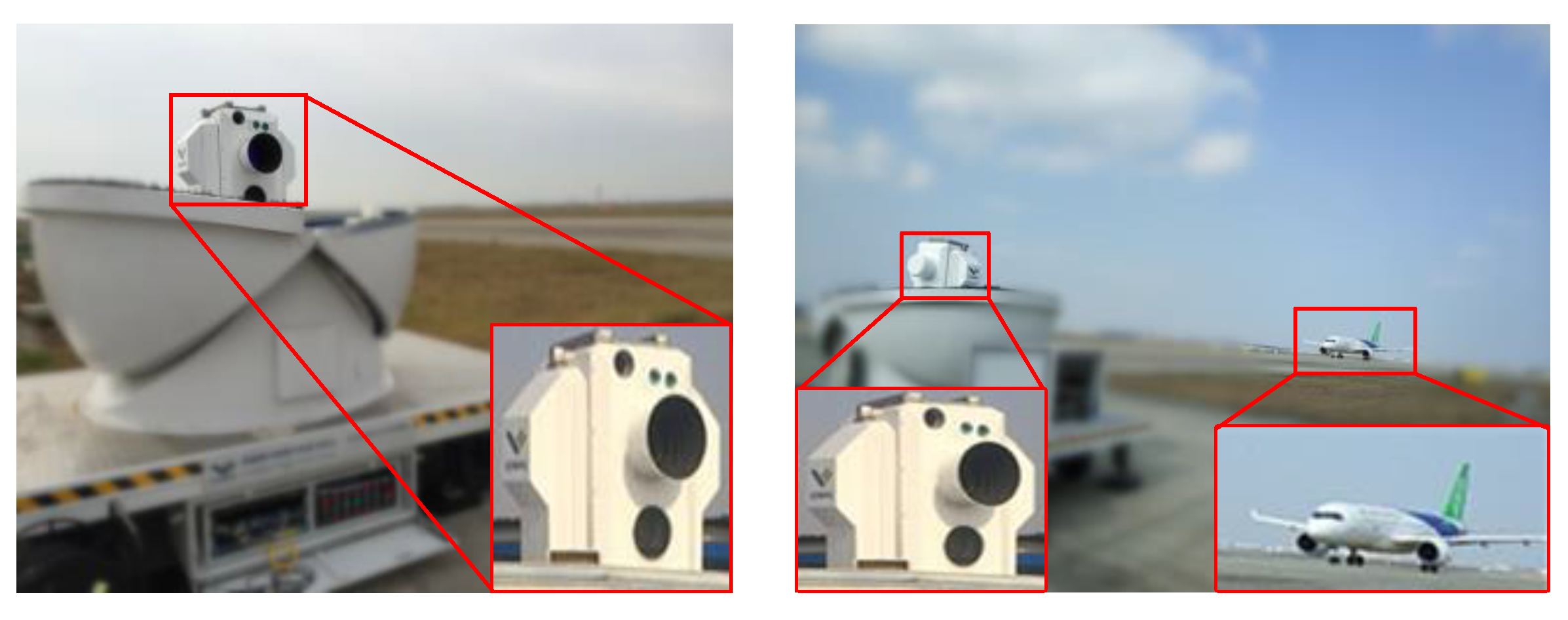

3.3. Outdoor Experiment

4. Discussion

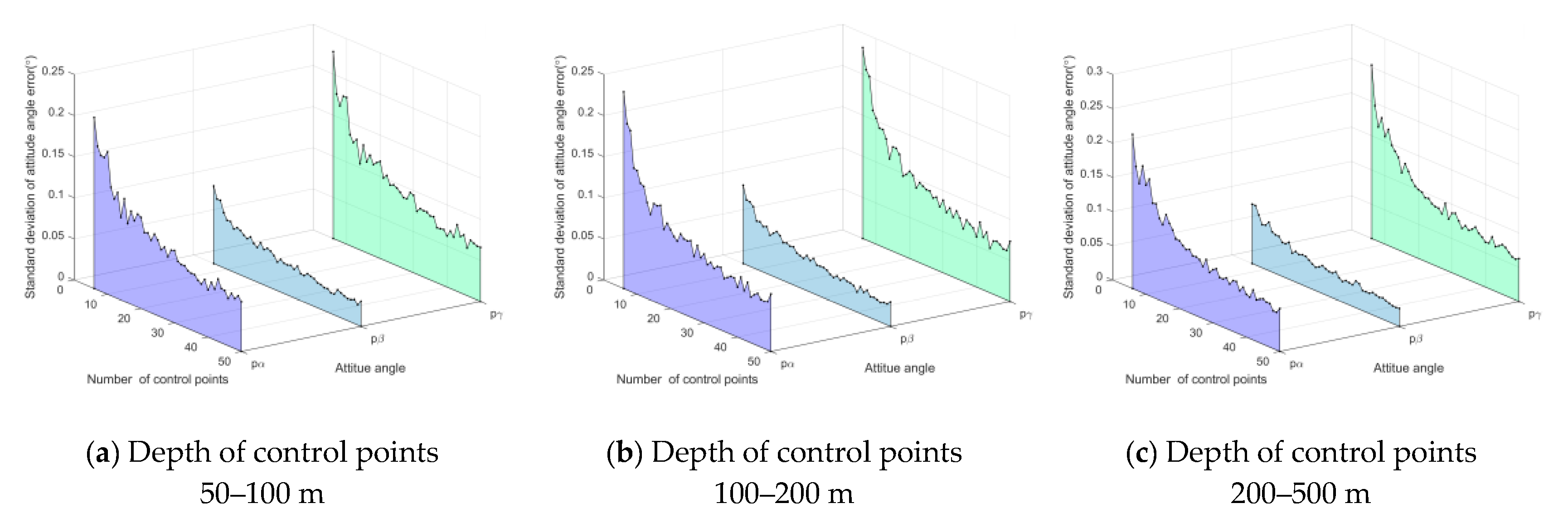

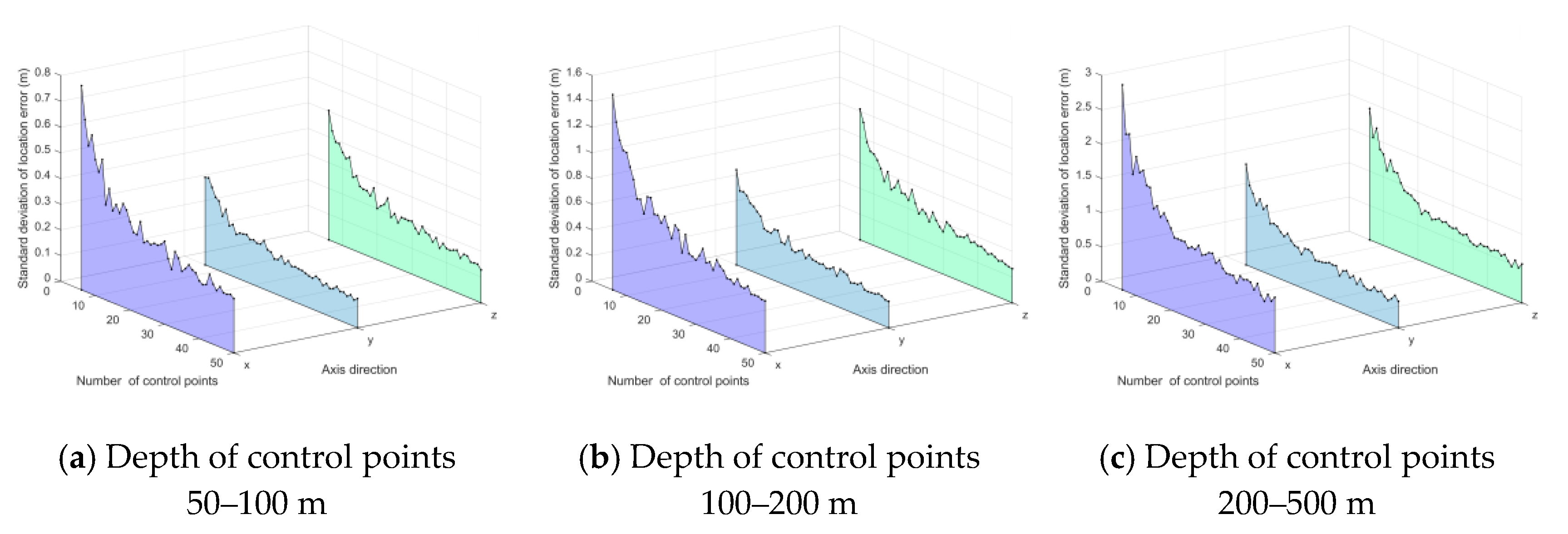

4.1. Performance with Respect to Number of Control Points

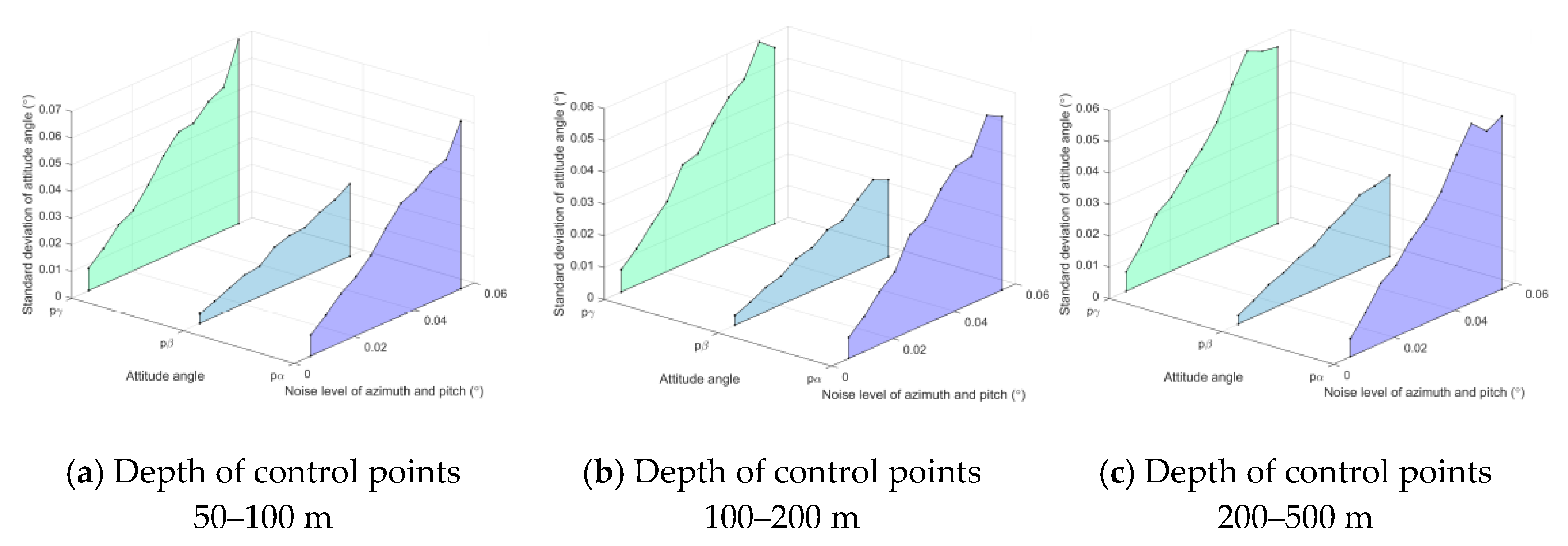

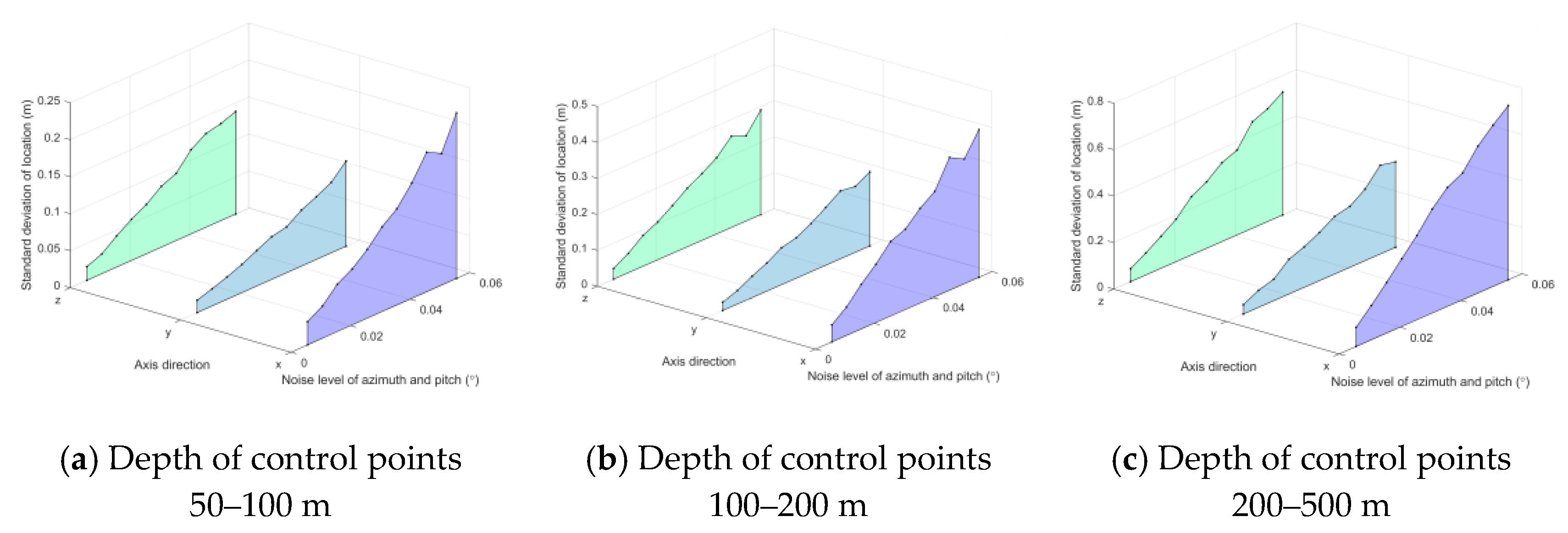

4.2. Performance with Respect to Angle Noise Level

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Virtual Camera Model | (m) | (m) | (m) |
|---|---|---|---|
| Virtual single image plane camera model | 0.977 | 0.336 | 0.490 |
| Virtual omnidirectional camera model | 0.254 | 0.132 | 0.173 |

| Virtual Camera Model | (°) | (°) | (°) |
|---|---|---|---|
| Virtual single image plane camera model | 0.188 | 0.062 | 0.221 |
| Virtual omnidirectional camera model | 0.084 | 0.029 | 0.085 |

| Serial Number | 3D Coordinates of Control Point | | | Azimuth and Pitch | |
|---|---|---|---|---|---|
| | (m) | (m) | (m) | (°) | (°) |
| 1 | −2,111,731.43 | 4,650,038.09 | 3,808,082.93 | 101.780 | −0.364 |
| 2 | −2,111,623.25 | 4,650,128.17 | 3,808,035.33 | 58.333 | 0.115 |
| 3 | −2,111,615.66 | 4,650,229.64 | 3,807,916.79 | 4.739 | −0.018 |
| 4 | −2,111,638.23 | 4,650,247.04 | 3,807,883.35 | 348.565 | −0.132 |
| 5 | −2,111,660.48 | 4,650,264.24 | 3,807,850.40 | 332.347 | −0.216 |
| 6 | −2,111,682.46 | 4,650,281.25 | 3,807,817.91 | 318.308 | −0.273 |
| 7 | −2,111,679.49 | 4,650,299.64 | 3,807,802.63 | 316.095 | 0.700 |
| 8 | −2,111,746.73 | 4,650,330.73 | 3,807,722.77 | 292.785 | −0.376 |
| 9 | −2,111,837.99 | 4,650,215.37 | 3,807,824.48 | 251.707 | 2.416 |
| 10 | −2,111,824.46 | 4,650,224.93 | 3,807,820.37 | 259.246 | 2.464 |

| Serial Number | Residual Error of 3D Control Point | | | Residual Error of Azimuth and Pitch | |
|---|---|---|---|---|---|
| | (m) | (m) | (m) | (°) | (°) |
| 1 | 0.004 | 0.441 | 0.008 | −0.002 | 0.120 |
| 2 | 0.001 | −0.635 | −0.002 | 0.001 | −0.197 |
| 3 | −0.012 | −1.189 | −0.004 | −0.004 | −0.432 |
| 4 | 0.010 | −1.144 | −0.005 | 0.003 | −0.436 |
| 5 | 0.002 | −1.105 | −0.008 | −0.001 | −0.409 |
| 6 | 0.010 | −1.027 | −0.002 | 0.002 | −0.344 |
| 7 | −0.015 | −1.147 | 0.001 | −0.003 | −0.339 |
| 8 | 0.016 | −0.877 | 0.020 | 0.005 | −0.197 |
| 9 | 0.008 | 0.236 | 0.008 | 0.002 | 0.104 |
| 10 | 0.023 | 0.110 | −0.096 | −0.043 | 0.048 |
| RMSE | 0.012 | 0.644 | 0.032 | 0.014 | 0.297 |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chai, B.; Wei, Z. On-Site Global Calibration of Mobile Vision Measurement System Based on Virtual Omnidirectional Camera Model. Remote Sens. 2021, 13, 1982. https://doi.org/10.3390/rs13101982
