Abstract

In this paper, we address various challenges in multi-pedestrian and vehicle tracking in high-resolution aerial imagery through an intensive evaluation of a number of traditional and Deep Learning based Single- and Multi-Object Tracking methods. We also describe our proposed Deep Learning based Multi-Object Tracking method, AerialMPTNet, which fuses appearance, temporal, and graphical information using a Siamese Neural Network, a Long Short-Term Memory, and a Graph Convolutional Neural Network module for more accurate and stable tracking. Moreover, we investigate the influence of Squeeze-and-Excitation layers and Online Hard Example Mining on the performance of AerialMPTNet. To the best of our knowledge, we are the first to use these two for regression-based Multi-Object Tracking. Additionally, we study and compare the L1 and Huber loss functions. In our experiments, we extensively evaluate AerialMPTNet on three aerial Multi-Object Tracking datasets, namely the AerialMPT and the KIT AIS pedestrian and vehicle datasets. Qualitative and quantitative results show that AerialMPTNet outperforms all previous methods on the pedestrian datasets and achieves competitive results on the vehicle dataset. In addition, the Long Short-Term Memory and Graph Convolutional Neural Network modules enhance the tracking performance. Using Squeeze-and-Excitation and Online Hard Example Mining helps significantly in some cases while degrading the results in others. According to the results, the L1 loss yields better results than the Huber loss in most scenarios. The presented results provide a deep insight into the challenges and opportunities of the aerial Multi-Object Tracking domain, paving the way for future research.

1. Introduction

Visual Object Tracking (VOT), that is, locating objects in video frames over time, is a dynamic field of research with a wide variety of practical applications such as autonomous driving, robot-aided surgery, security, and safety. Recent advances in machine and deep learning techniques have drastically boosted the performance of VOT methods by solving long-standing issues such as modeling appearance feature changes and relocating lost objects [1,2,3]. Nevertheless, the performance of existing VOT methods is not always satisfactory due to hindrances such as heavy occlusions, differences in scale, background clutter, or high object density in crowded scenes. Thus, more sophisticated VOT methods that overcome these challenges are in high demand.

VOT methods can be categorized into Single-Object Tracking (SOT) and Multi-Object Tracking (MOT) methods, which track single and multiple objects throughout subsequent video frames, respectively. MOT scenarios are often more complex than SOT ones because the trackers must handle a larger number of objects in a reasonable time (ideally in real time). Most previous VOT works using traditional approaches such as Kalman and particle filters [4,5], Discriminative Correlation Filter (DCF) [6], or silhouette tracking [7] simplify the tracking procedure by constraining the tracking scenarios to, for example, stationary cameras, a limited number of objects, limited occlusions, or the absence of sudden background or object appearance changes. These methods usually use handcrafted feature representations (e.g., Histogram of Oriented Gradients (HOG) [8], color, position) and their target modeling is not dynamic [9]. In real-world scenarios, however, such constraints often do not hold, and VOT methods based on these traditional approaches perform poorly.

The rise of Deep Learning (DL) offered several advantages in object detection, segmentation, and classification [10,11,12]. Approaches based on DL have also been successfully applied to VOT problems, significantly enhancing the performance, especially in unconstrained scenarios. Examples include the Convolutional Neural Network (CNN) [13,14], Recurrent Neural Network (RNN) [15], Siamese Neural Network (SNN) [16,17], Generative Adversarial Network (GAN) [18], and several customized architectures [19].

Despite the considerable progress made for VOT in ground imagery, VOT has not been fully exploited in the remote sensing domain, due to the limited volume of available images with sufficiently high resolution and level of detail. In recent years, the development of more advanced camera systems and the availability of very high-resolution aerial images have opened new opportunities for research and applications in the aerial VOT domain, ranging from the analysis of ecological systems to aerial surveillance [20,21].

Aerial imagery allows collecting very high-resolution data from wide open areas in a cost- and time-efficient manner. Performing MOT based on such images (e.g., with Ground Sampling Distance (GSD) < 20 cm/pixel) allows us to track and monitor the movement behaviours of multiple small objects such as pedestrians and vehicles for numerous applications such as disaster management and predictive traffic and event monitoring. However, few works have addressed aerial MOT [22,23,24], and aerial MOT datasets are rare. The large number and small sizes of moving objects compared to ground imagery scenarios, together with large image sizes, moving cameras, multiple image scales, low frame rates, and varying visibility levels and weather conditions, make MOT in aerial imagery especially complicated. Existing drone or ground surveillance datasets frequently used as MOT benchmarks, such as MOT16 and MOT17 [25], are very different from aerial MOT scenarios with respect to their image and object characteristics. For example, the objects are bigger and the scenes are less crowded, with the objects' appearance features usually being discriminative enough to distinguish the objects. Moreover, the videos have higher frame rates and better quality and contrast.

In this paper, we aim at investigating various existing challenges in the tracking of multiple pedestrians and vehicles in aerial imagery through intensive experiments with a number of traditional and DL-based SOT and MOT methods. This paper extends our recent work [26], in which we introduced a new MOT dataset, the so-called Aerial Multi-Pedestrian Tracking (AerialMPT), as well as a novel DL-based MOT method, the so-called AerialMPTNet, that fuses appearance, temporal, and graphical information for a more accurate MOT. In this paper, we also extensively evaluate the effectiveness of the different parts of AerialMPTNet and compare it to traditional and state-of-the-art DL-based MOT methods. Additionally, we propose a MOT method inspired by the SORT method [27], the so-called Euclidean Online Tracking (EOT), which employs a GSD-adapted Euclidean distance for object association in consecutive frames.

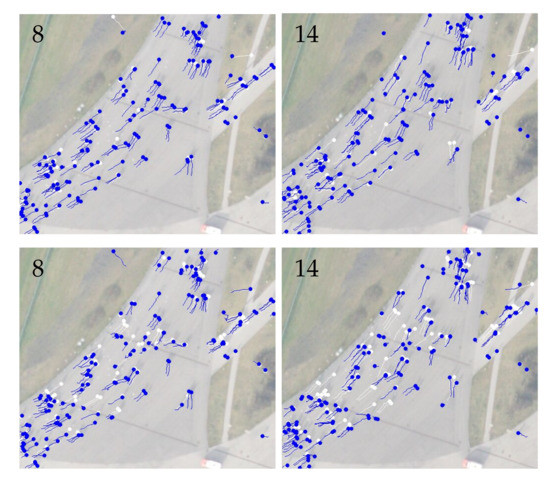

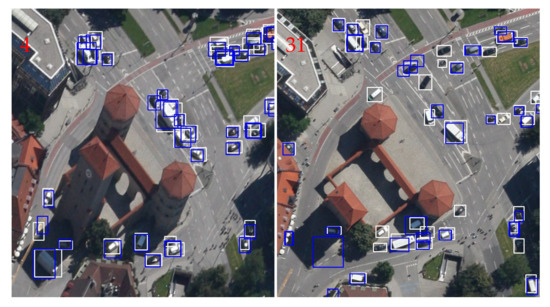

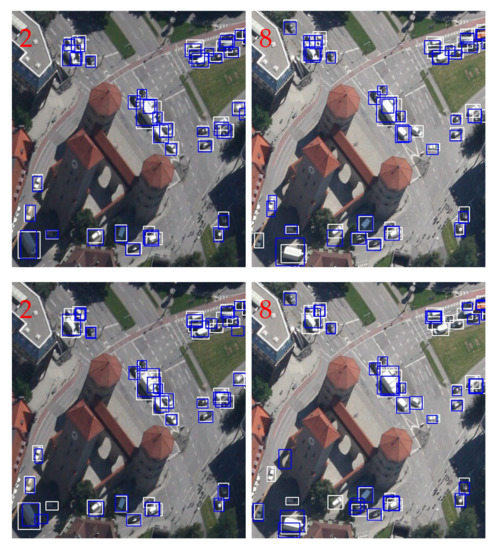

We conduct our experiments on three aerial MOT datasets, namely the AerialMPT and the KIT AIS (https://www.ipf.kit.edu/code.php, accessed on 10 May 2021) pedestrian and vehicle datasets. All image sequences were captured by an airborne platform during different flight campaigns of the German Aerospace Center (DLR) (https://www.dlr.de, accessed on 10 May 2021) and vary significantly in object density, movement patterns, and image size and quality. Figure 1 shows sample images from the AerialMPT dataset with the tracking results of our AerialMPTNet. The images were captured at different flight altitudes and their GSD (reflecting the spatial size of a pixel) varies between 8 cm and 13 cm. The total number of objects per sequence ranges up to 609. Pedestrians in these datasets appear as small points, hardly exceeding an area of 4 × 4 pixels. Even for human experts, distinguishing multiple pedestrians based on their appearance is laborious and challenging. Vehicles appear as bigger objects and are easier to distinguish based on their appearance features. However, different vehicle sizes, fast movements combined with low frame rates (e.g., 2 fps), and occlusions by bridges, trees, or other vehicles present challenges to vehicle tracking algorithms, as illustrated in Figure 2.
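To give a feeling for these numbers, the following minimal sketch converts between pixels and metres for a given GSD and estimates how far an object moves between two frames. The function names, the 10 cm GSD, and the 50 km/h vehicle speed are illustrative assumptions and are not taken from the datasets.

```python
def pixels_to_meters(pixels, gsd_cm):
    """Convert a length in pixels to metres for a given GSD (cm per pixel)."""
    return pixels * gsd_cm / 100.0


def displacement_per_frame_px(speed_kmh, fps, gsd_cm):
    """Pixels travelled between two consecutive frames at a constant speed."""
    meters_per_frame = speed_kmh / 3.6 / fps
    return meters_per_frame * 100.0 / gsd_cm


# Assumed values for illustration: GSD of 10 cm, vehicle at 50 km/h, 2 fps.
pedestrian_extent_m = pixels_to_meters(4, 10.0)
vehicle_shift_px = displacement_per_frame_px(50.0, 2.0, 10.0)
```

Under these assumptions, a 4-pixel pedestrian spans roughly 0.4 m, while the vehicle shifts by roughly 69 pixels between consecutive frames, several times the 30 × 15 pixel vehicle size quoted later in the paper.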

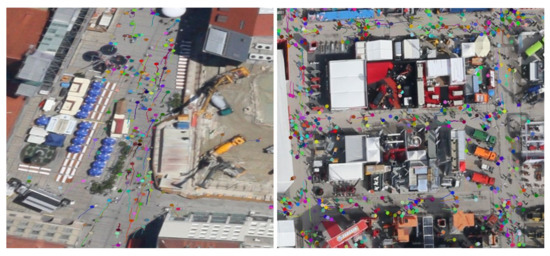

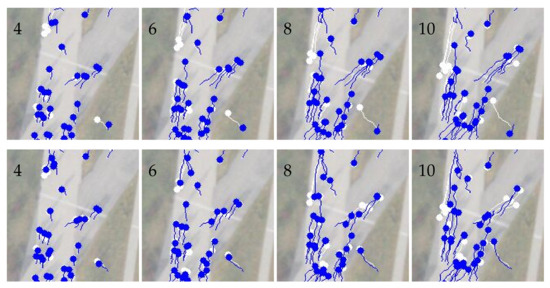

Figure 1.

Multi-pedestrian tracking results of AerialMPTNet on frame 18 of the “Munich02” (left) and frame 10 of the “Bauma3” (right) sequences of the AerialMPT dataset. Different pedestrians are depicted in different colors together with their corresponding trajectories.

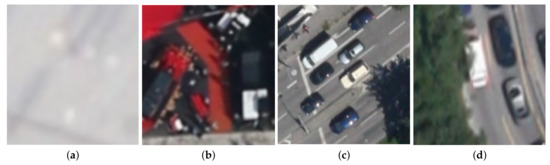

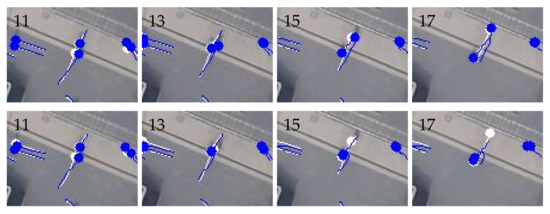

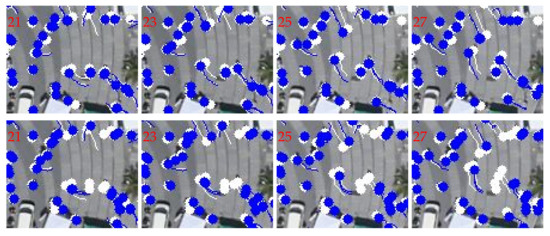

Figure 2.

Illustrations of some challenges in aerial MOT datasets. The examples are from the KIT AIS pedestrian (a), AerialMPT (b), and KIT AIS vehicle datasets (c,d). Multiple pedestrians which are hard to distinguish due to their similar appearance features and low image contrast (a). Multiple pedestrians at a trade fair walking closely together with occlusions, shadows, and strong background colors (b). Multiple vehicles at a stop light where the shadow on the right hand side can be problematic (c). Multiple vehicles with some of them occluded by trees (d).

AerialMPTNet is an end-to-end trainable regression-based neural network comprising a SNN module which takes two image patches as inputs, a target and a search patch, cropped from a previous and a current frame, respectively. The object location is known in the target patch and should be predicted for the search patch. In order to overcome the tracking challenges of aerial MOT, such as objects that have similar appearance features and move densely together, AerialMPTNet incorporates temporal and graphical information in addition to the appearance information provided by the SNN module. Our AerialMPTNet employs a Long Short-Term Memory (LSTM) for temporal information extraction and movement prediction, and a Graph Convolutional Neural Network (GCNN) for modeling the spatial and temporal relationships between adjacent objects (graphical information). AerialMPTNet outputs four values indicating the coordinates of the top-left and bottom-right corners of each object’s bounding box in the search patch. In this paper, we also investigate the influence of Squeeze-and-Excitation (SE) and Online Hard Example Mining (OHEM) [28] on the tracking performance of AerialMPTNet. To the best of our knowledge, ours is the first work to apply adaptive weighting of convolutional channels by SE and to employ OHEM for the training of a DL-based tracking-by-regression method.

According to the results, our AerialMPTNet outperforms all previous methods on the pedestrian datasets and achieves competitive results on the vehicle dataset. Furthermore, the LSTM and GCNN modules add value to the tracking performance. Moreover, while using SE and OHEM can significantly help in some scenarios, in other cases they may degrade the tracking results. In summary, the contributions of this paper over our previous work [26] are:

- We apply OHEM and SE to a MOT task for the first time.

- We propose EOT which outperforms tracking methods with Intersection over Union (IoU)-based association strategy.

- We conduct an ablation study to evaluate the role of all different parts of AerialMPTNet.

- We evaluate the role of loss functions in the tracking performance by comparing the L1 and Huber loss functions.

- We evaluate and compare various MOT methods for pedestrian tracking in aerial imagery.

- We conduct intensive qualitative and quantitative evaluations of AerialMPTNet on two aerial pedestrian and one aerial vehicle tracking datasets.
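The loss comparison listed among the contributions can be made concrete with a small sketch. It assumes that the two compared regression losses are the L1 (mean absolute error) loss and the Huber loss in its standard form; the function names, the `delta` threshold, and the sample coordinates are hypothetical and only illustrate how differently the two losses treat a large error.

```python
def l1_loss(pred, target):
    """Mean absolute error over paired coordinate values."""
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)


def huber_loss(pred, target, delta=1.0):
    """Mean Huber loss: quadratic for |error| <= delta, linear beyond it."""
    total = 0.0
    for p, t in zip(pred, target):
        err = abs(p - t)
        if err <= delta:
            total += 0.5 * err * err
        else:
            total += delta * (err - 0.5 * delta)
    return total / len(pred)


# Hypothetical regression output (x1, y1, x2, y2) and its ground truth.
predicted = [10.0, 12.0, 14.5, 19.0]
ground_truth = [10.5, 12.0, 14.0, 16.0]

l1_value = l1_loss(predicted, ground_truth)
huber_value = huber_loss(predicted, ground_truth, delta=1.0)
```

For small errors the Huber loss is quadratic and therefore yields smaller values and gradients than L1, while for large errors both grow linearly.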

We believe that our paper can promote research on aerial MOT (esp. for pedestrians and vehicles) by providing a deep insight into its challenges and opportunities.

The rest of the paper is organized as follows: Section 2 presents an overview of related works; Section 3 introduces the datasets used in our experiments; Section 4 presents the metrics used for our quantitative evaluations; Section 5 provides a comprehensive study of previous traditional and DL-based tracking methods on the aerial MOT datasets, with Section 8.4 explaining our AerialMPTNet with all its configurations; Section 7 presents our experimental setups; Section 8 provides an extensive evaluation of our AerialMPTNet and compares it to the other methods; and Section 10 concludes our paper and gives ideas for future works.

2. Related Works

Visual object tracking is defined as locating one or multiple objects in videos or image sequences over time. The traditional tracking process comprises four phases: initialization, appearance modeling, motion modeling, and object finding. During initialization, the targets are detected manually or by an object detector. In the appearance modeling step, visual features of the region of interest are extracted by various learning-based methods for detecting the target objects. The variety of scales, rotations, shifts, and occlusions makes this step challenging. Image features play a key role in tracking algorithms. They can be mainly categorized into handcrafted and deep features. In recent years, research studies and applications have focused on developing and using deep features based on Deep Neural Networks (DNNs), which have been shown to incorporate multi-level information and to be more robust against appearance variations [29]. Nevertheless, DNNs require sufficiently large training datasets, which are not always available. Thus, for many applications, handcrafted features are still preferable. The motion modeling step aims at predicting the object movement over time and estimating the object locations in the next frames. This procedure effectively reduces the search space and consequently the computation cost. Widely used methods for motion modeling include the Kalman filter [30], Sequential Monte Carlo methods [31], and RNNs. In the last step, the object locations are found as the ones closest to the locations estimated by the motion model.

2.1. Various Categorizations of VOT

Visual object tracking methods can be divided into SOT [32,33] and MOT [22,34] methods. While SOTs only track a single predetermined object throughout a video, even if there are multiple objects, MOTs can track multiple objects at the same time. Thus, MOTs can face exponential complexity and runtime increase based on the number of objects as compared to SOTs.

Object tracking methods also can be categorized into detection-based [35] and detection-free methods [36]. While the detection-based methods utilize object detectors to detect objects in each frame, the detection-free methods only need the initial object detection. Therefore, detection-free methods are usually faster than the detection-based ones; however, they are not able to detect new objects entering the scene and require manual initialization.

Object tracking methods can be further divided based on their training strategies into those using an online or an offline learning strategy. The methods with an online learning strategy can learn about the tracked objects during runtime. Thus, they can track generic objects [37]. The methods with an offline learning strategy are trained beforehand and are therefore faster during runtime [38].

Tracking methods can also be categorized into online and offline trackers. Offline trackers take advantage of past and future frames, while online ones can only infer from past frames. Although having access to all frames can increase the performance of offline tracking methods, future frames are not available in real-world scenarios.

Most existing tracking approaches are based on a two-stage tracking-by-detection paradigm [39,40]. In the first stage, a set of target samples is generated around the previously estimated position using region proposal, random sampling, or similar methods. In the second stage, each target sample is either classified as background or as the target object. In one-stage-tracking, however, the model receives a search sample together with a target sample as two inputs and directly predicts a response map or object coordinates by a previously trained regressor [17,22].

Object tracking methods can also be categorized into traditional and DL-based ones. Traditional tracking methods mostly rely on Kalman and particle filters to estimate object locations. They use velocity and location information to perform tracking [4,5,41]. Tracking methods relying only on such approaches have shown poor performance in unconstrained environments. Nevertheless, such filters can be advantageous in limiting the search space (decreasing the complexity and computational cost) by predicting and propagating object movements to the following frames. A number of traditional tracking methods follow a tracking-by-detection paradigm based on template matching [42]. A given target patch models the appearance of the region of interest in the first frame. Matching regions are then found in the next frame using correlation, normalized cross-correlation, or the sum of squared distances [43,44]. Scale, illumination, and rotation changes can cause difficulties for these methods. More advanced tracking-by-detection methods rely on discriminative modeling, separating targets from their backgrounds within a specific search space. Various methods have been proposed for discriminative modeling, such as boosting methods and Support Vector Machines (SVMs) [45,46]. A series of traditional tracking algorithms, such as MOSSE and KCF [6,47], utilize correlation filters, which model the target’s appearance by a set of filters trained on the images. In these methods, the target object is initially selected by cropping a small patch from the first frame centered at the object. For the tracking, the filters are convolved with a search window in the next frame. The output response map is assumed to have a peak at the target’s next location. As the correlation can be computed in the Fourier domain, such trackers achieve high frame rates.
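As an illustration of the template matching step, the following minimal sketch slides a target patch over a frame and scores each window by normalized cross-correlation. It is a brute-force spatial-domain version written for clarity, not one of the cited implementations; the function names and the toy frame are assumptions.

```python
import math


def ncc(patch, template):
    """Normalized cross-correlation between two equally sized 2D patches."""
    a = [v for row in patch for v in row]
    b = [v for row in template for v in row]
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    num = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    den = math.sqrt(
        sum((x - mean_a) ** 2 for x in a) * sum((y - mean_b) ** 2 for y in b)
    )
    # A constant window has zero variance; treat it as uncorrelated.
    return num / den if den > 0 else 0.0


def match_template(image, template):
    """Slide the template over the image; return the best (row, col, score)."""
    th, tw = len(template), len(template[0])
    best = (0, 0, -2.0)
    for r in range(len(image) - th + 1):
        for c in range(len(image[0]) - tw + 1):
            window = [row[c:c + tw] for row in image[r:r + th]]
            score = ncc(window, template)
            if score > best[2]:
                best = (r, c, score)
    return best


# Toy example: the target reappears at (2, 2) with doubled contrast and an offset.
template = [[9, 1], [1, 9]]
frame = [
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 20, 4, 0],
    [0, 0, 4, 20, 0],
    [0, 0, 0, 0, 0],
]
best_match = match_template(frame, template)
```

Because the score is normalized by the mean and variance of each window, the match is found despite the linear brightness change, whereas plain correlation or the sum of squared distances would be affected by it.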

Recently, many research works and applications have focused on using DL-based tracking methods. The great advantage of DL-based features over handcrafted ones such as HOG, raw pixel values, or grey-scale templates has been demonstrated previously for a variety of computer vision applications. These features are robust against appearance changes, occlusions, and dynamic environments. Examples of DL-based tracking methods include re-identification with appearance modeling and deep features [34], position regression mainly based on SNNs [16,17], path prediction based on RNN-like networks [48], and object detection with DNNs such as YOLO [49].

2.2. SOTs and MOTs

Among the various categorizations, we adopt the division into SOT and MOT methods for reviewing the existing object tracking methods in this section. We believe that this is the fundamental categorization of tracking methods, as it significantly affects the method design. In the following, we briefly introduce a few recent methods from both categories; their strengths and limitations on aerial imagery are discussed experimentally in Section 5.

2.2.1. SOT Methods

Kalal et al. proposed Median Flow [50], which utilizes point and optical flow tracking. The inputs to the tracker are two consecutive images together with the initial bounding box of the target object. The tracker calculates a set of points from a rectangular grid within the bounding box. Each of these points is tracked by a Lucas-Kanade tracker generating a sparse motion flow. Afterwards, the framework evaluates the quality of the predictions and filters out the worst 50%. The remaining point predictions are used to calculate the new bounding box positions considering the displacement.
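The filtering and update step described above can be sketched as follows. This is a simplified illustration, not the reference implementation: the point tracker itself is left out, the per-point displacements and error scores are hypothetical inputs, the box is only translated (no scale update), and taking the median of the surviving displacements is an assumption suggested by the method's name.

```python
from statistics import median


def median_flow_update(box, displacements, errors):
    """Shift box (x, y, w, h) by the median displacement of the reliable points."""
    # Rank the points from most to least reliable (lowest error first).
    order = sorted(range(len(errors)), key=lambda i: errors[i])
    # Keep the better half, i.e., filter out the worst 50% of the predictions.
    keep = order[: max(1, len(order) // 2)]
    dx = median(displacements[i][0] for i in keep)
    dy = median(displacements[i][1] for i in keep)
    x, y, w, h = box
    return (x + dx, y + dy, w, h)


# Hypothetical per-point displacements (dx, dy) and tracking error scores.
displacements = [(2.0, 1.0), (2.2, 0.8), (9.0, -7.0), (1.8, 1.2), (-5.0, 4.0), (2.0, 1.0)]
errors = [0.1, 0.3, 5.0, 0.2, 4.0, 0.15]
new_box = median_flow_update((50.0, 40.0, 8.0, 8.0), displacements, errors)
```

In this toy input, the two outlier points with large errors are discarded, so the box moves by (2.0, 1.0) rather than being pulled away by the failed point tracks.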

MOSSE [6], KCF [47], and CSRT [51] are based upon DCFs. Bolme et al. [6] proposed MOSSE, which uses a new type of correlation filter called Minimum Output Sum of Squared Errors (MOSSE) that aims at producing stable filters when initialized using only one frame and grey-scale templates. MOSSE is trained with a set of training images $f_i$ and training outputs $g_i$, where $g_i$ is generated from the ground truth as a 2D Gaussian centered on the target. This method can achieve state-of-the-art performance while running at high frame rates. Henriques et al. [47] replaced the grey-scale templates with HOG features and proposed the Kernelized Correlation Filter (KCF). KCF works with multiple channel-like correlation filters. Additionally, the authors proposed using non-linear regression functions, which are stronger than linear functions and provide non-linear filters that can be trained and evaluated as efficiently as linear correlation filters. Similar to KCF, dual correlation filters use multiple channels. However, they are based on linear kernels to reduce the computational complexity while maintaining almost the same performance as the non-linear kernels. Recently, Lukezic et al. [51] proposed to use channel and spatial reliability concepts to improve tracking based on DCFs. In this method, the channel-wise reliability scores weight the influence of the learned filters based on their quality to improve the localization performance. Furthermore, a spatial reliability map concentrates the filters on the relevant part of the object for tracking. This makes it possible to widen the search space and improves the tracking performance for non-rectangular objects.
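For reference, the MOSSE filter has a closed-form solution in the Fourier domain, as derived by Bolme et al. [6]. The notation below is introduced here for illustration: $F_i$ and $G_i$ denote the Fourier transforms of a training image and its desired Gaussian-shaped output, $H$ is the filter, $\odot$ is element-wise multiplication, ${}^*$ is complex conjugation, and the division is element-wise.

$$
\min_{H^*} \sum_i \left| F_i \odot H^* - G_i \right|^2
\quad \Longrightarrow \quad
H^* = \frac{\sum_i G_i \odot F_i^*}{\sum_i F_i \odot F_i^*}
$$

Since both the training and the application of the filter reduce to element-wise operations on Fourier transforms, the cost per frame is dominated by the FFT, which explains the high frame rates of this family of trackers.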

As we stated before, the choice of appearance features plays a crucial role in object tracking. Most previous DCF-based works utilize handcrafted features such as HOG, grey-scale features, raw pixels, and color names or the deep features trained independently for other tasks. Wang et al. [32] proposed an end-to-end trainable network architecture able to learn convolutional features and perform the correlation-based tracking simultaneously. The authors encode a DCF as a correlation filter layer into the network, making it possible to backpropagate the weights through it. Since the calculations remain in the Fourier domain, the runtime complexity of the filter is not increased. The convolutional layers in front of the DCF encode the prior tracking knowledge learned during an offline training process. The DCF defines the network output as the probability heatmaps of object locations.

In the case of generic object tracking, the learning strategy is typically entirely online. However, online training of neural networks is slow because of backpropagation, which leads to a high runtime complexity. To address this, Held et al. [17] developed a regression-based tracking method, called GOTURN, based on a SNN, which uses an offline training approach that helps the network learn the relationship between appearance and motion. This makes the tracking process significantly faster. The method utilizes the knowledge gained during the offline training to track new unknown objects online. The authors showed that, without online backpropagation, GOTURN can track generic objects at 100 fps. The inputs to the network are two image patches cropped from the previous and current frames, centered at the known object position in the previous frame. The size of the patches depends on the object bounding box size and can be controlled by a hyperparameter. This determines the amount of contextual information given to the network. The network output is the coordinates of the object in the current image patch, which are then transformed to image coordinates. GOTURN achieves state-of-the-art performance on common SOT benchmarks such as VOT 2014 (https://www.votchallenge.net/vot2014/, accessed on 10 May 2021).
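The patch geometry described above can be sketched in a few lines. This is a simplified illustration under stated assumptions: the names `crop_region`, `patch_to_image`, and the `context` factor are hypothetical, and the resizing of the patch to the fixed network input size (and the corresponding rescaling of the output) is omitted.

```python
def crop_region(box, context=2.0):
    """Return the (x1, y1, x2, y2) crop centered on box, enlarged by `context`."""
    x1, y1, x2, y2 = box
    cx, cy = (x1 + x2) / 2.0, (y1 + y2) / 2.0
    half_w = context * (x2 - x1) / 2.0
    half_h = context * (y2 - y1) / 2.0
    return (cx - half_w, cy - half_h, cx + half_w, cy + half_h)


def patch_to_image(pred_in_patch, region):
    """Map a box predicted in patch coordinates back to image coordinates."""
    ox, oy = region[0], region[1]
    px1, py1, px2, py2 = pred_in_patch
    return (px1 + ox, py1 + oy, px2 + ox, py2 + oy)


# Known object box in the previous frame (hypothetical values).
previous_box = (100.0, 200.0, 110.0, 220.0)
# The same region is cropped from the previous frame (target patch)
# and from the current frame (search patch).
region = crop_region(previous_box, context=2.0)
# Hypothetical network output in search-patch coordinates.
current_box = patch_to_image((6.0, 12.0, 16.0, 32.0), region)
```

A larger `context` value gives the network more surrounding information and tolerates faster motion, at the cost of including more distractors in the search patch.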

2.2.2. MOT Methods

Bewley et al. [27] proposed a simple multi-object tracking approach, called SORT, for online tracking applications. Bounding box position and size are the only values used for motion estimation and assigning the objects to their new positions in the next frame. In the first step, objects are detected using Faster R-CNN [12]. Subsequently, a linear constant velocity model approximates the movements of each object individually in consecutive frames. Afterwards, the algorithm compares the detected bounding boxes to the predicted ones based on IoU, resulting in a distance matrix. The Hungarian algorithm [52] then assigns each detected bounding box to a predicted (target) bounding box. Finally, the states of the assigned targets are updated using a Kalman filter. SORT runs with more than 250 Frames per Second (fps) with almost state-of-the-art accuracy. Nevertheless, occlusion scenarios and re-identification issues are not considered for this method, which makes it inappropriate for long-term tracking.
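The association step can be sketched as follows. To stay dependency-free, this example replaces the Hungarian algorithm with an exhaustive search over assignments, which yields the same optimum but is only practical for a handful of objects; the Kalman filter update is omitted, and the `min_iou` threshold of 0.3 and all box values are assumptions for illustration.

```python
from itertools import permutations


def iou(a, b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def associate(predicted, detected, min_iou=0.3):
    """Return (prediction index, detection index) pairs maximizing the total IoU.

    Brute-force stand-in for the Hungarian algorithm; assumes that there are
    at least as many detections as predictions.
    """
    best_total, best_perm = -1.0, ()
    for perm in permutations(range(len(detected)), len(predicted)):
        total = sum(iou(predicted[i], detected[j]) for i, j in enumerate(perm))
        if total > best_total:
            best_total, best_perm = total, perm
    # Reject assignments whose overlap is too small to be plausible.
    return [(i, j) for i, j in enumerate(best_perm)
            if iou(predicted[i], detected[j]) >= min_iou]


# Boxes predicted by the motion model and boxes found by the detector.
predicted = [(0, 0, 10, 10), (20, 20, 30, 30)]
detected = [(21, 19, 31, 29), (1, 1, 11, 11), (50, 50, 60, 60)]
matches = associate(predicted, detected)
```

The third detection remains unmatched and would start a new track. Note that an IoU-based cost becomes uninformative when boxes of tiny objects do not overlap between consecutive frames, which is the situation for pedestrians in the aerial datasets considered here.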

Wojke et al. [34] extended SORT to DeepSORT and tackled the occlusion and re-identification challenges, keeping the track handling and Kalman filtering modules almost unaltered. The main improvement takes place in the assignment process, in which two additional metrics are used: (1) motion information based on the Mahalanobis distance between the detected and predicted bounding boxes, and (2) appearance information obtained by calculating the cosine distance between the appearance features of a detected object and those of the already tracked objects. The appearance features are computed by a deep neural network trained on a large person re-identification dataset [53]. A cascade strategy then determines the object-to-track assignments. This strategy effectively encodes the probability spread in the association likelihood. DeepSORT performs poorly if the cascade strategy cannot match the detected and predicted bounding boxes.
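The appearance metric can be illustrated with a minimal sketch of the cosine distance between feature vectors. The three-dimensional features and track names are hypothetical stand-ins for the embeddings of the re-identification network; the Mahalanobis gating and the matching cascade are not shown.

```python
import math


def cosine_distance(u, v):
    """Cosine distance (1 - cosine similarity) between two feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / norm


# Hypothetical appearance features of two existing tracks and one detection.
track_features = {"track_1": [1.0, 0.0, 0.0], "track_2": [0.0, 1.0, 1.0]}
detection_feature = [0.1, 0.9, 1.0]

distances = {
    name: cosine_distance(feature, detection_feature)
    for name, feature in track_features.items()
}
closest_track = min(distances, key=distances.get)
```

Such a metric is only as discriminative as the features themselves, which is a limitation when objects cover just a few pixels and look alike.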

Recently, Bergmann et al. [1] introduced Tracktor++, which is based on the Faster R-CNN object detection method. Faster R-CNN classifies region proposals as target or background and fits the selected bounding boxes to the object contours by a regression head. The authors trained Faster R-CNN on the MOT17Det pedestrian dataset [25]. The first step is object detection by Faster R-CNN. The objects detected in the first frame are then initialized as tracks. Afterwards, the tracks are propagated to the next frame by regressing their bounding boxes using the regression head. In this method, lost or deactivated tracks can be re-identified in the following frames using a SNN and a constant velocity motion model.

2.3. Tracking in Satellite and Aerial Imagery

The object tracking methods reviewed in the previous sections have been mainly developed for computer vision datasets and challenges. In this section, we focus on the methods proposed for satellite and aerial imagery. Visual object tracking for targets such as pedestrians and vehicles in satellite and aerial imagery is a challenging task that has been addressed by only a few works, compared to the huge number addressing pedestrian and vehicle tracking in ground imagery [13,54]. Tracking in satellite and aerial imagery is much more complex. This is due to the moving cameras, large image sizes, different scales, large number of moving objects, tiny size of the objects (e.g., 4 × 4 pixels for pedestrians, 30 × 15 pixels for vehicles), low frame rates, different visibility levels, and different atmospheric and weather conditions [25,55].

2.3.1. Tracking by Moving Object Detection

Most of the previous works in satellite and aerial object tracking are based on moving object detection [23,24,56]. Reilly et al. [23] proposed one of the earliest aerial object tracking approaches, focusing on vehicle tracking mainly on highways. They compensate for camera motion by a correction method based on point correspondence. A median background image is then modeled from ten frames and subtracted from the original frame for motion detection, resulting in the moving object positions. All images are split into overlapping grids, with each grid cell defining an independent tracking problem. Objects are tracked using a bipartite graph, matching a set of label nodes to a set of target nodes. The Hungarian algorithm then solves the cost matrix to determine the assignments. The use of grids allows tracking a large number of objects despite the $O(n^3)$ runtime complexity of the Hungarian algorithm.
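The median background modeling step can be illustrated with a small sketch. It assumes that the frames are already registered (camera motion compensated), uses five tiny synthetic frames instead of ten real ones, and the intensity `threshold` is an arbitrary illustrative value.

```python
from statistics import median


def median_background(frames):
    """Per-pixel median over a list of equally sized, registered 2D frames."""
    rows, cols = len(frames[0]), len(frames[0][0])
    return [[median(f[r][c] for f in frames) for c in range(cols)]
            for r in range(rows)]


def moving_pixels(frame, background, threshold=30):
    """Pixels whose intensity deviates strongly from the background model."""
    return [(r, c)
            for r, row in enumerate(frame)
            for c, value in enumerate(row)
            if abs(value - background[r][c]) > threshold]


def make_frame(col):
    """Synthetic 3 x 4 frame: flat background with one bright object in row 1."""
    frame = [[10] * 4 for _ in range(3)]
    frame[1][col] = 200
    return frame


# The object moves along row 1 and pauses in the last column.
frames = [make_frame(c) for c in (0, 1, 2, 3, 3)]
background = median_background(frames)
detections = moving_pixels(frames[2], background)
```

Because the object occupies each pixel in fewer than half of the frames, the median recovers the empty background. An object that stays in place for most of the frames would instead be absorbed into the background, which is why such methods cannot handle static objects.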

Meng et al. [24] followed the same direction. They addressed the tracking of ships and grounded aircraft. Their method detects moving objects by calculating an Accumulative Difference Image (ADI) from frame to frame. Pixels with high values in the ADI are likely to belong to moving objects. Each target is afterwards modeled by extracting its spectral and spatial features, where the spectral features refer to the target probability density functions and the spatial features to the target geometric areas. Given the target model, matching candidates are found in the following frames via regional feature matching using a sliding window paradigm.

Tracking methods based on moving object detection are not applicable to our pedestrian and vehicle tracking scenarios. For instance, Reilly et al. [23] use a road orientation estimate to constrain the assignment problem. Such estimates, which may work for vehicles moving along predetermined paths (e.g., highways and streets), do not work for pedestrian tracking with its much more diverse and complex movement behaviors (e.g., crowded situations and multiple crossings). In general, such methods perform poorly in unconstrained environments, are sensitive to illumination changes and atmospheric conditions (e.g., clouds, shadows, or fog), suffer from the parallax effect, and cannot handle small or static objects. Additionally, since finding the moving objects requires considering multiple frames, these methods cannot be used for real-time object tracking.

2.3.2. Tracking by Appearance Features

The methods based on appearance-like features overcome the issues of the approaches based on moving object detection [22,57,58,59,60], making it possible to detect small and static objects in single images. Butenuth et al. [57] deal with pedestrian tracking in aerial image sequences. They employ an iterative Bayesian tracking approach to track numerous pedestrians, where each pedestrian is described by its position, appearance features, and direction. A linear dynamic model then predicts future states. Each link between a prediction and a detection is weighted by evaluating the state similarity and associated with the direct link method described in [35]. Schmidt et al. [58] developed a tracking-by-detection framework based on Haar-like features. They use a Gentle AdaBoost classifier for object detection and an iterative Bayesian tracking approach, similar to [57]. Additionally, they calculate the optical flow between consecutive frames to extract motion information. However, due to the difficulties of detecting small objects in aerial imagery, the performance of the method is degraded by a large number of false positives and negatives.

Bahmanyar et al. [22] proposed the Stack of Multiple Single Object Tracking CNNs (SMSOT-CNN), which extends GOTURN, a SOT method developed by Held et al. [17], by stacking its architecture to track multiple pedestrians and vehicles in aerial image sequences. SMSOT-CNN is the only previous DL-based work dealing with MOT in aerial imagery. SMSOT-CNN expands the GOTURN network by three additional convolutional layers to improve the tracker’s performance in locating the object in the search area. In their architecture, each SOT-CNN is responsible for tracking one object individually, leading to a linear increase in the tracking complexity with the number of objects. They evaluate their approach on the vehicle and pedestrian sets of the KIT AIS aerial image sequence dataset. Experimental results show that SMSOT-CNN significantly outperforms GOTURN. Nevertheless, SMSOT-CNN performs poorly in crowded situations and when objects share similar appearance features.

In Section 5, we experimentally investigate a set of the reviewed visual object tracking methods on three aerial object tracking datasets.

3. Datasets

In this section, we introduce the datasets used in our experiments, namely the KIT AIS (pedestrian and vehicle sets), the Aerial Multi-Pedestrian Tracking (AerialMPT) [26], and DLR’s Aerial Crowd Dataset (DLR-ACD) [61]. All these datasets are the first of their kind and aim at promoting pedestrian and vehicle detection and tracking based on aerial imagery. The images of all these datasetes have been acquired by the German Aerospace Center (DLR) using the 3K camera system, comprising a nadir-looking and two side-looking DSLR cameras, mounted on an airborne platform flying at different altitudes. The different flight altitudes and camera configurations allow capturing images with multiple spatial resolutions (ground sampling distances-GSDs) and viewing angles.

For the tracking datasets, since the camera is continuously moving, all images were orthorectified with a digital elevation model, co-registered, and geo-referenced with a GPS/IMU system in a post-processing step. Afterwards, images taken at the same time were fused into a single image and cropped to the region of interest. This process caused small errors visible in the frame alignments. Moreover, the frame rate of all sequences is 2 Hz. The image sequences were captured during different flight campaigns and differ significantly in object density, movement patterns, qualities, image sizes, viewing angles, and terrains. Furthermore, different sequences are composed of a varying number of frames, ranging from 4 to 47. The number of frames per sequence depends on the image overlap in flight direction and the camera configuration.

3.1. KIT AIS

The KIT AIS dataset is generated for two tasks, vehicle and pedestrian tracking. The data have been annotated manually by human experts and suffer from a few human errors. Vehicles are annotated by the smallest enclosing rectangle (i.e., bounding box) oriented in the direction of their travel, while individual pedestrians are marked by point annotations on their heads. In our experiments, we used bounding boxes of sizes and pixels for the pedestrians according to the GSDs of the images, ranging from 12 to 17 cm. As objects may leave the scene or be occluded by other objects, the tracks are not labeled continuously for all cases. For the vehicle set, cars, trucks, and buses are annotated if they lie entirely within the image region with more than of their bodies visible. In the pedestrian set, only pedestrians are labeled. Due to crowded scenarios or adverse atmospheric conditions in some frames, pedestrians can be hardly visible. In these cases, the tracks have been estimated by the annotators as precisely as possible. Table 1 and Table 2 present the statistics of the pedestrian and vehicle sets of the KIT AIS dataset, respectively.

Table 1.

Statistics of the KIT AIS pedestrian dataset.

Table 2.

Statistics of the KIT AIS vehicle dataset.

The KIT AIS pedestrian dataset is composed of 13 sequences with 2649 pedestrians (Pedest.), annotated by 32,760 annotation points (Anno.) throughout the frames (Table 1). The dataset is split into 7 training and 6 testing sequences with 104 and 85 frames (Fr.), respectively. The sequences are characterized by different lengths ranging from 4 to 31 frames. The image sequences come from different flight campaigns over Allianz Arena (Munich, Germany), Rock am Ring concert (Nuremberg, Germany), and Karlsplatz (Munich, Germany).

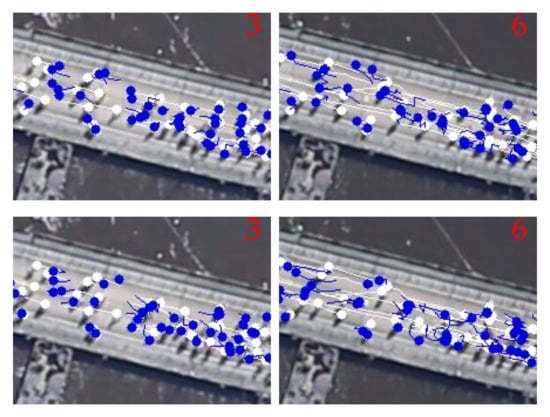

The KIT AIS vehicle dataset comprises nine sequences with 464 vehicles annotated by 10,817 bounding boxes throughout 239 frames. It has no pre-defined train/test split. For our experiments, we split the dataset into five training and four testing sequences with 131 and 108 frames, respectively, similarly to [22]. According to Table 2, the lengths of the sequences vary between 14 and 47 frames. The image sequences have been acquired from a few highways, crossroads, and streets in Munich and Stuttgart, Germany. The dataset presents several tracking challenges such as lane change, overtaking, and turning maneuvers as well as partial and total occlusions by big objects (e.g., bridges). Figure 3 shows sample images from the KIT AIS vehicle dataset.

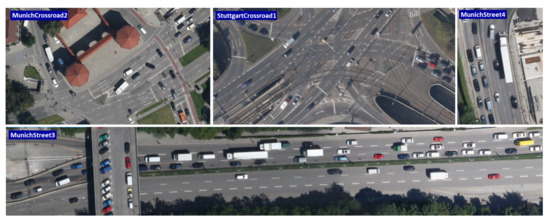

Figure 3.

Sample images from the KIT AIS vehicle dataset acquired at different locations in Munich and Stuttgart, Germany.

3.2. AerialMPT

The Aerial Multi-Pedestrian Tracking (AerialMPT) dataset [26] is newly introduced to the community and deals with the shortcomings of the KIT AIS dataset such as the poor image quality and limited diversity. AerialMPT consists of 14 sequences with 2528 pedestrians annotated by 44,740 annotation points throughout 307 frames (Table 3). Since the images have been acquired by a newer version of the DLR’s 3K camera system, their quality and contrast are much better than those of the images of the KIT AIS dataset. Figure 4 compares a few sample images from the AerialMPT and KIT AIS datasets.

Table 3.

Statistics of the AerialMPT dataset.

Figure 4.

Sample images from the AerialMPT and KIT AIS datasets. “Bauma3”, “Witt”, “Pasing1” are from AerialMPT. “Entrance_01”, “Walking_02”, and “Munich02” are from KIT AIS.

AerialMPT is split into 8 training and 6 testing sequences with 179 and 128 frames, respectively. The lengths of the sequences vary between 8 and 30 frames. The image sequences were selected from different crowd scenarios, ranging from moving pedestrians at mass events and fairs to sparser crowds in city centers. Figure 1 shows an image from the AerialMPT dataset with the overlaid annotations.

AerialMPT vs. KIT AIS

AerialMPT has been generated in order to mitigate the limitations of the KIT AIS pedestrian dataset. In addition to the higher quality of the images, the minimum number of annotations per frame and the total number of annotations of AerialMPT are significantly larger than those of the KIT AIS dataset. All sequences in AerialMPT contain at least 50 pedestrians, while more than 20% of the sequences of KIT AIS include fewer than ten pedestrians. Based on our visual inspection, the pedestrian movements in AerialMPT are more complex and realistic, and the crowd densities are more diverse than those of KIT AIS. The sequences in AerialMPT differ in weather conditions and visibility, incorporating more diverse kinds of shadows as compared to KIT AIS. Furthermore, the sequences of AerialMPT are longer on average, with 60% of them longer than 20 frames (less than 20% in KIT AIS). Further details on these datasets can be found in [26].

3.3. DLR-ACD

DLR-ACD is the first aerial crowd image dataset [61]. It comprises 33 large aerial RGB images with average size of pixels from different mass events and urban scenes containing crowds such as sports events, city centers, open-air fairs, and festivals. The GSDs of the images vary between 4.5 and 15 cm/pixel. In DLR-ACD, 226,291 pedestrians have been manually labeled by point annotations, with the number of pedestrians ranging from 285 to 24,368 per image. In addition to its unique viewing angle, the large number of pedestrians in most of the images (>2 K) makes DLR-ACD stand out among the existing crowd datasets. Moreover, the crowd density can vary significantly within each image due to the large field of view of the images. Figure 5 shows example images from the DLR-ACD dataset. For further details on this dataset, the interested reader is referred to [61].

Figure 5.

Example images of the DLR-ACD dataset. The images are from an open-air (a) festival and (b) music concert.

4. Evaluation Metrics

In this section, we introduce the most important metrics we use for our quantitative evaluations. We adopted widely-used metrics in the MOT domain based on [25], which are listed in Table 4. In this table, ↑ and ↓ denote higher or lower values being better, respectively. The objective of MOT is finding the spatial positions of p objects as bounding boxes throughout an image sequence (object trajectories). Each bounding box is defined by the x and y coordinates of its top-left and bottom-right corners in each frame. Tracking performances are evaluated based on true positives (TP), correctly predicting the object positions, false positives (FP), predicting the position of another object instead of the target object’s position, and false negatives (FN), where an object position is totally missed. In our experiments, a prediction (tracklet) is considered as TP if the intersection over union (IoU) of the predicted and the corresponding ground truth bounding boxes is greater than 0.5. Moreover, an identity switch (IDS) occurs if an annotated object a is associated with a tracklet t, and the assignment in the previous frame was to a different tracklet. The fragmentation metric shows the total number of times a trajectory is interrupted during tracking.
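The TP criterion above can be illustrated with a minimal sketch. The function names are hypothetical, and the default threshold of 0.5 is an assumption taken from the 50% overlap mentioned in Section 5.3; boxes follow the corner convention described in the text.

```python
def iou(box_a, box_b):
    """IoU of two boxes given as (x1, y1, x2, y2) corner coordinates."""
    # Width and height of the overlap region (zero if the boxes are disjoint).
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_true_positive(pred_box, gt_box, threshold=0.5):
    """Assumed rule: a prediction is a TP when its IoU exceeds the threshold."""
    return iou(pred_box, gt_box) > threshold
```

For the very small boxes used in aerial pedestrian tracking, a shift of one or two pixels already changes the IoU considerably, which is why this criterion is strict in the present setting.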

Table 4.

Description of the metrics used for quantitative evaluations.

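Among these metrics, the crucial ones are the Multiple-Object Tracker Accuracy (MOTA) and the Multiple-Object Tracker Precision (MOTP). MOTA represents the ability of trackers to follow the trajectories throughout the frames t, independently of the precision of the predictions:

$$\mathrm{MOTA} = 1 - \frac{\sum_t \left( FN_t + FP_t + IDS_t \right)}{\sum_t GT_t},$$

where $FN_t$, $FP_t$, and $IDS_t$ are the numbers of false negatives, false positives, and identity switches in frame t, and $GT_t$ is the number of ground truth objects in frame t.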

The Multiple-Object Tracker Accuracy Log (MOTAL) is similar to MOTA; however, ID switches are considered on a logarithmic scale.

MOTP measures the performance of the trackers in precisely estimating object locations:

$$\mathrm{MOTP} = \frac{\sum_{t,i} d_{t,i}}{\sum_t c_t},$$

where $d_{t,i}$ is the distance between a matched object i and the ground truth annotation in frame t, and $c_t$ is the number of matched objects in frame t.
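As a hedged illustration of the two headline metrics, the sketch below computes MOTA and MOTP from per-frame quantities following the standard definitions. The function and argument names are hypothetical, and the per-frame lists are assumed inputs rather than the output of a particular evaluation toolkit.

```python
def mota(fn, fp, ids, gt):
    """MOTA from per-frame counts of FNs, FPs, ID switches, and GT objects."""
    errors = sum(fn) + sum(fp) + sum(ids)
    # The score becomes negative when the errors outnumber the GT objects.
    return 1.0 - errors / sum(gt)


def motp(distances_per_frame):
    """MOTP: summed match distances divided by the total number of matches.

    distances_per_frame holds one list per frame with the distance (or
    overlap) value of every matched object in that frame.
    """
    num_matches = sum(len(frame) for frame in distances_per_frame)
    total = sum(sum(frame) for frame in distances_per_frame)
    return total / num_matches
```

Because the error counts are not bounded by the number of ground truth objects, MOTA can fall below zero, as it does for several of the trackers evaluated in Section 5.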

Each tracklet can be considered as mostly tracked (MT), partially tracked (PT), or mostly lost (ML), based on how successfully an object is tracked during its whole lifetime. A tracklet is mostly lost if it is tracked for less than 20% of its lifetime and mostly tracked if it is tracked for more than 80% of its lifetime. Partially tracked applies to all remaining tracklets. We report MT, PT, and ML as percentages of the total number of tracks. The false acceptance rate (FAR) for an image sequence with f frames describes the average number of FPs per frame:

$$\mathrm{FAR} = \frac{\sum_t FP_t}{f}.$$

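In addition, we use recall and precision measures, defined as follows:

$$\mathrm{Rcll} = \frac{\sum_t TP_t}{\sum_t \left( TP_t + FN_t \right)}, \qquad \mathrm{Prcn} = \frac{\sum_t TP_t}{\sum_t \left( TP_t + FP_t \right)}.$$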

Identification precision (IDP), identification recall (IDR), and IDF1 are similar to precision and recall; however, they take into account how long the tracker correctly identifies the targets. IDP and IDR are the ratios of computed and ground-truth detections that are correctly identified, respectively. IDF1 is calculated as the ratio of correctly identified detections over the average number of computed and ground-truth detections. IDF1 allows ranking different trackers based on a single scalar value. For any further information on these metrics, the interested reader is referred to [62].
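The remaining count-based metrics can be sketched in a few lines. This is a minimal illustration under the definitions given in this section; all function names are hypothetical, and the inputs are assumed to be totals accumulated over a sequence.

```python
def recall(tp, fn):
    """Fraction of ground truth objects that were correctly predicted."""
    return tp / (tp + fn)


def precision(tp, fp):
    """Fraction of predictions that were correct."""
    return tp / (tp + fp)


def far(fp_total, num_frames):
    """False acceptance rate: average number of FPs per frame."""
    return fp_total / num_frames


def idf1(correctly_identified, num_computed, num_ground_truth):
    """Correctly identified detections over the mean of computed and GT detections."""
    return correctly_identified / ((num_computed + num_ground_truth) / 2)


def track_status(tracked_frames, lifetime_frames):
    """Classify a track as MT (>80%), ML (<20%), or PT (everything else)."""
    ratio = tracked_frames / lifetime_frames
    if ratio > 0.8:
        return "MT"
    if ratio < 0.2:
        return "ML"
    return "PT"
```

For example, a track that is followed in 9 of its 10 frames is classified as MT, while one followed in exactly 8 of 10 frames falls into PT because the 80% bound is strict.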

5. Preliminary Experiments

This section empirically shows the existing challenges in aerial pedestrian tracking. We study the performance of a number of existing tracking methods including KCF [47], MOSSE [6], CSRT [51], Median Flow [50], SORT, DeepSORT [34], Stacked-DCFNet [32], Tracktor++ [1], SMSOT-CNN [22], and Euclidean Online Tracking on aerial data, and show their strengths and limitations. Since in the early phase of our research, only the KIT AIS pedestrian dataset was available to us, the experiments of this section have been conducted on this dataset. However, our findings also hold for the AerialMPT dataset.

The tracking performance is usually correlated with the detection accuracy for both detection-free and detection-based methods. As our main focus is on tracking performance, in most of our experiments we assume perfect detection results and use the ground truth data. While the detection-free methods are given the object locations only in the first frame, the detection-based methods are provided with the object locations in every frame. Therefore, for the detection-based methods, the most relevant measure is the number of ID switches, while for the other methods all metrics are considered in our evaluations.

5.1. From Single- to Multi-Object Tracking

Many tracking methods have been initially designed to track only single objects. However, according to [22], most of them can be extended to handle MOT. Tracking management is an essential function in MOT which stores and exploits multiple active tracks at the same time, in order to remove and initialize the tracks of objects leaving from and entering into the scenes. For our experiments we developed a tracking management module for extending the SOT methods to MOT. It unites memory management, including the assignment of unique track IDs and individual object position storage, with track initialization, aging and removing functionalities.
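A minimal sketch of such a tracking management module is given below. It is not the authors' implementation: the class and method names are hypothetical, and "age" is assumed to count the frames since a track was last updated. The default maximum age of 3 frames follows the removal rule stated later in this section.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class Track:
    track_id: int
    positions: list = field(default_factory=list)
    age: int = 0  # assumed meaning: frames since the last successful update


class TrackManager:
    """Stores active tracks and handles initialization, aging, and removal."""

    def __init__(self, max_age=3):
        self._ids = count(1)  # source of unique track IDs
        self.max_age = max_age
        self.tracks = {}

    def initialize(self, position):
        track = Track(next(self._ids), [position])
        self.tracks[track.track_id] = track
        return track.track_id

    def update(self, track_id, position):
        track = self.tracks[track_id]
        track.positions.append(position)
        track.age = 0

    def mark_missed(self, track_id):
        self.tracks[track_id].age += 1

    def prune(self, in_scene):
        """Remove tracks that left the scene and are older than max_age."""
        removed = [tid for tid, track in self.tracks.items()
                   if not in_scene(track.positions[-1]) and track.age > self.max_age]
        for tid in removed:
            del self.tracks[tid]
        return removed
```

Each SOT instance would then be driven through `update` or `mark_missed` once per frame, with `prune` called afterwards to drop objects that have left the image.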

OpenCV provides several built-in object tracking algorithms. Among them, we investigate the KCF, MOSSE, CSRT, and Median Flow SOT methods. We extend them to the MOT scenarios within the OpenCV framework. We initialize the trackers by the ground truth bounding box positions.

DCFNet [32] is also an SOT method based on a DCF. However, the DCF is implemented as part of a DNN and uses the features extracted by a light-weight CNN. Therefore, DCFNet is a well-suited choice to study whether deep features improve the tracking performance compared to the handcrafted ones. For our experiments, we took the PyTorch implementation (https://github.com/foolwood/DCFNet_pytorch, accessed on 10 May 2021) of DCFNet and modified its network structure to handle multi-object tracking; we refer to it as “Stacked-DCFNet”. From the KIT AIS pedestrian training set, we crop a total of 20,666 image patches centered at every pedestrian. The patch size is the bounding box size multiplied by 10 in order to consider contextual information to some degree. Then we scale the patches to 125 × 125 pixels to match the network input size. Using the patches, we retrain the convolutional layers of the network for 50 epochs with the ADAM [63] optimizer, MSE loss, an initial learning rate of 0.01, and a batch size of 64. Moreover, we set the spatial bandwidth to 0.1 for both online tracking and offline training. Furthermore, in order to adapt it to MOT, we use the Python module we developed. Multiple targets are given to the network within one batch. For each target object, the network receives two image patches, from the previous and current frames, centered on the known previous position of the object. The network output is the probability heatmap in which the highest value represents the most likely object location in the image patch of the current frame (search patch). If this value is below a certain threshold, we consider the object as lost. Furthermore, we propose a simple linear motion model and set the center point of the search patch to the position estimate of this model instead of the position of the object in the previous frame patch (as in the original work). Based on the latest movement of a target, we estimate its position as:

where k determines the influence of the last movement. For all of the methods, we remove the objects if they leave the scene and their track ages are greater than 3 frames.
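The linear motion model can be sketched as follows. The exact form used in the experiments is not reproduced here, so the formulation below (last position plus k times the last displacement) is an assumption consistent with the description; the function name and the default value of k are hypothetical.

```python
def predict_position(last_pos, second_last_pos, k=0.5):
    """Assumed linear motion model: extrapolate the last movement, scaled by k.

    last_pos and second_last_pos are (x, y) positions in the two most recent
    frames; k = 0 keeps the last position, k = 1 repeats the full movement.
    """
    dx = last_pos[0] - second_last_pos[0]
    dy = last_pos[1] - second_last_pos[1]
    return (last_pos[0] + k * dx, last_pos[1] + k * dy)
```

The returned estimate would then serve as the center of the search patch in the current frame.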

Table 5 and Table 6 show the overall and sequence-wise tracking results of these methods on the KIT AIS pedestrian dataset, respectively. The results of Table 5 indicate the poor performance of the methods based on handcrafted features, with total MOTA scores varying between −85.8 and −55.9. The results of KCF and MOSSE are very similar. However, the use of HOG features and non-linear kernels in KCF improves MOTA by 0.9 and MOTP by 0.5 points, respectively, compared to MOSSE. Moreover, both methods mostly track only about 1% of the pedestrians on average. However, they have the first and second best MOTP values among the compared methods in Table 5. This indicates that although they lose track of many objects (partially or totally), their tracking localization is relatively precise. Moreover, according to the results, Stacked-DCFNet significantly outperforms the methods with handcrafted features with a MOTA score of −37.3 (18.6 points higher than that of CSRT). The MT and ML rates also improve: Stacked-DCFNet mostly loses only 23.6% of all tracks while mostly tracking 13.8% of the pedestrians.

Table 5.

Results of KCF, MOSSE, CSRT, Median Flow, and Stacked-DCFNet on the KIT AIS pedestrian dataset. The first and second best values are highlighted.

Table 6.

Results of KCF, MOSSE, CSRT, Median Flow, and Stacked-DCFNet on different sequences of KIT AIS pedestrian dataset. The first and second best values of each method on the sequences are highlighted.

CSRT (which is also DCF-based) outperforms KCF and MOSSE significantly, reaching a total MOTA and MOTP of −55.9 and 78.4. The smaller MOTP value of CSRT indicates its slightly worse tracklet localization precision as compared to KCF and MOSSE. Furthermore, it mostly tracks about 10% of the pedestrians on average, which indicates the effectiveness of the channel and reliability scores. According to the table, Median Flow achieves comparable results to CSRT with total MOTA and MOTP scores of −63.8 and 77.7, respectively. Comparing the results of different sequences in Table 6 indicates that all algorithms perform significantly better on the “RaR_Snack_Zone_02” and “RaR_Snack_Zone_04” sequences. Based on visual inspection, we argue that this is due to their short length resulting in fewer lost objects and ID switches. Comparing their performances on the longer sequences (“AA_Crossing_02”, “AA_Walking_02” and “Munich02”) demonstrates that Stacked-DCFNet performs much better than the other methods on these sequences, showing the ability of the method to track objects for a longer time.

Altogether, according to the results, we argue that the deep features outperform the handcrafted ones by a large margin.

5.2. Multi-Object Trackers

In this section, we study a number of MOT methods including SORT, DeepSORT, and Tracktor++. Additionally, we propose a new tracking algorithm called Euclidean Online Tracking (EOT) which uses the Euclidean distance for object matching.

5.2.1. DeepSORT and SORT

DeepSORT [34] is an MOT method comprising deep features and an IoU-based tracking strategy. For our experiments, we use the PyTorch implementation (https://github.com/ZQPei/deep_sort_pytorch, accessed on 10 May 2021) of DeepSORT and adapt it for the KIT AIS dataset by changing the bounding box size and IoU threshold, as well as fine-tuning the network on the training set of the KIT AIS dataset. As mentioned, for the object locations we use the ground truth and do not use DeepSORT’s object detector. Table 7 and Table 8 show the tracking results of our experiments, in which Rcll, Prcn, FAR, MT, PT, ML, FN, FM, and MOTP are not important in our evaluations as the ground truth is used instead of the detection results. Therefore, the best values for these metrics are not highlighted for any of the methods in Table 7 or for the DeepSORT and SORT variants in Table 8.

Table 7.

Results of DeepSORT, SORT, Tracktor++, and SMSOT-CNN on the KIT AIS pedestrian dataset. The first and second best values are highlighted.

Table 8.

Results of DeepSORT, SORT, Tracktor++, and SMSOT-CNN on different sequences of the KIT AIS pedestrian dataset. The first and second best values of each method on the sequences are highlighted.

In the first experiment, we employ DeepSORT with its original parameter settings. As the results show, this configuration is not suitable for tracking small objects (pedestrians) in aerial imagery. DeepSORT utilizes deep appearance features to associate objects to tracklets; however, for the first few frames, it relies on the IoU metric until enough appearance features are available. The original IoU threshold is . The standard DeepSORT uses a Kalman filter for each object to estimate its position in the next frame. However, due to small IoU overlaps between most predictions and detections, many tracks cannot be associated with any detection, making it impossible to use the deep features afterwards. The main cause of the small overlaps is the small size of the bounding boxes. For example, if the Kalman filter estimates the object position only 2 pixels off the detection’s position, for a bounding box of 4 × 4 pixels, the overlap would be below the threshold and, consequently, the tracklet and the object cannot be matched. These mismatches result in a large number of falsely initiated new tracks, leading to a total of 8627 ID switches, an average of 8.27 ID switches per person, and an average of 0.71 ID switches per detection.

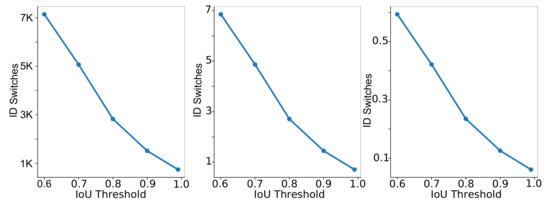

We tackle this problem by enlarging the bounding boxes by a factor of two in order to increase the IoU overlaps, increase the number of matched tracklets and detections, and enable the use of appearance features. According to Table 7, this configuration (DeepSORT-BBX) results in a 41.19% decrease in the total number of ID switches (from 8627 to 5073), a 41.23% decrease in the average number of ID switches per person (from 8.27 to 4.86), and a 40.85% decrease in the average number of ID switches per detection (from 0.71 to 0.42). We further analyze the impact of using different IoU thresholds on the tracking performance. Figure 6 illustrates the number of ID switches with different IoU thresholds. It can be observed that by increasing the threshold (minimizing the required overlap for object matching) the number of ID switches decreases. The smallest number of ID switches (738 switches) is achieved by the IoU threshold of 0.99, as can be seen in Table 7 for DeepSORT-IoU. Based on the results, enlarging the bounding boxes and changing the IoU threshold significantly improves the tracking results of DeepSORT-BBX-IoU as compared to the original settings of DeepSORT (ID switches by 91.44% and MOTA by 3.7 times). This confirms that the missing IoU overlap is the main issue with the standard DeepSORT.
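To make the effect of the box size on the overlap concrete, consider a simplified setting that is an illustration rather than part of the experiments: two axis-aligned square boxes of side $w$ whose positions differ by $s$ pixels along one axis. Their intersection is $w(w-s)$ and their union is $2w^2 - w(w-s)$, so that:

```latex
\begin{align*}
\mathrm{IoU}(w, s) &= \frac{w\,(w - s)}{2w^2 - w\,(w - s)} = \frac{w - s}{w + s}, \qquad 0 \le s \le w, \\
\mathrm{IoU}(4, 2) &= \frac{2}{6} \approx 0.33, \\
\mathrm{IoU}(8, 2) &= \frac{6}{10} = 0.60.
\end{align*}
```

Under this simplification, doubling the box side from 4 to 8 pixels nearly doubles the IoU for the same 2-pixel prediction error, which is in line with the reduction in ID switches observed after enlarging the boxes.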

Figure 6.

ID Switches versus IoU thresholds in DeepSORT. From left to right: total, average per person, and average per detection ID Switches.

After adapting the IoU object matching, the deep appearance features play a prominent role in the object tracking after the first few frames. Thus, a fine-tuning of DeepSORT’s neural network on the training set of the KIT AIS pedestrian dataset can further improve the results (DeepSORT-BBX-IoU-FT). Originally, the network has been trained on a large person re-identification dataset, which is very different from our scenario, especially in the viewing angle and the object sizes, as the bounding boxes in aerial images are much smaller than in the person re-identification dataset ( vs. pixels). Scaling the bounding boxes of our aerial dataset to fit the network input size leads to considerable interpolation errors. For our experiments, we initialize the last re-identification layers from scratch, and the rest of the network using the pre-trained weights and biases. We also changed the number of classes to 610, representing the number of different pedestrians after cropping the images into patches with the size of the bounding boxes and ignoring the patches located at the image border. Instead of scaling the patches to pixels, we only scale them to . We trained the classifier for 20 epochs with the SGD optimizer, Cross-Entropy loss function, a batch size of 128, and an initial learning rate of . Moreover, we doubled the bounding box sizes for our experiment. The results in Table 7 show that the total number of ID switches only decreases from 738 to 734. This indicates that the deep appearance features of DeepSORT are not useful for our problem. While for a large object a small deviation of the bounding box position is tolerable (as the bounding box still mostly contains object-relevant areas), for our very small objects this can cause significant changes in object relevance. The extracted features mostly contain background information. Consequently, in the appearance matching step, the object features from its previous and currently estimated positions can differ significantly. Additionally, the appearance features of different pedestrians in aerial images are often not discriminative enough to distinguish them.

In order to better demonstrate this effect, we evaluate DeepSORT without any appearance feature, also known as SORT. Table 7 shows the tracking results with original and doubled bounding box sizes and an IoU threshold of 0.99. According to the results, SORT outperforms the fine-tuned DeepSORT with 438 ID switches. Nevertheless, the number of ID switches is still high, given that we use the ground truth object positions. This could be due to the low frame rate of the dataset and the small sizes of the objects. Although enlarging the bounding boxes improved the performance significantly (60% and 56% better MOTA for DeepSORT and SORT, respectively), it leads to a poor localization accuracy.

5.2.2. Tracktor++

Tracktor++ [1] is an MOT method based on deep features. It employs a Faster-RCNN to perform object detection and tracking through regression. We use its PyTorch implementation (https://github.com/phil-bergmann/tracking_wo_bnw, accessed on 10 May 2021) and adapt it to our aerial dataset. We tested Tracktor++ with the ground truth object positions instead of using its detection module; however, it totally failed the tracking task with these settings. Faster-RCNN has been trained on datasets that are very different from our aerial dataset, for example in the viewing angle and in the number and size of the objects. Therefore, we fine-tune Faster-RCNN on the KIT AIS dataset. To this end, we had to adjust the training procedure to the specification of our dataset.

We use Faster-RCNN with a ResNet50 backbone, pre-trained on the ImageNet dataset. We change the anchor sizes to {2, 3, 4, 5, 6} and the aspect ratios to {0.7, 1.0, 1.3}, enabling it to detect small objects. Additionally, we increase the maximum detections per image to 300, set the minimum size of an image to be rescaled to 400 pixels, the region proposal non-maximum suppression (NMS) threshold to 0.3, and the box predictor NMS threshold to 0.1. The NMS thresholds influence the amount of overlap for region proposals and box predictions. Instead of SGD, we use an ADAM optimizer with an initial learning rate of 0.0001 and a weight decay of 0.0005. Moreover, we decrease the learning rate every 40 epochs by a factor of 10 and set the number of classes to 2, corresponding to background and pedestrians. We also apply substantial online data augmentation including random flipping of every second image horizontally and vertically, color jitter, and random scaling in a range of 10%.
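For reference, the fine-tuning settings listed above can be collected in a single configuration sketch. The key names are hypothetical and do not correspond to the options of a specific library; the values are the ones stated in the text. The step schedule assumes zero-indexed epochs, with the first decay taking effect at epoch 40.

```python
# Hypothetical key names; the values are those reported in the text.
FASTER_RCNN_SETTINGS = {
    "backbone": "resnet50",            # pre-trained on ImageNet
    "anchor_sizes": (2, 3, 4, 5, 6),
    "aspect_ratios": (0.7, 1.0, 1.3),
    "max_detections_per_image": 300,
    "min_image_size": 400,             # pixels
    "rpn_nms_threshold": 0.3,
    "box_nms_threshold": 0.1,
    "num_classes": 2,                  # background and pedestrian
    "optimizer": "adam",
    "initial_lr": 1e-4,
    "weight_decay": 5e-4,
    "lr_step_epochs": 40,
    "lr_decay_factor": 0.1,
}


def learning_rate(epoch, cfg=FASTER_RCNN_SETTINGS):
    """Step schedule: divide the learning rate by 10 every 40 epochs (assumed zero-indexed)."""
    steps = epoch // cfg["lr_step_epochs"]
    return cfg["initial_lr"] * cfg["lr_decay_factor"] ** steps
```

The anchor sizes of 2 to 6 pixels are what adapt the detector to pedestrians that occupy only a few pixels per side.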

The tracking results of Tracktor++ with the fine-tuned Faster-RCNN are presented in Table 7. The detection precision and recall of Faster-RCNN are 25% and 31%, respectively, and this poor detection performance potentially propagates to the tracking part. According to the table, Tracktor++ only achieves an overall MOTA of 5.3 and 2188 ID switches even when we use ground truth object positions. We assume that Tracktor++ has difficulties with the low frame rate of the dataset and the small object sizes.

5.2.3. SMSOT-CNN

SMSOT-CNN [22] is the first DL-based method for multi-object tracking in aerial imagery. It is an extension to GOTURN [17], an SOT regression-based method using CNNs to track generic objects at high speed. SMSOT-CNN adapts GOTURN for MOT scenarios by three additional convolution layers and a tracking management module. The network receives two image patches from the previous and current frames, where both are centered at the object position in the previous frame. The size of the image patches (the amount of contextual information) is adjusted by a hyperparameter. The network regresses the object position in the coordinates of the current frame’s image patch. SMSOT-CNN has been evaluated on the KIT AIS pedestrian dataset in [22], where the objects’ first positions are given based on the ground truth data. The tracking results can be seen in Table 7. Due to the use of a deep network and the local search for the next position of the objects, the number of ID switches by SMSOT-CNN is 157, which is small relative to the other methods. Moreover, this algorithm achieves an overall MOTA and MOTP of −29.8 and 71.0, respectively. Based on our visual inspections, SMSOT-CNN has some difficulties in densely crowded situations where the objects share similar appearance features. In these cases, multiple similarly looking objects can be present in an image patch, resulting in ID switches and losing track of the target objects. Furthermore, the small sizes of the pedestrians make them similar to many non-pedestrian objects in the feature space, causing a large number of FPs and FNs.

5.2.4. Euclidean Online Tracking

Inspired by the tracking results of SORT and its simplicity, we propose EOT, based on the architecture of SORT, for pedestrian tracking in aerial imagery. EOT uses a Kalman filter similarly to SORT. Then it calculates the Euclidean distance between all predictions and detections, and normalizes them w.r.t. the GSD of the frame to construct a cost matrix as follows:

After that, as in SORT, we use the Hungarian algorithm to find the globally optimal assignment. However, if objects enter or leave the scene, the Hungarian algorithm can propagate an error to the whole prediction-detection matching procedure. Therefore, we constrain the cost matrix so that all distances greater than a certain threshold are ignored and assigned an infinite cost. We empirically set the threshold to pixels. Furthermore, only objects successfully tracked in the previous frame are considered for the matching process. According to Table 7, while the total MOTA score is competitive with the previously studied methods, EOT achieves the fewest ID switches (only 37). Compared to SORT, as EOT keeps better track of the objects, the deviations in the Kalman filter predictions are smaller. Therefore, Euclidean distance is a better option as compared to IoU for our aerial image sequences.
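The matching step of EOT can be sketched as follows. This is an illustration under stated assumptions and not the authors' implementation: the GSD normalization is assumed to be a multiplication of the pixel distance by the GSD, the gating threshold `max_dist` is a free parameter in the same unit, a large finite cost `BIG` stands in for the infinite cost, and all function names are hypothetical. The assignment is solved with a compact pure-Python Hungarian algorithm.

```python
import math

BIG = 1e9  # finite stand-in for the infinite cost of gated-out pairs


def cost_matrix(predictions, detections, gsd, max_dist):
    """GSD-scaled Euclidean distances; pairs farther than max_dist are gated out."""
    costs = []
    for px, py in predictions:
        row = []
        for dx, dy in detections:
            dist = math.hypot(px - dx, py - dy) * gsd  # assumed normalization
            row.append(dist if dist <= max_dist else BIG)
        costs.append(row)
    return costs


def hungarian(cost):
    """Minimum-cost assignment for a square matrix; returns a column per row."""
    n = len(cost)
    inf = float("inf")
    u, v = [0.0] * (n + 1), [0.0] * (n + 1)
    match, way = [0] * (n + 1), [0] * (n + 1)  # match[j]: row (1-based) of column j
    for i in range(1, n + 1):
        match[0], j0 = i, 0
        minv, used = [inf] * (n + 1), [False] * (n + 1)
        while True:
            used[j0] = True
            i0, delta, j1 = match[j0], inf, 0
            for j in range(1, n + 1):
                if not used[j]:
                    cur = cost[i0 - 1][j - 1] - u[i0] - v[j]
                    if cur < minv[j]:
                        minv[j], way[j] = cur, j0
                    if minv[j] < delta:
                        delta, j1 = minv[j], j
            for j in range(n + 1):
                if used[j]:
                    u[match[j]] += delta
                    v[j] -= delta
                else:
                    minv[j] -= delta
            j0 = j1
            if match[j0] == 0:
                break
        while j0:  # augment along the alternating path
            j1 = way[j0]
            match[j0] = match[j1]
            j0 = j1
    assignment = [0] * n
    for j in range(1, n + 1):
        assignment[match[j] - 1] = j - 1
    return assignment


def match_tracks(predictions, detections, gsd, max_dist):
    """Return (prediction index, detection index) pairs within the gating distance."""
    costs = cost_matrix(predictions, detections, gsd, max_dist)
    n = max(len(predictions), len(detections))
    # Pad to a square matrix so unmatched tracks or detections get a dummy partner.
    padded = [row + [BIG] * (n - len(row)) for row in costs]
    padded += [[BIG] * n for _ in range(n - len(costs))]
    return [(i, j) for i, j in enumerate(hungarian(padded))
            if i < len(predictions) and j < len(detections) and costs[i][j] < BIG]
```

Pairs that are gated out are never returned, so a pedestrian leaving the scene results in an unmatched track rather than a forced assignment to a distant detection.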

5.3. Conclusion of the Experiments

In this section, we conclude our preliminary study. According to the results, our EOT is the best performing tracking method. Figure 7 illustrates a major case of success by our EOT method. We can observe that almost all pedestrians are tracked successfully, even though the sequence is crowded and people walk in different directions. Furthermore, the significant cases of false positives and negatives are caused by the limitation of the evaluation approach. In other words, while EOT tracks most of the objects, since the evaluation approach is constrained to the minimum 50% overlap (4 pixels), the correctly tracked objects with smaller overlaps are not considered.

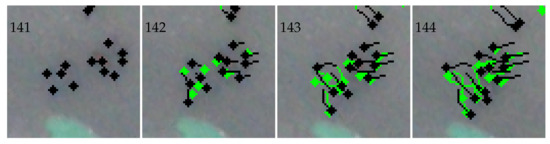

Figure 7.

A success case processed by Stacked-DCFNet on the sequence “Munich02”. The tracking results and ground truth are depicted in green and black, respectively.

Figure 8 shows a typical failure case of the Stacked-DCFNet method. In the first two frames, most of the objects are tracked correctly; however, after that, the diagonal line in the patch center is confused with the people walking across it. We assume that the line shares similar appearance features with the crossing people. Figure 9 demonstrates a successful tracking case by Stacked-DCFNet. People are not walking closely together and the background is more distinguishable from the people. Figure 10 illustrates another typical failure case of Stacked-DCFNet. The image includes several people walking closely in different directions, introducing confusion into the tracking method due to the people’s similar appearance features. We closely investigate these failure cases in Figure 11. In this figure, we visualize the activation map of the last convolution layer of the network. Although the convolutional layers of Stacked-DCFNet are supposed to be trained only for people, the line and the people (considering their shadows) appear indistinguishable. Moreover, based on the features, different people cannot be discriminated. We also evaluated SMSOT-CNN and found that it shares similar failure and success cases with Stacked-DCFNet, as both take advantage of convolutional layers for extracting appearance features.

Figure 8.

A failure case by Stacked-DCFNet on the sequence “AA_Walking_02”. The tracking results and ground truth are depicted in green and black, respectively.

Figure 9.

A success case by Stacked-DCFNet on the sequence “AA_Crossing_02”. The tracking results and ground truth are depicted in green and black, respectively.

Figure 10.

A failure case by Stacked-DCFNet on the test sequence “RaR_Snack_Zone_04”. The tracking results and the ground truth are depicted in green and black, respectively.

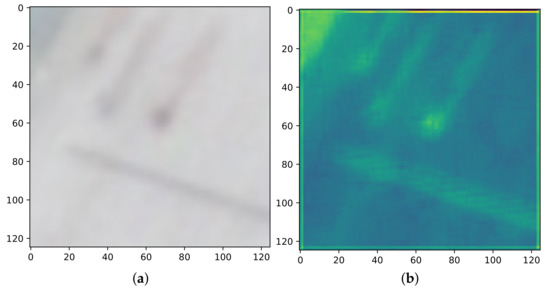

Figure 11.

(a) An input image patch to the last convolutional layer of Stacked-DCFNet and (b) its corresponding activation map.

Altogether, the Euclidean distance paired with trajectory information in EOT works better than IoU for tracking in aerial imagery. However, detection-based trackers such as EOT require object detection in every frame. As shown for Tracktor++, the accuracy of object detectors is very poor for pedestrians in aerial images. Thus, detection-based methods are not appropriate for our scenarios. Moreover, the approaches that employ deep appearance features for re-identification share a problem with object detectors: their features discriminate poorly between similar-looking objects, leading to ID switches and lost tracks. The tracking methods based on regression and correlation (e.g., Stacked-DCFNet and SMSOT-CNN) generally perform better than the methods based on re-identification because they track objects within local image patches, which prevents errors from propagating to the whole image. Furthermore, according to our investigations, the path taken by every pedestrian is influenced by three factors: (1) the pedestrian’s path history, (2) the positions and movements of the surrounding people, and (3) the arrangement of the scene.

We conclude that both regression- and correlation-based tracking methods are good choices for our scenario. They can be improved by considering trajectory information and the pedestrians’ movement relationships.

6. AerialMPTNet

In this section we explain our proposed AerialMPTNet tracking algorithm with its different configurations. Part of its architecture and configurations has been presented in [26].

As stated in Section 5, a pedestrian’s movement trajectory is influenced by their movement history, their motion relationships to their neighbours, and the scene arrangement. The same holds for vehicles in traffic scenarios. Vehicles are subject to additional constraints, such as moving along predetermined paths (e.g., streets, highways, railways) most of the time. Different objects have different motion characteristics such as speed and acceleration. For example, several studies have shown that the walking speed of pedestrians is strongly influenced by their age, gender, temporal variations, and distractions (e.g., cell phone usage), by whether the individual is moving in a group, and even by the size of the city where the event takes place [64,65]. Regarding road traffic, similar factors could influence driving behaviors and movement characteristics (e.g., cell phone usage, age, stress level, and fatigue) [66,67]. Furthermore, as with pedestrians, the maneuvers of a vehicle can directly affect the movements of neighbouring vehicles: for example, if a vehicle brakes, all the following vehicles must brake, too.

Understanding individual motion patterns is crucial for tracking algorithms, especially when only limited visual information about the target objects is available. However, current regression-based tracking methods such as GOTURN and SMSOT-CNN do not incorporate movement histories or relationships between adjacent objects. These networks locate the next position of an object by monitoring a search area in its immediate proximity. Thus, the contextual information provided to the network is limited. Additionally, during the training phase, the networks do not learn how to differentiate the targets from similar-looking objects within the search area. Thus, as discussed in Section 5, these networks often suffer from ID switches and lost tracks in crowded situations or where object paths intersect.

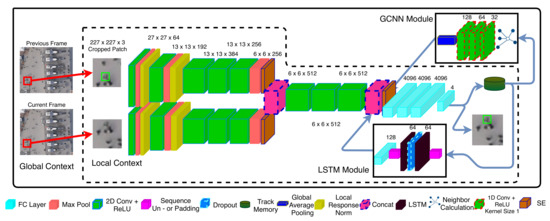

In order to tackle the limitations of previous works, we propose to fuse visual features, track history, and the movement relationships of adjacent objects in an end-to-end fashion within a regression-based DNN, which we refer to as AerialMPTNet. Figure 12 shows an overview of the network architecture. AerialMPTNet takes advantage of a Siamese Neural Network (SNN) for visual features, a Long Short-Term Memory (LSTM) module for movement histories, and a GraphCNN for movement relationships. The network takes two local image patches cropped from two consecutive images (previous and current): the target patch, in which the object location is known, and the search patch, in which it has to be predicted. Both patches are centered at the object coordinates known from the previous frame. Their size (the degree of contextual information) is correlated with the size of the objects, and it is set to pixels to be compatible with the network’s input. Both patches are then given to the SNN module (retained from [22]), which is composed of two branches with shared weights, each consisting of five 2D convolutional, two local response normalization, and three max-pooling layers. Afterwards, the two output features are concatenated and given to three 2D convolutional layers and, finally, four fully connected layers regressing the object position in the search patch coordinates. We use ReLU activations for all these convolutional layers.

Figure 12.

Overview of AerialMPTNet’s architecture, comprising an SNN, an LSTM, and a GraphCNN module. The inputs are two consecutive images cropped and centered to a target object, while the output is the object location in search crop coordinates.

The network output is a vector of four values indicating the x and y coordinates of the top-left and bottom-right corners of the object’s bounding box. These coordinates are then transformed into image coordinates. In our network, the LSTM module and the GraphCNN module use the object coordinates in the search patch and image domain, respectively.
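The transformation from search patch coordinates to image coordinates can be sketched as follows. This is only an illustration under stated assumptions: the search patch is taken to be an axis-aligned crop that has been resized to the network input size, and the names `patch_to_image`, `crop_origin`, `crop_size`, and `net_size` are hypothetical and do not come from the original implementation.

```python
def patch_to_image(box, crop_origin, crop_size, net_size):
    """Map a box (x1, y1, x2, y2) from network-input to image coordinates.

    box         -- predicted corners in the resized search patch
    crop_origin -- (x, y) of the crop's top-left corner in the image (assumed known)
    crop_size   -- (width, height) of the crop in image pixels
    net_size    -- (width, height) of the network input
    """
    # Scale factors that undo the resizing of the crop to the network input.
    sx = crop_size[0] / net_size[0]
    sy = crop_size[1] / net_size[1]
    x1, y1, x2, y2 = box
    ox, oy = crop_origin
    # Rescale to crop pixels, then shift by the crop's position in the image.
    return (ox + x1 * sx, oy + y1 * sy, ox + x2 * sx, oy + y2 * sy)


# Example with made-up numbers: a 100x100 crop resized to a 200x200 input.
image_box = patch_to_image((50, 50, 150, 150), (400, 300), (100, 100), (200, 200))
```

With these example values, the predicted box maps to the corners (425, 325) and (475, 375) in the image.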

6.1. Long Short-Term Memory Module

In order to encode movement histories and predict object trajectories, recent works have mainly relied on LSTM- and RNN-based structures [68,69,70]. These structures have mostly been applied to individual objects; due to the large number of objects in our scenarios, we cannot apply them directly. Thus, we propose a structure that handles all objects with a single model and predicts movements (movement vectors) instead of positions.

In order to test our idea, we built an LSTM module comprising two bidirectional LSTM layers with 64 dimensions, a dropout layer with in between, and a linear layer that generates two-dimensional outputs representing the x and y values of the movement vector. The inputs to the LSTM module are two-dimensional movement vectors with dynamic lengths of up to five steps of the objects’ movement histories. We applied this module to our pedestrian tracking datasets. The results of this experiment show that our LSTM module can predict the next movement vector of multiple pedestrians with a precision of about 3.6 pixels (0.43 m), which is acceptable for our scenarios. Therefore, training a single LSTM on multiple objects is sufficient for predicting the objects’ movement vectors.

We embed a similar LSTM module into our network as shown in Figure 12. For the training of the module, the network first generates a sequence of object movement vectors based on the object location predictions. In our experiments, each track has a dynamic history of up to the five last predictions. As tracks are not assumed to start at the same time, the length of each track history can differ. Thus, we use zero-padding to equalize the lengths of the track histories, allowing us to process them together as a batch. These sequences are fed into the first LSTM layer with a hidden size of 64. A dropout with is then applied to the hidden state of the first LSTM layer, and the result is passed to the second LSTM layer. The output features of the second LSTM layer are fed into a linear layer of size 128. The 128-dimensional output of the LSTM module is then concatenated with the output of the SNN module and the output of the GraphCNN module. The concatenation allows the network to predict object locations more precisely based on a fusion of appearance and movement features.
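The preparation of the track histories for batching can be sketched as follows. This is a minimal sketch, not the original code: the function names are hypothetical, positions are assumed to be object centers, and the zeros are placed at the front of short histories, which the text does not specify.

```python
MAX_HISTORY = 5  # up to five past steps, as stated in the text


def movement_vectors(positions):
    """Turn a list of (x, y) positions into step-to-step movement vectors."""
    return [(x1 - x0, y1 - y0)
            for (x0, y0), (x1, y1) in zip(positions, positions[1:])]


def pad_history(vectors, length=MAX_HISTORY):
    """Keep the last `length` vectors and zero-pad shorter histories.

    Padding at the front is an assumption made for this sketch.
    """
    recent = vectors[-length:]
    padding = [(0.0, 0.0)] * (length - len(recent))
    return padding + [(float(dx), float(dy)) for dx, dy in recent]


def build_batch(tracks):
    """Build equally long movement histories for tracks of different ages."""
    return [pad_history(movement_vectors(positions)) for positions in tracks]


# Two tracks that started at different times (made-up positions).
batch = build_batch([[(0, 0), (1, 2), (3, 3)], [(5, 5)]])
```

Every entry of `batch` then holds exactly five two-dimensional vectors, so the histories of all tracks can be stacked and processed together.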

6.2. GraphCNN Module

The GraphCNN module consists of three 1D convolution layers with kernels and respectively 32, 64, and 128 channels. We generate each object’s adjacency graph based on the location predictions of all objects. To this end, the eight closest neighbors within a radius of 7.5 m from the object are considered and modeled as a directed graph by a set of vectors from the neighbouring objects to the target object’s position . The resulting graph is represented as . If fewer than eight neighbors exist, we zero-pad the remaining vectors.
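The construction of one object's neighborhood graph can be sketched as follows. The sketch assumes positions in pixels and a hypothetical parameter `gsd_m_per_px` (ground sampling distance) for converting pixel distances to meters; the function name and the ordering of neighbors by distance are likewise assumptions.

```python
import math

MAX_NEIGHBORS = 8  # eight closest neighbors, as stated in the text
RADIUS_M = 7.5     # neighborhood radius in meters, as stated in the text


def neighbor_graph(target, others, gsd_m_per_px=1.0):
    """Return MAX_NEIGHBORS vectors pointing from the neighbors to the target.

    target       -- (x, y) position of the tracked object
    others       -- (x, y) positions of all other objects
    gsd_m_per_px -- meters per pixel (assumed parameter of this sketch)
    """
    candidates = []
    for ox, oy in others:
        # Vector from the neighboring object to the target object.
        dx, dy = target[0] - ox, target[1] - oy
        dist_m = math.hypot(dx, dy) * gsd_m_per_px
        if dist_m <= RADIUS_M:
            candidates.append((dist_m, (dx, dy)))
    # Keep the closest neighbors first (ordering is an assumption).
    candidates.sort(key=lambda c: c[0])
    vectors = [vec for _, vec in candidates[:MAX_NEIGHBORS]]
    # Zero-pad if fewer than eight neighbors are inside the radius.
    vectors += [(0.0, 0.0)] * (MAX_NEIGHBORS - len(vectors))
    return vectors


graph = neighbor_graph((0, 0), [(3, 4), (100, 0), (1, 0)])
```

In this example the object at (100, 0) lies outside the radius, so the graph contains two neighbor vectors followed by six zero vectors.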

The GraphCNN module also uses historical information by considering five previous graph configurations. As with the LSTM module, we use zero-padding if fewer than five previous configurations are available. The resulting graph sequences are described by a matrix which is fed into the first convolution layer. In our setup, graph sequences of multiple objects are given to the network as a batch of matrices. The output of the last convolutional layer is passed through global average pooling in order to generate the final 128-dimensional output of the module, which is concatenated with the outputs of the SNN and LSTM modules. The features of the GraphCNN module enable the network to better understand group movements.

6.3. Squeeze-and-Excitation Layers

During our preliminary experiments in Section 5, we observed a high variation in the quality of the activation maps produced by the convolutional layers in DCFNet and SMSOT-CNN. This variation shows the direct impact of single channels and their importance for the final result of the network. In order to account for this factor in our approach, we model the dominance of single channels with Squeeze-and-Excitation (SE) layers [71].

CNNs extract image information by sliding spatial filters across the inputs to different layers. While the lower layers extract detailed features such as edges and corners, the higher layers can extract more abstract structures such as object parts. In this process, each filter at each layer has a different relevance to the network output. However, all filters (channels) are usually weighted equally. Adding SE layers to a network helps to weight each channel adaptively based on its relevance. In an SE layer, each channel is squeezed to a single value by global average pooling [72], resulting in a vector with k entries. This vector is given to a fully connected layer that reduces the size of the output vector by a certain ratio, followed by a ReLU activation function. The result is fed into a second fully connected layer that scales the vector back to its original size, followed by a sigmoid activation. In the final step, each channel of the convolution block is multiplied by the corresponding output of the SE layer. This channel weighting step adds less than 1% to the overall computational cost. As can be seen in Figure 12, we add one SE layer after each branch of the SNN module and one SE layer after the fusion of the SNN, LSTM, and GraphCNN outputs.
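The squeeze, excitation, and scaling steps can be sketched in plain Python as follows. This is a didactic sketch under stated assumptions rather than the network's actual layer: the weight matrices `w_reduce` and `w_expand` are hypothetical inputs, and biases are omitted for brevity.

```python
import math


def squeeze_excite(feature_maps, w_reduce, w_expand):
    """Re-weight the k channels of `feature_maps` (a list of k 2D lists).

    w_reduce -- (k/r) x k weights of the first fully connected layer
    w_expand -- k x (k/r) weights of the second fully connected layer
    Both weight matrices are placeholders; biases are left out.
    """
    # Squeeze: global average pooling yields one value per channel.
    squeezed = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0]))
                for ch in feature_maps]
    # Excitation, step 1: reduce the vector size, then apply ReLU.
    hidden = [max(0.0, sum(w * s for w, s in zip(row, squeezed)))
              for row in w_reduce]
    # Excitation, step 2: expand back to k values, then apply a sigmoid.
    scales = [1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
              for row in w_expand]
    # Scale: multiply every value of a channel by that channel's weight.
    scaled = [[[v * s for v in row] for row in ch]
              for ch, s in zip(feature_maps, scales)]
    return scaled, scales


# Two 2x2 channels, reduction from k = 2 to one hidden unit (toy values).
maps = [[[1.0, 1.0], [1.0, 1.0]], [[2.0, 2.0], [2.0, 2.0]]]
scaled, scales = squeeze_excite(maps, [[0.5, 0.5]], [[1.0], [-1.0]])
```

In this toy example the first channel receives a weight above 0.5 and the second a weight below 0.5, so the first channel is emphasized relative to the second.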

6.4. Online Hard Example Mining

In the object detection domain, datasets usually contain a large number of easy cases compared with the cases that are challenging for the algorithms. Several strategies have been developed to account for this, such as sample-aware loss functions (e.g., Focal Loss [73]), where the easy and hard samples are weighted based on their frequencies, and online hard example mining (OHEM) [28], which feeds hard examples to the network again if it previously failed to predict them correctly. Selecting and focusing on such hard examples can make the training more effective. OHEM has been explored for the object detection task [74,75]; however, its usage has not been investigated for the object tracking task. In the multi-object tracking domain, such strategies have rarely been used, although the tracking datasets suffer from the same sample imbalance problem as the detection datasets. To the best of our knowledge, none of the previous works on regression-based tracking used OHEM during their training process.

Thus, in order to deal with the sample imbalance problem of our datasets, we propose adapting and employing OHEM for our training process. To this end, if the tracker loses an object during training, we reset the object to its starting position and starting frame, and feed it to the network again in the next iteration. If the tracker fails again, we ignore the sample by removing it from the batch.
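The retry rule described above can be sketched as follows. This is a minimal sketch, assuming that each sample stores its starting frame and position and that the criterion for a lost object is evaluated elsewhere; the function name, the dictionary layout, and the `retried` bookkeeping are hypothetical.

```python
def apply_retry_rule(batch, lost_ids, retried):
    """Update the training batch after one iteration.

    batch    -- dict mapping object id to a sample with the keys
                "frame", "pos", "start_frame", and "start_pos"
    lost_ids -- ids of the objects the tracker lost in this iteration
    retried  -- set of ids that have already been reset once (updated in place)
    """
    for obj_id in lost_ids:
        if obj_id not in batch:
            continue
        if obj_id in retried:
            # Second failure: ignore the sample by removing it from the batch.
            del batch[obj_id]
            retried.discard(obj_id)
        else:
            # First failure: reset to the starting frame and position so the
            # sample is fed to the network again in the next iteration.
            sample = batch[obj_id]
            sample["frame"] = sample["start_frame"]
            sample["pos"] = sample["start_pos"]
            retried.add(obj_id)
    return batch


batch = {
    1: {"frame": 7, "pos": (9, 9), "start_frame": 0, "start_pos": (1, 1)},
    2: {"frame": 7, "pos": (4, 4), "start_frame": 0, "start_pos": (2, 2)},
}
retried = set()
apply_retry_rule(batch, [1], retried)  # object 1 is reset and retried
apply_retry_rule(batch, [1], retried)  # object 1 fails again and is removed
```

Because samples can be removed in this way, the effective batch size may shrink during training.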

7. Experimental Setup

For all of our experiments, we used PyTorch and one Nvidia Titan XP GPU. We trained all networks with an SGD optimizer and an initial learning rate of . For all training setups, unless indicated otherwise, we use the loss, , where x and represent the output of the network and ground truth, respectively. The batch size of all our experiments is 150; however, during offline feedback training, the batch size can differ due to unsuccessful tracking cases and subsequent removal of the object from the batch.

In our experiments, we consider SMSOT-CNN as the baseline network and compare different parts of our approach to it. The original SMSOT-CNN is implemented in Caffe. In order to make it completely comparable to our approach, we re-implement it in PyTorch. For the training of SMSOT-CNN, we assign different fractions of the initial learning rate to each layer, as in the original Caffe implementation, which was inspired by GOTURN’s implementation. In more detail, we assign the initial learning rate to each convolutional layer and a learning rate 10 times larger to the fully connected layers. Weights are initialized by Gaussians with different standard deviations, while biases are initialized by constant values (zero or one), as in the Caffe version. The training process of SMSOT-CNN is based on a so-called Example Generator. Provided with one target image with known object coordinates, the generator creates multiple examples by shifting the search crop to simulate different kinds of movements. It is also possible to provide the true target and search images. A hyperparameter set to 10 controls the number of examples generated for each image. For the pedestrian tracking, we use DLR-ACD to increase the number of available training samples. SMSOT-CNN is trained completely offline and learns to regress the object location based on only the previous location of the object.
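The idea behind the Example Generator can be illustrated with the following sketch. It is not the original generator: the uniform shift distribution, the square crop, and the names `generate_examples`, `crop_size`, and `max_shift` are assumptions made purely for illustration. Only the number of examples per image (10) is taken from the text.

```python
import random

NUM_EXAMPLES = 10  # examples generated per image, as stated in the text


def generate_examples(obj_center, crop_size, max_shift, rng=None):
    """Create shifted search crops around a known object position.

    Returns a list of (crop_origin, obj_in_crop) pairs: the top-left corner
    of each search crop in image coordinates and the object's center in
    the coordinates of that crop (the regression target).
    """
    rng = rng or random.Random()
    half = crop_size / 2.0
    examples = []
    for _ in range(NUM_EXAMPLES):
        # Random displacement of the crop center (uniform is an assumption).
        dx = rng.uniform(-max_shift, max_shift)
        dy = rng.uniform(-max_shift, max_shift)
        origin = (obj_center[0] + dx - half, obj_center[1] + dy - half)
        # Shifting the crop moves the object the opposite way inside it.
        obj_in_crop = (obj_center[0] - origin[0], obj_center[1] - origin[1])
        examples.append((origin, obj_in_crop))
    return examples


examples = generate_examples((100.0, 100.0), crop_size=50.0, max_shift=10.0,
                             rng=random.Random(0))
```

Each generated pair simulates an apparent object movement between the target and the search crop, so a single annotated image yields several training examples.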