Reconstruction of Complex Roof Semantic Structures from 3D Point Clouds Using Local Convexity and Consistency

Abstract

1. Introduction

2. State-of-the-Art Methods

2.1. Unsupervised Methods

2.2. Supervised Methods

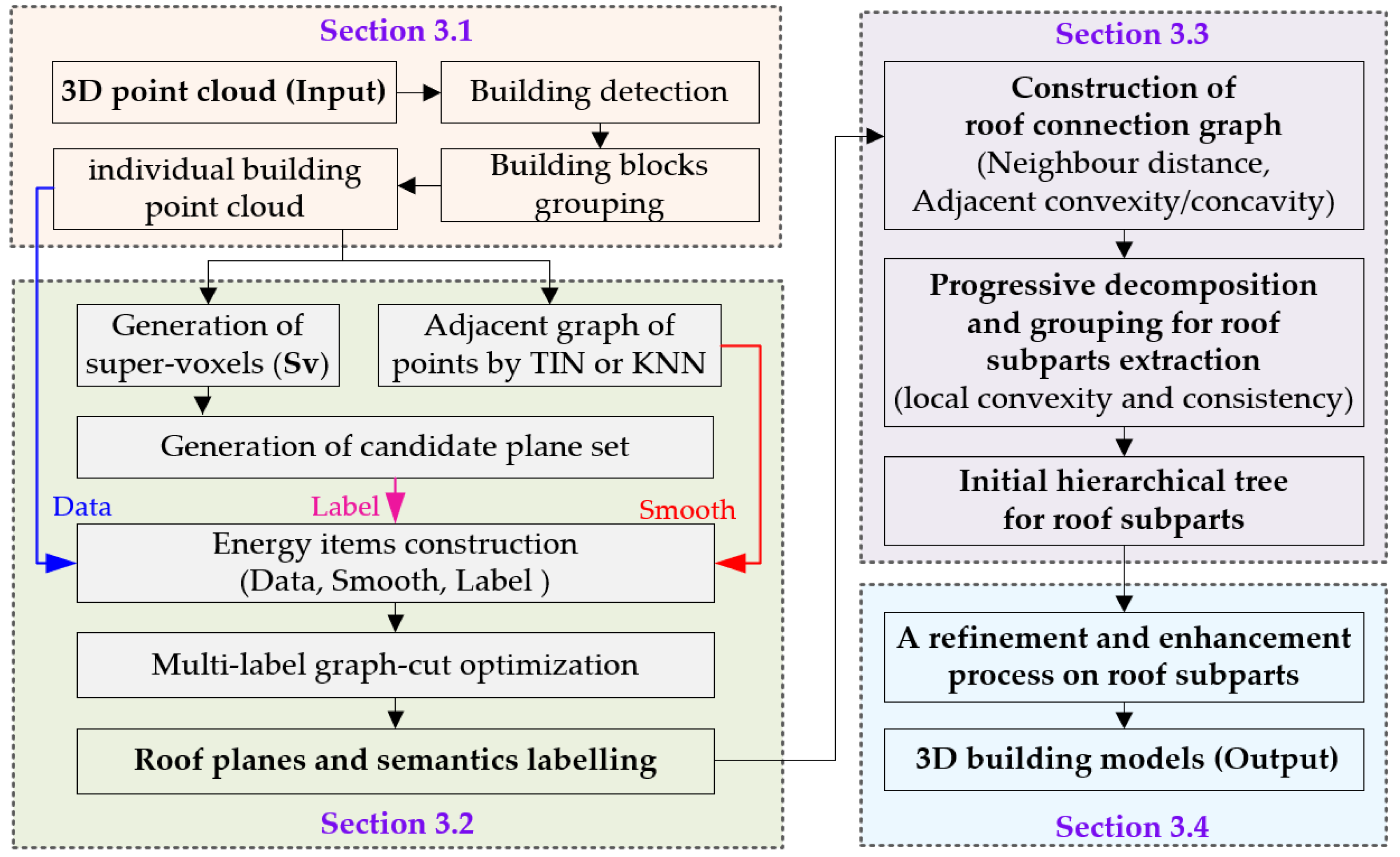

3. Methodology

3.1. Data Preprocessing

3.2. Roof Plane Extraction and Semantic Labeling

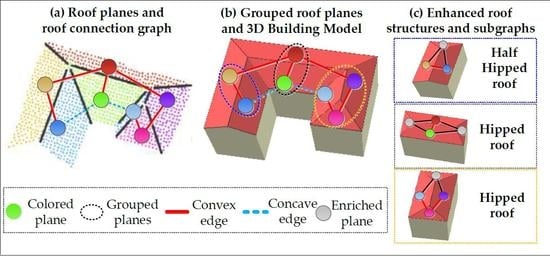

3.3. Semantic Decomposition of Compound Building

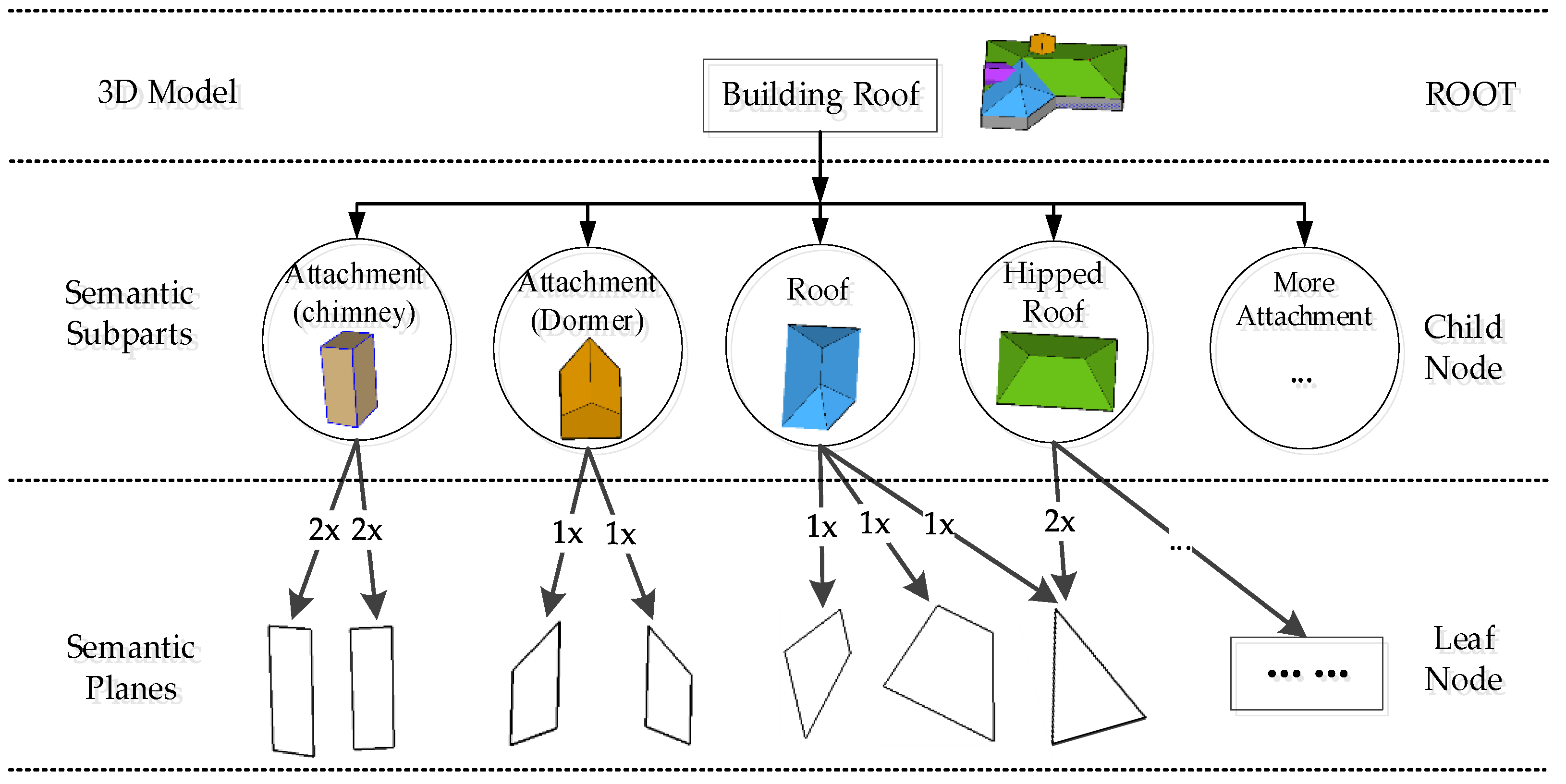

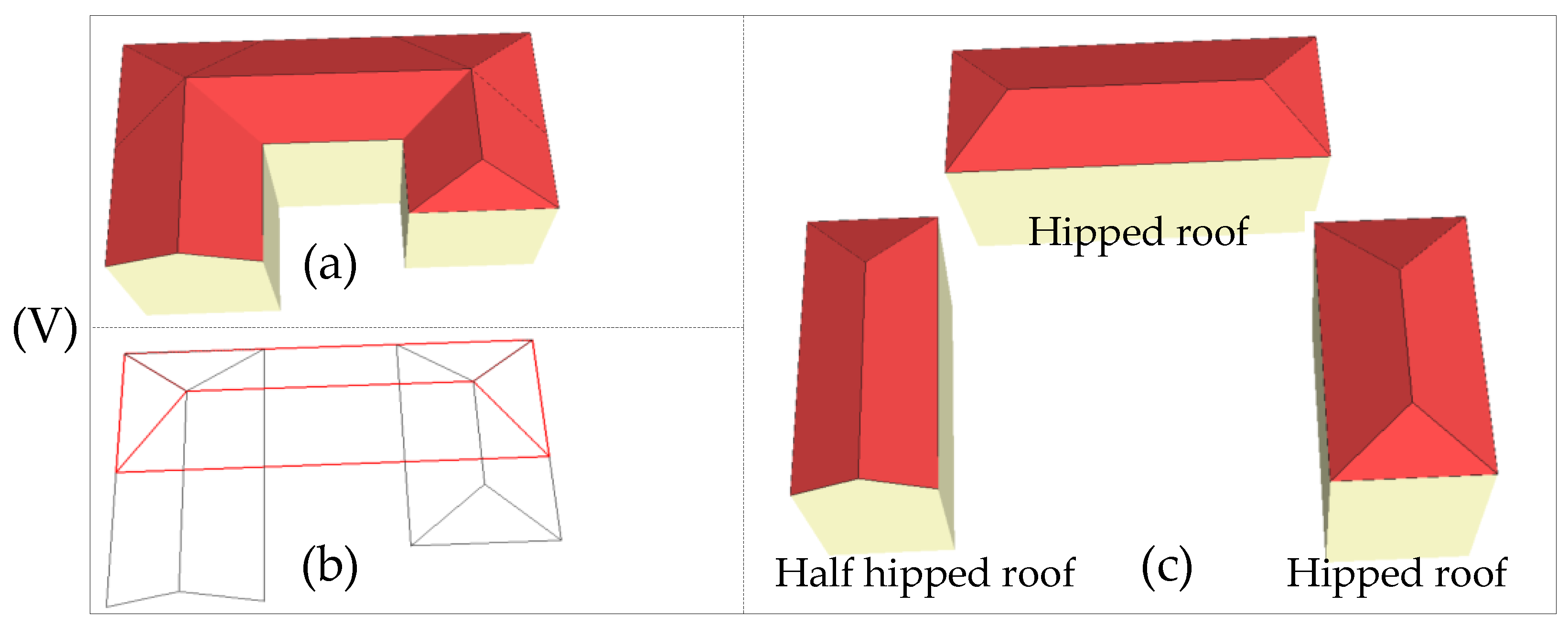

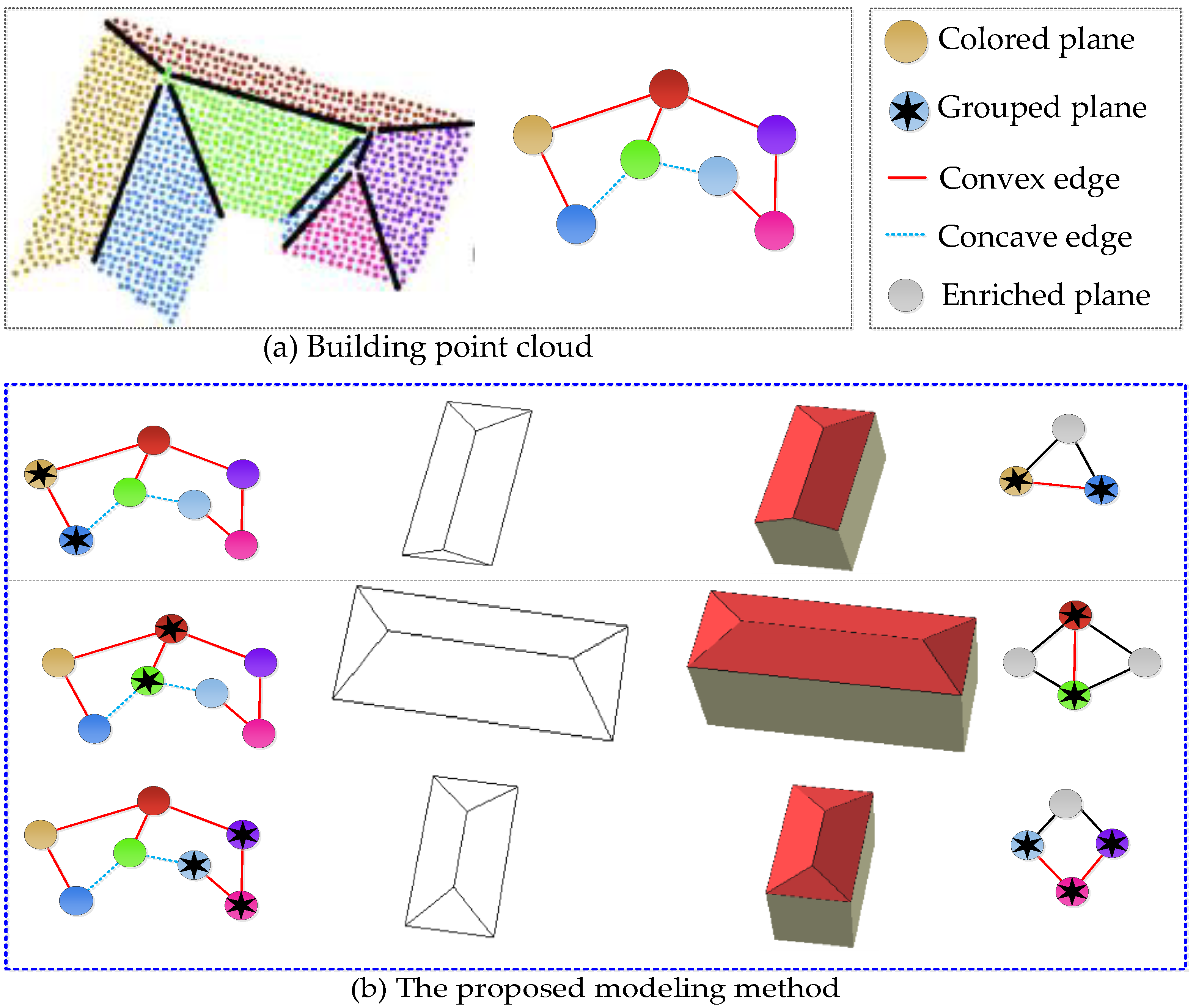

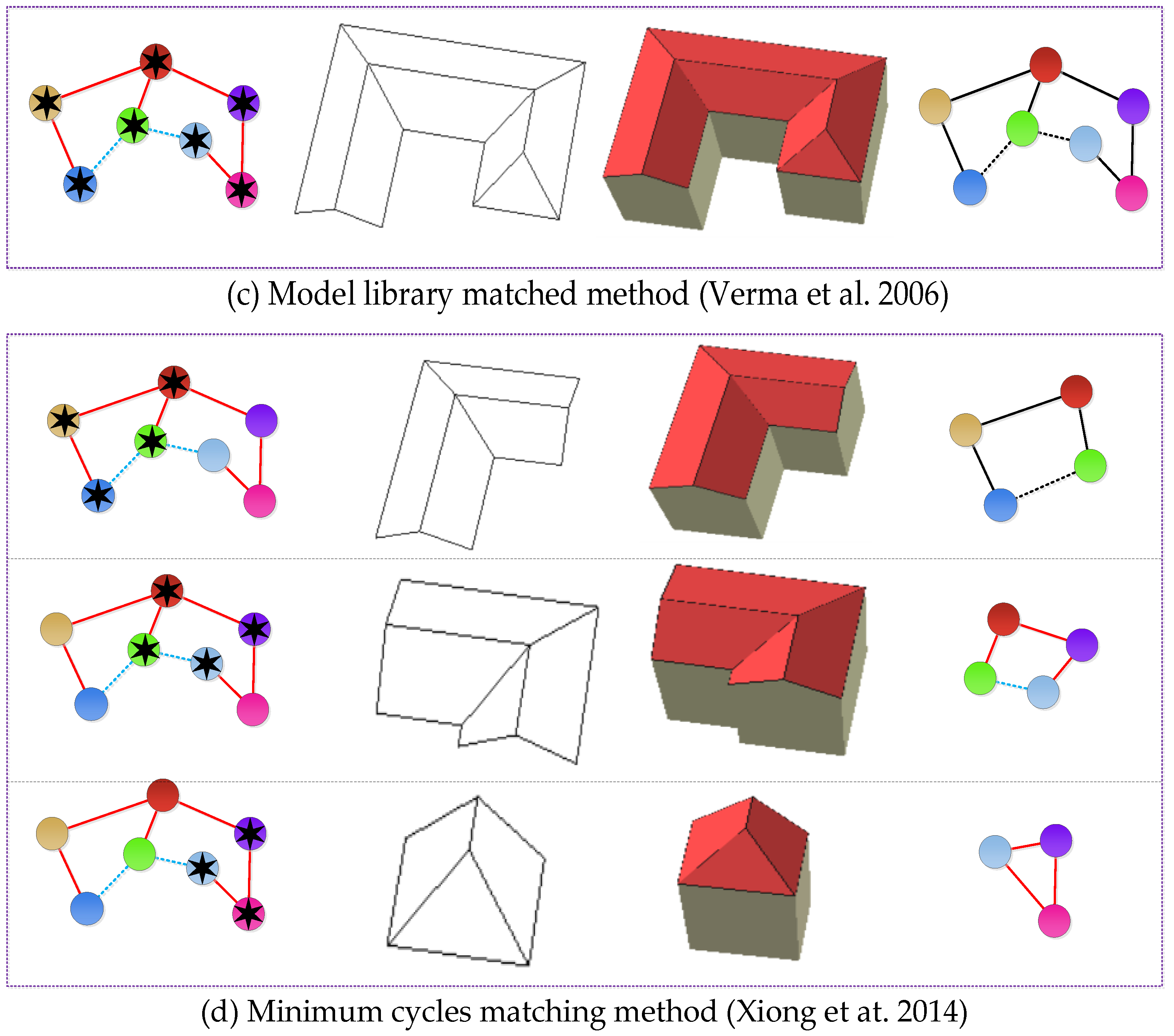

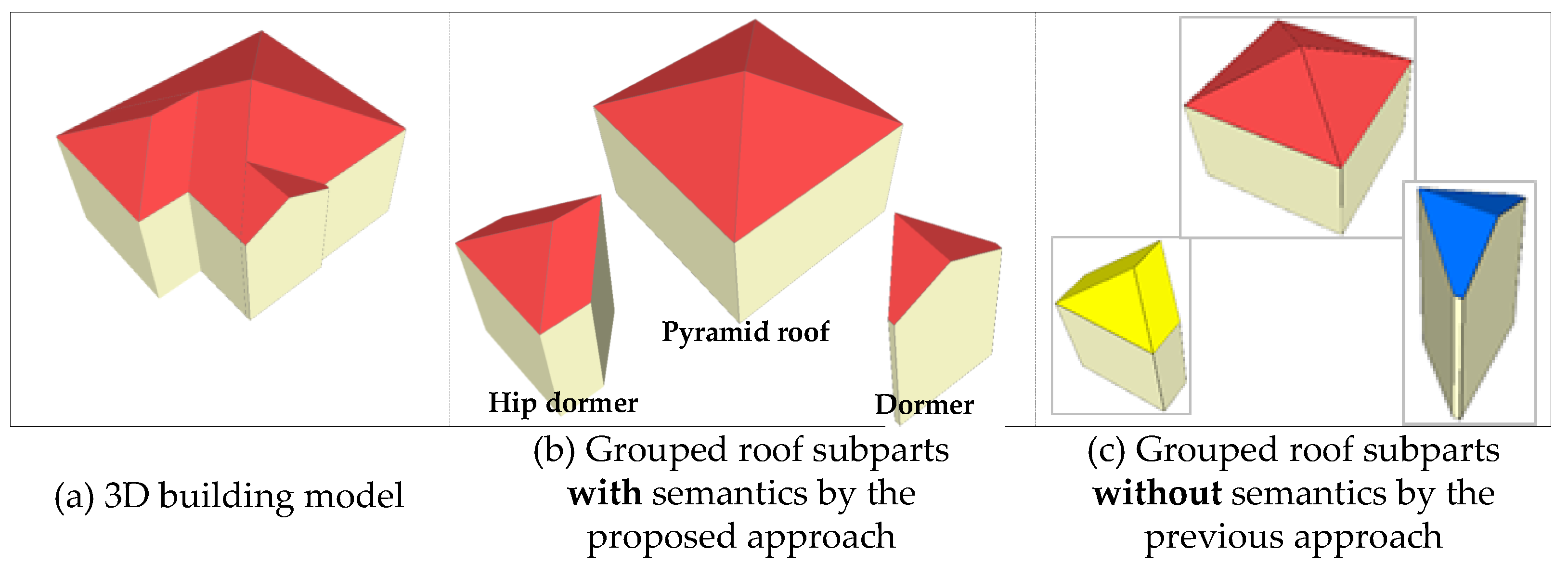

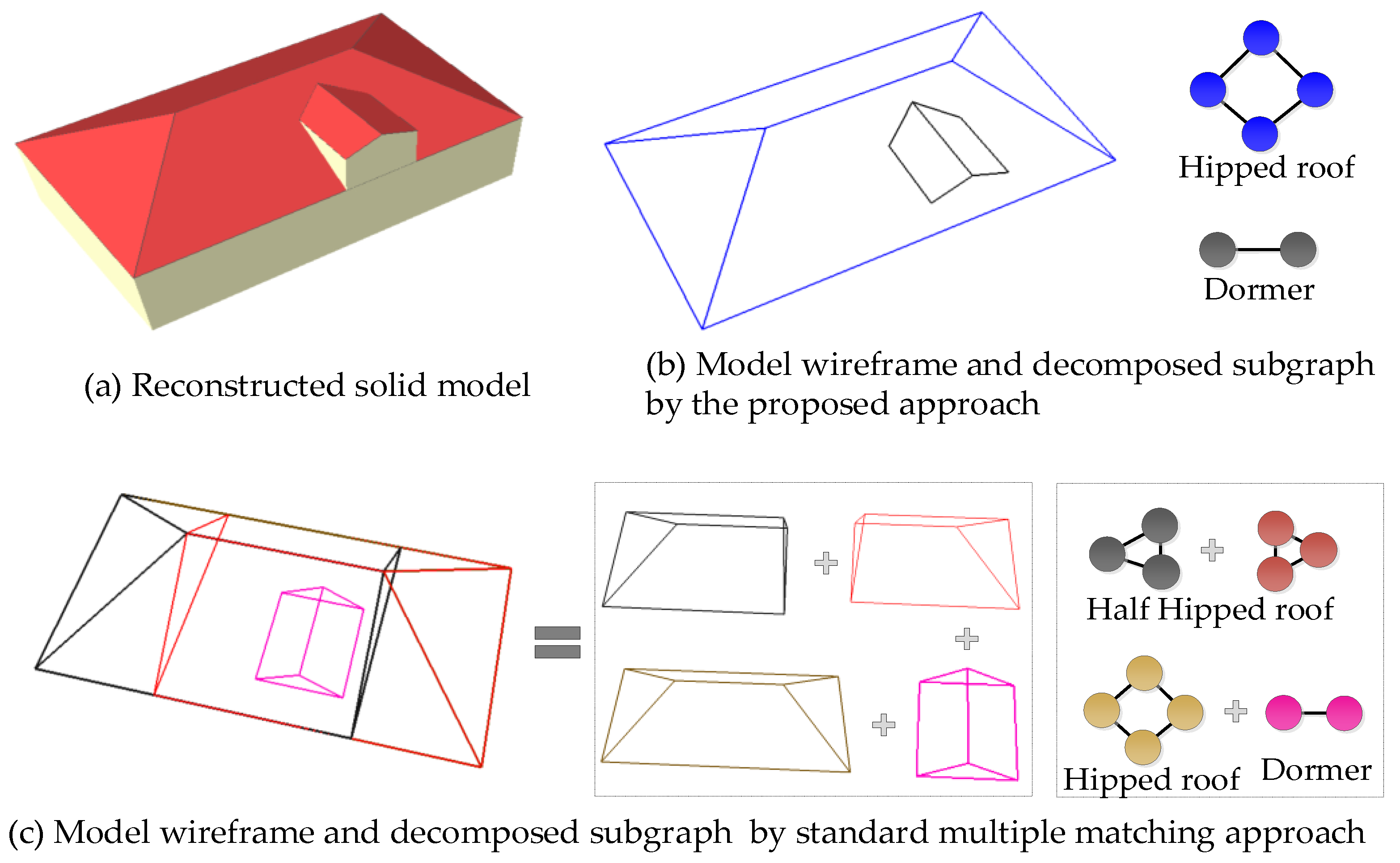

3.3.1. Hierarchy Tree Representation of Complex Buildings

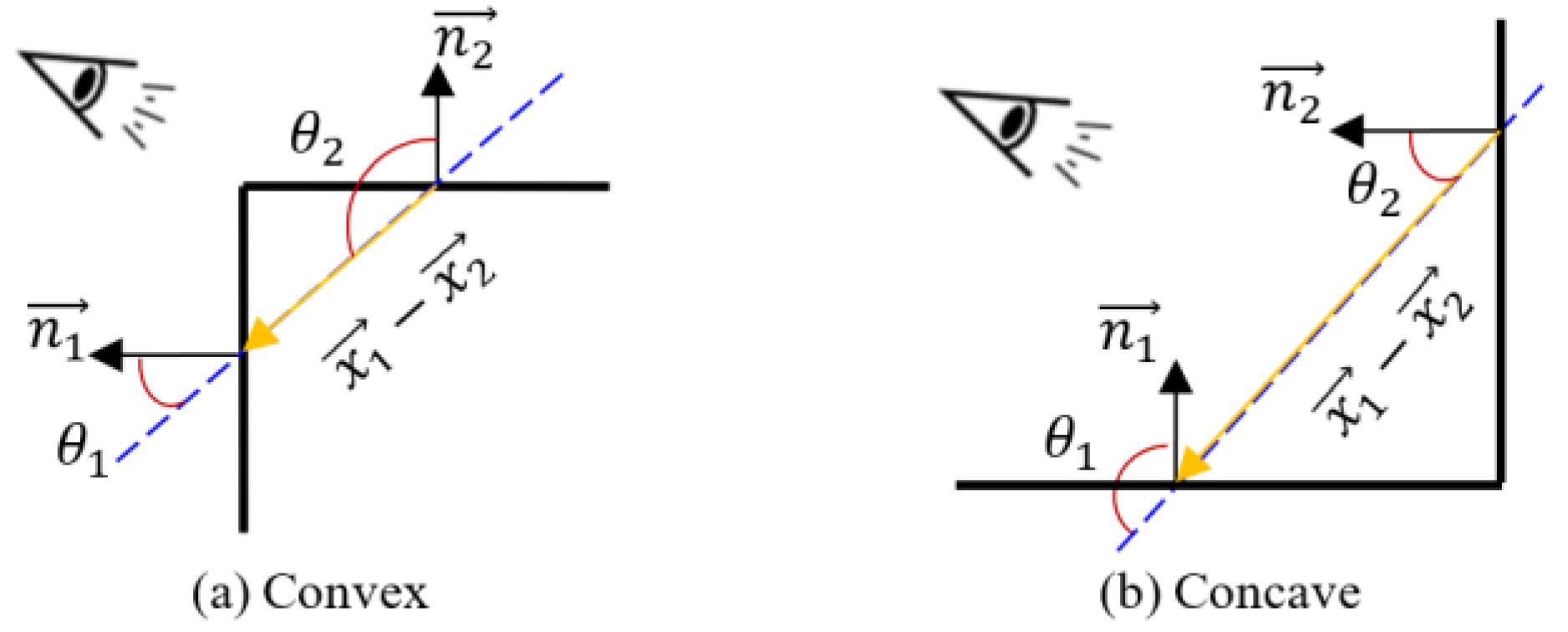

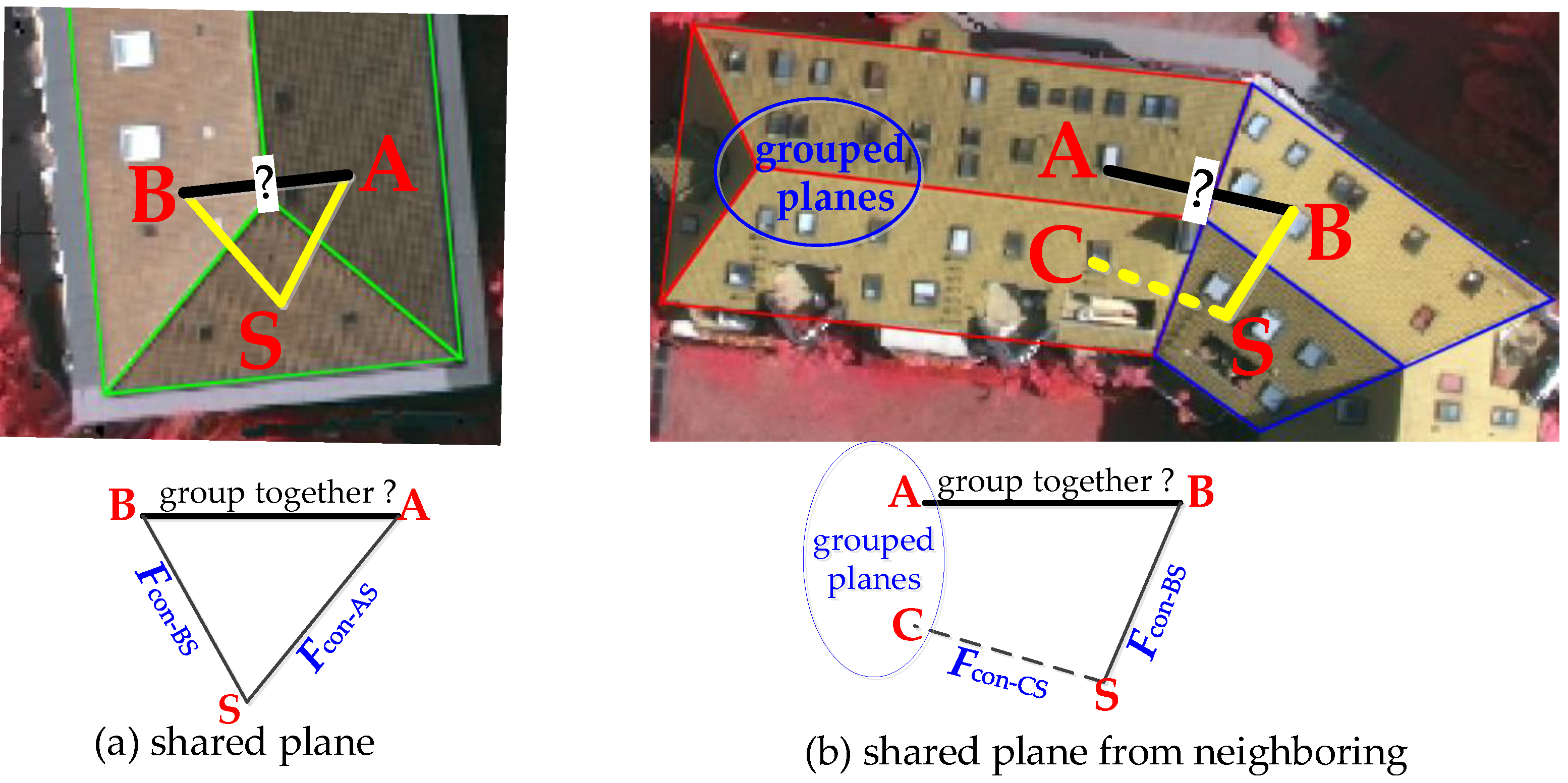

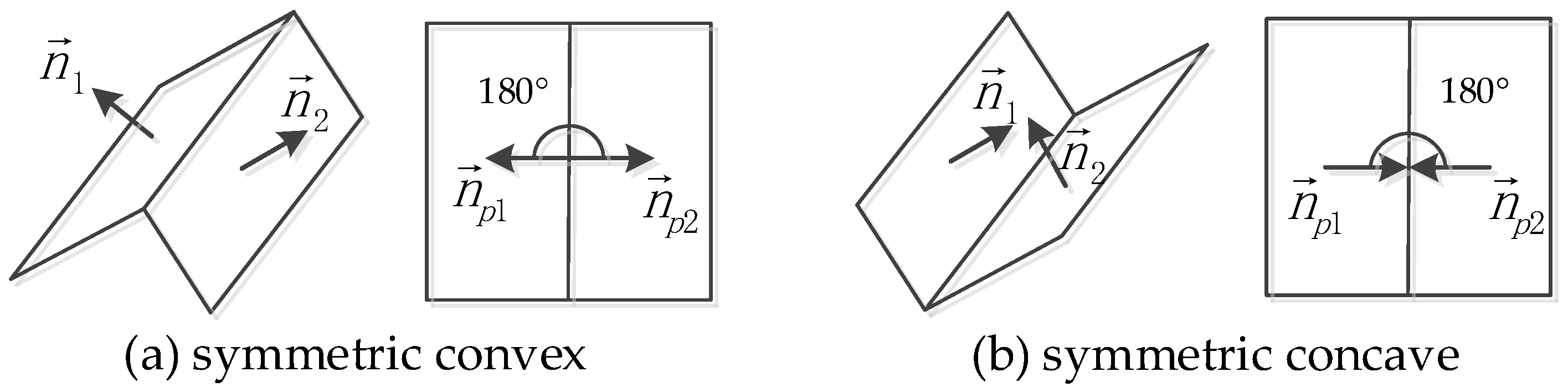

3.3.2. Construction of the Roof Connection Graph

3.3.3. Progressive Decomposition for Subparts Extraction

| Algorithm 1. Progressive Decomposition |
|---|
| Input: a roof connection graph G and roof planar primitives PS = [Lp] |
| Output: roof subparts [GSub] and an initial building hierarchical tree T |
| 1: While PS ≠ ∅ do |
| 2: Find the planar primitive Lp0 with the largest area in PS |
| 3: Create an empty roof plane set GSub and initialize it with plane Lp0 |
| 4: Generate GSub by iteratively decomposing G using (FDist, FCon, FCC) |
| 5: Update the nodes of the building hierarchical tree T from GSub |
| 6: For Lpi ∈ GSub do |
| 7: Remove plane Lpi from PS |
| 8: Update the nodes and edges of G |
| 9: End For |
| 10: End While |
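
The control flow of Algorithm 1 can be illustrated with a minimal Python sketch. It is not the authors' implementation. The criteria (FDist, FCon, FCC) are not defined in this listing, so they are represented by a hypothetical caller-supplied predicate `can_join`. Breadth-first region growing from the seed plane is likewise an assumption about how step 4 proceeds, and the flat dictionary used for the tree T is only a placeholder for the hierarchy. The names `progressive_decomposition`, `graph`, and `area` are illustrative.

```python
from collections import deque
from typing import Callable, Dict, List, Set, Tuple


def progressive_decomposition(
    graph: Dict[str, Set[str]],
    area: Dict[str, float],
    can_join: Callable[[Set[str], str, str], bool],
) -> Tuple[List[Set[str]], Dict[str, List[List[str]]]]:
    """Split a roof connection graph into subparts, largest seed plane first.

    graph    -- adjacency sets of the roof connection graph G
    area     -- area of each planar primitive in PS
    can_join -- hypothetical stand-in for the (FDist, FCon, FCC) criteria;
                called as can_join(current_subpart, plane_in_subpart, candidate)
    """
    # Work on a copy so the caller's graph is left untouched.
    g = {plane: set(nbrs) for plane, nbrs in graph.items()}
    remaining = set(g)                                  # PS
    subparts: List[Set[str]] = []                       # [GSub]
    tree: Dict[str, List[List[str]]] = {"building": []}  # placeholder for T

    while remaining:                                    # step 1
        # Step 2: largest remaining plane (name breaks ties deterministically).
        seed = max(remaining, key=lambda p: (area[p], p))
        sub = {seed}                                    # step 3
        queue = deque([seed])
        while queue:                                    # step 4 (assumed BFS growth)
            current = queue.popleft()
            for candidate in sorted(g[current]):
                if candidate not in sub and can_join(sub, current, candidate):
                    sub.add(candidate)
                    queue.append(candidate)
        subparts.append(sub)
        tree["building"].append(sorted(sub))            # step 5
        for plane in sub:                               # steps 6-9
            remaining.discard(plane)
            for nbr in g.pop(plane):
                if nbr in g:
                    g[nbr].discard(plane)
    return subparts, tree


# Toy chain A-B-C-D in which only the pairs (A, B) and (C, D) are compatible.
toy_graph = {"A": {"B"}, "B": {"A", "C"}, "C": {"B", "D"}, "D": {"C"}}
toy_area = {"A": 10.0, "B": 5.0, "C": 8.0, "D": 2.0}
compatible = {frozenset(("A", "B")), frozenset(("C", "D"))}
parts, hierarchy = progressive_decomposition(
    toy_graph, toy_area, lambda sub, a, b: frozenset((a, b)) in compatible
)
print(parts)
```

On the toy input, plane A seeds the first subpart and absorbs B, and the next pass starts from C, the largest plane still in PS. Passing the growing subpart to `can_join` leaves room for criteria that depend on the whole group and not only on a pair of planes.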

3.4. Generation of 3D Building Models

4. Experimental Results

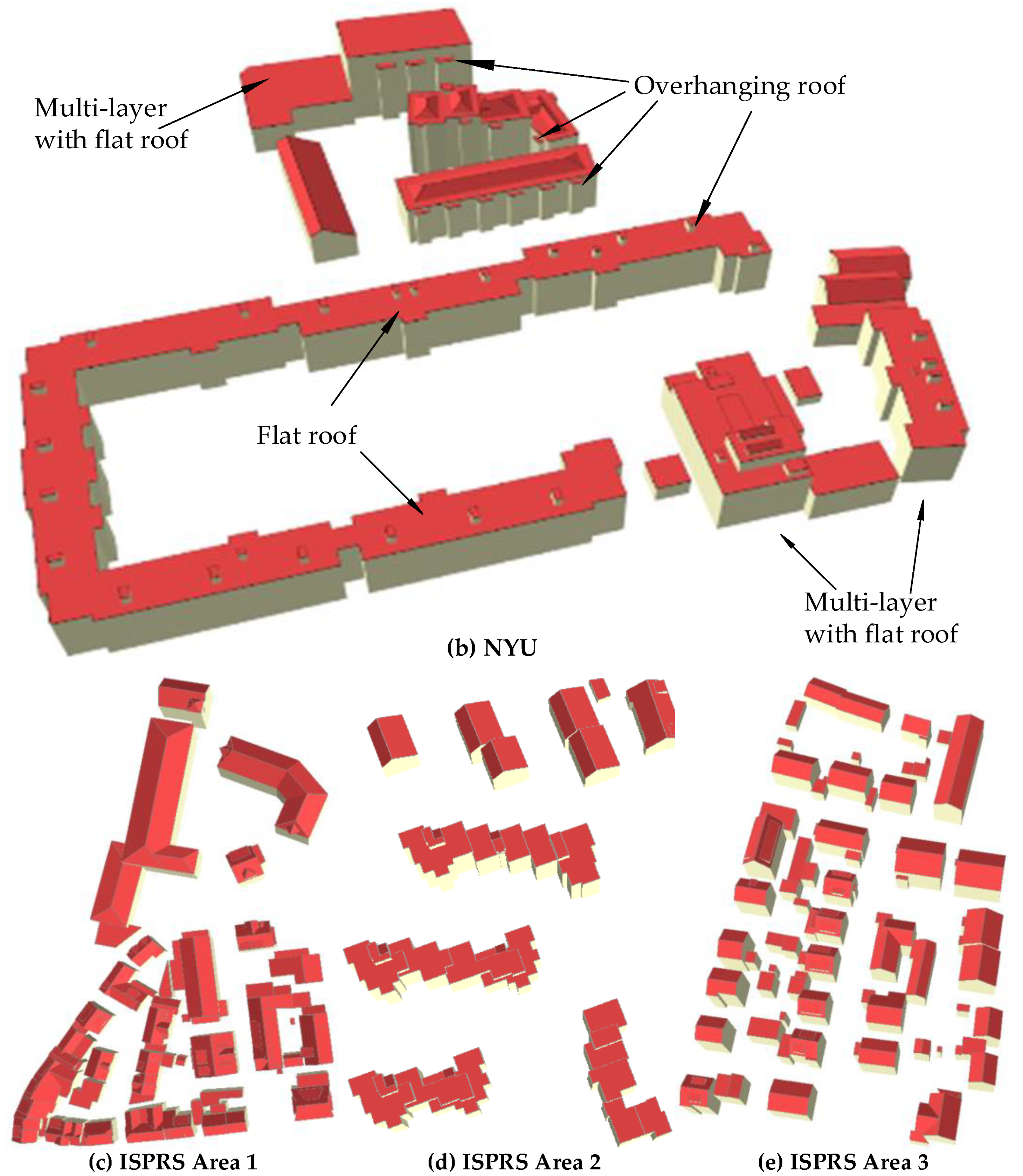

4.1. Description of the Datasets

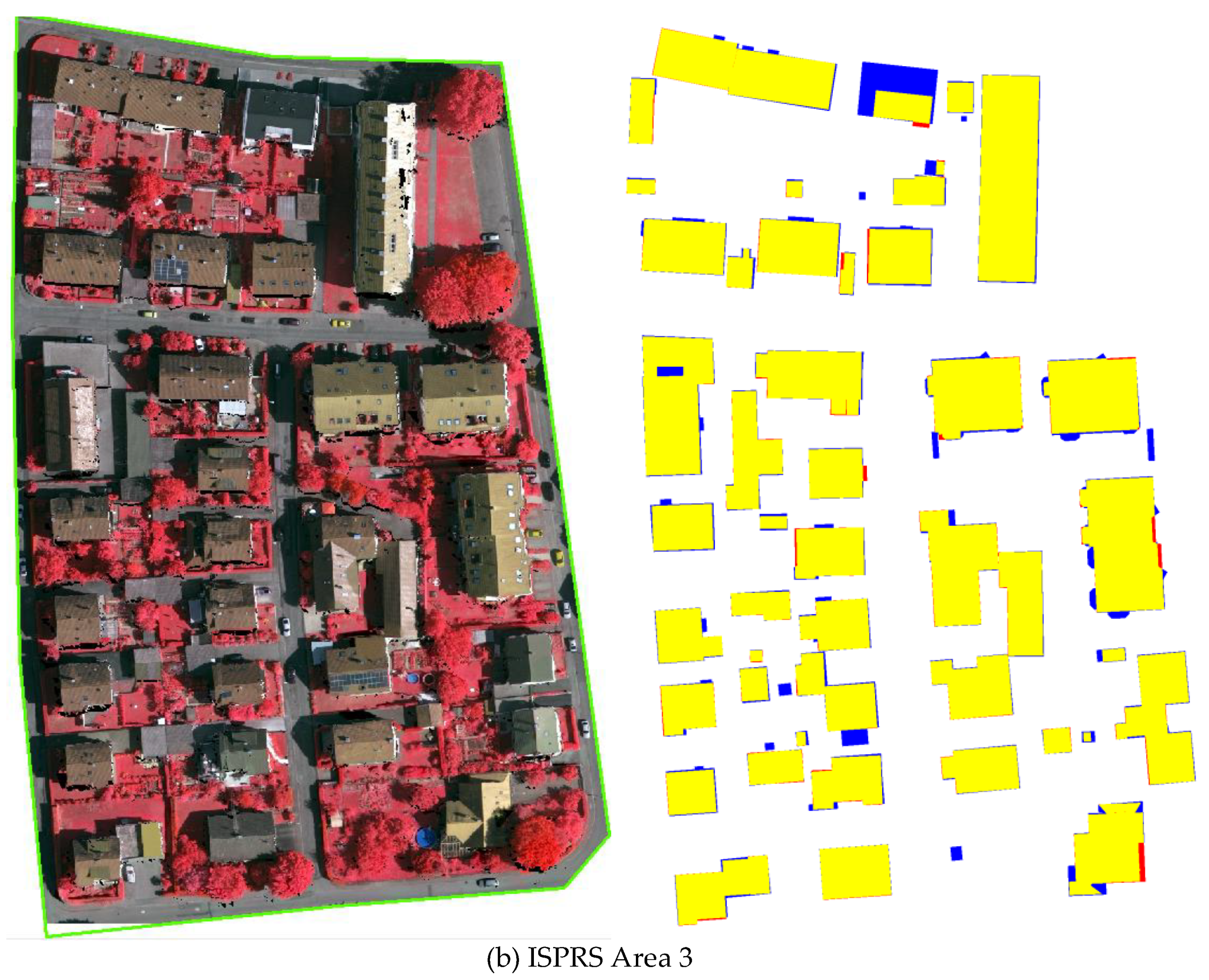

4.2. Results of Model Reconstruction

5. Discussion

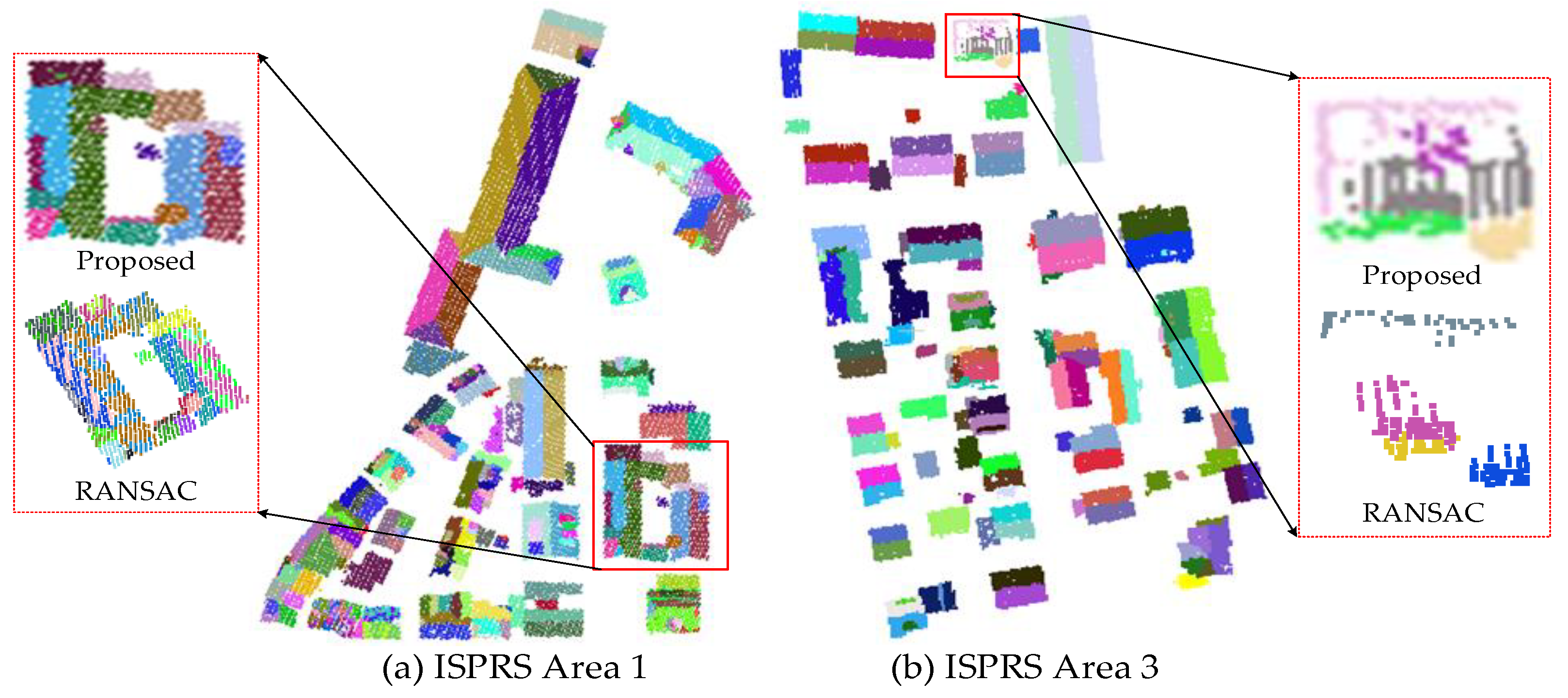

5.1. Visual Analysis of the Decomposition Results

5.2. Performance Analysis of Multi-Label Energy Optimization

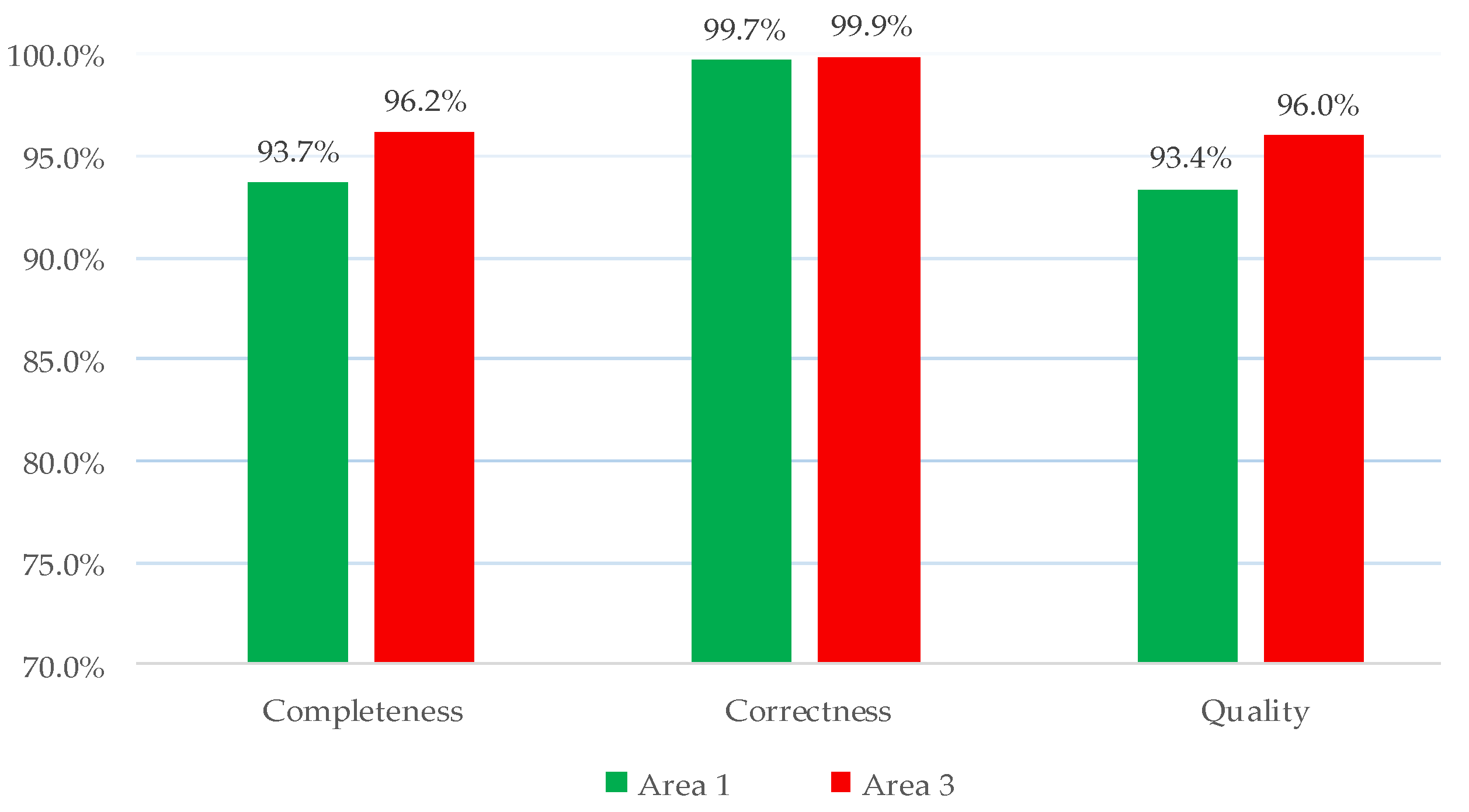

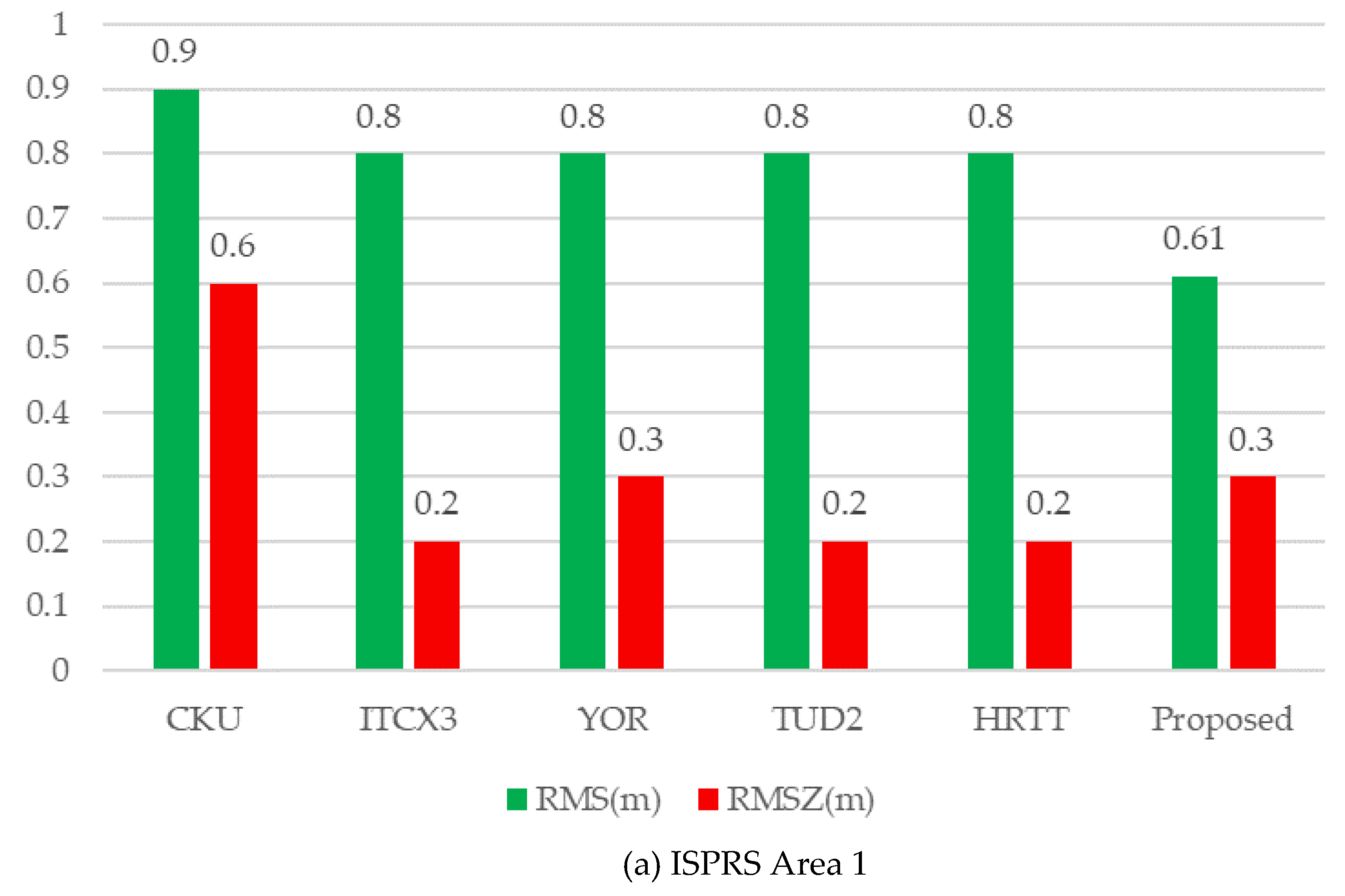

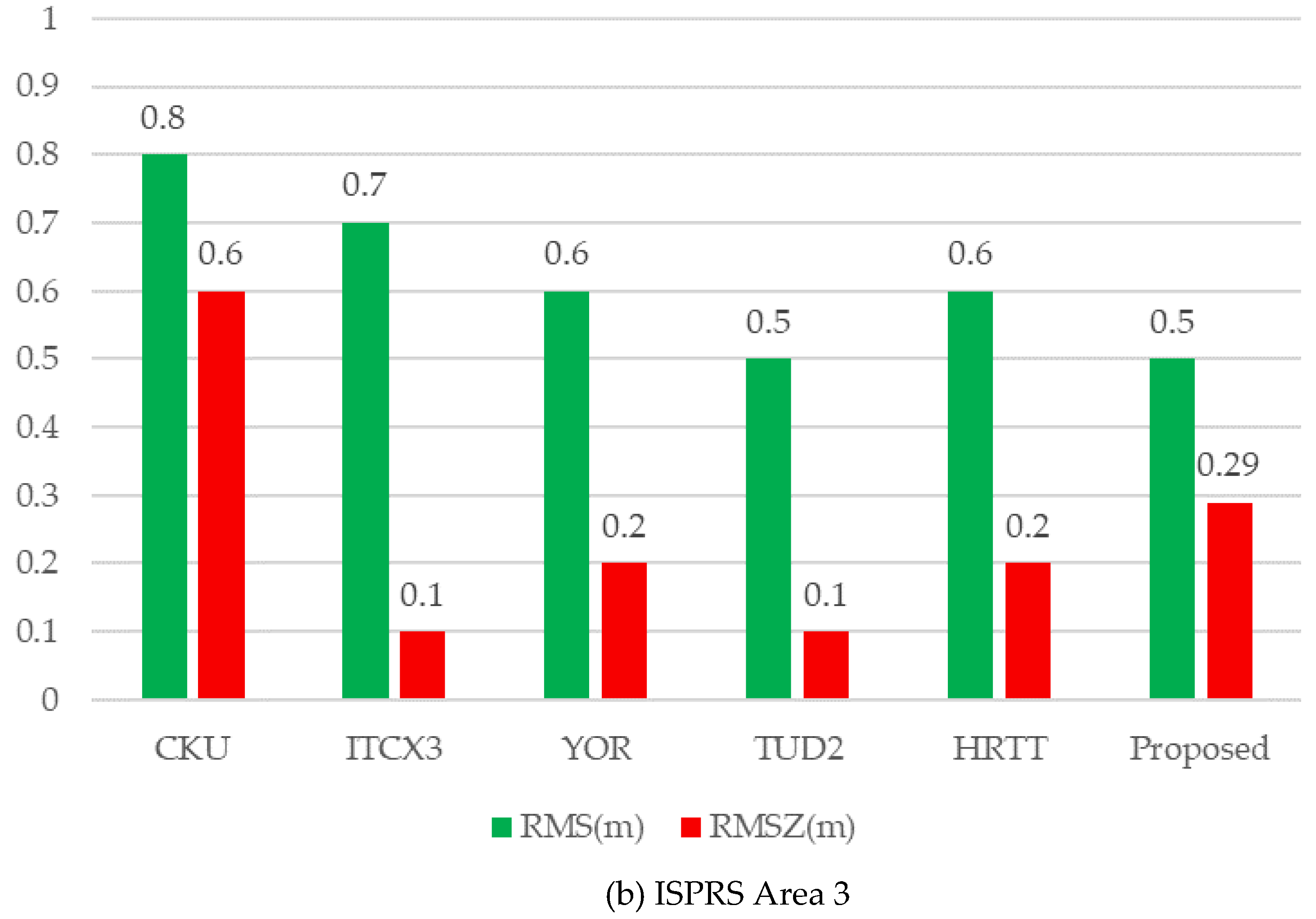

5.3. Accuracy Assessments on ISPRS Benchmark Dataset

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Items | Area 1 | Area 3 |
|---|---|---|
| Reconstructed planes | 202 | 133 |
| True Positive (TP) | 201 | 130 |
| False Positive (FP) | 1 | 3 |
| False Negative (FN) | 22 | 34 |
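
The counts above can be turned into per-plane rates with a short Python sketch. It assumes the commonly used definitions completeness = TP / (TP + FN), correctness = TP / (TP + FP), and quality = TP / (TP + FP + FN), and it treats the single TP count as valid on both the reference side and the reconstruction side. Neither assumption is stated in the table itself. The function name `plane_metrics` is illustrative.

```python
def plane_metrics(tp: int, fp: int, fn: int) -> dict:
    """Per-plane evaluation rates under the assumed standard definitions."""
    return {
        "completeness": tp / (tp + fn),   # share of reference planes recovered
        "correctness": tp / (tp + fp),    # share of reconstructed planes that are valid
        "quality": tp / (tp + fp + fn),   # combined measure
    }


# (TP, FP, FN) copied from the table above.
counts = {"Area 1": (201, 1, 22), "Area 3": (130, 3, 34)}
for name, (tp, fp, fn) in counts.items():
    rates = plane_metrics(tp, fp, fn)
    print(name, {key: round(100 * value, 1) for key, value in rates.items()})
```

Under these assumptions the sketch gives roughly 90.1% completeness and 99.5% correctness for Area 1, and roughly 79.3% completeness and 97.7% correctness for Area 3. These figures are derived here from the table counts and are not values quoted from the article.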

| Dataset | Building Points | Iterations | Run Time (min), Proposed Method | Run Time (min), RANSAC |
|---|---|---|---|---|
| ISPRS Area 1 | 24,971 | 4 | 1.7 | 0.9 |
| ISPRS Area 2 | 34,364 | 3 | 2.2 | 1.1 |
| ISPRS Area 3 | 39,938 | 4 | 2.6 | 1.2 |
| Guangdong | 76,824 | 5 | 4.3 | 2.1 |
| NYU | 111,289 | 3 | 5.7 | 3.9 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hu, P.; Miao, Y.; Hou, M. Reconstruction of Complex Roof Semantic Structures from 3D Point Clouds Using Local Convexity and Consistency. Remote Sens. 2021, 13, 1946. https://doi.org/10.3390/rs13101946