1. Introduction

Desertification is a global environmental problem [1] that affects 14% of the world's land area and nearly 1 billion people, and it continues to expand at a rate of 57,000 km² per year. China is one of the countries most severely affected by desertification [2]. Nearly 1.7 million km² of China's territory consists of desert, Gobi, and desertified land, of which nearly 400,000 km² is desertified land, posing a serious threat to the ecological environment and to sustainable social and economic development in northern China [3].

The monitoring and evaluation of desertification has long attracted worldwide attention, and it is an important means of preventing and controlling desertification effectively [4]. Desertification has become a major topic of multidisciplinary research, and the rapid and accurate acquisition of desert vegetation information is the foundation and a key link of such research. Remote sensing has been widely used to monitor and evaluate land desertification because of its wide observation range, large information content, rapid data updating, and high accuracy.

At present, many traditional sensor data (such as MODIS and Landsat data, etc.) have been applied to desertification monitoring, but they can only capture the integrated vegetation cover information within the pixel [

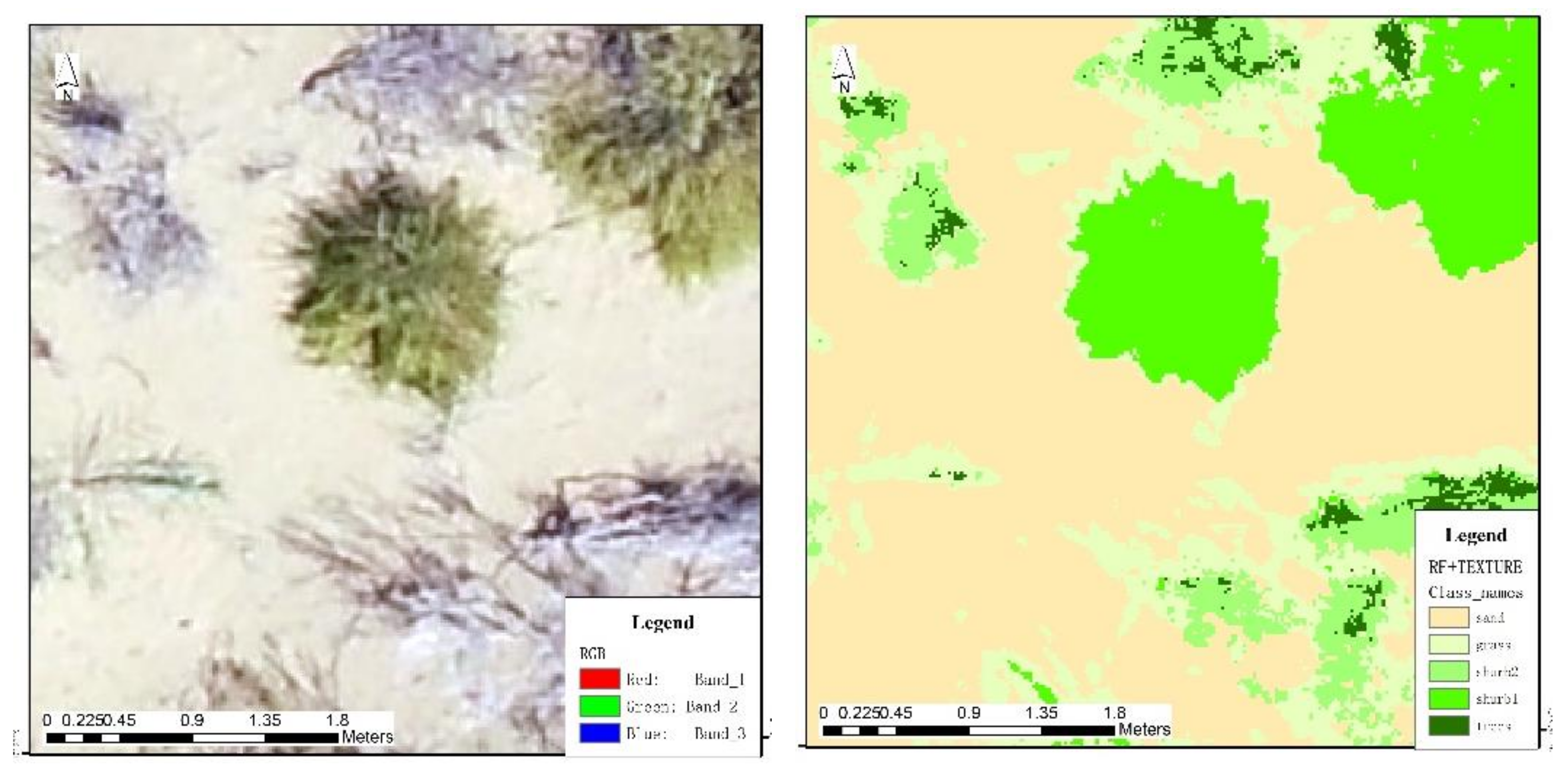

5], are unable to provide more specific information about desertification. The development of unmanned aerial vehicles (UAVs) can solve this problem. Compared with satellite remote sensing, UAVs with the high resolution image have rich geometric texture information [

5] and have the ability to capture detailed information. UAV with high resolution is rarely reported in desert vegetation mapping at home and abroad, especially at home.

A UAV is a powered, controllable, low-altitude flight platform capable of carrying a variety of equipment and performing a variety of missions [6]. Combined with remote sensing technology, it offers clear advantages over traditional satellite remote sensing, such as low cost, simple operation, fast image acquisition, and high spatial resolution [7]. UAV remote sensing has been widely used in land monitoring, urban management, urban vegetation mapping [8,9], geological disaster assessment, environmental monitoring, emergency support, and other important fields [10,11,12,13,14]. However, it has rarely been used to map desert vegetation. Two main characteristics can be used to describe desert vegetation in such imagery: vegetation indices and texture.

A vegetation index is a simple and effective measure of the status of surface vegetation. It reflects vegetation vigor and cover, and it has become an important technical means for the remote sensing inversion of biophysical and biochemical parameters such as chlorophyll content, fractional vegetation cover (FVC), leaf area index (LAI), biomass, net primary productivity, and absorbed photosynthetically active radiation [15].

The reflection spectrum of healthy green vegetation in visible band is characterized by strong absorption of blue and red, strong reflection of green, and strong reflection in near-infrared band [

16]. Based on the reflection spectral characteristics of green vegetation, a large number of vegetation indices based on visible-infrared band calculation have been proposed in the field of vegetation remote sensing. Common vegetation indexes based on multi-spectral bands include ratio vegetation index (RVI) [

17], differential vegetation index (DVI) [

18], normalized difference vegetation index (NDVI) [

19] and soil regulatory vegetation index (SAVI) [

20], etc. The operational bands are mainly based on visible and near-infrared spectral bands. The acquisition of near-infrared spectrum information requires the use of high-altitude remote sensing technology. However, due to its difficulties in acquisition and poor timeliness, the calculation of vegetation index based on the obtained remote sensing data cannot distinguish vegetation in a small range, which leads to the large errors and lag defects in the extracted vegetation information. UAV images are characterized by high resolution, rich texture features and easy access, and contain visible band data. Visible light vegetation index can be established based on visible light channels, and vegetation coverage can be estimated after vegetation information is extracted. In the visible band, the reflectance of green vegetation is high in the green light channel, but low in the red and blue light channels. Therefore, the calculation between the green light channel and the red and blue light channels can enhance the difference between vegetation and the surrounding ground objects, so as to facilitate the later extraction of target information more accurately. While there are many vegetation indexes based only on visible bands. Such as EXG (excess green), NGRDI (normalized green-red difference index), NGBDI (normalized green-blue difference index), and RGRI (red-green ratio index), MSAVI (modified soil-adjusted vegetation index) [

20], EGRBDI (excess green-red-blue difference index) [

21]. However, the spectral curve of desert vegetation usually does not have typical characteristics of healthy vegetation, and there is no obvious strong absorption valley and reflection peak, and the spectral information of vegetation detected on remote sensing image is relatively weak [

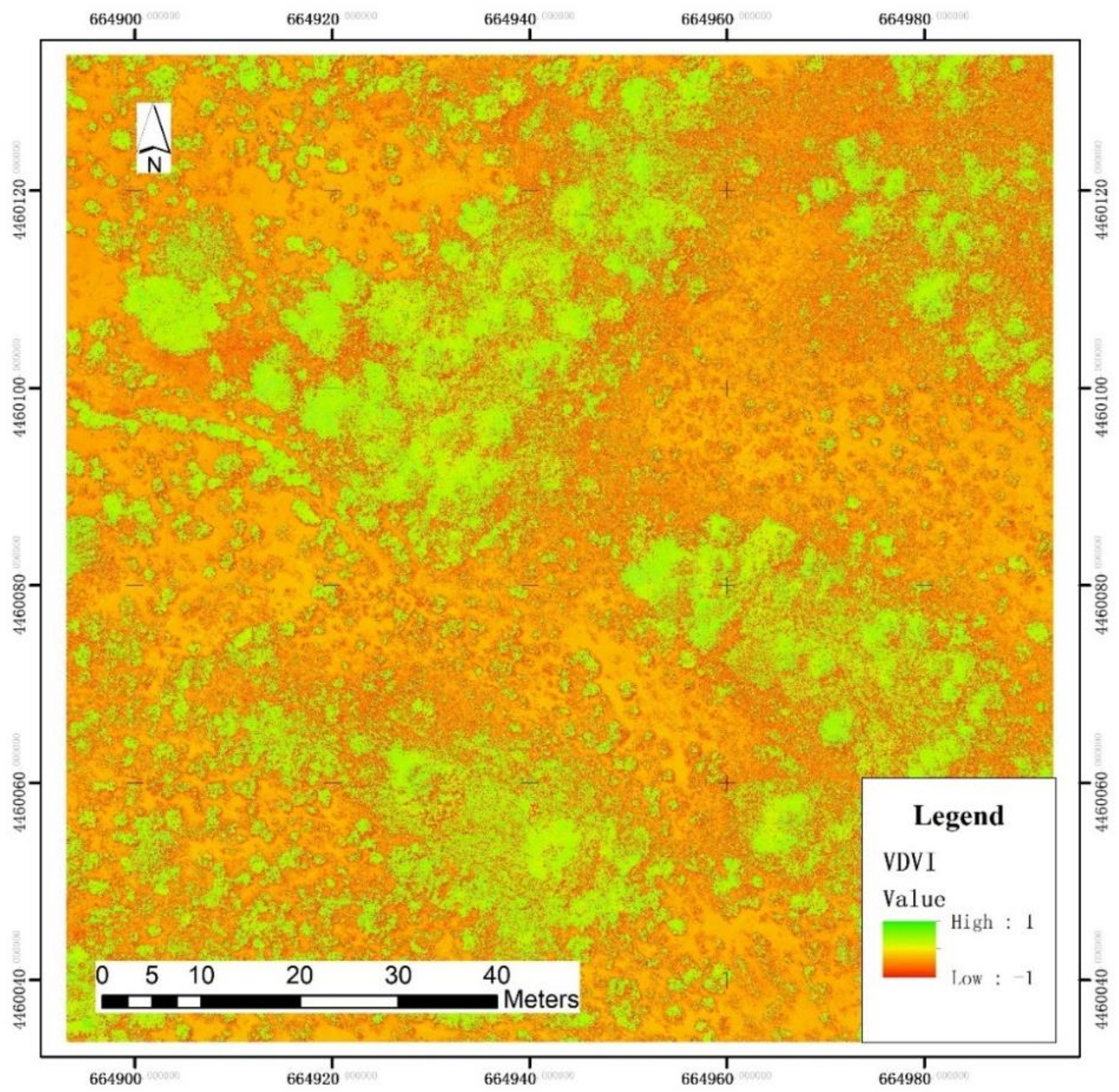

22]. VDVI (visible light difference vegetation index) will play a better role and have better applicability in the extraction of vegetation information of UAV image in visible band only [

16]. Therefore, visible light difference vegetation index (VDVI) is used in this study to estimate desert vegetation coverage rate.
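The visible-band indices named above have simple closed forms in terms of the red (R), green (G), and blue (B) channels; for example, VDVI = (2G - R - B) / (2G + R + B). The following NumPy sketch computes these indices and a simple VDVI-threshold estimate of vegetation coverage. It is illustrative only: the function names, the use of raw digital numbers as input, and the default threshold of 0 are assumptions (in practice the threshold is usually chosen from the image histogram), and EXG is computed here on raw channels rather than on normalized chromatic coordinates, as some studies do.

```python
import numpy as np


def visible_indices(rgb):
    """Compute common visible-band vegetation indices.

    rgb: array of shape (H, W, 3) holding R, G, B values (assumed raw DNs
    or reflectance). Returns a dict of (H, W) float arrays.
    """
    rgb = np.asarray(rgb, dtype=np.float64)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    eps = 1e-12  # guards against division by zero on black pixels
    return {
        "EXG": 2 * g - r - b,                          # excess green (raw channels)
        "NGRDI": (g - r) / (g + r + eps),              # normalized green-red difference
        "NGBDI": (g - b) / (g + b + eps),              # normalized green-blue difference
        "RGRI": r / (g + eps),                         # red-green ratio
        "VDVI": (2 * g - r - b) / (2 * g + r + b + eps),  # visible-band difference VI
    }


def vegetation_coverage(vdvi, threshold=0.0):
    """Fraction of pixels whose VDVI exceeds a (hypothetical) threshold.

    Returns (coverage_fraction, boolean_vegetation_mask).
    """
    mask = np.asarray(vdvi) > threshold
    return float(mask.mean()), mask
```

On a 2×2 patch with two greenish pixels and two sand-colored pixels, VDVI is positive only for the greenish pixels, so the estimated coverage is 0.5.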

Vegetation index can accurately distinguish vegetation and non-vegetation, but the vegetation index of shrub and herb is similar, so vegetation index cannot be used to distinguish vegetation [

23]. It is difficult to distinguish the phenomena of “same object different spectrum” and “same spectrum foreign body” in the image base on the pixels [

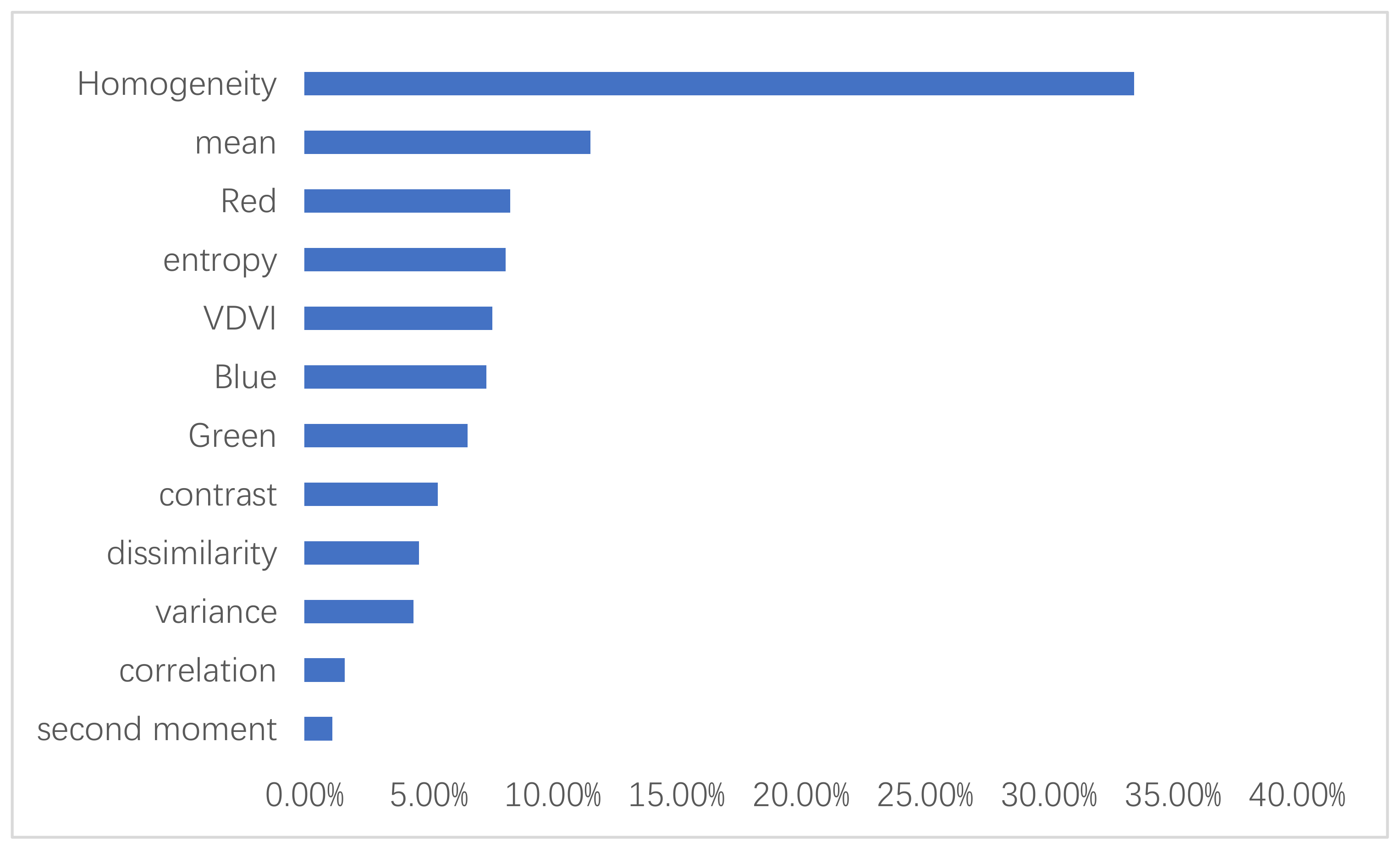

24]. Moreover, the accuracy is low with the high-resolution remote sensing, and the single-scale object-oriented segmentation classification is prone to “over-segmentation” and “under-segmentation” problems. The experience is needed for the researches to determine an optimal scale level to segment objects. To solve this problem, there have been several geospatial techniques to improve the classification accuracy, as an alternative to spectral-based traditional classifiers. Such as the GLCM (gray level co-occurrence matrix [

25]); fractal analysis [

26]; spatial auto-correlation and wavelet transforms [

27]. In the study, the GLCM is adopted due to it is a widely used texture statistical analysis method and texture measurement technology.
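To make the GLCM concrete: for a chosen pixel offset, the GLCM counts how often gray level i co-occurs with gray level j, and Haralick-style statistics such as contrast, dissimilarity, homogeneity, angular second moment (ASM), and entropy are then computed from the normalized matrix. The NumPy sketch below builds a symmetric GLCM for a single non-negative offset over a whole patch. The quantization to 8 levels, the offset, and the choice of statistics are assumptions, since the specific GLCM settings are not given here; in a per-pixel classification these statistics would typically be evaluated in a moving window around each pixel.

```python
import numpy as np


def quantize(gray, levels=8):
    """Map 8-bit gray values (0-255) to integer levels 0..levels-1."""
    g = np.clip(np.asarray(gray, dtype=np.float64), 0, 255)
    return np.minimum((g * levels / 256.0).astype(np.intp), levels - 1)


def glcm(image, dx=1, dy=0, levels=8):
    """Symmetric, normalized GLCM for one non-negative offset (dx, dy).

    image: 2-D integer array already quantized to [0, levels).
    (1, 0) pairs each pixel with its right-hand neighbour.
    """
    img = np.asarray(image, dtype=np.intp)
    h, w = img.shape
    ref = img[0:h - dy, 0:w - dx]   # reference pixels
    nbr = img[dy:h, dx:w]           # neighbours at the given offset
    m = np.zeros((levels, levels), dtype=np.float64)
    np.add.at(m, (ref.ravel(), nbr.ravel()), 1)  # count (i, j) pairs
    m = m + m.T                     # count both directions (symmetric GLCM)
    return m / m.sum()


def glcm_features(p):
    """Common texture statistics from a normalized GLCM p."""
    i, j = np.indices(p.shape)
    nz = p[p > 0]                   # skip zeros so log() is defined
    return {
        "contrast": float(np.sum(p * (i - j) ** 2)),
        "dissimilarity": float(np.sum(p * np.abs(i - j))),
        "homogeneity": float(np.sum(p / (1.0 + (i - j) ** 2))),
        "ASM": float(np.sum(p ** 2)),             # angular second moment
        "entropy": float(-np.sum(nz * np.log(nz))),
    }
```

For a 2×2 checkerboard with two gray levels and a horizontal offset, every neighbour pair differs by one level, so contrast is 1.0 and entropy is ln 2; a uniform patch gives contrast 0 and ASM 1.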

Which classification method is best suited to desert mapping? With the rapid development of remote sensing technology, pixel-based image classification and object-based image analysis (OBIA) have both been widely used to classify high-resolution remote sensing images [28,29,30,31]. The key idea of OBIA is to incorporate numerous textural, spectral, and spatial features into a classification built on multi-scale image segmentation, which can improve accuracy. However, OBIA poses challenges: selecting suitable features for classification and finding the optimal scale for segmentation [32]. Its accuracy therefore depends on the researcher's experience and a priori knowledge. Deep learning is also widely used in remote sensing applications [33,34,35], including image registration, classification, object detection, and land-use classification, but it requires a large amount of training data and has poor interpretability. In this study, the random forest classifier [36], a robust classifier, was adopted instead of OBIA because of its simplicity and high performance [37].
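A minimal scikit-learn sketch of pixel-based random forest classification is shown below. It uses synthetic, well-separated feature vectors as a stand-in for per-pixel features (for example R, G, B, VDVI, and a GLCM statistic); the hypothetical data generator `make_synthetic_pixels`, the 70/30 split, and `n_estimators=200` are assumptions, not settings reported by this study. The out-of-bag score gives an internal accuracy estimate, and `feature_importances_` indicates which inputs contribute most to the classification.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split


def make_synthetic_pixels(n_per_class=200, n_classes=5, seed=0):
    """Hypothetical stand-in for labelled per-pixel feature vectors.

    Each class is a Gaussian blob centred on a scaled unit vector, so the
    classes are well separated; real features would come from the imagery.
    """
    rng = np.random.default_rng(seed)
    centers = 5.0 * np.eye(n_classes)           # one centre per class
    X = np.vstack([c + rng.normal(0.0, 0.5, size=(n_per_class, n_classes))
                   for c in centers])
    y = np.repeat(np.arange(n_classes), n_per_class)
    return X, y


X, y = make_synthetic_pixels()
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y)

# Random forest: an ensemble of decision trees trained on bootstrap samples.
rf = RandomForestClassifier(n_estimators=200, oob_score=True, random_state=0)
rf.fit(X_train, y_train)

acc = rf.score(X_test, y_test)          # accuracy on held-out pixels
importances = rf.feature_importances_   # relative contribution of each feature
```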

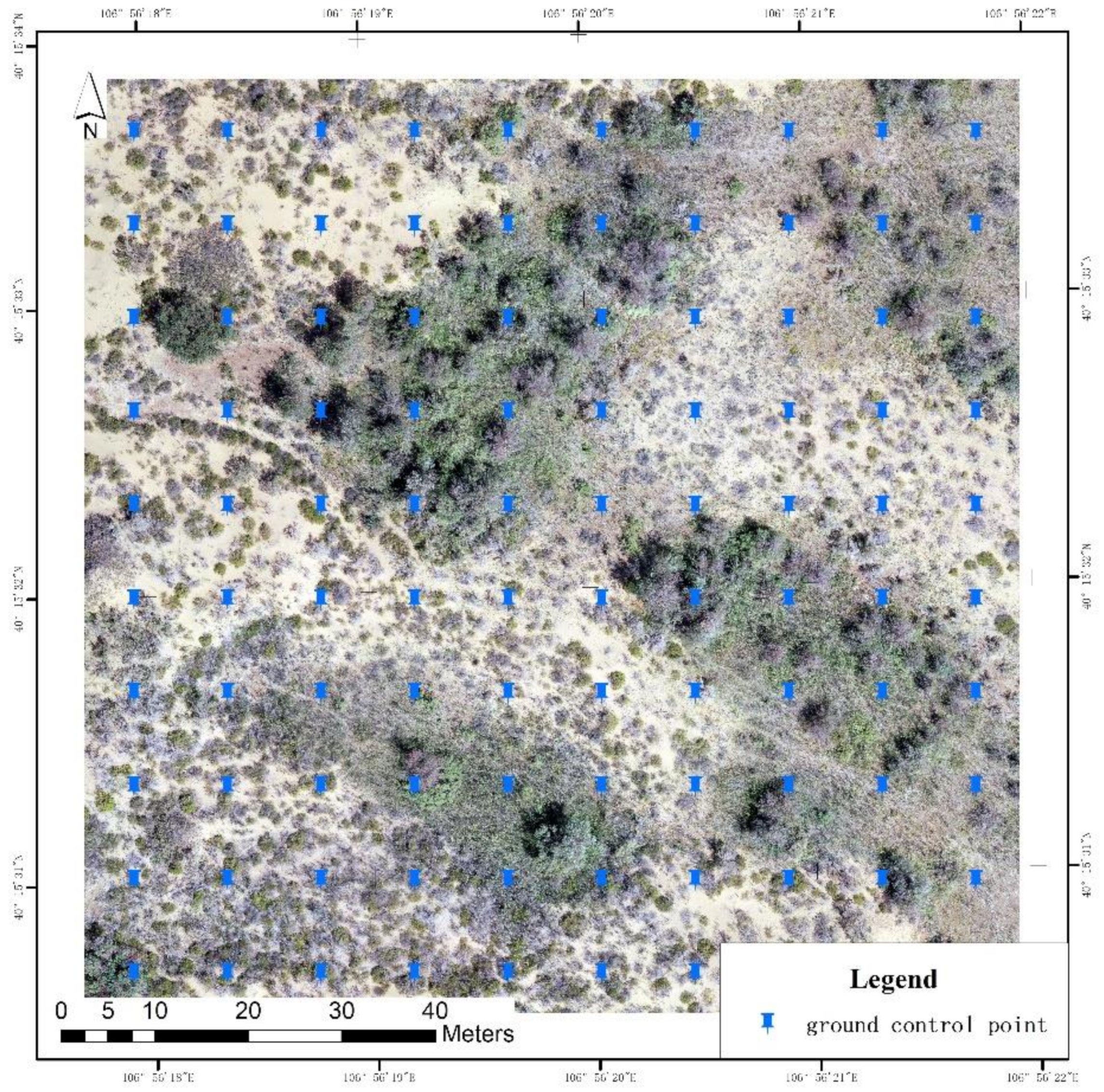

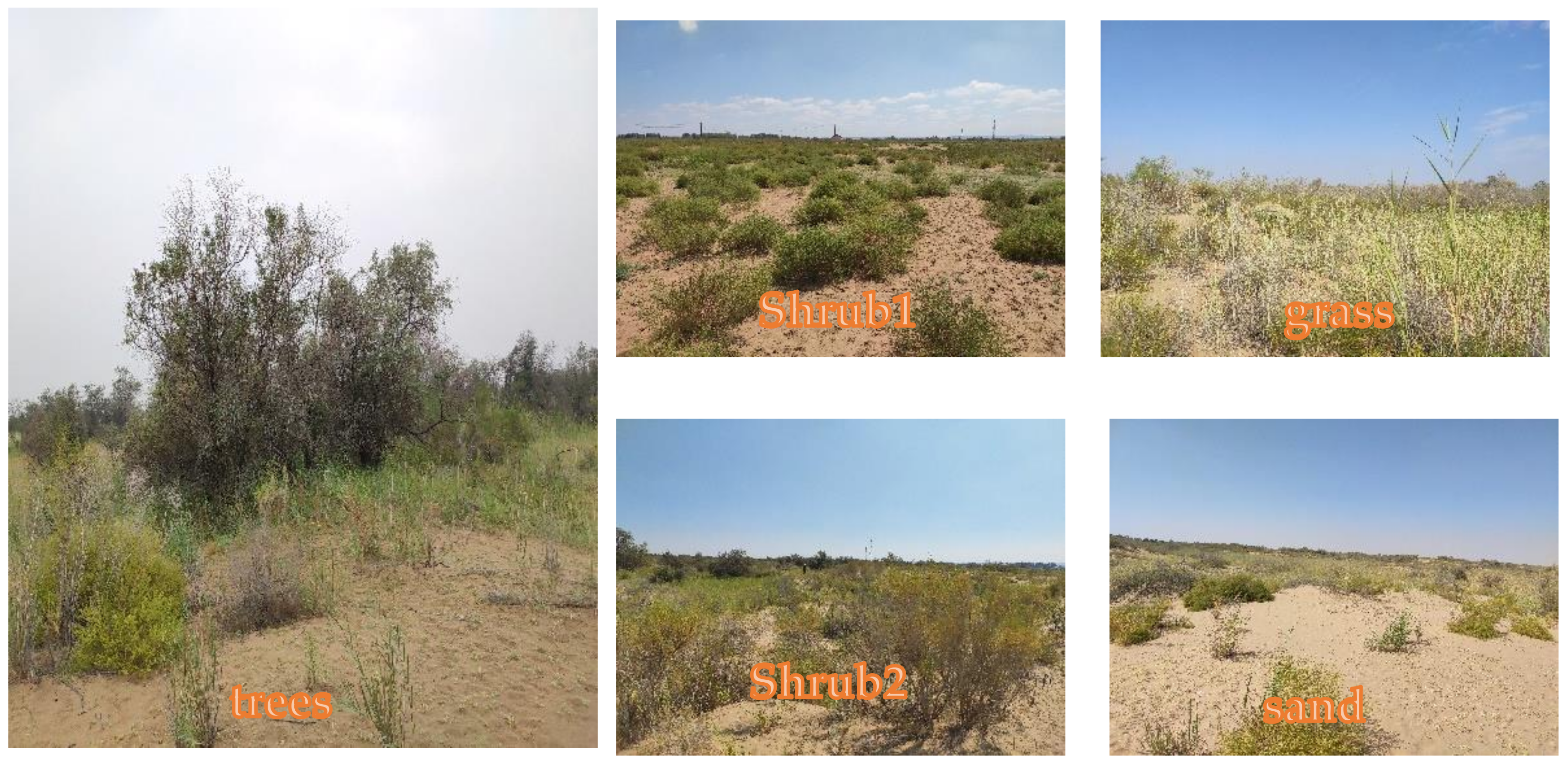

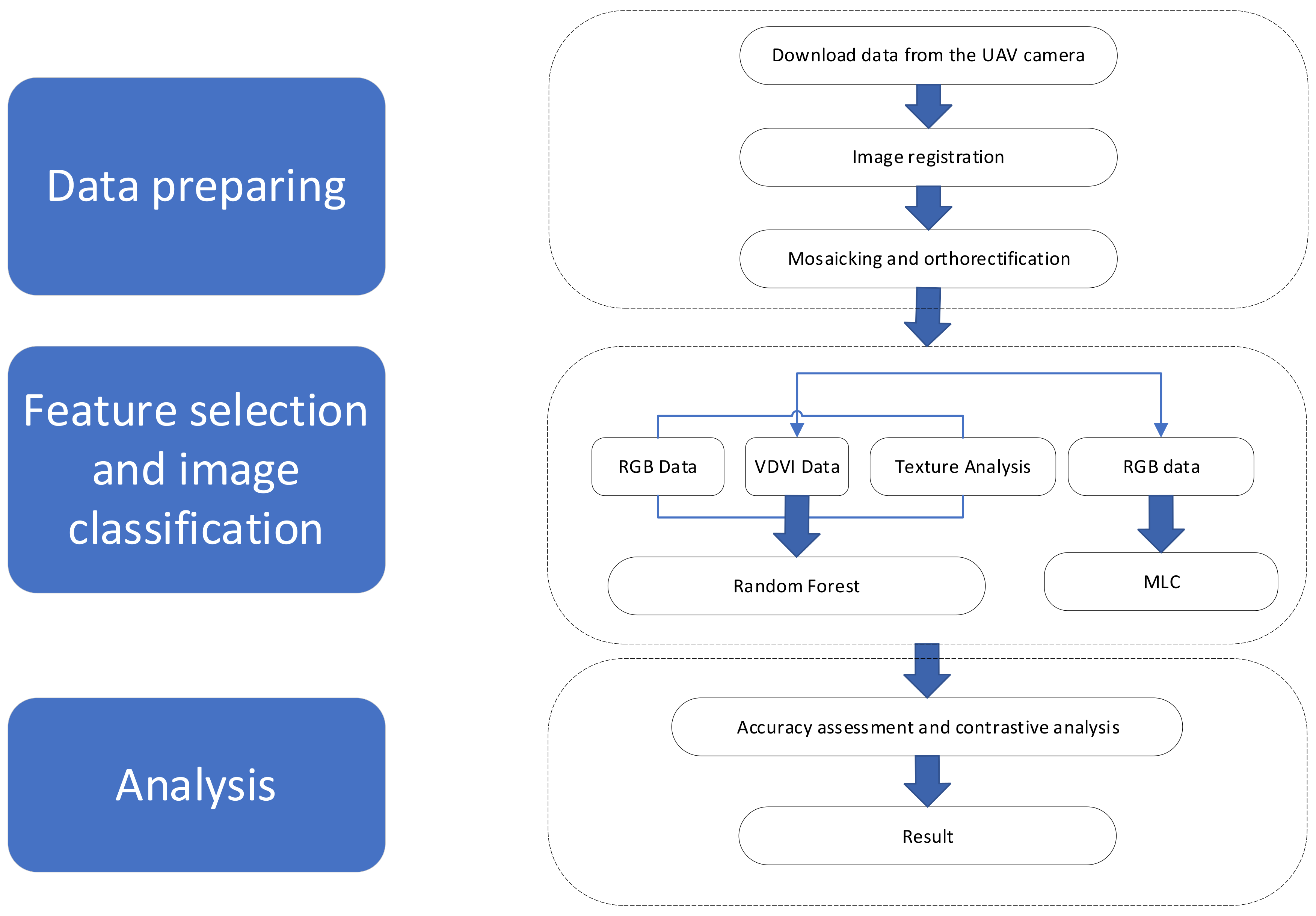

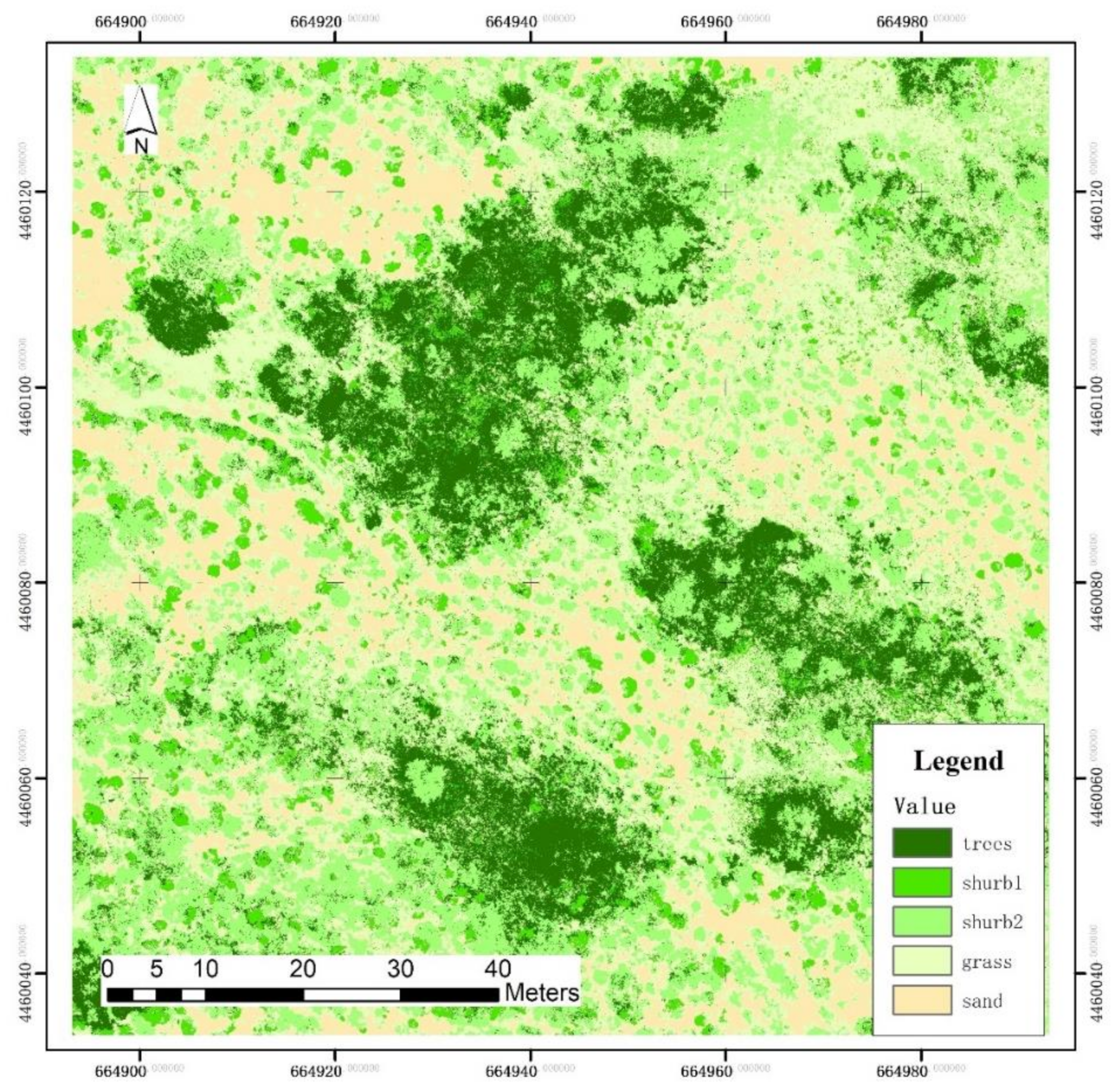

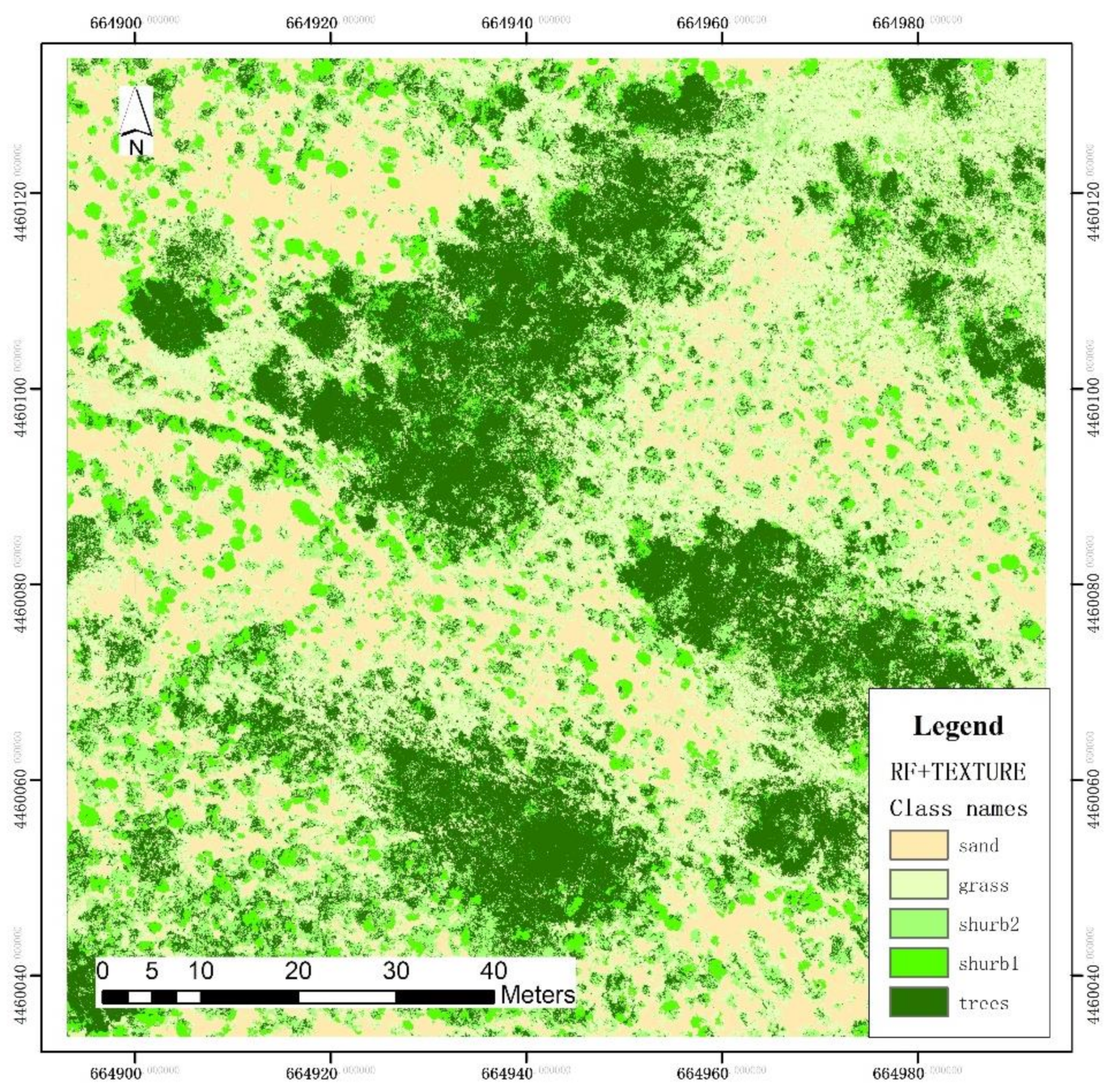

Therefore, in this study, the Random Forest classifier was applied to high-resolution UAV RGB data, together with texture and VDVI features, to map desert vegetation. The desert land cover was classified into five typical types: trees, shrub1 (Artemisia desertorum Spreng.), shrub2 (Haloxylon ammodendron (C. A. Mey.) Bunge), grass, and sand. The aims of the study are (1) to determine whether the Random Forest classifier performs well in desert vegetation mapping; (2) to test whether including VDVI and texture features improves classification accuracy in heterogeneous desert vegetation; and (3) to compare the proposed method with the traditional Maximum Likelihood classifier to verify its performance.
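For the comparison in aim (3), the traditional maximum likelihood classifier models each class as a multivariate Gaussian and assigns a pixel to the class with the largest discriminant g_c(x) = -1/2 ln|Σ_c| - 1/2 (x - μ_c)ᵀ Σ_c⁻¹ (x - μ_c). The NumPy sketch below assumes equal class priors and adds a small ridge to each covariance matrix for numerical stability; both choices, and the class name `GaussianMLClassifier`, are hypothetical. It exposes `fit`/`predict` so it can be used on the same feature vectors as the random forest.

```python
import numpy as np


class GaussianMLClassifier:
    """Maximum likelihood classifier: one multivariate Gaussian per class,
    equal priors (an assumption of this sketch)."""

    def fit(self, X, y, ridge=1e-6):
        X = np.asarray(X, dtype=np.float64)
        y = np.asarray(y)
        self.classes_ = np.unique(y)
        self.means_, self.inv_covs_, self.log_dets_ = [], [], []
        for c in self.classes_:
            Xc = X[y == c]
            mu = Xc.mean(axis=0)
            # Small ridge keeps the covariance matrix invertible.
            cov = np.cov(Xc, rowvar=False) + ridge * np.eye(X.shape[1])
            _sign, logdet = np.linalg.slogdet(cov)
            self.means_.append(mu)
            self.inv_covs_.append(np.linalg.inv(cov))
            self.log_dets_.append(logdet)
        return self

    def decision_function(self, X):
        """Per-class Gaussian log-likelihood, up to a shared constant."""
        X = np.asarray(X, dtype=np.float64)
        scores = []
        for mu, inv, ld in zip(self.means_, self.inv_covs_, self.log_dets_):
            d = X - mu
            maha = np.einsum("ij,jk,ik->i", d, inv, d)  # squared Mahalanobis distance
            scores.append(-0.5 * (ld + maha))
        return np.column_stack(scores)

    def predict(self, X):
        return self.classes_[np.argmax(self.decision_function(X), axis=1)]
```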

Overall, the main aim is to measure desert vegetation and to create accurate desert land cover maps (accuracy >80%) for future use in ecological modeling of desert landscapes. In this study, a hybrid classification approach based on pixel-level methods was used for a subset of these cover types to accurately reflect small-scale spatial heterogeneity of ecological relevance.
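The >80% target is read here as an overall-accuracy threshold; that reading is an assumption. Map accuracy is commonly summarized from a confusion matrix by overall accuracy (OA), Cohen's kappa, and per-class producer's and user's accuracies. The NumPy sketch below computes these; class labels are assumed to be integers 0..n_classes-1, and a class that is never predicted (or never present in the reference data) would cause a division by zero in the per-class accuracies.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, n_classes):
    """Rows = reference classes, columns = predicted classes."""
    cm = np.zeros((n_classes, n_classes), dtype=np.int64)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm


def accuracy_report(cm):
    """Overall accuracy, Cohen's kappa, producer's and user's accuracy."""
    cm = np.asarray(cm, dtype=np.float64)
    n = cm.sum()
    diag = np.diag(cm)
    oa = diag.sum() / n
    # Expected agreement by chance, from row and column marginals.
    pe = np.sum(cm.sum(axis=0) * cm.sum(axis=1)) / n ** 2
    kappa = (oa - pe) / (1.0 - pe)
    producer = diag / cm.sum(axis=1)  # 1 - omission error, per reference class
    user = diag / cm.sum(axis=0)      # 1 - commission error, per mapped class
    return {"OA": oa, "kappa": kappa, "producer": producer, "user": user}
```

A map would then meet the assumed target when `accuracy_report(cm)["OA"] > 0.80`.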