Fully Convolutional Networks and a Manifold Graph Embedding-Based Algorithm for PolSAR Image Classification

Abstract

1. Introduction

1.1. Traditional SAR Image Classification

1.2. SAR Image Classification Based on Deep Learning

1.3. Problems and Motivation

- (1) Scattering mechanism: Electromagnetic waves scatter in different ways, such as plane scattering and dihedral scattering, and each pixel is formed by a mixture of these scattering mechanisms. As a result, different objects can share the same spectrum while identical objects can exhibit different spectra in high-resolution SAR images, which increases the difficulty of semantic classification. In addition, speckle noise in PolSAR imagery can easily lead to misclassification.

- (2) Complex data format: In terms of the polarimetric coherency matrix, each pixel in a PolSAR image is a complex matrix. For a large PolSAR image, there is considerable redundancy among pixels, so extracting effective features for ground objects becomes one of the major difficulties. In addition, because different kinds of terrain have various scales and shapes, it is hard for a single method to classify all objects well.

- (3) Semantic gap: In machine vision tasks, people do not focus on individual pixels but on the targets formed by pixels. Pixels are discrete points with no specific meaning of their own; only when many of them aggregate to form targets can they be classified. However, discrete pixels with large differences in their underlying features (such as gray-scale features) may well represent the same object. This is the semantic gap problem: a mismatch between underlying features and high-level semantics. To overcome it and obtain a region-consistent classification result, higher-level semantic features should be mined.
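To make the complex data format in item (2) concrete, the following is a minimal sketch of how a per-pixel 3 × 3 complex coherency matrix can be formed from the scattering channels. It assumes the standard monostatic, reciprocal case with the Pauli scattering vector; the function name `coherency_matrix`, its arguments and the boxcar multi-looking window are illustrative choices, not details taken from this paper.

```python
import numpy as np

def coherency_matrix(s_hh, s_hv, s_vv, window=3):
    """Return a (H, W, 3, 3) array of multi-looked coherency matrices T = <k k^H>.

    s_hh, s_hv, s_vv: complex arrays of shape (H, W) (hypothetical inputs).
    window: odd side length of the boxcar averaging window (an assumption).
    """
    # Pauli scattering vector k = [S_HH + S_VV, S_HH - S_VV, 2 S_HV] / sqrt(2)
    k = np.stack([s_hh + s_vv, s_hh - s_vv, 2.0 * s_hv], axis=-1) / np.sqrt(2.0)
    # Per-pixel outer product k k^H, shape (H, W, 3, 3)
    t = k[..., :, None] * np.conj(k[..., None, :])
    # Boxcar averaging over a window x window neighbourhood (edge-padded)
    pad = window // 2
    padded = np.pad(t, ((pad, pad), (pad, pad), (0, 0), (0, 0)), mode="edge")
    h, w = t.shape[:2]
    out = np.zeros_like(t)
    for dy in range(window):
        for dx in range(window):
            out += padded[dy:dy + h, dx:dx + w]
    return out / (window * window)
```

Each output matrix is Hermitian, so it carries nine real degrees of freedom per pixel (three real diagonal entries and three complex off-diagonal entries), which is one way to see why feature extraction from such data is not straightforward.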

1.4. Contributions and Structure

- (1)

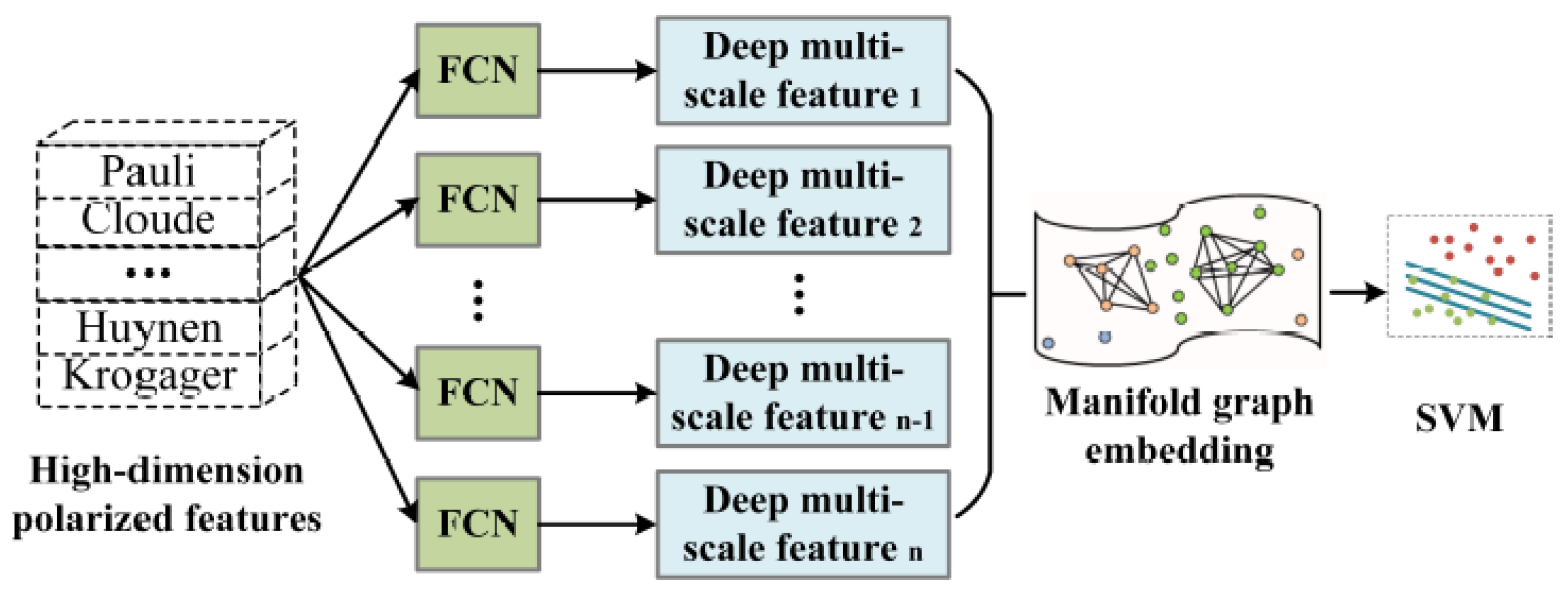

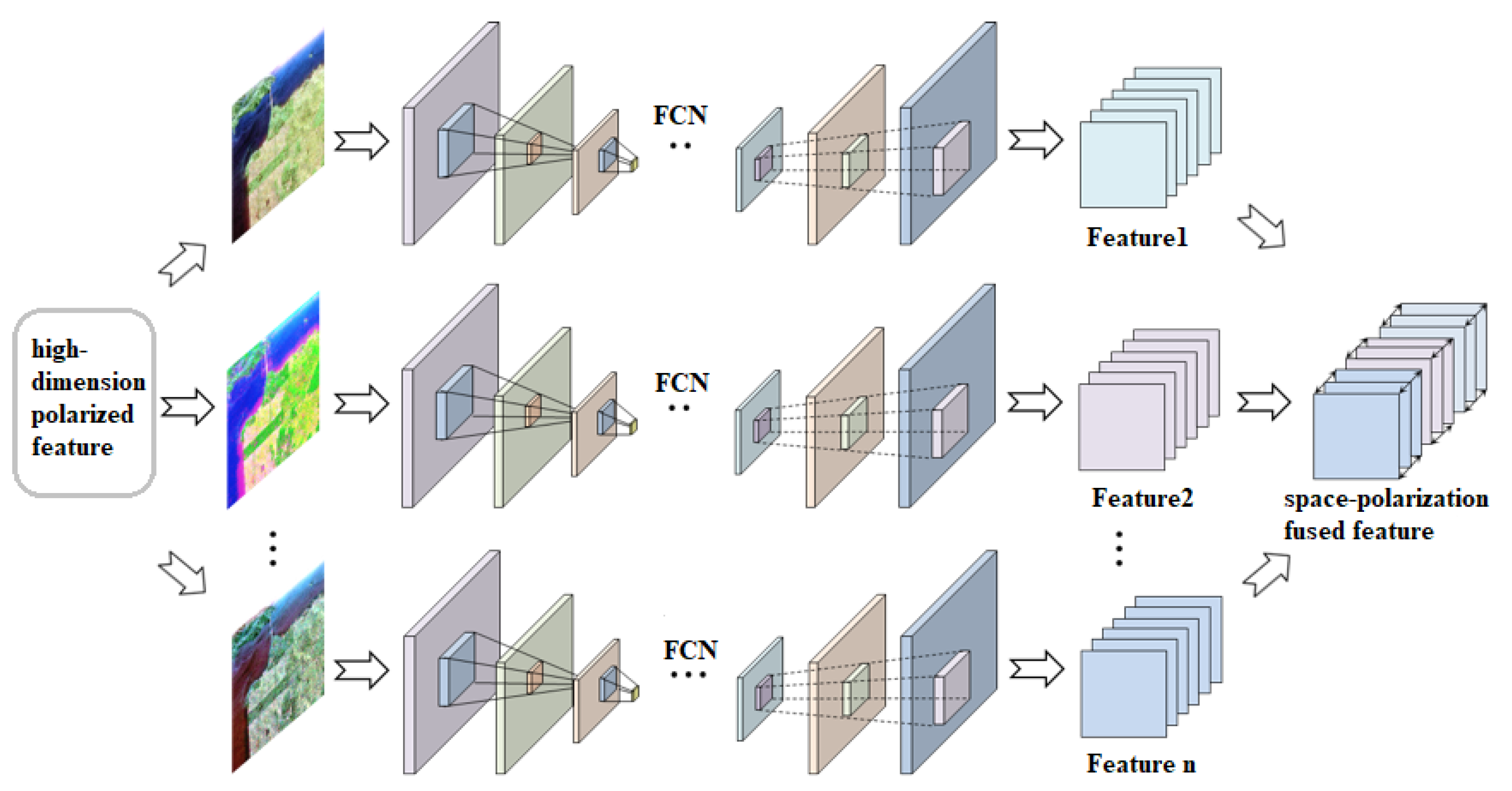

- Based on transfer learning, parallel FCN models are utilized to automatically learn deep multi-scale spatial features. Since the input of the MFCN originates from polarized features in PolSAR imagery, spatially polarized information can be adaptively fused while learning discriminative deep features.

- (2)

- The manifold graph embedding model explores the representation of spatially polarized fused features in a low-dimensional subspace, mining the essential structure of the PolSAR data to improve the fused feature s classification discriminability.

- (3)

- The proposed algorithm makes full use of the advantages of deep learning and shallow manifold graph embedding models, complementing each other to enhance the classification performance.

2. Preliminaries

2.1. Multi-Dimensional Polarization SAR Data

2.2. Fully Convolutional Networks

2.3. Graph Embedding Framework

3. Proposed Method

3.1. The Proposed Algorithm Framework for PolSAR Image Classification

3.2. Feature Learning Based on an MFCN

3.3. Dimensionality Reduction Based on Manifold Graph Embedding

4. Experiments and Analysis

4.1. Experimental Data

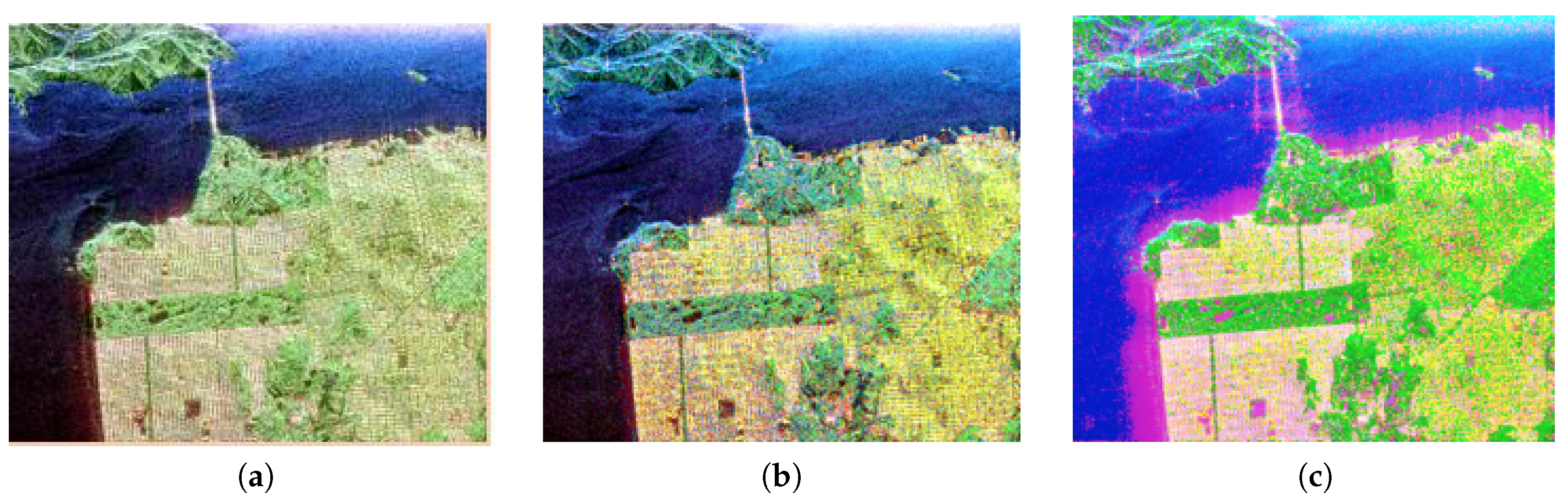

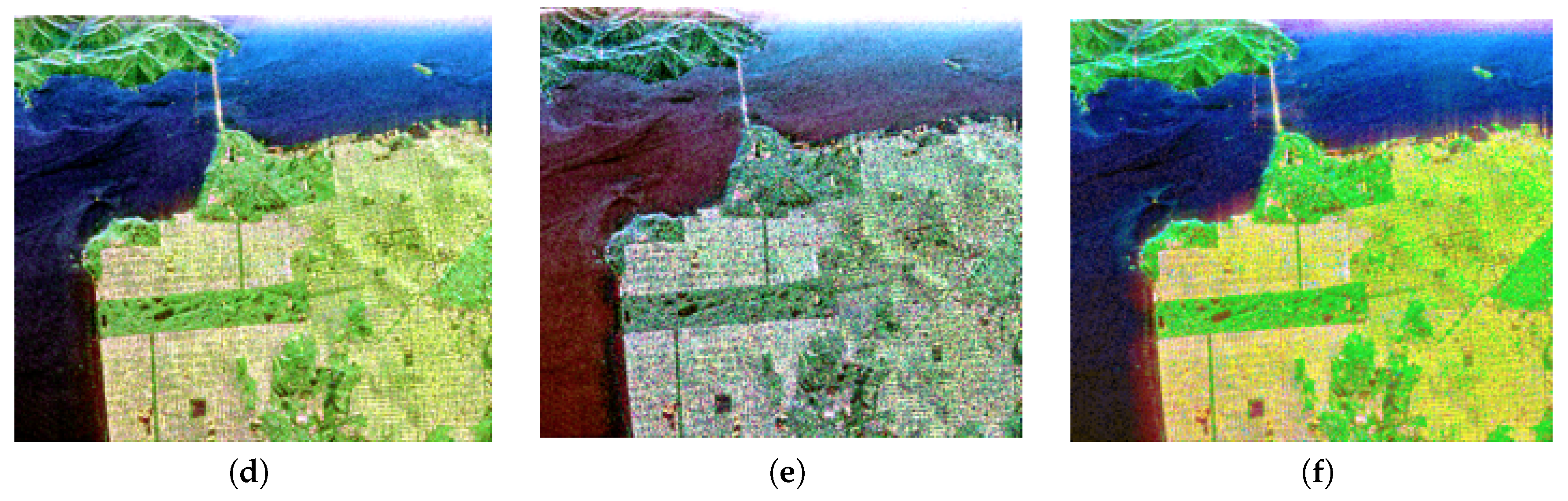

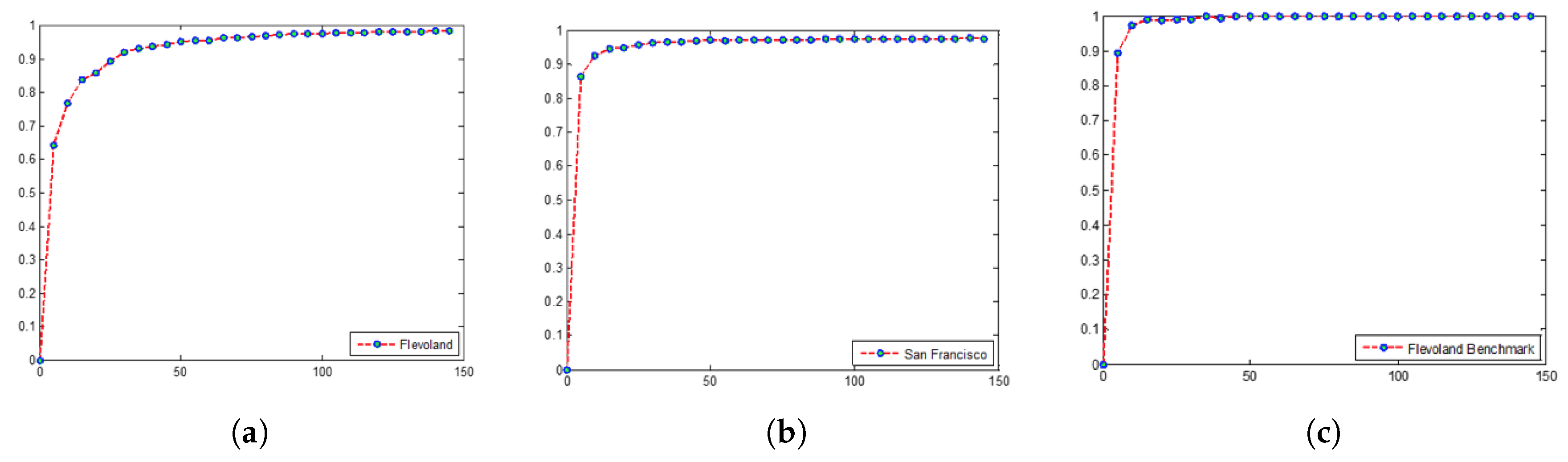

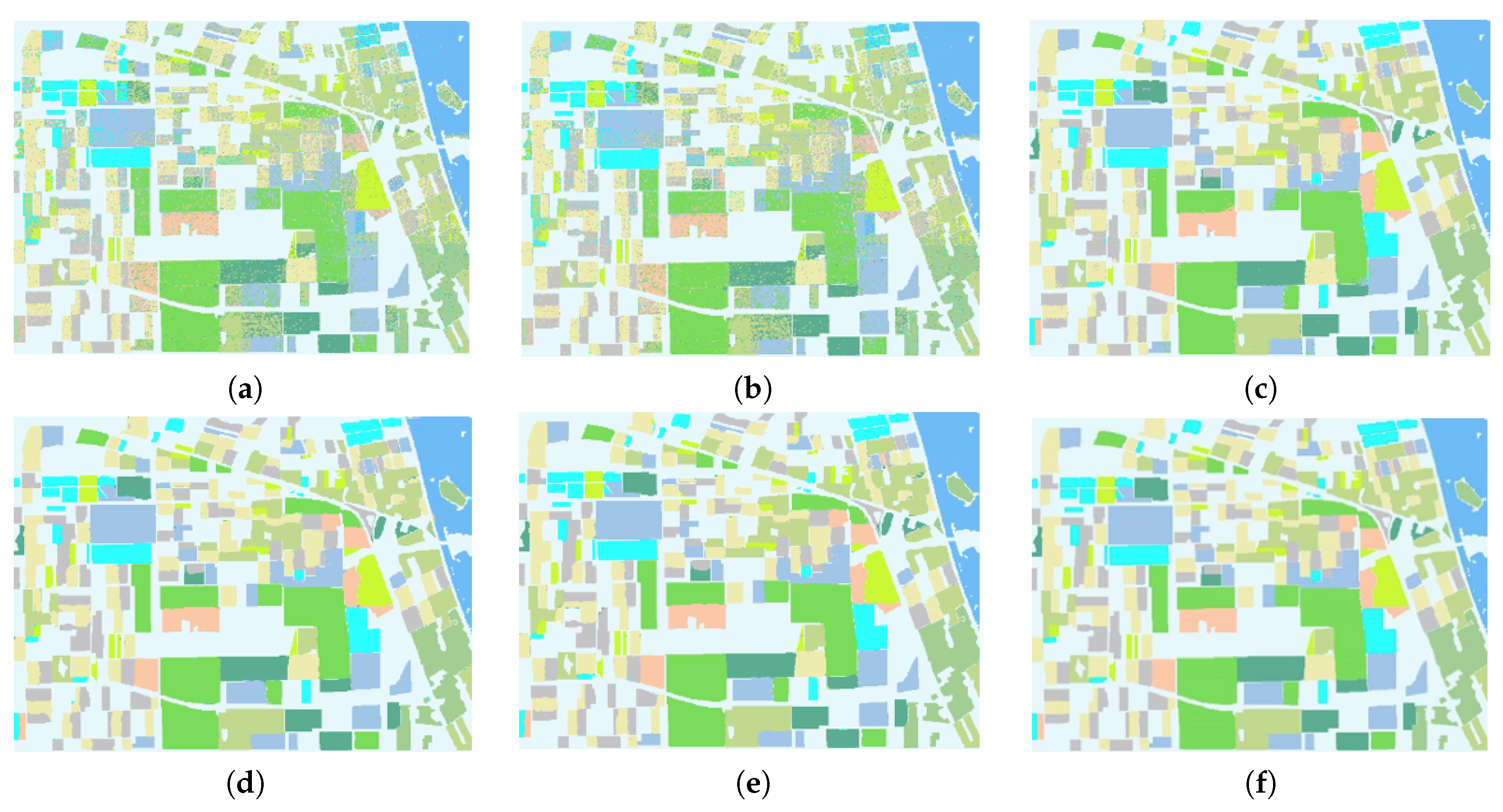

- (1) Flevoland Dataset 1: These data were acquired over the Netherlands by the AIRSAR system of the NASA Jet Propulsion Laboratory in 1989. The image is a four-look, fully polarized L-band image of 750 × 1024 pixels, with range and azimuth resolutions of 6 m and 12 m, respectively. According to Wang et al. [31], the whole scene is divided into 11 classes: rapeseed, grass, forest, peas, lucerne, wheat, beets, bare soil, stem beans, water and potatoes. This fully polarimetric SAR image, a classic farmland dataset, has been widely used to verify pixel-level classification performance. Its Pauli pseudo-color map, the corresponding ground truth and the labels are shown in Figure 5, and the number of pixels in each class is given in brackets in the label image.

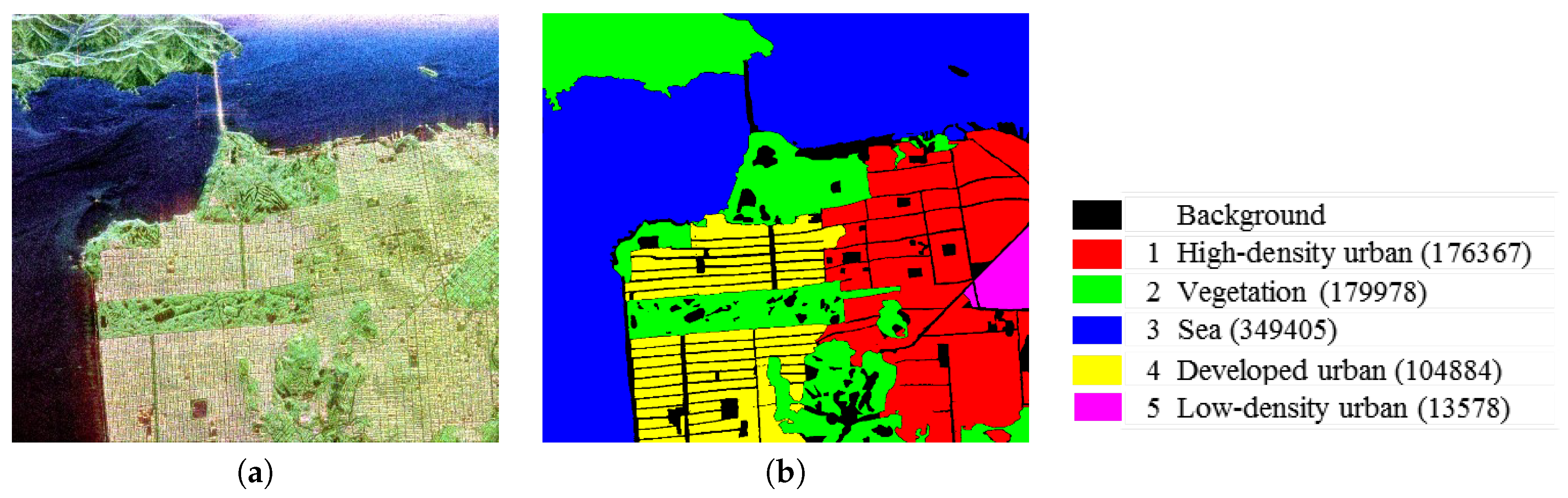

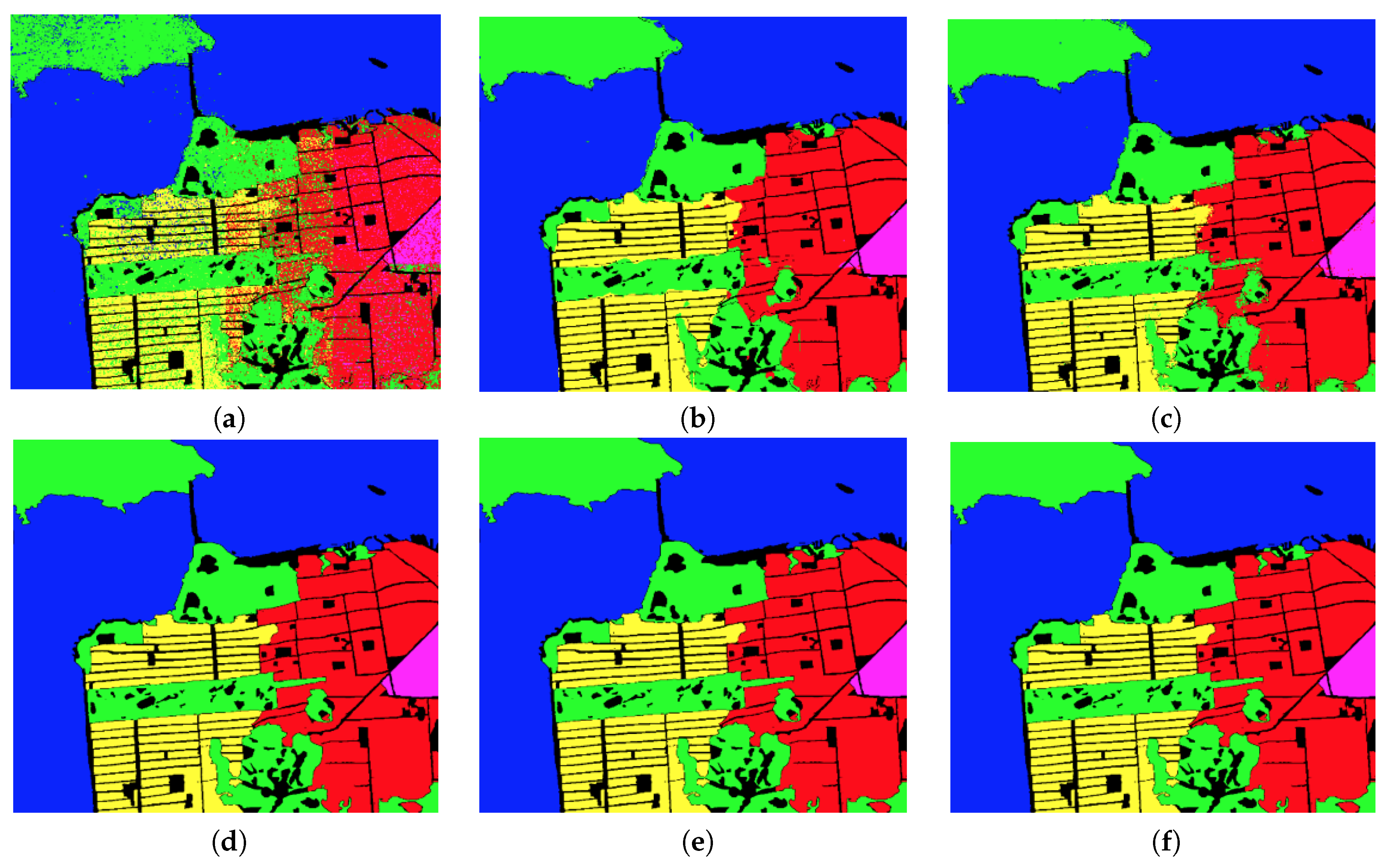

- (2) San Francisco Dataset: This dataset is a four-look, fully polarized L-band image collected by the NASA Jet Propulsion Laboratory's AIRSAR system over the San Francisco Bay Area. The image size is 900 × 1024 pixels, and its spatial resolution is approximately 10 m × 10 m. As shown in the Pauli image and ground truth in Figure 6, there are three major types of objects, namely vegetation, sea and urban areas, the last of which is further divided into high-density urban areas, developed urban areas and low-density urban areas.

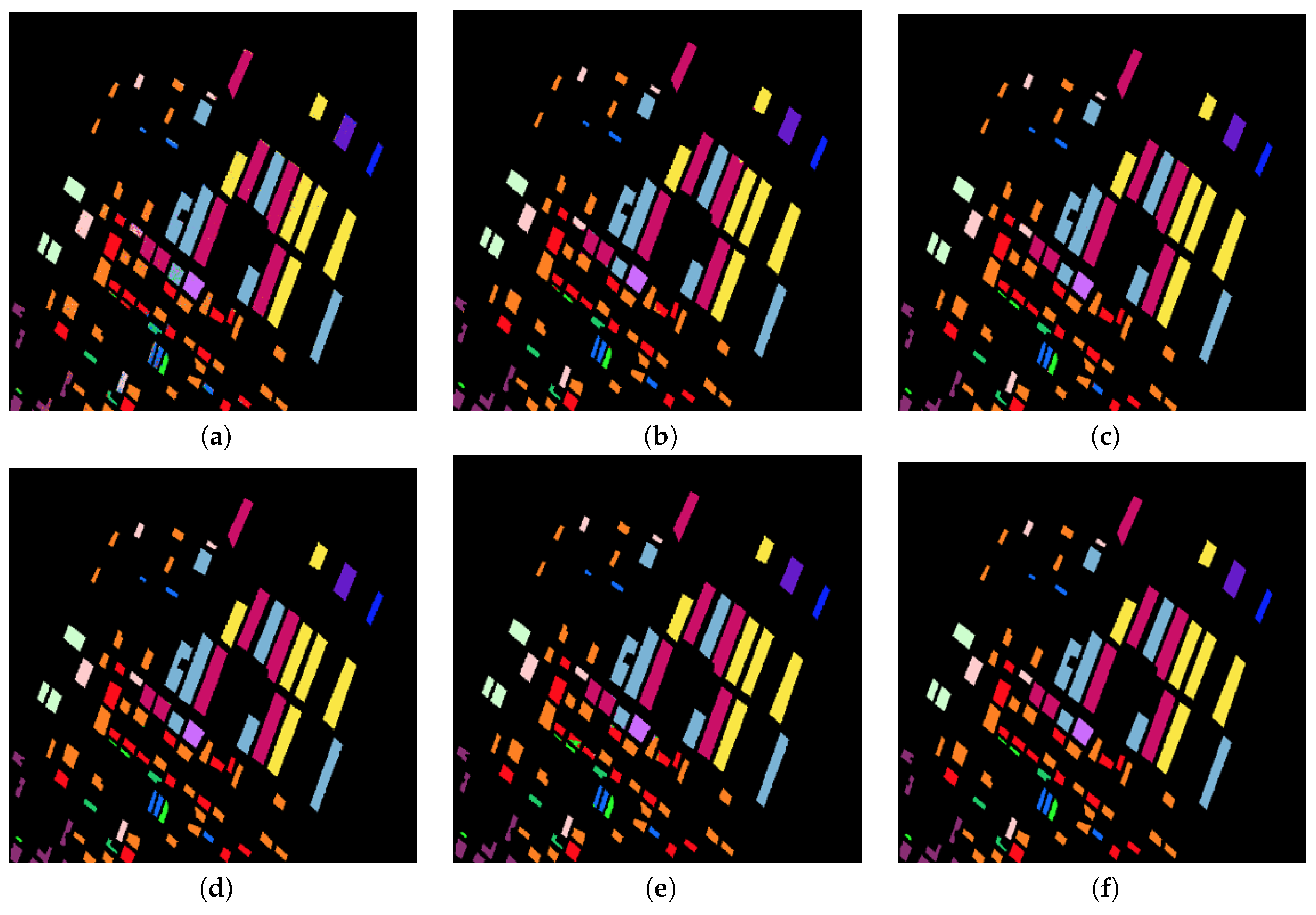

- (3)

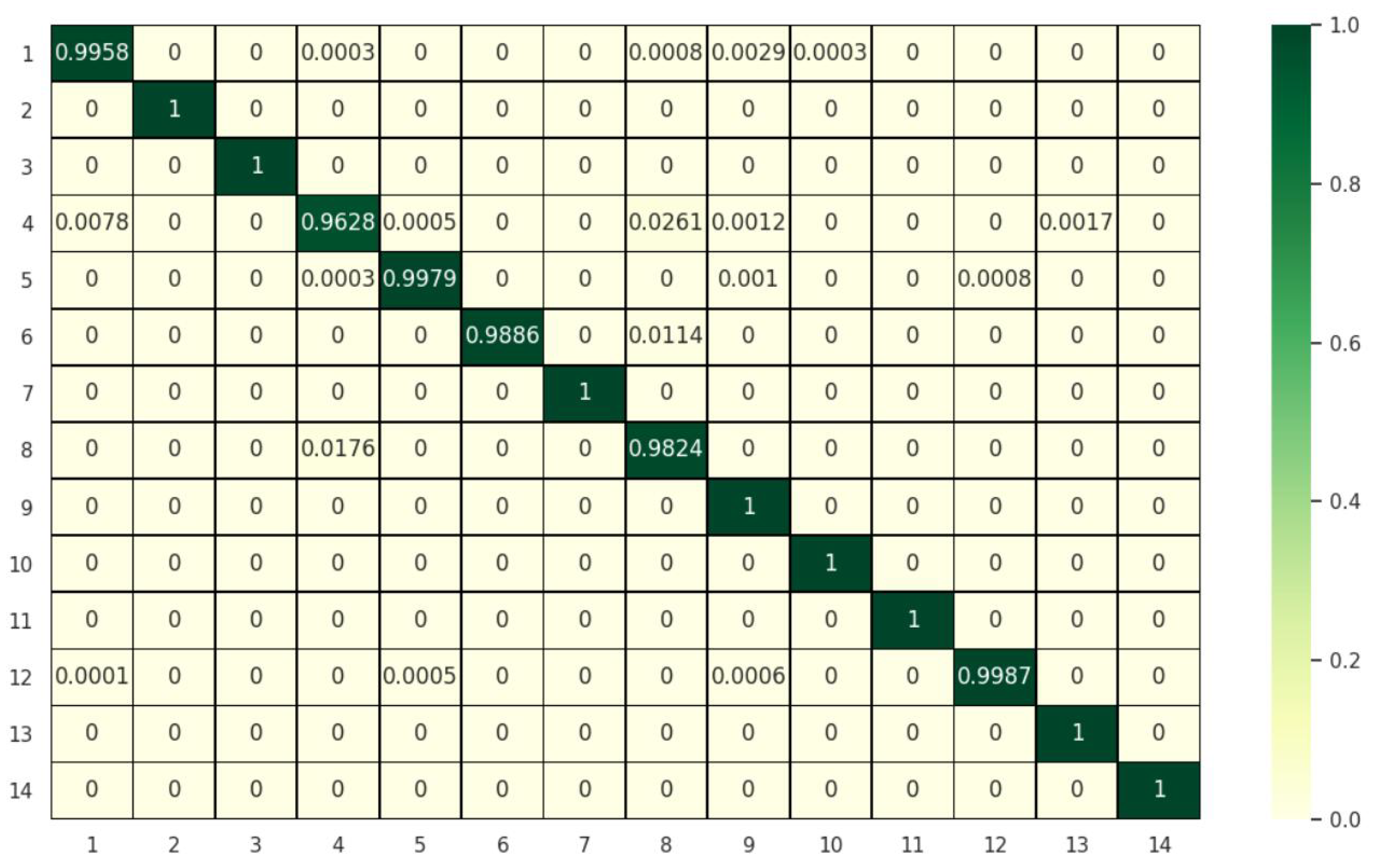

- and 2: The third fully polarized SAR image adopted in this paper is also L-band AIRSAR data collected in Holland in 1991. The number of looks from the dataset is four, and the image of size pixels contains only farmland areas. The scene consists of 14 crops: potatoes, fruit, oats, beet, barley, onions, wheat, beans, peas, maize, flax, rapeseed, grass and lucerne. Comprising a rich variety of geometric species with similar scattering characteristics, it is of great significance for this image to be utilized for verifying the proposed classification algorithm. Figure 7 shows the Pauli RGB map and the corresponding ground truth, as suggested by Zhang et al. [36].
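Each dataset above is displayed as a Pauli pseudo-color (RGB) map. As a hedged illustration of how such a map is commonly composed, the sketch below uses the widely used convention of red for |S_HH − S_VV|, green for the cross-polarized term and blue for |S_HH + S_VV|. The function name `pauli_rgb`, the amplitude scaling and the percentile-based contrast stretch are assumptions made for display purposes, not the procedure used to produce the figures in this paper.

```python
import numpy as np

def pauli_rgb(s_hh, s_hv, s_vv, clip_percentile=98.0):
    """Compose a Pauli pseudo-color image with values in [0, 1].

    s_hh, s_hv, s_vv: complex arrays of shape (H, W) (hypothetical inputs).
    clip_percentile: per-channel percentile used as the display maximum (an assumption).
    """
    red = np.abs(s_hh - s_vv) / np.sqrt(2.0)    # double-bounce-like component
    green = np.sqrt(2.0) * np.abs(s_hv)         # cross-polarized component
    blue = np.abs(s_hh + s_vv) / np.sqrt(2.0)   # surface-like component
    rgb = np.stack([red, green, blue], axis=-1)
    # Stretch each channel by its own high percentile; guard against all-zero channels
    high = np.percentile(rgb, clip_percentile, axis=(0, 1))
    high = np.where(high > 0, high, 1.0)
    return np.clip(rgb / high, 0.0, 1.0)
```

With this convention, a pixel for which S_HH equals S_VV and S_HV is zero maps to pure blue, which is why smooth surfaces such as water tend to appear bluish in Pauli maps.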

4.2. Experimental Settings and Parameter Tuning

- (a)

- Local preserving projection (LPP) finds a manifold representation of polarized features in a low-dimensional subspace without considering the spatial relationship between pixels.

- (b)

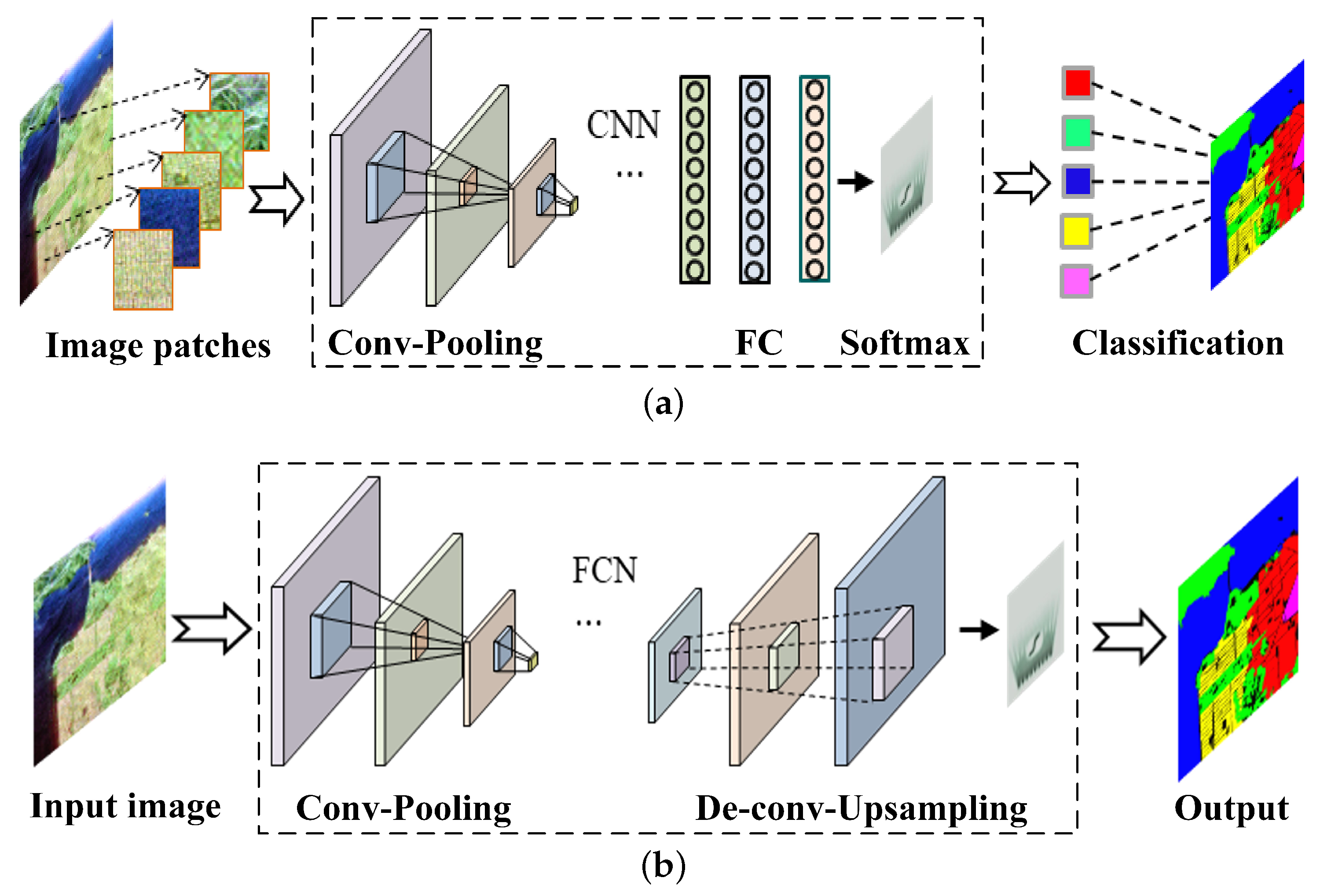

- FCN: A single pre-trained FCN is adopted to learn the multi-scale spatial structure of the PolSAR data. This nonlinear feature is actually a deep abstract semantic representation with relatively simple polarization properties.

- (c)

- FCN+LPP: A single FCN extracts the multi-scale spatial features in PolSAR imagery, which undergo dimensionality reduction by the LPP algorithm for further classification.

- (d)

- MFCN+LPP is he algorithm proposed in this paper. First, to describe various ground objects effectively, seven polarized decompositions are fed into multiple parallel FCN-8s models to learn multi-scale deep spatial features. Afterwards, through the manifold graph embedding model (LPP), the compact manifold representation of high-dimensional spatially polarized features from the MFCN is extracted for final classification.
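The LPP step shared by several of the methods above can be sketched as follows. This is a minimal NumPy illustration of the textbook formulation (a k-nearest-neighbor graph with heat-kernel weights, followed by the generalized eigenproblem X^T L X a = λ X^T D X a, keeping the eigenvectors with the smallest eigenvalues). The function name `lpp`, the parameter names and their default values, and the small ridge term `reg` are assumptions for illustration; the graph construction and settings used in this paper may differ.

```python
import numpy as np

def lpp(features, n_components=2, n_neighbors=5, heat_t=1.0, reg=1e-6):
    """Return (projection, eigenvalues) for locality preserving projection.

    features: array of shape (n_samples, n_features), one row per pixel (hypothetical).
    projection: array of shape (n_features, n_components).
    """
    x = np.asarray(features, dtype=float)
    n, dim = x.shape
    # Pairwise squared Euclidean distances
    sq = np.sum(x * x, axis=1)
    d2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * (x @ x.T), 0.0)
    # k nearest neighbours of each sample, excluding the sample itself
    d2_sort = d2.copy()
    np.fill_diagonal(d2_sort, -np.inf)
    neighbors = np.argsort(d2_sort, axis=1)[:, 1:n_neighbors + 1]
    rows = np.repeat(np.arange(n), n_neighbors)
    cols = neighbors.ravel()
    # Heat-kernel weights on the kNN graph, symmetrized
    w = np.zeros((n, n))
    w[rows, cols] = np.exp(-d2[rows, cols] / heat_t)
    w = np.maximum(w, w.T)
    degree = np.diag(w.sum(axis=1))
    laplacian = degree - w
    a = x.T @ laplacian @ x
    b = x.T @ degree @ x + reg * np.eye(dim)  # ridge keeps b positive definite
    # Solve a v = lambda b v by Cholesky whitening: b = c c^T
    c = np.linalg.cholesky(b)
    m = np.linalg.solve(c, np.linalg.solve(c, a).T).T
    vals, u = np.linalg.eigh(0.5 * (m + m.T))  # eigenvalues in ascending order
    projection = np.linalg.solve(c.T, u[:, :n_components])
    return projection, vals[:n_components]
```

A low-dimensional embedding is then obtained as `features @ projection`. In the FCN+LPP and MFCN+LPP settings, `features` would hold the per-pixel deep features, and the embedded vectors would be passed to the final classifier.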

4.3. Experimental Results and Analysis

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Niu, X.; Ban, Y. An adaptive contextual SEM algorithm for urban land cover mapping using multitemporal high-resolution polarimetric SAR data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2012, 5, 1129–1139.

- Van Zyl, J.J. Unsupervised classification of scattering behavior using radar polarimetry data. IEEE Trans. Geosci. Remote Sens. 1989, 27, 36–45.

- Cloude, S.R.; Pottier, E. An entropy based classification scheme for land applications of polarimetric SAR. IEEE Trans. Geosci. Remote Sens. 1997, 35, 68–78.

- Kong, J.; Swartz, A.; Yueh, H.; Novak, L.; Shin, R. Identification of terrain cover using the optimum polarimetric classifier. J. Electromagn. Waves Appl. 1988, 2, 171–194.

- Wang, S.; Liu, K.; Pei, J.; Gong, M.; Liu, Y. Unsupervised classification of fully polarimetric SAR images based on scattering power entropy and copolarized ratio. IEEE Geosci. Remote Sens. Lett. 2012, 10, 622–626.

- Lee, J.S.; Grunes, M.R.; Ainsworth, T.L.; Du, L.J.; Schuler, D.L.; Cloude, S.R. Unsupervised classification using polarimetric decomposition and the complex Wishart classifier. IEEE Trans. Geosci. Remote Sens. 1999, 37, 2249–2258.

- Dabboor, M.; Collins, M.J.; Karathanassi, V.; Braun, A. An unsupervised classification approach for polarimetric SAR data based on the Chernoff distance for complex Wishart distribution. IEEE Trans. Geosci. Remote Sens. 2013, 51, 4200–4213.

- Lee, J.S.; Grunes, M.R.; Kwok, R. Classification of multi-look polarimetric SAR imagery based on complex Wishart distribution. Int. J. Remote Sens. 1994, 15, 2299–2311.

- Xing, X.; Ji, K.; Zou, H.; Sun, J. Feature selection and weighted SVM classifier-based ship detection in PolSAR imagery. Int. J. Remote Sens. 2013, 34, 7925–7944.

- Feng, J.; Cao, Z.; Pi, Y. Polarimetric contextual classification of PolSAR images using sparse representation and superpixels. Remote Sens. 2014, 6, 7158–7181.

- Zhang, L.; Wang, X.; Moon, W.M. PolSAR images classification through GA-based selective ensemble learning. In Proceedings of the 2015 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Milan, Italy, 26–31 July 2015; pp. 3770–3773.

- Pottier, E. Unsupervized classification scheme and topography derivation of POLSAR data based on the «H/A/α» polarimetric decomposition theorem. In Proceedings of the Fourth International Workshop on Radar Polarimetry, Nantes, France, 13–17 July 1998; pp. 535–548.

- Lee, J.S.; Grunes, M.R.; Pottier, E.; Ferro-Famil, L. Unsupervised terrain classification preserving polarimetric scattering characteristics. IEEE Trans. Geosci. Remote Sens. 2004, 42, 722–731.

- Suk, H.I.; Lee, S.W.; Shen, D.; Alzheimer’s Disease Neuroimaging Initiative. Latent feature representation with stacked auto-encoder for AD/MCI diagnosis. Brain Struct. Funct. 2015, 220, 841–859.

- Rifai, S.; Bengio, Y.; Dauphin, Y.; Vincent, P. A generative process for sampling contractive auto-encoders. arXiv 2012, arXiv:1206.6434.

- Zhou, S.; Chen, Q.; Wang, X. Discriminative deep belief networks for image classification. In Proceedings of the 2010 IEEE International Conference on Image Processing, Hong Kong, China, 26–29 September 2010; pp. 1561–1564.

- Liu, D.; Han, L.; Han, X. High spatial resolution remote sensing image classification based on deep learning. Acta Opt. Sin. 2016, 36, 0428001.

- Jun-Fei, S.; Fang, L.; Yao-Hai, L.; Lu, L. Polarimetric SAR image classification based on deep learning and hierarchical semantic model. Acta Autom. Sin. 2017, 43, 215–226.

- Qu, J.; Sun, X.; Gao, X. Remote sensing image target recognition based on CNN. Foreign Electron. Meas. Technol. 2016, 8, 45–50.

- Dong, H.P. Classification of Polarimetric SAR Image with Feature Selection and Deep Learning. J. Signal Process. 2019, 35, 972.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Mohammadimanesh, F.; Salehi, B.; Mahdianpari, M.; Gill, E.; Molinier, M. A new fully convolutional neural network for semantic segmentation of polarimetric SAR imagery in complex land cover ecosystem. ISPRS J. Photogramm. Remote Sens. 2019, 151, 223–236.

- Ding, J.; Chen, B.; Liu, H.; Huang, M. Convolutional neural network with data augmentation for SAR target recognition. IEEE Geosci. Remote Sens. Lett. 2016, 13, 364–368.

- Kwak, Y.; Song, W.J.; Kim, S.E. Speckle-noise-invariant convolutional neural network for SAR target recognition. IEEE Geosci. Remote Sens. Lett. 2018, 16, 549–553.

- Nevalainen, O.; Honkavaara, E.; Tuominen, S.; Viljanen, N.; Hakala, T.; Yu, X.; Hyyppä, J.; Saari, H.; Pölönen, I.; Imai, N.N.; et al. Individual tree detection and classification with UAV-based photogrammetric point clouds and hyperspectral imaging. Remote Sens. 2017, 9, 185.

- Cook, D.; Feuz, K.D.; Krishnan, N.C. Transfer learning for activity recognition: A survey. Knowl. Inf. Syst. 2013, 36, 537–556.

- Lee, J.S.; Pottier, E. Polarimetric Radar Imaging: From Basics to Applications; CRC Press: Boca Raton, FL, USA, 2017.

- Yamaguchi, Y.; Moriyama, T.; Ishido, M.; Yamada, H. Four-component scattering model for polarimetric SAR image decomposition. IEEE Trans. Geosci. Remote Sens. 2005, 43, 1699–1706.

- Cai, H.; Zheng, V.W.; Chang, K.C.C. A comprehensive survey of graph embedding: Problems, techniques, and applications. IEEE Trans. Knowl. Data Eng. 2018, 30, 1616–1637.

- Yan, S.; Xu, D.; Zhang, B.; Zhang, H.J.; Yang, Q.; Lin, S. Graph embedding and extensions: A general framework for dimensionality reduction. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 29, 40–51.

- Wang, Y.; He, C.; Liu, X.; Liao, M. A hierarchical fully convolutional network integrated with sparse and low-rank subspace representations for PolSAR imagery classification. Remote Sens. 2018, 10, 342.

- Yosinski, J.; Clune, J.; Bengio, Y.; Lipson, H. How transferable are features in deep neural networks? In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 3320–3328.

- He, X.; Niyogi, P. Locality preserving projections. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 153–160.

- He, X.; Yan, S.; Hu, Y.; Niyogi, P.; Zhang, H.J. Face recognition using laplacianfaces. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 328–340.

- Cai, D.; He, X.; Han, J. Spectral regression for efficient regularized subspace learning. In Proceedings of the 2007 IEEE 11th International Conference on Computer Vision, Rio de Janeiro, Brazil, 14–20 October 2007; pp. 1–8.

- Zhang, Z.; Wang, H.; Xu, F.; Jin, Y.Q. Complex-valued convolutional neural network and its application in polarimetric SAR image classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 7177–7188.

| Polarized Decompositions | R Channel | G Channel | B Channel |
|---|---|---|---|
| Pauli | 2 | | |
| Cloude | | | |
| Freeman | | | |
| Huynen | | | |
| Yamaguchi | | | |
| Krogager | | | |

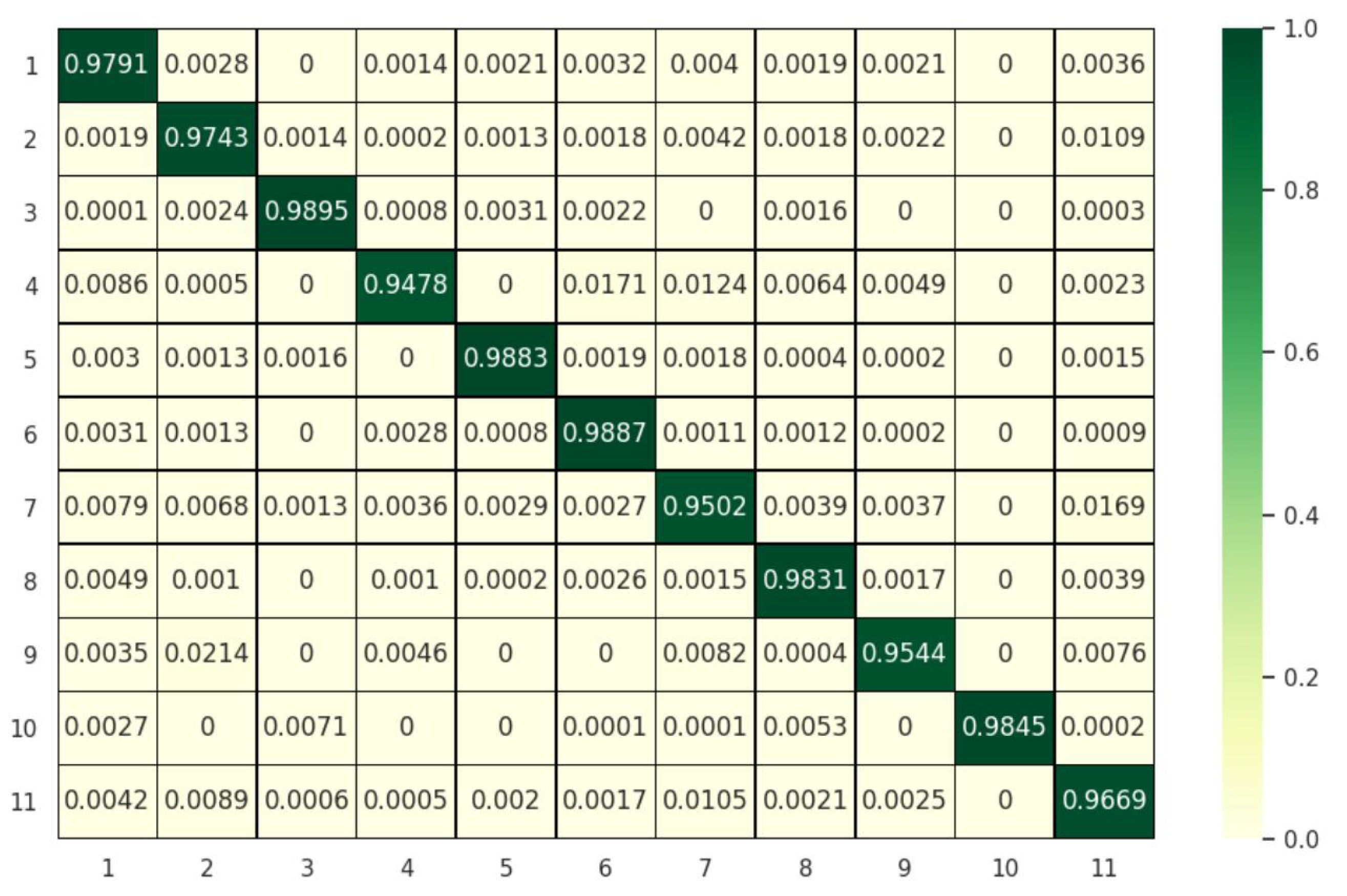

| Label/Class | LPP | FCN | FCN+LPP | MFCN+LPP |
|---|---|---|---|---|
| 1/Rapeseed | 75.64% | 89.67% | 96.21% | 97.91% |
| 2/Grass | 78.68% | 94.68% | 96.83% | 97.43% |
| 3/Forest | 71.80% | 97.40% | 98.87% | 98.95% |
| 4/Peas | 54.67% | 91.87% | 95.12% | 94.78% |
| 5/Lucerne | 65.07% | 92.98% | 96.64% | 98.83% |
| 6/Wheat | 84.43% | 95.03% | 97.31% | 98.86% |
| 7/Beets | 56.34% | 77.57% | 90.75% | 95.02% |
| 8/Bare soil | 49.08% | 95.99% | 98.30% | 98.30% |
| 9/Stem beans | 79.02% | 71.13% | 90.46% | 95.43% |
| 10/Water | 97.41% | 99.95% | 99.95% | 98.45% |
| 11/Potatoes | 77.49% | 90.81% | 96.09% | 96.69% |
| | 73.70% | 90.99% | 96.08% | 97.39% |
| | 70.29% | 89.82% | 95.57% | 97.06% |

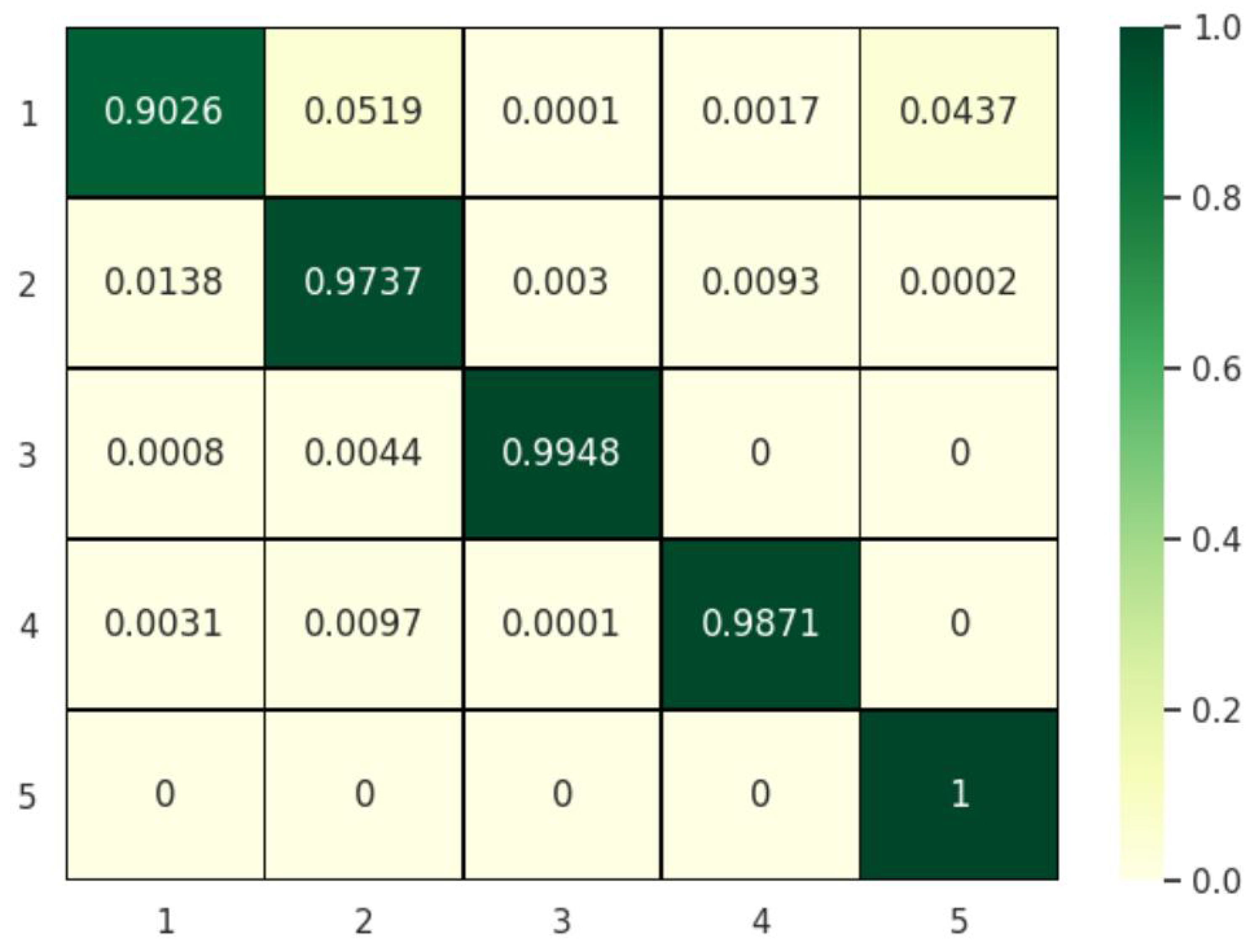

| Label/Class | LPP | FCN | FCN+LPP | MFCN+LPP |
|---|---|---|---|---|
| 1/Sea | 79.30% | 97.31% | 97.98% | 90.26% |
| 2/Vegetation | 88.19% | 95.41% | 96.68% | 97.37% |
| 3/Developed urban areas | 99.53% | 99.44% | 99.73% | 99.48% |
| 4/High-density urban areas | 77.63% | 97.40% | 93.29% | 98.71% |
| 5/Low-density urban areas | 65.09% | 97.79% | 91.73% | 100% |
| | 89.37% | 97.82% | 98.46% | 96.95% |
| | 85.00% | 96.93% | 97.83% | 95.72% |

| Label/Class | LPP | FCN | FCN+LPP | MFCN+LPP |
|---|---|---|---|---|
| 1/Potatoes | 96.28% | 99.53% | 99.93% | 99.58% |
| 2/Fruit | 94.56% | 99.85% | 99.91% | 100% |
| 3/Oats | 96.15% | 100% | 100% | 100% |
| 4/Beets | 97.85% | 98.31% | 99.81% | 90.28% |
| 5/Barley | 97.25% | 99.90% | 99.82% | 91.79% |
| 6/Onions | 77.55% | 99.80% | 99.01% | 98.86% |
| 7/Wheat | 98.93% | 99.78% | 99.90% | 100% |
| 8/Beans | 56.39% | 90.54% | 98.36% | 98.24% |
| 9/Peas | 99.27% | 99.27% | 99.90% | 100% |
| 10/Maize | 86.45% | 99.27% | 99.35% | 100% |
| 11/Flax | 99.68% | 100% | 100% | 100% |
| 12/Rapeseed | 98.66% | 99.52% | 99.81% | 99.86% |
| 13/Grass | 84.79% | 98.65% | 99.57% | 100% |
| 14/Lucerne | 91.41% | 100% | 100% | 100% |
| | 96.54% | 97.45% | 99.83% | 99.54% |
| | 95.92% | 96.53% | 99.80% | 95.46% |

| Dataset | Flevoland Dataset 1 | San Francisco Dataset | Flevoland Dataset 2 |
|---|---|---|---|
| Image size | 750 × 1024 | 900 × 1024 | 1024 × 1024 |
| Number of parallel networks | 7 | 7 | 7 |
| Time consumption | 423.08 | 490.98 | 559.86 |

| Dataset | LPP | FCN | FCN+LPP | MFCN+LPP |
|---|---|---|---|---|
| Flevoland Dataset 1 | 31.3375 | 14.37 | 28.2472 | 33.4559 |
| San Francisco Dataset | 21.8552 | 13.99 | 20.7372 | 29.3127 |
| Flevoland Dataset 2 | 11.1774 | 11.83 | 13.3515 | 18.5695 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

He, C.; He, B.; Tu, M.; Wang, Y.; Qu, T.; Wang, D.; Liao, M. Fully Convolutional Networks and a Manifold Graph Embedding-Based Algorithm for PolSAR Image Classification. Remote Sens. 2020, 12, 1467. https://doi.org/10.3390/rs12091467