Upscaling UAS Paradigm to UltraLight Aircrafts: A Low-Cost Multi-Sensors System for Large Scale Aerial Photogrammetry

Abstract

1. Introduction

2. Materials and Methods

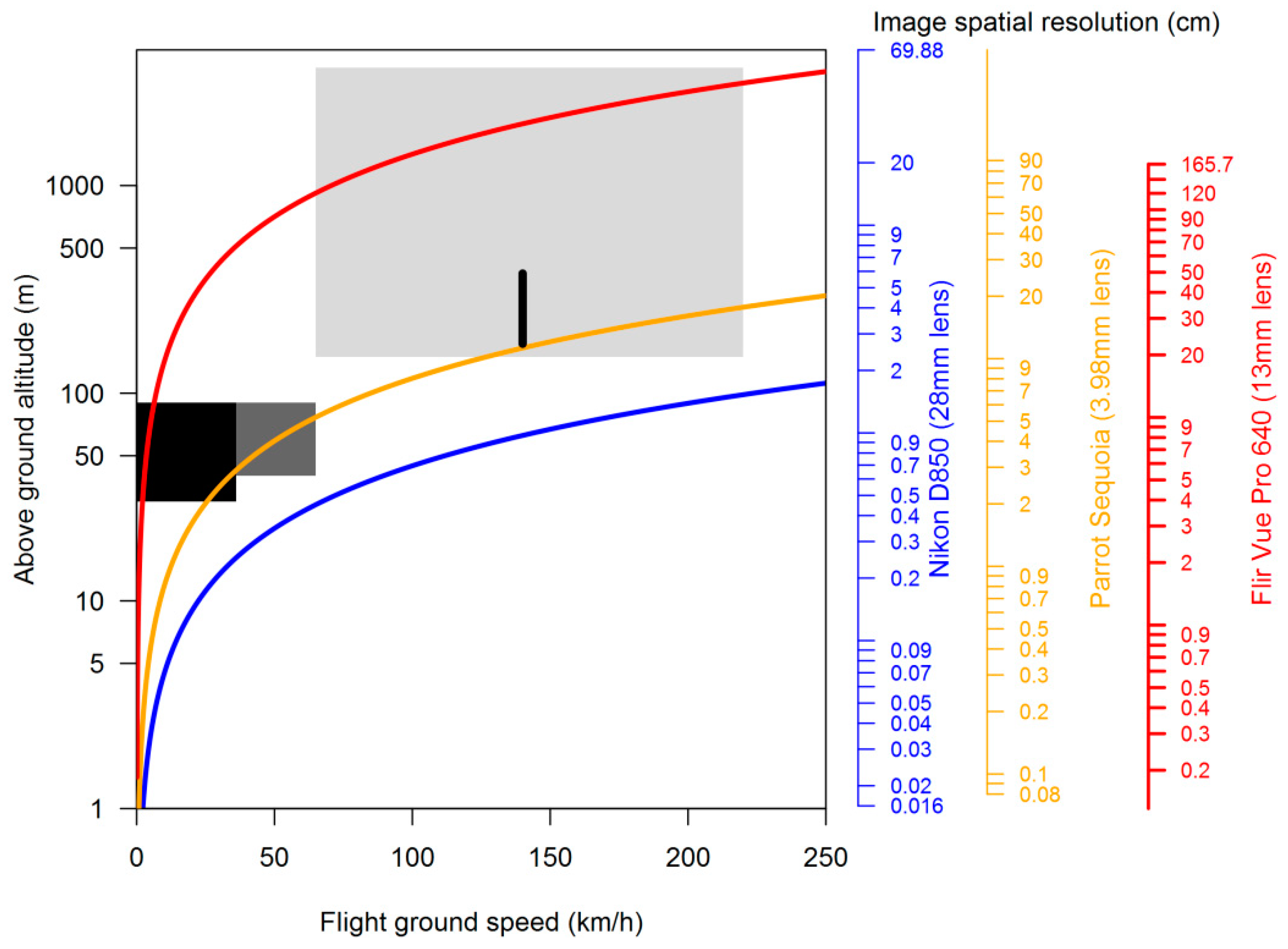

2.1. System Configuration: Sensors Requirements and Flight Plan

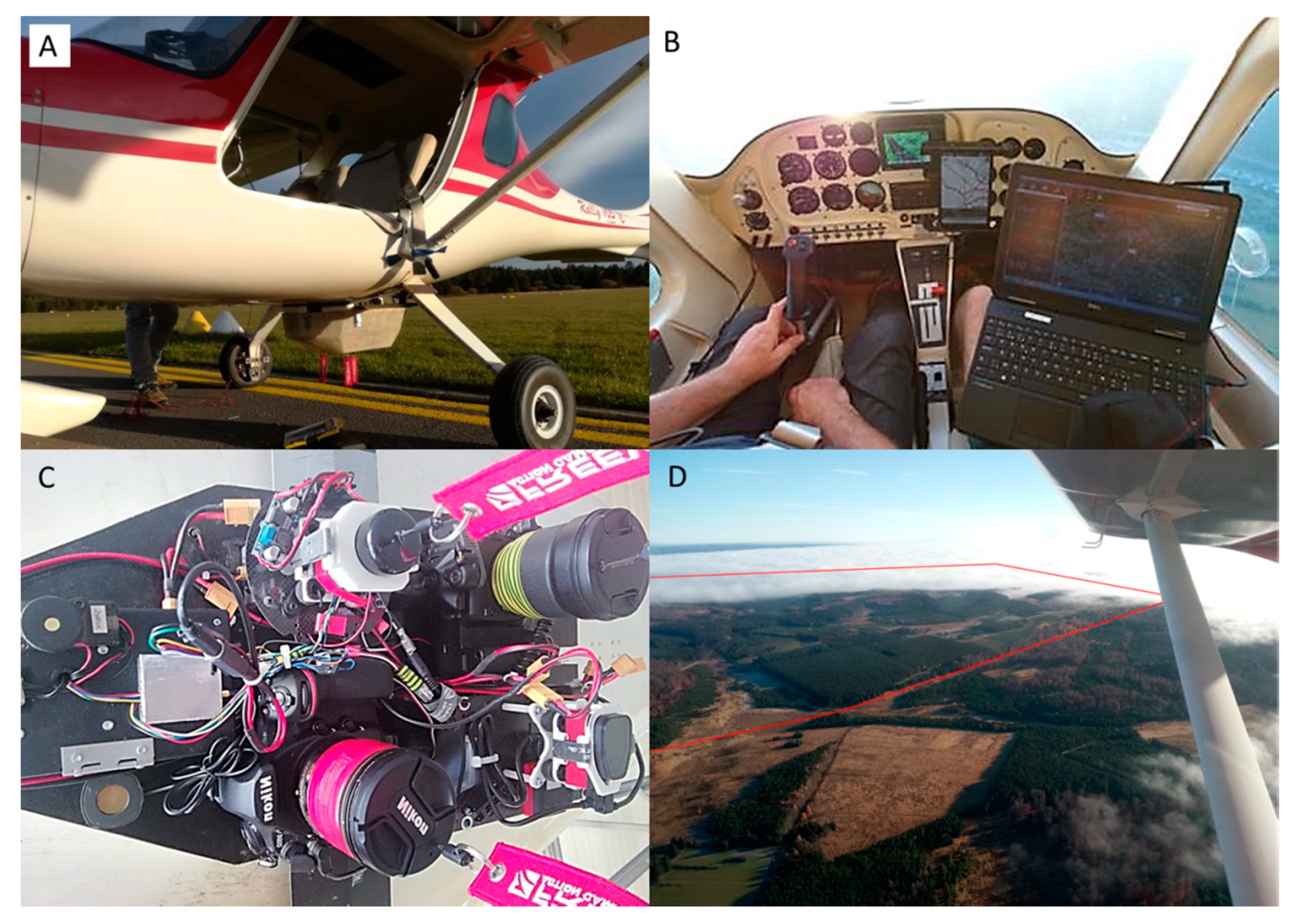

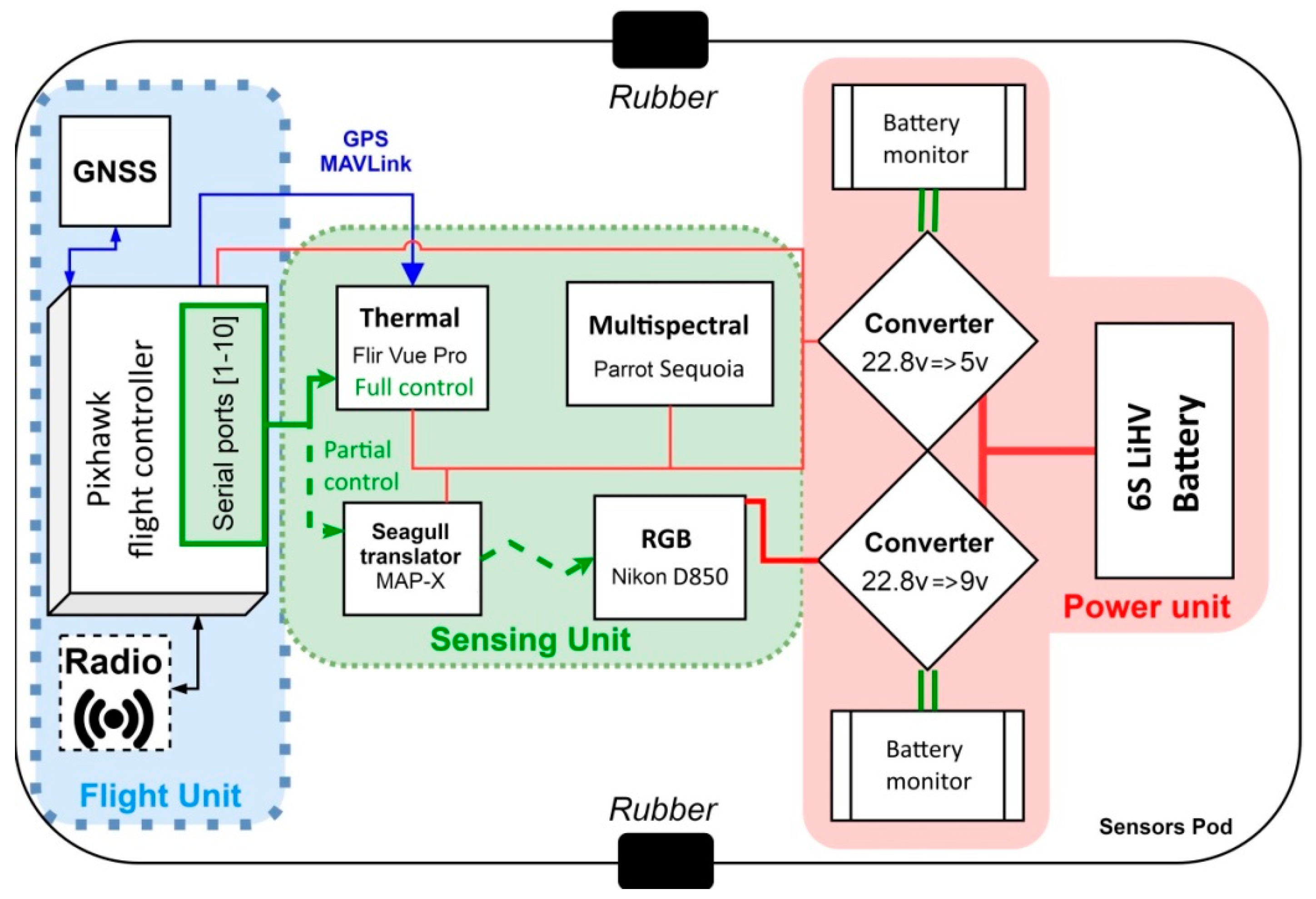

2.2. System Design: ULA Platform, Navigation Station and Sensors Pod

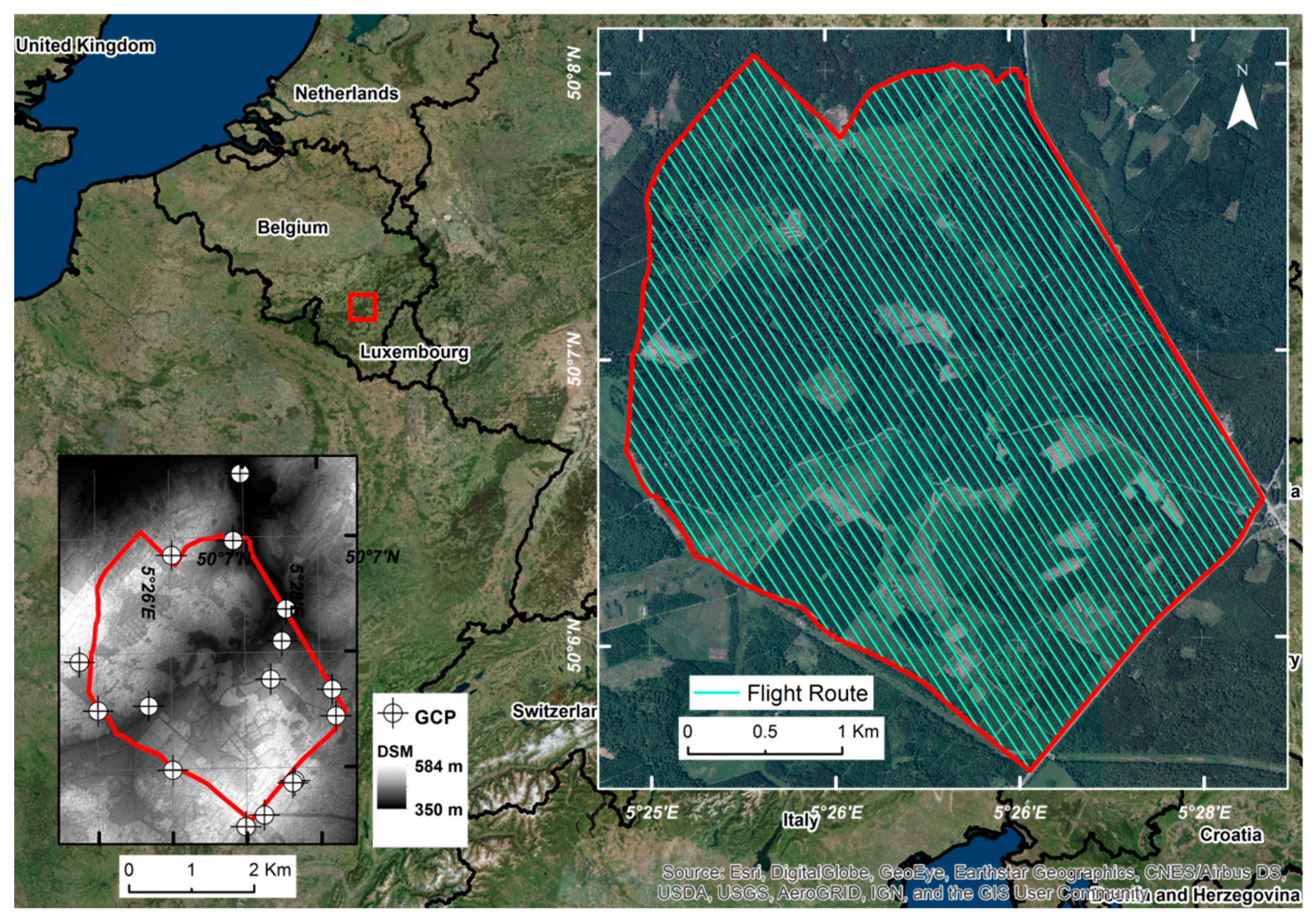

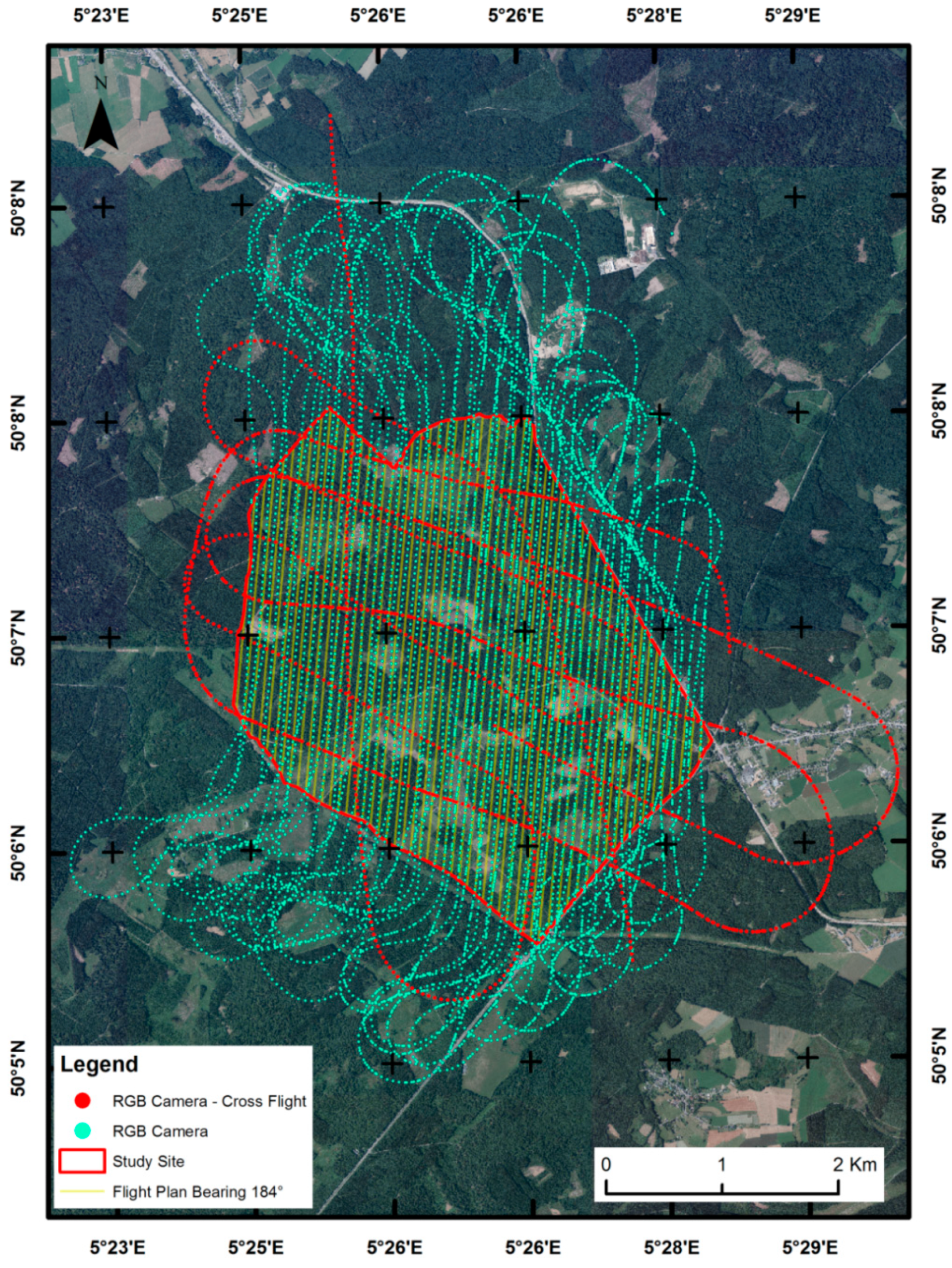

2.3. System Acquisition: Study Area, Selected Sensors and Flight Plan

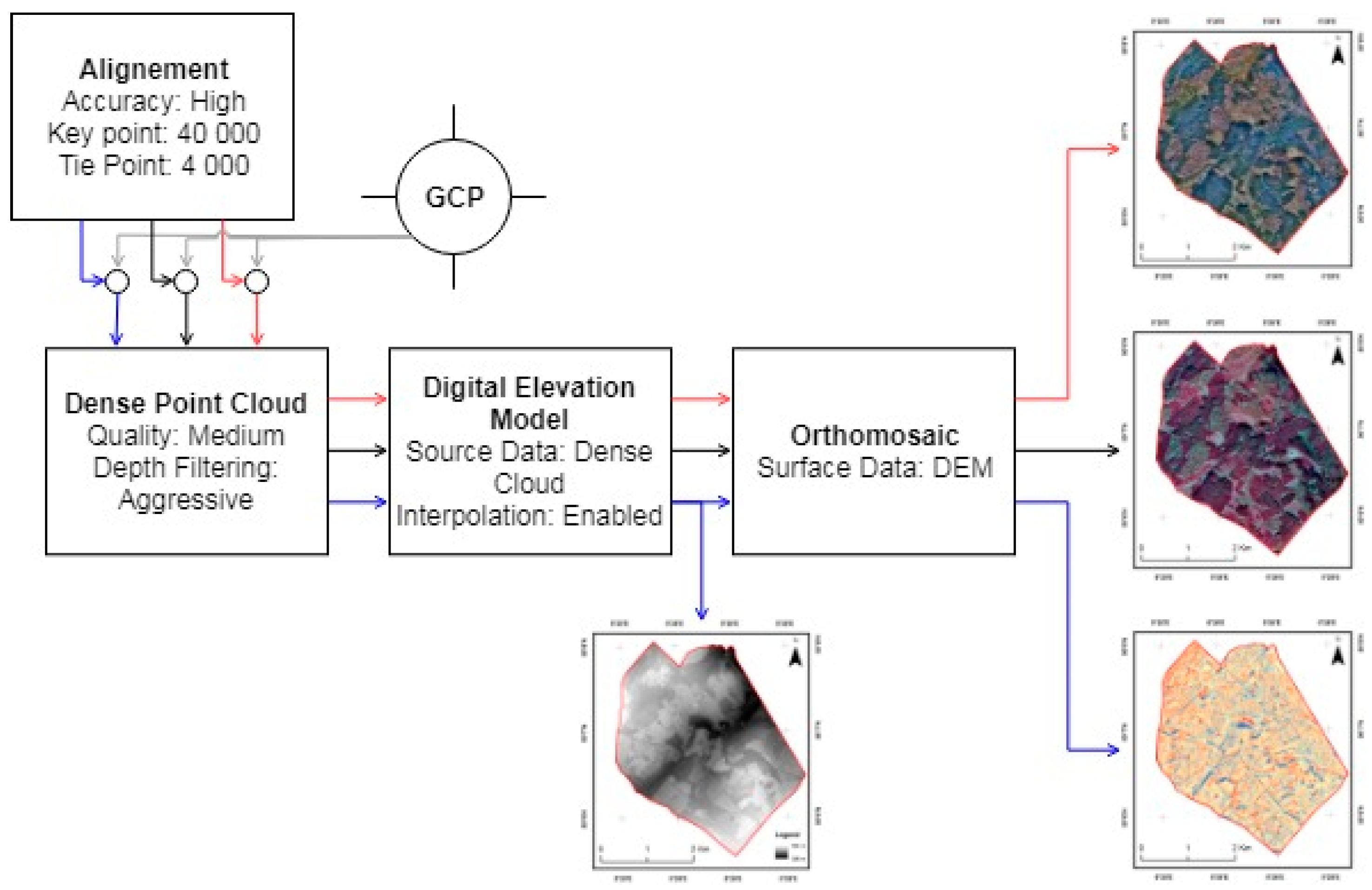

2.4. Photogrammetric Routine

3. Results

3.1. Flight and Images Acquisitions

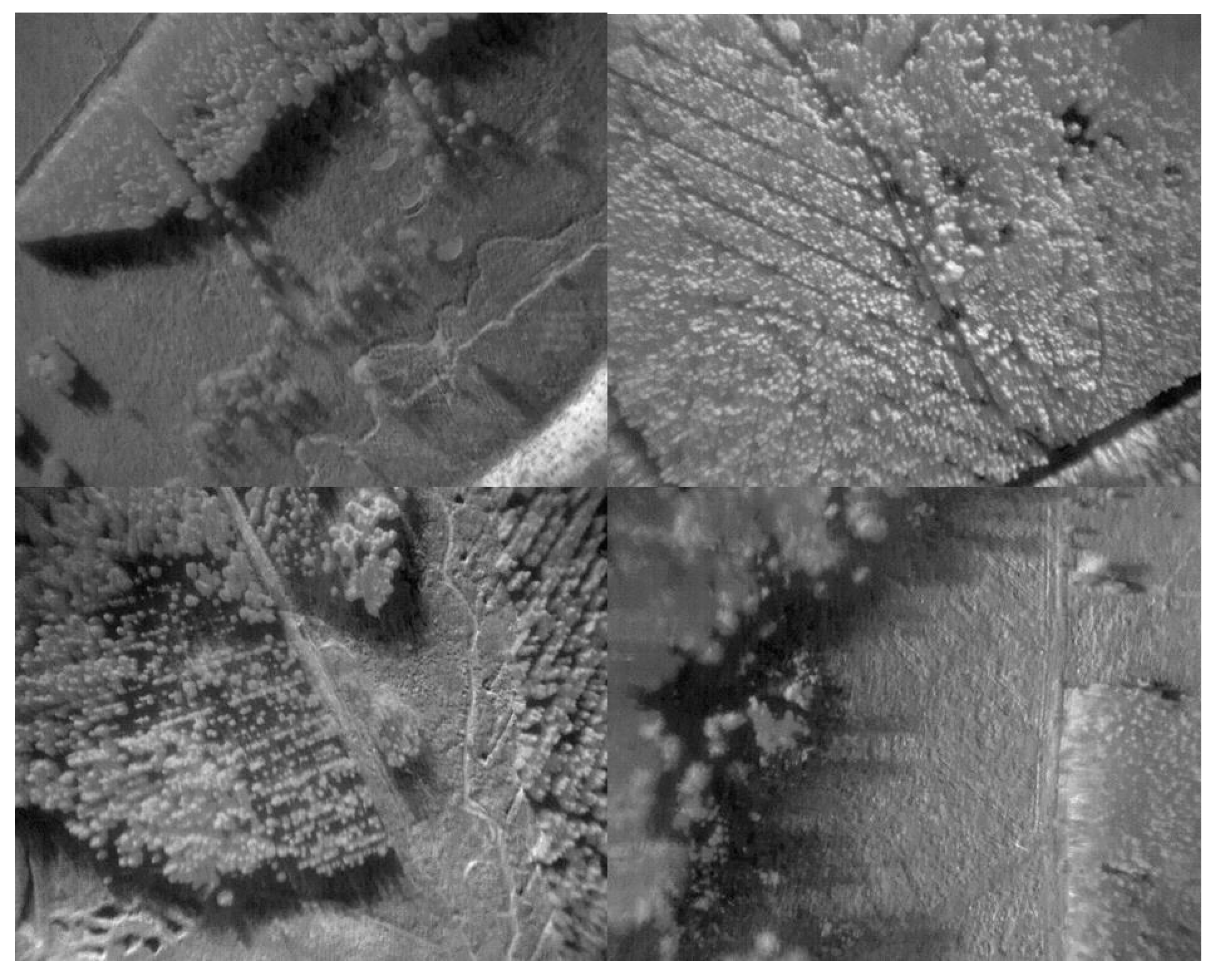

3.2. Image Quality

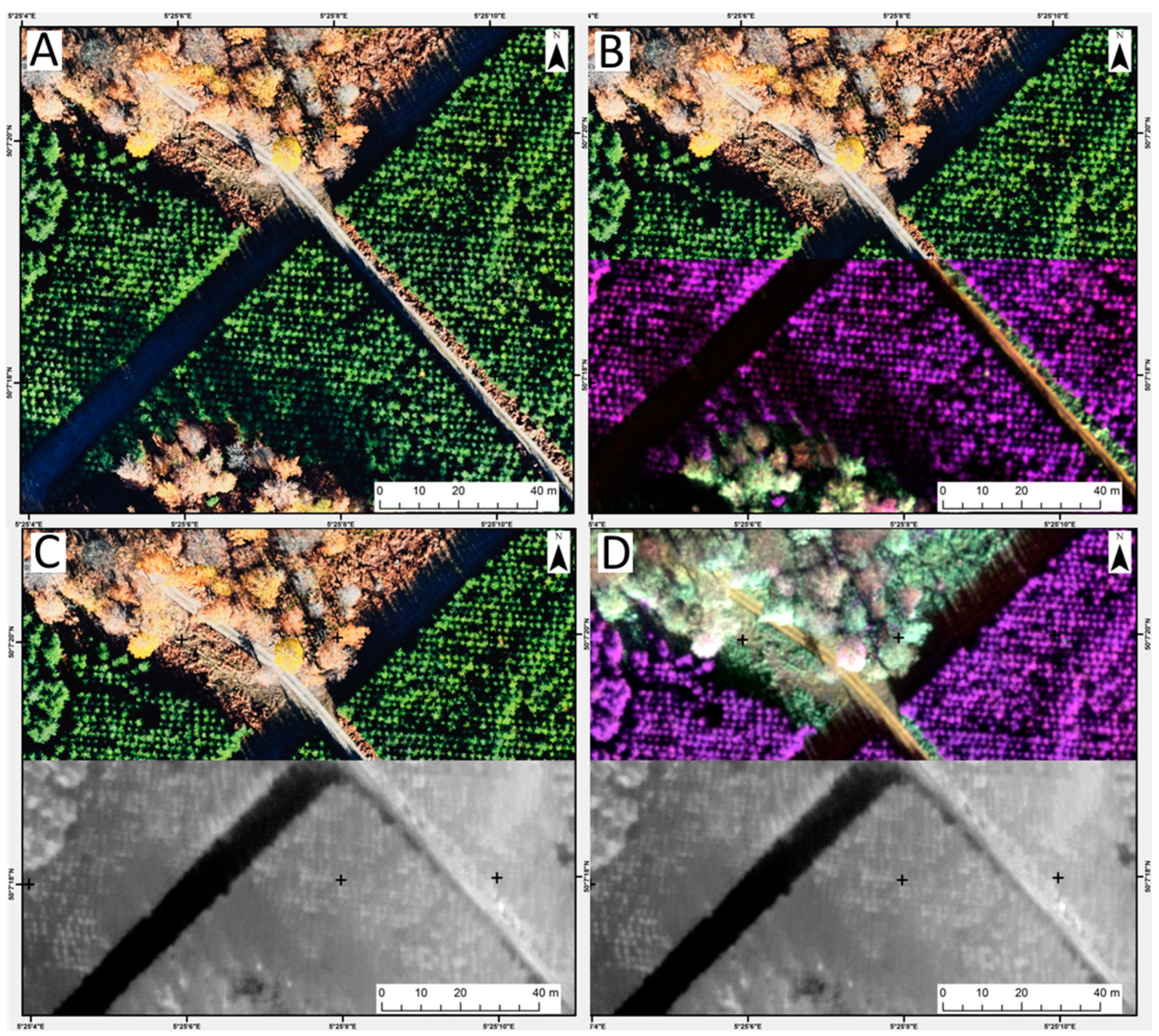

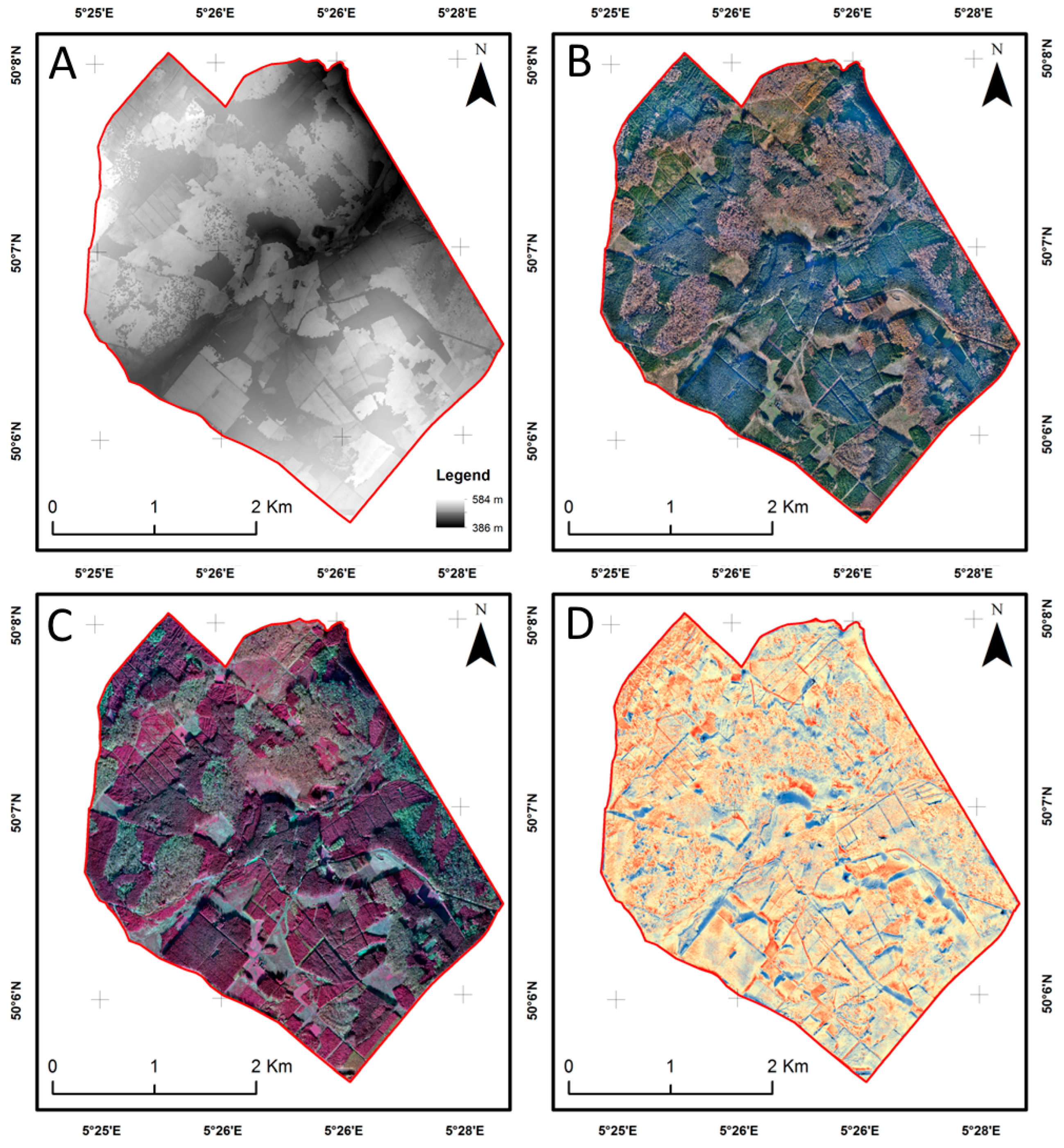

3.3. DEM and Orthophotomosaics

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Category | MTOW | AGL Altitude | Flight Radius |
|---|---|---|---|
| Micro | <2 kg | Up to 60 m | Up to 5 km |
| Mini | 2–20 kg | Up to 900 m | Up to 25 km |
| Small | 20–150 kg | Up to 1500 m | Up to 50 km |

| Name | Symbol | Units | Camera | Flight |
|---|---|---|---|---|
| CCD sensor size | | Meters (width × height) | x | |
| Image dimension | | Number of pixels (width × height) | x | |
| Focal length | | Meters | x | |
| Burst rate | | Frames per second | x | |
| Aperture | | / | x | |
| Sensor sensitivity | | / | x | |
| Integration time | | Seconds | x | |
| Ground speed | | Meters per second | | x |
| Above ground level altitude | | Meters | | x |
| Image ground spatial resolution | | Meters | x | x |
| Front overlap | | / | x | x |
| Distance between successive images | | Meters | x | x |
| Side overlap | | / | x | x |
| Distance between lines | | Meters | x | x |
| Ground swath (image footprint) | | Meters (width × height) | x | x |
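The overlap and spacing parameters listed above are tied together by simple geometry: for a given image footprint, the requested overlap fixes the distance between successive images (or between flight lines), and the ground speed then fixes how often the camera must fire. The following is a minimal sketch of those relations, assuming nadir imaging over flat terrain. The function names and the example values (footprint, overlaps, 40 m/s ground speed) are hypothetical and are not taken from the article.

```python
def spacing_from_overlap(footprint_m: float, overlap: float) -> float:
    """Distance between neighbouring image centres (or flight lines)
    that yields the requested overlap fraction, with 0 <= overlap < 1."""
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    return footprint_m * (1.0 - overlap)


def trigger_interval_s(image_spacing_m: float, ground_speed_ms: float) -> float:
    """Time between exposures needed to hold a given image spacing."""
    if ground_speed_ms <= 0.0:
        raise ValueError("ground speed must be positive")
    return image_spacing_m / ground_speed_ms


# Hypothetical example values (assumptions, not from the article).
along_track_footprint_m = 150.0   # footprint height, along the flight line
across_track_footprint_m = 220.0  # footprint width, across the flight line

image_spacing_m = spacing_from_overlap(along_track_footprint_m, 0.70)
line_spacing_m = spacing_from_overlap(across_track_footprint_m, 0.65)
interval_s = trigger_interval_s(image_spacing_m, ground_speed_ms=40.0)

print(f"distance between successive images: {image_spacing_m:.1f} m")
print(f"distance between lines:             {line_spacing_m:.1f} m")
print(f"required trigger interval:          {interval_s:.2f} s")
```

Under these assumptions the camera's burst rate has to be at least `1 / interval_s` frames per second, which is why burst rate appears among the camera parameters while ground speed appears among the flight parameters.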

| Model Aircraft | Storm Rally 105 |
|---|---|
| Engine | Rotax 912 ULS (100 hp) |
| Fuel capacity | 130 L |
| Normal operating velocity (maximum cruise speed) | 210 km/h; 58.3 m/s |
| Stall Speed 1 | 65 km/h; 18.1 m/s |
| Stall Speed 2 | 57 km/h; 15.8 m/s |
| Endurance | 1520 km / 7.2 h |
| Service ceiling | 3658 m |
| Maximum Take-Off Weight | 598 kg |
| Standard empty weight | 345 kg |
| Maximum useful load | 253 kg |

| Specification | Nikon D850 | Parrot Sequoia | Flir Vue Pro 640 |
|---|---|---|---|
| Sensor size | 35.9 × 23.9 mm | 4.8 × 3.6 mm | 10.88 × 8.7 mm |
| Image dimension | 8256 × 5504 px | 1280 × 960 px | 640 × 512 px |
| Focal length | 28 mm | 3.98 mm | 13 mm |
| Burst rate (frames per second) | 5 | 2 | 1 |
| Spectral range | visible | 0.53–0.81 μm | 7.5–13.5 μm |
| Number of bands | 3 | 4 | 1 |
| Weight | 1238 g | 133 g | 134 g |

| Parameters | Nikon D850 | | Parrot Sequoia | | Flir Vue Pro 640 | |
|---|---|---|---|---|---|---|
| AGL altitude | 173 m | 377 m | 173 m | 377 m | 173 m | 377 m |
| Ground swath (image footprint) | 222 × 148 m | 483 × 322 m | 209 × 157 m | 455 × 341 m | 145 × 116 m | 315 × 252 m |
| Front overlap | 70% | 86% | 70% | 86% | 66% | 84% |
| Side overlap | 67% | 85% | 65% | 84% | 50% | 77% |
| | 0.88 | | 0.83 | | 0.99 | |
| Image ground spatial resolution | 2.7 cm | 5.8 cm | 16.3 cm | 35.43 cm | 22.6 cm | 49.3 cm |
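The footprint and ground resolution figures tabulated above for the 173 m altitude can be approximately reproduced from the sensor size, image dimension and focal length of each camera. The sketch below does this with the usual nadir pinhole relations (footprint = altitude × sensor size / focal length, resolution = altitude × pixel pitch / focal length), assuming flat terrain and a vertical view. The `Sensor` class and its method names are illustrative and are not part of the article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sensor:
    name: str
    sensor_w_mm: float  # sensor width
    sensor_h_mm: float  # sensor height
    image_w_px: int     # image width in pixels
    focal_mm: float     # focal length

    def footprint_m(self, agl_m: float) -> tuple:
        """Ground footprint (width, height) in metres at a given AGL altitude."""
        scale = agl_m / self.focal_mm
        return (self.sensor_w_mm * scale, self.sensor_h_mm * scale)

    def gsd_cm(self, agl_m: float) -> float:
        """Ground size of one pixel in centimetres at a given AGL altitude."""
        pixel_pitch_mm = self.sensor_w_mm / self.image_w_px
        return 100.0 * agl_m * pixel_pitch_mm / self.focal_mm


# Sensor specifications as listed in the sensor table of this article.
SENSORS = [
    Sensor("Nikon D850", 35.9, 23.9, 8256, 28.0),
    Sensor("Parrot Sequoia", 4.8, 3.6, 1280, 3.98),
    Sensor("Flir Vue Pro 640", 10.88, 8.7, 640, 13.0),
]

for sensor in SENSORS:
    width_m, height_m = sensor.footprint_m(173.0)
    print(f"{sensor.name}: footprint {width_m:.0f} x {height_m:.0f} m, "
          f"resolution {sensor.gsd_cm(173.0):.1f} cm")
```

The computed values agree with the tabulated ones to within about a metre for the footprint and a tenth of a centimetre for the resolution. Small differences are expected because the tabulated figures are rounded and may rest on slightly different inputs.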

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Latte, N.; Gaucher, P.; Bolyn, C.; Lejeune, P.; Michez, A. Upscaling UAS Paradigm to UltraLight Aircrafts: A Low-Cost Multi-Sensors System for Large Scale Aerial Photogrammetry. Remote Sens. 2020, 12, 1265. https://doi.org/10.3390/rs12081265
