Automatic Mapping of Center Pivot Irrigation Systems from Satellite Images Using Deep Learning

Abstract

1. Introduction

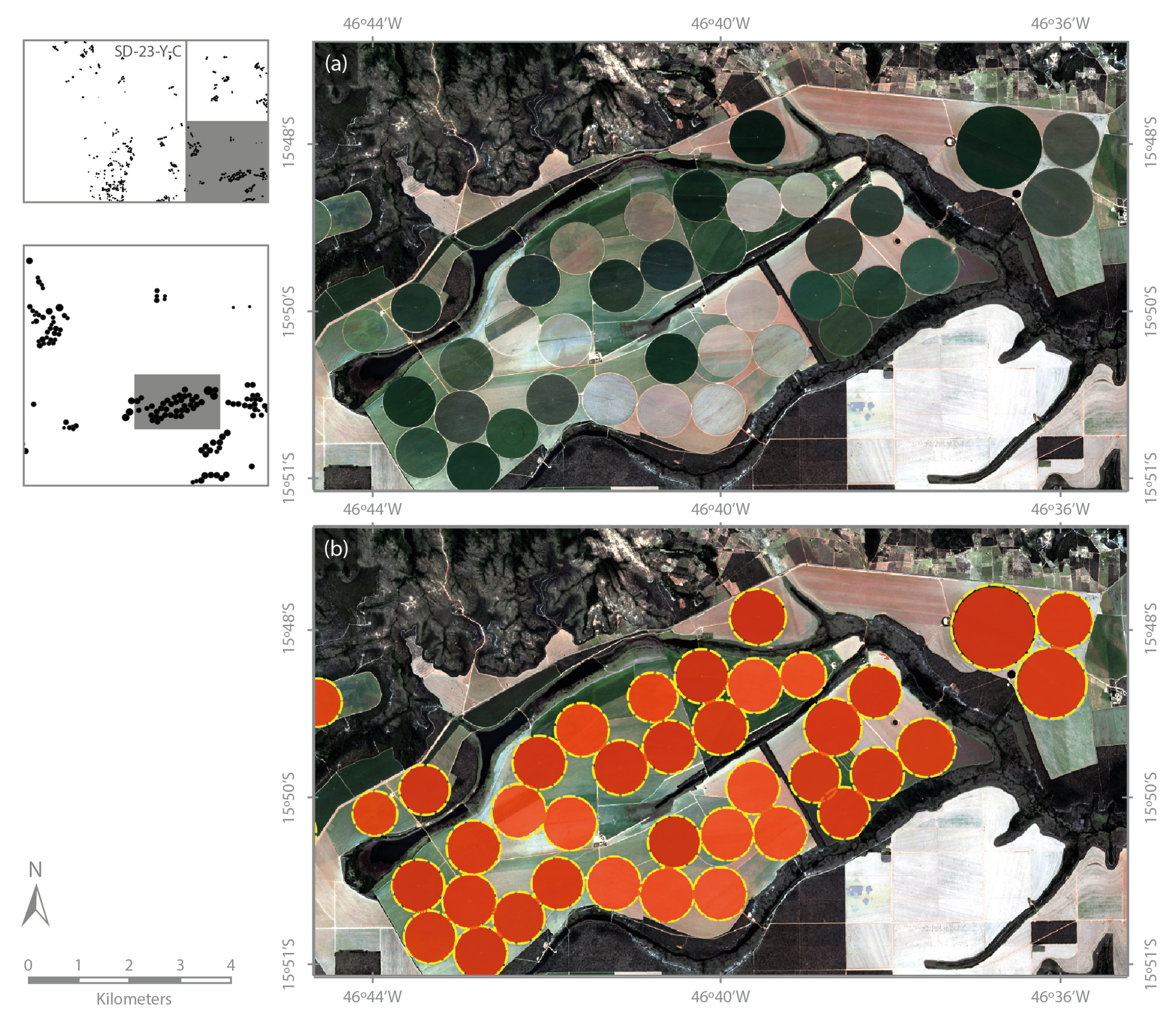

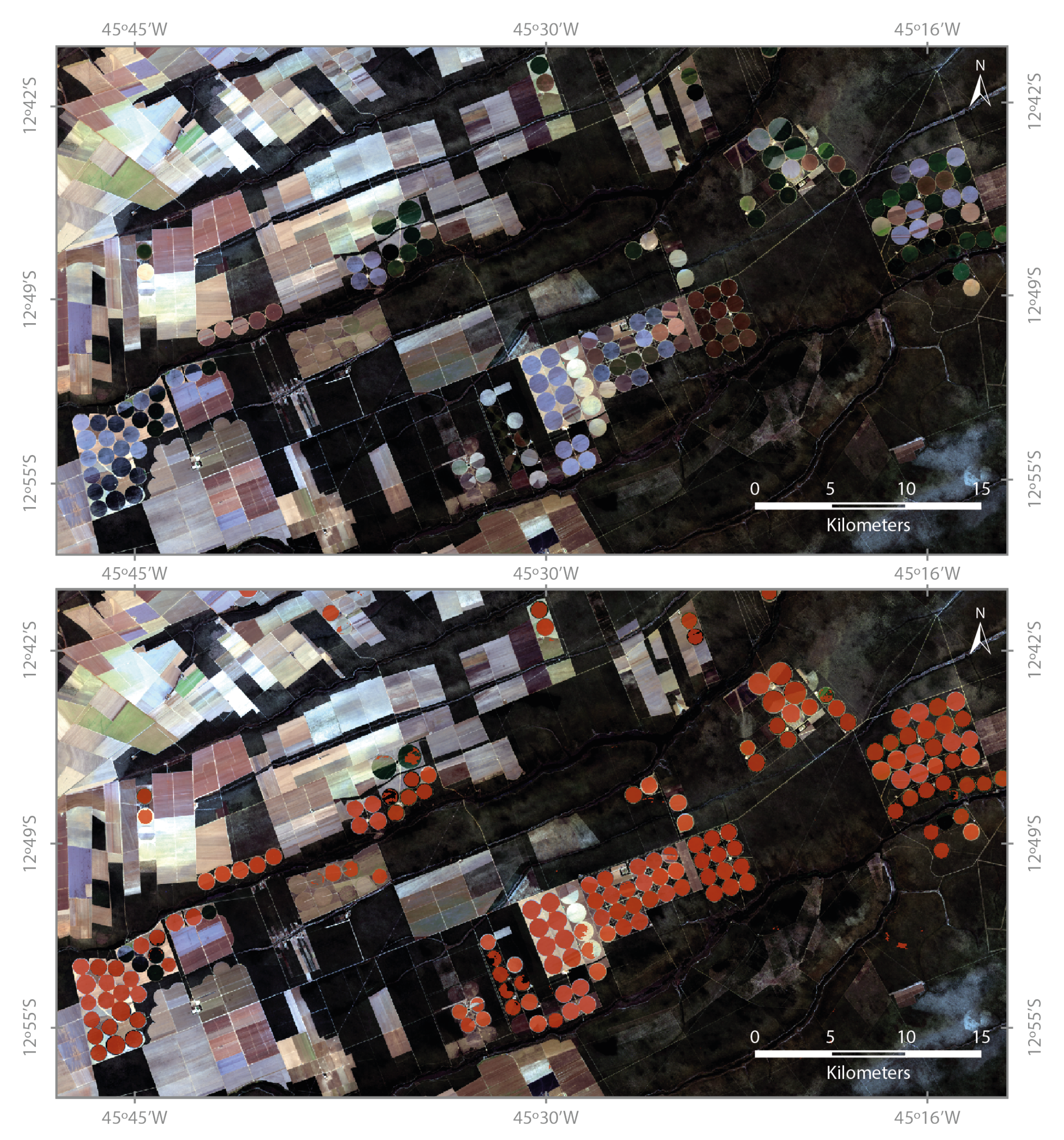

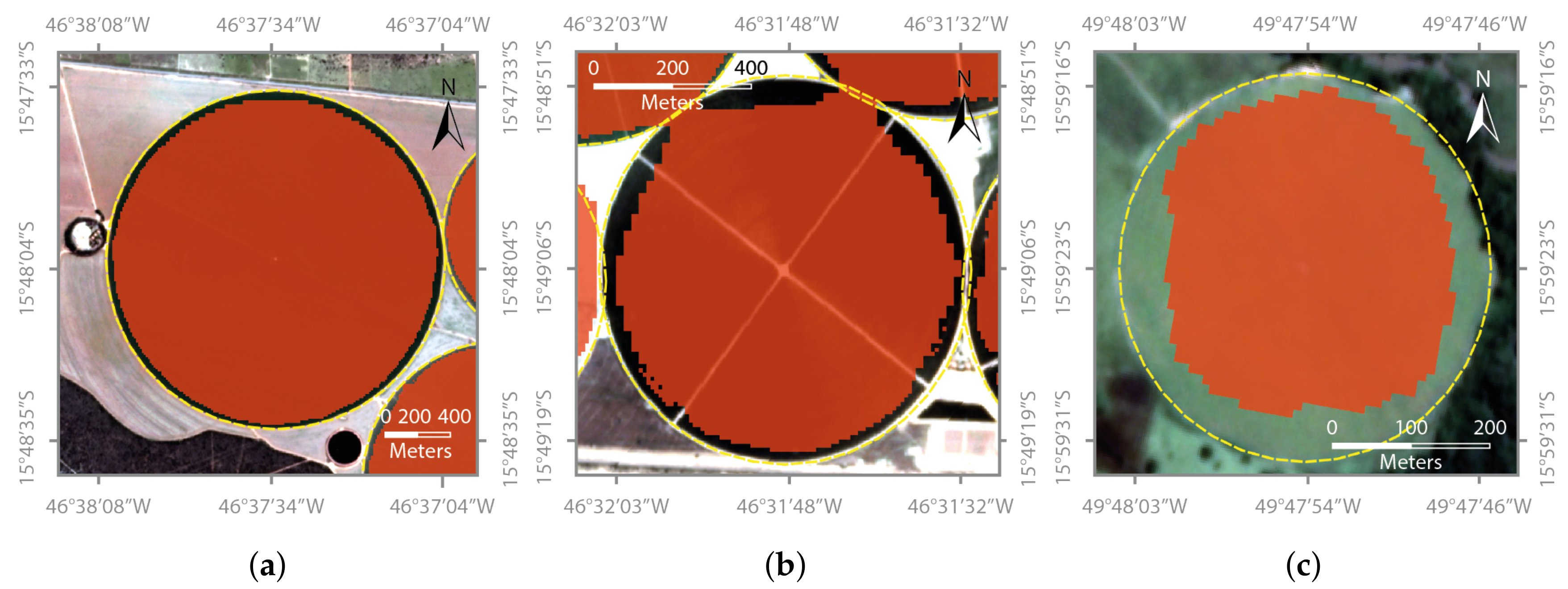

2. Materials and Methods

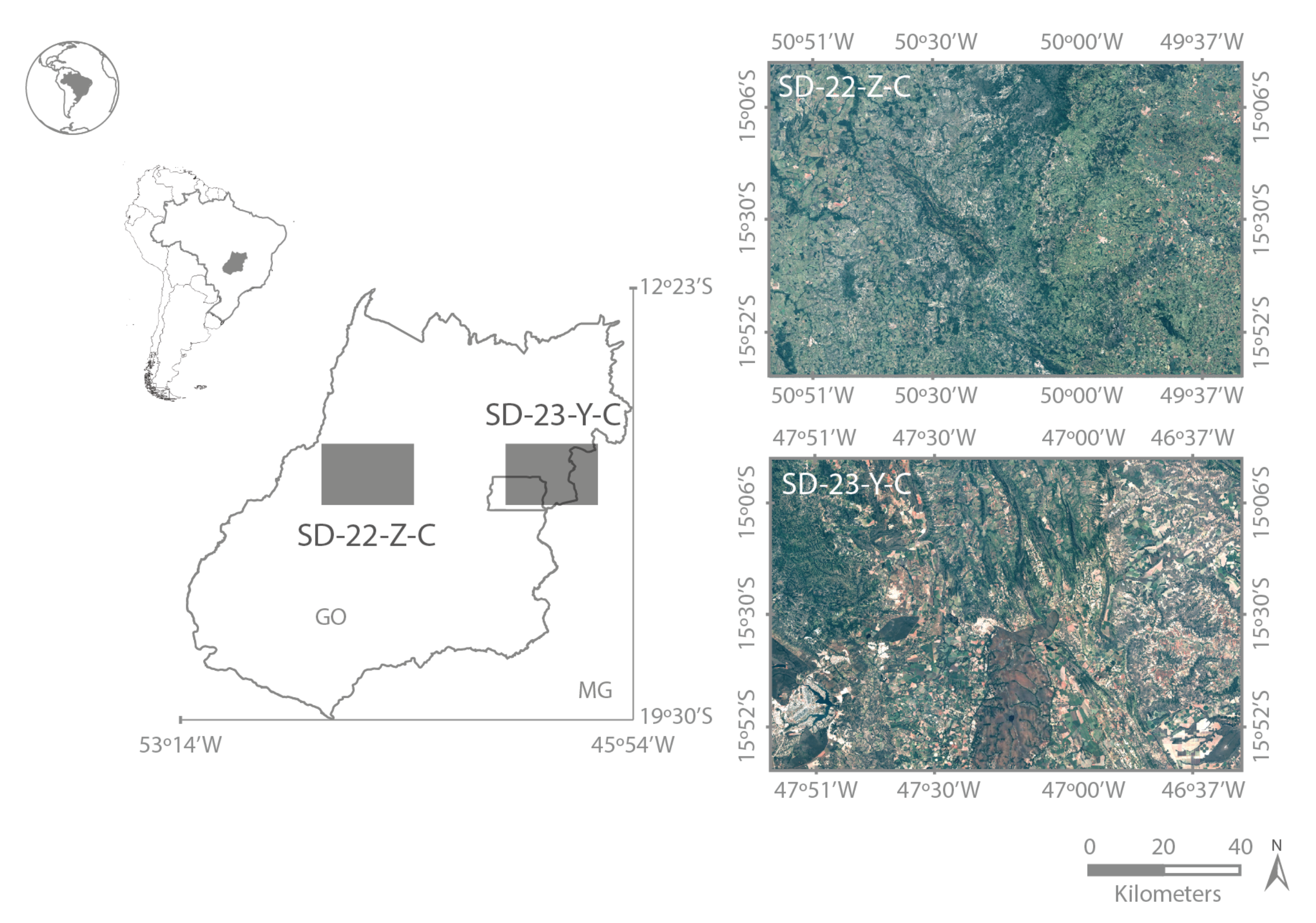

2.1. Study Area

2.2. Image Dataset

2.2.1. Planet Images

2.2.2. Planet Mosaics

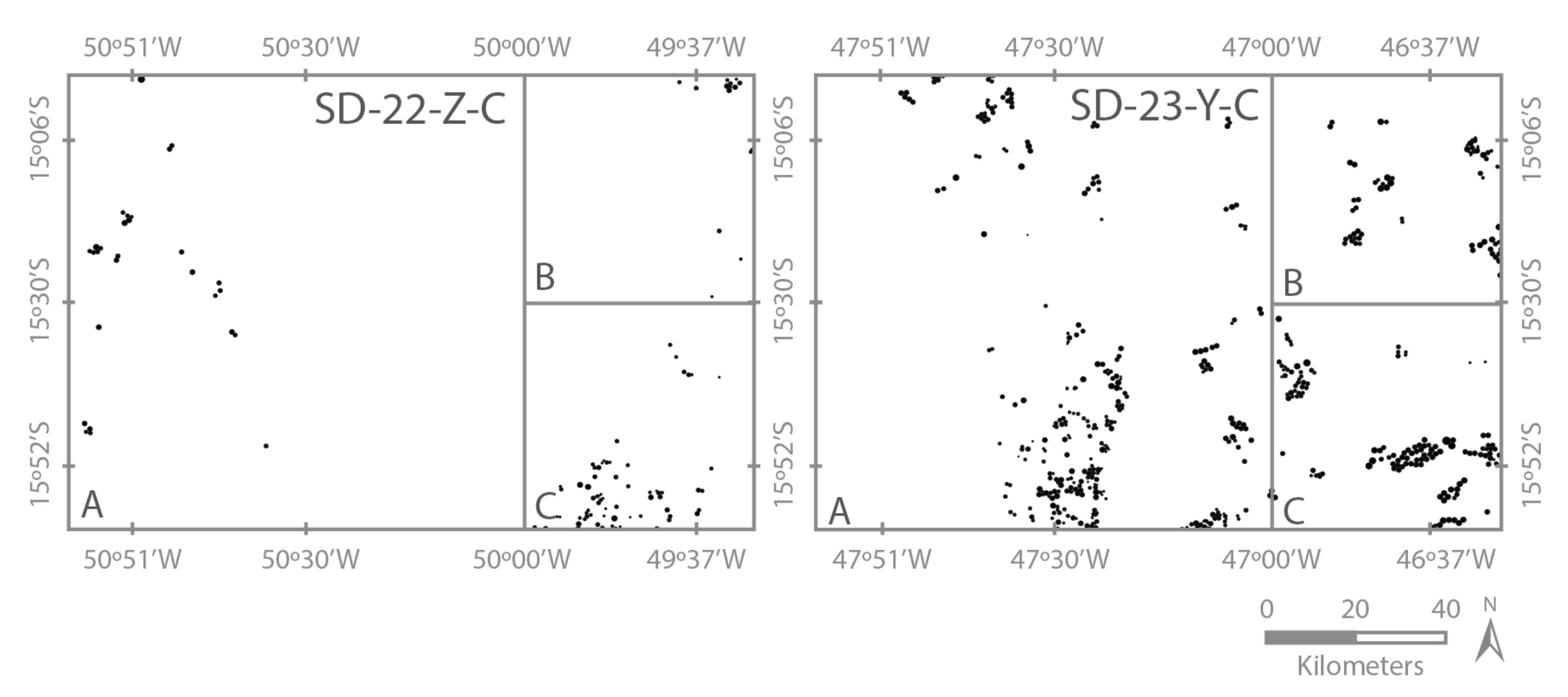

2.2.3. Training Data

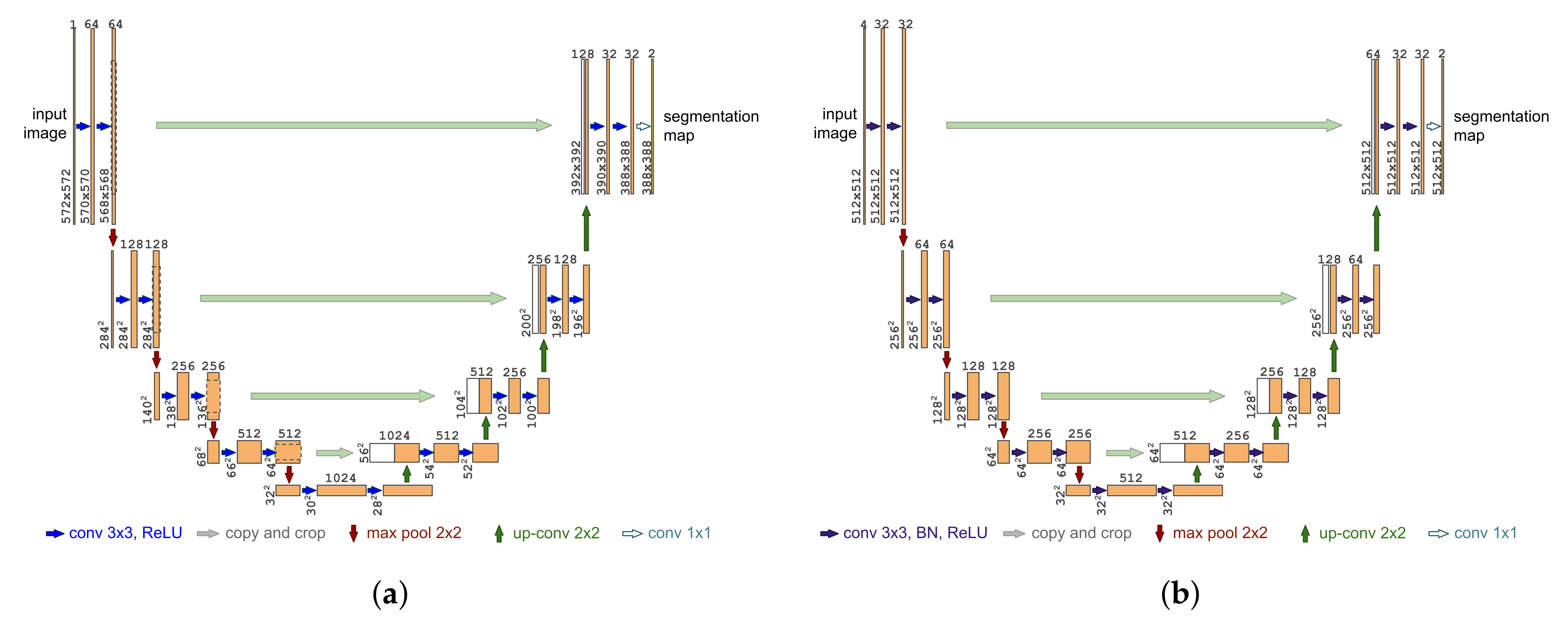

2.3. Network Architecture

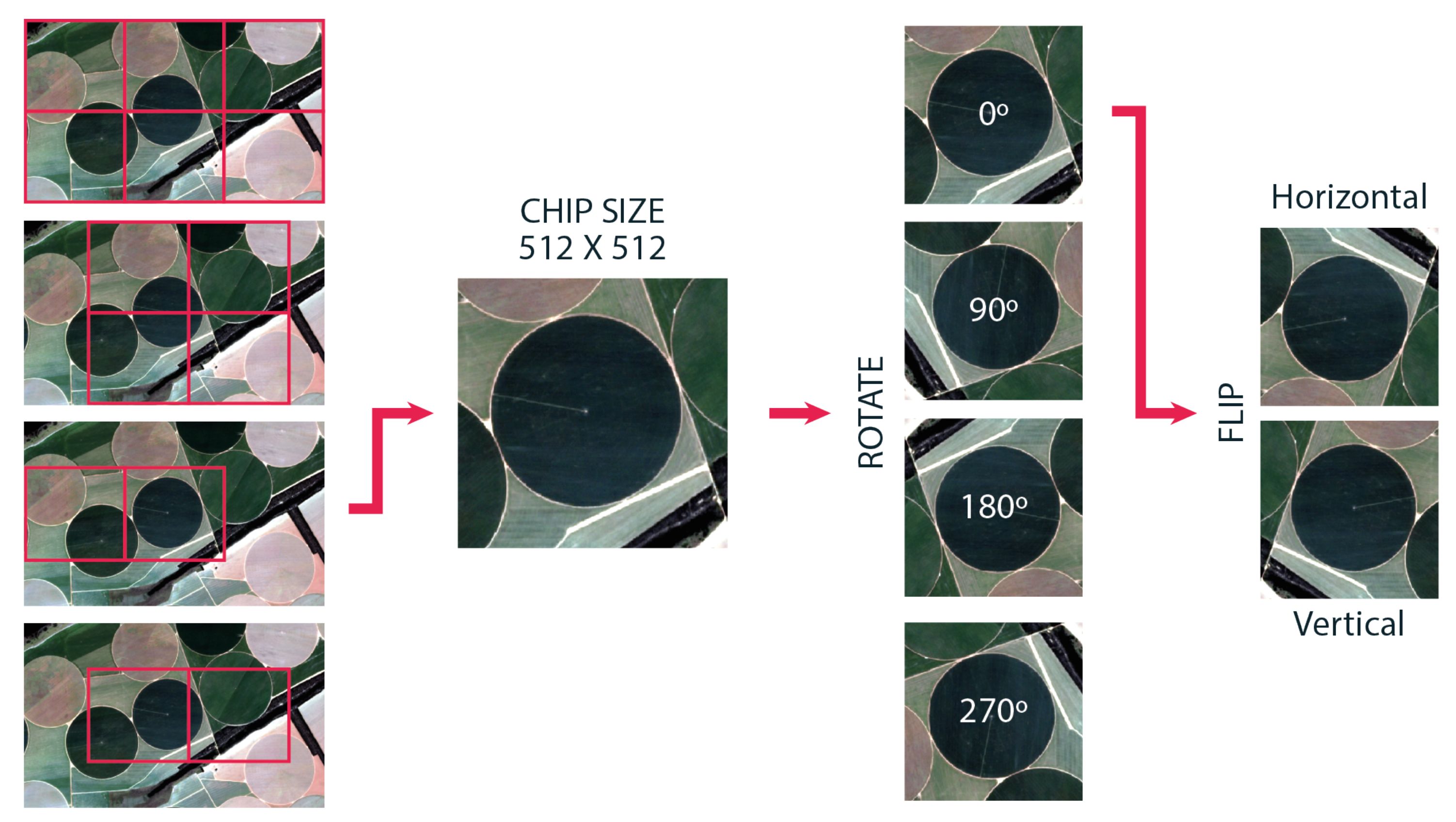

2.4. Preprocessing and Data Augmentation

2.5. Training

2.6. Evaluation Metrics

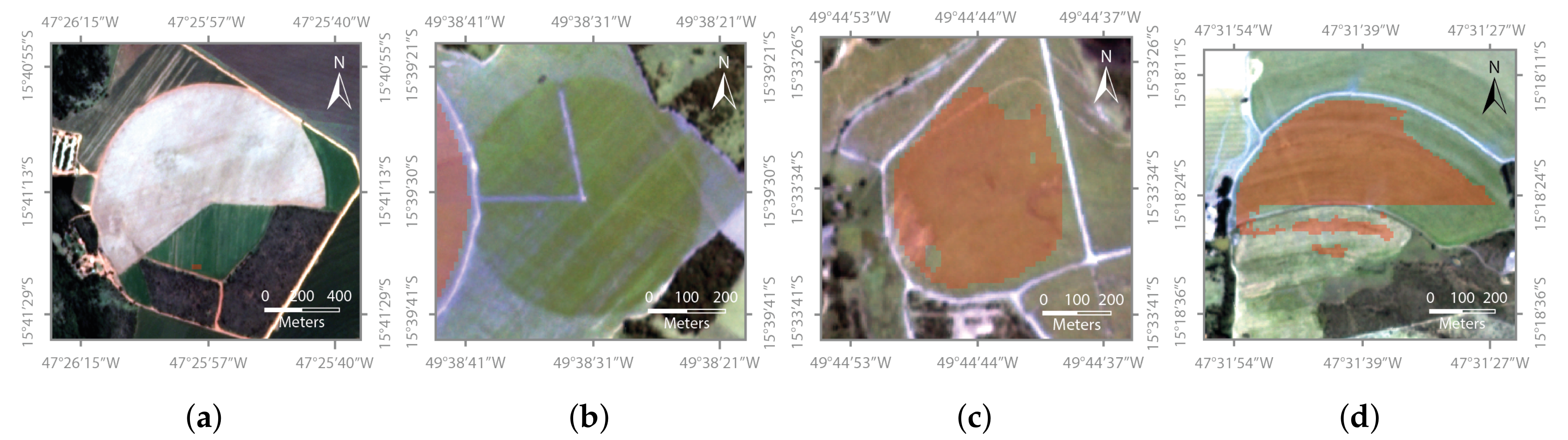

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Class | Producer Accuracy | User Accuracy | F1-Score | Accuracy |
|---|---|---|---|---|
| 1 | 0.99 | 0.88 | 0.93 | 0.99 |
| 0 | 0.99 | 0.99 | 0.99 | 0.99 |
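The class 1 row can be sanity-checked with a short calculation. The sketch below assumes that the fourth column is an F1-score, that is, the harmonic mean of the two accuracy values that precede it. The function name `f1_score` is illustrative and is not taken from the paper.

```python
def f1_score(a: float, b: float) -> float:
    """Harmonic mean of two accuracy values (assumed here to be precision and recall)."""
    if a + b == 0:
        return 0.0
    return 2 * a * b / (a + b)


# Class 1 values from the accuracy table: 0.99 and 0.88.
class1_f1 = f1_score(0.99, 0.88)
print(round(class1_f1, 2))  # 0.93
```

Because the harmonic mean is symmetric, the check does not depend on which of the two columns is treated as precision and which as recall.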

| | PlanetScope | Landsat 8 |
|---|---|---|
| Sensor name | PS2.SD | Operational Land Imager (OLI) |
| Spatial resolution | 3.7 m | 30 m |
| Spectral bands | Blue 0.45–0.51 µm; Green 0.50–0.59 µm; Red 0.59–0.67 µm; NIR 0.78–0.86 µm | Blue 0.45–0.51 µm; Green 0.53–0.59 µm; Red 0.64–0.67 µm; NIR 0.85–0.88 µm |
| Pixel value | TOA | Digital Number |
| Radiometric resolution | 16 bit | 12 bit |
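To put the resolution figures in perspective, the following sketch computes how many PlanetScope pixels cover the footprint of one Landsat 8 pixel, and how many distinct values each listed bit depth can encode. It uses only the numbers from the sensor comparison table; the constant and function names are illustrative.

```python
# Values taken from the sensor comparison table.
PLANETSCOPE_RES_M = 3.7
LANDSAT8_RES_M = 30.0
PLANETSCOPE_BITS = 16
LANDSAT8_BITS = 12


def pixels_per_coarse_pixel(coarse_m: float, fine_m: float) -> float:
    """Number of fine-resolution pixels covering one coarse pixel (area ratio)."""
    return (coarse_m / fine_m) ** 2


def quantization_levels(bits: int) -> int:
    """Number of distinct values representable at the given bit depth."""
    return 2 ** bits


ratio = pixels_per_coarse_pixel(LANDSAT8_RES_M, PLANETSCOPE_RES_M)
print(f"{ratio:.1f}")  # 65.7
print(quantization_levels(PLANETSCOPE_BITS), quantization_levels(LANDSAT8_BITS))  # 65536 4096
```

In other words, a single 30 m Landsat 8 pixel corresponds to roughly 66 PlanetScope pixels at 3.7 m.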

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Saraiva, M.; Protas, É.; Salgado, M.; Souza, C. Automatic Mapping of Center Pivot Irrigation Systems from Satellite Images Using Deep Learning. Remote Sens. 2020, 12, 558. https://doi.org/10.3390/rs12030558
