1. Introduction

Fostered by significant advances in computer technology that have taken place from the end of the last century to now, the Earth observation (EO) field has greatly evolved over the last 20 years [

1]. Improvements in hardware and software have allowed for the development of more sophisticated and powerful remote sensing systems [

2], which in turn has enhanced the acquisition of remote sensing data in terms of both quantity and quality, and also improved the analysis and processing of these data [

3]. In fact, remote sensing technology has become a fundamental tool to increase our knowledge of the Earth and how human factors, such as globalization, industrialization and urbanization can affect the environment [

4]. It provides relevant information to address current environmental problems such as desertification [

5,

6], deforestation [

7,

8], water resources depletion [

9], soil erosion [

10,

11,

12], eutrophication of freshwater and coastal marine ecosystems [

13,

14], warming of seas and oceans [

15], together with global warming and abnormal climate changes [

16] or urban areas degradation [

17], among others.

In particular, advances in optical remote sensing imaging [

18] have allowed for the acquisition of high spatial, spectral and temporal resolution images, gathered from the Earth’s surface in multiple formats, ranging from very-high spatial-resolution (VHR) panchromatic images to hyperspectral images with hundreds of narrow and continuous spectral bands. Focusing on hyperspectral imaging (HSI) [

19], this kind of data comprises abundant spectral–spatial information for large coverage, obtained by capturing the solar radiation that is absorbed and reflected by ground targets at different wavelengths, usually ranging from the visible, to the near (NIR) and short wavelength infrared (SWIR) [

20]. In this sense, HSI data obtained by airborne and satellite platforms consist of huge data cubes, where each pixel represents the spectral signature of the captured object. The shape of these spectral signatures depends on the physical and chemical behavior of the materials that compose it, working as a fingerprint for each terrestrial material. This signature allows for a precise characterization of the land cover, and is currently widely exploited in the fields of image analysis and pattern recognition [

21]. Advances in HSI processing and analysis methods have enabled the widespread incorporation of these to a vast range of applications. Regarding the forest preservation and management [

22,

23,

24,

25], HSI data can be applied to invasive species detection [

26,

27,

28], forestry health and diseases [

29,

30,

31] and analyses of relationship between water precipitations, atmospheric conditions and forest health [

32,

33]. Also, regarding the management of other natural resources, there are works focused on freshwater and maritime resources [

34,

35,

36,

37] and geological and mineralogical resources [

38,

39,

40,

41]. In relation to agricultural and livestock farming activities, [

42], the available literature compiles a large number of works about HSI applied to precision agriculture [

43,

44], analyzing the soil properties and status [

45,

46,

47], investigating diseases and pests affecting crops [

48,

49] and developing libraries of spectral signatures specialized in crops [

50]. Moreover, HSI data can be applied to urban planning [

51,

52,

53], military and defense applications [

54,

55,

56] and disaster prediction and management [

57,

58], among others.

The wide applications of HSI images call for highly efficient and accurate methods to make the most of the rich spectral information contained in HSI data. In this context, machine learning algorithms have been adopted to process and analyze the HSI data. These algorithms include spectral unmixing [

59,

60], image segmentation [

61,

62,

63], feature extraction [

64,

65], spectral reduction [

66,

67], anomaly, change and target detection [

68,

69,

70,

71,

72,

73] and land-cover classification methods [

74,

75], among others. Among these algorithms, supervised pixel-wise classifiers can derive more accurate results and thence more widely used for images classification compared to unsupervised approaches. This higher accuracy is mainly due to the class-specific information provided during the training stage.

In order to define the classification problem in mathematical terms, let

denote the HSI scene—considered as an array of

N vectors, where each one

is composed by

B spectral bands—and let

be a set of

K land-cover classes. Classification methods define

as a mapping function with learnable parameters

that essentially describes the relationship between the spectral vector

(input) and its corresponding label

(output), creating feature–label pairs

. The final goal is to obtain the classification map

by modeling the conditional distribution

in order to infer the class labels for each pixel. Usually, this posterior distribution is optimized by training the classifier on a subset

composed by

M random independent identically distributed (i.i.d.) observations that follow the joint distribution

, i.e., a subset of known and representative labeled data, adjusting parameters

to minimize the empirical risk

[

76] defined as Equation (

1) indicates:

where

is the loss function defined over

as the discrepancy between the expected label

y and the obtained classifier’s output

. A wide variety of supervised-spectral techniques have been developed within the machine learning field to perform the classification of HSI data [

77]. Some of the most popular ones can be categorized into [

74]: (i)

probabilistic approaches, such as the multinomial logistic regression (MLR) [

62,

78] and its variants (sparse MLR (SMLR) [

79,

80] and subspace MLR -MLRsub- [

81,

82]), the logistic regression via variable splitting and augmented Lagrangian (LORSAL) [

63,

83] or the maximum likelihood estimation (MLE) [

84], among others, which obtain as a result the probability of

belonging to each of

K considered classes [

85]; (ii)

decision tree (DT) [

86,

87,

88], which defines a non-parametric classification/regression method with a hierarchical structure of branches and leaves; (iii)

ensemble methods, which are composed of multiple classifiers to enhance the classification performance, for instance random forests (RFs) [

89,

90], whose output is composed by the collective decisions of several DTs to which majority voting is applied, or boosting and bagging-based methods such as RealBoost [

91,

92], AdaBoost [

93,

94,

95,

96], Gradient Boosting [

97,

98] or the ensemble extreme learning machine (

LM) [

99], among others; (iv)

kernel approaches, such as the non-probabilistic support vector machine (SVM) [

100,

101], which exhibits a good performance when handling high-dimensional data and limited training samples, (although its performance is greatly affected by the kernel selection and the initial hyperparameters setting) and (v) the non-parametric

artificial neural networks (ANNs), which exhibit a great generalization power without prior knowledge about the statistical properties of the data, also offering a great variety of architectures thanks to their flexible structure based on the stacking of layers composed by computing neurons [

75], allowing for the implementation of traditional shallow-fully-connected models (such as the multilayer perceptron (MLP) [

102,

103]) and deep-convolutional models (such as convolutional neural networks (CNNs) [

104] and complex models as residual networks (ResNets) [

105] and capsule models [

106]).

These methods need to face the intrinsic complexity of processing HSI data, related to the huge amount of available spectral information (curse of dimensionality [

107]), the spectral bands correlation and redundancies [

108], the lack of enough labeled samples to perform supervised training [

109] and overfitting problems. Moreover, current HSI classification methods must satisfy a growing demand for effective and efficient methodologies from a computational point of view [

110,

111,

112], with the idea of being executed on low-power platforms that allow for on-board processing of data (e.g., smallsats [

113,

114]). In this sense, high performance computing (HPC) approaches such as commodity clusters [

115,

116] and graphic processing units (GPUs) have been widely employed to process HSI data [

117]. However, the adaptation of these computing platforms to on-board processing is quite difficult due to their high requirements in terms of energy consumption.

Traditionally, the data gathered by remote sensors have to be downloaded to the ground segment, when the aircraft or spacecraft platform is within the range of the ground stations, in order to be pre-processed by applying registration and correction techniques and then distributed to the final users, which perform the final processing (classification, unmixing and object detection). Nevertheless, this procedure introduces important delays related to the communication of a large amount of remote sensing data (which is usually in the range of GB–TB) between the source and the final target, producing a bottleneck that can seriously reduce the effectiveness of real-time applications [

118]. Hereof, real-time on-board processing is a very interesting topic within the remote sensing field that has significantly grown in recent years to mitigate these limitations, and to provide a solution to these types of applications [

119,

120,

121,

122,

123]. In addition to avoiding communication latencies, the on-board processing can considerably reduce the amount of bandwidth and storage required in the collection of HSI data, allowing for the development of a more selective data acquisition and reducing the cost of on-the-ground processing systems [

124]. As a result, low-power consumption architectures such as field-programmable gate array (FPGAs) [

125,

126] and efficient GPU architectures [

110] have emerged as an alternative to transfer part of the processing from the ground segment to the remote sensing sensor. A variety of techniques have been adapted to be carried out on-board [

127], ranging from pre-processing methods, such as data calibration [

128], correction [

129], compression [

123,

130] and georeferencing [

131], to final user applications, for instance data unmixing [

126], object detection [

132] and classification [

110,

133]. In the context of classification, usually, the training of supervised methods should be performed offline (in external systems), so that only the trained model will be implemented in the device (which will only perform the inference operation). On embedded and on-board systems, the size and energy consumption of the model are crucial parameters, so it is necessary to find an appropriate trade-off between performance (in terms of accuracy measurements) and energy consumption (in terms of power consumption and execution times). In this paper, we perform a detailed analysis and study of the performance of machine learning methods in the task of supervised, spectral-based classification of HSI data, with particular emphasis on the inference stage, as it is the part that is implemented in on-board systems. Specifically, we conduct an in-depth review and analysis of the advantages and disadvantages of these methods in the aforementioned context.

The remainder of this paper is organized as follows.

Section 2 provides an overview of the considered machine learning methods to perform supervised, spectral-based HSI classification.

Section 3 presents the considered HSI scenes and the experimental setting configurations adopted to conduct the analysis among the selected HSI classifiers.

Section 4 provides a detailed experimental discussion, highlighting the advantages and disadvantages of each method in terms of accuracy and computational measurements. Finally,

Section 5 concludes the paper with some remarks and hints at plausible future research lines.

2. Inference Characteristics of Models for Hyperspectral Images Classification

We selected some of the most relevant techniques for HSI data classification to be compared in the inference stage. These techniques are: multinomial logistic regression (MLR), random forest (RF), support vector machine (SVM), multi-layer perceptron (MLP) and a shallow convolutional neural network (CNN) with 1D kernel as well as gradient boosting decision Trees (GBDT), a tree based technique that has successfully been used in other classification problems. In order to compare them, it is necessary to perform the characterization of each algorithm in the inference stage, measuring the size in memory of the trained model and analyzing the number and type of operations needed to perform the complete inference stage for the input data.

In the case of HSI classification, the input is a single pixel vector composed of a series of features, and each one of these features is a 16-bit integer value. Each model treats the data in different ways so, for instance, the size of the layers of a neural network will depend on the number of features of the pixels of each data set, while the size of a tree-based model will not. We will explain the characteristics of the different models and the inference process for each of them.

2.1. Multinomial Logistic Regression

The MLR classifier is a probabilistic model that extends the performance of binomial logistic regression for multi-class classification, approximating the posterior probability of each class by a softmax transformation. In particular, for a given HSI training set

composed by

M pairs of spectral pixels

and their corresponding labels

, the posterior probability

of the

k-th class is given by Equation (

2) [

134]:

where

is the set of logistic regressors for class

k, considering

as all the coefficients of the MLR, while

is a feature extraction function defined over the spectral data

, which can be linear, i.e.,

, or non-linear (for instance, kernel approaches [

135]). In this work, linear MLR is considered.

Standardization of the data set is needed before training, so the data are compacted and centered around the average value. This process implies the calculation of the average (

) and standard deviation (

s) values of the entire data set

X to apply Equation (

3) to each pixel

. In HSI processing, it is common to pre-process the entire data set before splitting it into the training and testing subsets, so

and

s include the test set, which is already standardized to perform the inference after training. Nevertheless, in a real environment,

and

s values will be calculated from the training data and then the standardization should be applied on-the-fly, applying these values to the input data received from the sensor. This implies not only some extra calculations to perform the inference for each pixel, but also some extra assumptions on the representativeness of the training data distribution. These extra calculations are not included in the measurements of

Section 4.2.

The MLR model has been implemented in this work with the

scikit learn logistic regression model with a multinomial approach and

lbfgs solver [

136]. The trained model consists of one estimator for each class, so the output of each estimator represents the probability of the input belonging to that class. The formulation of the inference for the class

k estimator (

) corresponds to Equation (

4), where

is the input pixel and

correspond to the bias value and the coefficients of the estimator of class

k.

As a result, the model size depends on the number of classes (K) and features (B), having parameters. The inference of one pixel requires floating point multiplications and floating point accumulations. In this case, we have a very small model and it does not require many calculations. However, since it is a linear probabilistic model, its accuracy may be limited in practice, although it can be very accurate when there is a linear relation between the inputs and outputs.

2.2. Decision Trees

A decision tree is a decision algorithm based on a series of comparisons connected among them as in a binary tree structure, so that the node comparisons lead the search to one of the child nodes, and so on, until reaching a leaf node that contains the result of the prediction. During training, the most meaningful features are selected and used for the comparisons in the tree. Hence the features that contain more information will be used more frequently for the comparison, and those that do not provide useful information for the classification problem will simply be ignored [

137]. This is an interesting property of this algorithm since, based on the same decisions made during training to choose features, we can easily determine the feature importance. This means that decision trees can also be used to find out which features carry the main information load, and that information can be used to train even smaller models keeping most of the information of the image with much less memory impact.

Figure 1 shows the inference operation of a trained decision tree on a series of feature inputs with a toy example. In the first place, this tree takes feature 3 of the input and compares its value with the threshold value 14,300; as the input value is lower than the threshold it continues on the left child, and keeps with the same procedure until it reaches the leaf with 0.15 as output value.

One of the benefits of using decision trees over other techniques is that they do not need any input pre-processing such as data normalization, scaling or centering. They work with the input data as it is [

138]. The reason is that features are never mixed. As can be seen in

Figure 1, in each comparison the trees compare the value of an input feature with another value of the same feature. Hence, several features can have different scales. In other machine learning models, as we just saw in MLR for example, features are mixed to generate a single value so, if their values belong to different orders of magnitude, some features will initially dominate the result. This can be compensated for during the training process, but in general normalization or other pre-processing technique will be needed to speed up training and improve the results. Besides, the size of the input data does not affect the size of the model, so dimensionality reduction techniques such as principal component analysis (PCA) are not needed to reduce the model size, which substantially reduces the amount of calculation needed at inference.

Nevertheless, a single decision tree does not provide accurate results for complex classification tasks. The solution is to use an ensemble method that combines the results of several trees in order to improve the accuracy levels. We will analyze two of these techniques, random forest (RF) and gradient boosting decision trees (GBDT). In terms of computation, most of the machine learning algorithms need a significant amount of floating point operations on inference, and most of them are multiplications. By contrast, the inference with an ensemble of decision trees just need a few comparisons per tree. In terms of memory requirements, the size of this models depends on the number of trees and the number of nodes per tree, but the memory accesses, and therefore the used bandwidth, is much lesser than the size of the model because decision trees only need to access a small part of the model to perform an inference.

In the case of hyperspectral-images pixel classification, the input is a single pixel composed of a series of features. Each node specializes in a particular feature during training, meaning that, at the time of inference, one particular node performs a single comparison between its trained value and the value of the corresponding feature. Since the feature values of hyperspectral images are 16-bit integers, each node just needs an integer comparison to make their decision; i.e., left or right child. This is a very important characteristic for embedded and on-board systems. In most ML models the inputs are multiplied by a floating-point value, hence even when the input model is an integer, all the computations will be floating-point. However, a tree only need to know whether the input is smaller or greater than a given value, and that value can be an integer without any accuracy loss.

So in the case of hyperspectral images pixel-classification, this technique behaves exceptionally in terms of computation. Decision trees are fast and efficient during inference and can be executed even by low-power microcontrollers.

2.2.1. Random Forest

A typical approach to use as ensemble method is RF, where several trees are trained from the same data set, but each one of them from a random subsample of the entire data set. Moreover, the search of the best split feature for each node is done on a random subset of the features. Then each classifier votes for the selected class [

139].

The RF model has been implemented with the scikit learn random forest classifier model [

140]. In this implementation the final selection is done by averaging the predictions of every classifier instead of voting, which implies that each leaf node must keep a prediction value for each class, so after every tree performs the inference the class is selected from the average of every prediction. This generates big models but, as we said, it only needs to access a small part of it during inference.

2.2.2. Gradient Boosting

However, even better results can be obtained applying a different ensemble approach called gradient boosting. This technique is an ensemble method that combines the results of different predictors in such a way that each tree attempts to improve the results of the previous ones. Specifically, the gradient boosting method consists of training predictors sequentially so each new iteration tries to correct the residual error generated in the previous one. That is, each predictor is trained to correct the residual error of its predecessor. Once the trees are trained, they can be used for prediction by simply adding the results of all the trees [

138,

141].

The GBDT model has been implemented with the LightGBM library Classifier [

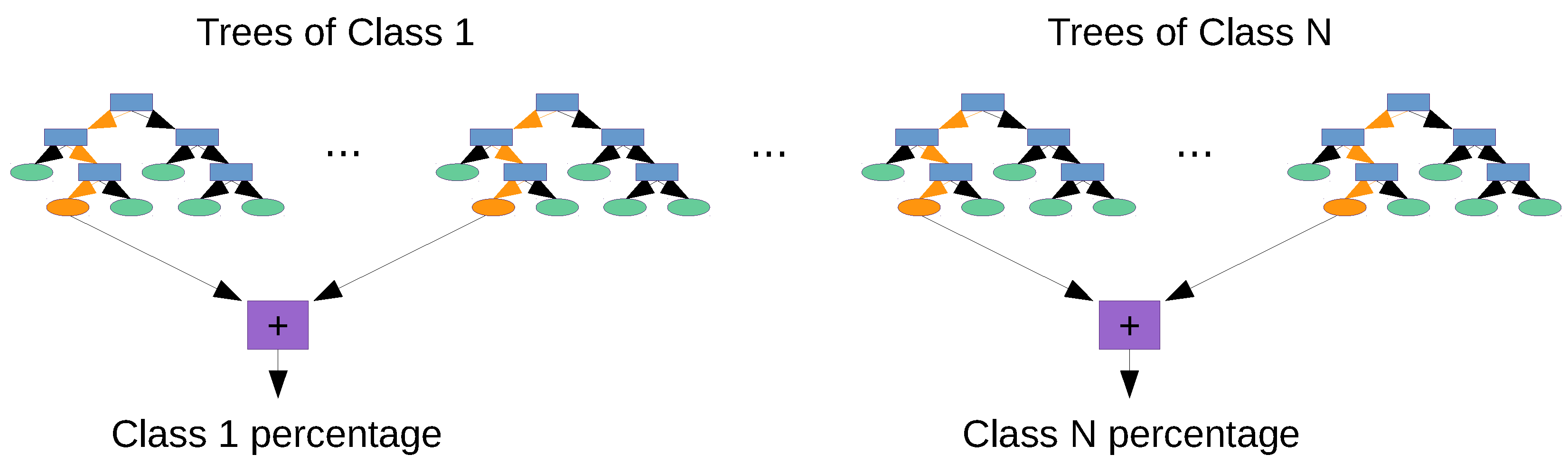

142]. For multi-class classification, one-vs-all approach is used in GBDT implementation, which means that the model trains a different estimator for each class. The output of the correspondent estimator represents the probability that the pixel belongs to that class, and the estimator with the highest result is the one that corresponds to the selected class. On each iteration, the model adds a new tree to each estimator. The one-vs-all approach makes it much easier to combine the results given that each class has their own trees, so we just need to add the results of the trees of each class separately, as shown in

Figure 2. This accumulations of the output values of the trees are the only operations in floating point that the GBDT need to perform.

Due to its iterative approach, the GBDT model also allows designers to trade-off accuracy for computation and model size. For example, if a GBDT is trained for 200 iterations, it will generate 200 trees for each class. Afterwards, the designer can decide whether to use all of them, or to discard the final ones. It is possible to find similar trade-off with other ML models, for instance reducing the number of convolutional layers in a CNN or the number of hidden neurons in a MLP. However, in that case, each possible design must be trained again, whereas in GBDT only one train is needed, and afterwards the designer can simply evaluate the results when using different number of trees and generate a Pareto curve with the different trade-offs.

2.3. Support Vector Machine

A support vector machine (SVM) is a kernel-based method commonly used for classification and regression problems. It is based on a two-class classification approach, the support vector network algorithm. To find the smallest generalization error, this algorithm searches for the optimal hyperplane, i.e., a linear decision function that maximizes the margin among the support vectors, which are the ones that define the decision boundaries of each class [

143]. In the case of pixel-based classification of hyperspectral images, we need to generalize this algorithm to a multi-class classification problem. This can be done following a one-vs-rest, or one-vs-all, approach, training

K separate SVMs, one for each class, so each two-class classifier will interpret the data from its own class as positive examples and the rest of the data as negative examples [

134].

The SVM model has been implemented with the scikit learn support vector classification (SVC) algorithm [

144], which implements one-vs-rest approach for multi-class classification. SVM model also requires pre-processing of the data applying the standardization Equation (

3), with the same implications explained in

Section 2.1. According to scikit learn SVC mathematical formulation [

144], the decision function is found in Equation (

5), where

corresponds to the kernel. We used the radial basis function (RBF) kernel, whose formulation is found in Equation (

6). So the complete formulation of the inference operations is found in Equation (

7), where

corresponds to the

i-th support vector,

product is the coefficient of this support vector,

corresponds to the input pixel,

is the bias value and

is the value of the

training parameter.

The number of support vectors defined as

M in Equation (

7) of the SVM model will be the amount of data used for the training set. So this model does not keep too many parameters, which makes it small in memory size, but in terms of computation, it requires a great amount of calculus to perform one inference. The number of operations will depend on the number of features and the number of training data, which makes it unaffordable in terms of computation for really big data sets. Moreover, as it uses one-vs-all, it will also depends on the number of classes because it will train an estimator for each one of them.

2.4. Neural Networks

Neural networks have become one of the most used machine learning techniques for images classification, and they have also proved to be a good choice for hyperspectral images classification. A neural network consists of several layers sequentially connected so that the output of one layer becomes the input of the next one. Some of the layers can be dedicated to intermediate functions, like pooling layers that reduce dimensionality highlighting the principal values, but the main operation, as well as most of the calculations, of a neural network resides in the layers based on neurons. Each neuron implements Equation (

8), where

x is the input value and

w and

b are the learned weight and bias respectively, which are float values.

Usually, when applying groups of neurons in more complex layers, the results of several neurons are combined such as in a dot product operation, as we will see for example in

Section 2.4.1, and this

w and

b values are float vectors, matrices or tensors, depending on the concrete scenario. So the main calculations in neural networks are float multiplications and accumulations, and the magnitude of these computations depends on the number and size of the layers of the neural network. The information we need to keep in memory for inference consists in all these learned values, so the size of the model will also depend on the number and size of the layers.

Neural network models also require pre-processing of the data. Without it, the features with higher and lower values will initially dominate the result. This can be compensated during training process, but in general normalization will be needed to speed up training and improve the results. As for MLR and SVM models, a standardization Equation (

3) of the data sets was applied.

2.4.1. Multi Layer Perceptron

A multi layer perceptron (MLP) is a neural network with at least one hidden layer, i.e., intermediate activation values, which requires at least two fully-connected layers. Considering the

l-th fully connected layer, its operation corresponds to Equation (

9), where

is the layer’s input, which can come directly from the original input or from a previous hidden layer

,

is the output of the current layer, resulting from applying the weights

and biases

of the layer. If the size of the input

is

, being

M the number of input samples and

the dimension of the feature space, and the size of the weights

is

, the output size will be

, i.e., the

M samples represented in the feature space of dimension

and defined by the

l-th layer. In the case of hyperspectral imaging classification, the input size for one spectral pixel will be

, where

B is the number of spectral channels, while the final output of the model size will be

, where

K is the number of considered classes.

The MLP model was implemented with the PyTorch neural network library [

145], using the Linear classes to implement two fully-connected layers. The number of neurons of the first fully-connected layer is a parameter of the network, and the size of each neuron of the last fully-connected layer will depend on it. In the case of hyperspectral images pixel classification, the input on inference will be a single pixel (

according to last explanation) with

B features and the final output will be the classification for the

K classes, so the size of each neuron of the first fully-connected layer will depend on the number of features, while the number of neurons of the last fully-connected layer will be the number of classes.

As the input for pixel classification is not very big, this model keeps a small size once trained. During inference it will need to perform a float multiplication and a float accumulation for each one of its parameters, among other operations, so even being small the operations needed are expensive in terms of computation.

2.4.2. Convolutional Neural Network

A convolutional neural network (CNN) is a neural network with at least one convolutional layer. Instead of fully-connected neurons, convolutional layers apply locally-connected filters per layer. These filters are smaller than the input and each one of them performs a convolution operation on it. During a convolution, the filter performs dot product operations within different sections of the input while it keeps moving along it. For hyperspectral images pixel classification, whose input consist in a single pixel, the 1D convolutional layer operation can be described with Algorithm 1, where input pixel

has

B features, the layer has

F filters and each filter

Q has

q values, i.e., weights in the case of 1D convolution, and one bias

, so the output

will be of shape

. The initialization values

and

correspond respectively to the learned weights and biases of the layer.

| Algorithm 1 1D convolutional layer algorithm. |

| 1: Input: |

| 2: | ▹ The input pixel is an array of B features |

| 3: Initialize: |

| 4: | ▹ Array of F filters, each one with q weights |

| 5: | ▹ Array of F bias values, corresponding to each filter |

| 6: | ▹ Output structure generation |

| 7: for do | ▹ For each filter |

| 8: | ▹ Get current filter |

| 9: | ▹ Get current bias value |

| 10: for do | ▹ Movement of the filter along the input |

| 11: |

| 12: for do | ▹ Dot product along the filter in current position |

| 13: | ▹ corresponds to the weight value |

| 14: end for |

| 15: | ▹ corresponds to the bias value |

| 16: end for |

| 17: end for |

| 18: Return: |

| 19: | ▹ The output matrix of shape |

The CNN model was implemented with PyTorch neural network library [

145], using the convolution, the pooling and the linear classes to define a Network with respectively one 1D convolutional layer, one max pooling layer and two fully connected layers at the end. The input of the 1D convolutional layer will be the input pixel, while the input of the rest of the layers will be the output of the previous one, in the specified order. The number and size of the filters of the 1D convolutional layer are parameters of the network, nevertheless the relation between the profundity of the filters and the number of features will determine the size of the first fully connected layer, which is the biggest one. The max pooling layer does not affect the size of the model, since it only performs a size reduction by selecting the maximum value within small sub-sections of the input, but it will affect the number of operations as it needs to perform several comparisons. The fully connected layers are actually an MLP, as explained in

Section 2.4.1. The size of the last fully connected layer will also depend on the number of classes. In terms of computation, the convolutional layer is very intensive in calculations, as can be observed in Algorithm 1, and most of them are floating point multiplications and accumulations.

2.5. Summary of the Relation of the Models with the Input

Each discussed model has different characteristics on its inference operation, and the size and computations of each one depends on different aspects of the input and the selected parameters.

Table 1 summarizes the importance of the data set size (in the case of hyperspectral images this is the number of pixels of the image), the number of features (number of spectral band of each hyperspectral pixel) and the number of classes (labels) in relation to the size and the computations of each model. The dots in

Table 1 correspond to a qualitative interpretation, from not influenced at all (zero dots) to very influenced (three dots), regarding how each model size and number of computations are influenced by the size of the data set, the number of features of each pixel and the number of classes. This interpretation is not intended to be quantitative but qualitative, i.e., just a visual support for the following explanations.

The number of classes is an important parameter for every model, but it affects them in a very different way. Regarding the size of the model, the number of classes defines the size of the output layer in the MLP and CNN, while for the MLR, GBDT and SVM the entire estimator is replied to as many times as the number of classes. Since the RF needs to keep the prediction for each class on every leaf node, the number of classes is crucial to determine the final size of the model, and affects it much more. Regarding the computation, in the MLR, GBDT and SVM models the entire number of computations is multiplied by the number of classes, so it affects them very much. Furthermore, in the SVM model the number of classes will also affect the number of support vectors needed, because it is necessary to have enough training data for every class, so each new class not only increases the number of estimators, but also increases the computational cost by adding new support vectors. In neural networks, the number of classes defines the size of the output (fully connected) layer, which implies multiply and accumulate floating point operations, but this is the smallest layer for both models. In the case of RF, it only affects the final calculations of the results, but it is important to remark that these are precisely the floating point operations of this model.

The number of features is not relevant for decision tree models during inference, that is why they do not need any dimensionality reduction techniques. The size of each estimator of the MLR and the SVM models will depend directly on the number of features, so it influences the size as much as the number of classes. In neural networks, it affects the size of the first fully connected layer (which is the biggest one), so the size of these models is highly influenced by the number of features. Nevertheless, in the case of the MLP, it only multiplies the dimension of the fully connected layer so it does not impact that much as in the case of the CNN, where it will be also multiplied by the number of filters of the convolutional layer. In a similar way, the number of operations of each estimator of the MLR and the SVM models will be directly influenced by the number of features. Again, for the MLP it will increase the number of operations of the first fully connected layer and for the CNN also the convolutional layer, which is very intensive in terms of calculations.

The size of the data set (and specifically the size of the training set) only affects the SVM model, because it will generate as many support vectors as the number of different data samples used in the training process. Regarding the size of the model, it multiplies the number of parameters of each estimator, so it will affect the size of the model as much as the number of classes. Actually, both the training set and the number of classes are related to each other. Regarding the number of operations, the core of Equation (

7) depends on the number of support vectors, so its influence is very high.

It is also worth noting that decision trees are the only ones that do not require any pre-processing to the input data. As we already explained in

Section 2.1, this implies some extra calculations not included in the measurements of

Section 4.2, but they can also be a source of possible inaccuracies because of the implications they could have once applied to a real system with entirely new data taken in different moments and conditions. For instance, applying standardization means that we will subtract the mean value of our training set to the data, and reduce it in relation to the standard deviation of our training set.

3. Data Sets and Training Configurations

The data sets selected for experiments are the Indian Pines (IP) [

146], Pavia University (PU) [

146], Kennedy Space Center (KSC) [

146], Salinas Valley (SV) [

146] and University of Houston (UH) [

147].

Table 2 shows the ground truth and the number of pixels per class for each image.

The IP data set is an image of an agricultural region, mainly composed of crop fields, collected by the Airborne Visible Infra-Red Imaging Spectrometer (AVIRIS) sensor in Northwestern Indiana, USA. It has 145 × 145 pixels with 200 spectral bands after removal of the noise and water absorption bands. Of the total 21,025 pixels, 10,249 are labeled into 16 different classes.

The UP data set is an image of an urban area, the city of Pavia in Italy, collected by the Reflective Optics Spectrographic Imaging System (ROSIS), a compact airborne imaging spectrometer. It is composed of 610 × 340 pixels with 103 spectral bands. Only 42,776 pixels from the total 207,400 are labeled into nine classes.

The KSC data set is an image with water and vegetation collected by the AVIRIS sensor over the Kennedy Space Center in Florida, USA. It has 512 × 614 pixels with 176 spectral bands after removing the water absorption and low signal-to-noise bands. Only 5211 out of the available 314,368 pixels are labeled into 13 classes.

The SV data set is an image composed of agricultural fields and vegetation, collected by the AVIRIS sensor in Western California, USA. It has 512 × 217 pixels with 204 spectral bands after removing the noise and water absorption bands. Of the total 111,104 pixels, 56,975 are labeled into 16 classes.

The UH data set is an image of an urban area collected by the Compact Airborne Spectrographic Imager (CASI) sensor over the University of Houston, USA. It has 349 × 1905 pixels with 144 spectral bands. Only 15,029 of the total 664,845 pixels are labeled into 15 classes. As it was proposed as the benchmark data set for the 2013 IEEE Geoscience and Remote Sensing Society data fusion contest [

148], it is already divided into training and testing sets, with 2832 and 12,197 pixels, respectively.

The implementation of the algorithms used in this review were developed and tested on a hardware environment with an X Generation Intel

® Core™i9-9940X processor with 19.25M of Cache and up to 4.40GHz (14 cores/28 way multi-task processing), installed over a Gigabyte X299 Aorus, with 128GB of DDR4 RAM. Also, a graphic processing unit (GPU) NVIDIA Titan RTX with 24GB GDDR6 of video memory and 4608 cores was used. We detailed in

Section 2 the libraries and classes used for the implementation of each model: MLR with scikit learn logistic regression, random forest with scikit learn random forest classifier, GBDT with LightGBM classifier, SVM with scikit learn support vector classification, MLP with PyTorch neural network linear layers and CNN1D with PyTorch neural network convolutional, pooling and linear layers.

For each dataset we trained the models applying cross-validation techniques to select the final training hyperparameters. After the cross-validation, the selected values did not always correspond to the best accuracy, but to the best relation between accuracy and model size and requirements. The selected hyperparameters shown in

Table 3 are the penalty of the error (

C) for the MLR, the number of trees (

n), the minimum number of data to split a node (

m) and maximum depth (

d) for both the RF and the GBDT, and also the maximum number of features to consider for each split (

f) for the RF, the penalty of the error (

C) and kernel coefficient (

) for the SVM, the number of neurons in the hidden layer (h.l.) for the MLP and for the CNN, the number of filters of the convolutional layer (

f), the number of values of each filter (

q), the size of the kernel of the max pooling layer (

p) and the number of neurons of the first and last fully connected layers (

) and (

), respectively.

The final configurations of some models not only depend on the selected hyperparameters, but also on the training data set (for the SVM model) and the training process itself (for the RF and GBDT models).

Table 4 shows the number of features (ft.) and classes (cl.) of each image and the final configurations of RF, GBDT and SVM models. For the tree models, the shown values are the total number of trees (trees), which in the case of the GBDT model depends on the number of classes of each image, the total number of non-leaf nodes (nodes) and leaf nodes (leaves) and the average depth of the trees of the entire model (depth). For the SVM model, the number of support vectors (s.v.) depends on the amount of training data.

4. Discussion of Results

First we present the accuracy results for all models and images, and then we report the size and computational measurements on inference. Then, we summarize and analyze the characteristics of each model in order to target an embedded or an on-board system.

4.1. Accuracy Results

Figure 3 depicts the accuracy evolution of each model when increasing the percentage of pixels for each class selected for training. Neural network models always achieve high accuracy values, with the CNN model outperforming all other models, and the SVM as a kernel-based model is always the next one or even outperforming the MLP. The only behavior that does not this pattern is the high accuracy values achieved by the MLR model on the KSC data set. Except for this case, the results obtained by neural networks, kernel-based models and the other models were expected [

75]. Nevertheless, it is worth mentioning that, for a tree based model, GBDT achieves great accuracy values which are very close to those obtained by neural networks and the SVM, which always provide higher values than the RF, which is also a tree based model.

The results obtained with the UH data set are quite particular, since it is not an entire image to work with, but two separated structures already prepared as training and testing sets. As we can observe in the values of the overall accuracy in Table 9, the accuracy of all models is below the score obtained for other images. However, the distribution of the different models keeps the same behavior described for the rest of the data sets, with the particularity that the MLR model outperforms the GBDT in this case.

Table 5,

Table 6,

Table 7,

Table 8 and

Table 9 show the accuracy results of the selected configurations of each model. For the IP and KSC images, the selected training set is composed of 15% of the pixels from each class, while in the UP and SV only consists of 10% of the pixels from each class. The fixed training set for the UH image is composed of around 19% of the total pixels.

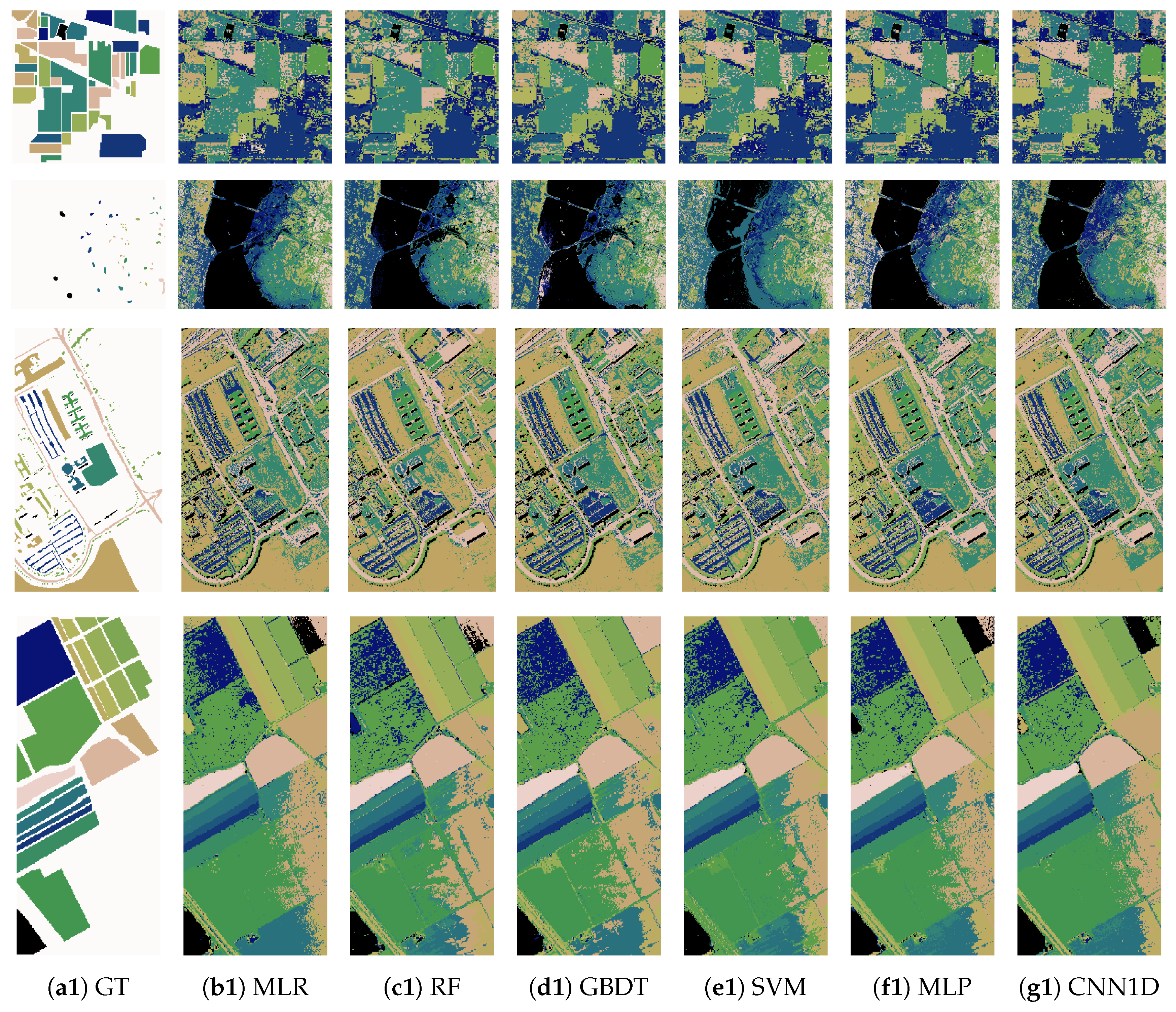

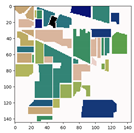

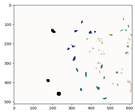

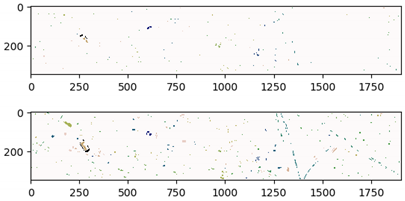

Figure 4 shows the classification maps obtained for the different data sets by all the models. As we can observe, most of the classification maps have the typical

salt and pepper effect of spectral models, i.e., classified trough individual pixels. There are some particular classes that are better modeled by certain models. For instance, the GBDT and SVM perfectly define the contour of the soil–vinyard–develop class of SV, while CNN1D exhibits a very good behavior on the cloudy zone in the right side of the UH data set, and both tree based models (RF and GBDT) perform very well on the swampy area on the right side of the river in the KSC data set. Nevertheless, the most significant conclusion that can be derived from these class maps is that the different errors of each model are distributed in a similar way along classes for each model, as it can be seen on

Table 5,

Table 6,

Table 7,

Table 8 and

Table 9, but here we can confirm that it is consistent for the entire classification map. In general, all the classification maps are quite similar and well defined in terms of the contours, and the main classes are properly classified. We can conclude that the obtained accuracy levels are satisfactory, and the errors are well distributed, without significant deviations due to a particular class nor significant overfitting of the models.

4.2. Size and Computational Measurements

To perform the characterization of each algorithm in inference it is necessary to analyze their structure and operation. The operation during inference of every model has been explained in

Section 2, and the final sizes and configurations of the trained models after cross-validation for parameter selection has been detailed in

Section 3.

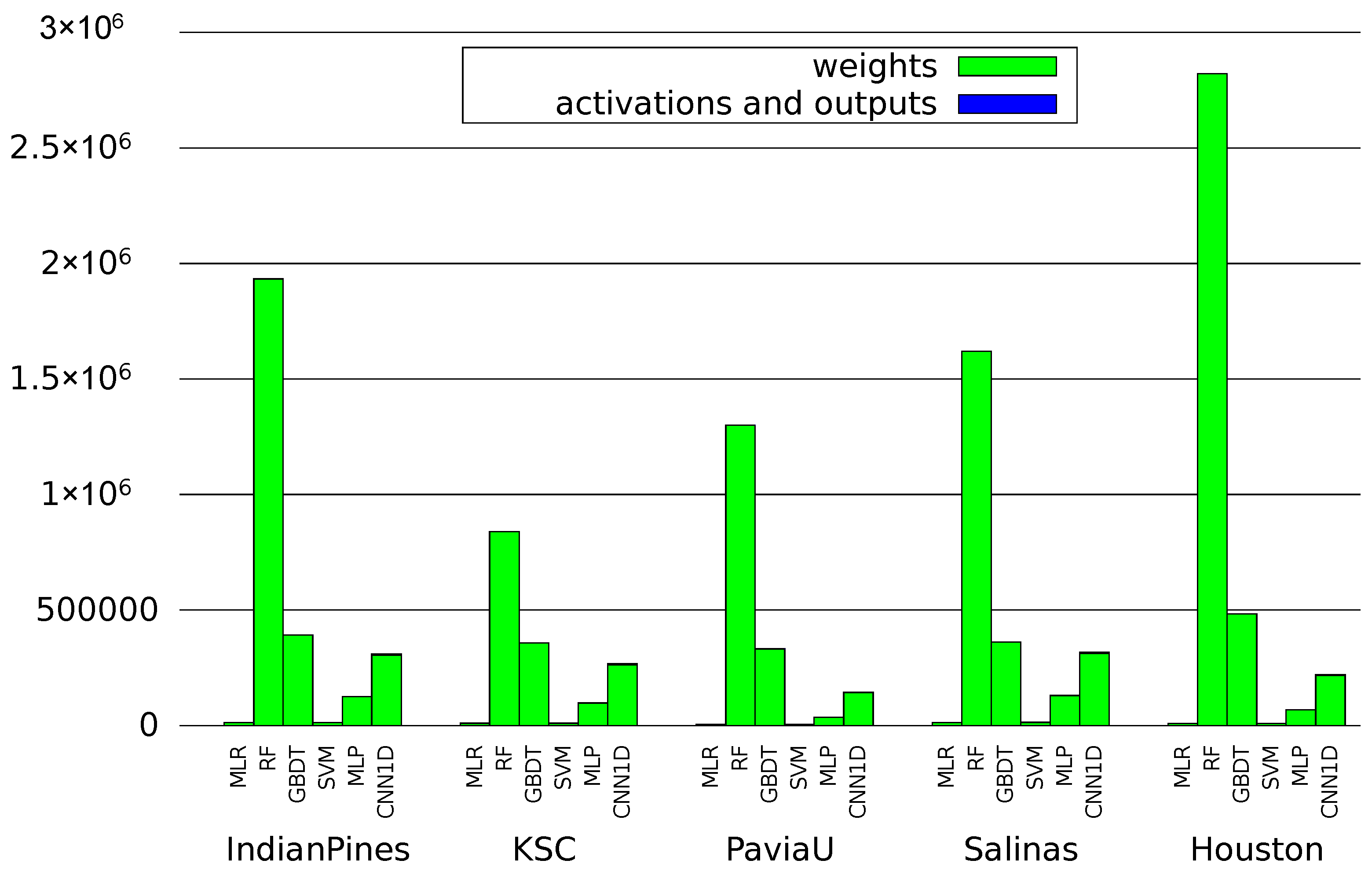

Figure 5 reports the sizes in Bytes of the trained models, while

Figure 6 shows the number and type of operations performed during the inference stage.

It is very important to remark that these measurements have been realized theoretically, based on the described operations and model configurations. For instance, the size measurements do not correspond to the size of a file with the model dumped on it, which is software-dependent, i.e., depends on the data structures it uses to keep much more information for the framework than the actual learned parameters needed for inference. As a result,

Figure 5 shows the theoretical size required in memory to store all the necessary structures for inference, based on the analysis of the models, exactly as it would be developed for a specific hardware accelerator or an embedded system.

As we can observe, the size of RF models is one order of magnitude bigger than the others. This is due to their need to save the values of the predictions for every class on each leaf node. This is a huge amount of information, even compared to models that train an entire estimator for each class, like GBDT. Actually, the size of MLR and SVM models is one order of magnitude smaller than GBDT, MLP and CNN1D models. Nevertheless, all the models (except the RF) are below 500 kilobytes, which makes them very affordable even for small low-power embedded devices.

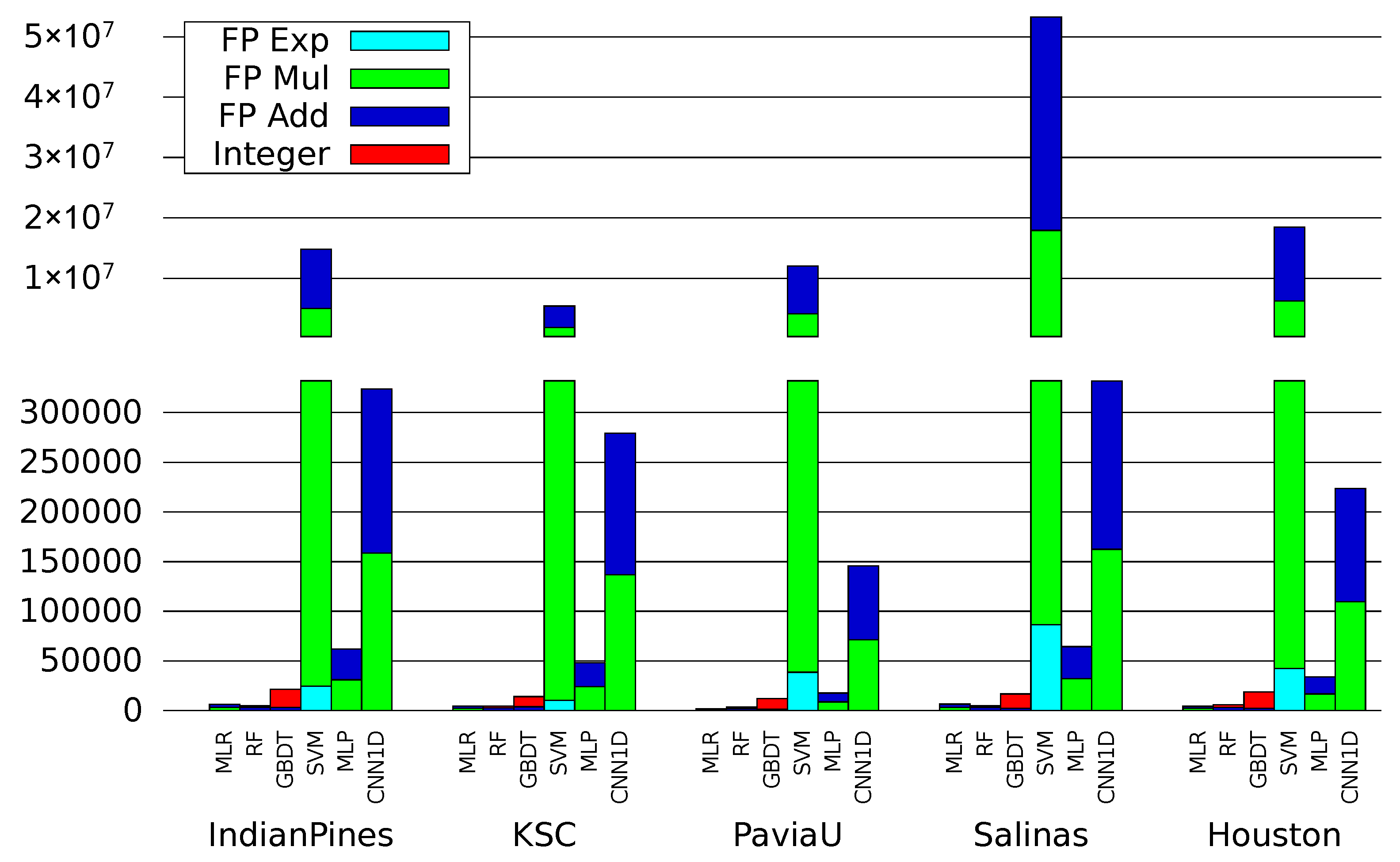

In a similar way, the operational measurements shown on

Figure 6 are based on the analysis of each algorithm, not in terms of software executions (that depend on the architecture, the system and the framework), and they are divided into four groups according to their computational complexity. The only models that use integers for the inference computations are the decision trees, and they only need integer comparisons. Floating point operations are the most common in the rest of the models, but they are also divided into three different categories.

FP Add refers to accumulations, subtractions and comparisons, which can be performed on an adder and are less complex,

FP Mul refers to multiplications and divisions and

FP Exp are exponential which are only performed by the SVM model. High-performance processors include powerful floating point arithmetic units, but for low-power processors and embedded devices, these computations can be very expensive.

Focusing on operations, the SVM model is two or even three orders of magnitude larger than the other models. Moreover, most of their operations are floating point multiplications and additions, but it also requires a great amount of complex operations such as exponential ones. In most of the data sets, it requires more exponential operations that the entire number of operations of the other models, except for the CNN. The number of operations required by the CNN model is one order of magnitude higher than the rest of the models, and it is basically composed of floating point multiplications and accumulations. MLR and RF models are the ones that require less operations during inference, while GBDT and MLP require several times the number of operations of the latter, sometimes even one order of magnitude more.

4.3. Characteristics of the Models in Relation to the Results

In this section, we will review the characteristics of every model in relation to this results. RF and GBDT models are composed of binary trees. The number of trees of each model are decided in the training time according to the results of the cross-validation methods explained above. The non-leaf nodes of each tree keep the value of the threshold and the number of features to compare with, which are integer values, while the leaf nodes keep the prediction value, which is a float. In the case of RF, leaf nodes keep the prediction for every class, which makes them very big models. Although these models are not the smallest, during inference they do not need to operate with the entire system; they just need to take the selected path of each tree. In terms of operations, each non-leaf node of a selected path implies an integer comparison, while the reached leaf node implies a float addition.

Notice that addressing operations, such as using the number of features to address the corresponding feature value, are not taken into account and are not considered in

Figure 6. The same occurs for the rest of the models, assuming that every computational operation needs its related addressing, so the comparison is fair.

The MLR model only requires, during inference, one float structure of the same size and shape as the entry, i.e., one hyperspectral pixel for each class. The operations realized are the dot product of the input and these structures and the result of each one of them is the prediction for the corresponding class.

The SVM model is small, in the same order of magnitude than the MLR, because it only needs the support vectors and the constants, some of which can be already pre-calculated together in just one value. But, in terms of computation, the calculation of Equation (

7) requires an enormous amount of operations compared to the rest of the methods.

The size and number of operations of the MLP model depends on the number of neurons in the hidden layer and the number of classes. For each neuron, there is a float structure of the same size and shape of the entry, and then for each class there is a float structure of the same size and shape of the result of the hidden layer. The operations realized correspond to all these dot products.

In the case of the CNN, the size corresponds to the filters of the convolutional layer and then the structures corresponding to the MLP at the end of the model, but this MLP is much bigger than the MLP model, because its entry is the output of the convolutional layer, which is much bigger than the original input pixel. The main difference with the MLP model (in terms of operations) lies in the behavior of the convolutional layer. It requires a dot product between each filter and the corresponding part of the input for each step of the convolutional filters across the entire input. This model also has a max pooling layer that slightly reduces the size of the model, because it is supposed to be executed on the fly, but adds some extra comparisons to the operations.

Since embedded or on-board systems require small, efficient models, we analyze the trade-off between the hardware requirements of each model and its accuracy results. In summary, neural networks and SVMs are very accurate models, and while they do not have large memory requirements, they require a great amount of floating point operations during inference. Furthermore, most of them are multiplications or other operations which are very expensive in terms of resources. Hence, they are the best option when using high-performance processors, but they may not be suitable for low-power processors or embedded systems. In the case of the RF, the number of operations is really small, and most of them are just integer comparisons, but the size of the model is very big compared to the other models, and it also achieves the lowest accuracy values.

According to our comparison, it seems that the best trade-off is obtained for MLR and GBDT models. Both models are reasonably small for embedded systems and require very few operations during inference. GBDT is bigger, but it still has very small dimensions. In terms of operations, even if GBDT needs to perform some more operations than the MLR, its important to remark that MLR operations are floating point multiplications and additions, while most of the GBDT operations are integer comparisons, which makes them a perfect target for on-board and embedded systems. In terms of accuracy, GBDT achieves better values in most scenarios.