Validation of Close-Range Photogrammetry for Architectural and Archaeological Heritage: Analysis of Point Density and 3D Mesh Geometry

Abstract

1. Introduction

2. Similar Works

3. Methodology

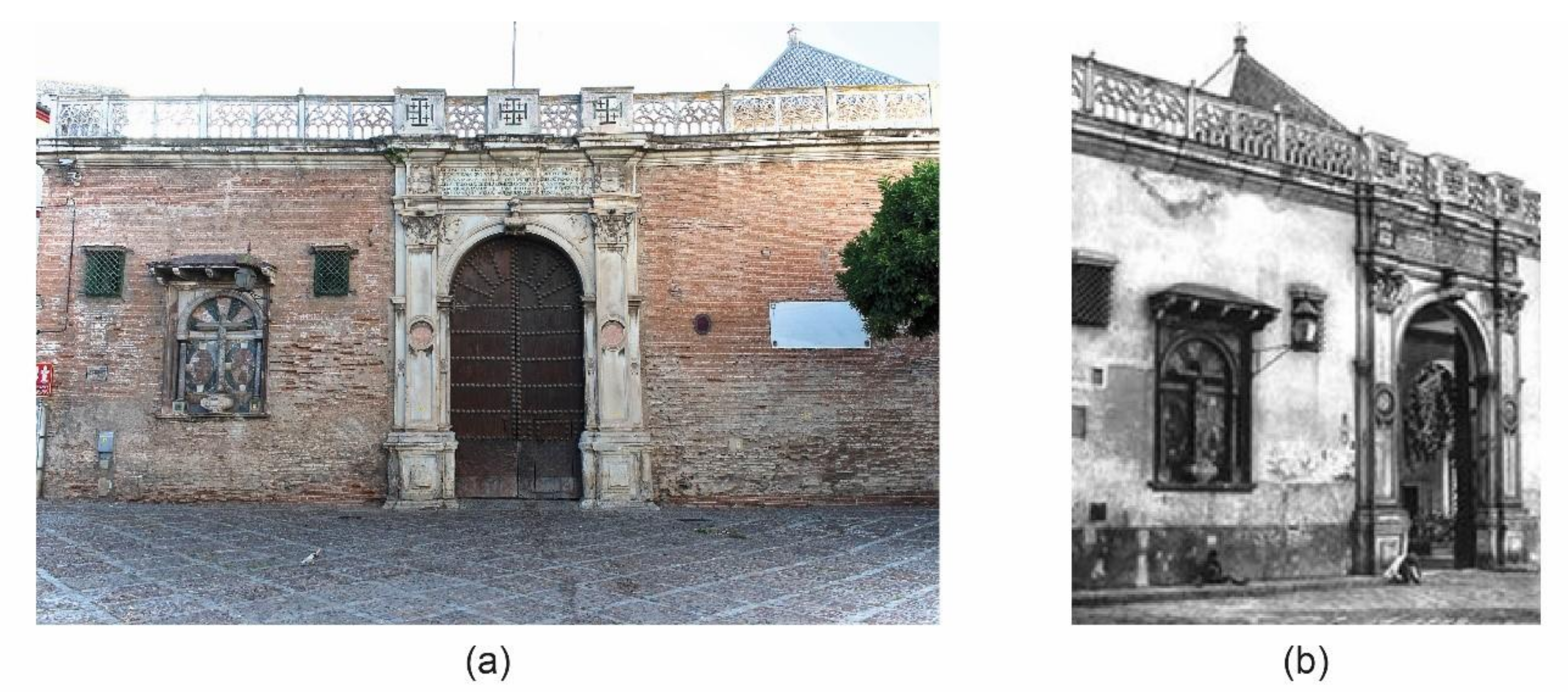

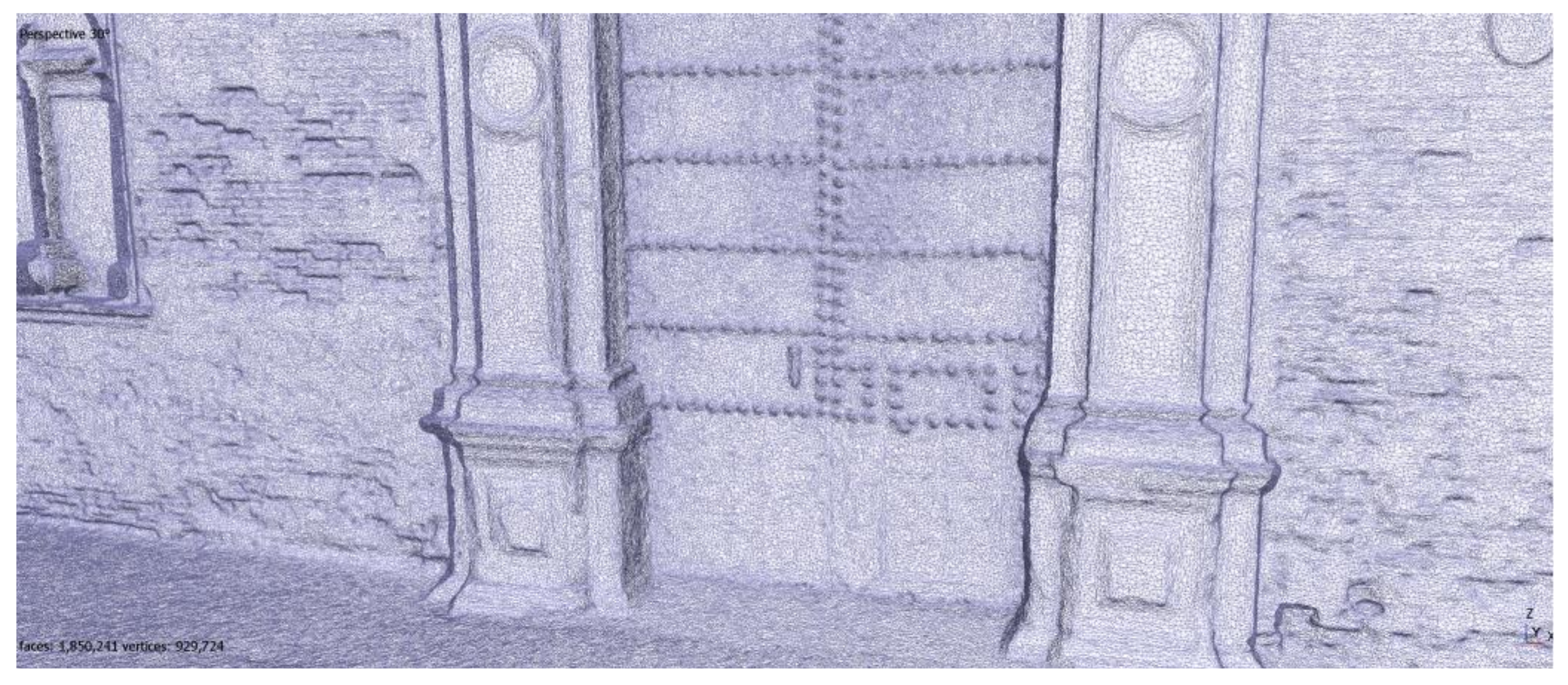

3.1. Case Study: The Main Façade of Casa de Pilatos

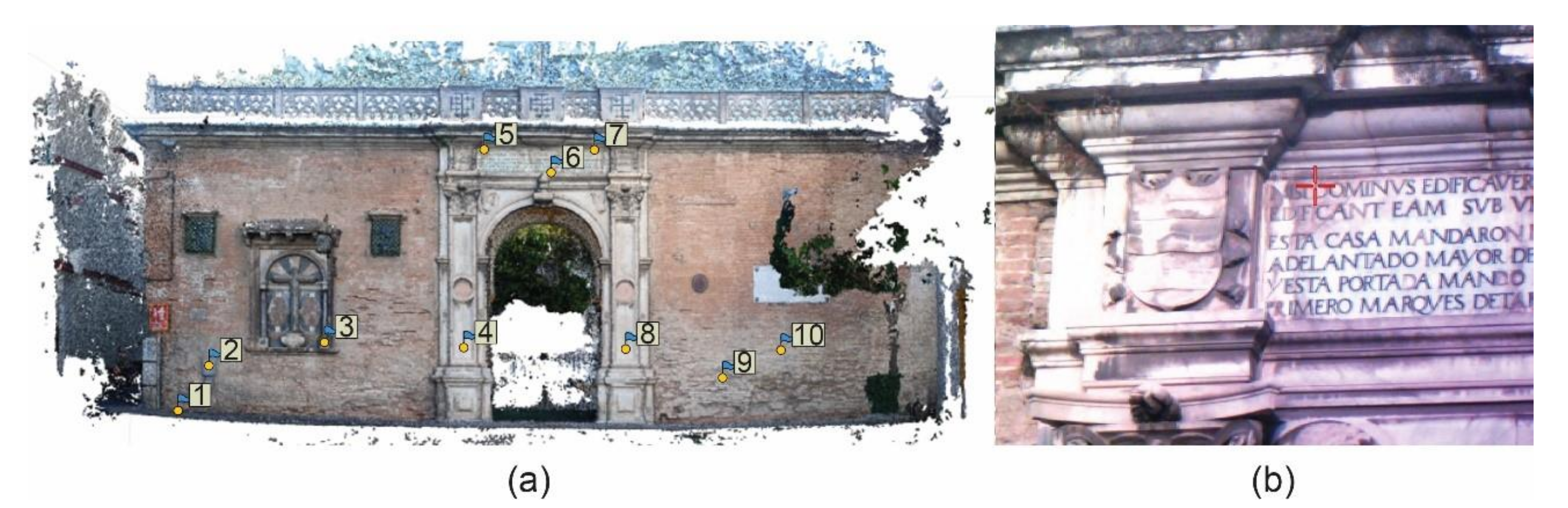

3.2. Data Collection

3.3. Validation of the Geometrical Pattern

4. Results and Discussion

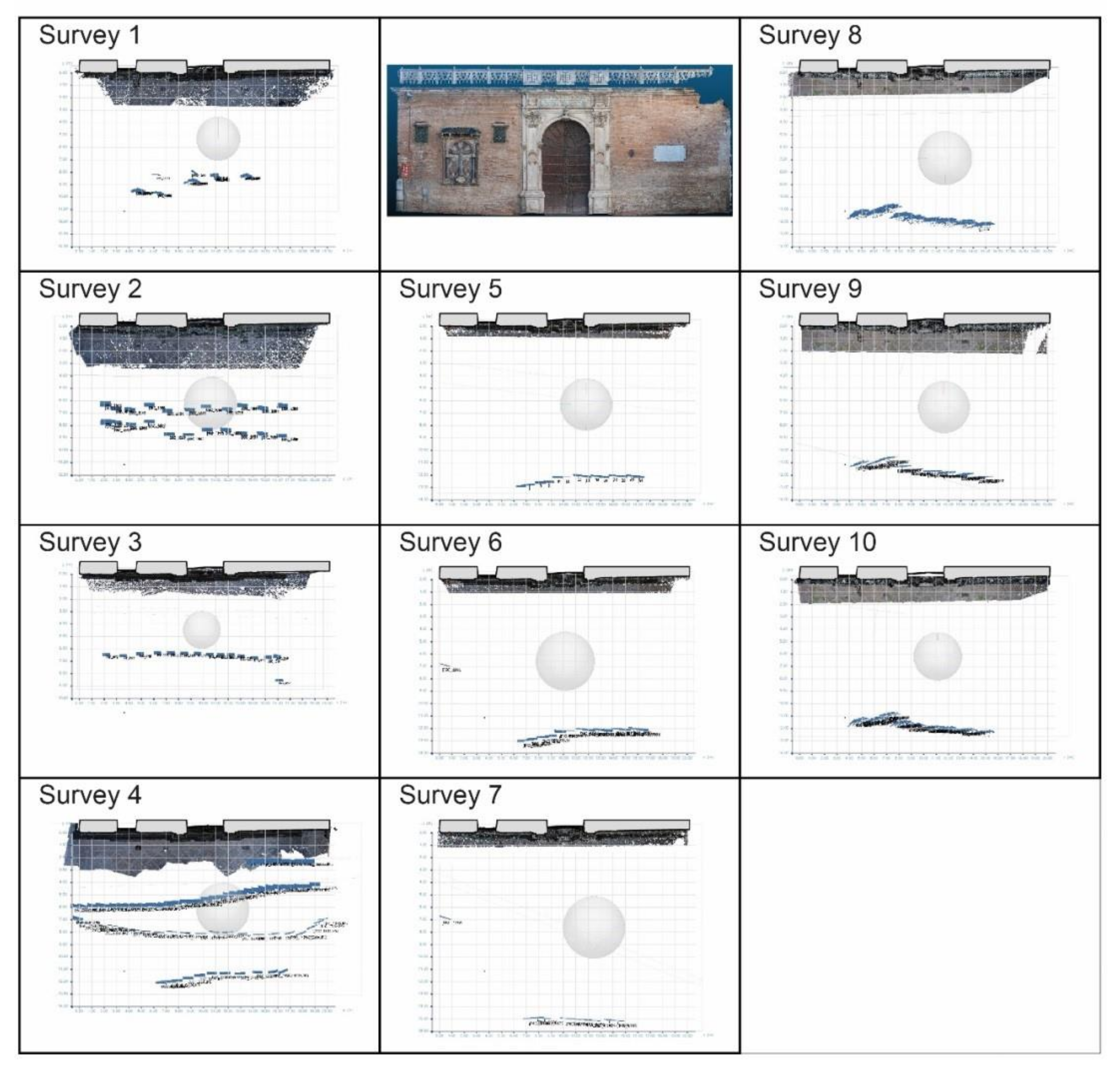

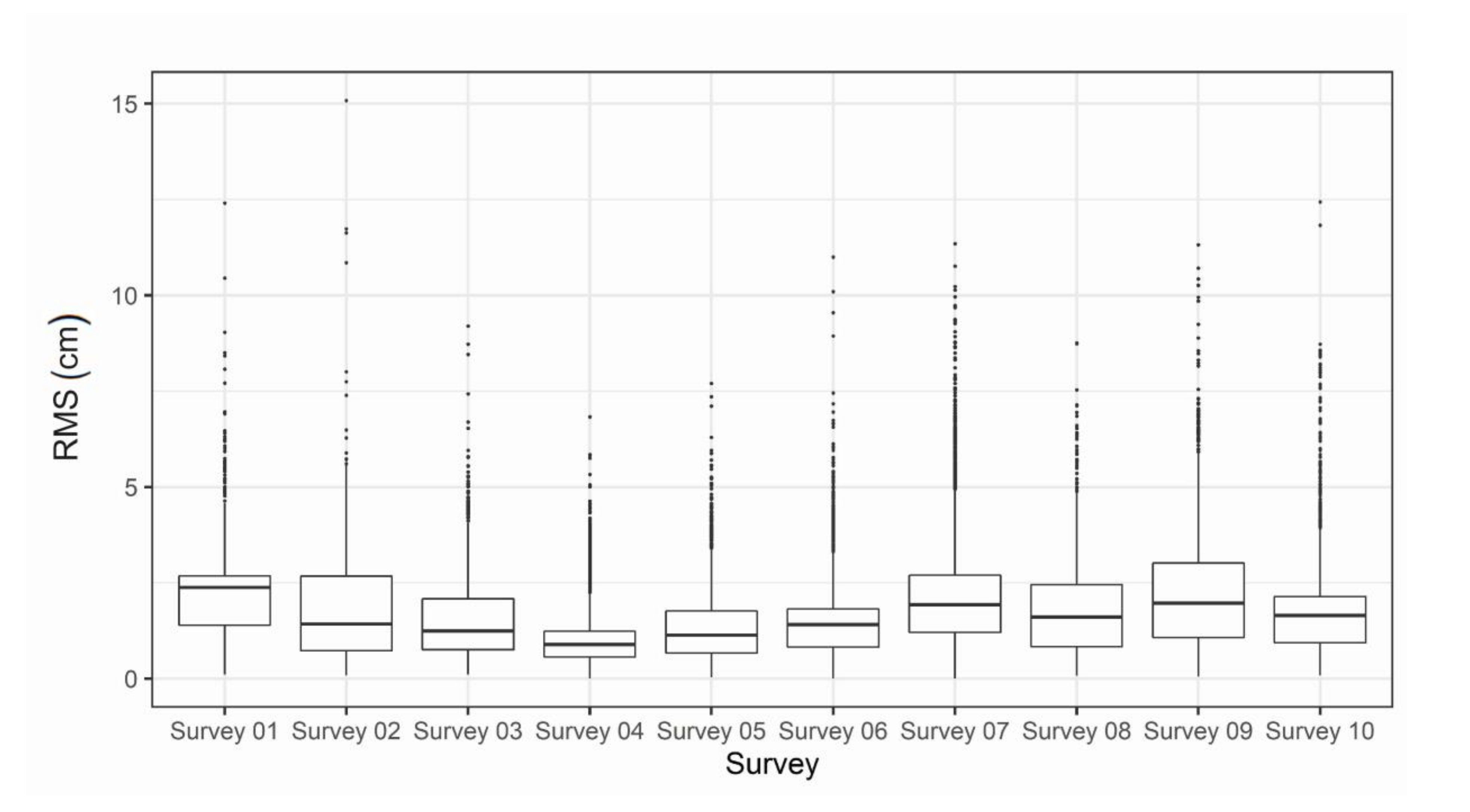

4.1. Point Cloud Spatial Resolution

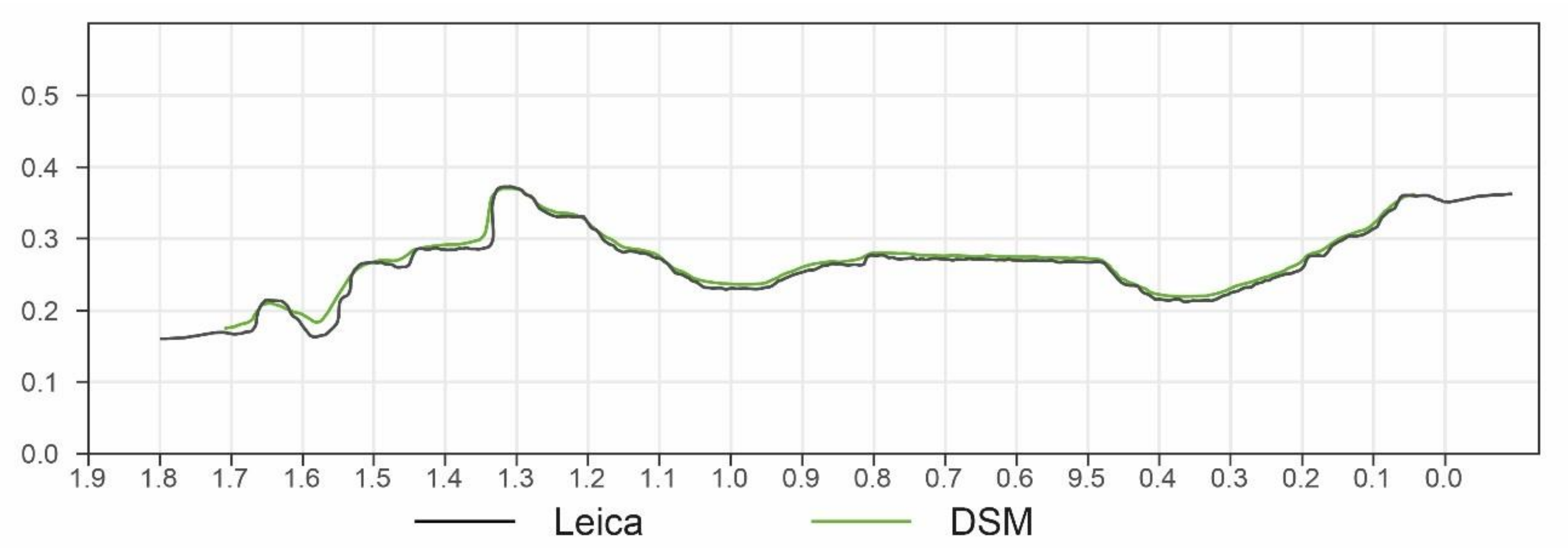

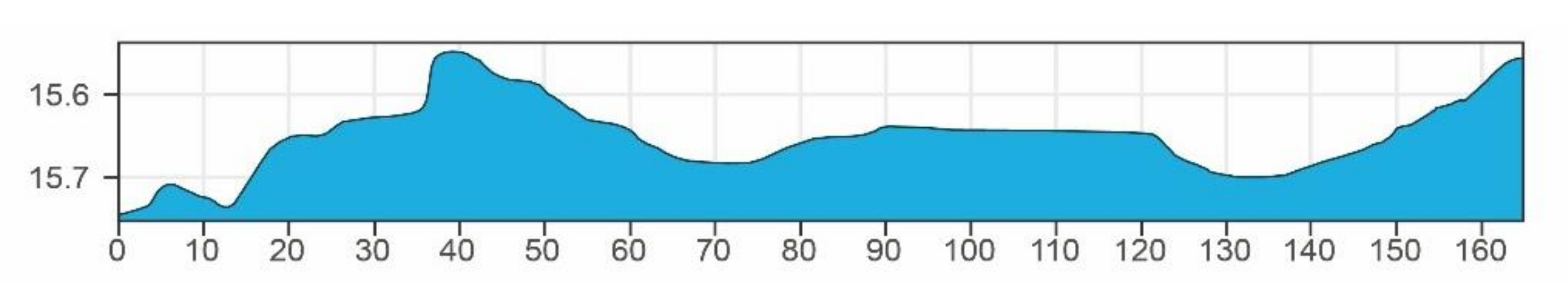

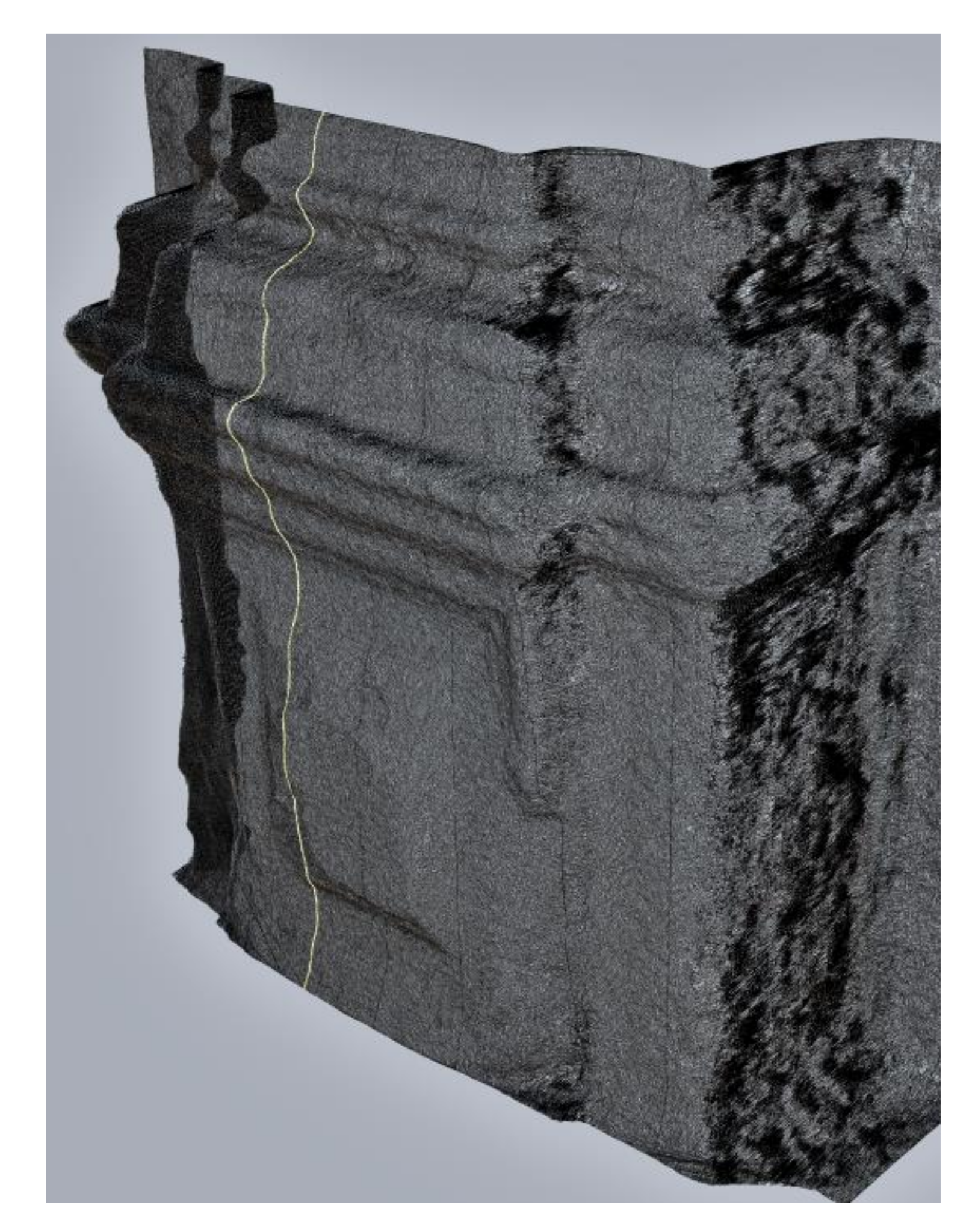

4.2. Comparison with TLS

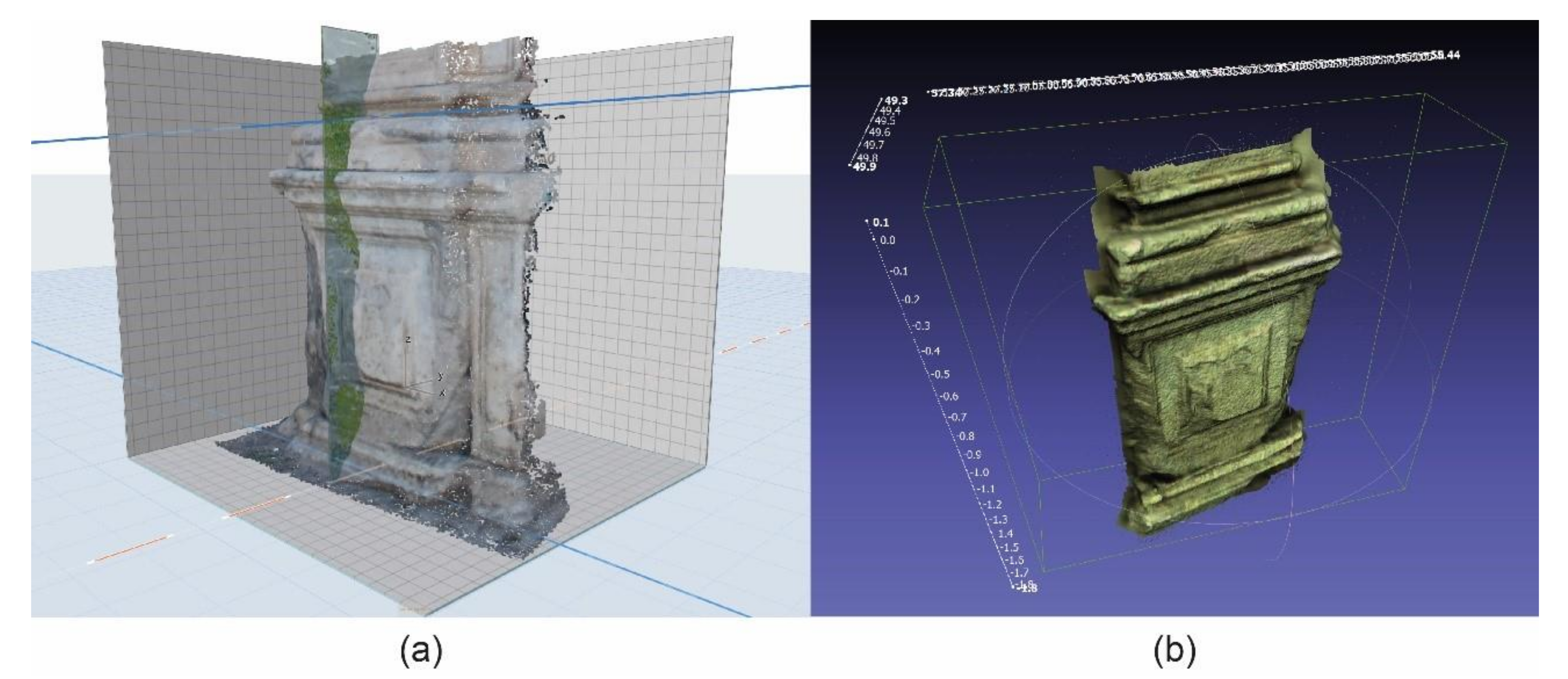

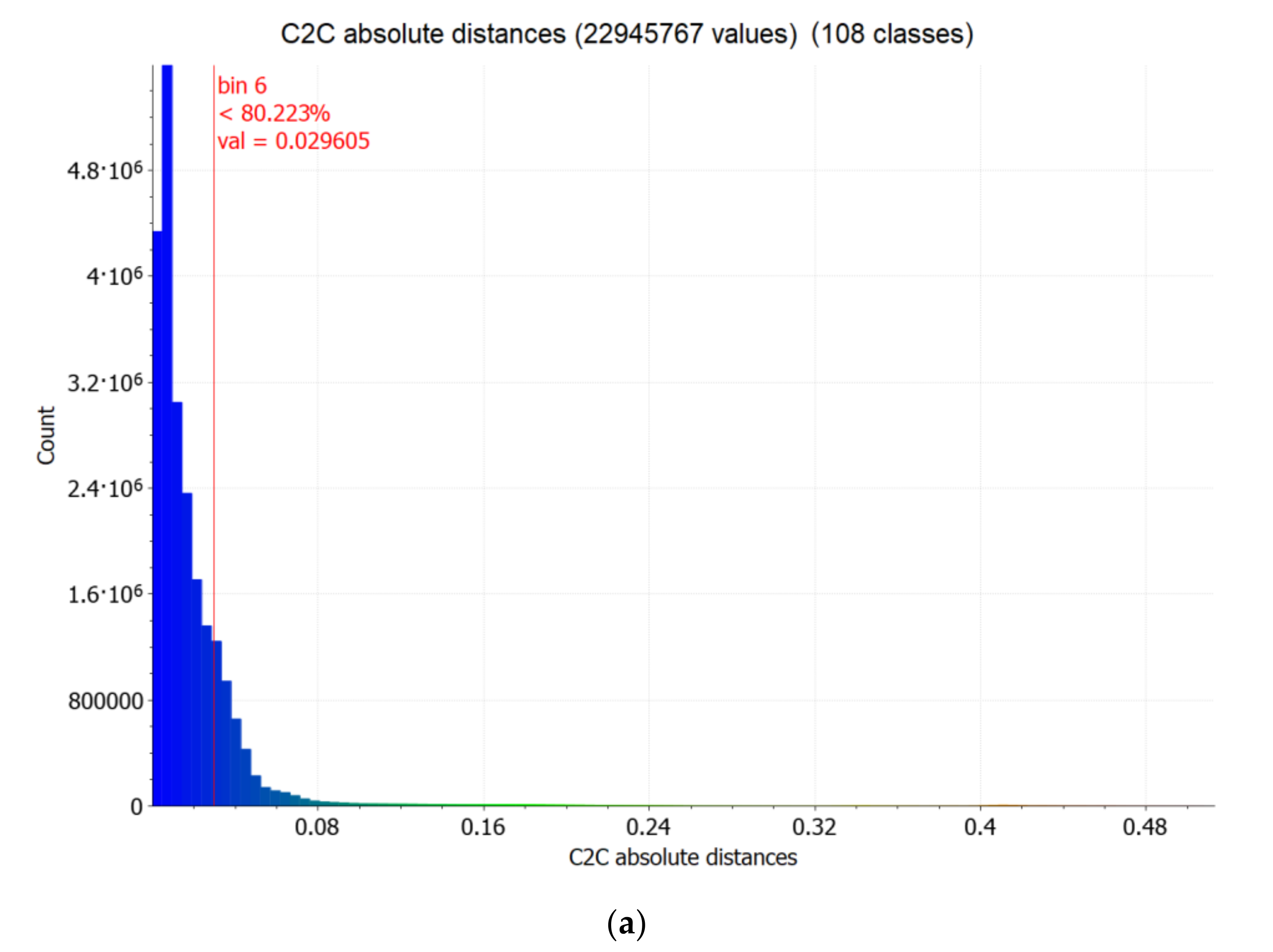

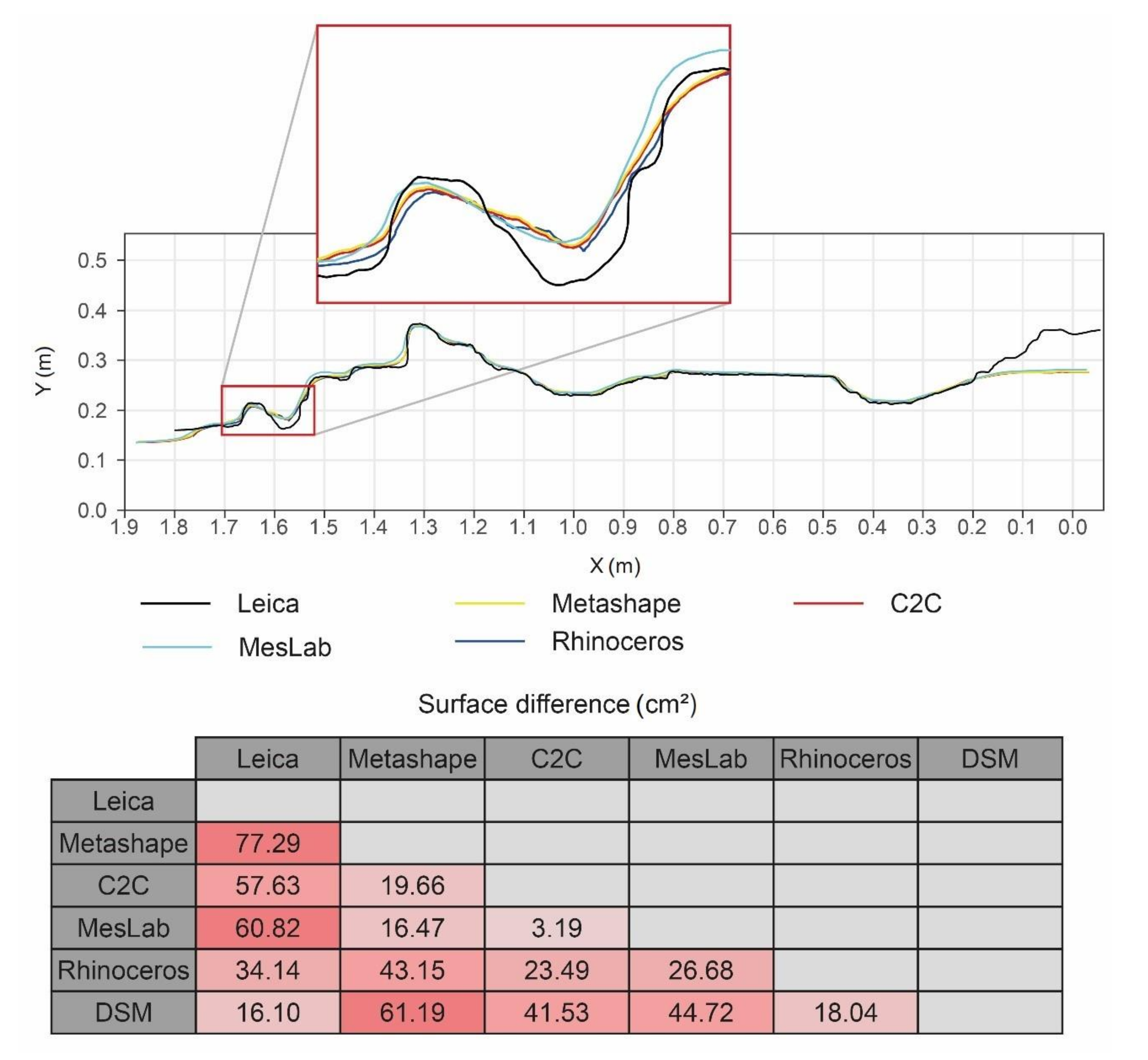

4.3. Model Geometry Analysis

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| TLS | Terrestrial Laser Scanning |
| SfM | Structure-from-Motion |
| MDCS | Massive Data Capture Systems |
| UAVs | Unmanned Aerial Vehicles |
| DSMs | Digital Surface Models |
| ICOMOS | International Council on Monuments and Sites |
| ISPRS | International Society for Photogrammetry and Remote Sensing |
| BIM | Building Information Modeling |
| HBIM | Historic Building Information Modeling |
| GCPs | Ground Control Points |
| DBAT | Damped Bundle Adjustment Toolbox |
| RPAS | Remotely Piloted Aircraft Systems |
| DSLR | Digital Single Lens Reflex |
| HDR | High Dynamic Range |
| RMS | Root-Mean-Square |
| RMSE | Root-Mean-Square Error |
| MVS | Multi-View Stereo |
| EXIF | Exchangeable Image File Format |

Symbols

| Symbol | Description |
|---|---|
| | Euclidean distance |
| w | Sensor width |
| W | Photograph width |
| H | Distance from camera to object |
| σ | Standard deviation |
| n | Sample size |
| x, y | Observed values |
| | Mean value |


| | Reflex Digital Camera Nikon D80 | Reflex Digital Camera Canon E600D | Reflex Digital Camera Canon E650D |
|---|---|---|---|
| No. of images | 496 | 65 | 65 |
| Resolution | 12 MP | 18 MP | 18 MP |
| Altitude (m) | 9 (relative to start altitude) | 9 (relative to start altitude) | 9 (relative to start altitude) |
| ISO | 200 | 400 | 400 |
| Sensor | CMOS, APS-C (23.5 × 15.6 mm²) | CMOS, APS-C (14 × 22.3 mm²) | CMOS, APS-C (14 × 22.3 mm²) |
| Exposure (fixed) | 1/400 s, f/3.5 | 1/400 s, f/3.5 | 1/400 s, f/3.5 |
| Image stabilizer | Optical | Optical | Optical |

| Step | Parameter | Selection |
|---|---|---|
| Match photos (chunk). Align cameras | Accuracy | High (full-resolution image files) |
| | Generic/Reference preselection | Yes/Yes |
| | Key point limit | 40,000 |
| | Tie point limit | 4000 |
| | Adaptive camera model fitting | Yes |
| Build dense cloud | Quality | High |
| | Depth filtering | Mild |
| | Calculate point colors | Yes |
| Build mesh | Source data | Depth maps |
| | Quality | High |
| | Face count | High |
| Build DEM | Projection | Type: Geographic |
| | Source data | Dense cloud |
| | Interpolation | Enabled |

| Experimental Surveys | Camera Stations (No.) | Point Cloud Layout | Average Acquisition Distance (m) | Reprojection Error (pix) | Ground Resolution (mm/pix) | DEM Resolution (mm/pix) | DEM Point Density (points/cm²) |
|---|---|---|---|---|---|---|---|
| Survey 1 | 44 | Uniform | 8.87 | 0.436 | 3.45 | 2.94 | 2.138 |
| Survey 2 | 69 | Uniform | 7.63 | 0.594 | 3.53 | 3.77 | 2.073 |
| Survey 3 | 43 | Less Uniform | 6.53 | 0.368 | 1.21 | 2.42 | 3.284 |
| Survey 4 | 79 | Disorderly | 6.85 | 0.477 | 3.24 | 3.24 | 12.624 |
| Survey 5 | 27 | Uniform | 12.75 | 0.475 | 2.32 | 2.69 | 4.699 |
| Survey 6 | 28 | Less Uniform | 12.66 | 0.468 | 2.33 | 2.69 | 4.664 |
| Survey 7 | 10 | Disorderly | 15.24 | 0.413 | 3.45 | 4.24 | 2.599 |
| Survey 8 | 135 | Uniform | 11.70 | 0.566 | 3.9 | 3.58 | 16.862 |
| Survey 9 | 45 | Uniform | 11.85 | 0.522 | 3.94 | 7.81 | 16.326 |
| Survey 10 | 81 | Uniform | 11.94 | 0.559 | 3.94 | 7.88 | 16.503 |
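
The ground resolution column can be related to the symbols w, W and H through the usual pinhole-camera relation, in which the footprint of one pixel on the object equals the pixel pitch (w/W) scaled by the ratio of object distance to focal length. The following is a minimal sketch of that relation, not the authors' procedure. The focal length is not listed among the symbols, so it is an assumption here, as are the function name and the example image width and focal length.

```python
def ground_sampling_distance(sensor_width_mm, image_width_px,
                             distance_m, focal_length_mm):
    """Return the object-space size of one pixel in mm/pix (pinhole model).

    sensor_width_mm  -- sensor width (w)
    image_width_px   -- photograph width in pixels (W)
    distance_m       -- distance from camera to object (H)
    focal_length_mm  -- lens focal length (assumed; not in the symbol list)
    """
    values = (sensor_width_mm, image_width_px, distance_m, focal_length_mm)
    if min(values) <= 0:
        raise ValueError("all inputs must be positive")
    pixel_pitch_mm = sensor_width_mm / image_width_px   # size of one pixel on the sensor
    scale = (distance_m * 1000.0) / focal_length_mm     # object distance / focal length
    return pixel_pitch_mm * scale


# Hypothetical inputs for illustration: 23.5 mm sensor width, 4000 px image
# width, 8.87 m acquisition distance, 18 mm focal length.
gsd = ground_sampling_distance(23.5, 4000, 8.87, 18.0)
print(f"Ground sampling distance: {gsd:.2f} mm/pix")
```

Because the relation is linear in H, doubling the acquisition distance doubles the pixel footprint when the other parameters are unchanged.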

| Experimental Surveys | Standard Deviation (σ) | RMS (m) | Min. Distance (m) | Max. Distance (m) | Average Distance (m) | Estimated Standard Error (m) | RMS Adjustment (m) |
|---|---|---|---|---|---|---|---|
| Survey 1 | 0.0536 | 0.0534 | 0 | 0.3718 | 0.0191 | 0.0831 | 0.0750 |
| Survey 2 | 0.2425 | 0.0663 | 0 | 1.4957 | 0.0848 | 0.0830 | 0.0161 |
| Survey 3 | 0.0331 | 0.3461 | 0 | 0.4985 | 0.0554 | 0.0830 | 0.0076 |
| Survey 4 | 0.0494 | 0.0575 | 0 | 0.8923 | 0.0494 | 0.0828 | 0.0055 |
| Survey 5 | 0.2810 | 0.0636 | 0 | 2.1283 | 0.1058 | 0.0830 | 0.0046 |
| Survey 6 | 0.0716 | 0.3051 | 0 | 0.7495 | 0.0338 | 0.0838 | 0.0046 |
| Survey 7 | 0.0572 | 0.1478 | 0 | 0.6893 | 0.0186 | 0.0836 | 0.0074 |
| Survey 8 | 0.2366 | 0.2476 | 0 | 1.4957 | 0.0814 | 0.0831 | 0.0069 |
| Survey 9 | 0.1035 | 0.0899 | 0 | 0.8782 | 0.0716 | 0.0815 | 0.0153 |
| Survey 10 | 0.2303 | 0.0764 | 0 | 1.4849 | 0.0895 | 0.0820 | 0.0076 |
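
The descriptive columns of this table (standard deviation, RMS, minimum, maximum and average distance) are standard summaries of a set of point-to-reference distances. The sketch below shows how such summaries could be computed from a list of distances. It is illustrative only: the function name and sample values are hypothetical, the use of the sample standard deviation (n − 1 denominator) is an assumption because the estimator used in the study is not stated here, and the estimated standard error and RMS adjustment columns are not reproduced.

```python
import math


def distance_statistics(distances):
    """Summarize point-to-reference distances (all values in metres)."""
    n = len(distances)
    if n < 2:
        raise ValueError("need at least two distances")
    mean = sum(distances) / n
    # Sample standard deviation (n - 1 in the denominator); this choice is an assumption.
    std = math.sqrt(sum((d - mean) ** 2 for d in distances) / (n - 1))
    # Root-mean-square of the raw distances, not of deviations from the mean.
    rms = math.sqrt(sum(d * d for d in distances) / n)
    return {
        "std": std,
        "rms": rms,
        "min": min(distances),
        "max": max(distances),
        "mean": mean,
    }


# Hypothetical distances in metres, for illustration only (not survey data).
sample = [0.00, 0.01, 0.02, 0.03, 0.04]
stats = distance_statistics(sample)
for name, value in stats.items():
    print(f"{name}: {value:.4f}")
```

Reporting RMS alongside the mean and standard deviation is useful because RMS grows with both systematic offset and scatter, whereas the standard deviation reflects scatter alone.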

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Moyano, J.; Nieto-Julián, J.E.; Bienvenido-Huertas, D.; Marín-García, D. Validation of Close-Range Photogrammetry for Architectural and Archaeological Heritage: Analysis of Point Density and 3D Mesh Geometry. Remote Sens. 2020, 12, 3571. https://doi.org/10.3390/rs12213571
