Color and Laser Data as a Complementary Approach for Heritage Documentation

Abstract

1. Introduction


- Proposes a method of visibility analysis to effectively filter out occluded areas within complex and massive structures.


- Texture mapping and laser-based true-orthophoto approaches for complex buildings.


- Multi-scalar approach for heritage documentation.

2. Related Works

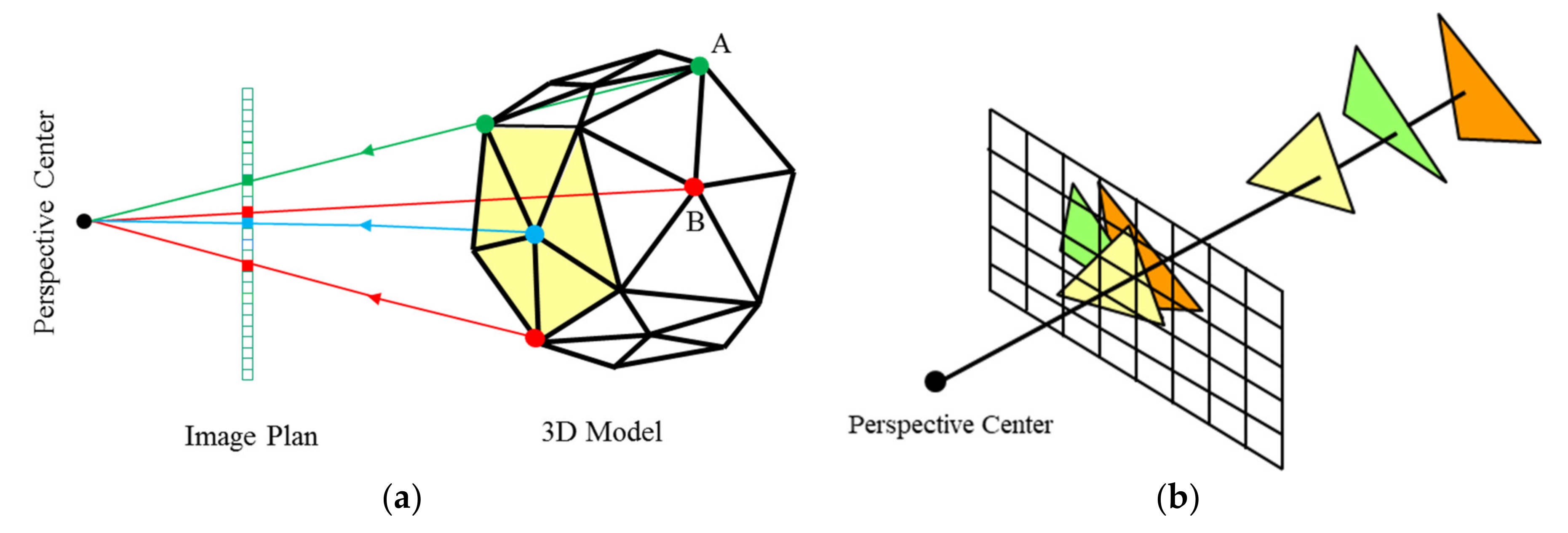

2.1. Texture Mapping

2.2. True Orthophoto

3. Data Collection and Sensors Configuration

3.1. Data Collection

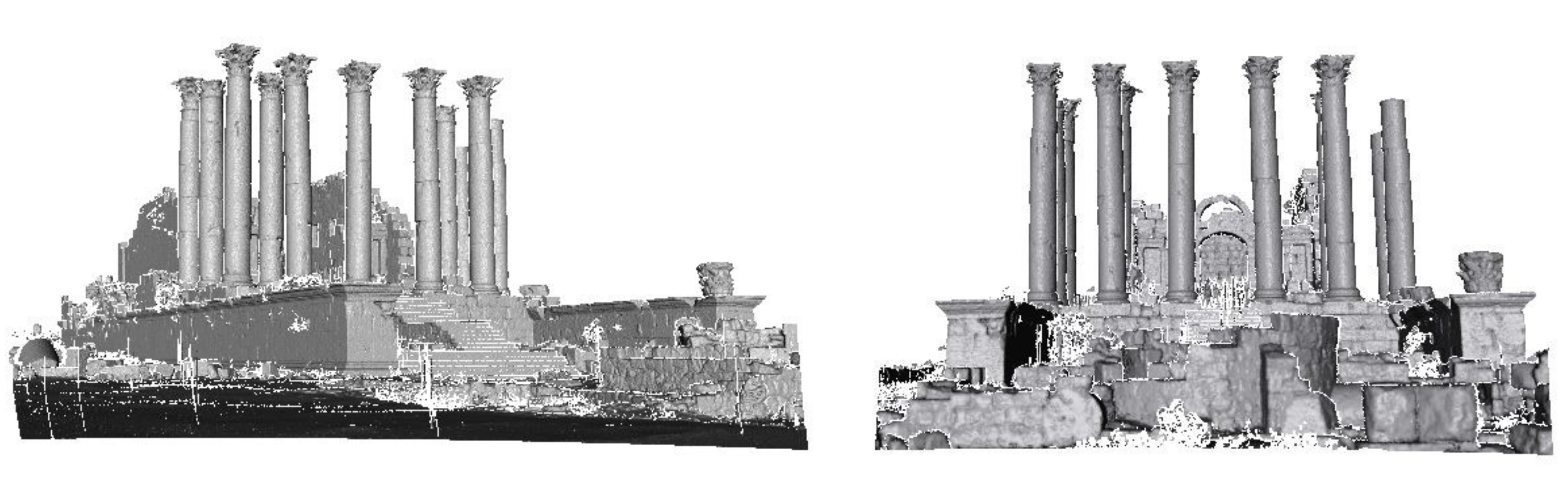

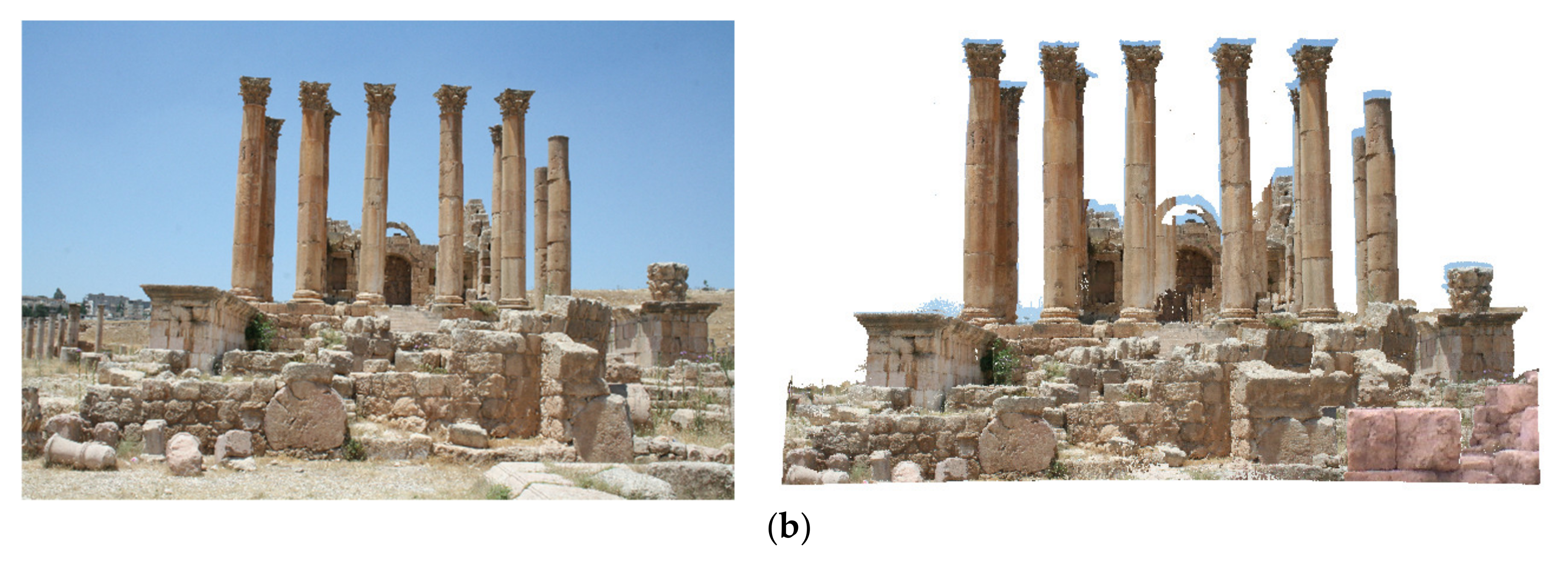

3.1.1. Gerasa

3.1.2. Qusayr ‘Amra

3.2. Sensors Applied

4. Data Fusion and Visibility Analysis

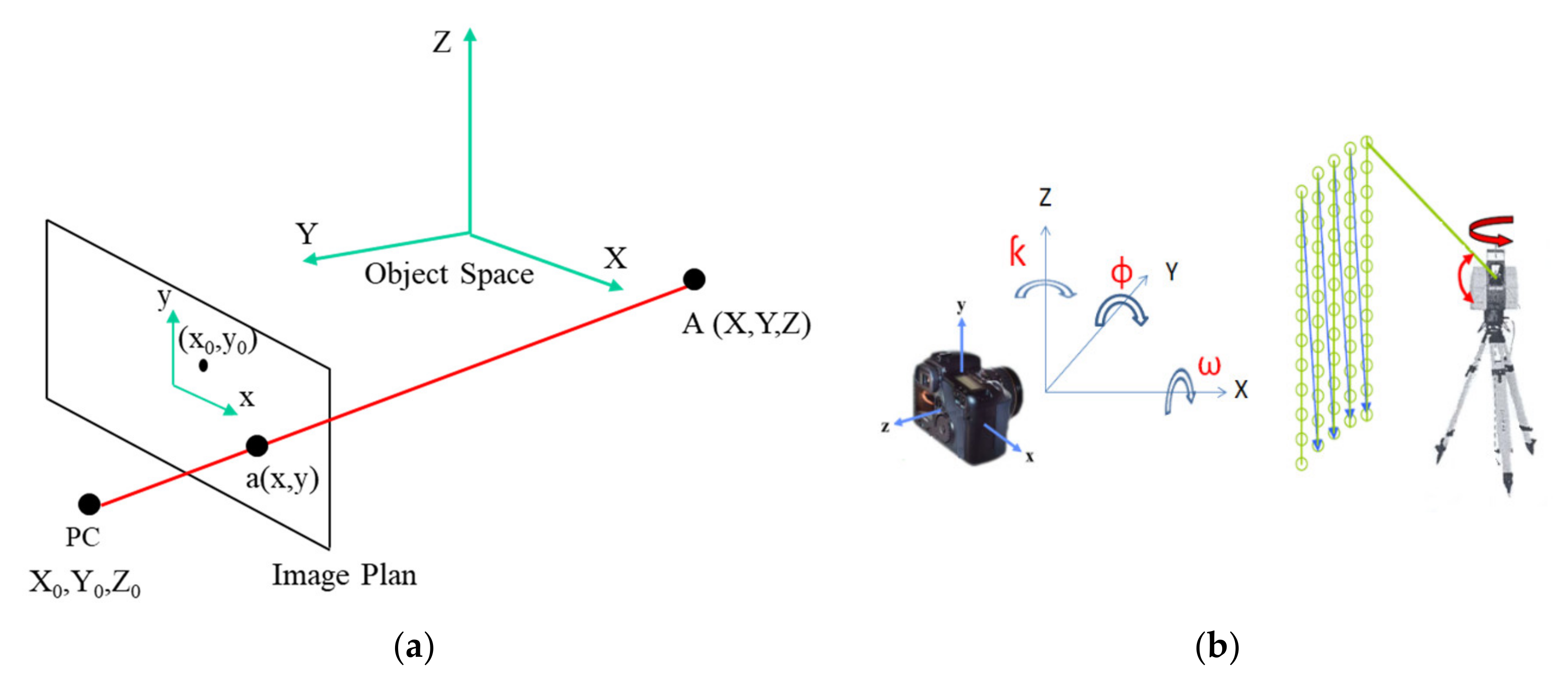

4.1. Camera Mathematical Model

4.2. Visibility Algorithm

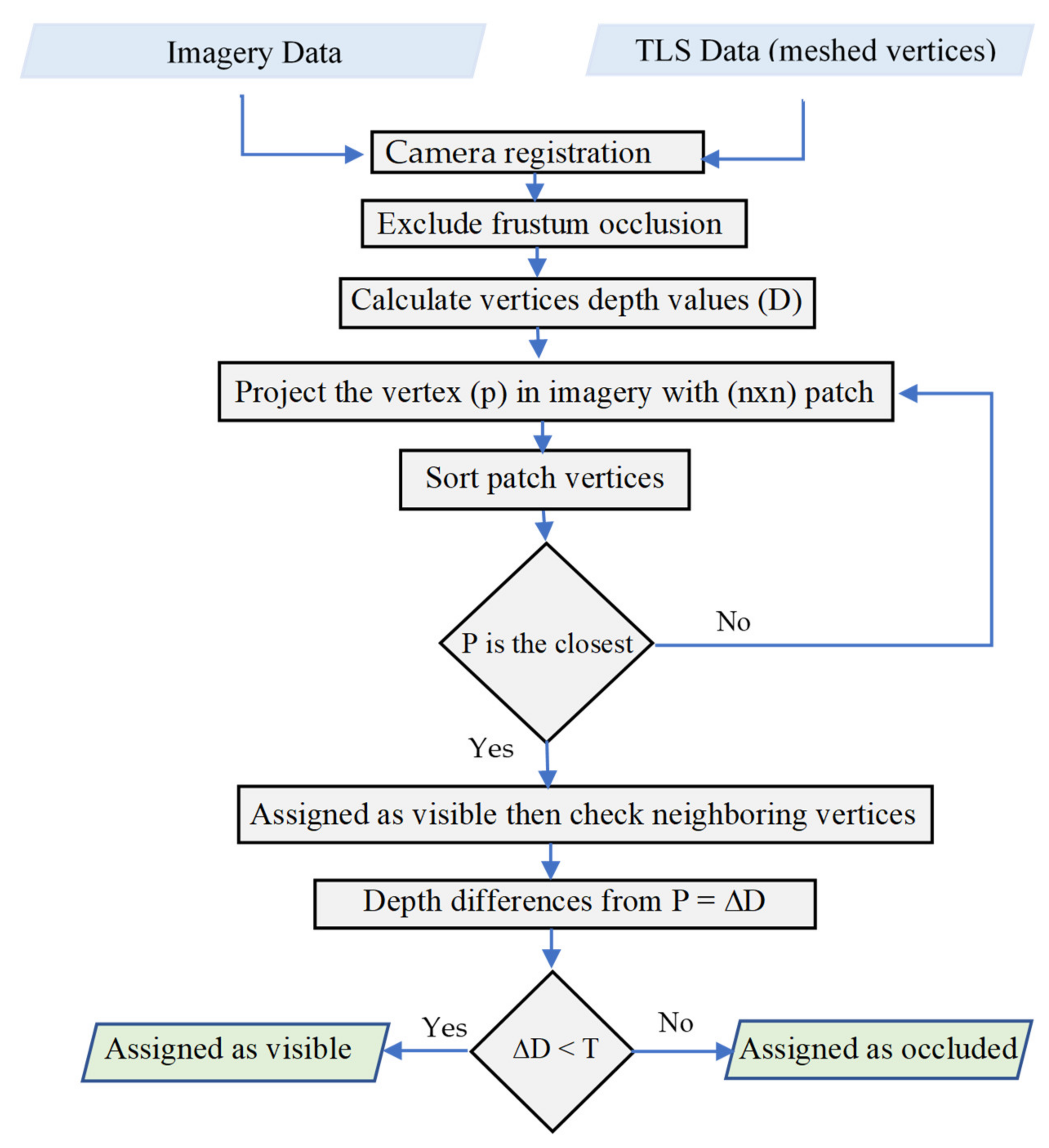

| Algorithm 1 The proposed visibility analysis |

| Input: Triangular vertices, selected scene image, image size in pixels, patch width (n) in pixels, triangle length T (mm, cm, or m) based on the model unit. |

| Output: Labeling the triangular vertices as visible or occluded. |

| 1. Considering the meshed vertices model in ASCII or WRL format. |

| 2. Calculate camera registration parameters. |

| 3. Checks the frustum occlusion using image size. |

| 4. The triangles vertices within camera view are stored in the matrix with their depth values D from the perspective center PC. |

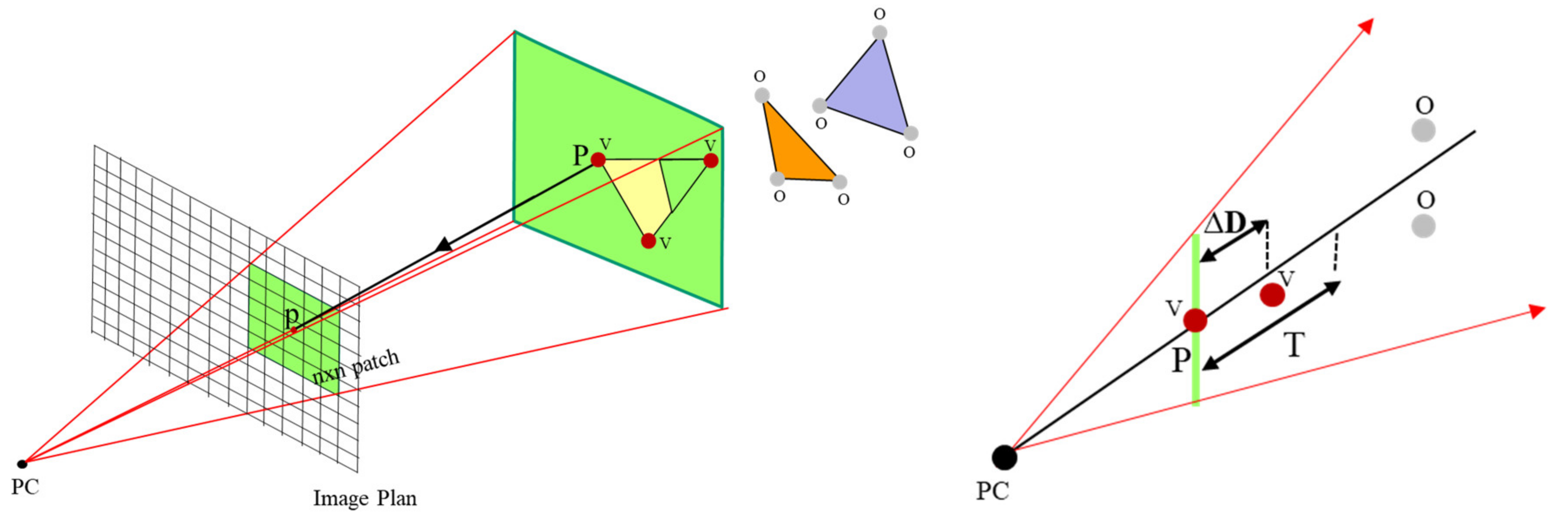

| 5. Project the vertex P to the image plane and set the patch (nxn) centered at the image point p. |

| 6. Sort the vertices within the patch depending on the D values and calculate their depth differences ∆D from P. |

| 7. The vertex P is assigned as visible if it is the closest to the image plane, otherwise it is assigned as occluded. |

| 8. If the vertex is assigned as visible, the neighboring vertices within the patch would be assigned as visible if ∆D does not exceed threshold value T. |

| 9. The process repeated for non-labeled vertices. |

| 10. All visible vertices will get their ID required for further texture processing and true orthophoto mapping. |
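Algorithm 1 can be restated as the following Python sketch. It is not the author's implementation and rests on several assumptions that do not come from the paper:

- The camera registration (step 2) is supplied as a rotation matrix `R`, a perspective center `C`, a focal length `f` in pixels and a principal point `(cx, cy)`, for a distortion-free pinhole camera.
- Vertices are visited in input order.
- Vertices outside the frustum are reported as not visible.
- The names `project` and `label_visibility` are hypothetical.

To keep each patch query cheap, image points are bucketed into n × n cells, so a query only inspects the 3 × 3 block of cells around a point.

```python
import math
from collections import defaultdict


def project(P, R, C, f, cx, cy):
    """Hypothetical distortion-free pinhole model (an assumption).
    Returns (x, y, D): image coordinates in pixels and the distance D from
    the perspective center C, or None if P lies behind the camera."""
    d = [P[k] - C[k] for k in range(3)]
    xc, yc, zc = (sum(R[r][k] * d[k] for k in range(3)) for r in range(3))
    if zc <= 0:
        return None
    return cx + f * xc / zc, cy + f * yc / zc, math.dist(P, C)


def label_visibility(vertices, R, C, f, cx, cy, width, height, n, T):
    """Return one boolean per vertex: True = visible, False = occluded/unseen."""
    # Steps 2-4: project every vertex and keep those inside the image frame.
    proj = {}
    for vid, P in enumerate(vertices):
        p = project(P, R, C, f, cx, cy)
        if p is not None and 0 <= p[0] < width and 0 <= p[1] < height:
            proj[vid] = p

    # Bucket image points into n x n cells; an n x n patch centred on a point
    # can only reach the 3 x 3 block of cells around it.
    cells = defaultdict(list)
    for vid, (x, y, _) in proj.items():
        cells[(int(x // n), int(y // n))].append(vid)

    def patch(vid):
        # Step 5: all vertices whose image points fall in the n x n patch.
        x, y, _ = proj[vid]
        gx, gy = int(x // n), int(y // n)
        return [w for i in (gx - 1, gx, gx + 1) for j in (gy - 1, gy, gy + 1)
                for w in cells.get((i, j), ())
                if abs(proj[w][0] - x) <= n / 2 and abs(proj[w][1] - y) <= n / 2]

    labels = {}
    for vid in proj:                       # step 9: visit unlabeled vertices
        if vid in labels:
            continue
        D_P = proj[vid][2]
        members = sorted(patch(vid), key=lambda w: proj[w][2])  # step 6
        if D_P <= proj[members[0]][2]:     # step 7: P is the closest vertex
            labels[vid] = True
            for w in members:              # step 8: depth-consistent neighbours
                if w not in labels and proj[w][2] - D_P <= T:
                    labels[w] = True
        else:
            labels[vid] = False
    # Vertices never labeled (outside the frustum) are reported as not visible;
    # the indices of the True entries are the visible IDs of step 10.
    return [labels.get(vid, False) for vid in range(len(vertices))]
```

Usage example: with the camera at the origin looking along +Z, a vertex lying behind another on the same ray is labeled occluded. A vertex 0.2 units deeper inside the same patch is labeled visible when T = 0.5. The paper does not fix a processing order, and the order determines which vertex seeds each patch.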

5. Laser Scanning Aided by Photogrammetry

5.1. Texture Mapping of the Laser Data

| Algorithm 2: Texture mapping of a triangular mesh |
| --- |
| Input: triangle vertices as a text or WRL file, and selected images of the scene. |
| Output: textured model in WRL format. |
| 1. Load the triangle vertex coordinates (Xi, Yi, Zi). |
| 2. Calculate the camera registration parameters. |
| 3. Filter out occluded triangle vertices using the proposed visibility algorithm. |
| 4. Assign every visible vertex the color G(xi, yi) at its image coordinates (xi, yi); occluded vertices receive no texture value. |
| 5. Store the data in WRL format, in which the color is interpolated across the mesh. |
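The following is a minimal sketch of steps 4 and 5 of Algorithm 2. It assumes that the registration and visibility steps have already produced, for each vertex, its image coordinates and a visible/occluded flag.

- **Image and sampling:** the image is modeled as a list of rows of (r, g, b) tuples, sampled at the nearest pixel.
- **Output format:** a VRML97 (`.wrl`) `IndexedFaceSet` with per-vertex colors, which viewers interpolate across each triangle.
- **Placeholder color:** VRML expects a color for every vertex, so occluded vertices are written with a neutral gray placeholder.

The placeholder choice and the function names `sample_color`, `texture_vertices` and `to_vrml` are assumptions, not the paper's code.

```python
def sample_color(image, x, y):
    """Nearest-neighbour lookup in an image stored as rows of (r, g, b) tuples."""
    row = min(max(int(round(y)), 0), len(image) - 1)
    col = min(max(int(round(x)), 0), len(image[0]) - 1)
    return image[row][col]


def texture_vertices(image_points, visible, image):
    """Step 4: colour G(x, y) for visible vertices, None for occluded ones."""
    return [sample_color(image, x, y) if vis else None
            for (x, y), vis in zip(image_points, visible)]


def to_vrml(vertices, faces, colors, placeholder=(128, 128, 128)):
    """Step 5: VRML97 IndexedFaceSet with one colour per vertex.
    Vertices without a texture value get the (assumed) placeholder colour."""
    pts = ",\n".join(f"      {x} {y} {z}" for x, y, z in vertices)
    idx = ",\n".join(f"      {a}, {b}, {c}, -1" for a, b, c in faces)
    cols = ",\n".join("      " + " ".join(f"{v / 255:.4f}" for v in (c or placeholder))
                      for c in colors)
    return ("#VRML V2.0 utf8\n"
            "Shape {\n"
            "  geometry IndexedFaceSet {\n"
            f"    coord Coordinate {{ point [\n{pts}\n    ] }}\n"
            f"    coordIndex [\n{idx}\n    ]\n"
            f"    color Color {{ color [\n{cols}\n    ] }}\n"
            "    colorPerVertex TRUE\n"
            "  }\n"
            "}\n")
```

Design note: with `colorPerVertex TRUE` and no `colorIndex`, VRML97 indexes colors through `coordIndex`. That is why the color list must have exactly one entry per vertex.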

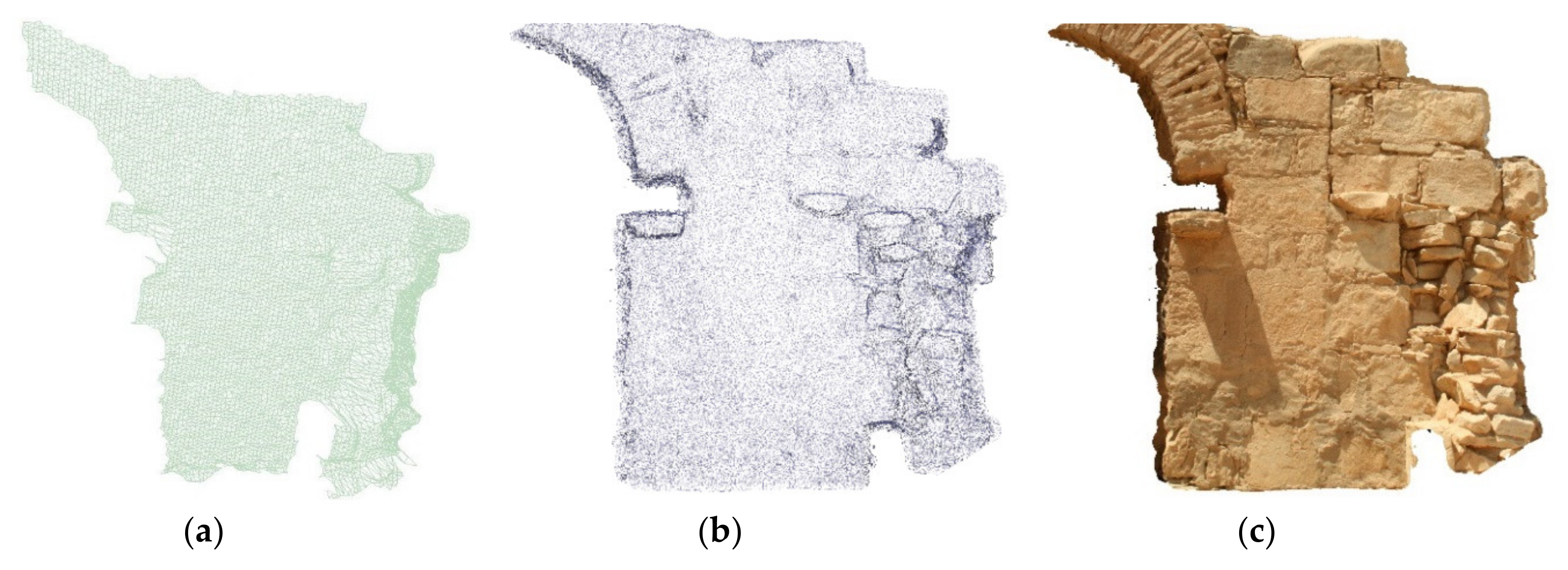

5.2. Multi-Scalar Recording Method

6. Photogrammetry Aided by Laser Scanning

| Algorithm 3: Laser-based true orthophoto |
| --- |
| Input: triangle vertices as a text file, and selected images of the scene. |
| Output: true orthophoto in JPG format. |
| 1. Load the triangle vertex coordinates (Xi, Yi, Zi). |
| 2. Calculate the camera registration parameters. |
| 3. Generate a regular DSM grid from the model vertices over the mapped area in (Xi, Yi). |
| 4. Interpolate the depth values (Zi) in the DSM. |
| 5. Re-project each grid cell, with its (Xi, Yi, Zi), into the image using the camera model. |
| 6. Filter out occluded cells using the proposed visibility algorithm. |
| 7. For each visible cell, set the grey value G(x, y) at pixel (Xi, Yi) of the orthophoto. |
| 8. For each occluded cell, set a fixed grey value G(0, 0) (e.g., blue in our example) at pixel (Xi, Yi) of the orthophoto. |
| 9. Store the output in JPG format. |
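The steps of Algorithm 3 can be sketched in Python as below. The sketch rests on assumptions that are not taken from the paper:

- **Interpolation:** the DSM height at each grid node comes from inverse-distance weighting of the k nearest vertices. The paper does not name its interpolator.
- **Camera model:** it is passed in as a function `project(X, Y, Z)` that returns `(x, y, depth)`.
- **Visibility:** a cell is visible if its depth is within T of the nearest cell projecting into the same n × n patch. This is a simplified, non-propagating form of the patch test.

Unseen or occluded cells keep a fixed fill color (blue, as in the paper's example). Writing the JPG file (step 9) is left to an imaging library. The names `build_dsm` and `true_orthophoto` are hypothetical.

```python
import math
from collections import defaultdict


def build_dsm(vertices, cell, k=4):
    """Steps 3-4: regular grid over the (X, Y) extent of the vertices, with Z
    interpolated by inverse-distance weighting (IDW) of the k nearest vertices."""
    xs, ys = [v[0] for v in vertices], [v[1] for v in vertices]
    x0, y0 = min(xs), min(ys)
    ncols = int((max(xs) - x0) / cell) + 1
    nrows = int((max(ys) - y0) / cell) + 1
    dsm = []
    for r in range(nrows):
        row = []
        for c in range(ncols):
            X, Y = x0 + c * cell, y0 + r * cell
            near = sorted(vertices, key=lambda v: (v[0] - X) ** 2 + (v[1] - Y) ** 2)[:k]
            w_sum = z_sum = 0.0
            for vx, vy, vz in near:
                d = math.hypot(vx - X, vy - Y)
                if d < 1e-12:              # node coincides with a vertex
                    w_sum, z_sum = 1.0, vz
                    break
                w_sum += 1.0 / d
                z_sum += vz / d
            row.append((X, Y, z_sum / w_sum))
        dsm.append(row)
    return dsm


def true_orthophoto(dsm, project, image, n=3, T=0.05, fill=(0, 0, 255)):
    """Steps 5-8. Row r of the result matches row r of the DSM (increasing Y);
    flip it vertically for a north-up image."""
    h, w = len(image), len(image[0])
    proj = {}
    for r, row in enumerate(dsm):           # step 5: re-project every cell
        for c, (X, Y, Z) in enumerate(row):
            p = project(X, Y, Z)
            if p is not None and 0 <= p[0] < w and 0 <= p[1] < h:
                proj[(r, c)] = p
    cells = defaultdict(list)              # bucket image points into n x n cells
    for key, (x, y, _) in proj.items():
        cells[(int(x // n), int(y // n))].append(key)
    ortho = [[fill] * len(row) for row in dsm]  # step 8 default: fixed fill colour
    for (r, c), (x, y, depth) in proj.items():
        gx, gy = int(x // n), int(y // n)
        nearest = min(proj[o][2]
                      for i in (gx - 1, gx, gx + 1) for j in (gy - 1, gy, gy + 1)
                      for o in cells.get((i, j), ())
                      if abs(proj[o][0] - x) <= n / 2 and abs(proj[o][1] - y) <= n / 2)
        if depth - nearest <= T:           # step 6: cell passes the patch test
            ortho[r][c] = image[int(y)][int(x)]  # step 7: copy G(x, y)
    return ortho
```

Design note: because visibility is tested only at grid nodes, a cell near the base of a tall feature can be marked visible when its projection lands just outside the occluder's patch. A finer grid or a wider patch reduces this effect.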

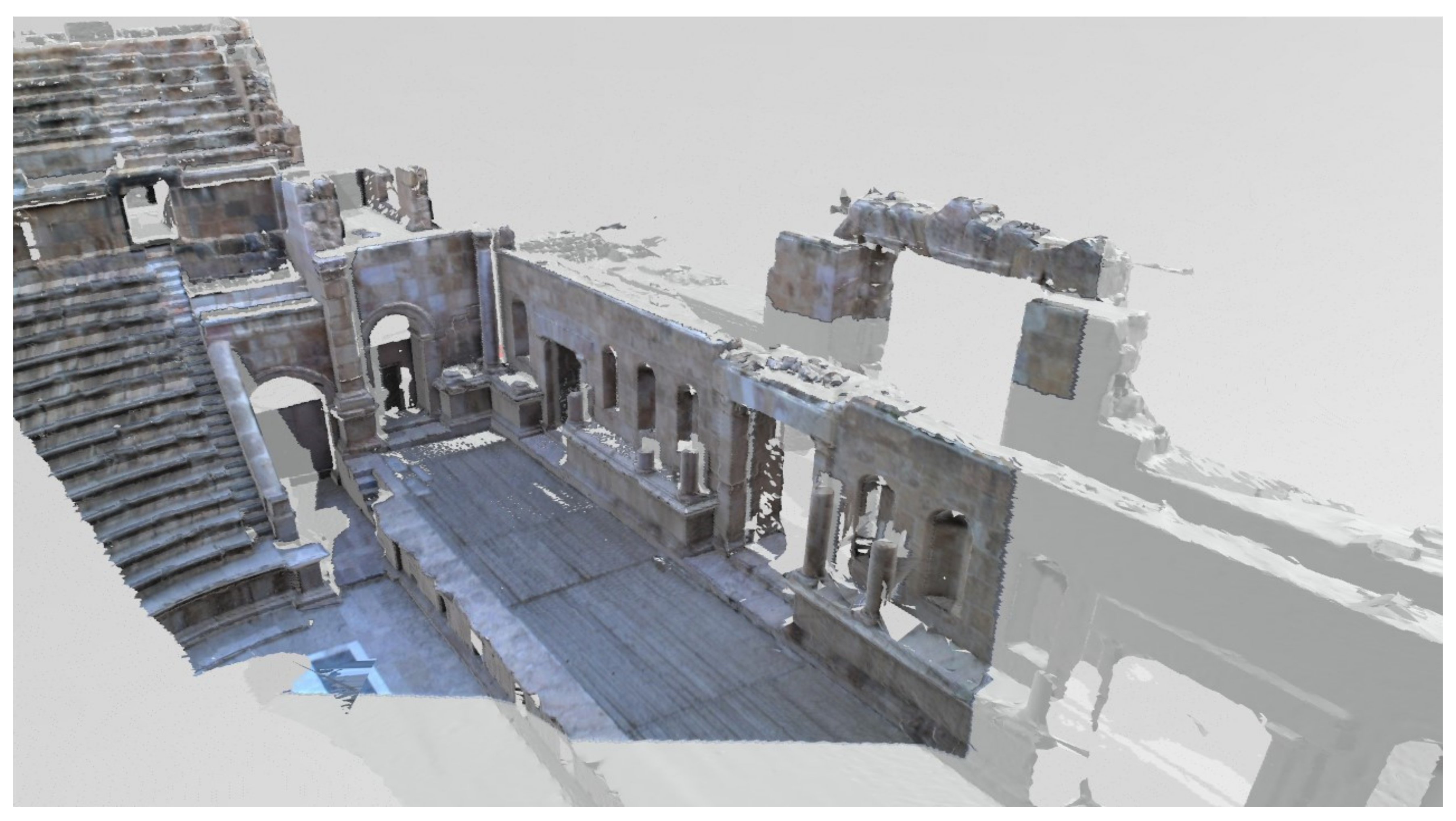

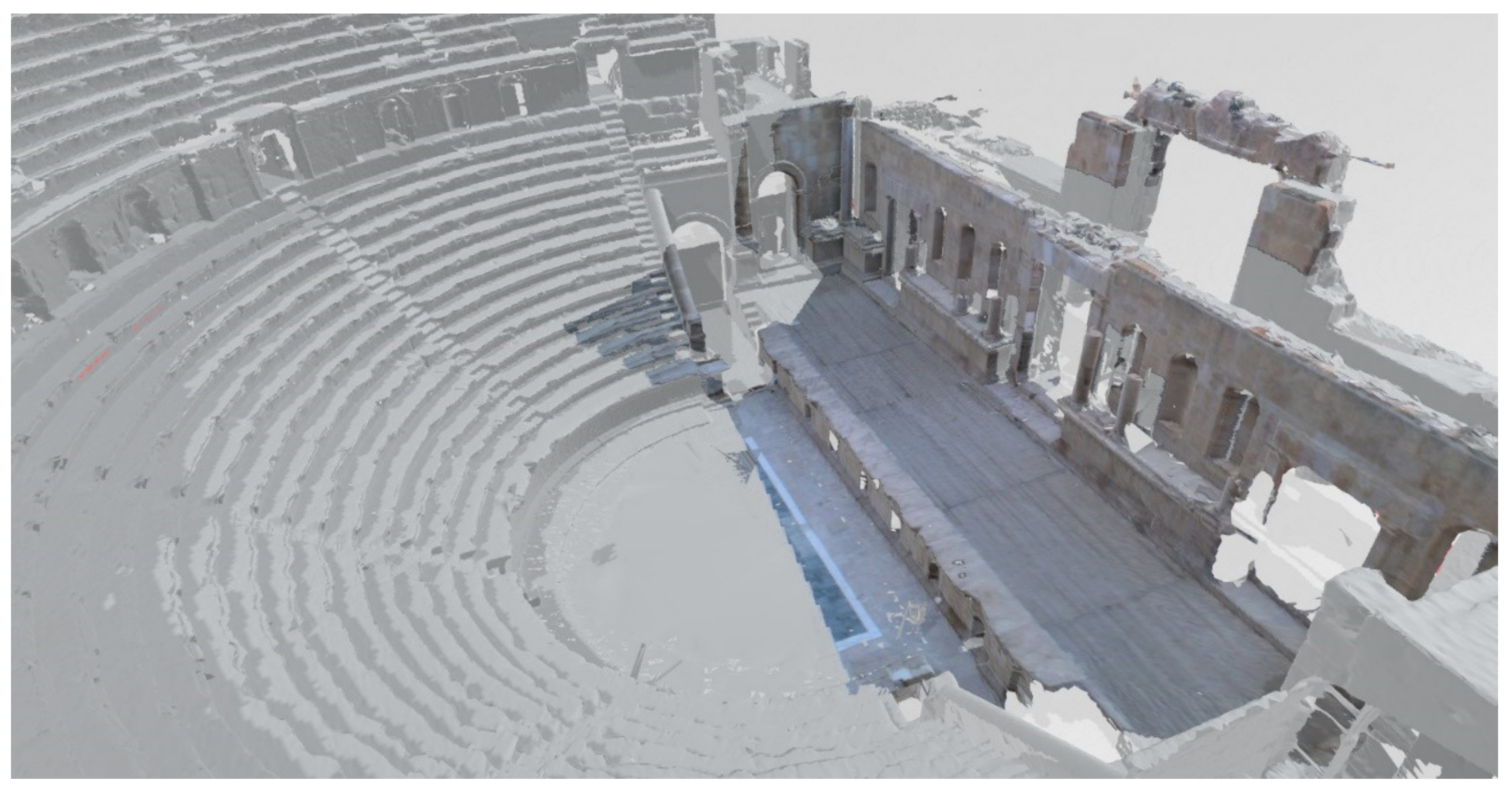

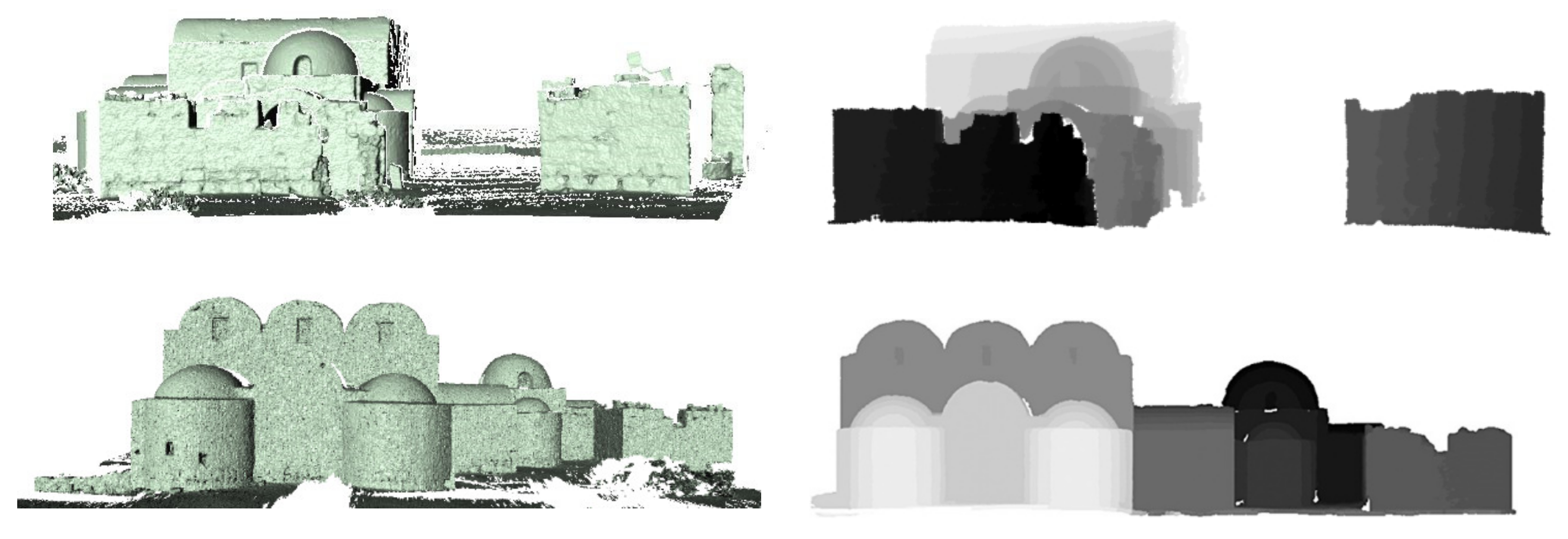

7. Discussion

- The results in Figure 13 and Figure 20b show a high degree of completeness and correctness in occlusion detection. This highlights the considerable advantages of the proposed algorithm when dealing with complex scenes and massive numbers of triangular facets. The visibility analysis needs no a priori knowledge of the occluding surfaces (i.e., information on triangle connectivity), and mesh holes do not affect its performance.

- The above-mentioned Z-buffer and ray-tracing methods have drawbacks and perform poorly on complex surfaces [56]. They incur a high computational cost from pixel-by-pixel neighborhood searching, which additionally requires iterative processing to resolve the intersection points between the rays and the DSM. In comparison, the proposed approach identifies occluded regions without iterative computation, relying instead on a patch scan. Moreover, the new technique detects occluded areas with high precision and a more competitive computing time.

- Some other methods use a 2D voxelization visibility approach in the texture-mapping process to reduce computing time [63,67]. The image plane is subdivided into 2D grid voxels, and each 2D voxel is assigned a list of triangles. The proposed method differs in that the size and boundaries of the search are computed from a patch guided by the model resolution, which is typically lower than the image resolution.

- In the methods developed in this paper, the occlusion detection algorithm is integrated into, and performed as part of, the texture-mapping and true-orthophoto algorithms. Both algorithms can also perform spectral mapping flexibly, without handling visibility, in some situations. The true-orthophoto algorithm then reduces to a differential rectification on a flat surface, as depicted in Figure 19. The texture-mapping algorithm can be applied directly when the camera has the same view as the model, as depicted in Figure 10.
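For comparison, the 2D voxelization idea attributed to [63,67] subdivides the image plane into a grid and gives each cell a list of triangles. It can be sketched as follows. This is an illustrative reconstruction, not the cited authors' code:

- Each projected triangle is assigned to every cell its bounding box overlaps. This is conservative, because a triangle may be listed in a cell it does not actually cover.
- The cell size is a free parameter here, whereas the proposed patch is guided by the model resolution.
- The names `bin_triangles` and `candidate_triangles` are hypothetical.

```python
from collections import defaultdict


def bin_triangles(tri_2d, cell):
    """Assign each projected triangle (three (x, y) image points) to every
    grid cell overlapped by its bounding box."""
    bins = defaultdict(list)
    for tid, tri in enumerate(tri_2d):
        xs = [p[0] for p in tri]
        ys = [p[1] for p in tri]
        for i in range(int(min(xs) // cell), int(max(xs) // cell) + 1):
            for j in range(int(min(ys) // cell), int(max(ys) // cell) + 1):
                bins[(i, j)].append(tid)
    return bins


def candidate_triangles(bins, x, y, cell):
    """Triangles that may cover image point (x, y); only its own cell is searched."""
    return bins.get((int(x // cell), int(y // cell)), [])
```

A depth test at an image point then only needs to compare against the triangles returned for that point's cell, rather than against the whole mesh.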

8. Conclusions

Funding

Conflicts of Interest

References

- Porras-Amores, C.; Mazarrón, F.R.; Cañas, I.; Villoria Sáez, P. Terrestial Laser Scanning Digitalization in Underground Constructions. J. Cult. Herit. 2019, 38, 213–220.

- Aicardi, I.; Chiabrando, F.; Maria Lingua, A.; Noardo, F. Recent Trends in Cultural Heritage 3D Survey: The Photogrammetric Computer Vision Approach. J. Cult. Herit. 2018, 32, 257–266.

- Hoon, Y.J.; Hong, S. Three-Dimensional Digital Documentation of Cultural Heritage Site Based on the Convergence of Terrestrial Laser Scanning and Unmanned Aerial Vehicle Photogrammetry. ISPRS Int. J. Geo-Inf. 2019, 8, 53.

- Urech, P.R.W.; Dissegna, M.A.; Girot, C.; Grêt-Regamey, A. Point Cloud Modeling as a Bridge between Landscape Design and Planning. Landsc. Urban Plan. 2020.

- Balado, J.; Díaz-Vilariño, L.; Arias, P.; González-Jorge, H. Automatic Classification of Urban Ground Elements from Mobile Laser Scanning Data. Autom. Constr. 2018, 86, 226–239.

- Šašak, J.; Gallay, M.; Kaňuk, J.; Hofierka, J.; Minár, J. Combined Use of Terrestrial Laser Scanning and UAV Photogrammetry in Mapping Alpine Terrain. Remote Sens. 2019, 11, 2154.

- Risbøl, O.; Gustavsen, L. LiDAR from Drones Employed for Mapping Archaeology – Potential, Benefits and Challenges. Archaeol. Prospect. 2018, 25, 329–338.

- Murphy, M.; Mcgovern, E.; Pavia, S. Historic Building Information Modelling (HBIM). Struct. Surv. 2009, 27, 311–327.

- Banfi, F. HBIM, 3D Drawing and Virtual Reality for Archaeological Sites and Ancient Ruins. Virtual Archaeol. Rev. 2020, 11, 16–33.

- Roca, D.; Armesto, J.; Lagüela, S.; Díaz-Vilariño, L. LIDAR-Equipped UAV for Building Information Modelling. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. ISPRS Arch. 2014, 40, 523–527.

- Abellán, A.; Calvet, J.; Vilaplana, J.M.; Blanchard, J. Detection and Spatial Prediction of Rockfalls by Means of Terrestrial Laser Scanner Monitoring. Geomorphology 2010, 119, 162–171.

- Fortunato, G.; Funari, M.F.; Lonetti, P. Survey and Seismic Vulnerability Assessment of the Baptistery of San Giovanni in Tumba (Italy). J. Cult. Herit. 2017, 26, 64–78.

- Balletti, C.; Ballarin, M.; Faccio, P.; Guerra, F.; Saetta, A.; Vernier, P. 3D Survey and 3D Modelling for Seismic Vulnerability Assessment of Historical Masonry Buildings. Appl. Geomat. 2018, 10, 473–484.

- Barrile, V.; Fotia, A.; Bilotta, G. Geomatics and Augmented Reality Experiments for the Cultural Heritage. Appl. Geomat. 2018, 10, 569–578.

- Gines, J.L.C.; Cervera, C.B. Toward Hybrid Modeling and Automatic Planimetry for Graphic Documentation of the Archaeological Heritage: The Cortina Family Pantheon in the Cemetery of Valencia. Int. J. Archit. Herit. 2019, 14, 1210–1220.

- Dostal, C.; Yamafune, K. Photogrammetric Texture Mapping: A Method for Increasing the Fidelity of 3D Models of Cultural Heritage Materials. J. Archaeol. Sci. Rep. 2018, 18, 430–436.

- Murtiyoso, A.; Grussenmeyer, P.; Suwardhi, D.; Awalludin, R. Multi-Scale and Multi-Sensor 3D Documentation of Heritage Complexes in Urban Areas. ISPRS Int. J. Geo-Inf. 2018, 7.

- Sapirstein, P. Accurate Measurement with Photogrammetry at Large Sites. J. Archaeol. Sci. 2016, 66, 137–145.

- Forlani, G.; Dall’Asta, E.; Diotri, F.; di Cella, U.M.; Roncella, R.; Santise, M. Quality Assessment of DSMs Produced from UAV Flights Georeferenced with On-Board RTK Positioning. Remote Sens. 2018, 10, 311.

- Agüera-Vega, F.; Carvajal-Ramírez, F.; Martínez-Carricondo, P.; Sánchez-Hermosilla López, J.; Mesas-Carrascosa, F.J.; García-Ferrer, A.; Pérez-Porras, F.J. Reconstruction of Extreme Topography from UAV Structure from Motion Photogrammetry. Measurement 2018, 121, 127–138.

- Lucieer, A.; de Jong, S.M.; Turner, D. Mapping Landslide Displacements Using Structure from Motion (SfM) and Image Correlation of Multi-Temporal UAV Photography. Prog. Phys. Geogr. 2014, 38, 97–116.

- Stepinac, M.; Gašparović, M. A Review of Emerging Technologies for an Assessment of Safety and Seismic Vulnerability and Damage Detection of Existing Masonry Structures. Appl. Sci. 2020, 10, 5060.

- Mohammadi, M.; Eskola, R.; Mikkola, A. Constructing a Virtual Environment for Multibody Simulation Software Using Photogrammetry. Appl. Sci. 2020, 10, 4079.

- Poux, F.; Valembois, Q.; Mattes, C.; Kobbelt, L.; Billen, R. Initial User-Centered Design of a Virtual Reality Heritage System: Applications for Digital Tourism. Remote Sens. 2020, 12, 2583.

- Fernández-Reche, J.; Valenzuela, L. Geometrical Assessment of Solar Concentrators Using Close-Range Photogrammetry. Energy Procedia 2012, 30, 84–90.

- Luhmann, T. Close Range Photogrammetry for Industrial Applications. ISPRS J. Photogramm. Remote Sens. 2010, 65, 558–569.

- Campana, S. Drones in Archaeology. State-of-the-Art and Future Perspectives. Archaeol. Prospect. 2017, 24, 275–296.

- Tscharf, A.; Rumpler, M.; Fraundorfer, F.; Mayer, G.; Bischof, H. On the Use of Uavs in Mining and Archaeology – Geo-Accurate 3d Reconstructions Using Various Platforms and Terrestrial Views. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 2, 15–22.

- Mikita, T.; Balková, M.; Bajer, A.; Cibulka, M.; Patočka, Z. Comparison of Different Remote Sensing Methods for 3d Modeling of Small Rock Outcrops. Sensors 2020, 20, 1663.

- Arza-García, M.; Gil-Docampo, M.; Ortiz-Sanz, J. A Hybrid Photogrammetry Approach for Archaeological Sites: Block Alignment Issues in a Case Study (the Roman Camp of A Cidadela). J. Cult. Herit. 2019, 38, 195–203.

- Remondino, F.; Nocerino, E.; Toschi, I.; Menna, F. A Critical Review of Automated Photogrammetric Processing of Large Datasets. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. ISPRS Arch. 2017, 42, 591–599.

- Schonberger, J.L.; Frahm, J.M. Structure-from-Motion Revisited. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, IEEE, Las Vegas, NV, USA, 27–30 June 2016; pp. 4104–4113.

- Alessandri, L.; Baiocchi, V.; Del Pizzo, S.; Di Ciaccio, F.; Onori, M.; Rolfo, M.F.; Troisi, S. A Flexible and Swift Approach for 3D Image-Based Survey in a Cave. Appl. Geomat. 2020.

- Rönnholm, P.; Honkavaara, E.; Litkey, P.; Hyyppä, H.; Hyyppä, J. Integration of Laser Scanning and Photogrammetry. IAPRS 2007, 36, 355–362.

- Nex, F.; Rinaudo, F. Photogrammetric and Lidar Integration for the Cultural Heritage Metric Surveys. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2010, 38, 490–495.

- Remondino, F. Heritage Recording and 3D Modeling with Photogrammetry and 3D Scanning. Remote Sens. 2011, 3, 1104–1138.

- Pepe, M.; Ackermann, S.; Fregonese, L.; Achille, C. 3D Point Cloud Model Color Adjustment by Combining Terrestrial Laser Scanner and Close Range Photogrammetry Datasets. Int. J. Comput. Electr. Autom. Control Inf. Eng. 2016, 10, 1889–1895.

- Sánchez-Aparicio, L.J.; Del Pozo, S.; Ramos, L.F.; Arce, A.; Fernandes, F.M. Heritage Site Preservation with Combined Radiometric and Geometric Analysis of TLS Data. Autom. Constr. 2018, 85, 24–39.

- Chiabrando, F.; Sammartano, G.; Spanò, A.; Spreafico, A. Hybrid 3D Models: When Geomatics Innovations Meet Extensive Built Heritage Complexes. ISPRS Int. J. Geo-Inf. 2019, 8, 124.

- Liu, Y.; Zheng, X.; Ai, G.; Zhang, Y.; Zuo, Y. Generating a High-Precision True Digital Orthophoto Map Based on UAV Images. ISPRS Int. J. Geo-Inf. 2018, 7, 333.

- Soycan, A.; Soycan, M. Perspective Correction of Building Facade Images for Architectural Applications. Eng. Sci. Technol. Int. J. 2019, 22, 697–705.

- Pintus, R.; Gobbetti, E.; Callieri, M.; Dellepiane, M. Techniques for Seamless Color Registration and Mapping on Dense 3D Models. In Sensing the Past. Geotechnologies and the Environment; Masini, N., Soldovieri, F., Eds.; Springer: Cham, Switzerland, 2017; pp. 355–376.

- Altuntas, C.; Yildiz, F.; Scaioni, M. Laser Scanning and Data Integration for Three-Dimensional Digital Recording of Complex Historical Structures: The Case of Mevlana Museum. ISPRS Int. J. Geo-Inf. 2016, 5, 18.

- Davelli, D.; Signoroni, A. Automatic Mapping of Uncalibrated Pictures on Dense 3D Point Clouds. Int. Symp. Image Signal Process. Anal. ISPA 2013, 576–581.

- Luo, Q.; Zhou, G.; Zhang, G.; Huang, J. The Texture Extraction and Mapping of Buildings with Occlusion Detection. In Proceedings of the 2015 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Milan, Italy, 26–31 July 2015; pp. 3002–3005.

- Kersten, T.P.; Stallmann, D. Automatic Texture Mapping of Architectural and Archaeological 3D Models. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, 39, 273–278.

- Koska, B.; Křemen, T. The Combination of Laser Scanning and Structure From Motion Technology for Creation of Accurate Exterior and Interior Orthophotos of St. Nicholas Baroque Church. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2013, 40, 133–138.

- Pagés, R.; Berjón, D.; Morán, F.; García, N. Seamless, Static Multi-Texturing of 3D Meshes. Comput. Graph. Forum 2015, 34, 228–238.

- Li, M.; Guo, B.; Zhang, W. An Occlusion Detection Algorithm for 3D Texture Reconstruction of Multi-View Images. Int. J. Mach. Learn. Comput. 2017, 7, 152–155.

- Amhar, F.; Jansa, J.; Ries, C. The Generation of True Orthophotos Using a 3D Building Model in Conjunction With a Conventional Dtm. IAPRS 1998, 32, 16–22.

- Chen, L.C.; Chan, L.L.; Chang, W.C. Integration of Images and Lidar Point Clouds for Building Façade Texturing. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. ISPRS Arch. 2016, 41, 379–382.

- Kang, J.; Denga, F.; Li, X.; Wan, F. Automatic Texture Reconstruction of 3D City Model from Oblique Images. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. ISPRS Arch. 2016, 41, 341–347.

- Lensch, H.P.A.; Heidrich, W.; Seidel, H.P. Automated Texture Registration and Stitching for Real World Models. In Proceedings of the Eighth Pacific Conference on Computer Graphics and Applications, Hong Kong, China, 5 October 2000.

- Poullis, C.; You, S.; Neumann, U. Generating High-Resolution Textures for 3d Virtual Environments Using View-Independent Texture Mapping. In Proceedings of the International Conference on Multimedia & Expo, Beijing, China, 2–5 July 2007; pp. 1295–1298.

- Waechter, M.; Moehrle, N.; Goesele, M. Let There Be Color! Large-Scale Texturing of 3D Reconstructions. In Computer Vision – ECCV 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer: Cham, Switzerland, 2014; pp. 836–850.

- Huang, X.; Zhu, Q.; Jiang, W. GPVC: Graphics Pipeline-Based Visibility Classification for Texture Reconstruction. Remote Sens. 2018, 10, 1725.

- Karras, G.E.; Grammatikopoulos, L.; Kalisperakis, I.; Petsa, E. Generation of Orthoimages and Perspective Views with Automatic Visibility Checking and Texture Blending. Photogramm. Eng. Remote Sens. 2007, 73, 403–411.

- Zhang, W.; Li, M.; Guo, B.; Li, D.; Guo, G. Rapid Texture Optimization of Three-Dimensional Urban Model Based on Oblique Images. Sensors 2017, 17, 911.

- Kim, C.; Rhee, E. Realistic Façade Texturing of Digital Building Models. Int. J. Softw. Eng. Appl. 2014, 8, 193–202.

- Lari, Z.; El-Sheimy, N.; Habib, A. A New Approach for Realistic 3D Reconstruction of Planar Surfaces from Laser Scanning Data and Imagery Collected Onboard Modern Low-Cost Aerial Mapping Systems. Remote Sens. 2017, 9, 212.

- Previtali, M.; Barazzetti, L.; Scaioni, M. An Automated and Accurate Procedure for Texture Mapping from Images. In Proceedings of the 2012 18th International Conference on Virtual Systems and Multimedia, IEEE, Milan, Italy, 2–5 September 2012; pp. 591–594.

- Hanusch, T. A New Texture Mapping Algorithm for Photorealistic Reconstruction of 3D Objects. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2008, 37, 699–705.

- Zalama, E.; Gómez-García-Bermejo, J.; Llamas, J.; Medina, R. An Effective Texture Mapping Approach for 3D Models Obtained from Laser Scanner Data to Building Documentation. Comput. Civ. Infrastruct. Eng. 2011, 26, 381–392.

- Grammatikopoulos, L.; Kalisperakis, I.; Karras, G.; Petsa, E. Automatic Multi-View Texture Mapping of 3d Surface Projections. In 2nd ISPRS International Workshop 3D-Arch; ETH Zurich: Zurich, Switzerland, 2007; pp. 1–6.

- Chiabrando, F.; Donadio, E.; Rinaudo, F. SfM for Orthophoto Generation: A Winning Approach for Cultural Heritage Knowledge. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. ISPRS Arch. 2015, 40, 91–98.

- Bang, K.-I.; Kim, C.-J. A New True Ortho-Photo Generation Algorithm for High Resolution Satellite Imagery. Korean J. Remote Sens. 2010, 26, 347–359.

- Zhou, G.; Wang, Y.; Yue, T.; Ye, S.; Wang, W. Building Occlusion Detection from Ghost Images. IEEE Trans. Geosci. Remote Sens. 2017, 55, 1074–1084.

- De Oliveira, H.C.; Poz, A.P.D.; Galo, M.; Habib, A.F. Surface Gradient Approach for Occlusion Detection Based on Triangulated Irregular Network for True Orthophoto Generation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 443–457.

- Xie, W.; Zhou, G. Experimental Realization of Urban Large-Scale True Orthoimage Generation. In Proceedings of the ISPRS Congress, Beijing, China, 3–11 July 2008; pp. 879–884.

- Wang, X.; Jiang, W.; Bian, F. Occlusion Detection Analysis Based on Two Different DSM Models in True Orthophoto Generation. In Proceedings of the SPIE 7146, Geoinformatics 2008 and Joint Conference on GIS and Built Environment: Advanced Spatial Data Models and Analyses, Guangzhou, China, 10 November 2008.

- Habib, A.F.; Kim, E.M.; Kim, C.J. New Methodologies for True Orthophoto Generation. Photogramm. Eng. Remote Sens. 2007, 73, 25–36.

- Zhong, C.; Li, H.; Huang, X. A Fast and Effective Approach to Generate True Orthophoto in Built-up Area. Sens. Rev. 2011, 31, 341–348.

- De Oliveira, H.C.; Galo, M.; Dal Poz, A.P. Height-Gradient-Based Method for Occlusion Detection in True Orthophoto Generation. IEEE Geosci. Remote Sens. Lett. 2015, 12, 2222–2226.

- Gharibi, H.; Habib, A. True Orthophoto Generation from Aerial Frame Images and LiDAR Data: An Update. Remote Sens. 2018, 10, 581.

- Bowsher, J.M.C. An Early Nineteenth Century Account of Jerash and the Decapolis: The Records of William John Bankes. Levant 1997, 29, 227–246.

- Lichtenberger, A.; Raja, R.; Stott, D. Mapping Gerasa: A New and Open Data Map of the Site. Antiquity 2019, 93, 1–7.

- Balderstone, S.M. Archaeology in Jordan – The North Theatre at Jerash. Hist. Environ. 1985, 4, 38–45.

- Lichtenberger, A.; Raja, R. Management of Water Resources over Time in Semiarid Regions: The Case of Gerasa/Jerash in Jordan. WIREs Water 2020, 7, 1–19.

- Parapetti, R. The Architectural Significance of the Sanctuary of Artemis at Gerasa. SHAJ Stud. Hist. Archaeol. Jordan 1982, 1, 255–260.

- Brizzi, M. The Artemis Temple Reconsidered. In The Archaeology and History of Jerash. 110 Years of Excavations; Lichtenberger, A., Raja, R., Eds.; Brepols: Turnhout, Belgium, 2018.

- Ababneh, A. Qusair Amra (Jordan) World Heritage Site: A Review of Current Status of Presentation and Protection Approaches. Mediterr. Archaeol. Archaeom. 2015, 15, 27–44.

- Aigner, H. Athletic Images in the Umayyid Palace of Qasr ‘Amra in Jordan: Examples of Body Culture or Byzantine Representation in Early Islam? Int. J. Phytoremediat. 2000, 21, 159–164.

- Bianchin, S.; Casellato, U.; Favaro, M.; Vigato, P.A. Painting Technique and State of Conservation of Wall Paintings at Qusayr Amra, Amman-Jordan. J. Cult. Herit. 2007, 8, 289–293.

- Zhang, J.; Lin, X. Advances in Fusion of Optical Imagery and LiDAR Point Cloud Applied to Photogrammetry and Remote Sensing. Int. J. Image Data Fusion 2017, 8, 1–31.

- Pu, S.; Vosselman, G. Building Facade Reconstruction by Fusing Terrestrial Laser Points and Images. Sensors 2009, 9, 4525–4542.

- Parmehr, E.; Fraser, C.S.; Zhang, C.; Leach, J. Automatic Registration of Optical Imagery with 3D LiDAR Data Using Statistical Similarity. ISPRS J. Photogramm. Remote Sens. 2014, 88, 28–40.

- Morago, B.; Bui, G.; Le, T.; Maerz, N.H.; Duan, Y. Photograph LIDAR Registration Methodology for Rock Discontinuity Measurement. IEEE Geosci. Remote Sens. Lett. 2018, 15, 947–951.

- González-Aguilera, D.; Rodríguez-Gonzálvez, P.; Gómez-Lahoz, J. An Automatic Procedure for Co-Registration of Terrestrial Laser Scanners and Digital Cameras. ISPRS J. Photogramm. Remote Sens. 2009, 64, 308–316.

- Aicardi, I.; Chiabrando, F.; Grasso, N.; Lingua, A.M.; Noardo, F.; Spanó, A. UAV Photogrammetry with Oblique Images: First Analysis on Data Acquisition and Processing. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. ISPRS Arch. 2016, 41, 835–842.

- Alshawabkeh, Y. Linear Feature Extraction from Point Cloud Using Color Information. Herit. Sci. 2020, 8, 1–13.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Alshawabkeh, Y. Color and Laser Data as a Complementary Approach for Heritage Documentation. Remote Sens. 2020, 12, 3465. https://doi.org/10.3390/rs12203465
