Abstract

Human-induced deforestation has a major impact on forest ecosystems, so methods for its detection and analysis need to be improved. This study efficiently classified landscapes affected by human-induced deforestation using high-resolution remote sensing and deep learning. The SegNet and U-Net algorithms were applied to high-resolution remote sensing data obtained by the Kompsat-3 satellite. Land and forest cover maps were used as base data to construct accurate, high-spatial-resolution deep-learning datasets of deforested areas, and digital maps and a softwood database were used as reference data. Land cover was first divided into forest and non-forest areas, and a total of 13 classes (2 forest and 11 non-forest) were defined for analysis. Overall, U-Net was more accurate than SegNet (74.8% vs. 63.3%, a difference of 11.5 percentage points), although SegNet performed better for the hardwood and bare land classes. SegNet misclassified many forest areas, but no non-forest area. U-Net's overall accuracy was reduced by misclassification among sub-classes, but U-Net performed very well at the forest/non-forest level, with 98.4% accuracy for forest areas and 88.5% for non-forest areas. Thus, deep-learning modeling has great potential for estimating human-induced deforestation in mountain areas. The findings of this study will contribute to more efficient monitoring of damaged mountain forests and to setting policy priorities for mountain area restoration.

1. Introduction

Forests perform many important roles that influence human lives [1]. They store large amounts of carbon in vegetation and soil and release oxygen to the atmosphere [2]. Forests also affect the urban thermal environment through shading and evaporative cooling, and their social impacts cannot be ignored [3,4,5]. In addition, forests produce wood for lumber, help protect riverine ecosystems, prevent soil erosion, and mitigate climate change through carbon exchange [1,2]. Forests also have indirect economic value: they promote water security by collecting approximately 139,000 gallons of rainwater annually, and they can reduce air conditioning costs by up to 56% [3]. One study reported that investment in small urban forests increased net profit by at least $232,000 by reducing air conditioning and water storage fees [6,7]. The economic value of Mediterranean forests, including wood products such as cork and non-wood products such as honey, was estimated at $149/ha [8]. The value of forests to the South Korean economy was estimated at approximately $6 billion in 2008, based on seven criteria: atmosphere purification, recreation, forest water purification, watershed conservation, wildlife protection, soil runoff prevention, and landslide prevention. The value was calculated using the effective storage quantity, landslide prevention, and oxygen quantity. Of these criteria, watershed conservation and atmosphere purification had the highest economic values (≥$1.4 billion) [9].

Despite the vast public benefits that forests provide, these ecosystems are frequently damaged by large-scale human-induced deforestation, which reduces both their individual and industrial value [10,11]. In Colombia, increasing global demand for coca in 2016 and 2017 resulted in 83 km² of deforestation in mountain regions [12]. Myanmar is rich in forest resources, but 82,426 km² of forest were disturbed in 2000–2010 due to illegal logging and timber exports [13]. In South Korea, an estimated 8186 km² of mountain forest is suspected to have been damaged since 2012 by illegal farming, cattle grazing, and deforestation [14].

Such deforested mountain areas are generally investigated by forestry experts in terms of forest cover [15]. However, field surveys covering broad areas, such as whole forests, are difficult to perform and require large amounts of time and resources. Recently, remote sensing, which is efficient in terms of time and cost, has been used to survey and analyze landscapes affected by deforestation [16]. Forest cover in Costa Rica was estimated from 1986 to 1991 using Landsat-5 Thematic Mapper (TM) satellite images; the results showed 2250 km² of forest loss in a study area covering about 50% of the Costa Rican territory, corresponding to deforestation of about 450 km² per year [17]. A study of economic growth and mountain forest deforestation in southern Cameroon, which examined land-use changes using remote sensing images, demonstrated that deforestation increased after the economic crisis of 1986 [18]. Such studies of landscapes affected by deforestation have relied mainly on low- and medium-resolution satellite images obtained by Landsat TM or by sensors with large instantaneous fields of view [19,20,21,22].

Remote sensing-based forest studies conducted in the early 2000s mainly analyzed forest vitality using the normalized difference vegetation index or object-based tree classification [23,24]. With the rapid development of technologies such as data mining and machine learning since 2010, recent studies have integrated forest classification, monitoring, and change detection over extensive mountain regions using remote sensing and machine learning (ML), a branch of artificial intelligence (AI) [25]. ML methods used in this effort include the classification and regression tree, decision tree, support vector machine, artificial neural network, and random forest algorithms [16,26,27,28]. A disadvantage of these methods is that accurate training data must be created with expert knowledge, for example to extract tree characteristics as input features. Deep learning, which learns such features directly from the data, emerged as a solution to this problem [29]. Most deep-learning studies of mountain deforestation have analyzed high-resolution satellite and aerial images with models based on convolutional neural networks (CNNs) [30,31,32,33,34,35].

Recently, semantic segmentation, which developed from CNN-based image recognition, has proven useful in various fields requiring object recognition, such as surveillance, healthcare, and autonomous driving [36]. The typical semantic segmentation model is the fully convolutional network (FCN), which accepts input images of arbitrary size for learning and produces output maps of corresponding size [37]. However, the FCN has disadvantages, such as the loss of spatial information in the pooling layers, which condense the data. More advanced semantic segmentation algorithms have been developed to solve this problem [36].

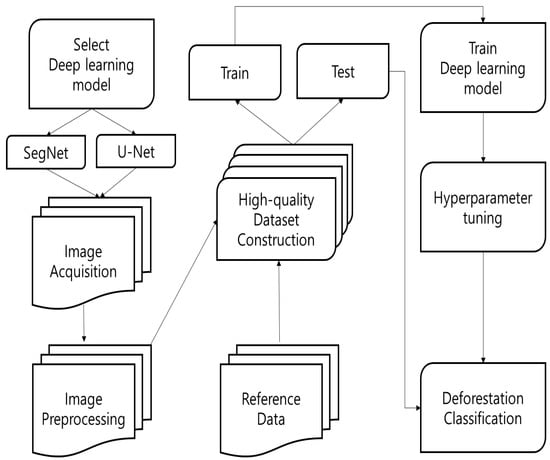

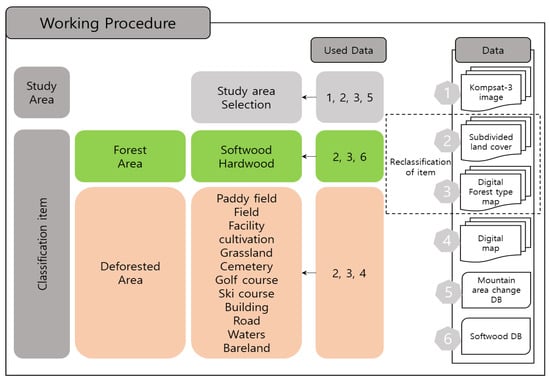

This study classified mountainous areas using remote sensing and deep-learning algorithms to analyze land cover quantitatively in landscapes affected by deforestation in Korean forests. First, optimal computer vision-based deep-learning algorithms were selected for application to spatial information and remote sensing. Based on a literature review, the FCN-based U-Net and SegNet algorithms were used. Because conventional CNN classifiers do not preserve location information, processing satellite image analysis results with them can be challenging; the algorithms best suited to analyzing mountain forest areas were selected with this technical problem in mind. Second, study areas showing recent deforestation were selected using high-resolution remote sensing data acquired by the Kompsat-3 satellite, and deep-learning datasets were constructed from segmentation data to incorporate spatial characteristics. Classification items were selected based on different types of reference data. Third, the datasets were divided into training and test sets, and the training data were applied to the selected deep-learning algorithms. Hyperparameters were tuned during training, and accuracy was evaluated on the test set with the optimal settings. Finally, the results were analyzed to determine the applicability of the algorithms and data for assessing landscapes affected by deforestation (Figure 1).

Figure 1.

Flow chart of this study.

2. Methodology

2.1. Method

Deep-learning algorithms are currently used in various remote sensing and spatial information studies. The CNN is the most widely used computer vision-related deep-learning algorithm. A CNN extracts features from input images through convolution, repeatedly condenses the resulting feature maps by pooling, and finally passes them to fully connected layers that perform the classification. The CNN has evolved into various architectures, including LeNet-5, AlexNet, and GoogLeNet. However, the spatial characteristics of the input images and the locations of objects are not preserved in these processes.
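To make the convolution-and-pooling pipeline above concrete, the following is a minimal pure-Python sketch (not the implementation used in this study) of one convolution, a ReLU activation, and 2 × 2 max pooling on a single-channel image. The kernel and the toy image are illustrative assumptions. Pooling keeps only the largest response in each window, so the exact position of a feature inside the window is lost.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide `kernel` over `image` without padding ("valid" mode).

    Like most deep-learning libraries, this computes cross-correlation
    (the kernel is not flipped)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(fmap: Matrix) -> Matrix:
    """Rectified linear unit applied element-wise."""
    return [[v if v > 0 else 0.0 for v in row] for row in fmap]


def max_pool2x2(fmap: Matrix) -> Matrix:
    """2 x 2 max pooling with stride 2 (an odd trailing row/column is dropped)."""
    return [
        [max(fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1])
         for j in range(0, len(fmap[0]) - 1, 2)]
        for i in range(0, len(fmap) - 1, 2)
    ]


# Toy 5 x 5 image: dark on the left, bright on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
vertical_edge = [[-1, 1], [-1, 1]]  # illustrative edge-detecting kernel
features = max_pool2x2(relu(conv2d_valid(image, vertical_edge)))
print(features)
```

The 5 × 5 input shrinks to a 4 × 4 feature map after convolution and to 2 × 2 after pooling: the edge is still detected, but only its approximate position survives.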

FCN-based algorithms have recently been used to solve this problem of computer vision-based deep-learning algorithms. In FCNs, the fully connected layers are replaced with convolution layers to overcome limitations of the CNN model, such as the loss of image location information and the fixed input image size. Because of these characteristics, FCNs can not only classify objects but also segment them semantically, assigning a class to every pixel [37].
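The replacement of fully connected layers by convolutions can be illustrated with a 1 × 1 convolution, sketched below in pure Python under the assumption that a C-channel feature map is stored as an H × W grid of channel vectors. The same K × C weight matrix that a fully connected classifier would use is applied at every pixel, so the output keeps the spatial layout of the input and works for any input size. The weights are arbitrary illustrative values, not trained parameters.

```python
from typing import List, Sequence

FeatureMap = List[List[Sequence[float]]]  # H x W grid of C-channel vectors


def conv1x1(features: FeatureMap,
            weights: List[List[float]],
            bias: List[float]) -> List[List[List[float]]]:
    """Per-pixel class scores from a 1 x 1 convolution.

    `weights` has one row of C values per output class (K x C), exactly
    like a fully connected layer, but it is applied independently at
    every pixel, so an H x W input yields an H x W x K score map."""
    return [
        [
            [sum(w * x for w, x in zip(w_row, pixel)) + b
             for w_row, b in zip(weights, bias)]
            for pixel in row
        ]
        for row in features
    ]


def argmax_labels(scores: List[List[List[float]]]) -> List[List[int]]:
    """Pick the highest-scoring class at each pixel (ties go to the lower index)."""
    return [[max(range(len(s)), key=s.__getitem__) for s in row] for row in scores]


# Illustrative parameters: C = 2 input channels, K = 3 classes.
weights = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
bias = [0.0, 0.0, -1.0]

small = [[[1.0, 2.0]] * 3 for _ in range(2)]  # 2 x 3 input
large = [[[1.0, 2.0]] * 5 for _ in range(4)]  # 4 x 5 input, same weights
small_scores = conv1x1(small, weights, bias)
large_scores = conv1x1(large, weights, bias)
label_map = argmax_labels(small_scores)
```

Both inputs are processed by the same weights, which is the property that lets an FCN accept images of arbitrary size and return a per-pixel label map.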

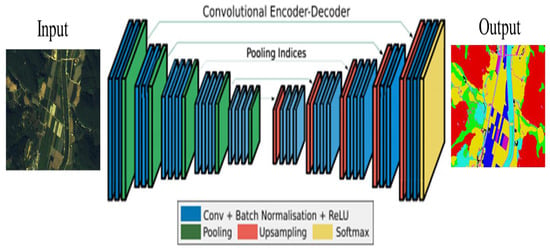

Various semantic segmentation models have recently emerged to supplement FCN methods [38]. Among them, SegNet [39] is effective in terms of learning speed and accuracy [40]. The SegNet architecture consists of an encoder and a decoder. The encoder compresses the image and extracts features, using the rectified linear unit (ReLU) as the activation function. The decoder then restores the image to its original resolution. Spatial information is maintained during decoding because each upsampling step reuses the max-pooling indices (the positions of the maxima) recorded in the corresponding pooling layer of the encoder. This feature differentiates SegNet from FCNs. When image reconstruction is complete, each pixel is classified using a softmax function (Figure 2) [39,41].
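The index-based upsampling that distinguishes SegNet can be sketched in pure Python as follows. This is a simplified single-channel illustration assuming 2 × 2 pooling with stride 2, not the reference SegNet code: the encoder records where each maximum came from, and the decoder places each value back at that position, leaving zeros elsewhere. In SegNet, subsequent decoder convolutions then densify this sparse map.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Index = Tuple[int, int]


def max_pool_with_indices(fmap: Matrix) -> Tuple[Matrix, List[List[Index]]]:
    """2 x 2 / stride-2 max pooling that also returns the (row, col)
    position of each maximum in the input (the "pooling indices")."""
    pooled, indices = [], []
    for i in range(0, len(fmap) - 1, 2):
        p_row, i_row = [], []
        for j in range(0, len(fmap[0]) - 1, 2):
            window = [(fmap[a][b], (a, b)) for a in (i, i + 1) for b in (j, j + 1)]
            value, pos = max(window, key=lambda t: t[0])
            p_row.append(value)
            i_row.append(pos)
        pooled.append(p_row)
        indices.append(i_row)
    return pooled, indices


def max_unpool(pooled: Matrix, indices: List[List[Index]],
               out_h: int, out_w: int) -> Matrix:
    """Place each pooled value back at its recorded position; all other
    positions are zero, as in SegNet-style decoder upsampling."""
    out = [[0.0] * out_w for _ in range(out_h)]
    for p_row, i_row in zip(pooled, indices):
        for value, (a, b) in zip(p_row, i_row):
            out[a][b] = value
    return out


encoder_map = [[1, 5, 0, 2],
               [3, 4, 7, 1],
               [0, 0, 1, 1],
               [9, 2, 3, 8]]
pooled, idx = max_pool_with_indices(encoder_map)
restored = max_unpool(pooled, idx, 4, 4)
```

Only the indices, rather than whole encoder feature maps, need to be kept for the decoder, which is intended to make this upsampling memory-efficient.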

Figure 2.

SegNet architecture introduced in [41].

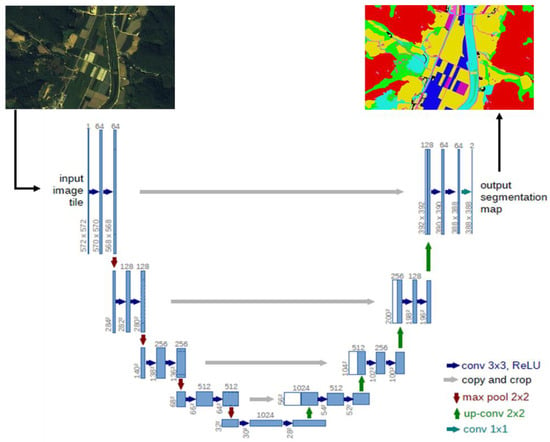

U-Net [42] was developed based on the FCN and is applied mainly to segmentation tasks in which only a small number of medical images are available. The U-Net architecture resembles the letter U [43], with a contracting path on the left and an expansive path on the right (Figure 3). The contracting path takes an N × N × C image patch (C channels) as its input layer. Along the contracting path, features are extracted and sub-sampled using convolution layers, ReLU activation functions, and max pooling. The expansive path has two defining characteristics: the copy-and-crop step, which uses skip connections to bring feature maps from the contracting path to the expansive path [44], and the use of convolution layers without fully connected layers in the image restoration stage. Input images are mirror-padded so that pixels near the boundary of a patch can be predicted [45]. U-Net processes input data in patch units instead of using a sliding window, which improves its speed over that of previous networks. Through concatenation in the copy-and-crop step, the algorithm accurately captures the context of the image while solving the localization issue of the FCN [42].
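The copy-and-crop skip connection and the mirror padding described above can be sketched with plain nested lists. This minimal illustration assumes feature maps stored channel-first (C × H × W) and uses reflection padding that does not repeat the edge pixel; it shows only the data movement, without the convolutions that surround these steps in U-Net. The helper names are hypothetical.

```python
from typing import List

Channel = List[List[float]]
FeatureMap = List[Channel]  # C x H x W


def center_crop(fmap: FeatureMap, out_h: int, out_w: int) -> FeatureMap:
    """Crop every channel to its central out_h x out_w region."""
    h, w = len(fmap[0]), len(fmap[0][0])
    top, left = (h - out_h) // 2, (w - out_w) // 2
    return [[row[left:left + out_w] for row in ch[top:top + out_h]] for ch in fmap]


def copy_and_crop(encoder_fmap: FeatureMap, decoder_fmap: FeatureMap) -> FeatureMap:
    """Skip connection: crop the contracting-path map to the size of the
    expansive-path map and concatenate them along the channel axis."""
    h, w = len(decoder_fmap[0]), len(decoder_fmap[0][0])
    return center_crop(encoder_fmap, h, w) + decoder_fmap


def mirror_pad(channel: Channel, p: int) -> Channel:
    """Reflect a 2-D channel by p pixels on every side (edge not repeated),
    so border pixels get full context; requires p < height and p < width."""
    rows = [r[p:0:-1] + r + r[-2:-p - 2:-1] for r in channel]
    return rows[p:0:-1] + rows + rows[-2:-p - 2:-1]


encoder = [[[float(6 * r + c) for c in range(6)] for r in range(6)]]  # 1 x 6 x 6
decoder = [[[0.0] * 4 for _ in range(4)] for _ in range(2)]         # 2 x 4 x 4
merged = copy_and_crop(encoder, decoder)                             # 3 x 4 x 4
padded = mirror_pad([[1, 2], [3, 4]], 1)
```

In the original U-Net, unpadded convolutions make the contracting-path maps larger than the corresponding expansive-path maps, which is why the crop is needed before concatenation.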

Figure 3.

U-Net architecture introduced in [38].

2.2. Study Area and Dataset

2.2.1. Satellite Image Preprocessing

High-resolution Kompsat-3 images were used to construct the datasets. Kompsat-3 data comprise five bands: panchromatic, blue, green, red, and near-infrared (NIR). Systematic errors in the data were eliminated by sensor modeling with rational polynomial coefficients, followed by orthorectification (digital differential rectification) using ground control points. Atmospheric correction was performed using the COST model, which approximates the atmospheric transmittance along the sun-to-surface path by the cosine of the solar zenith angle [46]. The Kompsat-3 panchromatic image has a resolution of 0.7 m; the multispectral bands were brought to this resolution by pan-sharpening, which merges high-resolution panchromatic images with relatively low-resolution multispectral images [47]. The pan-sharpened files used in this study to generate the 0.7-m-resolution multispectral images were obtained from the Korea Aerospace Research Institute (KARI).
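The two preprocessing steps above can be sketched per pixel as follows. The COST functions follow the commonly cited form of the cosine-approximation model, reflectance = π·(L − L_haze)·d² / (ESUN·cos θz·Tz) with Tz = cos θz, where the haze radiance is estimated from a dark object assumed to have 1% reflectance. The band constants (ESUN, solar zenith angle, Earth-Sun distance d) would come from the image metadata and are assumptions here. The pan-sharpening function is a simple Brovey-type ratio fusion chosen only for illustration: the method KARI used to produce the files in this study is not stated, and the multispectral values are assumed to be already resampled to the 0.7 m panchromatic grid.

```python
import math
from typing import List


def cost_haze_radiance(dark_object_radiance: float, esun: float,
                       sun_zenith_deg: float, d_au: float) -> float:
    """Haze (path) radiance: the dark-object radiance minus the radiance a
    surface of 1% reflectance would produce under the COST assumptions."""
    cos_z = math.cos(math.radians(sun_zenith_deg))
    one_percent = 0.01 * esun * cos_z * cos_z / (math.pi * d_au ** 2)
    return dark_object_radiance - one_percent


def cost_reflectance(radiance: float, haze_radiance: float, esun: float,
                     sun_zenith_deg: float, d_au: float) -> float:
    """Surface reflectance with the COST model: the sun-to-surface
    transmittance T_z is approximated by cos(solar zenith), the
    surface-to-sensor transmittance is 1, and diffuse skylight is ignored."""
    cos_z = math.cos(math.radians(sun_zenith_deg))
    t_z = cos_z  # cosine approximation of atmospheric transmittance
    return math.pi * (radiance - haze_radiance) * d_au ** 2 / (esun * cos_z * t_z)


def brovey_pixel(ms_values: List[float], pan_value: float,
                 eps: float = 1e-9) -> List[float]:
    """Brovey-type fusion: scale each band by pan / mean(multispectral), so
    spatial detail comes from the panchromatic band while the ratios
    between bands come from the multispectral data."""
    intensity = sum(ms_values) / len(ms_values)
    return [v * pan_value / (intensity + eps) for v in ms_values]


# Illustrative values only (not Kompsat-3 calibration constants).
haze = cost_haze_radiance(dark_object_radiance=10.0, esun=1500.0,
                          sun_zenith_deg=30.0, d_au=1.0)
rho_dark = cost_reflectance(10.0, haze, 1500.0, 30.0, 1.0)  # 1% by construction
fused = brovey_pixel([1.0, 2.0, 3.0], 4.0)
```

By construction, the dark object itself comes out at 1% reflectance, which is a quick sanity check when wiring real metadata into such a function.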

2.2.2. AI Learning Dataset Construction

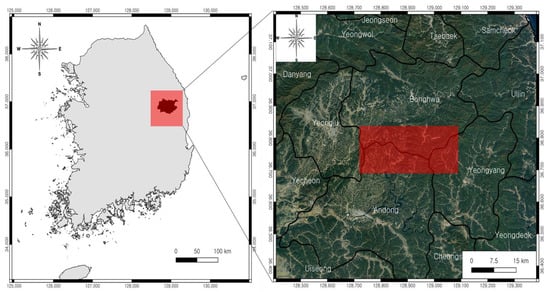

Precisely labeled data are needed to apply deep learning to high-resolution satellite images and to ensure accurate classification of regional attributes. Thus, the training and test datasets were constructed after satellite image preprocessing. The study areas were selected with reference to Ministry of Environment land cover maps (1:5000), Korea Forest Service digital forest cover maps (1:5000), and a mountain forest database (Table 1). The study area was selected near Bonghwa-gun, Gyeongsangbuk-do, which has 19,031 km² of mountainous area (Figure 4). In addition, according to Korea Forest Service data, this area has the highest number of deforestation cases in the country [14]. Furthermore, softwood and hardwood stands make up more than half of the forest area, and more than 70% of these stands are softwood.

Table 1.

Base data and reference data used in this study.

Figure 4.

Study area (Bonghwa-gun), outlined by the red box.

A training dataset was constructed using the study area data. The data were projected onto the WGS 1984 UTM Zone 52N coordinate system, and a training data plan was established at a 1:5000 scale. The software used for this process was ArcGIS 10.3, ENVI 5.1, and ERDAS Imagine 2015 (or later versions). The data labeling procedure comprised five stages: data collection, data preparation, land cover classification, quality inspection, and creation of training data.

For this study, satellite images and land and forest cover maps were collected as base data, and a continuous digital map (1:5000), a mountain forest area database, and a softwood database were collected as reference data (Figure 5). The study area was selected to include at least 50% forest. Land cover classification was limited to items that were visually distinct in the satellite images. The primary classification divided the data into forest and non-forest areas; forest areas were then subdivided into softwood and hardwood, and non-forest areas into classes such as buildings, farmland, and bare land, resulting in a total of 13 cover types (Table 2). Detailed revision of the data included corrections such as removing roads hidden by trees in the satellite images (Figure 6).

Figure 5.

Working procedure and data used to construct the label dataset.

Table 2.

Label classes used in this study.

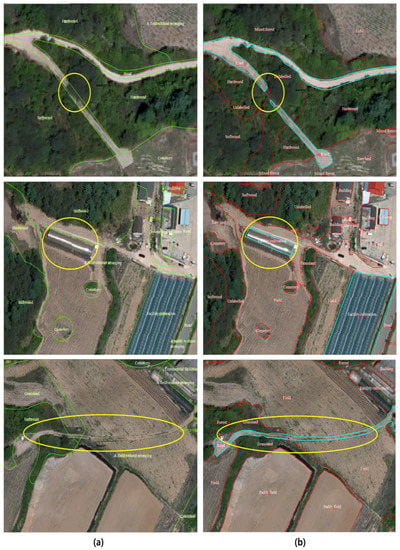

Figure 6.

Example of revised label. (a) is an example of an area to be revised and (b) is after revision.

Next, the classified items underwent a quality inspection to improve the efficiency of training dataset creation. This inspection consisted of a visual check of the classification items at each step. Labels with an accuracy of ≥95% were refined by precise revision, and those with lower accuracy were relabeled. Finally, training and test datasets were constructed from the corresponding image data. For data labeling, the extent and resolution were identical to those of the image data. Image and labeling data were converted to GeoTIFF (unsigned 8-bit integer), a standard format for interoperability (Figure 7). In total, 63 pairs of satellite image and labeling data were created. The image data and ground truth in each pair represent the same area, and every pair covers an area that is at least 50% forest. The satellite image data corresponding to the labeling datasets included the red, green, blue, and NIR bands. The 63 pairs were divided into 50 for training and 13 for testing. This precisely constructed dataset was then applied to the SegNet and U-Net algorithms.
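The pair-selection and split rules described above (every pair at least 50% forest; 63 pairs divided into 50 for training and 13 for testing) can be sketched as follows. The paper does not state how pairs were assigned to the two sets, so a seeded random shuffle is assumed here, and the integer label codes for softwood and hardwood in `FOREST_CODES` are hypothetical placeholders for the codes in Table 2.

```python
import random
from typing import List, Sequence, Tuple

FOREST_CODES = {1, 2}  # hypothetical label codes for softwood and hardwood

Label = Sequence[Sequence[int]]
Pair = Tuple[str, Label]  # (pair identifier, ground-truth label grid)


def forest_fraction(label: Label) -> float:
    """Share of labelled pixels that belong to a forest class."""
    pixels = [v for row in label for v in row]
    return sum(v in FOREST_CODES for v in pixels) / len(pixels)


def split_pairs(pairs: List[Pair], n_train: int = 50,
                min_forest: float = 0.5,
                seed: int = 0) -> Tuple[List[Pair], List[Pair]]:
    """Keep pairs with >= min_forest forest cover, shuffle them
    reproducibly, and split them into training and test sets."""
    eligible = [p for p in pairs if forest_fraction(p[1]) >= min_forest]
    rng = random.Random(seed)
    rng.shuffle(eligible)
    return eligible[:n_train], eligible[n_train:]


half_forest = [[1, 3], [2, 3]]  # 50% forest: eligible
no_forest = [[3, 3], [3, 3]]    # 0% forest: rejected
pairs = [(f"pair_{i:02d}", half_forest) for i in range(63)]
pairs.append(("pair_bare", no_forest))
train, test = split_pairs(pairs)
```

Fixing the seed keeps the split reproducible, so the same 13 test pairs can be reused when comparing SegNet and U-Net.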

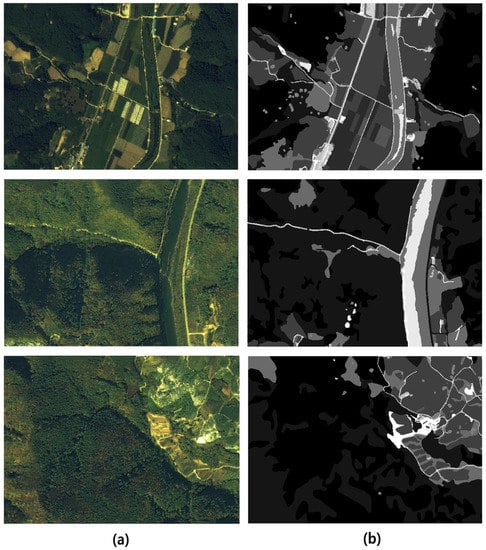

Figure 7.

Example of constructed dataset. (a) is image data and (b) is grayscale ground truth data.

3. Results

3.1. Hyperparameter Tuning

The models were trained repeatedly to estimate the optimal hyperparameters, considering the number of iterations, batch size, patch size, and learning rate. All hyperparameters were chosen by monitoring the change in training loss and test accuracy to avoid overfitting. No underfitting was observed; to minimize overfitting, a high-quality dataset was used, and the loss value and final accuracy during training were considered. An iteration is a single learning trial; we began with 100,000 iterations and then increased this in steps of 100,000 based on the training loss and test accuracy. SegNet was most accurate and had the lowest loss after 100,000 iterations, whereas U-Net was most accurate after 300,000 iterations. The training loss was below 0.4 for U-Net after 300,000 iterations and for SegNet after 100,000 iterations. Batch size is the number of input samples processed per iteration; in this study, it was fixed at 100 for SegNet and 1 for U-Net. A patch size of 256 × 256 was used. The learning rate controls how much the model weights are updated in each iteration; in this study, it was set to 1 × 10⁻⁵ for both SegNet and U-Net (Table 3).
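The hyperparameters reported above can be collected in a small configuration object, together with the tiling of an image into 256 × 256 training patches. This is a bookkeeping sketch only: `TrainConfig` and `patch_origins` are hypothetical names, treating one iteration as one batch is an assumption, and non-overlapping tiles that drop edge remainders are also an assumption, since the paper does not describe how patches were cut.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class TrainConfig:
    """Hyperparameters as reported in the text (Table 3)."""
    iterations: int
    batch_size: int
    patch_size: int = 256
    learning_rate: float = 1e-5

    def patches_seen(self) -> int:
        """Patches processed in training, assuming one batch per iteration."""
        return self.iterations * self.batch_size


SEGNET = TrainConfig(iterations=100_000, batch_size=100)
UNET = TrainConfig(iterations=300_000, batch_size=1)


def patch_origins(height: int, width: int, patch: int) -> List[Tuple[int, int]]:
    """Top-left (row, col) corners of non-overlapping patch x patch tiles
    that fit entirely inside a height x width image."""
    return [(r, c)
            for r in range(0, height - patch + 1, patch)
            for c in range(0, width - patch + 1, patch)]


tiles = patch_origins(600, 520, SEGNET.patch_size)
```

Under the one-batch-per-iteration assumption, SegNet processes 100 × 100,000 = 10,000,000 patches versus 300,000 for U-Net, so SegNet sees far more samples despite running fewer iterations. A 600 × 520 image yields four full 256 × 256 tiles.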

Table 3.

Results of hyperparameter tuning.

3.2. Classification of Deforested Land

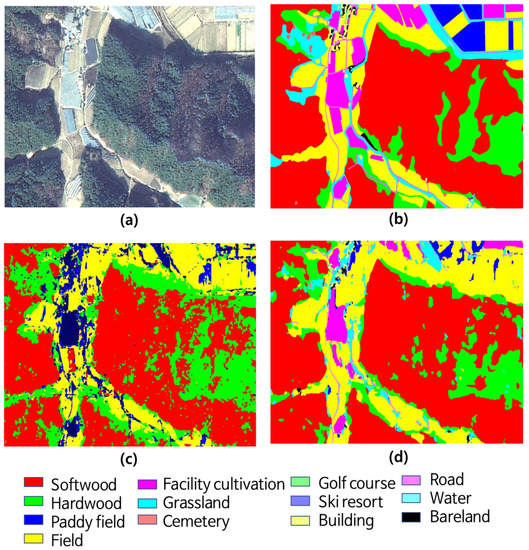

After training on the constructed dataset and tuning the hyperparameters, the final classification of the deforested mountain areas was performed. These results were obtained from the 13 test datasets, in which intact and damaged forests were evenly distributed. The softmax function was used to produce the outputs of both the SegNet and U-Net models. Results were obtained for all 13 classification items, and the overall and item-level accuracies were calculated for all items present in the test datasets. Three accuracy metrics were calculated (Table 4): the mean intersection over union (MIoU), the frequency-weighted intersection over union (FIoU), and overall accuracy. MIoU and FIoU values were computed for the models trained with the tuned hyperparameters. MIoU, the mean of the IoU values of all classes, is widely used in computer vision-based object detection [48]. In this study, the MIoU values for SegNet and U-Net were 14.0% and 25.4%, respectively; classes covering relatively small areas appear to have had low accuracy. The FIoU weights each class by its area, giving greater weight to large-area items; it has been applied to various datasets, such as the COCO dataset [49]. The FIoU values for SegNet and U-Net were 41.4% and 61.6%, respectively; for U-Net, the FIoU was 13.2 percentage points lower than the overall accuracy. The overall accuracy values for SegNet and U-Net were 63.3% and 74.8%, respectively (Figure 8).
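The three metrics can be computed from a pixel-level confusion matrix. The sketch below uses the standard definitions (IoU = TP / (TP + FP + FN); FIoU weights each class IoU by its ground-truth pixel frequency). It assumes that "item-level accuracy" means the share of each class's ground-truth pixels that are predicted correctly, and that classes absent from both the ground truth and the prediction are skipped. The confusion matrix is a toy example, not data from this study.

```python
from typing import Dict, List


def segmentation_metrics(conf: List[List[int]]) -> Dict[str, object]:
    """Metrics from a confusion matrix where conf[i][j] is the number of
    pixels of ground-truth class i predicted as class j."""
    k = len(conf)
    total = sum(map(sum, conf))
    tp = [conf[c][c] for c in range(k)]
    gt = [sum(conf[c]) for c in range(k)]                        # row sums
    pred = [sum(conf[r][c] for r in range(k)) for c in range(k)]  # column sums

    iou = {}
    for c in range(k):
        union = gt[c] + pred[c] - tp[c]  # TP + FP + FN
        if union > 0:  # skip classes absent from both truth and prediction
            iou[c] = tp[c] / union

    return {
        "overall_accuracy": sum(tp) / total,
        "miou": sum(iou.values()) / len(iou),
        "fiou": sum(gt[c] / total * v for c, v in iou.items()),
        "per_class_accuracy": {c: tp[c] / gt[c] for c in range(k) if gt[c] > 0},
    }


# Toy example: a large class 0 and a small class 1.
conf = [[6, 2],
        [1, 1]]
metrics = segmentation_metrics(conf)
```

In this toy case the poorly classified small class pulls MIoU (about 0.46) well below FIoU (about 0.58), the same pattern seen above, where MIoU is much lower than FIoU for both models.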

Table 4.

MIoU, FIoU, and overall accuracy (%).

Figure 8.

Classification result. (a) is image, (b) is ground truth, (c) is SegNet result and (d) is U-Net result.

Item-level classification showed that softwood represented the largest forest area, with a high classification accuracy of 92.6% for U-Net and 84.4% for SegNet (Table 5). Hardwood forest, which covered a smaller area than softwood forest, was classified with an accuracy of 61.0% by U-Net and 73.3% by SegNet. SegNet was 54.6 percentage points more accurate than U-Net in classifying bare land. However, U-Net classified the other non-forest areas more accurately than SegNet, perhaps because SegNet misclassified most non-forest areas as bare land. Fields represented the second largest land cover among non-forest areas; U-Net classified them with 84.2% accuracy and SegNet with 64.1% accuracy. Fields had the highest classification accuracy of all non-forest classes for both U-Net and SegNet. U-Net classified “facility cultivation” with 50.7% accuracy, whereas SegNet did not classify it at all; in most cases, facility cultivation was misclassified as bare land or paddy field. U-Net and SegNet classified agricultural land, including paddy fields, with 21.2% and 11.5% accuracy, respectively; the most frequent error was the misclassification of grassland as agricultural land, representing a 21.3% difference in accuracy. For cemeteries, which occupied the smallest area of all land use types, U-Net achieved 14.2% accuracy and misclassified the remainder as forest land, while SegNet misclassified all cemetery pixels; both models most often labeled cemeteries as hardwood forest. U-Net classified buildings and roads with less than 30% accuracy, typically misclassifying them as fields. SegNet misclassified buildings and roads as bare land and facility cultivation, resulting in low overall accuracy; unlike U-Net, SegNet could not classify buildings and roads at all. The five land use types with 0% accuracy in the SegNet model were misclassified as bare land or fields. The land use types that SegNet could not classify had significantly fewer pixels than the classified types. U-Net also showed low accuracy for land cover types with a small number of pixels.

Table 5.

Test accuracy for each class (%).

4. Discussion

SegNet outperformed U-Net in the classification of hardwood forest and bare land; for all other land use types, U-Net was more accurate. At the forest/non-forest level, which was the basis of this study, U-Net achieved 98.4% and 88.5% accuracy for forest and non-forest areas, respectively. Previous studies of deforested areas based on remote sensing data and deep learning have also reported high accuracy (~90%), likely because land cover was divided into only two or three classes [35,50,51,52].
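Accuracy at the forest/non-forest level can be obtained by merging the rows and columns of the full confusion matrix into two groups, so that confusion between, for example, softwood and hardwood no longer counts as an error. The sketch below assumes rows are ground truth and columns are predictions; the class indices and counts are illustrative only, and the helper names are hypothetical.

```python
from typing import List, Set, Tuple


def collapse_to_groups(conf: List[List[int]], forest: Set[int]) -> List[List[int]]:
    """Merge a K x K confusion matrix into 2 x 2 (0 = forest, 1 = non-forest)."""
    group = [0 if c in forest else 1 for c in range(len(conf))]
    binary = [[0, 0], [0, 0]]
    for i, row in enumerate(conf):
        for j, count in enumerate(row):
            binary[group[i]][group[j]] += count
    return binary


def group_accuracies(binary: List[List[int]]) -> Tuple[float, float]:
    """Share of forest and non-forest ground-truth pixels kept in their group."""
    return (binary[0][0] / sum(binary[0]), binary[1][1] / sum(binary[1]))


# Illustrative 3-class case: classes 0 and 1 are forest types, class 2 is not.
conf = [[5, 3, 0],
        [2, 4, 1],
        [0, 1, 8]]
binary = collapse_to_groups(conf, forest={0, 1})
forest_acc, non_forest_acc = group_accuracies(binary)
```

Here the three-class accuracy is only 17/24 (about 71%), but the group accuracies are 14/15 and 8/9 because most errors stay within a group. This is the same effect that allows a model to score much higher at the forest/non-forest level than at the 13-class level.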

The overall accuracy of the U-Net model was 74.8%, about 11.5 percentage points higher than that of SegNet (63.3%). These results are consistent with previous studies, which have reported greater accuracy for U-Net in single- and multi-class classification [53,54,55,56]. SegNet was unable to classify facility cultivation, grassland, cemetery, building, or road. These classes contain few pixels, and they also showed low accuracy with U-Net, which suggests that SegNet handles classes with few pixels less well than U-Net. For SegNet, the overall accuracy exceeds the FIoU by more than 20 percentage points (63.3% vs. 41.4%), compared with 13.2 percentage points for U-Net (74.8% vs. 61.6%), which also indicates that SegNet is less accurate than U-Net for items with few pixels. Other studies have reported relatively low classification accuracy for farmland and roads [57], and, depending on the dataset, SegNet has performed worse than U-Net in classifying buildings [58]. This may be explained by differences in decoder-stage upsampling: U-Net concatenates entire encoder feature maps through its skip connections, whereas SegNet transfers only the max-pooling indices.

Forest and non-forest areas were classified with high accuracy (98.4% and 88.5%, respectively) in this study. However, the classification accuracy for non-forest land cover types (grasslands, fields, buildings, and roads) was low. The distinction between bare land and fields was ambiguous because the Kompsat-3 images used as training data were acquired in spring, when aboveground plant biomass was low. Roads around disturbed mountain forests are typically made of concrete rather than asphalt, which may explain the models’ low accuracy in distinguishing roads from buildings. The training dataset also contained too few samples of the facility cultivation, bare land, and cemetery types. Because the performance of SegNet and U-Net differed by item, it is difficult for a single algorithm to achieve high performance for all 13 items. For example, U-Net performed well for forest/non-forest classification, whereas SegNet performed better for the hardwood and bare land items. Future work should therefore combine algorithms according to their classification performance for each cover type, although the hardware demands of running multiple algorithms remain to be addressed.

5. Conclusions

In this study, landscapes affected by human-induced deforestation were classified from high-resolution Kompsat-3 satellite images using the FCN-based U-Net and SegNet deep-learning algorithms. To ensure efficiency, precise training datasets were constructed from reference data, such as forest and land cover maps. To this end, satellite images were preprocessed, and labeling data were created from the reference data. In total, 13 classes were formed by subdividing forest and non-forest areas. The training and test datasets were applied to the SegNet and U-Net models to estimate hyperparameters, considering batch size, patch size, number of iterations, and learning rate. SegNet performed best with a batch size of 100, a patch size of 256 × 256, and 100,000 iterations; U-Net performed best with a batch size of 1, a patch size of 256 × 256, and 300,000 iterations. The final performance of the U-Net and SegNet algorithms was evaluated based on the FIoU, MIoU, and overall accuracy values.

The overall accuracy of the U-Net model was 74.8%, 11.5 percentage points higher than that of SegNet (63.3%). However, both models showed low accuracy for land use types with a small number of pixels. When the U-Net results were grouped into forest and non-forest land, most errors occurred within each group: misclassified forest pixels were usually assigned to another forest class, and although accuracy for the non-forest sub-classes was low, misclassified non-forest pixels were usually assigned to another non-forest class. SegNet outperformed U-Net in the classification of hardwood forest and bare land.

Training datasets can be constructed, and deep-learning algorithms like U-Net applied, for the interpretation of high-resolution satellite images in various ways. Moreover, the U-Net hyperparameters used in this study could be applied to other regions, which may facilitate quantitative analysis of larger areas disturbed by deforestation.

This study has several limitations. First, more advanced deep-learning algorithms that may improve the accuracy of our classification results have been developed since this study was conducted. Second, larger datasets are needed to train the AI algorithms accurately and to distinguish the various sub-classes used in this study more clearly, especially in non-forest areas. We anticipate that further research using improved algorithms and larger datasets will result in better estimation of the causes of deforested mountain areas. Furthermore, the implementation of a stable system for upgrading deep-learning algorithms will facilitate future monitoring and management of damaged mountain forests.

Author Contributions

Conceptualization, S.-H.L. and M.-J.L.; Data curation, S.-H.L. and K.-J.H.; Formal analysis, S.-H.L. and K.-J.H.; Investigation, S.-H.L. and M.-J.L.; Methodology, S.-H.L. and M.-J.L.; Project administration, M.-J.L.; Resources, K.-J.L. and K.-Y.O.; Software, S.-H.L. and K.L.; Supervision, M.-J.L.; Validation, S.-H.L. and M.-J.L.; Visualization, S.-H.L. and K.-J.H.; Writing—original draft, S.-H.L.; Writing—review and editing, M.-J.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was conducted at Korea Environment Institute (KEI) with support from ‘Development of precision analysis technology for forest change using satellite images and machine learning’ by Korea Aerospace Research Institute (KARI), and with support from the Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education (NRF-2018R1D1A1B07041203).

Acknowledgments

The authors would like to thank the anonymous reviewers for their very competent comments and helpful suggestions. The English in this document has been checked by at least two professional editors, both native speakers of English. For a certificate, please see: http://www.textcheck.com/certificate/index/HKUakp.

Conflicts of Interest

The authors declare no conflict of interest.

References

- World Wide Fund for Nature Home Page. Available online: https://wwf.panda.org/our_work/forests/importance_forests/ (accessed on 17 June 2020).

- Banskota, A.; Kayastha, N.; Falkowski, M.J.; Wulder, M.A.; Froese, R.E.; White, J.C. Forest Monitoring using Landsat Time Series Data: A Review. Can. J. Remote Sens. 2014, 40, 362–384. [Google Scholar] [CrossRef]

- Yang, J.; Su, J.; Chen, F.; Xie, P.; Ge, Q. A local land use competition cellular automata model and its application. ISPRS Int. J. Geo-Inf. 2016, 5, 106. [Google Scholar] [CrossRef]

- He, B.J.; Zhao, Z.Q.; Shen, L.D.; Wang, H.B.; Li, L.G. An approach to examining performances of cool/hot sources in mitigating/enhancing land surface temperature under different temperature backgrounds based on landsat 8 image. Sustain. Cities Soc. 2019, 44, 416–427. [Google Scholar] [CrossRef]

- Liu, H.; Zhan, Q.; Gao, S.; Yang, C. Seasonal variation of the spatially non-stationary association between land surface temperature and urban landscape. Remote Sens. 2019, 11, 1016. [Google Scholar] [CrossRef]

- American Forest Home Page. Available online: https://www.americanforests.org/blog/much-forests-worth/ (accessed on 21 July 2016).

- USDA Forest Service and NY State Department of Environmental Conservation Home Page. Available online: https://www.dec.ny.gov/lands/40243.html (accessed on 20 June 2020).

- Croitoru, L. Valuing the Non-Timber Forest Products in the Mediterranean Region. Ecol. Econ. 2007, 63, 768–775. [Google Scholar] [CrossRef]

- Kim, J.H.; Kim, R.H.; Youn, H.J.; Lee, S.W.; Choi, H.T.; Kim, J.J.; Park, C.R.; Kim, K.D. Valuation of Nonmarket Forest Resources. Korean Inst. For. Recreat. Welf. 2012, 16, 9–18. [Google Scholar]

- Kuvan, Y. Mass Tourism Development and Deforestation in Turkey. Anatolia 2010, 21, 155–168. [Google Scholar] [CrossRef]

- Hosonuma, N.; Herold, M.; De Sy, V.; De Fries, R.S.; Brockhaus, M.; Verchot, L.; Angelsen, A.; Romijn, E. An Assessment of Deforestation and Forest Degradation Drivers in Developing Countries. Environ. Res. Lett. 2012, 7, 044009. [Google Scholar] [CrossRef]

- Clerici, N.; Armenteras, D.; Kareiva, P.; Botero, R.; Ramírez-Delgado, J.; Forero-Medina, G.; Ochoa, J.; Pedraza, C.; Schneider, L.; Lora, C. Deforestation in Colombian Protected Areas Increased during Post-Conflict Periods. Sci. Rep. 2020, 10, 4971. [Google Scholar] [CrossRef]

- Wang, C.; Myint, S.W.; Hutchins, M. The Assessment of Deforestation, Forest Degradation, and Carbon Release in Myanmar 2000–2010. In Environmental Change in the Himalayan Region; Springer: Berlin/Heidelberg, Germany, 2019; pp. 47–64. [Google Scholar]

- Korea Forest Service Home Page. Available online: http://forest.go.kr (accessed on 29 August 2019).

- Fuller, D.O. Tropical Forest Monitoring and Remote Sensing: A New Era of Transparency in Forest Governance? Singap. J. Trop. Geogr. 2006, 27, 15–29. [Google Scholar] [CrossRef]

- Dalponte, M.; Bruzzone, L.; Gianelle, D. Fusion of Hyperspectral and LIDAR Remote Sensing Data for Classification of Complex Forest Areas. IEEE Trans. Geosci. Remote Sens. 2008, 46, 1416–1427. [Google Scholar] [CrossRef]

- Sanchez-Azofeifa, G.A.; Harriss, R.C.; Skole, D.L. Deforestation in Costa Rica: A Quantitative Analysis using Remote Sensing Imagery. Biotropica 2001, 33, 378–384.

- Mertens, B.; Sunderlin, W.D.; Ndoye, O.; Lambin, E.F. Impact of Macroeconomic Change on Deforestation in South Cameroon: Integration of Household Survey and Remotely-Sensed Data. World Dev. 2000, 28, 983–999.

- Frohn, R.; McGwire, K.; Dale, V.; Estes, J. Using Satellite Remote Sensing Analysis to Evaluate a Socio-Economic and Ecological Model of Deforestation in Rondonia, Brazil. Int. J. Remote Sens. 1996, 17, 3233–3255.

- McCracken, S.D.; Brondizio, E.S.; Nelson, D.; Moran, E.F.; Siqueira, A.D.; Rodriguez-Pedraza, C. Remote Sensing and GIS at Farm Property Level: Demography and Deforestation in the Brazilian Amazon. Photogramm. Eng. Remote Sens. 1999, 65, 1311–1320.

- Chowdhury, R.R. Driving Forces of Tropical Deforestation: The Role of Remote Sensing and Spatial Models. Singap. J. Trop. Geogr. 2006, 27, 82–101.

- Buchanan, G.M.; Butchart, S.H.; Dutson, G.; Pilgrim, J.D.; Steininger, M.K.; Bishop, K.D.; Mayaux, P. Using Remote Sensing to Inform Conservation Status Assessment: Estimates of Recent Deforestation Rates on New Britain and the Impacts upon Endemic Birds. Biol. Conserv. 2008, 141, 56–66.

- Achard, F.; Estreguil, C. Forest Classification of Southeast Asia using NOAA AVHRR Data. Remote Sens. Environ. 1995, 54, 198–208.

- Desclée, B.; Bogaert, P.; Defourny, P. Forest Change Detection by Statistical Object-Based Method. Remote Sens. Environ. 2006, 102, 1–11.

- Lary, D.J.; Alavi, A.H.; Gandomi, A.H.; Walker, A.L. Machine Learning in Geosciences and Remote Sensing. Geosci. Front. 2016, 7, 3–10.

- Carreiras, J.; Pereira, J.; Shimabukuro, Y.E. Land-Cover Mapping in the Brazilian Amazon using SPOT-4 Vegetation Data and Machine Learning Classification Methods. Photogramm. Eng. Remote Sens. 2006, 72, 897–910.

- Papa, J.P.; Falcão, A.X.; De Albuquerque, V.H.C.; Tavares, J.M.R. Efficient Supervised Optimum-Path Forest Classification for Large Datasets. Pattern Recognit. 2012, 45, 512–520.

- Li, M.; Im, J.; Beier, C. Machine Learning Approaches for Forest Classification and Change Analysis using Multi-Temporal Landsat TM Images Over Huntington Wildlife Forest. GISci. Remote Sens. 2013, 50, 361–384.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep Learning. Nature 2015, 521, 436–444.

- Hafemann, L.G.; Oliveira, L.S.; Cavalin, P. Forest Species Recognition using Deep Convolutional Neural Networks. In Proceedings of the 2014 22nd International Conference on Pattern Recognition, Stockholm, Sweden, 24–28 August 2014; pp. 1103–1107.

- Bragilevsky, L.; Bajic, I.V. Deep Learning for Amazon Satellite Image Analysis. In Proceedings of the 2017 IEEE Pacific Rim Conference on Communications, Computers and Signal Processing (PACRIM), Victoria, BC, Canada, 21–23 August 2017; pp. 1–5.

- Xu, E.; Zeng, O. Predicting Amazon Deforestation with Satellite Images. Available online: http://cs231n.stanford.edu/reports/2017/pdfs/916.pdf (accessed on 30 June 2020).

- Shah, U.; Khawad, R.; Krishna, K.M. Detecting, Localizing, and Recognizing Trees with a Monocular MAV: Towards Preventing Deforestation. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 1982–1987.

- Lobo Torres, D.; Queiroz Feitosa, R.; Nigri Happ, P.; La Rosa, L.E.C.; Marcato, J., Jr.; Martins, J.; Olã Bressan, P.; Gonçalves, W.N.; Liesenberg, V. Applying Fully Convolutional Architectures for Semantic Segmentation of a Single Tree Species in Urban Environment on High Resolution UAV Optical Imagery. Sensors 2020, 20, 563.

- De Bem, P.P.; de Carvalho Junior, O.A.; Fontes Guimarães, R.; Trancoso Gomes, R.A. Change Detection of Deforestation in the Brazilian Amazon using Landsat Data and Convolutional Neural Networks. Remote Sens. 2020, 12, 901.

- Andersson, V. Semantic Segmentation: Using Convolutional Neural Networks and Sparse Dictionaries. Available online: http://urn.kb.se/resolve?urn=urn:nbn:se:liu:diva-139367 (accessed on 25 June 2020).

- Long, J.; Shelhamer, E.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Cao, R.; Qiu, G. Urban Land Use Classification Based on Aerial and Ground Images. In Proceedings of the 2018 International Conference on Content-Based Multimedia Indexing (CBMI), La Rochelle, France, 4–6 September 2018; pp. 1–6.

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

- Garcia-Garcia, A.; Orts-Escolano, S.; Oprea, S.; Villena-Martinez, V.; Garcia-Rodriguez, J. A Review on Deep Learning Techniques Applied to Semantic Segmentation. arXiv 2017, arXiv:1704.06857.

- Audebert, N.; Le Saux, B.; Lefèvre, S. Joint Learning from Earth Observation and Openstreetmap Data to Get Faster Better Semantic Maps. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 67–75.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 234–241.

- Liu, J.; Wang, X.; Wang, T. Classification of Tree Species and Stock Volume Estimation in Ground Forest Images using Deep Learning. Comput. Electron. Agric. 2019, 166, 105012.

- Stoian, A.; Poulain, V.; Inglada, J.; Poughon, V.; Derksen, D. Land Cover Maps Production with High Resolution Satellite Image Time Series and Convolutional Neural Networks: Adaptations and Limits for Operational Systems. Remote Sens. 2019, 11, 1986.

- Soni, A.; Koner, R.; Villuri, V.G.K. M-UNet: Modified U-Net Segmentation Framework with Satellite Imagery. In Proceedings of the Global AI Congress, Kolkata, India, 12–14 September 2019; pp. 47–59.

- Chavez, P.S. Image-Based Atmospheric Corrections-Revisited and Improved. Photogramm. Eng. Remote Sens. 1996, 62, 1025–1035.

- Zhang, Y.; Mishra, R.K. A Review and Comparison of Commercially Available Pan-Sharpening Techniques for High Resolution Satellite Image Fusion. In Proceedings of the 2012 IEEE International Geoscience and Remote Sensing Symposium, Munich, Germany, 22–27 July 2012; pp. 182–185.

- Ghorbanzadeh, O.; Blaschke, T. Optimizing Sample Patches Selection of CNN to Improve the mIOU on Landslide Detection. In Proceedings of the GISTAM, Heraklion, Greece, 3–5 May 2019; pp. 33–40.

- Kirillov, A.; Girshick, R.; He, K.; Dollár, P. Panoptic Feature Pyramid Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 6399–6408.

- López-Fandiño, J.; Garea, A.S.; Heras, D.B.; Argüello, F. Stacked Autoencoders for Multiclass Change Detection in Hyperspectral Images. In Proceedings of the IGARSS 2018—2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 1906–1909.

- Martins, F.F.; Zaglia, M.C. Application of Convolutional Neural Network to Pixel-Wise Classification in Deforestation Detection using PRODES Data. In Proceedings of the Geoinfo, São José dos Campos, Brazil, 11–13 November 2019; pp. 57–65.

- Ortega, M.; Bermudez, J.; Happ, P.; Gomes, A.; Feitosa, R. Evaluation of Deep Learning Techniques for Deforestation Detection in the Amazon Forest. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, 2019, 4.

- Luo, Q.; Gao, B.; Woo, W.L.; Yang, Y. Temporal and Spatial Deep Learning Network for Infrared Thermal Defect Detection. NDT E Int. 2019, 108, 102164.

- Singh, V.K.; Rashwan, H.A.; Abdel-Nasser, M.; Sarker, M.; Kamal, M.; Akram, F.; Pandey, N.; Romani, S.; Puig, D. An Efficient Solution for Breast Tumor Segmentation and Classification in Ultrasound Images using Deep Adversarial Learning. arXiv 2019, arXiv:1907.00887.

- Zhang, X.; Wu, B.; Zhu, L.; Tian, F.; Zhang, M. Land Use Mapping in the Three Gorges Reservoir Area Based on Semantic Segmentation Deep Learning Method. arXiv 2018, arXiv:1804.00498.

- Rastogi, K.; Bodani, P.; Sharma, S.A. Automatic Building Footprint Extraction from very High-Resolution Imagery using Deep Learning Techniques. Geocarto Int. 2020, 1–14.

- Han, Z.; Dian, Y.; Xia, H.; Zhou, J.; Jian, Y.; Yao, C.; Wang, X.; Li, Y. Comparing Fully Deep Convolutional Neural Networks for Land Cover Classification with High-Spatial-Resolution Gaofen-2 Images. ISPRS Int. J. Geo-Inf. 2020, 9, 478.

- Li, R.; Zheng, S.; Duan, C. Land Cover Classification from Remote Sensing Images Based on Multi-Scale Fully Convolutional Network. arXiv 2020, arXiv:2008.00168.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).