Abstract

Many studies have developed efficient and accurate methods for identifying crop lodging in homogeneous field settings. However, under complex field conditions, such as diverse fertilization methods, different crop growth stages, and various sowing periods, the accuracy of lodging identification still needs to be improved. Therefore, this study selected a maize plot containing different growth stages to explore an applicable and accurate lodging extraction method. Based on the Akaike information criterion (AIC), we propose an effective and rapid feature screening method (AIC method) and compare its performance with that of index methods (i.e., variation coefficient and relative difference). Seven feature sets of lodging and nonlodging maize were established from a canopy height model (CHM) and multispectral imagery acquired by an unmanned aerial vehicle (UAV). In addition to accuracy parameters (i.e., Kappa coefficient and overall accuracy), the difference index (DI) was applied to search for the optimal window size of texture features. After all feature sets were screened with the AIC method, binary logistic regression classification (BLRC), maximum likelihood classification (MLC), and random forest classification (RFC) were used to discriminate between lodging and nonlodging maize based on the selected features. The results revealed that the optimal window sizes of the gray-level cooccurrence matrix (GLCM) and gray-level difference matrix (GLDM) texture information were 17 × 17 and 21 × 21, respectively. The AIC method incorporating GLCM texture yielded satisfactory results, with an average accuracy of 82.84% and an average Kappa value of 0.66, outperforming the index screening method (59.64%, 0.19). Furthermore, the canopy structure feature (CSF) was more beneficial than other features for identifying maize lodging areas at the plot scale.
Based on the AIC method, we achieved accurate maize lodging recognition using the CSFs and BLRC. This study provides a robust and novel method for monitoring maize lodging in complicated plot environments.

1. Introduction

Maize is one of the world's most important cereal crops and is used for food, fodder, and bioenergy production. High and stable maize yields are crucial to global food security. Lodging is a natural plant condition that reduces the yield and quality of various crops. According to the position of displacement, crop lodging can be divided into stem lodging (Lodging S) and root lodging (Lodging R). Lodging S involves the bending of crop stems from their upright position, while Lodging R refers to damage to or failure of the plant's root-soil anchorage system [1]. Maize lodging can cause harvest losses as high as 50% [2]. Timely and accurate identification of maize lodging is therefore essential for estimating yield loss, making comprehensive production decisions, and supporting insurance compensation. Traditional lodging surveys rely on manual measurements in the field, which are time consuming, laborious, inefficient, and unsuitable for large-scale lodging surveys.

Currently, with the rapid development of remote sensing techniques, an increasing number of scholars have used remote sensing imagery combined with various feature-extraction tools to obtain crop lodging information. Li et al. [3] investigated maize lodging extraction and feature screening from satellite remote sensing imagery based on the difference in texture values between lodging and nonlodging areas. Based on the changes in vegetation index (VI) features between lodging and nonlodging maize, Wang et al. [2] reported that the correlation between these changes and the lodging percentage could be used as a criterion for selecting effective features. Crop lodging is frequently accompanied by adverse weather; hence, timely images cannot be guaranteed because of the limited capabilities of optical satellites in poor weather conditions. In contrast to optical sensors, spaceborne synthetic aperture radar (SAR) is not only robust to adverse weather but also provides abundant information on vegetation structure. SAR is currently widely used to discriminate between lodging and nonlodging crops. Using fully polarimetric C-band radar images, Yang et al. [4] explored the differences between lodging and nonlodging wheat areas in various growth periods. Chen et al. [5] used polarimetric features extracted from consecutive RADARSAT-2 data to identify sugarcane lodging areas. However, because of its low spatial resolution, SAR is more applicable to large, relatively homogeneous crop planting areas than to small, heterogeneous plots [6].

To precisely extract lodging areas within small, fragmented fields, unmanned aerial vehicles (UAVs) can acquire remote sensing images with both high temporal and high spatial resolution, and they are easy to operate [7]. At present, researchers usually identify crop lodging at the field scale using UAV images, and features derived from them, acquired shortly after lodging. The crops studied are mainly in the vigorous growth period. During this period, lodging and nonlodging crops have similar growth conditions, but their leaf colors differ markedly, which can be observed in UAV images. Therefore, maize lodging can be identified with high accuracy using only red-green-blue (RGB) or multispectral images.

Image features such as texture features, VIs, and canopy structure features can be derived from UAV-collected multispectral, RGB, and thermal infrared imagery. Based on the extracted feature information, single- and multiple-class feature sets (SFSs and MFSs, respectively) can be constructed and used for lodging identification [8]. SFSs can recognize lodging areas quickly and efficiently owing to their low dimensionality and simple computation. RGB imagery, multispectral imagery, and VIs have been used to extract crop lodging based on the difference in leaf color between lodging and nonlodging areas [9]. However, this difference is affected by crop variety and fertilization treatment. To address this problem, Liu et al. [10] reported that although thermal infrared images have low spatial resolution, features extracted from them are beneficial for lodging assessment. In addition, the patterns, shapes, and sizes of vegetation in UAV imagery can be quantitatively described by texture characteristics, which have therefore been widely employed in lodging assessment research [11]. However, texture features also have drawbacks; for instance, it is difficult to determine the optimal scale and moving direction of the texture window. More recently, the canopy structure feature (CSF) has been broadly applied to determine whether lodging has occurred and, ultimately, to estimate lodging severity, enabling accurate monitoring of crop lodging [12]. However, these features are likely to be limited by adverse field environments and high experimental costs. Consequently, an SFS often cannot fully reflect crop lodging properties in complex field environments, and MFSs have been used more frequently in recent years. For example, by combining a digital surface model (DSM) with texture information, crop lodging can be recognized with reliable accuracy [13].
Based on a feature set containing color and texture features, adding temperature information also greatly improved the recognition accuracy of indica rice lodging [10]. In addition, the probability that lodging has occurred can be predicted by using a feature set containing the canopy structure and the VI feature [14].

Nevertheless, MFSs suffer from data redundancy, which increases the consumption of computing resources and reduces identification efficiency; optimal feature selection is therefore necessary. Few studies have addressed screening methods for lodging identification. Existing methods rely mainly on difference evaluation indices, which have low computational overhead [1,15,16]. However, these methods do not consider the interactions between features, and there is no clear standard for determining the most appropriate feature dimension. Although the number of features can be determined explicitly from single-feature probability values in a Bayesian network [13], this approach assumes that all features are mutually independent and that the selected features are uncorrelated. Han et al. [14] reported that univariate and multivariate logistic regression analyses can be used to screen lodging features. Although this approach explores the underlying relationship between the outcome and the selected factors, its complex screening process makes it unsuitable for high-dimensional feature sets.

Therefore, the main purpose of this study is to construct a simple and efficient feature screening method for lodging recognition in complex field environments that also considers the interactions between features. We verify the feasibility and stability of the proposed method in both high- and low-dimensional settings. The specific objectives are (1) to analyze how lodging extraction accuracy changes with texture window size and to determine the window size that maximizes accuracy; (2) to determine the optimal screening method by comparing the accuracy of maize lodging results obtained with different feature screening methods; and (3) to extract maize lodging areas using the optimal feature screening method and various classifiers.

2. Materials and Methods

2.1. Study Area

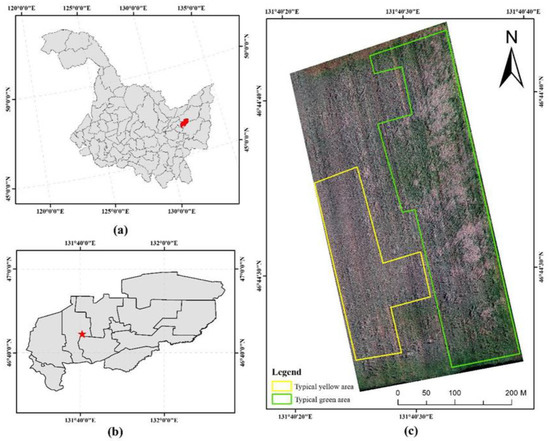

The maize field trial was conducted at Youyi Farm, Youyi County, Heilongjiang Province, China (46°44′N, 131°40′E), on a study site covering approximately 17.29 hectares. The area has a temperate continental monsoon climate with four distinct seasons, in which the rainy season coincides with the hot season, and the soil is a highly fertile dark brown soil.

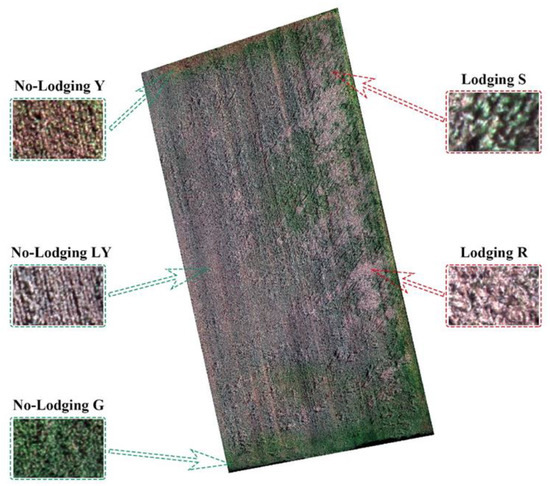

The maize planted in this experimental plot was sown on 6 May 2019 and harvested on 14 September 2019. Maize lodged on 28 July 2018, and multispectral imagery (Figure 1) was acquired on 12 September 2019. Because the study site is affected by natural factors such as high winds, difficult terrain, and problems with soil, water, and fertilizer transport, maize development varied, and both developing and mature plants were present. In Figure 1c, the typical yellow areas show mature maize, whose growth is better than that of the developing maize in the typical green areas. As shown in Figure 2, maize lodging can be divided into two groups, Lodging S and Lodging R, with Lodging R being more severe. Nonlodging maize includes three classes (Figure 2): maize with green leaves (No-Lodging G), maize with yellow leaves (No-Lodging Y), and maize with yellowish leaves (No-Lodging LY). The growth of No-Lodging LY is better than that of the other two categories, whereas, influenced by the edge effect [17], the growth of No-Lodging Y is the worst.

Figure 1.

(a) The location of Youyi County in China (red area); (b) the location of the study site in Youyi County (red area); and (c) the multispectral imagery of the study site.

Figure 2.

Types of lodging and nonlodging maize.

2.2. Image Collection

This study used a RedEdge 3 camera mounted on a DJI M600 Pro UAV system (SZ DJI Technology Co., Shenzhen, China) to record five-band multispectral images (Table 1) on 12 September 2018 and 10 April 2019. The camera has a resolution of 1280 × 960 pixels and a lens focal length of 5.5 mm. Using DJI GS Pro software (version 1.0, SZ DJI Technology Co., Shenzhen, China), the flight speed was set to 7 m/s and the flight altitude to 110 m above ground level. The forward and lateral overlaps were set to 80% and 79%, respectively, yielding UAV images with a ground sampling distance of 7.5 cm.
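The reported 7.5 cm ground sampling distance can be checked with the pinhole-camera relation GSD = H · p / f (flight altitude H, sensor pixel pitch p, focal length f). The Python sketch below assumes a pixel pitch of 3.75 µm for the RedEdge 3 sensor, which is not stated in the text, and that the 960-pixel side of each image lies along the flight direction; under these assumptions it also derives the photo spacing, flight-line spacing, and trigger interval implied by the overlaps and speed given above. The function name is illustrative.

```python
def ground_sampling_distance(altitude_m, pixel_pitch_m, focal_length_m):
    """Pinhole-camera GSD: ground size of one pixel = altitude * pixel pitch / focal length."""
    return altitude_m * pixel_pitch_m / focal_length_m

ALTITUDE_M = 110.0       # flight altitude above ground level (from the text)
FOCAL_LENGTH_M = 5.5e-3  # RedEdge 3 lens focal length (from the text)
PIXEL_PITCH_M = 3.75e-6  # ASSUMED sensor pixel pitch; not stated in the text

gsd = ground_sampling_distance(ALTITUDE_M, PIXEL_PITCH_M, FOCAL_LENGTH_M)

# ASSUMPTION: the 960-pixel side of the image is oriented along the flight direction
along_track_footprint = 960 * gsd
across_track_footprint = 1280 * gsd
photo_spacing = along_track_footprint * (1 - 0.80)  # 80% forward overlap
line_spacing = across_track_footprint * (1 - 0.79)  # 79% lateral overlap
trigger_interval_s = photo_spacing / 7.0             # flight speed of 7 m/s

print(f"GSD = {gsd * 100:.1f} cm, photo spacing = {photo_spacing:.1f} m, "
      f"line spacing = {line_spacing:.1f} m, trigger every {trigger_interval_s:.1f} s")
```

With the assumed pixel pitch, the computed GSD matches the stated 7.5 cm, which suggests the flight parameters in the text are mutually consistent.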

Table 1.

The band information of the RedEdge 3 camera.

2.3. Data Processing

The agricultural multispectral processing module in Pix4Dmapper 4.4 was used to automatically generate the multispectral imagery and DSM. The basic workflow includes (1) radiometric calibration, (2) structure-from-motion (SFM) processing, (3) spatial correction, and (4) orthoimage and DSM generation. Radiometric calibration was performed for every image using the radiometric calibration images and reflectance values. Using the overlapping images, SFM processing reconstructs the image positions and orientations and generates a densified point cloud through automatic aerial triangulation. Based on four ground control points (manually collected before image acquisition), the three-dimensional point cloud was corrected during the stitching process and subsequently used to construct the orthoimages and DSM.

Based on the multispectral imagery, the study area was divided into 119 blocks using the Create Fishnet tool in ArcGIS 10.2. Of these, 89 blocks were selected as modeling areas and 30 as verification areas. In the modeling areas, 643 lodging and 654 nonlodging maize samples were collected and labeled "1" and "0," respectively. These samples were used for binary logistic regression classification (BLRC), texture information extraction, and feature screening. We also selected 50 samples each of lodging and nonlodging maize from the verification areas to assess the accuracy of all lodging identification results in this study.

2.4. Feature Set Construction

Three SFSs for maize lodging recognition, comprising eight spectral features, 41 texture features, and nine CSFs, were extracted from the multispectral imagery. We then combined these three SFSs in all possible ways to build the MFSs.
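Combining the three SFSs in every non-empty way gives 2^3 − 1 = 7 feature sets (three SFSs and four MFSs), consistent with the seven feature sets mentioned in the Abstract. The Python sketch below enumerates them, assuming that an MFS is simply the union of the features of its member SFSs; the dictionary keys are illustrative labels, and the feature counts come from the text.

```python
from itertools import combinations

SFS_SIZES = {"spectral": 8, "texture": 41, "csf": 9}  # feature counts from Section 2.4

def all_feature_sets(sizes):
    """Every non-empty combination of SFSs with its total feature count; 2+ classes form an MFS."""
    names = list(sizes)
    sets = []
    for r in range(1, len(names) + 1):
        for combo in combinations(names, r):
            sets.append((combo, sum(sizes[n] for n in combo)))
    return sets

for combo, n_features in all_feature_sets(SFS_SIZES):
    kind = "SFS" if len(combo) == 1 else "MFS"
    print(f"{kind}: {' + '.join(combo)} -> {n_features} features")
```

The largest set, which combines all three SFSs, has 58 features, which illustrates why feature screening becomes necessary for the MFSs.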

2.4.1. Spectral Feature Set

In general, the spectral reflectance of vegetation changes after lodging. To take full advantage of this change, five multispectral image bands were added to the spectral feature set. The VI quantitatively reflects the growing situation of vegetation and the vegetation coverage [18,19]. In this study, three types of VI (see Table 2) were derived to expand the difference between lodging and nonlodging areas. Based on the above image features, a spectral feature set was constructed to identify maize lodging.
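Many VIs are normalized differences of two bands. As a hedged illustration only (the three VIs actually used are listed in Table 2), the NumPy sketch below computes a generic normalized-difference index and applies it NDVI-style to near-infrared and red reflectance; the reflectance values are made up and the function name is hypothetical.

```python
import numpy as np

def normalized_difference(a, b):
    """Generic normalized-difference index (a - b) / (a + b); pixels with a + b == 0 are set to 0."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    denom = a + b
    return np.divide(a - b, denom, out=np.zeros_like(denom), where=denom != 0)

nir = np.array([0.45, 0.30])  # hypothetical near-infrared reflectance
red = np.array([0.05, 0.10])  # hypothetical red reflectance
ndvi = normalized_difference(nir, red)  # approximately [0.8, 0.5]
```

Because such indices are ratios, they partly cancel illumination differences between pixels, which is one reason they are popular for comparing lodging and nonlodging canopies.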

Table 2.

The calculation formula and implication of the vegetation index (VI).

2.4.2. Canopy Structure Feature Set

Based on the multispectral images of lodging maize and bare land collected by the UAV, we constructed two DSMs of the study site. The DSM of bare land, which is not affected by maize height, can be considered the digital elevation model (DEM) of the study area. The DEM was subtracted from the DSM of the lodging maize to generate a canopy height model (CHM), which was used to calculate nine CSFs (Table 3).
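A minimal NumPy sketch of the CHM step, assuming the two DSMs are already co-registered on the same grid. Clipping negative heights (from matching noise) to zero and the four statistics in `example_csfs` are illustrative assumptions; the nine CSFs actually used are those defined in Table 3, and both function names are hypothetical.

```python
import numpy as np

def canopy_height_model(dsm, dem):
    """CHM = crop-surface DSM minus bare-ground DEM; negative heights are clipped to 0 (assumption)."""
    return np.clip(np.asarray(dsm, float) - np.asarray(dem, float), 0.0, None)

def example_csfs(chm):
    """HYPOTHETICAL canopy structure statistics over an area; not the paper's Table 3 definitions."""
    heights = np.asarray(chm, float).ravel()
    return {
        "mean": float(heights.mean()),
        "max": float(heights.max()),
        "std": float(heights.std()),
        "p90": float(np.percentile(heights, 90)),
    }

dsm = np.array([[102.5, 101.0], [100.2, 99.9]])  # made-up surface elevations (m)
dem = np.full((2, 2), 100.0)                     # made-up bare-ground elevations (m)
chm = canopy_height_model(dsm, dem)
```

Lodged maize lowers the canopy surface, so height statistics such as these separate lodging from upright maize largely independently of leaf color.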

Table 3.

The calculation formula and implication of canopy structure features (CSFs).

2.4.3. Texture Feature Set

Texture features can express the pattern, size, and shape of objects by measuring the spatial adjacency and frequency of gray-tone changes between pixels [33]. Texture features can also quantitatively express the spatial heterogeneity of remote sensing images [34]. Texture calculation approaches are usually divided into four types: structural, statistical, model-based, and transformation approaches. Statistical approaches are currently widely used in lodging recognition research and yield highly accurate lodging monitoring results; they have also proved superior to structural and transformation approaches. Therefore, two statistical measures, the gray-level cooccurrence matrix (GLCM) and the gray-level difference matrix (GLDM), were selected to calculate the texture features of the remote sensing images. GLCMs and GLDMs have been broadly used to quantitatively describe texture features for image classification and parameter inversion [35].

To fully describe the details of lodging and nonlodging maize in the image, we analyzed as many types of GLCM and GLDM texture features as possible. Eight GLCM texture measures [36] (mean, variance, homogeneity, contrast, dissimilarity, entropy, second moment, and correlation) and five GLDM texture measures [37] (data range, mean, entropy, variance, and skewness) were extracted to construct a texture feature set for discriminating between lodging and nonlodging areas. A total of 41 texture measures were obtained from the average reflectance of the red, green, and blue bands, the red-edge band, the near-infrared band, and the CHM. For the CHM, only the mean texture features (GLCM and GLDM) were calculated to reduce data redundancy.
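As a hedged illustration of the GLCM measures, the NumPy sketch below computes a symmetric, normalized co-occurrence matrix for a single window and one pixel offset, then the eight measures using common textbook definitions (entropy uses the natural logarithm). The quantization to 32 gray levels, the offset (1, 0), and all function names are assumptions; ENVI's implementation details may differ, and a texture image is obtained by repeating the calculation in every w × w moving window (17 × 17 for GLCM in this study). As a check on the feature count, 13 measures (8 GLCM + 5 GLDM) on three inputs (RGB average, red-edge, near-infrared) give 39 features, and the two CHM mean textures bring the total to 41.

```python
import numpy as np

def quantize(band, levels=32):
    """Linearly rescale a floating-point band to integer gray levels 0..levels-1 (assumed scheme)."""
    band = np.asarray(band, dtype=float)
    lo, hi = band.min(), band.max()
    span = hi - lo if hi > lo else 1.0
    q = np.floor((band - lo) / span * levels).astype(int)
    return np.clip(q, 0, levels - 1)

def glcm(window, levels, dx=1, dy=0):
    """Symmetric, normalized gray-level co-occurrence matrix of one window for offset (dx, dy)."""
    rows, cols = window.shape
    p = np.zeros((levels, levels))
    for r in range(max(0, -dy), min(rows, rows - dy)):
        for c in range(max(0, -dx), min(cols, cols - dx)):
            i, j = window[r, c], window[r + dy, c + dx]
            p[i, j] += 1
            p[j, i] += 1  # count the pair in both directions
    return p / p.sum()

def glcm_measures(p):
    """The eight GLCM measures listed above, computed from a normalized GLCM p."""
    i, j = np.indices(p.shape)
    mean = np.sum(i * p)
    variance = np.sum(p * (i - mean) ** 2)
    nz = p > 0
    return {
        "mean": mean,
        "variance": variance,
        "homogeneity": np.sum(p / (1.0 + (i - j) ** 2)),
        "contrast": np.sum(p * (i - j) ** 2),
        "dissimilarity": np.sum(p * np.abs(i - j)),
        "entropy": -np.sum(p[nz] * np.log(p[nz])),
        "second_moment": np.sum(p ** 2),
        # for a symmetric GLCM the row and column means and variances coincide
        "correlation": np.sum(p * (i - mean) * (j - mean)) / variance if variance > 0 else 0.0,
    }
```

A perfectly uniform window yields zero contrast and a second moment of 1, while a checkerboard yields maximal contrast and a correlation of −1, which is the kind of contrast between smooth and disturbed canopy that the texture features exploit.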

To speed up processing, the texture features extracted from the CHM were not used when determining the optimal texture window size. Fifteen sliding-window sizes were tested on the texture feature set without the CHM mean texture features: 3 × 3, 5 × 5, 7 × 7, 9 × 9, 11 × 11, 13 × 13, 15 × 15, 17 × 17, 19 × 19, 21 × 21, 31 × 31, 41 × 41, 51 × 51, 61 × 61, and 71 × 71. Using maximum likelihood classification (MLC), we analyzed how the Kappa coefficient and overall accuracy varied with window size. The difference index (DI) was then calculated for the texture features at the window sizes with the highest classification accuracy, and the window with the minimum DI value was adopted as the optimal texture window size for maize lodging extraction. To analyze the suitability of the selected optimal window, the areas of single lodging and nonlodging maize plants in the multispectral images were measured as 1.55 m² and 0.17 m², respectively. In addition, the area of small-scale maize lodging was measured as 2.21 m².
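Assuming the texture images are computed at the native 7.5 cm GSD from Section 2.2, the ground footprint of each tested window can be compared directly with the measured areas. The short Python sketch below (function name hypothetical) does this arithmetic: a 17 × 17 window covers about 1.63 m², close to the 1.55 m² of a single lodged plant, and a 21 × 21 window covers about 2.48 m², slightly larger than the 2.21 m² small-scale lodging patch.

```python
GSD_M = 0.075  # ground sampling distance reported in Section 2.2 (7.5 cm)
WINDOW_SIZES = [3, 5, 7, 9, 11, 13, 15, 17, 19, 21, 31, 41, 51, 61, 71]

def window_footprint_m2(size, gsd=GSD_M):
    """Ground area covered by a size x size pixel window, assuming square pixels at the native GSD."""
    side = size * gsd
    return side * side

for w in WINDOW_SIZES:
    print(f"{w:>2} x {w:<2}: {window_footprint_m2(w):6.2f} m^2")
```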

The formula for DI calculation is as follows:

where SD1 and SD2 are the standard deviations of the lodging and nonlodging samples, respectively, and MN1 and MN2 are the means of the lodging and nonlodging samples, respectively.

2.5. Feature Screening

2.5.1. Feature Screening Based on Akaike Information Criterion (AIC) Method

The Akaike information criterion (AIC), an information criterion based on the concept of entropy, can be used to select statistical models by balancing model accuracy (goodness of fit) against model complexity [38,39]. Because AIC is computed from the likelihood of a fitted model, it was combined with a logistic regression model to achieve feature selection in this study. Binary logistic regression also describes the relationships between features and can predict the probability of lodging.

In this study, the independent variables of the binary logistic regression model were the maize lodging recognition features, and the dependent variable was binary, taking a value of 1 for lodging and 0 for nonlodging maize. The model parameters were estimated by the maximum likelihood method. The resulting binary logistic regression model for predicting the occurrence probability of lodging is

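$$P = \frac{1}{1 + \exp\left[-\left(C_0 + C_1X_1 + C_2X_2 + \cdots + C_nX_n\right)\right]}$$

where $X_1, X_2, \ldots, X_n$ are the features to be screened, $C_0$ is the intercept, and $C_1, C_2, \ldots, C_n$ are the regression coefficients.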

The maize samples were divided into a training set and a testing set, and the AIC value of the model was calculated as follows:

$$\mathrm{AIC} = 2k - 2\ln L$$

where $\ln$ stands for the natural logarithm, $L$ is the maximized value of the likelihood function of the logistic regression model (the probability of the observed lodging labels under the fitted model), and $k$ is the number of parameters in the logistic regression model.

When calculating the AIC value of the binary logistic regression model, three-tenths of the 1297 maize samples were randomly selected as the training set, and the remaining samples were used as the testing set. Independent variables were removed from the model one at a time in the direction of decreasing AIC until the AIC value reached its minimum. The features retained in the model with the lowest AIC value were regarded as the optimal features.
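The backward elimination described above can be sketched in Python. This is a minimal NumPy reimplementation under stated assumptions, not the authors' code: the logistic model is fitted by Newton-Raphson (iteratively reweighted least squares), k counts the intercept plus one coefficient per feature, and the 30%/70% train/test split used in the study is left out for brevity. In R, which the study used for the logistic model, this kind of search is commonly run with `step(..., direction = "backward")` on a `glm(..., family = binomial)` fit. The names `fit_logit`, `aic`, and `backward_aic` are hypothetical.

```python
import numpy as np

def fit_logit(X, y, n_iter=50, tol=1e-8):
    """Fit binary logistic regression by Newton-Raphson; return coefficients and log-likelihood."""
    Xc = np.column_stack([np.ones(len(y)), X])  # prepend the intercept column
    beta = np.zeros(Xc.shape[1])
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-Xc @ beta))
        w = p * (1.0 - p)
        hessian = Xc.T @ (Xc * w[:, None])
        step = np.linalg.solve(hessian, Xc.T @ (y - p))
        beta += step
        if np.max(np.abs(step)) < tol:
            break
    p = np.clip(1.0 / (1.0 + np.exp(-Xc @ beta)), 1e-12, 1 - 1e-12)
    loglik = np.sum(y * np.log(p) + (1 - y) * np.log(1 - p))
    return beta, loglik

def aic(X, y):
    """AIC = 2k - 2 ln(L), with k = intercept + one coefficient per feature."""
    _, loglik = fit_logit(X, y)
    k = X.shape[1] + 1
    return 2 * k - 2 * loglik

def backward_aic(X, y, names):
    """Drop one feature at a time while doing so lowers the AIC; return kept names and final AIC."""
    keep = list(range(X.shape[1]))
    best = aic(X[:, keep], y)
    while len(keep) > 1:
        trials = [(aic(X[:, [j for j in keep if j != d]], y), d) for d in keep]
        candidate_aic, drop = min(trials)
        if candidate_aic >= best:
            break  # no single removal lowers the AIC any further
        best = candidate_aic
        keep.remove(drop)
    return [names[j] for j in keep], best
```

With synthetic data in which only the first two features drive lodging, the search should retain those two; a pure-noise feature is occasionally kept, because AIC's penalty of 2 per parameter is a relatively lenient threshold.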

2.5.2. Feature Screening Based on Index Method

Variation coefficients and relative differences are widely used to screen and analyze image features of crop lodging [6,7]. The variation coefficient reflects the dispersion of an image feature within lodging or nonlodging crops, while the relative difference indicates how much an image feature differs between lodging and nonlodging areas. Features suitable for lodging identification should have low variation coefficients and high relative differences.

This study adopts the variation coefficient and relative difference as two evaluation indicators for feature selection. First, the variation coefficient and relative difference of each feature were calculated for the lodging and nonlodging areas, and the ten features with the largest relative difference were selected as candidate predictors for lodging recognition. Among these candidates, features with a variation coefficient below 20.57% were selected as the optimal features to ensure classification stability.

The formulas to calculate the variation coefficient and relative difference are as follows:

where CV denotes the variation coefficient; sd and mn represent the standard deviation and mean of the maize samples, respectively; RD denotes the relative difference; mn1 and mn2 represent the mean values of the lodging and nonlodging maize samples; and ABS denotes the absolute value.
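The two-stage index screening can be sketched in Python. Because the CV and RD formulas are not reproduced above, the sketch rests on assumptions: CV is computed with the sample standard deviation as sd/mn × 100, RD is taken as ABS(mn1 − mn2)/mn2 × 100, and a candidate is kept only if its CV is below 20.57% in both the lodging and the nonlodging samples. Function names are hypothetical.

```python
import numpy as np

def cv_percent(values):
    """Coefficient of variation in percent: sample standard deviation / mean * 100."""
    return np.std(values, ddof=1) / np.mean(values) * 100.0

def rd_percent(lodging, nonlodging):
    """ASSUMED relative difference: |mean_lodging - mean_nonlodging| / mean_nonlodging * 100."""
    m1, m2 = np.mean(lodging), np.mean(nonlodging)
    return abs(m1 - m2) / abs(m2) * 100.0

def index_screen(lodging, nonlodging, names, top_k=10, cv_max=20.57):
    """Keep the top_k features by RD, then drop any whose CV exceeds cv_max in either class."""
    rd = np.array([rd_percent(lodging[:, j], nonlodging[:, j]) for j in range(len(names))])
    top = np.argsort(rd)[::-1][:top_k]
    return [names[j] for j in top
            if cv_percent(lodging[:, j]) < cv_max and cv_percent(nonlodging[:, j]) < cv_max]
```

Each feature is judged on its own here, which illustrates the limitation noted in the Introduction: the index method ignores interactions between features.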

2.6. Lodging Maize Identification

BLRC, MLC, and random forest classification (RFC) were used to identify maize lodging from the image features. The samples used as inputs to the MLC and RFC were randomly collected using the region of interest (ROI) tools provided by ENVI version 5.1. The ROIs were distributed uniformly, which made the samples highly representative of lodging and nonlodging maize across the entire study area. In total, 27 and 28 ROIs were selected for lodging and nonlodging maize, containing 58,819 and 61,747 pixels, respectively. Regarding shadows, following the processing method of a previous study [9], shadows larger than 5.41 m² were assigned to the lodging class to reduce their influence on the classification process.

2.6.1. Binary Logistic Regression Classification (BLRC)

Logistic regression is a generalized linear regression model that can explain the relationships between variables. This model has been extensively applied to classification and regression problems. Because lodging recognition is a binary classification problem in this study, we adopted a binary logistic regression model as a classifier [40].

In this binary logistic regression model, the maize sample values extracted from different image features form the independent variables, and the predicted dependent variable is a function of the probability that maize lodging occurs. Specifically, the model describes the probability that the dependent variable takes the value of 1 (the positive response, i.e., lodging). Following the logistic curve, this probability can be calculated using the following formula [41]:

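$$P = \frac{e^{CX}}{1 + e^{CX}}$$

where P represents the probability of maize lodging (the dependent variable); X indicates the independent variables; and C represents the estimated parameters, so that CX denotes the linear combination $C_0 + C_1X_1 + \cdots + C_kX_k$.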

The logit transformation was performed to linearize the above model and remove the 0/1 bounds of the original dependent variable, which is a probability. The new dependent variable, the log-odds ln[P/(1 − P)], is continuous and unbounded, ranging from −∞ to +∞. After the logit transformation, the above model is expressed by the following equation:

$$\ln\left(\frac{P}{1 - P}\right) = C_0 + C_1X_1 + C_2X_2 + \cdots + C_kX_k$$

where P represents the probability of maize lodging (the dependent variable), X1, X2, X3, …, Xk are the independent variables, and C0, C1, C2, …, Ck represent the estimated parameters.

The binary logistic regression model for predicting maize lodging probability was implemented in the R programming language and trained in RStudio. The program automatically estimated the coefficients of all independent variables from the relationship between the independent and dependent variables. The fitted model was then entered into the "Band Math" module of ENVI to obtain a lodging probability map of the study area. Finally, a uniform optimal probability threshold was applied to all pixels to determine whether they represented lodging.
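The pixel-wise step performed in ENVI's Band Math can be mirrored in NumPy as a sketch: the fitted intercept and coefficients are applied to a stack of feature layers to give a probability map, which is then thresholded. How the study chose its optimal threshold is not described; `best_threshold` here simply maximizes overall accuracy on labelled samples, which is an assumption, and both function names are hypothetical.

```python
import numpy as np

def lodging_probability(features, intercept, coefs):
    """Apply a fitted logit model pixel-wise: P = 1 / (1 + exp(-(C0 + sum_k Ck * Xk)))."""
    # features: array of shape (n_features, rows, cols), one layer per selected image feature
    z = intercept + np.tensordot(coefs, features, axes=1)
    return 1.0 / (1.0 + np.exp(-z))

def best_threshold(prob, labels, candidates=np.linspace(0.05, 0.95, 19)):
    """HYPOTHETICAL rule: pick the cut-off that maximizes overall accuracy on labelled samples."""
    accuracies = [np.mean((prob >= t) == labels) for t in candidates]
    return float(candidates[int(np.argmax(accuracies))])
```

Applying one threshold to every pixel keeps the map consistent across the plot; pixels with probability at or above the threshold are labeled as lodging.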

2.6.2. Maximum Likelihood Classification (MLC)

MLC is a nonlinear discriminant method based on Bayes' rule that is suitable for processing low-dimensional data [42]. MLC assumes that the data of each class are normally distributed, so that the shape of each class cluster can be approximated by a hyperellipsoid decision volume. The mean vector and covariance matrix of each class are calculated from the training samples; the probability that each unknown pixel belongs to each class is then computed, and the pixel is assigned to the class with the maximum probability [43]. In this study, the MLC module in ENVI was used to identify maize lodging. The data scale factor, a major parameter of the classifier, was set to 1.0 in each classification because the UAV images and features used in this study are floating-point data. The UAV image features and ROIs were provided as input data for the MLC to generate the maize lodging recognition results.
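The decision rule can be sketched as a minimal Gaussian maximum likelihood classifier in NumPy. For each class c it scores a pixel x with g_c(x) = −½ ln|Σ_c| − ½ (x − μ_c)ᵀ Σ_c⁻¹ (x − μ_c) and picks the highest score. The sketch assumes equal class priors and no probability threshold and drops the constant shared by all classes; it illustrates the technique, not ENVI's MLC module, and the class name is hypothetical.

```python
import numpy as np

class GaussianMLC:
    """Minimal Gaussian maximum likelihood classifier with equal class priors."""

    def fit(self, X, y):
        self.classes_ = np.unique(y)
        self.params_ = []
        for c in self.classes_:
            Xc = X[y == c]
            mean = Xc.mean(axis=0)
            cov = np.atleast_2d(np.cov(Xc, rowvar=False))  # class covariance from training pixels
            self.params_.append((mean, np.linalg.inv(cov), np.linalg.slogdet(cov)[1]))
        return self

    def predict(self, X):
        scores = []
        for mean, cov_inv, logdet in self.params_:
            d = X - mean
            mahalanobis_sq = np.einsum("ij,jk,ik->i", d, cov_inv, d)  # per-pixel squared distance
            scores.append(-0.5 * (logdet + mahalanobis_sq))
        return self.classes_[np.argmax(np.column_stack(scores), axis=1)]
```

Because each class has its own covariance matrix, the decision boundaries are quadratic, which is why MLC is described as a nonlinear discriminant.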

2.6.3. Random Forest Classification (RFC)

The random forest algorithm is an ensemble learning method based on decision trees that has been applied to both classification and regression analysis of high-dimensional data [44]. Each decision tree is trained on a bootstrap sample of the original training data. To fully explore the variable space, the split at each node is chosen from a randomly selected subset of the input variables. Because of bootstrap sampling, only about two-thirds of the input samples are used to build each tree; the remaining one-third, called out-of-bag (OOB) samples, are used to validate the prediction accuracy. The final prediction is obtained by majority voting over the individual decision trees [45,46,47]. In this study, the RFC program [48] embedded in ENVI was used to identify maize lodging, and two parameters were tuned to generate the optimal RFC model: the number of split nodes and the number of decision trees. The optimal parameters were determined according to the accuracy of the lodging classification results and were re-tuned in each classification to suit the different feature sets.
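The "about two-thirds" figure follows from bootstrap sampling: drawing n samples with replacement leaves any given sample out with probability (1 − 1/n)^n, which approaches 1/e ≈ 0.368 as n grows. The short Python simulation below (a sketch; the sample size, seed, and function name are arbitrary choices) checks this numerically.

```python
import numpy as np

def oob_fraction(n_samples, rng):
    """Share of samples NOT drawn into one bootstrap sample of size n_samples (the out-of-bag share)."""
    draws = rng.integers(0, n_samples, size=n_samples)  # sampling with replacement
    in_bag = np.zeros(n_samples, dtype=bool)
    in_bag[draws] = True
    return 1.0 - in_bag.mean()

rng = np.random.default_rng(42)
frac = oob_fraction(100_000, rng)
print(f"out-of-bag share: {frac:.3f}; theoretical limit 1/e = {np.exp(-1):.3f}")
```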

2.7. Accuracy Assessment of Lodging Detection Results

The Kappa coefficient measures the agreement between the classification results and the reference data beyond the agreement expected by chance [49]. The overall accuracy (OA) is the proportion of correctly classified samples and thus reflects the quantitative quality of the classification result [50]. In this study, both indicators were used to evaluate the maize lodging detection results.
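Both indicators follow directly from a confusion matrix, as in the Python sketch below (function name hypothetical; the counts are illustrative, not results from this study). With the balanced validation set of 50 lodging and 50 nonlodging samples described in Section 2.3, the chance agreement p_e is always 0.5, so κ = 2·OA − 1. This relation is consistent with the paired values reported in Section 3, for example OA 88% with κ 0.76 and OA 85% with κ 0.70.

```python
import numpy as np

def oa_and_kappa(confusion):
    """Overall accuracy and Cohen's kappa from a square confusion matrix (rows = reference, cols = predicted)."""
    cm = np.asarray(confusion, dtype=float)
    n = cm.sum()
    po = np.trace(cm) / n                                  # observed agreement = OA
    pe = np.sum(cm.sum(axis=1) * cm.sum(axis=0)) / n ** 2  # agreement expected by chance
    return po, (po - pe) / (1.0 - pe)

oa, kappa = oa_and_kappa([[44, 6], [6, 44]])  # illustrative counts for 50 + 50 validation samples
```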

Some scholars argue that the Kappa coefficient is flawed and of marginal value for evaluating remote sensing image classification results [51]. However, these views remain largely theoretical and lack sufficient practical evidence, especially for binary classification based on remote sensing images. In general, the main reason for the failure of the confusion matrix and Kappa coefficient is that remote sensing images and features are not independent [52]. In this study, the nonoverlapping ROIs or sample points of lodging and nonlodging maize required for model training were selected before image classification. Consequently, within each image feature, the values of lodging and nonlodging maize were independent and did not influence each other. Supported by numerous remote sensing applications [53,54], this study uses the Kappa coefficient as an evaluation index for lodging recognition results.

2.8. Traditional Crop Lodging Identification Method

The main process of traditional crop lodging identification is as follows. First, different types of image features—color, spectral reflectance, vegetation index, GLCM texture, and canopy structure—are extracted from RGB and multispectral images. Certain image features are selected, and the optimal features are obtained by using the index method or prior knowledge. Finally, the optimal features and manually selected ROIs are input into the supervised classification model to obtain the recognition results of crop lodging in the plot.

There are three main differences between our proposed method and the traditional method: (1) more image features are extracted (previous studies used fewer than 20) to determine whether they are suitable for maize lodging identification in complex field environments; (2) both GLCM and GLDM textures are used for maize lodging recognition, and the optimal GLCM and GLDM texture window sizes are determined; and (3) the AIC method is used to screen the maize lodging identification features.

3. Results and Analysis

3.1. Optimal Window for Texture Features

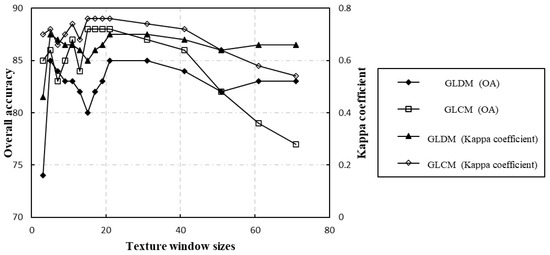

Figure 3 shows how the Kappa coefficient and OA vary with window size for the identification results obtained using GLCM and GLDM textures; for both texture types, the OA and Kappa coefficient clearly follow the same trend. The Kappa coefficient and OA of the GLCM texture are higher than those of the GLDM texture for window sizes from 3 to 51 but become lower for window sizes from 51 to 71. The GLCM texture achieves its highest accuracy (OA of 88% and Kappa coefficient of 0.76) at window sizes of 15, 17, 19, and 21, with DI values of 14.68, 12.69, 14.11, and 13.76, respectively. The GLDM texture achieves its highest accuracy (OA of 85% and Kappa coefficient of 0.70) at window sizes of 7, 21, and 31, with DI values of 56.39, 11.18, and 12.64, respectively. Therefore, the GLCM texture with a window size of 17 and the GLDM texture with a window size of 21, which have the lowest DI values, are adopted for subsequent maize lodging recognition.
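The two-step rule (best accuracy first, then smallest DI) can be written out as a short Python sketch using the values reported above; the tuple layout and the function name are illustrative choices, not part of the original workflow.

```python
# (window size, OA, Kappa, DI) for the windows tied at the highest accuracy in Section 3.1
GLCM_RESULTS = [(15, 0.88, 0.76, 14.68), (17, 0.88, 0.76, 12.69),
                (19, 0.88, 0.76, 14.11), (21, 0.88, 0.76, 13.76)]
GLDM_RESULTS = [(7, 0.85, 0.70, 56.39), (21, 0.85, 0.70, 11.18), (31, 0.85, 0.70, 12.64)]

def optimal_window(results):
    """Keep the windows tied for the best (OA, Kappa), then return the one with the smallest DI."""
    best_accuracy = max((oa, kappa) for _, oa, kappa, _ in results)
    tied = [r for r in results if (r[1], r[2]) == best_accuracy]
    return min(tied, key=lambda r: r[3])[0]

print(optimal_window(GLCM_RESULTS), optimal_window(GLDM_RESULTS))
```

Using DI as a tie-breaker means that, among equally accurate windows, the one whose texture values best separate lodging from nonlodging samples is preferred.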

Figure 3.

The changes in maize lodging recognition accuracy with gray-level cooccurrence matrix (GLCM) and gray-level difference matrix (GLDM) textures under different window sizes.

3.2. Performances of Different Feature Screening Methods

In this paper, two feature sets are established to compare the performance of the AIC and index methods: the GLCM texture features extracted from the blue, green, and red bands (RGB_GLCM) and the GLCM texture features extracted from the blue, green, red, red-edge, and near-infrared bands (All_band_GLCM). Screening RGB_GLCM and All_band_GLCM with the AIC method yielded fourteen and sixteen features, respectively. The optimal texture features obtained with the index method are shown in Table 4.

Table 4.

The selected features using index method-based GLCM texture.

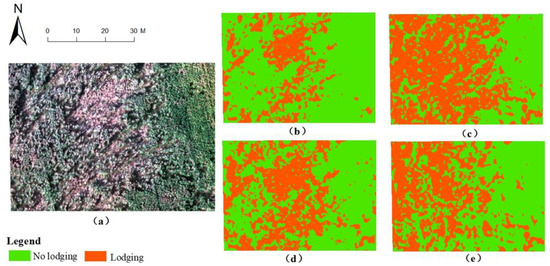

Table 5 and Table 6 show the recognition accuracy of the AIC and index methods under different classification algorithms. The Kappa coefficient and OA of the AIC method are generally higher than those of the index method. With RGB_GLCM, the AIC method estimated maize lodging more accurately than the index method, achieving an average Kappa coefficient of 0.71 and an average OA of 85.67%. In contrast, with All_band_GLCM, the average Kappa coefficient and average OA of the index method fall to 0.15 and 57.67%, respectively, considerably lower than those of the AIC method. Furthermore, the comparison of the AIC and index methods in Figure 4 shows that the index method severely overestimated the lodging area.

Table 5.

The Kappa coefficient of the Akaike information criterion (AIC) and index methods.

Table 6.

The overall accuracy (OA) of AIC and index methods.

Figure 4.

Maps of the distribution of lodging and nonlodging maize based on the AIC and index methods. (a) Part of the multispectral image of the study area; (b) Maize lodging area using AIC method and binary logistic regression classification (BLRC) under RGB_GLCM; (c) Maize lodging area using index method and maximum likelihood classification (MLC) under RGB_GLCM; (d) Maize lodging area using AIC method and MLC under All_band_GLCM; (e) Maize lodging area using index method and MLC under All_band_GLCM.

Tables 5 and 6 show that RGB_GLCM achieves higher classification accuracy than All_band_GLCM under each screening method. During feature selection, we found that although RGB_GLCM is a subset of All_band_GLCM, a given screening method does not select the same features from the two sets. Consequently, when a feature selection method is used, as many candidate feature sets as possible should be constructed to obtain the optimal lodging recognition features.

Table 7 shows the performance of the AIC and index methods in discriminating lodging and nonlodging areas in different regions of the study area under the maximum likelihood classification method. In the green region, the two methods differ only slightly: with RGB_GLCM, the Kappa coefficient and OA of the index method were 0.034 and 1.00% higher, respectively, than those of the AIC method, and with All_band_GLCM, the two methods achieved the same accuracy. In the yellow region, by contrast, the AIC method produced smaller errors than the index method; in particular, with All_band_GLCM, the Kappa coefficient and OA of the AIC method were 0.53 and 16.00% higher, respectively, than those of the index method.

Table 7.

The accuracy assessment of AIC and index methods within different regions.

3.3. Identification Accuracy of Different Screening Methods

Table 8 lists the number of features, the AIC value, and the Kappa coefficient of MLC before and after screening. After the AIC method was applied, the dimensions and AIC values of all feature sets decreased to varying degrees. For the high-dimensional feature sets (more than 40 features), both decreased substantially: the feature dimension fell by 90.25% and the AIC value by 88.44% on average. For the low-dimensional feature sets, the decreases were small: the feature dimension fell by only 31.21% and the AIC value by only 5.83% on average. According to Table 8, the Kappa coefficient of each feature set before screening was, on average, 0.03 higher than that after screening. The set with the highest Kappa coefficient (0.92) was Texture + canopy structure (CS) + Spectral.
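For reference, the standard form of AIC for a model with $k$ estimated parameters and maximized likelihood $\hat{L}$ is given below, together with the relative decrease used to summarize Table 8, where $x$ stands for either the number of features or the AIC value (assuming the percentages are taken relative to the value before screening):

$$
\mathrm{AIC} = 2k - 2\ln\hat{L}, \qquad
\Delta_{\%} = \frac{x_{\mathrm{before}} - x_{\mathrm{after}}}{x_{\mathrm{before}}} \times 100\%
$$

Because each retained feature adds 2 to the penalty term $2k$, dropping a feature lowers AIC whenever doing so reduces $\ln\hat{L}$ by less than 1, which is consistent with the large AIC reductions observed for the high-dimensional feature sets.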

Table 8.

Feature number, AIC value, and Kappa coefficient before and after using AIC method.

For further discussion, the maize lodging features selected from the Texture + CS + Spectral set are as follows: the red band; the GLCM mean and dissimilarity textures of the average reflectance of the red, green, and blue bands; the GLCM mean texture of the CHM; and the GLDM mean texture of the average reflectance of the red, green, and blue bands.
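The selected texture measures can be illustrated with a minimal NumPy sketch of the GLCM mean, GLCM dissimilarity, and GLDM mean computed in a sliding window. The gray-level quantization (16 levels), the single (0, 1) pixel offset, the reflect padding at image edges, and all function names are assumptions for illustration; apart from the window sizes, this section does not state the texture software or parameters used. The GLCM mean is taken here as the sum of i·P(i, j), one common definition.

```python
import numpy as np


def quantize(band: np.ndarray, levels: int = 16) -> np.ndarray:
    """Linearly rescale a band to integer gray levels 0..levels-1 (assumed scheme)."""
    lo, hi = float(band.min()), float(band.max())
    if hi == lo:
        return np.zeros(band.shape, dtype=int)
    return np.rint((band - lo) / (hi - lo) * (levels - 1)).astype(int)


def glcm(patch: np.ndarray, levels: int, offset: tuple[int, int] = (0, 1)) -> np.ndarray:
    """Symmetric, normalized gray-level co-occurrence matrix for one offset.

    Offsets must be non-negative (dr, dc); (0, 1) pairs each pixel with its right neighbor.
    """
    dr, dc = offset
    rows, cols = patch.shape
    a = patch[: rows - dr, : cols - dc].ravel()  # reference pixels
    b = patch[dr:, dc:].ravel()                  # neighbor pixels
    p = np.zeros((levels, levels), dtype=float)
    np.add.at(p, (a, b), 1.0)                    # count each (i, j) pair
    p = p + p.T                                  # make the matrix symmetric
    return p / p.sum()


def glcm_mean_dissimilarity(p: np.ndarray) -> tuple[float, float]:
    """GLCM mean (sum of i * P(i, j)) and dissimilarity (sum of |i - j| * P(i, j))."""
    i, j = np.indices(p.shape)
    return float(np.sum(i * p)), float(np.sum(np.abs(i - j) * p))


def gldm_mean(patch: np.ndarray, offset: tuple[int, int] = (0, 1)) -> float:
    """Mean of the gray-level difference histogram for one non-negative offset."""
    dr, dc = offset
    rows, cols = patch.shape
    diff = np.abs(patch[: rows - dr, : cols - dc] - patch[dr:, dc:]).ravel()
    hist = np.bincount(diff) / diff.size         # probability of each difference k
    return float(np.sum(np.arange(hist.size) * hist))


def texture_stack(band: np.ndarray, window: int, levels: int = 16) -> np.ndarray:
    """Per-pixel [GLCM mean, GLCM dissimilarity, GLDM mean] in an odd-sized sliding window."""
    q = quantize(band, levels)
    half = window // 2
    padded = np.pad(q, half, mode="reflect")     # keep the output the same size
    out = np.zeros(band.shape + (3,))
    for r in range(band.shape[0]):
        for c in range(band.shape[1]):
            patch = padded[r : r + window, c : c + window]
            out[r, c, :2] = glcm_mean_dissimilarity(glcm(patch, levels))
            out[r, c, 2] = gldm_mean(patch)
    return out


# Hypothetical usage: textures of the mean of three synthetic R, G, B bands.
rng = np.random.default_rng(0)
rgb = rng.random((3, 40, 40))
features = texture_stack(rgb.mean(axis=0), window=17)
print(features.shape)  # (40, 40, 3)
```

In practice, the GLCM and GLDM textures would be computed with their own optimal windows (17 and 21, respectively), and the same functions apply unchanged to the CHM.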

3.4. Maize Lodging Identification Result

Table 9 gives the validation results of the different classification methods based on the features screened by the AIC method. For both MLC and RFC, the Texture + CS + Spectral set performed best among the feature sets, and MLC achieved higher accuracy with this set (Kappa coefficient = 0.92, OA = 96.00%) than RFC did. As shown in Figure 5b, RFC misses more maize lodging pixels in the UAV image. BLRC combined with the CS feature set also produced a favorable result (Kappa coefficient = 0.86, OA = 93.00%; Figure 5c). Overall, the combination of MLC and Texture + CS + Spectral yielded the best lodging detection result (Table 9). Compared with the other feature sets, Texture + CS + Spectral had the highest average Kappa coefficient and average OA and is therefore the most suitable for accurately extracting the lodging area.
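The accuracy measures used throughout this section follow the standard definitions: OA is the proportion of correctly classified validation samples, and the Kappa coefficient rescales OA by the agreement expected by chance. A minimal sketch computing both from a confusion matrix; the function name is hypothetical, and the 2 × 2 matrix in the usage example is invented for illustration and is not the validation data of this study.

```python
import numpy as np


def overall_accuracy_and_kappa(confusion: np.ndarray) -> tuple[float, float]:
    """OA and Cohen's Kappa coefficient from a square confusion matrix.

    Rows are reference classes and columns are predicted classes; both
    measures are unchanged if the matrix is transposed.
    """
    cm = np.asarray(confusion, dtype=float)
    n = cm.sum()
    p_o = np.trace(cm) / n                                # observed agreement = OA
    p_e = np.sum(cm.sum(axis=0) * cm.sum(axis=1)) / n**2  # agreement expected by chance
    kappa = (p_o - p_e) / (1.0 - p_e)
    return float(p_o), float(kappa)


# Hypothetical validation counts for [lodging, nonlodging].
cm = np.array([[48, 2], [2, 48]])
oa, kappa = overall_accuracy_and_kappa(cm)
print(f"OA = {oa:.2%}, Kappa = {kappa:.2f}")  # OA = 96.00%, Kappa = 0.92
```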

Table 9.

Lodging identification accuracy under different classification methods and various feature sets.

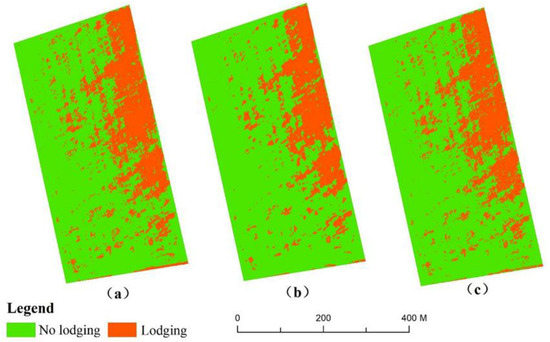

Figure 5.

The lodging identification results with the highest accuracies under MLC, BLRC, and random forest classification (RFC): (a) MLC combined with Texture + CS + Spectral; (b) RFC combined with Texture + CS + Spectral; (c) BLRC combined with CS.

Among the SFS in Table 9, CS had the best validation results (average Kappa coefficient = 0.74, average OA = 87.00%), whereas among the MFS, Texture + Spectral had clearly the lowest Kappa coefficient and OA (Table 9). These results show that CS provides strong support for the accurate extraction of maize lodging in both SFS and MFS.

4. Discussion

In this study, nine CSFs, 41 texture features, and eight spectral features of maize in the study area were extracted from UAV multispectral images and the CHM. These different types of image features describe the differences between lodging and nonlodging areas from different aspects and complement one another during classification. Therefore, we constructed all possible feature sets (SFS and MFS) from these features as candidate inputs for identifying lodging maize. After these feature sets were screened with the AIC method, MLC, BLRC, and RFC were used to identify maize lodging, and the optimal recognition result was determined from the validation results. The results show that the AIC method is effective for discriminating between lodging and nonlodging maize areas, and the study offers a novel method for monitoring maize lodging.
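Constructing "all possible feature sets" from the three feature groups amounts to taking every non-empty combination of Texture, CS, and Spectral, which gives 2^3 − 1 = 7 sets (3 SFS and 4 MFS), matching the seven feature sets mentioned in the abstract. A minimal sketch; the function name is hypothetical, and in practice the individual features of each group (nine CSFs, 41 texture features, eight spectral features) would be concatenated per set before AIC screening.

```python
from itertools import combinations

FEATURE_GROUPS = ("Texture", "CS", "Spectral")


def build_feature_sets(groups: tuple[str, ...] = FEATURE_GROUPS) -> tuple[list[str], list[str]]:
    """Return (single feature sets, multiple feature sets) as ' + '-joined names."""
    sfs = list(groups)                          # one feature group each
    mfs = [
        " + ".join(combo)
        for k in range(2, len(groups) + 1)      # two or more groups combined
        for combo in combinations(groups, k)
    ]
    return sfs, mfs


sfs, mfs = build_feature_sets()
print(sfs)  # ['Texture', 'CS', 'Spectral']
print(mfs)  # ['Texture + CS', 'Texture + Spectral', 'CS + Spectral', 'Texture + CS + Spectral']
```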

4.1. Optimal Texture Window Size

The accuracy of image classification based on texture measures depends primarily on the texture window size relative to the size of the target object [34]. Accuracy indexes such as the Kappa coefficient and OA are commonly used to determine the optimal texture window size for target recognition in images [55,56]. In this study, however, several window sizes tied for the highest accuracy under these indexes (Figure 3). Because higher separability between the image features of two objects generally makes them more likely to be correctly classified, we used DI in addition to the Kappa coefficient and OA to determine the optimal window sizes of the GLCM and GLDM textures (17 and 21, respectively). With a UAV image spatial resolution of 7.5 cm, the ground areas covered by the 17 × 17 and 21 × 21 windows are 1.59 m² and 2.40 m², respectively. The former (1.59 m²) is close to the measured area (1.55 m²) of a single lodging maize plant in the orthographic multispectral image, while the latter (2.40 m²) differs greatly from the measured area (0.17 m²) of a single nonlodging maize plant but is close to the area of small-scale lodging patches. Therefore, the optimal window sizes in this study describe the texture of both single lodging plants and small-scale lodging areas well, which helps avoid missing lodging areas.

As shown in Figure 3, the accuracy of the GLDM texture increases sharply as the window size grows from 3 to 5 and decreases slowly from 5 to 7. This is mainly because the ground area of a 5 × 5 window (0.14 m²) is closer to the measured area of a single nonlodging maize plant (0.17 m²) than the areas of the 3 × 3 and 7 × 7 windows are, so the GLDM texture with a window size of 5 maintains good separability between lodging and nonlodging maize. In addition, the randomness of sample selection may cause large fluctuations in the accuracy curve.

4.2. Generalizability of the AIC Method in Selecting Lodging Features

The index method [7,15,16], the conventional approach to selecting features for identifying lodging crops, cannot directly determine the optimal number of features. In contrast, the AIC method quickly determines the optimal number of features and also yields higher recognition accuracy (Tables 5–7). The AIC method maintains high recognition accuracy even in two maize regions at different growth stages (Table 7), indicating good generalizability. Because of the complexity of the plot environment in the study area, we used multiple features to identify maize lodging. However, the screening criterion of the index method evaluates whether each feature individually is suitable for lodging identification and ignores interactions among features. The AIC method, in contrast, models multiple features jointly in a logistic regression model, which favors complementary features. Therefore, the AIC method is generally more accurate than the index method.
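The contrast drawn above, scoring features jointly through a logistic regression model rather than one at a time, can be made concrete with a forward stepwise selection that minimizes AIC. This is a minimal sketch under stated assumptions: the search direction (forward), the Newton-Raphson fit with a tiny diagonal stabilizer, and all function names are illustrative choices, not necessarily the procedure described in the Methods. The usage example uses synthetic data in which only two of five features are informative.

```python
import numpy as np


def fit_logistic(X: np.ndarray, y: np.ndarray, n_iter: int = 50) -> float:
    """Fit binary logistic regression by Newton-Raphson; return the maximized log-likelihood."""
    Xd = np.column_stack([np.ones(len(y)), X])      # prepend an intercept column
    beta = np.zeros(Xd.shape[1])
    for _ in range(n_iter):
        eta = Xd @ beta
        p = 0.5 * (1.0 + np.tanh(0.5 * eta))        # numerically stable sigmoid
        w = p * (1.0 - p)
        grad = Xd.T @ (y - p)
        hess = Xd.T @ (Xd * w[:, None]) + 1e-9 * np.eye(Xd.shape[1])
        step = np.linalg.solve(hess, grad)
        beta += step
        if np.max(np.abs(step)) < 1e-10:
            break
    eta = Xd @ beta
    return float(np.sum(y * eta - np.logaddexp(0.0, eta)))  # sum of y*eta - ln(1 + e^eta)


def aic(X: np.ndarray, y: np.ndarray) -> float:
    """AIC = 2k - 2 ln L, with k = number of features + 1 (intercept)."""
    k = X.shape[1] + 1
    return 2.0 * k - 2.0 * fit_logistic(X, y)


def forward_aic_selection(X: np.ndarray, y: np.ndarray, names: list[str]) -> tuple[list[str], float]:
    """Greedily add the feature that lowers AIC most; stop when no feature lowers it."""
    selected: list[int] = []
    remaining = list(range(X.shape[1]))
    best = aic(X[:, []], y)                         # intercept-only model
    while remaining:
        cand_aic, cand = min((aic(X[:, selected + [j]], y), j) for j in remaining)
        if cand_aic >= best:
            break
        best = cand_aic
        selected.append(cand)
        remaining.remove(cand)
    return [names[j] for j in selected], best


# Synthetic example: only f0 and f2 influence the (hypothetical) lodging label.
rng = np.random.default_rng(42)
X = rng.normal(size=(400, 5))
logit = 1.5 * X[:, 0] - 1.0 * X[:, 2]
y = (rng.random(400) < 1.0 / (1.0 + np.exp(-logit))).astype(float)
chosen, best_aic = forward_aic_selection(X, y, ["f0", "f1", "f2", "f3", "f4"])
print(chosen, round(best_aic, 1))
```

Because every candidate is evaluated together with the features already selected, a feature that is redundant with an earlier choice barely raises the likelihood and is rejected by the 2k penalty, which is the interaction effect that a per-feature index cannot capture.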

At present, UAV images are used to identify lodging crops mainly during the vigorous growth period [12,57]; consequently, the feature selection methods developed in those studies can be strongly affected by other growth periods and field environments. The study area in this study, by contrast, contains both lodging and nonlodging maize at different growth stages. Therefore, the AIC method is expected to be applicable to a wide range of situations (e.g., different varieties, growth periods, and leaf colors). In summary, the AIC screening method is more effective for identifying lodging maize in complex field environments or in plots with different fertilization rates, varieties, maturity dates, and sowing periods.

4.3. Suitability of Canopy Structure Feature for Extraction of Maize Lodging in Complex Environments

Texture, spectral, and color features extracted from remote sensing images are frequently used to estimate crop lodging [3,11,13]. In addition to these features, CSFs were calculated from the CHM in this study. Among the SFS, CS had the highest accuracy, with an average Kappa coefficient of 0.74 and an average OA of 87.00% (Table 9). Lodging maize in the study area is dominated by Lodging R. The CSFs of Lodging R and No-Lodging LR differ markedly, whereas their leaf color and spectral reflectance are similar. Therefore, CSFs identify lodging maize more easily than texture and spectral features do. Although both spectral and texture features are effective for assessing crop lodging [1], their recognition accuracy and stability are still affected by crop type and growth period.

Among the MFS, the differences in average Kappa coefficient and OA were largest between Texture + Spectral and Texture + CS + Spectral: 0.19 and 9.33%, respectively (Table 9). This further indicates that CSFs are the main factor improving the accuracy of maize lodging detection. Although CSFs directly reflect the height difference between lodging and nonlodging maize, the heights of the two were sometimes similar because of the spatial heterogeneity of soil and terrain. To compensate for this weakness and accurately distinguish lodging from nonlodging areas, CSFs must be combined with other image features such as texture and multispectral reflectance.

4.4. Comparison of Optimal Classification Results under Different Classification Algorithms

For MLC, RFC, and BLRC, the most accurate feature sets were Texture + CS + Spectral, Texture + CS + Spectral, and CS, respectively (Table 9). With the same feature set, MLC was more accurate than RFC, probably because MLC is better suited than RFC to classifying low-dimensional data such as the AIC-screened feature sets. BLRC is well suited to binary classification problems, such as separating a target object from the background in imagery. However, both the lodging and nonlodging maize in this study take multiple forms (Figure 2). Therefore, although BLRC combined with CSFs produced high-precision lodging recognition results, its accuracy was still lower than that of MLC.
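For readers comparing the classifiers, a Gaussian maximum likelihood classifier assigns each pixel to the class whose multivariate normal density, fitted to that class's training samples, is highest. A minimal NumPy sketch, assuming equal class priors and adding a small ridge to each covariance for numerical stability; the class name and the synthetic two-class data are hypothetical, and this section does not state which MLC implementation was used.

```python
import numpy as np


class GaussianMLC:
    """Maximum likelihood classifier: one multivariate Gaussian per class, equal priors."""

    def fit(self, X: np.ndarray, y: np.ndarray) -> "GaussianMLC":
        self.classes_ = np.unique(y)
        self.params_ = []
        for c in self.classes_:
            Xc = X[y == c]
            mean = Xc.mean(axis=0)
            # Small ridge keeps the covariance invertible (an assumption, not from the paper).
            cov = np.atleast_2d(np.cov(Xc, rowvar=False)) + 1e-6 * np.eye(X.shape[1])
            _, logdet = np.linalg.slogdet(cov)
            self.params_.append((mean, np.linalg.inv(cov), logdet))
        return self

    def log_likelihoods(self, X: np.ndarray) -> np.ndarray:
        """Gaussian log-density per class, up to a constant shared by all classes."""
        scores = []
        for mean, inv_cov, logdet in self.params_:
            d = X - mean
            mahalanobis = np.einsum("ij,jk,ik->i", d, inv_cov, d)
            scores.append(-0.5 * (logdet + mahalanobis))
        return np.column_stack(scores)

    def predict(self, X: np.ndarray) -> np.ndarray:
        return self.classes_[np.argmax(self.log_likelihoods(X), axis=1)]


# Hypothetical two-feature training data: 1 = lodging, 0 = nonlodging.
rng = np.random.default_rng(1)
lodging = rng.normal(loc=[0.0, 0.0], scale=1.0, size=(200, 2))
nonlodging = rng.normal(loc=[3.0, 3.0], scale=1.0, size=(200, 2))
X = np.vstack([lodging, nonlodging])
y = np.array([1] * 200 + [0] * 200)
model = GaussianMLC().fit(X, y)
print("training accuracy:", np.mean(model.predict(X) == y))
```

The constant term −(d/2) ln 2π is dropped because it is identical for every class and does not change the argmax.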

4.5. Comparison of Traditional and New Methods for Crop Lodging Identification

Compared with traditional crop lodging recognition methods, the new method proposed in this study extracts more image features and therefore describes the differences between lodging and nonlodging crops from more aspects. In addition, it determines a single optimal texture window size that fully expresses the morphological characteristics of crop lodging in the image, which improves the likelihood of identifying lodging correctly. However, extracting a large number of image features requires more computation and time. The AIC method applied in this paper obtains the optimal features quickly and directly while accounting for the relationships among features, and is therefore well suited to identifying crop lodging from multiple image features. Moreover, traditional crop lodging identification methods were developed for homogeneous field environments in which all crops are in the same growth period and grow under similar conditions, whereas the study area contained maize at different growth periods and with different growth statuses. Therefore, compared with traditional methods, the new method is better able to identify crop lodging in complex field environments and is more broadly applicable.

4.6. Analysis of Optimal Feature Set under Different Data Availability

In practice, lodging maize recognition must balance data availability against the accuracy of the results. Therefore, based on the results in Table 9, we analyzed the optimal feature set for maize lodging extraction with the AIC method under different data conditions. Compared with multispectral images, acquiring the CHM requires more labor, materials, and time. To save data collection costs, one can therefore use only the texture and spectral features derived from the multispectral imagery; in this case, the optimal feature set and classification algorithm are Texture + Spectral and MLC, respectively. The Texture + Spectral feature set is suitable when the color difference between leaves and stems is pronounced. When multispectral images are unavailable, the optimal feature set and classification method are CS and BLRC, respectively; the CS feature set is suitable when the canopy structure of lodging and nonlodging maize differs greatly. When both the CHM and multispectral imagery are available, Texture + CS + Spectral with MLC is the most suitable combination for lodging detection. In a complex field environment, the Texture + CS + Spectral feature set accurately discriminates between lodging and nonlodging areas, but its time and economic costs are high, so it is not practical for rapid monitoring of crop lodging.

4.7. Shortcomings and Prospects of the Research

Although the new crop lodging identification method proposed in this paper shows good stability and high accuracy under complicated field conditions, its applicability at the regional scale requires further exploration and verification. When the method is applied to satellite images, its accuracy may decrease for the following reasons: (1) the spatial resolution and image quality of satellite images are lower than those of UAV images; (2) satellite images contain more non-vegetation objects, such as houses, other buildings, and lakes; and (3) detailed CSFs cannot be obtained from satellite images.

Compared with point clouds derived from multispectral camera imagery, three-dimensional point clouds acquired by LiDAR have higher density and accuracy, which would improve the accuracy of CSFs and lodging identification. In the future, UAVs equipped with hyperspectral sensors could be used to acquire hyperspectral images with abundant spectral information, allowing more vegetation index features to be constructed. Moreover, the relationship between background factors and lodging crops should be fully analyzed to determine the main causes of lodging.

5. Summary and Conclusions

In this study, the texture measures, spectral features, and CSFs of maize in the study area were extracted from UAV-acquired multispectral images, and the optimal texture window size for lodging identification was determined. Based on these image features, the performance of the two screening methods was analyzed under the MLC, RFC, and BLRC algorithms. The main outcomes were as follows. (1) The optimal texture window sizes of the GLCM and GLDM textures were 17 and 21, respectively, as determined by the Kappa coefficient, OA, and DI. The area covered by the former (1.59 m²) was close to the measured area (1.55 m²) of a single lodging maize plant in the orthographic multispectral image, while the area covered by the latter (2.40 m²) differed greatly from the measured area (0.17 m²) of a single nonlodging maize plant but was close to the area of small-scale lodging patches. (2) Compared with the index method, the AIC method achieved a higher Kappa coefficient and OA and is therefore more suitable for screening lodging recognition features; in a complex field environment, the AIC method generalizes well when MFS are used. (3) The Texture + CS + Spectral set screened by the AIC method gave the most accurate detection results among all feature sets, and CSFs played an important role in lodging recognition. The Texture + CS + Spectral features, screened by the AIC method and classified by MLC, achieved the highest lodging recognition accuracy, with a Kappa coefficient of 0.92 and an OA of 96.00%. (4) The new crop lodging identification method proposed in this paper can adapt to complex field environments, especially crop fields with different growth periods. (5) In practice, researchers can first obtain the CSFs of maize and then combine them with the BLRC algorithm to extract crop lodging areas quickly.

Author Contributions

Conceptualization, H.G. and H.L.; methodology, H.G.; software, X.M.; validation, Y.M. and Z.Y.; formal analysis, C.L.; investigation, H.G., Y.B., and Z.Y.; resources, X.Z.; data curation, Y.B.; writing—original draft preparation, H.G.; writing—review and editing, H.G.; visualization, H.G.; supervision, H.L.; project administration, H.L.; funding acquisition, H.L. and X.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by the National Natural Science Foundation of China (41671438) and the “Academic Backbone” Project of Northeast Agricultural University (54935112).

Acknowledgments

We thank Baiwen Jiang for help in selecting the survey fields and Qiang Ye for help in collecting the field data. We are also grateful to the anonymous reviewers for their valuable comments and recommendations.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Chauhan, S.; Darvishzadeh, R.; Boschetti, M.; Pepe, M.; Nelson, A. Remote sensing-based crop lodging assessment: Current status and perspectives. ISPRS J. Photogramm. Remote Sens. 2019, 151, 124–140.

2. Wang, L.Z.; Gu, X.H.; Hu, S.W.; Yang, G.J.; Wang, L.; Fan, Y.B.; Wang, Y.J. Remote sensing monitoring of maize lodging disaster with multi-temporal HJ-1B CCD image. Sci. Agric. Sin. 2016, 49, 4120–4129.

3. Li, Z.N.; Chen, Z.X.; Ren, G.Y.; Li, Z.C.; Wang, X. Estimation of maize lodging area based on Worldview-2 image. Trans. CSAE 2016, 32, 1–5.

4. Yang, H.; Chen, E.X.; Li, Z.Y.; Zhao, C.J.; Yang, G.J.; Pignatti, S.; Casa, R.; Zhao, L. Wheat lodging monitoring using polarimetric index from RADARSAT-2 data. Int. J. Appl. Earth Obs. Geoinf. 2015, 34, 157–166.

5. Chen, J.; Li, H.; Han, Y. Potential of RADARSAT-2 data on identifying sugarcane lodging caused by typhoon. In Proceedings of the Fifth International Conference on Agro-geoinformatics (Agro-Geoinformatics 2016), Tianjin, China, 18–20 July 2016; pp. 36–42.

6. Somers, B.; Asner, G.P.; Tits, L.; Coppin, P. Endmember variability in spectral mixture analysis: A review. Remote Sens. Environ. 2011, 115, 1603–1616.

7. Li, Z.N.; Chen, Z.X.; Wang, L.M.; Liu, J.; Zhou, Q.B. Area extraction of maize lodging based on remote sensing by small unmanned aerial vehicle. Trans. CSAE 2014, 30, 207–213.

8. Yang, M.D.; Tseng, H.H.; Hsu, Y.C.; Tsai, H.P. Semantic segmentation using deep learning with vegetation indices for rice lodging identification in multi-date UAV visible images. Remote Sens. 2020, 12, 633.

9. Zhao, X.; Yuan, Y.T.; Song, M.D.; Ding, Y.; Lin, F.F.; Liang, D.; Zhang, D.Y. Use of unmanned aerial vehicle imagery and deep learning Unet to extract rice lodging. Sensors 2019, 19, 3859.

10. Liu, T.; Li, R.; Zhong, X.; Jiang, M.; Jin, X.; Zhou, P.; Liu, S.; Sun, C.; Guo, W. Estimates of rice lodging using indices derived from UAV visible and thermal infrared images. Agric. For. Meteorol. 2018, 252, 144–154.

11. Zhang, X.L.; Guan, H.X.; Liu, H.J.; Meng, X.T.; Yang, H.X.; Ye, Q.; Yu, W.; Zhang, H.S. Extraction of maize lodging area in mature period based on UAV multispectral image. Trans. CSAE 2019, 35, 98–106.

12. Chu, T.; Starek, M.; Brewer, M.; Murray, S.; Pruter, L. Assessing lodging severity over an experimental maize (Zea mays L.) field using UAS images. Remote Sens. 2017, 9, 923.

13. Yang, M.-D.; Huang, K.-S.; Kuo, Y.-H.; Tsai, H.; Lin, L.-M. Spatial and spectral hybrid image classification for rice lodging assessment through UAV imagery. Remote Sens. 2017, 9, 583.

14. Han, L.; Yang, G.; Feng, H.; Zhou, C.; Yang, H.; Xu, B.; Li, Z.; Yang, X. Quantitative identification of maize lodging-causing feature factors using unmanned aerial vehicle images and a nomogram computation. Remote Sens. 2018, 10, 1528.

15. Wang, J.-J.; Ge, H.; Dai, Q.; Ahmad, I.; Dai, Q.; Zhou, G.; Qin, M.; Gu, C. Unsupervised discrimination between lodged and non-lodged winter wheat: A case study using a low-cost unmanned aerial vehicle. Int. J. Remote Sens. 2018, 39, 2079–2088.

16. Dai, J.G.; Zhang, G.S.; Guo, P.; Zeng, T.J.; Cui, M.; Xue, J.L. Information extraction of cotton lodging based on multi-spectral image from UAV remote sensing. Trans. CSAE 2019, 35, 63–70.

17. Orlowski, G.; Karg, J.; Kaminski, P.; Baszynski, J.; Szady-Grad, M.; Ziomek, K.; Klawe, J.J. Edge effect imprint on elemental traits of plant-invertebrate food web components of oilseed rape fields. Sci. Total Environ. 2019, 687, 1285–1294.

18. Meyer, G.E.; Neto, J.C. Verification of color vegetation indices for automated crop imaging applications. Comput. Electron. Agric. 2008, 63, 282–293.

19. Patel, N.K.; Patnaik, C.; Dutta, S.; Shekh, A.M.; Dave, A.J. Study of crop growth parameters using Airborne Imaging Spectrometer data. Int. J. Remote Sens. 2010, 22, 2401–2411.

20. Hufkens, K.; Friedl, M.; Sonnentag, O.; Braswell, B.H.; Milliman, T.; Richardson, A.D. Linking near-surface and satellite remote sensing measurements of deciduous broadleaf forest phenology. Remote Sens. Environ. 2012, 117, 307–321.

21. Sonnentag, O.; Hufkens, K.; Teshera-Sterne, C.; Young, A.M.; Friedl, M.; Braswell, B.H.; Milliman, T.; O’Keefe, J.; Richardson, A.D. Digital repeat photography for phenological research in forest ecosystems. Agric. For. Meteorol. 2012, 152, 159–177.

22. Guan, S.; Fukami, K.; Matsunaka, H.; Okami, M.; Tanaka, R.; Nakano, H.; Sakai, T.; Nakano, K.; Ohdan, H.; Takahashi, K. Assessing correlation of high-resolution NDVI with fertilizer application level and yield of rice and wheat crops using small UAVs. Remote Sens. 2019, 11, 112.

23. Tucker, C.J. Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 1979, 8, 127–150.

24. Kanke, Y.; Tubaña, B.; Dalen, M.; Harrell, D. Evaluation of red and red-edge reflectance-based vegetation indices for rice biomass and grain yield prediction models in paddy fields. Precision Agric. 2016, 17, 507–530.

25. Bai, G.; Ge, Y.; Hussain, W.; Baenziger, P.S.; Graef, G. A multi-sensor system for high throughput field phenotyping in soybean and wheat breeding. Comput. Electron. Agric. 2016, 128, 181–192.

26. Næsset, E.; Bjerknes, K.-O. Estimating tree heights and number of stems in young forest stands using airborne laser scanner data. Remote Sens. Environ. 2001, 78, 328–340.

27. Li, W.; Niu, Z.; Chen, H.; Li, D.; Wu, M.; Zhao, W. Remote estimation of canopy height and aboveground biomass of maize using high-resolution stereo images from a low-cost unmanned aerial vehicle system. Ecol. Indic. 2016, 67, 637–648.

28. Ciceu, A.; Garcia-Duro, J.; Seceleanu, I.; Badea, O. A generalized nonlinear mixed-effects height-diameter model for Norway spruce in mixed-uneven aged stands. For. Ecol. Manag. 2020, 477, 118507.

29. Wang, L.; Zheng, N.; Gao, S.; Huang, N.; Chen, H. Correlating the horizontal and vertical distribution of LiDAR point clouds with components of biomass in a Picea crassifolia forest. Forests 2014, 5, 1910–1930.

30. Kane, V.; Bakker, J.; McGaughey, R.; Lutz, J.; Gersonde, R.; Franklin, J. Examining conifer canopy structural complexity across forest ages and elevations with LiDAR data. Can. J. For. Res. 2010, 40, 774–787.

31. Li, W.; Niu, Z.; Chen, H.; Li, D. Characterizing canopy structural complexity for the estimation of maize LAI based on ALS data and UAV stereo images. Int. J. Remote Sens. 2016, 38, 2106–2116.

32. Parker, G.; Harmon, M.; Lefsky, M.; Chen, J.; Van Pelt, R.; Weiss, S.; Thomas, S.; Winner, W.; Shaw, D.; Franklin, J. Three-dimensional structure of an old-growth Pseudotsuga-Tsuga canopy and its implications for radiation balance, microclimate, and gas exchange. Ecosystems 2004, 7, 440–453.

33. Coburn, C.; Roberts, A.C. A multiscale texture analysis procedure for improved forest stand classification. Int. J. Remote Sens. 2004, 25, 4287–4308.

34. Feng, Q.; Liu, J.; Gong, J. UAV remote sensing for urban vegetation mapping using random forest and texture analysis. Remote Sens. 2015, 7, 1074–1094.

35. Backes, A.R.; Casanova, D.; Bruno, O.M. Texture analysis and classification: A complex network-based approach. Inf. Sci. 2013, 219, 168–180.

36. Weszka, J.; Dyer, C.; Rosenfeld, A. A comparative study of texture measures for terrain classification. IEEE Trans. Syst. Man Cybern. 1976, 6, 269–285.

37. Conners, R.W.; Harlow, C.A. A theoretical comparison of texture algorithms. IEEE Trans. Pattern Anal. Mach. Intell. 1980, PAMI-2, 204–222.

38. Nishimura, K.; Matsuura, S.; Suzuki, H. Multivariate EWMA control chart based on a variable selection using AIC for multivariate statistical process monitoring. Stat. Probab. Lett. 2015, 104, 7–13.

39. Hughes, A.; King, M. Model selection using AIC in the presence of one-sided information. J. Stat. Plan. Inference 2003, 115, 397–411.

40. Ye, H.; Huang, W.; Huang, S.; Cui, B.; Dong, Y.; Guo, A.; Ren, Y.; Jin, Y. Recognition of banana fusarium wilt based on UAV remote sensing. Remote Sens. 2020, 12, 938.

41. Jokar Arsanjani, J.; Helbich, M.; Kainz, W.; Darvishi Boloorani, A. Integration of logistic regression, Markov chain and cellular automata models to simulate urban expansion. Int. J. Appl. Earth Obs. Geoinf. 2013, 21, 265–275.

42. Sun, J.; Yang, J.; Zhang, C.; Yun, W.; Qu, J. Automatic remotely sensed image classification in a grid environment based on the maximum likelihood method. Math. Comput. Model. 2013, 58, 573–581.

43. Jia, K.; Liang, S.; Zhang, L.; Wei, X.; Yao, Y.; Xie, X. Forest cover classification using Landsat ETM+ data and time series MODIS NDVI data. Int. J. Appl. Earth Obs. Geoinf. 2014, 33, 32–38.

44. Li, J.; Zhong, P.-A.; Yang, M.; Zhu, F.; Chen, J.; Liu, W.; Xu, S. Intelligent identification of effective reservoirs based on the random forest classification model. J. Hydrol. 2020, 591, 125324.

45. Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

46. Bao, Y.; Meng, X.; Ustin, S.; Wang, X.; Zhang, X.; Liu, H.; Tang, H. Vis-SWIR spectral prediction model for soil organic matter with different grouping strategies. Catena 2020, 195, 104703.

47. Meng, X.; Bao, Y.; Liu, J.; Liu, H.; Zhang, X.; Zhang, Y.; Wang, P.; Tang, H.; Kong, F. Regional soil organic carbon prediction model based on a discrete wavelet analysis of hyperspectral satellite data. Int. J. Appl. Earth Obs. Geoinf. 2020, 89, 102111.

48. Van der Linden, S.; Rabe, A.; Held, M.; Jakimow, B.; Leitão, P.; Okujeni, A.; Schwieder, M.; Suess, S.; Hostert, P. The EnMAP-Box: A toolbox and application programming interface for EnMAP data processing. Remote Sens. 2015, 7, 11249.

49. Congalton, R.G. A review of assessing the accuracy of classifications of remotely sensed data. Remote Sens. Environ. 1991, 37, 35–46.

50. Foody, G.M. Classification accuracy comparison: Hypothesis tests and the use of confidence intervals in evaluations of difference, equivalence and non-inferiority. Remote Sens. Environ. 2009, 113, 1658–1663.

51. Pontius, R.; Millones, M. Death to kappa: Birth of quantity disagreement and allocation disagreement for accuracy assessment. Int. J. Remote Sens. 2011, 32, 4407–4429.

52. Foody, G.M. Explaining the unsuitability of the kappa coefficient in the assessment and comparison of the accuracy of thematic maps obtained by image classification. Remote Sens. Environ. 2020, 239, 111630.

53. Potnis, A.; Panchasara, C.; Shetty, A.; Nayak, U.K. Assessing the accuracy of remotely sensed data: Principles and practices. Photogramm. Rec. 2010, 25, 204–205.

54. Warrens, M.J. Category kappas for agreement between fuzzy classifications. Neurocomputing 2016, 194, 385–388.

55. Chen, D.; Stow, D.A.; Gong, P. Examining the effect of spatial resolution and texture window size on classification accuracy: An urban environment case. Int. J. Remote Sens. 2010, 25, 2177–2192.

56. Puissant, A.; Hirsch, J.; Weber, C. The utility of texture analysis to improve per-pixel classification for high to very high spatial resolution imagery. Int. J. Remote Sens. 2005, 26, 733–745.

57. Sun, Q.; Sun, L.; Shu, M.; Gu, X.; Yang, G.; Zhou, L. Monitoring maize lodging grades via unmanned aerial vehicle multispectral image. Plant Phenomics 2019, 2019, 1–16.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).