1. Introduction

Sea ice is a central component of the Arctic cryosphere. It covers the Arctic Ocean on an annual basis and persists throughout the summer months as multiyear sea ice. In a time of rapid climate change, there is demand for local-scale, high-resolution information on Arctic marine conditions (e.g., environmental conditions, sea ice state and dynamics) to support logistical operations, transportation, and sea ice use. From an industrial and transportation perspective, knowledge of the ever-changing state of sea ice is critical for operations planning (e.g., the Environment and Climate Change Canada Regional Ice-Ocean Prediction System [1]), shipping routes, and sustainable development of the North. During the summer season, changing sea ice conditions have altered transportation and usage of Arctic waterways [2]. The reduction of sea ice has led to greater mobility and higher levels of unpredictability and risk [3]. Additionally, changing sea ice characteristics such as thickness, extent, type, and thermodynamic state collectively influence how energy is transferred across the ocean/sea-ice/atmosphere (OSA) boundary. In the Arctic, sea ice influences radiative forcing and contributes to the heat budget of the planet. Therefore, knowledge of the physical and thermodynamic state of sea ice is critical to understanding how climate change is affecting our world.

Satellite-based synthetic aperture radar (SAR) systems can measure the Earth's surface in all weather conditions and in darkness. For these reasons, they are routinely used to monitor the vast regions of the Arctic. Many operational spaceborne SAR systems regularly provide imagery in the microwave C-band (5.405 GHz). Examples include the Canadian RADARSAT-2 and RADARSAT Constellation Mission (RCM) satellites and the European Space Agency's Sentinel-1A and -1B. These systems have high spatial resolution (e.g., pixel spacing below 100 m) and regional coverage (e.g., up to 500 km by 500 km), making them well suited to monitoring large regions. They can operate in various acquisition modes (e.g., single polarization, HH; dual polarization, HH and HV), and different polarization combinations yield distinct images of the region of interest. In simple terms, the processed output product of a satellite SAR is a map of the normalized radar cross-section (NRCS, denoted $\sigma^0$, in dB) that may contain regions of land, open water, and sea ice.

Within each satellite image, the microwave scattering response of sea ice, and therefore the NRCS, is highly dependent on the physical and thermodynamic state of the ice. The properties of sea ice relevant to remote sensing are well described in the literature (e.g., [4]). The physical and thermodynamic state of the sea ice can be expressed through its dielectric properties, which in turn govern the microwave interactions for a given incidence angle and frequency. Briefly, $\sigma^0_{HH}$ (horizontal co-polarization) generally provides information about the state of the sea ice or open water surface, whereas $\sigma^0_{HV}$ (cross-polarization) generally provides information about volume scattering within snow and the sea ice surface, allowing sea ice types (stages of development) to be delineated from open water [4]. Additionally, varying combinations of transmitted and received polarizations provide further information about the composition of a surface cover, which allows expert analysts to classify sea ice more reliably. The ratio of HV to HH (the cross-polarized ratio) adds further contrast between surface covers dominated by surface scattering and those dominated by volume scattering, as determined by their dielectric and structural properties, thereby providing additional information for ice classification.
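The cross-polarized ratio is easiest to handle in decibels, where it becomes a simple difference. The sketch below is a minimal illustration, assuming the inputs are linear backscatter intensities of equal shape; it stacks HH, HV, and the HV/HH ratio into one three-channel sample. Whether the ratio channel in this study was computed in linear units or in dB is not stated here, and the function names are our own.

```python
import numpy as np


def to_db(linear: np.ndarray, floor: float = 1e-10) -> np.ndarray:
    """Convert linear backscatter intensity to decibels, clipping tiny values to avoid log(0)."""
    return 10.0 * np.log10(np.maximum(linear, floor))


def stack_hh_hv_ratio(hh_linear: np.ndarray, hv_linear: np.ndarray) -> np.ndarray:
    """Return a (3, H, W) sample: HH [dB], HV [dB], and the HV/HH ratio [dB].

    In decibels the ratio is a difference: 10*log10(HV/HH) = HV_dB - HH_dB.
    """
    hh_db = to_db(hh_linear)
    hv_db = to_db(hv_linear)
    return np.stack([hh_db, hv_db, hv_db - hh_db], axis=0)


# Toy 2 x 2 patch of linear intensities (illustrative values only)
hh = np.array([[0.1, 0.01], [0.05, 0.2]])
hv = np.array([[0.01, 0.001], [0.005, 0.02]])
sample = stack_hh_hv_ratio(hh, hv)
print(sample.shape)  # (3, 2, 2)
```

Working in dB keeps the three channels on comparable numeric scales, which is usually convenient for neural network inputs.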

Satellite SAR can be used to monitor the seasonal evolution of microwave scattering signatures [5]. Seasonal variations in ice conditions, type, and extent are all observable in time-series SAR imagery. A single SAR image may contain many different ice types as well as regions of open water. The definitions of sea ice types are given by the World Meteorological Organization (WMO) [6]. In the spring melt season, imagery is strongly influenced by liquid water content on sea ice surfaces [7,8]. Open water exhibits high variability as a function of wind speed and fetch. During fall freeze-up, high variability can exist even within a single region, as ice forms under a variety of conditions [9]. Because of the complex nature of SAR imagery, expert analysis is required to evaluate it and to create usable descriptions of sea ice coverage throughout the Arctic.

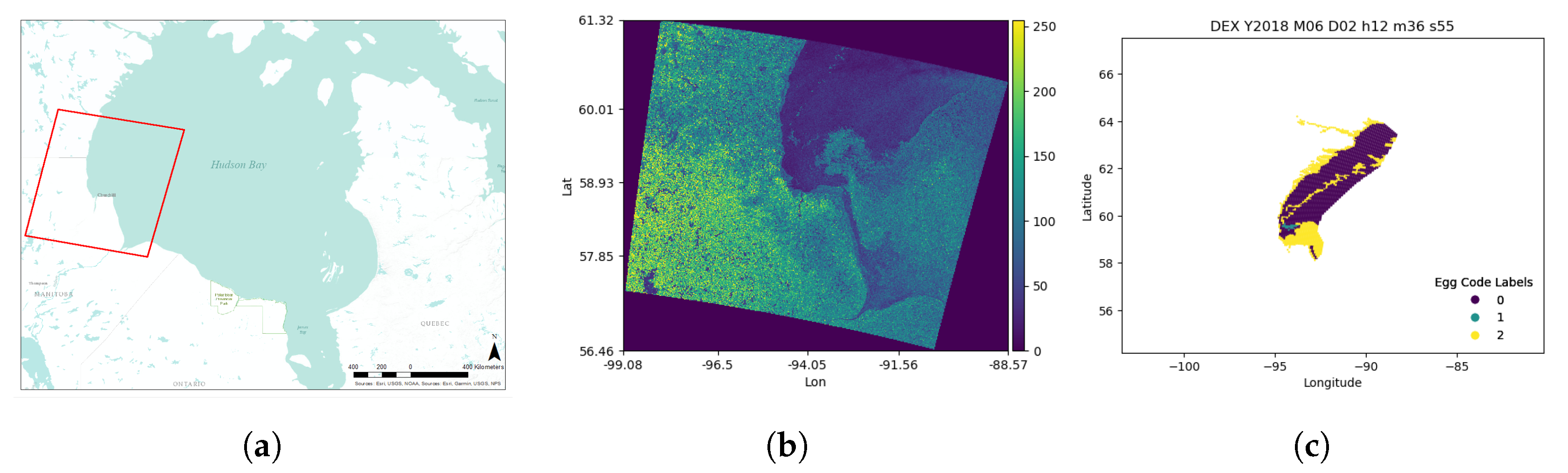

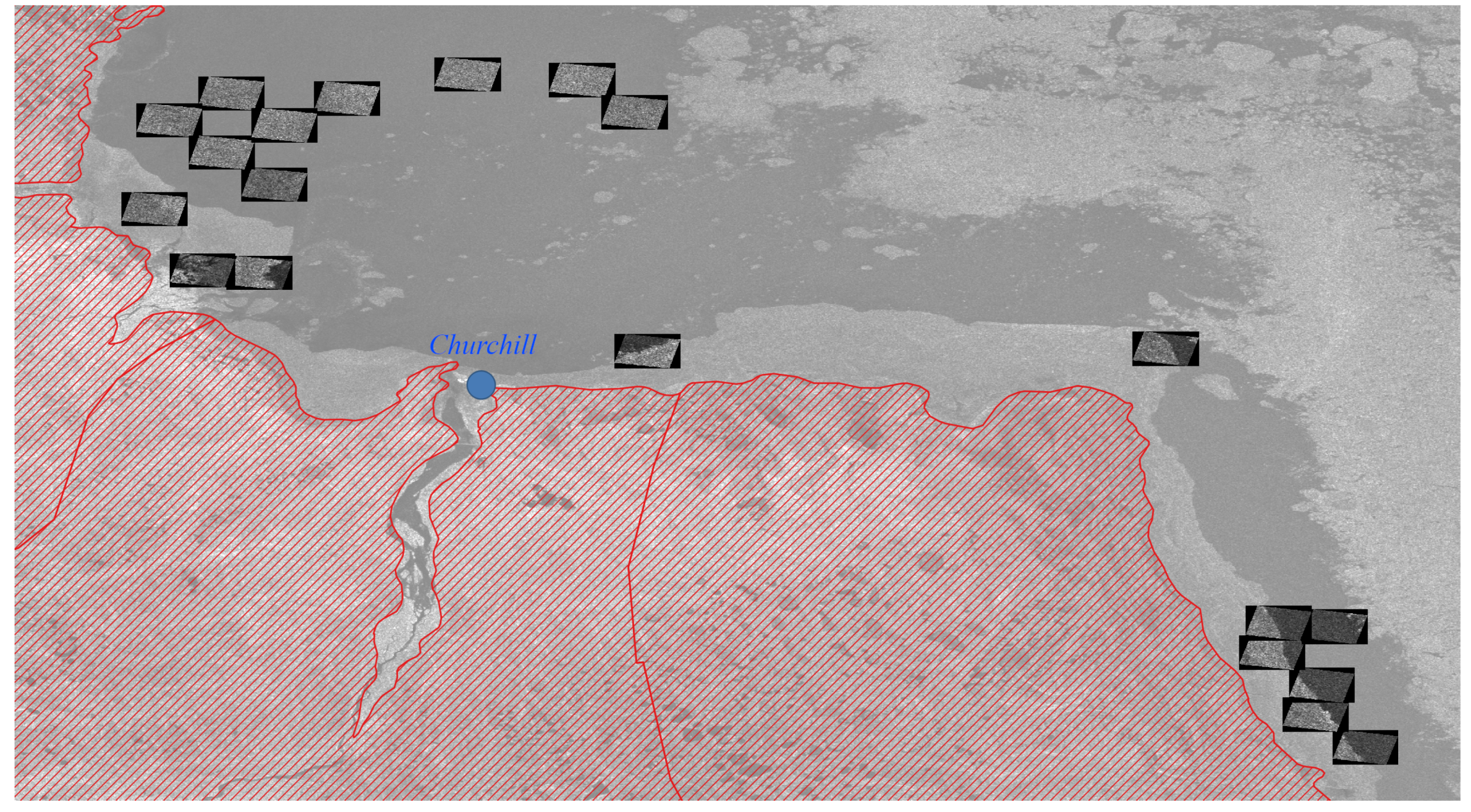

Methods for creating descriptive maps of sea ice variables have been developed by national ice monitoring agencies (e.g., the Canadian Ice Service (CIS) and the United States National Ice Center (USNIC)). Typically, ice analysts interpret SAR images manually using expert knowledge. Automated processes that apply machine learning techniques to these large datasets could increase data throughput and expedite expert analysis. Accurately identifying sea ice concentration and type from SAR data during the spring and summer melt remains challenging because of many-to-one scenarios, in which sea ice with many different geophysical properties can produce the same SAR backscatter.

Previous neural network classification strategies have focused on sea ice concentration; herein, we consider classification by sea ice stage of development (sea ice type). Although thin ice (<50 cm) is less important for shipping and for oil exploration and extraction, it is important for sea ice model forecasts because it is used in the initial conditions for numerical weather prediction and in mapping seasonal melt and freeze-up. More recently, state-of-the-art deep learning techniques have achieved successful results on challenging predictive tasks in other areas, and there is growing interest in applying them to sea ice classification.

While deep learning techniques are relatively new to sea ice classification, the application of machine learning algorithms to the problem has a much longer history. Automated sea ice segmentation (ASIS), developed in 1999 [10], combined image processing, data mining, and machine learning to automate the segmentation of single-polarization SAR images from RADARSAT and the European Remote Sensing (ERS) satellites. The authors used multi-resolution peak detection and aggregated population equalization spatial clustering to segment SAR images as a pre-classification step for subsequent expert classification and analysis. The method identified ice classes in the image well, but encountered difficulties with melting ice conditions in the summer season. Another sea ice classification algorithm, developed in 2005 [11], used the Freeman–Durden decomposition as a seed for an unsupervised Wishart-based segmentation of SAR images. The algorithm was applied to single- and dual-frequency polarimetric data (C- and L-band) with good results. In a more recent publication, land-fast sea ice in Antarctica was mapped using decision tree and random forest machine learning approaches [12], applied to a fusion of time-series AMSR-E radiometer sea ice brightness temperature data, MODIS spectroradiometer ice surface temperature data, and SSM/I ice velocity data. All three studies noted that sea ice during the summer melt season presented the greatest classification challenge because of the increased occurrence of many-to-one scenarios.

Although sea ice classification by type is the topic of this paper, it is useful to review previous research on sea ice concentration estimation using deep learning to gain insight into other methodologies for estimating ice characteristics. A recent study [14] used a neural network called DenseNet [13] to estimate sea ice concentration, training on a dataset of 24 SAR images. The authors labelled their dataset using the ARTIST Sea Ice (ASI) algorithm [15]. They experimented with a variety of factors and found that model accuracy depended on the size of the patches extracted from the SAR images. They addressed over-fitting with data augmentation, increasing the dataset size by adding varying degrees of Gaussian noise. They also developed a novel two-step training technique: a model was first trained with augmented data until it converged to a local minimum, and a new model was then initialized with the weights of the previous model and trained with a smaller learning rate on the dataset without augmentation. The authors hypothesized that injecting noise into the SAR patches removed the relevance of texture information and degraded the results; the second training step improved the results because the relevance of texture information was recovered. However, predicting concentrations for thin new ice remained difficult. Another study [16] used a five-layer convolutional neural network to estimate sea ice concentration from SAR imagery. Its dataset consisted of 11 HH and HV SAR images that were sub-sampled and labelled using CIS charts by extracting 41 × 41 sub-regions centred on the image analysis sample locations given by latitude and longitude. The study found that water samples outnumbered the next most common ice concentration class by a factor of about eight. Several approaches exist to work around such a class imbalance, such as undersampling the majority class, oversampling the minority classes, or using a Bayesian cross-entropy cost function, but the authors chose to train on the skewed dataset for a larger number of epochs (500) to escape a local minimum found in early epochs due to the over-representation of water.
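To illustrate one of the imbalance remedies listed above, the sketch below computes inverse-frequency class weights and a class-weighted cross-entropy in NumPy. It is a minimal sketch of a common weighting scheme, not the cost function used in [16] or in this study; the toy labels mimic an eight-to-one water-to-ice imbalance.

```python
import numpy as np


def inverse_frequency_weights(labels: np.ndarray, n_classes: int) -> np.ndarray:
    """Weight class c by N / (n_classes * count_c) so rare classes contribute more."""
    counts = np.bincount(labels, minlength=n_classes).astype(float)
    counts[counts == 0] = 1.0  # guard against classes absent from this batch
    return labels.size / (n_classes * counts)


def weighted_cross_entropy(probs: np.ndarray, labels: np.ndarray, weights: np.ndarray) -> float:
    """Class-weighted mean negative log-likelihood.

    probs: (N, C) predicted class probabilities; labels: (N,) integer class ids.
    """
    eps = 1e-12  # avoid log(0)
    p_true = probs[np.arange(labels.size), labels]  # probability assigned to the true class
    w = weights[labels]
    return float(np.sum(-w * np.log(p_true + eps)) / np.sum(w))


# Toy labels: eight water samples (class 0) for every ice sample (class 1)
labels = np.array([0] * 8 + [1])
weights = inverse_frequency_weights(labels, n_classes=2)
print(weights)  # water weight 0.5625, ice weight 4.5
uniform = np.full((labels.size, 2), 0.5)
loss = weighted_cross_entropy(uniform, labels, weights)
```

With these weights, each ice sample contributes eight times as much to the loss as each water sample, so the two classes carry equal total weight.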

The main challenge in developing a deep learning classifier for sea ice type is the availability of a large amount of accurately labelled data. Previous studies classifying ice types with neural networks labelled their datasets in a variety of ways. A pulse-coupled neural network (PCNN) was applied to RADARSAT-1 ScanSAR Wide mode images over the Baltic Sea [17]. Therein, classes for supervised training were created by decomposing homogeneous regions in the SAR image into a mixture of Gaussian distributions using an expectation-maximization algorithm. These generated classes were then paired with the associated regions in the SAR image and given to the PCNN for training. The authors of [18] generated classes for their dataset according to the World Meteorological Organization (WMO) terminology [6]. Their dataset consisted of SAR patches along the path of an icebreaker, and the classes were based on in situ observations and other sensor information.
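The expectation-maximization (EM) decomposition used in [17] can be sketched in one dimension as follows. This is a minimal illustration, assuming per-pixel backscatter in dB is the only feature and that means are initialized at data quantiles; the features, number of components, and initialization used in [17] may differ. The synthetic data are for illustration only.

```python
import numpy as np


def gmm_em_1d(x: np.ndarray, k: int, n_iter: int = 200):
    """Fit a k-component 1-D Gaussian mixture to samples x with EM.

    Returns (weights, means, variances). Means start at spread-out quantiles of
    the data, which keeps the sketch deterministic.
    """
    x = np.asarray(x, dtype=float)
    means = np.quantile(x, np.linspace(0.1, 0.9, k))
    variances = np.full(k, x.var())
    weights = np.full(k, 1.0 / k)
    for _ in range(n_iter):
        # E-step: responsibilities r[n, j] = P(component j | x_n), computed in log space
        log_pdf = -0.5 * (np.log(2 * np.pi * variances) + (x[:, None] - means) ** 2 / variances)
        log_r = np.log(weights) + log_pdf
        log_r -= log_r.max(axis=1, keepdims=True)
        r = np.exp(log_r)
        r /= r.sum(axis=1, keepdims=True)
        # M-step: re-estimate mixture weights, means, and variances
        nk = r.sum(axis=0) + 1e-12
        weights = nk / nk.sum()
        means = (r * x[:, None]).sum(axis=0) / nk
        variances = (r * (x[:, None] - means) ** 2).sum(axis=0) / nk + 1e-6
    return weights, means, variances


# Synthetic backscatter (dB) from two hypothetical surface types
rng = np.random.default_rng(1)
data = np.concatenate([rng.normal(-20.0, 1.0, 500), rng.normal(-12.0, 1.0, 500)])
w, m, v = gmm_em_1d(data, k=2)
labels = np.argmax(
    np.log(w) - 0.5 * (np.log(2 * np.pi * v) + (data[:, None] - m) ** 2 / v), axis=1
)  # assign each sample to its most probable component
```

Each fitted component then acts as a candidate class, and the per-sample assignments can be paired with the corresponding image regions for supervised training.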

As an alternative to the labelling methods above, herein we assess the ability of a neural network to predict ice type from SAR data labelled using the stage of development from CIS ice charts. The labelling method is similar to that of the 2016 study by Wang et al. [16], but is applied to the estimation of ice type rather than ice concentration. While ice concentration is an important factor for navigation in the Arctic, our goal in this work is to predict ice stage of development. Knowledge of current ice type conditions can aid decision making during ship routing, because ships can break through regions where the ice concentration is high but the ice is thin. Conversely, it is also important to know where the ice is thick in order to avoid those regions.
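A labelling scheme of this kind pairs each chart sample location with a SAR window centred on it. The sketch below extracts such a window, assuming the latitude and longitude of the sample have already been converted to a pixel (row, col) position (that geolocation step is not shown) and that windows running off the image edge are discarded rather than padded. The default 41 × 41 size follows [16]; for even sizes such as 100, the window is offset by half a pixel.

```python
import numpy as np
from typing import Optional


def extract_centred_patch(image: np.ndarray, row: int, col: int, size: int = 41) -> Optional[np.ndarray]:
    """Return the (C, size, size) window of a (C, H, W) image centred on (row, col).

    Returns None when the window would extend past the image edge, so that
    sample locations near the border are skipped.
    """
    top, left = row - size // 2, col - size // 2
    _, height, width = image.shape
    if top < 0 or left < 0 or top + size > height or left + size > width:
        return None
    return image[:, top:top + size, left:left + size]


# Toy two-channel (HH, HV) image; values encode position so centring can be checked
image = np.arange(2 * 100 * 100, dtype=float).reshape(2, 100, 100)
patch = extract_centred_patch(image, row=50, col=50)
print(patch.shape)                           # (2, 41, 41)
print(extract_centred_patch(image, 10, 50))  # None: too close to the top edge
```

Each returned window would then be paired with the stage-of-development class of the chart polygon containing the sample location.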

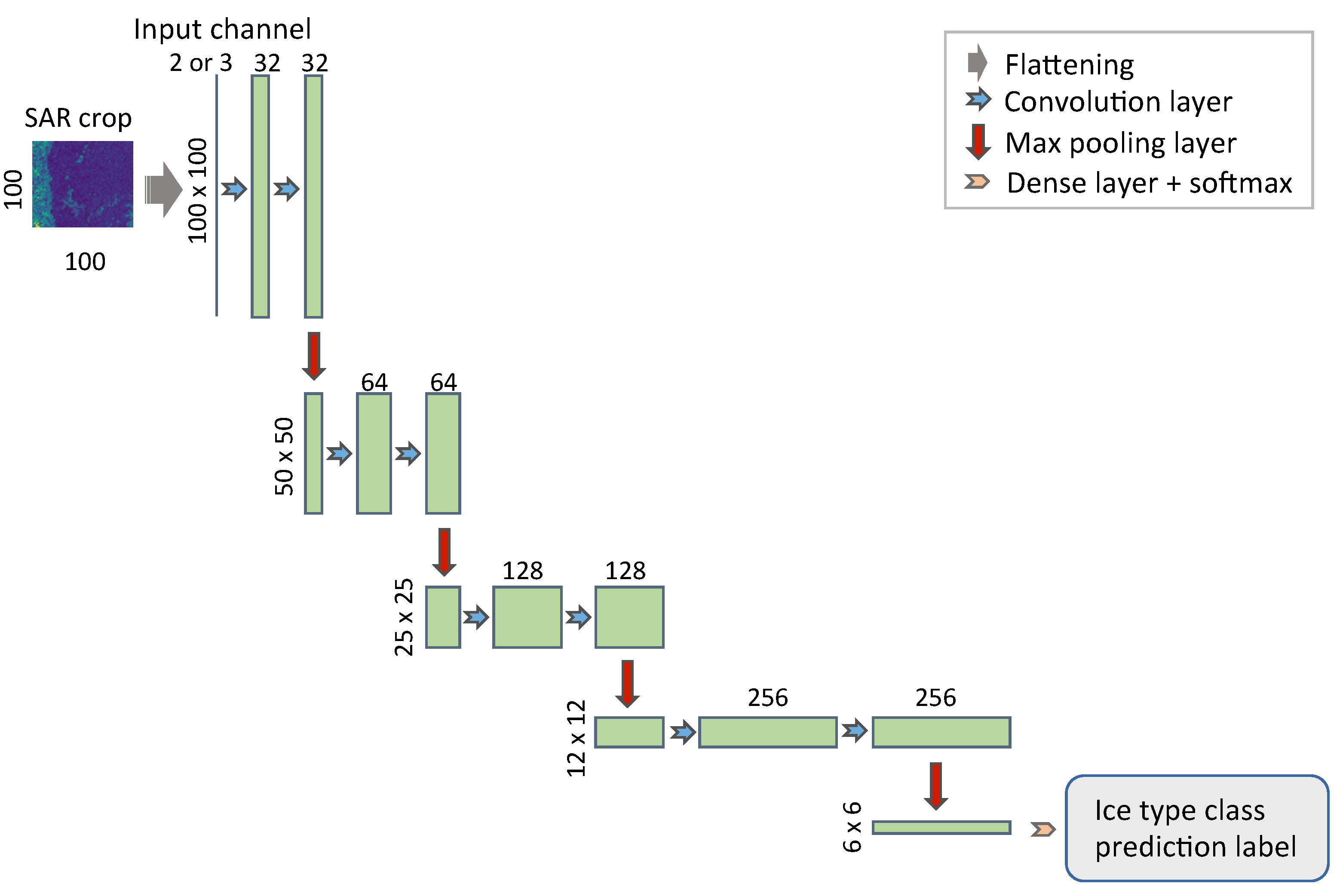

In this paper, we present new algorithms for predicting sea ice stage of development based on deep learning. We develop a methodology in which we create labelled datasets using a combination of SAR images and CIS ice charts. We then compare the predictive performance of two different neural networks. We conclude with an interpretation of our results and suggestions for the next stage of algorithm development.

4. Discussion

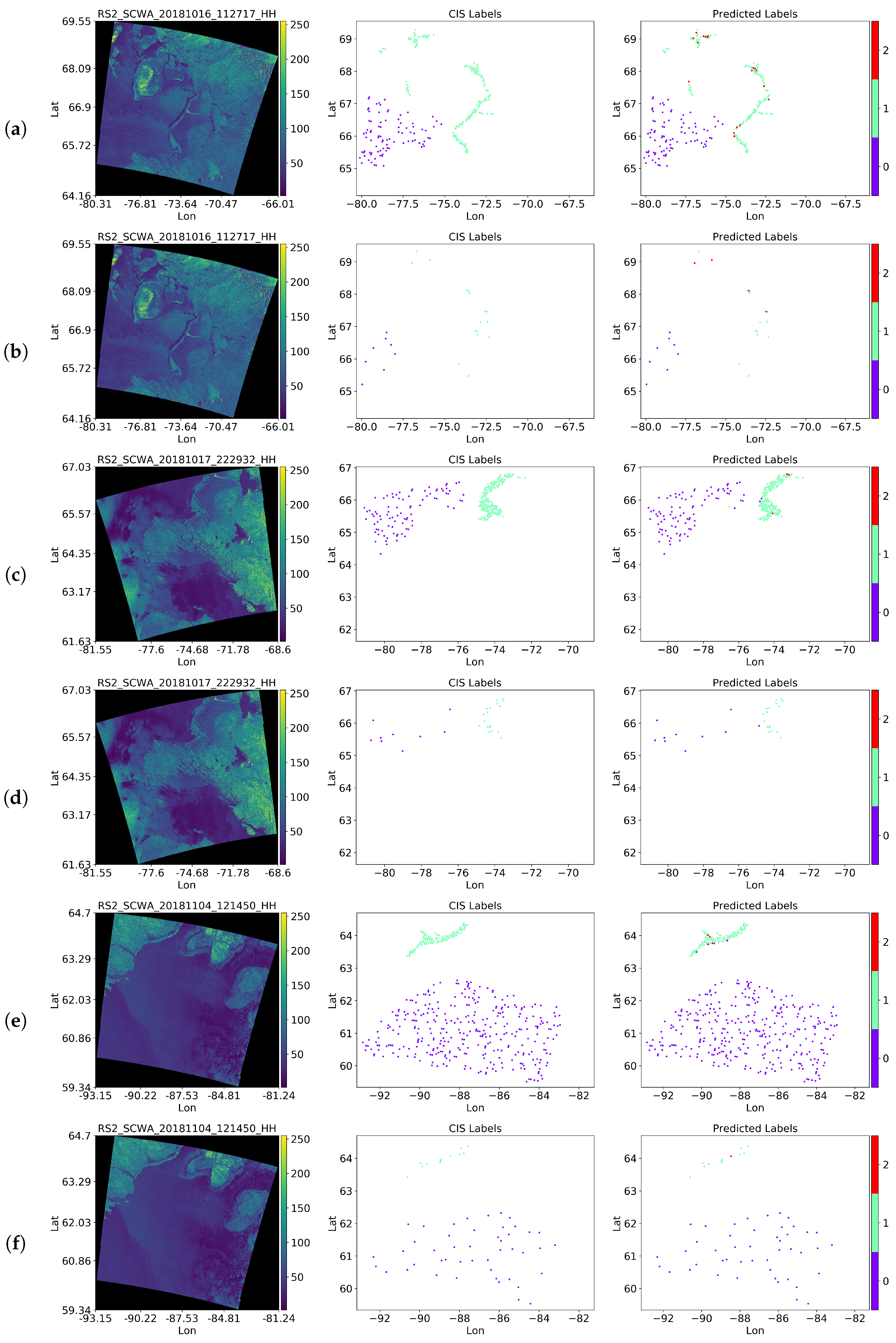

We showed that DenseNet accurately learns from expertly labelled data to classify water and ice in the two class case, as well as water, new ice, and first-year ice in the three class case, using only uncalibrated HH and HV SAR images. The two class classifier provides a good baseline for the three class classifier, as it sets upper-limit performance expectations. DenseNet consistently outperformed the U-Net based model, and input samples with three channels performed better than those with two channels on the training set, but slightly worse on the test set. The visual representation of the predictions demonstrated that sea ice experts could rectify erroneous predictions based on the bulk of correctly classified samples in their proximity. An analysis of per-class accuracy showed that the neural network was more likely to produce erroneous predictions between ice types than between water and ice. No conclusions could be drawn about the accuracy of the model during the summer melt season, primarily because the dataset lacked representative data for all three classes during the month of June. Sea ice classification during the summer melt period has been attempted by others with little success.
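Per-class accuracy of the kind discussed above can be read off a confusion matrix. The sketch below builds one in NumPy for the three class case, using an encoding we assume for illustration (0 = water, 1 = new ice, 2 = first-year ice); the labels are toy values, not results from this study.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, n_classes: int) -> np.ndarray:
    """Rows are true classes, columns are predicted classes."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm


def per_class_accuracy(cm: np.ndarray) -> np.ndarray:
    """Fraction of each true class that was predicted correctly (per-class recall)."""
    return np.diag(cm) / np.maximum(cm.sum(axis=1), 1)


# Toy labels: 0 = water, 1 = new ice, 2 = first-year ice
y_true = [0, 0, 0, 1, 1, 2, 2, 2]
y_pred = [0, 0, 0, 2, 1, 2, 1, 2]
cm = confusion_matrix(y_true, y_pred, n_classes=3)
print(cm)                          # the off-diagonal errors here are all ice-to-ice
print(per_class_accuracy(cm))      # water 1.0, new ice 0.5, first-year ice about 0.67
overall = np.trace(cm) / cm.sum()  # 0.75 for these toy labels
```

Off-diagonal entries in the ice rows and columns, rather than in the water row and column, are what signal confusion between ice types as opposed to confusion between water and ice.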

With the goal of producing accurate, high-resolution maps of Arctic marine conditions for industrial and marine transportation, it is also important to compare our findings with existing systems and other published work, beyond the two class experiments conducted here to benchmark our own results. The study in [17] generated six distributions using the expectation-maximization algorithm, which were used to label its dataset for training. Although [17] used a labelling scheme different from the one presented in this paper, a similar behaviour was observed: the PCNN model used in that study to segment ice types also misclassified some ice types as others, fast ice in particular. Unfortunately, the authors provided no metrics on classification accuracy, so no further comparison with our results can be made. The study in [18] used the WMO terminology to label its dataset into six classes based on in situ observations: smooth, medium-deformed, and deformed first-year ice, young ice, nilas, and open water. Using a neural network with a fusion of three types of data (ERS SAR, RADARSAT SAR, and Meteor visible imagery), the authors achieved a total accuracy of 91.2% across these six classes and noted that adding visible-spectrum images helped discriminate between nilas and open water. While our results cannot be compared directly because of differences in labelling methods and number of classes, the results in [18] showed similar levels of accuracy.

With few available studies using deep learning and CIS-labelled data to classify ice type, we also compare our results with similar work on sea ice concentration. The study presented in [14] used a DenseNet model to predict sea ice concentration from SAR imagery. The training labels in that study were derived from passive microwave data using the ASI algorithm, and the results were also compared with CIS charts. Although this does not compare directly with the results presented herein, the error metrics used to evaluate performance show similar error rates: the test set error in [14] was 7.87%, while the overall error in our results was 5.98%. This suggests that DenseNet can be used effectively in two different applications that share the same input data but produce different outputs, which speaks to the flexibility and generalizability of the deep learning model. Similarly, the study presented in [16] used a convolutional neural network (different from DenseNet and U-Net) to predict sea ice concentration from SAR imagery. Its methodology for generating and labelling the dataset was similar to the one presented here; the only difference lies in the output. Because estimating sea ice concentration is a regression problem, [16] reported performance using the mean L1 error, with an average score of 0.08. While this metric is not comparable to classification accuracy, the low error supports accurate prediction of ice characteristics from SAR imagery with deep neural networks, which is consistent with the findings of the present work.
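To make the distinction between the two metrics concrete, the sketch below computes a classification error rate (the fraction of misclassified samples) and a mean L1 error between true and predicted concentrations. It assumes concentrations are expressed as fractions between 0 and 1; the exact definition used in [16] may differ in detail, and the values are toy numbers.

```python
import numpy as np


def classification_error(y_true, y_pred) -> float:
    """Fraction of samples whose predicted class differs from the label."""
    return float(np.mean(np.asarray(y_true) != np.asarray(y_pred)))


def mean_l1_error(c_true, c_pred) -> float:
    """Mean absolute difference between true and predicted concentrations in [0, 1]."""
    return float(np.mean(np.abs(np.asarray(c_true, dtype=float) - np.asarray(c_pred, dtype=float))))


# Toy values only: one of four classes wrong; concentrations off by 0.1, 0.1, and 0.0
err = classification_error([0, 1, 2, 2], [0, 1, 1, 2])  # 0.25
l1 = mean_l1_error([0.9, 0.5, 0.0], [0.8, 0.6, 0.0])    # about 0.067
```

The classification error only counts mismatches, whereas the L1 error grows with the size of each concentration miss, which is why the two numbers cannot be compared directly.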

4.1. The Utility of DenseNet for Sea Ice Mapping

The methodology presented in this paper can be applied to mapping sea ice type in the Arctic in a few steps. As a first step, an incoming SAR image would be uniformly subdivided into non-overlapping 100 × 100 pixel sub-regions. The collection of sub-regions would then be filtered to remove sub-regions containing known land masses. For accuracy, it may also be desirable to exclude sub-regions in which fewer than 80% of the pixels contain valid SAR data, so that the remaining samples resemble those in the training set. The remaining sub-regions would then be processed and classified by the trained DenseNet model from this study into water, new ice, or first-year ice, and assembled to form a segmented image. DenseNet took approximately 40 s on a modern high-end GPU to label a typical 10,000 × 10,000 pixel SAR image that had been pre-subdivided. This shows that DenseNet can automate the prediction of sea ice type from SAR images to generate image analysis ice charts in a timely manner.
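The steps above can be sketched in NumPy. This minimal version assumes pixel-aligned boolean land and valid-data masks are available, treats any land pixel as disqualifying a sub-region, and ignores partial tiles at the image edges. The function names are hypothetical, and `classify` is only a placeholder for the trained DenseNet.

```python
import numpy as np

TILE = 100        # sub-region size in pixels, as described above
MIN_VALID = 0.8   # keep sub-regions with at least 80% valid SAR pixels


def tile_and_filter(image, land_mask, valid_mask, tile=TILE, min_valid=MIN_VALID):
    """Split a (C, H, W) image into non-overlapping tile x tile sub-regions.

    Sub-regions containing any land pixel, or with fewer than `min_valid` valid
    pixels, are dropped. Partial tiles at the bottom/right edges are ignored.
    Returns the kept sub-regions and their (row, col) positions in the tile grid.
    """
    _, height, width = image.shape
    patches, positions = [], []
    for i in range(height // tile):
        for j in range(width // tile):
            rows = slice(i * tile, (i + 1) * tile)
            cols = slice(j * tile, (j + 1) * tile)
            if land_mask[rows, cols].any():
                continue
            if valid_mask[rows, cols].mean() < min_valid:
                continue
            patches.append(image[:, rows, cols])
            positions.append((i, j))
    return patches, positions


def assemble(positions, labels, grid_shape, fill=-1):
    """Write one predicted class per sub-region into a coarse label map; -1 marks skipped tiles."""
    label_map = np.full(grid_shape, fill, dtype=int)
    for (i, j), label in zip(positions, labels):
        label_map[i, j] = label
    return label_map


def classify(patch):
    """Hypothetical stand-in for the trained DenseNet (0 = water, 1 = new ice, 2 = first-year ice)."""
    return 0


# Toy 300 x 300 three-channel scene: one land tile and one tile with only 40% valid data
rng = np.random.default_rng(0)
scene = rng.normal(size=(3, 300, 300))
land = np.zeros((300, 300), dtype=bool)
land[:100, :100] = True
valid = np.ones((300, 300), dtype=bool)
valid[200:, 200:260] = False
patches, positions = tile_and_filter(scene, land, valid)
label_map = assemble(positions, [classify(p) for p in patches], grid_shape=(3, 3))
```

In practice the kept sub-regions would be stacked into batches for GPU inference rather than classified one at a time.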

4.2. Future Work

The presented three class models for predicting CIS ice types from SAR imagery can easily be extended within the scope of the configurable parameters used to define different datasets. As a first step towards making this research useful to operational sea ice services, the labelling scheme must be expanded to include more ice classes. CIS ice experts use a total of 14 classes to classify ice by stage of development [25]. Given the success of both neural networks in the experiments conducted, particularly the three class experiments, a natural next step would be to assess their performance in a similar manner for incrementally larger class definitions, from three to 14 classes. In doing so, we should also study relaxing the ice concentration criteria to include more egg codes, which would increase the chances of obtaining enough samples for each of the 14 classes, even though this would come at the cost of impure samples that mix water and ice. In addition to relaxing purity requirements, the number of samples for each of the 14 ice classes can be increased by expanding the region of interest and the time of year. Expanding the dataset in space and time will also allow us to better assess the ability of the proposed networks to classify ice type accurately during seasonal melt and fall freeze-up.

We expect that, as the supported ice type classes are expanded, the dataset and model can be refined to improve classification performance. Staying within the scope of the configurable parameters used to define datasets, further tests should assess the effects of calibration and noise removal on ice classification accuracy. This can be done using the additional information provided with the SAR products that details corrective gain values and noise signals [26]. Furthermore, including a third channel for the HV/HH polarization ratio improved the three class classification accuracy between ice types, at least on the training set. Future work should therefore also investigate the effects of regularization techniques on the models, in an attempt to carry the improved training set performance over to unseen data.
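As an example of how such corrective information might be applied, Sentinel-1 products document a relation of the form $\sigma^0 = (|DN|^2 - \eta)/A^2$, where $A$ is a per-pixel calibration gain and $\eta$ a thermal-noise value, both interpolated from look-up tables in the product metadata. The sketch below applies that relation, assuming the gain and noise arrays are already on the image grid. RADARSAT-2 and RCM products use their own look-up table formats (RADARSAT-2, for example, includes an additive offset), so this is an illustration rather than a product-specific recipe.

```python
import numpy as np


def calibrate_sigma0(dn, gain, noise=None, floor=1e-6):
    """Convert digital numbers to linear sigma-nought via (|DN|^2 - noise) / A^2.

    `gain` (A) and optional `noise` (eta, in DN^2 units) are per-pixel arrays
    assumed to be already interpolated onto the image grid from the product's
    calibration and noise look-up tables.
    """
    power = np.abs(np.asarray(dn)).astype(float) ** 2
    if noise is not None:
        power = power - np.asarray(noise, dtype=float)  # thermal-noise subtraction
    sigma0 = power / np.asarray(gain, dtype=float) ** 2
    return np.maximum(sigma0, floor)  # noise subtraction can push weak pixels below zero


# Toy values only
dn = np.array([[100.0, 10.0, 5.0]])
gain = np.full(dn.shape, 500.0)
noise = np.full(dn.shape, 50.0)
sigma0 = calibrate_sigma0(dn, gain, noise)
```

Clipping to a small positive floor keeps a subsequent conversion to dB finite for pixels that fall below the noise level.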

To strengthen the case that this proof of concept is a viable method for classifying ice by type, future studies with more ice types should use k-fold cross-validation for each experiment to verify the predictive quality and generalizability of the models. In this proof of concept, the differences in accuracy between experiments were within the range caused by random initialization of the models. Using k-fold cross-validation would also reduce the uncertainty over whether such differences are caused by random initialization.
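A k-fold split can be sketched with NumPy alone: shuffle the sample indices once, cut them into k folds, and let each fold serve as the validation set exactly once. This is a minimal sketch; because neighbouring sub-regions share similar ice conditions, splitting by image or acquisition date rather than by individual sub-region may give a less optimistic estimate, a refinement not shown here.

```python
import numpy as np


def k_fold_indices(n_samples: int, k: int, seed: int = 0):
    """Yield (train_idx, val_idx) pairs; each sample is in exactly one validation fold."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n_samples), k)
    for i in range(k):
        train = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train, folds[i]


splits = list(k_fold_indices(n_samples=10, k=5))
# In a full experiment, a freshly initialized model would be trained on each
# `train` split and scored on the matching `val` split; the mean and spread of
# the k scores are then reported.
```

Reporting the spread across folds alongside the mean makes it possible to judge whether a difference between two configurations exceeds the variation caused by initialization and data partitioning.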

Another avenue for future research goes beyond the current framework by constructing a dataset better suited to the complete encoder-decoder U-Net architecture. Although the results of the three class experiments were good, the models treated each sub-region as an independent sample. However, sub-regions that are close to each other are likely to be dependent, with similar ice conditions. The U-Net architecture could extract this spatial context from nearby samples and potentially provide better segmentation results. U-Net was designed with this aspect in mind: its skip connections between the encoder and decoder combine the high-resolution, localized features of the encoder with the upsampled, context-rich features of the decoder. U-Net also generates feature maps at various scales with different contexts, which is important for SAR imagery, as features at large scales tend to appear at finer resolutions as well.
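The skip-connection idea can be illustrated at the level of array shapes. The NumPy sketch below performs one encoder downsampling step (2 × 2 max pooling, as in the original U-Net), a nearest-neighbour upsampling step standing in for the learned up-convolution, and the channel-wise concatenation that merges the two paths. It contains no learned convolutions and is only meant to show how full-resolution encoder features are carried across to the decoder.

```python
import numpy as np


def max_pool2(x: np.ndarray) -> np.ndarray:
    """2 x 2 max pooling with stride 2 on a (C, H, W) array with even H and W."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).max(axis=(2, 4))


def upsample2(x: np.ndarray) -> np.ndarray:
    """Nearest-neighbour 2x upsampling (stand-in for U-Net's learned up-convolution)."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


def skip_merge(encoder_feat: np.ndarray, decoder_feat: np.ndarray) -> np.ndarray:
    """Upsample coarse decoder features and concatenate them with same-resolution
    encoder features along the channel axis, as a U-Net skip connection does."""
    up = upsample2(decoder_feat)
    if up.shape[1:] != encoder_feat.shape[1:]:
        raise ValueError("encoder and upsampled decoder features must share H and W")
    return np.concatenate([encoder_feat, up], axis=0)


encoder = np.random.default_rng(0).normal(size=(16, 100, 100))  # full-resolution features
coarse = max_pool2(encoder)                                       # (16, 50, 50): wider context
merged = skip_merge(encoder, coarse)                              # (32, 100, 100)
print(merged.shape)
```

In a trained U-Net, convolutions on the merged tensor would learn how to weigh the precise local detail from the encoder against the broader context recovered by the decoder.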

Although these extensions were not applied in this work, the proof of concept was sufficiently validated to show that pursuing them is worthwhile for broader ice type classification.