1. Introduction

To realize remote sensing photogrammetry, various photoelectric sensors have been mounted on aircraft to obtain ground images. Obtaining the location of a target in the image using a geo-location algorithm has been a research hotspot in recent years [1,2,3]. Current research on target positioning algorithms focuses on improving the positioning accuracy of ground targets, and the positioning error caused by building height is rarely considered.

Obtaining high-accuracy geo-location of building targets in real time requires automatic detection of buildings in remote sensing images and an appropriate method to calculate building height from the image. Traditional aerial photogrammetry uses vertical overlook (i.e., nadir) imaging, in which an airborne camera obtains a large-scale two-dimensional (2D) image of the city. Consequently, most research on building detection algorithms also targets overlooking remote sensing images. Because buildings in an image differ in appearance, semantic analysis and image segmentation are used for automatic detection, and a single overlooking remote sensing image can contain a large number of buildings. With the development of aerial photoelectric payloads, such as airborne cameras and photoelectric pods, long-distance oblique imaging has become a major method for obtaining aerial remote sensing images. Unlike overlooking imaging, oblique imaging can better describe specific details of urban buildings, which is conducive to 3D modeling, map marking, and other tasks [4]. To achieve these goals, high-accuracy geo-location of urban building targets is needed. Scholars have conducted extensive research on target geo-location algorithms. To locate a target with a single image, an earth ellipsoid model is widely used in the positioning process. For example, Stich proposed a target positioning algorithm based on the earth ellipsoid model; it realizes passive positioning of a ground target from a single aerial camera image and reduces the influence of the earth’s curvature on the positioning result [5]. The Global Hawk unmanned aerial vehicle (UAV) positioning system also uses an earth ellipsoid model to calculate the geodetic coordinates of the image center [6]. To improve the positioning accuracy, scholars have optimized the algorithm by using multiple measurements and adding auxiliary information [7,8,9]. L.G. Tan proposed the pixel sight vector method, which uses the parameters of a laser range finder (LRF) and angle sensors to establish a multi-target positioning model that improves positioning accuracy. Similarly, N. Merkle used the information provided by synthetic aperture radar (SAR) to achieve high-accuracy positioning of targets. Because positioning with a single UAV is significantly affected by random errors, various multi-UAV measurement systems have been established to reduce these errors [10,11,12,13]. G. Bai and Y. Qu proposed cooperative positioning models for double UAVs and multi-UAVs, respectively. Many scholars have also used filtering algorithms to locate targets. For example, the Kalman-filter-based target positioning algorithm proposed by H.R. Hosseinpoor improves positioning accuracy through multiple measurements. Such filtering-based positioning algorithms are widely used in target tracking [14].

Although extensive research has been conducted on positioning algorithms, only a few studies have analyzed the positioning error caused by the target height. A remote sensing image is obtained by projecting the target area onto a sensor that receives the photoelectric signal, usually a charge-coupled device (CCD). An aerial remote sensing image is essentially a 2D image, so a traditional positioning algorithm cannot calculate the height of a building in the image. C. Qiao considered the influence of elevation information on the positioning results and relied on digital elevation model (DEM) data instead of the earth ellipsoid model, which resolved the elevation error of ground targets [15,16]. However, DEM data do not contain the elevation of buildings and, thus, cannot resolve the large positioning error of building targets. Some LRF-based algorithms that obtain distance information can locate building targets. However, their positioning result is significantly affected by the ranging accuracy, and most airborne LRFs have a limited working range (within 20 km). To cover a large area, long-distance oblique remote sensing images are usually captured from more than 30 km away. Therefore, LRF-based algorithms cannot meet the requirements of high-accuracy positioning of building targets. Downloading the aerial remote sensing image and then identifying buildings and calculating their positions manually results in poor timeliness of the data and cannot meet the requirement of obtaining the target’s geo-location in real time.

This study aims to address the problem of large positioning error of building targets and establishes a building target positioning algorithm that can be widely used in oblique remote sensing images. The remainder of this paper is organized as follows. Section 2 optimizes the YOLO v4 convolutional neural network based on deep learning theory and the characteristics of building remote sensing images and realizes the automatic detection of buildings in various oblique remote sensing images. Section 3 calculates the building height in the image based on the angle information and proposes a high-precision positioning algorithm for the building target. Section 4 demonstrates the importance and effectiveness of the algorithm through simulation analysis and actual flight tests. Finally, Section 5 summarizes this study.

2. Automatic Building Detection Algorithm for Oblique Remote Sensing Images

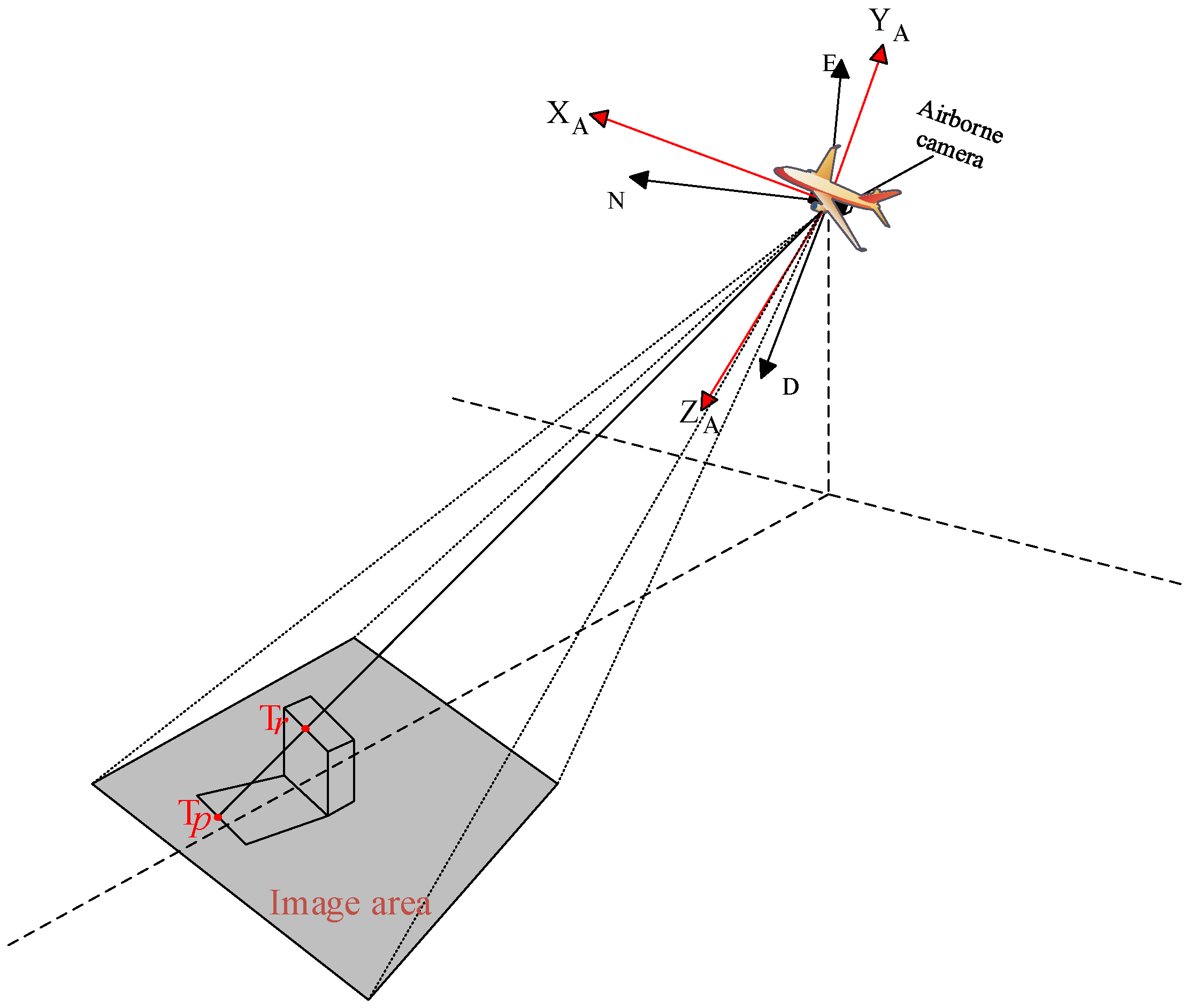

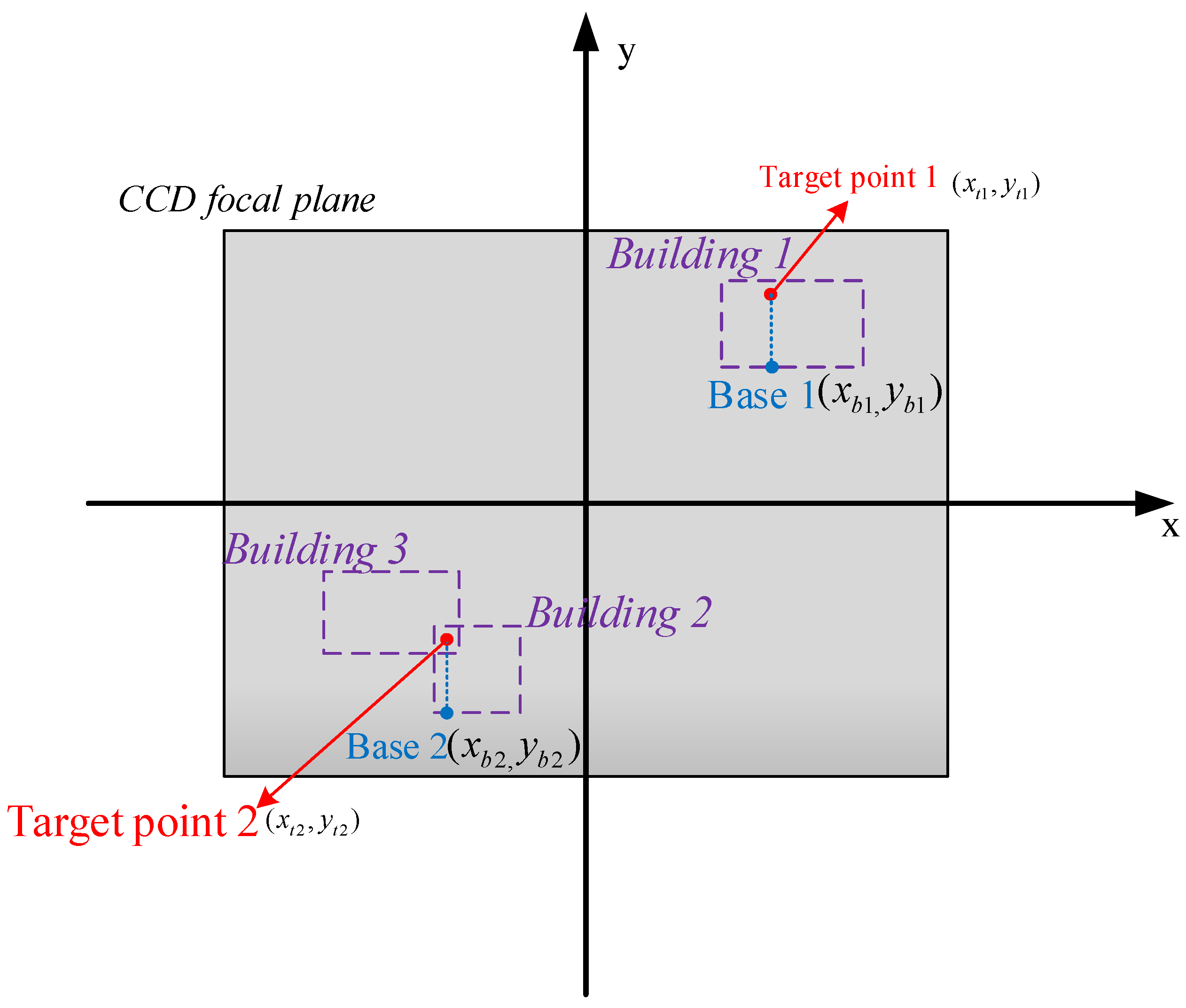

As mentioned previously, aerial remote sensing images are essentially 2D images. A traditional target positioning algorithm calculates the target position as the intersection of the collinear equation, formed by the projected pixel point and the camera main point, with the earth ellipsoid equation or a DEM. Therefore, when locating a building target, the actual positioning result is the ground position blocked by the building, as shown in Figure 1. The figure marks both the actual position of the target point and the result calculated by the traditional positioning algorithm.

An appropriate method that can automatically identify buildings in the image is needed to distinguish building targets from ground targets, so that the positioning of ground targets is not affected. Buildings in oblique images show different characteristics from those in overlooking images; for example, neighboring buildings may block one another. The height, shadow, angle, and edge characteristics also vary for each building. Therefore, traditional recognition methods designed for top-down images are less effective and cannot reliably detect buildings in oblique images. Recently, owing to the rapid development of deep learning algorithms based on convolutional neural networks, scholars have studied building detection in remote sensing images through deep learning. However, most research has focused on detecting targets in overlooking images, especially the automatic recognition of large-scale urban images [17,18]. The relevant results cannot be directly applied to oblique images. Therefore, although long-range oblique imaging is widely used, limited research has been conducted on related datasets and detection algorithms.

YOLO is a mature open-source target detection algorithm with the advantages of high accuracy, small model size, and fast operation speed [19,20,21,22]. These characteristics match the requirements of aerial remote sensing well. Aerial oblique images are usually captured using airborne cameras or photoelectric pods carried by an aircraft, which requires real-time image processing and high recognition accuracy. Because the computing power of aerial cameras is limited, the algorithm must also run fast. This study uses YOLO v4 as the basis and optimizes it to achieve the automatic detection of buildings in telephoto oblique remote sensing images.

Figure 2 shows the neural network structure of the building detection algorithm. CBL is the most basic component of the neural network, consisting of a convolutional (Conv) layer, a batch normalization (BN) layer, and a leaky rectified linear unit (ReLU) activation function. In the CBM, the Mish activation function replaces the leaky ReLU activation function. A Res unit is used to construct a deeper network. The CSP(n) consists of three convolutional layers and n Res unit modules. The SPP module is used to achieve multiscale integration; it applies maximum pooling at four scales: 1 × 1, 5 × 5, 9 × 9, and 13 × 13 [22].

Realizing the automatic detection of buildings requires training the neural network on a large amount of data. However, existing public datasets do not contain high-quality oblique aerial building images. In addition, a telephoto oblique aerial camera usually adopts a dual-band or multi-band simultaneous photography mode, and the original image is usually a grayscale image, so existing datasets cannot be used for the neural network training. To complete the training, this study establishes a dataset of oblique aerial remote sensing images of buildings, extracted from the remote sensing images captured by an aerial camera during multiple flights. The dataset currently contains 1500 training images and more than 10,000 examples. The images in the training set were obtained in different imaging environments, at different angles and distances, and were randomly collected from linear array and area array aerial cameras.

After the original YOLO v4 algorithm is trained on the dataset established in this study, the buildings in oblique remote sensing images can be detected well, but some small low-rise buildings are still missed. To improve the detection accuracy and meet the requirements of real-time geographic positioning, this study optimizes the algorithm for building detection. The initial anchor boxes of YOLO v4 do not suit the building dataset established in this study, because they were calculated from the common objects in context (COCO) dataset, whose box characteristics differ completely from those of the building dataset. The initial anchor box data affect the final detection accuracy. To obtain more effective initial parameters, the K-means clustering algorithm is used to perform a cluster analysis on the standard reference box data of the training set, and appropriate boxes from the clustering result are selected as the prediction anchor box parameters for network initialization. The K-means clustering algorithm usually uses the Euclidean distance as the loss function. However, with this loss function a larger reference box produces a larger loss value than a smaller reference box, which introduces a larger error into the clustering results. Owing to the large range of oblique aerial remote sensing images, buildings of different scales often exist in the image simultaneously, so the clustering results of the original K-means algorithm contain large errors. To mitigate this problem, the intersection over union (IOU) value between the prediction box and the standard reference box is used as the loss function to reduce the clustering error. The improved distance function is shown in Equation (1).
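The anchor clustering described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes the distance d = 1 − IOU(box, centroid) that is commonly used for YOLO anchor clustering, compares boxes by width and height only, and updates each centroid with the cluster mean. The function names `iou_wh` and `kmeans_anchors` are hypothetical.

```python
import random


def iou_wh(box, centroid):
    """IoU of two (width, height) boxes that share the same corner."""
    inter = min(box[0], centroid[0]) * min(box[1], centroid[1])
    union = box[0] * box[1] + centroid[0] * centroid[1] - inter
    return inter / union


def kmeans_anchors(boxes, k, iterations=100, seed=0):
    """Cluster (width, height) pairs with the assumed distance d = 1 - IoU."""
    rng = random.Random(seed)
    centroids = rng.sample(boxes, k)
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for box in boxes:
            # The smallest 1 - IoU is the largest IoU.
            best = max(range(k), key=lambda i: iou_wh(box, centroids[i]))
            clusters[best].append(box)
        new_centroids = []
        for i, members in enumerate(clusters):
            if not members:
                new_centroids.append(centroids[i])  # keep an empty cluster's centroid
                continue
            mean_w = sum(w for w, _ in members) / len(members)
            mean_h = sum(h for _, h in members) / len(members)
            new_centroids.append((mean_w, mean_h))
        if new_centroids == centroids:
            break  # assignments no longer change
        centroids = new_centroids
    # Sort by area so the smallest anchor comes first.
    return sorted(centroids, key=lambda c: c[0] * c[1])
```

Because the IoU is scale-invariant, a small low-rise building box and a large high-rise box contribute comparable distances, which is the property the text relies on.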

This problem also exists when calculating the loss of the prediction box. To address this, the loss function in the YOLO v4 algorithm normalizes the position coordinates of the prediction box and increases the corresponding weight. The center coordinate loss and the width and height loss are shown in Equations (2) and (3), respectively. These equations compare the center coordinates, width, and height of the prediction box with the real center coordinates, width, and height of the marker box.

The loss function of YOLO v4 performs satisfactorily in the training process, but it also leads to a new problem. Because of the normalization, the prediction box output by YOLO consists of normalized center point coordinates and normalized width and height values, which cannot be directly applied to the positioning algorithm. To achieve high-accuracy building target positioning, a single prediction box is used as an example to show how the prediction box coordinates are calculated in the image coordinate frame.

The conversion process is shown in Figure 3. Taking the four corner points of the prediction box as an example, the position of the prediction box output by YOLO can be converted into the image coordinate frame in three steps. First, the YOLO output information (the width, height, and center position of the box) is converted into a normalized coordinate system with the image center as the origin. Subsequently, the coordinates of the corner points of the prediction box in this coordinate system are calculated. Finally, the coordinates are scaled by the original size of the image to obtain the coordinates of the corner points in the image coordinate frame.
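The three conversion steps can be sketched as below. The sketch rests on assumptions that the text does not confirm: the YOLO output is normalized to [0, 1] with the origin at the top-left corner and y pointing down, and the image coordinate frame has its origin at the image center with x to the right and y up (so that the bottom of a box has the smaller y value). The function name `yolo_box_to_image_corners` is hypothetical.

```python
def yolo_box_to_image_corners(cx, cy, w, h, image_width, image_height):
    """Convert a normalized YOLO box to corner points in an image-centered frame.

    Assumed conventions: (cx, cy, w, h) are normalized to [0, 1] with a
    top-left origin and y down; the output frame is centered on the image
    with y up, in pixel units.
    """
    # Step 1: move the origin to the image center and flip y to point up.
    cx_c = cx - 0.5
    cy_c = 0.5 - cy
    # Step 2: corner coordinates in the normalized, centered frame.
    left, right = cx_c - w / 2.0, cx_c + w / 2.0
    bottom, top = cy_c - h / 2.0, cy_c + h / 2.0
    # Step 3: scale by the original image size to obtain pixel coordinates.
    return {
        "top_left": (left * image_width, top * image_height),
        "top_right": (right * image_width, top * image_height),
        "bottom_left": (left * image_width, bottom * image_height),
        "bottom_right": (right * image_width, bottom * image_height),
    }
```

For instance, a box centered in a 1000 × 500 pixel image with normalized size 0.2 × 0.4 spans 100 pixels on either side of the origin in both directions.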

Additionally, this study improves the training speed of the detection algorithm by adjusting the initial value and decay of the learning rate. To facilitate the subsequent target positioning process, the detection algorithm outputs the pixel coordinates of the prediction box in the image coordinate frame in real time.

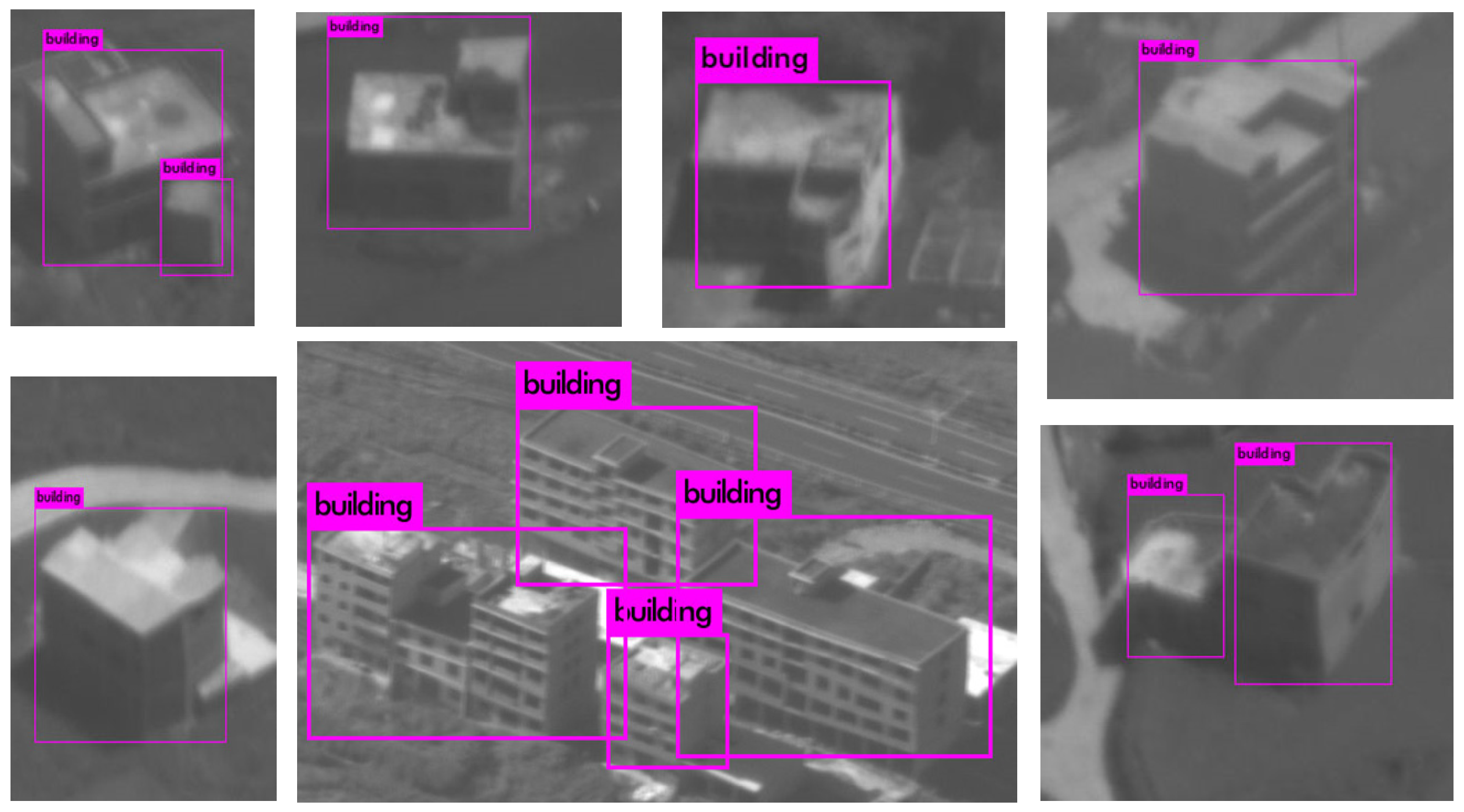

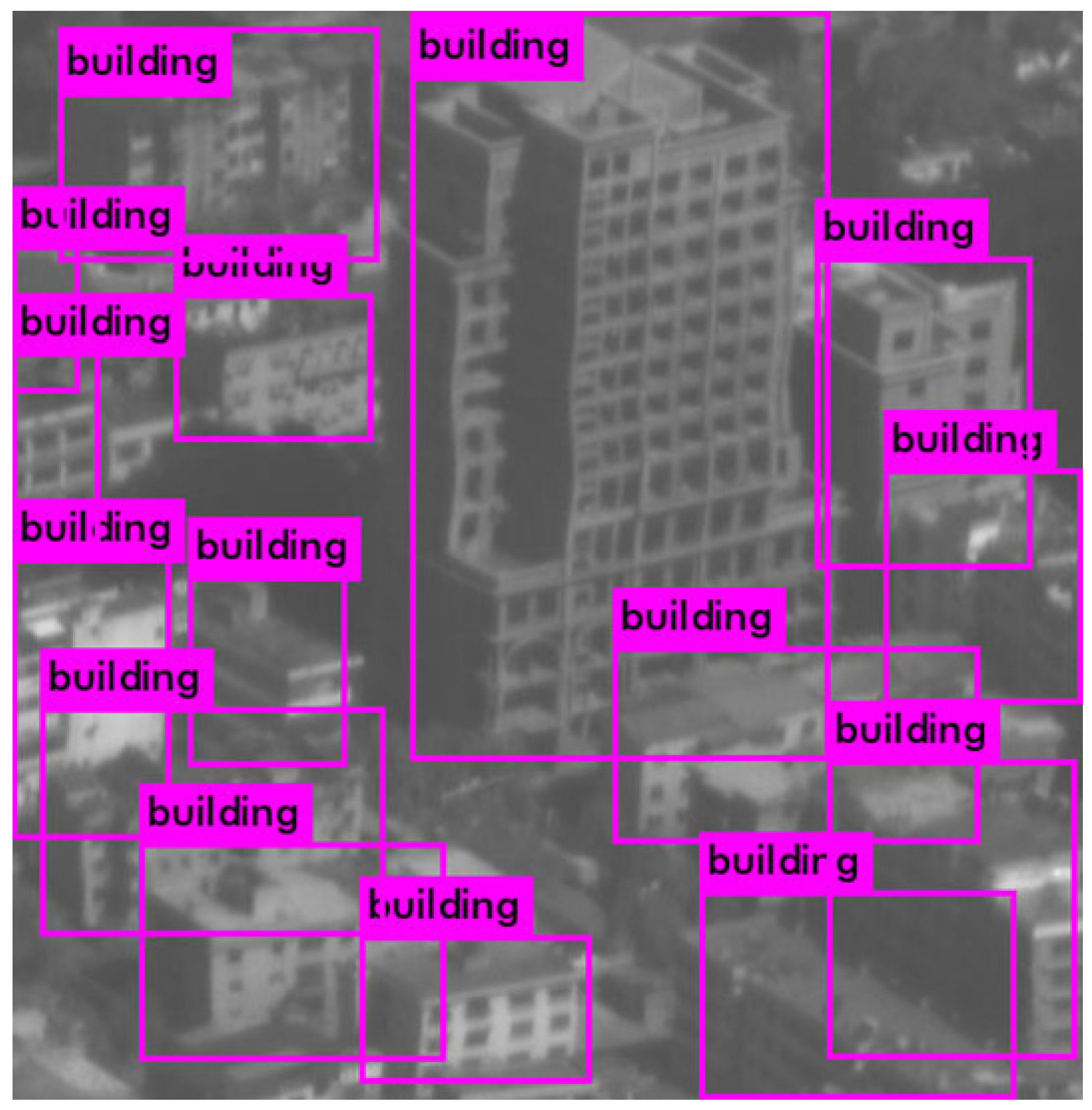

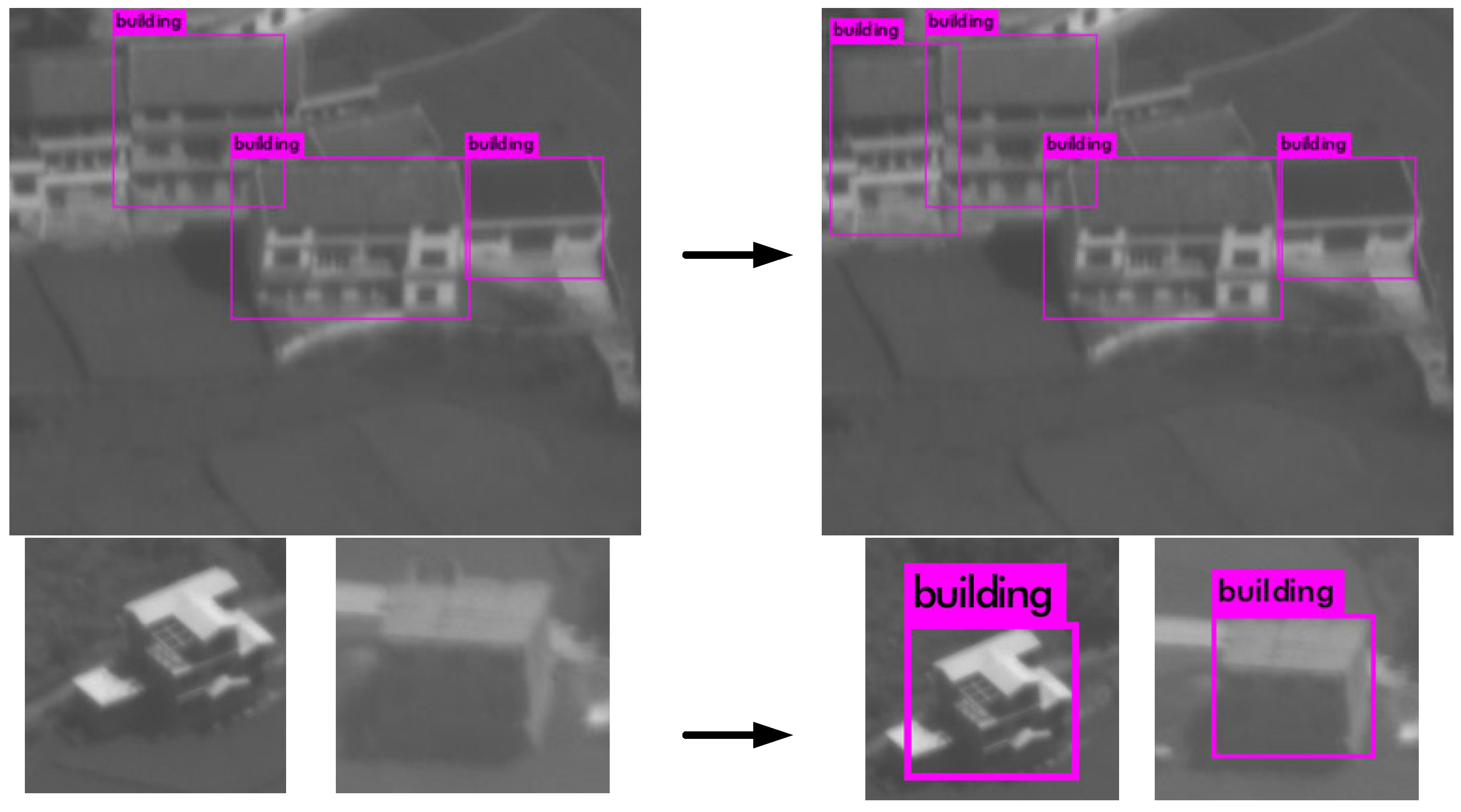

Figure 4 and Figure 5 show detection results on part of the validation set for small scattered buildings and dense urban buildings, respectively. The optimized building detection algorithm can better identify blocked buildings and small buildings in large-scale images. A comparison with the original YOLO v4 is shown in Figure 6.

3. Building Target Geo-Location Algorithm

The building target geo-location algorithm aims to obtain the accurate geo-location of the target point. Aerial cameras may produce inverted images because of the structure of the camera’s optical system and the scanning direction. However, the image is rotated in post-processing, so the final image is still upright. The top and bottom of a building usually have the same latitude and longitude but differ in height (h). Because the positioning result for the bottom of the building, calculated by the collinear equation, contains no elevation error, the precise latitude and longitude of the bottom can be used as the latitude and longitude of the whole building. Furthermore, the height of targets on the building can be calculated by the algorithm provided in this section to improve the positioning accuracy of the building target.

The building detection algorithm described in Section 2 can automatically detect buildings in remote sensing images and provide the position of the building prediction box in the image coordinate frame. The high-accuracy geo-location of building targets can then be obtained in two steps. First, the target points in the prediction box that lie on the same line parallel to the Y-axis are considered to have the same latitude and longitude, and an appropriate base point is selected to provide the standard latitude and longitude for the targets on that line. Second, the elevation of the target point in the prediction box is calculated according to the proposed building height algorithm. The base point is determined by the prediction box provided by the building detection algorithm. Because the aerial remote sensing image is upright, the bottom of the prediction box (with the smaller y coordinate value) is usually selected as the base point. Note that buildings in the remote sensing image may overlap, so the prediction boxes will also overlap. This occurs because the building in front (i.e., closer to the airborne camera) blocks the building behind it. Hence, only the prediction box of the building in front should be considered; in the image coordinate frame, this is the prediction box with the smaller Y-axis coordinate. As shown in Figure 7, the latitude and longitude of target points 1 and 2 are calculated from base points 1 and 2, respectively.

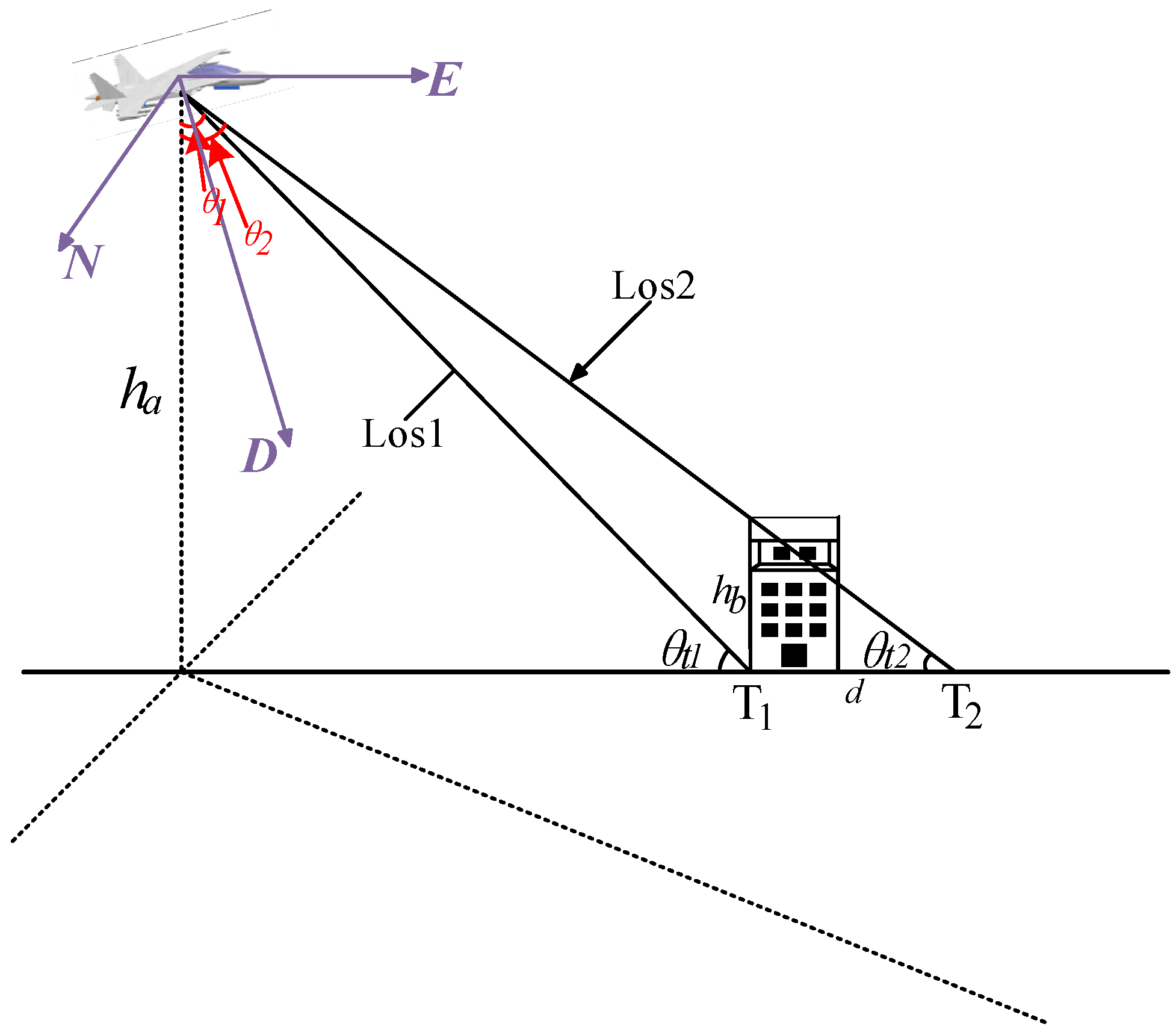

Because line array and area array aerial cameras acquire images differently, two building height algorithms are presented in this paper, using a target point on the top floor of the building as an example. A line array camera provides angle information for each scanned line of the image, so the top and bottom of the building have different imaging angles. The geometric relationship when a line array aerial camera images a building is shown in Figure 8.

This figure marks the location results of the bottom and top of the building, which are calculated using the geo-location algorithm, together with the corresponding imaging angles, the height of the building, the distance d between the two location results, and the altitude of the aircraft. The height of the building can be calculated by trigonometric functions, as shown in Equations (7) and (8).
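A minimal sketch of this trigonometric relationship is given below. It is one possible reading of the geometry rather than a reproduction of Equations (7) and (8). It assumes locally flat ground, imaging angles measured from the nadir direction in radians, and an aircraft altitude measured above the ground at the building. The function name `building_height_from_angles` is hypothetical.

```python
import math


def building_height_from_angles(aircraft_altitude, angle_bottom, angle_top):
    """Estimate building height from the imaging angles of its bottom and top.

    Assumptions: flat ground, both angles measured from nadir (radians),
    altitude measured above the ground plane at the building.
    """
    # Ground distance d between the located bottom of the building and the
    # point where the line of sight through the top meets the ground.
    d = aircraft_altitude * (math.tan(angle_top) - math.tan(angle_bottom))
    # Similar triangles along the line of sight to the top: h / d = 1 / tan(angle_top).
    return d / math.tan(angle_top)
```

Under these assumptions, the height depends only on the ground offset d and the imaging angle of the top, which is why an error in the located bottom position propagates directly into the height estimate.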

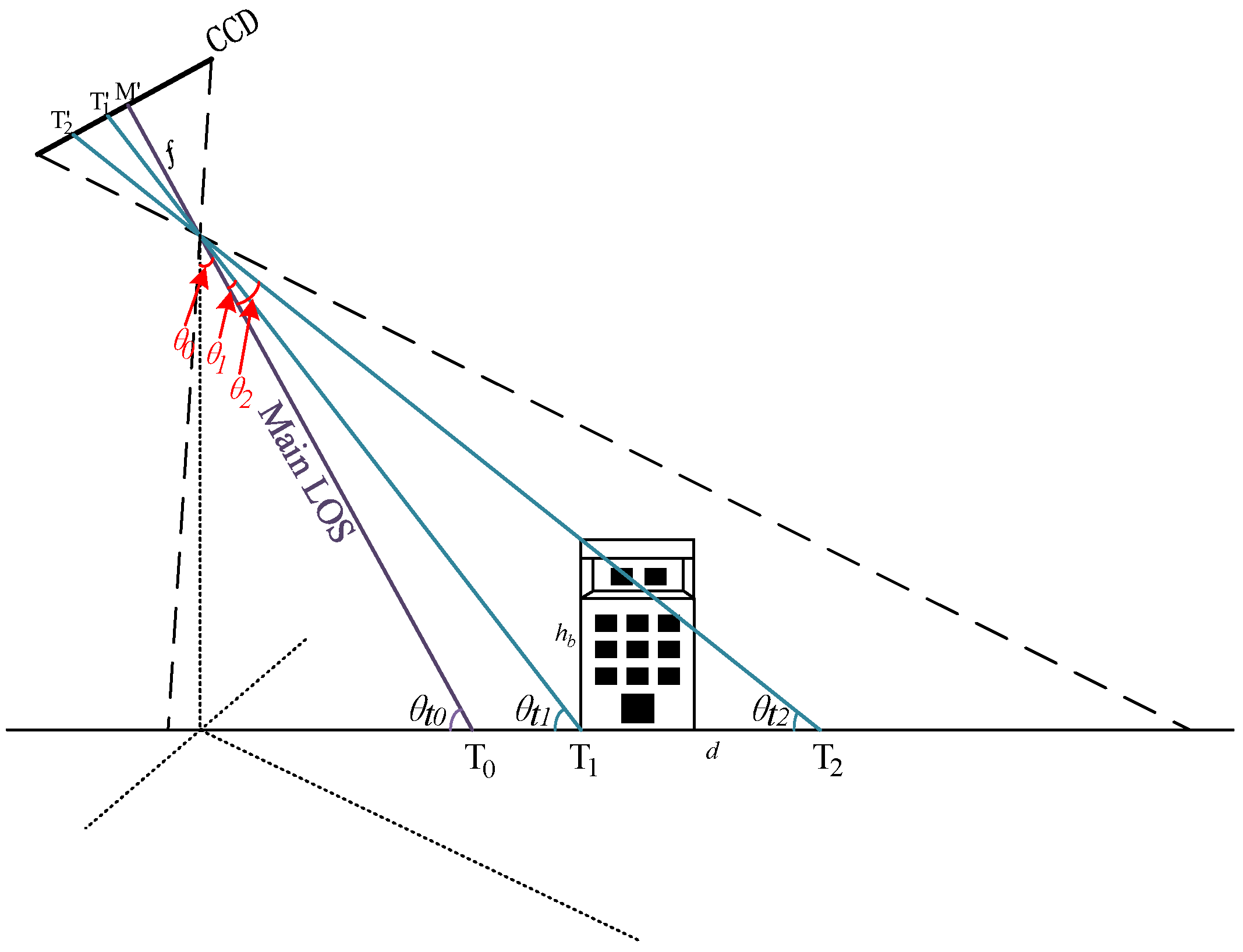

If the remote sensing image is acquired by an area array camera, the calculation process is more complicated. For each image, the area array camera records only the imaging angle of the main optical axis of the camera (also called the line of sight, LOS). The angles corresponding to the top and bottom of the building in the image must therefore be calculated through the geometric relationship shown in Figure 9.

This figure marks the projection positions of the top and bottom of the building on the CCD. The corresponding numbers of pixels are represented by m and n. The angle between the imaging ray at the bottom of the building and the main optical axis can be calculated using Equation (9), where f is the focal length of the camera and a is the size of a single pixel.

The angle between the imaging ray at the top of the building and the main optical axis can be calculated similarly.

The angle parameters required by the building height algorithm can be calculated through geometric relationships as follows:

The angle information required by the algorithm can be obtained using Equations (9)–(12), and the height of the building in the area array camera image can also be calculated using Equation (8).
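The angle calculation for an area array camera can be illustrated with a pinhole-camera sketch. It assumes that the pixel count is measured from the image center along the direction of interest, that the pixel size and focal length use the same length unit, and that a positive pixel count means the point is imaged farther from nadir than the LOS; the sign convention in Equations (9)–(12) may differ. The function names are hypothetical.

```python
import math


def pixel_offset_angle(pixel_count, pixel_size, focal_length):
    """Angle (radians) between a pixel's imaging ray and the main optical axis.

    pixel_count is the assumed offset from the image center in pixels;
    pixel_size and focal_length must share the same length unit.
    """
    return math.atan(pixel_count * pixel_size / focal_length)


def imaging_angle(los_angle, pixel_count, pixel_size, focal_length):
    """Imaging angle of a point, given the recorded LOS angle (radians).

    Assumed sign convention: a positive pixel_count increases the angle.
    """
    return los_angle + pixel_offset_angle(pixel_count, pixel_size, focal_length)
```

The two resulting angles, one for the top and one for the bottom of the building, then play the same role as the per-line angles recorded by a line array camera.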

As this algorithm shows, the height of a building target is calculated from the geographic location of the bottom of the building combined with the direct positioning result of the target point. This article provides a simple and fast positioning algorithm based on the collinear equation as a reference. The geographic coordinates of a point in the remote sensing image can be calculated using a series of coordinate transformation matrices.

Each transformation matrix represents the transformation from one coordinate frame, A, to another, B, and can be abbreviated in block form, where L is a third-order rotation matrix composed of the direction cosines of the three axes and R is a translation column matrix composed of the origin position of the coordinate system. The inverse of the matrix represents the transformation from the B coordinate frame back to that of A.
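The block form can be illustrated with a short sketch. It assumes the usual 4 × 4 homogeneous layout, with the rotation L in the upper-left block, the translation R in the last column, and a final row of (0, 0, 0, 1); the exact layout and sign convention of R in the paper's equation may differ. All function names are hypothetical.

```python
def make_transform(L, R):
    """Build the 4x4 block matrix [[L, R], [0, 1]] from a 3x3 L and a 3-vector R."""
    return [list(L[i]) + [R[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def invert_transform(T):
    """Invert [[L, R], [0, 1]] as [[L^T, -L^T R], [0, 1]] (L must be orthonormal)."""
    Lt = [[T[j][i] for j in range(3)] for i in range(3)]
    R_inv = [-sum(Lt[i][j] * T[j][3] for j in range(3)) for i in range(3)]
    return make_transform(Lt, R_inv)


def apply_transform(T, point):
    """Apply T to a 3D point using homogeneous coordinates."""
    ph = list(point) + [1.0]
    return [sum(T[i][j] * ph[j] for j in range(4)) for i in range(3)]


def compose(T2, T1):
    """Matrix product T2 @ T1, i.e., apply T1 first and then T2."""
    return [[sum(T2[i][k] * T1[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]
```

Chaining the camera, aircraft, geographic, and earth frames then amounts to composing the corresponding matrices with `compose`, and reversing the chain only requires `invert_transform` on each factor.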

The earth-centered earth-fixed (ECEF) coordinate frame, also known as the earth coordinate frame, can be used to describe the position of a point relative to the earth’s center. The coordinates of the target projection point must be converted to the ECEF coordinate frame to establish the collinear equation in the earth coordinate frame. This process usually requires three coordinate frames: geographic coordinate frame, aircraft coordinate frame, and camera coordinate frame. Figure 10 is a schematic diagram of the corresponding coordinate frames.

The optical system is fixed on a two-axis gimbal, which is rigidly connected to the UAV or other airborne platform. The camera coordinate frame (C) and aircraft coordinate frame (A) can be established with the center of the optical system as the origin. The X-axis of the aircraft frame points to the nose of the aircraft, the Y-axis points to the right wing, and the Z-axis points downward to form an orthogonal right-handed set. The attitude of the camera can be described by the inner and outer frame angles of the gimbal, and the transformation matrix between the camera frame and aircraft frame is expressed in Equation (14).

As shown in Figure 8, the geographic coordinate frame, by convention also called the north-east-down (NED) coordinate frame, is used to describe the position and attitude of the aircraft. The position of the airborne camera is taken as the origin, the N and E axes point to true north and true east, and the D axis points downward along the normal of the earth ellipsoid. Equation (15) is the transformation matrix between the A and NED coordinate frames, where the attitude angles φ, θ, and ψ represent the roll, pitch, and yaw angles, respectively.
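As an illustration of how the attitude angles enter such a matrix, the sketch below builds the rotation from the aircraft frame to the NED frame using the common aerospace yaw-pitch-roll (Z-Y-X) sequence. This convention is an assumption; the order and direction of the rotations in Equation (15) may differ. The function name `body_to_ned_rotation` is hypothetical.

```python
import math


def body_to_ned_rotation(roll, pitch, yaw):
    """Rotation matrix taking aircraft-frame vectors to NED (angles in radians).

    Assumed convention: R = Rz(yaw) * Ry(pitch) * Rx(roll).
    """
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def rotate(matrix, vector):
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(matrix[i][j] * vector[j] for j in range(3)) for i in range(3)]
```

With this convention, a yaw of 90° maps the nose direction onto the east axis, and a pitch of 90° maps it onto the negative D axis, i.e., straight up.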

The ECEF coordinate frame can describe the position of the target, and its coordinate values can be converted into geo-location information (i.e., latitude, longitude, and altitude). The origin of the ECEF coordinate frame is at the geometric center of the earth, where the X-axis points to the intersection of the equator and prime meridian, the Z-axis points to the geographic north pole, and the Y-axis forms an orthogonal right-handed set. Equation (16) is the conversion formula between the ECEF coordinate values and geo-location information. Equation (17) is the transformation matrix between the ECEF coordinate frame and NED coordinate frame, where λ, ϕ, and h correspond to longitude, latitude, and altitude, respectively.
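The conversion from geo-location information to ECEF coordinates can be sketched with the standard geodetic formula. The WGS-84 ellipsoid constants used here are an assumption, since the text does not state which ellipsoid is used, and the function name `geodetic_to_ecef` is hypothetical.

```python
import math

# WGS-84 ellipsoid parameters (assumed; the paper does not name the ellipsoid).
WGS84_A = 6378137.0              # semi-major axis in meters
WGS84_F = 1.0 / 298.257223563    # flattening


def geodetic_to_ecef(lat_deg, lon_deg, h):
    """Convert latitude and longitude (degrees) and altitude (meters) to ECEF."""
    lat, lon = math.radians(lat_deg), math.radians(lon_deg)
    e2 = WGS84_F * (2.0 - WGS84_F)  # first eccentricity squared
    # Radius of curvature in the prime vertical.
    n = WGS84_A / math.sqrt(1.0 - e2 * math.sin(lat) ** 2)
    x = (n + h) * math.cos(lat) * math.cos(lon)
    y = (n + h) * math.cos(lat) * math.sin(lon)
    z = (n * (1.0 - e2) + h) * math.sin(lat)
    return x, y, z
```

The reverse conversion, from ECEF coordinates back to latitude, longitude, and altitude, has no equally simple closed form and is usually solved iteratively.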

Given the position of the target projection point in the camera coordinate frame, its coordinates in the ECEF coordinate frame can be calculated using the above transformation matrices. The collinear equation in the ECEF coordinate frame is then formed from this transformed point and the origin of the camera coordinate frame.

The position of the target in the ECEF coordinate frame can be obtained by solving for the intersection of the collinear equation and the earth model. The earth model can be either the ellipsoid model or DEM data; this study uses the ellipsoid model as an example. For a target at a given altitude, the earth ellipsoid equation of the target can be expressed as Equation (20) [6], whose parameters are the semi-major and semi-minor axes of the ellipsoid.

The geo-location information of the target can be obtained through an iterative algorithm based on the calculation results of Equations (19) and (20).
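The intersection of the line of sight with the earth model can be sketched as follows. This is a closed-form illustration, not the authors' iterative procedure. It assumes that the ellipsoid for a target at altitude h has the form x²/(a+h)² + y²/(a+h)² + z²/(b+h)² = 1, that the default axes are the WGS-84 values, and that the ray is given by the camera origin and a direction vector in ECEF coordinates. The function name `intersect_ray_with_ellipsoid` is hypothetical.

```python
import math


def intersect_ray_with_ellipsoid(origin, direction, h=0.0,
                                 a=6378137.0, b=6356752.314245):
    """First intersection of origin + t * direction (t >= 0) with the ellipsoid.

    Assumed ellipsoid: x^2/(a+h)^2 + y^2/(a+h)^2 + z^2/(b+h)^2 = 1.
    Returns the ECEF point as a tuple, or None if the ray misses.
    """
    ra, rb = a + h, b + h
    # Scale the axes so that the ellipsoid becomes a unit sphere.
    o = (origin[0] / ra, origin[1] / ra, origin[2] / rb)
    d = (direction[0] / ra, direction[1] / ra, direction[2] / rb)
    qa = sum(c * c for c in d)
    qb = 2.0 * sum(oc * dc for oc, dc in zip(o, d))
    qc = sum(c * c for c in o) - 1.0
    disc = qb * qb - 4.0 * qa * qc
    if disc < 0.0:
        return None  # the line of sight does not reach the ellipsoid
    t = (-qb - math.sqrt(disc)) / (2.0 * qa)  # smaller root is the near side
    if t < 0.0:
        return None  # the intersection lies behind the camera
    return tuple(origin[i] + t * direction[i] for i in range(3))
```

The returned ECEF point can then be converted to latitude, longitude, and altitude. When DEM data replace the ellipsoid, the altitude h is not known in advance, which is one reason an iterative solution is typically needed.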

The precise geo-location information of the building can be obtained using the above algorithm. Meanwhile, the precise geographic information of a target on the building consists of two parts: the latitude and longitude of the bottom of the building and the height of the target point. The complete positioning process is illustrated in Figure 11.

6. Conclusions

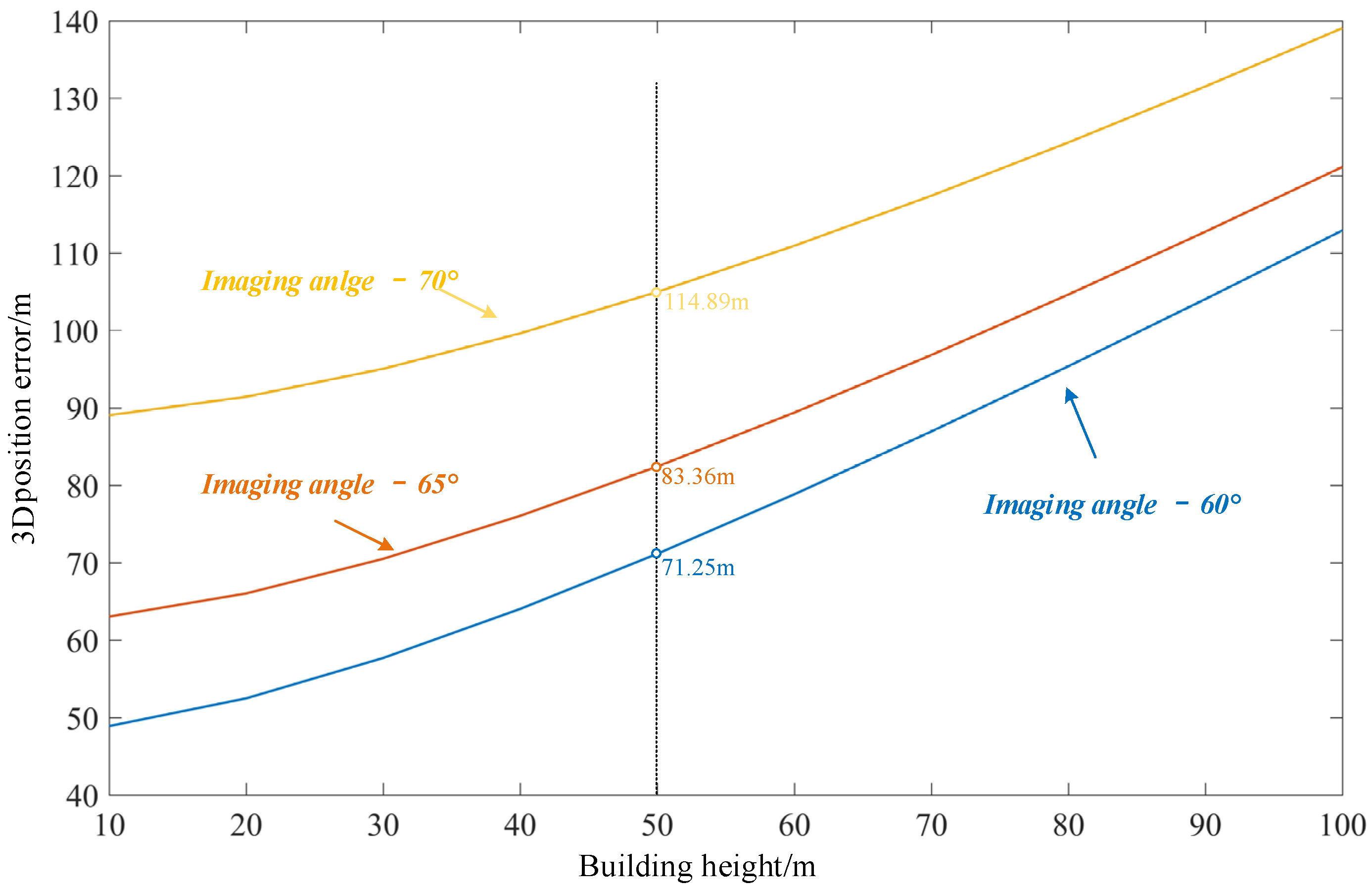

This study proposes a geo-location algorithm for targets on buildings. The algorithm uses deep learning to automatically detect building targets as images are acquired, and it provides a building height estimation model based on the location of the prediction box to achieve high-accuracy positioning of building targets. With the development of aerial photoelectric payloads, oblique aerial remote sensing images are widely applied, and the requirements for image positioning capabilities are increasing. However, when a traditional positioning algorithm locates a building target in a single image, a large positioning error is generated, for two main reasons. First, most existing long-distance positioning algorithms are based on the collinear equation, so the positioning result is the projection of the target on the ground rather than its real position. Second, the remote sensing image is essentially a 2D image that does not directly provide the height information of the target. In contrast, the algorithm proposed in this study markedly improves the positioning accuracy of building targets and can be widely used in oblique aerial remote sensing images. The algorithm can also be combined with various existing high-precision ground target positioning algorithms to improve their positioning of building targets.

The limitation of the proposed method is that the detection algorithm can only output a rectangular prediction box, which may include features other than buildings. For example, if the roof of a building is at a 45° angle, the prediction box can still completely cover the building, but it will also cover part of the ground image. If a positioning target exists in that part of the image, the automatic calculation will inevitably produce a positioning error. This problem can be solved by manually confirming the building target in the image. However, in real aerial remote sensing images of cities, there is usually no positioning target near the roof of a building. Owing to the large range of oblique imaging, the area near a roof in an urban remote sensing image usually shows other buildings in the distance. In addition, the prediction box of a low-rise building is small compared with the entire image; the non-building area in the prediction box may be only 10 × 10 pixels and will not include ground positioning targets.

Experiments show that the proposed algorithm can detect buildings in an image and estimate their height. The algorithm improves the positioning accuracy by approximately 40% when the imaging angle is 70° and the target height is 60 m. For buildings with a height of 20 m, the accuracy increases by approximately 25%. The improvement is not fixed at 25–40%, because the positioning algorithm is affected by other factors in addition to the error caused by the target height. For example, a positioning algorithm based on angle information is affected by the accuracy of the angle measurement, and one based on laser distance measurement is affected by the accuracy of the distance measurement. The higher the accuracy of the angle and distance measurements, the smaller the error of the positioning algorithm and the greater the relative weight of the building height error in the positioning result. Therefore, as the accuracy of angle and distance measuring sensors improves, the building target positioning algorithm established in this paper will become more effective for optimizing traditional positioning algorithms and will have greater practical significance.