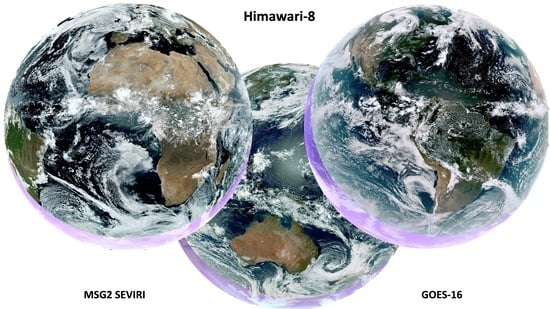

The Wall: The Earth in True Natural Color from Real-Time Geostationary Satellite Imagery

Abstract

1. Introduction

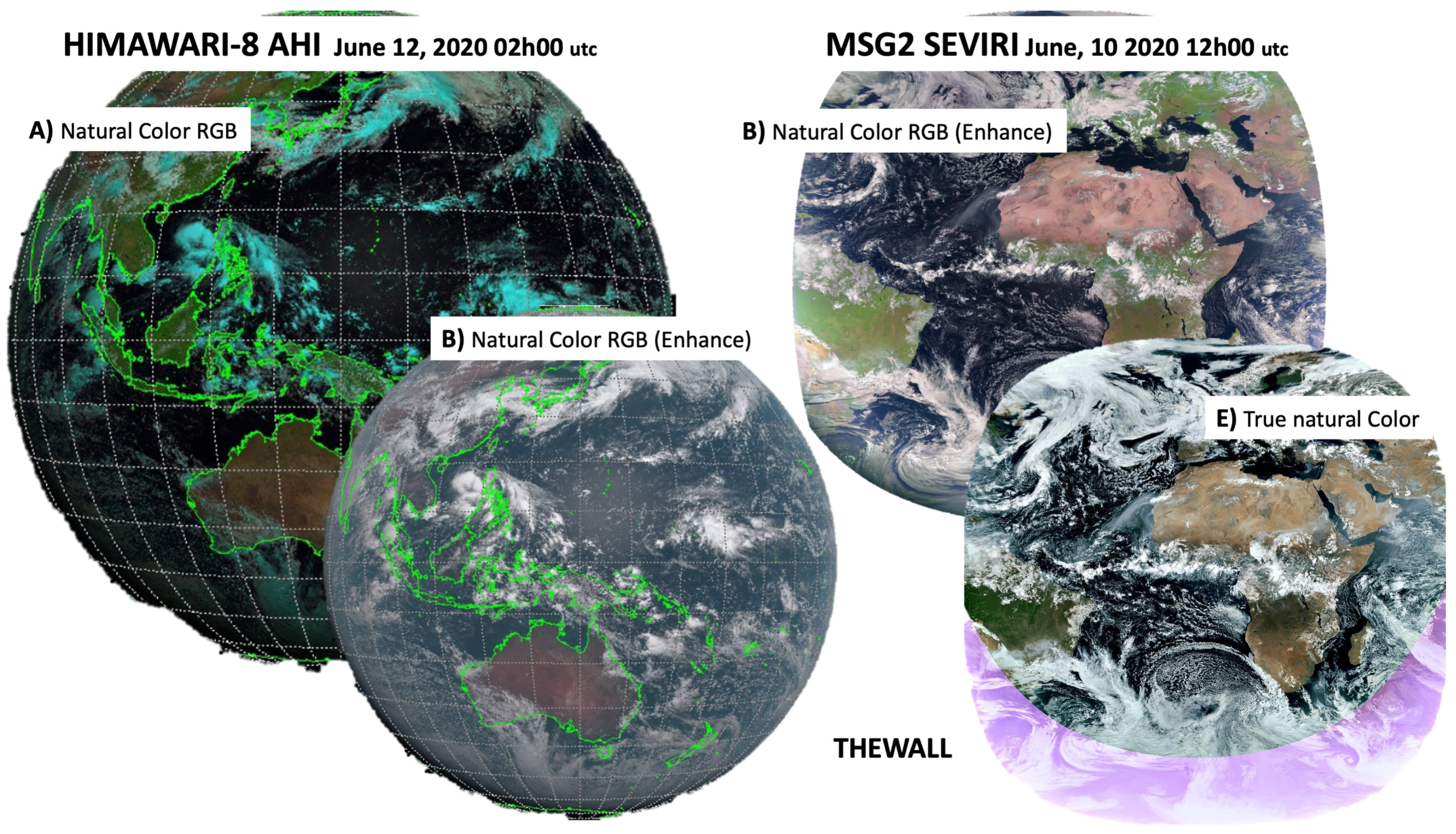

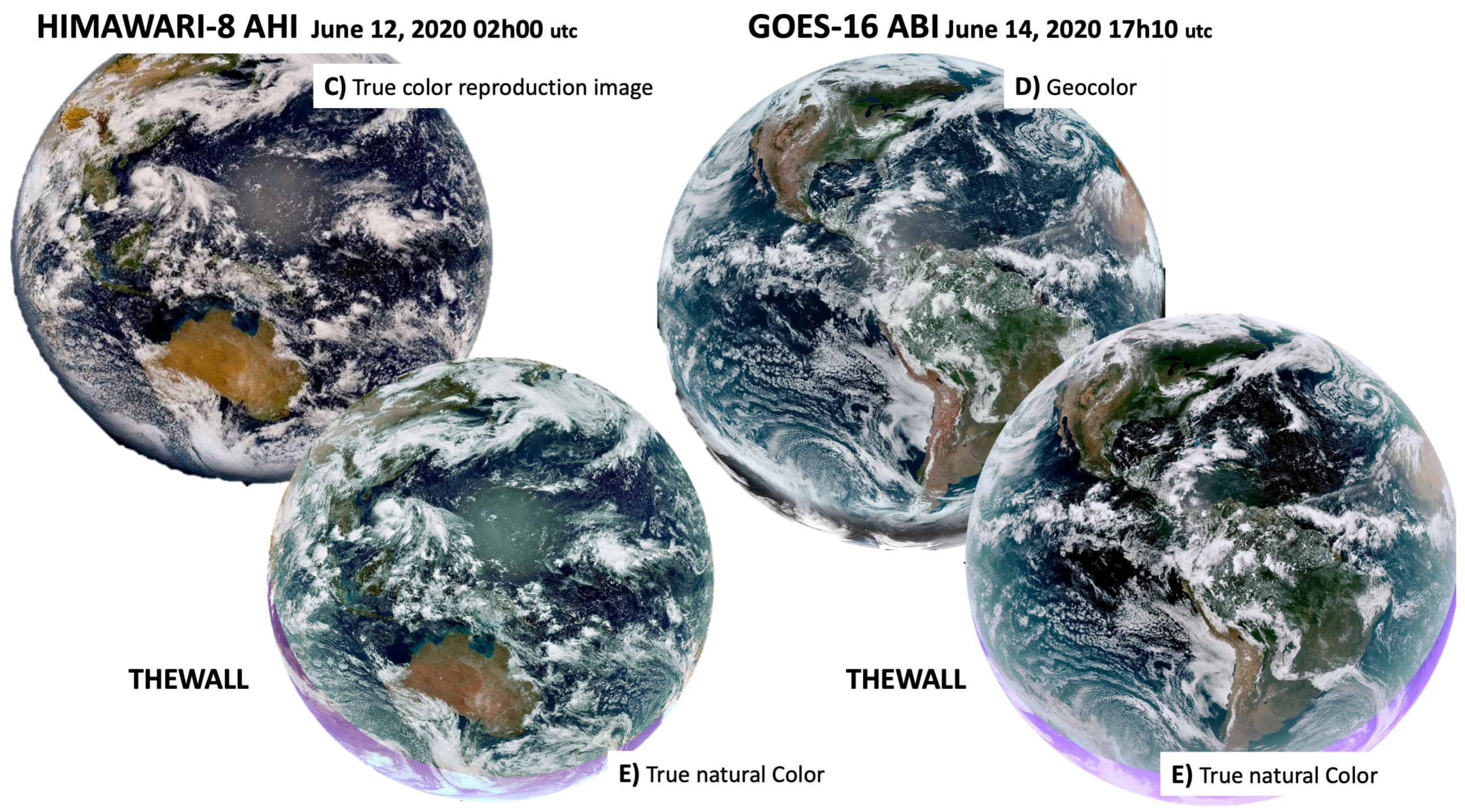

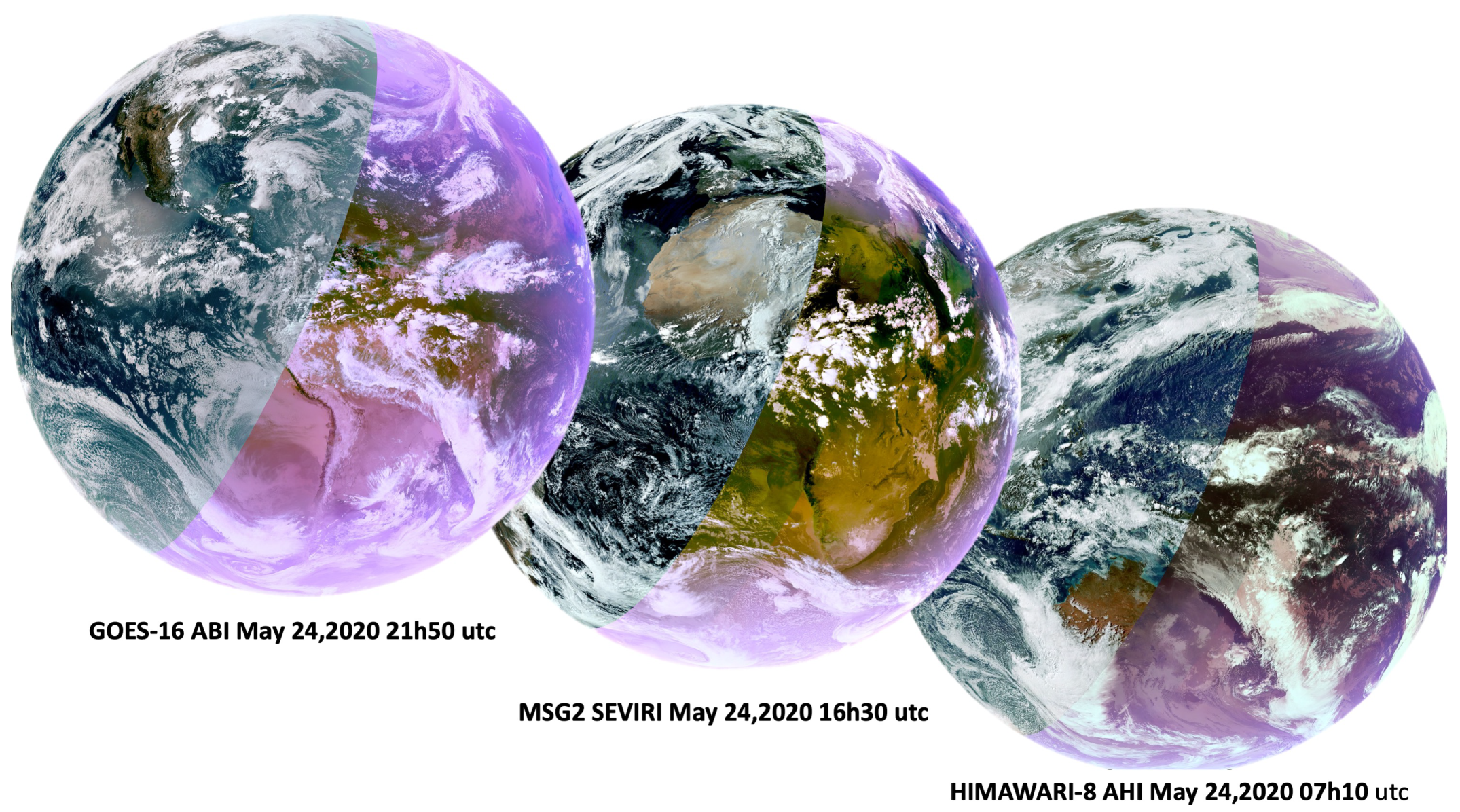

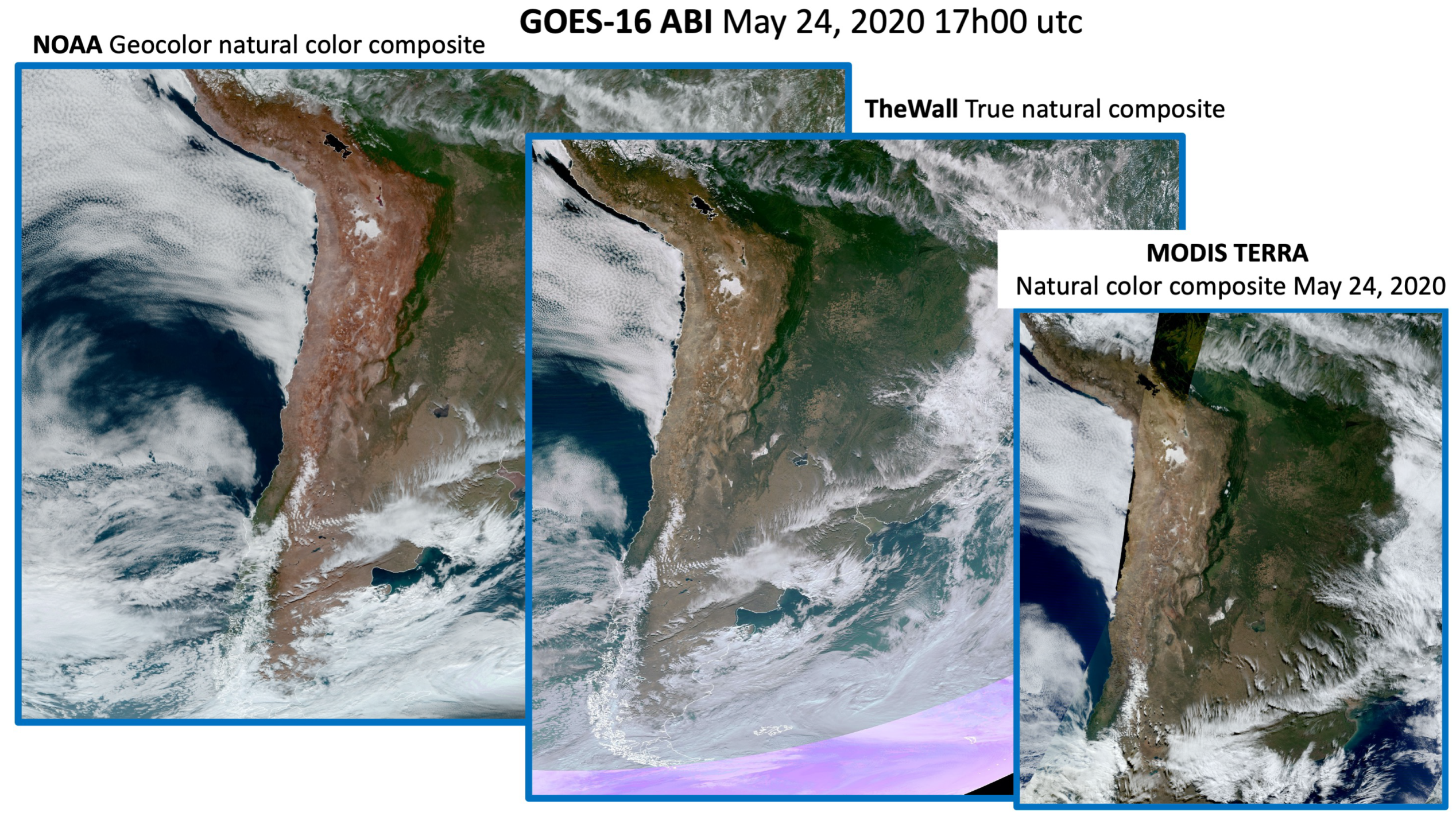

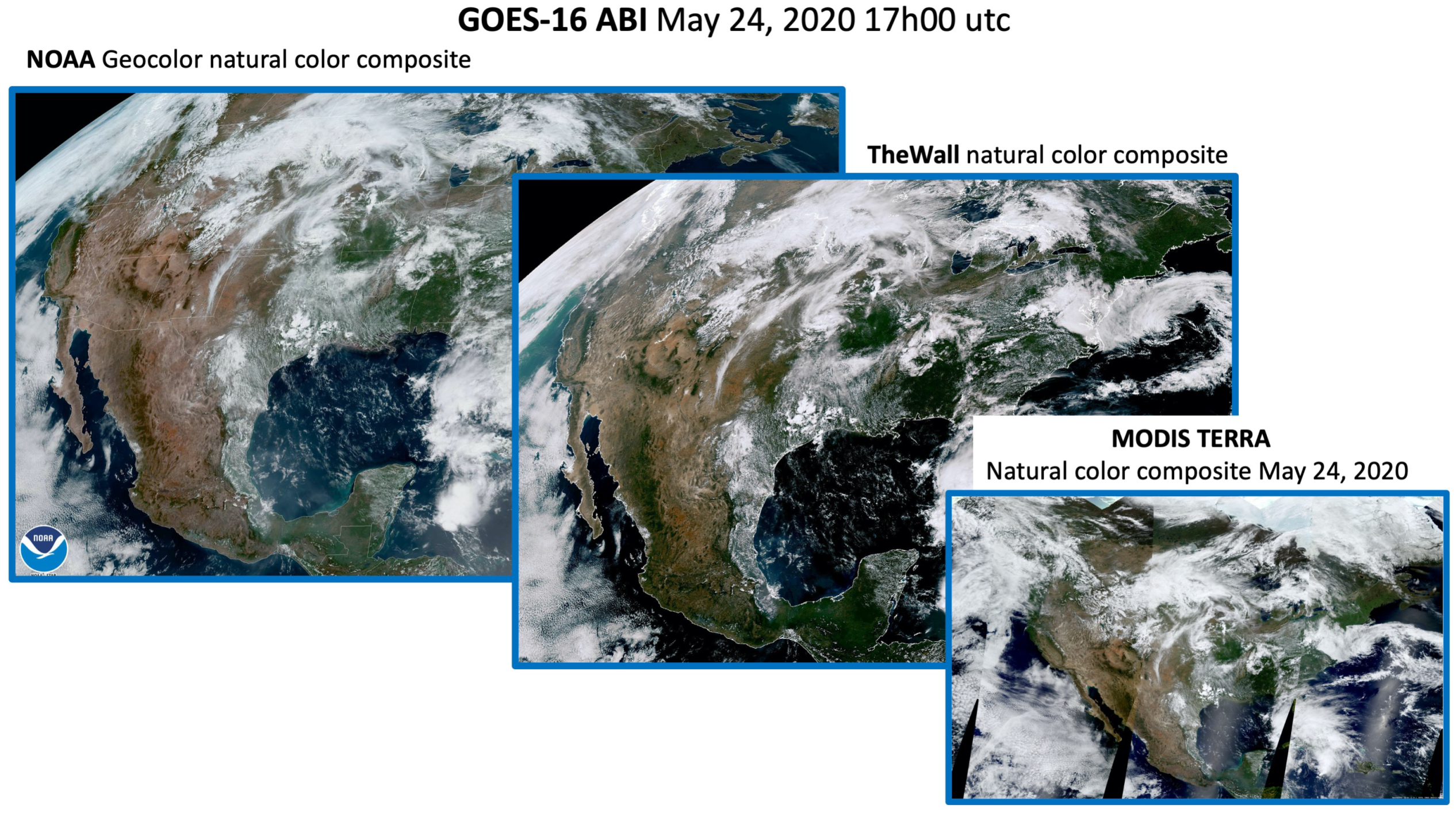

2. Methodology to Process the Geostationary Satellite Data

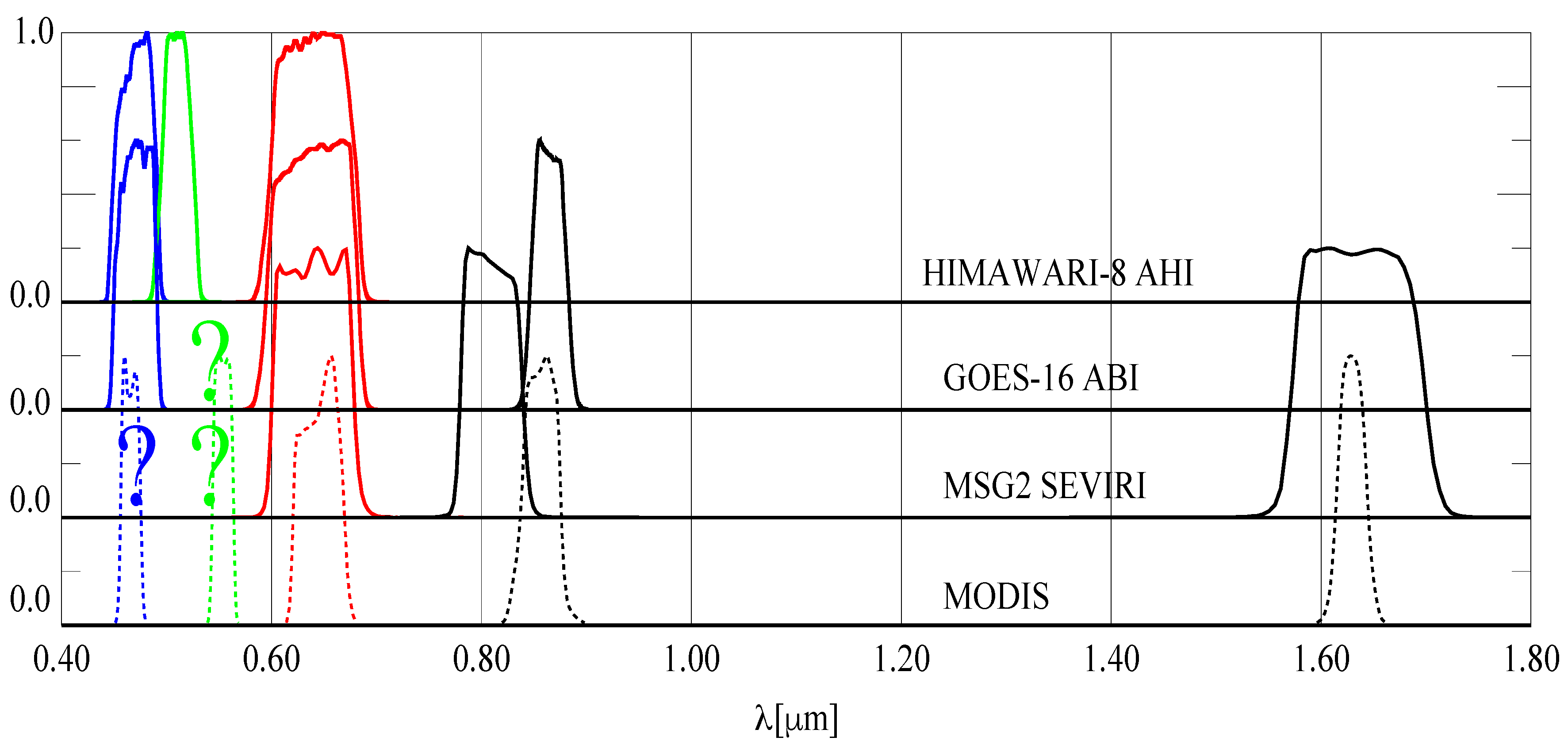

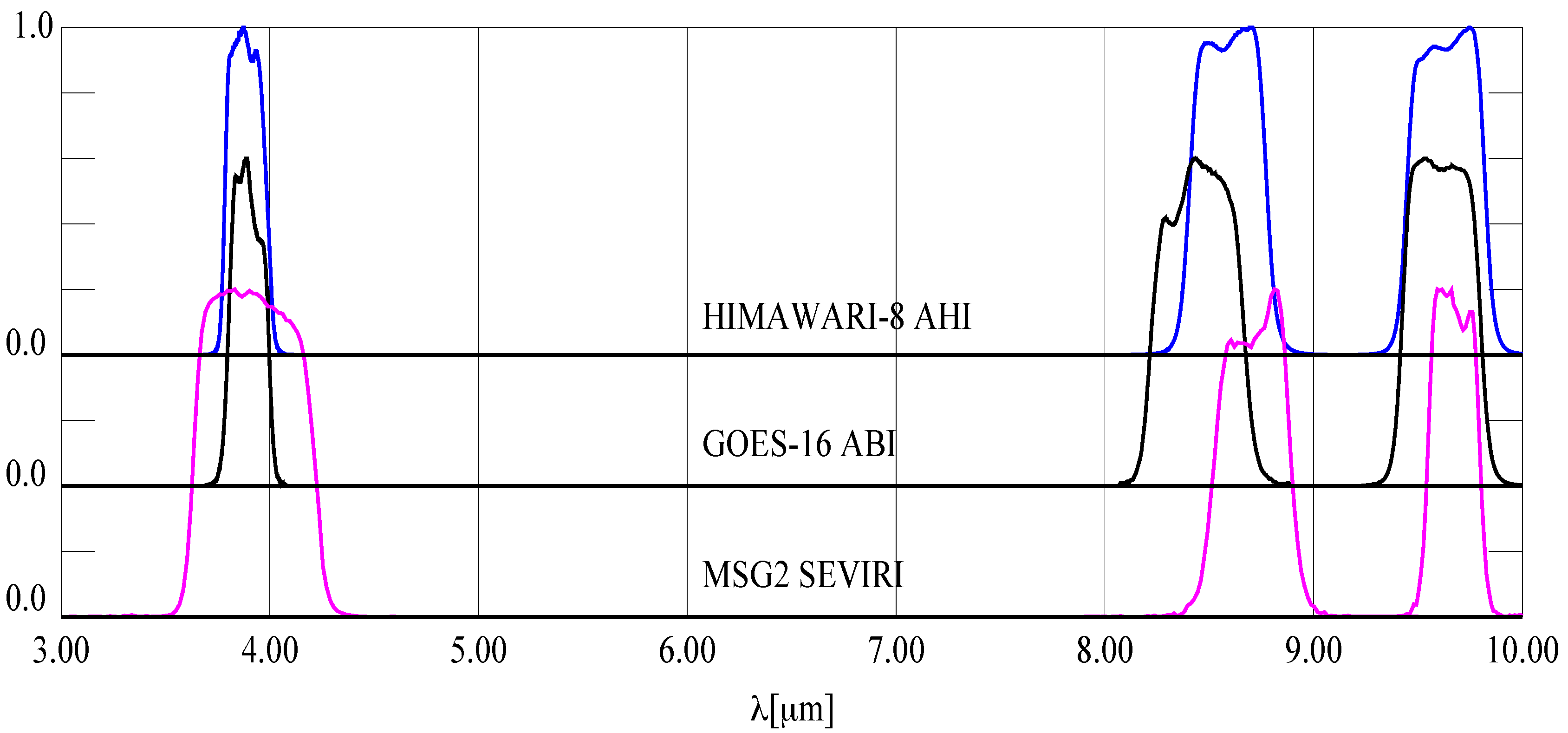

2.1. Spectral Bands and Satellite Specifications of the Level 1B Data Products

2.1.1. Satellites with 1 km Resolution at Nadir

2.1.2. MSG2 SEVIRI Data with 3 km Resolution at Nadir

2.2. Night-Time RGB Composite

2.3. Daytime RGB Composite

2.3.1. Atmospheric Corrections

2.3.2. RGB Visible Composites

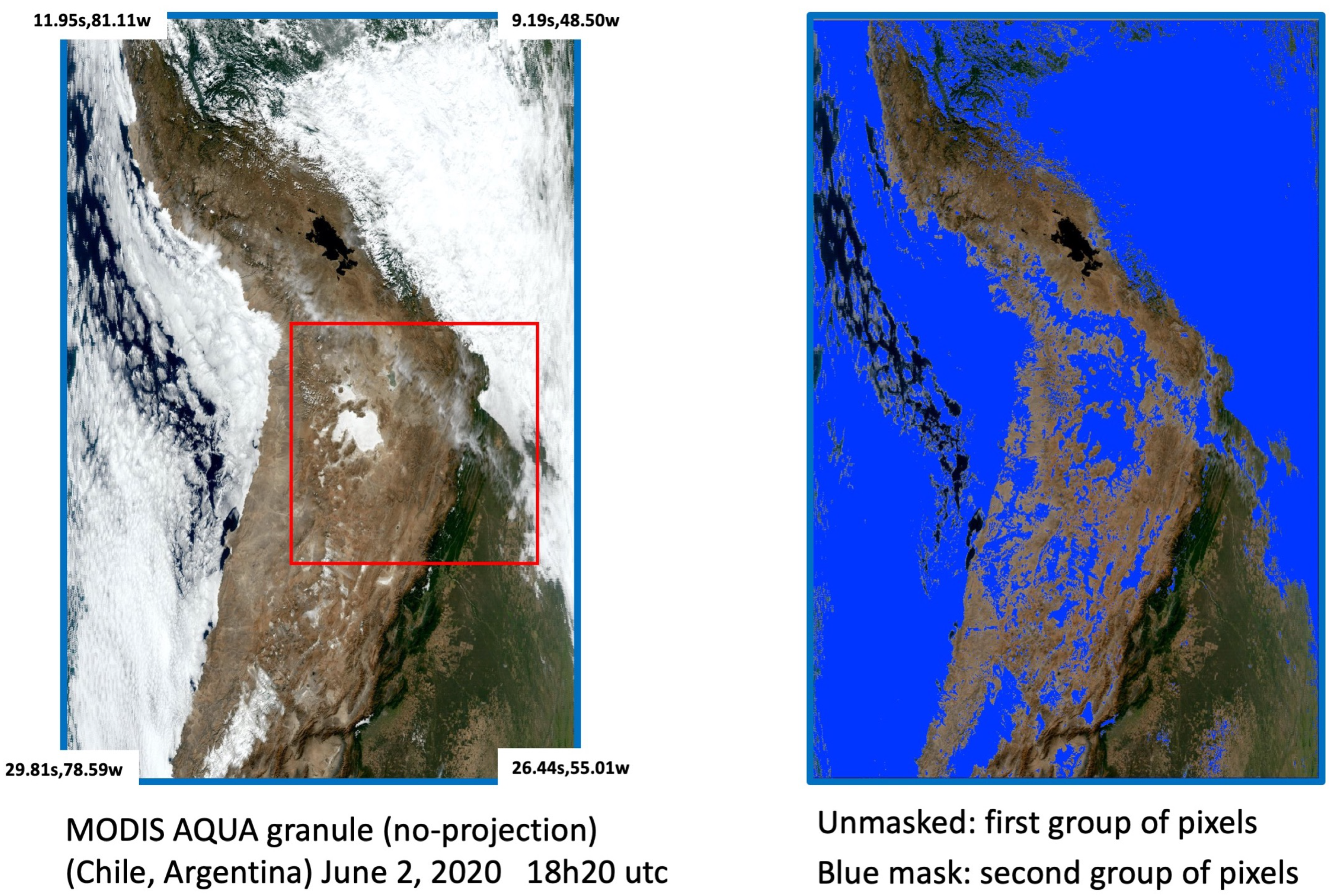

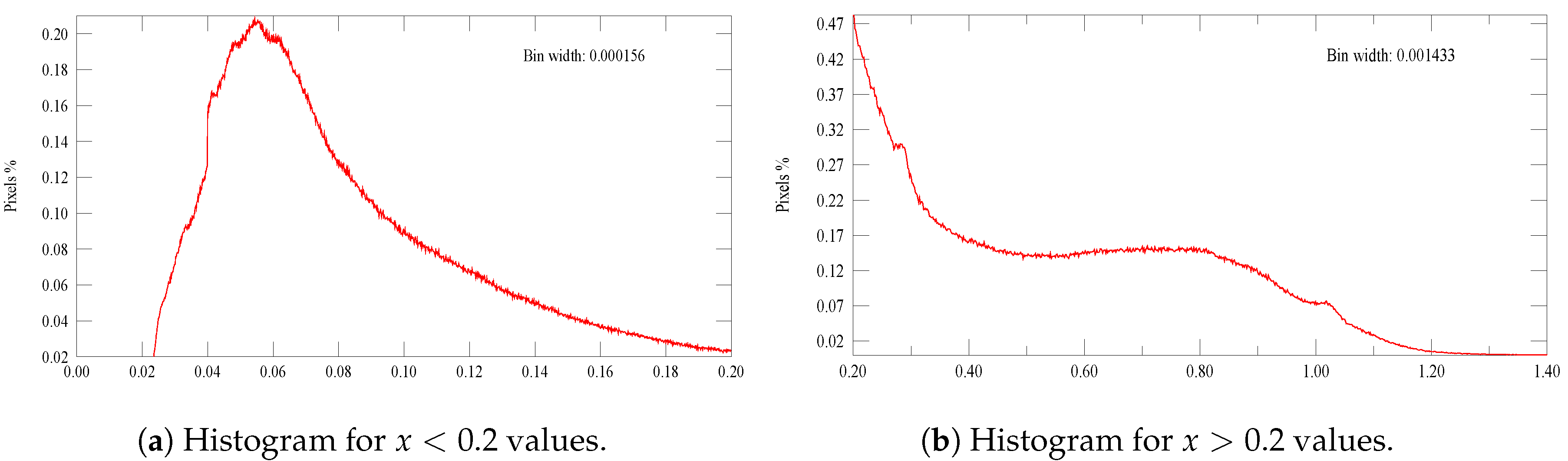

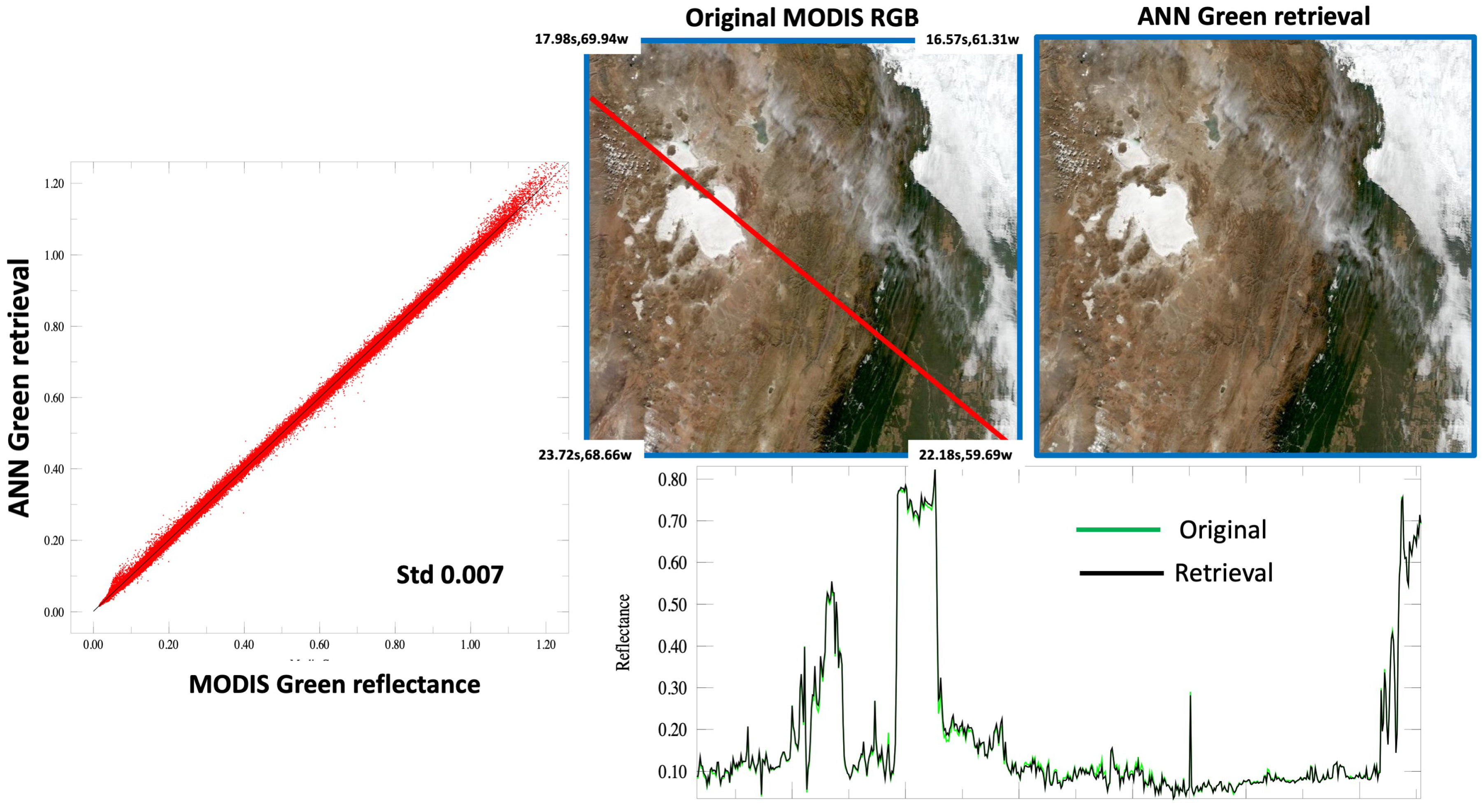
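The compositing equations of this section did not survive extraction. As a generic illustration only, a visible RGB composite is commonly built in four steps. First, stack three (ideally atmospherically corrected) reflectance bands as red, green and blue. Then clip them to [0, 1], apply a display gamma, and scale the result to 8 bits. The helper name `make_rgb_composite` and the gamma of 2.2 are assumptions for this sketch, not parameters taken from the paper.

```python
import numpy as np


def make_rgb_composite(red, green, blue, gamma=2.2):
    """Stack three reflectance arrays into an 8-bit RGB image.

    Hypothetical helper: gamma=2.2 is an assumed display correction,
    not a value reported by the authors.
    """
    # Stack the bands along a trailing colour axis: shape (rows, cols, 3).
    rgb = np.stack([red, green, blue], axis=-1).astype(np.float64)
    # Clip reflectances to the displayable range [0, 1].
    rgb = np.clip(rgb, 0.0, 1.0)
    # Power-law (gamma) stretch that brightens dark surfaces.
    rgb = rgb ** (1.0 / gamma)
    # Scale to 0..255, round, and convert to unsigned 8-bit integers.
    return np.round(rgb * 255.0).astype(np.uint8)


# Tiny 1 x 2 example, including out-of-range values that get clipped.
red = np.array([[0.0, 1.3]])
green = np.array([[0.5, 0.2]])
blue = np.array([[-0.1, 1.0]])
image = make_rgb_composite(red, green, blue)
print(image.shape)  # (1, 2, 3)
```

Clipping before the gamma step keeps negative reflectances (e.g. from an over-aggressive atmospheric correction) from producing invalid powers.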

2.3.3. Reconstruction of the Missing Green Band for GOES-16 ABI

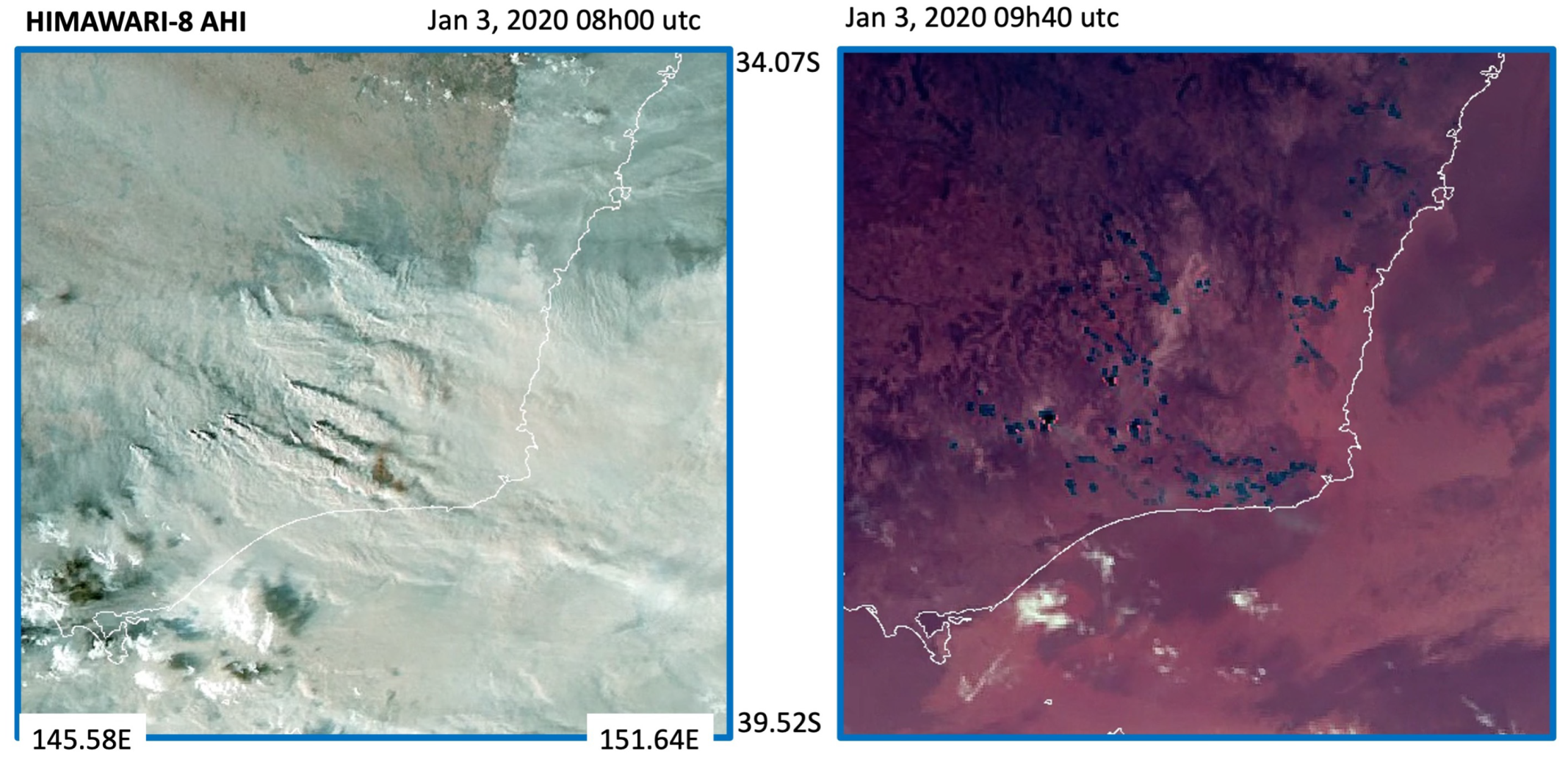

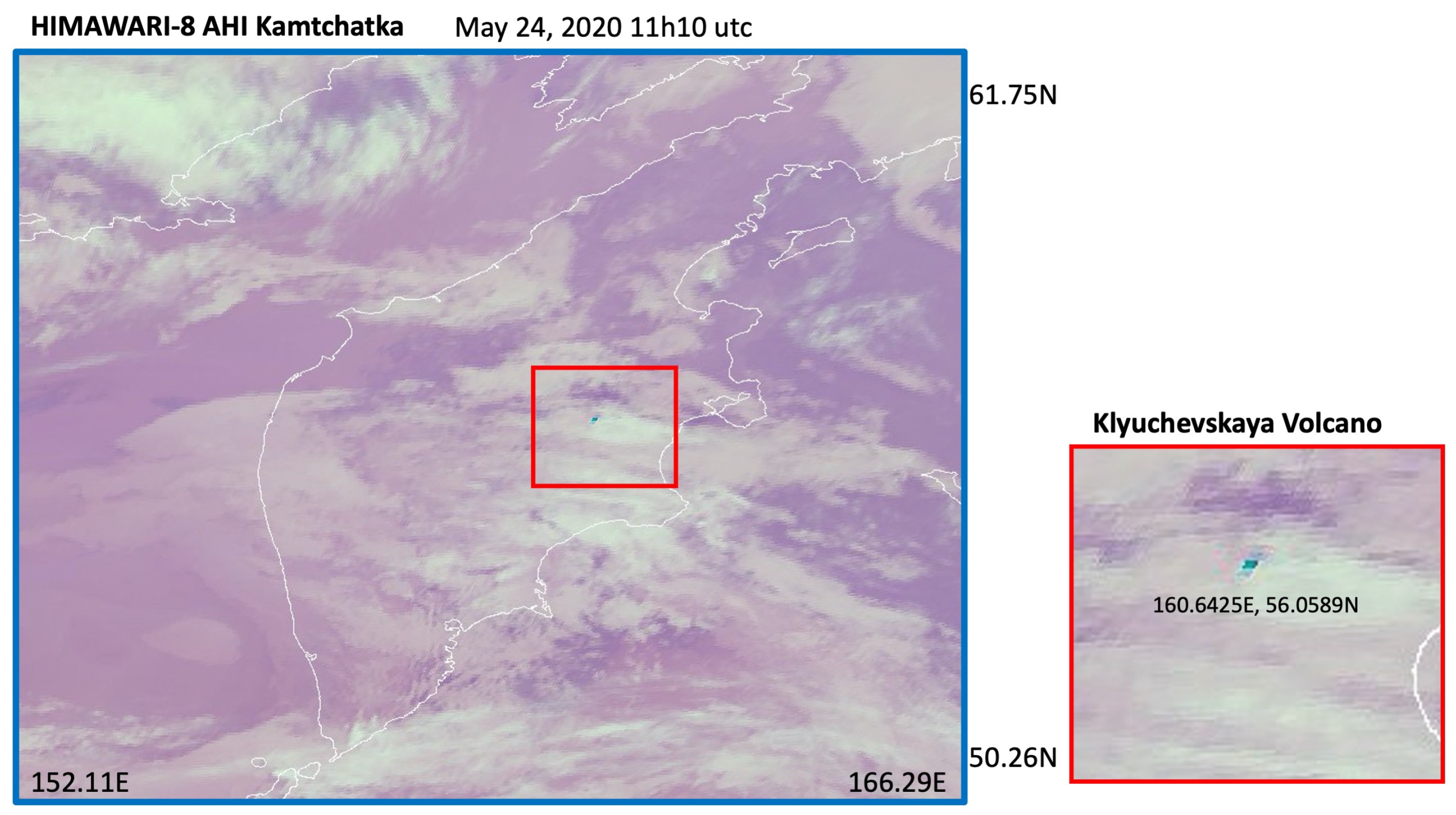
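GOES-16 ABI has no band near 0.55 μm, so a green channel has to be synthesized before a true-color image can be formed. The reconstruction in this paper is based on an artificial neural network (validated in Section 3.1), whose weights are not reproduced here. For comparison only, the sketch below implements a different and much simpler technique. It uses a fixed linear blend of the ABI blue (band 1, 0.47 μm), red (band 2, 0.64 μm) and near-infrared (band 3, 0.86 μm) reflectances, with weights 0.45, 0.45 and 0.10. This is a widely cited "hybrid green" approximation; treat the weights as an assumption to be checked against its source. The function name is hypothetical.

```python
def hybrid_green(blue, red, nir, w_blue=0.45, w_red=0.45, w_nir=0.10):
    """Approximate a ~0.55 um green reflectance from ABI bands 1, 2 and 3.

    Hypothetical helper implementing a fixed linear blend; this is NOT the
    ANN reconstruction described in the paper. Accepts floats or NumPy
    arrays of matching shape (the 0.5 km red band must first be resampled
    to the 1 km grid of the other two bands).
    """
    # The default weights sum to 1, so a spectrally flat (grey) surface
    # keeps the same reflectance in the synthetic green band.
    return w_blue * blue + w_red * red + w_nir * nir


print(round(hybrid_green(0.2, 0.4, 0.6), 4))  # 0.33
```

The small near-infrared weight lets vegetation, which is bright in both green and near-infrared, appear greener than a plain blue/red average would allow.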

3. Results and Discussion

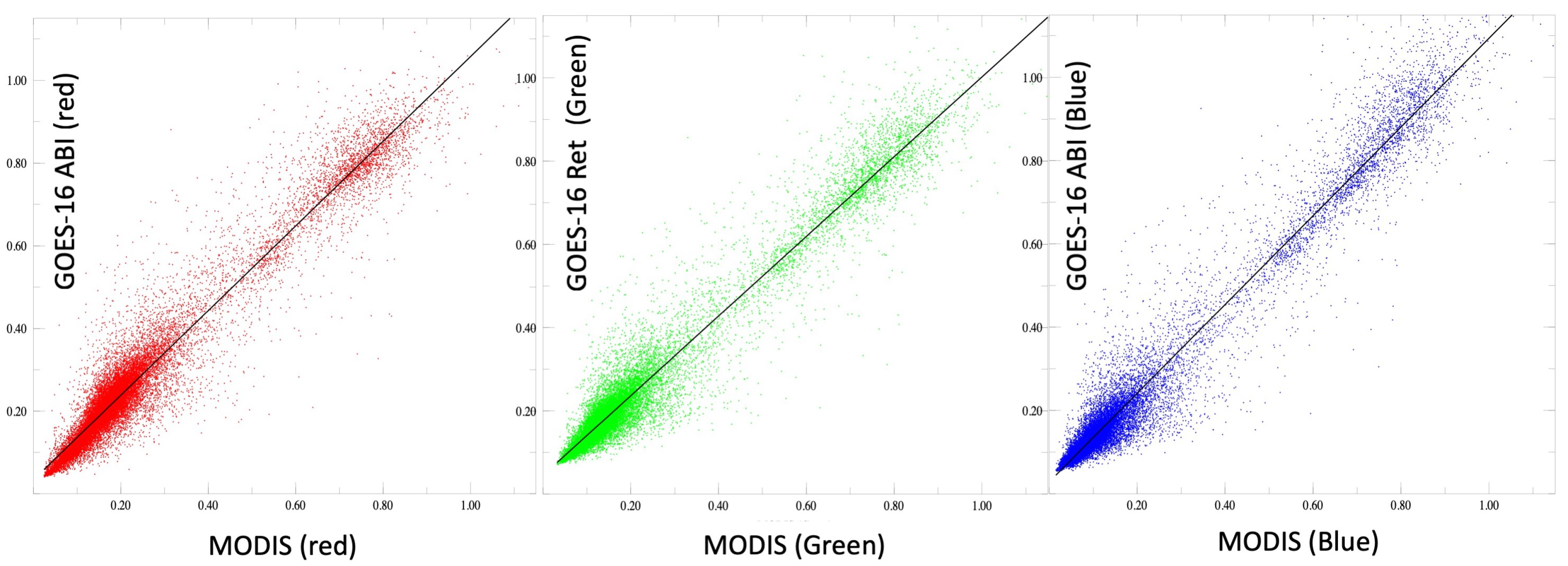

3.1. Validation of the ANN Green Reconstruction for GOES-16

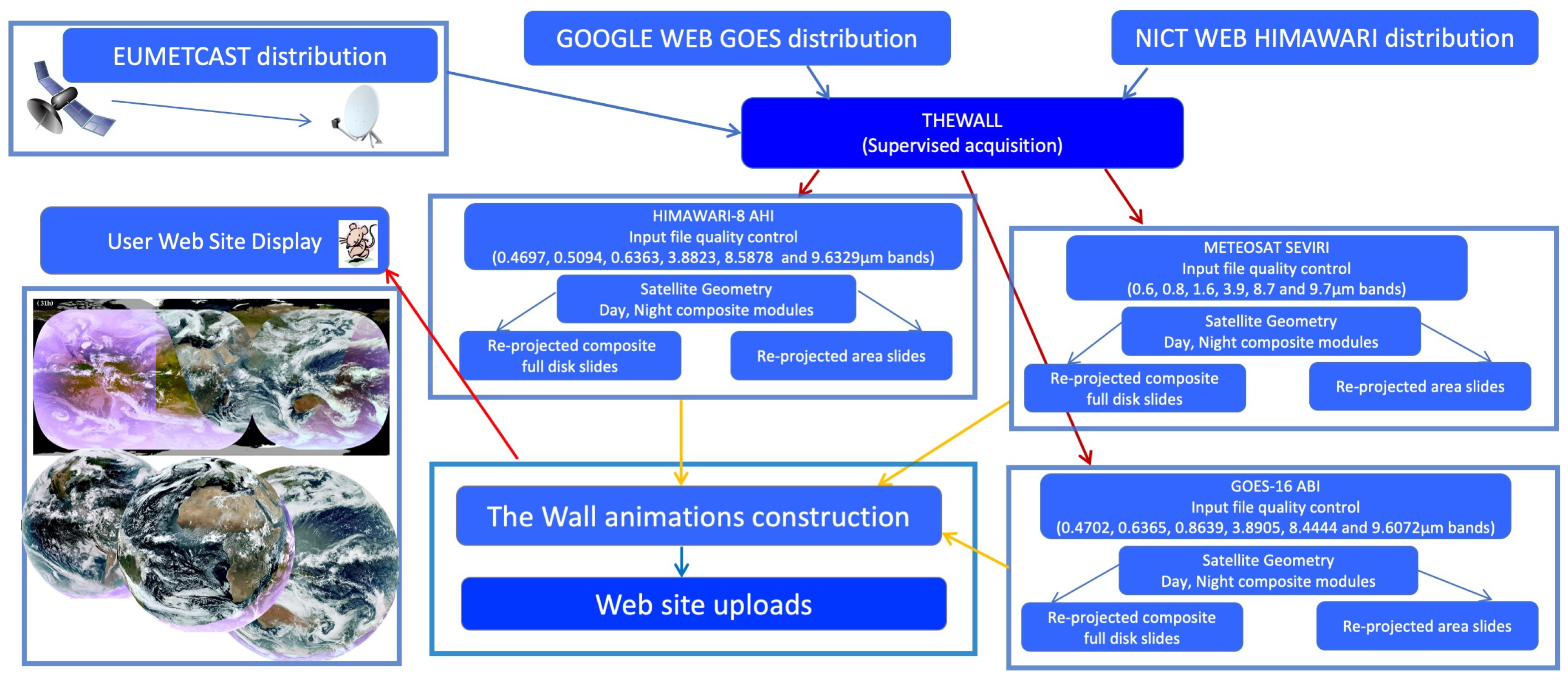

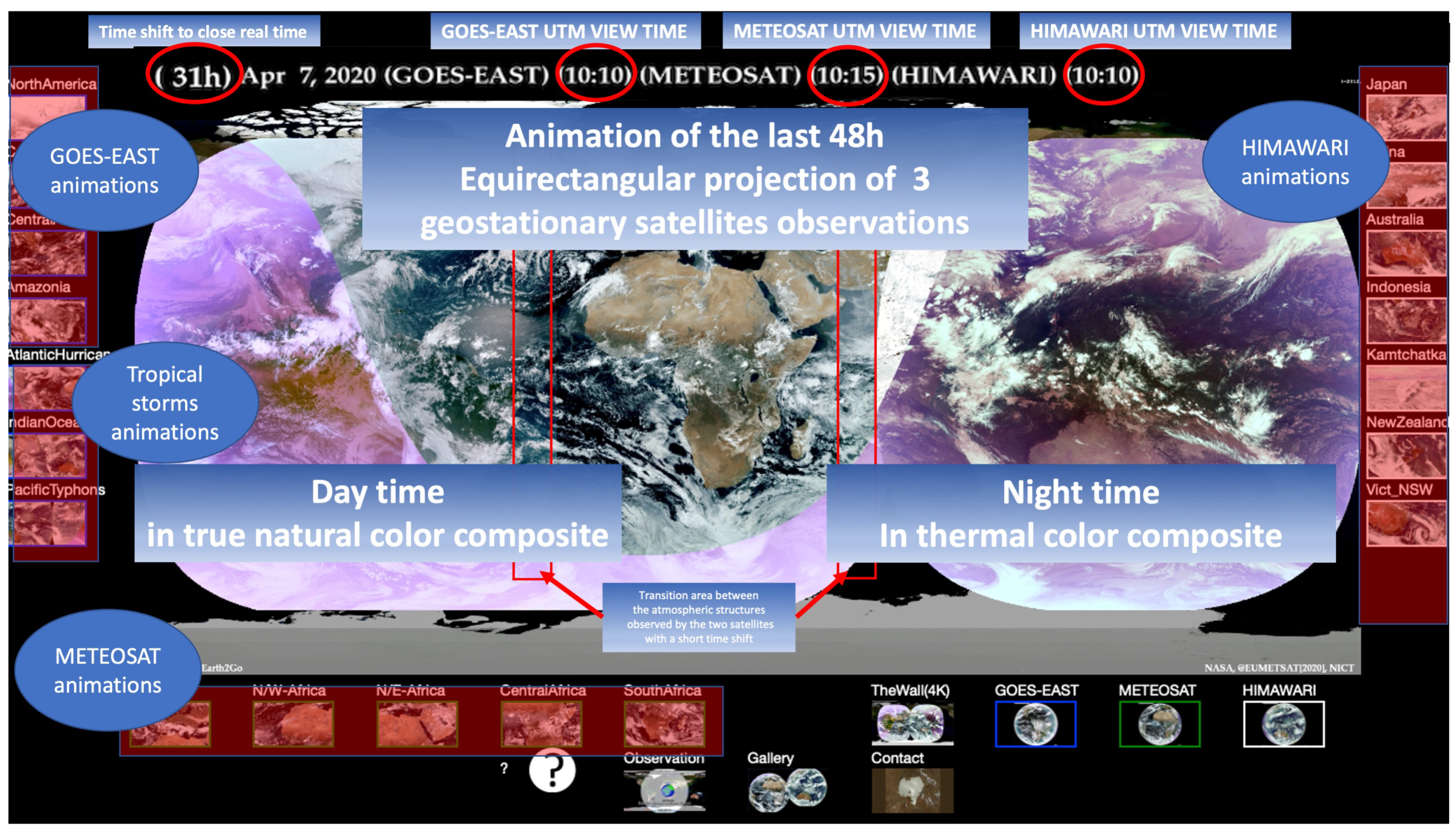

3.2. Description of “The Wall” Processing Chain and Web Visualization Platform

4. Conclusions and Perspectives, and “The Wall” Website

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ABI | Advanced Baseline Imager |
| AHI | Advanced Himawari Imager |
| MODIS | Moderate Resolution Imaging Spectroradiometer |
| MSG2 SEVIRI | Meteosat Second Generation Spinning Enhanced Visible and Infrared Imager |
| NICT | National Institute of Information and Communications Technology |
| PNC | Pseudo Night Composite |
| TNCC | True Natural Color Composite |

References

- Schmit, T.J.; Griffith, P.; Gunshor, M.M.; Daniels, J.M.; Goodman, S.J.; Lebair, W.J. A Closer Look at the ABI on the GOES-R Series. Bull. Am. Meteorol. Soc. 2017, 98, 681–698.

- Bessho, K.; Date, K.; Hayashi, M.; Ikeda, A.; Imai, T.; Inoue, H.; Kumagai, Y.; Miyakawa, T.; Murata, H.; Ohno, T.; et al. An Introduction to Himawari-8/9—Japan’s New-Generation Geostationary Meteorological Satellites. J. Meteorol. Soc. Jpn. Ser. II 2016, 94, 151–183.

- Murata, H.; Saitoh, K.; Sumida, Y. True Color Imagery Rendering for Himawari-8 with a Color Reproduction Approach Based on the CIE XYZ Color System. J. Meteorol. Soc. Jpn. Ser. II 2018, 96B, 211–238.

- Schmit, T.J.; Lindstrom, S.S.; Gerth, J.J.; Gunshor, M.M. Applications of the 16 Spectral Bands on the Advanced Baseline Imager (ABI). J. Oper. Meteorol. 2018, 6, 34–46.

- Himawari Real-Time Image. Available online: https://www.data.jma.go.jp/mscweb/data/himawari/sat_img.php (accessed on 13 June 2020).

- Natural Colour Enhanced RGB—MSG—0 Degree. Available online: https://navigator.eumetsat.int/product/EO:EUM:DAT:MSG:NCL_ENH (accessed on 13 June 2020).

- Miller, S.D.; Schmidt, C.C.; Schmit, T.J.; Hillger, D.W. A case for natural colour imagery from geostationary satellites, and an approximation for the GOES-R ABI. Int. J. Remote Sens. 2012, 33, 3999–4028.

- Miller, S.D.; Lindsey, D.T.; Seaman, C.J.; Solbrig, J.E. GeoColor: A Blending Technique for Satellite Imagery. J. Atmos. Ocean. Technol. 2020, 37, 429–448.

- Gonzalez, L.; Briottet, X. North Africa and Saudi Arabia Day/Night Sandstorm Survey (NASCube). Remote Sens. 2017, 9, 896.

- Murata, K.T.; Pavarangkoon, P.; Higuchi, A.; Toyoshima, K.; Yamamoto, K.; Muranaga, K.; Nagaya, Y.; Izumikawa, Y.; Kimura, E.; Mizuhara, T. A web-based real-time and full-resolution data visualization for Himawari-8 satellite sensed images. Earth Sci. Inform. 2018, 11, 217–237.

- Murata, K.T.; Watanabe, H.; Ukawa, K.; Muranaga, K.; Suzuki, Y.; Yamamoto, K.; Kimura, E. A Report of the NICT Science Cloud in 2013. J. Jpn. Soc. Inf. Knowl. 2014, 24, 275–290.

- Google Cloud Platform for GOES-16 ABI. Available online: https://console.cloud.google.com/marketplace/product/noaa-public/goes-16?pli=1 (accessed on 13 June 2020).

- Vermote, E.F.; Kotchenova, S.Y.; Tanré, D.; Deuzé, J.L.; Herman, M.; Roger, J.C.; Morcrette, J.J. 6SV Code. 2015. Available online: http://6s.ltdri.org/2015 (accessed on 13 June 2020).

- Vermote, E.; Tanré, D. Analytical expressions for radiative properties of planar Rayleigh scattering media, including polarization contributions. J. Quant. Spectrosc. Radiat. Transf. 1992, 47, 305–314.

- Vermote, E.F.; Tanré, D.; Deuzé, J.L.; Herman, M.; Morcrette, J.-J. Second Simulation of the Satellite Signal in the Solar Spectrum, 6S: An overview. IEEE Trans. Geosci. Remote Sens. 1997, 35, 675–686.

- Shettle, E.P.; Kneizys, F.X.; Gallery, W.O. Suggested modification to the total volume molecular scattering coefficient in LOWTRAN: Comment. Appl. Opt. 1980, 19, 2873–2874.

- Chandrasekhar, S. Radiative Transfer; Dover Publications, Inc.: New York, NY, USA, 1960.

- Joseph, J.H.; Wiscombe, W.J.; Weinman, J.A. The Delta-Eddington Approximation for Radiative Flux Transfer. J. Atmos. Sci. 1976, 33, 2452–2459.

- Lenoble, J.; Herman, M.; Deuzé, J.L.; Lafrance, B.; Santer, R.; Tanré, D. A successive order of scattering code for solving the vector equation of transfer in the earth’s atmosphere with aerosols. J. Quant. Spectrosc. Radiat. Transf. 2007, 107, 479–507.

- Zell, A.; Mache, N.; Hübner, R.; Mamier, G.; Vogt, M.; Schmalzl, M.; Herrmann, K.U. SNNS (Stuttgart Neural Network Simulator). In Neural Network Simulation Environments; Springer: Boston, MA, USA, 1994; pp. 165–186.

- Miikkulainen, R. Topology of a Neural Network. In Encyclopedia of Machine Learning; Sammut, C., Webb, G.I., Eds.; Springer US: Boston, MA, USA, 2010; pp. 988–989.

- Gonzalez, L.; Vallet, V.; Yamamoto, H. Global 15-Meter Mosaic Derived from Simulated True-Color ASTER Imagery. Remote Sens. 2019, 11, 441.

| Wavelength (μm) | PNC channel | | | | |
|---|---|---|---|---|---|
| MSG2 SEVIRI | | | | | |
| 3.88 | R | 0.004975 | 1.308458 | 7.5 | 9.5 |
| 8.58 | G | 0.002165 | 1.246753 | 7.7 | 9.5 |
| 9.63 | B | 0.004202 | 1.899160 | 7.5 | 9.5 |
| GOES-16 ABI | | | | | |
| 3.89 | R | 0.000338 | 1.007768 | 7.5 | 9.5 |
| 8.44 | G | 0.000501 | 1.046115 | 7.7 | 9.5 |
| 9.60 | B | 0.001000 | 1.176000 | 7.5 | 9.5 |
| Himawari-8 AHI | | | | | |
| 3.9 | R | −1.269036 | 0.842010 | N/A | |
| 8.7 | G | −0.119018 | 1.118907 | N/A | |
| 9.7 | B | −0.181937 | 1.250030 | N/A | |

| Source | Link |
|---|---|
| GOES-16 satellite | |
| GOES-16 imagery | https://www.goes-r.gov/multimedia/dataAndImageryImagesGoes-16.html#GOES-EastOperational |
| NOAA GOES image viewer | https://www.star.nesdis.noaa.gov/GOES/fulldisk.php?sat=G17 |
| GOES-16 and Himawari-8 satellites | |
| SSEC Geostationary Satellite Imagery | https://www.ssec.wisc.edu/data/geo/#/animation |
| Himawari-8 satellite | |
| NICT | https://himawari8.nict.go.jp/ |
| JAXA EORC | https://www.eorc.jaxa.jp/ptree/index.html |
| Meteorological Satellite Center of JMA | https://www.data.jma.go.jp/mscweb/data/himawari/index.html |
| MSG2 SEVIRI | |
| ICARE | https://www.icare.univ-lille.fr/data-access/browse-images/geostationary-satellites/ |
| METEOSAT | http://www.meteo-spatiale.fr/src/images_en_direct.php |
| NASCube | http://nascube.univ-lille1.fr/ |

| Events and hyperlinks to | http://earth2day.com/TheWall/Gallery/gallery.html |
|---|---|
| A huge sandstorm crossing the Atlantic in June 2020 | http://earth2day.com/TheWall/Gallery/Movies/SANDSTORM20200611140020200620160016F.html |
| Huge fires in Canada in June 2019 | http://earth2day.com/TheWall/Gallery/Movies/NORTHAMERIC2201905280000201906032355.html |
| 1 km resolution imagery showing multiple fires in Amazonia in August 2019 | http://earth2day.com/TheWall/Gallery/Movies/AMAZONI1km201908110000201908172300.html |
| Wildfires in south-eastern Australia in January 2020 | http://earth2day.com/TheWall/Gallery/Movies/VICT_OSW202001010000202001080700.html |
| Huge sandstorm covering Australia in January 2020 | http://earth2day.com/TheWall/Gallery/Movies/AUSTRALI2202001100600202001130600.html |
| Huge sandstorm originating in Mongolia and crossing China toward Japan in May 2020 | http://earth2day.com/TheWall/Gallery/Movies/MONGOLIASANDSTORM202005110600202005130540.html |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Gonzalez, L.; Yamamoto, H. The Wall: The Earth in True Natural Color from Real-Time Geostationary Satellite Imagery. Remote Sens. 2020, 12, 2375. https://doi.org/10.3390/rs12152375
