Author Contributions

Conceptualization, H.B. (Haris Balta), J.V., H.B. (Halil Beglerovic), G.D.C., and B.S.; methodology, H.B. (Haris Balta), J.V., G.D.C., and B.S.; software, H.B. (Haris Balta) and H.B. (Halil Beglerovic); validation, H.B. (Haris Balta) and H.B. (Halil Beglerovic); formal analysis, H.B. (Haris Balta), J.V., G.D.C., and B.S.; investigation, H.B. (Haris Balta); resources, H.B. (Haris Balta) and G.D.C.; writing—original draft preparation, H.B. (Haris Balta) and J.V.; writing—review and editing, H.B. (Halil Beglerovic), G.D.C., and B.S.; visualization, H.B. (Haris Balta) and H.B. (Halil Beglerovic); supervision, H.B. (Haris Balta), J.V., G.D.C., and B.S.; project administration, G.D.C.; funding acquisition, H.B. (Haris Balta) and G.D.C. All authors have read and agreed to the published version of the manuscript.

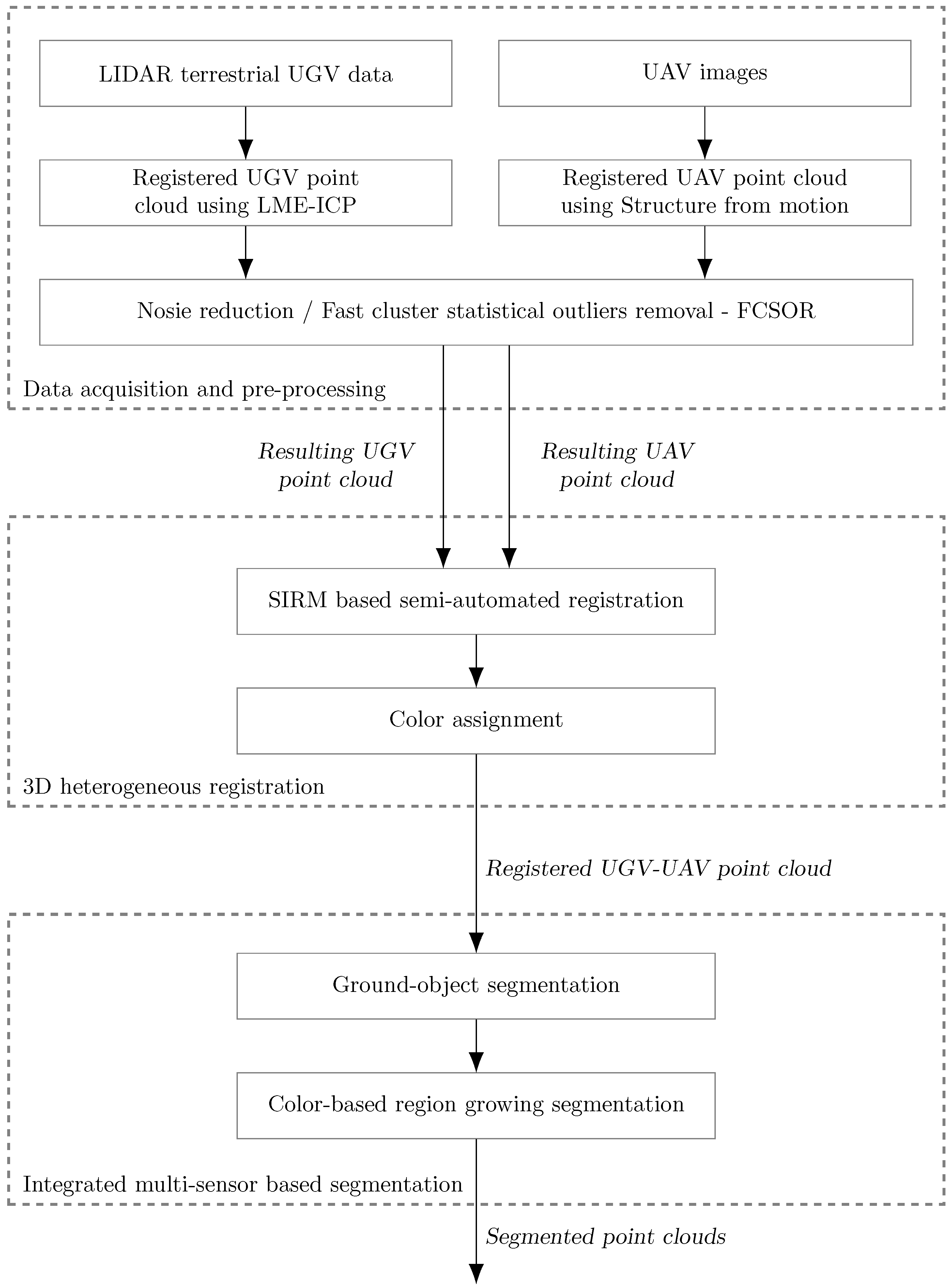

Figure 1.

A flowchart of the proposed 3D heterogeneous registration and integrated segmentation framework.

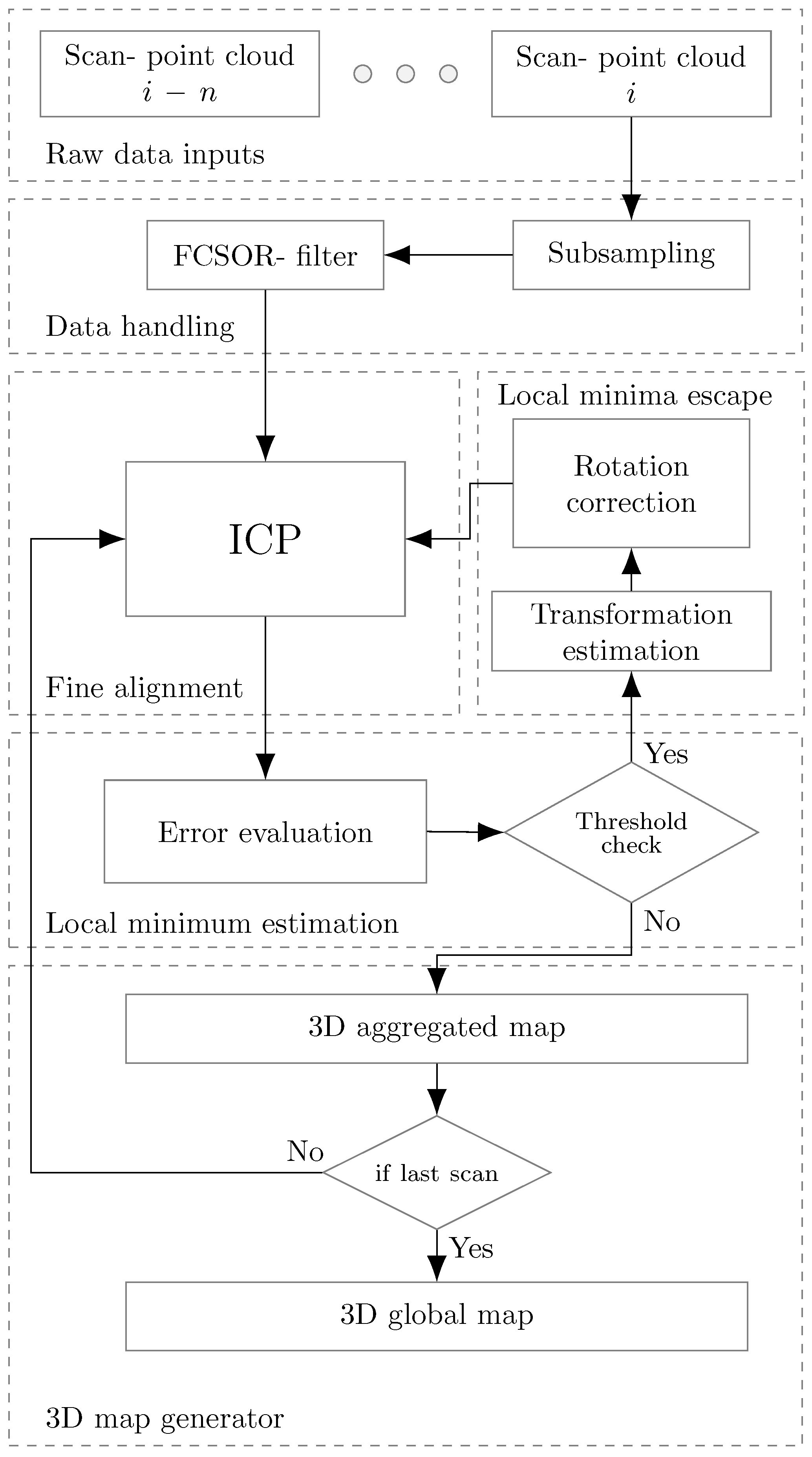

Figure 2.

Novel 3D model registration framework scheme.

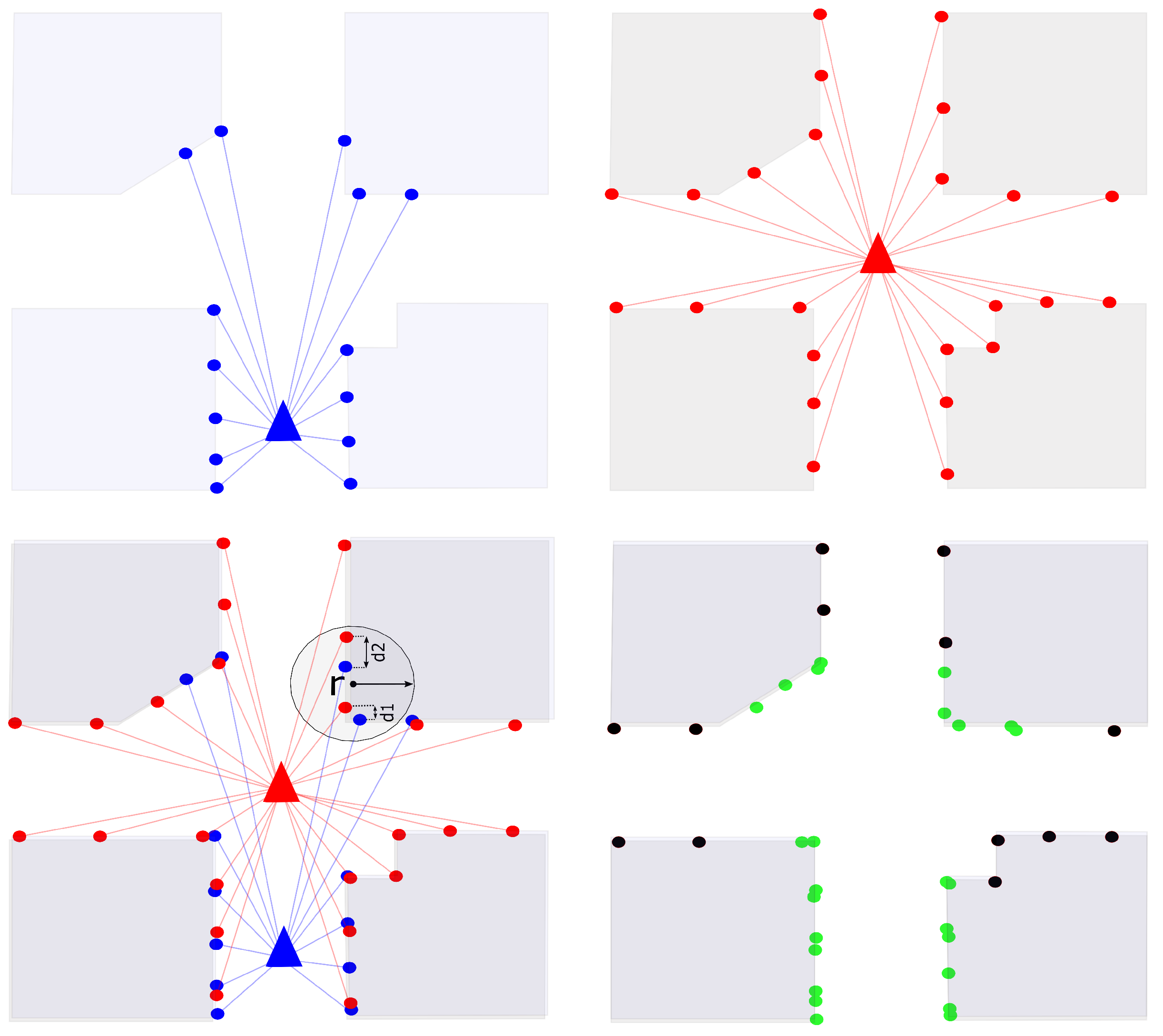

Figure 3.

Scan registration sequence. The upper left and upper right illustrations show two successive scans; the lower left illustration shows the overlapping area with the error estimation within a certain radius r; the lower right illustration shows the registered scan, where the green points represent the overlapping points and the black points are the newly added points from the second scan (red).

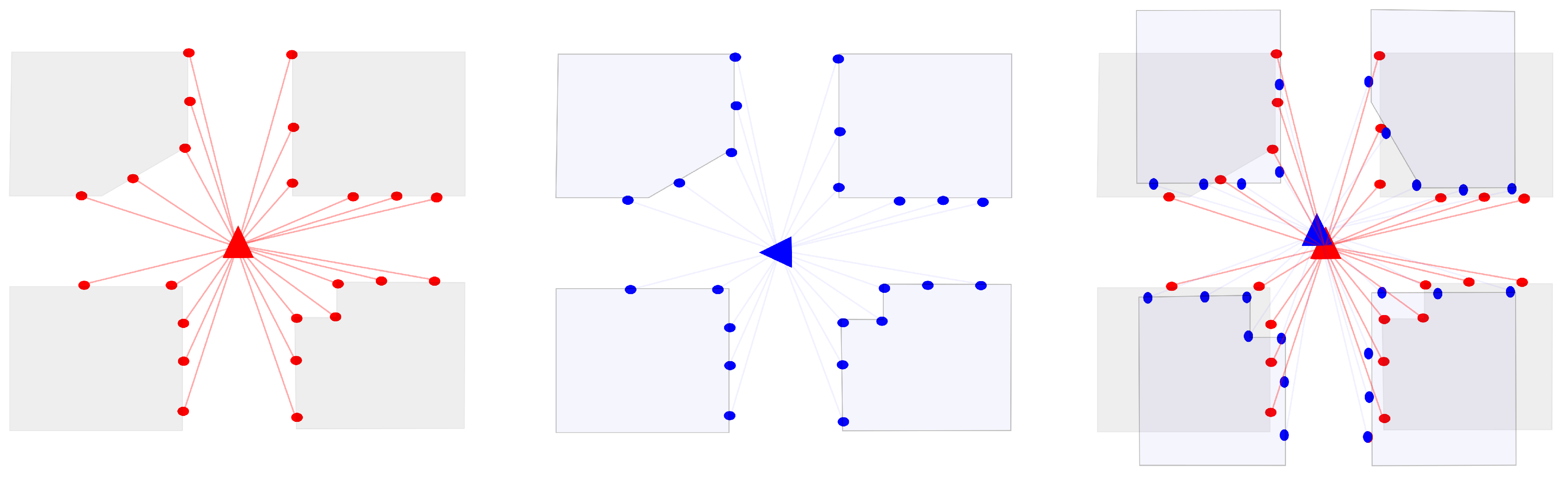

Figure 4.

Local minima situation caused by a significant change of the angle of rotation.

Figure 5.

Local minima escape, computation of surface normals, and two-step local minima escape.

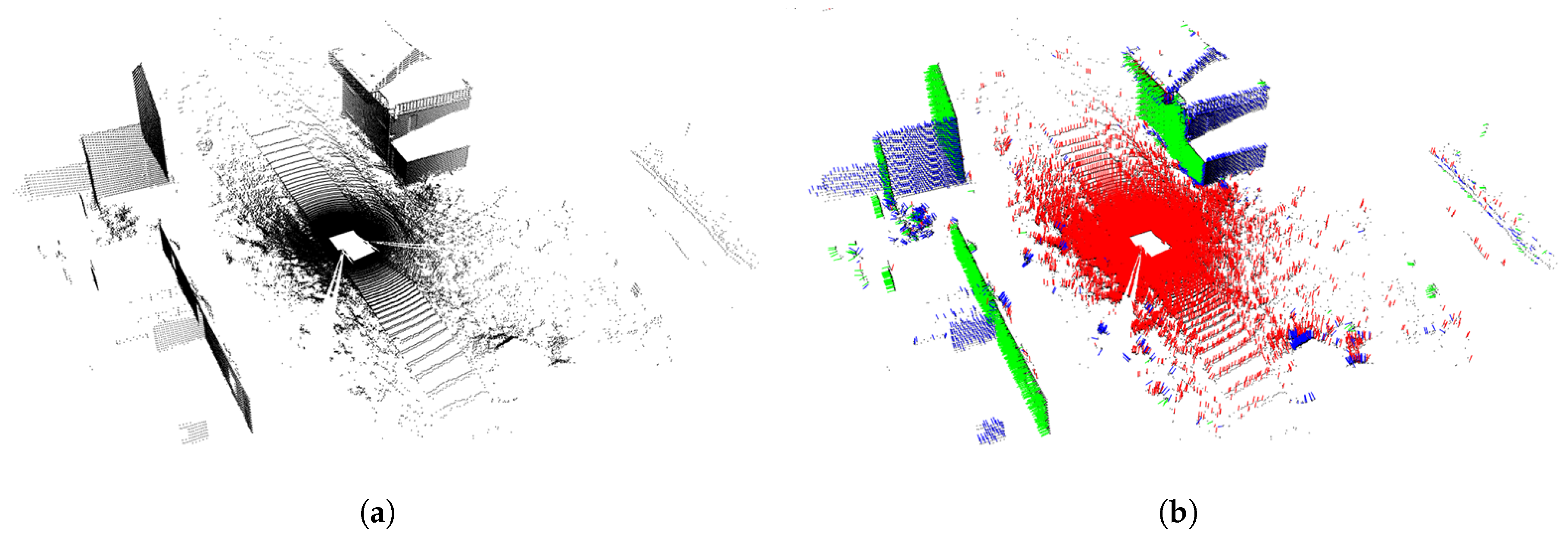

Figure 6.

Surface normal estimation of an example dataset: (a) raw data scan; (b) vectors (x, y, z) represented by the colors blue, green, and red, respectively (Dataset: Military-base Marche-en-Famenne).

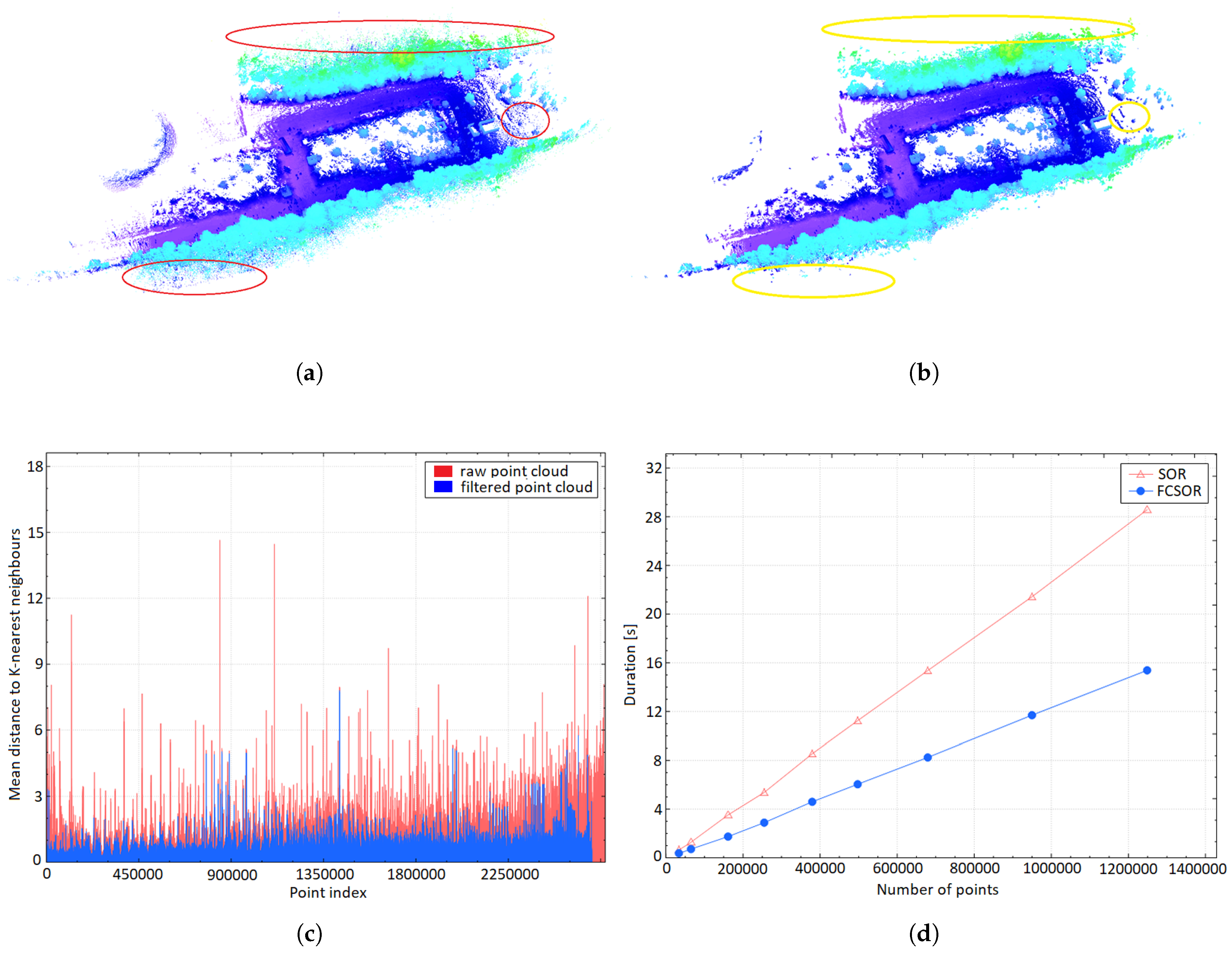

Figure 7.

(a) Raw input point cloud P; (b) FCSOR-filtered point cloud (around points rejected); (c) relationship between the raw input and the filtered output point cloud; (d) execution time comparison between the FCSOR and SOR methods for 3D inputs of different density (Dataset: Military-base Marche-en-Famenne, Belgium).

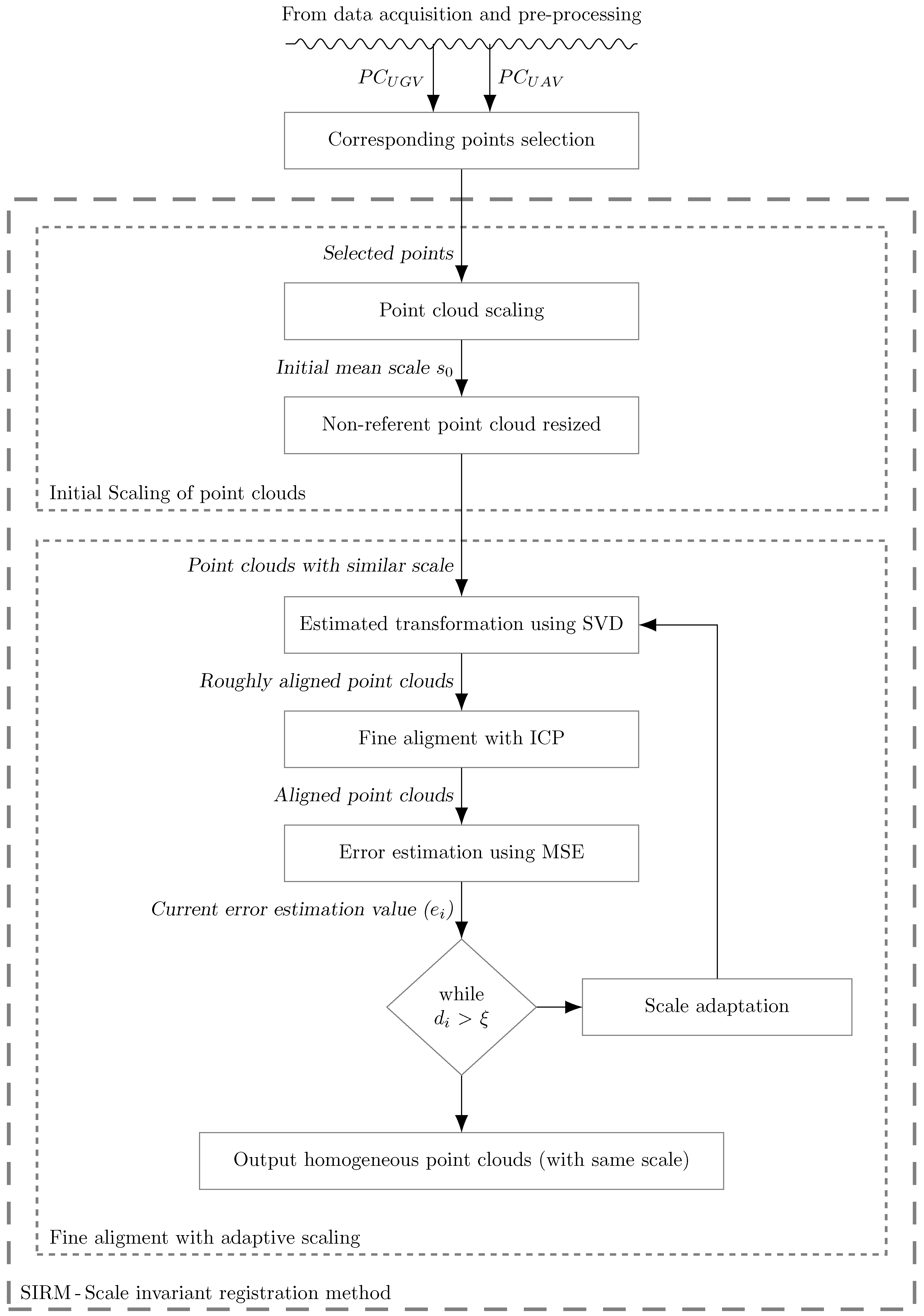

Figure 8.

Proposed architecture of the semi-automated 3D registration based on SIRM.

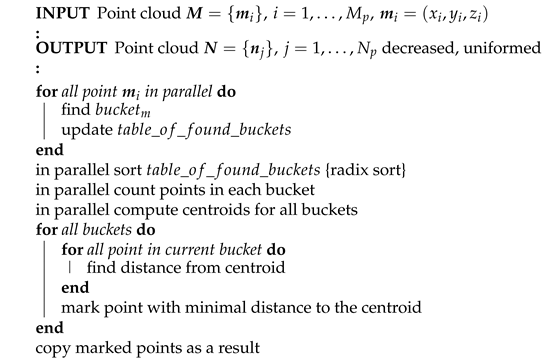

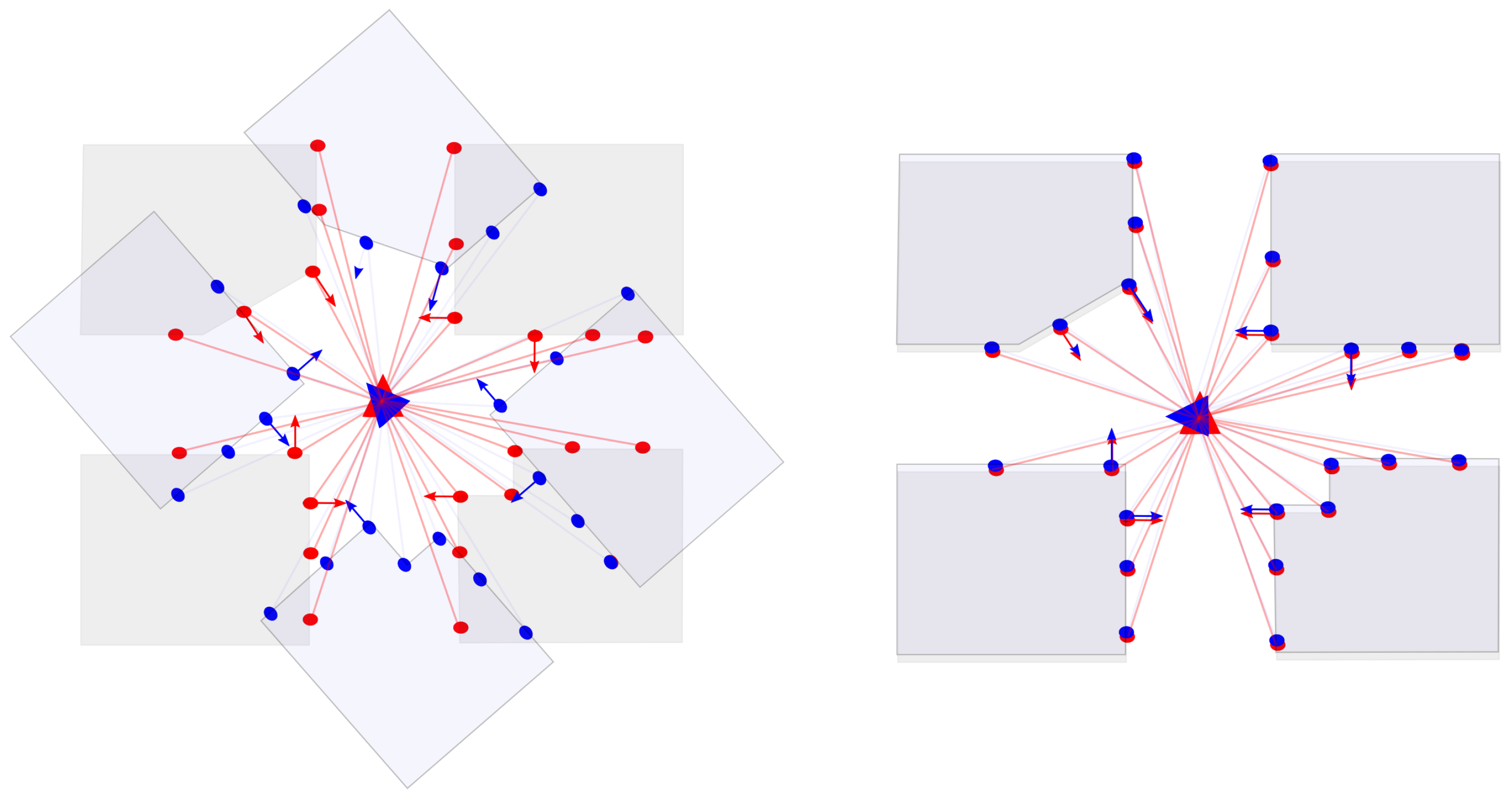

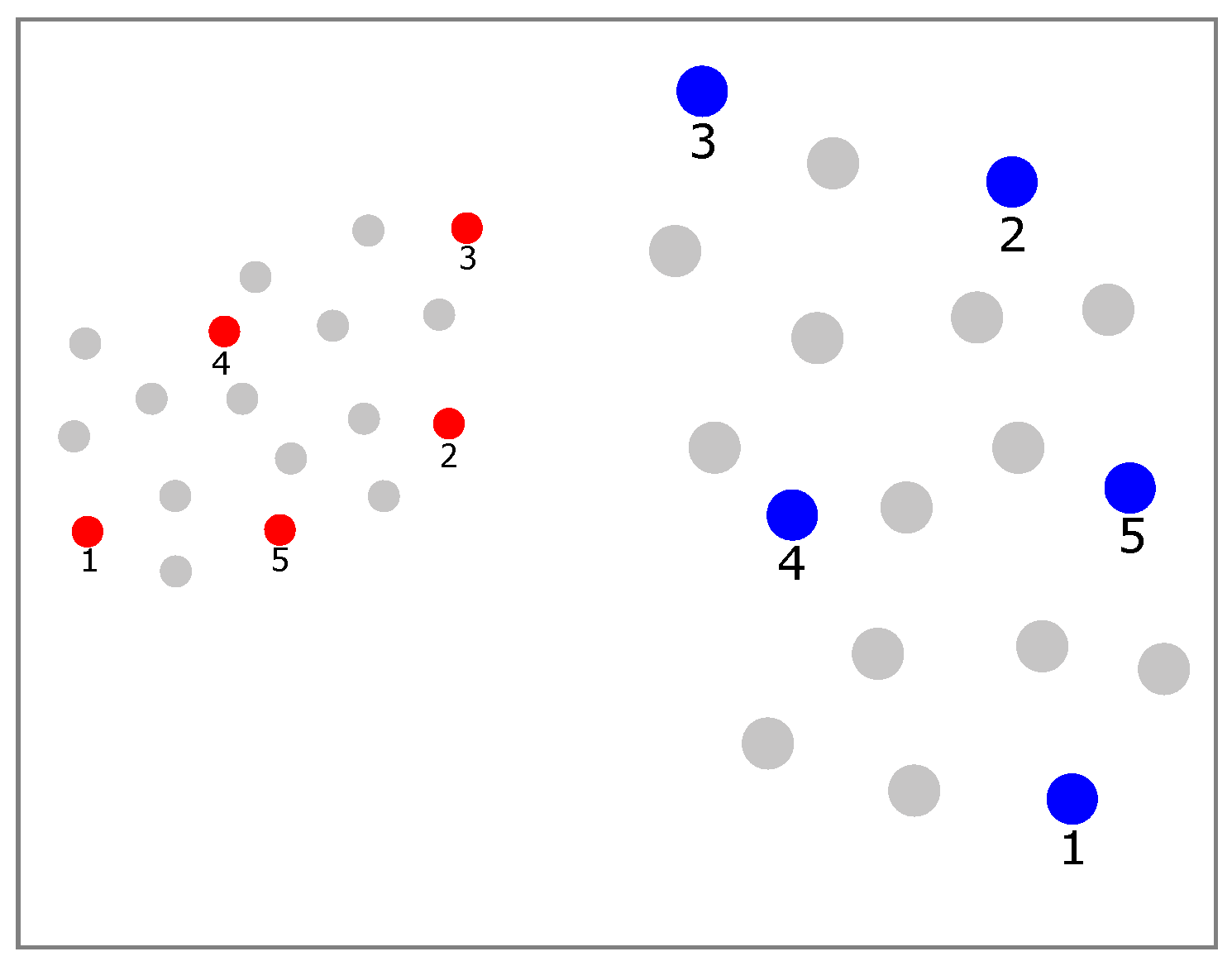

Figure 9.

Illustration of the corresponding point selection.

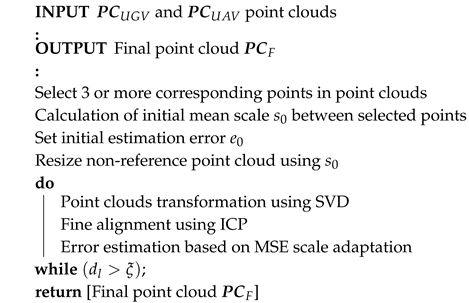

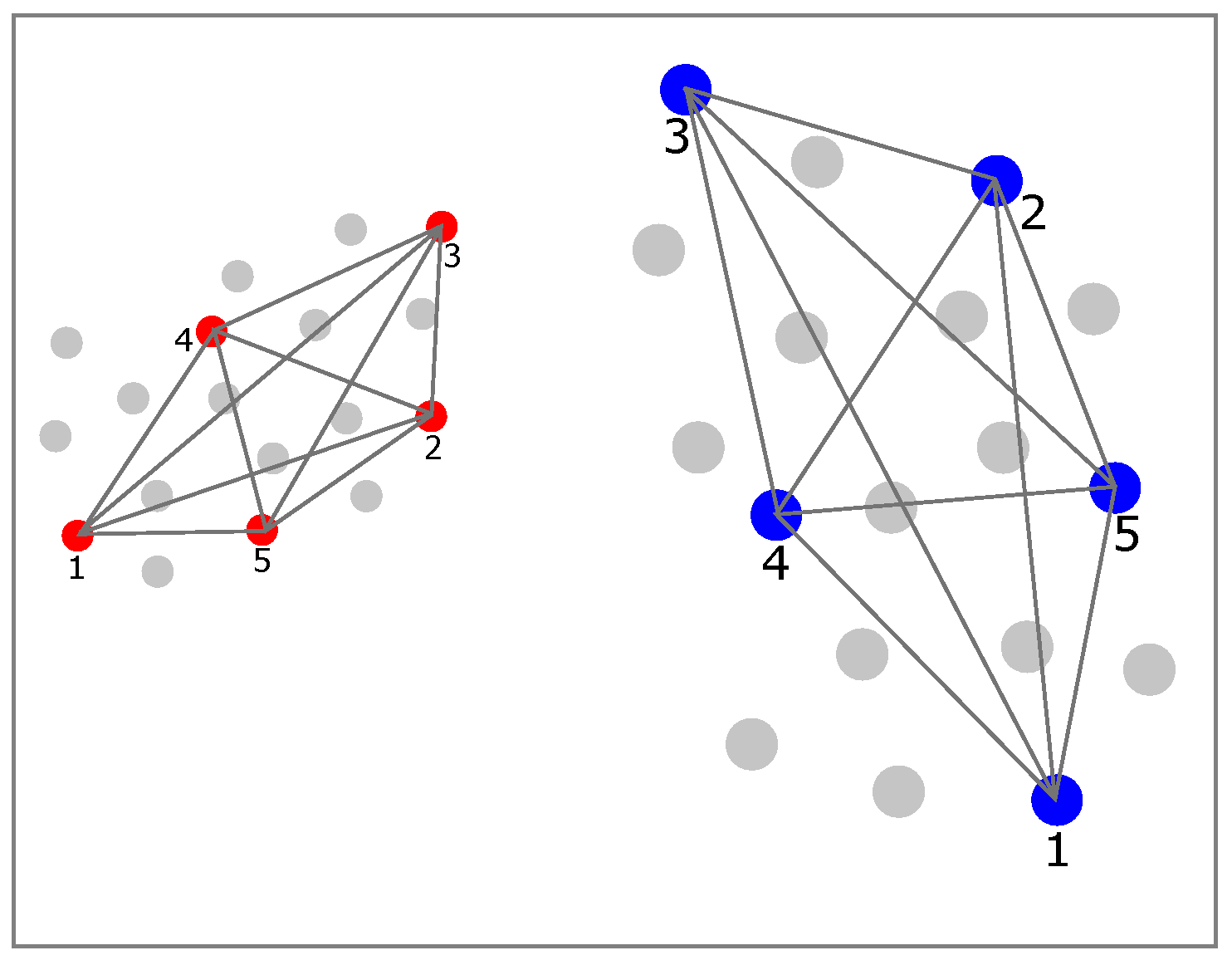

Figure 10.

Illustration of the initial scale estimation process.

Figure 11.

Fine alignment tuning and registration.

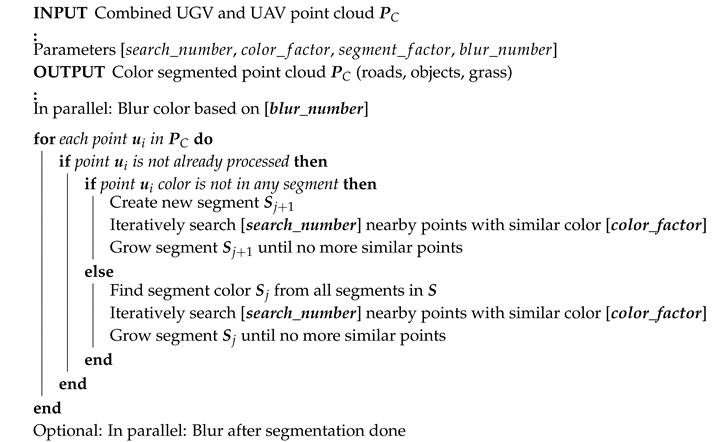

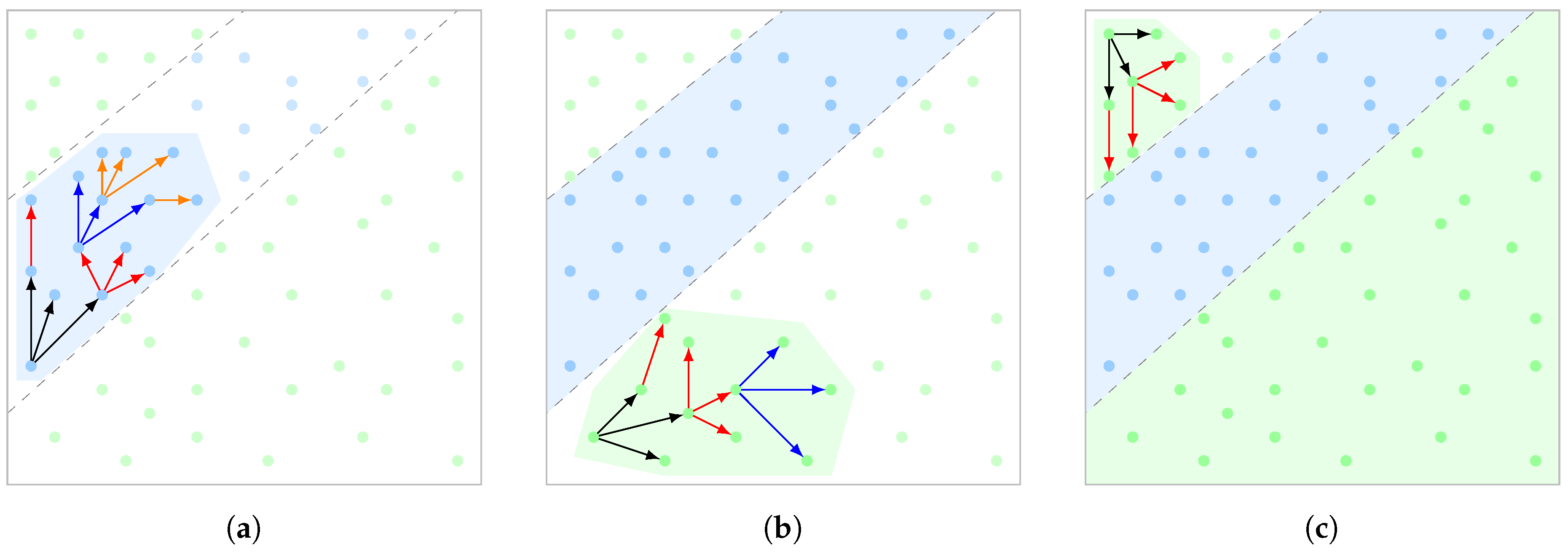

Figure 12.

Illustration of the color-based region growing segmentation: (a) initial segment detection and growing; (b) detection of similar point colors; (c) complete point cloud.

Figure 13.

RMA tEODor UGV with the 3D mapping hardware.

Figure 14.

UAV used for the data gathering.

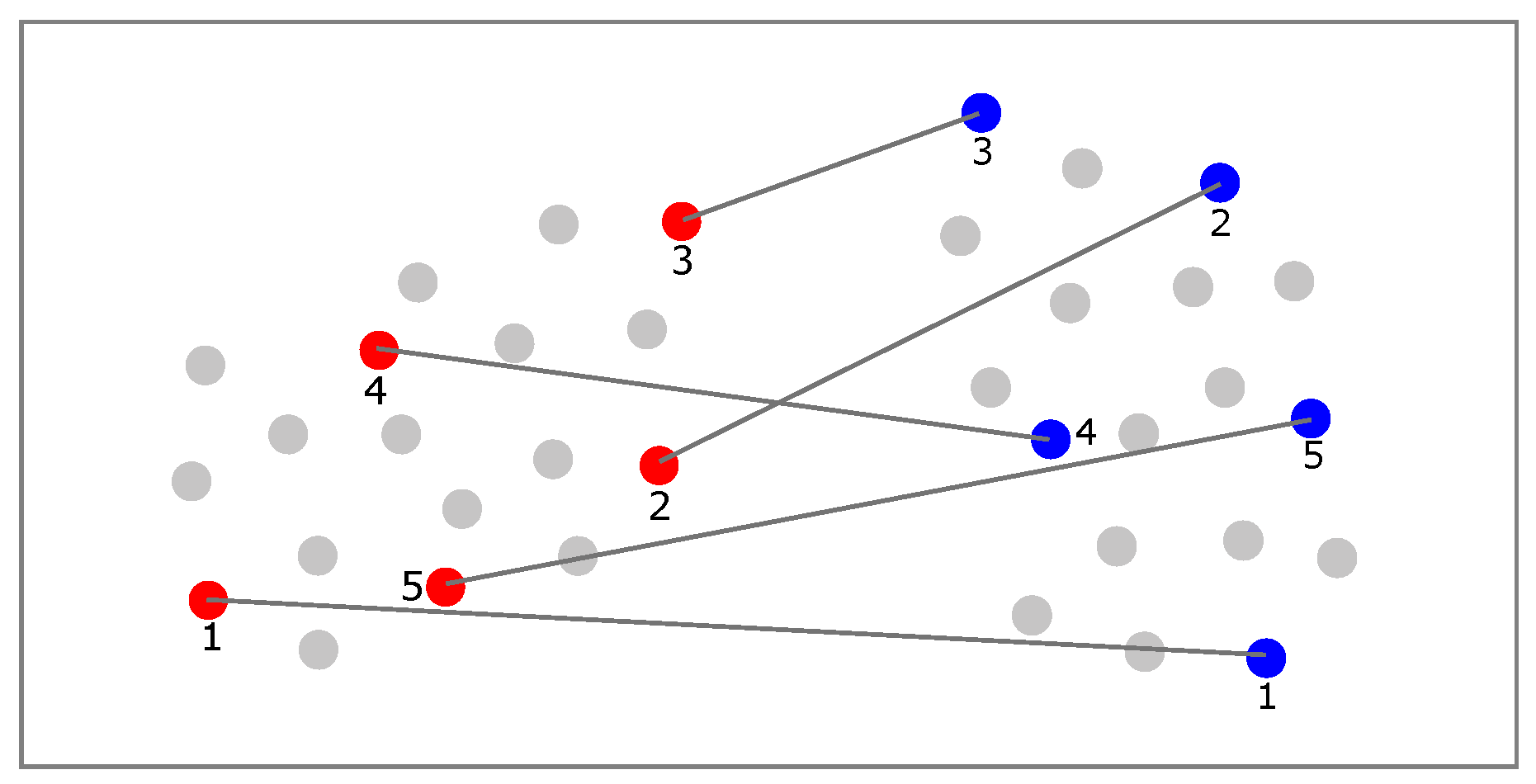

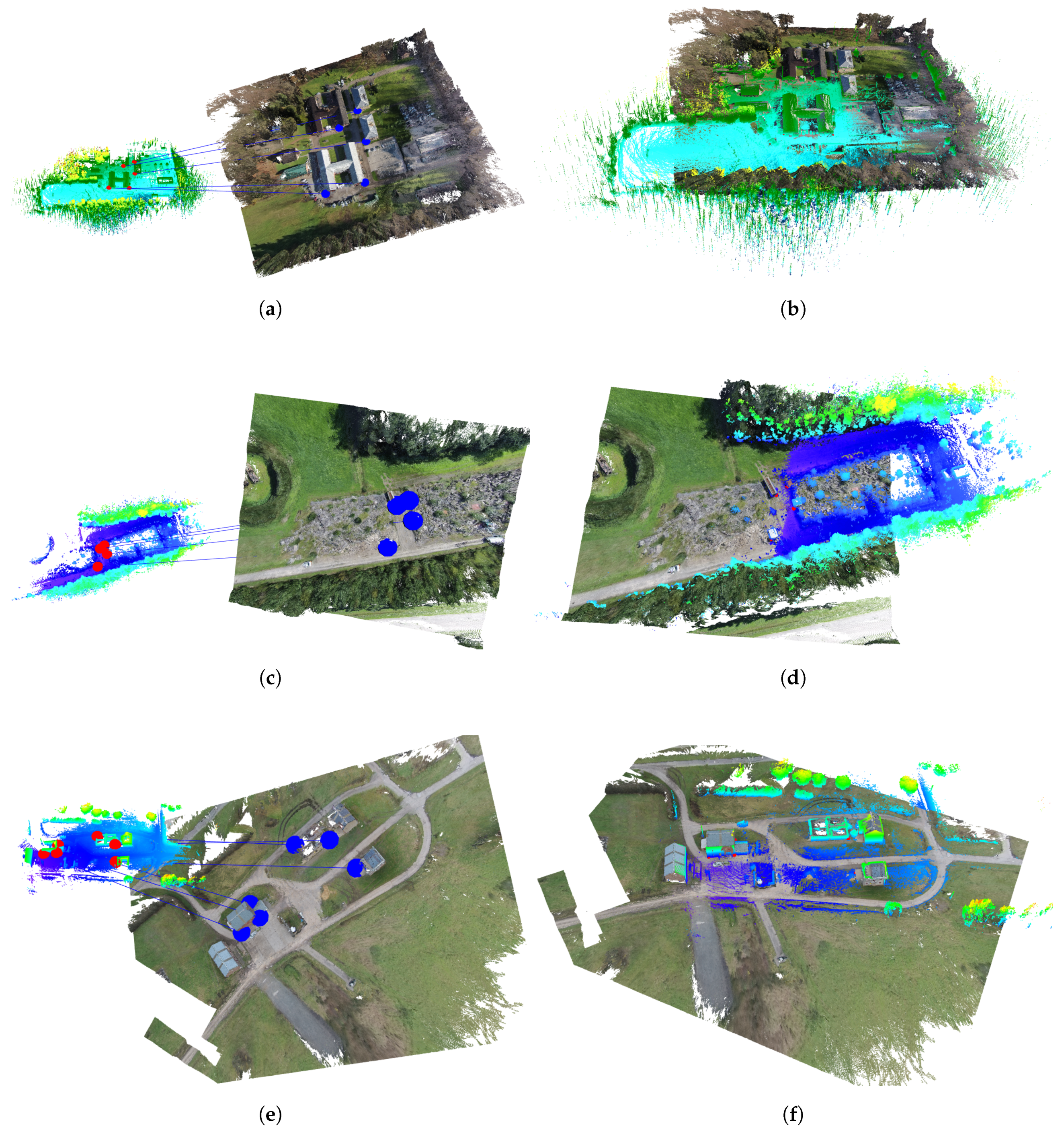

Figure 15.

Semi-automated 3D registration with preselected points and registered UAV-UGV global maps. Panels (a,c,e) show the manually selected points, where the red points represent the UGV dataset and the blue points represent the UAV dataset; panels (b,d,f) show the final output after the semi-automated 3D registration system is applied and the two datasets are registered.

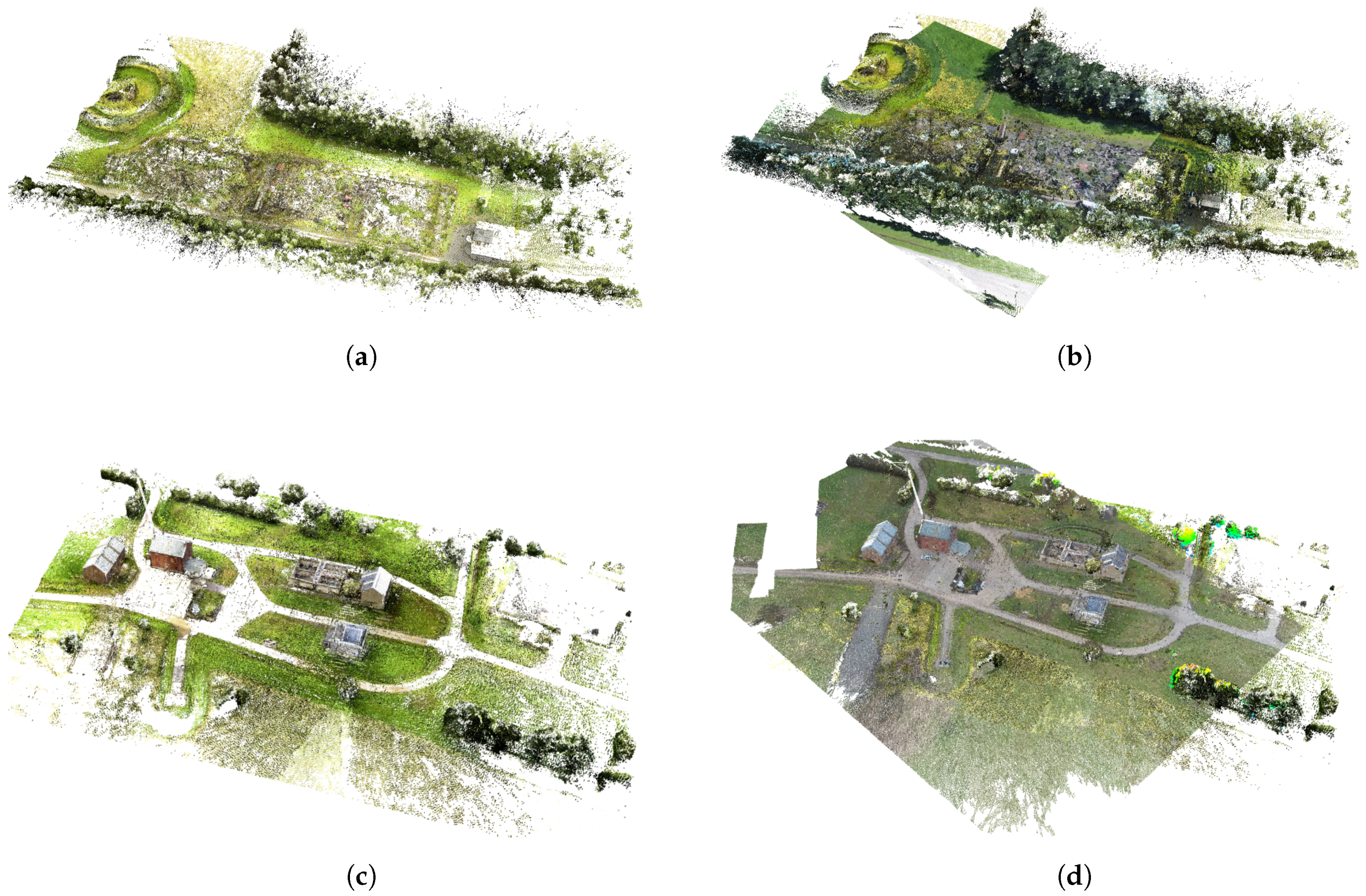

Figure 16.

Comparison of the reference ground truth model (multi-colored) and the resulting UGV-UAV datasets: (a,b) rubble field scenario; (c,d) village scenario. Dataset: Military-base Marche-en-Famenne, Belgium.

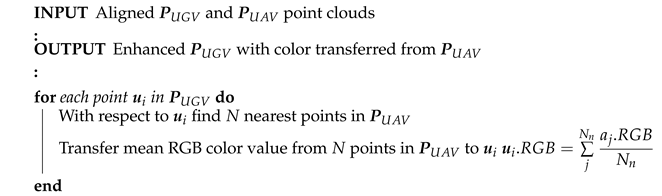

Figure 17.

Color transformation from the UAV point cloud to the UGV point cloud. The different scenarios are represented as follows: Dovo (a,b), Rubble (c,d) and Village (e,f).

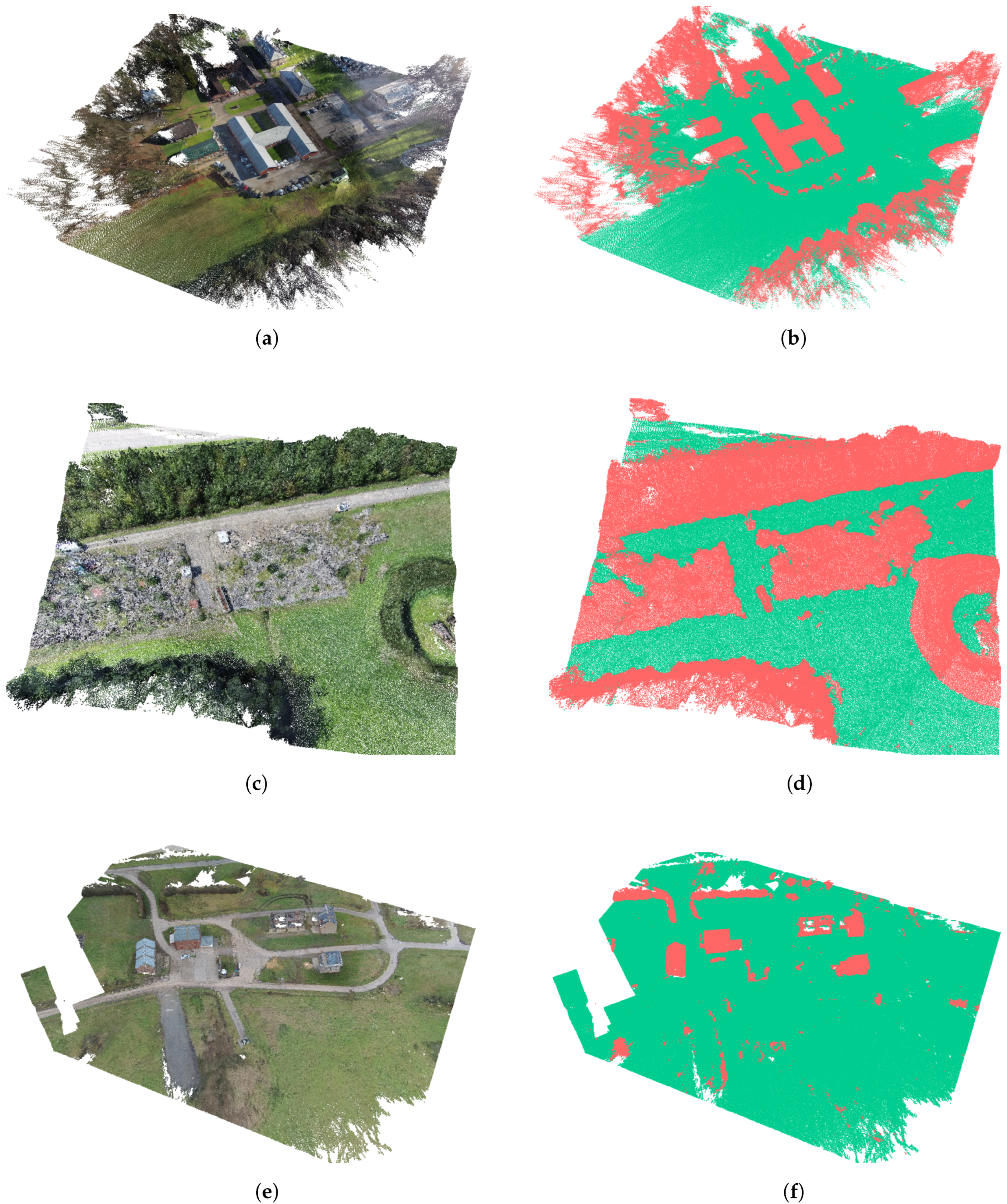

Figure 18.

Ground-object segmentation. The red points represent the segmented objects, while the green points represent the ground area. The different scenarios are represented as follows: Dovo (a,b), Rubble (c,d), and Village (e,f).

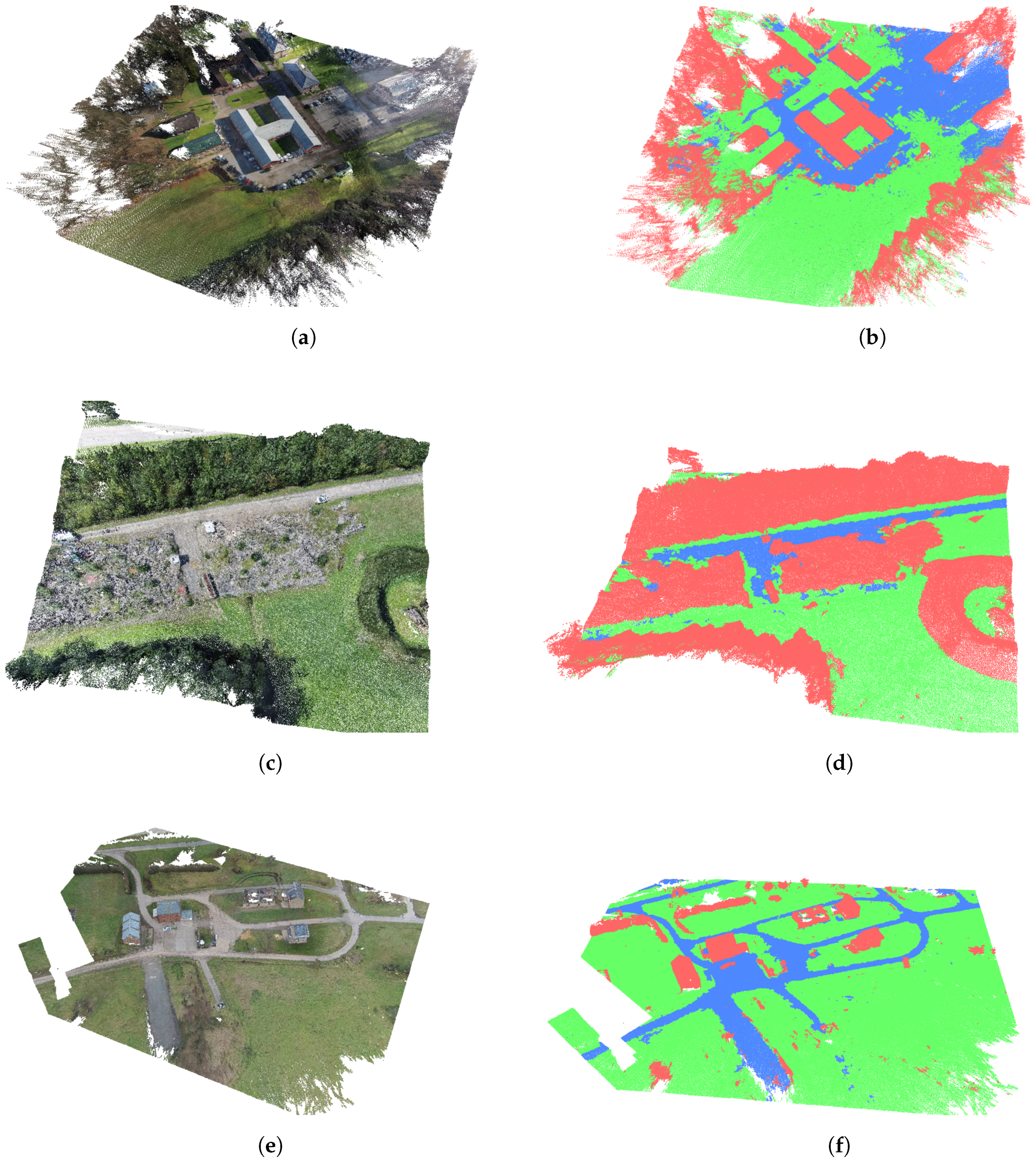

Figure 19.

Outputs of the color-based region growing segmentation algorithm. The blue points represent the roads, the green points represent the grass and field areas, and the red points are the obstacles. The different scenarios are represented as follows: Dovo (a,b), Rubble (c,d), and Village (e,f).

Table 1.

Analytical representation of the results with good pre-selected corresponding points (Dataset Dovo).

| Dataset Dovo | | | | | | | |
|---|---|---|---|---|---|---|---|
| Parameters | | | | | | | Scale |
| | 0.61 | 0.59 | 0.65 | 0.30 | 0.48 | 0.28 | 0.04 |
| | 0.30 | 0.01 | 0.10 | 0.05 | 0.00 | 0.09 | 0.01 |
| | 1.00 | 1.42 | 1.57 | 0.86 | 0.89 | 0.47 | 0.10 |
| | 0.24 | 0.55 | 0.42 | 0.26 | 0.27 | 0.13 | 0.03 |
| | 0.06 | 0.30 | 0.17 | 0.07 | 0.07 | 0.02 | 0.00 |

| | Translational Error [m] | Rotation Error [deg] |
|---|---|---|
| | 1.07 | 0.63 |

Table 2.

Analytical representation of the results with uncertainty in the selection of corresponding points (Dataset Dovo); the error of the selected corresponding points is approximately 2–3 m.

| Dataset Dovo | | | | | | | |
|---|---|---|---|---|---|---|---|
| Parameters | | | | | | | Scale |
| | 0.92 | 0.98 | 0.69 | 0.59 | 0.67 | 0.54 | 0.15 |
| | 0.51 | 0.81 | 0.13 | 0.15 | 0.54 | 0.13 | 0.13 |
| | 1.15 | 1.25 | 1.25 | 1.38 | 1.29 | 1.01 | 0.21 |
| | 0.29 | 0.19 | 0.60 | 0.58 | 0.34 | 0.40 | 0.04 |
| | 0.08 | 0.04 | 0.36 | 0.34 | 0.11 | 0.16 | 0.00 |

| | Translational Error [m] | Rotation Error [deg] |
|---|---|---|
| | 1.52 | 1.04 |

Table 3.

Performance analysis for the semi-automated registered global UAV-UGV maps.

| Dataset | Number of SP | Good PSCPs: CT (s) | Good PSCPs: CPU Load (%) | USCPs: CT (s) | USCPs: CPU Load (%) |
|---|---|---|---|---|---|
| Dovo | 5 | 223.57 | 35.05 | 229.12 | 36.34 |
| Rubble | 4 | 606.78 | 26.95 | 628.23 | 31.12 |
| Village | 6 | 301.52 | 32.35 | 312.41 | 34.51 |

Table 4.

Homogeneous point cloud properties.

| Dataset | Number of Points | Resolution (m) |
|---|---|---|
| Dovo | 31,124,101 | 0.04 |
| Rubble | 17,160,288 | 0.03 |
| Village | 19,204,987 | 0.03 |

Table 5.

Quantitative representation of the point-to-point evaluation for the semi-automated 3D registration and the fully automated 3D registration systems (both scenarios: Rubble and Village).

| Type of Registration | 90% of the Points Are within: Rubble (m) | 90% of the Points Are within: Village (m) | Rubble Average (m) | Village Average (m) |
|---|---|---|---|---|
| UGV LME-ICP | 0.53 | 0.58 | 0.23 | 0.22 |
| UAV-SfM | 0.59 | 0.60 | 0.29 | 0.29 |
| UGV-UAV proposed semi-automated reg. based on SIRM | 0.51 | 0.59 | 0.23 | 0.25 |
| UGV-UAV fully-automated registration with non-optimal alignment parameters | / | / | / | / |
| UGV-UAV fully-automated registration with optimal alignment parameters | 0.72 | 0.60 | 0.34 | 0.24 |
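
The two kinds of quantity reported in Table 5 (a distance bound that contains 90% of the points, and an average distance) can be illustrated with a minimal sketch. This is not the evaluation code used for the table. It assumes that each point of a registered cloud is compared with its nearest neighbor in a reference cloud, and the function names `nearest_distance` and `point_to_point_stats` are hypothetical. The brute-force search is only practical for toy inputs; clouds of the size listed in Table 4 would need a spatial index.

```python
import math

def nearest_distance(p, cloud):
    """Euclidean distance from point p to its closest point in cloud (brute force)."""
    return min(math.dist(p, q) for q in cloud)

def point_to_point_stats(registered, reference, fraction=0.9):
    """Return (bound, mean) of nearest-neighbor distances.

    bound is the smallest observed distance such that at least `fraction`
    of the registered points lie within it; mean is the average distance.
    Assumption: nearest-neighbor matching against the reference cloud.
    """
    dists = sorted(nearest_distance(p, reference) for p in registered)
    # Index of the smallest distance covering at least `fraction` of the points.
    idx = max(0, math.ceil(fraction * len(dists)) - 1)
    return dists[idx], sum(dists) / len(dists)

# Toy usage with made-up coordinates (not data from the paper).
reference = [(0.0, 0.0, 0.0)]
registered = [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0), (0.0, 0.0, 1.0), (0.0, 6.0, 8.0)]
bound, mean = point_to_point_stats(registered, reference, fraction=0.5)
```

With `fraction=0.9`, the first returned value corresponds to the "90% of the points are within" columns and the second to the "Average" columns, under the stated nearest-neighbor assumption.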

Table 6.

Number of estimated object and ground points for each scenario, with the percentage relative to the total number of points in each dataset.

| Scenario | Number of Points Detected as Object/Obstacle | Number of Points Detected as Ground |
|---|---|---|
| Dovo | 12,866,703 (41.34%) | 18,241,835 (58.66%) |
| Rubble | 11,399,579 (66.43%) | 5,760,708 (33.57%) |
| Village | 2,669,493 (13.90%) | 16,535,493 (86.10%) |