Road Extraction by Using Atrous Spatial Pyramid Pooling Integrated Encoder-Decoder Network and Structural Similarity Loss

Abstract

1. Introduction

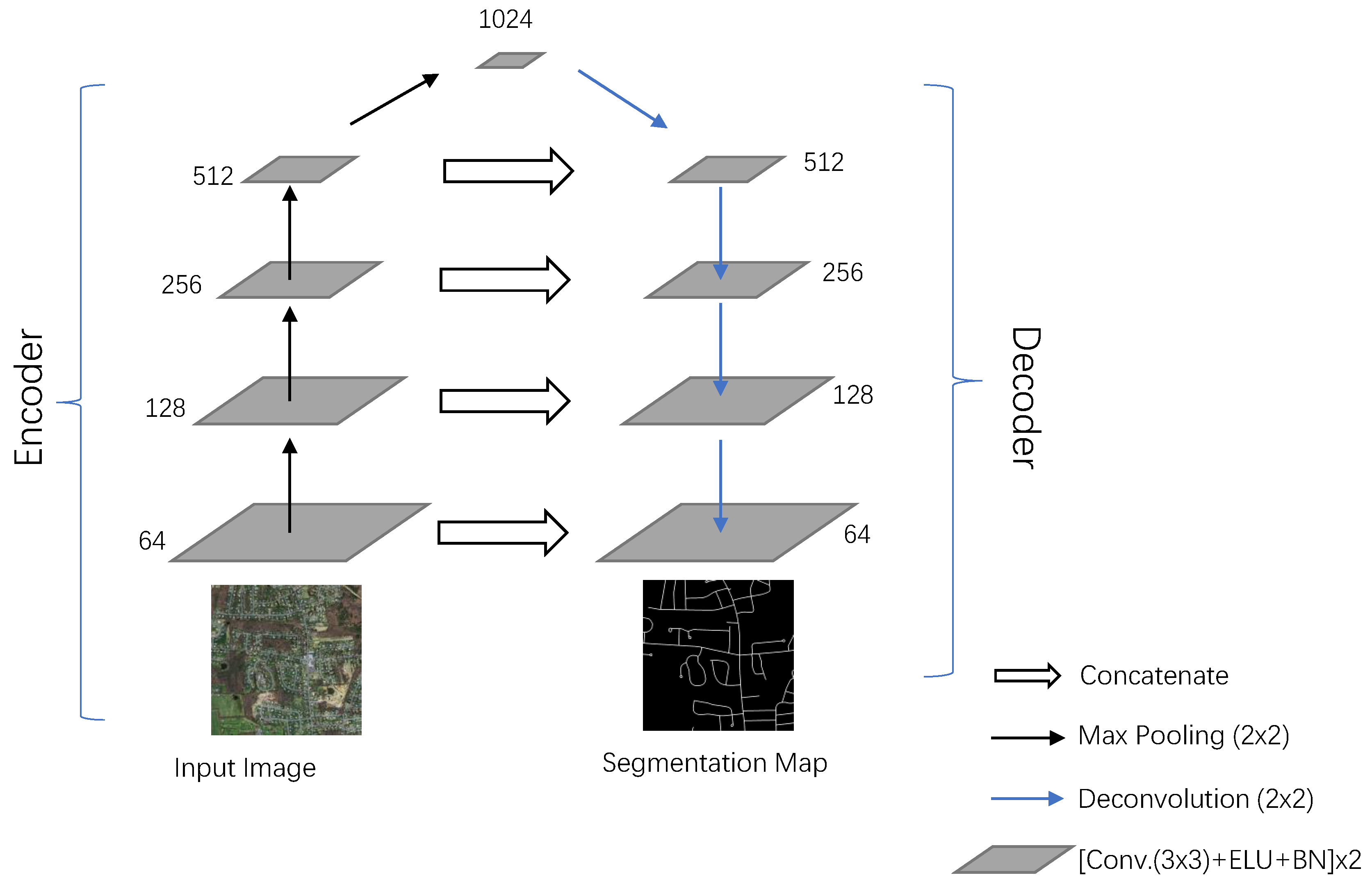

- A U-net-based Encoder-Decoder network is proposed as the baseline network for road extraction from remote sensing imagery. First, the ELU activation function is applied to improve the performance of the network; ELU has already been used for road and building segmentation in References [17,27], where it proved effective. Second, batch normalization [28] is used to accelerate the convergence of training and to prevent exploding gradients.

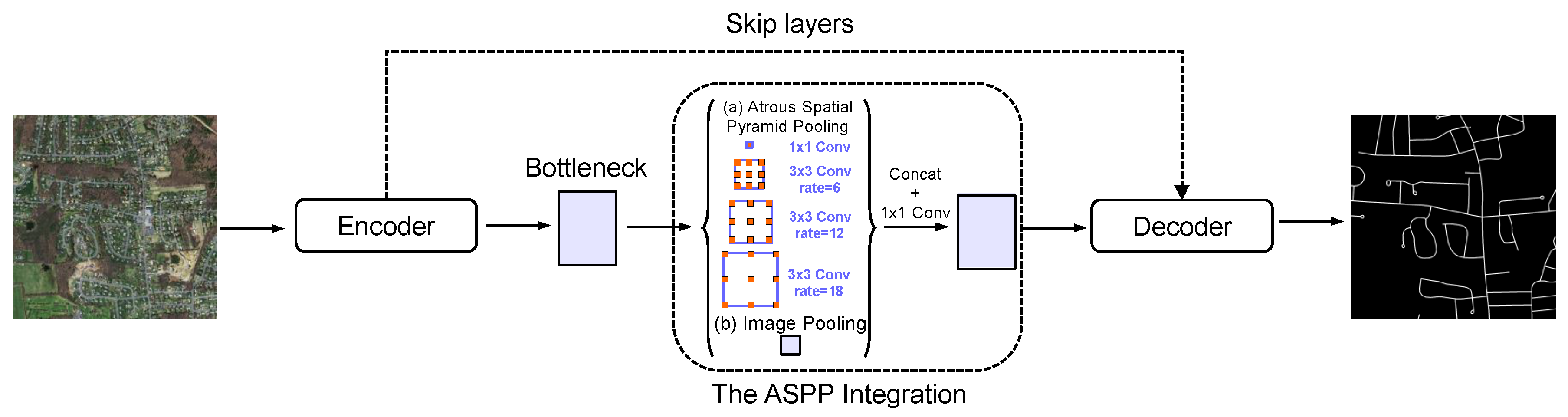

- The ASPP is integrated with the proposed Encoder-Decoder network to capture multiscale information. DeepLabv3+ [29], which combines an Encoder-Decoder structure with ASPP, improves the extraction of small details. However, because DeepLabv3+ is designed mainly for everyday scenes, it neglects very small targets. DeepLabv3+ adopts the Encoder-Decoder idea of gradually restoring resolution, but it restores only 1/4 of the input resolution, and its results still need a CRF to recover finer detail. It is therefore insufficient for the road extraction task. We instead integrate the ASPP with the strong Encoder-Decoder network of the U-net, which keeps the advantage of ASPP while overcoming this deficiency of DeepLabv3+. Compared with DeepLabv3+, our approach restores the full input resolution and is better suited to remote sensing imagery.

- The SSIM is employed as a loss function to improve the quality of road segmentation. To the best of our knowledge, this is the first time that the SSIM has been used as a loss function for semantic segmentation. The SSIM loss supplements the general cross-entropy loss with information that the latter cannot capture, and it improves the quality of the extracted targets.
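To make the third contribution concrete, the following is a minimal NumPy sketch of an SSIM-based loss, written as one minus the mean SSIM over image windows. It is an illustration under stated assumptions, not the authors' implementation: the non-overlapping 8 × 8 tiling, the function names `ssim_index` and `ssim_loss`, and the use of the standard constants C1 = 0.01² and C2 = 0.03² for inputs in [0, 1] are choices made here, and any weighting against a cross-entropy term is left out.

```python
import numpy as np

def ssim_index(x, y, c1=0.01 ** 2, c2=0.03 ** 2):
    """SSIM between two equally sized patches with values in [0, 1]."""
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator

def ssim_loss(pred, target, window=8):
    """1 - mean SSIM over non-overlapping windows (tiling is an assumption).

    Both inputs must be at least `window` pixels in each dimension.
    """
    h, w = pred.shape
    scores = [
        ssim_index(pred[i:i + window, j:j + window],
                   target[i:i + window, j:j + window])
        for i in range(0, h - window + 1, window)
        for j in range(0, w - window + 1, window)
    ]
    return 1.0 - float(np.mean(scores))

# Hypothetical 16 x 16 label with a vertical "road" stripe.
target = np.zeros((16, 16))
target[:, 6:10] = 1.0

perfect_loss = ssim_loss(target, target)         # prediction equals the label
inverted_loss = ssim_loss(1.0 - target, target)  # prediction is the complement
```

A prediction identical to the label yields a loss of essentially zero, while a structurally wrong prediction is penalized through the covariance term, which a purely pixel-wise loss does not contain.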

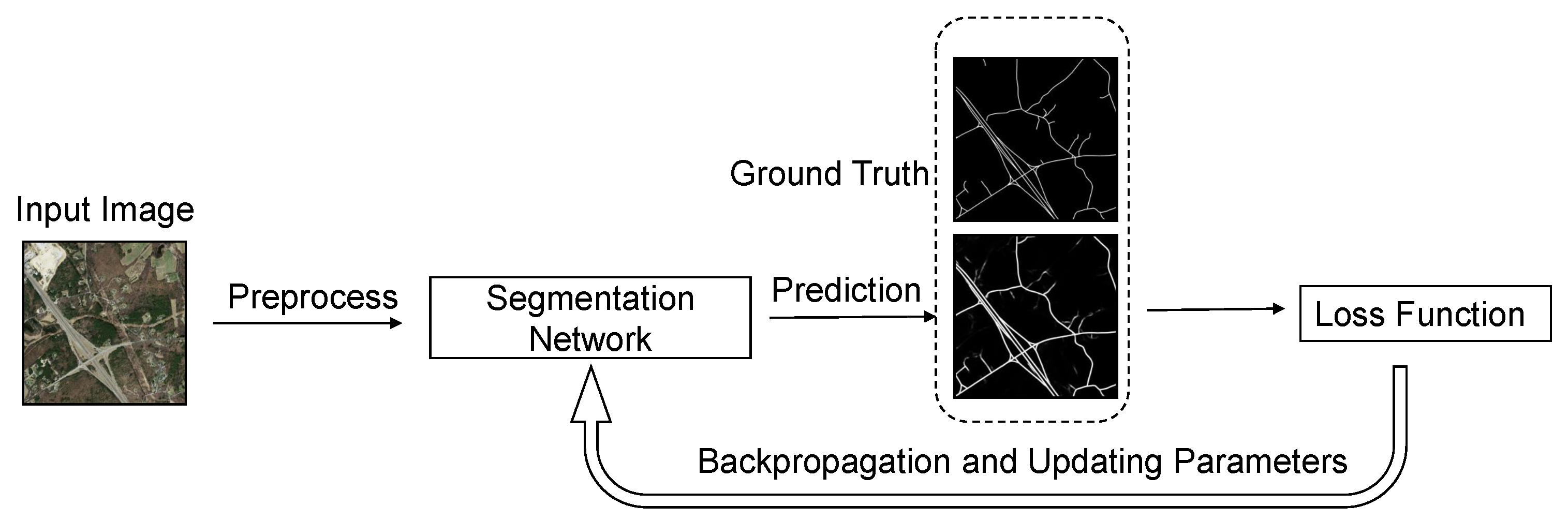

2. Materials and Methods

2.1. ASPP-Integrated Encoder-Decoder Network

2.1.1. Architecture of the Improved U-net
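The Introduction states that the improved U-net uses ELU activations together with batch normalization. A minimal NumPy sketch of these two operations is given below. The function names, the default `alpha`, `gamma`, `beta`, and `eps` values, the sample feature values, and the ordering (normalization followed by activation) are assumptions made for illustration and are not taken from this section.

```python
import numpy as np

def elu(x, alpha=1.0):
    """ELU: identity for x > 0, alpha * (exp(x) - 1) otherwise."""
    x = np.asarray(x, dtype=float)
    # np.minimum keeps expm1 from overflowing on large positive inputs.
    return np.where(x > 0, x, alpha * np.expm1(np.minimum(x, 0.0)))

def batch_norm(x, gamma=1.0, beta=0.0, eps=1e-5):
    """Training-mode batch normalization over axis 0 (the batch axis)."""
    x = np.asarray(x, dtype=float)
    mean = x.mean(axis=0)
    var = x.var(axis=0)
    return gamma * (x - mean) / np.sqrt(var + eps) + beta

# Hypothetical pre-activations: 4 samples, 3 channels.
features = np.array([[-2.0, 0.5, 3.0],
                     [1.0, -1.5, 0.0],
                     [0.0, 2.0, -4.0],
                     [4.0, -0.5, 1.0]])

normalized = batch_norm(features)
activated = elu(normalized)
```

Unlike ReLU, ELU keeps a small negative output for negative inputs (bounded below by `-alpha`), while batch normalization rescales each channel to roughly zero mean and unit variance before the activation is applied.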

2.1.2. Integration of ASPP
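As a rough illustration of how ASPP captures multiscale information, the sketch below applies 3 × 3 atrous (dilated) convolutions at several rates in parallel and stacks the results. It is a simplified, single-channel NumPy sketch under assumptions: the rates (1, 2, 4), the all-ones kernels, and the function names are hypothetical, and a full ASPP module typically also includes a 1 × 1 branch, image-level pooling, and a fusing convolution that are omitted here.

```python
import numpy as np

def atrous_conv3x3(x, kernel, rate):
    """3x3 cross-correlation with dilation `rate` and zero padding.

    The output has the same height and width as the input.
    """
    h, w = x.shape
    padded = np.pad(x, rate)  # pad `rate` zeros on every side
    out = np.zeros((h, w), dtype=float)
    for di in range(3):
        for dj in range(3):
            # Tap (di, dj) sits (di - 1) * rate rows and (dj - 1) * rate
            # columns away from the centre pixel.
            out += kernel[di, dj] * padded[di * rate:di * rate + h,
                                           dj * rate:dj * rate + w]
    return out

def aspp(x, kernels, rates):
    """Run parallel atrous branches and stack them along a new channel axis."""
    branches = [atrous_conv3x3(x, k, r) for k, r in zip(kernels, rates)]
    return np.stack(branches, axis=0)

# Hypothetical input: a single bright pixel in a 9 x 9 feature map.
feature_map = np.zeros((9, 9))
feature_map[4, 4] = 1.0

rates = (1, 2, 4)                                # assumed rates
kernels = [np.ones((3, 3)) for _ in rates]       # placeholder weights
pyramid = aspp(feature_map, kernels, rates)
```

Each branch uses the same nine weights, but a larger rate spreads the taps further apart, so the branch responds to context over a wider area without reducing the resolution of the feature map.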

2.2. SSIM Loss

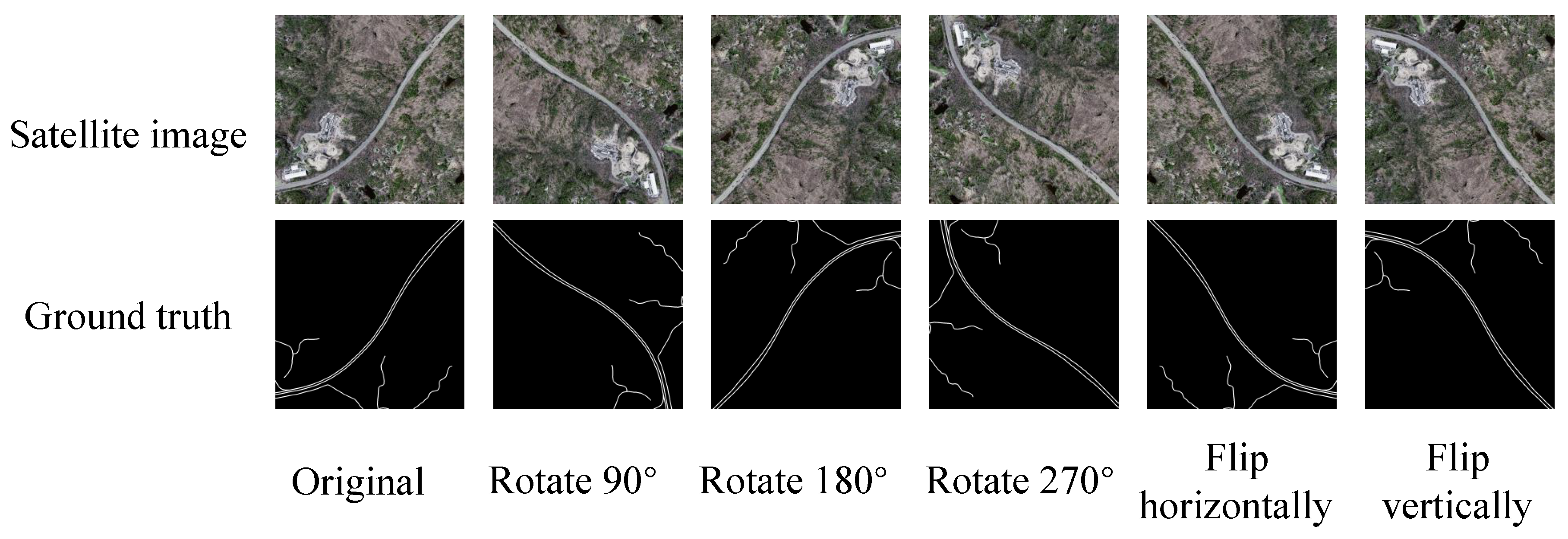

2.3. Dataset and Preprocessing

2.4. Evaluation Metrics

3. Experiment Results and Discussion

3.1. Implementation Details

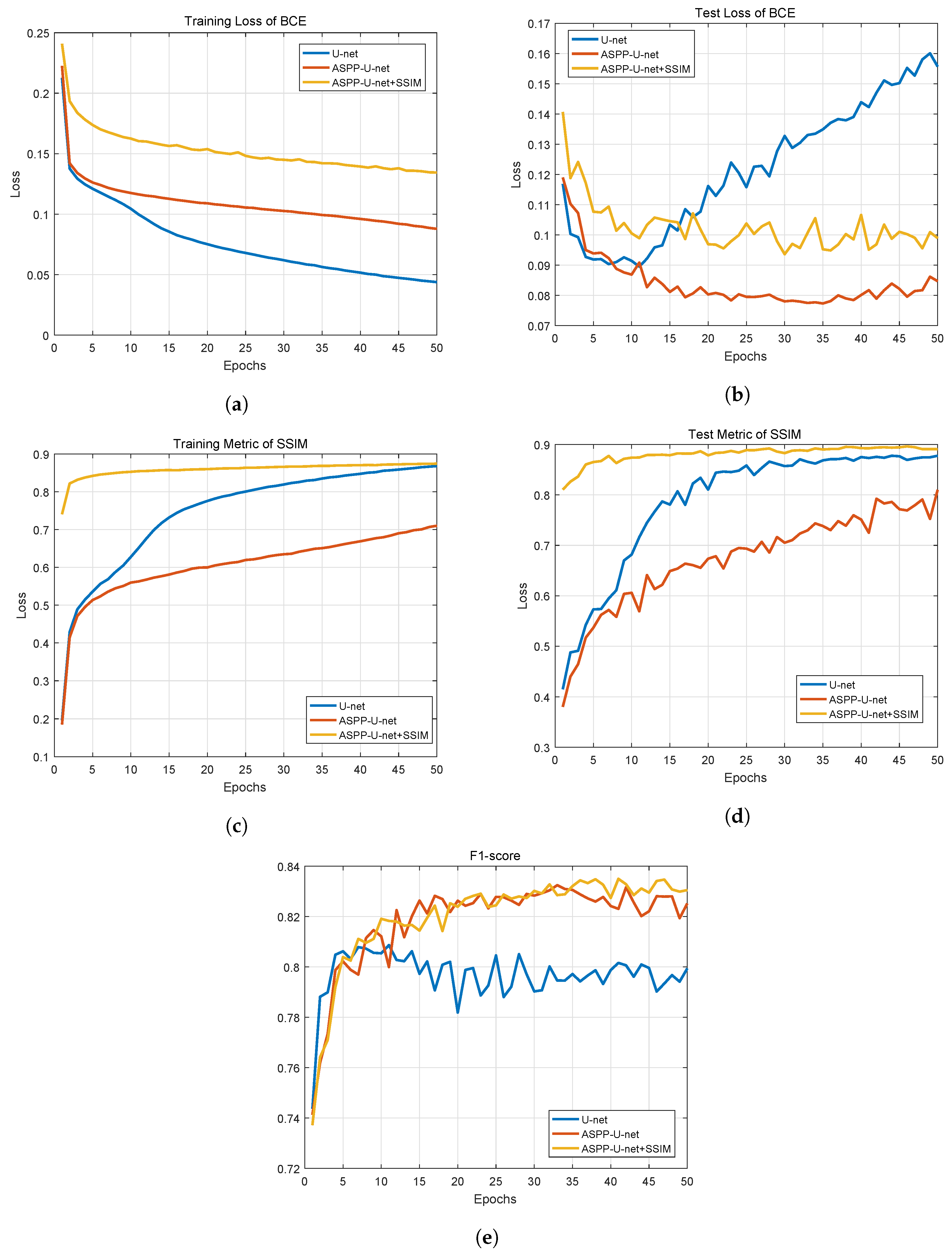

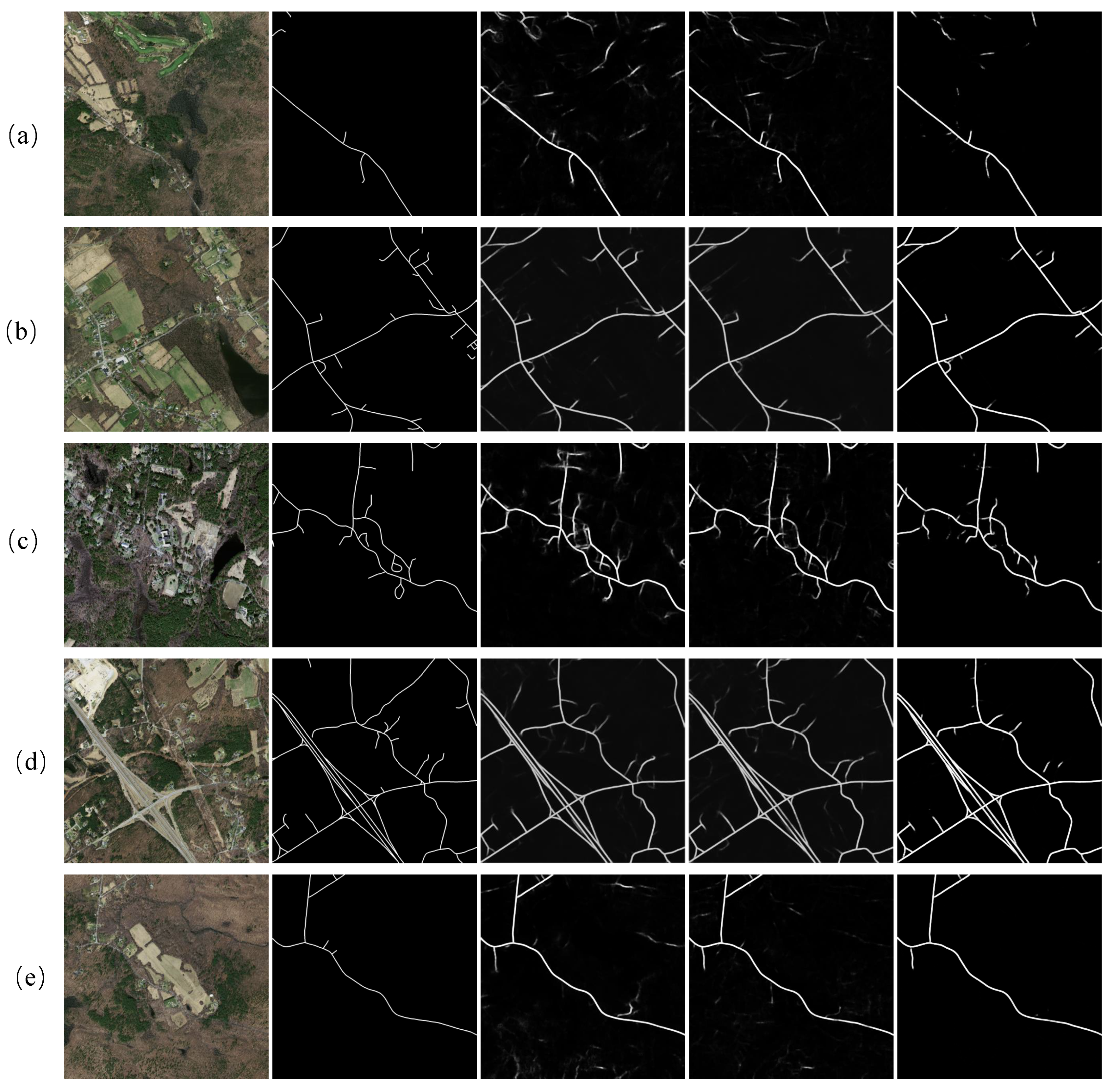

3.2. Discussion on Experimental Results

4. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ASPP | Atrous spatial pyramid pooling |
| AdaGrad | Adaptive gradient algorithm |
| ADAM | Adaptive Moment Estimation |
| BCE | Binary cross-entropy |
| CNN | Convolutional neural network |
| CRF | Conditional random field |
| ELU | Exponential linear unit |
| FCN | Fully convolutional network |
| FN | False Negative |
| FP | False Positive |
| FSM | Finite state machine |
| GIS | Geographic information system |
| MSSIM | Mean SSIM |
| ReLU | Rectified Linear Unit |
| RMSprop | Root-mean square prop |
| RSRCNN | Road structure refined CNN |
| SGD | Stochastic gradient descent |
| SSIM | Structural similarity |
| TN | True Negative |
| TP | True Positive |

References

1. Steger, C. An unbiased detector of curvilinear structures. IEEE Trans. Pattern Anal. Mach. Intell. 1998, 20, 113–125.

2. Zhou, Y.T.; Venkateswar, V.; Chellappa, R. Edge detection and linear feature extraction using a 2-D random field model. IEEE Trans. Pattern Anal. Mach. Intell. 1989, 11, 84–95.

3. Koutaki, G.; Uchimura, K. Automatic road extraction based on cross detection in suburb. Electron. Imaging 2004, 36, 2–9.

4. Hu, J.; Razdan, A.; Femiani, J.C.; Cui, M.; Wonka, P. Road network extraction and intersection detection from aerial images by tracking road footprints. IEEE Trans. Geosci. Remote Sens. 2007, 45, 4144–4157.

5. Ravanbakhsh, M.; Heipke, C.; Pakzad, K. Road junction extraction from high-resolution aerial imagery. Photogramm. Rec. 2010, 23, 405–423.

6. Marikhu, R.; Dailey, M.N.; Makhanov, S.; Honda, K. A family of quadratic snakes for road extraction. In Proceedings of the 8th Asian Conference on Computer Vision, Tokyo, Japan, 18–22 November 2007; pp. 85–94.

7. Wegner, J.D.; Montoya-Zegarra, J.A.; Schindler, K. A higher-order CRF model for road network extraction. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 1698–1705.

8. Mnih, V.; Hinton, G.E. Learning to detect roads in high-resolution aerial images. In Proceedings of the European Conference on Computer Vision, Heraklion, Crete, Greece, 5–11 September 2010; pp. 210–223.

9. Mnih, V. Machine Learning for Aerial Image Labeling. Ph.D. Thesis, University of Toronto, Toronto, ON, Canada, 2013.

10. Wang, J.; Song, J.; Chen, M.; Yang, Z. Road network extraction: A neural-dynamic framework based on deep learning and a finite state machine. Int. J. Remote Sens. 2015, 36, 3144–3169.

11. Alshehhi, R.; Marpu, P.R.; Woon, W.L.; Mura, M.D. Simultaneous extraction of roads and buildings in remote sensing imagery with convolutional neural networks. ISPRS J. Photogramm. Remote Sens. 2017, 130, 139–149.

12. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

13. Zhong, Z.; Li, J.; Cui, W.; Jiang, H. Fully convolutional networks for building and road extraction: Preliminary results. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016; pp. 1591–1594.

14. Wei, Y.; Wang, Z.; Xu, M. Road structure refined CNN for road extraction in aerial image. IEEE Geosci. Remote Sens. Lett. 2017, 14, 709–713.

15. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 234–241.

16. Cheng, G.; Wang, Y.; Xu, S.; Wang, H.; Xiang, S.; Pan, C. Automatic road detection and centerline extraction via cascaded end-to-end convolutional neural network. IEEE Trans. Geosci. Remote Sens. 2017, 55, 3322–3337.

17. Panboonyuen, T.; Jitkajornwanich, K.; Lawawirojwong, S.; Srestasathiern, P.; Vateekul, P. Road segmentation of remotely-sensed images using deep convolutional neural networks with landscape metrics and conditional random fields. Remote Sens. 2017, 9, 680.

18. Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A deep convolutional encoder-decoder architecture for image segmentation. arXiv 2015, arXiv:1511.00561.

19. Clevert, D.A.; Unterthiner, T.; Hochreiter, S. Fast and accurate deep network learning by exponential linear units (ELUs). arXiv 2015, arXiv:1511.07289.

20. Zhang, Z.; Liu, Q.; Wang, Y. Road extraction by deep residual U-Net. IEEE Geosci. Remote Sens. Lett. 2018, 15, 749–753.

21. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

22. Mosinska, A.; Márquez-Neila, P.; Kozinski, M.; Fua, P. Beyond the pixel-wise loss for topology-aware delineation. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Munich, Germany, 8–14 September 2018; pp. 3136–3145.

23. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. In Proceedings of the International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015.

24. Chen, L.C.; Papandreou, G.; Schroff, F.; Adam, H. Rethinking atrous convolution for semantic image segmentation. arXiv 2017, arXiv:1706.05587.

25. Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

26. Søgaard, J.; Krasula, L.L.; Shahid, M.; Temel, D.; Brunnström, K.; Razaak, M. Applicability of Existing Objective Metrics of Perceptual Quality for Adaptive Video Streaming. Electron. Imaging 2016, 13, 1–7.

27. Shrestha, S.; Vanneschi, L. Improved fully convolutional network with conditional random fields for building extraction. Remote Sens. 2018, 10, 1135.

28. Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In Proceedings of the International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 448–456.

29. Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-Decoder with atrous separable convolution for semantic image segmentation. arXiv 2018, arXiv:1802.02611.

30. Iglovikov, V.; Mushinskiy, S.; Osin, V. Satellite Imagery Feature Detection using Deep Convolutional Neural Network: A Kaggle Competition. arXiv 2017, arXiv:1706.06169.

31. He, H.; Wang, S.; Yang, D.; Wang, S.; Liu, X. A road extraction method for remote sensing image based on Encoder-Decoder network. Acta Geod. Cartogr. Sin. 2019, 48, 330–338.

32. Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016.

33. Wiedemann, C.; Heipke, C.; Mayer, H.; Jamet, O. Empirical Evaluation of Automatically Extracted Road Axes. In Empirical Evaluation Techniques in Computer Vision; IEEE Computer Society Press: Los Alamitos, CA, USA, 1998; pp. 172–187. ISBN 978-0-818-68401-2.

34. Kingma, D.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

35. Ruder, S. An overview of gradient descent optimization. arXiv 2016, arXiv:1609.04747.

| Model Name | ASPP Integration | Loss Function |
|---|---|---|
| Baseline U-net | No | |
| ASPP-U-net | Yes | |
| ASPP-U-net+SSIM | Yes | |

| Model Name | Recall | Precision | F1-Score | Average MSSIM |
|---|---|---|---|---|
| FCN-4s [13] | 66.0% | 71.0% | 68.4% | / |
| RSRCNN [14] | 72.9% | 60.6% | 66.2% | / |
| ELU-SegNet-R [17] | 78.0% | 84.7% | 81.2% | / |
| Baseline U-net | 80.3% | 82.0% | 80.9% | 0.716 |
| ASPP-U-net | 81.9% | 84.9% | 83.2% | 0.730 |
| ASPP-U-net-SSIM | 80.5% | 87.1% | 83.5% | 0.893 |
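
The recall, precision, and F1-score columns are related through the standard definitions in terms of TP, FP, and FN. The short sketch below shows that relationship; the function names and the pixel counts in the example are hypothetical, and the exact matching rule used for the reported figures (for example any pixel tolerance or per-image averaging) is not stated in this section, so reported F1 values need not equal the harmonic mean of the rounded table entries exactly.

```python
def precision_recall(tp, fp, fn):
    """Precision and recall from true-positive, false-positive, false-negative counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return precision, recall

def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)

# Hypothetical pixel counts for one image.
precision, recall = precision_recall(tp=8, fp=2, fn=2)
example_f1 = f1_score(precision, recall)

# Check against the FCN-4s row: precision 71.0%, recall 66.0%.
fcn4s_f1 = f1_score(0.710, 0.660)
```

For the FCN-4s row, the harmonic mean of 71.0% and 66.0% rounds to the 68.4% listed in the table.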

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

He, H.; Yang, D.; Wang, S.; Wang, S.; Li, Y. Road Extraction by Using Atrous Spatial Pyramid Pooling Integrated Encoder-Decoder Network and Structural Similarity Loss. Remote Sens. 2019, 11, 1015. https://doi.org/10.3390/rs11091015