Rigorous Boresight Self-Calibration of Mobile and UAV LiDAR Scanning Systems by Strip Adjustment

Abstract

1. Introduction

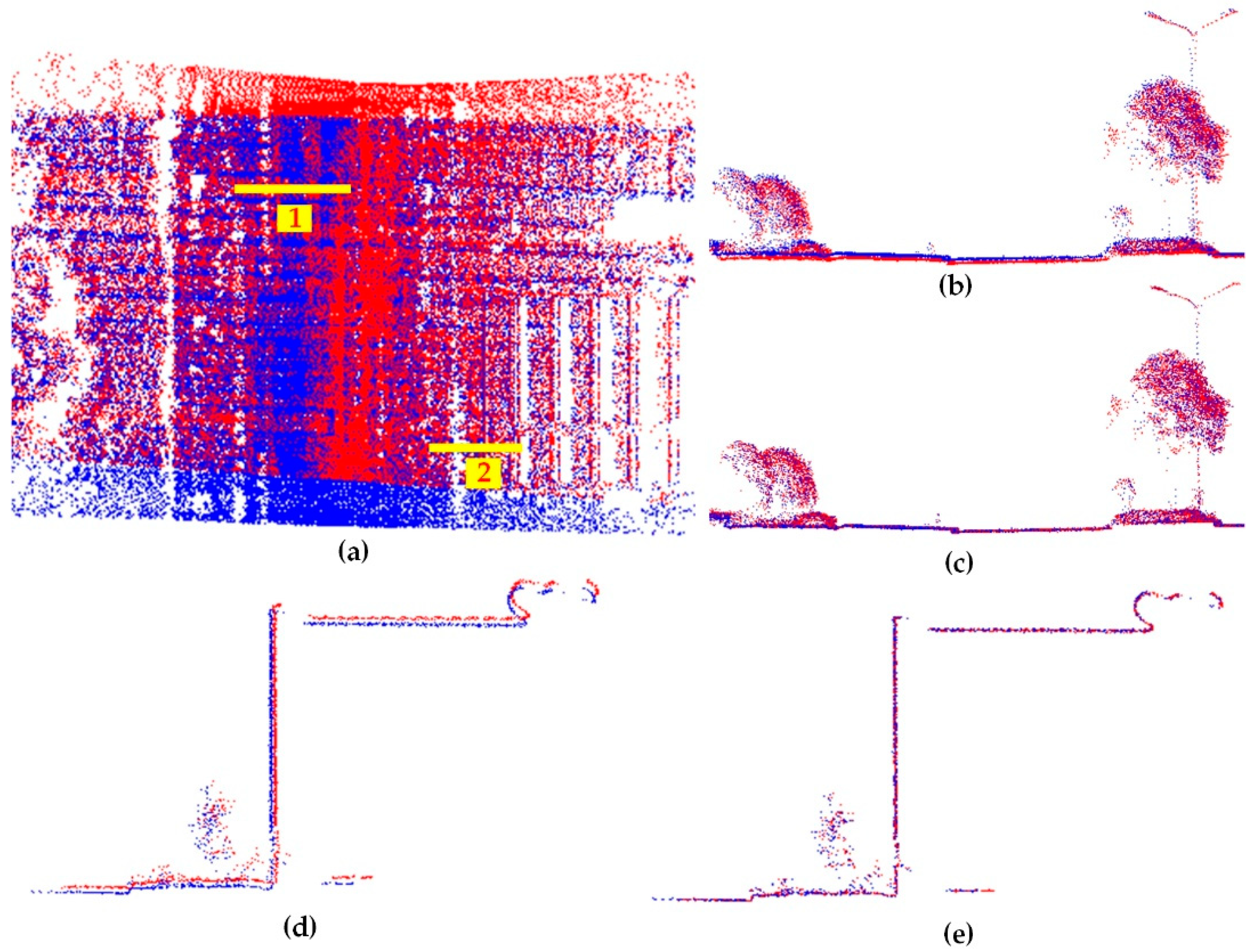

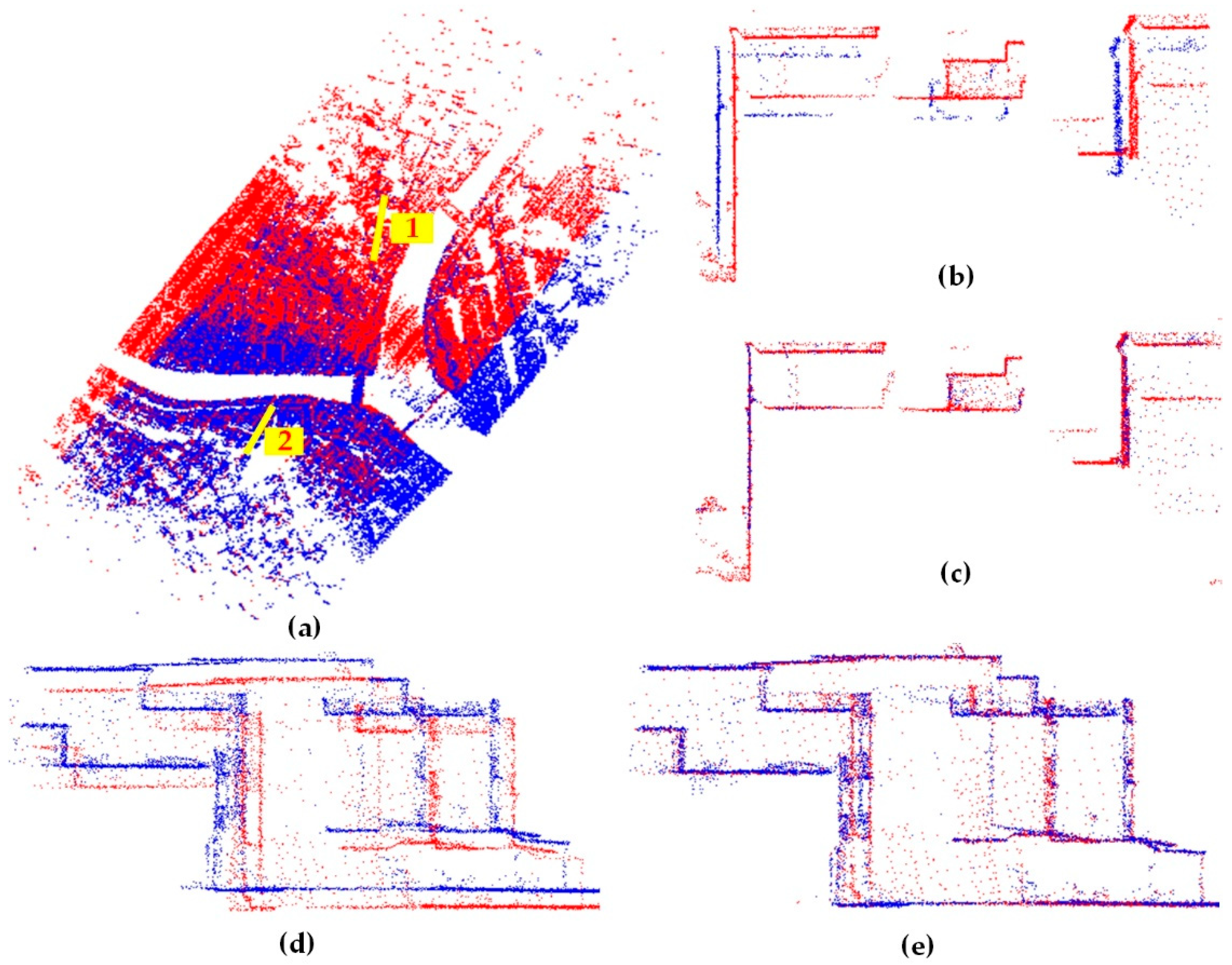

2. Materials and Methods

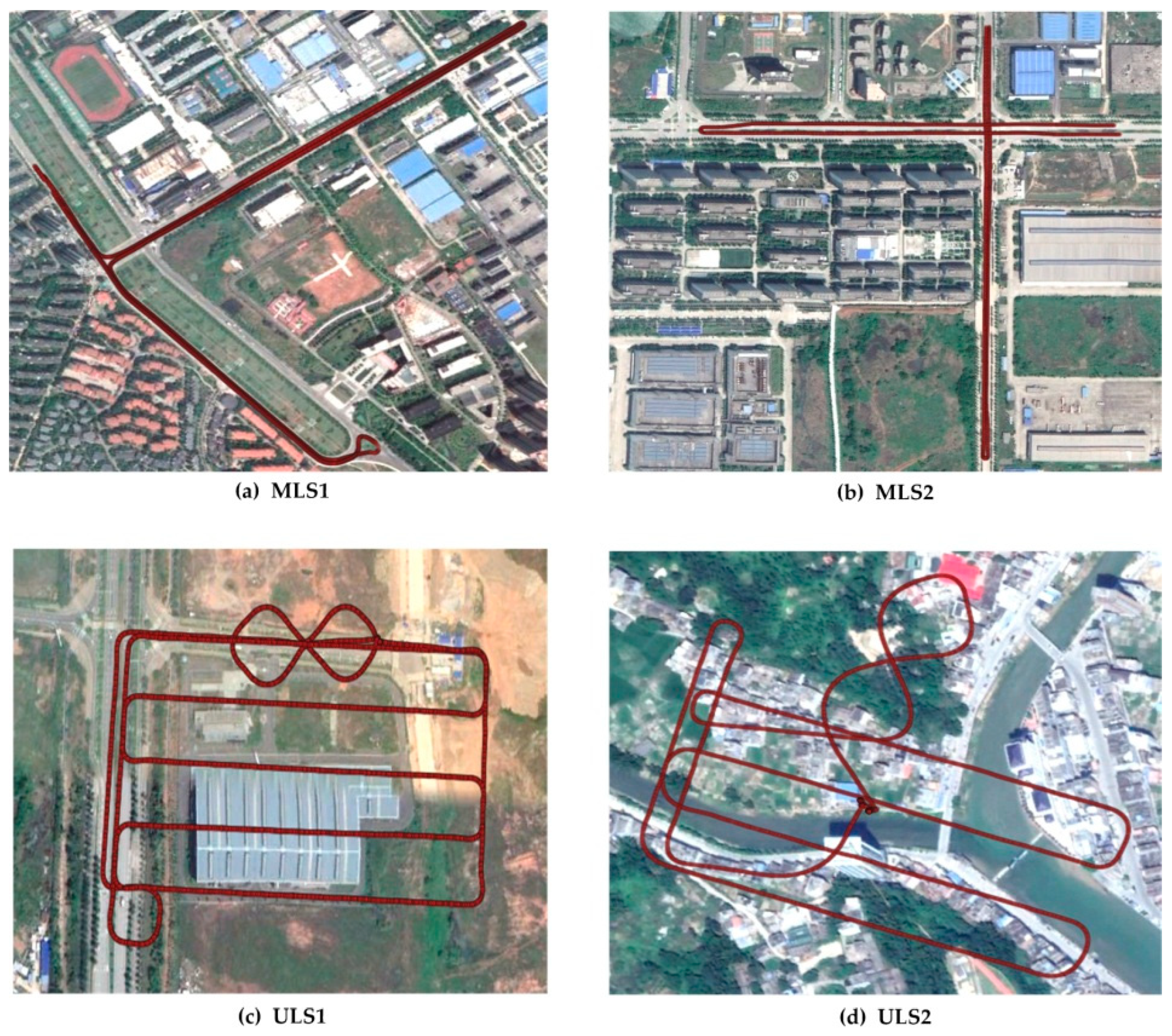

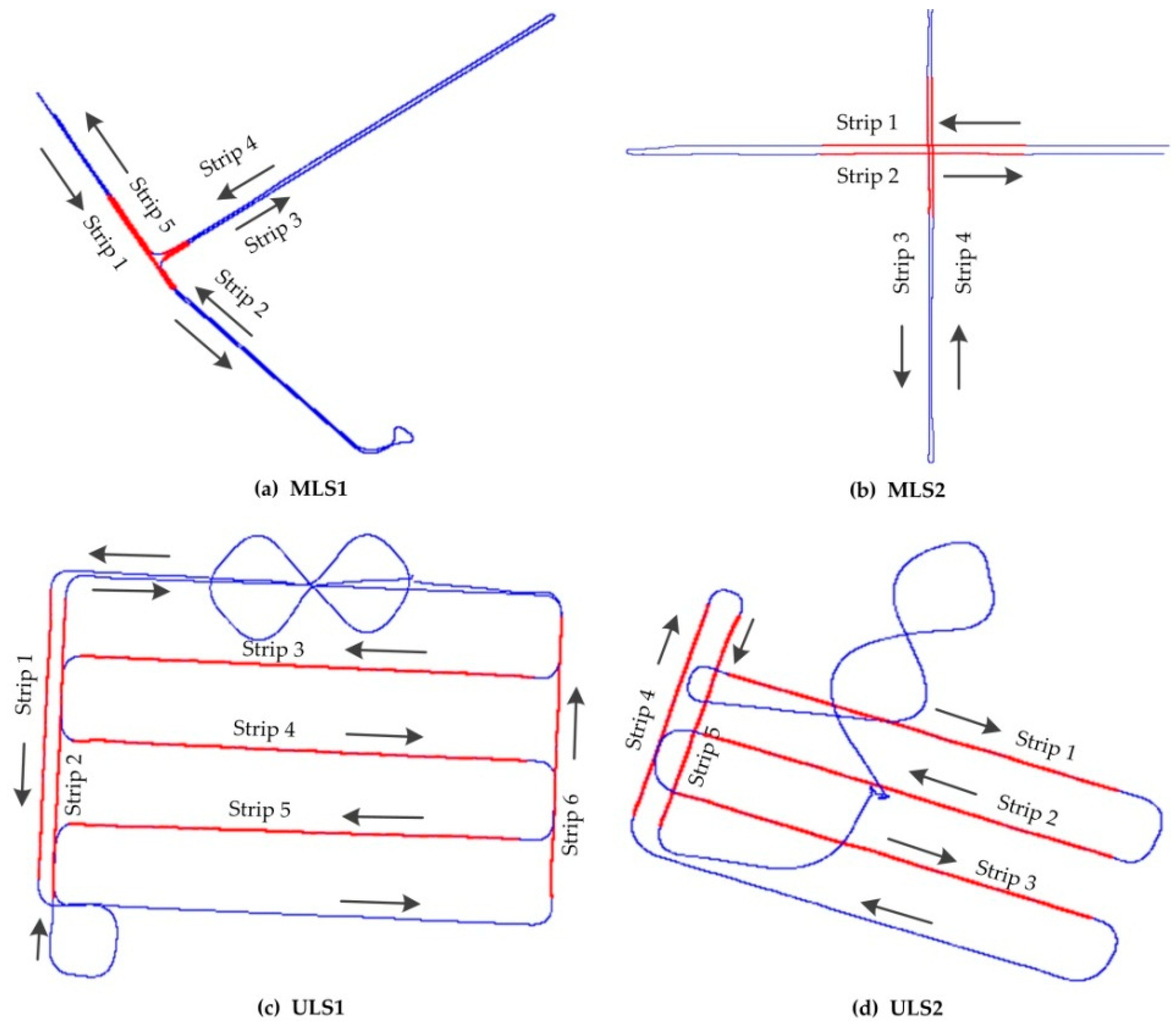

2.1. Datasets

2.2. Geo-Referencing Equation of MLS and ULS

- is the position vector of the point in the WGS84 ECEF frame;

- is the rotation matrix from the laser scanner frame to the IMU frame;

- is the position of the laser scanner in the IMU frame;

- are the boresight angles between the laser scanner and the GNSS/IMU system;

- are the lever-arm offsets between the laser scanner and the GNSS/IMU system;

- is the rotation matrix between the IMU frame and the local level frame;

- is the rotation matrix between the local level frame and the WGS84 ECEF frame;

- is the position of the vehicle in the WGS84 ECEF frame;

- are the latitude, longitude, and ellipsoidal height provided by the GNSS/IMU;

- are the roll, pitch, and heading provided by the GNSS/IMU.
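The quantities listed above can be chained into a direct geo-referencing computation. The following is a minimal sketch only: the equation and its symbols are not reproduced in this text, so the rotation order (`Rz @ Ry @ Rx`), the east-north-up local level frame, and all function names are assumptions made for illustration and may differ from the conventions used by the authors or by a particular GNSS/IMU vendor.

```python
import numpy as np

WGS84_A = 6378137.0                    # semi-major axis (m)
WGS84_F = 1.0 / 298.257223563          # flattening
WGS84_E2 = WGS84_F * (2.0 - WGS84_F)   # first eccentricity squared


def rot_x(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])


def rot_y(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])


def rot_z(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def euler_to_matrix(a_x, a_y, a_z):
    """ASSUMED convention: R = Rz @ Ry @ Rx, angles in radians."""
    return rot_z(a_z) @ rot_y(a_y) @ rot_x(a_x)


def geodetic_to_ecef(lat, lon, h):
    """WGS84 latitude/longitude (rad) and ellipsoidal height (m) to ECEF."""
    n = WGS84_A / np.sqrt(1.0 - WGS84_E2 * np.sin(lat) ** 2)
    return np.array([
        (n + h) * np.cos(lat) * np.cos(lon),
        (n + h) * np.cos(lat) * np.sin(lon),
        (n * (1.0 - WGS84_E2) + h) * np.sin(lat),
    ])


def local_level_to_ecef(lat, lon):
    """Rotation from an (ASSUMED) east-north-up local level frame to ECEF."""
    sl, cl = np.sin(lat), np.cos(lat)
    so, co = np.sin(lon), np.cos(lon)
    return np.array([
        [-so, -sl * co, cl * co],
        [co, -sl * so, cl * so],
        [0.0, cl, sl],
    ])


def georeference(p_scanner, boresight, lever_arm, rph, llh):
    """Map one scanner-frame point to ECEF.

    boresight: three boresight angles (rad); lever_arm: offsets in the IMU
    frame (m); rph: roll, pitch, heading (rad); llh: lat, lon (rad), height (m).
    """
    lat, lon, h = llh
    # Scanner frame -> IMU frame: boresight rotation plus lever-arm offset.
    p_imu = euler_to_matrix(*boresight) @ np.asarray(p_scanner, float)
    p_imu = p_imu + np.asarray(lever_arm, float)
    # IMU frame -> local level frame -> ECEF, then add the vehicle position.
    p_level = euler_to_matrix(*rph) @ p_imu
    return geodetic_to_ecef(lat, lon, h) + local_level_to_ecef(lat, lon) @ p_level


# Hypothetical usage: one point 10 m along the scanner z-axis.
point_ecef = georeference(
    p_scanner=[0.0, 0.0, 10.0],
    boresight=np.radians([0.1, -0.2, 0.05]),
    lever_arm=[0.10, 0.00, 0.25],
    rph=np.radians([1.0, -2.0, 45.0]),
    llh=(np.radians(30.5), np.radians(114.3), 25.0),
)
```

Because the boresight rotation is applied to every range measurement, a small angular error grows linearly with range in the geo-referenced point, which is why it dominates strip discrepancies at long scanning distances.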

2.3. Boresight Self-Calibration

2.3.1. Problem Formulation

2.3.2. Point Correspondences Matching

- 10% of the points in the source point clouds and 30% of the points in the target point clouds are selected as candidates by the normal space sampling strategy [29] to improve registration efficiency;

- The distance threshold and normal threshold for correspondences are set to 1 m and 20°, respectively, to minimize the effect of non-overlapping areas on the registration as far as possible;

- Correspondences with distances larger than the median distance are considered to be poor correspondences and are rejected.
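The two rejection rules above (fixed thresholds, then median-distance rejection) can be sketched as follows. This is an illustrative sketch rather than the authors' implementation: the function name `reject_correspondences` is hypothetical, normals are assumed to be unit length and consistently oriented, the brute-force nearest-neighbour search stands in for the k-d tree a real pipeline would use, and the normal space sampling step is omitted.

```python
import numpy as np


def reject_correspondences(src_pts, src_nrm, tgt_pts, tgt_nrm,
                           max_dist=1.0, max_angle_deg=20.0):
    """Return (src_idx, tgt_idx) of correspondences that survive the filters.

    src_pts, tgt_pts: (N, 3) and (M, 3) point arrays in metres.
    src_nrm, tgt_nrm: matching unit normals (ASSUMED consistently oriented).
    """
    # Closest target point for every source point (brute force, O(N*M)).
    d2 = ((src_pts[:, None, :] - tgt_pts[None, :, :]) ** 2).sum(axis=2)
    nn = d2.argmin(axis=1)
    dist = np.sqrt(d2[np.arange(len(src_pts)), nn])

    # Fixed thresholds: 1 m on distance, 20 degrees between normals.
    cos_angle = np.einsum("ij,ij->i", src_nrm, tgt_nrm[nn])
    keep = (dist <= max_dist) & (cos_angle >= np.cos(np.radians(max_angle_deg)))

    # Median rejection: drop survivors farther apart than the median distance.
    if keep.any():
        keep &= dist <= np.median(dist[keep])

    src_idx = np.nonzero(keep)[0]
    return src_idx, nn[src_idx]


# Hypothetical toy data: five source points with targets offset in z.
src = np.array([[0.0, 0, 0], [5.0, 0, 0], [10.0, 0, 0], [15.0, 0, 0], [20.0, 0, 0]])
tgt = src + np.array([[0, 0, 0.1], [0, 0, 0.2], [0, 0, 0.3], [0, 0, 2.0], [0, 0, 0.1]])
up = np.tile([0.0, 0.0, 1.0], (5, 1))
tgt_normals = up.copy()
tgt_normals[4] = [1.0, 0.0, 0.0]   # normal differs by 90 degrees

kept_src, kept_tgt = reject_correspondences(src, up, tgt, tgt_normals)
```

In the toy data, the fourth pair fails the distance threshold and the fifth fails the normal threshold; the median test then removes the farthest of the three remaining pairs.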

2.3.3. Boresight Angle Correction Parameters Estimation

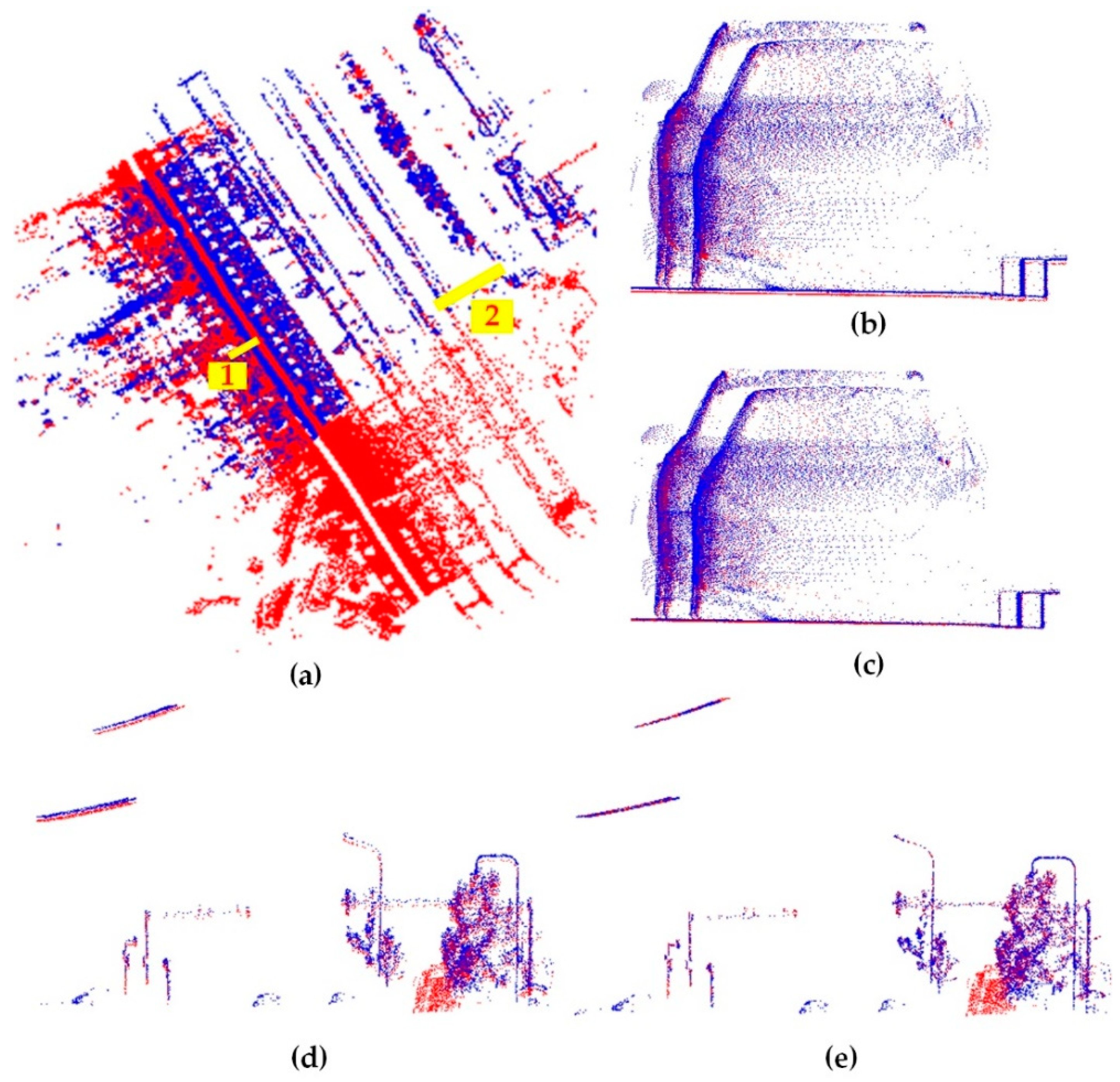

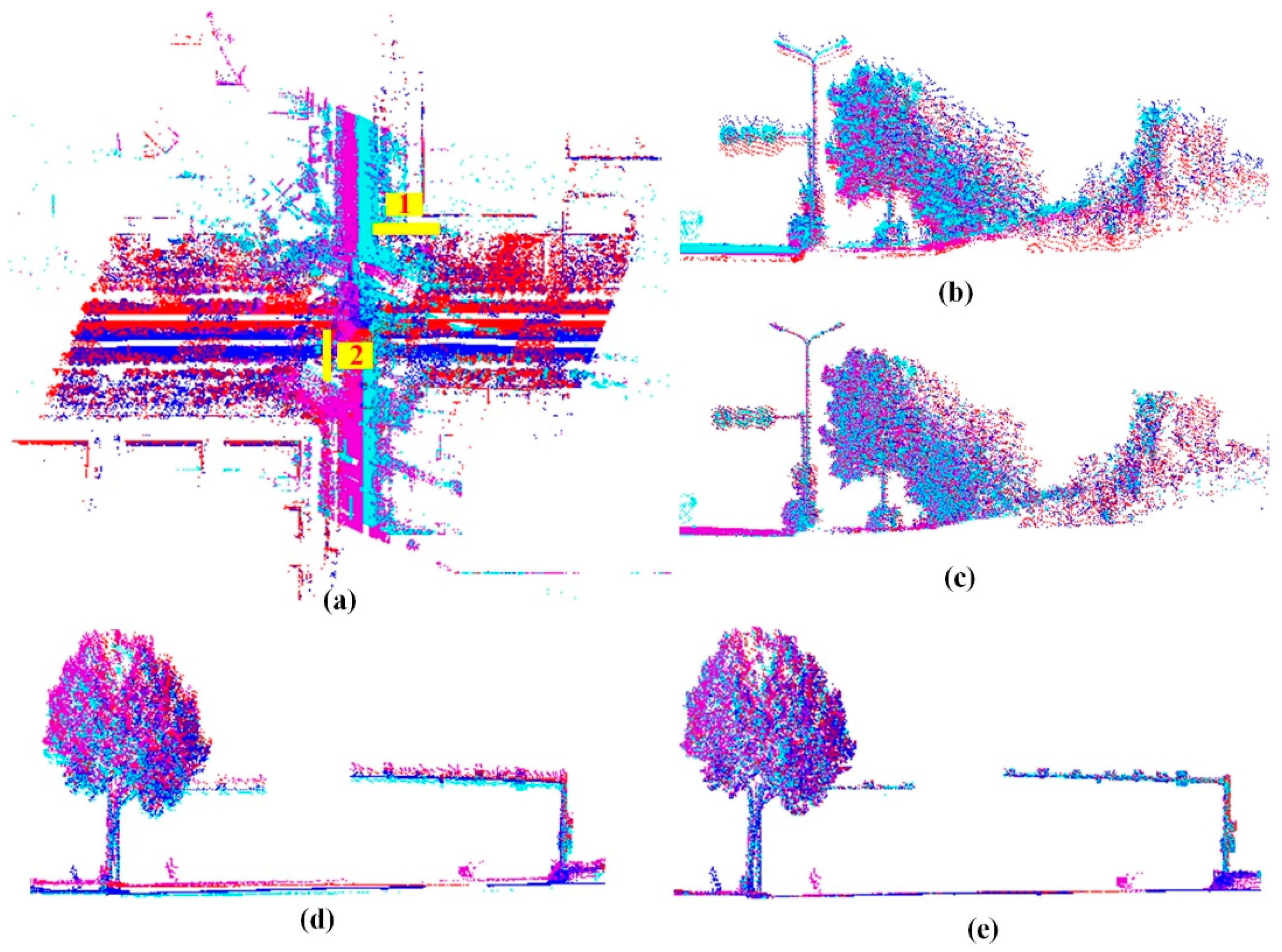

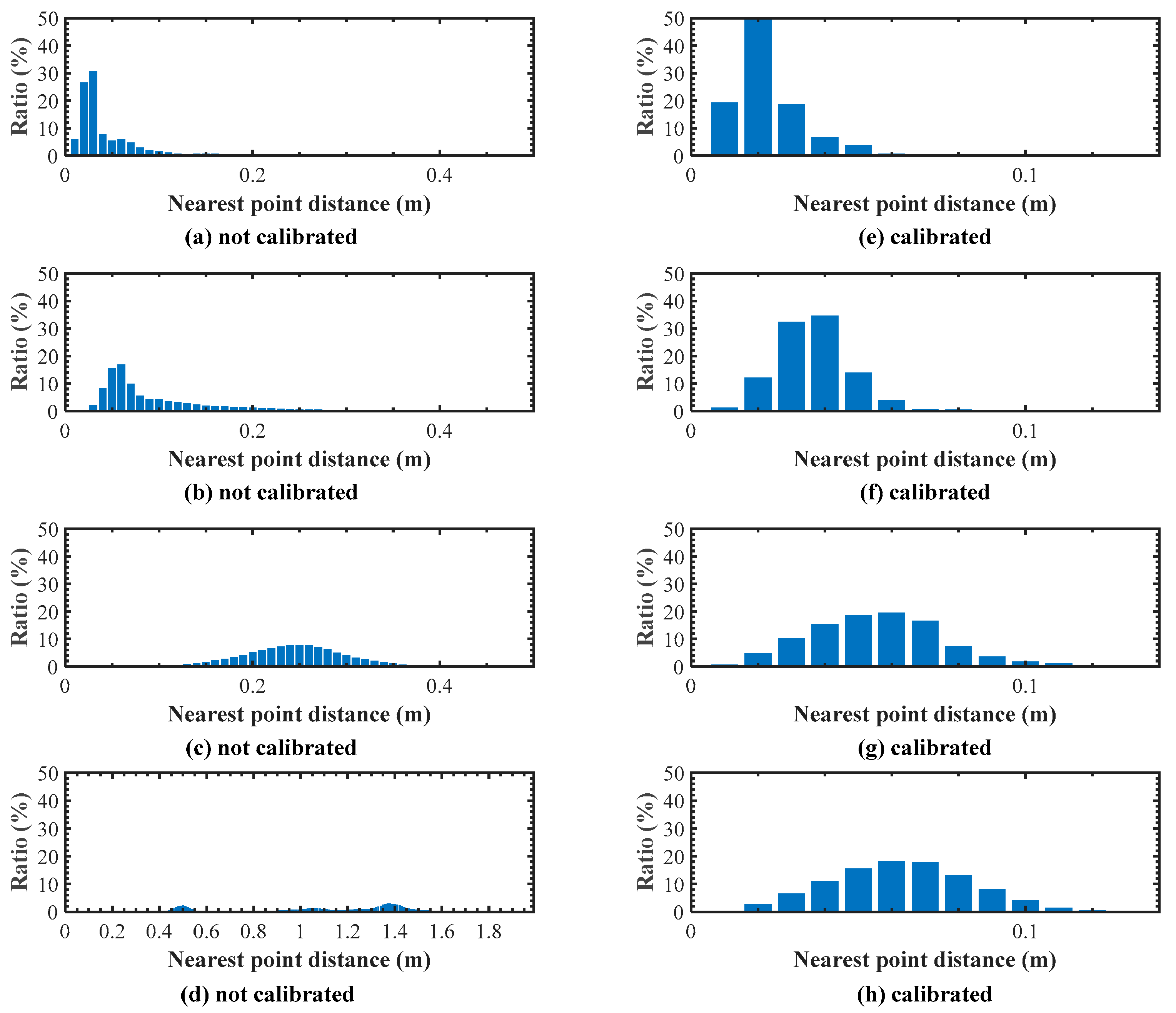

3. Results

4. Discussion

4.1. Influence of Pose Errors

4.2. Influence of Laser Scanner Mounting Styles

4.3. Influence of Point Density and ICP Registration

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Puente, I.; González-Jorge, H.; Martínez-Sánchez, J.; Arias, P. Review of mobile mapping and surveying technologies. Measurement 2013, 46, 2127–2145.

2. Baltsavias, E.P. Airborne laser scanning: Existing systems and firms and other resources. ISPRS J. Photogramm. 1999, 54, 164–198.

3. Wallace, L.; Lucieer, A.; Watson, C.; Turner, D. Development of a UAV-LiDAR System with Application to Forest Inventory. Remote Sens. 2012, 4, 1519–1543.

4. Liang, X.; Kukko, A.; Kaartinen, H.; Hyyppä, J.; Yu, X.; Jaakkola, A.; Wang, Y. Possibilities of a Personal Laser Scanning System for Forest Mapping and Ecosystem Services. Sensors 2014, 14, 1228–1248.

5. Wang, Y.; Cheng, L.; Chen, Y.; Wu, Y.; Li, M. Building Point Detection from Vehicle-Borne LiDAR Data Based on Voxel Group and Horizontal Hollow Analysis. Remote Sens. 2016, 8, 419.

6. Lin, Y.; Hyyppa, J.; Jaakkola, A. Mini-UAV-Borne LIDAR for Fine-Scale Mapping. IEEE Geosci. Remote Sens. 2011, 8, 426–430.

7. Holgado-Barco, A.; Gonzalez-Aguilera, D.; Arias-Sanchez, P.; Martinez-Sanchez, J. An automated approach to vertical road characterisation using mobile LiDAR systems: Longitudinal profiles and cross-sections. ISPRS J. Photogramm. 2014, 96, 28–37.

8. Kang, Y.; Roh, C.; Suh, S.; Song, B. A Lidar-Based Decision-Making Method for Road Boundary Detection Using Multiple Kalman Filters. IEEE Trans. Ind. Electron. 2012, 59, 4360–4368.

9. Vaaja, M.; Hyyppä, J.; Kukko, A.; Kaartinen, H.; Hyyppä, H.; Alho, P. Mapping Topography Changes and Elevation Accuracies Using a Mobile Laser Scanner. Remote Sens. 2011, 3, 587–600.

10. Flener, C.; Vaaja, M.; Jaakkola, A.; Krooks, A.; Kaartinen, H.; Kukko, A.; Kasvi, E.; Hyyppä, H.; Hyyppä, J.; Alho, P. Seamless Mapping of River Channels at High Resolution Using Mobile LiDAR and UAV-Photography. Remote Sens. 2013, 5, 6382–6407.

11. Guan, H.; Li, J.; Cao, S.; Yu, Y. Use of mobile LiDAR in road information inventory: A review. Int. J. Image Data Fusion 2016, 7, 219–242.

12. Soilán, M.; Riveiro, B.; Martínez-Sánchez, J.; Arias, P. Traffic sign detection in MLS acquired point clouds for geometric and image-based semantic inventory. ISPRS J. Photogramm. 2016, 114, 92–101.

13. Liang, X.; Hyyppa, J.; Kukko, A.; Kaartinen, H.; Jaakkola, A.; Yu, X. The use of a mobile laser scanning system for mapping large forest plots. IEEE Geosci. Remote Sens. 2014, 11, 1504–1508.

14. Wallace, L.; Lucieer, A.; Watson, C.S. Evaluating Tree Detection and Segmentation Routines on Very High Resolution UAV LiDAR Data. IEEE Trans. Geosci. Remote Sens. 2014, 52, 7619–7628.

15. Glennie, C. Rigorous 3D error analysis of kinematic scanning LIDAR systems. J. Appl. Geod. 2007, 1, 147–157.

16. Kilian, J.; Haala, N.; Englich, M. Capture and Evaluation of Airborne Laser Scanner Data. In Proceedings of the International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Vienna, Austria, 12–18 July 1996; ISPRS; Volume 31, pp. 383–388.

17. Maas, H. Least-squares matching with airborne laser scanning data in a TIN structure. Int. Arch. Photogramm. Remote Sens. 2000, 33, 548–555.

18. Skaloud, J.; Lichti, D. Rigorous approach to bore-sight self-calibration in airborne laser scanning. ISPRS J. Photogramm. 2006, 61, 47–59.

19. Chan, T.O.; Lichti, D.D.; Glennie, C.L. Multi-feature based boresight self-calibration of a terrestrial mobile mapping system. ISPRS J. Photogramm. 2013, 82, 112–124.

20. Filin, S. Recovery of systematic biases in laser altimetry data using natural surfaces. Photogramm. Eng. Remote Sens. 2003, 69, 1235–1242.

21. Hauser, D.; Glennie, C.; Brooks, B. Calibration and accuracy analysis of a low-cost mapping-grade mobile laser scanning system. J. Surv. Eng. 2016, 142, 4016011.

22. Hong, S.; Park, I.; Lee, J.; Lim, K.; Choi, Y.; Sohn, H. Utilization of a Terrestrial Laser Scanner for the Calibration of Mobile Mapping Systems. Sensors 2017, 17, 474.

23. Ravi, R.; Shamseldin, T.; Elbahnasawy, M.; Lin, Y.; Habib, A. Bias Impact Analysis and Calibration of UAV-Based Mobile LiDAR System with Spinning Multi-Beam Laser Scanner. Appl. Sci. 2018, 8, 297.

24. Rieger, P.; Studnicka, N.; Pfennigbauer, M.; Zach, G. Boresight alignment method for mobile laser scanning systems. J. Appl. Geod. 2010, 4, 13–21.

25. Glennie, C. Calibration and kinematic analysis of the velodyne HDL-64E S2 lidar sensor. Photogramm. Eng. Remote Sens. 2012, 78, 339–347.

26. Guo, Q.; Su, Y.; Hu, T.; Zhao, X.; Wu, F.; Li, Y.; Liu, J.; Chen, L.; Xu, G.; Lin, G.; et al. An integrated UAV-borne lidar system for 3D habitat mapping in three forest ecosystems across China. Int. J. Remote Sens. 2017, 38, 2954–2972.

27. Kumari, P.; Carter, W.E.; Shrestha, R.L. Adjustment of systematic errors in ALS data through surface matching. Adv. Space Res. 2011, 47, 1851–1864.

28. Yan, L.; Tan, J.; Liu, H.; Xie, H.; Chen, C. Automatic non-rigid registration of multi-strip point clouds from mobile laser scanning systems. Int. J. Remote Sens. 2018, 39, 1713–1728.

29. Rusinkiewicz, S.; Levoy, M. Efficient variants of the ICP algorithm. In Proceedings of the Third International Conference on 3-D Digital Imaging and Modeling IEEE, Quebec, QC, Canada, 28 May–1 June 2001; pp. 145–152.

| Datasets | (°) | (°) | (°) |
|---|---|---|---|
| MLS1 | 0 | 60 | −90 |
| MLS2 | −70 | 90 | 0 |
| ULS1 | −70 | 90 | 0 |
| ULS2 | −90 | 90 | 0 |

| Datasets | (°) | (°) | (°) | Running Time (s) |
|---|---|---|---|---|
| MLS1 | 0.064961 | −0.089058 | −0.114466 | 404 |
| MLS2 | 0.008410 | 0.399149 | 0.142419 | 238 |
| ULS1 | −0.090418 | 0.122082 | −0.044299 | 447 |
| ULS2 | 0.225435 | 0.202054 | −0.006619 | 280 |

| Datasets | Number of Point Correspondences | RMSE before Calibration (cm) | RMSE after Calibration (cm) | Accuracy Improved (%) |
|---|---|---|---|---|
| MLS1 | 975,300 | 5.2 | 2.1 | 59.6 |
| MLS2 | 374,100 | 13.8 | 3.4 | 75.4 |
| ULS1 | 1,041,850 | 24.5 | 5.4 | 78.0 |
| ULS2 | 231,980 | 117.8 | 6.1 | 94.8 |
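The "Accuracy Improved" column in the table above is consistent with the relative reduction in RMSE, (before − after) / before × 100. The short check below reproduces the four tabulated percentages from the RMSE values; the function name `improvement_percent` is introduced here only for illustration.

```python
# RMSE values (cm) before and after calibration, copied from the table above.
rmse_cm = {
    "MLS1": (5.2, 2.1),
    "MLS2": (13.8, 3.4),
    "ULS1": (24.5, 5.4),
    "ULS2": (117.8, 6.1),
}


def improvement_percent(before, after):
    """Relative RMSE reduction in percent."""
    return (before - after) / before * 100.0


for name, (before, after) in rmse_cm.items():
    print(f"{name}: {improvement_percent(before, after):.1f}%")
# prints 59.6%, 75.4%, 78.0%, and 94.8% for MLS1, MLS2, ULS1, and ULS2
```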

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Li, Z.; Tan, J.; Liu, H. Rigorous Boresight Self-Calibration of Mobile and UAV LiDAR Scanning Systems by Strip Adjustment. Remote Sens. 2019, 11, 442. https://doi.org/10.3390/rs11040442
