Hyperspectral Images Classification Based on Dense Convolutional Networks with Spectral-Wise Attention Mechanism

Abstract

1. Introduction

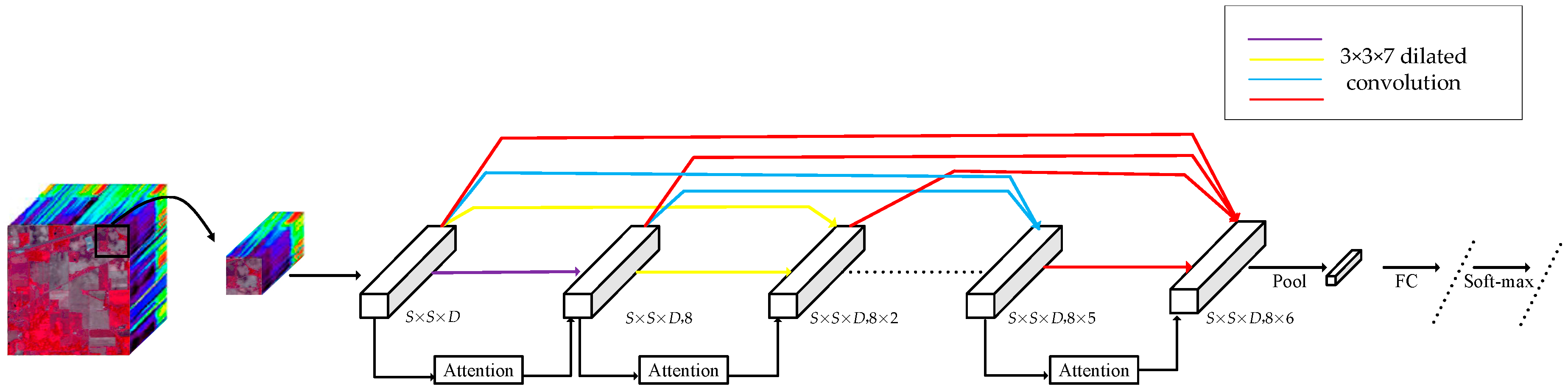

- We introduce a network architecture designed specifically for 3-D patches of hyperspectral images (HSI). The network uses dilated convolutions to capture features at several scales of the patch, so that multiple scales are obtained within a single layer. Dense connectivity concatenates the 3-D feature maps learned by different layers, which increases the diversity of the inputs to subsequent layers.

- We propose a new spectral-wise attention mechanism that selectively emphasizes informative spectral features and suppresses less useful ones. Because it applies soft weights to the features, the mechanism is well suited to, and efficient for, the subsequent HSI classification task. To the best of our knowledge, this is the first time an attention mechanism has been introduced for HSI classification.

- Experimental results on three HSI datasets demonstrate that the proposed end-to-end 3-D dense convolutional network with a spectral-wise attention mechanism (MSDN-SA) outperforms the compared state-of-the-art methods.
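As a rough illustration of the spectral-wise attention idea, the minimal Python sketch below rescales each spectral band of a feature cube by a soft weight in (0, 1). It assumes a simplified squeeze-and-excitation style gate (cf. Hu et al. [44]): each band is squeezed to its spatial mean, giving one value per band (consistent with the 1 × 1 × 200 attention outputs listed in the network architecture table), and a sigmoid turns that value into the band's weight. The function `spectral_attention` and its `scale` and `bias` parameters are hypothetical stand-ins for learned layers; this is not the authors' implementation, and it omits the bottleneck layers of a full squeeze-and-excitation block.

```python
import math
from typing import List, Tuple

# One feature map (single channel) indexed as cube[row][col][band].
Cube = List[List[List[float]]]


def spectral_attention(cube: Cube, scale: float = 1.0,
                       bias: float = 0.0) -> Tuple[Cube, List[float]]:
    """Reweight each spectral band of `cube` by a soft weight in (0, 1).

    Hypothetical simplified gate: `scale` and `bias` stand in for the
    learned parameters of a real attention module.
    """
    rows, cols, bands = len(cube), len(cube[0]), len(cube[0][0])

    # Squeeze: average each band over the spatial window (a 1 x 1 x bands descriptor).
    means = [
        sum(cube[r][c][b] for r in range(rows) for c in range(cols)) / (rows * cols)
        for b in range(bands)
    ]

    # Excite: map each band descriptor to a soft weight with a sigmoid.
    weights = [1.0 / (1.0 + math.exp(-(scale * m + bias))) for m in means]

    # Scale: multiply every value in band b by weights[b].
    out = [[[cube[r][c][b] * weights[b] for b in range(bands)]
            for c in range(cols)]
           for r in range(rows)]
    return out, weights


if __name__ == "__main__":
    # Toy 2 x 2 patch with 3 bands: band 1 responds strongly, band 2 negatively.
    patch = [[[0.0, 2.0, -2.0] for _ in range(2)] for _ in range(2)]
    reweighted, w = spectral_attention(patch)
    print([round(x, 4) for x in w])  # [0.5, 0.8808, 0.1192]
```

In a trained network the gate would be learned, so bands that carry discriminative information end up with weights near 1 while the rest are damped toward 0; the fixed gate used here simply favors bands with a larger mean response.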

2. Related Work

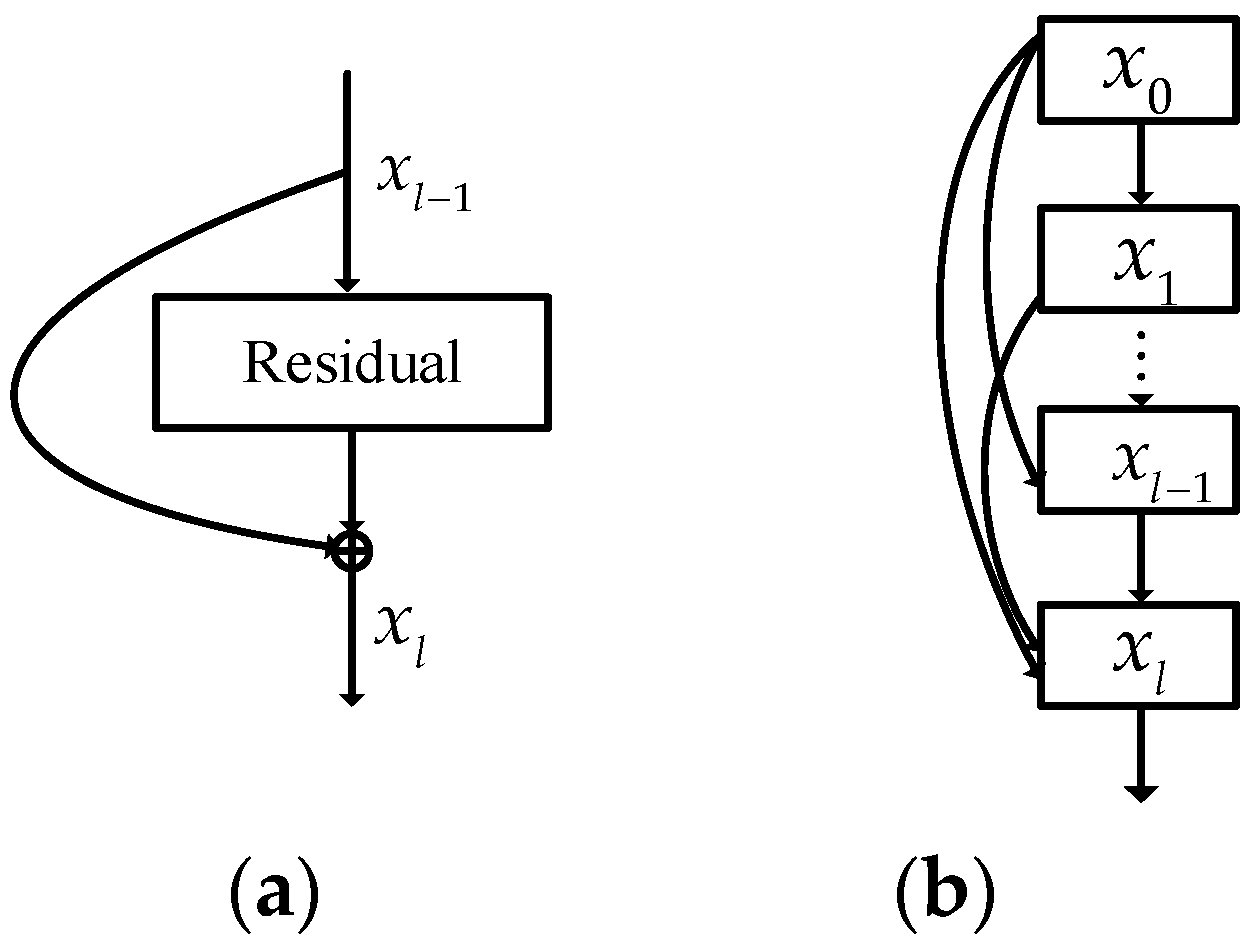

2.1. Residual Connections and Dense Connectivity in CNNs

2.2. Attention Mechanism

3. Proposed Methods

3.1. Dense Convolutional Network with Dilated Convolution

3.2. Spectral-Wise Attention Mechanism

3.3. Network Implementation Details

4. Experimental Results

4.1. Datasets

- (1) Indian Pines Dataset

- (2) University of Pavia Dataset

- (3) University of Houston Dataset

4.2. Experimental Setting

- (1) CCF [52]: Canonical correlation forests using spectral features, with 100 trees.

- (2) SVM-3DG [14]: An SVM-based classification method that combines the 3-D discrete wavelet transform with a Markov random field (MRF).

- (3) CNN-transfer [23]: A two-branch CNN based on spectral-spatial features that uses a transfer learning strategy. For the Indian Pines dataset, the network is pretrained on the Salinas Valley dataset, which was acquired by the same sensor (AVIRIS); for the University of Pavia dataset, it is pretrained on the Pavia Center dataset, which was also acquired by the same sensor (ROSIS).

- (4)

- (5) SSRN [31]: The SSRN architecture follows [31]. Its spectral feature learning part consists of two convolutional layers and two spectral residual blocks, and its spatial feature learning part comprises one 3-D convolutional layer and two spatial residual blocks. An average pooling layer and a fully connected layer then produce the output.

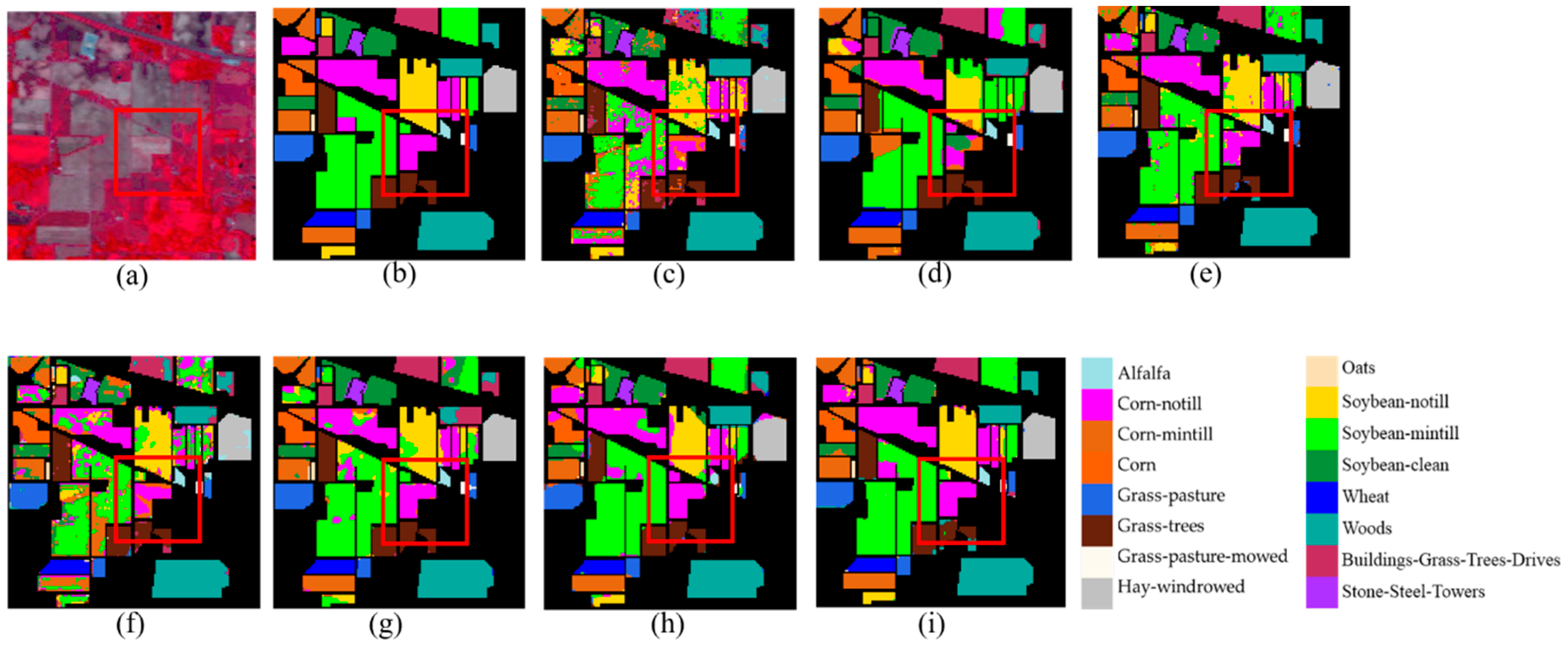

4.3. Results of Indian Pines Dataset

4.4. Results of University of Pavia Dataset

4.5. Results of University of Houston Dataset

5. Analysis and Discussion

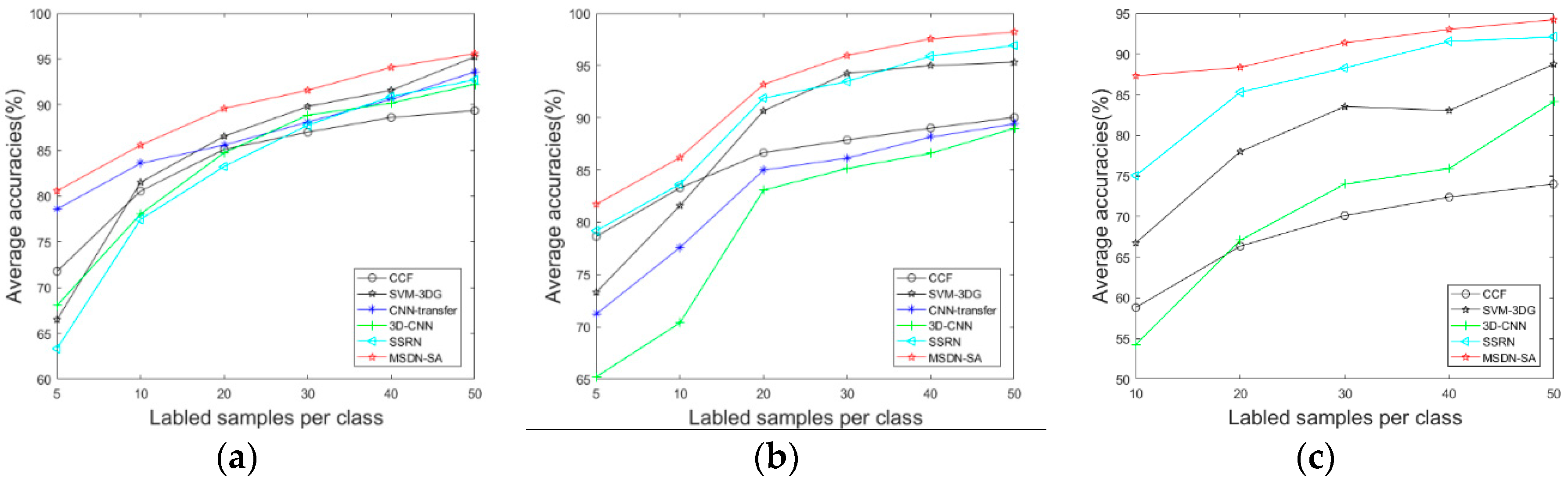

5.1. Effect of Training Samples

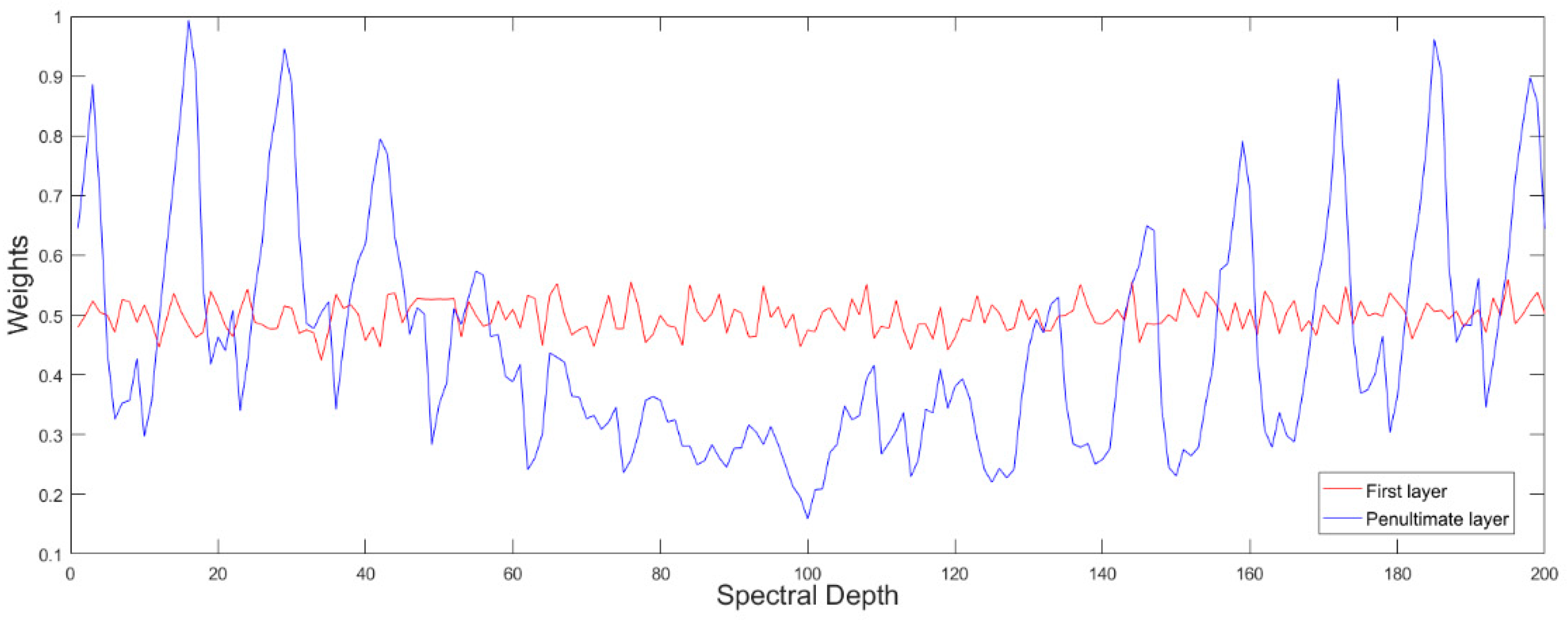

5.2. Effect of Spectral-Wise Attention Mechanism

5.3. Effect of Dilated Convolution

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Chen, Y.; Lin, Z.; Zhao, X.; Wang, G.; Gu, Y.F. Deep learning-based classification of hyperspectral data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 2094–2107.

- Liang, L.; Di, L.; Zhang, L.; Deng, M.; Qin, Z.; Zhao, S.; Lin, H. Estimation of crop LAI using hyperspectral vegetation indices and a hybrid inversion method. Remote Sens. Environ. 2015, 165, 123–134.

- Yokoya, N.; Chan, J.; Segl, K. Potential of resolution-enhanced hyperspectral data for mineral mapping using simulated enmap and sentinel-2 images. Remote Sens. 2016, 8, 172.

- Bioucas-Dias, J.M.; Plaza, A.; Camps-Valls, G.; Scheunders, P.; Nasrabadi, N.; Chanussot, J. Hyperspectral remote sensing data analysis and future challenges. IEEE Geosci. Remote Sens. Mag. 2013, 1, 6–36.

- Landgrebe, D.A. Signal Theory Methods in Multispectral Remote Sensing; Wiley: Hoboken, NJ, USA, 2003; Chapter 3.

- He, L.; Li, J.; Liu, C.; Li, S. Recent advances on spectral-spatial hyperspectral image classification: An overview and new guidelines. IEEE Trans. Geosci. Remote Sens. 2018, 56, 1579–1597.

- Du, Q.; Chang, C. A linear constrained distance-based discriminant analysis for hyperspectral image classification. Pattern Recognit. 2001, 34, 361–373.

- Samaniego, L.; Bardossy, A.; Schulz, K. Supervised classification of remotely sensed imagery using a modified k-NN technique. IEEE Trans. Geosci. Remote Sens. 2008, 46, 2112–2125.

- Ediriwickrema, J.; Khorram, S. Hierarchical maximum-likelihood classification for improved accuracies. IEEE Trans. Geosci. Remote Sens. 1997, 35, 810–816.

- Li, J.; Bioucas-Dias, J.M.; Plaza, A. Semisupervised hyperspectral image segmentation using multinomial logistic regression with active learning. IEEE Trans. Geosci. Remote Sens. 2010, 48, 4085–4098.

- Xia, J.; Bombrun, L.; Berthoumieu, Y.; Germain, C.; Du, P. Spectral–spatial rotation forest for hyperspectral image classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 10, 4605–4613.

- Chan, C.W.; Paelinckx, D. Evaluation of random forest and adaboost tree-based ensemble classification and spectral band selection for ecotope mapping using airborne hyperspectral imagery. Remote Sens. Environ. 2008, 112, 2999–3011.

- Qian, Y.; Ye, M.; Zhou, J. Hyperspectral image classification based on structured sparse logistic regression and three-dimensional wavelet texture features. IEEE Trans. Geosci. Remote Sens. 2013, 51, 2276–2291.

- Cao, X.; Xu, L.; Meng, D.; Zhao, Q.; Xu, Z. Integration of 3-dimensional discrete wavelet transform and Markov random field for hyperspectral image classification. Neurocomputing 2017, 226, 90–100.

- Jia, S.; Shen, L.; Li, Q. Gabor feature-based collaborative representation for hyperspectral imagery classification. IEEE Trans. Geosci. Remote Sens. 2015, 53, 1118–1129.

- Shen, L.; Jia, S. Three-dimensional gabor wavelets for pixel-based hyperspectral imagery classification. IEEE Trans. Geosci. Remote Sens. 2011, 49, 5039–5046.

- Tang, Y.Y.; Lu, Y.; Yuan, H. Hyperspectral image classification based on three-dimensional scattering wavelet transform. IEEE Trans. Geosci. Remote Sens. 2015, 53, 2467–2480.

- Chen, Y.; Zhao, X.; Jia, X. Spectral–spatial classification of hyperspectral data based on deep belief network. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 2381–2392.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Lecun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016.

- Petersson, H.; Gustafsson, D.; Bergstrom, D. Hyperspectral image analysis using deep learning—A review. In Proceedings of the 2016 Sixth International Conference on Image Processing Theory, Tools and Applications (IPTA), Oulu, Finland, 12–15 December 2016; pp. 1–6.

- Yang, J.; Zhao, Y.Q.; Chan, C.W. Learning and transferring deep joint spectral–spatial features for hyperspectral classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 4729–4742.

- Chen, Y.; Jiang, H.; Li, C.; Jia, X.; Ghamisi, P. Deep feature extraction and classification of hyperspectral images based on convolutional neural networks. IEEE Trans. Geosci. Remote Sens. 2016, 54, 6232–6251.

- Li, Y.; Zhang, H.; Shen, Q. Spectral-spatial classification of hyperspectral imagery with 3d convolutional neural network. Remote Sens. 2017, 9, 67.

- Gao, Q.; Lim, S.; Jia, X. Hyperspectral image classification using convolutional neural networks and multiple feature learning. Remote Sens. 2018, 10, 299.

- Yang, X.; Ye, Y.; Li, X.; Lau, R.Y.K.; Zhang, X. Hyperspectral image classification with deep learning models. IEEE Trans. Geosci. Remote Sens. 2018, 56, 5408–5423.

- Ma, X.; Wang, H.; Geng, J. Spectral-spatial classification of hyperspectral image based on deep auto-encoder. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 4073–4085.

- Pan, B.; Shi, Z.; Xu, X. MugNet: Deep learning for hyperspectral image classification using limited samples. ISPRS J. Photogramm. Remote Sens. 2018, 145, 108–119.

- He, Z.; Liu, H.; Wang, Y.; Hu, J. Generative adversarial networks based semi-supervised learning for hyperspectral image classification. Remote Sens. 2017, 9, 1042.

- Zhong, Z.; Li, J.; Luo, Z.; Chapman, M. Spectral–spatial residual network for hyperspectral image classification: A 3-d deep learning framework. IEEE Trans. Geosci. Remote Sens. 2018, 56, 847–858.

- Huang, G.; Liu, Z.; van der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 39, 640–651.

- Sermanet, P.; Kavukcuoglu, K.; Chintala, S.; Lecun, Y. Pedestrian Detection with Unsupervised Multi-stage Feature Learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013.

- Hariharan, B.; Arbelaez, P.; Girshick, R.; Malik, J. Hypercolumns for object segmentation and fine-grained localization. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Boston, MA, USA, 7–12 June 2015.

- Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. In Proceedings of the 32nd International Conference on Machine Learning (ICML 2015), Lille, France, 6–11 July 2015; pp. 448–456.

- Glorot, X.; Bordes, A.; Bengio, Y. Deep sparse rectifier neural networks. In Proceedings of the AISTATS, Ft. Lauderdale, FL, USA, 11–13 April 2011; p. 3.

- Lecun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Mnih, V.; Heess, N.; Graves, A.; Kavukcuoglu, K. Recurrent models of visual attention. In Proceedings of the 27th International Conference on Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014.

- Xu, K.; Ba, J.; Kiros, R.; Cho, K.; Courville, A.; Salakhutdinov, R.; Zemel, R.; Bengio, Y. Show, attend and tell: Neural image caption generation with visual attention. In Proceedings of the International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 2048–2057.

- Zhu, Y.; Groth, O.; Bernstein, M.; Fei-Fei, L. Visual7w: Grounded question answering in images. In Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 4995–5004.

- Yang, Z.; He, X.; Gao, J.; Deng, L.; Smola, A. Stacked attention networks for image question answering. In Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 21–29.

- Nam, H.; Ha, J.W.; Kim, J. Dual attention networks for multimodal reasoning and matching. In Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2156–2164.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. arXiv 2017, arXiv:1709.01507.

- Fu, J.; Zheng, H.; Mei, T. Look closer to see better: Recurrent attention convolutional neural network for fine-grained image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 4476–4484.

- Li, H.; Xiong, P.; An, J.; Wang, L. Pyramid attention network for semantic segmentation. arXiv 2018, arXiv:1805.10180v2.

- Wang, F.; Jiang, M.; Qian, C.; Yang, S.; Li, C.; Zhang, H.; Wang, X.; Tang, X. Residual attention network for image classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Newell, A.; Yang, K.; Deng, J. Stacked hourglass networks for human pose estimation. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 8–16 October 2016.

- Yu, F.; Koltun, V. Multi-Scale Context Aggregation by Dilated Convolutions. In Proceedings of the International Conference on Learning Representations, San Juan, Puerto Rico, 2–4 May 2016.

- Li, F.; Xu, L.; Siva, P.; Wong, A.; Clausi, D.A. Hyperspectral image classification with limited labeled training samples using enhanced ensemble learning and conditional random fields. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 1–12.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Delving deep into rectifiers: Surpassing human-level performance on imagenet classification. In Proceedings of the International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1026–1034.

- Xia, J.; Yokoya, N.; Iwasaki, A. Hyperspectral image classification with canonical correlation forests. IEEE Trans. Geosci. Remote Sens. 2017, 55, 1–11.

| Layer | Kernel size | Network | Output size (H × W × B, feature maps) |
|---|---|---|---|
| Inputs | - | - | 13 × 13 × 200 |
| 3-D-DConv1 | 3 × 3 × 7 | DConv-BN | 13 × 13 × 200, 8 |
| Attention mechanism | - | - | 1 × 1 × 200, 8 |
| 3-D-DConv2 | 3 × 3 × 7 | DConv-BN | 13 × 13 × 200, 8 |
| Attention mechanism | - | - | 1 × 1 × 200, 8 |
| Dense concatenate | - | - | 13 × 13 × 200, 16 |
| 3-D-DConv3 | 3 × 3 × 7 | DConv-BN | 13 × 13 × 200, 8 |
| Attention mechanism | - | - | 1 × 1 × 200, 8 |
| Dense concatenate | - | - | 13 × 13 × 200, 24 |
| 3-D-DConv4 | 3 × 3 × 7 | DConv-BN | 13 × 13 × 200, 8 |
| Attention mechanism | - | - | 1 × 1 × 200, 8 |
| Dense concatenate | - | - | 13 × 13 × 200, 32 |
| 3-D-DConv5 | 3 × 3 × 7 | DConv-BN | 13 × 13 × 200, 8 |
| Attention mechanism | - | - | 1 × 1 × 200, 8 |
| Dense concatenate | - | - | 13 × 13 × 200, 40 |
| 3-D-DConv6 | 3 × 3 × 7 | DConv-BN | 13 × 13 × 200, 8 |
| Attention mechanism | - | - | 1 × 1 × 200, 8 |
| Dense concatenate | - | - | 13 × 13 × 200, 48 |
| 3-D-Average Pooling | 3 × 3 × 8 | stride 2 | 5 × 5 × 48, 8 |
| FC, Softmax | 360 | | |
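The channel counts and sizes in the dense part of the table above follow from two simple rules, sketched below in Python. Each 3-D-DConv layer contributes 8 feature maps, so the dense concatenation after layer l holds 8·l maps (16, 24, ..., 48), in the spirit of dense connectivity (Huang et al. [32]). A dilated kernel of size k with dilation d spans k + (k - 1)(d - 1) positions along an axis (cf. Yu and Koltun [49]), and padding of d(k - 1)/2 per side at stride 1 keeps the 13 × 13 × 200 size unchanged. The dilation rates in the loop are hypothetical, since the table does not list them, and the helper names are illustrative only.

```python
from typing import List

GROWTH = 8       # feature maps produced by each 3-D-DConv layer (from the table)
NUM_LAYERS = 6   # 3-D-DConv1 ... 3-D-DConv6


def dense_channels(num_layers: int, growth: int) -> List[int]:
    """Feature maps available after each layer when all layer outputs are concatenated."""
    return [growth * layer for layer in range(1, num_layers + 1)]


def effective_extent(kernel: int, dilation: int) -> int:
    """Number of input positions spanned by a dilated kernel along one axis."""
    return kernel + (kernel - 1) * (dilation - 1)


def same_padding(kernel: int, dilation: int) -> int:
    """Zero padding per side that preserves the axis length at stride 1 (odd kernels)."""
    return dilation * (kernel - 1) // 2


def conv_output(size: int, kernel: int, dilation: int, pad: int, stride: int = 1) -> int:
    """Standard output-length formula for a (dilated) convolution along one axis."""
    return (size + 2 * pad - dilation * (kernel - 1) - 1) // stride + 1


if __name__ == "__main__":
    print(dense_channels(NUM_LAYERS, GROWTH))  # [8, 16, 24, 32, 40, 48]

    # Hypothetical dilation rates, chosen only for illustration.
    for d in (1, 2, 3):
        spatial, spectral = effective_extent(3, d), effective_extent(7, d)
        rows = conv_output(13, 3, d, same_padding(3, d))
        bands = conv_output(200, 7, d, same_padding(7, d))
        print(f"d={d}: kernel spans {spatial}x{spatial}x{spectral}, output {rows}x{rows}x{bands}")
```

Because dilation widens the span without adding weights, a 3 × 3 × 7 kernel can cover a larger part of the 13 × 13 patch at the same parameter cost, which is what allows several scales to be gathered within a single layer.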

| Class | Samples (Train/Test) | CCF | SVM-3DG | CNN-Transfer | 3D-CNN | SSRN | MSDN | MSDN-SA |
|---|---|---|---|---|---|---|---|---|
| 1 | 20/26 | 95.77 ± 2.84 | 97.44 ± 2.22 | 97.95 ± 2.44 | 98.08 ± 2.72 | 86.59 ± 7.31 | 95.65 ± 2.94 | 95.62 ± 1.60 |
| 2 | 20/1408 | 67.12 ± 6.67 | 70.12 ± 7.54 | 65.21 ± 6.56 | 64.42 ± 6.43 | 93.80 ± 3.83 | 71.77 ± 1.96 | 78.31 ± 2.83 |
| 3 | 20/810 | 67.14 ± 5.29 | 71.73 ± 17.88 | 67.10 ± 4.17 | 65.72 ± 2.15 | 93.75 ± 4.48 | 78.46 ± 5.18 | 89.20 ± 3.02 |
| 4 | 20/217 | 89.54 ± 4.05 | 91.71 ± 5.77 | 88.51 ± 3.19 | 88.02 ± 1.95 | 80.82 ± 6.23 | 94.65 ± 2.01 | 92.68 ± 4.75 |
| 5 | 20/463 | 87.58 ± 3.41 | 88.26 ± 8.04 | 88.06 ± 2.61 | 88.39 ± 0.95 | 98.64 ± 1.75 | 93.76 ± 3.27 | 87.79 ± 0.58 |
| 6 | 20/710 | 93.21 ± 2.56 | 97.46 ± 1.94 | 94.28 ± 1.39 | 94.65 ± 0.59 | 99.49 ± 0.52 | 97.57 ± 1.69 | 94.27 ± 0.38 |
| 7 | 14/14 | 95.00 ± 6.78 | 100 ± 0.00 | 95.54 ± 5.80 | 96.43 ± 5.05 | 66.46 ± 10.58 | 92.86 ± 5.83 | 96.47 ± 4.74 |
| 8 | 20/458 | 98.19 ± 0.50 | 99.41 ± 0.83 | 91.13 ± 0.82 | 86.79 ± 3.24 | 99.96 ± 0.10 | 97.43 ± 1.10 | 98.18 ± 0.09 |
| 9 | 10/10 | 100 ± 0.00 | 100 ± 0.00 | 100 ± 0.00 | 100 ± 0.00 | 61.67 ± 7.39 | 100 ± 0.00 | 100 ± 0.00 |
| 10 | 20/952 | 81.16 ± 6.41 | 74.37 ± 6.15 | 78.69 ± 4.14 | 76.24 ± 2.69 | 68.86 ± 17.70 | 80.48 ± 4.63 | 81.28 ± 2.00 |
| 11 | 20/2435 | 58.73 ± 4.41 | 74.74 ± 4.60 | 59.55 ± 2.16 | 60.14 ± 1.89 | 83.28 ± 1.82 | 74.43 ± 3.85 | 79.99 ± 1.09 |
| 12 | 20/573 | 80.38 ± 4.89 | 91.04 ± 6.92 | 78.59 ± 3.64 | 61.43 ± 14.07 | 83.58 ± 7.01 | 89.69 ± 1.79 | 84.82 ± 3.20 |
| 13 | 20/185 | 99.03 ± 0.34 | 99.10 ± 0.31 | 98.27 ± 0.60 | 98.92 ± 0.21 | 96.90 ± 2.18 | 98.21 ± 0.41 | 97.23 ± 0.61 |
| 14 | 20/1245 | 90.25 ± 4.83 | 86.43 ± 7.87 | 90.24 ± 0.53 | 91.41 ± 0.14 | 99.98 ± 0.04 | 88.43 ± 2.49 | 95.80 ± 0.21 |
| 15 | 20/366 | 62.90 ± 4.40 | 94.72 ± 9.15 | 77.93 ± 3.83 | 85.22 ± 10.22 | 60.16 ± 1.93 | 79.46 ± 4.95 | 64.97 ± 1.62 |
| 16 | 20/73 | 95.75 ± 2.77 | 96.35 ± 2.85 | 95.97 ± 1.75 | 100 ± 0.00 | 79.96 ± 2.99 | 95.34 ± 1.24 | 96.71 ± 1.97 |
| OA (%) | - | 75.60 ± 1.04 | 81.43 ± 1.05 | 75.18 ± 1.02 | 74.51 ± 1.10 | 84.35 ± 4.19 | 83.62 ± 3.95 | 86.62 ± 2.36 |
| AA (%) | - | 85.11 ± 0.58 | 89.56 ± 0.60 | 85.59 ± 1.01 | 84.74 ± 1.76 | 83.24 ± 3.26 | 89.26 ± 2.66 | 89.58 ± 1.82 |
| κ × 100 | - | 72.50 ± 1.14 | 78.93 ± 1.10 | 72.80 ± 1.10 | 71.21 ± 1.30 | 82.20 ± 4.68 | 80.96 ± 2.07 | 85.16 ± 2.02 |
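The Train/Test column of the 16-class table above uses 20 training samples per class, except for the smallest classes, which are split evenly (14/14 and 10/10). One rule consistent with every row is min(20, n // 2) training samples for a class with n labeled pixels; this rule is inferred from the table rather than stated by the authors. The Python sketch below applies it with a seeded random shuffle; `split_per_class` is a hypothetical helper, not code from the paper.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def split_per_class(labels: Sequence[int], per_class: int = 20,
                    seed: int = 0) -> Tuple[List[int], List[int]]:
    """Return (train, test) pixel indices, stratified by class label.

    Assumed rule (inferred, not confirmed): min(per_class, n // 2) training
    pixels for a class with n labeled pixels; all other pixels are test pixels.
    """
    rng = random.Random(seed)
    by_class: Dict[int, List[int]] = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    train: List[int] = []
    test: List[int] = []
    for label in sorted(by_class):
        indices = list(by_class[label])
        rng.shuffle(indices)                      # random draw within the class
        n_train = min(per_class, len(indices) // 2)
        train.extend(indices[:n_train])
        test.extend(indices[n_train:])            # remaining pixels go to the test set
    return sorted(train), sorted(test)


if __name__ == "__main__":
    # Total sizes (train + test) of classes 1, 7 and 9 in the 16-class table.
    sizes = {1: 46, 7: 28, 9: 20}
    labels = [c for c, n in sizes.items() for _ in range(n)]
    train, test = split_per_class(labels)
    for c in sizes:
        n_tr = sum(1 for i in train if labels[i] == c)
        n_te = sum(1 for i in test if labels[i] == c)
        print(f"class {c}: {n_tr}/{n_te}")
    # class 1: 20/26, class 7: 14/14, class 9: 10/10
```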

| Class | Samples (Train/Test) | CCF | SVM-3DG | CNN-Transfer | 3D-CNN | SSRN | MSDN | MSDN-SA |
|---|---|---|---|---|---|---|---|---|
| 1 | 20/6611 | 73.21 ± 6.47 | 91.99 ± 4.87 | 70.62 ± 3.59 | 68.05 ± 3.98 | 98.95 ± 0.62 | 96.76 ± 2.77 | 93.31 ± 2.02 |
| 2 | 20/18629 | 79.88 ± 6.79 | 90.74 ± 5.48 | 75.41 ± 5.50 | 66.58 ± 4.80 | 99.85 ± 0.09 | 91.81 ± 2.08 | 98.88 ± 1.36 |
| 3 | 20/2079 | 80.15 ± 6.25 | 81.84 ± 9.84 | 78.08 ± 4.12 | 75.47 ± 3.94 | 87.58 ± 2.74 | 85.33 ± 3.21 | 87.93 ± 1.97 |
| 4 | 20/3044 | 92.81 ± 5.15 | 89.99 ± 3.82 | 91.44 ± 1.26 | 92.62 ± 0.94 | 82.48 ± 7.56 | 92.74 ± 1.73 | 91.33 ± 3.32 |
| 5 | 20/1325 | 99.53 ± 0.43 | 96.54 ± 1.84 | 98.87 ± 1.87 | 98.15 ± 2.62 | 99.98 ± 0.05 | 98.26 ± 0.22 | 99.97 ± 0.11 |
| 6 | 20/5009 | 82.65 ± 2.86 | 84.12 ± 10.63 | 78.19 ± 2.88 | 71.49 ± 5.16 | 71.46 ± 3.23 | 81.81 ± 2.13 | 87.29 ± 1.78 |
| 7 | 20/1310 | 93.96 ± 2.34 | 90.06 ± 3.86 | 91.30 ± 2.30 | 88.09 ± 2.16 | 89.74 ± 4.46 | 90.46 ± 2.47 | 91.68 ± 2.41 |
| 8 | 20/3662 | 77.96 ± 7.23 | 90.83 ± 5.75 | 81.65 ± 5.09 | 87.56 ± 2.53 | 84.18 ± 5.69 | 88.35 ± 3.17 | 89.14 ± 3.32 |
| 9 | 20/927 | 99.81 ± 0.10 | 99.98 ± 0.05 | 99.10 ± 0.87 | 99.63 ± 0.08 | 98.48 ± 0.42 | 99.01 ± 0.89 | 99.08 ± 0.39 |
| OA (%) | - | 81.42 ± 2.88 | 90.04 ± 1.36 | 75.48 ± 1.54 | 73.97 ± 0.35 | 92.30 ± 1.97 | 91.01 ± 2.53 | 92.99 ± 2.02 |
| AA (%) | - | 86.66 ± 1.06 | 90.68 ± 1.65 | 84.99 ± 1.30 | 83.07 ± 1.25 | 91.88 ± 1.90 | 91.39 ± 1.56 | 92.98 ± 1.04 |
| κ × 100 | - | 76.22 ± 3.36 | 86.95 ± 1.61 | 73.14 ± 3.17 | 67.52 ± 0.09 | 90.01 ± 2.49 | 88.29 ± 2.21 | 90.98 ± 2.94 |
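The OA(%), AA(%) and kappa rows in the results tables follow the usual definitions: overall accuracy (OA) is the fraction of correctly labeled test pixels, average accuracy (AA) is the mean of the per-class accuracies, and Cohen's kappa corrects the observed agreement for the agreement expected by chance, reported here as kappa × 100. The Python sketch below computes all three from a confusion matrix whose rows are the true classes; the 2-class matrix in the example is a made-up toy, not data from the paper, and `accuracy_metrics` is a hypothetical helper name.

```python
from typing import List, Tuple


def accuracy_metrics(confusion: List[List[int]]) -> Tuple[float, float, float]:
    """Return (OA, AA, kappa) for a confusion matrix with true classes as rows."""
    n_classes = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(n_classes))

    # Overall accuracy: share of all test pixels that are labeled correctly.
    oa = correct / total

    # Average accuracy: mean of the per-class accuracies (diagonal / row sum).
    aa = sum(confusion[i][i] / sum(confusion[i]) for i in range(n_classes)) / n_classes

    # Kappa: observed agreement corrected by the chance agreement p_e,
    # which is computed from the row (true) and column (predicted) marginals.
    row_sums = [sum(row) for row in confusion]
    col_sums = [sum(confusion[r][c] for r in range(n_classes)) for c in range(n_classes)]
    p_e = sum(r * c for r, c in zip(row_sums, col_sums)) / total ** 2
    kappa = (oa - p_e) / (1 - p_e)
    return oa, aa, kappa


if __name__ == "__main__":
    toy = [[50, 10],   # hypothetical counts for true class 0
           [5, 35]]    # hypothetical counts for true class 1
    oa, aa, kappa = accuracy_metrics(toy)
    print(f"OA = {100 * oa:.2f}%, AA = {100 * aa:.2f}%, kappa x 100 = {100 * kappa:.2f}")
    # OA = 85.00%, AA = 85.42%, kappa x 100 = 69.39
```

Because AA weights every class equally, it is more sensitive than OA to small classes, which is why the two can rank methods differently in the tables.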

| Class | Samples (Train/Test) | CCF | SVM-3DG | 3D-CNN | SSRN | MSDN | MSDN-SA |
|---|---|---|---|---|---|---|---|
| 1 | 20/1231 | 74.76 ± 4.58 | 77.01 ± 6.68 | 89.65 ± 4.98 | 71.04 ± 4.11 | 83.50 ± 3.79 | 85.04 ± 3.72 |
| 2 | 20/1234 | 69.30 ± 5.07 | 78.53 ± 1.76 | 65.24 ± 0.79 | 87.09 ± 2.05 | 86.76 ± 4.75 | 88.11 ± 0.80 |
| 3 | 20/677 | 79.07 ± 6.31 | 87.99 ± 12.26 | 89.96 ± 4.59 | 98.81 ± 0.95 | 93.69 ± 4.29 | 95.80 ± 4.17 |
| 4 | 20/1224 | 62.01 ± 3.35 | 69.91 ± 4.29 | 62.14 ± 2.26 | 78.88 ± 5.74 | 87.61 ± 3.19 | 88.50 ± 0.03 |
| 5 | 20/1222 | 90.39 ± 1.87 | 93.64 ± 2.72 | 92.76 ± 2.49 | 94.36 ± 1.67 | 90.65 ± 0.96 | 92.93 ± 1.72 |
| 6 | 20/305 | 66.02 ± 5.96 | 78.69 ± 6.63 | 59.51 ± 8.58 | 87.52 ± 9.09 | 76.85 ± 4.29 | 69.87 ± 1.59 |
| 7 | 20/1248 | 38.69 ± 5.96 | 84.43 ± 3.09 | 48.48 ± 7.14 | 79.42 ± 3.97 | 80.41 ± 4.73 | 89.30 ± 4.52 |
| 8 | 20/1224 | 56.95 ± 5.78 | 61.41 ± 4.89 | 51.96 ± 5.96 | 96.35 ± 4.70 | 90.08 ± 5.57 | 94.16 ± 3.10 |
| 9 | 20/1232 | 47.95 ± 5.13 | 68.78 ± 3.79 | 74.51 ± 1.03 | 72.26 ± 3.35 | 70.73 ± 4.28 | 81.96 ± 4.52 |
| 10 | 20/1207 | 71.48 ± 6.99 | 72.74 ± 7.21 | 50.04 ± 6.21 | 83.70 ± 4.33 | 80.31 ± 5.33 | 88.10 ± 7.87 |
| 11 | 20/1215 | 54.93 ± 6.69 | 65.27 ± 6.33 | 39.88 ± 6.58 | 92.94 ± 5.22 | 82.22 ± 5.43 | 89.54 ± 3.45 |
| 12 | 20/1213 | 71.27 ± 7.69 | 77.96 ± 6.68 | 67.07 ± 6.00 | 77.40 ± 3.53 | 80.77 ± 6.14 | 88.43 ± 0.44 |
| 13 | 20/449 | 60.51 ± 4.45 | 87.97 ± 6.39 | 45.77 ± 8.66 | 79.84 ± 12.49 | 78.43 ± 3.90 | 88.69 ± 3.53 |
| 14 | 20/408 | 84.39 ± 2.35 | 94.28 ± 6.03 | 84.19 ± 0.17 | 90.23 ± 1.80 | 90.92 ± 3.08 | 91.92 ± 0.95 |
| 15 | 20/640 | 67.34 ± 7.59 | 88.33 ± 4.42 | 84.93 ± 2.76 | 89.47 ± 4.83 | 92.39 ± 4.99 | 92.83 ± 3.45 |
| OA (%) | - | 65.09 ± 1.60 | 77.17 ± 0.76 | 66.17 ± 0.99 | 83.21 ± 0.98 | 84.69 ± 0.80 | 88.32 ± 0.34 |
| AA (%) | - | 66.34 ± 1.42 | 79.13 ± 1.09 | 67.07 ± 1.62 | 85.29 ± 1.33 | 84.35 ± 0.79 | 88.34 ± 0.28 |
| κ × 100 | - | 62.31 ± 1.71 | 75.32 ± 0.84 | 63.44 ± 1.05 | 81.85 ± 1.05 | 83.13 ± 0.86 | 87.37 ± 0.37 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Fang, B.; Li, Y.; Zhang, H.; Chan, J.C.-W. Hyperspectral Images Classification Based on Dense Convolutional Networks with Spectral-Wise Attention Mechanism. Remote Sens. 2019, 11, 159. https://doi.org/10.3390/rs11020159
