True-Color Three-Dimensional Imaging and Target Classification Based on Hyperspectral LiDAR

Abstract

1. Introduction

2. System Description and Experimental Design

2.1. System Description

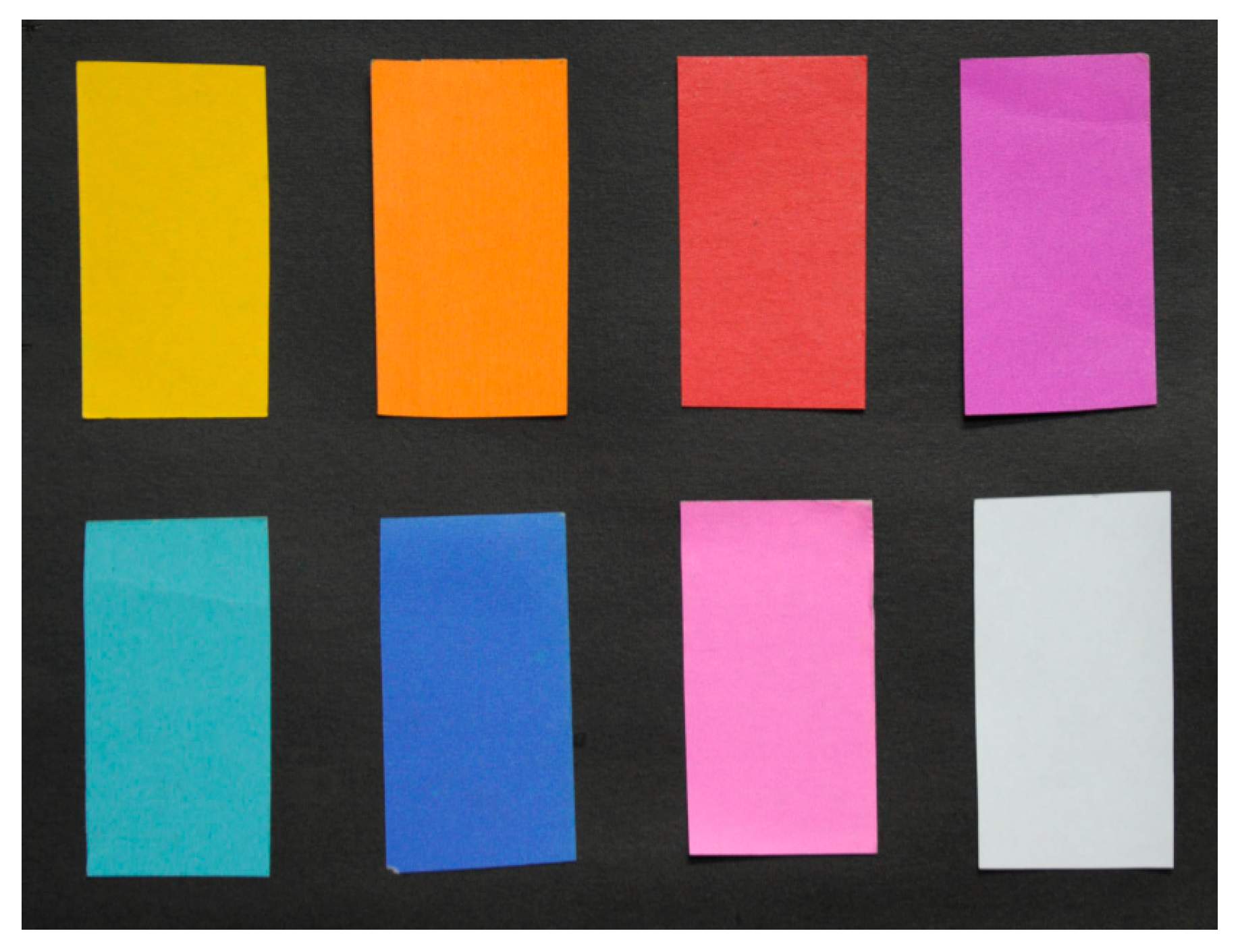

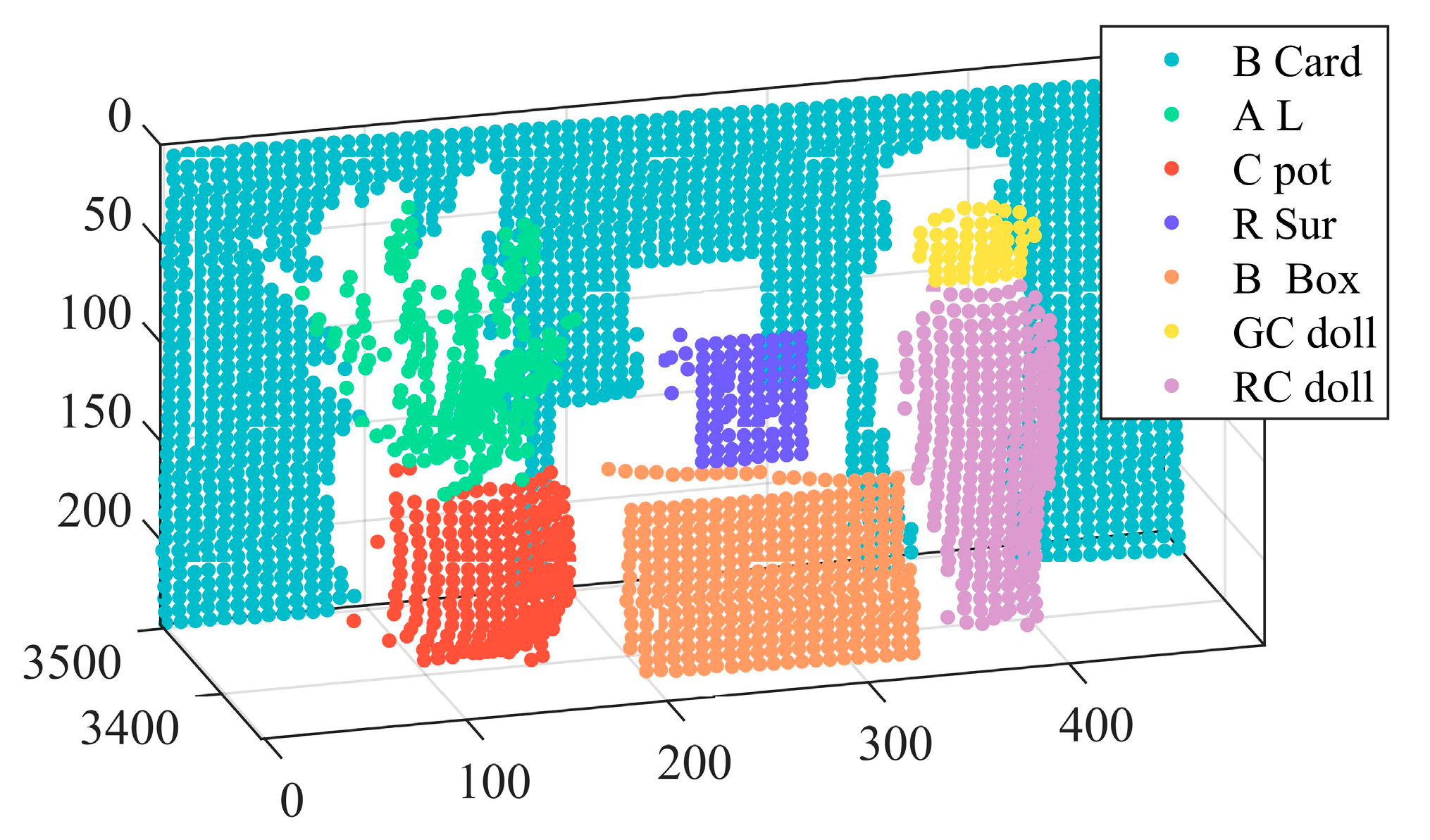

2.2. Experimental Design

3. Methods

3.1. Data Preprocessing

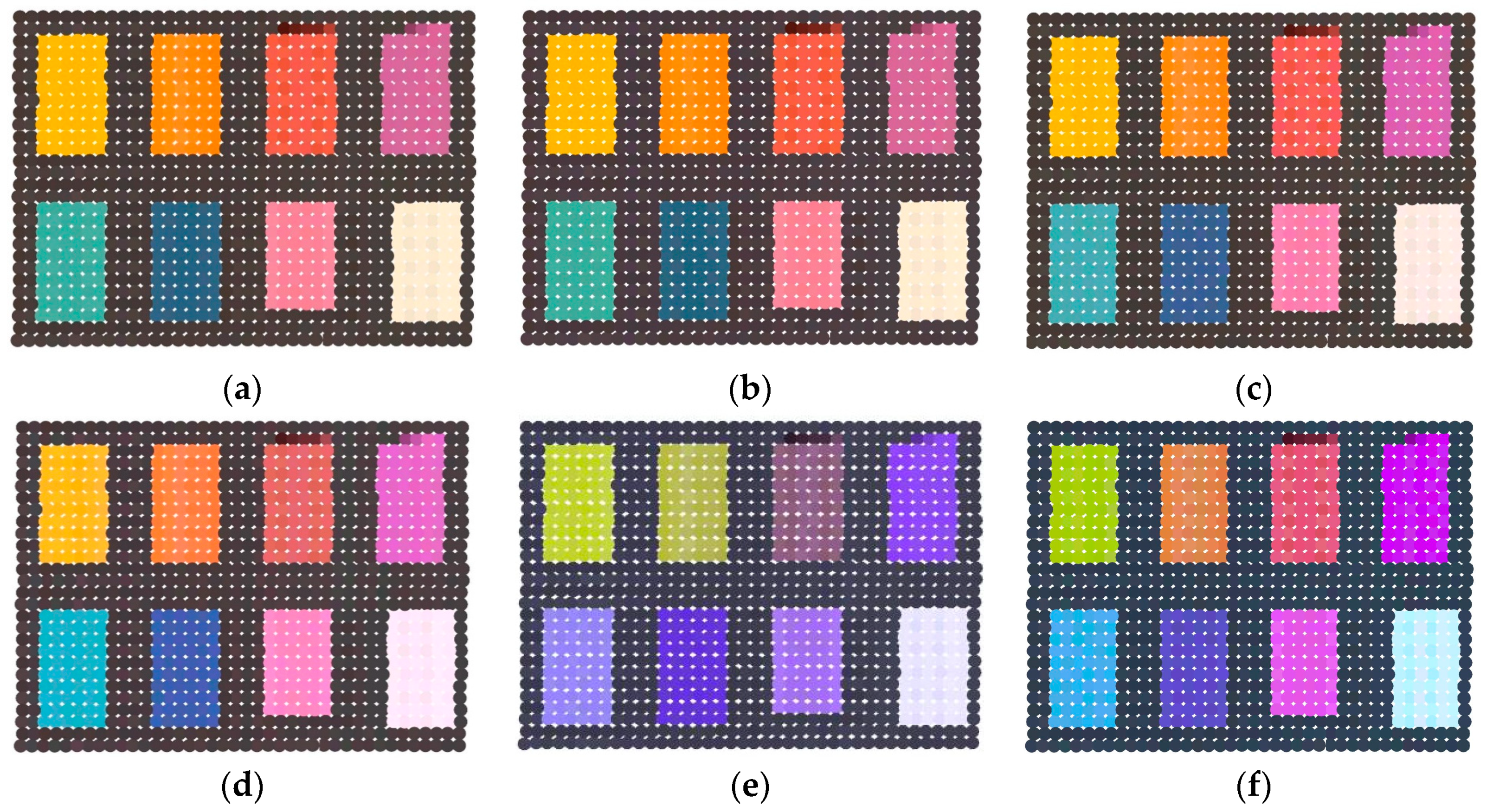

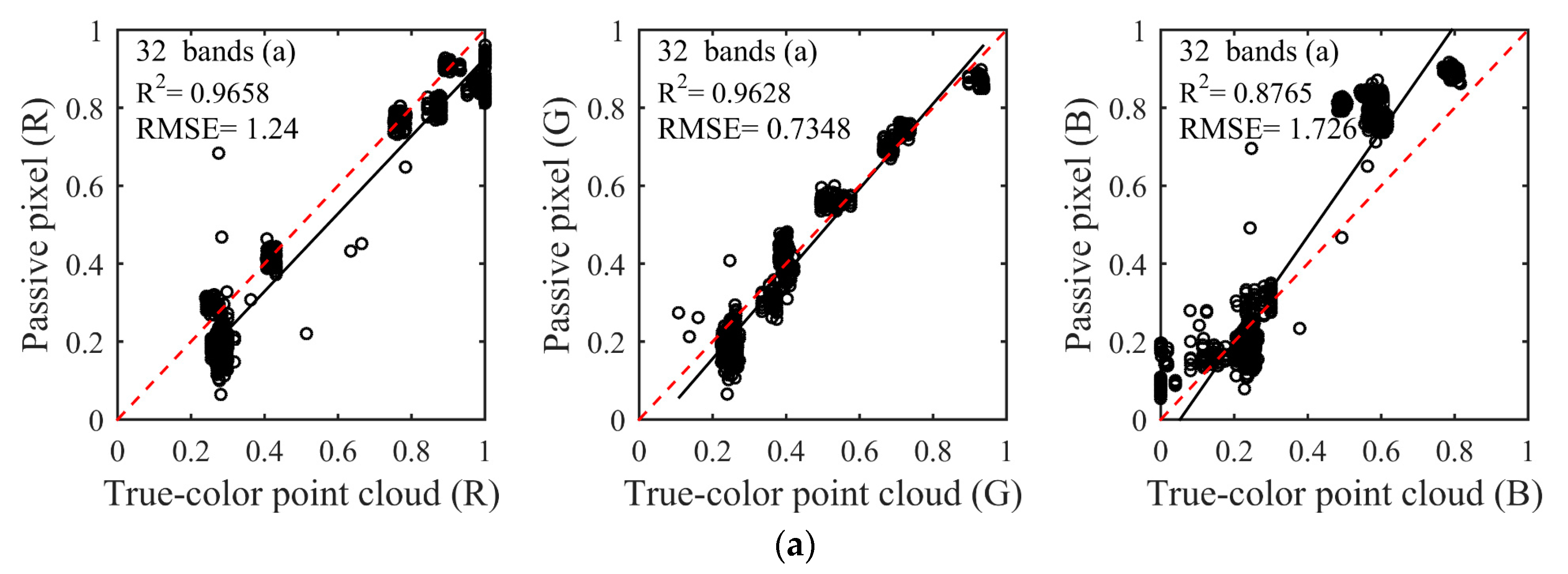

3.2. True-Color Composition

3.3. Wavelength Selection

3.4. Target Classification

4. Results and Discussion

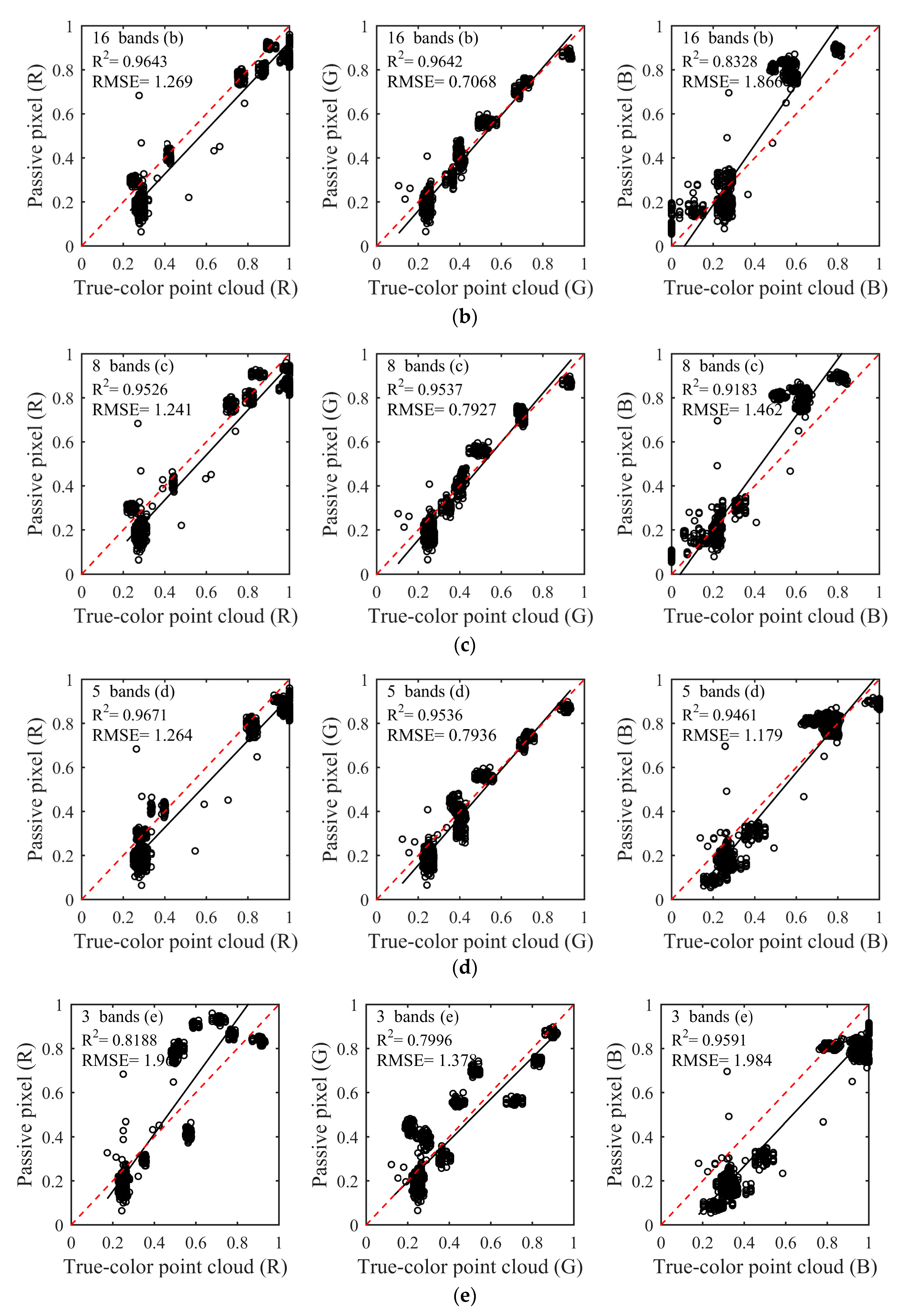

4.1. True-Color Composition

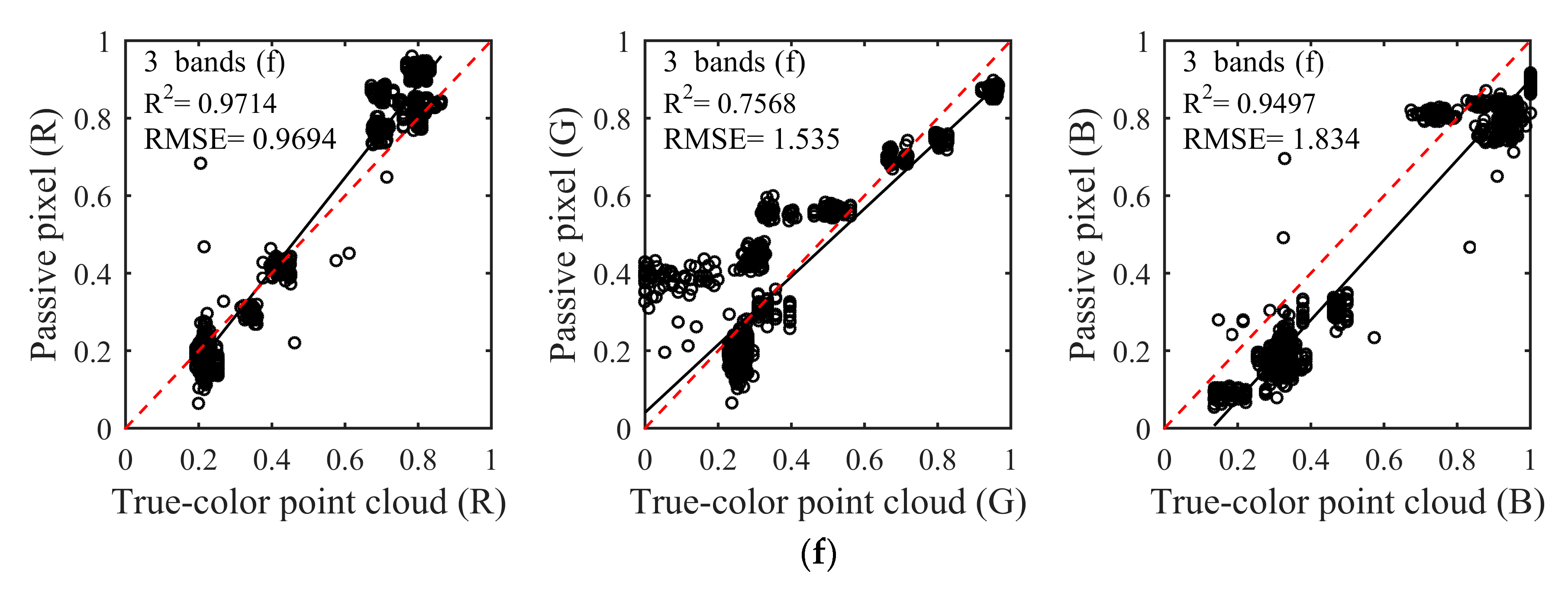

4.2. Wavelength Selection

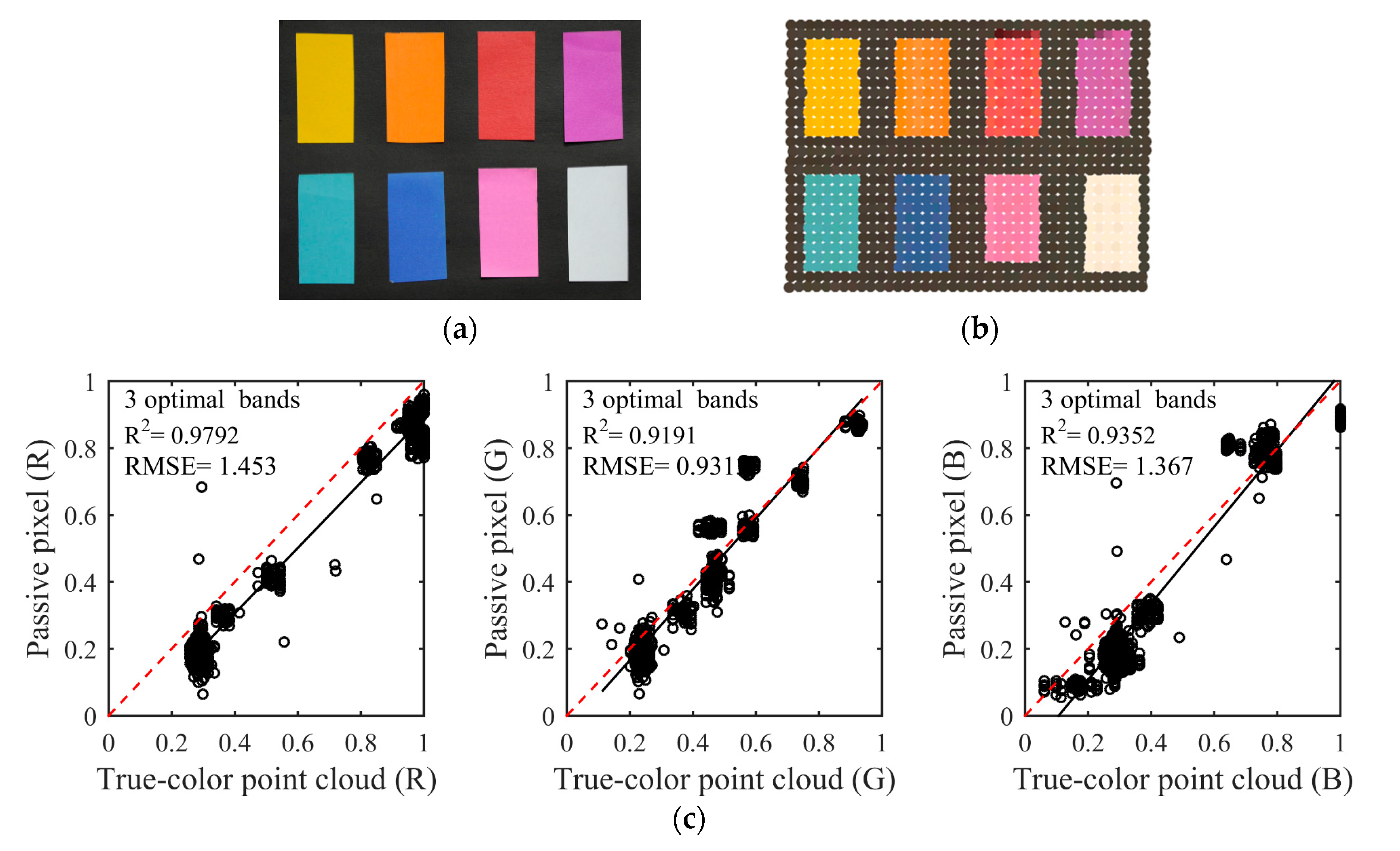

4.3. RGB-Based Target Classification

4.4. Inadequacies of the Proposed Method

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Scheme | Number of Bands | Band Serial Numbers | Central Wavelengths (nm) |
|---|---|---|---|
| a | 32 | 1, 2, 3, 4, …, 29, 30, 31, 32 | 436, 446, 456, 466, …, 716, 726, 736, 746 |
| b | 16 | 1, 3, 5, …, 27, 29, 31 | 436, 456, 476, …, 696, 716, 736 |
| c | 8 | 2, 6, 10, …, 22, 26, 30 | 446, 486, 526, …, 646, 686, 726 |
| d | 5 | 3, 10, 17, 24, 31 | 456, 526, 596, 666, 736 |
| e | 3 (1) | 3, 14, 25 | 456, 566, 676 |
| f | 3 (2) | 2, 12, 22 | 446, 546, 646 |
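The serial numbers and central wavelengths listed in the table above are consistent with a regular 10 nm spacing that starts at 436 nm for serial number 1. The sketch below encodes that inferred relationship and checks it against the fully listed schemes d, e, and f. The function name `central_wavelength_nm` and the constants are hypothetical and introduced only for illustration; the 10 nm rule is read off the table values, not taken from a stated system specification.

```python
# Assumption: the mapping is inferred from the table (436 nm at serial 1,
# 746 nm at serial 32), not from a documented instrument specification.
FIRST_WAVELENGTH_NM = 436
STEP_NM = 10
NUM_CHANNELS = 32


def central_wavelength_nm(serial):
    """Return the central wavelength (nm) implied by a 1-based serial number."""
    if not 1 <= serial <= NUM_CHANNELS:
        raise ValueError("serial number must be between 1 and 32")
    return FIRST_WAVELENGTH_NM + STEP_NM * (serial - 1)


# Only the schemes whose serial numbers are listed in full in the table.
SCHEMES = {
    "d": [3, 10, 17, 24, 31],
    "e": [3, 14, 25],
    "f": [2, 12, 22],
}

for name, serials in SCHEMES.items():
    wavelengths = [central_wavelength_nm(s) for s in serials]
    print(name, wavelengths)
```

Schemes a, b, and c are left out because their rows are partly elided in the table.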

| PCA Component | PCA1 | PCA2 | PCA3 |
|---|---|---|---|
| Spectral band with largest contribution | 626 nm (0.2653) | 466 nm (0.2896) | 546 nm (0.3507) |

| Band Combination (nm) | Result | Rank | Band Combination (nm) | Result | Rank |
|---|---|---|---|---|---|
| 466, 536, 626 | 0.6151 | 1 | 476, 536, 636 | 0.6435 | 6 |
| 466, 546, 626 | 0.6177 | 2 | 476, 546, 626 | 0.6437 | 7 |
| 466, 546, 636 | 0.6325 | 3 | 476, 546, 636 | 0.6441 | 8 |
| 466, 516, 636 | 0.6353 | 4 | 466, 516, 626 | 0.6463 | 9 |
| 476, 536, 626 | 0.6405 | 5 | 466, 536, 636 | 0.6479 | 10 |

Rows give the reference class; columns give the predicted class.

| Features | Class | B Card | A L | C Pot | R Sur | B Box | GC Doll | RC Doll | Producer Accuracy |
|---|---|---|---|---|---|---|---|---|---|
| Three spectral reflectance | B Card | 1650 | 0 | 0 | 0 | 0 | 0 | 0 | 1 |
| | A L | 225 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | C Pot | 53 | 0 | 100 | 0 | 45 | 0 | 0 | 0.5051 |
| | R Sur | 29 | 0 | 20 | 0 | 45 | 0 | 0 | 0 |
| | B Box | 66 | 0 | 0 | 0 | 260 | 0 | 0 | 0.7975 |
| | GC Doll | 48 | 0 | 1 | 0 | 1 | 0 | 0 | 0 |
| | RC Doll | 89 | 0 | 0 | 0 | 19 | 0 | 149 | 0.5798 |
| | User accuracy | 0.7639 | 0 | 0.8264 | 0 | 0.7027 | 0 | 1 | 0.7711 |
| | Overall accuracy (%) | 77.11 | | | | | | | |
| RGB | B Card | 1645 | 5 | 0 | 0 | 0 | 0 | 0 | 0.9970 |
| | A L | 50 | 170 | 0 | 0 | 5 | 0 | 0 | 0.7556 |
| | C Pot | 1 | 36 | 151 | 0 | 10 | 0 | 0 | 0.7626 |
| | R Sur | 0 | 1 | 0 | 82 | 1 | 0 | 10 | 0.8723 |
| | B Box | 18 | 38 | 0 | 0 | 270 | 0 | 0 | 0.8282 |
| | GC Doll | 4 | 17 | 1 | 0 | 0 | 28 | 0 | 0.5600 |
| | RC Doll | 9 | 17 | 3 | 2 | 3 | 1 | 222 | 0.8638 |
| | User accuracy | 0.9525 | 0.5986 | 0.9742 | 0.9762 | 0.9343 | 0.9655 | 0.9569 | 0.9171 |
| | Overall accuracy (%) | 91.71 | | | | | | | |
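The producer, user, and overall accuracies reported in the confusion matrix above follow from simple row, column, and diagonal sums. The following is a minimal sketch of that arithmetic using the RGB block of the table. It assumes rows are reference classes and columns are predicted classes, which is consistent with the producer accuracy being listed per row. The function name `accuracy_metrics` is hypothetical, and reporting 0 for an empty row or column is an assumption chosen to match how the table reports classes that were never predicted.

```python
# Confusion matrix for the RGB feature set, copied from the table above.
# Assumption: rows are reference classes, columns are predicted classes.
CLASSES = ["B Card", "A L", "C Pot", "R Sur", "B Box", "GC Doll", "RC Doll"]
RGB_MATRIX = [
    [1645, 5, 0, 0, 0, 0, 0],
    [50, 170, 0, 0, 5, 0, 0],
    [1, 36, 151, 0, 10, 0, 0],
    [0, 1, 0, 82, 1, 0, 10],
    [18, 38, 0, 0, 270, 0, 0],
    [4, 17, 1, 0, 0, 28, 0],
    [9, 17, 3, 2, 3, 1, 222],
]


def accuracy_metrics(matrix):
    """Return (producer, user, overall) accuracies for a square confusion matrix."""
    n = len(matrix)
    row_sums = [sum(row) for row in matrix]
    col_sums = [sum(matrix[i][j] for i in range(n)) for j in range(n)]
    diagonal = [matrix[i][i] for i in range(n)]
    # Producer accuracy: correct samples divided by reference (row) totals.
    producer = [d / s if s else 0.0 for d, s in zip(diagonal, row_sums)]
    # User accuracy: correct samples divided by predicted (column) totals.
    user = [d / s if s else 0.0 for d, s in zip(diagonal, col_sums)]
    # Overall accuracy: all correct samples divided by all samples.
    overall = sum(diagonal) / sum(row_sums)
    return producer, user, overall


producer, user, overall = accuracy_metrics(RGB_MATRIX)
for name, p, u in zip(CLASSES, producer, user):
    print(f"{name:8s} producer={p:.4f} user={u:.4f}")
print(f"overall accuracy = {overall:.2%}")
```

Running the same function on the three-band block gives a quick consistency check on the reported per-class values.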

Rows give the reference class; columns give the predicted class.

| Features | Class | B Card | A L | C Pot | R Sur | B Box | GC Doll | RC Doll | Producer Accuracy |
|---|---|---|---|---|---|---|---|---|---|
| Three spectral reflectance | B Card | 1632 | 15 | 0 | 0 | 1 | 0 | 2 | 0.9891 |
| | A L | 11 | 152 | 0 | 0 | 1 | 0 | 61 | 0.6756 |
| | C Pot | 1 | 13 | 114 | 0 | 48 | 0 | 22 | 0.5758 |
| | R Sur | 4 | 14 | 30 | 0 | 38 | 0 | 8 | 0 |
| | B Box | 10 | 39 | 18 | 0 | 249 | 0 | 10 | 0.7638 |
| | GC Doll | 2 | 7 | 1 | 0 | 2 | 19 | 19 | 0.38 |
| | RC Doll | 10 | 97 | 0 | 0 | 18 | 0 | 132 | 0.5136 |
| | User accuracy | 0.9772 | 0.451 | 0.6994 | 0 | 0.6975 | 1 | 0.5197 | 0.8207 |
| | Overall accuracy (%) | 82.07 | | | | | | | |
| RGB | B Card | 1622 | 16 | 0 | 0 | 1 | 11 | 0 | 0.983 |
| | A L | 15 | 195 | 0 | 0 | 15 | 0 | 0 | 0.8667 |
| | C Pot | 1 | 18 | 125 | 0 | 50 | 0 | 4 | 0.6313 |
| | R Sur | 0 | 0 | 0 | 78 | 3 | 0 | 13 | 0.8298 |
| | B Box | 10 | 39 | 12 | 0 | 257 | 0 | 8 | 0.7883 |
| | GC Doll | 2 | 9 | 1 | 0 | 2 | 36 | 0 | 0.72 |
| | RC Doll | 4 | 19 | 0 | 10 | 7 | 1 | 216 | 0.8405 |
| | User accuracy | 0.9807 | 0.6588 | 0.9058 | 0.8864 | 0.7672 | 0.7500 | 0.8963 | 0.9032 |
| | Overall accuracy (%) | 90.32 | | | | | | | |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Chen, B.; Shi, S.; Gong, W.; Sun, J.; Chen, B.; Du, L.; Yang, J.; Guo, K.; Zhao, X. True-Color Three-Dimensional Imaging and Target Classification Based on Hyperspectral LiDAR. Remote Sens. 2019, 11, 1541. https://doi.org/10.3390/rs11131541
