Extraction of Visible Boundaries for Cadastral Mapping Based on UAV Imagery

Abstract

1. Introduction

1.1. Visible Boundary Detection and Extraction for Cadastral Mapping

1.2. Objective of the Study

2. Materials and Methods

2.1. UAV Data

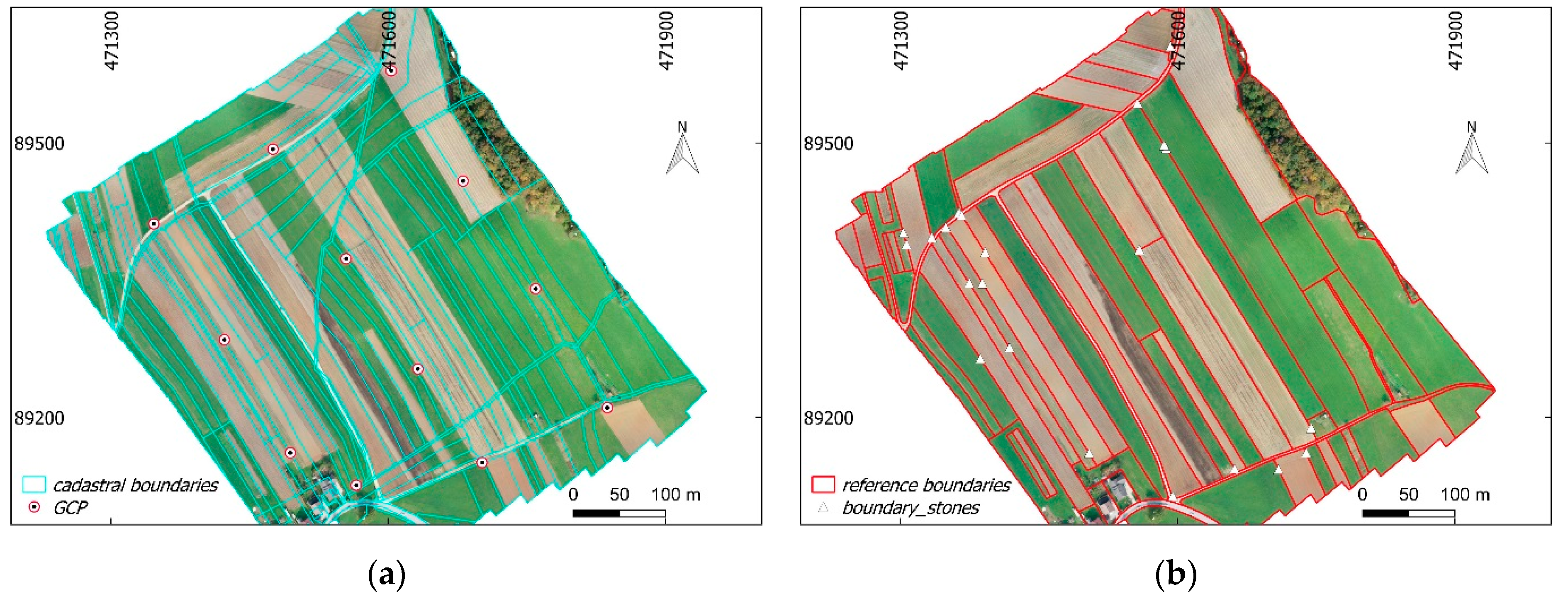

2.2. Reference data

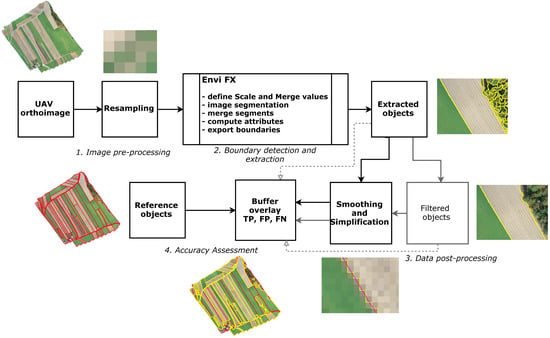

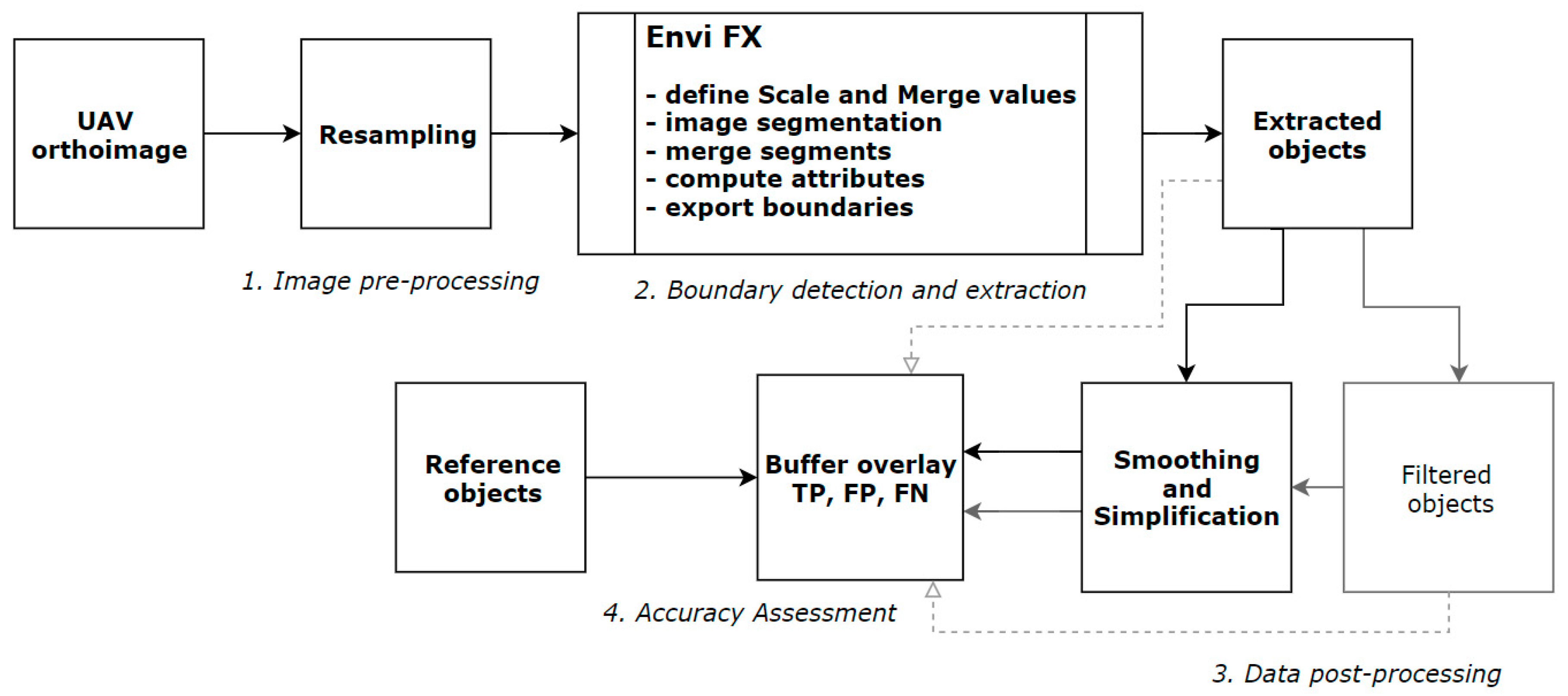

2.3. Visible Boundary Delineation Method and Workflow

2.3.1. ENVI Feature Extraction (FX)

2.3.2. Visible Boundary Delineation Workflow

- Image pre-processing: The first step was resampling the UAV orthoimage from its original 2 cm ground sample distance (GSD) to coarser GSDs of 25 cm, 50 cm, and 100 cm. These GSDs were selected to identify the impact of the GSD on the results of automatic boundary extraction. The pixel average method was used for resampling because it produces a smoother image. Other resampling methods (nearest neighbor and bilinear) were also tested and did not produce significant differences in the number of automatically extracted object boundaries at higher scale and merge levels of the ENVI FX algorithm. Resampling was also applied in [26] to make the investigated method transferable to a UAV orthoimage for cadastral mapping purposes. In addition, extracting objects from a UAV orthoimage of lower spatial resolution is computationally less expensive.
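The text does not state how the pixel average method is implemented. As an illustration only, the sketch below assumes that pixel averaging means area-weighted averaging: each output pixel is the mean of the input pixels it covers, weighted by the fraction of each input pixel that falls inside it. This also handles non-integer factors such as 2 cm to 25 cm (12.5 input pixels per output pixel). The function names are hypothetical, and the code is plain Python for readability rather than speed.

```python
from typing import List, Tuple


def _area_weights(n_in: int, n_out: int) -> List[List[Tuple[int, float]]]:
    """For each output cell, list (input index, weight) pairs, where the weight
    is the share of the output cell covered by that input cell."""
    scale = n_in / n_out  # input pixels per output pixel, e.g. 25 cm / 2 cm = 12.5
    weights = []
    for o in range(n_out):
        start, end = o * scale, (o + 1) * scale
        row = []
        i = int(start)
        while i < n_in and i < end:
            overlap = min(end, i + 1) - max(start, i)
            if overlap > 0:
                row.append((i, overlap / scale))
            i += 1
        weights.append(row)
    return weights


def pixel_average(image: List[List[float]], out_rows: int, out_cols: int) -> List[List[float]]:
    """Down-sample a 2-D grid (list of rows) by area-weighted averaging."""
    rw = _area_weights(len(image), out_rows)
    cw = _area_weights(len(image[0]), out_cols)
    out = []
    for r in range(out_rows):
        out_row = []
        for c in range(out_cols):
            total = 0.0
            for i, wi in rw[r]:
                for j, wj in cw[c]:
                    total += image[i][j] * wi * wj  # weights of one output cell sum to 1
            out_row.append(total)
        out.append(out_row)
    return out


tile = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
print(pixel_average(tile, 2, 2))  # [[3.5, 5.5], [11.5, 13.5]]
```

For an integer factor the weights reduce to a plain block mean; for real orthoimages a raster library would normally be used instead of nested lists.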

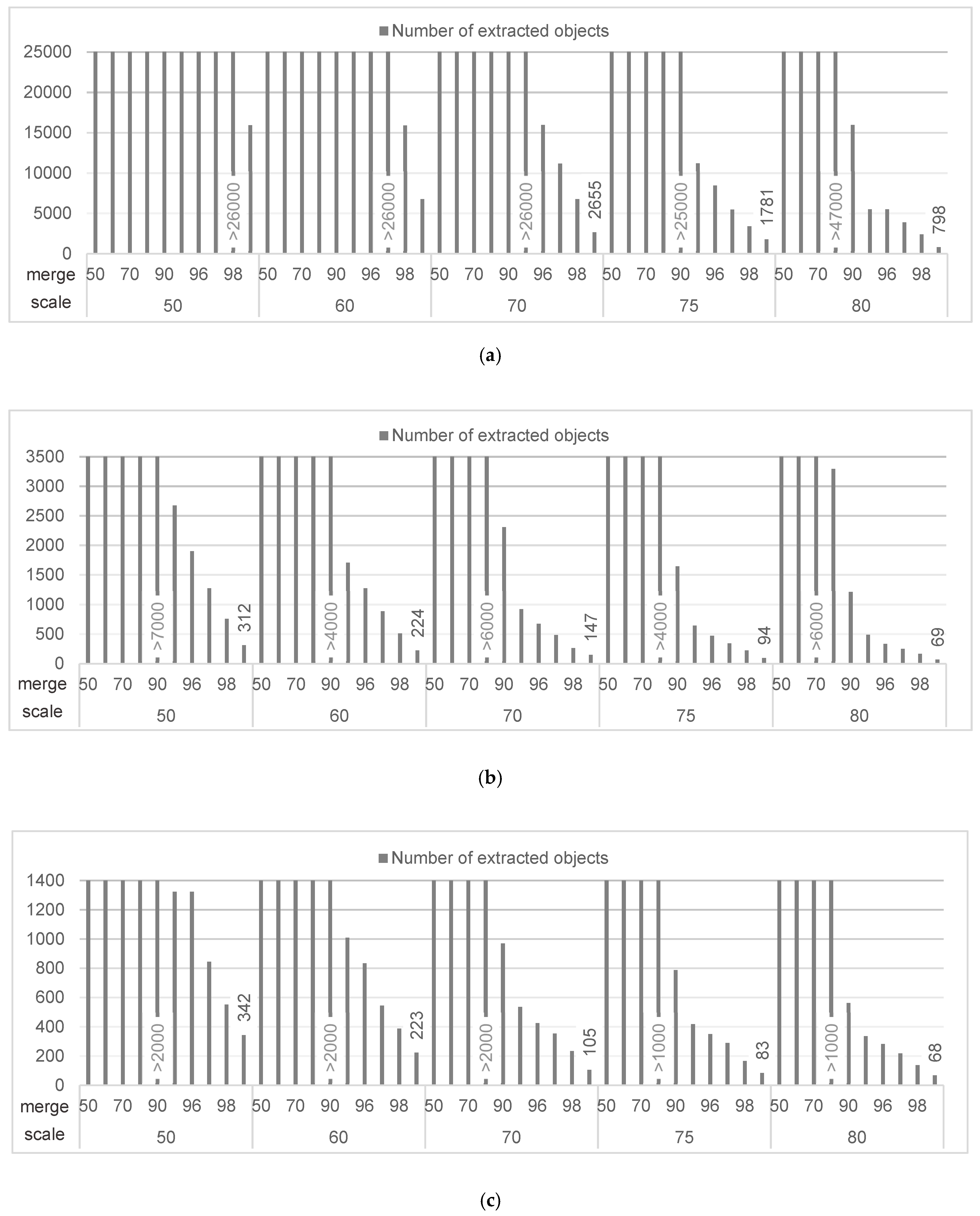

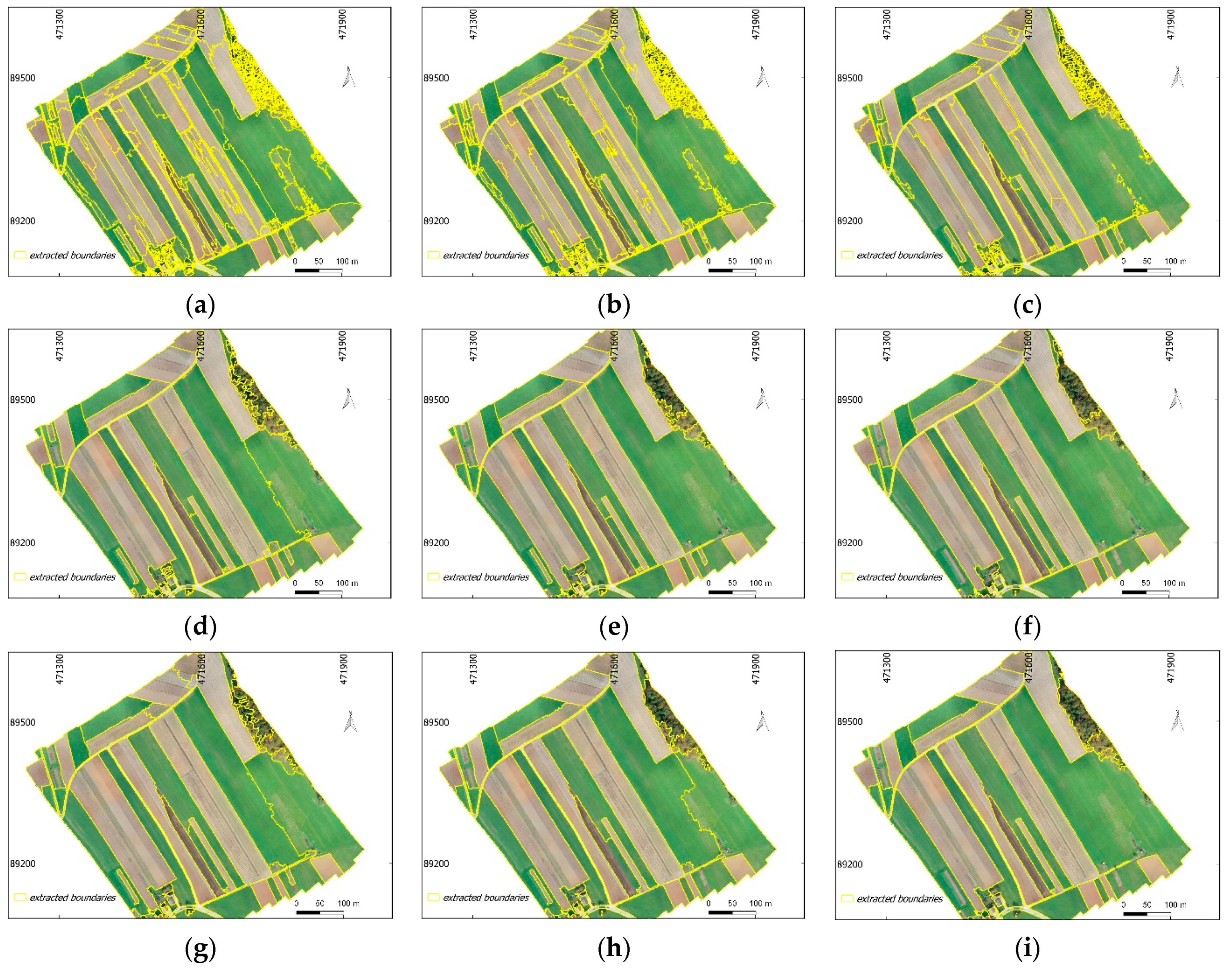

- Boundary detection and extraction: The ENVI FX module was applied to each down-sampled UAV orthoimage. The detection and extraction of visible boundaries were controlled by the ENVI FX scale and merge level values. The texture kernel size was left at its default value of 3; additional extractions at the highest texture kernel size showed no differences in the number or location of the extracted objects. Scale level values ranged from 50 to 80 and merge level values from 50 to 99. The initial increment for both scale and merge levels was 10; where a jump in the total number of extracted objects was detected, the increment was reduced for both levels. To identify the optimal scale and merge values for detecting and extracting visible objects for cadastral mapping, all combinations of the sampled scale and merge values were tested. For each extraction, the total number of extracted objects and the processing time were recorded. This resulted in 50 boundary maps for each resampled UAV orthoimage. Each boundary map consisted of extracted objects (polygons) in digital vector format.
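The parameter sweep above can be sketched as follows. `run_fx` is a hypothetical callable standing in for one ENVI FX extraction at a given scale and merge level and returning the number of extracted objects; no ENVI API is implied. The refinement rule (insert a midpoint merge level wherever the object count between neighboring levels jumps by more than `jump_threshold`) is an assumed interpretation of reducing the increment, and for brevity only the merge step is refined, whereas the study reduced the increment for both levels.

```python
import time
from typing import Callable, Dict, Iterable, List, Tuple


def sweep_scale_merge(
    run_fx: Callable[[int, int], int],  # hypothetical wrapper around one extraction
    scales: Iterable[int],
    merges: Iterable[int],
    jump_threshold: int,
    min_step: int = 1,
) -> Dict[Tuple[int, int], Tuple[int, float]]:
    """Record (object count, seconds) for each tested (scale, merge) pair.
    Between two merge levels whose counts differ by more than jump_threshold,
    a midpoint level is inserted until the gap is at most min_step."""
    if min_step < 1:
        raise ValueError("min_step must be >= 1 to guarantee termination")
    records: Dict[Tuple[int, int], Tuple[int, float]] = {}

    def count(scale: int, merge: int) -> int:
        if (scale, merge) not in records:
            t0 = time.perf_counter()
            n_objects = run_fx(scale, merge)
            records[(scale, merge)] = (n_objects, time.perf_counter() - t0)
        return records[(scale, merge)][0]

    merge_levels = list(merges)
    for scale in scales:
        levels: List[int] = list(merge_levels)
        count(scale, levels[0])
        i = 0
        while i < len(levels) - 1:
            lo, hi = levels[i], levels[i + 1]
            jump = abs(count(scale, lo) - count(scale, hi))
            if jump > jump_threshold and hi - lo > min_step:
                levels.insert(i + 1, (lo + hi) // 2)  # refine between lo and hi
            else:
                i += 1
    return records


# Toy stand-in for an extraction: the object count drops sharply at merge level 75.
fake_fx = lambda scale, merge: 100 if merge < 75 else 10
results = sweep_scale_merge(fake_fx, [50, 60], [50, 60, 70, 80, 90, 99], jump_threshold=20)
# For each scale, merge levels 72, 73, 74 and 75 are added around the jump.
```

Caching results in `records` means each combination is run once even though neighboring pairs are compared repeatedly.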

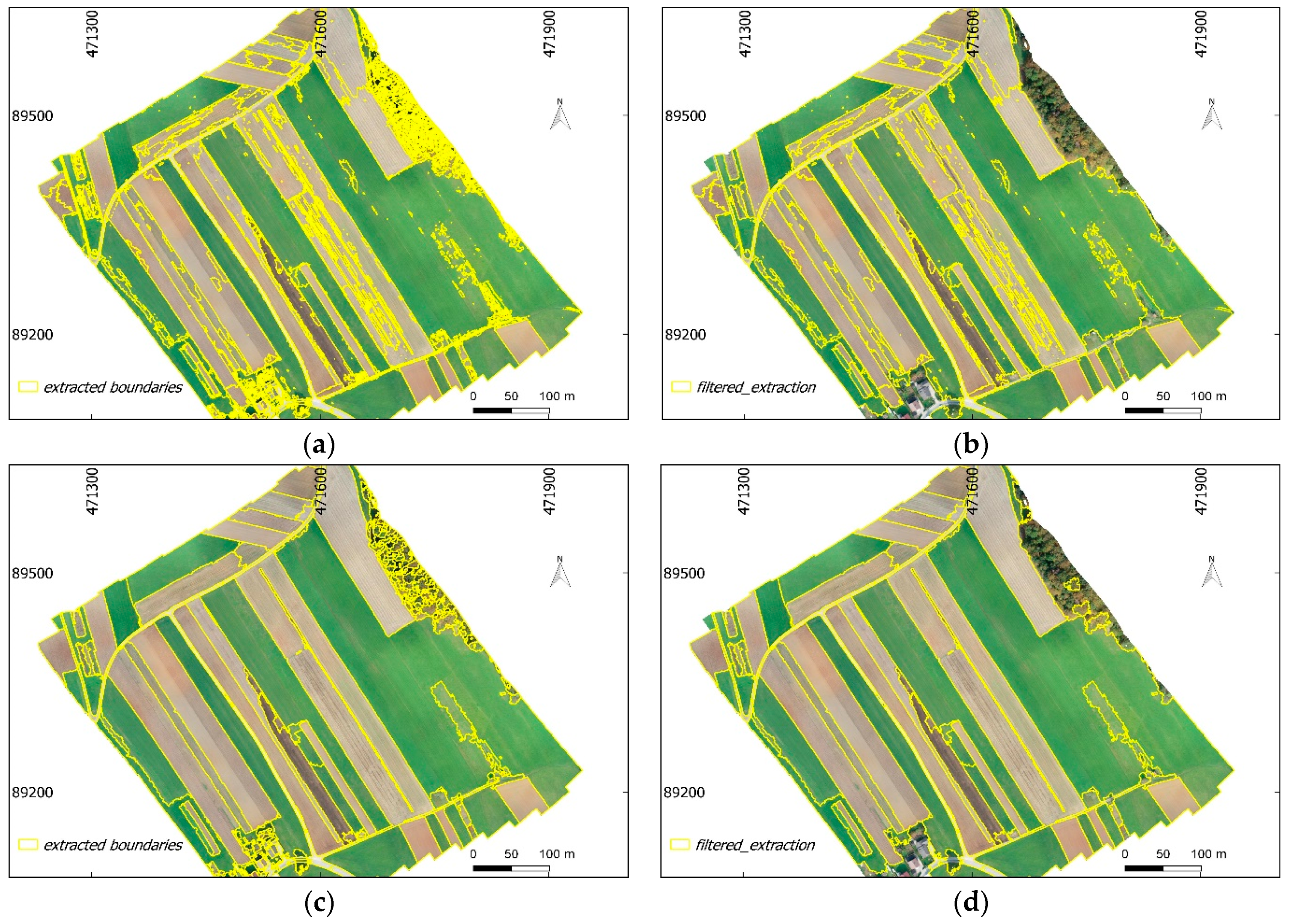

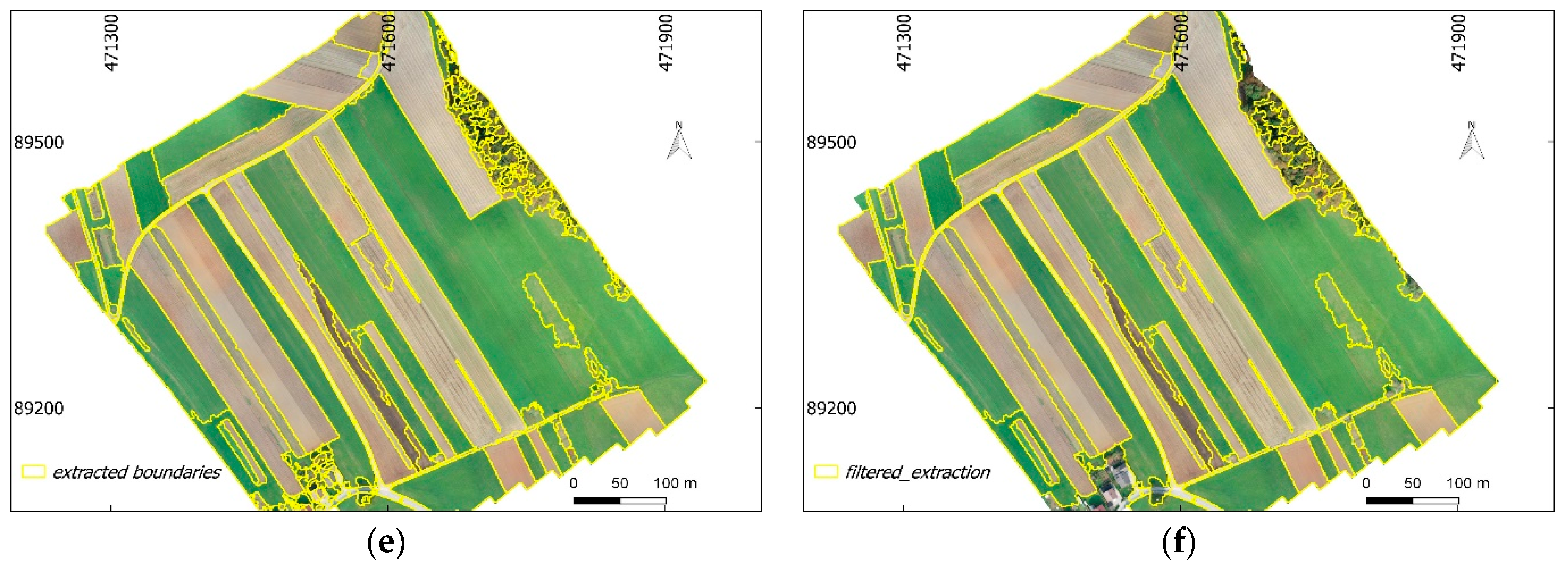

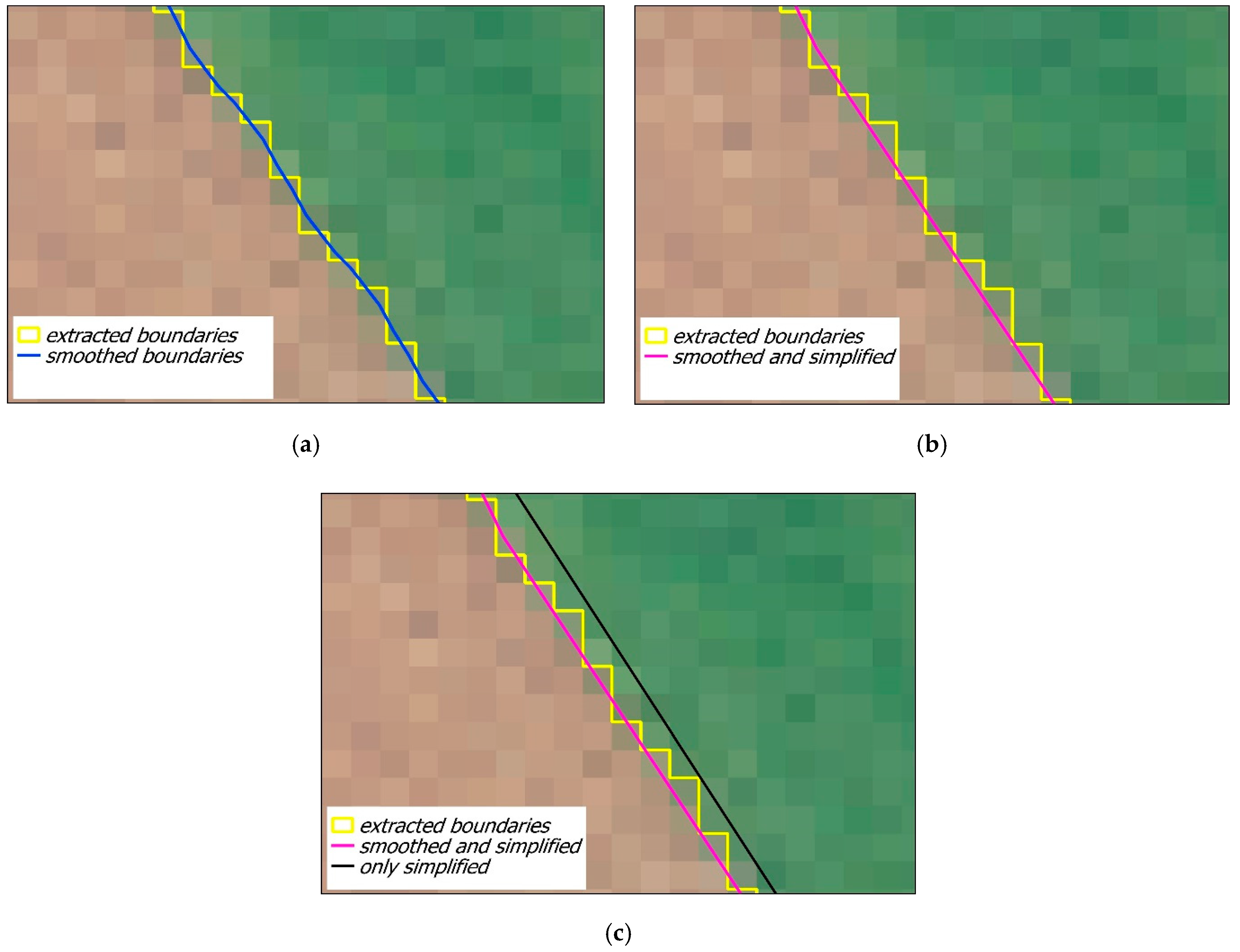

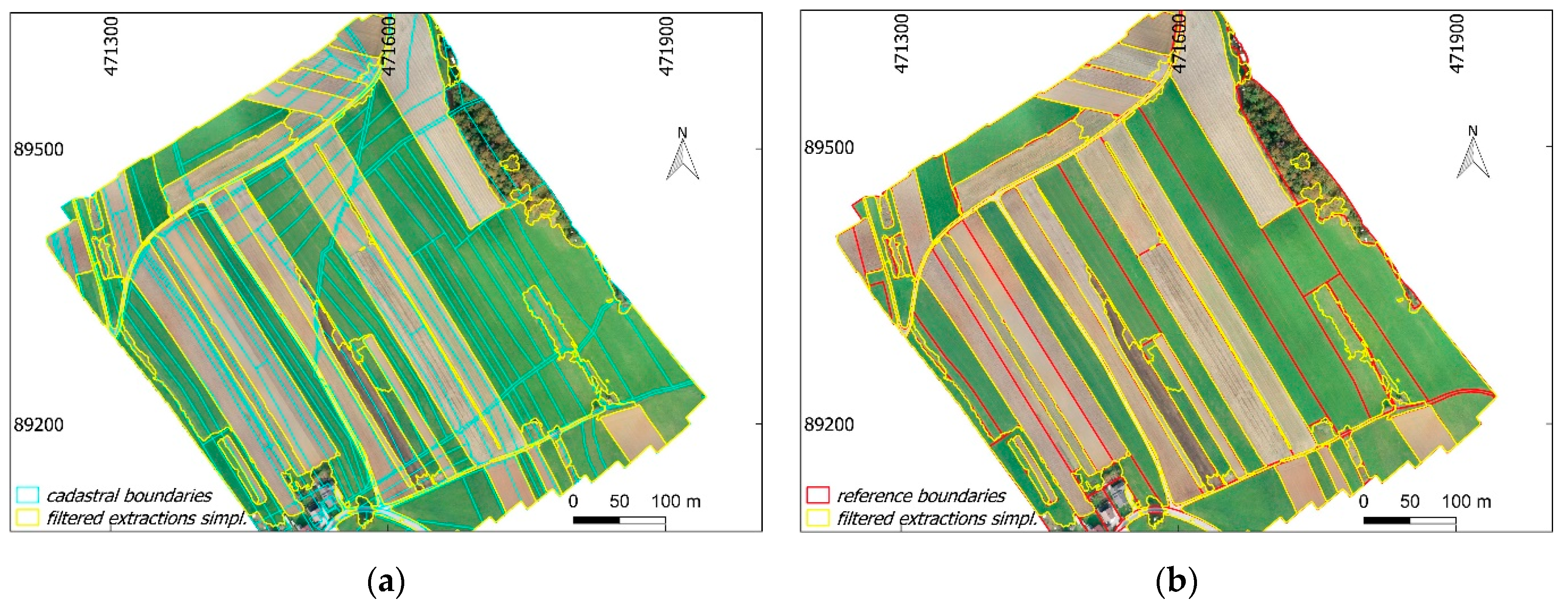

- Data post-processing: This step consisted of (i) filtering and (ii) simplifying the extracted objects. (i) The minimum object area and the total number of objects in the reference data (Figure 1b) were used to determine the optimal scale and merge levels. The minimum reference object area was 204 m², and the reference data contained 68 objects. All extracted objects smaller than the minimum reference object area were filtered out (removed). The number of remaining objects was then compared with the number of reference objects using a tolerance of ±10 objects: the parameters that produced object counts closest to the reference count, i.e., within the defined tolerance, were deemed optimal. The boundary maps from which the smaller objects had been removed were labeled filtered objects. Because the objects are polygons, and a boundary between adjacent objects belongs to both, the filtered output contained holes; these were mostly located in forested areas or at individual trees, which are of less relevance for cadastral applications. (ii) The extracted and filtered object boundaries were smoothed and simplified so that they could be used to interpret possession boundaries in support of cadastral mapping (in this case, land plot restructuring, as the situation requires a new cadastral survey or land consolidation). The boundaries were smoothed with the Snakes algorithm [49], and the Douglas–Peucker algorithm was then applied to the smoothed boundaries to simplify them further [22,49]. Objects that were both smoothed and simplified were labeled simplified extracted/filtered objects.
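A minimal sketch of filtering step (i), assuming each extracted object is given as a single exterior ring of projected coordinates in metres (holes are ignored when computing area). The 204 m² minimum area, the 68 reference objects, and the ±10 tolerance come from the text; the function names and the object counts in the usage example are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]
Ring = Sequence[Point]  # exterior ring, projected coordinates in metres


def polygon_area(ring: Ring) -> float:
    """Planar area of a ring via the shoelace formula (orientation-independent)."""
    s = 0.0
    for (x1, y1), (x2, y2) in zip(ring, list(ring[1:]) + [ring[0]]):
        s += x1 * y2 - x2 * y1
    return abs(s) / 2.0


def filter_small(objects: List[Ring], min_area: float) -> List[Ring]:
    """Drop objects smaller than the smallest reference object."""
    return [p for p in objects if polygon_area(p) >= min_area]


def optimal_parameters(
    counts: Dict[Tuple[int, int], int], n_reference: int, tolerance: int = 10
) -> List[Tuple[int, int]]:
    """(scale, merge) pairs whose filtered object count is within +/- tolerance
    of the reference count, closest first."""
    within = [k for k, n in counts.items() if abs(n - n_reference) <= tolerance]
    return sorted(within, key=lambda k: abs(counts[k] - n_reference))


sq10 = [(0, 0), (10, 0), (10, 10), (0, 10)]  # 100 m2
sq20 = [(0, 0), (20, 0), (20, 20), (0, 20)]  # 400 m2
print(filter_small([sq10, sq20], min_area=204.0))  # only sq20 is kept
counts = {(50, 80): 75, (60, 90): 66, (70, 99): 40}  # made-up filtered counts
print(optimal_parameters(counts, n_reference=68))  # [(60, 90), (50, 80)]
```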

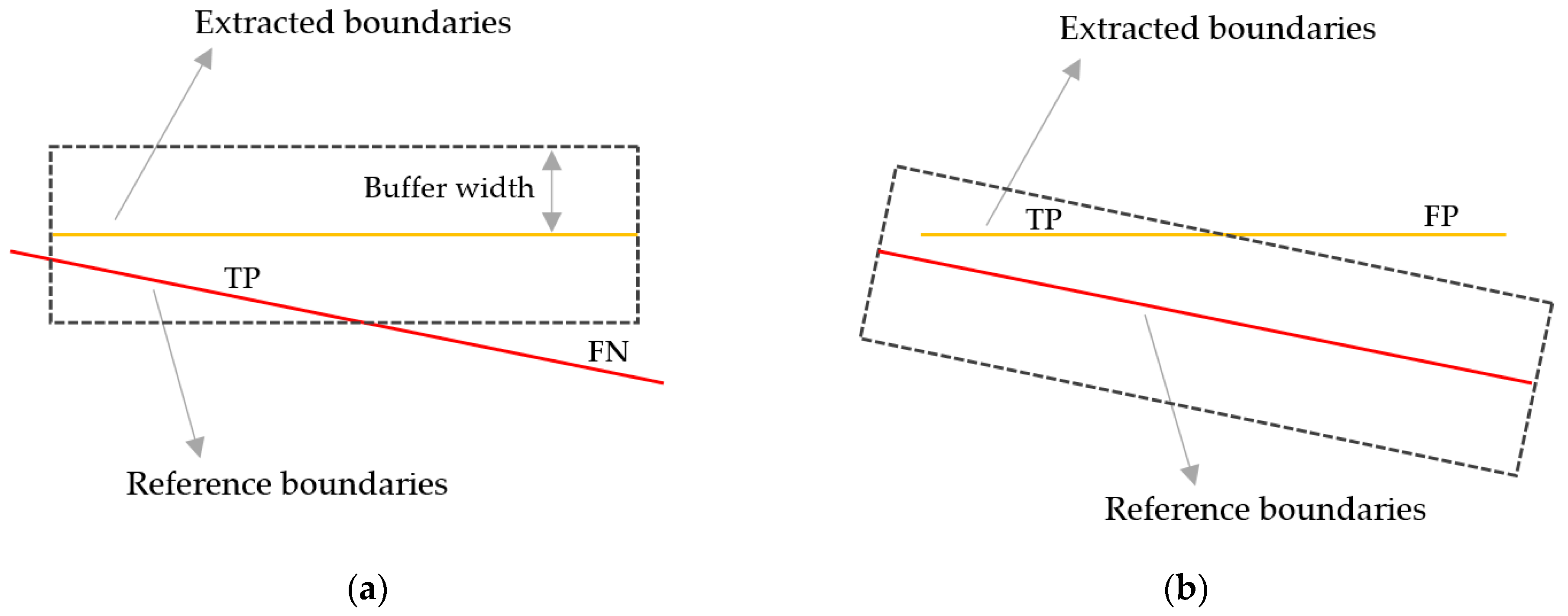
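The Douglas–Peucker simplification in post-processing step (ii) can be illustrated with the recursive sketch below; the Snakes smoothing that precedes it is not shown. This is a generic implementation, not the code from [49]. It measures distance to the chord segment rather than to the infinite line, a common variant that also works for closed rings whose first and last vertices coincide. The `tolerance` value is an assumed input in map units.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def _point_segment_distance(p: Point, a: Point, b: Point) -> float:
    """Distance from p to segment ab (distance to the nearer endpoint if the
    projection falls outside the segment)."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    seg_len2 = dx * dx + dy * dy
    if seg_len2 == 0.0:  # degenerate chord, e.g. a closed ring
        return math.hypot(px - ax, py - ay)
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / seg_len2))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def douglas_peucker(line: List[Point], tolerance: float) -> List[Point]:
    """Keep the endpoints; if the farthest interior vertex deviates more than
    `tolerance` from the chord, split there and recurse, else drop the interior."""
    if len(line) < 3:
        return list(line)
    first, last = line[0], line[-1]
    idx, dmax = 0, -1.0
    for i in range(1, len(line) - 1):
        d = _point_segment_distance(line[i], first, last)
        if d > dmax:
            idx, dmax = i, d
    if dmax <= tolerance:
        return [first, last]
    left = douglas_peucker(line[: idx + 1], tolerance)
    right = douglas_peucker(line[idx:], tolerance)
    return left[:-1] + right  # the split vertex appears once


print(douglas_peucker([(0, 0), (1, 0), (2, 1), (3, 0), (4, 0)], 0.5))
# [(0, 0), (2, 1), (4, 0)]
```

A larger tolerance removes more vertices, so in practice it would be chosen relative to the GSD and the positional tolerance of interest.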

- Accuracy assessment: Since the results were in vector format, the accuracy assessment was object-based, using the buffer overlay method described in detail by Heipke et al. [50]. The method computes the percentage of extracted (or reference) boundary length that lies within a buffer polygon generated around the reference (or extracted) boundaries (Figure 3) [50]. The completeness, correctness, and quality of the extracted boundaries were determined from the boundary lengths of true positives (TP), false positives (FP), and false negatives (FN). Completeness is the percentage of reference boundaries that lie within the buffer around the extracted boundaries (matched reference). Correctness is the percentage of extracted boundaries that lie within the buffer around the reference boundaries (matched extraction). The accuracy assessment was performed for buffer widths of 25 cm, 50 cm, 100 cm, and 200 cm. These widths are in line with other studies and are based on the most common tolerances for boundary positions in land administration, especially in rural areas [26,27]. Overall quality combines both measures: it is the length of the matched extraction divided by the sum of the total extracted length and the length of the unmatched reference [50]. The accuracy assessment was applied to the automatically extracted objects, simplified extracted objects, filtered objects, and simplified filtered objects (Figure 2).
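The buffer overlay measures can be approximated with the sketch below. In terms of lengths, completeness = TP/(TP + FN), correctness = TP/(TP + FP), and quality = TP/(TP + FP + FN), where TP is the matched length, FP the unmatched extracted length, and FN the unmatched reference length, following the definitions above. Instead of constructing buffer polygons as a GIS would, the sketch cuts each line into pieces at most `step` long and tests whether each piece's midpoint lies within `buffer_distance` of the other data set, so the result is an approximation whose accuracy depends on `step`. Whether a buffer width such as 25 cm applies on each side of the line or in total is an assumption left to the caller; here `buffer_distance` is the maximum distance from the line. All names are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]
Polyline = Sequence[Point]


def _dist_to_segment(p: Point, a: Point, b: Point) -> float:
    """Shortest distance from point p to segment ab."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    seg_len2 = dx * dx + dy * dy
    if seg_len2 == 0.0:
        return math.dist(p, a)
    t = max(0.0, min(1.0, ((p[0] - a[0]) * dx + (p[1] - a[1]) * dy) / seg_len2))
    return math.dist(p, (a[0] + t * dx, a[1] + t * dy))


def _dist_to_lines(p: Point, lines: Sequence[Polyline]) -> float:
    """Shortest distance from p to any segment of any polyline in `lines`."""
    return min(
        _dist_to_segment(p, line[k], line[k + 1])
        for line in lines
        for k in range(len(line) - 1)
    )


def length_within_buffer(
    lines: Sequence[Polyline], other: Sequence[Polyline],
    buffer_distance: float, step: float = 0.05,
) -> Tuple[float, float]:
    """Return (length of `lines` within buffer_distance of `other`, total length)."""
    inside = total = 0.0
    for line in lines:
        for a, b in zip(line[:-1], line[1:]):
            seg = math.dist(a, b)
            n = max(1, math.ceil(seg / step))
            for k in range(n):
                t = (k + 0.5) / n  # midpoint of the k-th piece
                mid = (a[0] + t * (b[0] - a[0]), a[1] + t * (b[1] - a[1]))
                total += seg / n
                if _dist_to_lines(mid, other) <= buffer_distance:
                    inside += seg / n
    return inside, total


def buffer_metrics(
    extracted: Sequence[Polyline], reference: Sequence[Polyline],
    buffer_distance: float, step: float = 0.05,
) -> Tuple[float, float, float]:
    """Completeness, correctness, and quality as fractions between 0 and 1."""
    tp_ext, len_ext = length_within_buffer(extracted, reference, buffer_distance, step)
    tp_ref, len_ref = length_within_buffer(reference, extracted, buffer_distance, step)
    completeness = tp_ref / len_ref    # matched reference / reference length
    correctness = tp_ext / len_ext     # matched extraction / extracted length
    fn = len_ref - tp_ref              # unmatched reference length
    quality = tp_ext / (len_ext + fn)  # TP / (TP + FP + FN)
    return completeness, correctness, quality
```

Multiplying the returned fractions by 100 gives percentages in the same form as the tables below.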

3. Results

4. Discussion

4.1. The Developed Workflow

4.2. Quality Assessment

4.3. Strengths and Limitations of the Automatic Extraction Method Used

4.4. Applicability of the Developed Workflow

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| DSM | Digital Surface Model |

| ESP | Estimation of Scale Parameter |

| FIG | International Federation of Surveyors |

| FN | False Negative |

| FP | False Positive |

| FX | ENVI Feature Extraction |

| GCP | Ground Control Point |

| GIS | Geographic Information System |

| GNSS | Global Navigation Satellite System |

| gPb | Global Probability of Boundary |

| GSD | Ground Sample Distance |

| HRSI | High-Resolution Satellite Imagery |

| MRS | Multi-Resolution Segmentation |

| PDOP | Position Dilution of Precision |

| RMSE | Root-Mean-Square Error |

| RTK | Real-Time Kinematic |

| SLIC | Simple Linear Iterative Clustering |

| TP | True Positive |

| UAV | Unmanned Aerial Vehicle |

References

1. Enemark, S. International Federation of Surveyors. In Fit-For-Purpose Land Administration: Joint FIG/World Bank Publication; FIG: Copenhagen, Denmark, 2014; ISBN 978-87-92853-10-3.

2. Luo, X.; Bennett, R.; Koeva, M.; Lemmen, C.; Quadros, N. Quantifying the Overlap between Cadastral and Visual Boundaries: A Case Study from Vanuatu. Urban Sci. 2017, 1, 32.

3. Zevenbergen, J. A Systems Approach to Land Registration and Cadastre. Nord. J. Surv. Real Estate Res. 2004, 1, 11–24.

4. Simbizi, M.C.D.; Bennett, R.M.; Zevenbergen, J. Land tenure security: Revisiting and refining the concept for Sub-Saharan Africa’s rural poor. Land Use Policy 2014, 36, 231–238.

5. Land Administration for Sustainable Development, 1st ed.; Williamson, I.P., Ed.; ESRI Press Academic: Redlands, CA, USA, 2010; ISBN 978-1-58948-041-4.

6. Zevenbergen, J.A. Land Administration: To See the Change from Day to Day; ITC: Enschede, The Netherlands, 2009; ISBN 978-90-6164-274-9.

7. Luo, X.; Bennett, R.M.; Koeva, M.; Lemmen, C. Investigating Semi-Automated Cadastral Boundaries Extraction from Airborne Laser Scanned Data. Land 2017, 6, 60.

8. Enemark, S. Land Administration and Cadastral Systems in Support of Sustainable Land Governance—A Global Approach. In Proceedings of the Re-Engineering the Cadastre to Support E-Government, Tehran, Iran, 4–26 May 2009.

9. Maurice, M.J.; Koeva, M.N.; Gerke, M.; Nex, F.; Gevaert, C. A Photogrammetric Approach for Map Updating Using UAV in Rwanda. Available online: https://bit.ly/2FyhbEi (accessed on 25 June 2019).

10. Wayumba, R.; Mwangi, P.; Chege, P. Application of Unmanned Aerial Vehicles in Improving Land Registration in Kenya. Int. J. Res. Eng. Sci. 2017, 5, 5–11.

11. Ramadhani, S.A.; Bennett, R.M.; Nex, F.C. Exploring UAV in Indonesian cadastral boundary data acquisition. Earth Sci. Inform. 2018, 11, 129–146.

12. Mumbone, M.; Bennett, R.; Gerke, M. Innovations in Boundary Mapping: Namibia, Customary Lands and UAVs. In Proceedings of the Linking Land Tenure and Use for Shared Prosperity, Washington, DC, USA, 23–27 March 2015; p. 22.

13. Volkmann, W.; Barnes, G. Virtual Surveying: Mapping and Modeling Cadastral Boundaries Using Unmanned Aerial Systems (UAS). In Proceedings of the FIG Congress 2014, Kuala Lumpur, Malaysia, 16–21 June 2014; p. 13.

14. Rijsdijk, M.; van Hinsbergh, W.H.M.; Witteveen, W.; ten Buuren, G.H.M.; Schakelaar, G.A.; Poppinga, G.; van Persie, M.; Ladiges, R. Unmanned Aerial Systems in the process of Juridical verification of Cadastral border. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2013, XL-1/W2, 325–331.

15. Mesas-Carrascosa, F.J.; Notario-García, M.D.; Meroño de Larriva, J.E.; Sánchez de la Orden, M.; García-Ferrer Porras, A. Validation of measurements of land plot area using UAV imagery. Int. J. Appl. Earth Obs. Geoinf. 2014, 33, 270–279.

16. Manyoky, M.; Theiler, P.; Steudler, D.; Eisenbeiss, H. Unmanned Aerial Vehicle in Cadastral Applications. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, XXXVIII-1/C22, 57–62.

17. Kurczynski, Z.; Bakuła, K.; Karabin, M.; Kowalczyk, M.; Markiewicz, J.S.; Ostrowski, W.; Podlasiak, P.; Zawieska, D. The possibility of using images obtained from the UAS in cadastral works. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, XLI-B1, 909–915.

18. Cramer, M.; Bovet, S.; Gültlinger, M.; Honkavaara, E.; McGill, A.; Rijsdijk, M.; Tabor, M.; Tournadre, V. On the use of RPAS in National Mapping—The EuroSDR point of view. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2013, XL-1/W2, 93–99.

19. Binns, B.O.; Dale, P.F. Cadastral Surveys and Records of Rights in Land. Available online: http://www.fao.org/3/v4860e/v4860e03.htm (accessed on 20 March 2019).

20. Watts, A.C.; Ambrosia, V.G.; Hinkley, E.A. Unmanned Aircraft Systems in Remote Sensing and Scientific Research: Classification and Considerations of Use. Remote Sens. 2012, 4, 1671–1692.

21. Colomina, I.; Molina, P. Unmanned aerial systems for photogrammetry and remote sensing: A review. ISPRS J. Photogramm. Remote Sens. 2014, 92, 79–97.

22. Crommelinck, S.; Bennett, R.; Gerke, M.; Nex, F.; Yang, M.; Vosselman, G. Review of Automatic Feature Extraction from High-Resolution Optical Sensor Data for UAV-Based Cadastral Mapping. Remote Sens. 2016, 8, 689.

23. Heipke, C.; Woodsford, P.A.; Gerke, M. Updating geospatial databases from images. In Advances in Photogrammetry, Remote Sensing and Spatial Information Sciences: 2008 ISPRS Congress Book; Baltsavias, E., Li, Z., Chen, J., Eds.; CRC Press: London, UK, 2008.

24. Bennett, R.; Kitchingman, A.; Leach, J. On the nature and utility of natural boundaries for land and marine administration. Land Use Policy 2010, 27, 772–779.

25. Zevenbergen, J.; Bennett, R. The visible boundary: More than just a line between coordinates. In Proceedings of the GeoTech Rwanda, Kigali, Rwanda, 18–20 November 2015; pp. 1–4.

26. Crommelinck, S.; Bennett, R.; Gerke, M.; Yang, M.; Vosselman, G. Contour Detection for UAV-Based Cadastral Mapping. Remote Sens. 2017, 9, 171.

27. Wassie, Y.A.; Koeva, M.N.; Bennett, R.M.; Lemmen, C.H.J. A procedure for semi-automated cadastral boundary feature extraction from high-resolution satellite imagery. J. Spat. Sci. 2018, 63, 75–92.

28. Kohli, D.; Crommelinck, S.; Bennett, R. Object-Based Image Analysis for Cadastral Mapping Using Satellite Images. In Proceedings of the International Society for Optics and Photonics, Image Signal Processing Remote Sensing XXIII; SPIE. The International Society for Optical Engineering: Warsaw, Poland, 2017.

29. Kohli, D.; Unger, E.-M.; Lemmen, C.; Koeva, M.; Bhandari, B. Validation of a cadastral map created using satellite imagery and automated feature extraction techniques: A case of Nepal. In Proceedings of the FIG Congress 2018, Istanbul, Turkey, 6–11 May 2018; p. 17.

30. Singh, P.P.; Garg, R.D. Road Detection from Remote Sensing Images using Impervious Surface Characteristics: Review and Implication. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2014, XL-8, 955–959.

31. Kumar, M.; Singh, R.K.; Raju, P.L.N.; Krishnamurthy, Y.V.N. Road Network Extraction from High Resolution Multispectral Satellite Imagery Based on Object Oriented Techniques. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2014, II-8, 107–110.

32. Wang, J.; Qin, Q.; Gao, Z.; Zhao, J.; Ye, X. A New Approach to Urban Road Extraction Using high-resolution aerial image. ISPRS Int. J. Geo-Inf. 2016, 5, 114.

33. Paravolidakis, V.; Ragia, L.; Moirogiorgou, K.; Zervakis, M. Automatic Coastline Extraction Using Edge Detection and Optimization Procedures. Geosciences 2018, 8, 407.

34. Mayer, H.; Hinz, S.; Bacher, U.; Baltsavias, E. A test of Automatic Road Extraction approaches. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2006, 36, 209–214.

35. Dey, V.; Zhang, Y.; Zhong, M. A review of image segmentation techniques with remote sensing perspective. In Proceedings of the ISPRS TC VII Symposium, Vienna, Austria, 5–7 July 2010; Volume XXXVIII. Part 7a.

36. Mueller, M.; Segl, K.; Kaufmann, H. Edge- and region-based segmentation technique for the extraction of large, man-made objects in high-resolution satellite imagery. Pattern Recognit. 2004, 37, 1619–1628.

37. Crommelinck, S.; Höfle, B.; Koeva, M.N.; Yang, M.Y.; Vosselman, G. Interactive Cadastral Boundary Delineation from UAV data. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, IV-2, 81–88.

38. Babawuro, U.; Zou, B. Satellite Imagery Cadastral Features Extractions using Image Processing Algorithms: A Viable Option for Cadastral Science. IJCSI Int. J. Comput. Sci. Issues 2012, 9, 30–38.

39. Wang, J.; Song, J.; Chen, M.; Yang, Z. Road network extraction: A neural-dynamic framework based on deep learning and a finite state machine. Int. J. Remote Sens. 2015, 36, 3144–3169.

40. Poursanidis, D.; Chrysoulakis, N.; Mitraka, Z. Landsat 8 vs. Landsat 5: A comparison based on urban and peri-urban land cover mapping. Int. J. Appl. Earth Obs. Geoinf. 2015, 35, 259–269.

41. ITT Visual Information Solutions. ENVI Feature Extraction User’s Guide; Harris Geospatial Solutions: Broomfield, CO, USA, 2008.

42. The Surveying and Mapping Authority of the Republic of Slovenia. e-Surveying Data. Available online: https://egp.gu.gov.si/egp/?lang=en (accessed on 29 May 2019).

43. Jin, X. Segmentation-Based Image Processing System. U.S. Patent 8,260,048, 4 September 2012.

44. ENVI Segmentation Algorithms Background. Available online: https://www.harrisgeospatial.com/docs/backgroundsegmentationalgorithm.html#Referenc (accessed on 21 March 2019).

45. ENVI Merge Algorithms Background. Available online: https://www.harrisgeospatial.com/docs/backgroundmergealgorithms.html (accessed on 21 March 2019).

46. ENVI Development Team. ENVI The Leading Geospatial Analytics Software; Harris Geospatial Solutions: Broomfield, CO, USA, 2018.

47. Extract Segments Only. Available online: https://www.harrisgeospatial.com/docs/segmentonly.html (accessed on 25 March 2019).

48. QGIS Development Team. QGIS a Free and Open Source Geographic Information System, Version 2.18 Las Palmas; Open Source Geospatial Foundation: Beaverton, OR, USA, 2018.

49. GRASS GIS Development Team. GRASS GIS Bringing Advanced Geospatial Technologies to the World, Version 7.4.2; Open Source Geospatial Foundation: Beaverton, OR, USA, 2018.

50. Heipke, C.; Mayer, H.; Wiedemann, C. Evaluation of Automatic Road Extraction. Int. Arch. Photogramm. Remote Sens. 1997, 32, 151–160.

| Location | UAV Model | Camera/Focal Length [mm] | Overlap Forward/Sideward [%] | Flight Altitude [m] | GSD [cm] | Pixels |
|---|---|---|---|---|---|---|
| Ponova vas, Slovenia | DJI Phantom 4 Pro | 1" CMOS, 20 MP/24 | 80/70 | 80 | 2.0 | 35,551 × 31,098 |

| GSD [cm] | Pixels | Resampling Method |
|---|---|---|
| 25 | 2856 × 2498 | Pixel average |
| 50 | 1428 × 1249 | Pixel average |
| 100 | 714 × 625 | Pixel average |

| Buffer Width [cm] | Completeness [%] (Extracted) | Completeness [%] (Filtered) | Correctness [%] (Extracted) | Correctness [%] (Filtered) | Quality [%] (Extracted) | Quality [%] (Filtered) |
|---|---|---|---|---|---|---|
| 25 | 58 | 37 | 18 | 26 | 16 | 20 |
| 50 | 73 | 48 | 28 | 39 | 26 | 31 |
| 100 | 78 | 56 | 38 | 50 | 36 | 41 |
| 200 | 81 (81)¹ | 61 (62)¹ | 48 (49)¹ | 59 (61)¹ | 46 (46)¹ | 50 (48)¹ |

| Buffer Width [cm] | Completeness [%] (Extracted) | Completeness [%] (Filtered) | Correctness [%] (Extracted) | Correctness [%] (Filtered) | Quality [%] (Extracted) | Quality [%] (Filtered) |
|---|---|---|---|---|---|---|
| 25 | 45 | 40 | 28 | 35 | 21 | 23 |
| 50 | 64 | 55 | 46 | 56 | 38 | 41 |
| 100 | 71 | 61 | 57 | 68 | 48 | 52 |
| 200 | 75 (74)¹ | 65 (67)¹ | 65 (66)¹ | 76 (77)¹ | 56 (53)¹ | 59 (56)¹ |

| Buffer Width [cm] | Completeness [%] (Extracted) | Completeness [%] (Filtered) | Correctness [%] (Extracted) | Correctness [%] (Filtered) | Quality [%] (Extracted) | Quality [%] (Filtered) |
|---|---|---|---|---|---|---|
| 25 | 31 | 27 | 21 | 24 | 14 | 15 |
| 50 | 53 | 47 | 39 | 43 | 29 | 30 |
| 100 | 67 | 59 | 58 | 64 | 47 | 47 |
| 200 | 73 (71)¹ | 63 (67)¹ | 66 (66)¹ | 72 (73)¹ | 55 (52)¹ | 55 (52)¹ |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Fetai, B.; Oštir, K.; Kosmatin Fras, M.; Lisec, A. Extraction of Visible Boundaries for Cadastral Mapping Based on UAV Imagery. Remote Sens. 2019, 11, 1510. https://doi.org/10.3390/rs11131510