1. Introduction

The new generation of transportation systems employs advanced communication technologies, such as 5G networks [1] and dedicated short-range communication (DSRC) [2], and sensing technologies, such as Light Detection and Ranging (LiDAR) and video sensors, to exchange real-time status information and to understand the surrounding traffic environment. While autonomous vehicle platforms can achieve real-time detection and control, connected-vehicle technologies allow drivers/vehicles to “see” across buildings and over surrounding vehicles and other obstacles [3,4]. The advantages of connected vehicles rely on vehicle-to-everything (V2X) wireless communication, including but not limited to vehicle-to-vehicle, vehicle-to-infrastructure, and vehicle-to-other-road-user communication. However, the full deployment of connected-vehicle equipment is estimated to take decades [5]. During this period, the traffic flow will include both connected/autonomous vehicles and unconnected road users, who are “black spots” of the intelligent traffic systems. Without all users being sensed accurately, the benefits of connected vehicles and other advanced traffic systems will be limited.

Research has begun to enhance traffic infrastructure by deploying LiDAR sensors on roadsides [6,7,8,9,10]. Roadside LiDAR sensors allow traffic infrastructure to sense the real-time, high-accuracy trajectories of every vehicle/pedestrian/bicycle, so the system does not depend on whether all road users share their real-time status. With real-time data from roadside LiDARs, connected/autonomous vehicles know all the other road users without “black spots.” Traffic infrastructure can actively trigger traffic signals/warnings whenever it detects crash risks or changed traffic patterns. While recent research efforts have developed and implemented LiDAR data processing algorithms to extract high-resolution, high-accuracy trajectories from roadside LiDAR data [7,8], a solution to integrate LiDAR data from different roadside sensors is still needed. Each LiDAR sensor generates 3D cloud points in a local coordinate system with the sensor at the origin. When LiDAR sensors are installed at an intersection or along an arterial, their data need to be integrated by converting all LiDAR data into the same coordinate system. Integrating LiDAR data from different scans or sensors, also called LiDAR data registration, has been studied for many years for mapping and for autonomous vehicle/robot sensing [11,12,13]. 3D mapping scans an object from different viewpoints, by rotating the object or moving the scanners, and then merges the scans into a complete model [14]. Because the point cloud from each LiDAR scan is in a local coordinate system, each view must be transformed into the same coordinate system. This process is called point cloud registration, and it is one of the critical problems in 3D reconstruction [15]. Common non-automated registration methods include manual registration, attaching markers onto the scanned object, and calibrating the movement between two scans in advance [15]. Automatic registration methods for 3D LiDAR scans can be classified into three types: point-based, line-based, and plane-based transformation models [16].

The spatial transformation of point clouds using point features can be established by point-to-point correspondences and solved by least-squares adjustment. Besl and McKay [17] laid the foundation of point-based automatic registration with the classical iterative closest point (ICP) algorithm. ICP starts with two point clouds and an initial guess for the transformation, and iteratively refines the transformation by repeatedly generating pairs of corresponding points in the point clouds and minimizing an error metric. However, ICP requires the two point clouds to be adequately close to each other initially [17]. Furthermore, ICP cannot reliably register point clouds with outliers and variable density [18]. Researchers have also improved ICP to increase its computational efficiency and accuracy [18,19,20,21,22,23]. Wu et al. developed a revised partial iterative closest point algorithm (P-ICP) for LiDAR point cloud integration [19]. By selecting at least three representative points (usually corner points) from the two point sets, the revised P-ICP can integrate the points within a 0.2 s time delay, and its registration accuracy is greatly improved compared with that of the traditional ICP. In the line-based automatic transformation, a 3D line feature transformed into another coordinate system must be collinear with its counterpart. Stamos and Allen developed a method for range-to-range registration with manually selected 3D line features [24]. Stamos and Leordeanu developed an automated registration algorithm with corresponding line features [25], which required a number of parameters to be calibrated in advance to customize the algorithm. Line-based registration has also been used for registering LiDAR datasets to photogrammetric datasets [26]. Plane-based transformation relies on plane features [27,28,29]; these methods formulate the alignment of two point clouds as the minimization of the squared distance between corresponding surfaces. However, all three types of registration methods require either large overlapping areas or clear features shared by the two point clouds. Considering the massive future deployment of roadside LiDARs for connected vehicles, only cost-effective LiDARs (8 or 16 beams) can be adopted. The extensive detection range of roadside LiDARs further decreases the overlapping areas and the point density. Therefore, these three types of methods cannot be used to register roadside LiDAR data.

The sensing system of autonomous vehicles requires the integration of multiple sensors, such as LiDAR, video, radio detection and ranging (RADAR), short-wavelength infrared (SWIR), and the global positioning system (GPS). Sensor fusion also requires the registration of data from multiple LiDAR sensors, or of LiDAR data with other sensor data. LiDAR-video integration normally builds separate views, and LiDAR feature extraction methods identify common anchor points in both [30,31]. For integrating multiple LiDAR sensors on a mobile platform, Cho et al. [32] treated six LiDARs in four planes as one homogeneous sensor and analyzed the sensor measurements using built-in segmentation and extracted features, such as line segments or junctions of lines (“L” shapes) [33].

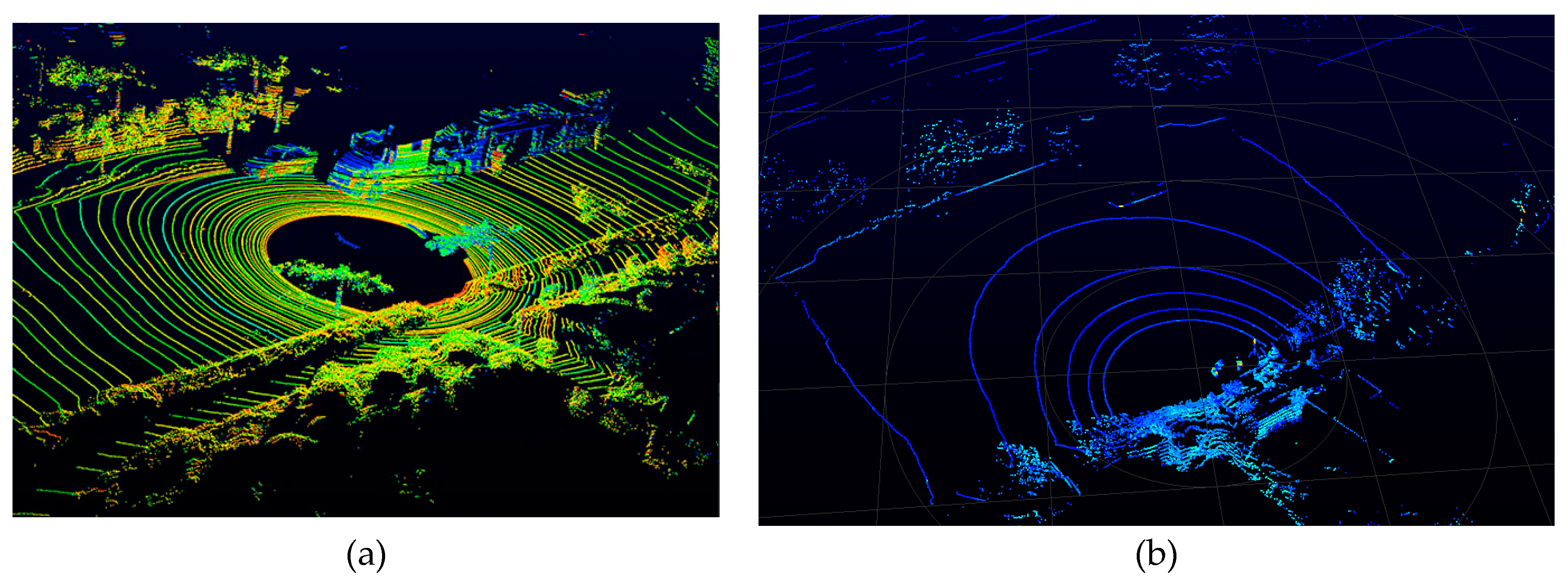

All the reviewed registration methods in mapping and autonomous sensing serve only scenarios with relatively high-density cloud points, as shown in Figure 1a. Even the methods registering LiDAR data to images require many details of cloud points and pixels to achieve coordinate conversion. Some researchers on autonomous vehicle sensing systems use a board with a standard round hole or mark to register multiple sensors. Researchers sometimes assume that LiDAR sensors on a vehicle platform share a uniform coordinate system because the sensors are close to each other. These methodologies and assumptions do not apply to registering roadside LiDAR sensors, whose cloud points have relatively low density because of the wide detection range and the offset between the laser beams of the two sensors [34]. An example of roadside LiDAR data is shown in Figure 1b; it differs noticeably from Figure 1a.

This paper introduces a method developed specifically for registering roadside LiDAR sensor data into the same coordinate system. The approach integrates LiDAR datasets based on the 3D cloud points of the road surface and selected 2D reference points, so the method is abbreviated as Registration with Ground and Points (RGP). The RGP method was developed for roadside sensors, but it can also be used to register LiDAR sensors on a mobile platform such as an autonomous vehicle or a robot. The last step of the RGP method identifies the six parameters of the linear coordinate transformation (offset-in-x, offset-in-y, offset-in-z, rotation-in-x, rotation-in-y, and rotation-in-z) with optimization algorithms. An objective function related to the 3D points of the ground surface and an objective function corresponding to the selected 2D reference points are defined and then combined into a single objective function. Because this objective function has many local minima, this research compared the genetic algorithm (GA), a global optimization method, with the hill climbing (HC) algorithm, a local optimization method, considering their respective advantages and disadvantages. The initial input of the HC algorithm was estimated with a tool for visualizing and customizing the coordinate transformation. The performance of the RGP method and the optimization methods was evaluated with LiDAR data collected by two LiDAR sensors on different sides of a road.

This article is organized as follows: Section 2 presents the concept of coordinate transformation and the algorithm details of the RGP method. The performance evaluation results are demonstrated in Section 3. Section 4 discusses the influence of the proposed method on new transportation sensing systems and future research extensions. Finally, Section 5 summarizes the findings of this research.

2. Methodology

2.1. Coordinate Transformation

Linear transformations, a type of coordinate transformation [35], convert points, lines, and planes in one coordinate system to those in a second coordinate system through a system of linear algebraic equations. Consider a vector X in a 3-D coordinate system with components Xj = (xj, yj, zj), and the corresponding vector X’ in the primed coordinate system with components Xi = (xi, yi, zi).

Equations (1) and (2) give the general linear transformation of 3-D coordinate systems. This general linear form has two constituents: the matrix A and the vector B. The vector B can be interpreted as a shift in the origin of the coordinate system. The elements Aij of the matrix A are the cosines of the angles between the axes Xi and Xj, known as direction cosines. Note that this linear conversion includes only the offsets along, and the rotations about, the x, y, and z axes, without changing the scales of x, y, and z. Therefore, six parameters in total determine the linear transformation (matrix A and vector B) required to register one LiDAR sensor dataset to another: offset-in-x, offset-in-y, offset-in-z, rotation-in-x, rotation-in-y, and rotation-in-z. The known coordinates of two reference points in both coordinate systems yield six equations; however, because a rigid transformation preserves the distance between the two points, these equations are not independent, and the rotation about the line through the two points remains undetermined. At least three non-collinear reference points are therefore needed to solve the six parameters uniquely. In actual datasets, it is difficult to find accurate coordinates of the same reference point in two coordinate systems. Therefore, optimization methods are commonly applied to identify the best linear transformation from two or more reference points, lines, or planes.
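The six-parameter transformation described above can be illustrated with a minimal NumPy sketch. It assumes that the rotation angles are in radians and that the rotations are applied about x, then y, then z (A = Rz·Ry·Rx); the paper does not state its angle convention, and the function names are hypothetical. Because A is a pure rotation, it has no scale component, matching the text.

```python
import numpy as np

def rotation_matrix(rx: float, ry: float, rz: float) -> np.ndarray:
    """Rotation matrix A from rotations (radians) about the x, y and z axes.

    Assumed order: rotate about x, then y, then z, i.e. A = Rz @ Ry @ Rx.
    The entries of A are the direction cosines between the two frames.
    """
    cx, sx = np.cos(rx), np.sin(rx)
    cy, sy = np.cos(ry), np.sin(ry)
    cz, sz = np.cos(rz), np.sin(rz)
    Rx = np.array([[1.0, 0.0, 0.0], [0.0, cx, -sx], [0.0, sx, cx]])
    Ry = np.array([[cy, 0.0, sy], [0.0, 1.0, 0.0], [-sy, 0.0, cy]])
    Rz = np.array([[cz, -sz, 0.0], [sz, cz, 0.0], [0.0, 0.0, 1.0]])
    return Rz @ Ry @ Rx

def transform(points: np.ndarray, params) -> np.ndarray:
    """Apply X' = A X + B to an (N, 3) array of points.

    params = [offset_x, offset_y, offset_z, rot_x, rot_y, rot_z]
    (offsets in metres, rotations in radians; hypothetical layout).
    """
    dx, dy, dz, rx, ry, rz = params
    A = rotation_matrix(rx, ry, rz)
    B = np.array([dx, dy, dz], dtype=float)
    # Row-vector form of A @ x + B for every point at once.
    return points @ A.T + B
```

For example, rotating the point (1, 0, 0) by 90° about z and shifting it by (1, 2, 3) gives (1, 3, 3).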

The reference features (points, lines, and planes) used in existing methods still require relatively accurate x, y, and z values of the same feature in both coordinate systems (overlap points). High-resolution LiDAR cloud points, such as those used for mapping, can provide the required overlaps. For example, the LiDAR points of a building corner captured from different stations provide reliable x, y, and z values of the same feature point in each station's coordinate system. With roadside sensors, however, it is much more difficult, or even impossible, to find overlapping x, y, and z values of a corresponding reference feature in the different coordinate systems.

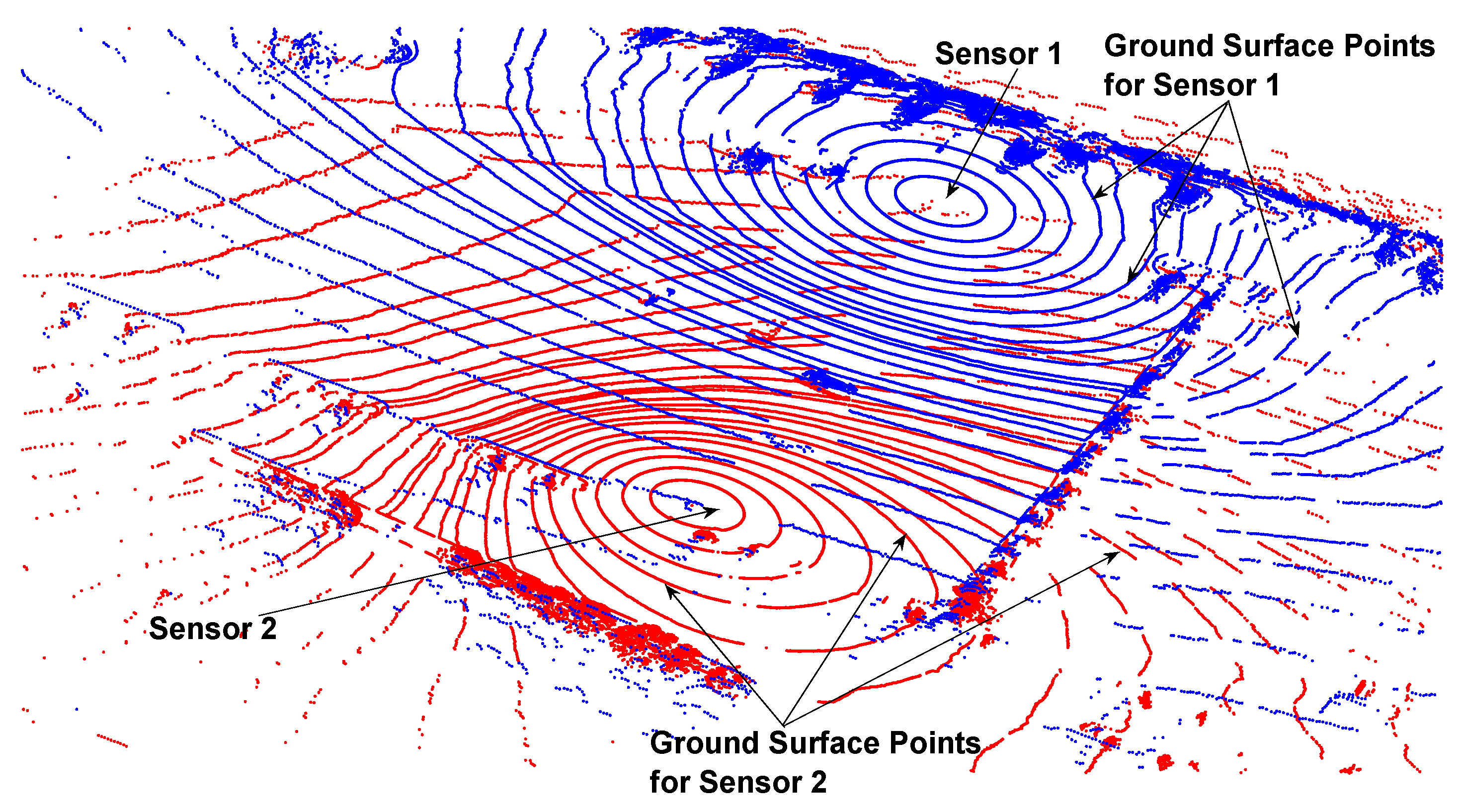

Figure 2 presents the z-axis offsets between the laser beams of two roadside LiDAR sensors. The two colors indicate points from the two different sensors. The points in the rounded rectangular box belong to the same building, but the points from the two sensors are at different heights. In other words, two registered LiDAR datasets may have no overlapping points because of the z-axis offsets and the spacing between laser beams. This is the primary reason that existing algorithms developed for mapping and autonomous sensing systems cannot register roadside LiDAR data.

Because the coordinates of a reference feature could not be found in both sensor coordinate systems, the authors used the ground surface LiDAR points of the two roadside sensors instead. Although there is no guarantee that building or object points from the two sensors overlap, the ground reflections in the two sensors’ data must overlap. The LiDAR points on the ground surface form circles centered on the LiDAR sensor. So, if two LiDAR datasets are correctly registered, the ground surface LiDAR points of the two sensors must have crossing points (overlaps), as shown in Figure 3. The optimization of the linear transformation therefore needs an objective function that minimizes the z difference of the overlapping ground points (best match of the ground surface). In each iteration, using the ground surface points from the two LiDAR datasets, the converted coordinate space is divided into square subareas on the x-y plane. All ground surface LiDAR points are projected into these contiguous x-y squares based on their x and y values. In each x-y square, the difference between the average z value of the projected surface points of Sensor 1 and the average z value of the projected surface points of Sensor 2 (after the linear transformation) is calculated. A square that contains projected points from only one sensor, or no projected points, is excluded from consideration. The average of the z differences over all remaining squares is used as the objective function.

The objective function to minimize the z difference of ground points of two sensors is described by Equations (3) and (4).

F1—Objective function of minimum z difference of ground points of two sensors;

z_diffi—z difference of x-y square i;

zk_s1_i—z value of LiDAR point k of sensor 1 in square i;

zl_s2_i—z value of LiDAR point l of sensor 2 (after linear transformation) in square i;

xi_min—Minimum x boundary value of square i;

xi_max—Maximum x boundary value of square i;

yi_min—Minimum y boundary value of square i;

yi_max—Maximum y boundary value of square i;

xk_s1_i—x value of LiDAR point k of sensor 1 in square i;

yk_s1_i—y value of LiDAR point k of sensor 1 in square i;

xl_s2_i—x value of LiDAR point l of sensor 2 (after linear transformation) in square i;

yl_s2_i—y value of LiDAR point l of sensor 2 (after linear transformation) in square i.
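A minimal NumPy sketch of F1 follows. It assumes that z_diffi is the absolute difference of the two per-square mean z values, that F1 averages z_diffi over the squares containing points from both sensors, and that the squares are aligned to a grid anchored at the origin; the 5 cm side length follows the iteration procedure in Section 2.2. The function name and the infinite value returned when no square is shared are hypothetical choices.

```python
import numpy as np

def ground_z_objective(ground_s1: np.ndarray, ground_s2_transformed: np.ndarray,
                       cell: float = 0.05) -> float:
    """F1: mean absolute difference of per-square average z between two sensors.

    Both inputs are (N, 3) ground-point arrays already in the Sensor 1 frame.
    Squares of side `cell` (metres) holding points from only one sensor are skipped.
    """
    def cell_means(points: np.ndarray) -> dict:
        # Integer (column, row) index of the x-y square containing each point.
        idx = np.floor(points[:, :2] / cell).astype(np.int64)
        keys, inverse = np.unique(idx, axis=0, return_inverse=True)
        inverse = inverse.ravel()
        sums = np.bincount(inverse, weights=points[:, 2])
        counts = np.bincount(inverse)
        return {tuple(k): s / c for k, s, c in zip(keys, sums, counts)}

    m1 = cell_means(ground_s1)
    m2 = cell_means(ground_s2_transformed)
    shared = m1.keys() & m2.keys()  # squares with points from both sensors
    if not shared:
        return float("inf")  # no overlap at all: treat as the worst case
    return float(np.mean([abs(m1[k] - m2[k]) for k in shared]))
```

Using per-square means rather than point-to-point distances is what lets the two sensors' non-coincident ground rings be compared.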

Tests of the optimization minimizing the z difference of ground points showed that the result provided a good match of the ground surfaces of the two LiDAR datasets. However, this objective function is not sensitive enough to minimize offsets along the x-axis and y-axis: shifting or rotating one dataset within the x-y plane barely changes the z difference of a nearly flat ground surface. For an accurate match in the x- and y-directions, two or more reference points are needed in the x-y 2D space.

Because of the z offsets, it is impossible to find accurate 3D coordinates of the same reference feature in the two sensor datasets, but the x and y values of the same reference features (overlap points) can be found in the x–y space of the two datasets. It is challenging to find line or surface features in the two roadside datasets because the sensors are far from each other. In addition, when one sensor detects a line or surface of a building, the other sensor may not capture the same surface or edge, because the trees and obstacles around the two sensors differ. The coordinates of at least two reference points in the two LiDAR datasets need to be identified to optimize the linear transformation for accurate registration along the x-axis and y-axis. More reference points can be used, if available, to improve the accuracy and reliability of the optimization. A semi-automatic method for detecting the reference points can be found in reference [18].

The second objective function minimizes the offsets of LiDAR points at the same point features in the x-y space. It is described by Equations (5) and (6).

F2—Objective function minimizing the distance between the x,y coordinates of Sensor 1 and the x,y coordinates of Sensor 2 (after transformation) at the selected reference points;

dm_s1_s2—Distance between the x,y coordinates of Sensor 1 and the x,y coordinates of Sensor 2 (after transformation) at the selected reference point m;

xm_s1—x coordinate of reference point m in the LiDAR Sensor 1 dataset;

xm_s2—x coordinate of reference point m in the LiDAR Sensor 2 dataset (after the linear transformation);

ym_s1—y coordinate of reference point m in the LiDAR Sensor 1 dataset;

ym_s2—y coordinate of reference point m in the LiDAR Sensor 2 dataset (after the linear transformation).
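F2 can be sketched in a few lines, assuming that dm_s1_s2 is the Euclidean x-y distance and that F2 is the mean of dm_s1_s2 over the reference points (the text does not say whether a sum or a mean is used; the minimizer is the same for a fixed set of points). The function name is hypothetical.

```python
import numpy as np

def reference_xy_objective(ref_s1: np.ndarray, ref_s2_transformed: np.ndarray) -> float:
    """F2: mean x-y distance between matched reference points.

    Row m of each array holds the coordinates of reference point m as seen by
    Sensor 1 and by Sensor 2 after transformation. Only the x and y columns
    are used, because z offsets between the sensors are expected.
    """
    diff = ref_s1[:, :2] - ref_s2_transformed[:, :2]
    d = np.hypot(diff[:, 0], diff[:, 1])  # d_m for each reference point m
    return float(d.mean())
```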

In this multi-objective optimization problem, the z difference of the ground points and the x-y coordinate difference at the reference points are considered equally important, so they are assigned the same weight.

Therefore, the multiple objective functions for optimizing the linear transformation can be combined into a single-objective optimization, as shown in Equation (7):
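The symbol definitions above suggest the following form for Equations (3) through (7). This is a hedged reconstruction, not necessarily the authors' exact notation: the absolute value in z_diffi, the use of a mean rather than a sum in F2, and the counts K_i, L_i (points of each sensor in square i), N (squares containing points from both sensors), and M (reference points) are assumptions. Each sum over square i runs over the points with xi_min ≤ x < xi_max and yi_min ≤ y < yi_max.

```latex
\begin{aligned}
z\_diff_i &= \left| \frac{1}{K_i}\sum_{k=1}^{K_i} z_{k\_s1\_i} \;-\; \frac{1}{L_i}\sum_{l=1}^{L_i} z_{l\_s2\_i} \right| \\
F_1 &= \frac{1}{N}\sum_{i=1}^{N} z\_diff_i \\
d_{m\_s1\_s2} &= \sqrt{\left(x_{m\_s1}-x_{m\_s2}\right)^2 + \left(y_{m\_s1}-y_{m\_s2}\right)^2} \\
F_2 &= \frac{1}{M}\sum_{m=1}^{M} d_{m\_s1\_s2} \\
F &= w_1 F_1 + w_2 F_2, \qquad w_1 = w_2
\end{aligned}
```

Both terms are distances in metres, so equal weights are dimensionally consistent, and scaling both weights by the same constant does not change the minimizing transformation.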

2.2. Procedure to Optimize Linear Transformation

The procedure for determining the linear transformation parameters for registering multiple roadside LiDAR sensor datasets is as follows:

Step 1: Extract the cloud points of the ground surface from the LiDAR datasets by defining x, y, and z boundaries (a small number of non-surface points in the extracted surface clouds does not influence the registration results);

Step 2: Select the reference points and their coordinates in x–y 2D space from the Sensor 1 dataset and the Sensor 2 dataset (building corners or road intersections are recommended);

Step 3: Optimize the transformation parameter array [offset-in-x, offset-in-y, offset-in-z, rotation-in-x, rotation-in-y, and rotation-in-z] to minimize the objective function F. The optimization starts with an initial transformation parameter array (which differs between optimization algorithms);

Step 4: Convert the Sensor 2 dataset into the Sensor 1 coordinate system with the optimized coordinate transformation parameters.
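Step 1 amounts to a bounding-box filter. The sketch below assumes inclusive bounds and uses the Sensor 1 boundaries reported in Section 3 as its example; the function name is hypothetical.

```python
import numpy as np

def extract_ground(points: np.ndarray, x_range, y_range, z_range) -> np.ndarray:
    """Step 1: keep the (N, 3) points whose x, y and z lie inside (min, max) bounds.

    Bounds are treated as inclusive. A few non-ground points may remain inside
    the box; the text notes that these do not affect the registration.
    """
    lo = np.array([x_range[0], y_range[0], z_range[0]], dtype=float)
    hi = np.array([x_range[1], y_range[1], z_range[1]], dtype=float)
    mask = np.all((points >= lo) & (points <= hi), axis=1)
    return points[mask]
```

For example, `extract_ground(frame_s1, (-35, 35), (-20, 10), (-2, 1))` would apply the Sensor 1 boundaries, where `frame_s1` is a hypothetical (N, 3) array holding one LiDAR frame.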

Each optimization iteration includes the following processes:

(1) Calculate the linear transformation matrix A and vector B with the transformation parameter array;

(2) Convert cloud points of Sensor 2 to the coordinate system of Sensor 1 with the transformation matrix A and vector B calculated in (1);

(3) Divide the coordinate space into subareas on the x-y plane, using x-y squares with a side length of 5 cm;

(4) Project the cloud points of Sensor 1 and Sensor 2 into the x-y squares;

(5) Calculate the objective function F with Equations (3)–(7);

(6) Adjust the transformation parameters based on the objective function F. This step differs between optimization algorithms.

Since the objective functions have been defined in Section 2.1, an optimization algorithm is needed to obtain the solution. The objective function F has multiple local minima, so global optimization algorithms are, in principle, well suited to this problem. However, global optimization takes much longer than local optimization and may not search a local area deeply enough to identify the actual minimum of the objective function. Therefore, this study compared the performance of the GA, a global optimization method, with that of the HC algorithm, a local optimization method. The initial input to HC was selected manually, as described in Section 2.2.2.

2.2.1. GA Global Optimization

GAs are a subclass of evolutionary algorithms; here, the elements of the search space are the six parameters offset-in-x, offset-in-y, offset-in-z, rotation-in-x, rotation-in-y, and rotation-in-z, represented as double-precision values [36,37]. The GA optimization procedure is described in Figure 4 [38].

Here, the objective function is referred to as the fitness function. The GA options listed in Table 1 were used in this research [39].
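The loop in Figure 4 can be illustrated with a minimal real-coded GA. Table 1 is not reproduced here, so the population size, number of generations, mutation scale, size-2 tournament selection, blend crossover, Gaussian mutation, and elitism below are placeholder assumptions, not the settings used in the study. In RGP, `f` would be the combined objective F evaluated on the six-parameter array; the usage example substitutes a toy quadratic.

```python
import numpy as np

def genetic_minimize(f, lower, upper, pop_size=50, generations=200,
                     mutation_scale=0.02, elite=2, seed=0):
    """Minimal real-coded GA (hypothetical settings). Returns (best_x, best_f).

    Tournament selection (size 2), blend crossover, Gaussian mutation scaled
    to the search range, and elitism; children are clipped to the bounds.
    """
    rng = np.random.default_rng(seed)
    lower, upper = np.asarray(lower, dtype=float), np.asarray(upper, dtype=float)
    span = upper - lower
    pop = lower + rng.random((pop_size, lower.size)) * span
    fit = np.array([f(x) for x in pop])
    for _ in range(generations):
        order = np.argsort(fit)
        children = [pop[i].copy() for i in order[:elite]]  # elitism: keep the best
        while len(children) < pop_size:
            # Two size-2 tournaments pick the parents.
            a = rng.integers(pop_size, size=2)
            b = rng.integers(pop_size, size=2)
            p1 = pop[a[np.argmin(fit[a])]]
            p2 = pop[b[np.argmin(fit[b])]]
            # Blend crossover: per-gene random mix of the two parents.
            w = rng.random(lower.size)
            child = w * p1 + (1.0 - w) * p2
            # Gaussian mutation proportional to each parameter's range.
            child += rng.normal(0.0, mutation_scale, lower.size) * span
            children.append(np.clip(child, lower, upper))
        pop = np.array(children)
        fit = np.array([f(x) for x in pop])
    best = int(np.argmin(fit))
    return pop[best], float(fit[best])
```

Usage: `genetic_minimize(lambda v: float(np.sum(v ** 2)), [-5] * 6, [5] * 6)` searches six bounded parameters. Because selection and mutation are random, different seeds give different answers, which is consistent with the run-to-run variation reported in Section 3.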

2.2.2. Hill Climbing

Hill Climbing (HC) [38] is a simple search and optimization algorithm for a single objective function F. HC algorithms use the current best solution to produce one offspring; if this new individual is better than its parent, it replaces the parent. The general HC algorithm is described in Figure 5 [36].

The objective function in Equation (7) is used to evaluate each individual’s performance. Because hill climbing is a local optimization algorithm, the initial input is critical. The authors developed a tool (available at https://nevada.box.com/s/sz45lorntec4rbxyzk06j8s3zstd3m3g) that can quickly adjust the six linear transformation parameters to estimate them. The six estimated parameters were used as the initial input. The number of iterations of the hill climbing algorithm was set to 100, and the stopping criterion was a function tolerance of 1 × 10⁻¹⁰.
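The HC rule described above (one offspring per iteration, kept only if it is better) can be sketched as follows. The Gaussian perturbation, the per-parameter step sizes, and the reading of the function tolerance (stop when an accepted move improves F by less than `f_tol`) are assumptions; `max_iter=100` and `f_tol=1e-10` mirror the values in the text. The function name is hypothetical.

```python
import numpy as np

def hill_climb(f, x0, step, max_iter=100, f_tol=1e-10, seed=0):
    """Stochastic hill climbing. Returns (best_x, best_f).

    Each iteration perturbs the current solution with Gaussian noise scaled by
    `step` (one value per parameter); the offspring replaces the parent only if
    it lowers f. Stops after max_iter iterations, or when an accepted move
    improves f by less than f_tol (assumed meaning of the function tolerance).
    """
    rng = np.random.default_rng(seed)
    x = np.asarray(x0, dtype=float)
    fx = f(x)
    step = np.asarray(step, dtype=float)
    for _ in range(max_iter):
        child = x + rng.normal(0.0, 1.0, x.size) * step  # one offspring
        fc = f(child)
        if fc < fx:  # offspring is better: it replaces the parent
            improvement = fx - fc
            x, fx = child, fc
            if improvement < f_tol:
                break
    return x, fx
```

In RGP, `x0` would be the six parameters estimated with the visualization tool, and `step` could, for instance, be a few centimetres for the offsets and a few milliradians for the rotations (hypothetical values).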

2.3. Method of Performance Evaluation

The collected data were stored in a Cartesian coordinate system. To compare the LiDAR data with their real locations, the LiDAR data should be transformed into the World Geodetic System (WGS). The transformation requires at least four reference points with coordinate values in both coordinate systems. Normally, coordinates in the geodetic coordinate system are represented by BLH (latitude, longitude, and height), and coordinates in the Cartesian coordinate system are represented by XYZ. The procedure includes three major steps. First, the BLH values of the reference points are converted into XYZ values, which yields two groups of Cartesian coordinates for each reference point, with two different origins. Second, the same points in the two coordinate systems are made to coincide via scaling, skewing, rotation, projection, and translation, which yields a transformation matrix. Third, this matrix is used to transform all points collected by the LiDAR in the Cartesian coordinate system into the WGS (usually WGS-84). The LiDAR points can then be displayed in Google Earth. With two sensors (A and B), the points of Sensor A were mapped onto Google Earth, and the points of Sensor B were then integrated into the Sensor A coordinate system. The integrated Sensor B points could then be compared with the “ground truth” data in Google Earth.
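The first two steps can be sketched as follows. The BLH-to-XYZ conversion uses the standard WGS-84 geodetic-to-ECEF formulas. For the second step, this sketch assumes a 3D affine model (12 unknowns, hence the minimum of four non-coplanar reference points) fitted by least squares; it covers scaling, skew, rotation, and translation but not projective terms, so the transformation used in the study may differ. The function names are hypothetical.

```python
import numpy as np

# WGS-84 ellipsoid constants.
A_WGS84 = 6378137.0                    # semi-major axis (m)
F_WGS84 = 1.0 / 298.257223563          # flattening
E2_WGS84 = F_WGS84 * (2.0 - F_WGS84)   # first eccentricity squared

def blh_to_ecef(lat_deg, lon_deg, h):
    """Geodetic latitude/longitude (degrees) and ellipsoidal height (m)
    to Earth-centred, Earth-fixed X, Y, Z (m) on the WGS-84 ellipsoid."""
    lat, lon = np.radians(lat_deg), np.radians(lon_deg)
    n = A_WGS84 / np.sqrt(1.0 - E2_WGS84 * np.sin(lat) ** 2)  # prime vertical radius
    x = (n + h) * np.cos(lat) * np.cos(lon)
    y = (n + h) * np.cos(lat) * np.sin(lon)
    z = (n * (1.0 - E2_WGS84) + h) * np.sin(lat)
    return np.stack([x, y, z], axis=-1)

def fit_affine(src, dst):
    """Least-squares affine transform with dst ≈ src @ M.T + t.

    src, dst: (K, 3) matched reference points, K >= 4 and not coplanar.
    """
    src, dst = np.asarray(src, dtype=float), np.asarray(dst, dtype=float)
    if len(src) < 4:
        raise ValueError("need at least four reference points")
    design = np.hstack([src, np.ones((len(src), 1))])  # rows [x, y, z, 1]
    params, *_ = np.linalg.lstsq(design, dst, rcond=None)  # shape (4, 3)
    M, t = params[:3].T, params[3]
    return M, t
```

Once `M` and `t` are fitted from the reference points, every LiDAR point `p` in the local frame maps to `M @ p + t` in the target frame.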

3. Results

The developed procedure for integrating multiple roadside LiDAR sensor datasets was evaluated with LiDAR data collected by two Velodyne VLP-16 LiDAR sensors at the intersection of S Boulder Hwy and E Texas Ave, as shown in Figure 6. The data were collected to study the behaviors of vehicles/pedestrians/bicycles/skateboarders at the site, and the two LiDAR datasets needed to be integrated before the trajectories of the road users were extracted.

The VLP-16 LiDAR sensor is a cost-effective 3D LiDAR unit. It creates a 360° 3D point cloud with 16 laser beams. The unit rapidly spins to scan the surrounding environment, with a detection range of 100 m (328 ft) and a rotation frequency of 5–20 Hz. It can generate 600,000 3D points per second. The sensor’s low power consumption (~8 W), light weight (830 g), compact footprint, dual-return capability, and reasonable price make it well suited to roadside deployment for connected vehicles and other traffic engineering applications. Each VLP-16 reports the locations (x, y, and z) of the cloud points in a local coordinate system with the sensor at the origin. The rotation frequency was set to 10 Hz during data collection. One frame from each LiDAR sensor dataset was extracted for the LiDAR data integration/registration.

For Sensor 1, the boundaries for the ground points extraction were defined as x: −35~35 m, y: −20~10 m, and z: −2~1 m. For Sensor 2, the boundaries for the ground points extraction were defined as x: −20~10 m, y: −35~35 m, and z: −3~2 m. The boundaries need to be selected with consideration of the sensor location, surrounding environment, and terrain. Two reference points at building corners were selected in this test.

3.1. Performance of GA Optimization

The computer was configured with an Intel i7-3740QM CPU @ 2.70 GHz and 16 GB of memory (RAM). Because of the distance between the LiDAR sensors and the different trees and obstacles surrounding them, it is difficult to evaluate the results quantitatively, so this paper assessed the accuracy by visualizing the registered LiDAR data and checking the points of the building surface and of a parked vehicle.

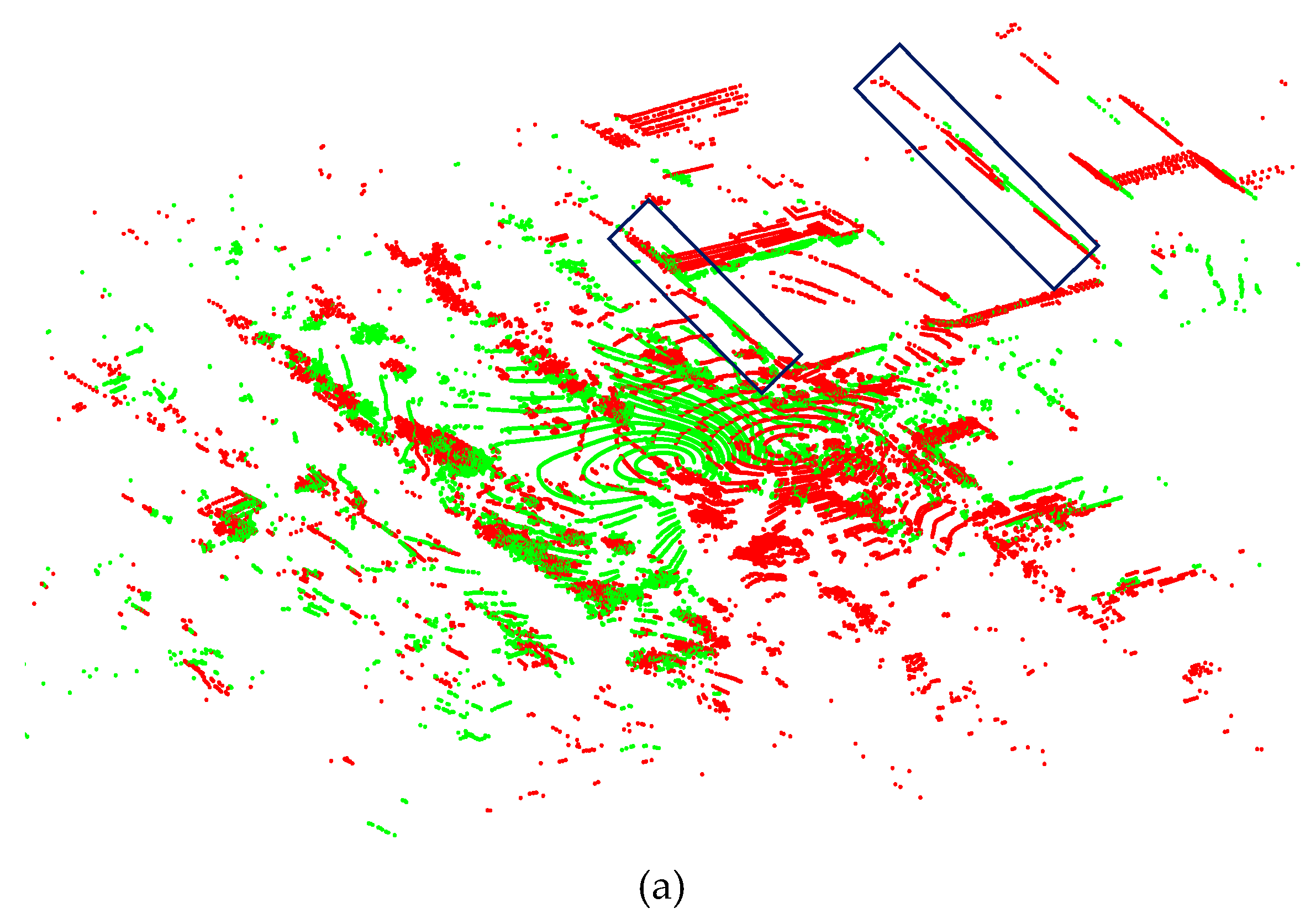

Figure 7 visualizes the integrated data of the two LiDAR sensors with GA optimization. Figure 7a shows minor offsets along the x-axis and y-axis of the building edges in the box. Figure 7b shows a mismatch along the z-axis of the LiDAR points of the building edges surrounded by the box.

3.2. Performance of HC Optimization

The initial parameters used as the input of HC were estimated with the visualization tool, and the transformation parameters were then optimized by the HC method with the objective function. The computation time was 34 s. Figure 8 presents the registration results of HC.

The registration converted the Sensor 2 data into the Sensor 1 coordinate system with a relatively accurate match. The boxes in Figure 8a highlight the exact matches of the building edges in 2D, and the box in Figure 8b highlights the match of the building edges in 3D. Note that some offsets along the z-axis are expected, since the two LiDARs were mounted at different heights.

Based on the tests with the GA and HC methods, HC provides a more accurate registration of the two datasets. Although GA is a global optimization method, it gives only an estimate of the optimal solution: it does not guarantee that the minimum of the objective function is found, and each run produced different parameters [40]. On the other hand, although HC is a local optimization algorithm, it yields accurate optimization results when started from the initial input estimated with the visualization tool. The integrated point clouds can generate vehicle trajectories with higher accuracy. An example of the integrated vehicle trajectories can be found at https://youtu.be/s5MT1UrabeQ.

3.3. Comparison to ICP

The RGP method was also compared with an existing method, a revised ICP algorithm [35]. To evaluate the results of the two methods quantitatively, the LiDAR points were first mapped from the local coordinate system to the World Geodetic System (WGS-84). Figure 9 shows an example of integrated sensor data at an intersection in Reno. Here, the locations of infrastructure features (such as building corners) in Google Earth were treated as the “ground truth” data.

The distance (D) between the LiDAR data and the corresponding infrastructure feature (such as a building corner) was checked manually by the authors. The data were collected at four sites: N McCarran @ Evans Ave, S Boulder Hwy @ E Texas Ave, I-80 @ Keystone Avenue, and I-80 @ N McCarran. For each site, an average D was calculated from 10 randomly selected points. The results of the RGP and the revised ICP are summarized in Table 2, which shows that the RGP produces a smaller error than the revised ICP at each site.