Dual and Single Polarized SAR Image Classification Using Compact Convolutional Neural Networks

Abstract

1. Introduction

2. Related Work

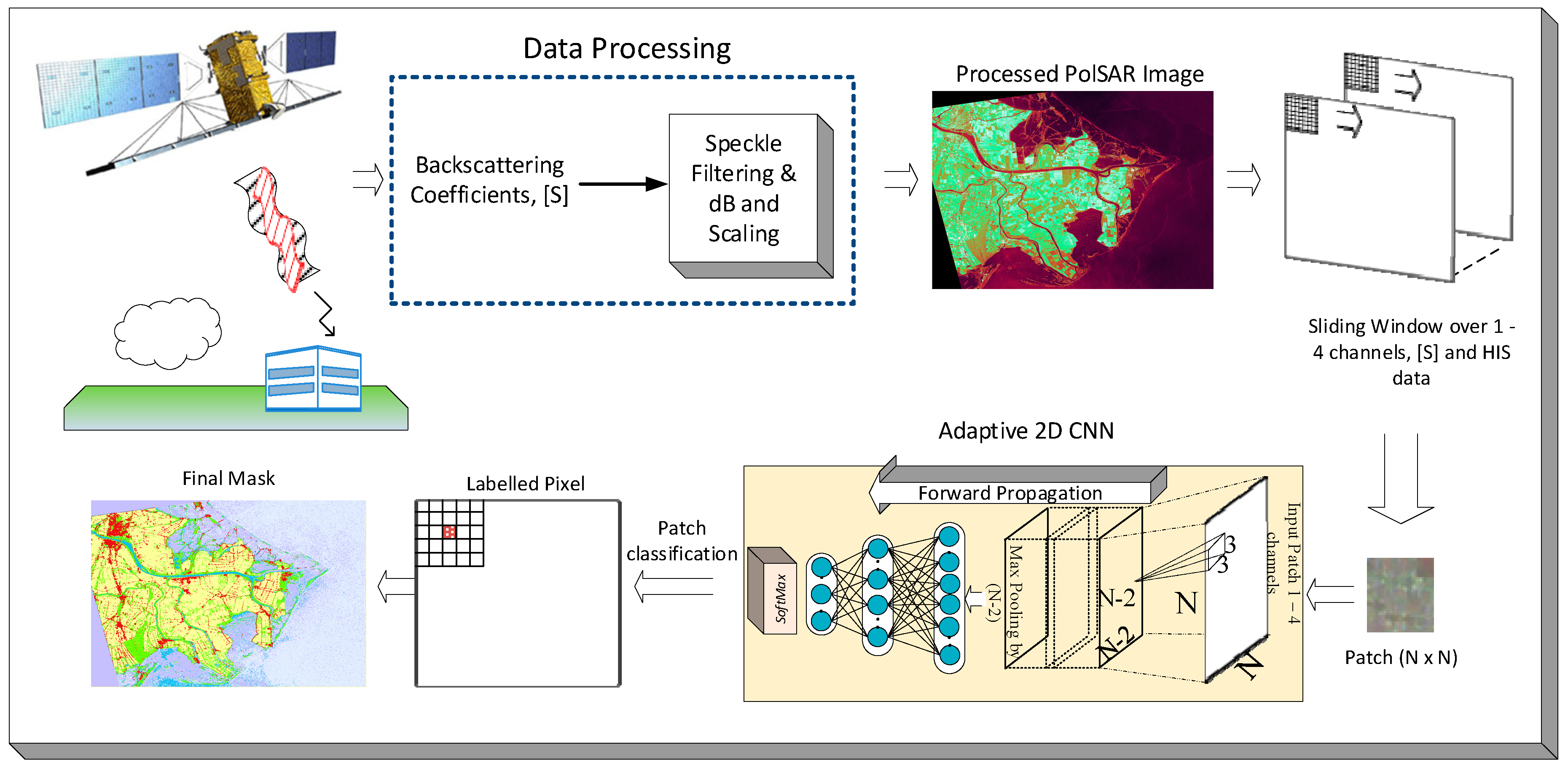

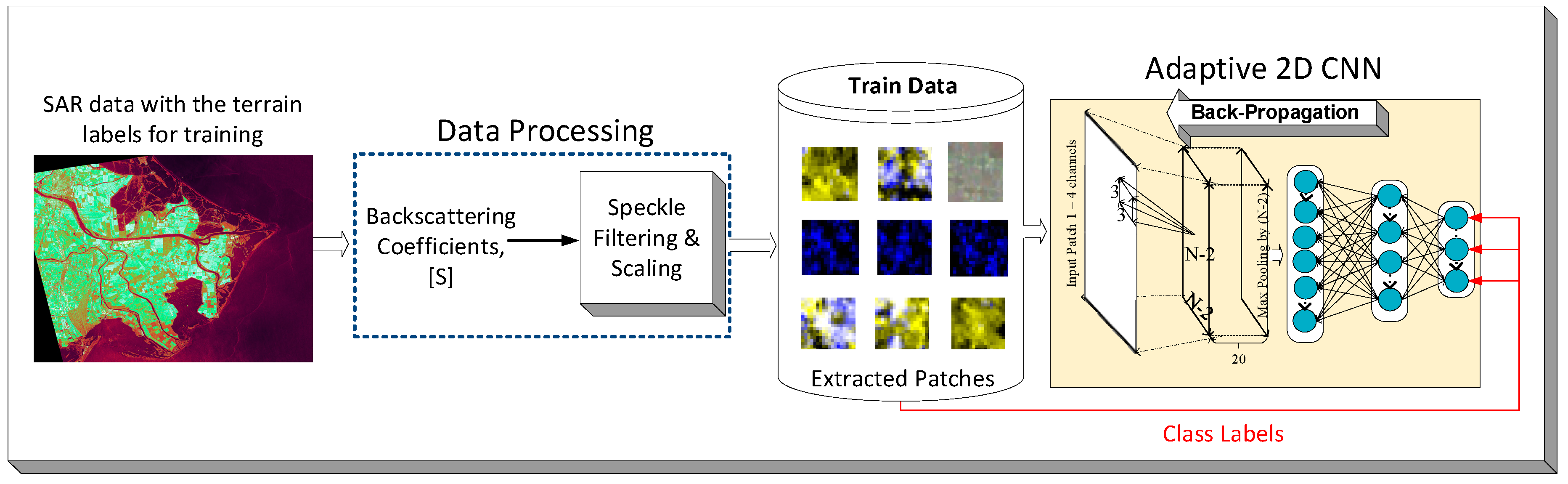

3. Methodology

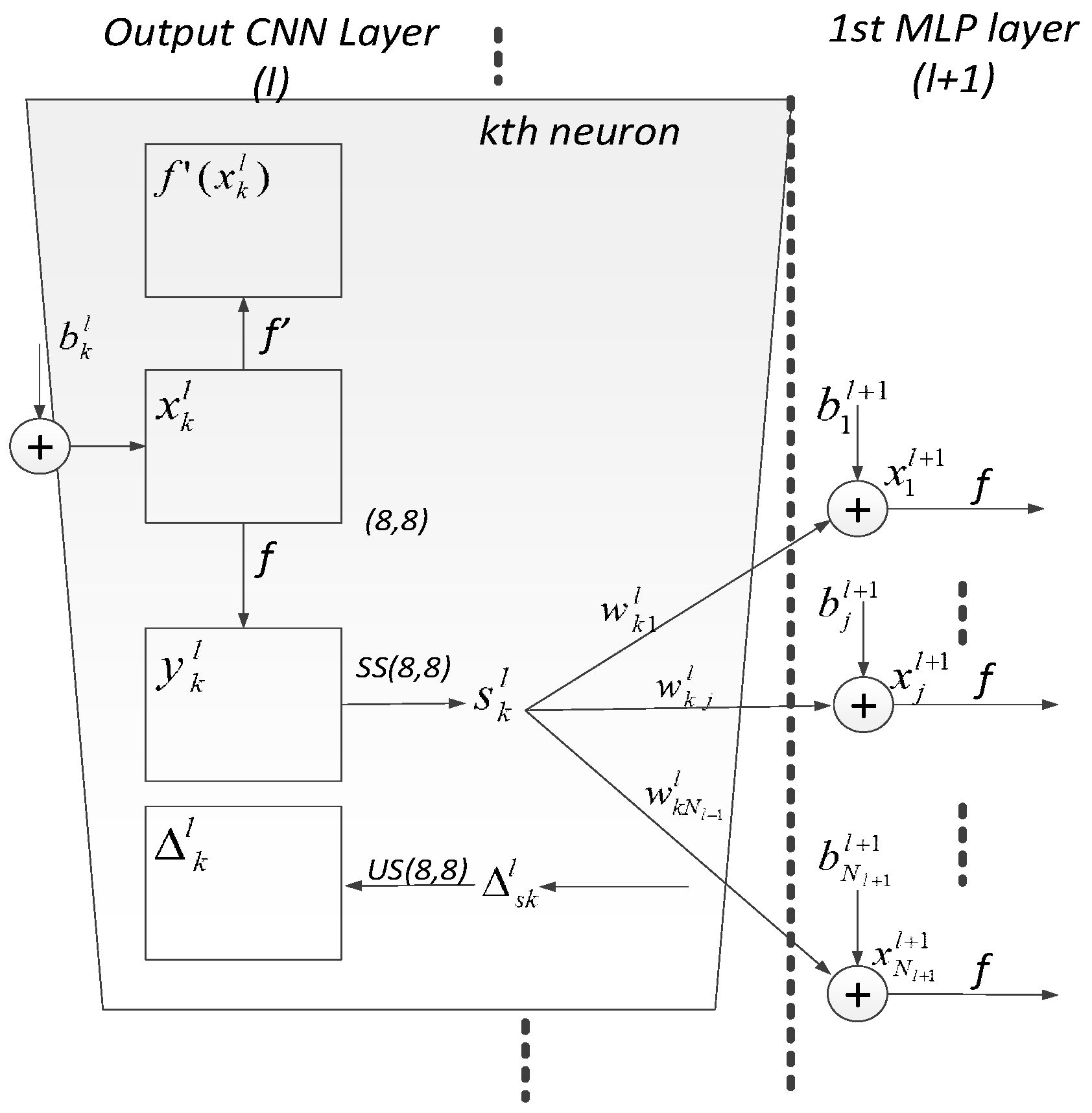

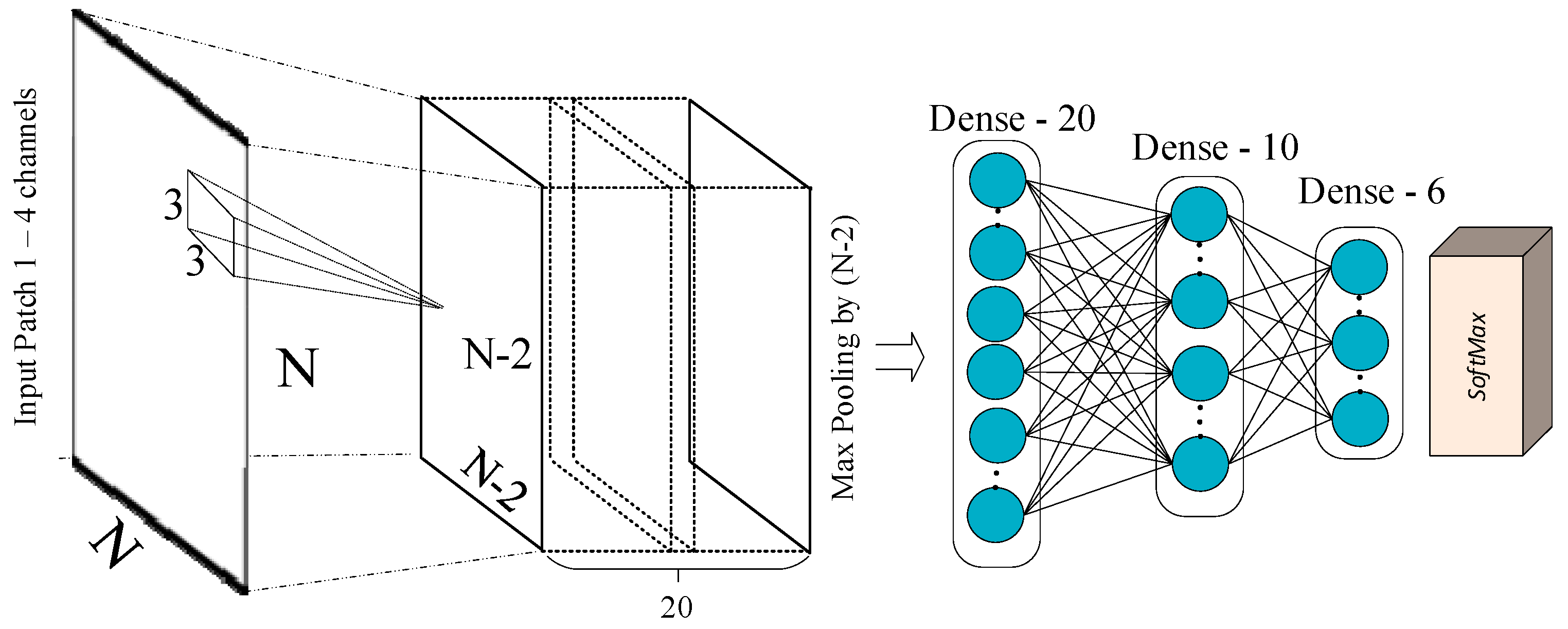

3.1. Adaptive CNN Implementation

3.2. Back-Propagation for Adaptive CNNs

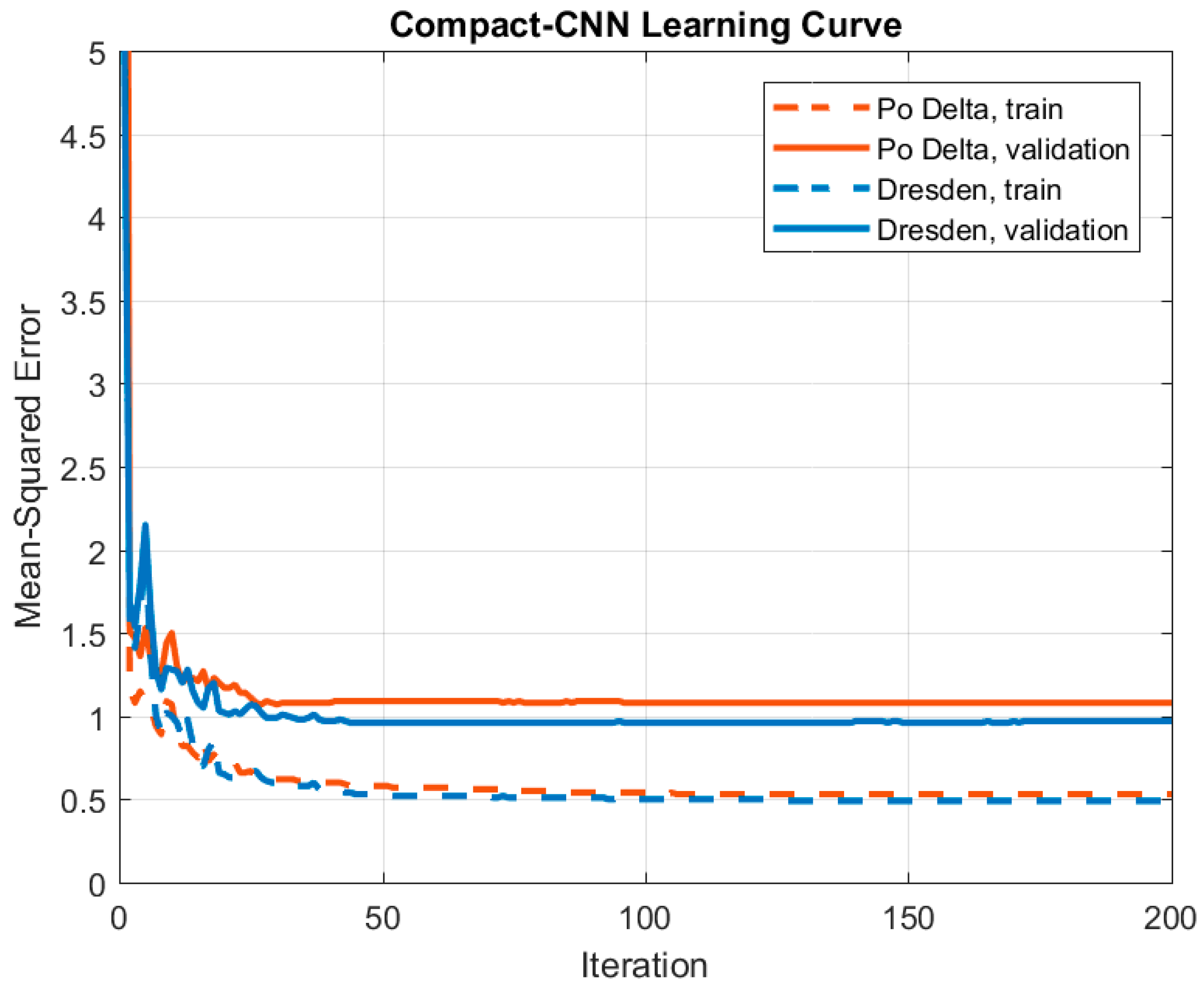

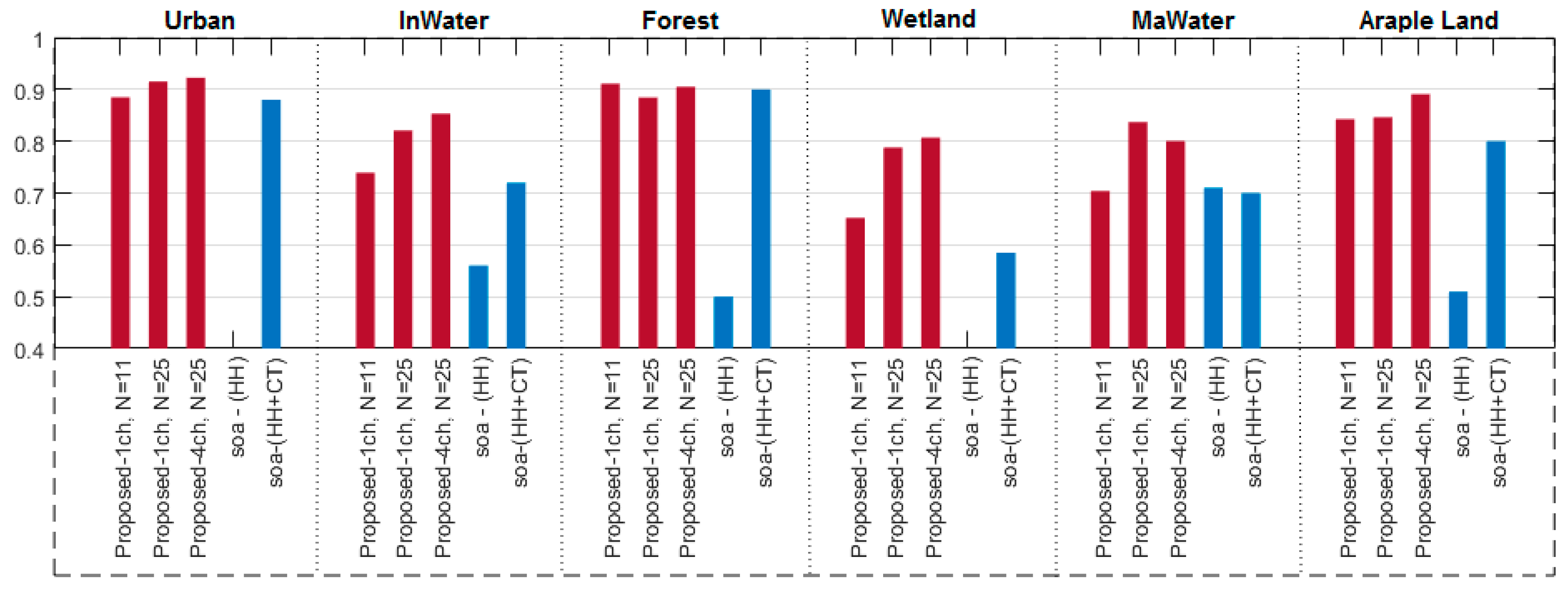

4. Experimental Results

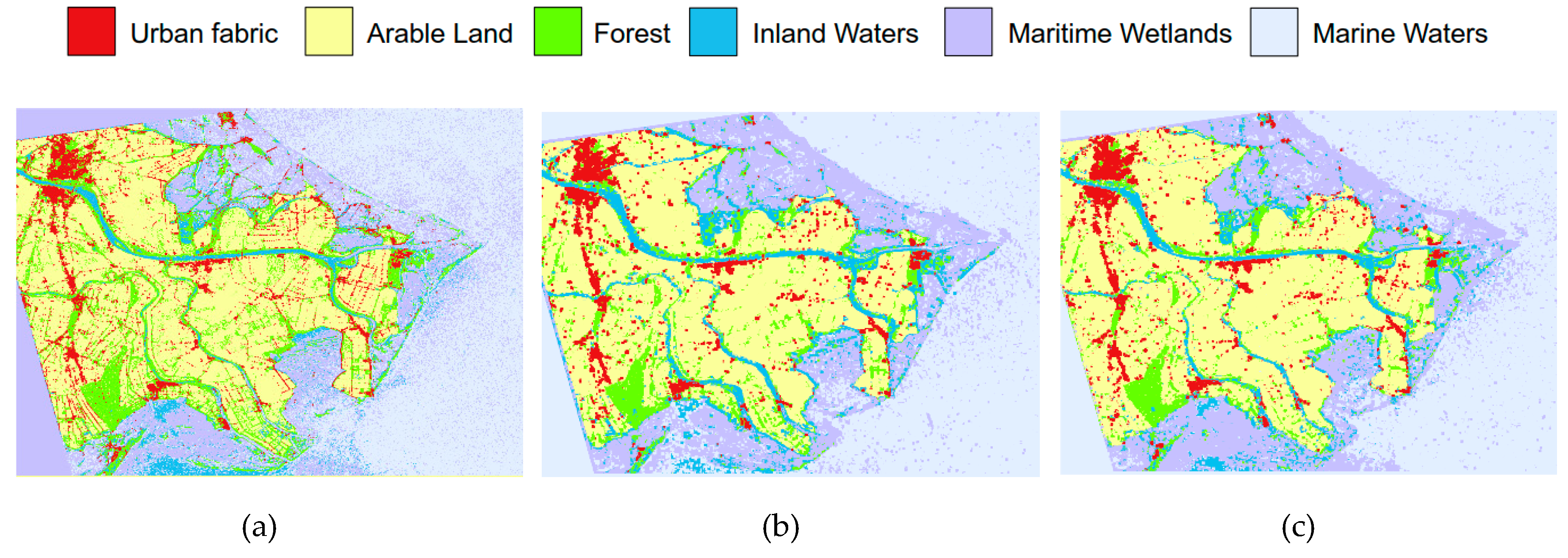

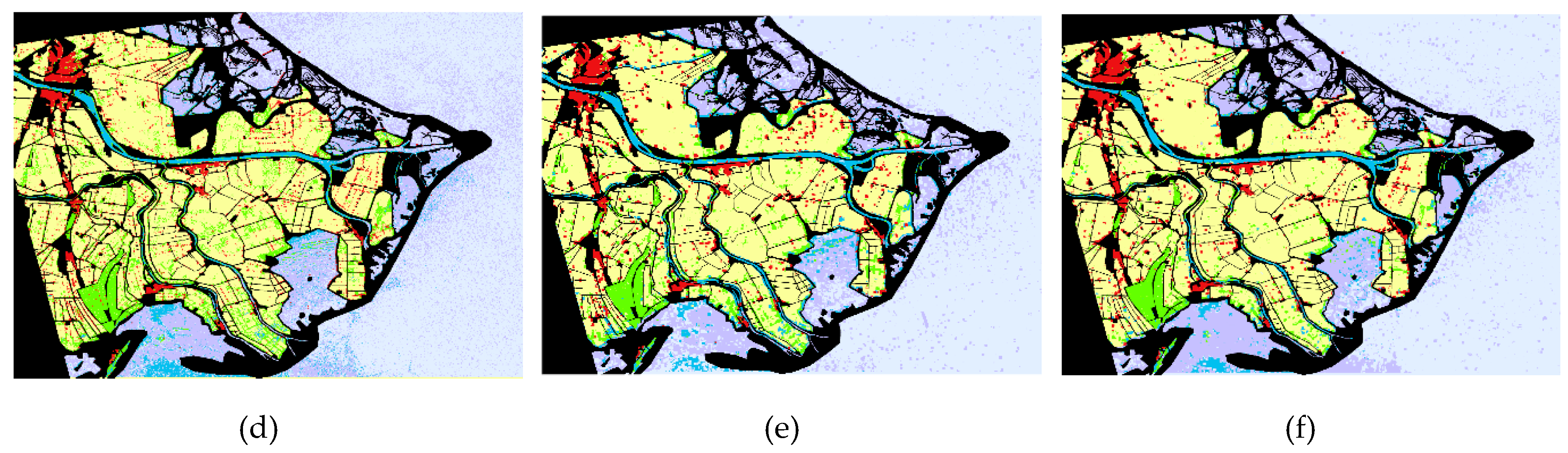

4.1. Benchmark SAR Data

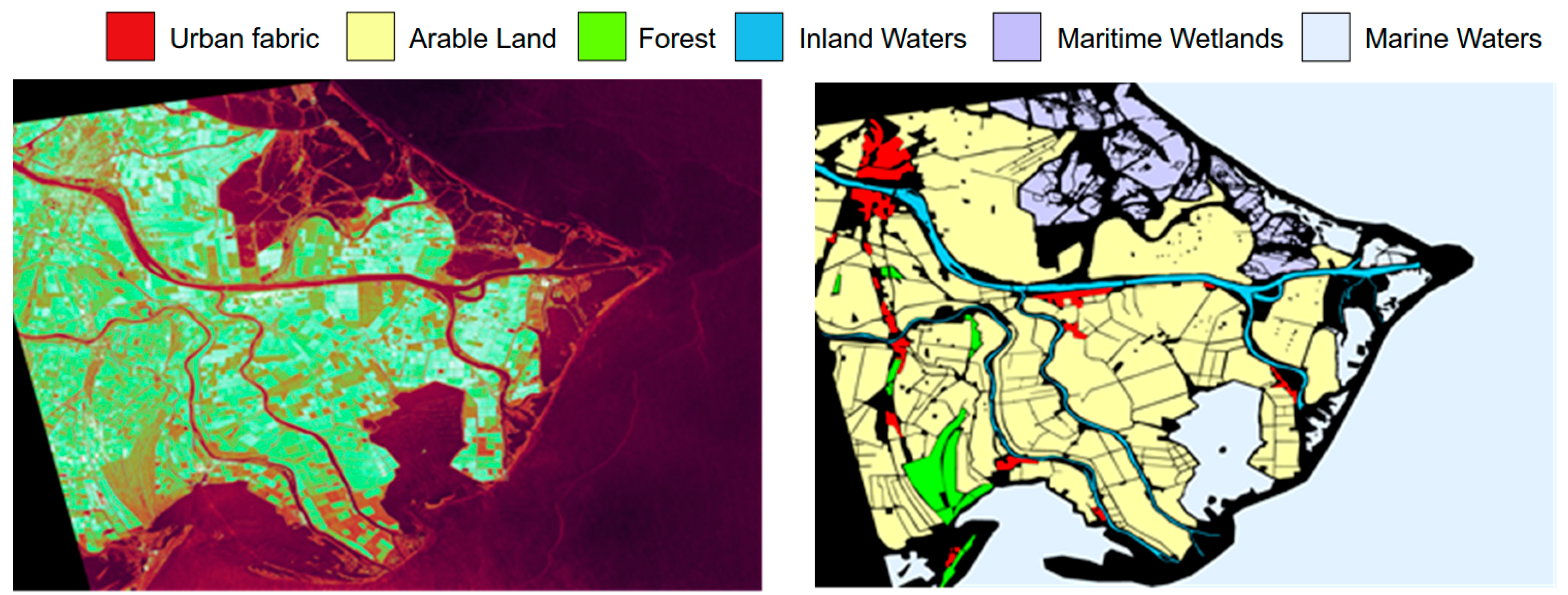

4.1.1. Po Delta, COSMO-SkyMed, and X-Band

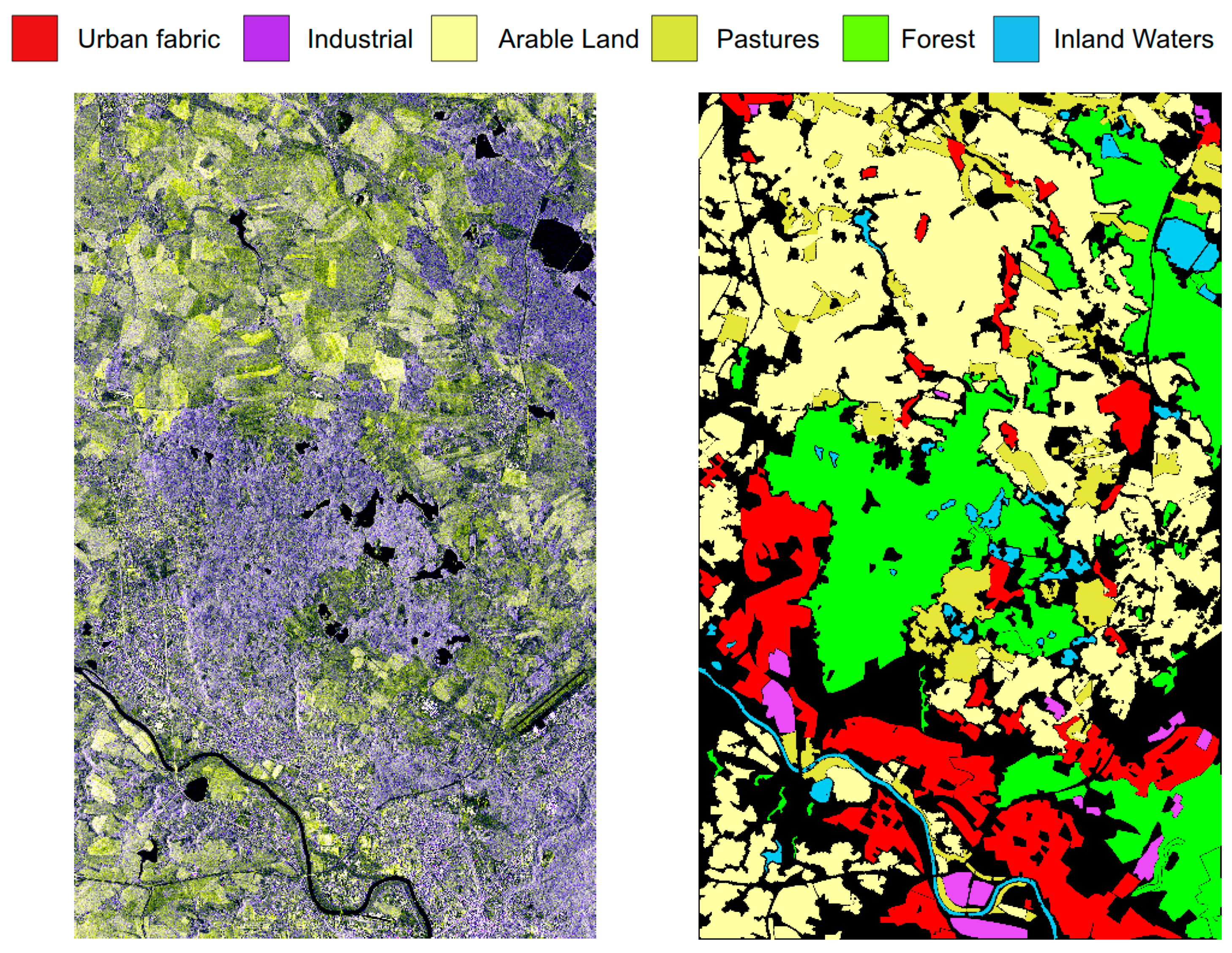

4.1.2. Dresden, TerraSAR-X, and X-Band

4.2. Experimental Setup

4.3. Results and Performance Evaluations

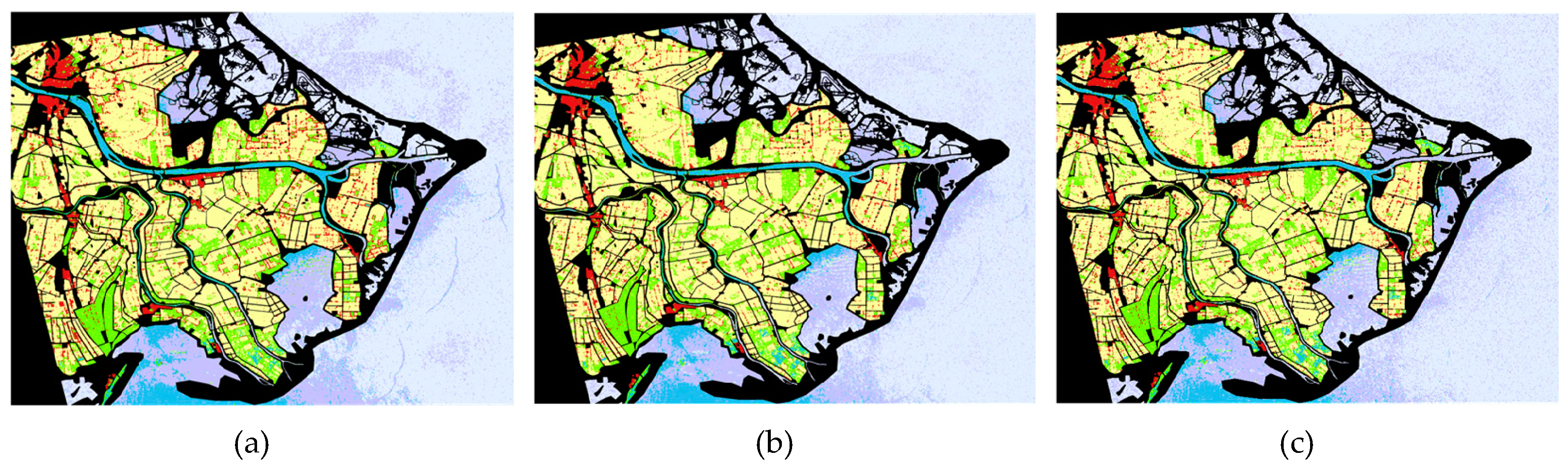

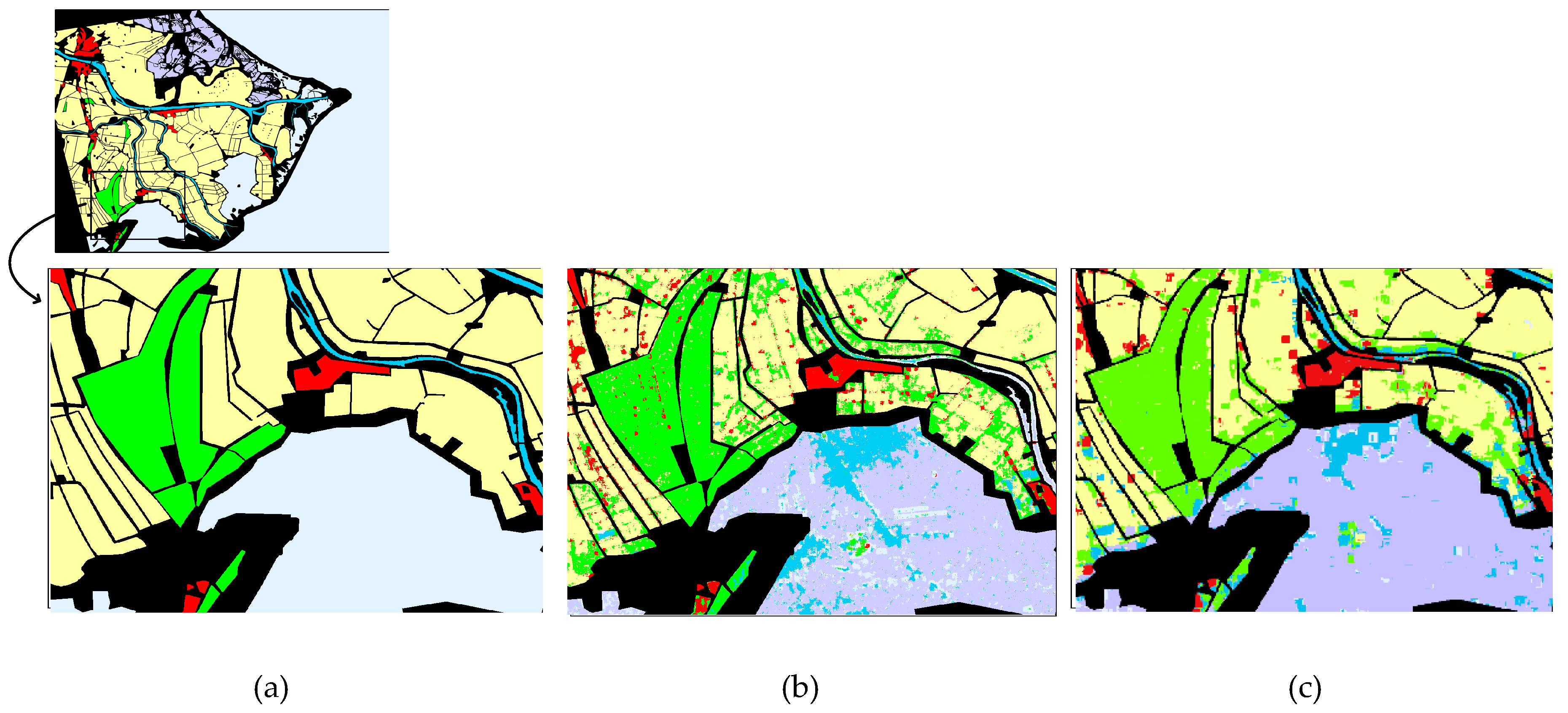

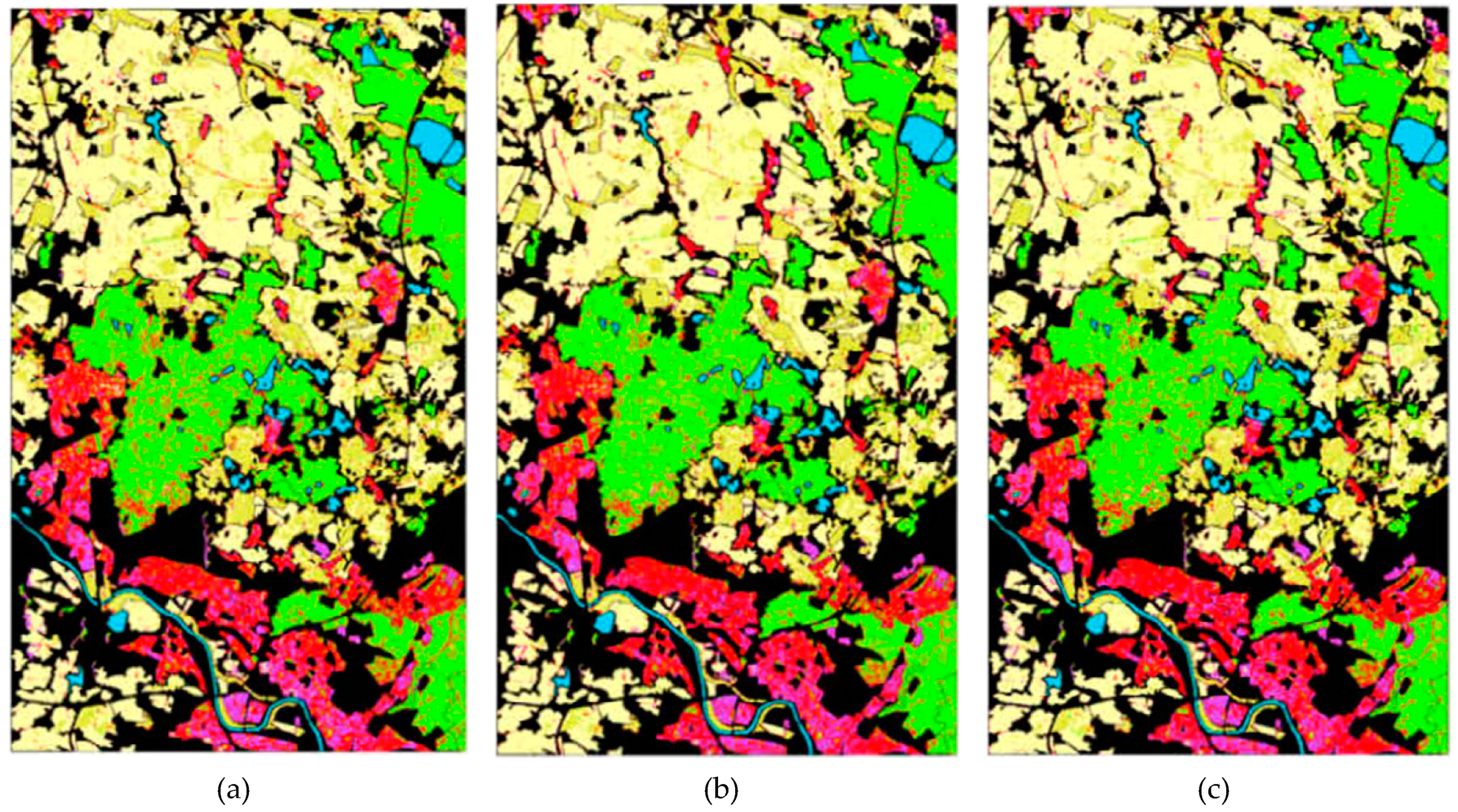

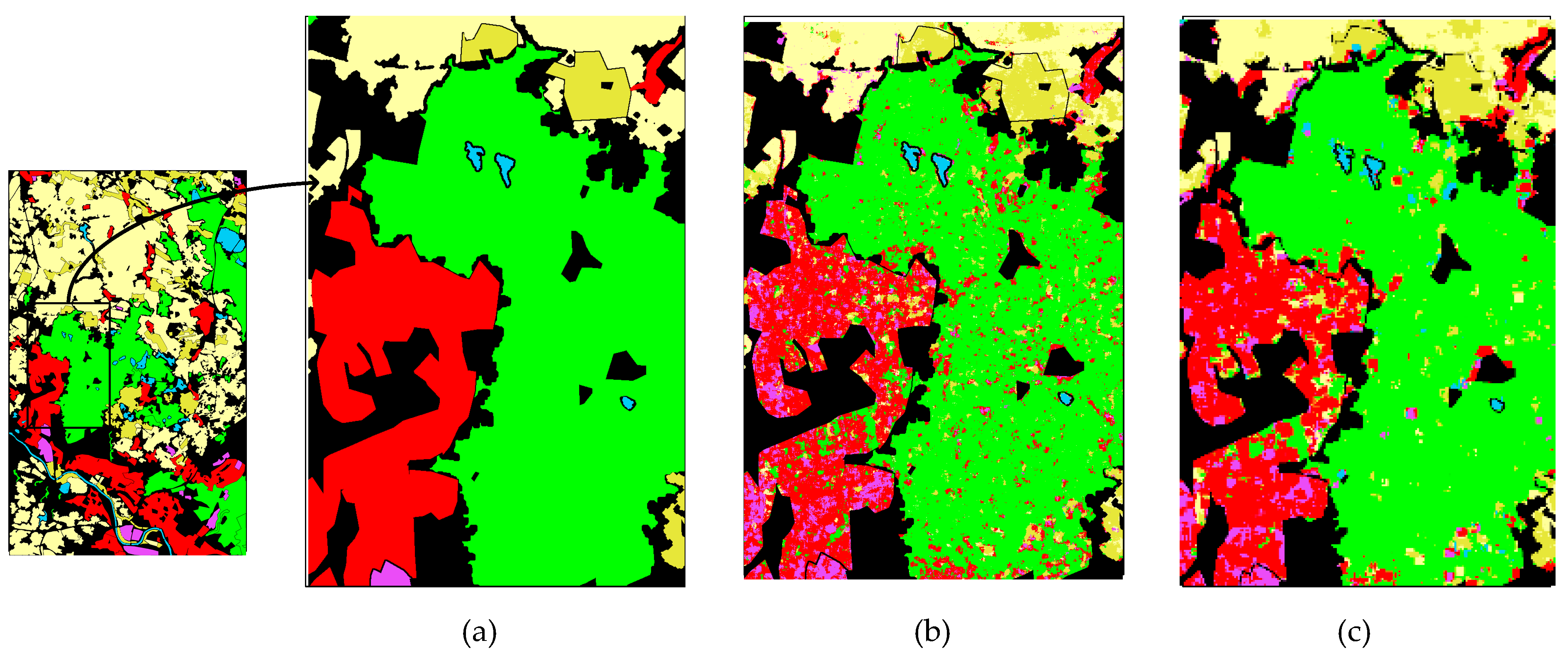

4.3.1. Performance Evaluations over Po Delta Data

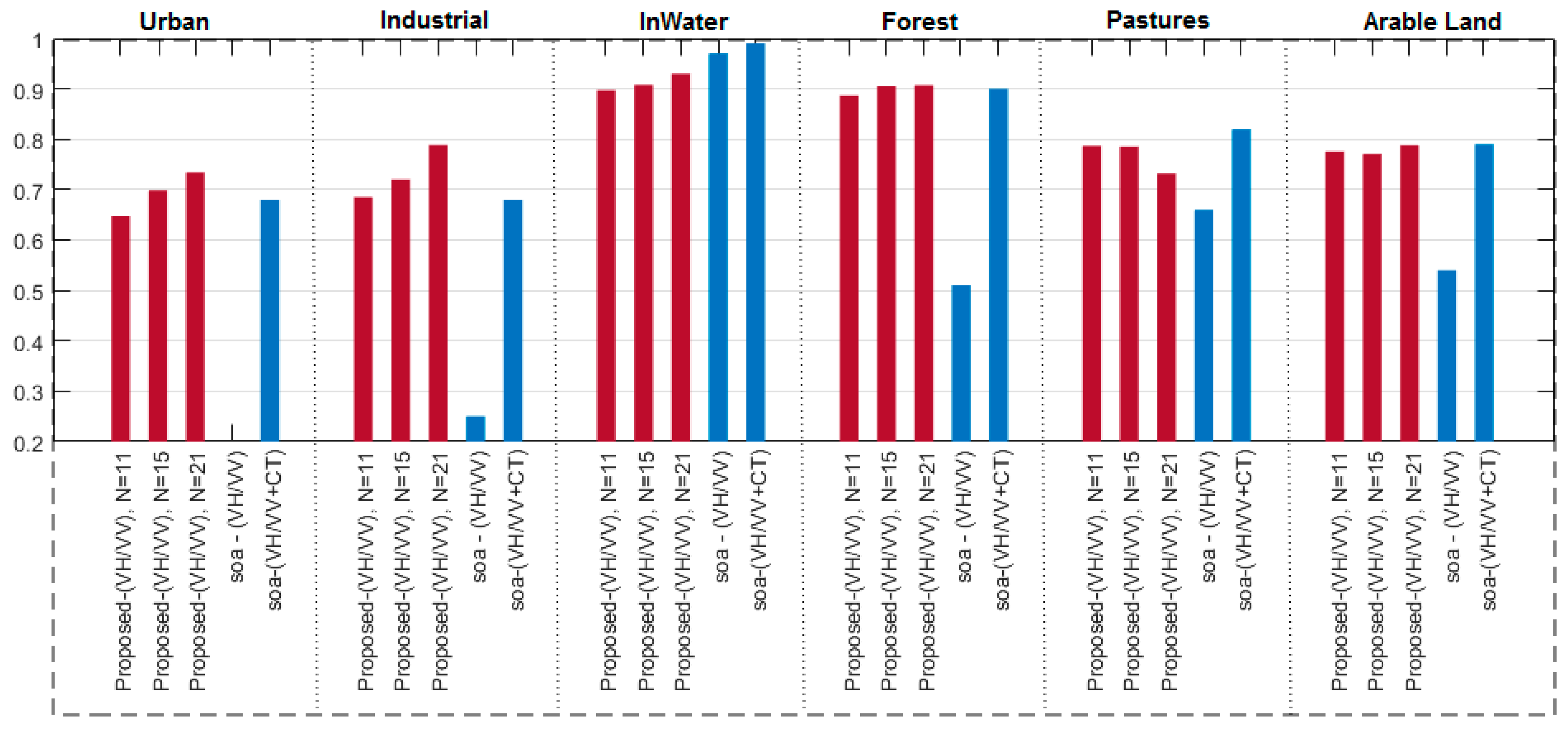

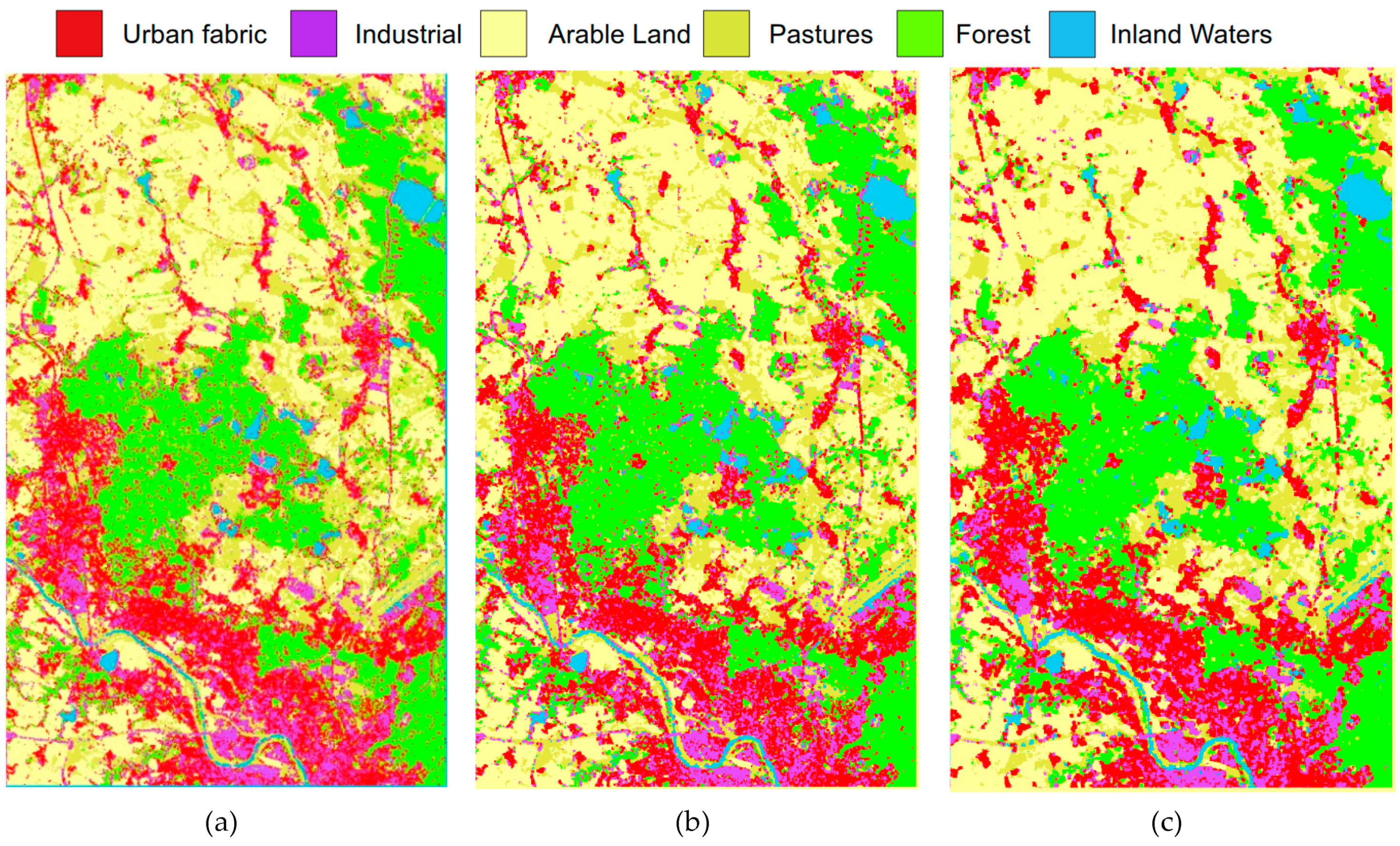

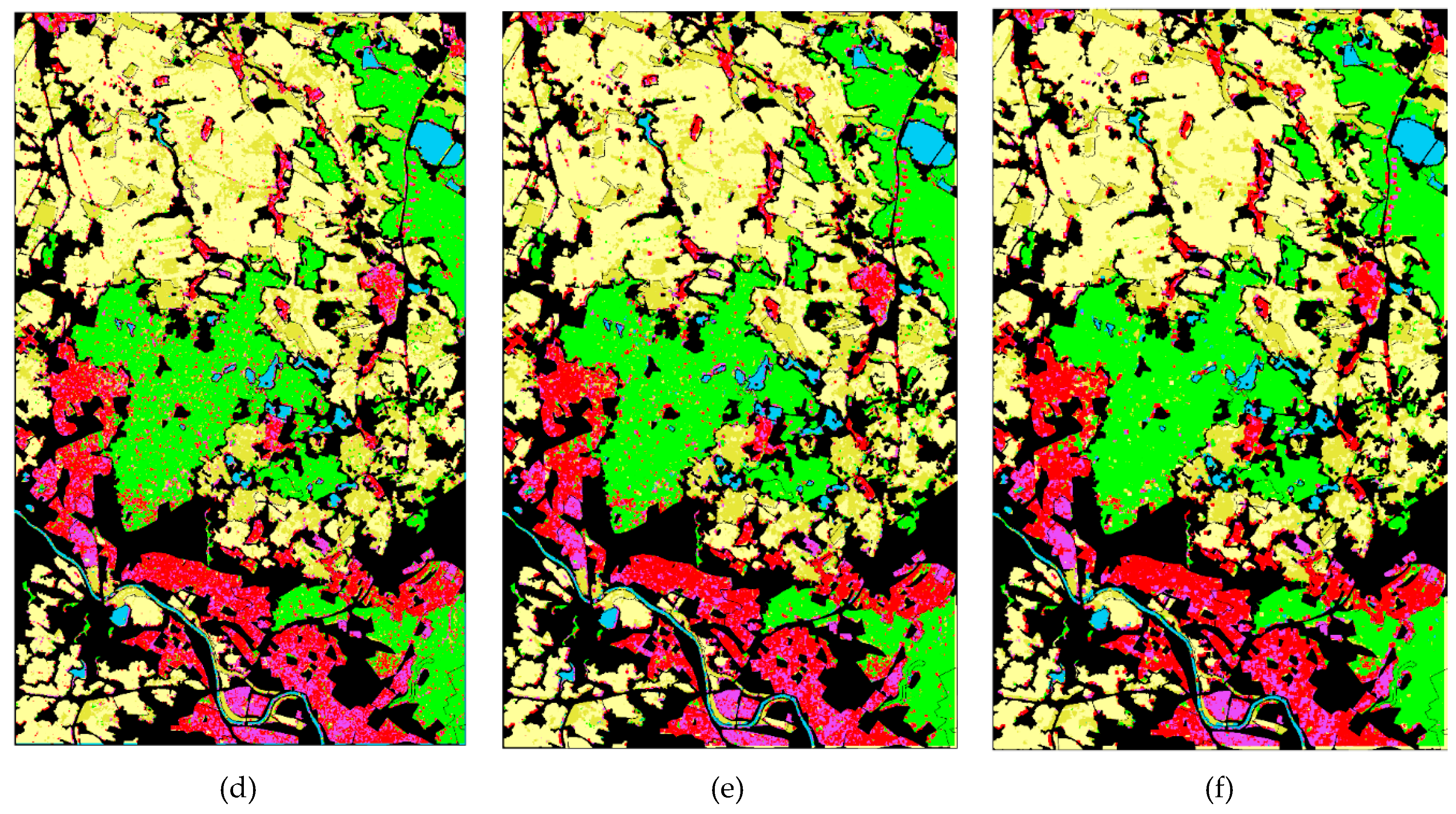

4.3.2. Performance Evaluations over Dresden Data

4.3.3. Deep versus Compact CNNs

4.4. Sensitivity Analysis on Hyper-Parameters

4.5. Computational Complexity

5. Conclusions and Future Works

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

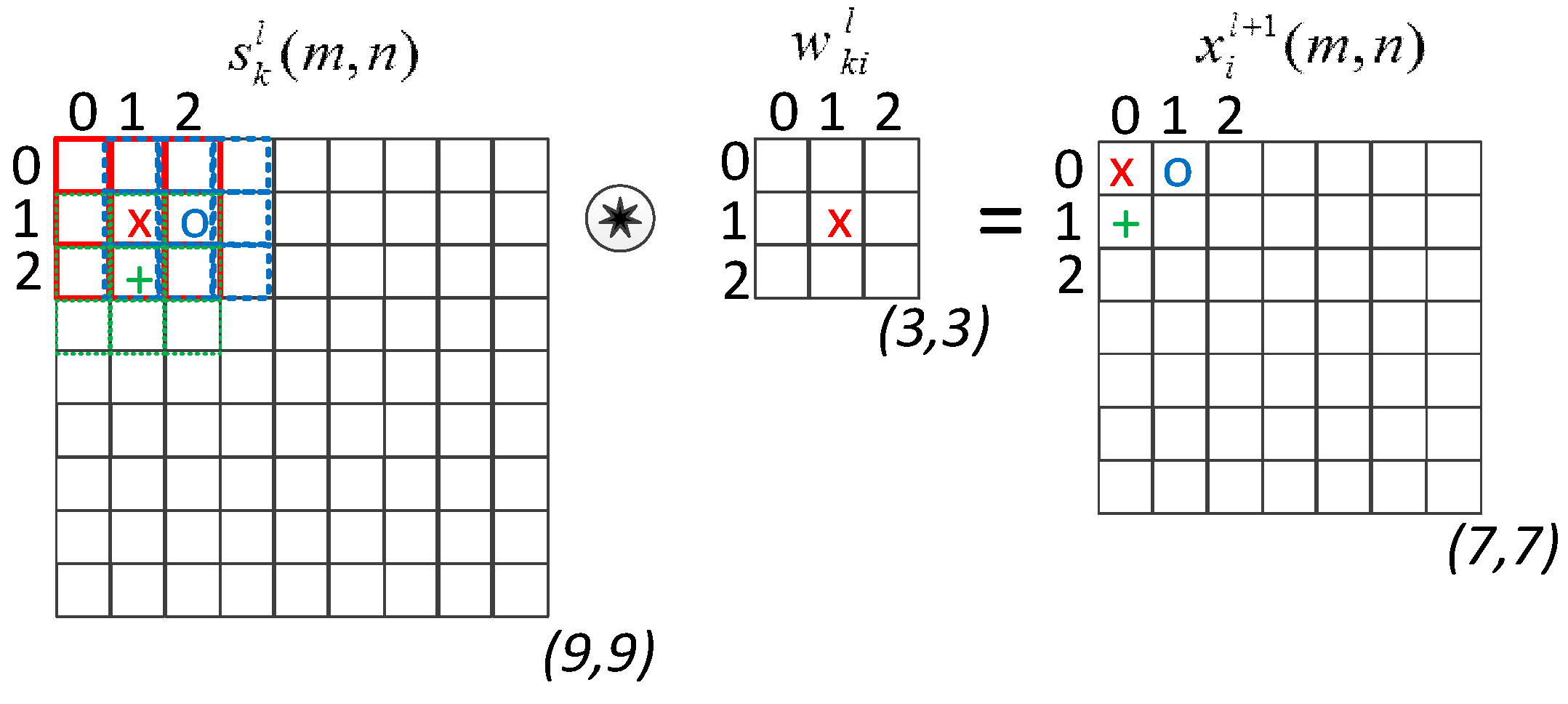

Appendix A.1. Adaptive CNN Implementation

Appendix A.2. Back-Propagation for Adaptive CNNs

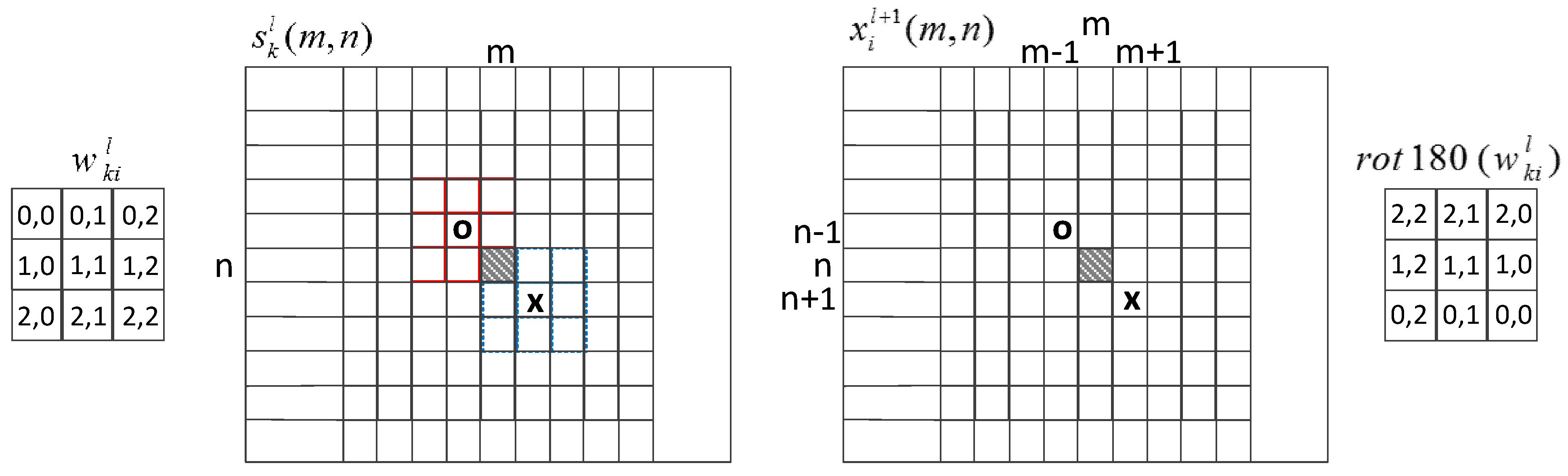

Appendix A.2.1. Inter-BP among CNN Layers

Appendix A.2.2. Intra-BP within a CNN Neuron

Appendix A.2.3. BP from the First MLP Layer to the Last Convolutional Layer

Appendix A.2.4. Computation of the Weight (Kernel) and Bias Sensitivities

- (1) Initialize the weights (kernels) and biases of the CNN (e.g., randomly from U(−0.5, 0.5)).
- (2) For each BP iteration (t = 1:iterNo) DO:
  - For each patch, p, in the training set, DO:
    - (i) FP: Forward propagate from the input layer to the output layer to find the output of each neuron at each layer, l ∈ [1, L].
    - (ii) BP: Compute the delta error at the output (MLP) layer and back-propagate it to the first hidden CNN layer to compute the delta errors of the neurons in every layer in between.
    - (iii) PP: Post-process the delta errors to obtain the weight and bias sensitivities using Equations (A14) and (A15).
    - (iv) Update: Accumulate the sensitivities computed in step (iii), scale them by the learning factor, ε, and update the weights and biases as follows:

      $$w_{ik}^{l-1}(t+1) = w_{ik}^{l-1}(t) - \varepsilon \frac{\partial E}{\partial w_{ik}^{l-1}}, \qquad b_{k}^{l}(t+1) = b_{k}^{l}(t) - \varepsilon \frac{\partial E}{\partial b_{k}^{l}}$$
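
To make the loop structure concrete, here is a minimal NumPy sketch of the same per-patch schedule: initialize from U(−0.5, 0.5), then run FP, BP, PP, and Update for every training patch in every iteration. It is a toy two-layer fully connected network with tanh activations and a squared-error loss, both of which are assumptions; it does not implement the convolutional layers or Equations (A14) and (A15), and `train_per_patch` is a hypothetical name. It updates after every patch; accumulating the sensitivities over several patches before updating would give a mini-batch variant.

```python
import numpy as np


def train_per_patch(X, T, hidden=8, iter_no=50, eps=0.05, seed=0):
    """Toy two-layer network trained with a per-patch FP/BP/PP/Update loop.

    X: (P, d) flattened patches; T: (P, k) targets in (-1, 1).
    Assumptions: tanh activations and squared-error loss (not the paper's CNN).
    """
    rng = np.random.default_rng(seed)
    d, k = X.shape[1], T.shape[1]
    # Initialize weights and biases from U(-0.5, 0.5).
    W1 = rng.uniform(-0.5, 0.5, (d, hidden))
    b1 = rng.uniform(-0.5, 0.5, hidden)
    W2 = rng.uniform(-0.5, 0.5, (hidden, k))
    b2 = rng.uniform(-0.5, 0.5, k)
    losses = []
    for _ in range(iter_no):                  # for each BP iteration
        total = 0.0
        for x, target in zip(X, T):           # for each patch in the train set
            # FP: output of every neuron, layer by layer.
            h = np.tanh(x @ W1 + b1)
            y = np.tanh(h @ W2 + b2)
            total += 0.5 * np.sum((y - target) ** 2)
            # BP: delta error at the output, back-propagated to the hidden layer.
            delta2 = (y - target) * (1.0 - y ** 2)
            delta1 = (delta2 @ W2.T) * (1.0 - h ** 2)
            # PP + Update: sensitivities scaled by the learning factor eps.
            W2 -= eps * np.outer(h, delta2)
            b2 -= eps * delta2
            W1 -= eps * np.outer(x, delta1)
            b1 -= eps * delta1
        losses.append(total / len(X))         # mean loss over the epoch
    return losses


# Usage on two separable toy clusters of 4-dimensional "patches".
rng = np.random.default_rng(1)
X = np.vstack([rng.normal(-1, 0.5, (20, 4)), rng.normal(1, 0.5, (20, 4))])
labels = np.array([0] * 20 + [1] * 20)
T = np.where(np.eye(2)[labels] == 1, 0.9, -0.9)  # tanh-friendly targets
losses = train_per_patch(X, T)
print(losses[0], losses[-1])
```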


| Name | System and Band | Date | Incident Angle | Polarization Mode |
|---|---|---|---|---|
| Po Delta | COSMO-SkyMed (X-band) | September 2007 | 30° | Single |
| Dresden | TerraSAR-X (X-band) | February 2008 | 41–42° | Dual |

| Name | Dimensions | # Classes | Training Samples per Class | Total Samples in Ground Truth Data (GTD) |
|---|---|---|---|---|
| Po Delta | | 6 | 2000 | 612,000 |
| Dresden | | 6 | 1000 | 606,000 |
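
The table lists a fixed number of training samples per class (2000 for Po Delta, 1000 for Dresden) drawn from the ground-truth data. A minimal sketch of such per-class sampling, assuming the ground truth is a 2-D integer label map in which 0 marks unlabeled pixels (a hypothetical convention) and that pixels are drawn uniformly at random without replacement; the function name `sample_training_pixels` is illustrative only:

```python
import numpy as np


def sample_training_pixels(gt, n_per_class, rng, ignore_label=0):
    """Draw n_per_class random (row, col) positions from each labeled class.

    gt: 2-D integer label map; ignore_label marks unlabeled pixels (assumption).
    """
    coords, labels = [], []
    for c in np.unique(gt):
        if c == ignore_label:
            continue
        rows, cols = np.nonzero(gt == c)
        # Sample without replacement so no pixel is used twice.
        pick = rng.choice(rows.size, size=n_per_class, replace=False)
        coords.append(np.stack([rows[pick], cols[pick]], axis=1))
        labels.append(np.full(n_per_class, c))
    return np.concatenate(coords), np.concatenate(labels)


# Usage on a toy 200 x 200 map with 6 classes plus an "unlabeled" value 0.
rng = np.random.default_rng(0)
gt = rng.integers(0, 7, size=(200, 200))
xy, y = sample_training_pixels(gt, n_per_class=1000, rng=rng)
print(xy.shape, np.bincount(y))
```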

| Window Size | Po Delta (COSMO-SkyMed), 1-channel | Po Delta (COSMO-SkyMed), 4-channels |
|---|---|---|
| 5 × 5 | 0.7098 | 0.708 |
| 7 × 7 | 0.7482 | 0.7501 |
| 9 × 9 | 0.7698 | 0.7668 |
| 11 × 11 | 0.789 | 0.7838 |
| 13 × 13 | 0.8075 | 0.8037 |
| 15 × 15 | 0.8147 | 0.8167 |
| 17 × 17 | 0.8276 | 0.83 |
| 19 × 19 | 0.8387 | 0.8442 |
| 21 × 21 | 0.8404 | 0.8537 |
| 23 × 23 | 0.848 | 0.8539 |
| 25 × 25 | 0.8487 | 0.8632 |
| 27 × 27 | 0.8533 | 0.8615 |
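
The window sizes in this table presumably refer to the N × N neighborhood around each pixel that is fed to the network. A minimal NumPy sketch of how such windows could be cut out, assuming a (rows, cols, channels) image array and reflect padding at the image borders; the function name `extract_patches` and the padding choice are assumptions, not taken from the paper:

```python
import numpy as np


def extract_patches(image, coords, window):
    """Return a window x window patch centered on each (row, col) in coords.

    image: array of shape (H, W, C). Borders use reflect padding (assumption).
    """
    if window % 2 == 0:
        raise ValueError("window size must be odd so the patch has a center pixel")
    half = window // 2
    padded = np.pad(image, ((half, half), (half, half), (0, 0)), mode="reflect")
    # Original pixel (r, c) sits at (r + half, c + half) in the padded array,
    # so the patch centered on it starts at (r, c) in padded coordinates.
    return np.stack([padded[r:r + window, c:c + window, :] for r, c in coords])


# Usage: a random 4-channel image, 25 x 25 windows at a corner, the middle, and the opposite corner.
img = np.random.default_rng(0).random((100, 120, 4))
patches = extract_patches(img, [(0, 0), (50, 60), (99, 119)], window=25)
print(patches.shape)  # (3, 25, 25, 4)
```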

| True \ Predicted | Urban | InWater | Forest | Wetland | MaWater | Crop | Total |
|---|---|---|---|---|---|---|---|
| Urban | 92,264 | 607 | 1322 | 54 | 0 | 5753 | 100,000 |
| InWater | 931 | 85,308 | 3824 | 6781 | 1210 | 1946 | 100,000 |
| Forest | 934 | 2581 | 90,507 | 909 | 186 | 4883 | 100,000 |
| Wetland | 166 | 6153 | 1157 | 80,683 | 11,744 | 97 | 100,000 |
| MaWater | 48 | 2196 | 166 | 17,502 | 80,067 | 21 | 100,000 |
| Crop | 4680 | 1055 | 4875 | 253 | 52 | 89,085 | 100,000 |
| Total | 99,023 | 97,900 | 101,851 | 106,182 | 93,259 | 101,785 | 517,914 |
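
Overall accuracy and per-class precision, recall, and F1 score follow directly from a confusion matrix. The short sketch below recomputes them from the Po Delta matrix above (rows are true classes, columns are predicted classes). The diagonal sum is 517,914, the value in the bottom-right cell, and 517,914 / 600,000 ≈ 0.8632, which coincides with the 25 × 25, 4-channel entry of the window-size table; the per-class values reproduce the "Prop." columns of the Po Delta precision/recall table.

```python
import numpy as np

# Po Delta confusion matrix (rows: true class, columns: predicted class).
classes = ["Urban", "InWater", "Forest", "Wetland", "MaWater", "Crop"]
cm = np.array([
    [92264,   607,  1322,    54,     0,  5753],
    [  931, 85308,  3824,  6781,  1210,  1946],
    [  934,  2581, 90507,   909,   186,  4883],
    [  166,  6153,  1157, 80683, 11744,    97],
    [   48,  2196,   166, 17502, 80067,    21],
    [ 4680,  1055,  4875,   253,    52, 89085],
])

correct = np.trace(cm)                      # correctly classified pixels
overall_accuracy = correct / cm.sum()       # correct / all test pixels
recall = np.diag(cm) / cm.sum(axis=1)       # divide by row (true-class) totals
precision = np.diag(cm) / cm.sum(axis=0)    # divide by column (predicted) totals
f1 = 2 * precision * recall / (precision + recall)  # harmonic mean

print(f"OA = {overall_accuracy:.4f}")
for name, p, r, f in zip(classes, precision, recall, f1):
    print(f"{name:8s} P={p:.4f} R={r:.4f} F1={f:.4f}")
```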

| Window Size | Dresden (TerraSAR-X), 2-channel |
|---|---|
| 5 × 5 | 0.7059 |
| 7 × 7 | 0.7509 |
| 9 × 9 | 0.7654 |
| 11 × 11 | 0.7797 |
| 13 × 13 | 0.7898 |
| 15 × 15 | 0.798 |
| 17 × 17 | 0.8007 |
| 19 × 19 | 0.8105 |
| 21 × 21 | 0.8133 |
| 23 × 23 | 0.8029 |
| 25 × 25 | 0.8092 |
| 27 × 27 | 0.8062 |

| True \ Predicted | Urban | Industrial | InWater | Forest | Pastures | Crop | Total |
|---|---|---|---|---|---|---|---|
| Urban | 73,409 | 18,980 | 169 | 3323 | 1775 | 2344 | 100,000 |
| Industrial | 16,492 | 78,870 | 172 | 1003 | 415 | 3048 | 100,000 |
| InWater | 1474 | 1192 | 93,012 | 1955 | 2182 | 185 | 100,000 |
| Forest | 3081 | 1189 | 855 | 90,712 | 2193 | 1970 | 100,000 |
| Pastures | 3961 | 1199 | 977 | 3863 | 73,175 | 16,825 | 100,000 |
| Crop | 2895 | 1035 | 113 | 1337 | 15,802 | 78,818 | 100,000 |
| Total | 101,312 | 102,465 | 95,298 | 102,193 | 95,542 | 103,190 | 487,996 |

| Window Size (Po Delta) | Xception | Xception* | Inception ResNet-v2 | Inception ResNet-v2* | Proposed |
|---|---|---|---|---|---|
| 5 × 5 | 0.6688 | 0.6963 | 0.6862 | 0.6928 | 0.708 |
| 11 × 11 | 0.7563 | 0.7736 | 0.7608 | 0.7656 | 0.7838 |
| 17 × 17 | 0.7896 | 0.7943 | 0.8032 | 0.8121 | 0.83 |
| 25 × 25 | 0.8445 | 0.8435 | 0.8555 | 0.8447 | 0.8632 |

| Window Size (Dresden) | Xception | Xception* | Inception ResNet-v2 | Inception ResNet-v2* | Proposed |
|---|---|---|---|---|---|
| 5 × 5 | 0.4637 | 0.5004 | 0.4657 | 0.4754 | 0.7059 |
| 11 × 11 | 0.6481 | 0.6836 | 0.6556 | 0.6488 | 0.7797 |
| 17 × 17 | 0.7342 | 0.7596 | 0.7441 | 0.7459 | 0.8007 |
| 21 × 21 | 0.7706 | 0.7783 | 0.7767 | 0.7796 | 0.8133 |
| 27 × 27 | 0.7960 | 0.8068 | 0.8116 | 0.8064 | 0.8062 |

| Po Delta (Classes) | Precision (Xcep.) | Precision (Inc. Res2.) | Precision (Prop.) | Recall (Xcep.) | Recall (Inc. Res2.) | Recall (Prop.) | F1 Score (Xcep.) | F1 Score (Inc. Res2.) | F1 Score (Prop.) |
|---|---|---|---|---|---|---|---|---|---|
| Urban | 0.9617 | 0.7246 | 0.9317 | 0.9631 | 0.7341 | 0.9226 | 0.9624 | 0.7293 | 0.9272 |
| InWater | 0.8091 | 0.7697 | 0.8714 | 0.8907 | 0.7887 | 0.8531 | 0.8479 | 0.7791 | 0.8621 |
| Forest | 0.902 | 0.9760 | 0.8886 | 0.9244 | 0.9301 | 0.9051 | 0.9131 | 0.9525 | 0.8968 |
| Wetland | 0.7014 | 0.8877 | 0.7599 | 0.6956 | 0.9071 | 0.8068 | 0.6985 | 0.8973 | 0.7826 |
| MaWater | 0.7903 | 0.7659 | 0.8585 | 0.7053 | 0.7318 | 0.8007 | 0.7454 | 0.7484 | 0.8286 |
| Arable Land | 0.8939 | 0.7638 | 0.8752 | 0.8838 | 0.7882 | 0.8909 | 0.8888 | 0.7758 | 0.8830 |

| Dresden (Classes) | Precision (Xcep.) | Precision (Inc. Res2.) | Precision (Prop.) | Recall (Xcep.) | Recall (Inc. Res2.) | Recall (Prop.) | F1 Score (Xcep.) | F1 Score (Inc. Res2.) | F1 Score (Prop.) |
|---|---|---|---|---|---|---|---|---|---|
| Urban | 0.6387 | 0.6875 | 0.7246 | 0.6144 | 0.5883 | 0.7341 | 0.6263 | 0.6341 | 0.7293 |
| Industrial | 0.7116 | 0.7136 | 0.7697 | 0.6608 | 0.7349 | 0.7887 | 0.6852 | 0.7241 | 0.7791 |
| InWater | 0.999 | 0.9995 | 0.9760 | 0.965 | 0.9743 | 0.9301 | 0.9817 | 0.9867 | 0.9525 |
| Forest | 0.8412 | 0.8426 | 0.8877 | 0.9022 | 0.9067 | 0.9071 | 0.8706 | 0.8735 | 0.8973 |
| Pastures | 0.791 | 0.8025 | 0.7659 | 0.7933 | 0.8259 | 0.7318 | 0.7921 | 0.8141 | 0.7484 |
| Arable Land | 0.7868 | 0.8112 | 0.7638 | 0.8404 | 0.8391 | 0.7882 | 0.8127 | 0.8249 | 0.7758 |

| SAR Data | Xception | Inception ResNet-v2 | Proposed |
|---|---|---|---|
| Po Delta | 0.7203 | 0.7278 | 0.7369 |
| Dresden | 0.6828 | 0.6950 | 0.6986 |

| Hyper-Parameters | Po Delta (HH) | Dresden (VH/VV) |
|---|---|---|
| m = 1, n = 1 | 0.8487 | 0.8133 |
| m = 1, n = 2 | 0.8015 | 0.7772 |
| m = 2, n = 1 | 0.8493 | 0.8176 |
| m = 2, n = 2 | 0.7945 | 0.7747 |
| m = 4, n = 1 | 0.8683 | 0.8371 |
| m = 4, n = 2 | 0.7908 | 0.7766 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ahishali, M.; Kiranyaz, S.; Ince, T.; Gabbouj, M. Dual and Single Polarized SAR Image Classification Using Compact Convolutional Neural Networks. Remote Sens. 2019, 11, 1340. https://doi.org/10.3390/rs11111340