1. Introduction

Recently, remote sensing images have been studied in more and more areas, including image registration [1,2,3], change detection [4,5], object detection [6] and so on. A hyperspectral image (HSI) is a special type of remote sensing image that carries abundant spectral and spatial information [7], and it has been studied in many fields, including forest vegetation cover monitoring [8], land-use classification [9,10], change area detection [11], anomaly detection [12] and environmental protection [13].

In HSI, supervised classification is the most studied task. However, the high dimensionality of the spectral channels can bring the ‘curse of dimensionality’, which makes conventional techniques inefficient. How to extract the most discriminative features from the high-dimensional spectral channels is the key to HSI classification. Therefore, traditional HSI classification methods usually contain two steps, i.e., feature engineering and classification. There are two mainstream approaches to feature engineering: feature selection and feature extraction. Feature selection picks a subset of spectral channels to reduce dimensionality, whereas feature extraction uses a nonlinear mapping function to transform the original spectral domain into a lower-dimensional space. After feature engineering, the selected or extracted features are fed to general-purpose classifiers for classification.

In the early stage, researchers focused on spectral-based methods without considering spatial information. However, HSI has local consistency, so some researchers took spatial information into consideration and achieved better performance. The Gabor feature [14] and the differential morphological profile (DMP) feature [15] are two types of low-level features that represent the shape information of the HSI and lead to satisfactory classification results. In [16], Paheding et al. used multiscale spatial texture features for HSI classification. However, an HSI usually contains features of various types and levels, so it is impossible to describe all types of objects by setting empirical parameters. A method may perform well on one dataset but worse on another.

Deep Learning (DL) has shown an extremely powerful ability to extract hierarchical and nonlinear features, which are very useful for classification. So far, many DL-based works have been carried out in the HSI classification community. For example, Chen et al. [17] used a stacked autoencoder (SAE) to extract spectral and spatial features and used logistic regression to obtain the classification result. Similarly, they used a Restricted Boltzmann Machine (RBM) and a deep belief network (DBN) in [18] for classification. Tao et al. [19] used two sparse stacked autoencoders to learn the spatial and spectral features of the HSI separately, then stacked the spatial and spectral features and fed them into a linear SVM for classification. Ma et al. [20] used a spatial updated deep autoencoder to extract both spatial and spectral information with a single deep network, and utilized an improved collaborative representation in feature space for classification. Zhang et al. [21] utilized a recursive autoencoder to learn spatial and spectral information and adopted a weighting scheme to fuse the spatial information. In [22], Paheding et al. proposed the Progressively Expanded Neural Network (PEN Net).

The inputs of the aforementioned methods are one-dimensional; they utilize spatial features but destroy the initial spatial structure. With the emergence of the convolutional neural network (CNN), some new methods have been introduced. A CNN can extract spatial information without destroying the original spatial structure. For example, Hu et al. [23] employed a deep CNN for HSI classification. Chen et al. [24] proposed a novel 3D-CNN model combined with regularization to extract spectral-spatial features for classification. The obtained results reveal that the 3D-CNN performs better than the 1D-CNN and 2D-CNN. Paoletti et al. [25] proposed the deep pyramidal residual network to extract multi-scale spatial features for classification. Recently, some new training methods have also emerged in the literature, including active learning [26], self-paced learning [27], semi-supervised learning [28] and generative adversarial networks (GAN) [29]. Furthermore, some superpixel-based methods also play an important role in HSI classification [30,31]. In [32], Jiang et al. studied the influence of label noise on the HSI classification problem and proposed a random label propagation algorithm (RLPA) to cleanse the label noise.

1.1. Motivation

Inspired by the residual network [33], Zhong et al. [34] proposed a Spectral–Spatial Residual Network (SSRN), which contains a spectral residual block and a spatial residual block to extract spectral features and spatial features sequentially. SSRN has achieved state-of-the-art performance in the HSI classification problem. Based on SSRN and DenseNet [35], Wang et al. [36] proposed a fast densely connected spectral–spatial convolution network (FDSSC) for HSI classification, which achieved better performance while reducing the training time.

Although SSRN and FDSSC have achieved the highest classification accuracy, some problems remain to be solved. The biggest problem is that both frameworks first extract spectral features and then extract spatial features. While the spatial features are being extracted, the previously extracted spectral features may be destroyed because the spectral features and spatial features are in different domains.

More recently, Fang et al. [37] proposed a 3-D CNN with a spectral-wise attention mechanism (MSDN-SA), which applies spectral-wise attention in a densely connected 3D convolution network. However, it only considers spectral-wise attention and does not consider spatial-wise attention.

Recently, an intuitive and effective attention module named Convolutional Block Attention Module (CBAM) was proposed in [38]. It sequentially applies a channel attention mechanism and a spatial attention mechanism in the network to adaptively refine the feature map, which improves classification performance.

Inspired by CBAM and aiming to solve the problem of SSRN and FDSSC, we propose the double-branch multi-attention mechanism network for HSI classification. The framework consists of two parallel branches, i.e., a spectral branch and a spatial branch. To extract more discriminative features, we apply channel-wise attention in the spectral branch and spatial-wise attention in the spatial branch. After the two branches extract their corresponding features, we fuse them by a concatenation operation to obtain the spectral-spatial feature. Finally, a softmax classifier is added to obtain the final classification result.

1.2. Contribution

In summary, our main contributions are as follows:

We propose a densely connected 3DCNN-based Double-Branch Multi-Attention mechanism network (DBMA). This network has two branches that extract spectral and spatial features separately, which reduces the interference between the two types of features. The extracted spectral and spatial features are fused for classification.

We apply both channel-wise attention and spatial-wise attention to the HSI classification problem. The channel-wise attention aims to emphasize informative spectral features while suppressing less useful ones, whereas the spatial-wise attention focuses on the most informative areas in the input patches.

Compared with other recently proposed methods, the proposed network achieves the best classification accuracy. Furthermore, the training time and test time of our proposed network are also less than those of the two compared deep-learning algorithms, which indicates the superiority of our method.

The rest of this paper is organized as follows: Section 2 reviews the related work. Section 3 presents a detailed description of the proposed classification method. The experimental results and analysis are provided in Section 4. Finally, Section 5 concludes the paper and briefly introduces our future research.

2. Related Work

In this section, we briefly introduce some basic knowledge and related work, including the cube-based HSI classification framework, residual and dense connections, FDSSC and the attention mechanism.

2.1. Cube-Based HSI Classification Framework

A traditional pixel-based classification architecture only uses spectral information for classification, while a cube-based architecture uses both spectral and spatial information. Given an HSI dataset with size of , there are total pixels in the image; however, only N pixels have corresponding labels. Firstly, we randomly split the labeled pixels into three sets, i.e., a training set, a validation set and a test set. Then, we extract a 3D cube around each pixel as the input of the network. Unlike a pixel-based architecture, which directly uses the pixel as input to train the network, the cube-based framework uses the 3D structure of the HSI for classification. The reason for using the cube-based framework is that the spatial information is also important for classification.
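The cube extraction and random split described above can be sketched as follows. This is a minimal NumPy illustration, not the authors' implementation: the function names, the mirror padding at the image border, the convention that label 0 means "unlabeled", and the toy sizes and split fractions are all assumptions made for the example.

```python
import numpy as np

def extract_cubes(image, labels, patch_size):
    """Return one (patch_size, patch_size, bands) cube per labeled pixel."""
    half = patch_size // 2
    # Mirror-pad the spatial borders so edge pixels also get a full neighborhood
    # (the padding strategy is an assumption; the text does not specify one).
    padded = np.pad(image, ((half, half), (half, half), (0, 0)), mode="reflect")
    rows, cols = np.nonzero(labels)  # label 0 is assumed to mean "unlabeled"
    cubes = np.stack([padded[r:r + patch_size, c:c + patch_size, :]
                      for r, c in zip(rows, cols)])
    return cubes, labels[rows, cols]

def split_indices(n, train_frac, val_frac, seed=0):
    """Randomly split n sample indices into training, validation and test sets."""
    order = np.random.default_rng(seed).permutation(n)
    n_train = int(round(train_frac * n))
    n_val = int(round(val_frac * n))
    return order[:n_train], order[n_train:n_train + n_val], order[n_train + n_val:]

# Toy data standing in for a real HSI: 10 x 12 pixels, 5 bands, classes 1..3.
rng = np.random.default_rng(0)
image = rng.random((10, 12, 5))
labels = rng.integers(0, 4, size=(10, 12))

cubes, y = extract_cubes(image, labels, patch_size=3)
train_idx, val_idx, test_idx = split_indices(len(y), train_frac=0.2, val_frac=0.2)
```

Each cube keeps the labeled pixel at its center, so the label of the center pixel is the label of the whole cube.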

2.2. Residual Connection and Densely Connection

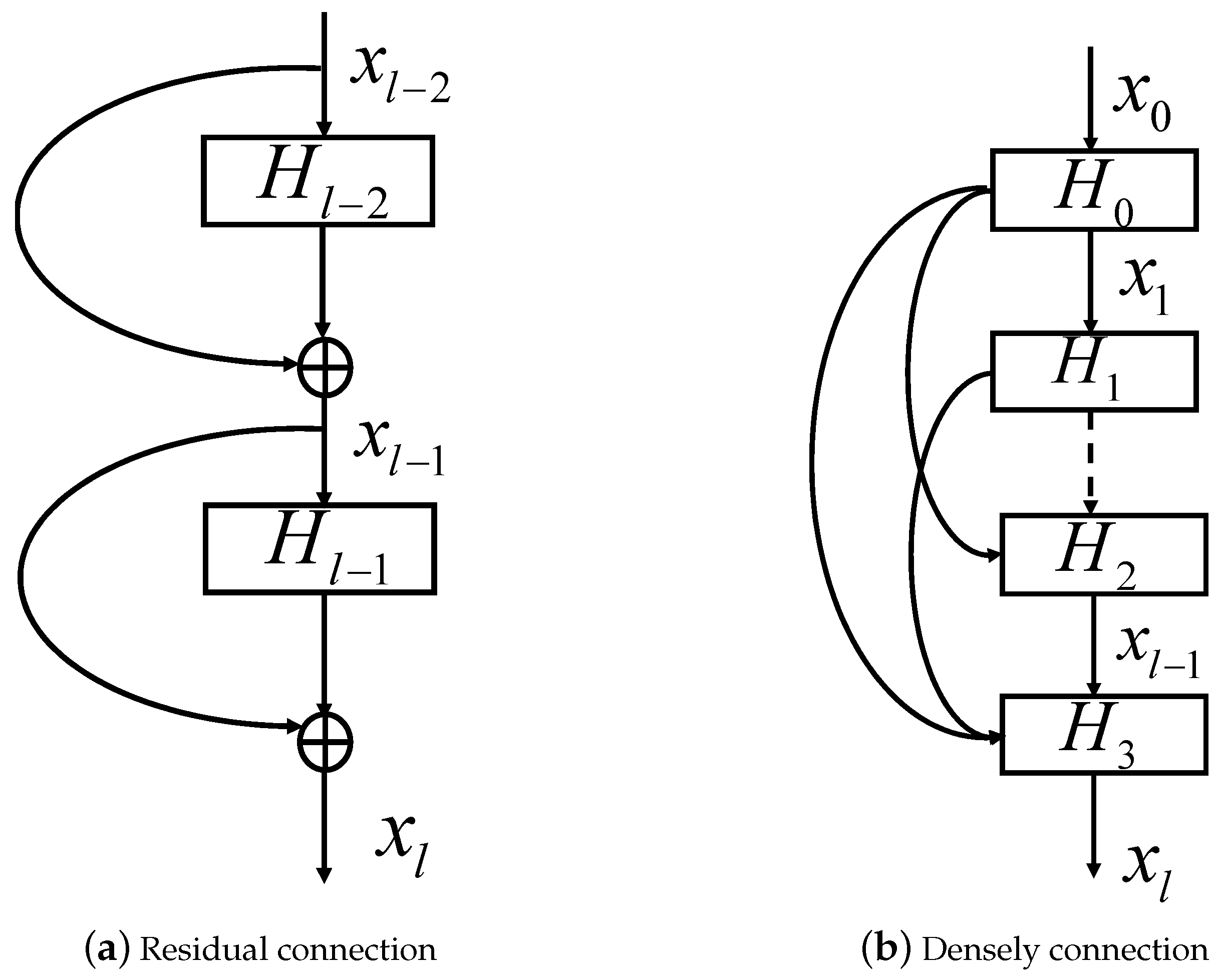

The residual connection was first proposed in [33]. In principle, a residual connection adds a skip connection to a traditional CNN model. As shown in Figure 1a, H is the abbreviation of hidden block and represents several convolutional layers with activation layers and BatchNorm layers. ResNet allows input information to be passed directly to subsequent layers. The skip connection can be seen as an identity mapping. In ResNet, the output of the l-th block can be computed as:

$$x_l = H_l(x_{l-1}) + x_{l-1}$$

Through the residual connection, each block only has to learn the residual between its output and its input rather than the full mapping, and this residual is easier to learn than the original function. Therefore, ResNet can achieve better results than traditional CNN models. Furthermore, the skip connection brings no extra parameters but can speed up the training process.

Based on the residual connection, Huang et al. [35] proposed the concept of dense connection and DenseNet. In DenseNet, every hidden block is connected to all preceding blocks and all subsequent blocks. Unlike the residual connection, which combines features through summation, dense connectivity combines features by concatenating them. In DenseNet, the feature maps of all previous blocks are used to compute the output of the l-th block:

$$x_l = H_l([x_0, x_1, \ldots, x_{l-1}])$$

where $[x_0, x_1, \ldots, x_{l-1}]$ is the concatenation of the feature maps of the previous blocks. $H_l$ consists of batch normalization (BN), activation layers and convolution layers. In DenseNet, as shown in Figure 1b, each block is linked to every previous block and every subsequent block. Note that if each function $H_l$ produces k feature maps, the l-th layer will have $k_0 + k(l-1)$ input features, where $k_0$ is the number of channels in the input layer, while the output will still be k feature maps.
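The difference between summation (residual) and concatenation (dense) can be made concrete with a small NumPy sketch. The hidden block here is a toy random channel projection with ReLU rather than a real convolution, and the growth rate k = 12 is an assumption chosen because 24 + 3 × 12 = 60 matches the feature-map counts (24 and 60) quoted later for the dense blocks.

```python
import numpy as np

def hidden_block(x, out_channels, rng):
    """Toy stand-in for H: a random projection over channels followed by ReLU."""
    weight = rng.standard_normal((out_channels, x.shape[0]))
    return np.maximum(np.tensordot(weight, x, axes=1), 0.0)

def residual_forward(x, num_blocks, rng):
    # x_l = H_l(x_{l-1}) + x_{l-1}: summation keeps the channel count fixed.
    for _ in range(num_blocks):
        x = hidden_block(x, x.shape[0], rng) + x
    return x

def dense_forward(x0, num_blocks, k, rng):
    # x_l = H_l([x_0, ..., x_{l-1}]): block l receives k0 + k * (l - 1) channels.
    features, input_channels = [x0], []
    for _ in range(num_blocks):
        stacked = np.concatenate(features, axis=0)
        input_channels.append(stacked.shape[0])
        features.append(hidden_block(stacked, k, rng))
    return np.concatenate(features, axis=0), input_channels

rng = np.random.default_rng(0)
x0 = rng.standard_normal((24, 5, 5))  # k0 = 24 channels on a 5 x 5 grid (toy size)

res_out = residual_forward(x0, num_blocks=3, rng=rng)
dense_out, seen = dense_forward(x0, num_blocks=3, k=12, rng=rng)
print(res_out.shape[0], seen, dense_out.shape[0])
```

With these settings the residual path keeps 24 channels throughout, whereas the three dense blocks see 24, 36 and 48 input channels and the concatenated output has 60.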

2.3. Fast Dense Spectral–Spatial Convolution Network (FDSSC)

Based on the residual connection, Zhong et al. [34] proposed a Spectral–Spatial Residual Network (SSRN), which contains a spectral residual block and a spatial residual block to extract spectral features and spatial features sequentially. Inspired by SSRN and DenseNet, Wang et al. [36] proposed the FDSSC network for HSI classification, which achieved better performance while reducing the training time. In this part, we introduce FDSSC in detail.

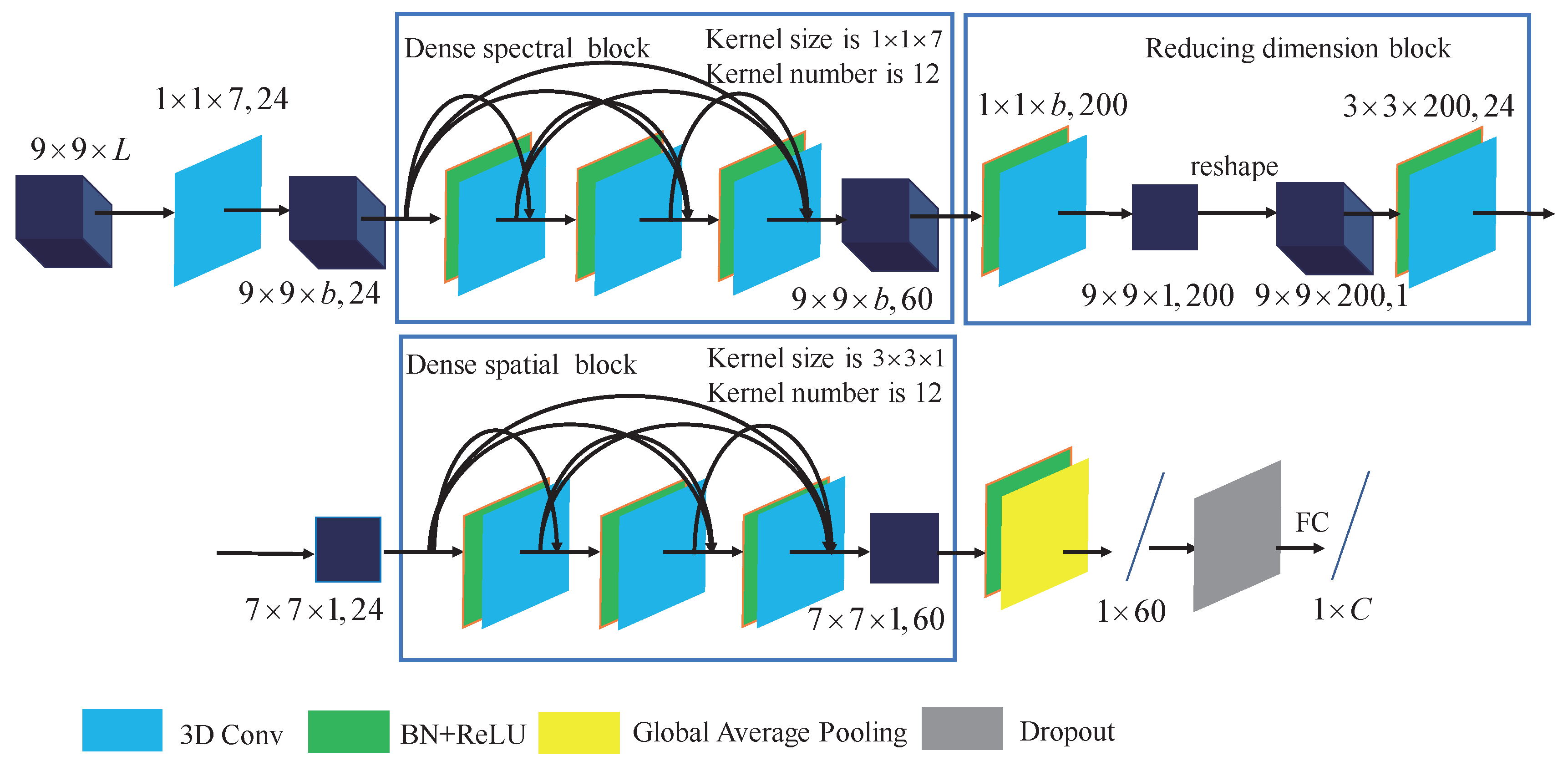

As illustrated in [36], the structure of FDSSC is shown in Figure 2. FDSSC consists of a dense spectral block, a reducing dimension block and a dense spatial block. The input patch of FDSSC is set to . The dense spectral block aims to extract spectral features using densely connected 3D convolution, and the kernel size is set to . This convolution operation does not extract any spatial features because the kernel size along the spatial dimensions is set to 1. Therefore, such a kernel extracts the spectral features and retains the spatial features. Through the dense spectral block, we get spectral features with size of . 60 refers to the number of feature maps.

The reducing dimension block aims to reduce the dimension of feature maps and the number of parameters to be trained. In reducing dimension block, the padding method of 3D convolution is set to ’valid’ to decrease the size of feature maps. After learning the spectral features, we get 60 feature maps with size of . Then, the 3D convolution layer with kernel size of is used to get 200 feature maps with size of . After that, the feature maps are reshaped to get 1 feature map with size of . To further reduce the dimension of feature maps’ size, the convolution layer with kernel size of transformed the feature maps to get feature maps with size of .

Then, the dense spatial block is used to extract spatial features. The kernel size in the dense spatial block is set to . A kernel with size of () learns the spatial features while not learning any spectral features.

After the dense spatial block, we get features with size of . Then, the global average pooling layer is employed to get a feature vector of length 60. The global average pooling layer can be seen as a special case of a pooling layer that aggregates information and reduces parameters. The feature vector is fed to the softmax classifier to obtain the classification result.

2.4. Attention Mechanism

Inspired by the human perception process [39], the attention mechanism has been applied to image categorization [40], and was later shown to yield significant improvements for Visual Question Answering (VQA) and captioning [41,42,43]. When extracting features, the spectral channels and the areas of the input patch are not equally important. The attention mechanism can focus on the most informative parts and decrease the weight of other regions, which is believed to be similar to the attention mechanism of the human eye. In CBAM [38], the network has two attention modules, i.e., a channel attention module and a spatial attention module, which focus on informative channels and informative areas, respectively. We introduce the two modules in detail below.

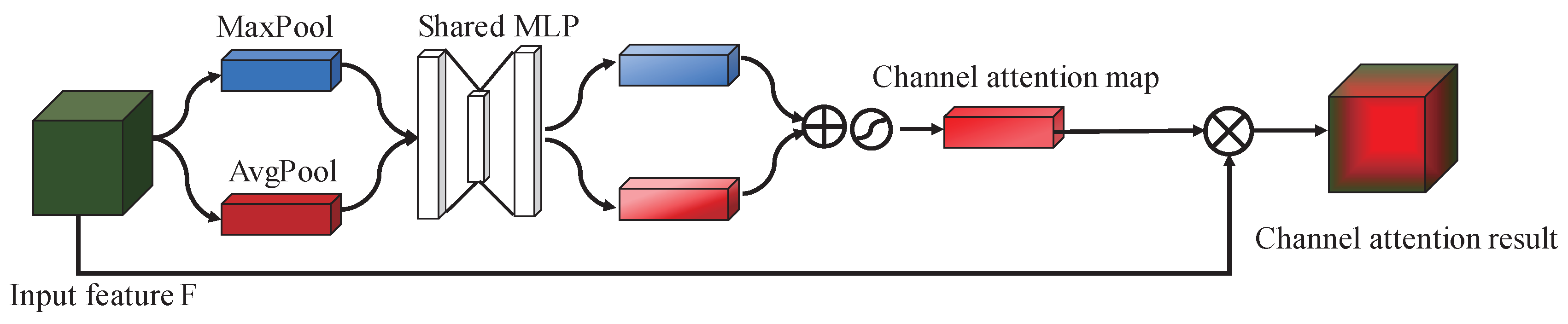

2.4.1. Channel-Wise Attention Module

The channel-wise attention module mainly refines the weights of the feature maps along the channel dimension. Each channel of the feature map can be seen as a feature detector; channel attention focuses on the meaningful channels and decreases the values of the less meaningful channels to a certain degree.

As shown in Figure 3, a MaxPooling layer and an AvgPooling layer are used to aggregate spatial information. The two pooling operations can be seen as two different spatial descriptors, $F^c_{avg}$ and $F^c_{max}$, which denote average-pooled features and max-pooled features, respectively. Note that each descriptor is a one-dimensional vector whose length equals the number of input feature maps. The two descriptors are then forwarded to a shared network to produce the channel attention map. The shared network is a three-layer perceptron (MLP) with one hidden layer. The hidden layer has $C/L$ units, which reduces the number of parameters to be trained and adds nonlinearity, where L is the reduction ratio and C is the number of channels. The output feature vectors are then merged using element-wise summation. Through the sigmoid function, the channel attention map is obtained. The channel attention map is a vector whose length equals the number of input feature maps and whose values lie in the range (0,1). The larger the value, the more important the corresponding channel. The channel attention map is then multiplied with the input feature to get the channel-refined feature. The channel attention map is computed as:

$$M_c(F) = \sigma(\mathrm{MLP}(\mathrm{AvgPool}(F)) + \mathrm{MLP}(\mathrm{MaxPool}(F))) = \sigma(W_1(W_0(F^c_{avg})) + W_1(W_0(F^c_{max})))$$

where $\sigma$ is the sigmoid function, $W_0 \in \mathbb{R}^{C/L \times C}$ and $W_1 \in \mathbb{R}^{C \times C/L}$. Note that the MLP weights $W_0$ and $W_1$ are shared for both inputs.
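The channel-wise attention computation can be sketched in NumPy as follows. This is an illustration under stated assumptions rather than the exact module used in the network: the ReLU in the hidden layer, the reduction ratio of 4, the random weights and the 5 × 5 spatial size are all chosen only for the example.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def channel_attention(feature, w0, w1):
    """feature: (C, H, W); w0: (C // ratio, C); w1: (C, C // ratio)."""
    avg_desc = feature.mean(axis=(1, 2))  # average-pooled descriptor, length C
    max_desc = feature.max(axis=(1, 2))   # max-pooled descriptor, length C

    def shared_mlp(v):
        # The same weights process both descriptors; ReLU is an assumed activation.
        return w1 @ np.maximum(w0 @ v, 0.0)

    attention = sigmoid(shared_mlp(avg_desc) + shared_mlp(max_desc))
    # Scale every channel by its attention weight.
    return attention, feature * attention[:, None, None]

rng = np.random.default_rng(0)
channels, ratio = 60, 4  # the reduction ratio is an illustrative choice
feature = rng.standard_normal((channels, 5, 5))
w0 = rng.standard_normal((channels // ratio, channels)) * 0.1
w1 = rng.standard_normal((channels, channels // ratio)) * 0.1

attention, refined = channel_attention(feature, w0, w1)
```

The attention vector has one entry per channel, each strictly between 0 and 1, and the refined feature keeps the shape of the input.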

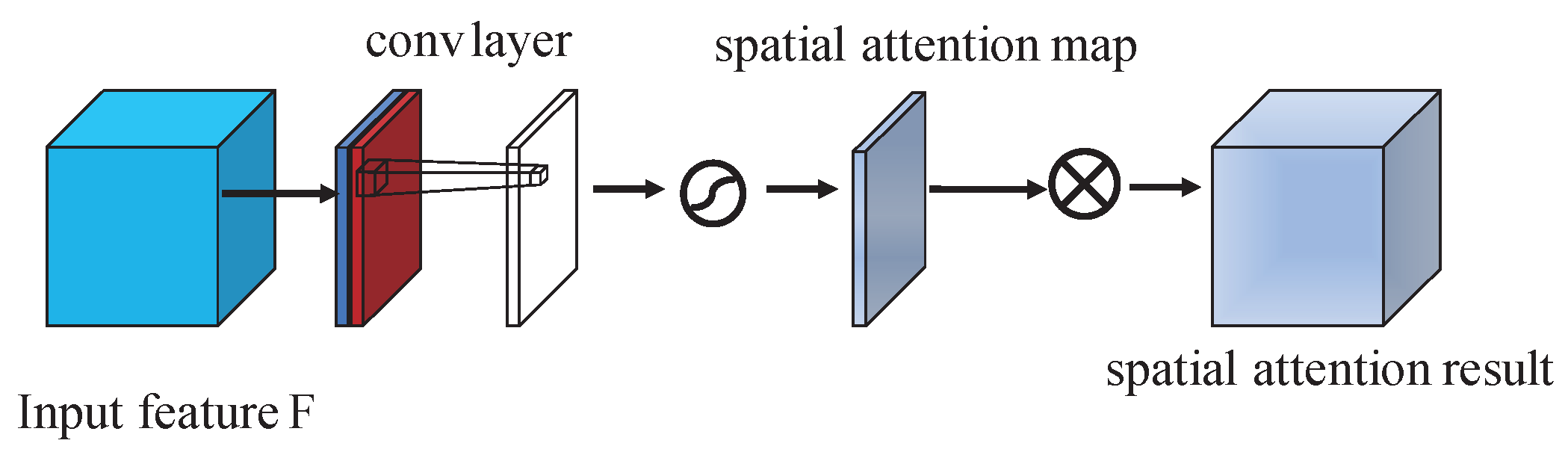

2.4.2. Spatial-Wise Attention Module

In contrast to the channel-wise attention, the spatial-wise attention focuses on the informative regions of the spatial dimension. As shown in Figure 4, similar to the channel-wise attention module, two types of pooling operations are used to generate different feature descriptors, $F^s_{avg}$ and $F^s_{max}$. Unlike in the channel-wise attention module, the pooling operations in the spatial-wise attention module are applied along the channel axis. The two feature descriptors are then fused by a concatenation operation, and a convolution layer is applied to the concatenated feature to obtain the spatial attention map. Finally, the input feature is multiplied with the spatial attention map to get spatially refined feature maps, which focus on the most informative regions. In summary, the spatial attention map is computed as:

$$M_s(F) = \sigma(f([F^s_{avg}; F^s_{max}]))$$

where $\sigma$ denotes the activation function, for which we choose the sigmoid function here, and $f$ represents a convolution operation with the filter size of .
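A minimal NumPy sketch of the spatial-wise attention follows. The 3 × 3 filter, the zero padding that keeps the map size unchanged, and the random weights are assumptions made for the illustration; the filter size used in the network is not restated here.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def conv2d_same(x, kernel):
    """Cross-correlate x (C_in, H, W) with kernel (C_in, k, k), odd k, zero padding."""
    k = kernel.shape[-1]
    pad = k // 2
    padded = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    out = np.empty(x.shape[1:])
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(padded[:, i:i + k, j:j + k] * kernel)
    return out

def spatial_attention(feature, kernel):
    """feature: (C, H, W); kernel: (2, k, k) acting on the two pooled maps."""
    avg_map = feature.mean(axis=0)  # pooling along the channel axis
    max_map = feature.max(axis=0)
    descriptor = np.stack([avg_map, max_map])  # concatenation, shape (2, H, W)
    attention = sigmoid(conv2d_same(descriptor, kernel))
    # Scale every spatial position by its attention weight.
    return attention, feature * attention[None, :, :]

rng = np.random.default_rng(0)
feature = rng.standard_normal((60, 5, 5))
kernel = rng.standard_normal((2, 3, 3)) * 0.1  # filter size 3 is an assumption

attention, refined = spatial_attention(feature, kernel)
```

The attention map has one weight per spatial position, shared by all channels, which is the counterpart of the per-channel weights in the channel-wise module.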

3. Methodology

FDSSC has achieved very high performance in HSI classification; however, it first extracts spectral features and then extracts spatial features. This means that the previously extracted spectral features may be affected while the spatial features are being extracted, because the two types of features are in different domains. In contrast to FDSSC, in our framework the spectral features and spatial features are extracted in two parallel branches and fused for classification.

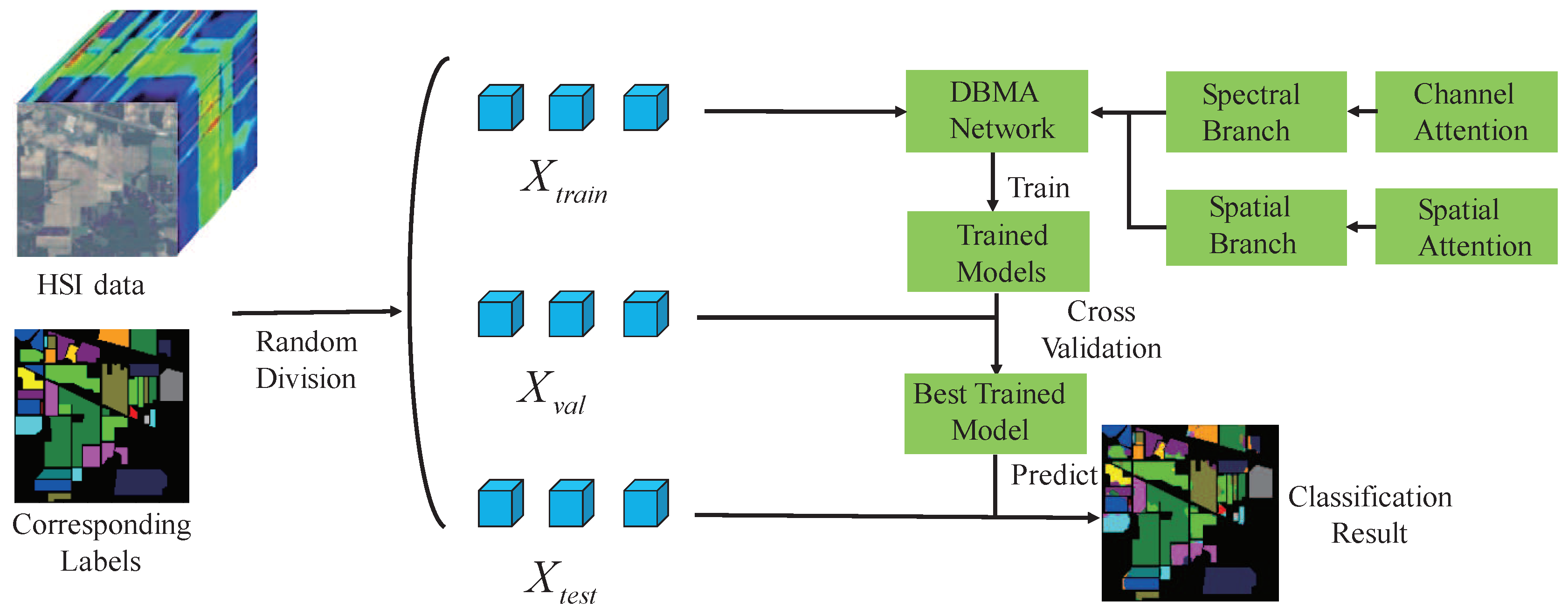

Figure 5 illustrates the whole framework of our method. Firstly, given a hyperspectral image with size, we extract the neighborhoods of the center pixel together with its corresponding category label as samples. In contrast to FDSSC using neighborhoods as input, we use a smaller input size, which reduces the training time. Then, we divide the samples into three sets, i.e., training set , validation set and testing set . The training set is used to train the model for many epochs, and the validation set is used to evaluate the classification accuracy and to pick the network with the highest classification accuracy. Finally, the testing set is used to test the trained model and the effectiveness of the proposed method. As can be seen in Figure 5, our network has two branches, i.e., the Spectral Branch with Channel Attention and the Spatial Branch with Spatial Attention. As can be seen in Figure 6, for convenience, the top branch is called the Spectral Branch while the bottom one is called the Spatial Branch. Next, we introduce the two branches.

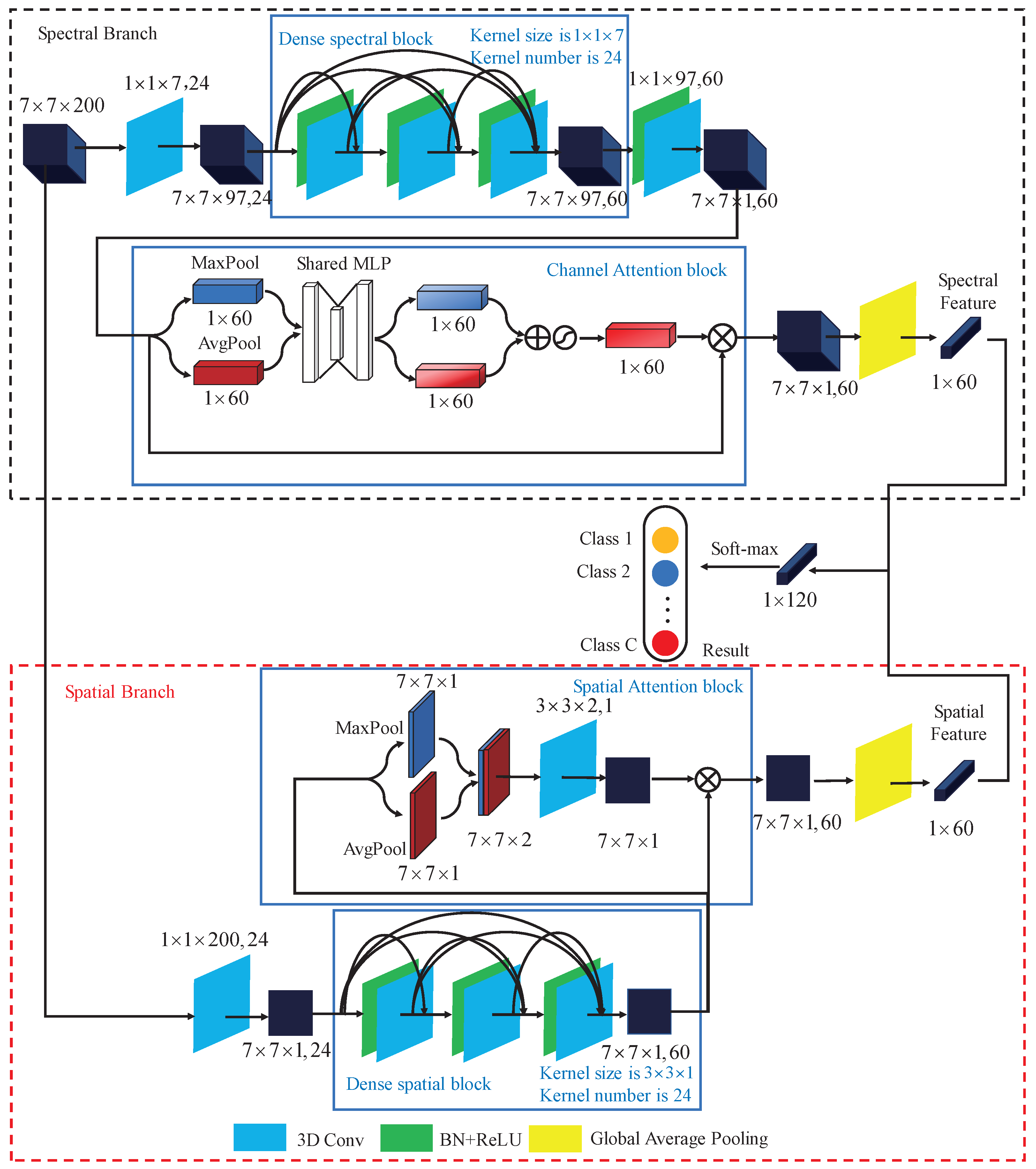

3.1. Spectral Branch with Channel Attention

We take the Indian Pines dataset as an example, and the input patch size is set to . The Spectral Branch consists of a dense spectral block and a channel attention block. First of all, a 3D convolution with kernel size of is used. In the first convolutional operation, we use the ‘valid’ padding method and the stride is set to (1,1,2), which reduces the number of spectral channels to a certain degree. After the first convolutional layer, feature maps with shape of ( , 24) are obtained. Then, the dense spectral block, which consists of 3 convolutional layers with batch normalization layers, is used to extract spectral features. In the dense spectral block, because of the concatenation, we set the stride to (1,1,1) to maintain the size of the feature maps. After the dense spectral block, spectral features with size of ( , 60) are obtained. However, the 60 channels are not equally important. To focus on the important channels and obtain more discriminative spectral features, the channel attention block illustrated in Section 2.4.1 is applied. After the channel attention block, the important channels are highlighted while the less important channels are suppressed. Finally, the Global Average Pooling layer is employed to get the spectral feature with size of . Details of the layers of the Spectral Branch are described in Table 1.

3.2. Spatial Branch with Spatial Attention

The Spatial Branch consists of a dense spatial block and a spatial attention block. First of all, a 3D convolution with kernel size of is used to reduce the number of spectral channels. After the first convolution layer, feature maps with shape of ( , 24) are obtained. The number of spectral channels decreases from 200 to 1, which reduces the number of training parameters and helps prevent overfitting. Then the dense spatial block, which consists of 3 convolutional layers together with batch normalization layers, is used to extract spatial features. After the dense spatial block, spatial features with size of ( , 60) are obtained. The dense spatial block aims to extract spatial features; however, different positions of the input patch are not equally important. To focus on ‘where’ the informative parts are and get more discriminative spatial features, the spatial attention block in Section 2.4.2 is used. After the spatial attention block, the features of the more important areas are highlighted while the features of the less important areas are suppressed. Then the Global Average Pooling layer is employed to get the spatial feature with size of . Details of the layers of the Spatial Branch are described in Table 2.
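The effect of padding and stride on the spectral dimension in the two branches can be checked with a few lines of Python. The output-length rule below is the standard one for ‘valid’ and ‘same’ padding; the spectral kernel depth of 7 is an assumption used for illustration, whereas the 200 input bands, the stride of 2 and the reduction from 200 bands to 1 come from the text.

```python
def conv_output_length(input_length, kernel_size, stride=1, padding="valid"):
    """Output length of a convolution along a single axis."""
    if padding == "valid":
        return (input_length - kernel_size) // stride + 1
    if padding == "same":
        return -(-input_length // stride)  # ceiling division
    raise ValueError(f"unknown padding: {padding}")

bands = 200        # Indian Pines bands kept for analysis
kernel_depth = 7   # assumed spectral kernel depth, for illustration only

# Spectral Branch: 'valid' padding with spectral stride 2 shortens the band axis.
spectral_after_first = conv_output_length(bands, kernel_depth, stride=2)
# Inside the dense block, stride 1 with size-preserving padding keeps the length.
spectral_after_dense = conv_output_length(
    spectral_after_first, kernel_depth, stride=1, padding="same")

# Spatial Branch: a kernel spanning all bands collapses the band axis to 1.
spatial_after_first = conv_output_length(bands, bands)

print(spectral_after_first, spectral_after_dense, spatial_after_first)  # 97 97 1
```

Keeping the length unchanged inside the dense block is what makes the concatenation of feature maps possible, since all concatenated maps must share the same size.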

3.3. Spectral-Spatial Fusion for Classification

Through the Spectral Branch and the Spatial Branch, the spectral feature and the spatial feature are obtained. Afterwards, the two features are fused through concatenation for classification. Because the two features are not in the same domain, the concatenation operation is used instead of the add operation. Through the fully connected layer and softmax activation, the final classification result is obtained.
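The fusion step can be sketched as follows, assuming each branch yields a feature vector of length 60 after global average pooling and that the dataset has 16 classes, as for Indian Pines. The random weights stand in for a trained fully connected layer and are purely illustrative.

```python
import numpy as np

def softmax(z):
    z = z - z.max()  # subtract the maximum for numerical stability
    e = np.exp(z)
    return e / e.sum()

def fuse_and_classify(spectral_feature, spatial_feature, weight, bias):
    # Concatenation keeps the two domains in separate coordinates;
    # element-wise addition would mix them.
    fused = np.concatenate([spectral_feature, spatial_feature])
    return softmax(weight @ fused + bias)

rng = np.random.default_rng(0)
spectral_feature = rng.standard_normal(60)
spatial_feature = rng.standard_normal(60)
num_classes = 16
weight = rng.standard_normal((num_classes, 120)) * 0.1  # stand-in for trained weights
bias = np.zeros(num_classes)

probabilities = fuse_and_classify(spectral_feature, spatial_feature, weight, bias)
predicted_class = int(np.argmax(probabilities))
```

The fully connected layer therefore sees a 120-dimensional input, and the predicted class is the index of the largest softmax probability.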

Network implementation details for other datasets are carried out in a similar manner.

4. Experimental Results

4.1. Datasets Description

In the experiments, three widely used HSI datasets are used to test the proposed method, i.e., the Indian Pines (IP) dataset, the Pavia University (UP) dataset and the Salinas Valley (SV) dataset. Three metrics, i.e., overall accuracy (OA), average accuracy (AA) and Kappa coefficient (K), are used to quantitatively evaluate the classification performance. OA refers to the ratio of the number of correctly classified pixels to the total number of pixels to be classified. AA refers to the mean of the per-class accuracies. The Kappa coefficient is used for consistency testing and can also be used to measure classification accuracy. The higher the values of the three metrics, the better the classification performance.
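The three metrics can all be derived from the confusion matrix. The following NumPy sketch uses a small hand-made example; the function name and the toy labels are illustrative, and every class is assumed to have at least one true sample so that the per-class accuracy is defined.

```python
import numpy as np

def classification_metrics(y_true, y_pred, num_classes):
    """Return (OA, AA, Kappa) computed from the confusion matrix."""
    confusion = np.zeros((num_classes, num_classes), dtype=np.int64)
    for t, p in zip(y_true, y_pred):
        confusion[t, p] += 1  # rows: true class, columns: predicted class
    total = confusion.sum()
    oa = np.trace(confusion) / total
    # Per-class accuracy: correct predictions divided by true samples per class.
    aa = (np.diag(confusion) / confusion.sum(axis=1)).mean()
    # Agreement expected by chance, from the row and column marginals.
    expected = (confusion.sum(axis=0) * confusion.sum(axis=1)).sum() / total ** 2
    kappa = (oa - expected) / (1 - expected)
    return oa, aa, kappa

y_true = [0, 0, 0, 0, 1, 1, 2, 2, 2, 2]
y_pred = [0, 0, 0, 1, 1, 1, 2, 2, 0, 2]
oa, aa, kappa = classification_metrics(y_true, y_pred, num_classes=3)
print(round(oa, 4), round(aa, 4), round(kappa, 4))  # 0.8 0.8333 0.697
```

In this toy case 8 of 10 samples are correct (OA = 0.8), the per-class accuracies are 0.75, 1.0 and 0.75 (AA ≈ 0.8333), and the chance agreement is 0.34, which gives Kappa ≈ 0.697.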

Indian Pines (IP): The Indian Pines dataset was gathered by the Airborne Visible/Infrared Imaging Spectrometer (AVIRIS) over Northwest Indiana. The image has 16 classes and pixels with a resolution of 20 m/pixel. 20 bands were discarded and the remaining 200 bands are adopted for analysis. The spectral wavelength ranges from 0.4 μm to 2.5 μm.

Pavia University (UP): The Pavia University dataset was gathered by the reflective optics imaging spectrometer (ROSIS-3) over the University of Pavia, Italy. The image has 9 classes and pixels with a spatial resolution of 1.3 m/pixel. 12 noisy bands are removed and the remaining 103 bands are used for analysis. The spectral wavelength ranges from 0.43 μm to 0.86 μm.

Salinas Valley (SV): This dataset was gathered by the AVIRIS sensor over Salinas Valley, CA, USA. The image has 16 classes and pixels with a resolution of 3.7 m/pixel. For classification, 20 bands are removed and 204 bands are preserved. The wavelength ranges from 0.4 μm to 2.5 μm.

4.2. Experimental Setting

To demonstrate the effectiveness of the proposed method, it is compared with several widely used methods and state-of-the-art methods, including (1) a spectral-based classifier, i.e., the SVM with RBF kernel [44]; (2) the spectral-spatial classifiers Gabor-SVM [45] and DMP-SVM [46]; (3) the deep-learning-based classifiers 3DCNN [24], SSRN [34] and the recently proposed fast dense Spectral–Spatial Network (FDSSC) [36]. Next, we introduce these methods separately.

SVM: For SVM, we simply feed all bands of the HSI to an SVM with a radial basis function (RBF) kernel.

Gabor-SVM: For Gabor-SVM, we extract the Gabor features of the HSI and feed them into an SVM with an RBF kernel. We use PCA to extract the first 10 PCs of the original image. 4 orientations and 3 scales are selected to construct the Gabor filters. For each PC, the length of the Gabor feature vector is 12, so the total Gabor feature vector length is 120.

DMP-SVM: For DMP-SVM, we extract the differential morphological profile features and feed them into an SVM with an RBF kernel. To extract the DMP features, we use the first 5 PCs, and the sizes of the structuring elements are set to 2, 4, 6, 8 and 10, so the DMP feature vector length is 50.

Note that the best parameter settings of SVM, Gabor-SVM and DMP-SVM are obtained by cross validation to ensure the best classification result.

3DCNN: For 3DCNN, we use , , neighbors of each pixel as the input data, respectively. We design the network following the instructions in [24].

SSRN: The architecture of SSRN is set out in [34]. We use neighbors of each pixel as the input data, where L denotes the number of spectral channels of the dataset. We use two spectral residual blocks and two spatial residual blocks according to [34].

FDSSC: The architecture of FDSSC is set out in [36]. The input patch size is set to and we use one dense spectral block and one dense spatial block in the architecture.

Besides the training method, the number of samples used for training also plays an important role. Using more data in the training stage usually leads to a higher test accuracy, but the corresponding training time and computational complexity increase dramatically. Therefore, for the IP dataset, we choose training samples and validation samples. For the UP dataset and the SV dataset, since every class has enough samples, we only choose training samples and validation samples to save training time.

For 3DCNN, SSRN, FDSSC and our method, the batch size is set to 32 and the Adam optimizer is adopted. The learning rate is set to 0.01 and we train each model for 200 epochs. During training, the model with the highest classification performance on the validation samples is kept and restored for testing. The early stopping strategy is also adopted, i.e., if the accuracy on the validation set does not improve for 20 epochs, we terminate the training stage.
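The model selection and early stopping rule above can be sketched in plain Python. The `validation_accuracy` callable is a hypothetical stand-in for "train one epoch, then evaluate on the validation set"; the limits of 200 epochs and a patience of 20 epochs follow the text.

```python
def run_training(validation_accuracy, max_epochs=200, patience=20):
    """Track the best validation epoch and stop after `patience` stale epochs."""
    best_acc, best_epoch, epochs_run = float("-inf"), -1, 0
    for epoch in range(max_epochs):
        acc = validation_accuracy(epoch)  # stand-in for one epoch of training
        epochs_run = epoch + 1
        if acc > best_acc:
            # In a real run, the model weights would be checkpointed here.
            best_acc, best_epoch = acc, epoch
        elif epoch - best_epoch >= patience:
            break  # no improvement for `patience` consecutive epochs
    return best_epoch, best_acc, epochs_run

def toy_curve(epoch):
    # Accuracy rises linearly until epoch 30, then stays slightly lower.
    return epoch / 30 if epoch <= 30 else 0.95

best_epoch, best_acc, epochs_run = run_training(toy_curve)
print(best_epoch, best_acc, epochs_run)  # 30 1.0 51
```

In the toy curve the best model appears at epoch 30, and training stops after epoch 50 because the 20 following epochs bring no improvement, well before the 200-epoch limit.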

4.3. Classification Maps and Results

4.3.1. Classification Maps and Result of IP Dataset

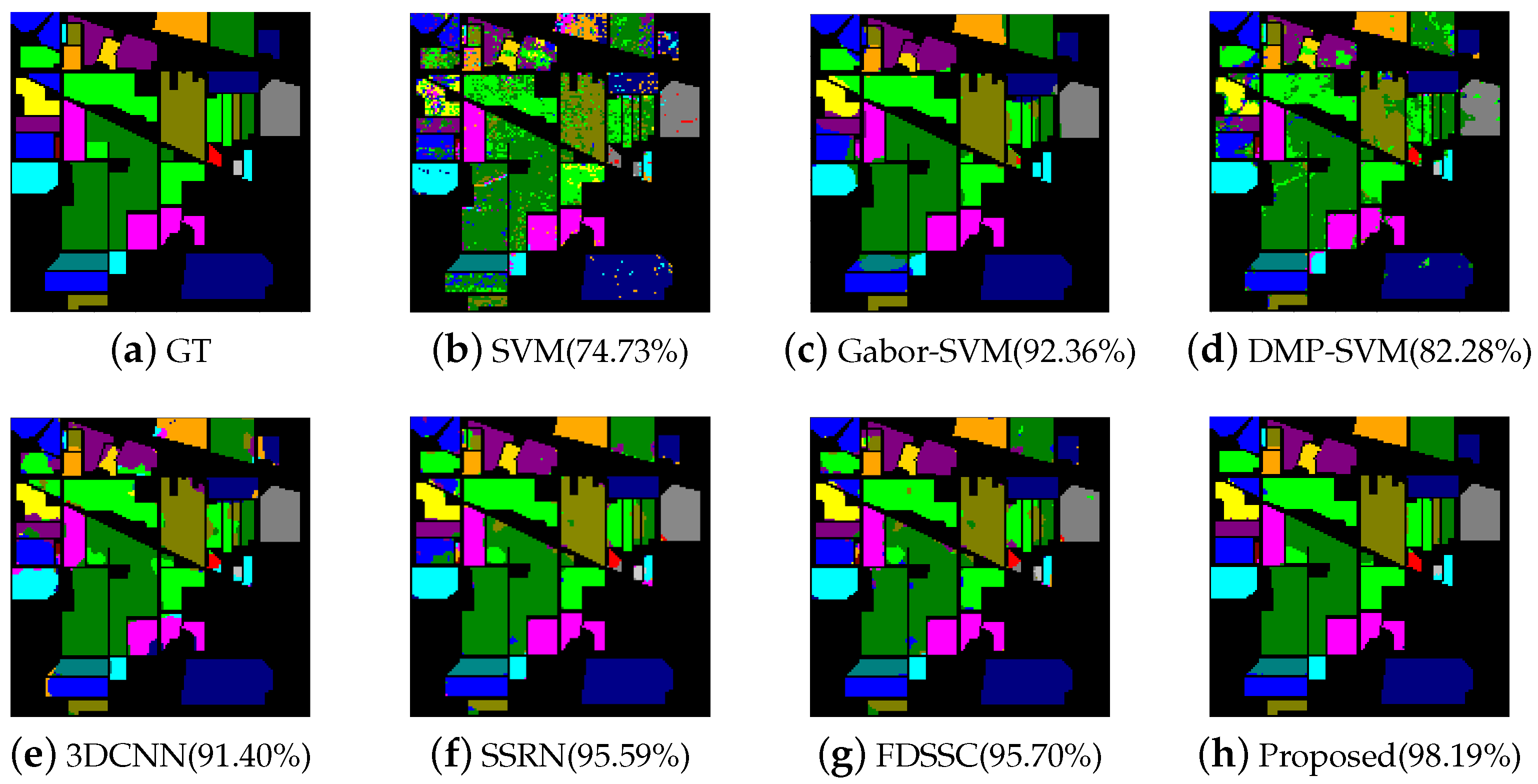

The results on the IP dataset are reported in Table 6, and the highest class-specific accuracies are in bold.

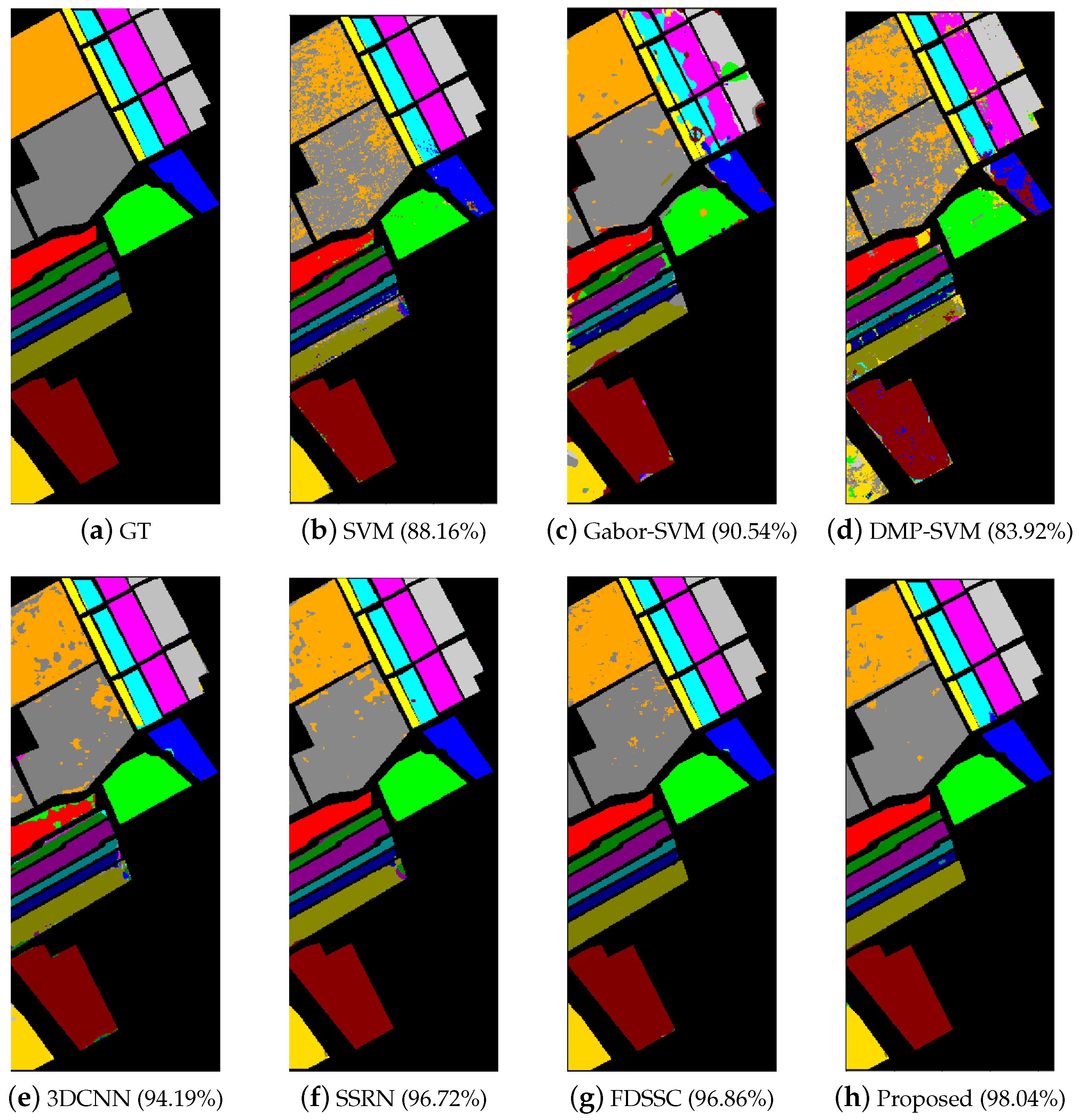

Figure 7 shows the classification maps of different methods.

From Table 6, we can see that our method achieves the best performance, with 98.19% OA, 96.31% AA and 0.9794 Kappa. SVM achieves the worst performance with only OA. Compared with the original SVM, Gabor-SVM and DMP-SVM perform better because they also consider spatial information for classification, and the Gabor feature performs better than the DMP feature in terms of all three metrics. Among the four deep learning methods, i.e., 3DCNN, SSRN, FDSSC and our method, 3DCNN is better than DMP-SVM with nearly improvement in OA but worse than Gabor-SVM. SSRN and FDSSC are better than 3DCNN with nearly improvement in OA. The reasons for FDSSC’s success in HSI classification are as follows: first, it extracts spectral features and spatial features separately; second, the dense connection can deepen the structure. These two advantages ensure that FDSSC can extract more discriminative features. However, our method improves the OA by compared with FDSSC, and the other two metrics are also higher than those of FDSSC. Although our method achieves worse results than FDSSC in some classes, its OA, AA and Kappa coefficient are the highest among these methods.

From the classification maps shown in Figure 7, the ‘salt-and-pepper’ noise is the worst for SVM because it does not incorporate spatial information in the classification, while the classification maps of Gabor-SVM and DMP-SVM show more spatial continuity because they consider spatial information. Among these methods, our method shows the least ‘salt-and-pepper’ noise, which is consistent with the results in Table 6.

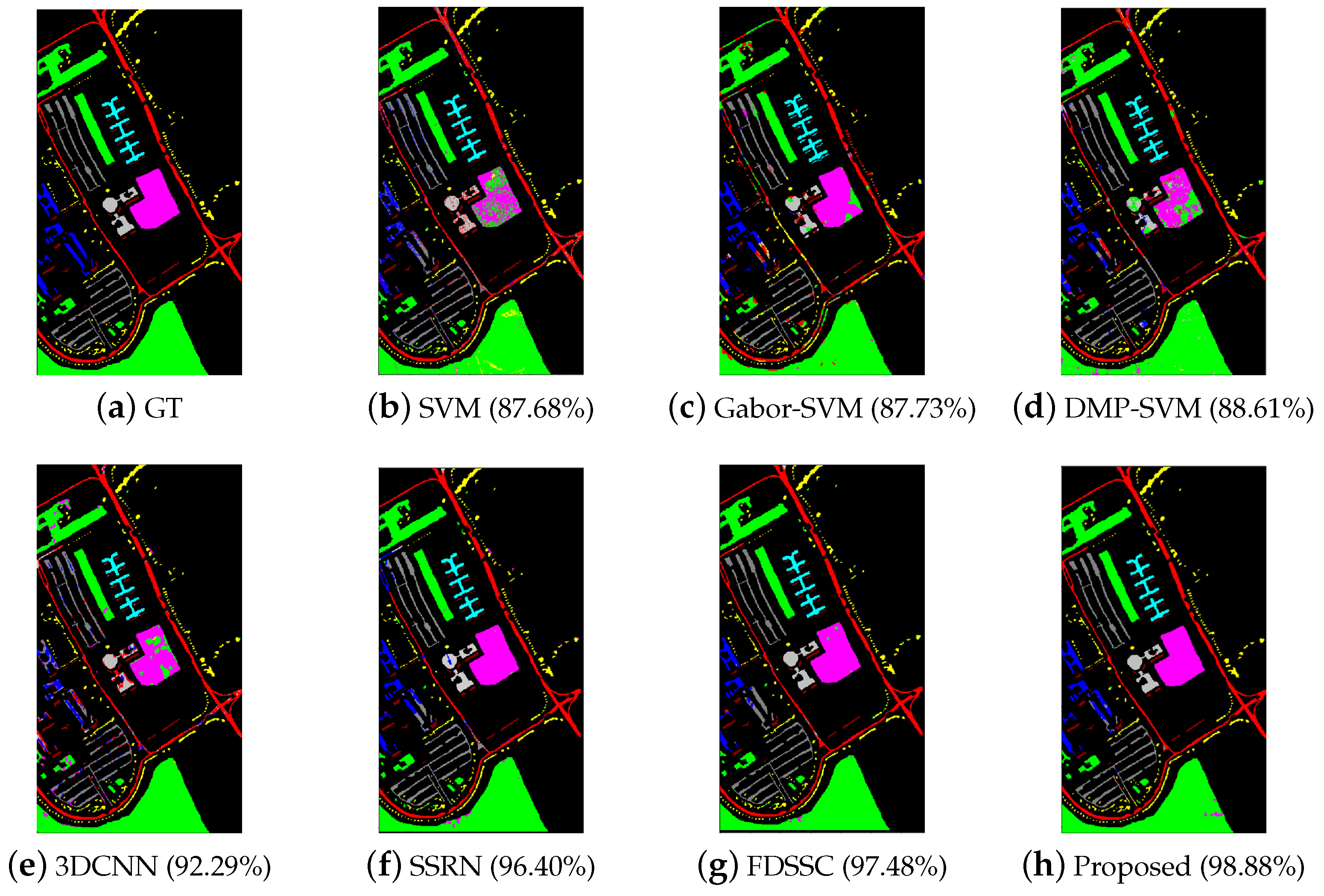

4.3.2. Classification Maps and Result of UP Dataset

The results on the Pavia University dataset are reported in Table 7, and the highest class-specific accuracies are in bold. The classification maps of different methods are shown in Figure 8.

From Table 7 we can see that our method achieves the best performance in terms of all three metrics. Although our method does not achieve the best accuracy in every class, it performs well on class 7, which has only 13 training samples, while the other methods perform poorly on this class. For class 8, the accuracies of the other methods are all lower than , which is a very low accuracy, but our method achieves an accuracy of 95%.

Although Gabor-SVM and DMP-SVM show little improvement in OA, their classification maps show more spatial continuity than that of SVM. For the deep-learning-based models, 3DCNN improves OA by about 4.5% compared with Gabor-SVM, while FDSSC improves OA by about 5% compared with 3DCNN, which is a very large improvement. However, our method achieves the highest performance in all three metrics among these methods.

4.3.3. Classification Maps and Results of SV Dataset

The results on the SV dataset are listed in Table 8, and the highest class-specific accuracies are in bold. The classification maps of different methods are shown in Figure 9.

From Table 8 we can see that SVM, Gabor-SVM and DMP-SVM perform poorly in terms of OA, with values all below 91%. Their classification maps also show large mislabeled areas. This phenomenon is avoided by 3DCNN, SSRN, FDSSC and our method. Furthermore, our method performs the best in terms of all three metrics compared with the other methods. In addition, the classification map of our method shows fewer mislabeled areas than those of the other methods. For class 15, the accuracies of the other methods are all lower than 93%, but our method achieves an accuracy of 98.28%, which is the highest among these methods.

4.4. Investigation on Running Time

Table 9, Table 10 and Table 11 list the training and test times of the seven methods on the IP, UP and SV datasets, respectively. From these tables, we can see that SVM-based methods usually take less time than deep-learning-based methods. Furthermore, Gabor-SVM and DMP-SVM take less time than SVM because the Gabor and DMP feature vectors are shorter than the original feature vector. Note that, for Gabor-SVM and DMP-SVM, the training stage does not include the process of extracting the Gabor and DMP features. Among the deep-learning-based methods, 3DCNN takes the most time due to its large input size and large number of parameters to be trained. The training and test times of SSRN and FDSSC are less than those of 3DCNN and their accuracy is much higher, which demonstrates the superiority of SSRN and FDSSC. FDSSC takes less time in the training stage but more time in the test stage compared with SSRN, because the densely connected structure helps FDSSC converge more quickly, while FDSSC usually has more parameters, which slows down the test speed. Our method takes less training time than FDSSC while achieving much higher classification accuracy.

4.5. Investigation on the Number of Training Samples

In

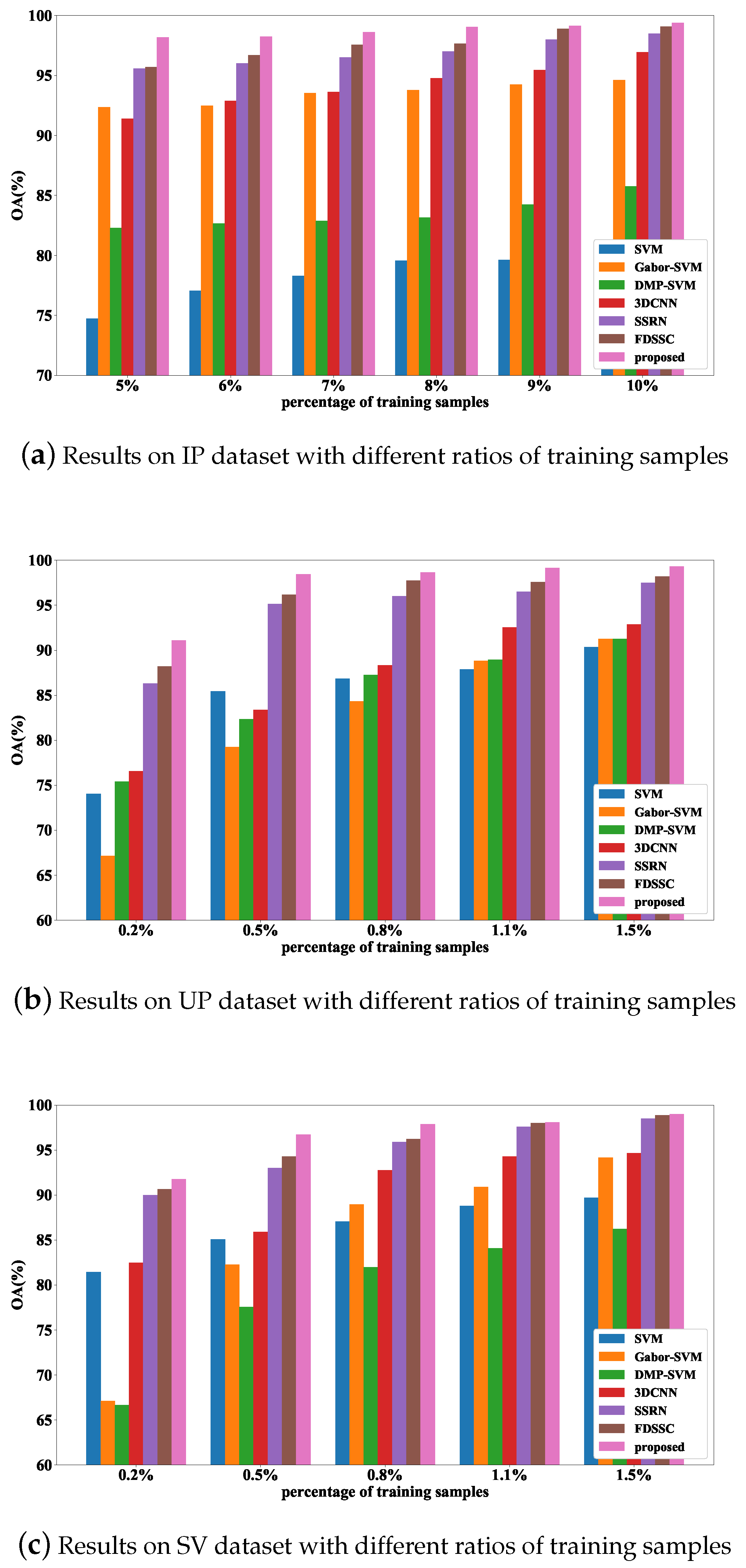

Section 4.2, we have illustrated the effectiveness of our method, especially in the case of having a small number of training samples. In this part, we would further investigate the performance with different number of training samples.

Figure 10 shows the experimental results. For the IP dataset, the proportion of training samples per class is varied from 5% to 10% in steps of 1%. For the UP and SV datasets, it is varied from 0.2% to 1.4% in steps of 0.3%.
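A minimal sketch of such a per-class split is given below. It assumes that `labels` holds only labeled pixels and that at least one training sample is kept per class. Both are assumptions made for illustration, and `sample_per_class` is a hypothetical helper rather than the code used in the experiments.

```python
import numpy as np

def sample_per_class(labels, fraction, rng):
    """Draw `fraction` of the samples of each class for training."""
    labels = np.asarray(labels)
    train_parts = []
    for c in np.unique(labels):
        idx = np.flatnonzero(labels == c)
        # Assumption: keep at least one training sample per class.
        n = max(1, int(round(fraction * idx.size)))
        train_parts.append(rng.choice(idx, size=n, replace=False))
    train_idx = np.concatenate(train_parts)
    test_idx = np.setdiff1d(np.arange(labels.size), train_idx)
    return train_idx, test_idx

# Training proportions stated in the text.
ip_fractions = [round(0.05 + 0.01 * i, 2) for i in range(6)]       # 5% to 10%
up_sv_fractions = [round(0.002 + 0.003 * i, 3) for i in range(5)]  # 0.2% to 1.4%

# Toy label vector with three classes of 200, 100 and 40 samples.
labels = np.repeat([0, 1, 2], [200, 100, 40])
rng = np.random.default_rng(0)
train_idx, test_idx = sample_per_class(labels, ip_fractions[0], rng)
print(train_idx.size, test_idx.size)
```

Sampling within each class keeps small classes represented even at the lowest proportions, where a purely random split could miss them entirely.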

As expected, accuracy increases with the number of training samples, and our method performs better than the other methods in every case. From Figure 10a, we can see that SVM has the worst performance among the seven methods, with an OA no higher than 80% in all cases. Gabor-SVM outperforms DMP-SVM in all cases. As the number of training samples increases, 3DCNN gradually outperforms Gabor-SVM. The accuracy of FDSSC is slightly higher than that of SSRN. Our method is always better than FDSSC in terms of OA, especially when training samples are very few, which indicates the superiority of our method.

As shown in Figure 10b, Gabor-SVM interestingly performs worse than DMP-SVM on the UP dataset. When the training samples are very few (i.e., 0.2%–0.5%), SVM performs better than DMP-SVM, Gabor-SVM and 3DCNN. This indicates that, with very few training samples, the Gabor and DMP features give little improvement for classification and 3DCNN is not suitable either, while SVM seems well suited to this case. In contrast, FDSSC, SSRN and our method still perform well in all cases, which indicates the stability of these three methods. Our method clearly performs better than FDSSC and SSRN in all cases.

As shown in Figure 10c, as on the UP dataset, SVM performs well on the SV dataset and is always better than DMP-SVM. Gabor-SVM performs worse than SVM when the training samples are very few, but outperforms it as the number of training samples increases. The Gabor feature also seems more suitable for the SV dataset than the DMP feature. FDSSC, SSRN and our method again perform well, much better than 3DCNN, and our method achieves the highest accuracy in all cases.

Thus, our method is well suited to cases where the number of training samples is limited.

4.6. Effectiveness of Channel Attention Mechanism and Spatial Attention Mechanism

To validate the effectiveness of the channel-wise (spectral) attention mechanism and the spatial-wise attention mechanism, we conduct three additional experiments: without spectral attention and spatial attention (denoted as proposed1), with spatial attention only (denoted as proposed2) and with spectral attention only (denoted as proposed3). From Figure 11 we can see that, without the attention mechanisms, the accuracy decreases on all three datasets, which demonstrates the effectiveness of the attention mechanisms. Furthermore, the spectral attention mechanism plays a more important role in HSI classification than the spatial attention mechanism.
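The ablation variants can be thought of as two switches, one per branch. The sketch below is a simplified, CBAM-style illustration in NumPy and not the network used in the experiments: the weights are random, the spatial gate mixes the two pooled maps with a 1x1 weighting instead of a convolution, and all names (`VARIANTS`, `apply_attention`, `use_spectral`, `use_spatial`) are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def channel_attention(x, w1, w2):
    """CBAM-style channel gate for a feature map x of shape (C, H, W)."""
    def mlp(v):
        return w2 @ np.maximum(w1 @ v, 0.0)      # shared two-layer MLP
    gate = sigmoid(mlp(x.mean(axis=(1, 2))) + mlp(x.max(axis=(1, 2))))
    return x * gate[:, None, None]

def spatial_attention(x, mix):
    """Simplified spatial gate (assumption: 1x1 mix, not a convolution)."""
    pooled = np.stack([x.mean(axis=0), x.max(axis=0)])   # (2, H, W)
    gate = sigmoid(np.tensordot(mix, pooled, axes=1))    # (H, W)
    return x * gate[None, :, :]

# Variant names follow the text; the flag names are illustrative.
VARIANTS = {
    "proposed1": {"use_spectral": False, "use_spatial": False},
    "proposed2": {"use_spectral": False, "use_spatial": True},
    "proposed3": {"use_spectral": True, "use_spatial": False},
    "proposed": {"use_spectral": True, "use_spatial": True},
}

def apply_attention(spectral_feat, spatial_feat, params, use_spectral, use_spatial):
    # Channel attention acts on the spectral branch, spatial attention on the spatial branch.
    if use_spectral:
        spectral_feat = channel_attention(spectral_feat, params["w1"], params["w2"])
    if use_spatial:
        spatial_feat = spatial_attention(spatial_feat, params["mix"])
    return spectral_feat, spatial_feat

rng = np.random.default_rng(0)
spec = rng.normal(size=(8, 5, 5))    # toy spectral-branch features
spat = rng.normal(size=(8, 5, 5))    # toy spatial-branch features
params = {"w1": rng.normal(size=(2, 8)), "w2": rng.normal(size=(8, 2)),
          "mix": rng.normal(size=2)}

outputs = {name: apply_attention(spec, spat, params, **flags)
           for name, flags in VARIANTS.items()}
```

Switching one flag at a time isolates the contribution of each attention block, which is what the comparison between proposed2 and proposed3 relies on.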

5. Conclusions

In this paper, a Double-Branch Multi-Attention mechanism network was proposed for HSI classification. It has two branches that extract spectral and spatial features respectively, using densely connected 3D convolution layers with kernels of different sizes. Furthermore, according to the different purposes and characteristics of the two branches, channel attention and spatial attention are applied in the two branches respectively to extract more discriminative features. Our work is built on FDSSC and CBAM: FDSSC is the state-of-the-art architecture in HSI classification, and CBAM is a novel and efficient attention network in image classification. Although the change may seem like a minor improvement, extensive experimental results show that our proposed method outperforms other state-of-the-art methods, especially when training samples are very few. Furthermore, the training time is also reduced compared with the other two deep-learning methods because the attention blocks speed up the convergence of the network.

However, the attention blocks increase the number of network parameters, which raises the time cost of the test stage. On the one hand, 3DCNN uses three-dimensional kernels, which results in more parameters to train. To reduce this impact, we first reduce the spectral channels to 1 using a 3D kernel with size of (L represents the number of spectral channels), and set the kernel size in the spectral domain to 1 in the dense spectral block. In future work, we will try to use a 2DCNN directly to extract spatial information. On the other hand, a Recurrent Neural Network (RNN) seems more suitable than a CNN for dealing with sequence data because it considers the order and relationship of the data. HSI data can be regarded as sequence data, and the relationship between different bands is useful for classification. In future work, we will therefore try to use an RNN to extract spectral information.
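The parameter argument can be made concrete with a small count. The sketch below is illustrative only: the 3x3 spatial extent and the 24 input and output feature maps are hypothetical values chosen for the example, not settings taken from the network.

```python
def conv_params(kernel_shape, in_channels, out_channels, bias=True):
    """Number of trainable parameters of a convolution layer."""
    n = in_channels * out_channels
    for k in kernel_shape:
        n *= k
    return n + (out_channels if bias else 0)

# Hypothetical layer with 24 input and 24 output feature maps.
p_3d = conv_params((3, 3, 3), 24, 24)            # full 3D kernel
p_3d_spectral1 = conv_params((3, 3, 1), 24, 24)  # spectral kernel size set to 1
p_2d = conv_params((3, 3), 24, 24)               # plain 2D kernel
print(p_3d, p_3d_spectral1, p_2d)
```

With the spectral kernel size set to 1, the 3D layer has the same number of weights as a 2D layer with the same spatial extent, which is consistent with the plan to move to a 2DCNN for spatial information.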