A Framelet-Based Iterative Pan-Sharpening Approach

Abstract

1. Introduction

2. Related Work

3. The Proposed Method

- (1) For , update each by solving:

- (2) For , update each by solving:

- (3) For , update each by solving:

- (4) For , update each by .

- (5) For , update each by .

| Algorithm 1 ADMM scheme for the proposed model |
|---|
| Input: panchromatic image , upsampled multispectral image , . |
| Output: high-resolution multispectral image . |
| while not converged do |
| for do |
| (1) Solve by (12). |
| (2) Solve by (14). |
| (3) Solve by (15). |
| (4) Update by . |
| (5) Update by . |
| end for |
| end while |
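
The alternating pattern of Algorithm 1 (subproblem solves followed by multiplier updates) can be illustrated on a much smaller problem. The following is a minimal sketch and not the model of this paper: it applies scaled-form ADMM to a 1-D analysis-sparsity problem, with a forward-difference matrix `D` standing in for the framelet transform. The function names, the parameters `lam`, `rho`, and `n_iter`, and the objective itself are assumptions made for illustration only.

```python
import numpy as np


def soft_threshold(v, tau):
    """Element-wise soft-thresholding, the proximal map of tau * ||.||_1."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)


def admm_l1_analysis(f, lam=0.5, rho=1.0, n_iter=200):
    """Minimize 0.5*||u - f||^2 + lam*||D u||_1 with scaled-form ADMM.

    D is a 1-D forward-difference matrix. It is only a stand-in
    (assumption) for the framelet analysis operator used in the paper.
    """
    n = f.size
    D = np.diff(np.eye(n), axis=0)      # (n-1) x n difference operator
    A = np.eye(n) + rho * D.T @ D       # system matrix of the quadratic step
    d = np.zeros(n - 1)                 # auxiliary variable, d ~ D u
    b = np.zeros(n - 1)                 # scaled Lagrange multiplier
    u = f.copy()
    for _ in range(n_iter):
        # Quadratic subproblem: (I + rho D^T D) u = f + rho D^T (d - b)
        u = np.linalg.solve(A, f + rho * D.T @ (d - b))
        Du = D @ u
        # l1 subproblem: closed-form shrinkage
        d = soft_threshold(Du + b, lam / rho)
        # Multiplier update
        b = b + Du - d
    return u
```

Calling `admm_l1_analysis` on a noisy step signal returns a smoothed signal with the same mean. The sketch has one quadratic solve, one shrinkage step, and one multiplier, whereas Algorithm 1 lists three solves and two multiplier updates per pass.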

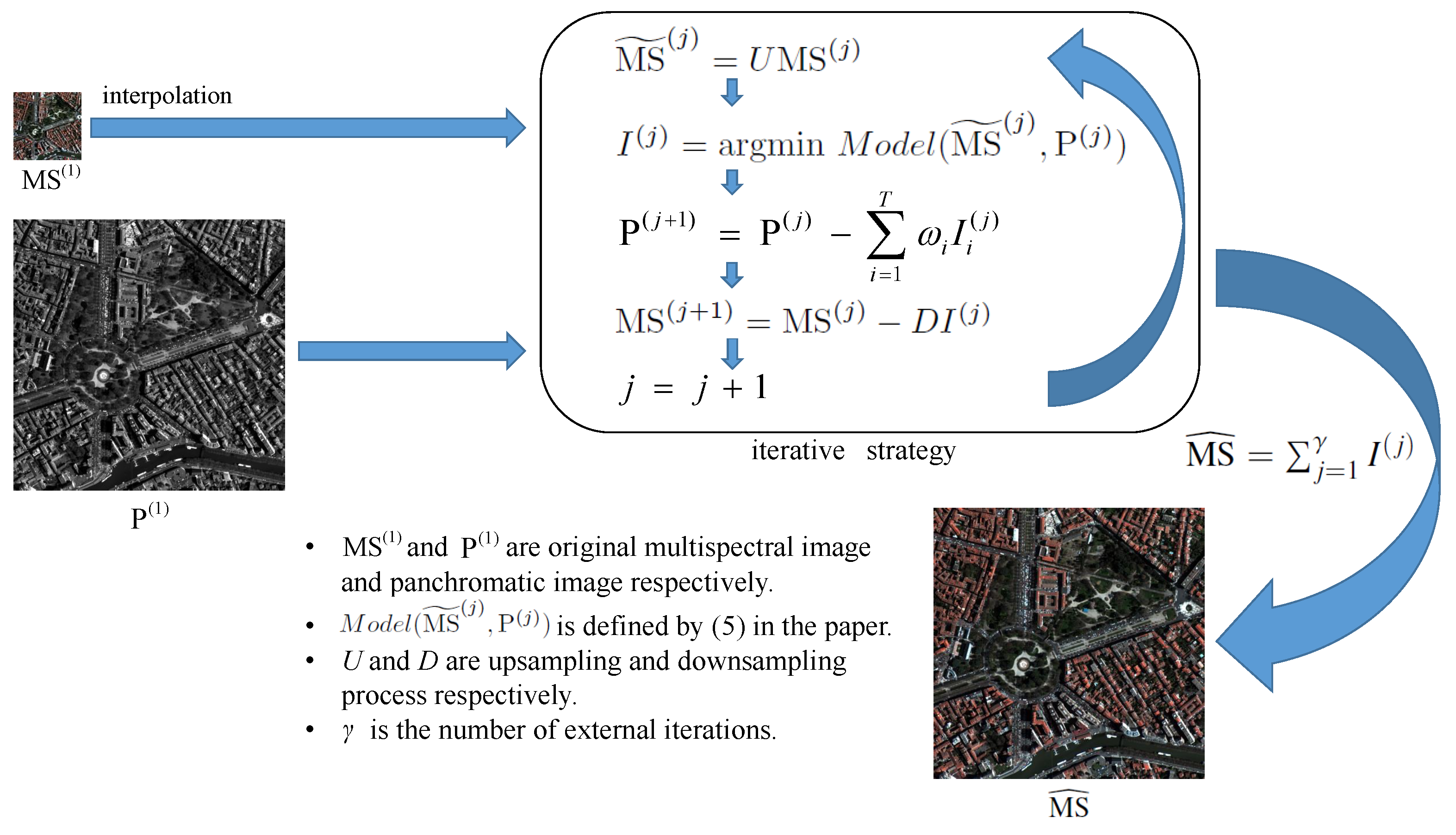

| Algorithm 2 The proposed iterative pan-sharpening algorithm |
|---|
| Input: panchromatic image , multispectral image , . |
| Output: high-resolution multispectral image . |
| 1. Initialization: . |
| for do |
| (1) Upsample to obtain . |
| (2) Compute by implementing Algorithm 1 ( instead of as input). |
| (3) Update by . |
| (4) Update by . |
| end for |
| 2. Compute the final output: . |
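
The update formulas of Algorithm 2 are not legible in the listing above, so the following sketch only illustrates one common way such an outer loop is organised: a residual-feedback (iterative refinement) scheme in which the inner solver is re-applied to whatever the current estimate fails to explain at low resolution. Whether Algorithm 2 uses exactly these updates is an assumption. The helpers `upsample`, `downsample`, and `inner_solver` are hypothetical placeholders, and `inner_solver` is deliberately imperfect (it ignores the panchromatic image and attenuates its input) so that the effect of the outer loop is visible.

```python
import numpy as np


def upsample(x, s):
    """Nearest-neighbour upsampling by factor s (placeholder interpolator)."""
    return np.kron(x, np.ones((s, s)))


def downsample(x, s):
    """Block-mean downsampling by factor s (placeholder degradation model)."""
    h, w = x.shape
    return x.reshape(h // s, s, w // s, s).mean(axis=(1, 3))


def inner_solver(ms_up, pan):
    """Hypothetical stand-in for the inner solver (Algorithm 1).

    Assumption: it simply attenuates the upsampled band and ignores `pan`,
    mimicking a solver that does not fully reproduce its input.
    """
    return 0.8 * ms_up


def iterative_refinement(pan, ms, s=4, n_outer=5):
    """Accumulate inner-solver outputs computed on low-resolution residuals."""
    residual = ms.astype(float)          # first pass works on the MS band itself
    total = np.zeros(pan.shape)          # running high-resolution estimate
    for _ in range(n_outer):
        total = total + inner_solver(upsample(residual, s), pan)
        residual = ms - downsample(total, s)   # what is still unexplained
    return total
```

With these placeholders the low-resolution mismatch shrinks geometrically with the number of outer iterations. With a real solver the gain would typically level off after some number of iterations.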

4. Results and Discussion

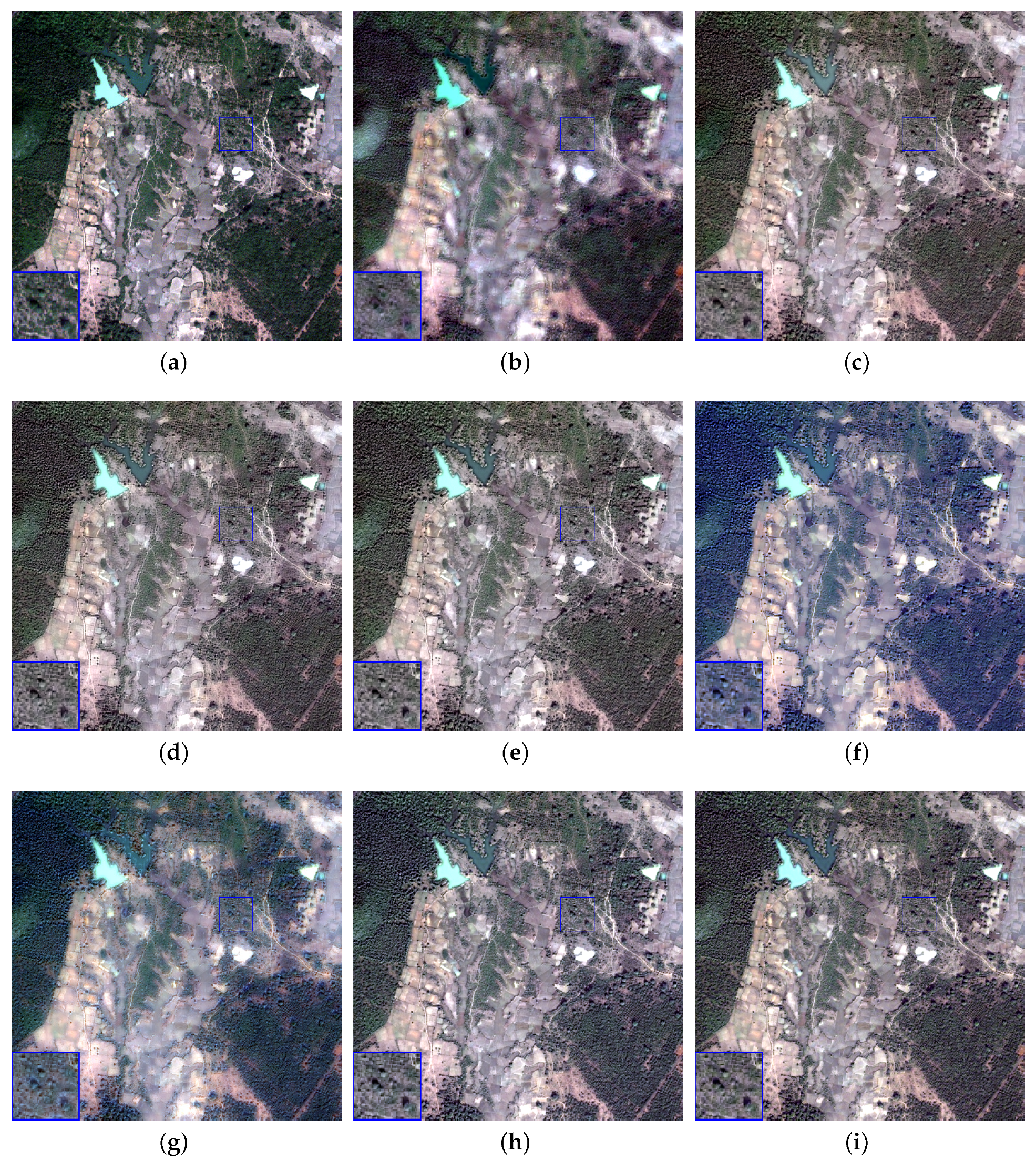

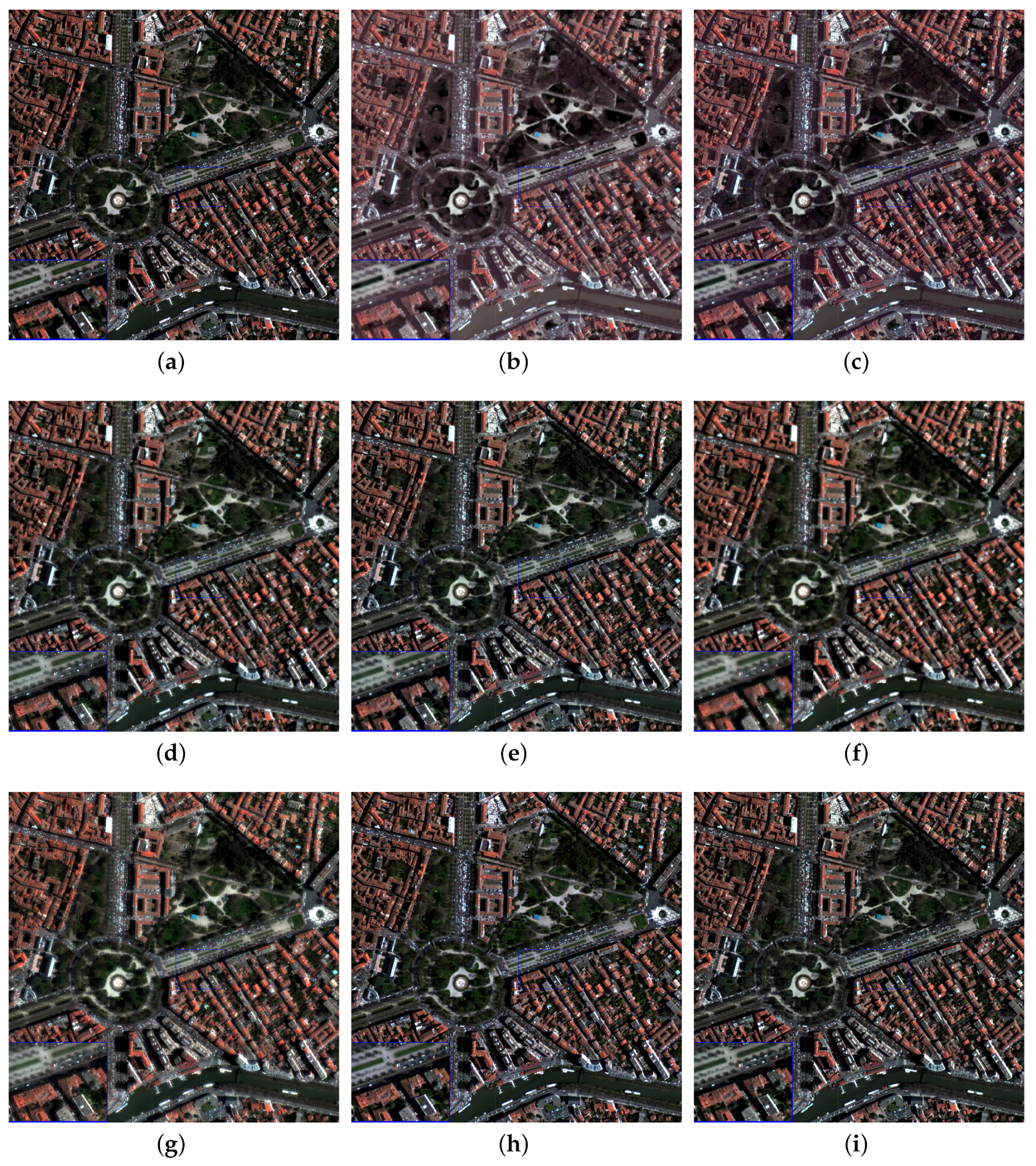

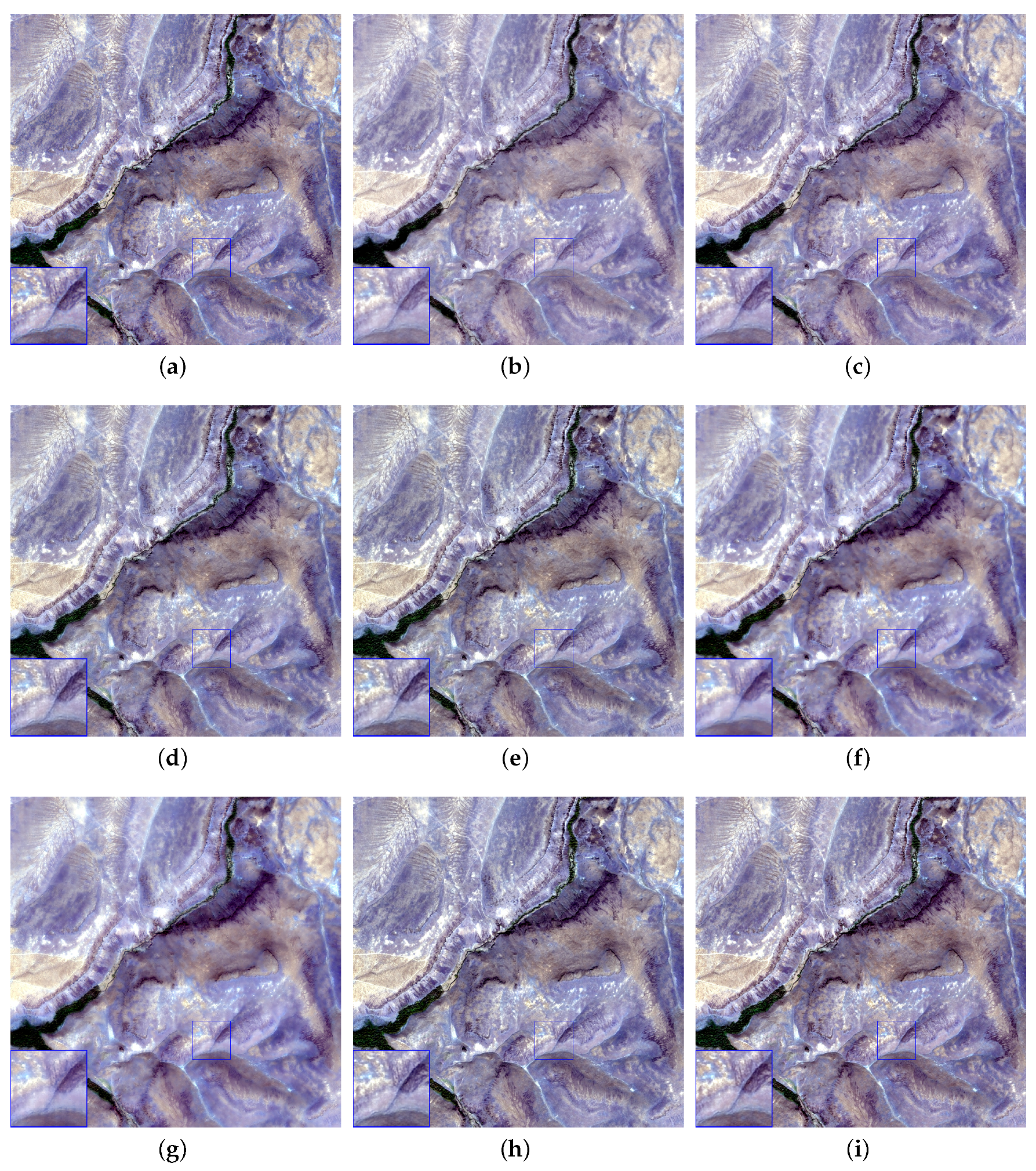

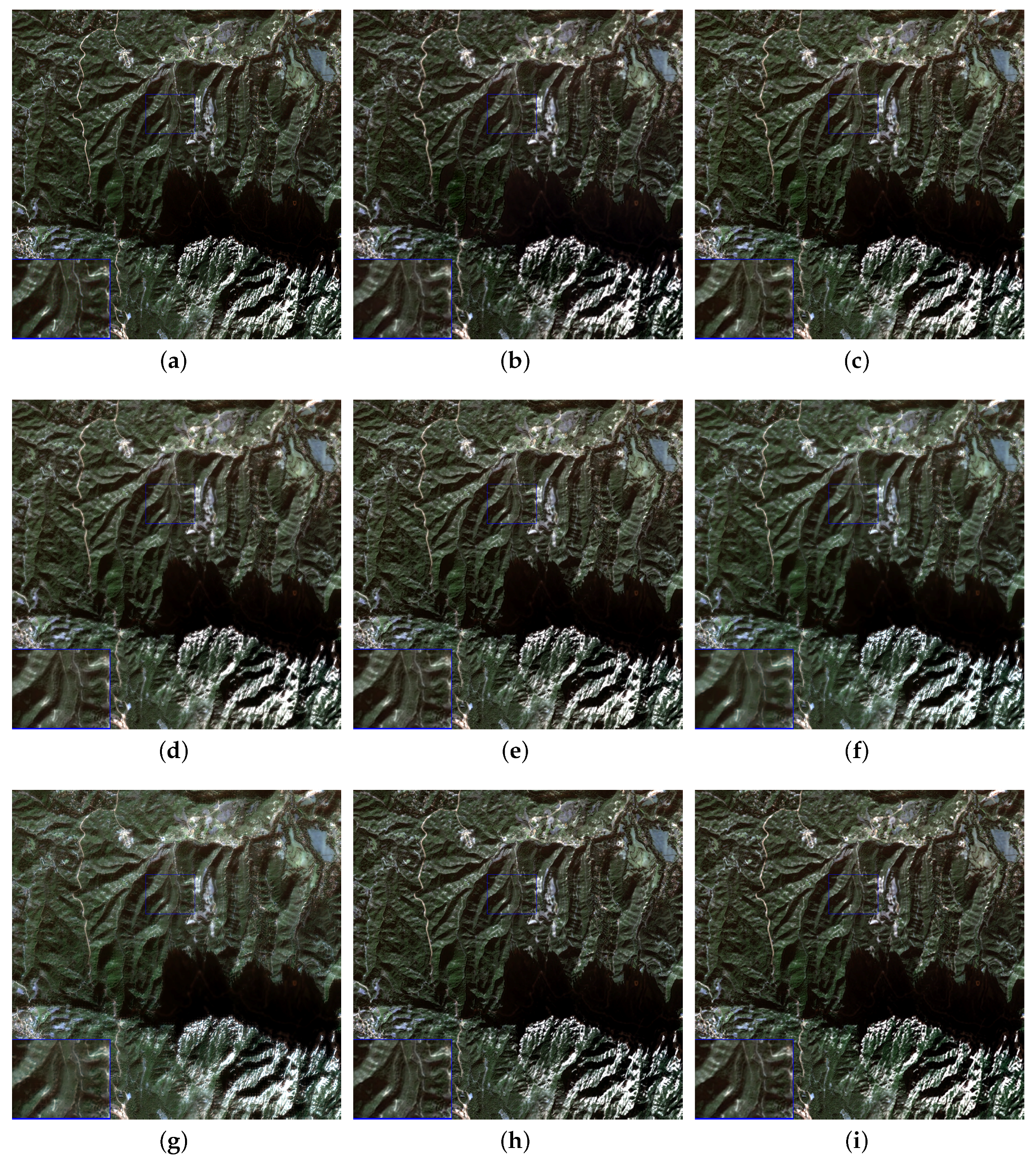

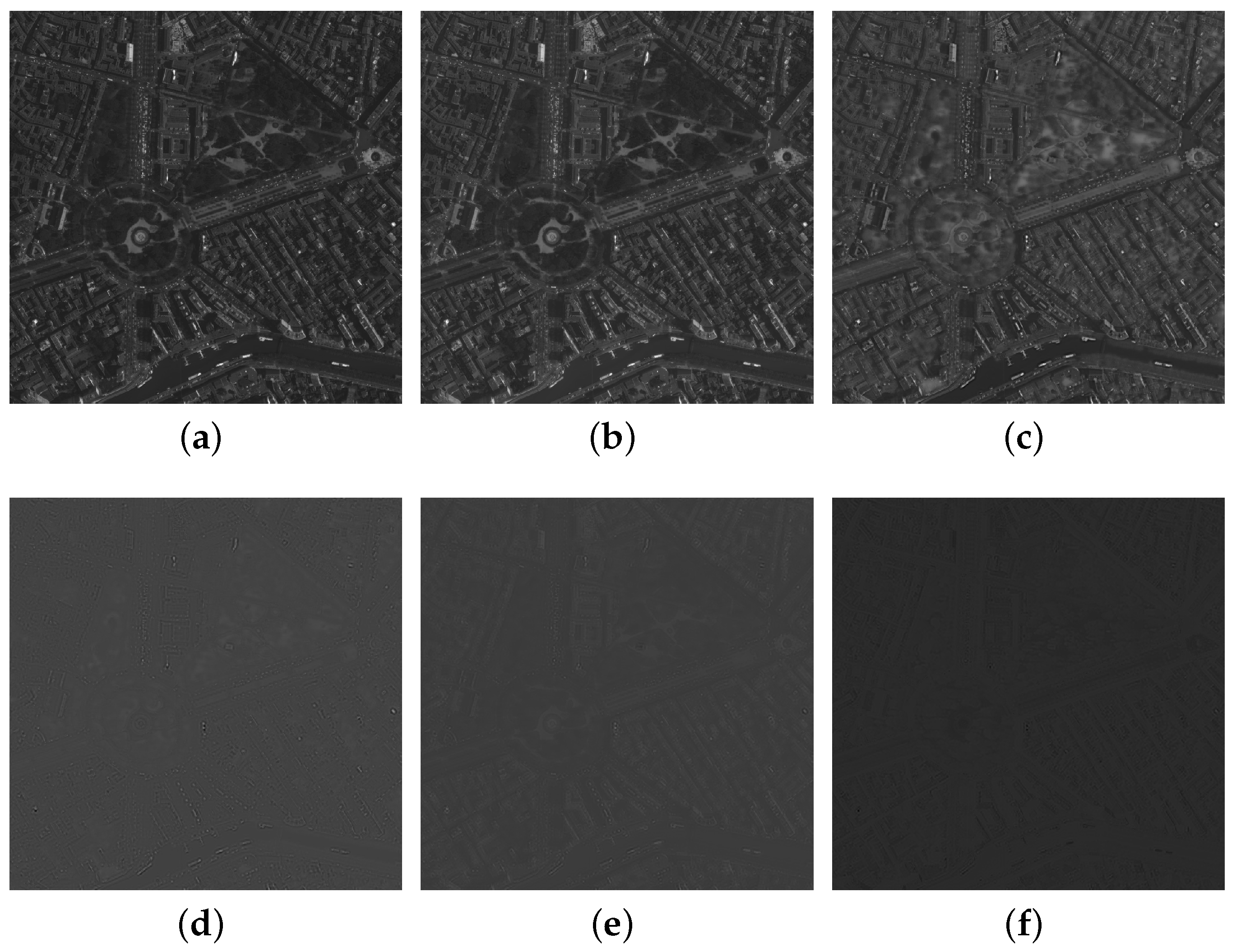

4.1. Visual Comparison

4.2. Quantitative Comparison
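
Several of the indices reported in the comparison tables (RMSE, PSNR, SAM, ERGAS) have widely used textbook definitions, sketched below for a reference image and a fused image stored as arrays of shape `(H, W, bands)`. This is a sketch under stated assumptions: intensities are taken to lie in [0, 1] (so the PSNR peak is 1), SAM is reported in degrees, and the resolution ratio defaults to 4. The exact normalisations used for the tables are not stated in this section. The function names are illustrative.

```python
import numpy as np


def rmse(ref, est):
    """Root-mean-square error over all pixels and bands."""
    return float(np.sqrt(np.mean((ref - est) ** 2)))


def psnr(ref, est, peak=1.0):
    """Peak signal-to-noise ratio in dB (assumes ref != est)."""
    return float(20.0 * np.log10(peak / rmse(ref, est)))


def sam_degrees(ref, est, eps=1e-12):
    """Mean spectral angle between per-pixel spectra, in degrees."""
    dot = np.sum(ref * est, axis=-1)
    norms = np.linalg.norm(ref, axis=-1) * np.linalg.norm(est, axis=-1)
    cos = np.clip(dot / (norms + eps), -1.0, 1.0)
    return float(np.degrees(np.mean(np.arccos(cos))))


def ergas(ref, est, ratio=4):
    """ERGAS: 100/ratio * sqrt(mean over bands of MSE_b / mean_b^2)."""
    band_mse = np.mean((ref - est) ** 2, axis=(0, 1))
    band_mean = np.mean(ref, axis=(0, 1))
    return float(100.0 / ratio * np.sqrt(np.mean(band_mse / band_mean ** 2)))
```

Lower is better for SAM, RMSE, and ERGAS, and higher is better for PSNR. With a peak of 1, PSNR reduces to 20·log10(1/RMSE). The tabulated pairs are consistent with this relation up to rounding: an RMSE of 0.0296, for example, corresponds to roughly 30.57 dB.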

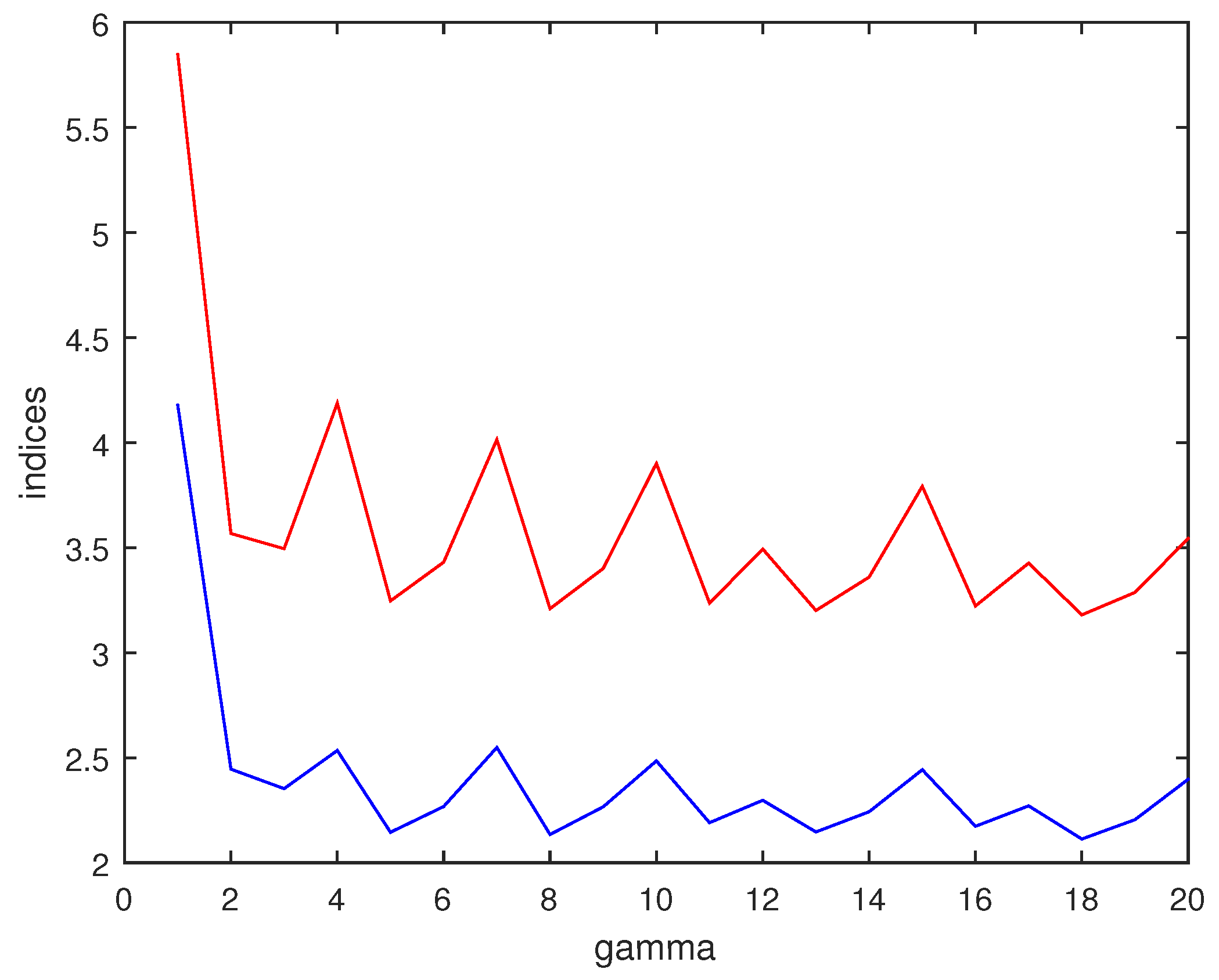

4.3. Discussion on the Number of Outer Iterations

4.4. Time Comparison with RKHS Method

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Method | SAM | Q4 | Q | RASE | ERGAS | CC | RMSE | PSNR |
|---|---|---|---|---|---|---|---|---|
| PCA | 5.2812 | 0.7734 | 0.3895 | 21.5874 | 4.7453 | 0.7520 | 0.1246 | 18.0910 |
| GS | 2.3162 | 0.8510 | 0.8345 | 12.3841 | 2.9310 | 0.9433 | 0.0715 | 22.9177 |
| HPF | 2.1727 | 0.8561 | 0.8299 | 8.5465 | 2.0681 | 0.9420 | 0.0493 | 26.1392 |
| MTFGLP | 2.2767 | 0.8756 | 0.8399 | 6.1273 | 1.6287 | 0.9439 | 0.0354 | 29.0296 |
| PHLP | 5.0053 | 0.8184 | 0.7736 | 11.5526 | 2.9020 | 0.9077 | 0.0667 | 23.5214 |
| NIHS | 2.9060 | 0.7212 | 0.7521 | 14.4955 | 3.4247 | 0.9161 | 0.0837 | 21.5503 |
| RKHS | 3.0682 | 0.8725 | 0.8380 | 7.4955 | 1.9868 | 0.9413 | 0.0433 | 27.2789 |
| Proposed | 2.2422 | 0.8816 | 0.8525 | 5.1265 | 1.4605 | 0.9484 | 0.0296 | 30.5785 |

| Method | SAM | Q4 | Q | RASE | ERGAS | CC | RMSE | PSNR |
|---|---|---|---|---|---|---|---|---|
| PCA | 9.5457 | 0.7829 | 0.8387 | 32.8033 | 8.0507 | 0.9276 | 0.0637 | 23.9147 |
| GS | 9.1222 | 0.8336 | 0.8900 | 28.8060 | 6.4917 | 0.9645 | 0.0560 | 25.0434 |
| HPF | 10.8694 | 0.8376 | 0.9243 | 27.8449 | 6.7583 | 0.9722 | 0.0541 | 25.3382 |
| MTFGLP | 4.7925 | 0.9063 | 0.9653 | 14.6515 | 3.2470 | 0.9801 | 0.0285 | 30.9155 |
| PHLP | 3.9558 | 0.7749 | 0.9186 | 23.6349 | 4.8308 | 0.9508 | 0.0459 | 26.7620 |
| NIHS | 5.8053 | 0.7807 | 0.8954 | 32.9408 | 6.6098 | 0.9500 | 0.0640 | 23.8784 |
| RKHS | 3.8294 | 0.9071 | 0.9710 | 12.0542 | 2.5088 | 0.9829 | 0.0234 | 32.6103 |
| Proposed | 3.2465 | 0.9278 | 0.9775 | 10.3886 | 2.1452 | 0.9857 | 0.0202 | 33.9019 |

| Method | SAM | Q4 | Q | RASE | ERGAS | CC | RMSE | PSNR |
|---|---|---|---|---|---|---|---|---|
| PCA | 7.1137 | 0.9256 | 0.9137 | 17.4524 | 3.9960 | 0.9608 | 0.0778 | 22.1796 |
| GS | 5.2254 | 0.9312 | 0.9438 | 13.1991 | 3.2049 | 0.9776 | 0.0588 | 24.6058 |
| HPF | 4.1352 | 0.9185 | 0.9487 | 9.9563 | 2.4925 | 0.9832 | 0.0444 | 27.0547 |
| MTFGLP | 4.3926 | 0.9372 | 0.9531 | 9.1386 | 2.3852 | 0.9857 | 0.0407 | 27.7991 |
| PHLP | 4.0716 | 0.7879 | 0.9058 | 13.5540 | 3.2978 | 0.9720 | 0.0604 | 24.3753 |
| NIHS | 1.7128 | 0.7751 | 0.8781 | 29.6650 | 5.6749 | 0.9645 | 0.1323 | 17.5718 |
| RKHS | 5.3524 | 0.9340 | 0.9546 | 11.7716 | 2.9588 | 0.9864 | 0.0525 | 25.6000 |
| Proposed | 3.5176 | 0.9441 | 0.9647 | 6.6818 | 1.7829 | 0.9879 | 0.0298 | 30.5188 |

| Method | SAM | Q4 | Q | RASE | ERGAS | CC | RMSE | PSNR |
|---|---|---|---|---|---|---|---|---|
| PCA | 8.4179 | 0.8594 | 0.9002 | 23.3863 | 6.1677 | 0.9653 | 0.0565 | 24.9547 |
| GS | 7.0558 | 0.8976 | 0.9248 | 25.6653 | 5.2751 | 0.9804 | 0.0620 | 24.1470 |
| HPF | 7.7539 | 0.8707 | 0.9172 | 26.0012 | 5.5211 | 0.9727 | 0.0628 | 24.0340 |
| MTFGLP | 3.8118 | 0.9162 | 0.9362 | 20.5174 | 4.1687 | 0.9795 | 0.0496 | 26.0915 |
| PHLP | 5.8827 | 0.7768 | 0.9044 | 21.1517 | 4.8940 | 0.9659 | 0.0511 | 25.8270 |
| NIHS | 3.8008 | 0.7848 | 0.8770 | 34.3983 | 6.4343 | 0.9588 | 0.0831 | 21.6032 |
| RKHS | 2.5938 | 0.9266 | 0.9530 | 11.8152 | 2.8106 | 0.9842 | 0.0286 | 30.8851 |
| Proposed | 3.2818 | 0.9300 | 0.9566 | 10.8640 | 2.6335 | 0.9843 | 0.0263 | 31.6141 |

| Case | SAM | Q4 | Q | RASE | ERGAS | CC | RMSE | PSNR |
|---|---|---|---|---|---|---|---|---|
| | 3.6036 | 0.8633 | 0.8274 | 10.0852 | 2.5146 | 0.9393 | 0.0582 | 24.7013 |
| | 2.6197 | 0.8796 | 0.8468 | 6.3718 | 1.6839 | 0.9471 | 0.0368 | 28.6898 |
| | 2.2813 | 0.8816 | 0.8499 | 5.4827 | 1.5165 | 0.9476 | 0.0316 | 29.9951 |
| | 2.3563 | 0.8828 | 0.8496 | 5.2938 | 1.5354 | 0.9465 | 0.0306 | 30.2996 |
| | 2.2422 | 0.8816 | 0.8525 | 5.1265 | 1.4605 | 0.9484 | 0.0296 | 30.5785 |
| | 2.3382 | 0.8822 | 0.8500 | 5.1863 | 1.5145 | 0.9465 | 0.0299 | 30.4778 |

| Case | SAM | Q4 | Q | RASE | ERGAS | CC | RMSE | PSNR |
|---|---|---|---|---|---|---|---|---|
| | 5.8470 | 0.8758 | 0.9383 | 19.2451 | 4.1779 | 0.9754 | 0.0374 | 28.5467 |
| | 3.5671 | 0.9187 | 0.9725 | 11.8189 | 2.4454 | 0.9830 | 0.0230 | 32.7816 |
| | 3.4947 | 0.9244 | 0.9746 | 11.5754 | 2.3527 | 0.9839 | 0.0225 | 32.9624 |
| | 4.1877 | 0.9247 | 0.9731 | 12.5506 | 2.5343 | 0.9843 | 0.0244 | 32.2598 |
| | 3.2465 | 0.9278 | 0.9775 | 10.3886 | 2.1452 | 0.9857 | 0.0202 | 33.9019 |
| | 3.4306 | 0.9275 | 0.9761 | 10.9675 | 2.2676 | 0.9854 | 0.0213 | 33.4309 |

| Case | SAM | Q4 | Q | RASE | ERGAS | CC | RMSE | PSNR |
|---|---|---|---|---|---|---|---|---|
| | 5.2761 | 0.9338 | 0.9484 | 12.1816 | 3.0319 | 0.9816 | 0.0543 | 25.3026 |
| | 4.2752 | 0.9422 | 0.9608 | 8.2856 | 2.1955 | 0.9872 | 0.0369 | 28.6502 |
| | 3.7884 | 0.9436 | 0.9637 | 7.1468 | 1.8866 | 0.9877 | 0.0319 | 29.9344 |
| | 3.6676 | 0.9439 | 0.9640 | 7.3470 | 1.9681 | 0.9878 | 0.0328 | 29.6944 |
| | 3.5176 | 0.9441 | 0.9647 | 6.6818 | 1.7829 | 0.9879 | 0.0298 | 30.5188 |
| | 3.2613 | 0.9433 | 0.9652 | 6.7456 | 1.8407 | 0.9876 | 0.0301 | 30.4363 |

| Case | SAM | Q4 | Q | RASE | ERGAS | CC | RMSE | PSNR |
|---|---|---|---|---|---|---|---|---|
| | 6.8677 | 0.9109 | 0.9284 | 23.4889 | 4.8319 | 0.9803 | 0.0568 | 24.9167 |
| | 3.6602 | 0.9270 | 0.9504 | 13.6097 | 3.1098 | 0.9824 | 0.0329 | 29.6569 |
| | 2.8394 | 0.9287 | 0.9535 | 10.9922 | 2.6297 | 0.9824 | 0.0266 | 31.5123 |
| | 2.6245 | 0.9268 | 0.9518 | 10.1182 | 2.5081 | 0.9819 | 0.0245 | 32.2318 |
| | 3.2818 | 0.9300 | 0.9566 | 10.8640 | 2.6335 | 0.9843 | 0.0263 | 31.6141 |
| | 3.8526 | 0.9295 | 0.9558 | 10.8789 | 2.6852 | 0.9841 | 0.0263 | 31.6022 |

| Method | Quickbird | Pléiades | WorldView-2 | SPOT-6 |
|---|---|---|---|---|
| RKHS | 7263.22 | 27882.87 | 16559.93 | 31576.65 |
| Proposed | 207.71 | 851.11 | 512.47 | 863.59 |
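
The relative cost in the timing table is easier to read as a ratio. The short script below divides the RKHS time by the time of the proposed method for each dataset, using only the numbers from the table. The table does not state its time unit, so only the unitless ratios are computed. The variable names are illustrative.

```python
# Running times copied from the timing table: (RKHS, Proposed) per dataset.
times = {
    "Quickbird": (7263.22, 207.71),
    "Pléiades": (27882.87, 851.11),
    "WorldView-2": (16559.93, 512.47),
    "SPOT-6": (31576.65, 863.59),
}

# Speed-up factor of the proposed method relative to RKHS.
speedup = {name: rkhs / proposed for name, (rkhs, proposed) in times.items()}

for name, ratio in speedup.items():
    print(f"{name}: {ratio:.1f}x")
```

On all four datasets the ratio falls between roughly 32 and 37.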

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhang, Z.-Y.; Huang, T.-Z.; Deng, L.-J.; Huang, J.; Zhao, X.-L.; Zheng, C.-C. A Framelet-Based Iterative Pan-Sharpening Approach. Remote Sens. 2018, 10, 622. https://doi.org/10.3390/rs10040622