Abstract

Feature-based matching methods have been widely used in remote sensing image matching given their capability to achieve excellent performance despite image geometric and radiometric distortions. However, most of the feature-based methods are unreliable for complex background variations, because the gradient or other image grayscale information used to construct the feature descriptor is sensitive to image background variations. Recently, deep learning-based methods have been proven suitable for high-level feature representation and comparison in image matching. Inspired by the progresses made in deep learning, a new technical framework for remote sensing image matching based on the Siamese convolutional neural network is presented in this paper. First, a Siamese-type network architecture is designed to simultaneously learn the features and the corresponding similarity metric from labeled training examples of matching and non-matching true-color patch pairs. In the proposed network, two streams of convolutional and pooling layers sharing identical weights are arranged without the manually designed features. The number of convolutional layers is determined based on the factors that affect image matching. The sigmoid function is employed to compute the matching and non-matching probabilities in the output layer. Second, a gridding sub-pixel Harris algorithm is used to obtain the accurate localization of candidate matches. Third, a Gaussian pyramid coupling quadtree is adopted to gradually narrow down the searching space of the candidate matches, and multiscale patches are compared synchronously. Subsequently, a similarity measure based on the output of the sigmoid is adopted to find the initial matches. Finally, the random sample consensus algorithm and the whole-to-local quadratic polynomial constraints are used to remove false matches. In the experiments, different types of satellite datasets, such as ZY3, GF1, IKONOS, and Google Earth images, with complex background variations are used to evaluate the performance of the proposed method. The experimental results demonstrate that the proposed method, which can significantly improve the matching performance of multi-temporal remote sensing images with complex background variations, is better than the state-of-the-art matching methods. In our experiments, the proposed method obtained a large number of evenly distributed matches (at least 10 times more than other methods) and achieved a high accuracy (less than 1 pixel in terms of root mean square error).

1. Introduction

Image matching refers to a fundamental task of establishing correspondences between two or more images of the same scene taken at different times, from different sensors, or from different viewpoints. It is widely used in various applications of computer vision and remote sensing, such as image registration and fusion, change detection, and environment monitoring. Automatic image matching technology has been widely studied in the fields of computer vision and remote sensing in the past decades [1,2,3,4]. Unlike those in computer vision applications, the images in remote sensing (multi-temporal and multi-source images) are usually affected by complex background variations, such as noise caused by cloud and haze weather conditions and land cover changes caused by human construction activities and disasters (earthquakes and floods) [5]. These variations make image matching difficult.

Existing matching methods are mainly divided into area-based and feature-based methods [3]. The second group is more popular than the first one due to the robustness and reliability of those methods against image geometric distortion and radiometric difference [6,7]. Feature-based methods generally consist of three steps: feature detection, description, and matching. Scale-invariant feature transform (SIFT) is one of the popular feature-based matching methods [8]. Considering the success of the SIFT algorithm, many improved versions have been proposed to enhance the performance of feature detection, description, and matching. The improved algorithms for feature detection include speeded-up robust features (SURF) [9] and complex SIFT (CSIFT) [10], among others. SURF can accelerate feature detection using FAST-Hessian and Haar wavelets, and CSIFT can detect features of complex-valued images. Many improved descriptors, such as principal component analysis–SIFT [11], gradient location and orientation histogram [12], and Affine–SIFT [13], have been investigated to make the SIFT features distinctive in image deformation. Feature descriptors are combined with several similarity metrics or constraints, such as scale-orientation joint restriction criteria [14], weight-based topological map-matching algorithm [15], normalized cross correlation and least square matching [16], perspective scale invariant feature [17], lq-estimator [18], and L2-minimizing estimation [6], to match remote sensing images. Despite significant improvements to the feature-based matching method, the manually designed methods (e.g., SIFT) cannot fully obtain the invariant descriptors with the appearance of nonlinear illumination changes, shadows, and occlusions [19]. Unfortunately, the aforementioned issues are common in high-resolution remote sensing images with background variations. Traditional image matching methods do not work well for these kinds of images.

To improve matching performance in the context of image background variations, the multiscale edge features-based rotation and scale invariant shape context are proposed [5]. In the method, the multiscale morphological operator is used to detect local scale invariant features and the descriptor in the rotation-invariant shape context is designed to match the images. However, this method does not match with high-resolution remote sensing images because unreliable edge gradient information exists in these kinds of images. A line segment-based method is proposed to match remote sensing images with large background variations [20]. In this method, line segments are extracted by using an edge drawing line (EDLines [20]) detector. Line validation is performed to obtain the main shape contour, and histogram binning shape-based descriptors are used to match the line segments. The line segment-based matching methods are robust against global geometrical distortions and can achieve high accuracy. However, line segment-based methods strongly depend on relatively stable linear objects, such as coastlines, riverside lines, and mountain ridges. In actual scenarios, the shape of linear objects may be changed significantly for remote sensing images with complex background variations. For example, the shapes of coastlines are inconsistent in long-term multi-temporal images for human construction activities or sea inundation, and the corresponding lines extracted by edge detectors do not correspond to the conjugated locations. Therefore, complex background variations between two multi-temporal remote sensing images may significantly disrupt the feature detection and representation of conjugated regions and lead to unsatisfactory matching results using the traditional feature-based methods.

In recent years, several image matching methods based on deep learning have been proposed [19,21,22]. Deep networks determine the similarity between image patches, and this is achieved by learning directly from the training examples without having to consider manually designed features. High-level features, rather than the low-level point, line, and region features, are learned in matching. The Siamese-type architecture with two-stream network is commonly used to learn similarities in image matching [19,23,24,25]. The two-stream Siamese-type network is regarded an effective deep network because of its capability for high-level feature representation. It can improve performance in terms of viewpoint, illumination changes, and background variations. However, the Siamese-type architecture mainly focused on image matching in computer vision, and it does not work well for remote sensing images with complex background variations (complex spatial structures and severe intensity changes). In addition, the benefits of multiscale patch comparison and searching efficiency are difficult to balance due to the large size of remote sensing images.

In this study, we focus on learning how to match remote sensing images with complex background variations. This study aims to design a new technical framework based on the Siamese-type network to directly determine the similarity between remote sensing image patches without manually designed features and descriptors. Currently, no generic rule is applied when determining the architecture of deep learning. Generally, the architecture and parameters are determined through many repeated trials. Thus, the integrated multiple factors of nonlinear transformations between reference and sensed images are difficult to analyze. In this study, we considered each of the factors that may be involved in the remote sensing of images with complex background variations, such as geometric deformation and quality degradation. Then, the number of convolutional layers is determined on the basis of the factors rather than the architecture of deep network by blind repeated trials. In the proposed Siamese-type architecture, the convolutional layers with rectified linear unit (ReLU) activation and one max-pooling layer are arranged to learn abstract feature representations. Subsequently, two streams of convolutional layers share identical weights. In the output layer, the sigmoid function is employed to compute the positive and negative probabilities. The similarity of patch pairs is learned from the labeled training examples of matching and non-matching true color patches. To achieve high accuracy and efficiency of patch matching, sub-pixel Harris algorithm (S-Harris) and Gaussian pyramid coupling quadtree (GPCQ) are performed to obtain accurate localization. The searching space of candidate matches is narrowed, and multiscale patches are used to synchronously capture the matches. After initial matching, the false matches are removed by the random sample consensus (RANSAC) algorithm [26] and whole-to-local quadratic polynomial constraints.

The main contribution of this study centers on the design of the deep network for multiscale patch comparison, which, when combined with the S-Harris corner detector, can improve the matching performance for remote sensing images with complex background variations.

2. Methodology

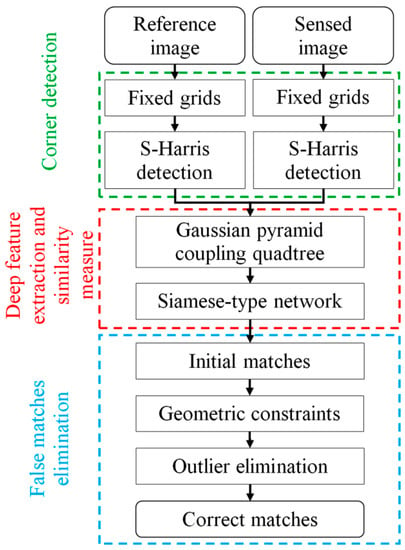

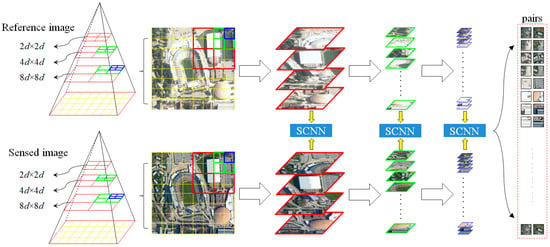

The proposed matching framework mainly includes three steps: Siamese-type network training, S-Harris corner detection, and patch matching. At the training phase, the Siamese-type network (i.e., see Figure 1) is trained by the labeled examples of matching and non-matching true color patches. Then, in the matching phase with the trained network, the reference and sensed images are divided into grids with fixed sizes, and a number of sub-pixel Harris corners are extracted from each grid. Subsequently, GPCQ is established, deep features are extracted through the Siamese-type network, and multiscale similarity measure is synchronously performed. Unlike SIFT, multiscale image blocks are extracted directly to match via the pipeline of Siamese-type network in image Gaussian pyramid instead of three separate steps of multiscale feature detection, description, and matching. It is of importance that a known spatial resolution is used to resample the approximate scale for capturing the candidate conjugated patches. Despite the restriction of spatial resolution of the reference and sensed images, it is suited to satellite images matching because of a known resolution and rough georeference obtained from the space-borne equipments, such as GPS for sensor position and star trackers for sensor attitude [27,28]. Finally, geometrical constraints are used to remove outliers based on whole-to-local quadratic polynomial functions. The detailed steps are presented in Figure 2.

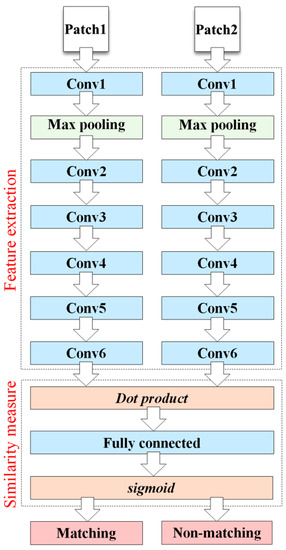

Figure 1.

Architecture of Siamese convolutional neural network.

Figure 2.

Schematic of the proposed matching framework.

As shown in Figure 1, input patches are extracted from reference and sensed images. Six convolutional layers are arranged for the extracted features in each stream. One max-pooling layer is wedged between the convolutional layers of Conv1 and Conv2, and two streams share identical weights. The similarity measure consists of one dot product layer, one fully connected layer, and one sigmoid layer. The matching and non-matching probabilities between 1 and 0 given by sigmoid function () are used to define the similarity, in which 1 and 0 correspond to matching and non-matching target output values, respectively.

2.1. Siamese Convolutional Neural Network

The architecture of the Siamese convolutional neural network (SCNN) significantly affects the performance of similarity learning. However, except for repeated trials, no effective rule on determining the architecture of SCNN has been reported in the literature. In our study, the architecture is designed on the basis of the factors that affect matching performance. For the multi-temporal remote sensing images shown in Figure 3, the complex background variations can be simplified as certain types of factors, as listed below.

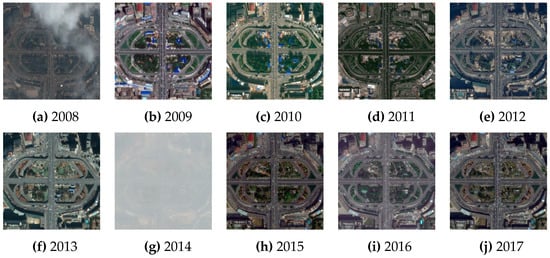

Figure 3.

Multi-temporal Google Earth images of the same area from 2008 to 2017. Images are affected by complex background variations, including small rotation and translation, nonlinear geometric deformation, shadow, image quality degradation, and land cover changes.

Factor 1: Rotation and translation. These factors are the most basic problems and should be estimated between reference and sensed images. In satellite images, rotation and translation errors generally come from image distortion and navigation error. The transformation of rotation and translation can be represented as

in which and are the coordinates of the patch centers, is the rotation angle, and denotes translation.

Factor 2: Nonlinear geometric deformation. Remote sensing images are generally affected by complex nonlinear geometric deformations, and they may be caused by topographic reliefs and earth curvature. Nonlinear geometric deformation between remote sensing images can be approximately described by polynomial transformation.

Factor 3: Shadow. Shadows are common in high-resolution remote sensing images, especially in areas with significant topography. The influence of shadow can be expressed as [29]

in which and indicate the grayscale of pixels without shadow and with shadow, respectively. Furthermore, and are two coefficients.

Factor 4: Image quality degradation. This factor is a widespread problem when surface radiations pass through the atmosphere. The grayscale degradation model can be written as [30]

in which and are the true and degradation images, respectively; is a diagonal matrix with diagonal elements composed of 0 and 1; and represents the noise vector.

Factor 5: Land cover changes. This factor is considered a widely existing complex nonlinear problem in multi-temporal remote sensing images. Nonlinear grayscale changes are difficult to describe using a generic mathematical model.

Apart from the aforementioned factors, other changes (i.e., sensor settings) may be found but are rather difficult to model. Moreover, although the relationship of pairs after decoupling is simple to determine, many uncertainties are found in the coupled factors. Consequently, the integration of factors is difficult to analyze, and the coefficients of each transformation are difficult to compute simultaneously. In this study, the combination of these factors is represented in multiple hidden layers to avoid explicit solutions. The multiple transformations between output and input of the deep network can be expressed as

in which denotes the various transformations caused by the involved factors of image matching (e.g., reflects the factor in Equation (1)), while denotes the related weights. In the proposed method, five convolutional layers are arranged to describe the five aforementioned factors. An additional layer is also added to describe the unknown factors. The architecture of the proposed Siamese-type network includes three types of layers: convolutional, pooling, and fully connected layers (Figure 1). Batch normalization [31] is wedged into each convolutional layer before the activation of neurons. In this network, two streams of convolutional and pooling layers sharing the weights are arranged without assuming any feature extraction and description. ReLU activation is employed for feature sparse representation in the convolutional layers. Max-pooling is used for feature map compression and complexity simplification. Subsequently, one fully connected layer is concatenated to the decision network. The sigmoid function is employed to define the similarity in the fully connected layer. Output of the jth feature map in the lth layer via convolution can be written as

where is the ith feature map in the layer; is the number of feature maps in the layer; and represent the convolution kernels (weights) and biases, respectively; is the convolution operator; and denotes the activation function. ReLU is applied in our method. Unlike the activation function used in the output layer of deep networks [19], in the proposed Siamese-type network, sigmoid function instead of ReLU is adopted to compute matching and non-matching probabilities, which are restricted between 0 and 1. Hence, the hinge-based loss function may be unsuitable for computing the loss in terms of the output values, while the global cost function is an alternative function with regard to sigmoid output. Therefore, the proposed Siamese-type network is trained in a supervised manner by minimizing cost function .

in which h(x) are the trained results of the output layer; y are the expected output values given in a supervised manner; and are the number of trained data and layers, respectively; is the weight decay parameter; and and are the number of feature maps in layers and , respectively. Back propagation, which is used to update the weights and biases from one layer to the next via stochastic gradient descent, can be written as

in which is the learning rate. The residual error of the output layer can be computed by

The residual error of back propagation in the ith feature map of lth convolutional layer is computed as

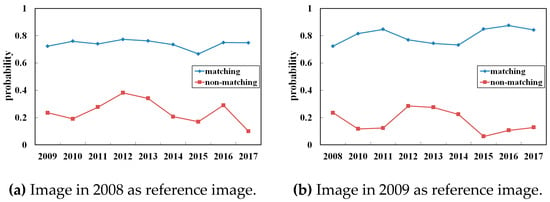

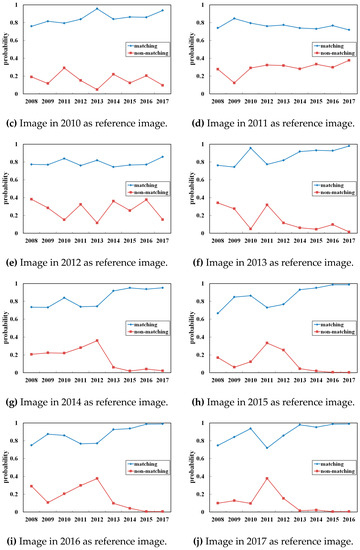

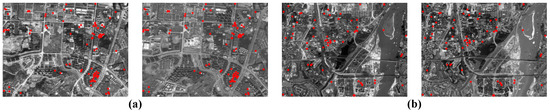

The main advantage of the proposed SCNN is its strong capability to deal with nonlinear problems using the multilayer network. Figure 4 shows the pairwise matching and non-matching probabilities computed by the proposed SCNN for all the patch pairs in Figure 3. The image patch of each year is compared with other years. The architecture of the proposed SCNN in this test is expressed as follows: The two streams initially consist of the same branch –ReLU–––ReLU––ReLU––ReLU––ReLU––ReLU. The concatenated part -Sigmoid- . denotes the convolutional layer with filters of spatial size of band number . represents a max-pooling layer with size of stride . is a fully connected layer with output units. Training dataset with true-color patches is generated from Google Earth images. Multi-temporal remote sensing images on conjugated areas are divided as image patches (size ) to be used as inputs. Overfitting is avoided with a data augmentation strategy [19], Gaussian filtering with standard deviation and respectively. Weights are initialized by Gaussian random distribution. The initial learning rate of 0.01 and momentum of 0.9 are used to train the proposed SCNN. In the results, the matching probabilities are considered high-level, whereas the non-matching probabilities are considered low-level. This indicates that the images of the same scene can be considered to be highly similar by the proposed SCNN in spite of severe intensity changes (as described in Figure 3) between images. We can thus infer that the proposed SCNN is robust to linear and nonlinear transformations, such as illumination, cloud cover, and land cover changes.

Figure 4.

Matching and non-matching probabilities between multi-temporal remote image patches in Figure 3. (a–j) show the statistical results from 2008 to 2017.

2.2. Coarse-Conjugated Patch Decision

Considering that many similar objects or patterns may exist in different image areas in remote sensing images, traditional matching methods that use exhaustive searching strategies may cause matching ambiguity and generate false matches. To limit the search area and improve efficiency, a GPCQ-based coarse-to-fine method is exploited to find the conjugated area in this study. Image patches with fixed sizes are extracted from the reference and sensed images. Patch comparison is performed from the top of the Gaussian image pyramid to the bottom, as shown in Figure 5. First, a Gaussian image pyramid is established based on the given size of patches. If the given size of patches is , then the image size in the top pyramid is and the size of next layer is . Thus, one upper layer has half spatial resolution of a pixel, so that we can cover wider area with a fixed image size in a higher layer and conduct coarse searching. In this paper, the minimum number of layers is set as 5. The bottom layer is the original image. If the number of layers established is less than 5, the original image will be enlarged to reach the minimum number of layers. Second, the Gaussian image pyramid is split into a series of patches with fixed size based on the quadtree principle. Third, SCNN is used to compare the similarity of the reference and the sensed patches in the current above-and-below scales of the Gaussian image pyramid in the range of the conjugated patches obtained from one upper layer. The patch similarity is defined based on the difference of matching probability and non-matching probability , to which denotes the maximum difference of the ith patch in the sensed image to the jth patch in the reference image. This definition satisfies the constraint in Equation (13), and patches and are regarded as a pair of conjugated regions.

in which is the second maximum difference on the ith patch in the sensed image while is the ratio threshold set to 0.6.

Figure 5.

Patch comparison via GPCQ. The red rectangles are the patches, which are located at the top layer of Gaussian image pyramid. For example, four patches with sizes are found in the top pyramid layer with size , in which is set to 96 pixels. The green and blue rectangles are the patches in the second and third layers, respectively. SCNN is used to compare the similarity between the patches in the reference and sensed images.

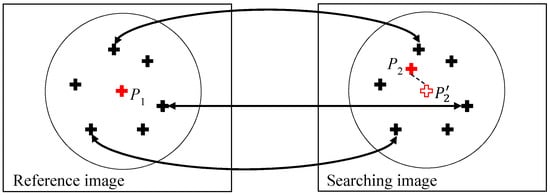

2.3. Multiscale-Conjugated Point Decision

Coarse-conjugated patches are found using the method described in the previous section. However, the conjugated patches from the comparison of grids with fixed sizes are difficult to locate precisely, and a 2D shift between coarse-conjugated patches exists. Furthermore, the center of the coarse patches cannot be easily used to obtain the accurate localization of the matches. In Ref. [32], normalized cross-correlation (NCC) is adopted to determine the precise conjugated points of optical and synthetic aperture radar images in Siamese-type networks. Score maps for the searching space with 51 × 51 pixels are generated from the reference and sensed images, and the high peak in the score map is considered a conjugated point location. However, the NCC is a time-consuming method.

In this study, we use a fast-point localization method based on the Harris algorithm [33], which is regarded as a simple and efficient approach. Only the corners of the coarse-conjugated patches, rather than every pixel in the patch, are selected as candidates to find the precise point locations. The algorithm is expressed below.

| Algorithm: |

| Input: and are the coarse-conjugated patches in the sensed and reference images, respectively; are the Harris corners in and , and is the number of Harris corners Parameters: matching probability , non-matching probability , and similarity index . Compute the distance of center to Harris corners and in the patches. Traverse the Harris corners based on from min to max. for to do for to do Compute if then Update end for end for Record the coordinates of Harris corners with . |

The location accuracy of the original Harris algorithm is expressed at the pixel level. To achieve sub-pixel accuracy, we utilize the improved sub-pixel level Harris operator (S-Harris). The neighbors of the Harris corner, rather than by using local non-maximum suppression of a response function, are considered for corner detection. The least square method is adopted to refine the location of the Harris corner at the sub-pixel level.

in which ; and are the coordinates of the refined corner and neighbors, respectively; is the number of neighbors; and is the diagonal weight matrix , which is computed with a corner response value. To ensure that the corners are evenly distributed, the reference and sensed images are divided into fixed grid sizes (i.e., pixels in this paper). After non-maximum suppression, the pixels in each grid are sorted in descending order based on their corner response values. The first 100 pixels (the given number) are considered as the corners of each grid. If the total number of S-Harris corners is less than 3000 in an image, the given number of each grid is set to 200. In this paper, the S-Harris with grids is named gridding S-Harris, while the corners detected in the whole image are named non-gridding S-Harris.

Matching S-Harris corners with a fixed window is difficult to accomplish for remote sensing images with changed scales. To find the scale-invariant matches, the multiscale patches are compared synchronously to capture the S-Harris corners for the initial matches.

2.4. Outlier Elimination

Outliers are inevitable in initial matching. Thus, polynomials and the RANSAC algorithm are usually combined to eliminate outliers. However, local geometric distortions may be inconsistent for different terrains or land covers. To overcome this problem, a whole-to-local outlier elimination is implemented from the top to bottom layers of the Gaussian pyramid. The main steps of outlier elimination are as follows:

Step 1: Find the correct match set for the top layer of the Gaussian pyramid by using geometric transformation and RANSAC.

Step 2: In the next Gaussian pyramid layer, validate all initial matches by using local polynomials. As shown in Figure 6, six correct matches for the initial match are selected to solve the local polynomial coefficients, and point is estimated with . If the residual error of and is less than the triple standard deviation, then is regarded as a pair of correct matches and is saved in set .

Figure 6.

Local outlier elimination. denotes the initial matches of the reference and sensed images. is estimated from based on local polynomial coefficients.

Step 3: Repeat Step 2 until the validation task is completed for all the Gaussian pyramid layers.

In the validation, if more than six matches are found around a point, then the quadratic polynomial of Equation (15) is selected to fit the local geometric transformation. Otherwise, the affine transformation of Equation (16) is used to describe the local geometric transformation.

in which and are the coordinates of the matches in the reference and sensed images, respectively, while and are the polynomial coefficients.

in which and are the coordinates of the matches in the reference and sensed images, respectively, while and are the coefficients of the affine transformation.

3. Experimental Evaluation and Discussion

3.1. Experimental Datasets

The training datasets are generated from Google Earth historical images. The datasets include rivers, coastlines, roads, farmlands, forests, mountains, and buildings in urban and rural areas. The datasets also contain patches with different periods, scales, illuminations, shadows, and land cover changes. A total of 80,000 pairs of patches (half matching and half non-matching patches) with a fixed size of 96 × 96 pixels are extracted in a supervised manner from Google Earth historical images. The non-matching patches examples were generated by two ways: firstly, we randomly select patches from different matching patch pairs to construct non-matching patches; besides, some examples are produced by cropping similar objects (e.g., two different buildings) from Google Earth historical images. Furthermore, 240,000 pairs of patches are extended to avoid overfitting by image rotation, Gaussian blur, and affine transformation. Therefore, the total number of patch pairs is 320,000, in which 312,000 and 8000 pairs of patches are randomly selected as training and test datasets, respectively.

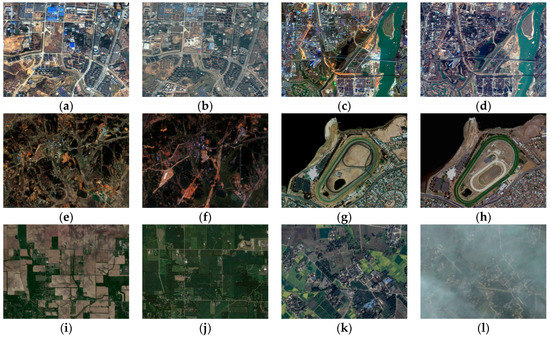

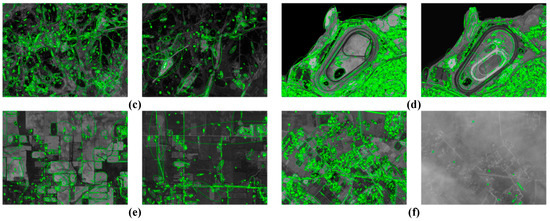

ZY3, GF1, IKONOS, and Google Earth high-resolution remote sensing images with complex background variations are selected to construct the image pairs shown in Figure 7. Then, the proposed matching framework is evaluated. Different objects (buildings, rivers, coastlines, and forests) and different types of terrain are found in the images. A detailed description of each image pair is shown in Table 1.

Figure 7.

Experimental image pairs. (a,b) is a pair of ZY3 (fusion image obtained from multispectral and panchromatic images) and Google Earth images in an urban area in China. (c,d) is a pair of GF1 (fusion image obtained from multispectral and panchromatic images) and Google Earth images in China. (e,f) is a pair of ZY3 and GF1 images with large background variations in a mountain area in China. (g,h) is a pair of IKONOS and Google Earth images with coastline in Australia. The images in (i,j) are a pair of Google Earth images with farmlands in different seasons in the United States. (k,l) is a pair of Google Earth images in China, in which (l) is contaminated by cloud and haze.

Table 1.

Description for each pair of images in the experiments.

3.2. SCNN Training

To improve the reliability of the training samples derived from multi-temporal remote sensing images with background variations, a batch size of 200 is used, which is larger than the batch size of 128 in Ref. [19]. Therefore, 1560 iterations exist in each round. The SCNN is trained in parallel on Nvidia GPUs within 100 rounds, and the training is forced to terminate when the average value of the loss function is less than 0.001. Weights are initialized for training by random Gaussian distributions [34]. The momentum and weight decay are set to 0.9 and 0.0005, respectively. Then, the learning rate is reduced to accelerate the training and obtain good performance. In this study, a piecewise function is adopted to adjust the learning rate. The initial learning rate is set to 0.01 then decreased gradually with the following formula:

in which denotes the number of iterations; denotes the learning rate of the iteration, which is updated based on previous learning rate ; is an operator for computing the remainder; the optimal convergence can be achieved by decreasing the learning rate at about every 100 iterations based on the observation of our experiments; and is a constant, which is set to .

3.3. Feature Visualization

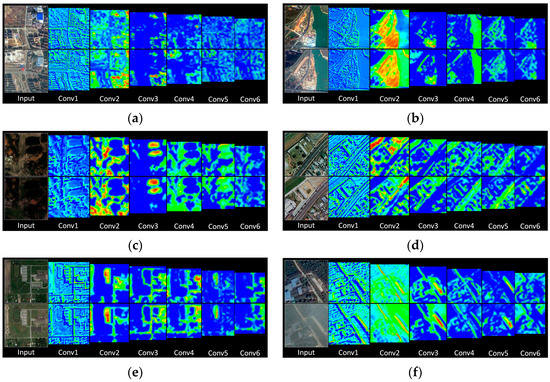

Figure 8 shows the visualization of features at each convolutional layer (Conv1–Conv6) of the SCNN after ReLU activation. The feature maps show one of the features in the convolutional layer, e.g., one of 64 features is shown in the feature maps labeled Conv1. We can see that low-level texture information is captured in Conv1, and many high-level semantic features are extracted in deep convolutional layers. For example, the feature maps labeled as Conv3 in Figure 8a highlight high-rise residential community regions, and Conv4 in Figure 8b highlight the regions with bodies of water. The two compared feature maps in each convolutional layer for all pairs are similar, which demonstrates that the SCNN is suitable for the feature extraction of images with complex background variations.

Figure 8.

Examples of feature visualization learned by the proposed SCNN. (a), (b), (c), (d), (e) and (f) are the visual features in Pair1, Pair2, Pair3, Pair4, Pair5 and Pair6 respectively.

3.4. Evaluation Criteria of Matching Performance

Three indicators, namely, the number of correct matches (), matching precision (), and root mean square error (RMSE), are used to evaluate the proposed method in our experiments. and can be computed as follows:

in which is the number of total matches (initial matches).

in which is the coordinate of the correct matches in the sensed image, while is the transformed coordinate of the correct matches in the reference image. The is counted and manually checked in our experiments.

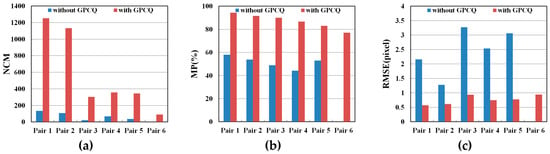

3.5. Comparison of SCNNs with Different Numbers of Layers

To evaluate the effects of layer number in our method, we reduced (layer−) and added (layer+) one convolutional layer to train and test the datasets. The performance is evaluated by determining average accuracy (), which is computed as follows:

in which is the number of iteration in each round; is the number of positives for matching and non-matching pairs in the iteration; and is the total number of pairs.

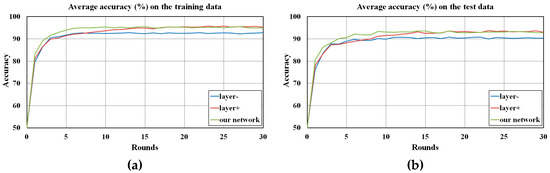

Figure 9 shows the average accuracy of each round for the training and test data with layer− and layer+. Our network and the deep network (layer+) achieved higher accuracy by nearly 3% compared with layer−. Layer+ converged slower than our network. Layer− and our network converged at nearly 10 rounds ( iterations), whereas layer+ converged at nearly 18 rounds ( iterations). In addition, our network and layer+ performed better than layer− in terms of , , and , as shown in Figure 10. The experimental results demonstrate the effective performance of our network given the tradeoff between accuracy and network complexity.

Figure 9.

Comparison of average accuracies for each round between training (a) and test (b) data with layer−, layer+, and our network.

Figure 10.

Comparison of (a) NCM, (b) MP, and (c) RMSE values with different deep SCNNs.

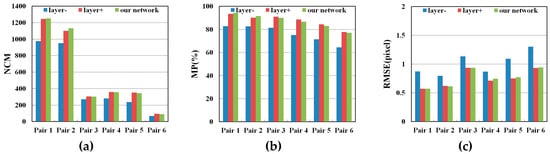

3.6. Comparison between Gridding and Non-Gridding S-Harris

The gridding S-Harris detector is compared with the non-gridding S-Harris for the proposed matching framework. The filtering radius pixels and standard deviation are used for Gaussian filtering. The corner response value is computed as , in which is a Harris matrix and is set as 0.04. The radius of the local window is set to 7 pixels. The computed NCM and RMSE of the gridding S-Harris and non-gridding S-Harris are shown in Figure 11.

Figure 11.

Comparison of (a) NCM, (b) MP, and (c) RMSE between gridding S-Harris and non-gridding S-Harris.

As shown Figure 11, the gridding S-Harris performs better than the non-gridding S-Harris in terms of NCM, MP, and RMSE. This finding is attributed to the detection of a fixed number of corners in each grid that is not easily affected by image grayscale variations. Then, the evenly distributed corners are obtained to accurately compute the geometric transformation (as shown in Section 3.8). Many homogenous or weakly textured areas can be seen in the images of Pairs 5 and 6, while large background variations exist in Pairs 3 and 4. In those images, unevenly distributed corners and matching ambiguity caused by non-gridding S-Harris significantly reduced the number of matches and accuracy.

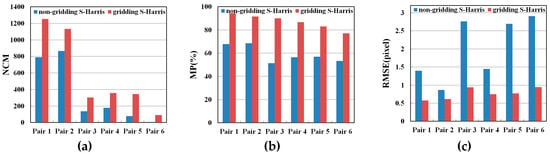

3.7. Evaluation of GPCQ

GPCQ is adopted in our method to narrow down the searching space of conjugated points and reduce matching ambiguity. Then, the proposed matching frameworks with and without GPCQs are compared for the performance evaluation of the GPCQ. In the test, the patch size is set to pixels. The SCNN-based pairwise similarity measure and the quadratic polynomial constraint are used to obtain the matches through a bi-directional matching strategy. Figure 12 shows the comparative results in terms of the NCM, MP, and RMSE.

Figure 12.

Comparison of (a) NCM, (b) MP, and (c) RMSE with and without GPCQs.

On the basis of the experimental results, the proposed matching framework with GPCQ performed better than the framework without GPCQ. This finding is attributed to two reasons. First, many local image contents may be similar in the different areas of the same image, which result in many patches with low distinctiveness. The images in Figure 7i,j,l contain more highly similar local regions compared with the other experimental images. Thus, the exhaustive searching strategy may have produced matching ambiguity in the proposed framework without GPCQ, such that only a few correct matches are obtained. Second, the limited, unevenly distributed matches hindered the implementation of an accurate polynomial geometric registration.

3.8. Performance Evaluation of the Proposed Matching Framework

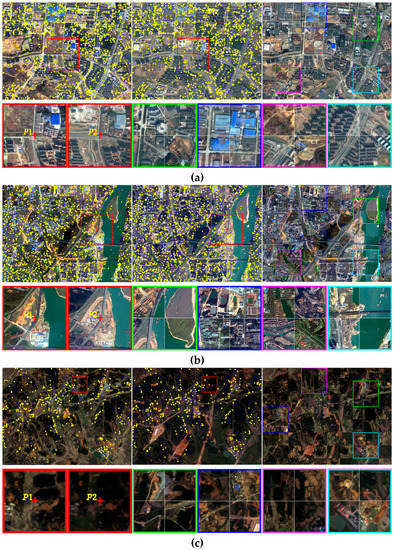

To evaluate its performance, the complete framework is compared with SIFT and other three state-of-the-art matching methods, namely, two matching methods for remote sensing images with background variations (i.e., see Jiang [5] and Shi [20]) and a 2-channel deep network-based method (i.e., see Zagoruyko [19]). The comparative NCM, MP, and RMSE values are shown in Table 2, Table 3 and Table 4, respectively. In the experiments, the initial matches of RMSE over 3 pixels are considered to be false matches for all comparative methods. The visualization results of the proposed matching framework and the comparative methods are shown in Figure 13, Figure 14, Figure 15, Figure 16 and Figure 17. The proposed matching framework can obtain many correct and regularly distributed matches for all experimental pairs, and it can achieve relatively higher accuracy of less than 1 pixel, as shown in Table 4.

Table 2.

NCM values of each method for remote sensing images with complex background variations.

Table 3.

MP values of each method for remote sensing images with complex background variations.

Table 4.

RMSE values of each method for remote sensing images with complex background variations.

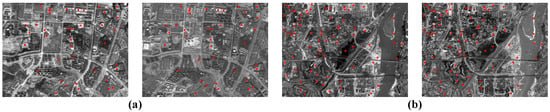

Figure 13.

Matching and registration results of the proposed matching framework. The matches of Pairs 1–6 are pinned to the top-left two images of (a–f) using yellow dots. The two small sub-regions marked by red boxes correspond to the two conjugated patches P1 and P2. The top-right image shows the registration result of the checkerboard overlay of the image pair. The four small sub-regions marked by green, blue, magenta, and cyan are enlarged to show the registration details.

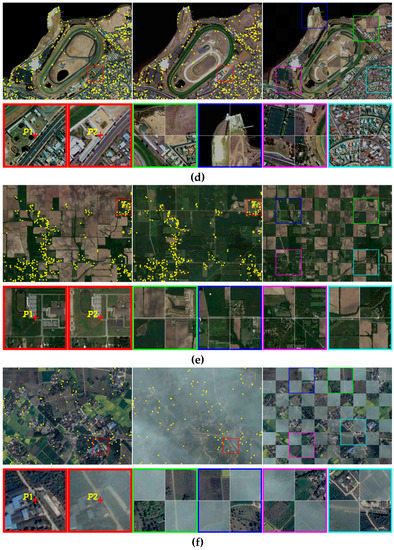

Figure 14.

Matching results of SIFT. The matches of Pairs 1, 2, 3, and 5 are shown in (a), (b), (c), and (d), respectively. No correct match is obtained for the images of Pairs 4 and 6 (i.e., see Table 2).

Figure 15.

Matching results using Jiang’s method [5]. (a–c) are the matching results of Pairs 1–3. No correct match is obtained for the images of Pairs 4–6 (i.e., see Table 2).

Figure 16.

Matching results using Shi’s method [20]. (a–e) are the matching results of Pairs 1–5. No correct match is obtained for the image of Pair 6 (i.e., see Table 2).

Figure 17.

Matching results using Zagoruyko’s method [19]. (a,b) are the matching results of Pairs 1 and 2, respectively. No correct match is obtained for the images of Pairs 3–6 (i.e., see Table 2). (c–f) highlights the ellipse and centroids of MSER of Pairs 3–6.

Table 2 presents the comparative results of the five methods in terms of NCM. Except for our method, the methods used for the comparison are sensitive to image quality degradation and failed in Pair 6. The matches obtained by using the methods of Jiang and Shi are mainly distributed on the line objects, as shown in Figure 15 and Figure 16, respectively. The method of Zagoruyko, a deep learning-based method that combined 2-channel deep network with MSER [35], worked well for Pairs 1 and 2, as shown in Figure 17a,b, respectively. However, the method of Zagoruyko is less effective than the four other methods, because few corresponding MSERs are detected, as shown in Figure 17c–f. The MP values of the methods of Jiang and Shi are higher than those of the SIFT method, and their corresponding correct matches are less. Moreover, as shown in Table 4, the RMSEs of the methods of Jiang and Shi are over 2 pixels. These results can be attributed to the line location determined by the edge detector, which is easily affected by complex background variations. The methods of Jiang and Shi were less effective than SIFT in terms of RMSE.

On the basis of experimental results, the proposed framework presented more significant improvements than the other methods in terms of matching performance when remote sensing images with complex background variations were used. The effectiveness of the proposed matching framework can be explained by a number of reasons. First, the deep and abstract features obtained by training are more salient than the manually designed features. As shown by the feature maps of the conjugated patches in Figure 8, the conjugated features are highly similar despite the existence of significant background variations. Second, the gridding S-Harris operator can find evenly distributed corners with high-location accuracy. Third, the GPCQ-based searching strategy can improve the comparison reliability of conjugated patches. In the GPCQ algorithm, the Gaussian pyramid-based multiscale patch similarity comparison and quadtree can reduce the searching space of S-Harris corners and improve the robustness of comparison. Finally, the quadratic polynomial-based whole-to-local outlier elimination method can remove false matches and improve matching precision.

In the SIFT method, the difference-of-Gaussian detector and the gradient-based SIFT descriptor are both sensitive to significant changes in intensity caused by complex background variations. However, background variations may result in local support regions with different image contents for each SIFT feature. Subsequently, the SIFT method generates descriptors with low similarity, thereby resulting in many outliers. In the methods of Jiang and Shi, the matches are produced from relatively stable shapes and structures, such as coastlines and roads. However, these kinds of line objects seldom present rich and regularly distributed contents in remote sensing images, and thus, they are unsuitable for obtaining satisfactory point matching results. In addition, the edge detector is sensitive to nonlinear grayscale changes. If changes occur between the line objects (e.g., inconsistent shape of coastlines in Figure 7g,h), then the location accuracy of these lines will be low. In the method of Zagoruyko, the MSER detector is sensitive to local noise and structure component changes caused by complex background variations. A highly similar shape context for conjugated regions is difficult to detect. The failed examples in Figure 17c–f suggest that the MSER is unstable for complex background variations. As evidenced by the experimental results, the line- and region-based methods cannot fully guarantee good matching results when using remote sensing images with complex background variations, because the intensity information is unreliable.

4. Conclusions

In this paper, we present the SCNN-based matching framework to effectively find matches between remote sensing images with complex background variations. First, a Siamese-type network is designed to directly learn the similarity of conjugated patches. Second, a gridding S-Harris algorithm is developed to determine the coordinates of point matches, and the GPCQ-based searching strategy is used to narrow down the searching space and achieve multiscale comparison of conjugated patches. Finally, a whole-to-local quadratic polynomial constraint is used to remove the false matches. The deep features detected by the SCNN are more distinctive and unambiguous compared with the manually designed features. Furthermore, the statistical and visualization results indicate that our framework can obtain more well-distributed matches and higher matching precision and accuracy for all experimental image pairs compared with the state-of-the-art matching methods. The results proved the high capability of the proposed framework in matching remote sensing images with complex background variations.

However, the proposed matching framework is constrained by the unknown spatial resolutions of the reference and sensed images. The reference and sensed images should be resampled by approximating the same spatial resolution before matching. In addition, the image matching framework is proposed to improve matching performance in the context of image background variations, in which there should be significant texture changes, so some images with discriminative textures were collected to generate the training and test datasets. As we know, the accuracy of supervised machine learning highly depends on the quality and variety of the training data set. As a result, a lot of correct matches can be obtained in the images with discriminative textures (i.e., Figure 13a,b), while only a few correct matches were obtained in some areas with homogeneous or weak textures (i.e., the farmland areas with homogeneous textures in Figure 13e, the weakly textured areas covered by thick cloud in Figure 13f). Therefore, the proposed image matching framework may not be suitable for the images fully covered by homogeneous or weak textures.

Our future work may focus on improving the matching performance of the proposed method for images without known spatial resolutions or with several homogenous areas.

Acknowledgments

This research was supported by the National Key Research and Development Program of China (2016YFB0502603), National Natural Science Foundation of China (41401526 and 41501492), and Jiangxi Natural Science Foundation of China (20171BAB213025). The authors would like to thank the editor-in-chief, the anonymous associate editor, and the reviewers for their systematic review and valuable comments.

Author Contributions

Haiqing He was primarily responsible for conceiving the method and writing the source code and the paper. Min Chen designed the experiments and revised the paper. Ting Chen generated the datasets and performed the experiments. Dajun Li improved the manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Brown, L.G. A survey of image registration techniques. ACM Comput. Surv. 1992, 24, 325–376. [Google Scholar] [CrossRef]

- Maintz, J.B.A.; Viergever, M.A. A survey of medical image registration. Med. Image Anal. 1998, 2, 1–36. [Google Scholar] [CrossRef]

- Zitová, B.; Flusser, J. Image registration methods: A survey. Image Vis. Comput. 2003, 21, 977–1000. [Google Scholar] [CrossRef]

- Dawn, S.; Saxena, V.; Sharma, B. Remote sensing image registration techniques: A survey. In International Conference on Image and Signal Processing; Elmoataz, A., Lezoray, O., Nouboud, F., Mammass, D., Meunier, J., Eds.; Springer-Berlin: Heidelberg, Germany, 2010; Volume 6134, pp. 103–112. [Google Scholar]

- Jiang, J.; Zhang, S.; Cao, S. Rotation and scale invariant shape context registration for remote sensing images with background variations. J. Appl. Remote Sens. 2015, 9, 92–110. [Google Scholar] [CrossRef]

- Yang, K.; Pan, A.; Yang, Y.; Zhang, S.; Ong, S.H.; Tang, H. Remote sensing image registration using multiple image features. Remote Sens. 2017, 9, 581. [Google Scholar] [CrossRef]

- Chen, M.; Habib, A.; He, H.; Zhu, Q.; Zhang, W. Robust feature matching method for SAR and optical images by using Gaussian-Gamma-shaped bi-windows-based descriptor and geometric constraint. Remote Sens. 2017, 9, 882. [Google Scholar] [CrossRef]

- Lowe, D. Object recognition from local scale-invariant features. In Proceedings of the 7th IEEE International Conference on Computer Vision, Kerkyra, Greece, 20–27 September 1999; p. 1150. [Google Scholar]

- Bay, H.; Ess, A.; Tuytelaars, T.; Gool, L.C. Speeded-up robust features (SURF). Comput. Vis. Image Und. 2008, 110, 346–359. [Google Scholar] [CrossRef]

- Bradley, P.E.; Jutzi, B. Improved feature detection in fused intensity-range image with complex SIFT. Remote Sens. 2011, 3, 2076–2088. [Google Scholar] [CrossRef]

- Ke, Y.; Sukthankar, R. PCA-SIFT: A more distinctive representation for local image descriptors. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Washington, DC, USA, 27 June–2 July 2004; pp. 506–513. [Google Scholar]

- Mikolajczyk, K.; Schmid, C. A performance evaluation of local descriptors. IEEE Trans. Pattern Anal. 2005, 27, 1615–1630. [Google Scholar] [CrossRef] [PubMed]

- Morel, J.M.; Yu, G. ASIFT: A new framework for fully affine invariant image comparison. SIAM J. Imaging Sci. 2009, 2, 438–469. [Google Scholar] [CrossRef]

- Li, Q.; Wang, G.; Liu, J.; Chen, S. Robust scale-invariant feature matching for remote sensing image registration. IEEE Geosci. Remote Sens. Lett. 2009, 6, 287–291. [Google Scholar]

- Brook, A.; Bendor, E. Automatic registration of airborne and spaceborne images by topology map matching with SURF processor algorithm. Remote Sens. 2011, 3, 65–82. [Google Scholar] [CrossRef]

- Chen, Q.; Wang, S.; Wang, B.; Sun, M. Automatic registration method for fusion of ZY-1-02C satellite images. Remote Sens. 2013, 6, 157–279. [Google Scholar] [CrossRef]

- Cai, G.R.; Jodoin, P.M.; Li, S.Z.; Wu, Y.D.; Su, S.Z. Perspective-SIFT: An efficient tool for low-altitude remote sensing image registration. Signal Process. 2013, 93, 3088–3110. [Google Scholar] [CrossRef]

- Li, J.; Hu, Q.; Ai, M. Robust feature matching for remote sensing image registration based on lq-estimator. IEEE Geosci. Remote Sens. Lett. 2016, 13, 1989–1993. [Google Scholar] [CrossRef]

- Zagoruyko, S.; Komodakis, N. Learning to compare image patches via convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 4353–4361. [Google Scholar]

- Shi, X.; Jiang, J. Automatic registration method for optical remote sensing images with large background variations using line segments. Remote Sens. 2016, 8, 426. [Google Scholar] [CrossRef]

- Altwaijry, H.; Trulls, E.; Hays, J.; Fua, P.; Belongie, S. Learning to match aerial images with deep attentive architecture. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 27–30 June 2016; pp. 3539–3547. [Google Scholar]

- Chen, L.; Rottensteiner, F.; Heipke, C. Invariant descriptor learning using a Siamese convolutional neural network. In Proceedings of the ISPRS Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Prague, Czech Republic, 12–19 July 2016; pp. 11–18. [Google Scholar]

- Simo-Serra, E.; Trulls, E.; Ferraz, L.; Kokkinos, I.; Fua, P.; Moreno-Noguer, F. Discriminative learning of deep convolutional feature point descriptors. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 118–126. [Google Scholar]

- Ahmed, E.; Jones, M.; Marks, T.K. An improved deep learning architecture for person re-identificaiton. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3908–3916. [Google Scholar]

- Melekhov, I.; Kannala, J.; Rahtu, E. Siamese Network Features for Image Matching. In Proceedings of the 23rd International Conference on Pattern Recognition, Cancun, Mexico, 4–8 December 2016; pp. 378–383. [Google Scholar]

- Fischler, M.A.; Bolles, R.C. Random sample consensus: a paradigm for model fitting with applications to image analysis and automated cartography. ACM 1981, 24, 381–395. [Google Scholar] [CrossRef]

- Brum, A.G.V.; Pilchowski, H.U.; Faria, S.D. Attitude determination of spacecraft with use of surface imaging. In Proceedings of the 9th Brazilian Conference on Dynamics Control and their Applications (DICON’10), Serra Negra, Brazil, 7–11 June 2010; pp. 1205–1212. [Google Scholar]

- Kouyama, T.; Kanemura, A.; Kato, S.; Imamoglu, N.; Fukuhara, T.; Nakamura, R. Satellite attitude determination and map projection based on robust image matching. Remote Sens. 2017, 9, 90. [Google Scholar] [CrossRef]

- Zhang, H.; Sun, K.; Li, W. Object-oriented shadow detection and removal from urban high-resolution remote sensing images. IEEE Trans. Geosci. Remote Sens. 2014, 52, 6972–6982. [Google Scholar] [CrossRef]

- Cheng, Q.; Shen, H.; Zhang, L.; Li, P. Inpainting for remotely sensed images with a multichannel nonlocal total variation model. IEEE Trans. Geosci. Remote Sens. 2014, 52, 175–187. [Google Scholar] [CrossRef]

- Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In Proceedings of the 32nd International Conference on Machine Learning (ICML–15), Lille, France, 6–11 July 2015. [Google Scholar]

- Merkle, N.; Luo, W.; Auer, S.; Müller, R.; Urtasun, R. Exploiting deep matching and SAR data for the geo-localization accuracy improvement of optical satellite images. Remote Sens. 2017, 9, 586. [Google Scholar] [CrossRef]

- Harris, C. A combined corner and edge detector. In Proceedings of the 4th Alvey Vision Conference, Manchester, UK, 31 August–2 September 1988; pp. 147–151. [Google Scholar]

- Brown, M.; Hua, G.; Winder, S. Discriminative learning of local image descriptors. IEEE Trans. Pattern Anal. 2011, 33, 43–57. [Google Scholar] [CrossRef] [PubMed]

- Matas, J.; Chum, O.; Urban, M.; Pajdla, T. Robust wide baseline stereo from maximally stable extremal regions. In Proceedings of the British Machine Vision Conference, Cardiff, UK, 2–5 September 2002; pp. 384–396. [Google Scholar]

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).