Tri-Camera High-Speed Videogrammetry for Three-Dimensional Measurement of Laminated Rubber Bearings Based on the Large-Scale Shaking Table

Abstract

1. Introduction

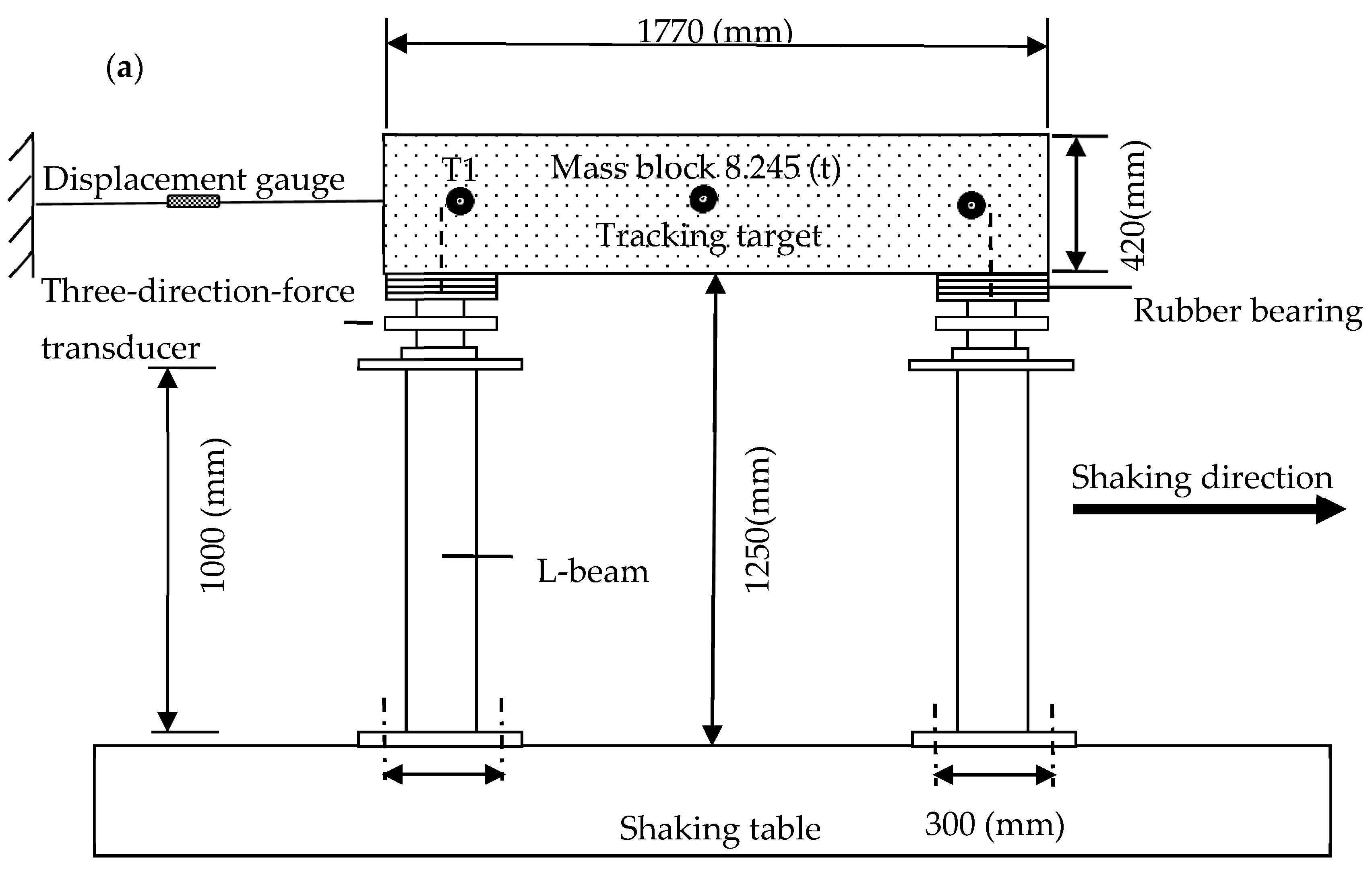

2. Experimental Site and Structure Model

3. Approaches

3.1. Tri-Camera Image Sequences Based Elliptical-Shaped-Target Matching, Detection, and Tracking

3.2. Tri-Camera Image Sequences Based Three-Dimensional Reconstruction of Continuous Tracking Points

4. Experimental Results and Discussions

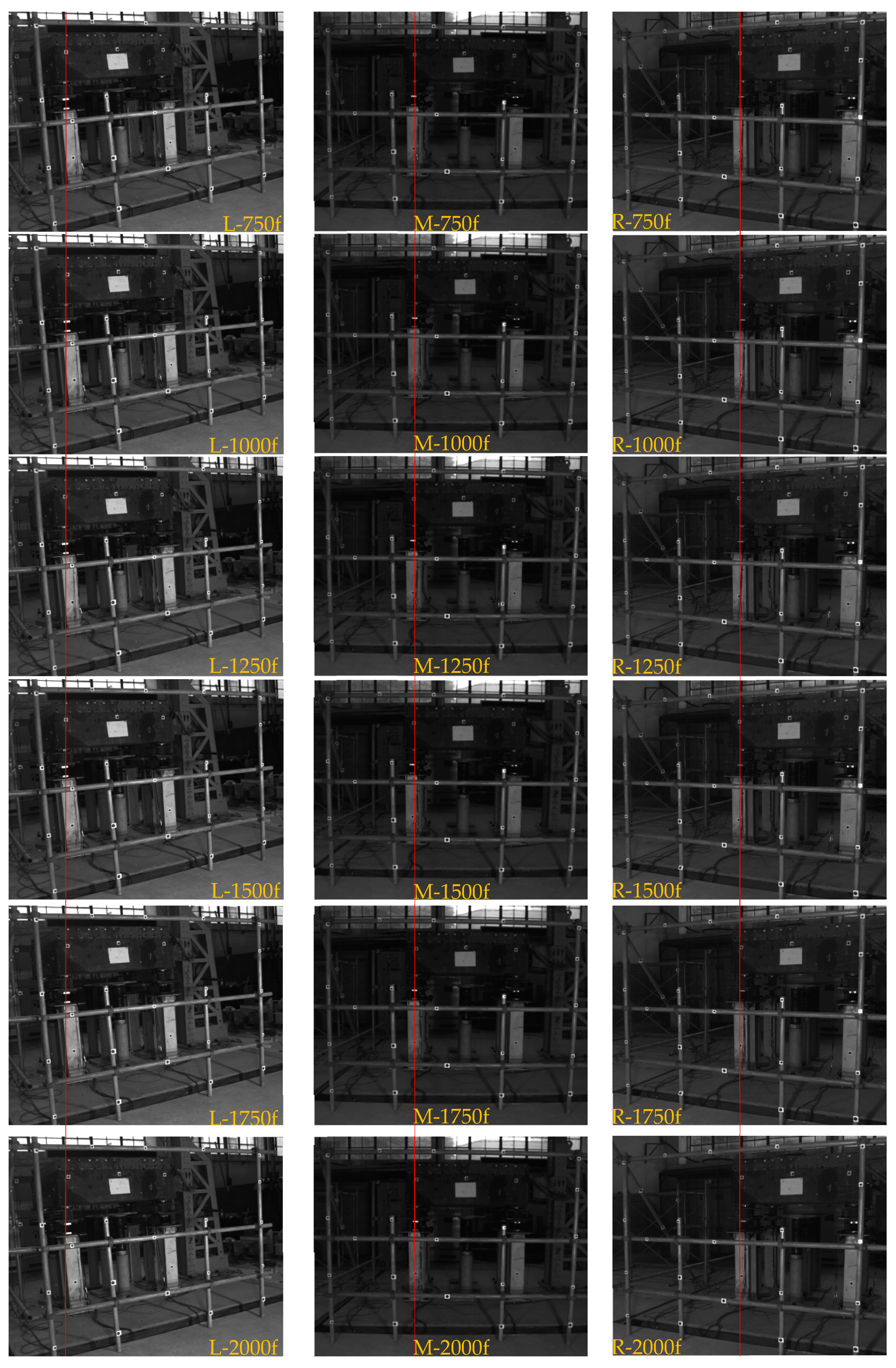

4.1. Results of Detecting and Tracking the Elliptical Targets

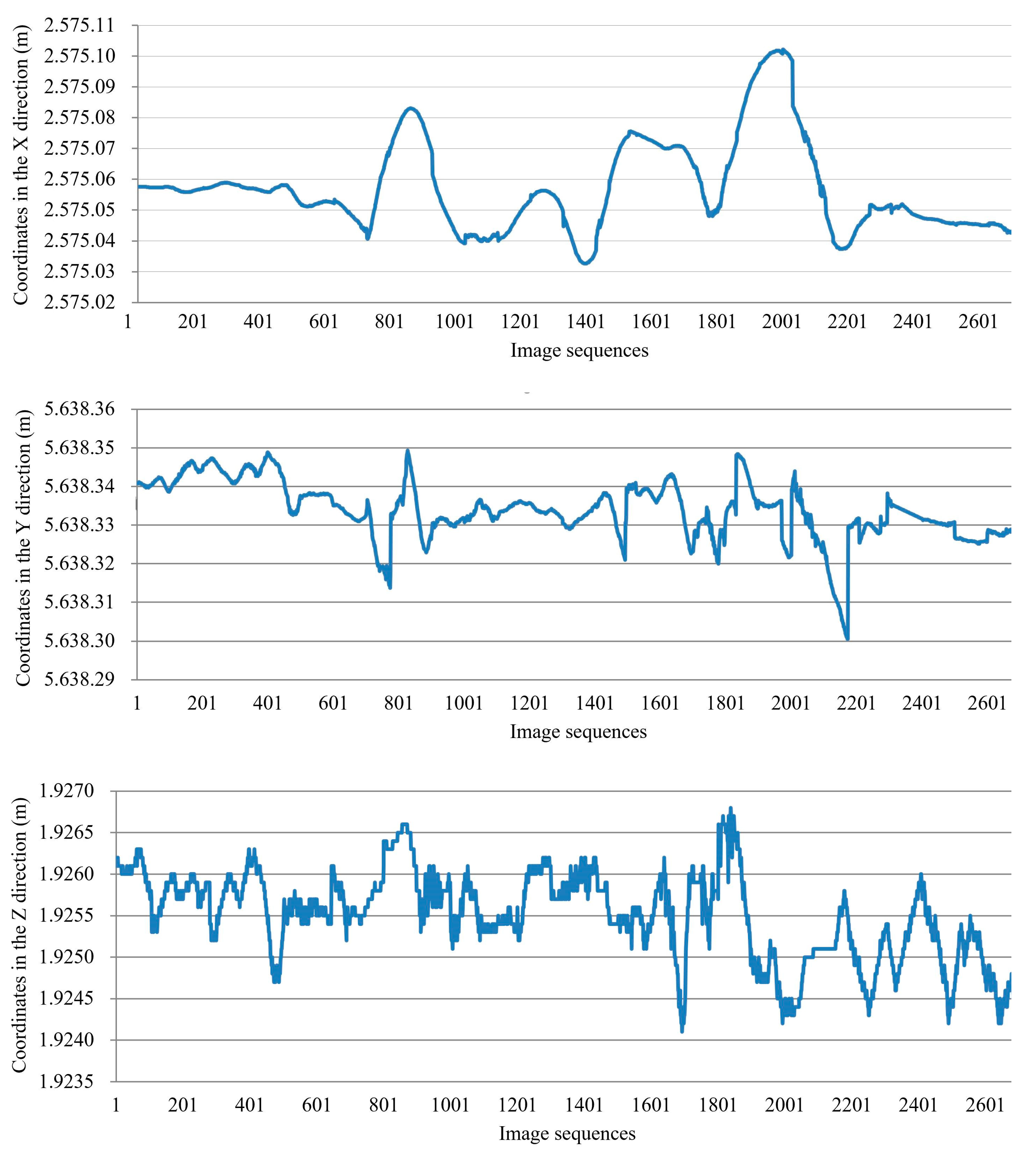

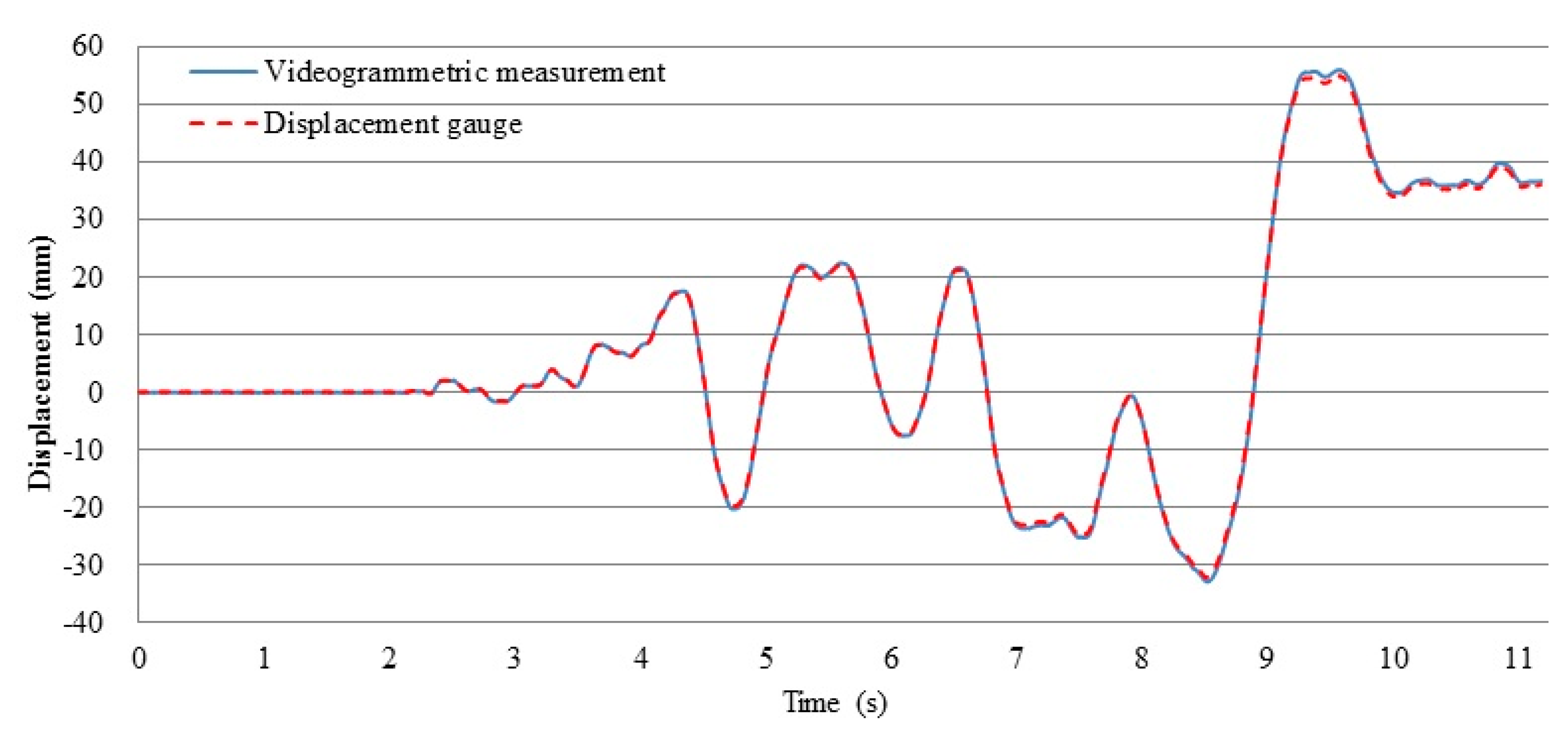

4.2. Result of Accuracy Assessment of the Tri-Camera Videogrammetry

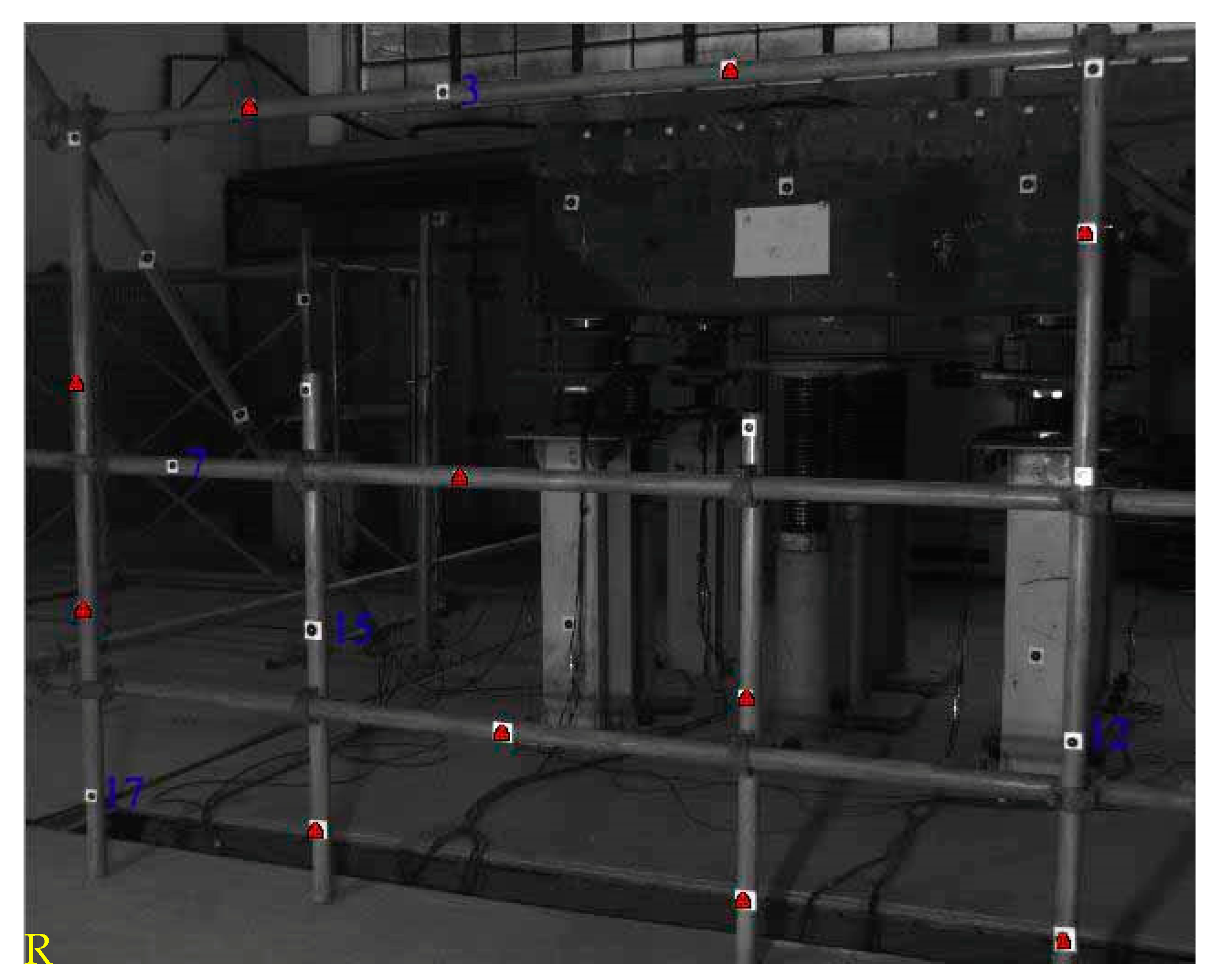

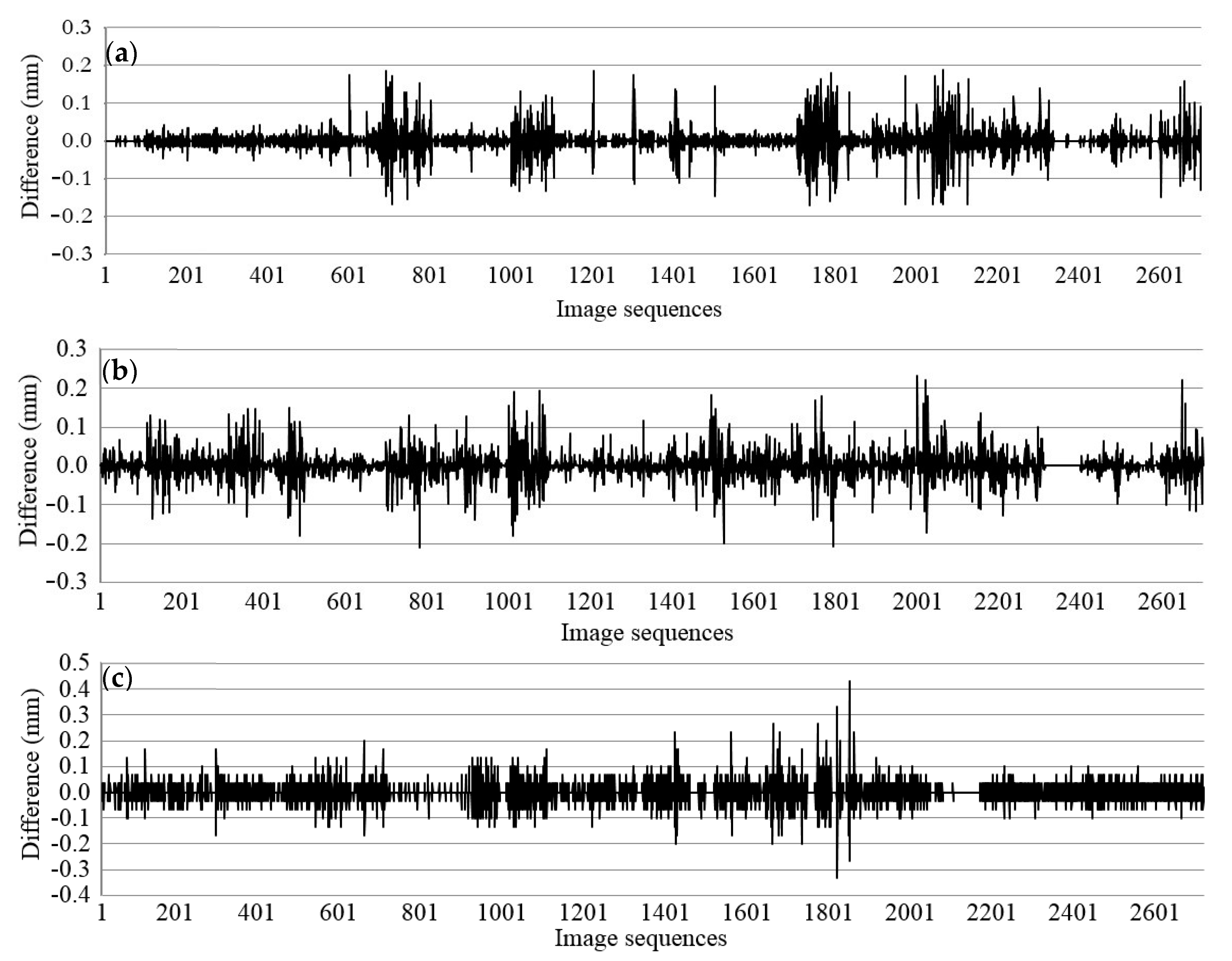

4.3. Discussions of the Tri-Camera Videogrammetric Measurement of Laminated Rubber Bearings

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Nomenclature

| Abbreviation | Meaning |
|---|---|
| m | meter |
| mm | millimeter |
| t | ton |
| m/s² | meter per second squared |
| fps | frames per second |
| µs | microsecond |
| s | second |
| CPU | central processing unit |
| GB | gigabyte |
| DDR | double data rate |

| Parameter | RHT (30) | RHT (50) | TM (30) | TM (50) | MA (30) | MA (50) |
|---|---|---|---|---|---|---|
| Accuracy (pixel) | 0.018 | 0.018 | 0.02 | 0.02 | 0.019 | 0.019 |
| Time (s) | 128.533 | 363.484 | 108.823 | 238.234 | 10.266 | 15.187 |
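
The timing row above can be read as a relative speed-up of MA over the other two methods. The following is a minimal sketch of that arithmetic, using only the values in the table; the method labels and the 30/50 column keys are copied as-is, and the names `times_s` and `speedup_of_ma` are hypothetical helpers introduced for illustration.

```python
# Processing times in seconds, copied from the "Time (s)" row of the table above.
# The keys 30 and 50 mirror the column labels; their meaning is not restated here.
times_s = {
    "RHT": {30: 128.533, 50: 363.484},
    "TM": {30: 108.823, 50: 238.234},
    "MA": {30: 10.266, 50: 15.187},
}


def speedup_of_ma(method: str, size: int) -> float:
    """Return how many times faster MA is than `method` for the given column."""
    return times_s[method][size] / times_s["MA"][size]


if __name__ == "__main__":
    for method in ("RHT", "TM"):
        for size in (30, 50):
            print(f"{method} ({size}): MA is {speedup_of_ma(method, size):.1f}x faster")
```

On these figures MA is roughly 12.5 times faster than RHT in the (30) column and roughly 23.9 times faster in the (50) column, while the reported accuracies differ by at most 0.002 pixel.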

| ID | Videogrammetry X (m) | Videogrammetry Y (m) | Videogrammetry Z (m) | Electric total station X (m) | Electric total station Y (m) | Electric total station Z (m) | DX (mm) | DY (mm) | DZ (mm) | Positional error (mm) |
|---|---|---|---|---|---|---|---|---|---|---|
| 3 | 2575.6082 | 5636.9146 | 2.0294 | 2575.6087 | 5636.9140 | 2.029 | 0.5 | 0.6 | 0.5 | 0.61 |
| 7 | 2574.8303 | 5636.8226 | 1.1814 | 2574.8301 | 5636.8221 | 1.1821 | 0.4 | 0.5 | 0.7 | 0.55 |
| 12 | 2577.0103 | 5637.0238 | 1.2252 | 2577.0108 | 5637.0243 | 1.2246 | 0.5 | 0.5 | 0.6 | 0.54 |
| 15 | 2575.1937 | 5636.9139 | 0.7881 | 2575.1932 | 5636.9142 | 0.7884 | 0.5 | 0.3 | 0.3 | 0.38 |
| 17 | 2574.5877 | 5636.7308 | 0.3264 | 2574.5871 | 5636.7303 | 0.3261 | 0.6 | 0.5 | 0.4 | 0.51 |
| Average difference of coordinates | | | | | | | 0.50 | 0.48 | 0.5 | |
| RMS errors of coordinates | | | | | | | 0.50 | 0.49 | 0.52 | |

| ID | Three-camera DX (mm) | Three-camera DY (mm) | Three-camera DZ (mm) | Two-camera DX (mm) | Two-camera DY (mm) | Two-camera DZ (mm) |
|---|---|---|---|---|---|---|
| 3 | 0.5 | 0.6 | 0.5 | 0.7 | 0.6 | 0.5 |
| 7 | 0.4 | 0.5 | 0.7 | 0.6 | 0.6 | 0.7 |
| 12 | 0.5 | 0.5 | 0.6 | 0.6 | 0.4 | 0.6 |
| 15 | 0.5 | 0.3 | 0.3 | 0.7 | 0.5 | 0.2 |
| 17 | 0.6 | 0.5 | 0.4 | 0.5 | 0.6 | 0.5 |
| RMS | 0.50 | 0.49 | 0.52 | 0.62 | 0.55 | 0.53 |

All values are coordinate differences between the videogrammetric result and the electric total station measurement.
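
The RMS row can be checked directly from the per-point differences in the table. The sketch below assumes the usual root-mean-square definition, the square root of the mean of the squared differences over the five check points; the names `tri_camera`, `two_camera`, and `rms_per_axis` are hypothetical and introduced only for this illustration.

```python
import math

# Coordinate differences in mm against the total station, copied from the table above.
# Keys are check-point IDs; each tuple is (DX, DY, DZ).
tri_camera = {
    3: (0.5, 0.6, 0.5),
    7: (0.4, 0.5, 0.7),
    12: (0.5, 0.5, 0.6),
    15: (0.5, 0.3, 0.3),
    17: (0.6, 0.5, 0.4),
}
two_camera = {
    3: (0.7, 0.6, 0.5),
    7: (0.6, 0.6, 0.7),
    12: (0.6, 0.4, 0.6),
    15: (0.7, 0.5, 0.2),
    17: (0.5, 0.6, 0.5),
}


def rms_per_axis(diffs: dict) -> tuple:
    """Return the RMS of the differences for each axis (X, Y, Z), rounded to 0.01 mm."""
    n = len(diffs)
    return tuple(
        round(math.sqrt(sum(d[axis] ** 2 for d in diffs.values()) / n), 2)
        for axis in range(3)
    )


if __name__ == "__main__":
    print("three-camera RMS (mm):", rms_per_axis(tri_camera))
    print("two-camera RMS (mm):  ", rms_per_axis(two_camera))
```

Under this definition the computed values agree with the tabulated RMS row for both configurations, with the largest gap between the three-camera and two-camera setups in the X direction.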

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Tong, X.; Luan, K.; Liu, X.; Liu, S.; Chen, P.; Jin, Y.; Lu, W.; Huang, B. Tri-Camera High-Speed Videogrammetry for Three-Dimensional Measurement of Laminated Rubber Bearings Based on the Large-Scale Shaking Table. Remote Sens. 2018, 10, 1902. https://doi.org/10.3390/rs10121902
