Abstract

The concepts of sustainable development, quality of life, wellbeing, green growth, etc., and their assessment by various kinds of indicators (within the “Beyond GDP” movement, later also known as “GDP and Beyond”) have become important features of the professional life of many researchers, administrators and even policy makers. The underlying concepts, as well as the indicators, are very broad, often closely linked or overlapping, and in a continuous process of development. Information about them is primarily available in scientific form (hypotheses, models, scenarios and figures) and is seldom comprehensible to a broad spectrum of end users. Some recent surveys show that the proliferation of indicators and the complexity (and complicatedness) of the underlying concepts impede the willingness of policy makers to use them. One of the most viable and effective ways to overcome this barrier is to provide users with accurately targeted information about particular indicators. The article introduces “indicator policy factsheets”, which complement the already developed and routinely used “indicator methodology sheets”; indicator policy factsheets contain specific, easily obtained information supporting the instrumental, conceptual and symbolic use of indicators.

1. Introduction

Since the UN Conference in Rio de Janeiro in 1992 [1], governments and many international and national organizations have been elaborating sustainable development indicators (SDIs) with the aim of providing reliable information for a varied audience: the lay public, experts and decision makers at all levels (from individuals to those making international and global assessments). Owing to the complex, still vague and challenging nature of the sustainable development concept, discussions on what to sustain and what to develop, on quantitative vs. qualitative approaches, on current vs. future conditions and on physical (real) vs. monetary features have been underway for a quarter of a century and continue. However, a great deal of progress has also been achieved: most countries now monitor and report progress towards sustainable development; the assessment is based on agreed frameworks and sets of SDIs; the indicators meet many quality criteria for providing credible information; new and formerly neglected aspects are included in the assessments (e.g., people’s preferences, non-economic values of natural and social capital, etc.); a long-term initiative calling for indicators other than economically oriented ones (“Beyond GDP” indicators) has emerged; researchers have come up with new methods and indicators for sustainability assessment; and some politicians have even attempted to derive targets and base policies on this evidence [2,3,4,5].

Soon after the UN Conference in Rio in 1992, the Commission on Sustainable Development obtained a mandate to adopt an indicators work program. It involved, inter alia, building consensus on a core list of SDIs and developing the related methodological guidelines. These methodology sheets have been widely used since the publication of the so-called “Blue Book” in 1996 [6]. Other agencies dealing with environmental and sustainability indicators (e.g., Eurostat, the European Environment Agency, OECD, World Bank) have also developed similar methodology factsheets [7,8,9]. Their main purpose has been to enable replication of assessments; in other words, they ensure standardization and unified presentation through a common reporting format and contribute to full transparency of indicator development. Although the structure of the indicator factsheets has not been strictly specified, their formats have quickly converged to comprise a definition, description (relevance), key message, results assessment, context or links to other relevant topics, and underlying data and metadata (including information on the quality of the indicator). These methodology factsheets have become a standard tool that has markedly strengthened an important criterion of indicator use: scientific soundness.

A range of suitable indicators for assessing both current wellbeing and sustainability is already in place [10]. The least developed are those measuring subjective dimensions of wellbeing and some forms of natural and social capital. Nevertheless, even these areas have been progressing, and a number of major statistical projects include them [11]. However, despite all the efforts of many national and international organizations and governments, a persistent question remains: Why do economic indicators still dominate policy making? Or, phrased differently: Why have alternative indicators so far failed to seriously shape key public policies [12,13]?

2. Theoretical Background: Misty Indicators

With growing demands on limited financial resources and a general culture of accountability, greater emphasis is being placed on generating scientific knowledge (often based on indicators) that may have a practical impact on policy making [14,15]. The process seems straightforward and mutually beneficial: scientists provide ample information (indicators and other evidence) that policy makers use to manage public affairs accountably and effectively; in return, policy makers demand concrete research outcomes. In practice, however, indicator-based decision making is not the prevailing style of managing public affairs (e.g., [16,17,18]).

The political agenda is broad: quality of life, sustainable development, wellbeing, green, smart or inclusive growth, green economy, better life, resilient people, low-carbon development and, most recently, a decent life. All of these concepts, which deal with protecting the social and natural environment while fostering economic welfare, have emerged in the past three decades. Moreover, all of them are associated with measurement (indicator) systems, which together make the overall terrain unclear or even confusing for politics at the practical level. Nevertheless, nearly every politician, national or local, has already encountered several of these concepts and related indicators. Politicians must therefore have been informed about them to some degree (not least because they are often exposed to pressure from their electorate: the public or various civic organizations), and they must have decided, based on this information, whether these concepts and indicators are worth paying attention to in terms of their potential contribution to improving policy making.

This problematic agenda is not the only difficulty: there is also a general problem at the science-policy interface, stemming from the different mindsets of scientists and politicians/policy makers [19,20]. Published studies and political experience have identified barriers to the use of scientific knowledge by politicians and policy makers; the most frequently cited are the “traditional” (i.e., hard to grasp) format of academic communication, research deemed irrelevant to practical political reality and a lack of timely results. Scientists, on the other hand, argue that they provide information about a particular segment of the real world that often cannot easily be interpreted in a broader sense or in general terms. Moreover, research usually takes more time than politicians expect or are willing to accept.

Several studies (e.g., [21,22]) have shown that politicians, depending on their age and family status, are, or perceive themselves to be, stressed: (i) overworked; (ii) isolated from their families; and (iii) unable to “switch off” from work, etc. Such symptoms hamper the knowledge transfer necessary for the acceptance of new ideas and concepts. It may therefore be assumed that developers and promoters of new concepts (including alternative, or Beyond GDP, indicators) have difficulty attracting the attention of politicians, let alone persuading them to apply innovative approaches in policy making. Despite all that, politicians in democratic regimes remain the focus of indicator providers and promoters, since only they have a sufficient mandate and competency to effectively promote these concepts in society.

The number of potentially usable indicators and concepts is high, and there are many overlaps between them (e.g., [10,23,24,25]). Politicians thus feel overwhelmed or even confused by inappropriately or interchangeably labelled ideas, concepts and indicators. Further, the concepts and/or indicators are often too complicated to be understood by their intended users, even highly educated ones [25]. Most politicians use both of these arguments to maintain the status quo. Despite all of these barriers, new and innovative efforts to measure prosperity and wellbeing are emerging from different sources: national governments, civil society organizations and scientists [7,26,27]. We assume that both existing (but unused) and newly developed initiatives may at least attract the attention of policy makers, provided that policy makers can easily find the indicators that meet their demand. In this respect, Bell and Morse [28] argue that the most “used” indicators are those that are relevant and, hence, match a need. If an indicator is in demand by a stakeholder (e.g., a politician or policy maker), then it is more likely to continue to exist and develop. This holds even if the indicator is very technical and difficult to assess. Those demanding the indicators may not necessarily know how they are calculated or what their limitations are, but as long as the indicators help them to encapsulate complexity for someone else to understand, they will be of use. These authors formulated and confirmed, inter alia, the following hypotheses: (i) indicators work if they match stakeholder requirements; and (ii) few indicators are so powerful as to be able to “find their way” without a degree of marketing. Similarly, Parris and Kates [29] and Hak et al. [30] consider relevance one of the key criteria of a good indicator. On the whole, however, the efforts of indicator providers to ensure salience (relevance to decision makers) are rather weak.
Many indicator efforts are not linked, or are only loosely linked, to specific decision makers and decisions. One reason may be that indicator providers and promoters lack the ability to assess the policy market for their “product” and then use media exposure as their primary means of influencing decision making. As a result, the indicators are not used as a source of significant arguments and remain on the sidelines of the public policy debate. This often leads to growing disinterest in continuing regular indicator analyses and updates.

3. Methodological Approach

This article responds to a need to enhance and speed up the effective use of Beyond GDP indicators in order to counterbalance the use of conventional indicators and, thus, support the implementation of sustainable development [13,31,32]. Specifically, the paper emphasizes the need to create tailored information on alternative indicators that matches the needs of potential users. Ideally, indicator users should be aware of the knowledge that exists and, vice versa, indicator producers and developers should be aware of the context(s) in which the indicators will potentially be used. Alternative indicators should therefore be developed in an interactive process ensuring that user demands are taken on board.

The authors have based this article on more than ten years’ experience of developing, promoting and using sustainability indicators. The findings that serve as a rationale for developing the indicator policy fact sheets stem from three main sources: analyses of relevant scientific literature (books and journals), project outcomes (reports, workshops and conferences, web pages) and stakeholders. There is a vast literature on sustainability indicators and on knowledge-based and, more recently, evidence-based policy making (e.g., [33,34]). However, only a few authors have looked specifically at the problem of the limited use and minor influence of alternative indicators in decision making (see, e.g., [18,32,35]); moreover, little or no practical guidance for either indicator developers or politicians can be found. As with the literature, many projects have been devoted to indicators over the past 20 years [36,37]. They have mostly introduced new indicators and employed existing ones in relevant analyses and models. Recently, two major projects have focused specifically on the effective use of indicators. POINT (Policy Influence of Indicators) applied a variety of methods (documentary analysis, interviews, questionnaires, group discussions, etc.) to obtain a number of different perspectives from which the use and influence of indicators could be viewed [28]. The BRAINPOol (Bringing Alternative Indicators into Policy) project identified a wealth of barriers, the “success factors” of selected indicator initiatives and the demand for alternative indicators, and it recommended ways to overcome the barriers on the basis of case studies [35]. The proposed indicator policy fact sheets are part of the outcomes of the knowledge brokerage project. Finally, the most relevant source of information has been stakeholders.
Many direct contacts have been made with both indicator providers and promoters and potential indicator users (policy makers and senior officials at national and international levels: mayors, parliamentarians, members of national councils for sustainable development, ministers), and indirect knowledge about stakeholder opinion has been obtained from collaborating organizations and experts of various kinds (civil society organizations, think-tanks, governmental agencies, intergovernmental organizations). Almost 40 structured interviews were conducted over the last two years with the aim of obtaining a clearer picture of indicator supply, promotion and demand and, primarily, of the reasons for the low uptake of alternative indicators in decision making. All of these inputs have been analysed in the context of the ongoing long-term programs of the European Commission (“Beyond GDP”) and the OECD (“Measuring the Progress of Societies”).

We assume that effective communication (i.e., communication based on an analysis of the barriers and on the application of effective, user-oriented knowledge transfer methods) accompanying innovative indicators may overcome the identified barriers. We therefore propose a specific information tool, “indicator policy fact sheets”, that summarizes and elucidates the most important information about an indicator in terms of its use and impact. The indicator policy factsheets that we propose may be modest, but they are effective tools for increasing indicators’ policy relevance. The following sections deal with their development and use and provide questions and examples to stimulate discussion on this topic.

4. The Development of the Indicator Policy Fact Sheets

Indicators are not just information; they are specific information. Most experts would agree that indicators must have a purpose: they monitor the effectiveness of a policy or serve many other objectives (depending on the type of indicator, context, target group, etc.). At some phase of its “life cycle”, an indicator serves as a mediation tool towards other audiences. Therefore, some connection between indicator developers and users must exist. Many authors have already asked how this connection becomes established. In a survey, Bell and Morse [38] found that a critical factor was the need for better education and communication on the part of those promoting the indicators. Along the same lines, Rey-Valette et al. [39] conclude that, whatever its origin, an indicator must be developed in a context (conditions) favourable for its future development. Eurostat [40] has recently made a great effort to “get messages across using indicators”. It seems true that the success or failure of an indicator depends directly on the situations and periods in which it is used.

While indicator methodology factsheets resemble a technical manual for a product (the indicator), the ultimate indicator users need a concise list of arguments for using the product. The former are useful for indicator professionals and increase the harmonization and standardization of indicators and, thus, also their credibility; the latter help to strengthen the indicators’ relevance and, therefore, their uptake by decision makers. The niche for such a communication tool may be filled by indicator policy factsheets. Unlike conventional methodological factsheets, indicator policy factsheets aim to provide the most important information about the intentions of indicator producers/promoters and the known or potential impact/success of the indicators. Indicator policy factsheets should therefore not be designed for a thorough scientific review of an indicator, but rather to highlight information about the factors influencing an indicator’s use that might be important for indicator users. The indicator policy factsheets thus complement methodological sheets and highlight the indicator factors (in terms of indicator influence) that otherwise remain unknown, invisible and unreported. Additionally, if the hypothesis that matching a user’s need has a greater influence on an indicator’s use than its scientific quality is true, then policy factsheets should be a necessary part of the “meta-information package” of every indicator. Table 1 shows an example of an indicator policy factsheet for natural resource use.

Table 1.

A policy factsheet for the indicator “domestic material consumption”.

| Name of Indicator/Index | Domestic Material Consumption (DMC) |

|---|---|

| Author | Eurostat |

| Year | 2001 (first methodological report)/annually/2013 |

| created/frequency/last publication | Last report using DMC (sustainable development in the EU—2013 monitoring report of the EU sustainable development strategy) is available at http://epp.eurostat.ec.europa.eu/ |

| Brief description | DMC is a measure of the use of natural resources. DMC is defined as the annual quantity of raw materials extracted from the domestic territory of an economy plus all physical imports minus all physical exports. DMC is used as a proxy for the indicator “raw material consumption” (RMC), which is currently under development (providing the most accurate picture on resource use because it “corrects” imports and exports of products with the equivalent amount of domestic extraction of raw materials that was needed to manufacture the respective traded good). Both DMC and RMC are measures of environmental pressures exerted by humans on the environment. |

| Country coverage | EU 27, plus Norway, Switzerland, Turkey, and many other countries, like Japan and the USA, and developing countries of South America and Asia |

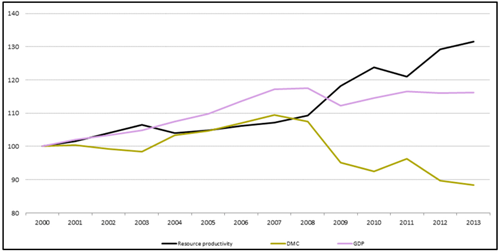

| Method of presentation | Resource productivity in comparison to GDP and DMC, EU-27, 2000–2013, (index: year 2000 = 100). Source: Eurostat.  The resource productivity (RP) is the ratio of the volume of gross domestic product (GDP) over DMC. RP in the EU rose almost continuously between 2000 and 2011 by about 20%. DMC may be presented in many other ways to reveal time development (trend), international comparison, per capita values, etc. |

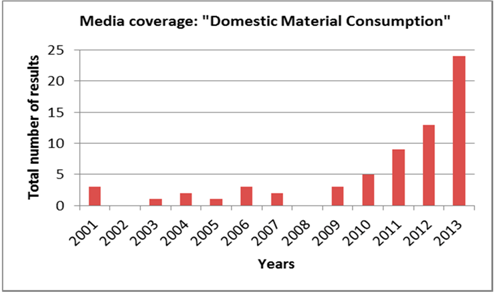

| Indicator factors from a policy perspective | Over the past 13 years, DMC was not often a topic of major newspaper articles; however, the interest of media has remarkably increased.  DMC (similarly RMC) is a key indicator for the assessment of sustainable consumption and production (making consumption more sustainable means improving the quality of life, while using fewer natural resources, such as raw materials, energy, land and water). Besides the absolute reduction of consumption, all countries monitor their resource productivity (RP)—the amount of gross value added (measured as GDP) that an economy generates by using one unit of material (measured as DMC). RP (and thus DMC) is a leading indicator for the achievement of a “resource-efficient Europe” initiative of the Europe 2020 strategy. The indicator may be readily used for target setting (some countries aim at absolute decoupling (GDP growth < RP growth; others stipulate that RP will increase by 60% by 2015, etc.) |

| Indicator factors from a scientific perspective | DMC has a large analytical potential: it is used for trend analyses, international comparisons, efficiency calculations (decoupling analysis), etc. It has been combined with an input-output analysis and life cycle analysis to get information on the impact of our consumption. Recently, a more correct metric, RMC (based on the DMC method), for measuring an economy’s material throughput has been developed. Since 2001, in total, 54 scientific and expert publications devoted to DMC appeared:

|

| Indicator factors from a public perspective | DMC is an indicator that has been developed primarily for politicians (at all levels) and policy makers (together with other material flow analysis-based indicators), but other practitioners may be among its target group (mining industry, construction, metallurgy, etc.). So far, it has not been used much for communication with the public. However, if appropriately interpreted, it has great potential to contribute to public debate, e.g., on resource (energy and material) security, a hot topic particularly during global economic and political crises. Furthermore, decoupling analysis (“to do more with less”) is a comprehensible concept with a nice graphical presentation. Since 2001, in total, 22 scientific newspaper and expert publications devoted to DMC appeared:

|
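The arithmetic behind the “Brief description” and “Method of presentation” rows of Table 1 can be sketched in a few lines. This is a minimal illustration, assuming that all material flows are expressed in one mass unit (million tonnes) and GDP in constant prices (billion euro), so that RP comes out in euro per kilogram; the function names and all figures in the example are hypothetical and are not Eurostat data.

```python
def domestic_material_consumption(extraction, imports, exports):
    """DMC = domestic extraction + physical imports - physical exports.

    All three flows must share one mass unit (assumed here: million tonnes).
    """
    return extraction + imports - exports


def resource_productivity(gdp, dmc):
    """RP = GDP / DMC, i.e. economic output per unit of material consumed."""
    if dmc <= 0:
        raise ValueError("DMC must be positive to compute resource productivity")
    return gdp / dmc


def rebase(series, base_year):
    """Express a {year: value} series as an index with base_year = 100."""
    base = series[base_year]
    return {year: 100.0 * value / base for year, value in sorted(series.items())}


# Hypothetical illustrative figures (not Eurostat data).
flows = {  # year: (domestic extraction, imports, exports), million tonnes
    2000: (6000, 1500, 500),
    2011: (5500, 1600, 700),
}
gdp = {2000: 7000.0, 2011: 7680.0}  # billion euro, constant prices (assumed)

dmc = {year: domestic_material_consumption(*f) for year, f in flows.items()}
rp = {year: resource_productivity(gdp[year], dmc[year]) for year in dmc}

print(dmc)  # {2000: 7000, 2011: 6400}
print({year: round(v, 1) for year, v in rebase(rp, 2000).items()})  # {2000: 100.0, 2011: 120.0}
```

In this made-up example the RP index rises by 20% while GDP grows by about 10%; because RP = GDP/DMC, RP can only outpace GDP when DMC itself falls, which is the absolute decoupling condition (GDP growth < RP growth) mentioned in the policy row.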

The indicator policy factsheets map out indicator characteristics (basics on the methodological foundation, presentation style, frequency of appearance in the media, etc.) and some factors related to the policy process (intentions and perceptions of indicator providers and use by the media). The structure of the indicator policy factsheets is based on findings from interviews with indicator providers and promoters, as well as users, and on desk-based analysis of relevant literature (journal articles, scientific studies, project reports). Synthesizing these findings resulted in a set of categories relevant to the indicators’ use phase. These categories are intended to give users quick insight into an indicator’s potential and strengths. In terms of methodology, the policy factsheets do not repeat the information in the methodology factsheets; rather, they clearly state whether or not the methodology is settled and accepted by the scientific community. The main purpose is to quickly inform the user about an indicator’s potential for communication with politicians’ target groups: the lay public, the media or other politicians.

5. Results: Indicator Policy Fact Sheets

5.1. General Categories

The general categories employ existing information that, however, often needs adjustment: reduction, focus, simplification. This textual information may be coherently structured in a tabular format. It is instrumental in deciding whether the user becomes interested in the indicator and proceeds to the analytical categories. In addition to very basic information, such as the name of the indicator, author, periodicity and country coverage, the “brief description” and “style of presentation” are also included in this category (see Table 1).

5.1.1. The “Brief Description” Category

The concepts behind SDIs are often difficult for non-experts to understand. They are numerous, require both detailed and broad interdisciplinary knowledge and often represent complex systems that must first be understood and then incorporated into political decisions. Moreover, scholars and experts from both universities and think-tanks often lack the skills necessary to communicate their results effectively [19]. In this respect, Seideman [41] concludes that academics frequently do not care about policy relevance, as they face incentives to publish highly theoretical work. Another reason may be that they are simply incapable of it, because years of training detrimentally affect their ability to communicate with non-academics (jargon, focus on methods, numbers, etc.). The POINT project concludes that this general complexity results in vague and unclear demand from decision makers [28]. It notes, e.g., that the politicians interviewed were not able to distinguish between the concepts of sustainable development and wellbeing. Perhaps in acknowledgement of this reality, the BRAINPOol project found that producers of SDIs consider it vital that the information conveyed by the indicators be easily understandable, no matter how complex the calculations behind them are; otherwise, demand for SDIs diminishes greatly. Some SDI developers therefore cooperate with communication experts to provide the information in its simplest form (while ensuring it still makes sense and is correct). This comprehensible result, the indicator, may also ultimately clarify the underlying concept [27].

5.1.2. The “Style of Presentation” Category

Information visualization is concerned with exploiting the cognitive capabilities of human visual perception in order to convey meaningful patterns and trends hidden in abstract datasets. The right style of presentation can, for instance, attract the attention of a sizable audience, compel potential users to engage with the visualization or share the visualization experience with others [42]. These aspects play a vital role in indicator understanding and usage.

Numerical SDI results in the form of tables and charts have recently often been replaced and/or complemented by more attractive visualizations (e.g., barometers, maps). The aim is to gain users’ attention in a crowded field of similar concepts and indicators. Postrel [43] states that aesthetics is an increasingly important element of our society, with a strong focus on how things look and feel, as well as on their function. The importance of aesthetics has also been confirmed by Swan et al. [44], who demonstrate that product aesthetics affect how products are evaluated. If we consider SDIs as a market product to be sold to politicians, then, according to Zeithaml’s Product Quality Framework [45], the product must exhibit appropriate subjective quality, represented by image design. We have found that indicator providers and promoters are becoming increasingly aware of this [27].

Web platforms have recently made it possible to involve users in indicator calculations. One of the first of these interactive tools was the Sustainability Dashboard [46] (see the web application at http://esl.jrc.ec.europa.eu/envind/dashbrds.htm). The GapMinder, an initiative promoting sustainable global development through the increased use and understanding of statistics, has recently gained great popularity [47] (see the web application at http://www.gapminder.org/). It uses the Trendalyzer software, which converts statistical time series of the user’s own choice into animated, interactive graphics. It offers several hundred pre-loaded datasets and indicators that enable not only the tracking of global development (e.g., checking achievements in the UN Millennium Development Goals) but also fast analyses. Most recently, the OECD introduced the Better Life Index with the aim of engaging people in the debate on wellbeing. The web application allows comparisons of wellbeing across countries according to the importance users give to 11 topics (e.g., community, education, environment, health and life satisfaction) [48] (see the web application at http://www.oecdbetterlifeindex.org/). For both awareness raising and educational goals, various footprint calculators have also been developed and freely shared (e.g., [49,50]).
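Letting users weight topics, as the Better Life Index does, can be illustrated as a weighted average of topic scores. This is a minimal sketch, assuming topic scores already normalised to a common 0–10 scale and user weights on a 0–5 scale; the three topics, the country names and all scores are hypothetical, and the OECD’s actual normalisation and aggregation rules may differ.

```python
def weighted_score(topic_scores, weights):
    """Weighted average of normalised topic scores, using user-assigned weights."""
    total_weight = sum(weights.get(topic, 0) for topic in topic_scores)
    if total_weight == 0:
        raise ValueError("at least one topic must receive a positive weight")
    weighted_sum = sum(score * weights.get(topic, 0) for topic, score in topic_scores.items())
    return weighted_sum / total_weight


def rank_countries(countries, weights):
    """Return country names ordered from highest to lowest weighted score."""
    return sorted(countries, key=lambda c: weighted_score(countries[c], weights), reverse=True)


# Hypothetical normalised scores (0-10) for two fictional countries.
countries = {
    "Country A": {"education": 8, "environment": 4, "health": 6},
    "Country B": {"education": 5, "environment": 9, "health": 6},
}

# Two hypothetical users with different priorities (weights 0-5).
education_first = {"education": 5, "environment": 1, "health": 3}
environment_first = {"education": 1, "environment": 5, "health": 3}

print(rank_countries(countries, education_first))    # ['Country A', 'Country B']
print(rank_countries(countries, environment_first))  # ['Country B', 'Country A']
```

The same underlying data yield opposite rankings for the two users, which is the point of making the weights a user choice rather than fixing them in advance.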

5.2. Analytical Categories

These categories comprise new information (e.g., a media analysis) that complements the previous categories to give a more comprehensive picture of the indicator. For example, they provide additional sources that can demonstrate the importance of the topic and/or the indicator. This importance is expressed by the number of times the topic and/or indicator has appeared in various publications, including the mass media. This information is important for policy makers: theoretically, a high incidence of, e.g., the Water Footprint Index (expressed by the total number of results) might indicate increasing worries about water resources; frequent articles in scientific journals might signal new discoveries; and greater coverage in the public media shows that the topic is publicly attractive.

Indicators can have multiple functions; to understand their role in policy (and politics), it is common to distinguish between instrumental, conceptual and political (symbolic) uses. The instrumental role is the most visible: indicators are seen as objective information tools to improve policy making. Typically, the discourse at this level is about solving problems and providing information for planning, target setting, interventions, assessment of policy effectiveness, etc. In this case, an indicator has an influence when it is “used” directly by a policy maker and consciously influences their decisions (see, e.g., [51,52,53,54]). The conceptual role of indicators helps politicians establish a broad information base for decisions by shaping conceptual frameworks, mostly through dialogue, public debate and argumentation. In this way, indicators may affect how decision makers define problems and provide new perspectives and insights, rather than targeted information for a specific point in decision making. Since users’ personal value orientations project themselves into the conceptual role (and, conversely, indicators may influence and form users’ personal values), indicators also fulfil an educational function [17]. The symbolic role means that the indicator is used to support policy decisions: it conveys a message and thus becomes a communication tool among stakeholders (see, e.g., [55]). This support may be strategic or tactical, but it always serves to legitimize the decisions made. The reviewed literature suggests that direct instrumental use of indicators has limited potential, whereas conceptual use is the key to enhanced indicator influence in the long term. Additionally, political use of indicators cannot be controlled by the indicator developers [56].

If indicator policy factsheets are to be useful to users, they should comprise elements of all of the above categories (roles). They should provide users with concise information: (i) on credibility (does the indicator contain correct and reliable information?) and relevance (does the indicator convey a message that is necessary to make the right decisions for the right target group?); (ii) on the best use (which concepts and frameworks can be developed using the indicator, and does it fit into my value system?); and, finally, (iii) on various aspects of political use (does the indicator provide a suitable argument for my decision, and does it communicate my decision understandably?).

When considering the factors that make an indicator successful, it is important to consider the users for whom the indicator is intended. We therefore categorize indicator attributes first and foremost by the user domain to which they refer: policy, public and scientific.
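The category structure described in this section (general categories plus analytical categories grouped by user domain) can be captured as a simple record type, for example to keep factsheets for several indicators in a consistent format and to spot categories that have not yet been filled in. The sketch below is an illustrative assumption, not a format prescribed by the factsheet concept itself; the class, field and method names are hypothetical, and the example values are abbreviated from Table 1.

```python
from dataclasses import dataclass, fields


@dataclass
class IndicatorPolicyFactsheet:
    # General categories
    name: str
    author: str
    year_frequency_last_publication: str
    country_coverage: str
    brief_description: str
    style_of_presentation: str
    # Analytical categories, grouped by the user domain they address
    policy_perspective: str = ""
    scientific_perspective: str = ""
    public_perspective: str = ""

    def missing_categories(self):
        """Names of categories that are still empty (whitespace counts as empty)."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]


dmc_sheet = IndicatorPolicyFactsheet(
    name="Domestic Material Consumption (DMC)",
    author="Eurostat",
    year_frequency_last_publication="2001/annually/2013",
    country_coverage="EU-27, Norway, Switzerland, Turkey and others",
    brief_description="Domestic extraction plus physical imports minus physical exports.",
    style_of_presentation="Resource productivity index (2000 = 100) compared with GDP and DMC",
    policy_perspective="Leading indicator for a resource-efficient Europe; usable for targets.",
    scientific_perspective="Large analytical potential; basis for RMC.",
)

print(dmc_sheet.missing_categories())  # ['public_perspective']
```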

5.2.1. The “Indicator Factors from a Policy Perspective” Category

Politicians pursue programs and policies that they believe have public utility or support. At the same time, they must defend such programs against competition both inside and outside their political party. These and other factors dictate the choice of themes for policy making. In this category, we therefore include additional information on possible uses of the indicator (more effective communication, comparison with other subjects (benchmarking), context for the policy, etc.). We also include information on media interest here, taking it as a proxy for interest in the topic or indicator among both the public and experts, with higher incidence denoting higher interest. For this purpose, we carried out a simple media analysis using the ProQuest databases, which cover hundreds of U.S., Canadian and international newspapers and thousands of journals, dissertations, reports and other expert publications written in English (for more details, see [53]). A media analysis of the use of selected sustainability indicators has also been carried out by Morse [57].
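A simple media analysis of this kind reduces to counting hits per year and checking whether they are rising. The sketch below assumes that search results have already been exported from a bibliographic database as (year, source type) pairs; it does not call any ProQuest interface, and the record format, function names and counts are hypothetical. An ordinary least-squares slope is used here as one simple way to summarise the trend.

```python
from collections import Counter


def mentions_per_year(records):
    """Count hits per publication year from (year, source_type) records."""
    return dict(sorted(Counter(year for year, _source in records).items()))


def trend_slope(counts):
    """Ordinary least-squares slope of mentions against year (mentions per year)."""
    years = list(counts)
    if len(years) < 2:
        raise ValueError("need at least two years to estimate a trend")
    mean_year = sum(years) / len(years)
    mean_count = sum(counts.values()) / len(years)
    covariance = sum((y - mean_year) * (counts[y] - mean_count) for y in years)
    variance = sum((y - mean_year) ** 2 for y in years)
    return covariance / variance


# Hypothetical exported search hits for an indicator name.
records = [
    (2010, "newspaper"),
    (2011, "scholarly journal"), (2011, "newspaper"), (2011, "report"),
    (2012, "newspaper"), (2012, "newspaper"), (2012, "magazine"),
    (2012, "scholarly journal"), (2012, "report"),
]

counts = mentions_per_year(records)
print(counts)               # {2010: 1, 2011: 3, 2012: 5}
print(trend_slope(counts))  # 2.0
```

Years without any hits do not appear in the counts at all; for a fair trend estimate they would need to be added explicitly with a count of zero.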

The example policy factsheet for domestic material consumption (DMC; see Table 1) shows information that highlights the strengths of DMC for policy making. These are an increasing trend in media coverage, the key role of the indicator in the “resource-efficient Europe” initiative and the possibility of deriving measurable targets.

5.2.2. The “Indicator Factors from a Scientific Perspective” Category

Politicians need reliable indicators that can be employed both for instrumental use (accountable decision making) and for communication with other stakeholders (symbolic use) [58]. It is therefore important to establish the accuracy and trustworthiness of the indicator: is it based on a new or an established methodology, and how is it published (review process, data quality, etc.)? Media analysis helps here again, because it provides information on the total amount of expert media coverage (scholarly journals, trade journals, reports, theses and dissertations, books, etc.). The distribution and frequency of results give an interesting snapshot of an indicator’s popularity. However, this kind of analysis does not distinguish between articles that refer to the indicator positively and those that refer to it negatively.

The example policy factsheet (Table 1) also shows the strengths of DMC for policy making from a scientific perspective. The main strength is its large analytical potential. It can be used for various analyses, such as trends, international comparisons, productivity (e.g., decoupling), material security and the environmental impacts of material use. There are about 54 scientific and expert publications specifically devoted to DMC, and several hundred articles that use or refer to it. This signals to the user that the indicator is sound and well established and that it has the support of the scientific community.

5.2.3. The “Indicator Factors from a Public Perspective” Category

An indicator with high public relevance provides information that responds to people’s concerns: it is generally accessible and publicly appealing. The number of mentions readily answers a pertinent question: how often has the issue or indicator appeared in the public media (newspapers, magazines and wire feeds)? The ratio between public and expert media coverage also shows whether the issue is communicated more to the lay public or to experts. These figures are accompanied by information on who the main users of the indicator are and by what means the indicator addresses its audience. This category thus conveys two important messages for the user-politician. The first is media interest in the indicator. The second is the indicator’s attractiveness (comprehensibility, accessibility, linkage to other important issues, etc.). Public interest increases the chance of symbolic use of the indicator.
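The public-to-expert ratio described in this category is simple arithmetic. The Python sketch below computes it and attaches a rough label. The function names, the cut-off of 1.0 (equal coverage) and the label wording are assumptions chosen for illustration. The counts in the usage lines are hypothetical.

```python
def public_expert_ratio(public_mentions: int, expert_mentions: int) -> float:
    """Ratio of public-media mentions to expert-media mentions."""
    if expert_mentions <= 0:
        raise ValueError("expert_mentions must be positive")
    return public_mentions / expert_mentions


def audience_label(ratio: float) -> str:
    """Rough label; the cut-off of 1.0 (equal coverage) is an assumption."""
    if ratio > 1.0:
        return "communicated mainly to the lay public"
    if ratio < 1.0:
        return "communicated mainly to experts"
    return "balanced coverage"


# Hypothetical counts for one indicator (illustrative only)
ratio = public_expert_ratio(public_mentions=12, expert_mentions=48)
print(ratio, audience_label(ratio))  # 0.25 communicated mainly to experts
```

In practice, a band around 1.0 rather than a single cut-off might be more robust to small counts. That choice is left open here.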

The example policy factsheet (Table 1) also shows the strengths of DMC for policy making from a public perspective. DMC was developed primarily for politicians and policy makers, yet it has already featured in several major newspaper stories worldwide. DMC is an almost intuitively comprehensible indicator. It thus has great potential to contribute to public debate on many topics related to natural resources (sustainability, security, environmental concerns, etc.).

6. Conclusions

Indicator policy factsheets are a modest attempt to respond to the findings of recent studies on the use of indicators by decision makers. Decision makers themselves have not specified their needs, either in terms of content or in terms of required impacts, target audiences, etc. Rather, they have intensified their call for better, alternative, “Beyond GDP” indicators. The availability of such indicators is already good, and competition and innovation are ongoing, so more and better indicators will inevitably emerge. However, availability alone is not sufficient to guarantee their use.

The proposed indicator policy factsheets are designed to help policy makers easily choose and use the most appropriate indicators for assessing a given phenomenon with regard to their requirements and needs. They are not intended for comparing or ranking indicators of diverse phenomena. The factsheets provide a format for gathering the missing pieces of information (indicator meta-information) that benefit the instrumental, conceptual and symbolic use of indicators, and for packaging this information in a handy form. They can also serve as educational tools, since they may influence or help form the personal values of politicians.

Indicator policy factsheets are open, dynamic systems that can be adapted in response to the results of further research or the needs of policy makers. The frequency of updates will follow methodological and other advancements in the field of indicators (e.g., indicators related to the Sustainable Development Goals) [59,60].

The question remains as to who should develop the indicator policy factsheets further and put them into practice. The simple answer is knowledge brokers. These may be experts who act as links between researchers and decision makers [61], and/or intermediary organizations, which play an increasing role in promoting sustainable development [62]. In general, the developers of indicators hold the key interest in bringing indicators closer to policy makers and other end users. Nevertheless, for the sake of objectivity, as well as in terms of available expertise and experience, this phase of indicator development should be undertaken by a third party (knowledge brokers) and reviewed by relevant experts.

Acknowledgments

This work has been supported by the project “Various aspects of anthropogenic material flows in the Czech Republic” (No. P402/12/2116) funded by the Czech Science Foundation.

Author Contributions

The authors contributed equally to this work. Janoušková and Hák developed the policy factsheets and drafted the paper. Whitby and Abdallah contributed their expertise on the use of sustainability indicators. Kovanda contributed to the DMC indicator factsheet.

Conflicts of Interest

The authors declare no conflict of interest.

References

- UN. Earth Summit Agenda 21. The United Nations Programme of Action from Rio; United Nations Department of Public Information: New York, NY, USA, 1992.

- Waheed, B.; Khan, F.; Veitch, B. Linkage-Based Frameworks for Sustainability Assessment: Making a Case for Driving Force-Pressure-State-Exposure-Effect-Action (DPSEEA) Frameworks. Sustainability 2009, 1, 441–463.

- Tasaki, T.; Kameyama, Y.; Hashimoto, S.; Moriguchi, Y.; Harasawa, H. A survey of national sustainable development indicators. Int. J. Sust. Dev. 2010, 13, 337–361.

- Happaerts, S. Sustainable development in Quebec and Flanders: Institutionalizing symbolic politics? Can. Public Adm. 2012, 4, 553–573.

- Dahl, A.L. Achievements and gaps in indicators for sustainability. Ecol. Indic. 2012, 17, 14–19.

- UN. Indicators of Sustainable Development: Guidelines and Methodologies, 3rd ed.; UN Statistical Division: New York, NY, USA, 2007.

- OECD. Compendium of OECD Well-Being Indicators; OECD: Paris, France, 2011.

- EEA. Guidelines for EEA Indicator Profile Review and Update. 2012. Available online: http://forum.eionet.europa.eu/ (accessed on 15 September 2014).

- Eurostat. Guide to Statistics in European Commission Development Co-Operation—Sustainable Development Indicators; Eurostat: Luxembourg, 2013.

- Stiglitz, J.; Sen, A.; Fitoussi, J.P. The Measurement of Economic Performance and Social Progress Revisited. Reflections and Overview; Commission on the Measurement of Economic Performance and Social Progress: Paris, France, 2009.

- UN. System of Environmental-Economic Accounting 2012: Central Framework; UN: New York, NY, USA, 2014.

- Jesinghaus, J. Measuring European environmental policy performance. Ecol. Indic. 2012, 17, 29–37.

- Costanza, R.; Kubiszewski, I.; Giovannini, E.; Lovins, H.; McGlade, J.; Pickett, K.E.; Ragnarsdóttir, K.V.; Roberts, D.; de Vogli, R.; Wilkinson, R. Development: Time to leave GDP behind. Nature 2014, 505, 283–285.

- Garnåsjordet, P.A.; Aslaksen, I.; Giampietro, M.; Funtowicz, S.; Ericson, T. Sustainable Development Indicators: From Statistics to Policy. Environ. Policy Gov. 2012, 22, 322–336.

- Hyder, A.; Corluka, A.; Winch, P.J.; el-Shinnawy, A.; Ghassany, H.; Malekafzali, H.; Lim, M.-K.; Mfutso-Bengo, J.; Segura, E.; Ghaffar, A. National policy-makers speak out: Are researchers giving them what they need? Health Policy Plann. 2011, 26, 73–82.

- Sébastien, L.; Bauler, T. Use and influence of composite indicators for sustainable development at the EU-level. Ecol. Indic. 2013, 35, 3–12.

- Gudmundsson, H. The policy use of environmental indicators: Learning from evaluation research. J. Transdiscipl. Environ. Stud. 2003, 2, 1–12.

- Hildén, M.; Rosenström, U. The Use of Indicators for Sustainable Development. Sustain. Dev. 2008, 16, 237–240.

- Morrone, M.; Hawley, M. Improving environmental indicators through involvement of experts, stakeholders, and the public. Ohio J. Sci. 1998, 98, 52–58.

- Parsons, W. Scientists and politicians: The need to communicate. Public Underst. Sci. 2001, 10, 303–314.

- Weinberg, A.; Cooper, C.L.; Weinberg, A. Workload, stress and family life in British Members of Parliament and the psychological impact of reforms to the working hours. Stress Med. 1999, 15, 79–87.

- Hak, T.; Moldan, B.; Dahl, A. Sustainability Indicators: A Scientific Assessment; Island Press: Washington, DC, USA, 2007.

- Singh, R.K.; Murty, H.R.; Gupta, S.K.; Dikshit, A.K. An overview of sustainability assessment methodologies. Ecol. Indic. 2009, 9, 189–212.

- Karlsson, S. Meeting conceptual challenges. In Sustainability Indicators: A Scientific Assessment; Hak, T., Moldan, B., Dahl, A., Eds.; Island Press: Washington, DC, USA, 2007; pp. 27–48.

- Giesselmann, M.; Hilmer, R.; Siegel, N.A.; Wagner, G.G. Alternative Wohlstandsmessung: Neun Indikatoren können das Bruttoinlandsprodukt ergänzen und relativieren. DIW Wochenber. 2013, 80, 3–12. (In German)

- Hák, T.; Janoušková, S.; Abdallah, S.; Seaford, C.; Mahony, S. Review Report on Beyond GDP Indicators: Categorisation, Intentions and Impacts. Available online: http://www.czp.cuni.cz/czp/images/stories/Projekty/BRAINPOoL_Review_report_Beyond-GDP_indicators.pdf (accessed on 15 September 2014).

- Bell, S.; Eason, K.; Frederiksen, P. A Synthesis of the Findings of the POINT Project. 2011. Available online: http://www.point-eufp7.info/storage/POINT_synthesis_deliverable%2015.pdf (accessed on 15 September 2014).

- Parris, T.M.; Kates, R.W. Characterizing and measuring sustainable development. Annu. Rev. Environ. Resour. 2003, 28, 559–586.

- Hak, T.; Kovanda, J.; Weinzettel, J. A method to assess the relevance of sustainability indicators: Application to the indicator set of the Czech Republic’s Sustainable Development Strategy. Ecol. Indic. 2012, 17, 46–57.

- Wesselink, B.; Bakkes, J.; Best, A.; Hinterberger, F.; ten Brink, P. Measurement beyond GDP. Available online: http://assets.wwfza.panda.org/downloads/beyond_gdp.pdf (accessed on 10 March 2015).

- Thiry, G.; Bauler, T.; Sébastien, L.; Paris, S.; Lacro, V. Characterizing Demand for “Beyond GDP”. Available online: http://ww.brainpoolproject.eu/wp-content/uploads/2013/06/D2.1_BRAINPOoL_Characterizing_demand.pdf (accessed on 15 September 2014).

- Banks, G. Evidence-Based Policy Making: What is it? How Do We Get It? Productivity Commission: Canberra, Australia, 2009.

- DFID. Systematic Reviews in International Development: An Initiative to Strengthen Evidence-Informed Policy Making. 2012. Available online: http://www.oxfordmartin.ox.ac.uk/blog/view/199 (accessed on 15 September 2014).

- Whitby, A.; Seaford, C.; Berry, C. Beyond GDP: From Measurement to Politics and Policy. Available online: http://www.brainpoolproject.eu/wp-content/uploads/2014/05/BRAINPOoL-Project-Final-Report.pdf (accessed on 15 September 2014).

- EC. Sustainable Development Indicators: Overview of Relevant FP-Funded Research and Identification of Further Needs in View of EU and International Activities. Available online: http://www.ieep.eu/assets/443/sdi_review.pdf (accessed on 15 September 2014).

- WB. Sustainable Development Projects & Programs. Available online: http://www.worldbank.org/en/topic/sustainabledevelopment/projects (accessed on 15 September 2014).

- Bell, S.; Morse, S. An analysis of the factors influencing the use of indicators in the European Union. Local Environ. 2011, 16, 281–302.

- Rey-Valette, H.; Laloe, F.; le Fur, J. Introduction to the key issue concerning the use of sustainable development indicators. Int. J. Sust. Dev. 2007, 10, 4–13.

- Eurostat. Getting messages across using indicators. In A Handbook Based on Experiences from Assessing Sustainable Development Indicators; Eurostat: Luxembourg, 2014.

- Seideman, S. The Fundamental Problem of Policy Relevance. 2013. Available online: http://opencanada.org/features/blogs/roundtable/the-fundamental-problem-of-policy-relevance/ (accessed on 15 September 2014).

- Moere, A.V.; Purchase, H. On the role of design in information visualization. Inf. Vis. 2011, 10, 356–371.

- Postrel, V. The Economics of Aesthetics. Available online: http://www.strategy-business.com/article/03313?gko=0173c (accessed on 15 September 2014).

- Swan, K.S.; Kotabe, M.; Allred, B.B. Exploring robust design capabilities, their role in creating global products, and their relationship to firm performance. J. Prod. Innovat. Manag. 2005, 22, 144–164.

- Zeithaml, V.A. Consumer perceptions of price, quality and value: A means-end model and synthesis of evidence. J. Mark. 1988, 52, 2–22.

- Jesinghaus, J. Indicators: Boring statistics or the key to sustainable development? In Sustainability Indicators: A Scientific Assessment; Hak, T., Moldan, B., Dahl, A., Eds.; Island Press: Washington, DC, USA, 2007; pp. 83–96.

- Rosling, H. Visual technology unveils the beauty of statistics and swaps policy from dissemination to access. Stat. J. IAOS 2007, 24, 103–104.

- OECD. Better Life Index; Organisation for Economic Co-operation and Development: Paris, France, 2011.

- NTNU; CICERO. Carbon Footprint of Nations. Available online: http://carbonfootprintofnations.com/ (accessed on 15 September 2014).

- GFN. Footprint Calculators. Available online: http://ww.footprintnetwork.org/en/index.php/GFN/page/calculators/ (accessed on 15 September 2014).

- Hall, S. Indicators of Sustainable Development in the UK. In Proceedings of the Conference of European Statisticians, Fifty-Third Plenary Session, Geneva, Switzerland, 13–15 June 2005.

- Weiss, C.; Murphy-Graham, E.; Birkeland, S. An alternate route to policy influence: How evaluations affect D.A.R.E. Am. J. Eval. 2005, 26, 12–30.

- Hezri, A.A. Sustainability indicators system and policy processes in Malaysia: A framework for utilisation and learning. J. Environ. Manag. 2004, 73, 357–371.

- Rydin, Y.; Holman, N.; Wolff, E. Local Sustainability Indicators. Local Environ. 2003, 8, 581–589.

- Hezri, A.A.; Dovers, S.R. Sustainability indicators, policy, governance: Issues for ecological economics. Ecol. Econ. 2006, 60, 86–99.

- Rinne, J.; Lyytimäki, J.; Kautto, P. From sustainability to well-being: Lessons learned from the use of sustainable development indicators at national and EU level. Ecol. Indic. 2013, 35, 35–42.

- Morse, S. Out of sight, out of mind. Reporting of three indices in the UK national press between 1990 and 2009. Sustain. Dev. 2013, 21, 242–259.

- Waas, T.; Hugé, J.; Block, T.; Wright, T.; Benitez-Capistros, F.; Verbruggen, A. Sustainability Assessment and Indicators: Tools in a Decision-Making Strategy for Sustainable Development. Sustainability 2014, 6, 5512–5534.

- Rosenström, U. Exploring the policy use of sustainable development indicators: interviews with Finnish politicians. J. Transdiscipl. Environ. Stud. 2006, 5, 1–13.

- Ioppolo, G.; Cucurachi, S.; Salomone, R.; Saija, G.; Ciraolo, L. Industrial Ecology and Environmental Lean Management: Lights and Shadows. Sustainability 2014, 6, 6362–6376.

- Deutz, P.; Ioppolo, G. From Theory to Practice: Enhancing the Potential Policy Impact of Industrial Ecology. Sustainability 2015, 7, 2259–2273.

- Findlay, S.S. Knowledge Brokers: Linking Researchers and Policy Makers. Available online: http://www.ihe.ca/documents/HTA-FR14.pdf (accessed on 15 September 2014).

- Cash, D.; Clark, W.C.; Alcock, F.; Dickson, N.M.; Eckley, N.; Guston, D.H.; Jäger, J.; Mitchell, R.B. Knowledge systems for sustainable development. Proc. Natl. Acad. Sci. USA 2003, 100, 8086–8091.

© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).