The Effectiveness and Sustainability of Tier Diagnostic Technologies for Misconception Detection in Science Education: A Systematic Review

Abstract

1. Introduction

1.1. Core Role of Scientific Concepts in Scientific Literacy

1.2. Contributions of Existing Research in Revealing Misconceptions

1.3. Necessity of Conducting a Systematic Review on Multi-Tier Diagnostic Technologies (MTDTs)

2. Methodology

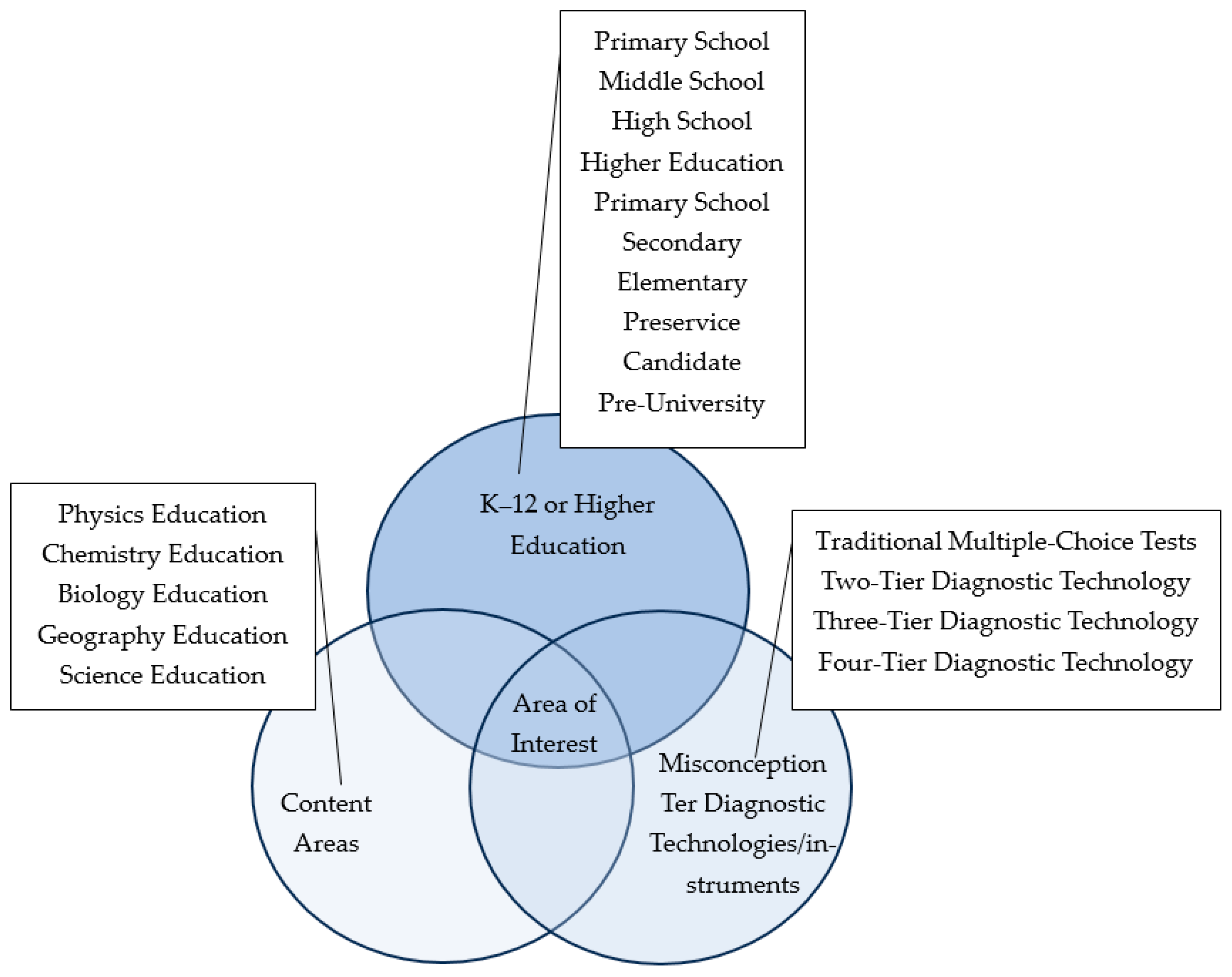

2.1. Literature Search

2.2. Inclusion and Exclusion Criteria

2.3. Types of Studies Reviewed

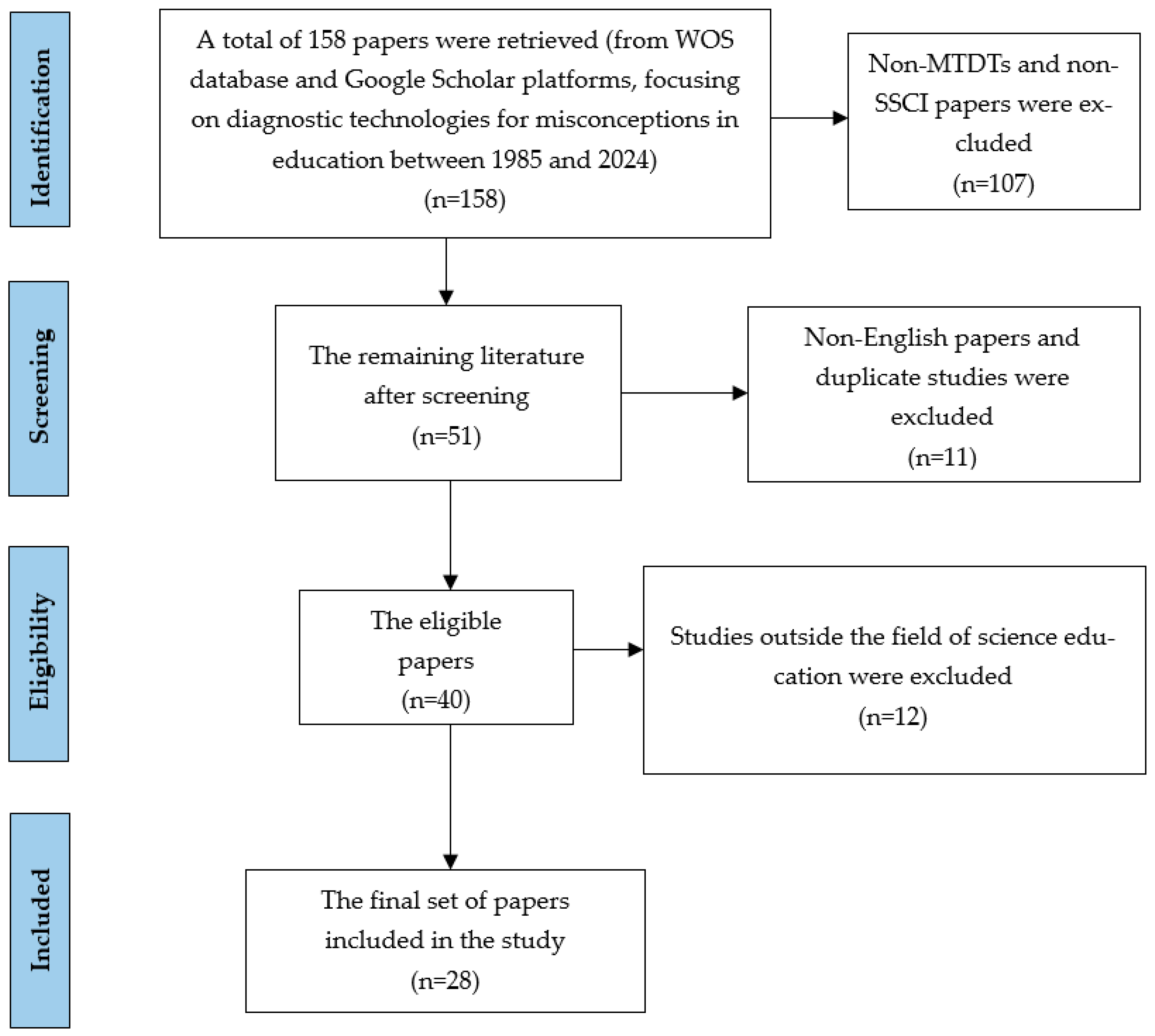

2.4. Literature Screening Process

2.5. Content Analysis Process

3. Results

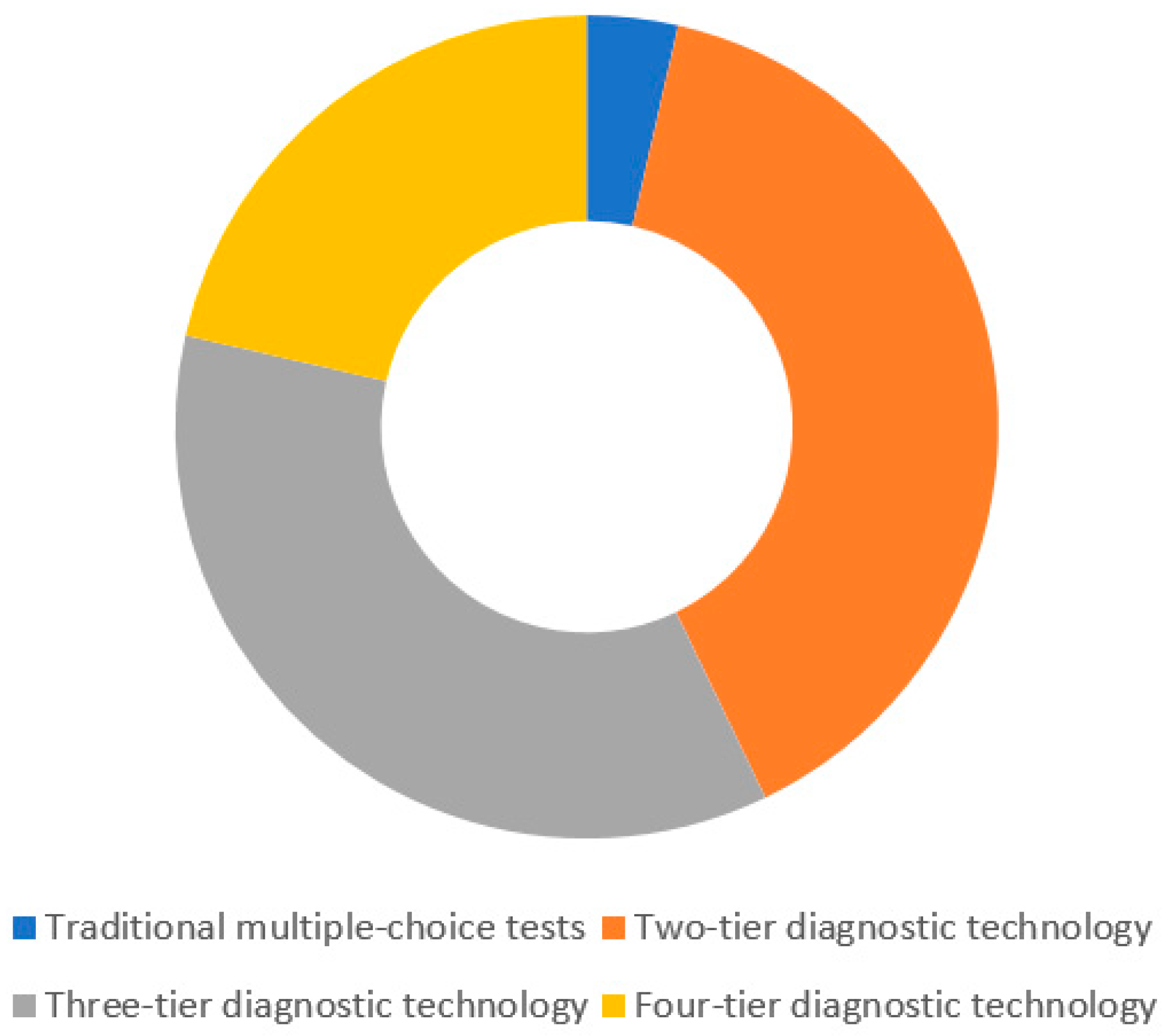

3.1. Descriptive Characteristics

3.1.1. Analyzed Documents and Their Locations

3.1.2. Content Areas

3.1.3. Research Methods and Data Analyses

3.2. Synthesis of the Studies

3.2.1. Development of MTDTs

3.2.2. Validation of the Reliability and Validity

3.2.3. Application of MTDTs Across Disciplines and Educational Stages

3.2.4. Misconceptions Identified by MTDTs and Proposed Interventions

3.2.5. Representational Effectiveness of MTDTs in Assessing Conceptual Understanding

4. Discussion

4.1. Are the Development and Overall Reliability and Validity of MTDTs Scientifically Sound?

4.2. Which Scientific Discipline Has the Widest Application of MTDTs?

4.3. Application of MTDTs in Different Educational Stages (K-12 vs. Higher Education)

4.4. Can MTDTs Be Applied on a Large Scale in Online Settings?

4.5. What Common Misconceptions Do MTDTs Reveal in Science Education, and Are the Teaching Strategies Targeting These Misconceptions Effective?

4.6. Which MTDT Is the Most Effective in Assessing Student Conceptual Understanding?

4.7. Future Research Directions and Recommendations

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- European Commission. Europe 2020: A Strategy for Smart, Sustainable and Inclusive Growth: Communication from the Commission; Publications Office of the European Union: Luxembourg, 2010.

- The State Council of the People’s Republic of China. Outline of Action Plan for National Scientific Literacy (2021–2035); The State Council of the People’s Republic of China: Beijing, China, 2021.

- United Nations. Transforming Our World: The 2030 Agenda for Sustainable Development; United Nations: New York, NY, USA, 2015; Available online: https://sdgs.un.org/2030agenda (accessed on 10 July 2024).

- NGSS Lead States. Next Generation Science Standards: For States, by States; The National Academies Press: Washington, DC, USA, 2013.

- Giner-Baixauli, A.; Corbí, H.; Mayoral, O. Exploring the Intersection of Paleontology and Sustainability: Enhancing Scientific Literacy in Spanish Secondary School Students. Sustainability 2024, 16, 5890.

- Guevara-Herrero, I.; Bravo-Torija, B.; Pérez-Martín, J.M. Educational Practice in Education for Environmental Justice: A Systematic Review of the Literature. Sustainability 2024, 16, 2805.

- The State Council of the People’s Republic of China. China’s National Science Literacy Action Plan (2021–2035). 2021. Available online: https://www.gov.cn/zhengce/content/2021-06/25/content_5620813.htm (accessed on 22 June 2024).

- Mohd Saat, R.; Mohd Fadzil, H.; Aziz, N.A.A.; Haron, K.; Rashid, K.; Shamsuar, N. Development of an online three-tier diagnostic test to assess pre-university students’ understanding of cellular respiration. J. Balt. Sci. Educ. 2016, 15, 532–546.

- Kao, H.L. A Study of Aboriginal and Urban Junior High School Students’ Alternative Conceptions on the Definition of Respiration. Int. J. Sci. Educ. 2007, 29, 517–533.

- Haslam, F.; Treagust, D.F. Diagnosing secondary students’ misconceptions of photosynthesis and respiration in plants using a two-tier multiple choice instrument. J. Biol. Educ. 1987, 21, 203–211.

- Treagust, D.F. Development and use of diagnostic tests to evaluate students’ misconceptions in science. Int. J. Sci. Educ. 1988, 10, 159–169.

- Viehmann, C.; Fernández Cárdenas, J.M.; Reynaga Peña, C.G. The Use of Socioscientific Issues in Science Lessons: A Scoping Review. Sustainability 2024, 16, 5827.

- Cairns, D.; Areepattamannil, S. Exploring the Relations of Inquiry-Based Teaching to Science Achievement and Dispositions in 54 Countries. Res. Sci. Educ. 2017, 49, 1–23.

- Bao, L.; Koenig, K. Physics education research for 21st century learning. Discip. Interdiscip. Sci. Educ. Res. 2019, 1, 2.

- Zhou, S.-N.; Han, J.; Koenig, K.; Raplinger, A.; Pi, Y.; Li, D.; Xiao, H.; Fu, Z.; Bao, L. Assessment of Scientific Reasoning: The Effects of Task Context, Data, and Design on Student Reasoning in Control of Variables. Think. Ski. Creat. 2016, 19, 175–187.

- Omer, L. Successful Scientific Instruction Involves More Than Just Discovering Concepts through Inquiry-Based Activities. Education 2002, 123, 318.

- Marx, R.; Blumenfeld, P.; Krajcik, J.; Fishman, B.; Soloway, E.; Geier, R.; Tal, R. Inquiry-based science in the middle grades: Assessment of learning in urban systemic reform. J. Res. Sci. Teach. 2004, 41, 1063–1080.

- Fernández-Huetos, N.; Pérez-Martín, J.M.; Guevara-Herrero, I.; Esquivel-Martín, T. Primary-Education Students’ Performance in Arguing About a Socioscientific Issue: The Case of Pharmaceuticals in Surface Water. Sustainability 2025, 17, 1618.

- Maillard, O.; Michme, G.; Azurduy, H.; Vides-Almonacid, R. Citizen Science for Environmental Monitoring in the Eastern Region of Bolivia. Sustainability 2024, 16, 2333.

- Kioupi, V.; Voulvoulis, N. Education for Sustainable Development: A Systemic Framework for Connecting the SDGs to Educational Outcomes. Sustainability 2019, 11, 6104.

- Pozuelo-Muñoz, J.; de Echave Sanz, A.; Cascarosa Salillas, E. Inquiring in the Science Classroom by PBL: A Design-Based Research Study. Educ. Sci. 2025, 15, 53.

- Chen, S.M. Shadows: Young Taiwanese children’s views and understanding. Int. J. Sci. Educ. 2009, 31, 59–79.

- Novak, J.D. Concept mapping: A tool for improving science teaching and learning. Improv. Teach. Learn. Sci. Math. 1996, 32–43.

- Langley, D.; Ronen, M.; Eylon, B.-S. Light propagation and visual patterns: Preinstruction learners’ conceptions. J. Res. Sci. Teach. 1997, 34, 399–424.

- Kaltakci-Gurel, D.; Eryilmaz, A.; McDermott, L.C. Development and application of a four-tier test to assess pre-service physics teachers’ misconceptions about geometrical optics. Res. Sci. Technol. Educ. 2017, 35, 238–260.

- Canal, P. Photosynthesis and ‘inverse respiration’ in plants: An inevitable misconception? Int. J. Sci. Educ. 1999, 21, 363–371.

- Gönen, S. A Study on Student Teachers’ Misconceptions and Scientifically Acceptable Conceptions About Mass and Gravity. J. Sci. Educ. Technol. 2008, 17, 70–81.

- Treagust, D. Evaluating students’ misconceptions by means of diagnostic multiple choice items. Res. Sci. Educ. 1986, 16, 199–207.

- Peşman, H.; Eryılmaz, A. Development of a Three-Tier Test to Assess Misconceptions About Simple Electric Circuits. J. Educ. Res. 2010, 103, 208–222.

- Aydeniz, M.; Bilican, K.; Kirbulut, Z.D. Exploring Pre-Service Elementary Science Teachers’ Conceptual Understanding of Particulate Nature of Matter through Three-Tier Diagnostic Test. Int. J. Educ. Math. Sci. Technol. 2017, 5, 221–234.

- Kiray, S.A.; Simsek, S. Determination and Evaluation of the Science Teacher Candidates’ Misconceptions About Density by Using Four-Tier Diagnostic Test. Int. J. Sci. Math. Educ. 2021, 19, 935–955.

- Guerra-Reyes, F.; Guerra-Dávila, E.; Naranjo-Toro, M.; Basantes-Andrade, A.; Guevara-Betancourt, S. Misconceptions in the Learning of Natural Sciences: A Systematic Review. Educ. Sci. 2024, 14, 497.

- Mwangi, S.W. Effects of the Use of Computer Animated Loci Teaching Technique on Secondary School Students’ Achievement and Misconceptions in Mathematics Within Kitui County, Kenya. Ph.D. Thesis, Egerton University, Njoro, Kenya, 2019. Available online: http://41.89.96.81:4000/items/4fe69378-ff6d-4d8e-973d-cf44e39611a7 (accessed on 13 July 2024).

- Menz, C.; Spinath, B.; Seifried, E. Misconceptions die hard: Prevalence and reduction of wrong beliefs in topics from educational psychology among preservice teachers. Eur. J. Psychol. Educ. 2020, 36, 477–494.

- Ruiz-Gallardo, J.; Reavey, D. Learning Science Concepts by Teaching Peers in a Cooperative Environment: A Longitudinal Study of Preservice Teachers. J. Learn. Sci. 2018, 28, 107–173.

- Diani, R.; Alfin, J.; Anggraeni, Y.M.; Mustari, M.; Fujiani, D. Four-Tier Diagnostic Test With Certainty of Response Index on The Concepts of Fluid. J. Phys. Conf. Ser. 2019, 1155, 012078.

- Miller, D.M.; Scott, C.E.; McTigue, E.M. Writing in the Secondary-Level Disciplines: A Systematic Review of Context, Cognition, and Content. Educ. Psychol. Rev. 2018, 30, 83–120.

- Scott, C.E.; McTigue, E.M.; Miller, D.M.; Washburn, E.K. The what, when, and how of preservice teachers and literacy across the disciplines: A systematic literature review of nearly 50 years of research. Teach. Teach. Educ. 2018, 73, 1–13.

- Díaz-Burgos, A.; García-Sánchez, J.-N.; Álvarez-Fernández, M.L.; de Brito-Costa, S.M. Psychological and Educational Factors of Digital Competence Optimization Interventions Pre- and Post-COVID-19 Lockdown: A Systematic Review. Sustainability 2024, 16, 51.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. Declaración PRISMA 2020: Una guía actualizada para la publicación de revisiones sistemáticas. Rev. Esp. Cardiol. 2021, 74, 790–799.

- Risko, V.J.; Roller, C.M.; Cummins, C.; Bean, R.M.; Block, C.C.; Anders, P.L.; Flood, J. A Critical Analysis of Research on Reading Teacher Education. Read. Res. Q. 2008, 43, 252–288.

- Bean, T.W. Preservice Teachers’ Selection and Use of Content Area Literacy Strategies. J. Educ. Res. 1997, 90, 154–163.

- Nourie, B.L.; Lenski, S.D. The (In)Effectiveness of Content Area Literacy Instruction for Secondary Preservice Teachers. Clear. House A J. Educ. Strateg. Issues Ideas 1998, 71, 372–374.

- Wang, J.-R. Development and Validation of a Two-Tier Instrument to Examine Understanding of Internal Transport in Plants and the Human Circulatory System. Int. J. Sci. Math. Educ. 2004, 2, 131–157.

- Sesli, E.; Kara, Y. Development and application of a two-tier multiple-choice diagnostic test for high school students’ understanding of cell division and reproduction. J. Biol. Educ. 2012, 46, 214–225.

- Taslidere, E. Development and use of a three-tier diagnostic test to assess high school students’ misconceptions about the photoelectric effect. Res. Sci. Technol. Educ. 2016, 34, 164–186.

- Caleon, I.S.; Subramaniam, R. Development and Application of a Three-Tier Diagnostic Test to Assess Secondary Students’ Understanding of Waves. Int. J. Sci. Educ. 2010, 32, 939–961.

- Arslan, H.O.; Cigdemoglu, C.; Moseley, C. A Three-Tier Diagnostic Test to Assess Pre-Service Teachers’ Misconceptions about Global Warming, Greenhouse Effect, Ozone Layer Depletion, and Acid Rain. Int. J. Sci. Educ. 2012, 34, 1667–1686.

- Gurcay, D.; Gulbas, E. Development of three-tier heat, temperature and internal energy diagnostic test. Res. Sci. Technol. Educ. 2015, 33, 197–217.

- Yan, Y.K.; Subramaniam, R. Using a multi-tier diagnostic test to explore the nature of students’ alternative conceptions on reaction kinetics. Chem. Educ. Res. Pract. 2018, 19, 213–226.

- Lin, S.-W. Development and Application of a Two-Tier Diagnostic Test for High School Students’ Understanding of Flowering Plant Growth and Development. Int. J. Sci. Math. Educ. 2004, 2, 175–199.

- Yeo, J.-H.; Yang, H.-H.; Cho, I. Using a Three-Tier Multiple-Choice Diagnostic Instrument toward Alternative Conceptions among Lower-Secondary School Students in Taiwan: Taking Ecosystems Unit as an Example. J. Balt. Sci. Educ. 2022, 21, 69–83.

- Zhao, C.; Zhang, S.; Cui, H.; Hu, W.; Dai, G. Middle school students’ alternative conceptions about the human blood circulatory system using four-tier multiple-choice tests. J. Biol. Educ. 2021, 57, 51–67.

- Coştu, B.; Ayas, A.; Niaz, M.; Ünal, S.; Çalik, M. Facilitating Conceptual Change in Students’ Understanding of Boiling Concept. J. Sci. Educ. Technol. 2007, 16, 524–536.

- Caleon, I.S.; Subramaniam, R. Do Students Know What They Know and What They Don’t Know? Using a Four-Tier Diagnostic Test to Assess the Nature of Students’ Alternative Conceptions. Res. Sci. Educ. 2010, 40, 313–337.

- Putica, K.B. Development and Validation of a Four-Tier Test for the Assessment of Secondary School Students’ Conceptual Understanding of Amino Acids, Proteins, and Enzymes. Res. Sci. Educ. 2023, 53, 651–668.

- Odom, A.L.; Barrow, L.H. Development and application of a two-tier diagnostic test measuring college biology students’ understanding of diffusion and osmosis after a course of instruction. J. Res. Sci. Teach. 1995, 32, 45–61.

- Milenković, D.D.; Hrin, T.N.; Segedinac, M.D.; Horvat, S. Development of a Three-Tier Test as a Valid Diagnostic Tool for Identification of Misconceptions Related to Carbohydrates. J. Chem. Educ. 2016, 93, 1514–1520.

- Yang, W.; Chan, A.; Gagarina, N. Editorial: Remote online language assessment: Eliciting discourse from children and adults. Front. Commun. 2024, 9, 1508448.

- Irmak, M.; İnaltun, H.; Ercan-Dursun, J.; Yaniş-Kelleci, H.; Yürük, N. Development and Application of a Three-Tier Diagnostic Test to Assess Pre-service Science Teachers’ Understanding on Work-Power and Energy Concepts. Int. J. Sci. Math. Educ. 2023, 21, 159–185.

- Milenković, D.D.; Segedinac, M.D.; Hrin, T.N. Increasing High School Students’ Chemistry Performance and Reducing Cognitive Load through an Instructional Strategy Based on the Interaction of Multiple Levels of Knowledge Representation. J. Chem. Educ. 2014, 91, 1409–1416.

- Putra, A.S.U.; Hamidah, I.; Nahadi. The development of five-tier diagnostic test to identify misconceptions and causes of students’ misconceptions in waves and optics materials. J. Phys. Conf. Ser. 2020, 1521, 022020.

- Rodrigues, H.; Jesús, A.; Lamb, R.; Choi, I.; Owens, T. Unravelling Student Learning: Exploring Nonlinear Dynamics in Science Education. Int. J. Psychol. Neurosci. 2023, 9, 118–137.

- Sawaki, Y. 4. Norm-referenced vs. criterion-referenced approach to assessment. In Handbook of Second Language Assessment; Tsagari, D., Banerjee, J., Eds.; De Gruyter Mouton: Berlin, Germany, 2016; pp. 45–60.

- Kumar, R.V. Cronbach’s Alpha: Genesis, Issues and Alternatives. IMIB J. Innov. Manag. 2024, 1, 17.

- Avinç, E.; Doğan, F. Digital literacy scale: Validity and reliability study with the rasch model. Educ. Inf. Technol. 2024, 29, 22895–22941.

- Grau-Gonzalez, I.A.; Villalba-Garzon, J.A.; Torres-Cuellar, L.; Puerto-Rojas, E.M.; Ortega, L.A. A psychometric analysis of the Early Trauma Inventory-Short Form in Colombia: CTT and Rasch model. Child Abus. Negl. 2024, 149, 106689.

- Nayak, A.; Khuntia, R. Development and Content Validation of a Measure to Assess the Parent-Child Social-emotional Reciprocity of Children with ASD. Indian J. Psychol. Med. 2024, 46, 66–71.

- Ardianto, D.; Rubini, B.; Pursitasari, I. Assessing STEM career interest among secondary students: A Rasch model measurement analysis. Eurasia J. Math. Sci. Technol. Educ. 2023, 19, em2213.

- Gurel, D.K.; Eryılmaz, A.; McDermott, L.C. A review and comparison of diagnostic instruments to identify students’ misconceptions in science. Eurasia J. Math. Sci. Technol. Educ. 2015, 11, 989–1008.

- Soeharto, S.; Csapó, B.; Sarimanah, E.; Dewi, F.I.; Sabri, T. A Review of Students’ Common Misconceptions in Science and Their Diagnostic Assessment Tools. J. Pendidik. IPA Indones. 2019, 8, 247–266.

- Özmen, K. Health Science Students’ Conceptual Understanding of Electricity: Misconception or Lack of Knowledge? Res. Sci. Educ. 2024, 54, 225–243.

- Niaoustas, G. Primary School Teacher’s Views on the Purpose and Forms of Student Performance Assessment. Int. J. Elem. Educ. 2024, 8, 132–140.

- Anwyl-Irvine, A.; Massonnié, J.; Flitton, A.; Kirkham, N.; Evershed, J. Gorilla in our midst: An online behavioral experiment builder. Behav. Res. Methods 2018, 52, 388–407.

- Permatasari, G.A.; Ellianawati, E.; Hardyanto, W. Online web-based learning and assessment tool in vocational high school for physics. J. Penelit. Pengemb. Pendidik. Fis. 2019, 5, 1–8.

- Das, A.; Malaviya, S. AI-Enabled Online Adaptive Learning Platform and Learner’s Performance: A Review of Literature. Empir. Econ. Lett. 2024, 23, 234.

- Erickson, J.A.; Botelho, A.F.; McAteer, S.; Varatharaj, A.; Heffernan, N.T. The automated grading of student open responses in mathematics. In Proceedings of the Tenth International Conference on Learning Analytics & Knowledge, Frankfurt, Germany, 23–27 March 2020.

- Pacaci, C.; Ustun, U.; Ozdemir, O.F. Effectiveness of conceptual change strategies in science education: A meta-analysis. J. Res. Sci. Teach. 2024, 61, 1263–1325.

- Briggs, A.G.; Morgan, S.K.; Sanderson, S.K.; Schulting, M.C.; Wieseman, L.J. Tracking the Resolution of Student Misconceptions about the Central Dogma of Molecular Biology. J. Microbiol. Biol. Educ. 2016, 17, 339–350.

- Alismaiel, O.A. Using Structural Equation Modeling to Assess Online Learning Systems’ Educational Sustainability for University Students. Sustainability 2021, 13, 13565.

- Okada, A.; Gray, P. A Climate Change and Sustainability Education Movement: Networks, Open Schooling, and the ‘CARE-KNOW-DO’ Framework. Sustainability 2023, 15, 2356.

| First Classification | Secondary Classification | Definitions and Explanations |
|---|---|---|
| Basic Information | Author(s), publication year, region, participant scale | - |
| Development Process | Theoretical framework, confidence ratings, concept statement, concept scope, distractors, instrument proofreading, instrument number of items, type of instrument | Theoretical framework: the foundational theoretical principles guiding the development of diagnostic instruments. Concept statement: a scientific articulation of the main concept and its sub-concepts. Concept scope: the boundaries defining the main concept and its sub-concepts. Distractors: erroneous options based on potential misconceptions. |
| Instrument Quality Assessment | Reliability testing, validity testing, conclusion on effectiveness | - |
| Application of MTDTs | Implementation methods, educational stages, sample sizes, subject areas, theme concept | Theme concept: a key concept within a discipline that students need to understand, such as photosynthesis. |
| Misconceptions and Interventions | Identified misconceptions, types, psychological variables, intervention recommendations, validation of intervention recommendations | Psychological variables: mean confidence (CF), mean confidence of correct responses (CFC), mean confidence of wrong responses (CFW), confidence discrimination quotient (CDQ), and confidence bias (CB). |
| Representational Effectiveness of MTDTs | Representational effectiveness of traditional multiple-choice tests, two-tier diagnostic instruments, three-tier diagnostic instruments, and four-tier diagnostic instruments | Representational effectiveness: the precision with which instruments with different numbers of tiers evaluate participants’ levels of conceptual understanding. |
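
The psychological variables listed under "Misconceptions and Interventions" can be computed directly from paired correctness and confidence data. The sketch below is a minimal illustration and does not reproduce the procedure of any reviewed study. It rests on three assumptions: confidence is rated on a `scale_min` to `scale_max` scale (6 points by default), the CDQ denominator is the population standard deviation of all confidence ratings, and CB is mean confidence rescaled linearly to 0–1 minus the proportion of correct answers. The function name `confidence_metrics` is hypothetical. A positive CDQ suggests students are more confident when they are right than when they are wrong, and a positive CB suggests overconfidence.

```python
import math
from statistics import mean, pstdev
from typing import Dict, Sequence


def confidence_metrics(correct: Sequence[bool], confidence: Sequence[int],
                       scale_min: int = 1, scale_max: int = 6) -> Dict[str, float]:
    """Compute CF, CFC, CFW, CDQ and CB from paired correctness/confidence data.

    correct[i] is True when response i was scientifically correct;
    confidence[i] is the rating given for that response (assumed scale).
    """
    if not correct or len(correct) != len(confidence):
        raise ValueError("need equal-length, non-empty inputs")

    cf = mean(confidence)  # CF: mean confidence over all responses
    right = [c for c, ok in zip(confidence, correct) if ok]
    wrong = [c for c, ok in zip(confidence, correct) if not ok]
    cfc = mean(right) if right else math.nan  # CFC: mean confidence when correct
    cfw = mean(wrong) if wrong else math.nan  # CFW: mean confidence when wrong

    # CDQ: gap between CFC and CFW in units of the SD of all confidence ratings
    sd = pstdev(confidence)
    cdq = (cfc - cfw) / sd if sd > 0 else math.nan

    # CB: confidence rescaled to 0..1 minus proportion correct
    # (the linear rescaling is an assumption; CB > 0 suggests overconfidence)
    cf_scaled = (cf - scale_min) / (scale_max - scale_min)
    proportion_correct = sum(correct) / len(correct)
    cb = cf_scaled - proportion_correct

    return {"CF": cf, "CFC": cfc, "CFW": cfw, "CDQ": cdq, "CB": cb}


metrics = confidence_metrics([True, True, False, False], [6, 5, 2, 3])
print(metrics)
```

Studies that rate confidence per tier would call the function once per tier, or once on pooled ratings, depending on how they define each variable.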

| First Classification | Secondary Classification | Tertiary Code (Specific Categories) | Frequency of Occurrence |
|---|---|---|---|
| Development Process | TF (Theoretical Framework) | DP1 Fully follows Treagust’s model | 2 |
| | | DP2 Partially follows Treagust’s model | 1 |
| | | DP3 Does not fully follow Treagust’s model | 24 |
| | | DP4 Not described | 1 |
| | SP (Sources of Propositional Concept Statements) | SP1 Science curriculum standards/syllabus | 18 |
| | | SP2 Textbooks | 12 |
| | | SP3 Literature review | 18 |
| | | SP4 Expert opinions or reviews | 8 |
| | | SP5 Teaching experience | 3 |
| | | SP6 Others | 4 |
| | CT (Scope of Conceptual Themes) | CT1 Defined by propositional knowledge statements | 4 |
| | | CT2 Concept map construction | 12 |
| | | CT3 Focus on core concepts | 9 |
| | | CT4 Hierarchical conceptual framework | 1 |
| | | CT5 Following curriculum requirements | 2 |
| | | CT6 Others | 3 |
| | AM (Acquisition of Potential Misconceptions) | AM1 Literature review | 25 |
| | | AM2 Classroom observation | 6 |
| | | AM3 Student interviews | 21 |
| | | AM4 Open-ended questions/tests | 15 |
| | | AM5 Classroom discussion and feedback | 4 |
| | | AM6 Expert feedback | 5 |
| | | AM7 Others | 2 |
| | CRs (Confidence Ratings) | CR1 No confidence scale involved | 12 |
| | | CR2 2-point scale | 7 |
| | | CR3 4-point scale | 2 |
| | | CR4 5-point scale | 1 |
| | | CR5 6-point scale | 5 |
| | | CR6 7-point scale | 1 |
| | IP (Instrument Proofreading) | IP1 Expert review feedback | 25 |
| | | IP2 Pilot testing feedback | 24 |
| | | IP3 Validation not involved | 1 |
| Development Process | IN1 (Instrument Number of Items) | IN1-1 8–10 items | 2 |
| | | IN1-2 11–13 items | 13 |
| | | IN1-3 14–16 items | 8 |
| | | IN1-4 More than 16 items | 4 |
| | IN2 (Type of Instrument) | IN2-1 Two-tier diagnostic instrument | 11 |
| | | IN2-2 Three-tier diagnostic instrument | 10 |
| | | IN2-3 Four-tier diagnostic instrument | 6 |
| | | IN2-4 Traditional multiple-choice testing | 1 |
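
The coding scheme defines distractors as erroneous options based on potential misconceptions. One way to make that link explicit while building an instrument is to record, for each wrong content–reason combination, the misconception it was written to detect. The class below is a hypothetical sketch. The name `TwoTierItem`, its fields, and the placeholder label `M1` are illustrative assumptions, not a format used by the reviewed studies.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple


@dataclass
class TwoTierItem:
    """Hypothetical record for one two-tier item (content tier + reason tier)."""
    stem: str
    content_options: Dict[str, str]  # option key -> option text
    reason_options: Dict[str, str]   # reason key -> reason text
    correct_content: str
    correct_reason: str
    # (content key, reason key) -> label of the misconception that pair targets
    distractor_map: Dict[Tuple[str, str], str] = field(default_factory=dict)

    def diagnose(self, content: str, reason: str) -> Optional[str]:
        """Return None for a fully correct response, else the targeted misconception."""
        if content not in self.content_options or reason not in self.reason_options:
            raise KeyError("response uses an option that is not part of this item")
        if content == self.correct_content and reason == self.correct_reason:
            return None
        # Wrong combinations that no distractor was written for are flagged as such
        return self.distractor_map.get((content, reason), "unmapped response")


item = TwoTierItem(
    stem="Placeholder stem",
    content_options={"A": "option A", "B": "option B"},
    reason_options={"1": "reason 1", "2": "reason 2"},
    correct_content="A",
    correct_reason="1",
    distractor_map={("B", "2"): "M1"},
)
print(item.diagnose("B", "2"))
```

Keeping the mapping alongside the item lets frequencies of each targeted misconception be tallied directly from responses. Any "unmapped response" that occurs often may point to a distractor set that needs revision after pilot testing.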

| First Classification | Secondary Classification | Tertiary Code (Specific Categories) | Frequency of Occurrence |
|---|---|---|---|
| Instrument Quality Assessment | RV (Reliability Validation) | RV1 Cronbach’s alpha coefficient only | 9 |
| | | RV2 Cronbach’s alpha combined with other methods | 14 |
| | | RV3 No Cronbach’s alpha | 3 |
| | | RV4 Not involved | 2 |
| | VV (Validity Validation) | VV1 Expert review only | 24 |
| | | VV2 Expert review combined with other methods | 9 |
| | | VV3 No expert review | 2 |
| | | VV4 Not involved | 1 |
| | CC (Conclusion on Effectiveness) | CC1 Good reliability and validity | 12 |
| | | CC2 High reliability and validity | 4 |
| | | CC3 Excellent reliability and validity | 3 |
| | | CC4 Effective and reliable instrument | 4 |
| | | CC5 Demonstrates good reliability and validity | 2 |
| | | CC6 Fair reliability and validity | 2 |
| | | CC7 Not involved or described | 1 |
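
Cronbach’s alpha is the reliability statistic reported most often in the coded studies (RV1 and RV2). For k items, α = k/(k − 1) × (1 − Σσ²ᵢ/σ²ₜ), where σ²ᵢ is the variance of item i and σ²ₜ is the variance of students’ total scores. The sketch below assumes each multi-tier item has already been scored dichotomously. Crediting an item only when both the content and reason tiers are correct is one common convention, and it is used here as an assumption. The function names are illustrative.

```python
from statistics import pvariance
from typing import List, Sequence


def score_two_tier(content_ok: bool, reason_ok: bool) -> int:
    """Assumed scoring rule: 1 only if both the content and reason tiers are correct."""
    return int(content_ok and reason_ok)


def cronbach_alpha(scores: Sequence[Sequence[float]]) -> float:
    """scores[s][i] is student s's score on item i (rows = students, columns = items)."""
    if not scores:
        raise ValueError("no students")
    k = len(scores[0])
    if k < 2 or any(len(row) != k for row in scores):
        raise ValueError("need a rectangular matrix with at least two items")
    # Population variances are used throughout; the ratio is unchanged
    # if sample variances are used consistently instead.
    item_vars = [pvariance([row[i] for row in scores]) for i in range(k)]
    total_var = pvariance([sum(row) for row in scores])
    if total_var == 0:
        raise ValueError("total scores have zero variance; alpha is undefined")
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Four students x three items; each (content_ok, reason_ok) pair is scored first
responses = [
    [(True, True), (True, True), (True, True)],
    [(True, True), (True, True), (True, False)],
    [(False, True), (True, True), (False, False)],
    [(True, False), (False, False), (False, True)],
]
scores: List[List[int]] = [[score_two_tier(c, r) for c, r in row] for row in responses]
print(round(cronbach_alpha(scores), 3))
```

Changing the scoring rule, for example by requiring certainty on the confidence tiers as well, changes the score matrix and therefore alpha. Reports should state which rule was used.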

| First Classification | Secondary Classification | Tertiary Code (Specific Categories) | Frequency of Occurrence |
|---|---|---|---|
| Application of MTDTs | IMs (Implementation Methods) | IM1 Paper-based test | 26 |
| | | IM2 Online test | 1 |
| | | IM3 Not involved | 1 |
| | ELs (Educational Stages) | EL1 Primary school | 0 |
| | | EL2 Junior high school | 3 |
| | | EL3 Senior high school | 11 |
| | | EL4 University | 10 |
| | | EL5 Across multiple educational stages | 4 |
| | PS (Participant Scale) | PS1 < 200 participants | 7 |
| | | PS2 200–400 participants | 11 |
| | | PS4 > 400 participants | 7 |
| | | PS5 Not specified | 3 |
| | AS (Applicable Subjects) | AS1 Biology | 11 |
| | | AS2 Chemistry | 4 |
| | | AS3 Physics | 10 |
| | | AS4 Geography | 1 |
| | | AS5 Interdisciplinary | 2 |
| | FTs (Focus Topics) | FT1 Respiration | 4 |
| | | FT2 Photosynthesis | 3 |
| | | FT3 Covalent bond structure | 2 |
| | | FT4 Diffusion and osmosis | 1 |
| | | FT5 Internal transport and circulation | 1 |
| | | FT6 Plant growth and development | 1 |
| | | FT7 Boiling phenomena | 1 |
| | | FT8 Mass and gravity | 1 |
| | | FT9 Cell division and reproduction | 1 |
| | | FT10 Chemical reactions | 1 |
| | | FT11 Waves (mechanical and propagation) | 2 |
| | | FT12 Electric circuits | 1 |
| | | FT13 Environmental issues (global warming, acid rain, ozone depletion) | 1 |
| | | FT14 Heat and temperature | 1 |
| | | FT15 Cellular respiration | 1 |
| | | FT16 Photoelectric effect | 1 |
| | | FT17 Carbohydrates | 1 |
| | | FT18 Work, power, energy | 1 |
| | | FT19 Ecosystems | 1 |
| | | FT20 Particle nature of matter | 1 |
| | | FT21 Reaction kinetics | 1 |
| | | FT22 Geometrical optics | 1 |
| | | FT23 Density | 1 |
| | | FT24 Amino acids, proteins, enzymes | 1 |

| First Classification | Secondary Classification | Tertiary Code (Specific Categories) | Frequency of Occurrence |
|---|---|---|---|
| Misconceptions and Interventions | RMs (Revealed Misconceptions) | RM1 (1–5 misconceptions) | 19 |
| | | RM2 (6–10 misconceptions) | 6 |
| | | RM3 (11 or more misconceptions) | 3 |
| | CMs (Categories of Misconceptions) | CM1 Common misconceptions | 27 |
| | | CM2 Not involved | 1 |
| | PVs (Use of Psychological Variables) | PV1 Involved (CF, CFW, CFC, CDQ, CB) | 6 |
| | | PV2 Not involved | 22 |
| | IRs (Intervention Recommendations) | IR1 Teaching sequence optimization | 2 |
| | | IR2 Hands-on experiments and simulations | 22 |
| | | IR3 Concept maps and visual models | 10 |
| | | IR4 Conceptual change texts | 1 |
| | | IR5 Cognitive conflict approach | 1 |
| | | IR6 Real-life analogies and examples | 11 |
| | | IR7 Formative assessments and continuous feedback | 4 |
| | | IR8 Multimodal and inquiry-based teaching | 2 |
| | | IR9 Personalized/tiered teaching | 5 |
| | | IR10 Clarification of terminology and concept distinctions | 8 |
| | | IR11 Student-centered discussions and group activities | 3 |
| | EIs (Examination of Interventions) | EI1 Examined | 1 |
| | | EI2 Unexamined | 27 |

| Two-Tier Diagnostic Technology: Content Tier (First Tier) | Two-Tier Diagnostic Technology: Reason Tier (Second Tier) | Two-Tier Diagnostic Technology: Conceptual Understanding Performance | Traditional Multiple-Choice Tests: Content Tier | Traditional Multiple-Choice Tests: Conceptual Understanding Performance |
|---|---|---|---|---|
| True | True | Understanding | True | Understanding |
| True | False | - | True | Understanding |
| False | True | Partial understanding | False | Misconception |
| False | False | Misconception | False | Misconception |
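
The decision rules in the two-tier table can be written as a lookup from tier outcomes to categories. This minimal sketch encodes only the rows shown. It leaves the correct-content, wrong-reason pattern unclassified because the table marks it "-". The function names are hypothetical. Running both classifiers over the same responses shows that a traditional multiple-choice test credits that pattern as understanding.

```python
from typing import Optional


def classify_two_tier(content_ok: bool, reason_ok: bool) -> Optional[str]:
    """Map (content tier, reason tier) outcomes to the categories in the table."""
    rules = {
        (True, True): "Understanding",
        (True, False): None,  # marked "-" in the table: no category assigned
        (False, True): "Partial understanding",
        (False, False): "Misconception",
    }
    return rules[(content_ok, reason_ok)]


def classify_traditional(content_ok: bool) -> str:
    """A traditional multiple-choice item sees only the content tier."""
    return "Understanding" if content_ok else "Misconception"


for content_ok in (True, False):
    for reason_ok in (True, False):
        print(content_ok, reason_ok,
              classify_two_tier(content_ok, reason_ok),
              classify_traditional(content_ok))
```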

| Content Tier (First Tier) | Reason Tier (Second Tier) | Confidence Tier (Third Tier) | Conceptual Understanding Performance |
|---|---|---|---|
| True | True | Certain | Scientific concept |
| True | True | Uncertain | Knowledge gap |
| True | False | Certain | False positive (FP) |
| True | False | Uncertain | Knowledge gap |
| False | True | Certain | False negative (FN) |
| False | True | Uncertain | Knowledge gap |
| False | False | Certain | Misconception |
| False | False | Uncertain | Knowledge gap |
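
In the three-tier table, every uncertain response counts as a knowledge gap. Only certain responses are split into scientific concept, false positive, false negative, or misconception. The reviewed instruments used confidence scales of 2 to 7 points, so a rating must first be dichotomized. The cut-off `threshold` below is a hypothetical parameter, and the sketch does not reproduce any particular study’s choice.

```python
def is_certain(rating: int, threshold: int) -> bool:
    """Hypothetical dichotomization: ratings at or above `threshold` count as certain."""
    return rating >= threshold


def classify_three_tier(content_ok: bool, reason_ok: bool, certain: bool) -> str:
    """Apply the three-tier decision table to one response."""
    if not certain:
        return "Knowledge gap"  # covers every uncertain row of the table
    return {
        (True, True): "Scientific concept",
        (True, False): "False positive",
        (False, True): "False negative",
        (False, False): "Misconception",
    }[(content_ok, reason_ok)]


# Example on an assumed 6-point scale, treating ratings of 4 or above as certain
print(classify_three_tier(False, False, is_certain(5, threshold=4)))
print(classify_three_tier(False, False, is_certain(2, threshold=4)))
```

The same wrong answer counts as a misconception or a knowledge gap depending only on the confidence cut-off. That sensitivity is one reason the choice of scale and threshold is worth reporting.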

| Content Tier (First Tier) | Confidence Tier (Second Tier) | Reason Tier (Third Tier) | Confidence Tier (Fourth Tier) | Conceptual Understanding Performance |
|---|---|---|---|---|
| True | Certain | True | Certain | Scientific concept |
| True | Certain | True | Uncertain | Knowledge gap |
| True | Uncertain | True | Certain | Knowledge gap |
| True | Uncertain | True | Uncertain | Knowledge gap |
| True | Certain | False | Certain | False positive (FP) |
| True | Certain | False | Uncertain | Knowledge gap |
| True | Uncertain | False | Certain | Knowledge gap |
| True | Uncertain | False | Uncertain | Knowledge gap |
| False | Certain | True | Certain | False negative (FN) |
| False | Certain | True | Uncertain | Knowledge gap |
| False | Uncertain | True | Certain | Knowledge gap |
| False | Uncertain | True | Uncertain | Knowledge gap |
| False | Certain | False | Certain | Misconception |
| False | Certain | False | Uncertain | Knowledge gap |
| False | Uncertain | False | Certain | Knowledge gap |
| False | Uncertain | False | Uncertain | Knowledge gap |
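
The four-tier table adds separate confidence ratings for the content and reason tiers. A response leaves the knowledge-gap category only when both ratings are certain. The sketch below applies that rule and tallies a category profile. For comparison, it also prints the number of responses a traditional multiple-choice test would count as correct, which illustrates why the review compares the representational effectiveness of different tier designs. The four sample responses are invented for illustration and are not data from any reviewed study. The function names are hypothetical.

```python
from collections import Counter
from typing import Iterable, Tuple

# (content correct, content certain, reason correct, reason certain)
Response = Tuple[bool, bool, bool, bool]


def classify_four_tier(content_ok: bool, content_certain: bool,
                       reason_ok: bool, reason_certain: bool) -> str:
    """Apply the four-tier decision table to one response."""
    if not (content_certain and reason_certain):
        return "Knowledge gap"  # any uncertain confidence tier
    return {
        (True, True): "Scientific concept",
        (True, False): "False positive",
        (False, True): "False negative",
        (False, False): "Misconception",
    }[(content_ok, reason_ok)]


def profile(responses: Iterable[Response]) -> Counter:
    """Count how many responses fall into each category."""
    return Counter(classify_four_tier(*r) for r in responses)


# Invented example responses from four students to one item
sample = [
    (True, True, True, True),    # correct and certain on both tiers
    (True, True, False, True),   # right answer, wrong reason, certain
    (True, False, True, True),   # right answer and reason, unsure of the answer
    (False, True, False, True),  # wrong on both tiers, certain
]
print(profile(sample))
print("Counted correct by a traditional test:", sum(r[0] for r in sample))
```

In this invented sample, a traditional test would count three of the four responses as correct, whereas the four-tier rules classify only one as a scientific concept.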

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

MDPI and ACS Style: Ma, H.; Yang, H.; Li, C.; Ma, S.; Li, G. The Effectiveness and Sustainability of Tier Diagnostic Technologies for Misconception Detection in Science Education: A Systematic Review. Sustainability 2025, 17, 3145. https://doi.org/10.3390/su17073145

AMA Style: Ma H, Yang H, Li C, Ma S, Li G. The Effectiveness and Sustainability of Tier Diagnostic Technologies for Misconception Detection in Science Education: A Systematic Review. Sustainability. 2025; 17(7):3145. https://doi.org/10.3390/su17073145

Chicago/Turabian Style: Ma, Huangdong, Hongbin Yang, Chen Li, Shiwen Ma, and Gaofeng Li. 2025. "The Effectiveness and Sustainability of Tier Diagnostic Technologies for Misconception Detection in Science Education: A Systematic Review." Sustainability 17, no. 7: 3145. https://doi.org/10.3390/su17073145

APA Style: Ma, H., Yang, H., Li, C., Ma, S., & Li, G. (2025). The Effectiveness and Sustainability of Tier Diagnostic Technologies for Misconception Detection in Science Education: A Systematic Review. Sustainability, 17(7), 3145. https://doi.org/10.3390/su17073145