Sustainable Economic Development Through Crisis Detection Using AI Techniques

Abstract

1. Introduction

2. Materials and Methods

Algorithm 1. Pseudo Code for Economic Crisis Detection Using NLP.

2.1. Dataset and Preprocessing

- Removing HTML Tags: we removed HTML tags and related markup from the text.

- Converting Numerical Expressions into Text: we converted the numbers in the text to word form so that the text could be processed more effectively by semantic analysis.

- Clearing Non-Alphanumeric Symbols: we removed non-alphanumeric symbols from the text while preserving the letters of the alphabet.

- Removing Stop Words: we removed stop words, which carry little meaning on their own, so that the analysis focused on more meaningful words and the impact of stop words on semantic analysis was reduced. Examples: I, you, and, but, is, are, the, for, yet.

- Creating Text Types: in this step, raw, lemmatized, and stemmed forms of the message text were created. A stem is the root of a word found by removing its suffixes, usually without considering grammar rules. A lemma is the root of a word found using grammar rules, context, and meaning. Example of a stem: studies → studi. Example of a lemma: studies → study.
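The preprocessing steps above can be sketched in Python as follows. This is a minimal illustration using only the standard library, not the pipeline used in the study: the function names, the short stop-word set (taken from the examples above), and the number speller limited to 0–99 are assumptions made for brevity. Stemming and lemmatization are omitted because they typically rely on an external NLP library.

```python
import html
import re

# Illustrative stop-word set, taken from the examples listed above.
STOP_WORDS = {"i", "you", "and", "but", "is", "are", "the", "for", "yet"}

ONES = ["zero", "one", "two", "three", "four", "five", "six", "seven",
        "eight", "nine", "ten", "eleven", "twelve", "thirteen", "fourteen",
        "fifteen", "sixteen", "seventeen", "eighteen", "nineteen"]
TENS = ["", "", "twenty", "thirty", "forty", "fifty", "sixty", "seventy",
        "eighty", "ninety"]


def number_to_words(n: int) -> str:
    """Spell out 0..99; larger values are left as digits in this sketch."""
    if n < 20:
        return ONES[n]
    if n < 100:
        tens, ones = divmod(n, 10)
        return TENS[tens] + ("" if ones == 0 else " " + ONES[ones])
    return str(n)


def preprocess(text: str) -> list[str]:
    """Apply the cleaning steps in order and return the remaining tokens."""
    text = re.sub(r"<[^>]+>", " ", text)         # 1. remove HTML tags
    text = html.unescape(text)                   #    decode entities such as &amp;
    text = re.sub(r"\d+",                        # 2. numbers -> words
                  lambda m: number_to_words(int(m.group())), text)
    text = re.sub(r"[^A-Za-z0-9\s]", " ", text)  # 3. drop non-alphanumeric symbols
    tokens = text.lower().split()
    return [t for t in tokens if t not in STOP_WORDS]  # 4. remove stop words


print(preprocess("<p>Prices are up 52% &amp; rising</p>"))
```

The order of the steps matters in this sketch: numbers are spelled out before symbols are stripped, so that a value such as 52% keeps its numeric content as words.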

2.2. Natural Language Processing Methods

2.2.1. Definition and Overview of Natural Language Processing

2.2.2. Cosine Similarity

2.2.3. Distributional Similarity

2.2.4. Distributional Hypothesis

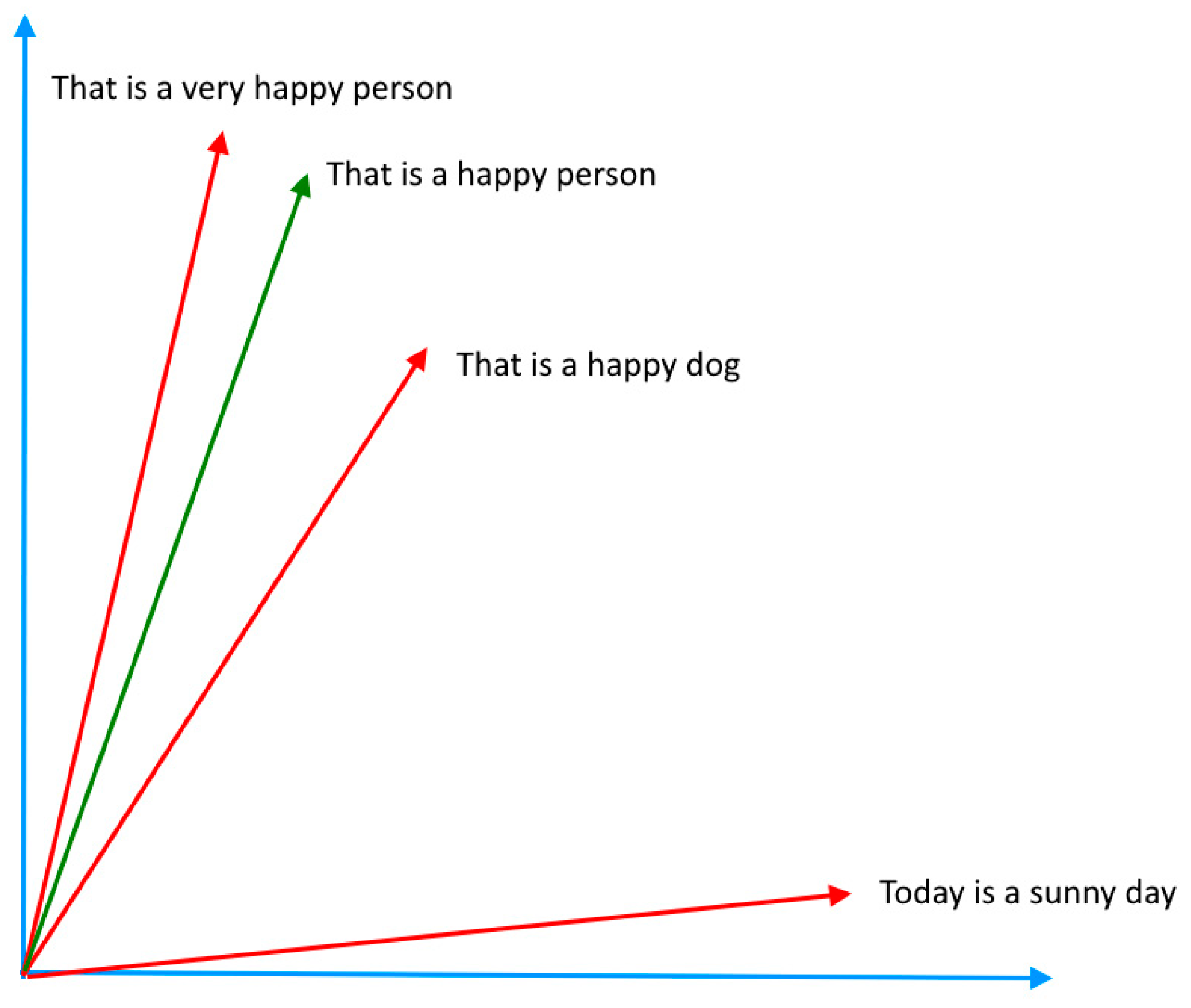

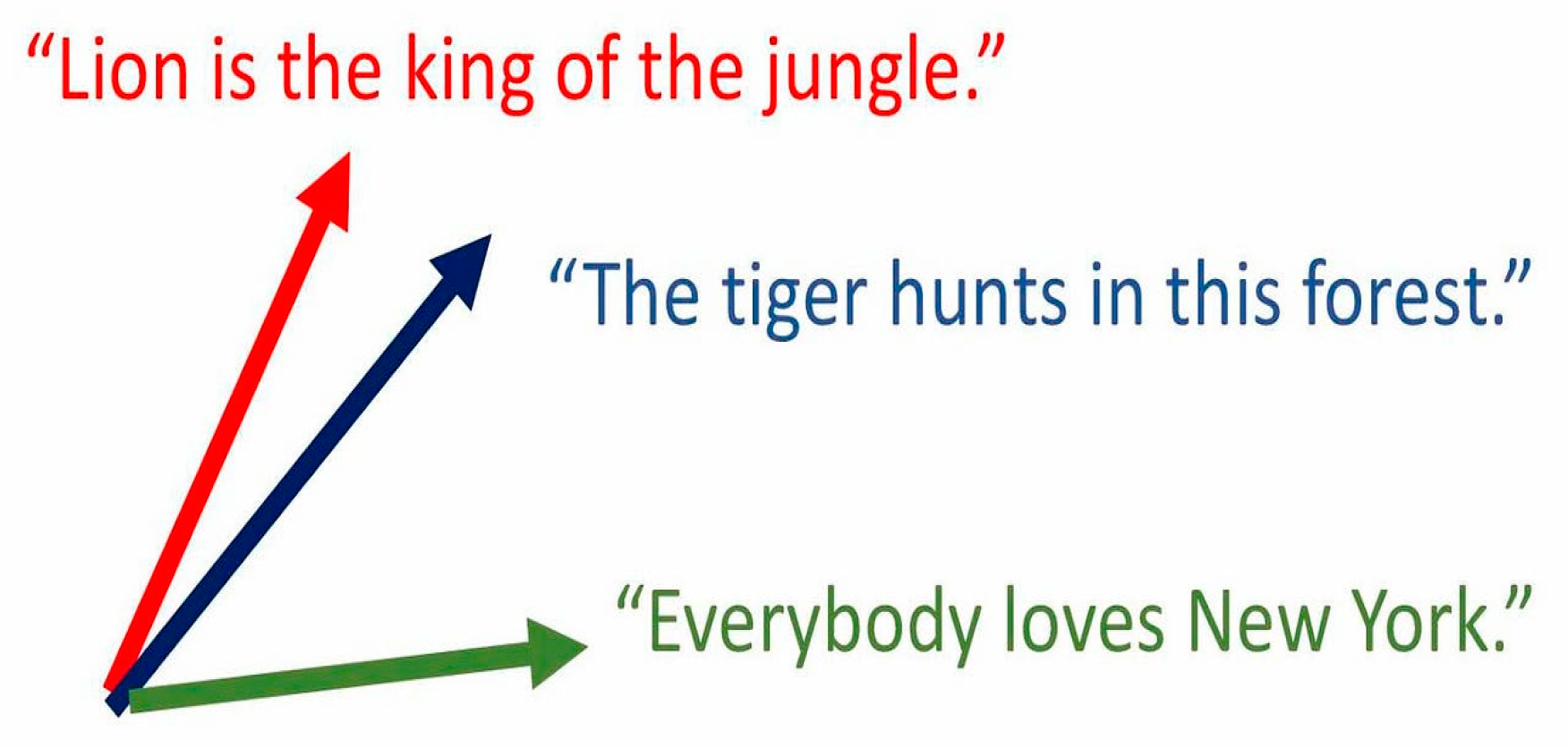

2.2.5. Distributional Representation

- One Hot Encoding (OHE): Each word in a sentence is represented by a vector whose size equals the number of unique words in the sentence. The single 1 in a word's vector marks the position assigned to that word among the unique words; all other entries are 0.

- Bag of Words (BoW): BoW represents a text by ignoring contextual information such as word order or grammar and focusing only on which words it contains and how many times each occurs. The unique words in the text are identified and a vocabulary is created. Each piece of text is then represented as a vector holding the frequency of each vocabulary word in that text.

- Bag of N-grams (BoN): BoN is a variant of BoW created to address BoW's lack of contextual information. Instead of counting individual words, BoN groups every N consecutive tokens and counts how often each group occurs. Each group is called an N-gram.

- Term Frequency-Inverse Document Frequency (TF-IDF): This is a weighting technique used to determine how important a particular term is in a text (document). The TF-IDF score is equal to the product of TF (Term Frequency) and IDF (Inverse Document Frequency), as shown in Equation (2) [20].
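The four representations above can be illustrated with a short Python sketch. The two example documents and all function names are hypothetical, and the TF-IDF weighting assumes one common variant (term count divided by document length, multiplied by log(N/df)), which may differ in detail from Equation (2).

```python
import math
from collections import Counter

# Two hypothetical example documents, already tokenized by whitespace.
docs = [
    "inflation rises as prices rise".split(),
    "prices fall as inflation slows".split(),
]


def one_hot(tokens):
    """Map each unique word to a vector with a single 1 at its vocabulary index."""
    vocab = sorted(set(tokens))
    return {w: [1 if w == v else 0 for v in vocab] for w in vocab}


def bag_of_words(tokens, vocab):
    """Count how often each vocabulary word occurs, ignoring word order."""
    counts = Counter(tokens)
    return [counts[w] for w in vocab]


def ngrams(tokens, n):
    """Return every run of n consecutive tokens."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def tf_idf(tokenized_docs):
    """Score each term per document as tf * log(N / df) (assumed variant)."""
    n_docs = len(tokenized_docs)
    # Document frequency: in how many documents does each word appear?
    df = Counter(w for doc in tokenized_docs for w in set(doc))
    scores = []
    for doc in tokenized_docs:
        counts = Counter(doc)
        scores.append({w: (c / len(doc)) * math.log(n_docs / df[w])
                       for w, c in counts.items()})
    return scores


vocab = sorted({w for doc in docs for w in doc})
print(bag_of_words(docs[0], vocab))
print(ngrams(docs[0], 2))
print(tf_idf(docs)[0])
```

In this variant, a word that appears in every document receives a score of zero, which reflects the idea that such a word does not help distinguish one document from another.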

2.2.6. Distributed Representations

- Continuous Bag of Words (CBoW): This is one of the two methods that Word2Vec offers for training word embeddings. CBoW predicts the target word from its context, i.e., it tries to predict a word from the words surrounding it. The order of the words in the context is not important. For example, let us predict each word in the sentence “The stock market crash caused a severe recession” from the two closest words on each side of it. This number, 2, is called the window size. Each line below lists the context words, followed by the target word to be predicted:

  - stock, market → The
  - The, market, crash → stock
  - The, stock, crash, caused → market
  - stock, market, caused, a → crash
  - market, crash, a, severe → caused
  - crash, caused, severe, recession → a
  - caused, a, recession → severe
  - a, severe → recession

- Skip-Gram: This is the other method that Word2Vec offers for training word embeddings. Skip-Gram is the opposite of CBoW: from an input word, it predicts the words in its context, i.e., the words around it. Using the example sentence given for CBoW with the same window size, each line below lists the input word, followed by the context words to be predicted:

  - The → stock, market
  - stock → The, market, crash
  - market → The, stock, crash, caused
  - crash → stock, market, caused, a
  - caused → market, crash, a, severe
  - a → crash, caused, severe, recession
  - severe → caused, a, recession
  - recession → a, severe

  The model is trained with each input word as input and its context words as output. The trained model is then used to predict the surrounding words of a given word.
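The way training examples are drawn from the example sentence can be sketched as follows. This only generates the (input, output) pairs for a window size of 2; it does not train a network, and the function names are illustrative.

```python
def context_window(tokens, i, window):
    """Return up to `window` words on each side of position i."""
    left = tokens[max(0, i - window):i]
    right = tokens[i + 1:i + 1 + window]
    return left + right


def cbow_pairs(tokens, window=2):
    """CBoW: the context words are the input, the centre word is the target."""
    return [(context_window(tokens, i, window), tokens[i])
            for i in range(len(tokens))]


def skipgram_pairs(tokens, window=2):
    """Skip-Gram: the centre word is the input, each context word is a target."""
    return [(tokens[i], c)
            for i in range(len(tokens))
            for c in context_window(tokens, i, window)]


sentence = "The stock market crash caused a severe recession".split()

for context, target in cbow_pairs(sentence):
    print(context, "->", target)

for centre, context_word in skipgram_pairs(sentence):
    print(centre, "->", context_word)
```

Words near the start or end of the sentence have fewer neighbours, so their context lists are shorter than four words.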

2.2.7. Word Embedding Methods

- In the first image, the vector from “man” to “king” is almost parallel to the vector from “woman” to “queen”; equivalently, the vector from “king” to “queen” is almost parallel to the vector from “man” to “woman”. We can express this as king − man ≈ queen − woman. The relation is approximate rather than exact: the two difference vectors are very similar, so the projection of one onto the other is close to its norm. We can expect similar results if the words “boy” and “girl” are used instead of “man” and “woman”, i.e., king − boy ≈ queen − girl.

- Similarly, in the second image, the vector relations between ‘walked’ and ‘walking’ and ‘swam’ and ‘swimming’ show the regular transition between the past and present tense forms of these verbs.

- The last image shows that the vector from each country to its capital is almost parallel to the corresponding vectors for other countries and their capitals.
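The parallelism described above can be checked numerically with cosine similarity. The three-dimensional vectors below are invented purely for illustration (real embeddings have hundreds of dimensions and are learned from data), and the helper names are assumptions.

```python
import math


def cosine(u, v):
    """Cosine of the angle between two vectors: 1.0 means exactly parallel."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Hypothetical toy embeddings, not taken from any trained model.
toy = {
    "king":  [0.90, 0.80, 0.10],
    "queen": [0.85, 0.15, 0.80],
    "man":   [0.10, 0.90, 0.10],
    "woman": [0.10, 0.20, 0.80],
}


def offset(a, b):
    """Difference vector a - b between two words in the toy vocabulary."""
    return [x - y for x, y in zip(toy[a], toy[b])]


# king - man and queen - woman should point in almost the same direction.
similarity = cosine(offset("king", "man"), offset("queen", "woman"))
print(round(similarity, 3))
```

A value close to 1 indicates that the two difference vectors are nearly parallel, which is the approximate equality king − man ≈ queen − woman.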

2.2.8. Sentence Embedding (Language Representation Models)

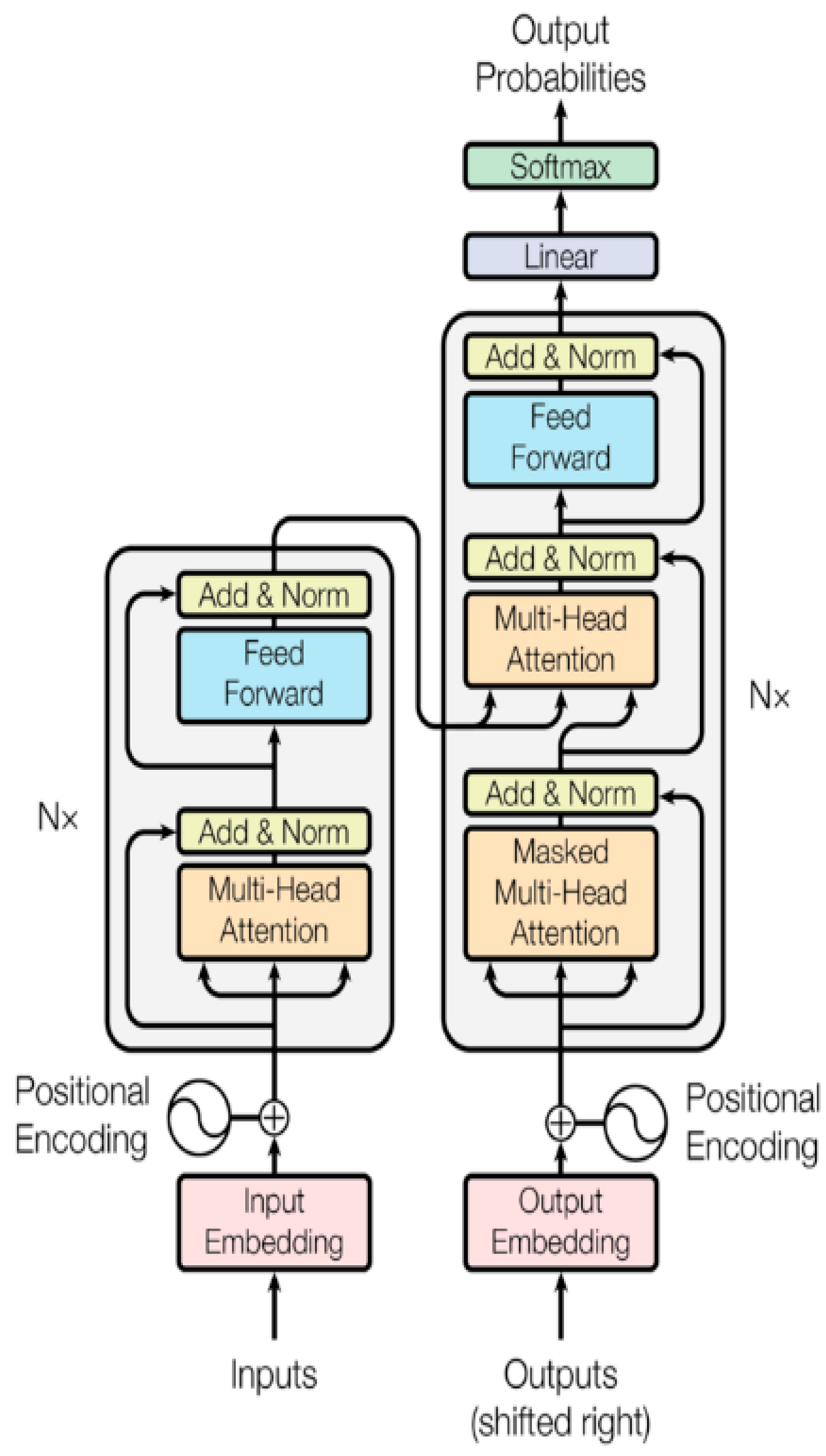

- BERT (Bidirectional Encoder Representations from Transformers): BERT is a language representation model whose construction and use have been studied extensively in recent research [23]. BERT and similar Transformer-based models perform well in complex NLP tasks because they capture a broader context of the language. BERT plays a particularly important role in understanding the context of expressions and words. For example, in applications such as sentiment analysis of customer reviews on an e-commerce platform, this allows us to better understand what customers think about products and thus make more accurate business decisions [23]. BERT is built on the Transformer, a pioneering NLP model first introduced by Vaswani et al. [24]. Figure 4 shows the basic architecture of the Transformer model.

- The “Input Embedding” module creates fixed-size vector representations of words or tokens. In this way, each word or token is associated with a numerical vector that the model can learn. This is the first step for the model to understand the input.

- “Positional Encoding” adds sequence information, i.e., the position of each word in the sentence. RNNs and LSTMs receive this information implicitly because they read the input one token at a time, whereas Transformers process all tokens in parallel and therefore have no built-in notion of word order. To overcome this limitation, this module passes the position of each word in the sentence to the model.
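As an illustration of how position information can be encoded, the sketch below implements the sinusoidal scheme from the original Transformer paper [24]. This is an assumption made for the example: BERT itself uses learned position embeddings, and the function name is illustrative.

```python
import math


def positional_encoding(seq_len, d_model):
    """Return a seq_len x d_model table of sinusoidal position vectors.

    Even dimensions use sine and odd dimensions use cosine, with
    wavelengths that grow geometrically along the embedding dimension.
    """
    pe = [[0.0] * d_model for _ in range(seq_len)]
    for pos in range(seq_len):
        for i in range(0, d_model, 2):
            angle = pos / (10000 ** (i / d_model))
            pe[pos][i] = math.sin(angle)
            if i + 1 < d_model:
                pe[pos][i + 1] = math.cos(angle)
    return pe


# Each row is added element-wise to the embedding of the token at that position.
for row in positional_encoding(seq_len=3, d_model=4):
    print([round(x, 3) for x in row])
```

Because every position receives a different vector, two occurrences of the same word at different places in a sentence reach the model with different inputs.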

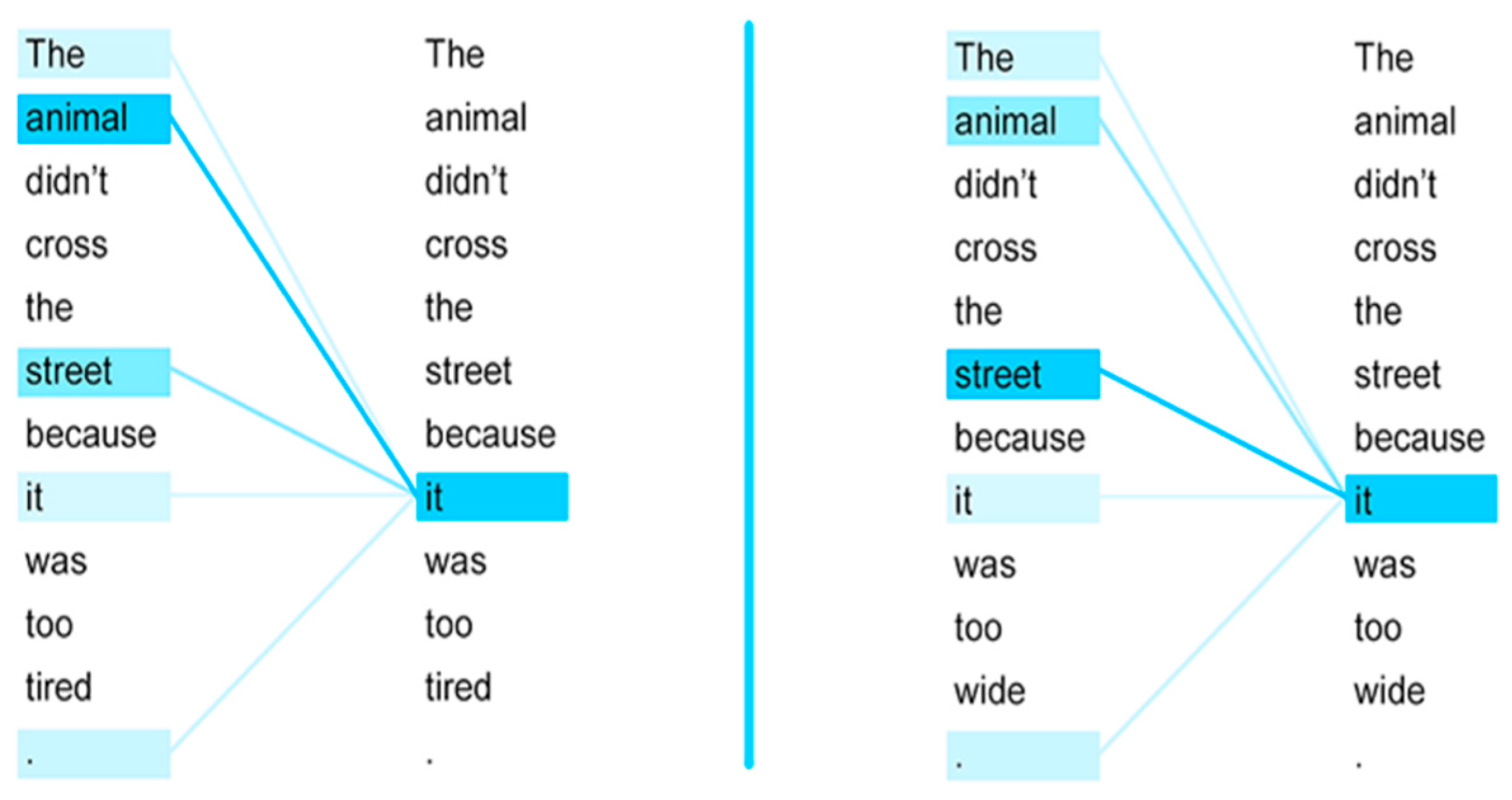

- The “Multi-Head Attention” module allows the model to “pay attention” to information in different places at the same time to better understand the relationships between words. For example, this module helps determine whether the pronoun “it” in the sentence “The animal didn’t cross the street because it was too tired” refers to the word “animal” or the word “street”. In this way, the meaning of the word “it” in the sentence is more accurately determined. In Figure 5, to illustrate the mechanism of “Self-Attention” in this module, the words most related to “it” are shaded according to the strength of the relationship. In the sentence on the left, the word “it” is related to the word “animal”, while in the sentence on the right, the word “it” is related to the word “street”.

- The “Add & Norm” module performs two separate functions. The addition part, known as a residual connection, adds the input of each sublayer to that sublayer’s output; this helps prevent problems such as vanishing gradients by allowing gradients in deep networks to be propagated back more effectively. In the normalization part, the vector obtained after the addition is subjected to layer normalization to accelerate learning and increase stability across the layers of the model.

- The “Feed Forward” module has two important parts: the expansion and activation part, which allows the model to learn more complex relationships, and the contraction part, which brings the output of each layer to the appropriate size for the next layer.

- The “Masked Multi-Head Attention” module supports an autoregressive prediction structure by ensuring that the model only sees the words produced so far when generating a sentence. That is, it only considers the previous words when predicting the next word. It works very well in sequential data processing, such as text generation and translation, because it prevents information leakage about future words.

- The “Output Embedding” module plays the same role in the Decoder section as the “Input Embedding” module does in the Encoder section: it converts the output tokens generated so far into vectors that are fed to the Decoder. Converting the Decoder’s output vectors back into words is handled by the “Linear” and “Softmax” modules described next.

- The “Linear” module projects the vectors output by the Decoder to the size of the vocabulary, producing one score per word.

- The “Softmax” module converts the scores from the “Linear” module into a probability distribution in which all word probabilities sum to 1. The next word is then predicted based on this probability distribution.
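Several of the modules described above can be sketched in a few lines of plain Python. The example below is a simplified, single-head illustration under stated assumptions: there are no learned projection matrices, the same vectors serve as queries, keys, and values, and layer normalization omits its learned gain and bias. All names are illustrative.

```python
import math


def softmax(scores):
    """Turn arbitrary scores into probabilities that sum to 1."""
    m = max(scores)                          # subtract the max for stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values, causal=False):
    """Scaled dot-product attention; `causal=True` hides future positions."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for i, q in enumerate(queries):
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        if causal:
            # Masked attention: position i may only look at positions <= i.
            scores = [s if j <= i else float("-inf")
                      for j, s in enumerate(scores)]
        weights = softmax(scores)
        outputs.append([sum(w * v[c] for w, v in zip(weights, values))
                        for c in range(len(values[0]))])
        all_weights.append(weights)
    return outputs, all_weights


def add_and_norm(x, sublayer_out, eps=1e-5):
    """Residual connection followed by layer normalization (no gain or bias)."""
    summed = [a + b for a, b in zip(x, sublayer_out)]
    mean = sum(summed) / len(summed)
    var = sum((s - mean) ** 2 for s in summed) / len(summed)
    return [(s - mean) / math.sqrt(var + eps) for s in summed]


# Three hypothetical two-dimensional token vectors.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, weights = attention(x, x, x, causal=True)
print(weights)
print([add_and_norm(xi, oi) for xi, oi in zip(x, out)])
```

With `causal=True`, the first token can attend only to itself, which mirrors how “Masked Multi-Head Attention” prevents information about future words from leaking into the prediction.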

- Word2Vec: The Word2Vec algorithm, introduced by Mikolov et al. [21,32], is a popular unsupervised learning algorithm. With One Hot Encoding, it is not possible to extract relationships between the vectors of two different words. In addition, as the number of unique words grows, the number of zero elements in each word vector grows with it, which increases the memory requirement. Word2Vec addresses these two problems with the two methods explained earlier, CBoW and Skip-Gram [32]. Both architectures have been shown to be capable of producing high-quality word embeddings. The Word2Vec algorithm is based on the distributional hypothesis described earlier, that is, the idea that words in similar contexts tend to have similar meanings [32]. Word2Vec learns distributed representations of words by training a neural network on a large text corpus with training data prepared according to CBoW or Skip-Gram [32].

- GloVe (Global Vectors for Word Representation): GloVe is an unsupervised learning algorithm that learns word vectors [22]. GloVe generates word vectors by analyzing how likely word pairs are to appear together in a given text corpus. Its count-based global log-bilinear regression model combines local context windows with global matrix factorization, and it has been widely used in various natural language processing tasks [22]. The algorithm is trained on the non-zero elements of a word–word co-occurrence matrix, using statistical information from the corpus [35]. As a result, GloVe’s word vectors capture fine-grained semantic and syntactic regularities that can be expressed with vector arithmetic [22].
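The word–word co-occurrence matrix on which GloVe is trained can be built as in the sketch below. Only the counting step is shown, not the regression that fits the vectors. The function name and the toy sentence are illustrative, and the 1/d distance weighting follows the description in the GloVe paper [22]; treat the details as assumptions rather than a reproduction of any specific implementation.

```python
from collections import defaultdict


def cooccurrence(sentences, window=2):
    """Count how often word pairs appear within `window` tokens of each other.

    Only non-zero entries are stored, keyed by (word, context_word).
    A pair that is d tokens apart contributes 1/d to the count.
    """
    counts = defaultdict(float)
    for tokens in sentences:
        for i, word in enumerate(tokens):
            for j in range(max(0, i - window), i):
                weight = 1.0 / (i - j)
                # The matrix is symmetric: record the pair in both directions.
                counts[(word, tokens[j])] += weight
                counts[(tokens[j], word)] += weight
    return dict(counts)


matrix = cooccurrence([["stock", "market", "crash"]])
for pair, value in sorted(matrix.items()):
    print(pair, value)
```

Storing only the non-zero entries keeps the matrix manageable, since most word pairs in a large vocabulary never occur together.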

2.2.9. Detection of Crisis Moments with NLP

2.2.10. Detection of Crisis Moments

3. Results

3.1. Parameter Settings of the Models Used

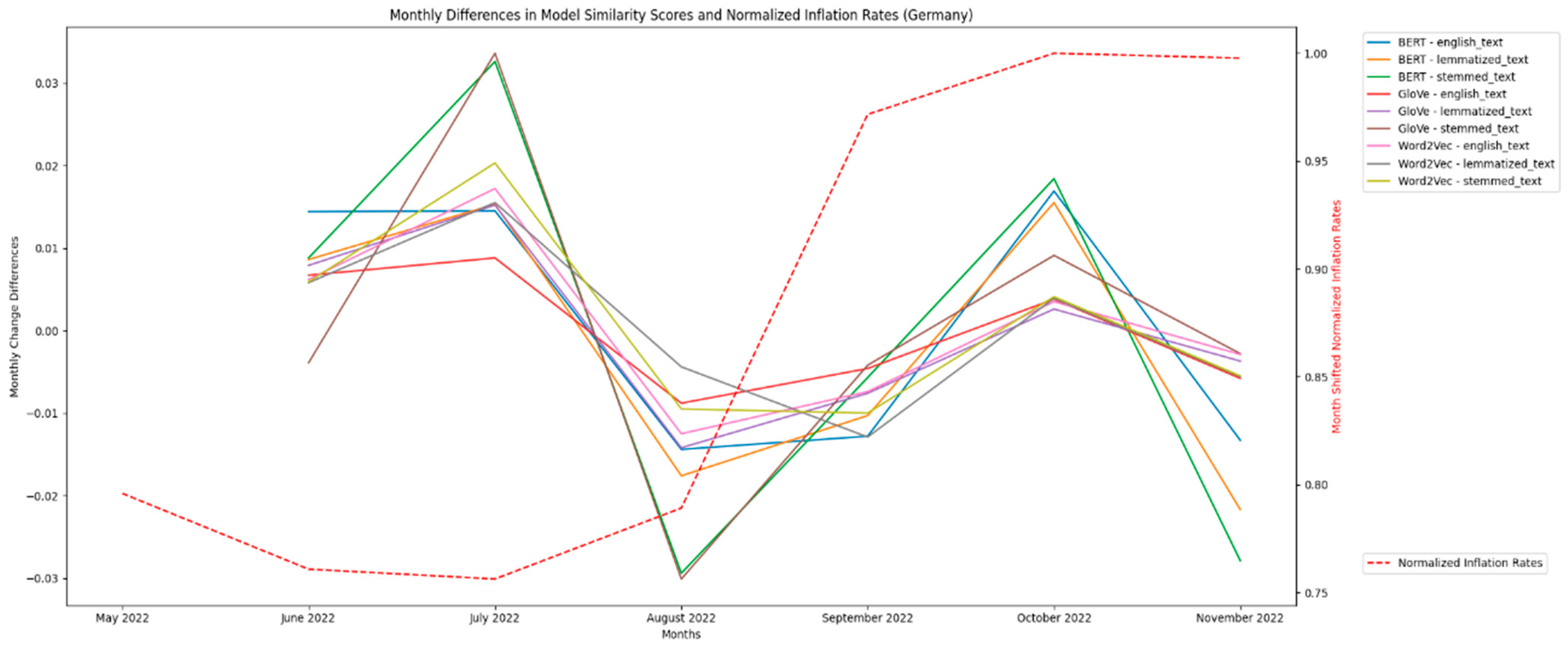

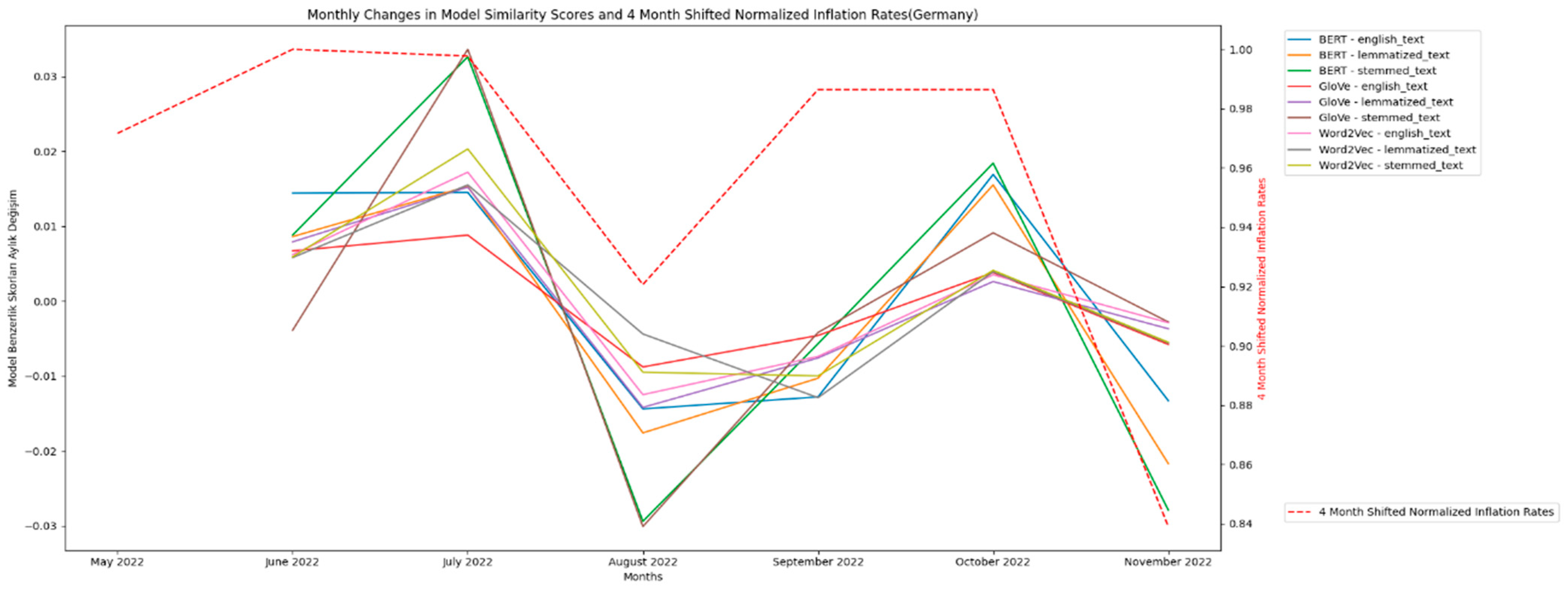

3.2. Analyses

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Naghdi, Y.; Kaghazian, S.; Kakoei, N. Global Financial Crisis and Inflation: Evidence from OPEC. Middle-East J. Sci. Res. 2012, 11, 525–530.
2. Reynard, S. Assessing Potential Inflation Consequences of QE after Financial Crises. Peterson Inst. Int. Econ. Work. Pap. 2012, 12, 22.
3. Bijapur, M. Do Financial Crises Erode Potential Output? Evidence from OECD Inflation Responses. Econ. Lett. 2012, 117, 700–703.
4. Reznikov, R. Data Science Methods and Models in Modern Economy. SSRN Electron. J. 2024. Available online: https://papers.ssrn.com/sol3/Delivery.cfm?abstractid=4851627 (accessed on 16 December 2024).
5. Qi, L. FITE4801 Final Year Project. The University of Hong Kong. 2024. Available online: https://wp2023.cs.hku.hk/fyp23021/wp-content/uploads/sites/22/FITE4801_Interim_Report_fyp23021.pdf (accessed on 16 December 2024).
6. Bodislav, D.A.; Popescu, G.; Niculescu, I.; Mihalcea, A. The Integration of Machine Learning in Central Banks: Implications and Innovations. Eur. J. Sustain. Dev. 2024, 13, 23.
7. Farahani, M.S. Analysis of Business Valuation Models with AI Emphasis. Sustain. Econ. 2024, 2, 132.
8. Ginsburg, R. Harnessing AI for Accurate Financial Projections. ResearchGate. 2024. Available online: https://www.researchgate.net/profile/Husam-Rajab-4/publication/385385326_Harnessing_AI_for_Accurate_Financial_Projections/links/6722bf12ecbbde716b4c5469/Harnessing-AI-for-Accurate-Financial-Projections.pdf (accessed on 16 December 2024).
9. Ari, M.A.; Chen, S.; Ratnovski, M.L. The Dynamics of Non-Performing Loans During Banking Crises: A New Database; IMF Working Paper; International Monetary Fund: Washington, DC, USA, 2019.
10. Kilimci, Z.H.; Duvar, R. An Efficient Word Embedding and Deep Learning Based Model to Forecast the Direction of Stock Exchange Market Using Twitter and Financial News Sites: A Case of Istanbul Stock Exchange (BIST 100). IEEE Access 2020, 8, 188186–188198.
11. Othan, D.; Kilimci, Z.H.; Uysal, M. Financial Sentiment Analysis for Predicting Direction of Stocks Using Bidirectional Encoder Representations from Transformers (BERT) and Deep Learning Models. In Proceedings of the International Conference on Innovative Intelligent Technologies (ICIT), Istanbul, Turkey, 5–6 December 2019; pp. 30–35.
12. Atak, A. Exploring the Sentiment in Borsa Istanbul with Deep Learning. Borsa Istanb. Rev. 2023, 23, S84–S95.
13. Hellwig, K.-P. Predicting Fiscal Crises: A Machine Learning Approach; International Monetary Fund: Washington, DC, USA, 2021.
14. Chen, M.; DeHaven, M.; Kitschelt, I.; Lee, S.J.; Sicilian, M.J. Identifying Financial Crises Using Machine Learning on Textual Data. J. Risk Financ. Manag. 2023, 16, 161.
15. Chen, Y.; Kelly, B.T.; Xiu, D. Expected Returns and Large Language Models. SSRN 2022. Available online: https://ssrn.com/abstract=4416687 (accessed on 16 December 2024).
16. Reimann, C. Predicting Financial Crises: An Evaluation of Machine Learning Algorithms and Model Explainability for Early Warning Systems. Rev. Evol. Polit. Econ. 2024, 1, 1–33.
17. Nyman, P.; Tuckett, D. Measuring Financial Sentiment to Predict Financial Instability: A New Approach Based on Text Analysis; University College London: London, UK, 2015.
18. Usui, M.; Ishii, N.; Nakamura, K. Extraction and Standardization of Patient Complaints from Electronic Medication Histories for Pharmacovigilance: Natural Language Processing Analysis in Japanese. JMIR Med. Inform. 2018, 6, e11021.
19. Bird, S.; Klein, E.; Loper, E. Natural Language Processing with Python: Analyzing Text with the Natural Language Toolkit; O’Reilly Media: Sebastopol, CA, USA, 2009.
20. Manning, C.D.; Raghavan, P.; Schütze, H. Boolean Retrieval. In Introduction to Information Retrieval; Cambridge University Press: Cambridge, UK, 2008; pp. 1–18.
21. Harris, Z. Distributional Hypothesis. Word World 1954, 10, 146–162.
22. Pennington, J.; Socher, R.; Manning, C.D. GloVe: Global Vectors for Word Representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014.
23. Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. arXiv 2018, arXiv:1810.04805.
24. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. Adv. Neural Inf. Process. Syst. 2017, 30.
25. Dai, Z.; Callan, J. Deeper Text Understanding for IR with Contextual Neural Language Modeling. In Proceedings of the 42nd International ACM SIGIR Conference on Research and Development in Information Retrieval, Paris, France, 21–25 July 2019.
26. Bao, T.; Wang, S.; Li, J.; Liu, B.; Chen, X. A BERT-Based Hybrid Short Text Classification Model Incorporating CNN and Attention-Based BiGRU. J. Organ. End User Comput. 2021, 33, 1–21.
27. Sun, C.; Qiu, X.; Huang, X. How to Fine-Tune BERT for Text Classification? In Proceedings of the 18th China National Conference on Chinese Computational Linguistics (CCL 2019), Kunming, China, 18–20 October 2019.
28. Shi, Z.; Yuan, Z.; Wang, Q.; Song, J.; Zhang, J. News Image Text Classification Algorithm with Bidirectional Encoder Representations from Transformers Model. J. Electron. Imaging 2023, 32, 011217.
29. Khandve, S.I.; Bhave, S.; Nene, R.; Kulkarni, R.V. Hierarchical Neural Network Approaches for Long Document Classification. In Proceedings of the 14th International Conference on Machine Learning and Computing (ICMLC 2022), Guangzhou, China, 18–20 February 2022.
30. Glass, M.; Subramanian, S.; Wang, Y.; Smith, N.A. Span Selection Pre-Training for Question Answering. arXiv 2019, arXiv:1909.04120.
31. Abdel-Salam, S.; Rafea, A. Performance Study on Extractive Text Summarization Using BERT Models. Information 2022, 13, 67.
32. Chung, Y.-A.; Weng, S.-W.; Chen, Y.-S.; Glass, J. Audio Word2Vec: Unsupervised Learning of Audio Segment Representations Using Sequence-to-Sequence Autoencoder. arXiv 2016, arXiv:1603.00982.
33. Tulu, C.N. Experimental Comparison of Pre-Trained Word Embedding Vectors of Word2Vec, Glove, FastText for Word Level Semantic Text Similarity Measurement in Turkish. Adv. Sci. Technol. Res. J. 2022, 16, 45–51.
34. Juneja, P.; Gupta, S.; Anand, A. Context-Aware Clustering Using GloVe and K-Means. Int. J. Softw. Eng. Appl. 2017, 8, 21–38.
35. Mafunda, M.C.; Mhlanga, S.; Dube, A.; Dlodlo, M. A Word Embedding Trained on South African News Data. Afr. J. Inf. Commun. 2022, 30, 1–24.
36. Kusum, S.P.P.; Soehardjo, S.K. Sentiment Analysis Using Global Vector and Long Short-Term Memory. Indones. J. Electr. Eng. Comput. Sci. 2022, 26, 414–422.
37. Nguyen, T.H.; Shirai, K.; Velcin, J. Sentiment Analysis on Social Media for Stock Movement Prediction. Expert Syst. Appl. 2015, 42, 9603–9611.

| Date | English_Text | Lemmatized_Text | Stemmed_Text |
|---|---|---|---|
| 1 January 2022 T23:56:43Z | The December inflation data, which concerns millions of people, will be announced today by the Turkish Statistical Institute, TurkStat. With the clarification… | december inflation data concern million people announce today turkish statistical institute turkstat clarification… | decemb inflat data concern million peopl announc today turkish statist institut turkstat clarif… |
| 1 January 2022 T23:26:28Z | As of the first day of the new year, the electricity tariffs, which were switched to a gradual system, increased by an average of fifty-two percent to one hundred… | first day new year electricity tariff switch gradual system increase average fifty two percent one hundred… | first day new year electr tariff switch gradual system increas averag fifti two percent one hundr… |
| 1 January 2022 T23:15:29Z | How can the price of red meat decrease? The decline in foreign exchange prices has not yet been reflected in market prices, especially red meat prices are high. | price red meat decrease decline foreign exchange price yet reflect market price especially red meat price high. | price red meat decreas declin foreign exchang price yet reflect market price especi red meat price high. |
| 1 January 2022 T22:46:22Z | The wall was built on the Osmangazi Bridge, where a dollar-based transit guarantee was applied on the first day of Two Thousand and Twenty One, … | wall built osmangazi bridge dollar base transit guarantee apply first day two thousand twenty one … | wall built osmangazi bridg dollar base transit guarante appli first day two thousand twenti one … |

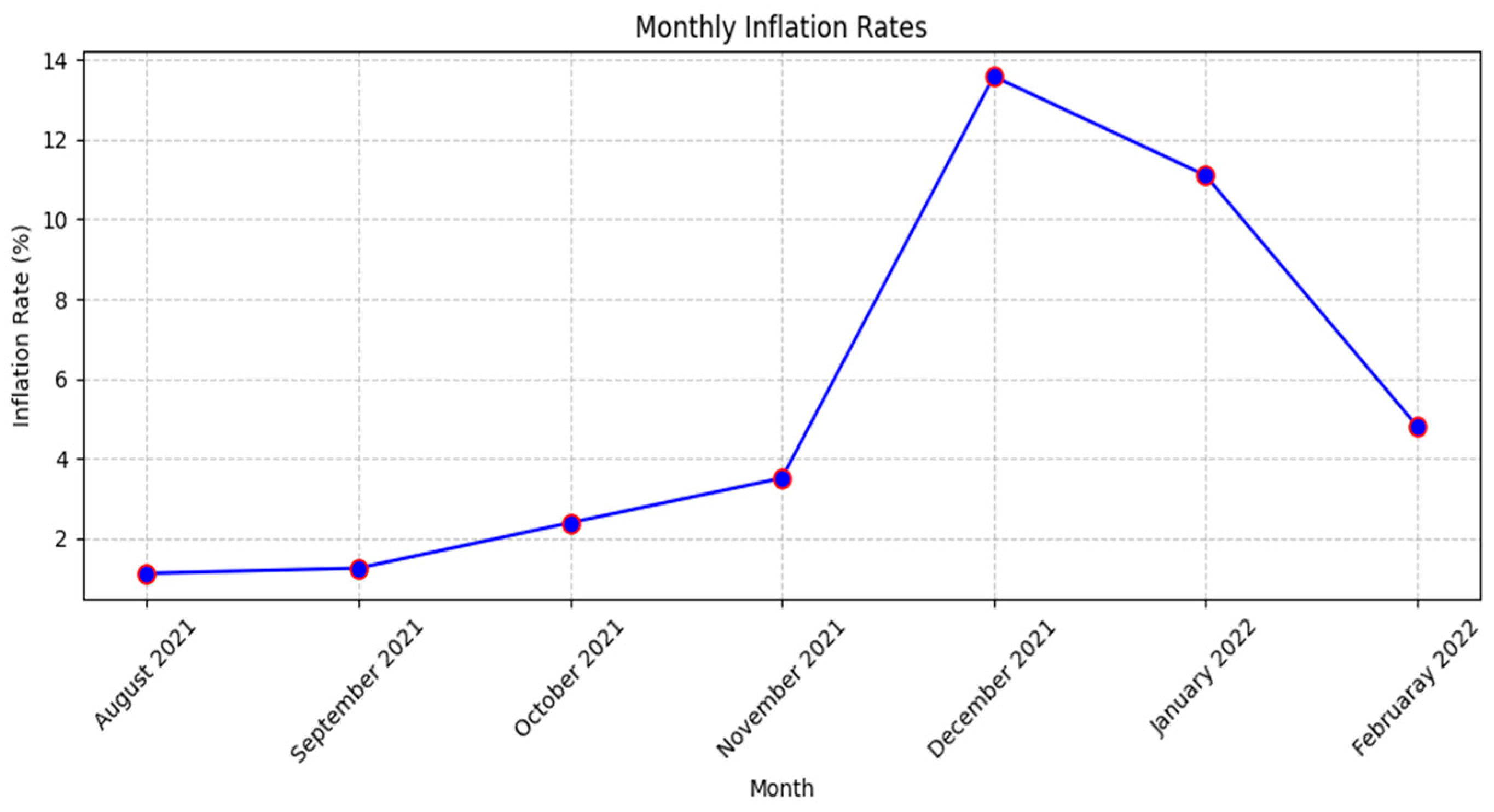

| Date | Inflation Rate (%) |
|---|---|
| 2021 August | 1.12 |
| 2021 September | 1.25 |
| 2021 October | 2.39 |
| 2021 November | 3.51 |
| 2021 December | 13.58 |
| 2022 January | 11.10 |
| 2022 February | 4.81 |

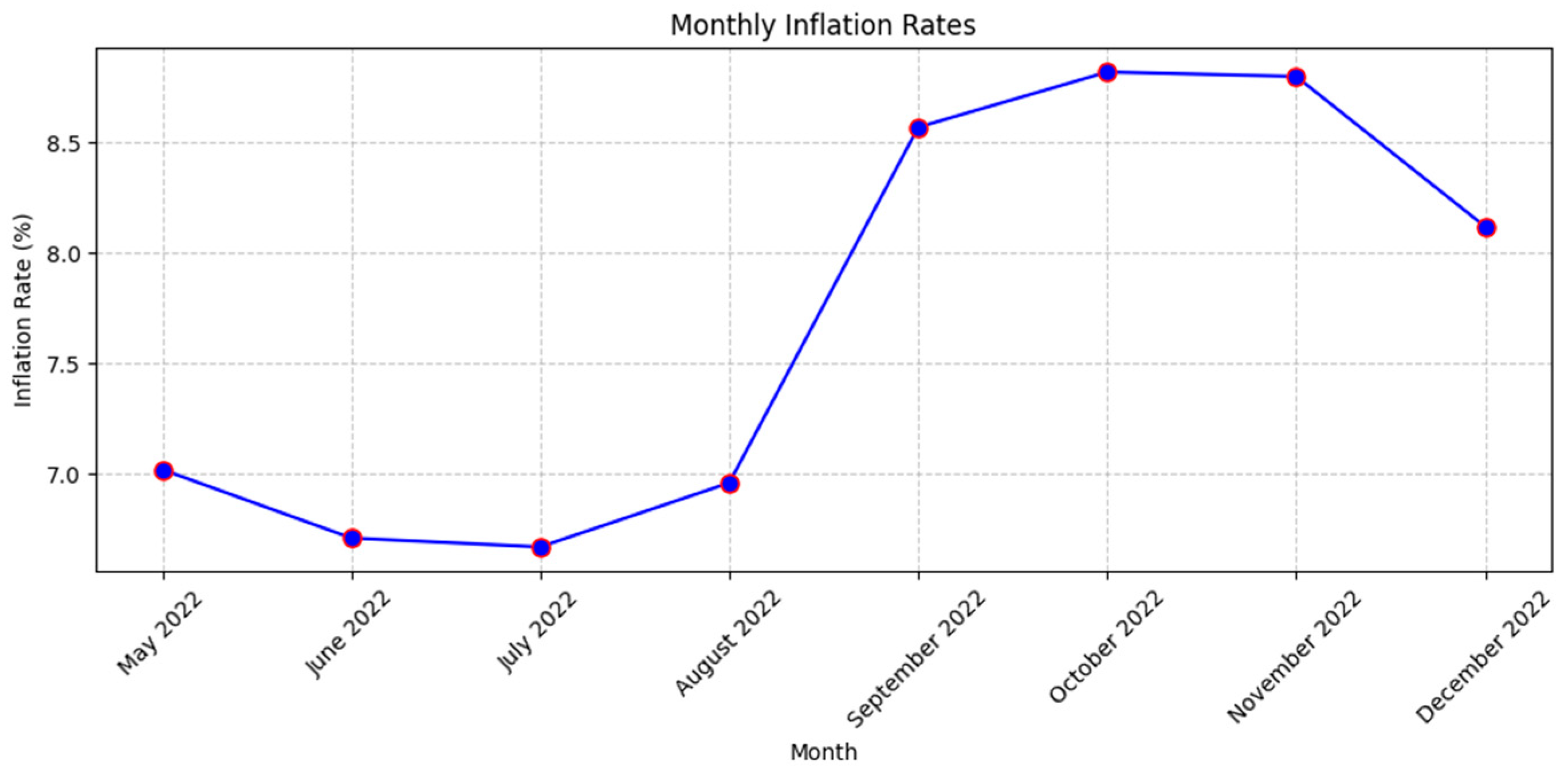

| Date | Inflation Rate (%) |
|---|---|
| 2022 May | 7.02 |
| 2022 June | 6.71 |
| 2022 July | 6.67 |
| 2022 August | 6.69 |
| 2022 September | 8.57 |
| 2022 October | 8.82 |
| 2022 November | 8.80 |
| 2022 December | 8.12 |

| Model | Text Type | Correlation |
|---|---|---|
| BERT | english_text | 0.7773809409530984 |
| BERT | lemmatized_text | 0.7757496734223899 |
| BERT | stemmed_text | 0.7726356949494878 |
| GloVe | english_text | 0.7770495657907177 |
| GloVe | lemmatized_text | 0.7771488080800077 |
| GloVe | stemmed_text | 0.7688405897606074 |
| Word2Vec | english_text | 0.7729892929417653 |
| Word2Vec | lemmatized_text | 0.4389700146889638 |
| Word2Vec | stemmed_text | 0.7309490371841797 |

| Model | Text Type | Correlation |
|---|---|---|
| BERT | english_text | 0.6799395942765429 |
| BERT | lemmatized_text | 0.8033144229669527 |
| BERT | stemmed_text | 0.8012420137842662 |
| GloVe | english_text | 0.6937973380871284 |
| GloVe | lemmatized_text | 0.5154347580040074 |
| GloVe | stemmed_text | 0.4173783860657952 |
| Word2Vec | english_text | 0.4882908537947667 |
| Word2Vec | lemmatized_text | 0.4483222620155073 |
| Word2Vec | stemmed_text | 0.5128112255327603 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kotan, K.; Kırışoğlu, S. Sustainable Economic Development Through Crisis Detection Using AI Techniques. Sustainability 2025, 17, 1536. https://doi.org/10.3390/su17041536
