Detection of Defective Solar Panel Cells in Electroluminescence Images with Deep Learning

Abstract

1. Introduction

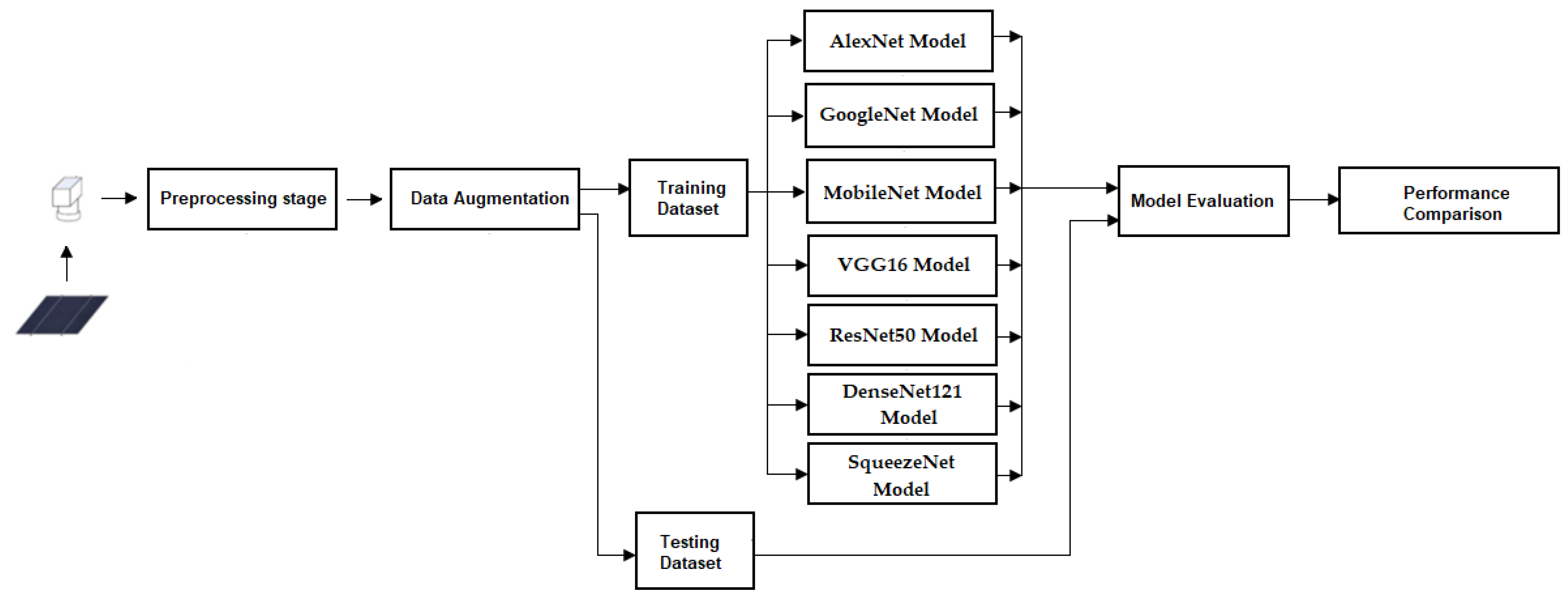

2. Materials and Methods

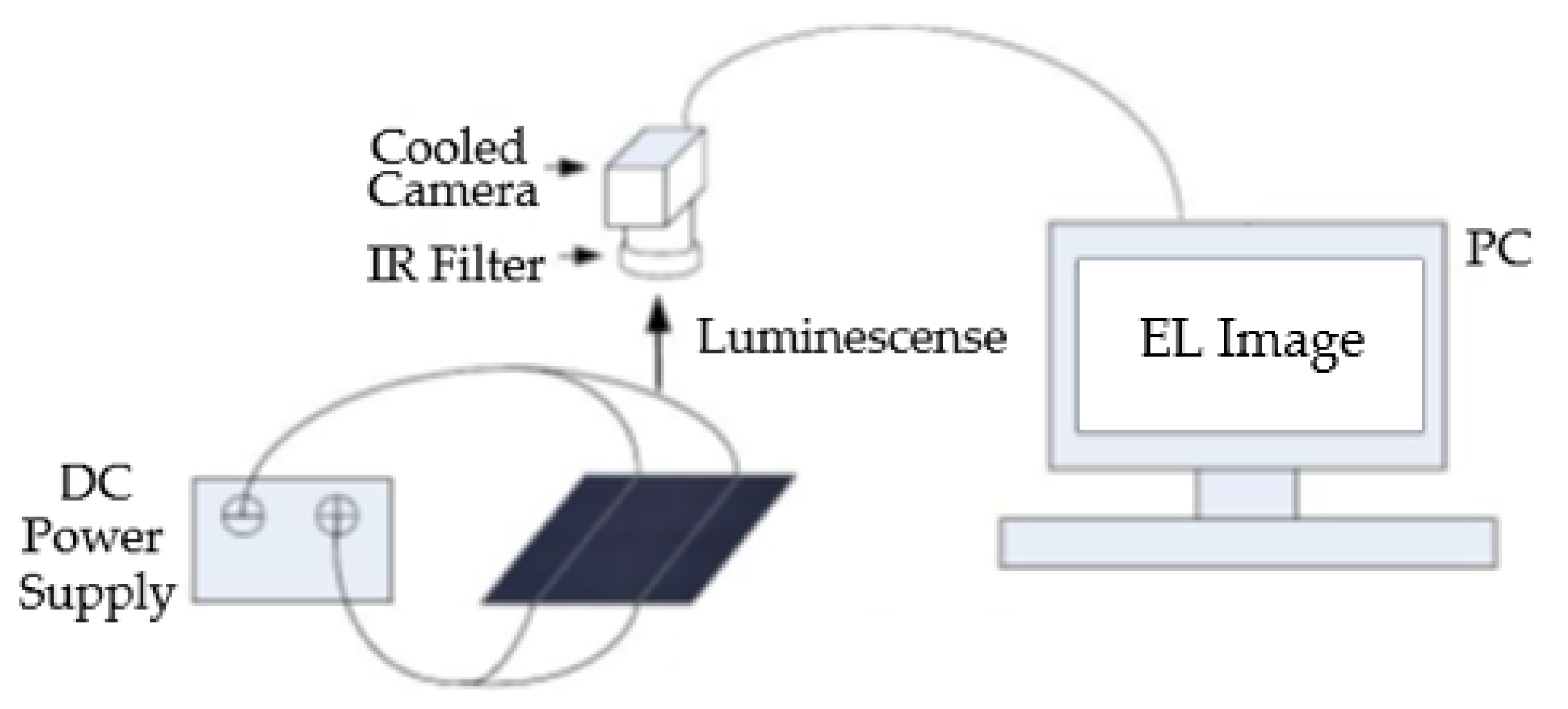

2.1. Electroluminescence

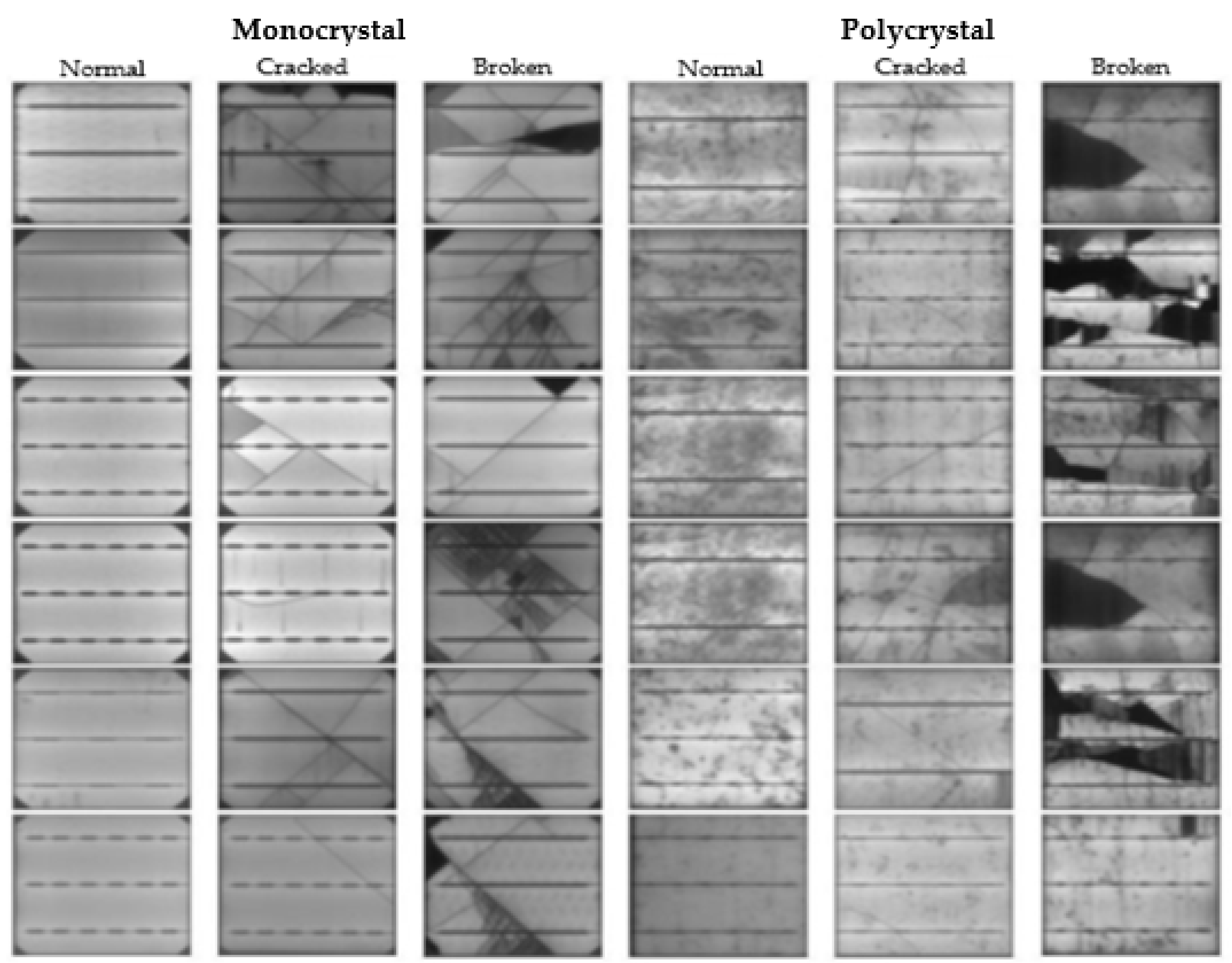

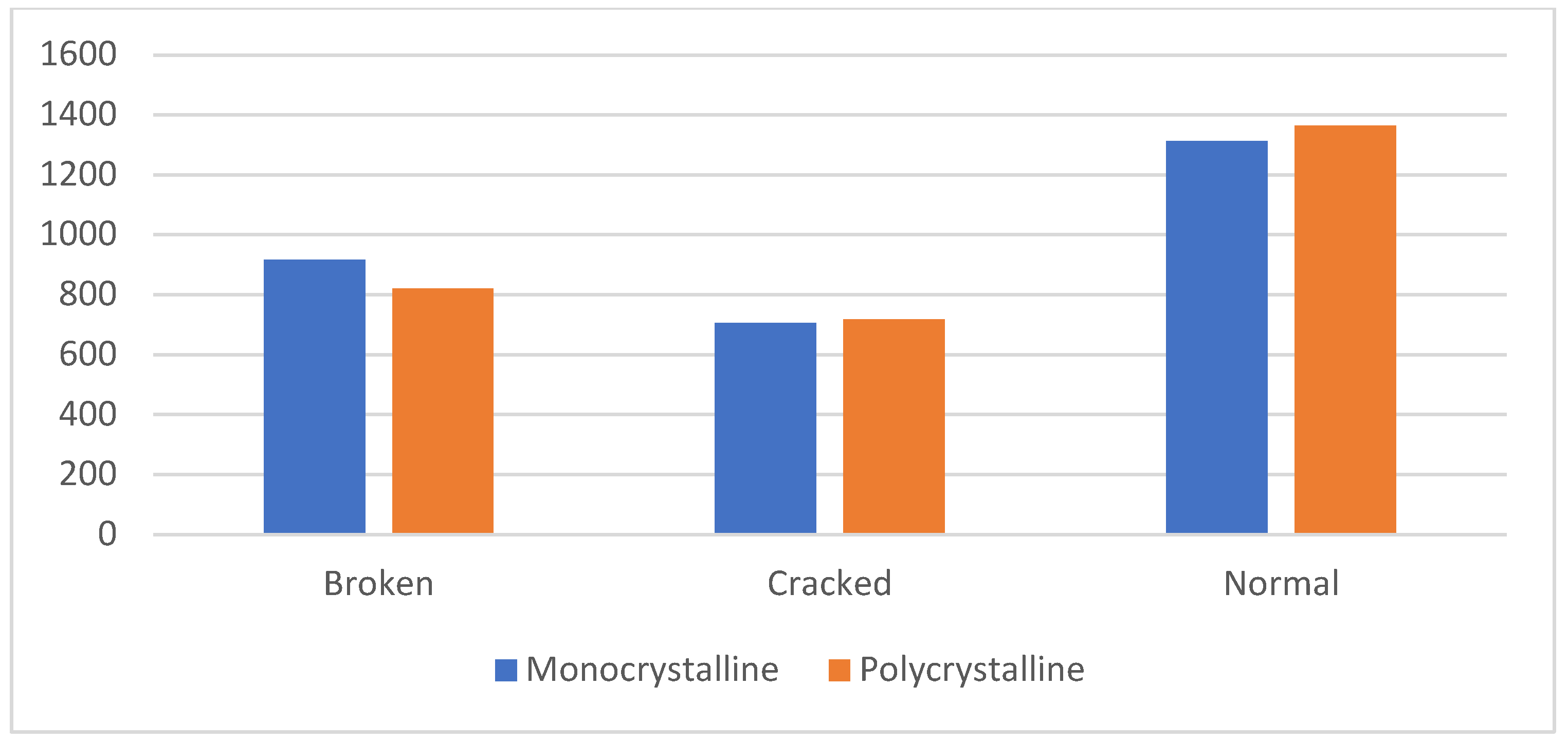

2.2. Dataset

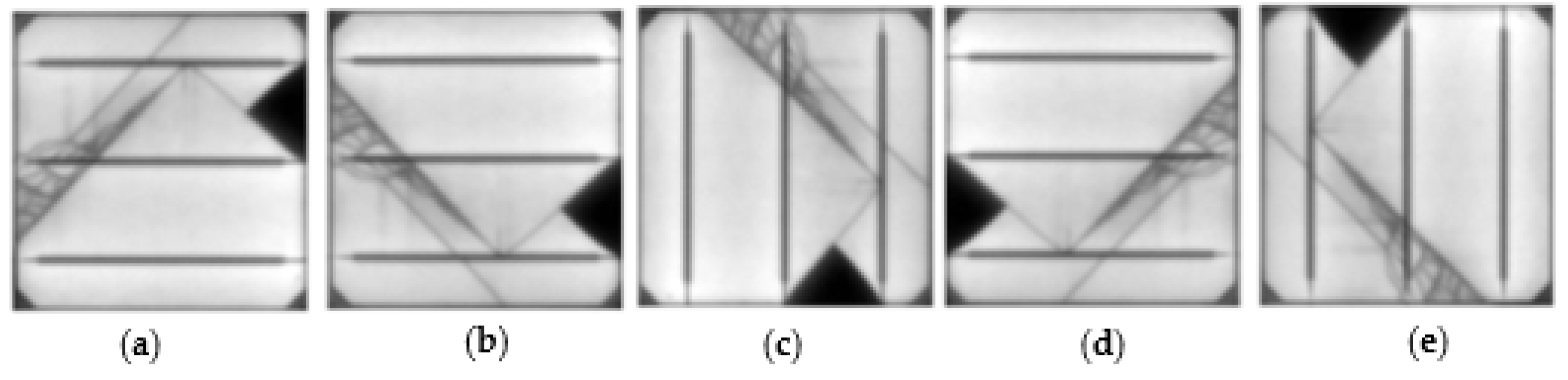

2.3. Data Replication

2.4. Deep Learning

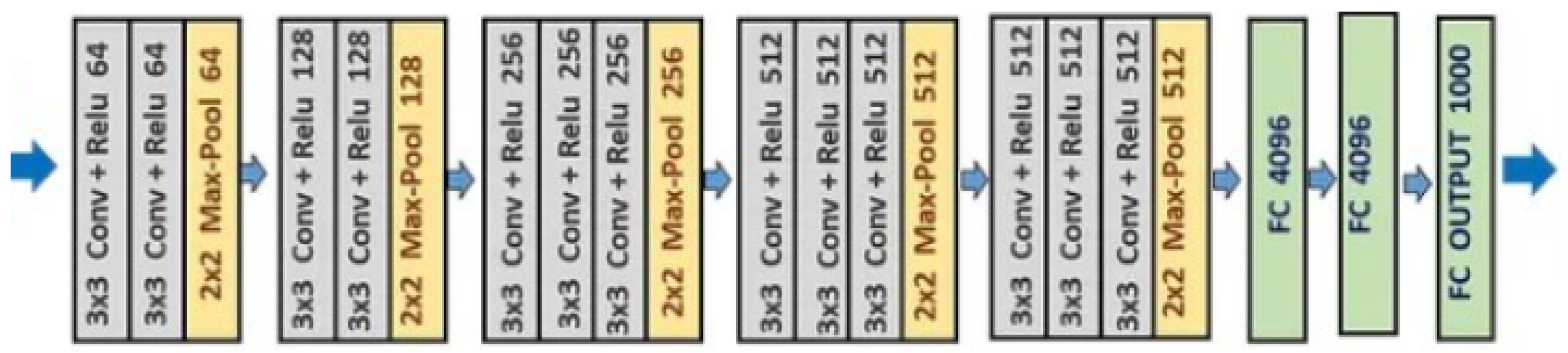

2.4.1. VGG16 Architecture

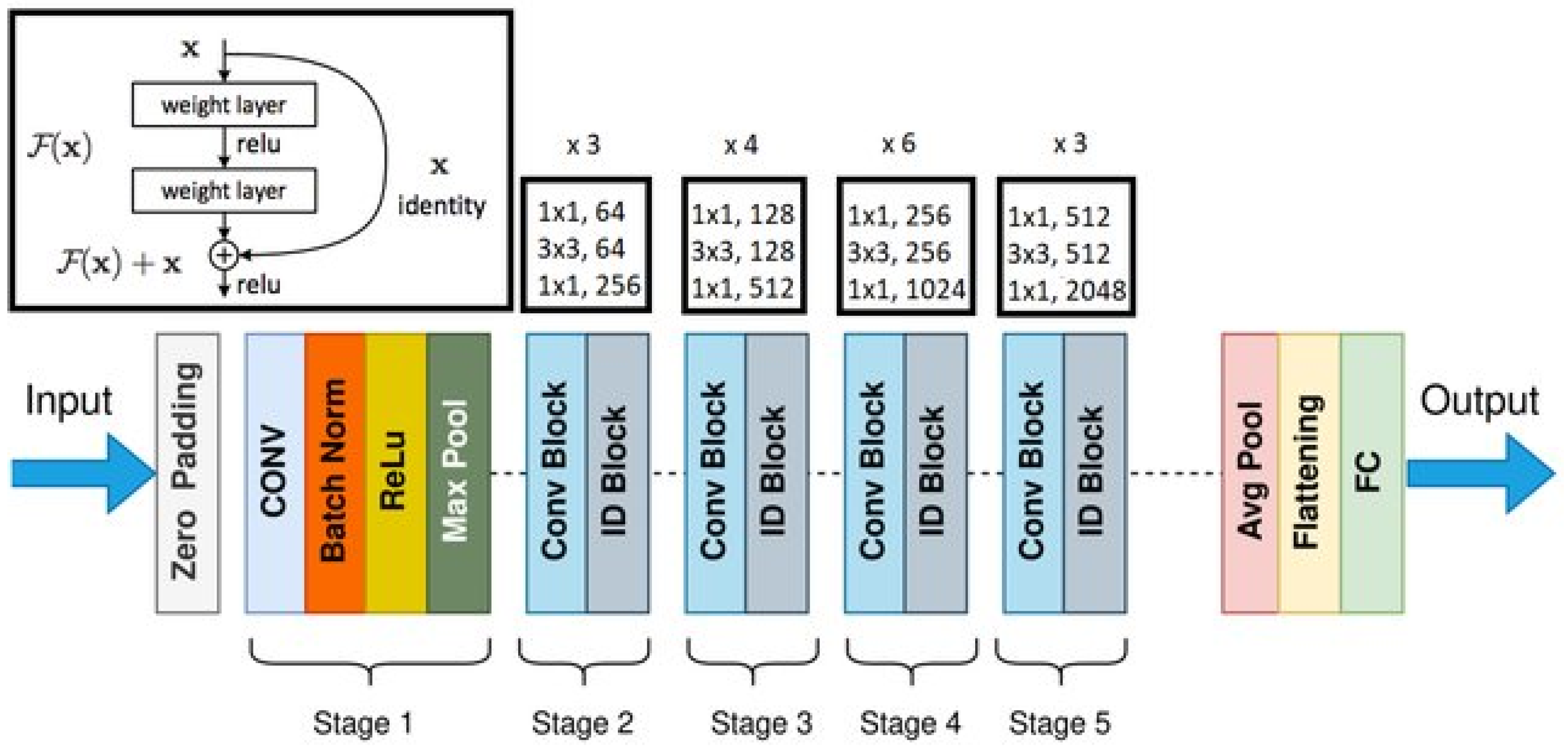

2.4.2. ResNet50 Architecture

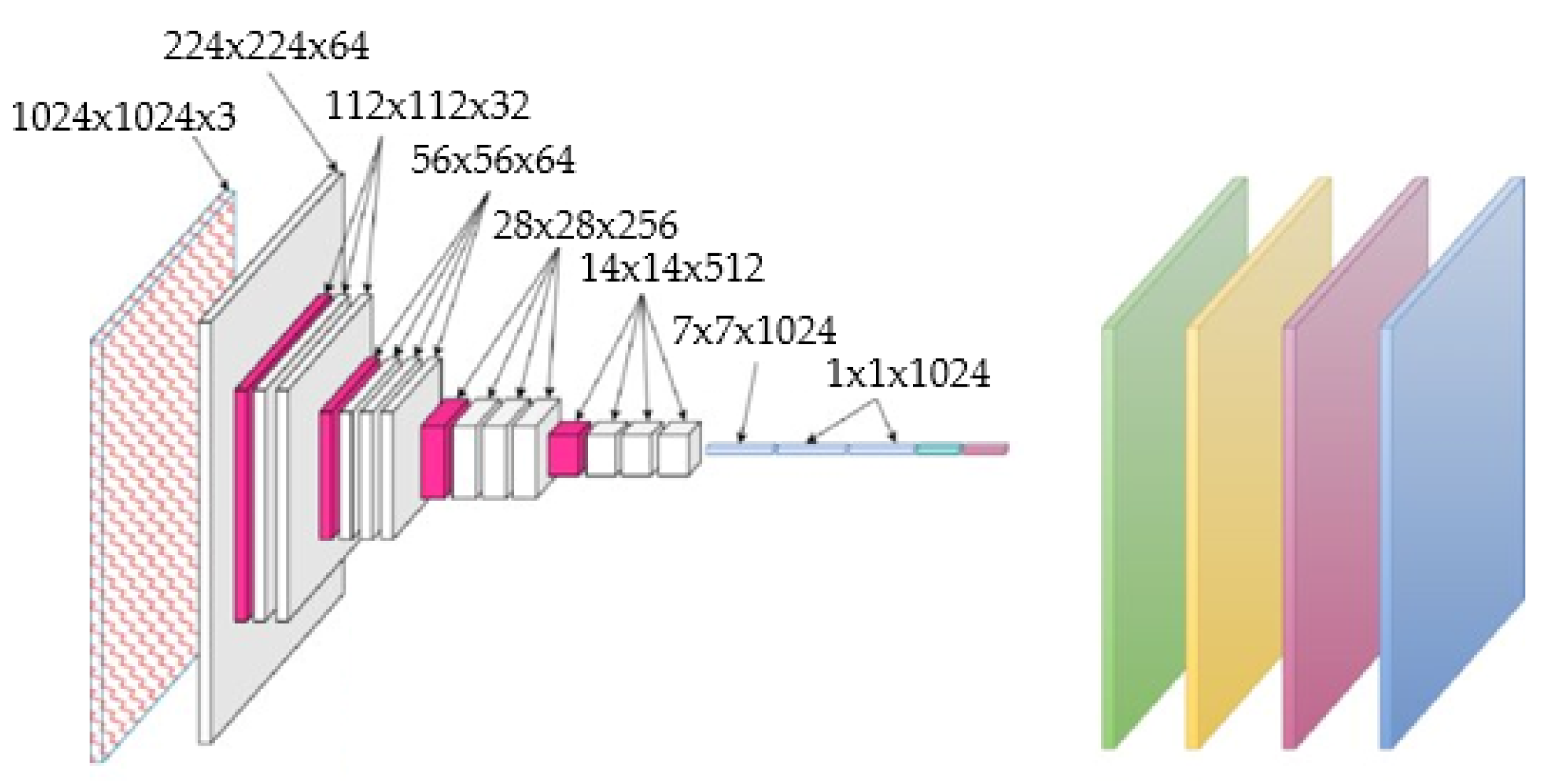

2.4.3. MobileNet Architecture

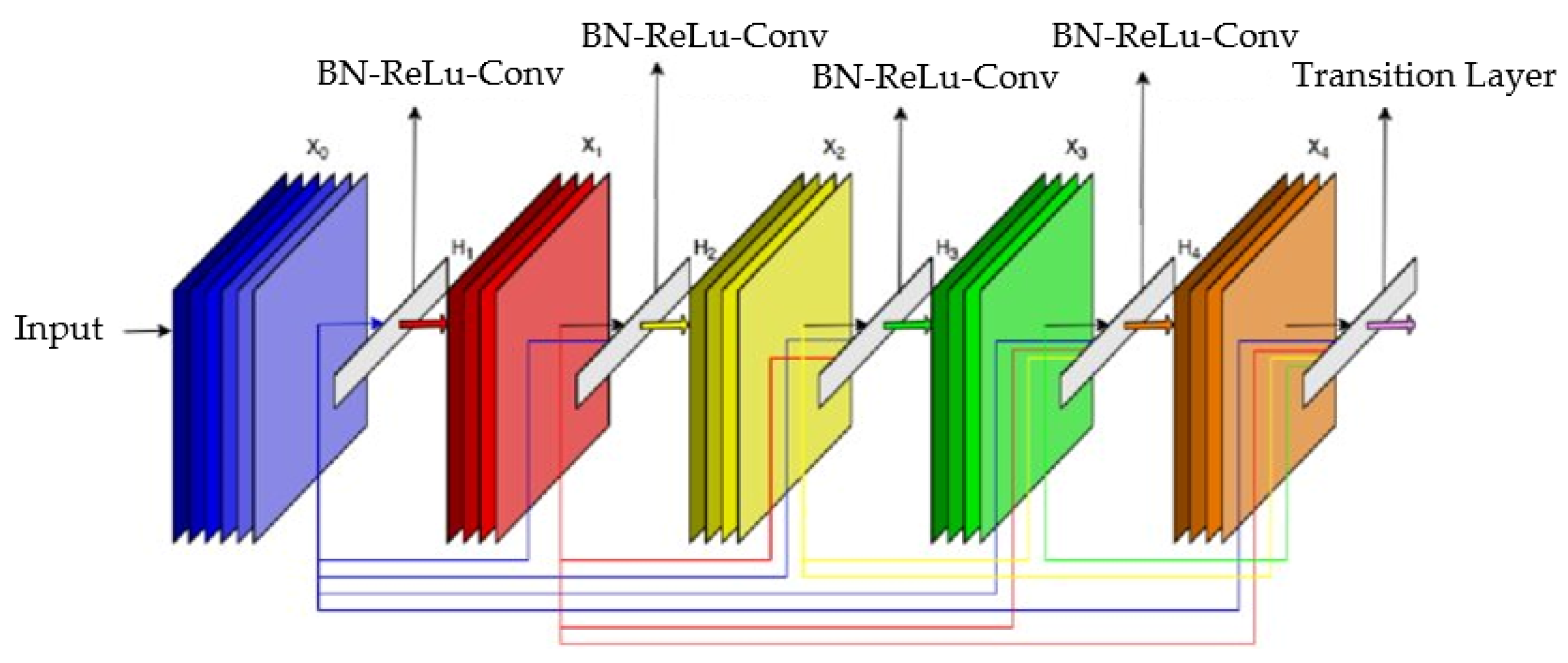

2.4.4. DenseNet121 Architecture

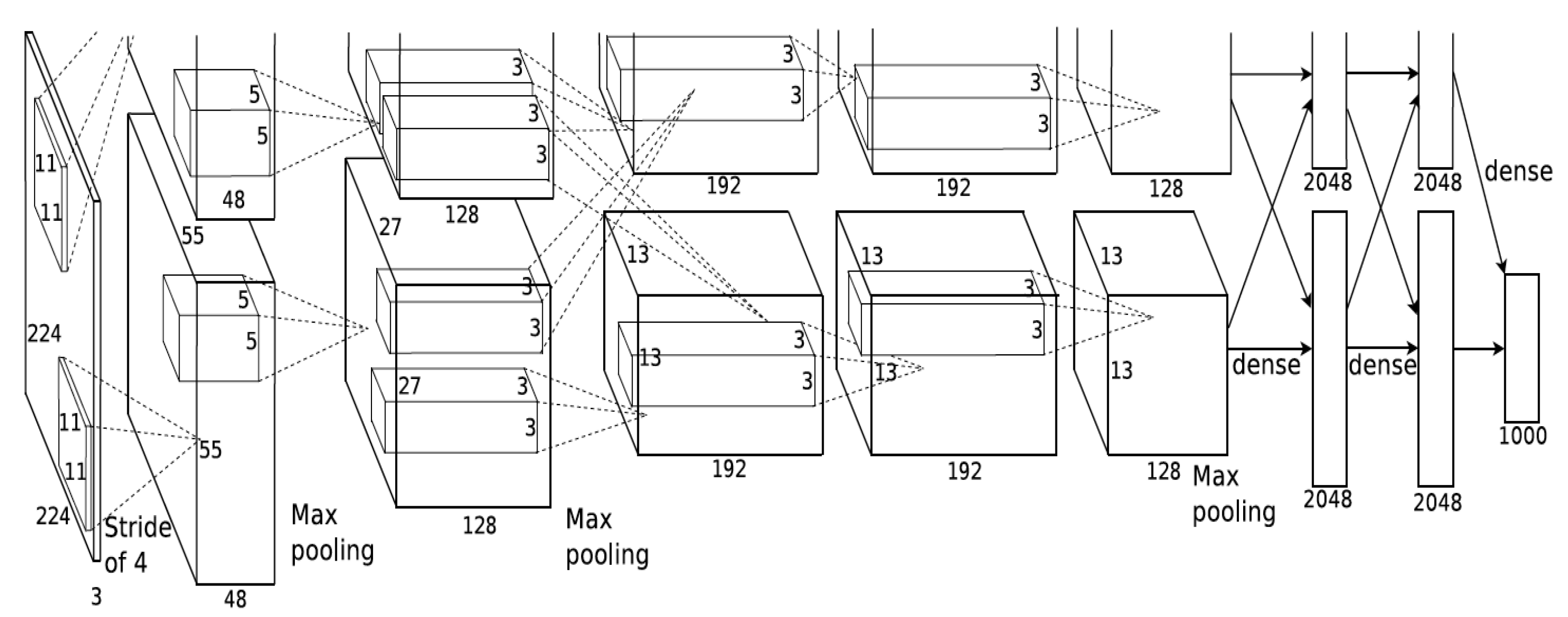

2.4.5. AlexNet Architecture

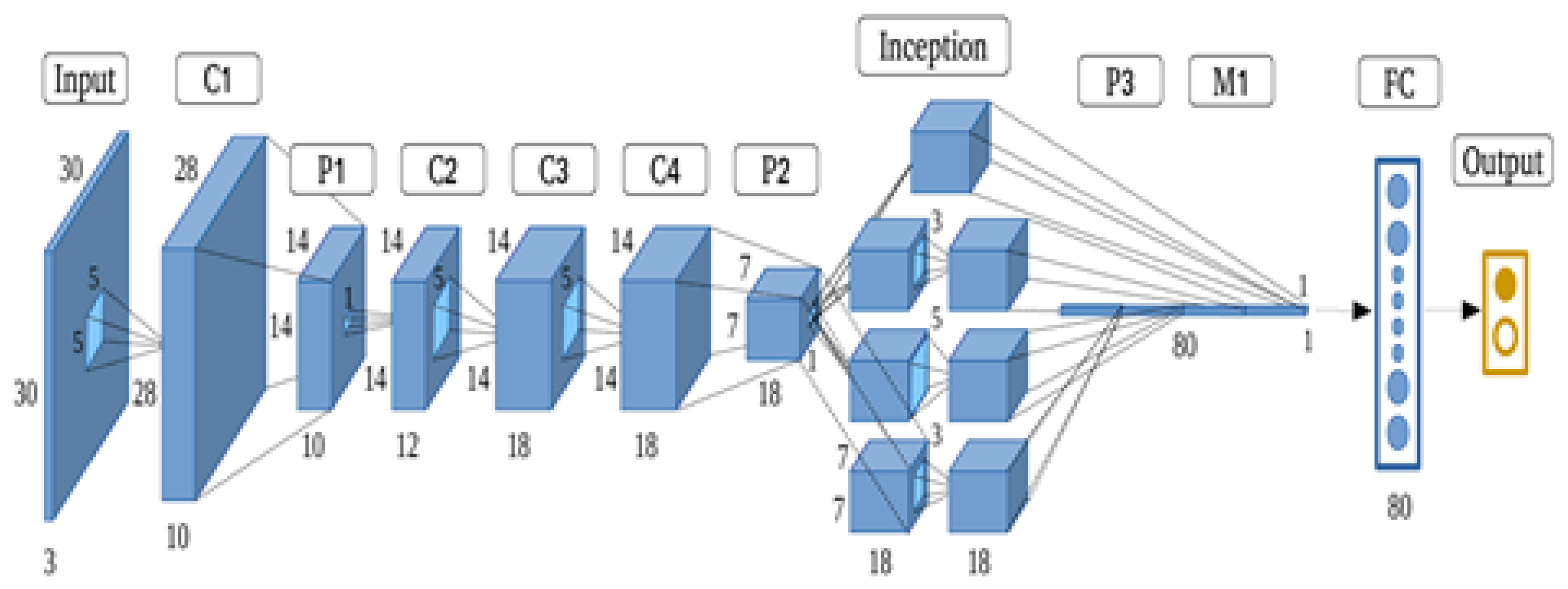

2.4.6. GoogleNet Architecture

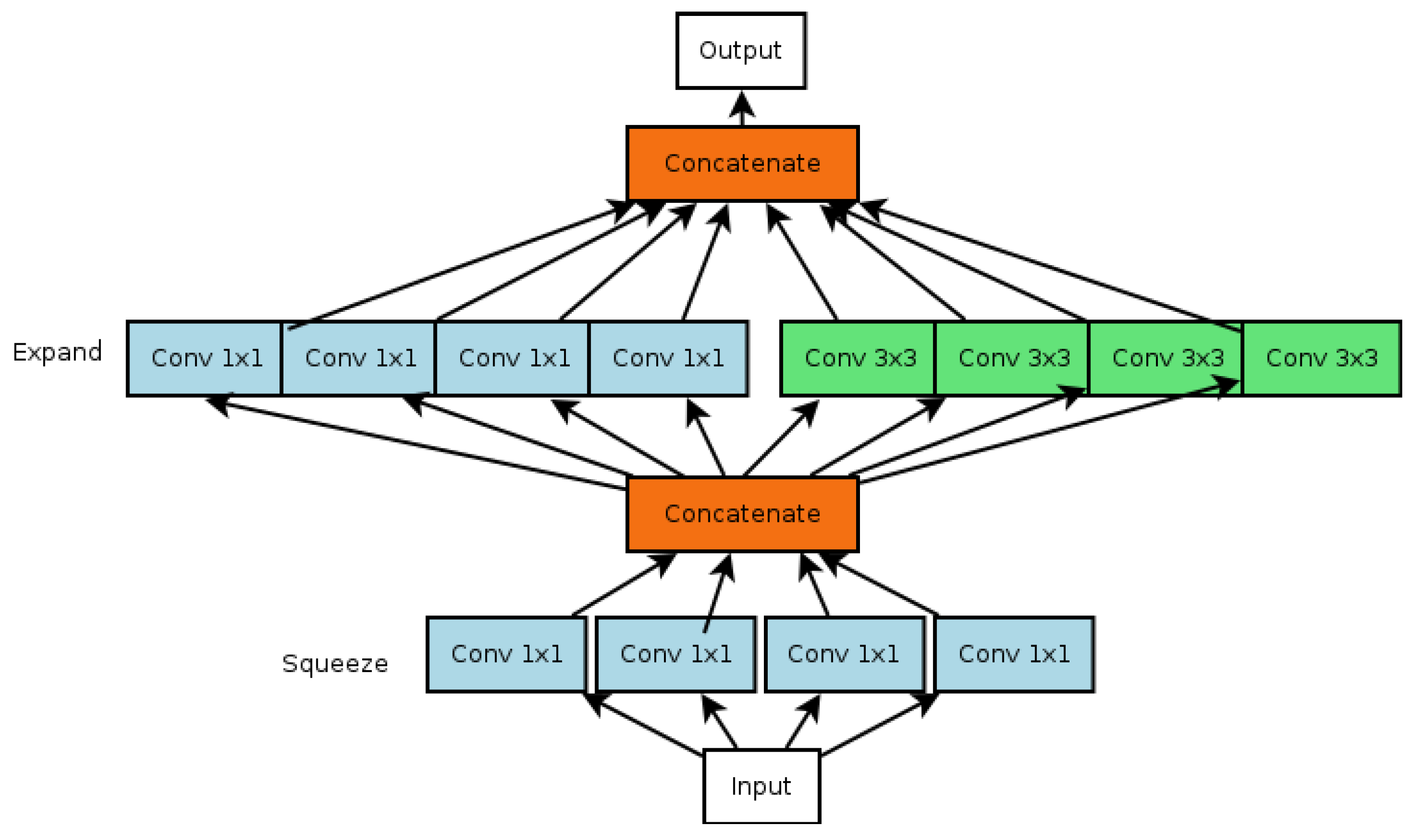

2.4.7. SqueezeNet Architecture

3. Results and Discussion

4. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Demirci, M.Y.; Beşli, N.; Gümüşçü, A. Efficient deep feature extraction and classification for identifying defective photovoltaic module cells in electroluminescence images. Expert Syst. Appl. 2021, 175, 114810.

2. Buerhop-Lutz, C.; Deitsch, S.; Maier, A.; Gallwitz, F.; Berger, S.; Doll, B.; Brabec, C.J. A benchmark for visual identification of defective solar cells in electroluminescence imagery. In Proceedings of the 35th European PV Solar Energy Conference and Exhibition, Brussels, Belgium, 24–28 September 2018.

3. Deitsch, S.; Christlein, V.; Berger, S.; Buerhop-Lutz, C.; Maier, A.; Gallwitz, F.; Riess, C. Automatic classification of defective photovoltaic module cells in electroluminescence images. Sol. Energy 2019, 185, 455–468.

4. Chen, H.; Zhao, H.; Han, D.; Liu, K. Accurate and robust crack detection using steerable evidence filtering in electroluminescence images of solar cells. Opt. Lasers Eng. 2019, 118, 22–33.

5. Ali, M.U.; Khan, H.F.; Masud, M.; Kallu, K.D.; Zafar, A. A machine learning framework to identify the hotspot in photovoltaic module using infrared thermography. Sol. Energy 2020, 208, 643–651.

6. Pratt, L.; Govender, D.; Klein, R. Defect detection and quantification in electroluminescence images of solar PV modules using U-net semantic segmentation. Renew. Energy 2021, 178, 1211–1222.

7. Naveen Venkatesh, S.; Sugumaran, V. Machine vision based fault diagnosis of photovoltaic modules using lazy learning approach. Meas. J. Int. Meas. Confed. 2022, 191, 110786.

8. Moradi Sizkouhi, A.; Aghaei, M.; Esmailifar, S.M. A deep convolutional encoder-decoder architecture for autonomous fault detection of PV plants using multi-copters. Sol. Energy 2021, 223, 217–228.

9. Chindarkkar, A.; Priyadarshi, S.; Shiradkar, N.S.; Kottantharayil, A.; Velmurugan, R. Deep Learning Based Detection of Cracks in Electroluminescence Images of Fielded PV Modules. In Proceedings of the 2020 47th IEEE Photovoltaic Specialists Conference, Calgary, AB, Canada, 15 June–21 August 2020; pp. 1612–1616.

10. Haidari, P.; Hajiahmad, A.; Jafari, A.; Nasiri, A. Deep learning-based model for fault classification in solar modules using infrared images. Sustain. Energy Technol. Assess. 2022, 52, 102110.

11. Rico Espinosa, A.; Bressan, M.; Giraldo, L.F. Failure signature classification in solar photovoltaic plants using RGB images and convolutional neural networks. Renew. Energy 2020, 162, 249–256.

12. Su, B.; Chen, H.; Liu, K.; Liu, W. RCAG-Net: Residual Channelwise Attention Gate Network for Hot Spot Defect Detection of Photovoltaic Farms. IEEE Trans. Instrum. Meas. 2021, 70, 3510514.

13. Fuyuki, T.; Kondo, H.; Kaji, Y.; Yamazaki, T.; Takahashi, Y.; Uraoka, Y. One shot mapping of minority carrier diffusion length in polycrystalline silicon solar cells using electroluminescence. Sol. Energy 2005, 1343–1345.

14. Breitenstein, O.; Bauer, J.; Bothe, K.; Hinken, D.; Müller, J.; Kwapil, W.; Schubert, M.C. Can Luminescence Imaging Replace Lock-In Thermography on Solar Cells and Wafers? In Proceedings of the 37th IEEE Photovoltaic Specialists Conference, Seattle, WA, USA, 19–24 June 2011; pp. 159–167.

15. Qian, X.; Li, J.; Cao, J.; Wu, Y.; Wang, W. Micro-cracks detection of solar cells surface via combining short-term and long-term deep features. Neural Netw. 2020, 127, 132–140.

16. Chen, H.; Pang, Y.; Hu, Q.; Liu, K. Solar cell surface defect inspection based on multispectral convolutional neural network. J. Intell. Manuf. 2020, 31, 453–468.

17. Gallardo-Saavedra, S.; Hernández-Callejo, L.; del Carmen Alonso-García, M.; Santos, J.D.; Morales-Aragonés, J.I.; Alonso-Gómez, V.; Moretón-Fernández, Á.; González-Rebollo, M.Á.; Martínez-Sacristán, O. Nondestructive characterization of solar PV cells defects by means of electroluminescence, infrared thermography, I–V curves and visual tests: Experimental study and comparison. Energy 2020, 205, 117930.

18. Mathias, N.; Shaikh, F.; Thakur, C.; Shetty, S.; Dumane, P.; Chavan, D. Detection of Micro-Cracks in Electroluminescence Images of Photovoltaic Modules. In Proceedings of the 3rd International Conference on Advances in Science & Technology (ICAST), Bahir Dar, Ethiopia, 8–10 May 2020; pp. 342–347.

19. Fan, T.; Sun, T.; Xie, X.; Liu, H.; Na, Z. Automatic Micro-Crack Detection of Polycrystalline Solar Cells in Industrial Scene. IEEE Access 2022, 10, 16269–16282.

20. Rahman, M.R.; Tabassum, S.; Haque, E.; Nishat, M.M.; Faisal, F.; Hossain, E. CNN-based Deep Learning Approach for Micro-crack Detection of Solar Panels. In Proceedings of the 2021 3rd International Conference on Sustainable Technologies for Industry 4.0 (STI), Dhaka, Bangladesh, 18–19 December 2021; pp. 1–6.

21. Grunow, P.; Clemens, P.; Hoffmann, V.; Litzenburger, B.; Podlowski, L. Influence of Micro Cracks in Multi-Crystalline Silicon Solar Cells on the Reliability of PV Modules. In Proceedings of the 20th European Photovoltaic Solar Energy Conference, Barcelona, Spain, 6–10 June 2005; pp. 2380–2383.

22. Köntges, M.; Kurtz, S.; Packard, C.; Jahn, U.; Berger, K.A.; Kato, K.; Friesen, T. Review of Failures of Photovoltaic Modules; International Energy Agency (IEA): Paris, France, 2014.

23. Bothe, K.; Pohl, P.; Schmidt, J.; Weber, T.; Altermatt, P.; Fischer, B.; Brendel, R. Electroluminescence Imaging as an In-Line Characterisation Tool for Solar Cell Production. In Proceedings of the 21st European Photovoltaic Solar Energy Conference, Dresden, Germany, 4–8 September 2006; pp. 597–600.

24. Tsai, D.M.; Wu, S.C.; Li, W.C. Defect detection of solar cells in electroluminescence images using Fourier image reconstruction. Sol. Energy Mater. Sol. Cells 2012, 99, 250–262.

25. Denio, H. Aerial solar thermography and condition monitoring of photovoltaic systems. In Proceedings of the 2012 38th IEEE Photovoltaic Specialists Conference, Austin, TX, USA, 3–8 June 2012.

26. Kasemann, M.; Kwapil, W.; Walter, B.; Giesecke, J.; Michl, B.; The, M.; Glunz, S.W. Progress in silicon solar cell characterization with infrared imaging methods. In Proceedings of the 23rd European Photovoltaic Solar Energy Conference, Valencia, Spain, 1–5 September 2008; pp. 965–973.

27. Ge, C.; Liu, Z.; Fang, L.; Ling, H.; Zhang, A.; Yin, C. A hybrid fuzzy convolutional neural network based mechanism for photovoltaic cell defect detection with electroluminescence images. IEEE Trans. Parallel Distrib. Syst. 2021, 32, 1653–1664.

28. Wang, J.; Bi, L.; Sun, P.; Jiao, X.; Ma, X.; Lei, X.; Luo, Y. Deep-learning-based automatic detection of photovoltaic cell defects in electroluminescence images. Sensors 2023, 23, 297.

29. Acikgoz, H.; Korkmaz, D.; Budak, U. Photovoltaic cell defect classification based on integration of residual-inception network and spatial pyramid pooling in electroluminescence images. Expert Syst. Appl. 2023, 229, 120546.

30. Munawer Al-Otum, H. Deep learning-based automated defect classification in electroluminescence images of solar panels. Adv. Eng. Inform. 2023, 58, 102147.

31. Xie, X.; Lai, G.; You, M.; Liang, J.; Leng, B. Effective transfer learning of defect detection for photovoltaic module cells in electroluminescence images. Sol. Energy 2023, 250, 312–323.

32. Korovin, A.; Vasilev, A.; Egorov, F.; Saykin, D.; Terukov, E.; Shakhray, I.; Zhukov, L.; Budennyy, S. Anomaly detection in electroluminescence images of heterojunction solar cells. Sol. Energy 2023, 259, 130–136.

33. Tang, W.; Yang, Q.; Xiong, K.; Yan, W. Deep learning based automatic defect identification of photovoltaic module using electroluminescence images. Sol. Energy 2020, 201, 453–460.

34. Et-taleby, A.; Chaibi, Y.; Allouhi, A.; Boussetta, M.; Benslimane, M. A combined convolutional neural network model and support vector machine technique for fault detection and classification based on electroluminescence images of photovoltaic modules. Sustain. Energy Grids Netw. 2022, 32, 100946.

35. Karimi, A.M.; Fada, J.S.; Hossain, M.A.; Yang, S.; Peshek, T.J.; Braid, J.L.; French, R.H. Automated pipeline for photovoltaic module electroluminescence image processing and degradation feature classification. IEEE J. Photovolt. 2019, 9, 1324–1335.

36. Chen, X.; Karin, T.; Jain, A. Automated defect identification in electroluminescence images of solar modules. Sol. Energy 2022, 242, 20–29.

37. Zhang, X.; Hao, Y.; Shangguan, H.; Zhang, P.; Wang, A. Detection of surface defects on solar cells by fusing multi-channel convolution neural networks. Infrared Phys. Technol. 2020, 108, 103334.

38. Zhao, Y.; Zhan, K.; Wang, Z.; Shen, W. Deep learning-based automatic detection of multitype defects in photovoltaic modules and application in real production line. Prog. Photovolt. Res. Appl. 2021, 29, 471–484.

39. Fioresi, J.; Colvin, D.J.; Frota, R.; Gupta, R.; Li, M.; Seigneur, H.P.; Vyas, S.; Oliveira, S.; Shah, M.; Davis, K.O. Automated defect detection and localization in photovoltaic cells using semantic segmentation of electroluminescence images. IEEE J. Photovolt. 2022, 12, 53–61.

40. Akram, M.W.; Li, G.; Jin, Y.; Chen, X.; Zhu, C.; Zhao, X.; Khaliq, A.; Faheem, M.; Ahmad, A. CNN based automatic detection of photovoltaic cell defects in electroluminescence images. Energy 2019, 189, 116319.

41. Zhao, X.; Song, C.; Zhang, H.; Sun, X.; Zhao, J. HRNet-based automatic identification of photovoltaic module defects using electroluminescence images. Energy 2023, 267, 126605.

42. Wang, H.; Chen, H.; Wang, B.; Jin, Y.; Li, G.; Kan, Y. High-efficiency low-power microdefect detection in photovoltaic cells via a field programmable gate array-accelerated dual-flow network. Appl. Energy 2022, 318, 119203.

| Class | Types of Solar Panels | Accuracy | Precision | Recall | F1-Score |

|---|---|---|---|---|---|

| AlexNet | Monocrystalline | 86.89 | 81.05 | 86.53 | 82.99 |

| Polycrystalline | 85.26 | 79.96 | 84.16 | 80.69 | |

| GoogleNet | Monocrystalline | 87.25 | 82.13 | 87.02 | 84.08 |

| Polycrystalline | 86.78 | 81.01 | 85.96 | 83.13 | |

| MobileNet | Monocrystalline | 88.01 | 84.07 | 87.25 | 84.93 |

| Polycrystalline | 87.23 | 82.05 | 85.78 | 83.36 | |

| VGG16 | Monocrystalline | 91.26 | 86.48 | 89.89 | 86.15 |

| Polycrystalline | 90.01 | 85.29 | 87.47 | 85.19 | |

| ResNet50 | Monocrystalline | 93.48 | 87.63 | 90.37 | 87.81 |

| Polycrystalline | 91.25 | 87.08 | 89.45 | 86.89 | |

| DenseNet121 | Monocrystalline | 96.17 | 89.42 | 91.48 | 91.89 |

| Polycrystalline | 96.01 | 89.27 | 90.82 | 90.12 | |

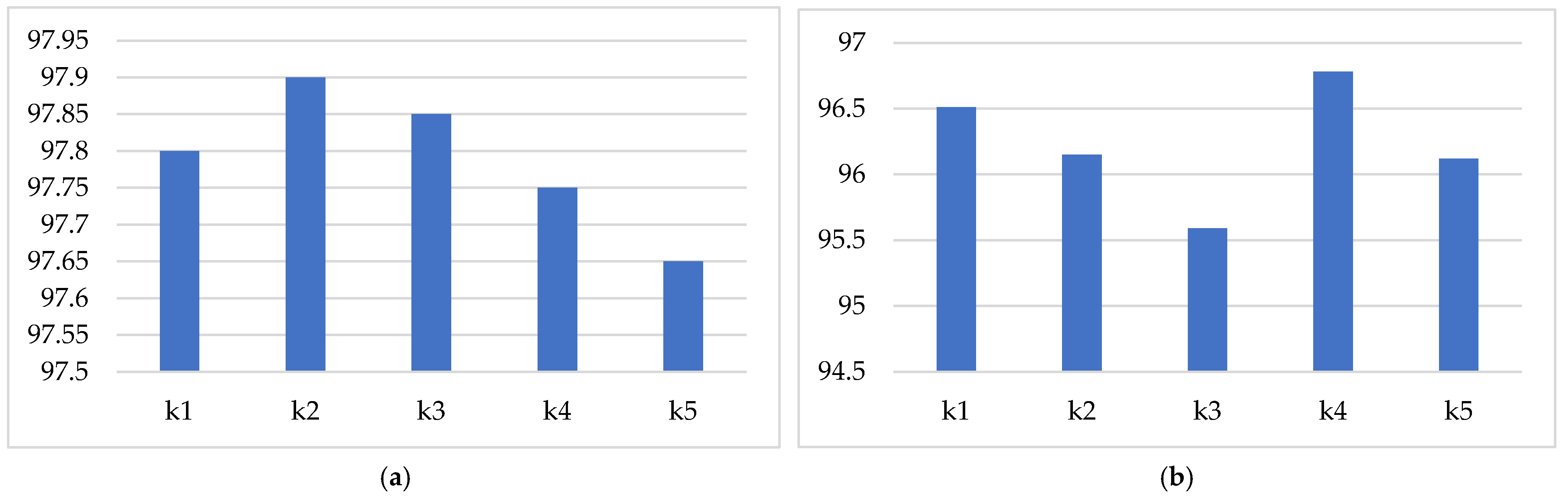

| SqueezeNet | Monocrystalline | 97.82 | 91.75 | 95.81 | 95.39 |

| Polycrystalline | 96.42 | 90.82 | 94.72 | 94.57 |
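The four metric columns above are standard classification scores. As a minimal sketch of how they can be derived from a multi-class confusion matrix, the snippet below computes one-vs-rest accuracy, precision, recall and F1-score per class. The confusion-matrix counts and the helper name `per_class_metrics` are illustrative assumptions and are not data or code from this study; only the class names (Normal, Cracked, Broken) come from the results tables.

```python
from typing import Dict, List


def per_class_metrics(cm: List[List[int]], labels: List[str]) -> Dict[str, Dict[str, float]]:
    """Return one-vs-rest metrics (in %) for each class.

    cm[i][j] is the number of samples whose true class is i
    and whose predicted class is j.
    """
    total = sum(sum(row) for row in cm)
    results: Dict[str, Dict[str, float]] = {}
    for k, name in enumerate(labels):
        tp = cm[k][k]                          # class k predicted as k
        fn = sum(cm[k]) - tp                   # class k predicted as something else
        fp = sum(row[k] for row in cm) - tp    # other classes predicted as k
        tn = total - tp - fn - fp              # everything not involving class k
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        accuracy = (tp + tn) / total
        results[name] = {
            "accuracy": 100 * accuracy,
            "precision": 100 * precision,
            "recall": 100 * recall,
            "f1": 100 * f1,
        }
    return results


# Hypothetical counts for illustration only (100 test images per class).
labels = ["Normal", "Cracked", "Broken"]
cm = [
    [90, 6, 4],    # true Normal
    [5, 85, 10],   # true Cracked
    [3, 7, 90],    # true Broken
]

metrics = per_class_metrics(cm, labels)
for name in labels:
    print(name, {key: round(value, 2) for key, value in metrics[name].items()})
```

Because F1 is the harmonic mean of precision and recall, a per-class F1 computed this way always lies between that class's precision and recall.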

| Class | Types of Solar Panels | Accuracy | Precision | Recall | F1-Score |

|---|---|---|---|---|---|

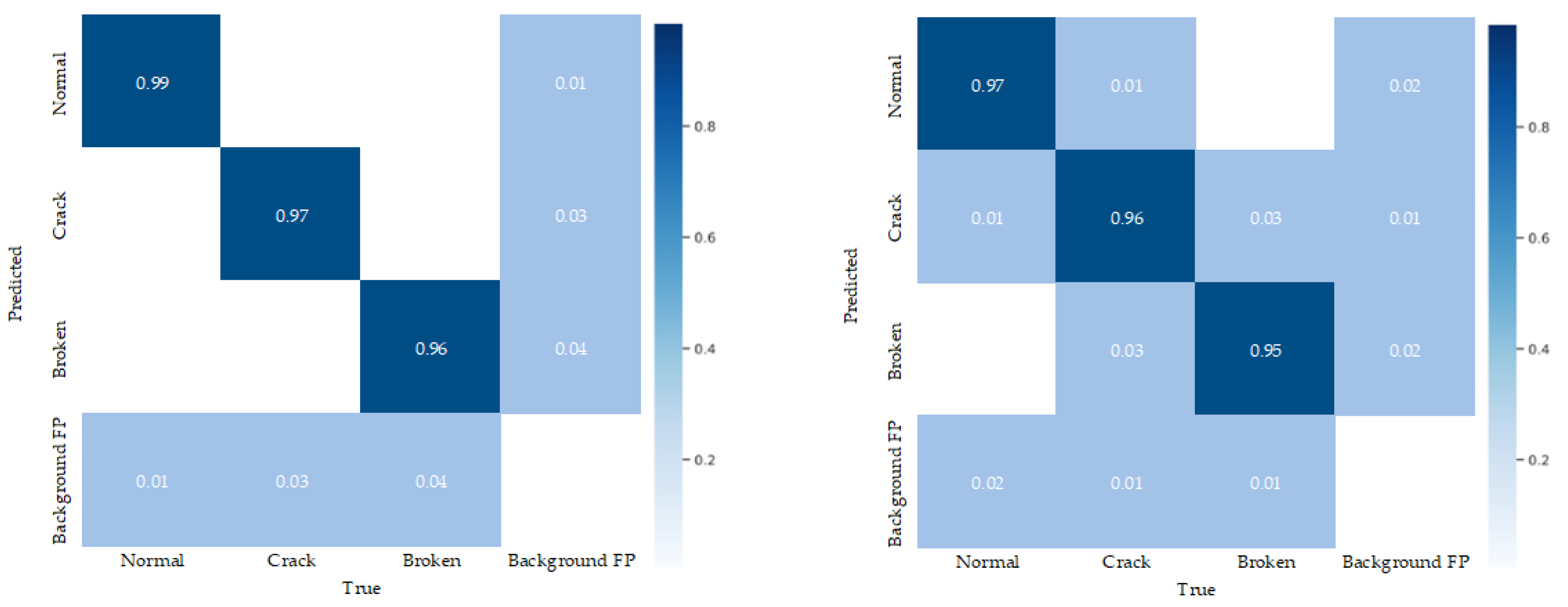

| Normal | Monocrystalline | 98.99 | 93.25 | 97.49 | 96.53 |

| Polycrystalline | 97.26 | 92.36 | 95.54 | 95.63 | |

| Cracked | Monocrystalline | 97.47 | 90.15 | 95.70 | 95.02 |

| Polycrystalline | 96.51 | 90.95 | 94.96 | 94.92 | |

| Broken | Monocrystalline | 96.99 | 91.60 | 94.24 | 94.61 |

| Polycrystalline | 95.26 | 89.15 | 93.66 | 92.16 | |

| Average | Monocrystalline | 97.82 | 91.75 | 95.81 | 95.39 |

| Polycrystalline | 96.42 | 90.82 | 94.72 | 94.57 |
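The Average rows summarize the three per-class rows. As a rough sketch, the snippet below takes the monocrystalline values from the table above and computes an unweighted (macro) mean per column. That the Average row was obtained this way is an assumption, and the helper name `macro_average` is hypothetical. The simple mean matches the reported accuracy, recall and F1-score to two decimals, while the precision column comes out as 91.67 rather than the reported 91.75, so the original averaging may have been weighted or rounded differently.

```python
from statistics import mean
from typing import Dict, Tuple

# Per-class monocrystalline rows copied from the table above:
# (accuracy, precision, recall, F1-score), all in %.
mono: Dict[str, Tuple[float, float, float, float]] = {
    "Normal": (98.99, 93.25, 97.49, 96.53),
    "Cracked": (97.47, 90.15, 95.70, 95.02),
    "Broken": (96.99, 91.60, 94.24, 94.61),
}


def macro_average(rows: Dict[str, Tuple[float, ...]]) -> Tuple[float, ...]:
    """Unweighted mean of each metric column, rounded to two decimals."""
    columns = zip(*rows.values())  # regroup the values column by column
    return tuple(round(mean(column), 2) for column in columns)


avg = macro_average(mono)
print(dict(zip(("accuracy", "precision", "recall", "f1"), avg)))
```

A support-weighted mean would need the number of test images per class, which is not given in the table.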

| Authors | Architecture | Accuracy (%) |
|---|---|---|
| Kasemann et al. [26] | CNN | 93.02 |
| Buerhop-Lutz et al. [2] | VGG19 | 88.42 |
| Ge et al. [27] | Fuzzy-CNN | 88.35 |
| Deitsch et al. [3] | SeF-HRNet | 94.90 |
| Wang et al. [28] | ResNet152 | 92.13 |
| Açikgöz et al. [29] | Res-INC-V3-SPP | 93.59 |
| Al-Otum [30] | CNN-ILD | 95.80 |
| Xie et al. [31] | ConvNext-CNFP | 96.36 |
| Korovin et al. [32] | CNN | 85.20 |
| Tang et al. [33] | CNN | 83.00 |
| Et-taleby et al. [34] | CNN + SVM | 90.57 |
| Karimi et al. [35] | YOLO | 78 |
| Chen et al. [36] | VGG16 | 82 |
| Zhang et al. [37] | Faster R-CNN | 91.3 |
| Zhao et al. [38] | Mask R-CNN | 70.2 |
| Fioresi et al. [39] | ResNet50 | 95.4 |
| Akram et al. [40] | GCAM-EfficientNet | 93.59 |
| Zhao et al. [41] | CNN | 88.12 |
| Wang et al. [42] | CNN | 92.02 |
| This study | AlexNet, GoogleNet, MobileNet, VGG16, ResNet50, DenseNet121, SqueezeNet | 97.82 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Karakan, A. Detection of Defective Solar Panel Cells in Electroluminescence Images with Deep Learning. Sustainability 2025, 17, 1141. https://doi.org/10.3390/su17031141