Abstract

The sustainable integration of Artificial Intelligence in Education (AIEd) in higher education hinges on students’ prolonged and meaningful adoption. Grounded in the Acceptance of AI Device Usage (AIDUA) framework, this study extends the model by incorporating novelty value and trust to investigate the determinants of students’ willingness to use AIEd Tools sustainably. Data from 400 university students in China were analyzed using Partial Least Squares Structural Equation Modeling (PLS-SEM). The results reveal that novelty value acts as a powerful catalyst, substantially boosting performance expectancy and diminishing effort expectancy. Furthermore, this study delineates a dual-pathway mechanism where performance and effort expectancies shape both emotions and trust, which in turn directly determine adoption intention—with emotion exhibiting the stronger influence. Theoretically, this research validates an extended AIDUA model, highlighting the critical roles of sustained innovation perception and cognitive-affective dual pathways. Practically, it advises higher education institutions to prioritize building robust trust through transparent practices and to design AIEd Tools that deliver lasting innovative value and positive learning experiences to foster sustainable adoption.

1. Introduction

Building sustainable and inclusive educational systems in the digital era necessitates a thoughtful integration of Sustainable Education and Artificial Intelligence in Education (AIEd). This is particularly critical in the context of the United Nations Sustainable Development Goals (SDGs), specifically SDG 4, which aims to “ensure inclusive and equitable quality education and promote lifelong learning opportunities for all”, with Targets 4.3 and 4.4 emphasizing inclusive tertiary education and skills development. Meanwhile, SDG 9 calls for building resilient infrastructure, promoting inclusive and sustainable industrialization, and fostering innovation. AIEd is often positioned as a key innovative tool for advancing these goals—enabling equitable access to quality education and supporting continuous learning through intelligent infrastructure. However, this perspective is not without its critics; the rapid adoption of AIEd also raises profound concerns regarding its potential to encourage academic misconduct, undermine deep learning through over-reliance, and contribute to significant environmental costs due to substantial energy and resource consumption [1,2,3]. While the broader discourse on AIEd sustainability encompasses environmental, social, and economic dimensions (as detailed in Section 2.2), this study operationalizes "sustainable adoption" primarily from a user-centered perspective. We posit that the long-term viability of any educational technology is fundamentally predicated on its continued and meaningful use by students. Without this user-level sustainability, the potential macro-level benefits of AIEd cannot be fully realized. Consequently, this research focuses on investigating the determinants of students’ sustained willingness to use AIEd Tools as a critical prerequisite for achieving the broader goals of sustainable higher education.

Existing studies on technology acceptance in education often rely on generalized models such as TAM and UTAUT2 to explain adoption behaviors [4,5]. However, these frameworks may overlook the unique intelligent attributes of AI, such as autonomy, adaptability, and perceived agency, which necessitate a more tailored approach. The Acceptance of AI Device Usage (AIDUA) model [6] offers a promising alternative by focusing on the cognitive and emotional processes specific to AI interactions. Nevertheless, its application within educational contexts, especially in developing regions like China, remains underexplored. More importantly, the original AIDUA framework does not fully capture two aspects critical to the sustainable adoption of AIEd: the role of perceived innovation (“novelty value”) as a driver of lasting engagement beyond initial exposure, and the role of trust as a fundamental cognitive belief that must be earned and maintained in the ethically sensitive context of education [7,8]. In response, this study employs an extended AIDUA framework, which adds novelty value as a primary-appraisal antecedent and trust as a mediator parallel to emotion, to empirically examine their effects, alongside social influence, hedonic motivation, and anthropomorphism, on students’ willingness to use AIEd Tools in mainland China. This research seeks to make three key contributions. Theoretically, it aims to extend and validate the AIDUA model in a sustainable education context, explicitly testing the roles of novelty value and trust, thereby providing a more holistic, dual-pathway (affective-cognitive) understanding of AIEd acceptance. Empirically, it identifies which factors are most influential in driving sustained acceptance among a cohort of experienced users, offering robust evidence from a key demographic. Practically, the findings are intended to provide actionable insights for fostering trust and positive learning experiences and for prioritizing features that deliver lasting innovative value, thereby supporting the long-term and sustainable integration of AI in education.

2. Literature Review and Theoretical Model

2.1. Functional Categories of AIEd Tools

Popenici and Kerr (2017) [9] describe Artificial Intelligence in education as computational systems that learn, adapt, synthesize, and self-correct while processing data. Building on this view, recent reviews organize AIEd tools by function rather than by brand or platform. First, assessment and learning analytics combine automated scoring with predictive models—such as Predictive Learning Analytics (PLA)—to identify at-risk students and deliver timely feedback [10,11,12]. Second, personalized learning systems, including intelligent tutoring and adaptive platforms, tailor content and pacing to learner profiles, improving relevance and progression [11,12]. Third, immersive learning uses AI-supported virtual-reality environments to provide safe, authentic practice in realistic scenarios [13,14]. Fourth, generative content systems (GenAI) produce explanatory text, images, and code to support creation, homework assistance, and formative feedback—for example, large-language-model assistants such as ChatGPT and image generators such as Midjourney [2,8,15]. Taken together, these functional strands enhance flexibility and immediacy in teaching and learning while strengthening feedback loops and access [16].

2.2. AIEd Tools as Enablers for Sustainable Higher Education

To systematically evaluate the role of AIEd Tools discussed in the previous section, this study adopts the tripartite sustainability framework (environmental, social, economic), which aligns with the multifaceted missions of modern universities. Within this framework, AIEd Tools act as critical enablers across multiple dimensions of sustainability:

Environmental Sustainability: Digital learning tools contribute to reducing the ecological footprint of education by minimizing reliance on paper and decreasing the need for commuting, thereby lowering carbon emissions [3,17]. However, a comprehensive and critical sustainability assessment must contend with the significant environmental costs associated with AI itself, ensuring that the pursuit of educational goals (SDG 4) does not inadvertently undermine environmental ones (SDGs 7, 12, and 13). The development and operation of AI, particularly large-scale models, are extraordinarily energy intensive, contributing to a substantial carbon footprint [3,18]. Beyond operational emissions, the full lifecycle of AI infrastructure poses substantial sustainability challenges [1]. The purported environmental benefits of digitalization can therefore be partially offset by these direct impacts. A sustainable approach requires balancing the two through strategies such as prioritizing computationally efficient models [19], leveraging cloud providers committed to renewable energy, and incorporating “Green AI” principles into institutional procurement and policy [3,20].

Social Sustainability: By providing scalable access to personalized and high-quality educational resources, AIEd Tools can help bridge educational inequalities, offering support to students in remote or underserved regions [14,21]. Concurrently, it is crucial to address concerns that AIEd may undermine deep learning and critical thinking if used to circumvent the learning process, potentially exacerbating educational inequities in the long run [1].

Economic Sustainability: These tools enhance instructional efficiency, optimize resource allocation, and equip students with future-ready skills essential for thriving in an AI-driven economy [1,2]. However, the realization of these sustainable benefits is fundamentally contingent upon one critical factor: stakeholder acceptance. Without widespread willingness to adopt and use these technologies—particularly among students, the primary consumers—even the most advanced AIEd Tools risk underutilization or rejection, nullifying their potential to contribute to a sustainable educational future [1,22]. Therefore, understanding the determinants of students’ acceptance is not merely a question of technology adoption but a prerequisite for harnessing AI to build resilient, inclusive, and sustainable higher education systems.

According to the Theory of Reasoned Action [20], user intentions and willingness are key predictors of actual behavior toward AIEd Tools [23]. However, identifying the factors that shape these intentions remains a complex challenge. Previous research on AIEd acceptance has predominantly relied on generalized technology acceptance models such as TAM, UTAUT, and UTAUT2, as well as information system frameworks. While these models offer valuable insights into user intentions, they were originally designed to evaluate non-AI technologies and may not fully capture the unique attributes of AI—such as autonomy, adaptability, and perceived agency [6,11,24]. In response, recent theoretical developments have introduced AI-specific frameworks like the AIDUA model, which aims to elucidate users’ willingness to adopt AI devices in service contexts. Nevertheless, few studies have applied the AIDUA model to AIEd Tools in depth, with most existing research focusing narrowly on ChatGPT.

Table 1 provides a detailed overview of prior studies on user behavior and perceptions of AIEd Tools, analyzing motivations for both initial and continued use. While these studies offer valuable insights, the limited scope of research leaves significant gaps in our understanding of AIEd adoption. As a key potential user group, university students’ willingness to use AIEd Tools is crucial to the tools’ practical effectiveness and market viability. Thus, an in-depth investigation into the determinants of their acceptance provides essential theoretical support and practical guidance for the optimal design and effective promotion of AIEd Tools.

Table 1.

Summary of prior studies on users’ perceptions of AIEd Tools.

2.3. The Extended AIDUA Model

The AIDUA model, introduced by Gursoy et al. (2019) [6], provides a theoretical framework for understanding the acceptance of artificial intelligence devices by integrating cognitive evaluation and dissonance theories. Within this framework, user acceptance unfolds across three sequential phases: primary appraisal, secondary appraisal, and the outcome phase. During primary appraisal, individuals assess the relevance of using an AI device, influenced by factors such as social influence, hedonic motivation, and anthropomorphism. These perceptions then shape performance and effort expectations in the secondary appraisal phase, which in turn trigger emotional responses. Finally, in the outcome stage, users’ attitudes conclusively determine their adoption intention [6].

While the original AIDUA model has been validated in various service contexts (e.g., Mei et al., 2024 [30]; Wang & Yin, 2025 [31]), its application to AIEd Tools—characterized by rapid iteration and the critical need for ethical, sustained integration into learning—demands a context-specific extension. Building on the precedent of researchers introducing new predictors to tailor the framework (e.g., Ma & Huo, 2023 [32], who incorporated novelty value for chatbots), this study innovatively extends the AIDUA model in two key ways to better capture the dynamics of sustainable adoption.

First, we integrate Novelty Value into the Primary Appraisal stage. This placement is justified by the stage’s function of assessing a stimulus’s relevance and significance [6]. In the context of rapidly evolving educational technology, the initial and ongoing perception of a tool’s innovativeness is posited as a fundamental driver of attention and relevance judgment, preceding the formation of specific performance and effort expectations.

Second, we introduce Trust as a parallel mediator to Emotion in the Secondary Appraisal stage. This stage involves a deliberate cost–benefit analysis, resulting in both affective and cognitive responses [6]. While the original model emphasizes the affective pathway (Emotion), integrating AI into the sensitive domain of education necessitates a stronger consideration of cognitive assessments of reliability and integrity. Trust constitutes such a higher-order cognitive belief, and we posit that it is formed in parallel with Emotion during secondary appraisal, with both independently informing the final behavioral decision. This parallel mediation is consistent with literature indicating distinct cognitive and affective mechanisms in technology acceptance [31].

This extended AIDUA model establishes a comprehensive theoretical framework for investigating the determinants of university students’ sustainable adoption of AIEd Tools, guiding the following research questions:

Q1: What are the specific behavioral intentions of university students towards AIEd Tools?

Q2: How do novelty value and trust influence university students’ acceptance of AIEd Tools?

3. Hypothesis Development

3.1. Social Influence (SI)

Social influence refers to how the social environment affects consumers’ perceptions of the benefits of using specific technologies [33]. It has been shown to motivate users to adopt chatbots. According to the AIDUA framework, social influence is likely to play a significant role in determining how consumers initially perceive the services provided by AI devices. When deciding whether to employ AI service devices, customers are likely to follow their social groups’ norms, behaviors, and attitudes [6]. This is particularly evident in educational contexts, where social influence has been identified as a strong positive predictor of students’ challenge appraisal (a concept closely aligned with performance expectancy) regarding the use of Generative AI [31], as well as a key factor in their adoption of ChatGPT [5,10]. A possible explanation for this influence is that consumers gain a deeper understanding of AI technology from influential figures, which reduces their uncertainty [34,35]. In our study, social influence is defined as the influence of the social environment, such as teachers, family, friends, and social networks, on university students’ use of AIEd Tools in their learning.

Based on the above considerations, we hypothesize that:

H1a:

Social influence positively influences users’ performance expectancy of AIEd Tools.

H1b:

Social influence negatively influences users’ effort expectancy of AIEd Tools.

3.2. Hedonic Motivation (HM)

Hedonic motivation refers to the perceived enjoyment or pleasure that individuals expect to gain from using artificial intelligence devices in service delivery [6]. It is a significant predictor of technology adoption behavior, intrinsically driving individuals to seek advanced technology to satisfy their hedonic needs [6,36]. Previous studies focusing on artificial intelligence have revealed that hedonic motivation significantly influences users’ intentions to use AI technology [5,12,37] and enhances their performance expectancy of AI devices [5,32,38]. Similarly, because AI devices can satisfy these needs, users can more easily cope with the effort required to use them. In other words, hedonic motivation should negatively affect effort expectancy [32,37,38], as consumers with high hedonic motivation do not perceive tasks related to AI devices as difficult [6]. In educational settings, this intrinsic enjoyment is pivotal in reframing the adoption of AI as a manageable challenge rather than a hindrance [31]. Studies by Chi et al. (2021, 2023) [39,40] indicate that when people are motivated by hedonic needs, they are more likely to trust the technology they adopt, meaning that hedonic motivation positively affects users’ trust in AI. In the context of this study, hedonic motivation refers to the enjoyment, fun, and entertainment university students perceive when using AIEd Tools [41].

Therefore, we propose the following hypotheses:

H2a:

Hedonic motivation positively influences users’ performance expectancy of AIEd Tools.

H2b:

Hedonic motivation positively affects users’ trust in AIEd Tools.

H2c:

Hedonic motivation negatively influences users’ effort expectancy of AIEd Tools.

3.3. Anthropomorphism (A)

In the original AIDUA framework, anthropomorphism refers to the degree to which an object possesses human-like characteristics, such as human appearance, self-awareness, and emotions [6,16,42]. Studies have shown that anthropomorphism strongly affects consumers’ willingness to use robots [6,18,43]. However, its impact appears to be context-dependent. For instance, while it drives acceptance in service settings like banking [30], it can be perceived as a hindrance in educational contexts [31]. This divergence suggests that in learning environments, highly human-like AI might be viewed as threatening human distinctiveness or as unsettling, thereby fostering negative appraisals [31]. As the degree of anthropomorphism in AI devices increases, users must invest more effort [37], because they need to understand a technological device while also exerting the effort required to communicate as they would with a real person [6]. Research findings also demonstrate that providing consumers with human-like interactions can lead to higher trust [44,45,46]. In the context of this study, anthropomorphism refers to the responsive, considerate, and friendly human-like manner in which AIEd Tools interact with students.

Based on the discussion, the following hypotheses are proposed:

H3a.

Anthropomorphism positively influences users’ performance expectancy of AIEd Tools.

H3b.

Anthropomorphism positively influences trust in AIEd Tools.

H3c.

Anthropomorphism negatively influences users’ effort expectancy of AIEd Tools.

3.4. Novelty Value (NV)

As a construct introduced into the primary appraisal stage of our extended AIDUA model, novelty value is expected to shape users’ initial relevance assessment and subsequent cognitive evaluations.

Novelty value is a newly introduced variable. It refers to the degree to which users perceive a technology as unique and novel in helping them complete tasks [47]. Prior research consistently indicates that novelty value, as a core characteristic of new technology, plays a crucial role in influencing the acceptance of innovative technology [23,47,48,49]. When users perceive the novelty value of a technology, they find completing tasks with it more pleasant [47], which helps reduce their psychological resistance to new technology [50]. Ma & Huo (2023) [32] show that novelty value positively affects individuals’ performance expectancy of ChatGPT and negatively affects their effort expectancy. Moreover, the indirect effect of novelty value on users’ acceptance intention is much greater than that of social influence. In this study, novelty value is defined as university students’ perception that AIEd Tools are innovative and can effectively and creatively assist their learning process.

Thus, the following hypotheses were formulated:

H4a.

Novelty value positively influences users’ performance expectancy of AIEd Tools.

H4b.

Novelty value negatively influences users’ effort expectancy of AIEd Tools.

3.5. Performance Expectancy (PE), Effort Expectancy (EE), Emotion (E), and Trust (T)

In the secondary appraisal phase of the AIDUA model, users assess the costs and benefits of using artificial intelligence devices in service delivery based on their perceived performance and effort expectancies. They then form emotions regarding the use of AI devices in service delivery [6]. This secondary appraisal is posited to generate not only affective responses (Emotion) but also a key cognitive belief (Trust), both of which are central to our extended model. Performance expectancy refers to users’ evaluation of the AI device’s performance in terms of service accuracy and consistency [33], while effort expectancy pertains to users’ perception of the psychological and mental effort required to interact with the AI device [33]. Prior research shows that higher levels of performance expectancy lead to a more positive overall emotion towards using AI devices [6]. This is strongly supported in educational settings, where a challenge appraisal (akin to performance expectancy) directly fosters positive achievement and challenge emotions [31]. Conversely, negative emotions arise when AI devices demand additional cognitive work [6]. Similarly, a hindrance appraisal in learning contexts is linked to increased negative emotions, such as loss and deterrence emotions [31].

A limited number of studies have incorporated trust into technology acceptance research [40,46,51]. Trust in technology is often defined as a belief developed through a cognitive assessment [39]. It has been identified as a critical factor in determining technology adoption behavior [40]. Previous research suggests that trust may be a higher-order cognitive belief influenced by performance expectancy [39,46], effort expectancy, anthropomorphism, and hedonic motivation [40], and that it directly affects customers’ willingness to use AI robots [39,40]. While the emotional pathway is well established, recent findings suggest that cognitive appraisals might exert a more substantial direct influence on behavioral outcomes than emotions in certain learning contexts [31], underscoring the importance of also investigating the direct cognitive route through trust.

Therefore, we hypothesize as follows:

H5a.

Performance expectancy positively influences users’ emotions.

H5b.

Performance expectancy positively influences users’ trust.

H6a.

Effort expectancy negatively influences users’ emotions.

H6b.

Effort expectancy negatively influences users’ trust.

3.6. Willingness to Accept or Reject Use

In the outcome stage of the AIDUA framework, the affective and cognitive evaluations from the secondary appraisal converge to shape behavioral intentions. The initial AIDUA framework suggests that customer emotions fully mediate the relationship between cognitive evaluation and behavioral intention, as better service performance often brings customers joy, delight, and emotional elevation. Positive emotions should lead to accepting artificial intelligence devices, while negative emotions should result in rejecting such devices [6,32,37,52]. In the context of AI-powered learning, positive emotions like achievement emotions have been empirically linked to higher learning productivity, while negative emotions such as loss emotions correlate with lower productivity [31]. Subsequent research has indicated that customers’ behavioral intentions towards AI robots are also directly influenced by cognitive beliefs such as their trust in AI robots. Trust positively affects the willingness to use AI robots [40,46] and negatively influences opposition to their use [40]. This aligns with the perspective that rational, cognitive assessments can be a primary driver of technology adoption decisions, potentially complementing or even surpassing the affective pathway in influence [31].

Therefore, we hypothesize as follows:

H7a:

Emotional evaluation positively influences users’ acceptance of AIEd Tools.

H7b:

Emotional evaluation negatively influences users’ rejection of AIEd Tools.

H8a:

Trust evaluation positively influences users’ acceptance of AIEd Tools.

H8b:

Trust evaluation negatively influences users’ rejection of AIEd Tools.

A summary of all proposed hypotheses is presented in Table 2.

Table 2.

Summary of research hypotheses.

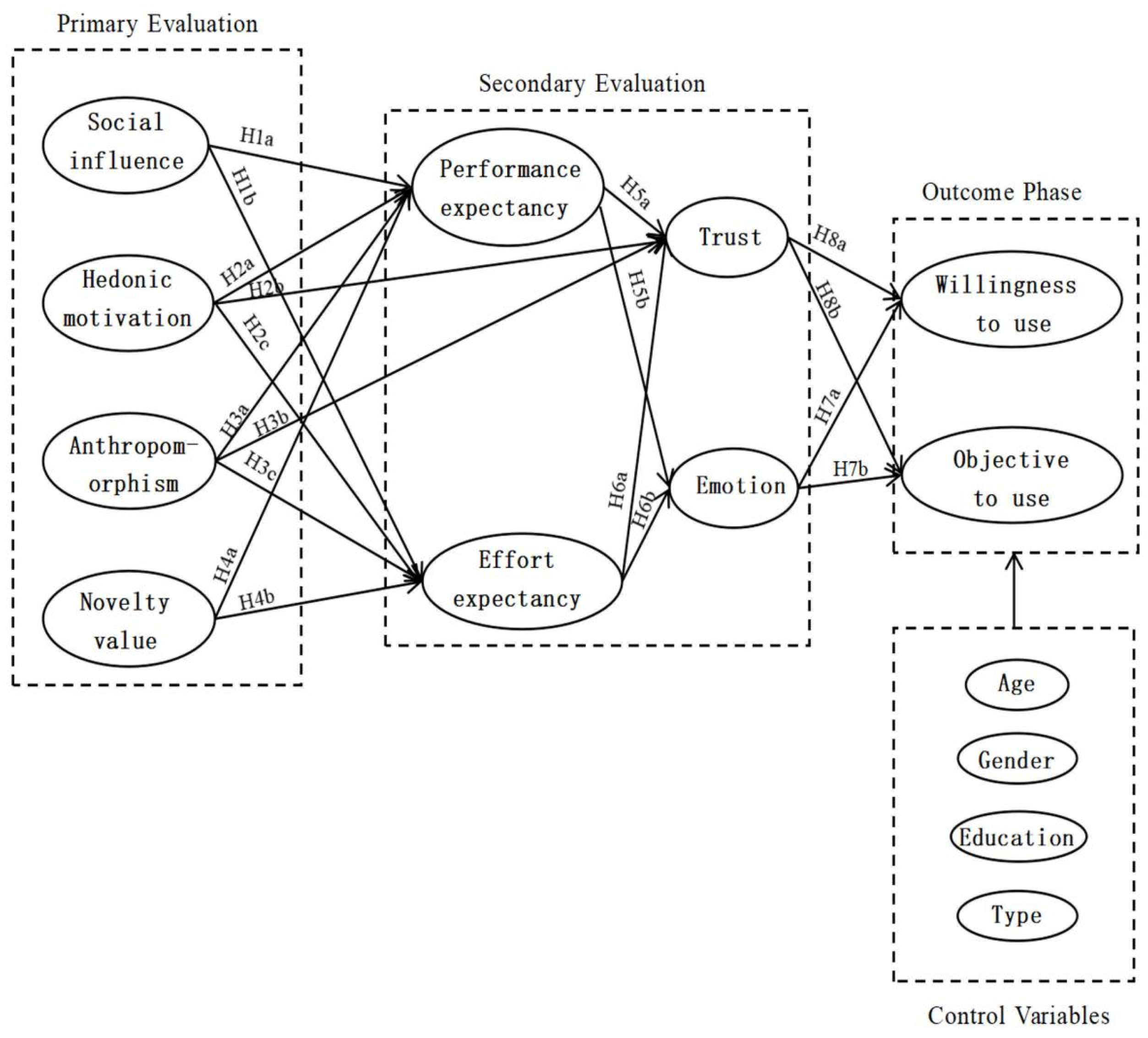

Based on the extended AIDUA model, this study proposes a research design to investigate university students’ acceptance of AIEd Tools (see Figure 1). The research model assumes that social influence and seven other factors (hedonic motivation, anthropomorphism, novelty value, performance expectancy, effort expectancy, emotion, and trust) can affect university students’ willingness to accept or reject AIEd Tools. To further explore the potential impact of demographic and usage variables on users’ willingness to accept or reject AIEd Tools, we consider age, gender, education level, and types of AIEd Tools as control variables.

Figure 1.

Proposed model.

4. Methodology

4.1. Participants and Sampling Procedure

Given that this study aims to explore the key determinants influencing Chinese university students’ acceptance of AIEd Tools and to examine the relationships among perceptual constructs (e.g., trust, performance expectancy) at a specific time point, a cross-sectional design was adopted [53]. This design enables simultaneous analysis of multiple variables through a single-round data collection, aligning with the requirements of exploratory research.

Data collection was conducted via an online questionnaire distributed on the platform https://www.wjx.cn from 13 February to 25 February 2024. A combination of convenience and snowball sampling, both non-probability sampling methods, was employed to recruit participants. This strategy was deemed appropriate for efficiently accessing the target population of university students who are active online and have experience with AIEd Tools.

To ensure participant eligibility, two screening questions were implemented at the survey’s outset: “Which stage of university education are you in?” and “How often do you use AIEd Tools?”. This process filtered out individuals who were not current university students or who rarely/never used AIEd Tools. The survey reached students across multiple major regions in mainland China, including East China, North China, and South China. It is important to note that the sample did not include respondents from Hong Kong, Taiwan, Xinjiang, Inner Mongolia, or Fujian. Consequently, while the sample offers valuable insights, its geographic concentration may limit the generalizability of the findings to the entire Chinese student population.

From an initial pool of 606 submissions, rigorous data cleaning was performed. This process excluded 76 responses from ineligible participants (non-university students or those who rarely/never used AIEd Tools), 78 responses with long-string responding (>70% identical selections across Likert items), and 52 responses that failed attention-check questions. Consequently, 400 valid responses were retained for analysis, resulting in an effective response rate of 66.0%.
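The cleaning steps above can be retraced with a short sketch. It recomputes the retained sample from the reported counts and shows one plausible reading of the long-string rule, namely that a response is flagged when its single most frequent answer covers more than 70% of the Likert items. The function names and the two example respondents are hypothetical, and the exact screening procedure the authors used is not stated in the text.

```python
from collections import Counter


def is_long_string(responses, threshold=0.70):
    """Flag a respondent whose most frequent answer covers more than
    `threshold` of all Likert items (an assumed reading of the >70% rule)."""
    if not responses:
        return False
    most_common_count = Counter(responses).most_common(1)[0][1]
    return most_common_count / len(responses) > threshold


def retained_sample(initial, *exclusions):
    """Return the number of valid responses and the effective response rate."""
    valid = initial - sum(exclusions)
    return valid, valid / initial


# Counts reported in the text: 606 submissions; 76, 78, and 52 exclusions.
valid_n, rate = retained_sample(606, 76, 78, 52)
print(valid_n, f"{rate:.1%}")

# Hypothetical respondents answering ten Likert items.
straight_liner = [3, 3, 3, 3, 3, 3, 3, 3, 4, 2]  # 80% identical answers
varied = [1, 2, 3, 4, 5, 4, 3, 2, 1, 3]          # 30% identical answers
print(is_long_string(straight_liner), is_long_string(varied))
```

The three exclusion counts sum to 206, which reproduces the 400 retained responses and the 66.0% effective response rate.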

To justify the sample size of 400 for the PLS-SEM analysis, a post hoc power analysis was conducted using G*Power 3.1. With an effect size f² of 0.15, an alpha error probability of 0.05, and 10 predictors, the analysis indicated a statistical power (1 − β) exceeding 0.99. This result substantially surpasses the conventional threshold of 0.80, indicating that the sample size is more than adequate to detect effects of this magnitude in the proposed model [54].
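The quantities behind this power figure can be written out explicitly. The sketch below assumes the analysis used G*Power's F test for linear multiple regression (fixed model, R² deviation from zero), which the text does not state; under that assumption, the noncentrality parameter and degrees of freedom follow directly from the reported inputs, with F' denoting the noncentral F distribution.

```latex
\begin{align}
\lambda &= f^{2} N = 0.15 \times 400 = 60, \\
df_{1} &= k = 10, \qquad df_{2} = N - k - 1 = 389, \\
1 - \beta &= P\!\left( F'_{df_{1},\, df_{2}}(\lambda) > F_{1-\alpha;\, df_{1},\, df_{2}} \right), \qquad \alpha = 0.05 .
\end{align}
```

A noncentrality parameter of 60 is large relative to the critical value of a central F distribution with (10, 389) degrees of freedom, which is consistent with the near-unity power reported.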

Sample characteristics were analyzed to profile the respondents. Among the 400 valid participants, 42.5% were male and 57.5% were female. The majority (82.3%) were under the age of 25. In terms of educational level, 77% were undergraduate students and 23% were postgraduate students. An analysis of the types of AIEd Tools used revealed a high adoption rate of intelligent chatbots, with 92% of the participants reporting the use of tools such as ChatGPT to aid their learning process, underscoring the prominence of this tool category in the current educational landscape. This high adoption rate signifies that our sample consists of experienced users, whose perceptions are not based on hypothetical scenarios but on actual interaction with AIEd Tools. Consequently, their evaluations of factors like novelty, trust, and performance are grounded in real experience, providing robust and ecologically valid insights into the determinants of continued and mature usage.

4.2. Measures and Questionnaire Design

The questionnaire comprised two sections: demographic information and perceptions of AIEd Tools. All constructs were measured with reflective indicators on a five-point Likert scale (1 = strongly disagree, 5 = strongly agree). The measurement scales, including all specific items, their exact wording, and sources, are detailed in Table 3. This comprehensive presentation directly addresses the need for full operational transparency.

Table 3.

Measurement scales and sources.

To ensure conceptual equivalence and content validity across the linguistic and cultural contexts, a rigorous process was followed. The original English items were independently translated into Chinese by two professional translators. Subsequently, a back-translation into English was performed by two additional translators to verify accuracy and conceptual consistency. Furthermore, the original English scales were reviewed by two professors specializing in Information Systems to confirm that the items adequately and comprehensively captured the intended theoretical constructs.

The finalized Chinese questionnaire was administered to a pilot sample of 100 university students with experience using AIEd Tools. An exploratory factor analysis of the pilot data (principal axis factoring, Promax rotation) resulted in the removal of four items. These items were deleted due to low factor loadings (below the conservative threshold of 0.5) and a cross-loading difference <0.20 with a competing construct, which indicated potential ambiguities and threatened the discriminant validity of the initial scales. The final instrument retained 37 items, which demonstrated robust reliability and validity in the main study, as reported in Section 5.
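The two screening thresholds can be illustrated with a small sketch. It takes a pattern matrix of factor loadings and reports which criterion each item fails: a primary loading below 0.5, or a gap of less than 0.20 between its two largest loadings. The item names and loading values are hypothetical and do not come from the pilot data, and the function reports each criterion separately because the text does not specify how the two were combined.

```python
def screen_items(loadings, min_loading=0.50, min_gap=0.20):
    """Return the reason(s) each item fails the screening thresholds.

    `loadings` maps an item name to its loadings on every factor.
    An item is flagged when its largest absolute loading is below
    `min_loading`, or when the gap between its two largest absolute
    loadings is below `min_gap`.
    """
    flagged = {}
    for item, row in loadings.items():
        ranked = sorted((abs(value) for value in row), reverse=True)
        reasons = []
        if ranked[0] < min_loading:
            reasons.append("low primary loading")
        if len(ranked) > 1 and ranked[0] - ranked[1] < min_gap:
            reasons.append("cross-loading")
        if reasons:
            flagged[item] = reasons
    return flagged


# Hypothetical loadings of four items on three factors.
pilot_loadings = {
    "T1": [0.78, 0.10, 0.05],   # clean item
    "T2": [0.42, 0.15, 0.08],   # primary loading below 0.5
    "PE3": [0.55, 0.41, 0.02],  # gap between top two loadings is 0.14
    "EE4": [0.35, 0.30, 0.10],  # fails both thresholds
}
flagged = screen_items(pilot_loadings)
for item, reasons in flagged.items():
    print(item, reasons)
```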

4.3. Data Analysis Strategy

The proposed research model and hypotheses were tested using Partial Least Squares Structural Equation Modeling (PLS-SEM) with SmartPLS 4.0 software. PLS-SEM was selected for its suitability for analyzing complex models, its ability to handle non-normal data, and its focus on prediction and theory development. The model comprises 10 reflective latent constructs (SI, HM, A, NV, PE, EE, T, E, W, O) and 37 measurement indicators, with a maximum of 6 arrows pointing at a single construct and 2 parallel mediators (Emotion and Trust). This moderate-to-high model complexity makes PLS-SEM a robust and appropriate analytical technique [66].

Given that the data were collected from a single source at one point in time, we assessed the potential threat of common method bias (CMB) using both procedural and statistical remedies. Procedurally, we ensured respondent anonymity and improved scale items through pilot testing [9]. Statistically, we employed Harman’s single-factor test. An exploratory factor analysis including all items revealed that the largest factor accounted for 36.8% of the variance, which is below the critical threshold of 50%. This suggests that common method bias is not a pervasive issue in this dataset.
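As an illustration of the statistical check, the following sketch computes the share of total variance carried by the first unrotated component of an item correlation matrix and compares it with the 50% threshold. It uses a principal-component approximation solved by power iteration in plain Python, which is a common simplification and not necessarily the extraction method used in the study. The function names and the example correlation matrix are hypothetical.

```python
import math


def correlation_matrix(columns):
    """Pearson correlation matrix for equally long item columns.

    Assumes every item has non-zero variance.
    """
    n = len(columns[0])
    centered = []
    for col in columns:
        mean = sum(col) / n
        centered.append([x - mean for x in col])
    norms = [math.sqrt(sum(x * x for x in col)) for col in centered]
    size = len(columns)
    return [
        [
            sum(a * b for a, b in zip(centered[i], centered[j]))
            / (norms[i] * norms[j])
            for j in range(size)
        ]
        for i in range(size)
    ]


def first_factor_share(corr, iterations=1000, tol=1e-12):
    """Share of total variance explained by the first unrotated component.

    Power iteration finds the largest eigenvalue of the correlation
    matrix; dividing by the number of items (the trace) gives the share.
    """
    size = len(corr)
    vector = [1.0 + i / size for i in range(size)]  # deterministic start
    eigenvalue = 0.0
    for _ in range(iterations):
        product = [
            sum(corr[i][j] * vector[j] for j in range(size))
            for i in range(size)
        ]
        norm = math.sqrt(sum(x * x for x in product))
        if norm == 0.0:
            break
        vector = [x / norm for x in product]
        # Rayleigh quotient of the normalized vector.
        new_eigenvalue = sum(
            vector[i] * sum(corr[i][j] * vector[j] for j in range(size))
            for i in range(size)
        )
        converged = abs(new_eigenvalue - eigenvalue) < tol
        eigenvalue = new_eigenvalue
        if converged:
            break
    return eigenvalue / size


# Hypothetical correlation matrix: four items, all pairwise r = 0.5.
example = [[1.0 if i == j else 0.5 for j in range(4)] for i in range(4)]
share = first_factor_share(example)
print(f"first-factor share: {share:.1%}; below 50%: {share < 0.50}")
```

In this hypothetical matrix the first component exceeds the 50% threshold, whereas the 36.8% reported for the study falls below it.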

The data analysis followed a two-stage analytical procedure. First, the measurement model was assessed for reliability and validity. This involved examining internal consistency via Cronbach’s alpha and composite reliability, convergent validity via factor loadings and average variance extracted (AVE), and discriminant validity using the Fornell–Larcker criterion and the Heterotrait–Monotrait (HTMT) ratio of correlations. Second, the structural model was evaluated. This step included examining the significance of path coefficients ($\beta$) using a bootstrapping procedure with 5000 subsamples, assessing the coefficient of determination ($R^2$) for the endogenous constructs, and evaluating the effect sizes ($f^2$).
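To make the bootstrapping step concrete, the sketch below resamples respondents with replacement 5000 times and derives a standard error and t-value for a single standardized path. It is a deliberate simplification: SmartPLS re-estimates the full PLS model on every subsample, whereas this example bootstraps only a one-predictor standardized coefficient between two simulated composite scores. All names and data are hypothetical.

```python
import math
import random
import statistics


def standardized_slope(x, y):
    """Standardized coefficient of y on a single predictor x (equals Pearson's r)."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def bootstrap_path(x, y, n_boot=5000, seed=42):
    """Return (estimate, bootstrap standard error, t-value) for the path x -> y."""
    rng = random.Random(seed)
    n = len(x)
    estimate = standardized_slope(x, y)
    draws = []
    for _ in range(n_boot):
        # Resample respondents (paired x, y values) with replacement.
        idx = [rng.randrange(n) for _ in range(n)]
        draws.append(standardized_slope([x[i] for i in idx], [y[i] for i in idx]))
    se = statistics.stdev(draws)
    return estimate, se, estimate / se


# Simulated composite scores for a predictor and an outcome (not study data).
rng = random.Random(0)
x = [rng.gauss(0, 1) for _ in range(120)]
y = [0.5 * a + rng.gauss(0, 1) for a in x]

est, se, t = bootstrap_path(x, y)
print(f"estimate={est:.3f}, bootstrap SE={se:.3f}, t={t:.2f}")
```

The t-value is the original estimate divided by the standard deviation of the bootstrap estimates, which is the usual basis for the significance levels reported for path coefficients.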

Additionally, control variable analysis was conducted. As detailed in Section 5.2, Analysis of Variance (ANOVA) and t-tests indicated that demographic variables—namely age, gender, education level, and user type—had no significant impact on the behavioral intention to reject AIEd Tools. Consequently, these variables were excluded from the final structural model to maintain parsimony.

5. Results

5.1. Measurement Model

The convergent validity of the measurement model was assessed by examining average variance extracted (AVE) scores, factor loadings, Cronbach’s alpha, and composite reliability [10]. As shown in Table 4, all factor loadings exceeded the threshold of 0.60, indicating strong relationships between the items and their respective constructs. Furthermore, both Cronbach’s alpha and composite reliability (CR) scores for each construct surpassed the recommended value of 0.70, demonstrating high internal consistency and reliability. Additionally, the AVE scores for all constructs were greater than 0.50 [54]. These results provide strong evidence for the convergent validity of the measurement model, confirming that the measures accurately capture their intended constructs.

Table 4.

Properties of measurement items and constructs.
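The convergent-validity statistics named above follow standard formulas for standardized loadings: AVE is the mean of the squared loadings, and composite reliability is the squared sum of loadings divided by that quantity plus the summed error variances. The sketch below applies them with the thresholds stated in the text (loadings above 0.60, CR above 0.70, AVE above 0.50). The function names and the example loadings are hypothetical and do not reproduce Table 4.

```python
def ave(loadings):
    """Average variance extracted: mean of squared standardized loadings."""
    return sum(l * l for l in loadings) / len(loadings)


def composite_reliability(loadings):
    """Composite reliability from standardized loadings."""
    total = sum(loadings)
    error = sum(1 - l * l for l in loadings)  # indicator error variances
    return total * total / (total * total + error)


def meets_thresholds(loadings, min_loading=0.60, min_cr=0.70, min_ave=0.50):
    """Check the loading, CR, and AVE cut-offs used in the text."""
    return (all(l > min_loading for l in loadings)
            and composite_reliability(loadings) > min_cr
            and ave(loadings) > min_ave)


# Hypothetical loadings for one three-item construct.
demo = [0.7, 0.8, 0.9]
print(round(ave(demo), 3))                    # 0.647
print(round(composite_reliability(demo), 3))  # 0.845
print(meets_thresholds(demo))                 # True
```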

The Fornell–Larcker criterion and the Heterotrait–Monotrait (HTMT) ratio were used to evaluate discriminant validity. As demonstrated in Table 5, the square root of each construct’s Average Variance Extracted (AVE) exceeds its strongest correlation with any other construct. Furthermore, all HTMT values stay under the 0.90 limit. These results suggest that the constructs in this study possess adequate discriminant validity and are empirically distinct from one another.

Table 5.

Discriminant validity.
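The Fornell–Larcker comparison can be expressed as a simple check: for every pair of constructs, the absolute correlation must be smaller than the square root of each construct's AVE. The sketch below is illustrative only. The function name, the AVE values, and the correlations are hypothetical and are not taken from Table 5.

```python
import math


def fornell_larcker_violations(ave_by_construct, correlations):
    """Return construct pairs whose correlation is not below both sqrt(AVE) values.

    ave_by_construct: dict mapping construct name -> AVE.
    correlations: dict mapping (construct_a, construct_b) -> correlation.
    """
    violations = []
    for (a, b), r in correlations.items():
        limit = min(math.sqrt(ave_by_construct[a]), math.sqrt(ave_by_construct[b]))
        if abs(r) >= limit:
            violations.append((a, b))
    return violations


# Hypothetical values chosen so that one pair fails the criterion.
ave_values = {"PE": 0.64, "T": 0.5625, "E": 0.81}  # square roots: 0.80, 0.75, 0.90
corr = {("PE", "T"): 0.62, ("PE", "E"): 0.70, ("T", "E"): 0.78}

print(fornell_larcker_violations(ave_values, corr))  # [('T', 'E')]
```

An empty list corresponds to the situation reported in the text, where every construct's square root of AVE exceeds its strongest correlation with any other construct.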

5.2. Structural Model

Prior to testing the research hypotheses, control variable analysis was conducted. The results of Analysis of Variance (ANOVA) and t-tests indicated that demographic variables—namely age, gender, education level, and user type—had no significant impact on the behavioral intention to reject AIEd Tools. Consequently, these variables were excluded from the final structural model.

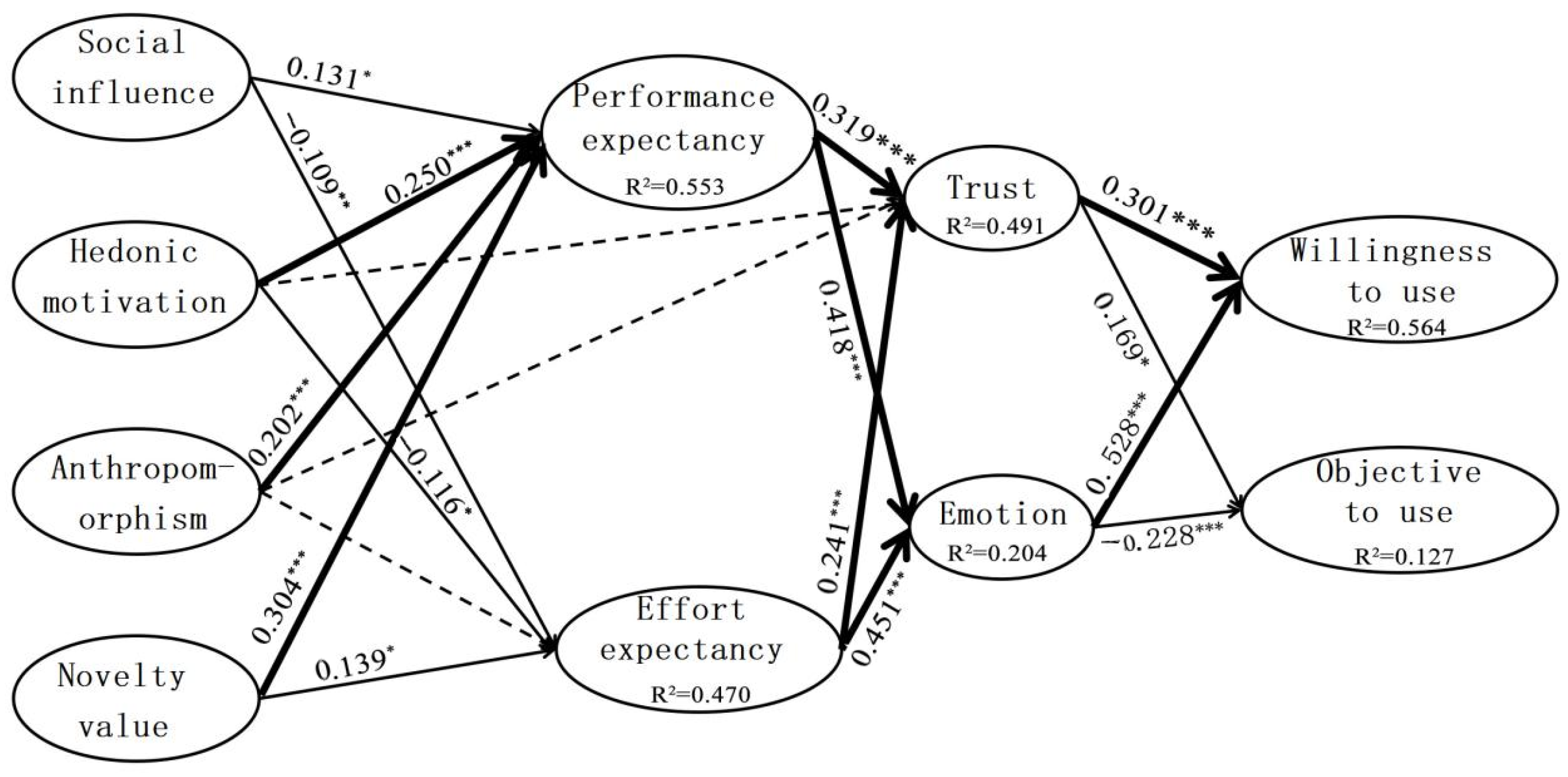

The evaluation of the structural model, with results detailed in Table 6 and illustrated in Figure 2, provides strong support for the extended AIDUA framework. The path coefficients and their significance levels are summarized as follows.

Table 6.

Results of structural model assessment and hypotheses testing.

Figure 2.

Results of the structural equation model. * for p < 0.05, ** for p < 0.01, *** for p < 0.001.

In the primary appraisal stage, Social Influence (SI) exhibited a significant positive effect on Performance Expectancy (PE) (p = 0.015) and a significant negative effect on Effort Expectancy (EE) (p = 0.006), supporting H1a and H1b. Hedonic Motivation (HM) significantly enhanced PE (p < 0.001) and reduced EE (p = 0.031), supporting H2a and H2c; however, its effect on Trust (T) was not significant (p = 0.057), thus H2b was not supported. Anthropomorphism (A) demonstrated a significant positive effect on PE (p < 0.001), supporting H3a, but its effects on Trust (T) (p = 0.081) and EE (p = 0.066) were not significant, leading to the rejection of H3b and H3c. Novelty Value (NV) showed the strongest positive effect on PE (p < 0.001) among all primary appraisal factors and a significant negative effect on EE (p = 0.014), supporting H4a and H4b.

In the secondary appraisal stage, Performance Expectancy (PE) positively influenced both Emotion (E) (p < 0.001) and Trust (T) (p < 0.001), supporting H5a and H5b. Effort Expectancy (EE) negatively influenced both Emotion (E) (p < 0.001) and Trust (T) (p < 0.001), supporting H6a and H6b.

In the outcome stage, Emotion (E) was a significant positive predictor of Willingness to Use (W) (p < 0.001) and a significant negative predictor of Objection to Use (O) (p = 0.002), supporting H7a and H7b. Trust (T) also significantly positively influenced Willingness to Use (W) (p < 0.001) and negatively influenced Objection to Use (O) (p = 0.027), supporting H8a and H8b.

The model exhibited strong explanatory power for the primary outcome variable. The combination of Emotion and Trust explained a substantial 56.0% of the variance in students’ Willingness to Use (W) AIEd Tools ($R^2 = 0.560$; see Figure 2).

6. Discussion

6.1. Primary Appraisal: The Centrality of Novelty Value and Contextual Drivers

A central finding from the primary appraisal stage is the potent role of novelty value, which emerged as the most influential driver of performance expectancy. This underscores that for experienced users in a “post-adoption” context, the initial “wow” factor evolves into a perception of ongoing innovation, which remains critical for shaping utility judgments. Consistent with prior research, social influence, hedonic motivation, and anthropomorphism were confirmed as significant positive antecedents to performance expectancy (H1a, H2a, H3a supported). The significant negative effect of social influence on effort expectancy (H1b supported) suggests that alignment with group norms can effectively lower perceived barriers to use, facilitating wider adoption. Similarly, hedonic motivation significantly reduced effort expectancy (H2c supported), indicating that the inherent enjoyment of tool use makes the interaction process feel less arduous.

However, the most pivotal finding addressing Q2 is the dominant role of novelty value. Operationalized not as a transient sense of newness but as the “perception of useful innovation” [32], it demonstrated the strongest positive effect on performance expectancy (H4a) among all primary appraisal factors while also significantly reducing effort expectancy (H4b). Its indirect influence on adoption intention substantially surpassed that of social influence, positioning the intrinsic attraction to a tool’s innovative capacity as a more reliable pathway to sustained use than extrinsic social pressure. This re-conceptualization shifts the focus for sustainable adoption from the initial "wow" factor to the continuous delivery of perceptibly useful and creative functionalities, which is critical for long-term integration and avoiding the resource waste associated with abandoned technologies.
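For readers who want to trace the indirect influence compared in this paragraph, it can be written out from the hypothesized paths using the standard product-of-coefficients decomposition. The notation is introduced here for illustration only: $\beta_{X \to Y}$ denotes the standardized path coefficient from construct $X$ to construct $Y$, and the procedure the authors used to estimate indirect effects is not described in this section.

$$
\mathrm{IE}_{NV \to W} = \beta_{NV \to PE}\left(\beta_{PE \to E}\,\beta_{E \to W} + \beta_{PE \to T}\,\beta_{T \to W}\right) + \beta_{NV \to EE}\left(\beta_{EE \to E}\,\beta_{E \to W} + \beta_{EE \to T}\,\beta_{T \to W}\right)
$$

Because novelty value lowers effort expectancy and effort expectancy in turn lowers emotion and trust, the two negative coefficients in the second term multiply to a positive contribution. The same decomposition applies to social influence by replacing $NV$ with $SI$, which is what makes the two indirect effects directly comparable.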

Conversely, the non-significant effects of anthropomorphism on effort expectancy and trust (H3c, H3b not supported) provide a critical nuance. This divergence from studies in service contexts (e.g., Mei et al., 2024 [30]) can likely be explained by our sample’s profile of experienced users in a learning environment. For this tech-savvy cohort, the initial novelty of human-like interaction may have diminished, causing evaluation criteria to evolve and prioritize utilitarian performance and functional robustness over superficial, human-like appeal. This suggests that anthropomorphism’s value is highly context-dependent and may wane as users mature in their interaction with AI.

6.2. Secondary Appraisal: The Dual-Pathway Mechanism of Cognition to Intention

The secondary appraisal stage elucidates the core mechanism through which cognitive evaluations translate into intention, robustly validating our extended dual-pathway model. A nuanced finding is that the impact of effort expectancy on emotion was slightly stronger than that of performance expectancy. This diverges from some prior studies [6] but aligns with the complex mediation patterns in learning contexts [31], suggesting that for students, the immediate cognitive burden and ease of use weigh more heavily on their emotional state during the learning process than the tool’s perceived potential benefits. This underscores that a sustainable implementation strategy must prioritize minimizing initial learning curves to foster positive early experiences, as ease of use is a powerful lever for emotional engagement [67,68].

The formation of trust was primarily driven by these utilitarian appraisals (PE and EE), reinforcing its nature as a rational cognitive belief forged through a cost–benefit analysis. However, the hypothesized direct effects of hedonic motivation and anthropomorphism on trust were not supported (H2b, H3b not supported). This indicates that for experienced students, while enjoyment and human-like design can enhance initial engagement, they are insufficient to foster the deeper, reliability-based trust required for sustained adoption in the ethically sensitive context of education. Trust is instead predicated on the tool’s consistent performance and functional robustness.

6.3. Outcome Stage: The Affective Primacy in Behavioral Decision

In the outcome stage, a key revelation is the primacy of the affective pathway. Emotion emerged as the strongest direct predictor of students’ willingness to use and the strongest negative predictor of objection to use. This pattern suggests that the decision to adopt AIEd Tools is disproportionately steered by immediate, visceral emotional responses—such as feelings of pleasure, satisfaction, or hope—which are triggered more rapidly than the deliberative, evidence-based formation of trust. This affective primacy is particularly critical in the context of learning technologies, where user experience is paramount [31]. It implies that even a highly trustworthy tool may fail to gain traction if it fails to deliver a positive and engaging user experience [69]. While trust remains essential for mitigating long-term objections and consolidating use, the initial gateway to acceptance is overwhelmingly emotional.

6.4. General Discussion: Synthesizing the Pathway to Sustainable Adoption

Synthesizing the findings across the three appraisal stages provides a clear and integrated answer to our research questions. Regarding our first research question (Q1), the specific behavioral intentions of Chinese university students are not a simple binary of acceptance or rejection but a complex interplay of willingness and objection. This interplay is directly governed by the dynamic between their immediate emotional responses and their deliberative cognitive trust in the AIEd Tools, with emotion exhibiting a stronger direct influence.

To answer our second question (Q2), our model reveals a sequential cognitive-affective process that clarifies the distinct roles of novelty value and trust. Novelty value, reconceptualized as perceived ongoing innovation, acts as a powerful initiator in the primary appraisal, primarily by boosting performance expectancy. Trust, in turn, serves a dual role: it is a key mediator that translates positive cognitive evaluations into a stable belief during secondary appraisal, and it is a direct, though secondary, driver of behavioral intention in the outcome stage.

This elucidated mechanism reframes the social dimension of sustainability in higher education. It shifts the focus from the environmental footprint of AI (a critical but separate issue) to the sustainability of the educational practice itself—ensuring that AIEd Tools are not merely adopted but are meaningfully and sustainably integrated into learning ecosystems, thereby avoiding the resource waste associated with rejected or abandoned technologies. The dominant force of the affective pathway underscores that for AIEd Tools to achieve this deep integration, they must be designed not just as reliable cognitive tools but as facilitators of positive feelings and enjoyable learning experiences.

In conclusion, this research demonstrates that sustainable adoption hinges on a dual-pronged approach: designing tools that deliver continuous innovative value to boost confidence and positive feelings while simultaneously ensuring reliable performance to build robust trust. This framework provides a comprehensive understanding of how to move beyond initial use toward the meaningful and sustained integration of AI in education.

7. Conclusions and Implications

This study confirms that the sustainable adoption of AIEd Tools is driven by a cognitive-affective dual pathway, with emotion—more so than trust—being the dominant force in shaping students’ willingness to use. This pivotal role of affective response, initiated by novelty value perceived as ongoing innovation, underscores that sustainable integration requires tools that are not only reliable but also a source of positive learning experiences.

7.1. Theoretical Contributions

This research makes three key theoretical contributions. First, it advances the AIDUA model for the sustainable education context by integrating novelty value as a primary appraisal factor and establishing trust as a parallel mediator to emotion, providing a more holistic framework for AIEd acceptance. Second, it reconceptualizes novelty value as “perceived ongoing innovation”, demonstrating its enduring role as a fundamental driver of performance expectations even among experienced users, beyond initial engagement. Third, it validates a dual-mediation model where behavioral intentions are jointly shaped by immediate affective responses and deliberate cognitive judgments, reconciling these pathways and providing a more comprehensive understanding of acceptance mechanisms in learning environments.

7.2. Practical Implications

The findings offer actionable guidance for multiple stakeholders in achieving sustainable AIEd integration. To translate the empirical results into practice, we delineate specific recommendations for key actors.

For AIEd developers and marketers, the focus must be on pedagogically meaningful innovation and trust-building transparency. Specifically, developers should prioritize creating features that deliver enduring educational value, such as AI that scaffolds critical thinking rather than merely providing answers. To foster the trust identified as crucial in our model, tools should incorporate features that explain AI-driven decisions (e.g., providing rationale for feedback) and allow users some control over AI behavior. Marketing strategies should evolve beyond promoting initial appeal to communicating the tool’s capacity for “perceived ongoing innovation,” highlighting how it continuously evolves to support long-term learning goals.

For higher education institutions and instructors, strategic efforts should center on building a trustworthy AI ecosystem and designing for positive learning experiences. Institutions are advised to:

- Establish a robust ethical AI framework that includes transparent data usage policies, data anonymization protocols, and procedures for regular audits of algorithmic fairness.

- Integrate AI literacy and ethics into the curriculum, empowering students to engage with AI tools critically and responsibly.

- Design authentic learning tasks that leverage AI productively to generate positive emotions and demonstrate utility. For instance, instructors could assign tasks where students use an AI tool to generate a first draft of an essay and then submit a critical analysis and improvement of that draft, thereby fostering critical engagement over passive acceptance.

- Foster a community of practice where faculty and peer leaders model effective AI use.

- Align technological adoption with broader sustainability goals by prioritizing procurement from AI service providers committed to renewable energy and algorithmic transparency.

For policymakers, the goal is to cultivate a supportive and accountable governance ecosystem. Initiatives should include promoting “Green AI” standards that incentivize computationally efficient models, mandating transparency from cloud providers regarding the carbon footprint of AI services, and funding interdisciplinary research into the long-term ethical and social implications of AI in education.

These recommendations underscore that building trust, a core finding of this study, is both a technical and an ethical imperative. For developers, this means designing for transparency and fairness; for educators, it involves creating a context for critical and positive use; and for institutions, it requires governing AI with clear policies. This multi-stakeholder approach is essential for addressing ethical concerns such as data protection and algorithmic bias, thereby fostering the trust necessary for the sustainable, long-term integration of AIEd Tools.

8. Limitations and Future Research

This study, while providing insights into the determinants of sustainable AIEd adoption, is subject to several limitations that also chart a course for future research.

First, regarding generalizability, the findings are interpreted within the context of our sample—university students from mainland China. While this focus offers valuable insights into a key demographic within a rapidly expanding AIEd market, the cultural and educational specificities of this context mean that the structural relationships identified (e.g., the pronounced role of novelty value) may not be directly generalizable to other cultural or national settings. Future research should therefore prioritize cross-cultural and comparative studies to validate the extended AIDUA model, which would help distinguish universal acceptance mechanisms from culturally contingent factors.

Second, this study inherits the common limitations of a cross-sectional design and self-reported data. The snapshot nature of the data precludes the assessment of how the identified relationships, particularly the development of trust and the evolution of novelty perception, unfold over time. Longitudinal studies are needed to track the dynamics of AIEd acceptance, observing how initial adoption transitions into sustained use or abandonment. Although self-reported data were the appropriate method for capturing perceptual constructs, they are susceptible to social desirability and common method biases. While our statistical check (Harman’s single-factor test) indicated that common method bias was not a critical threat, future work would benefit from triangulating survey data with behavioral metrics, such as actual usage logs and learning analytics, to bridge the intention–behavior gap.

Third, although our extended AIDUA model demonstrated strong explanatory power, it is not exhaustive. The model deliberately focused on extending the core AIDUA framework and did not incorporate other potentially significant variables. Notably, factors such as AI-related anxiety and specific AI ethics concerns (e.g., perceptions of fairness and accountability) were not directly measured. Integrating these constructs in future research could provide a more holistic understanding of the barriers to adoption and further illuminate the formation of trust. Our study, by establishing a validated dual-pathway model, provides a solid foundation upon which to incorporate these nuanced psychological and ethical variables.

Finally, while we examined key demographic variables and found no significant effects, other nuanced individual differences may play a moderating role. Factors such as students’ specific learning habits, disciplinary background (e.g., STEM vs. humanities), and intrinsic learning motivation could potentially moderate the appraisal processes identified in our model. Future studies should include a wider range of these granular individual characteristics to better account for heterogeneity in student populations and uncover potential segment-specific adoption drivers.

Author Contributions

Methodology, X.G.; Validation, W.G.; Writing—original draft, Q.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Ministry of Education Industry-University Cooperation Collaborative Education Project (Grant No. 230907606235344).

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the School of Art, Hebei Agricultural University (Approval Code: 2024010601, Approval Date: 6 January 2024).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study. All participants signed the Participant Consent Form which clearly stated the research purpose, procedures, confidentiality measures, and voluntary nature of participation.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Okulich-Kazarin, V.; Artyukhov, A.; Skowron, L.; Artyukhova, N.; Wołowiec, T. Will AI Become a Threat to Higher Education Sustainability? A Study of Students’ Views. Sustainability 2024, 16, 4596.

- Chiu, T.K.F. Future Research Recommendations for Transforming Higher Education with Generative AI. Comput. Educ. Artif. Intell. 2024, 6, 100197.

- Kamalov, F.; Santandreu Calonge, D.; Gurrib, I. New Era of Artificial Intelligence in Education: Towards a Sustainable Multifaceted Revolution. Sustainability 2023, 15, 12451.

- Al Darayseh, A. Acceptance of Artificial Intelligence in Teaching Science: Science Teachers’ Perspective. Comput. Educ. Artif. Intell. 2023, 4, 100132.

- Habibi, A.; Muhaimin, M.; Danibao, B.K.; Wibowo, Y.G.; Wahyuni, S.; Octavia, A. ChatGPT in Higher Education Learning: Acceptance and Use. Comput. Educ. Artif. Intell. 2023, 5, 100190.

- Gursoy, D.; Chi, O.H.; Lu, L.; Nunkoo, R. Consumers’ Acceptance of Artificially Intelligent (AI) Device Use in Service Delivery. Int. J. Inf. Manag. 2019, 49, 157–169.

- Bhukya, R.; Paul, J. Social Influence Research in Consumer Behavior: What We Learned and What We Need to Learn?—A Hybrid Systematic Literature Review. J. Bus. Res. 2023, 162, 113870.

- Lund, B.D.; Wang, T. Chatting about ChatGPT: How May AI and GPT Impact Academia and Libraries? Library Hi Tech News 2023, 40, 26–29.

- Popenici, S.A.D.; Kerr, S. Exploring the Impact of Artificial Intelligence on Teaching and Learning in Higher Education. Res. Pract. Technol. Enhanc. Learn. 2017, 12, 22.

- Boubker, O. From Chatting to Self-Educating: Can AI Tools Boost Student Learning Outcomes? Expert Syst. Appl. 2024, 238, 121820.

- Kizilcec, R.F. To Advance AI Use in Education, Focus on Understanding Educators. Int. J. Artif. Intell. Educ. 2023, 34, 12–19.

- Tseng, T.H.; Lin, S.; Wang, Y.S.; Liu, H.X. Investigating Teachers’ Adoption of MOOCs: The Perspective of UTAUT2. Interact. Learn. Environ. 2022, 30, 635–650.

- Hannan, E.; Liu, S. AI: New Source of Competitiveness in Higher Education. Compet. Rev. 2021, 33, 269.

- Luan, H.; Geczy, P.; Lai, H.; Gobert, J.; Yang, S.J.H.; Ogata, H.; Tsai, C.C. Challenges and Future Directions of Big Data and Artificial Intelligence in Education. Front. Psychol. 2020, 11, 2748.

- Peres, R.; Schreier, M.; Schweidel, D.A.; Sorescu, A. On ChatGPT and Beyond: How Generative Artificial Intelligence May Affect Research, Teaching, and Practice. Int. J. Res. Mark. 2023, 40, 269–275.

- Nazari, N.; Shabbir, M.S.; Setiawan, R. Application of Artificial Intelligence Powered Digital Writing Assistant in Higher Education: Randomized Controlled Trial. Heliyon 2021, 7, e06949.

- Roy, R.; Naidoo, V. Enhancing Chatbot Effectiveness: The Role of Anthropomorphic Conversational Styles and Time Orientation. J. Bus. Res. 2021, 126, 23–34.

- Kim, H.; Ben-Othman, J.; Mokdad, L. Intelligent Terrestrial and Non-Terrestrial Vehicular Networks with Green AI and Red AI Perspectives. Sensors 2023, 23, 806.

- Li, T.; Luo, J.; Liang, K.; Yi, C.; Ma, L. Synergy of Patent and Open-Source-Driven Sustainable Climate Governance under Green AI: A Case Study of TinyML. Sustainability 2023, 15, 13779.

- Abdelmagid, A.; Jabli, N.; Al-Mohaya, A.; Teleb, A. Integrating Interactive Metaverse Environments and Generative Artificial Intelligence to Promote the Green Digital Economy and e-Entrepreneurship in Higher Education. Sustainability 2025, 17, 5594.

- Hwang, G.J.; Xie, H.; Wah, B.W.; Gašević, D. Vision, Challenges, Roles, and Research Issues of Artificial Intelligence in Education. Comput. Educ. Artif. Intell. 2020, 1, 100001.

- Strzelecki, A. To Use or Not to Use ChatGPT in Higher Education? A Study of Students’ Acceptance and Use of Technology. Interact. Learn. Environ. 2023, 32, 5142–5155.

- Cronan, T.P.; Mullins, J.K.; Douglas, D.E. Further Understanding Factors That Explain Freshman Business Students’ Academic Integrity Intention and Behavior: Plagiarism and Sharing Homework. J. Bus. Ethics 2018, 147, 197–220.

- Lu, L.; Cai, R.; Gursoy, D. Developing and Validating a Service Robot Integration Willingness Scale. Int. J. Hosp. Manag. 2019, 80, 36–51.

- Niu, B.; Mvondo, G.F.N. I Am ChatGPT, the Ultimate AI Chatbot! Investigating the Determinants of Users’ Loyalty and Ethical Usage Concerns of ChatGPT. J. Retail. Consum. Serv. 2024, 76, 103562.

- Menon, D.; Shilpa, K. “Chatting with ChatGPT”: Analyzing the Factors Influencing Users’ Intention to Use the Open AI’s ChatGPT Using the UTAUT Model. Heliyon 2023, 9, e13478.

- Duong, C.D.; Vu, T.N.; Ngo, T.V.N. Applying a Modified Technology Acceptance Model to Explain Higher Education Students’ Usage of ChatGPT: A Serial Multiple Mediation Model with Knowledge Sharing as a Moderator. Int. J. Manag. Educ. 2023, 21, 100883.

- Widjaja, H.A.E.; Santoso, S.W.; Petrus, S. The Enhancement of Learning Management System in Teaching Learning Process with the UTAUT2 and Trust Model. In Proceedings of the 2019 International Conference on Information Management and Technology (ICIMTech), Jakarta/Bali, Indonesia, 19–20 August 2019; Volume 1, pp. 309–313.

- Ashraf, M.A.; Shabnam, N.; Tsegay, S.M.; Huang, G. Acceptance of Smart Technologies in Blended Learning: Perspectives of Chinese Medical Students. Int. J. Environ. Res. Public Health 2023, 20, 2756.

- Mei, H.; Bodog, S.A.; Badulescu, D. Artificial Intelligence Adoption in Sustainable Banking Services: The Critical Role of Technological Literacy. Sustainability 2024, 16, 8934.

- Wang, C.; Yin, H. How Do Chinese Undergraduates Harness the Potential of Appraisal and Emotions in Generative AI-Powered Learning? A Multigroup Analysis Based on Appraisal Theory. Comput. Educ. 2025, 228, 105250.

- Ma, X.; Huo, Y. Are Users Willing to Embrace ChatGPT? Exploring the Factors on the Acceptance of Chatbots from the Perspective of AIDUA Framework. Technol. Soc. 2023, 75, 102362.

- Venkatesh, V.; Thong, J.Y.L.; Xu, X. Customer Acceptance and Use of Information Technology: Extending the Unified Theory of Acceptance and Use of Technology. MIS Q. 2012, 36, 157–178.

- Cheng, X.; Zhang, X.; Cohen, J.; Mou, J. Human vs. AI: Understanding the Impact of Anthropomorphism on Customer Response to Chatbots from the Perspective of Trust and Relationship Norms. Inf. Process. Manag. 2022, 59, 102940.

- Oldeweme, A.; Martins, J.; Westmattelmann, D.; Schewe, G. The Role of Transparency, Trust, and Social Influence on Uncertainty Reduction in Times of Pandemics: Empirical Study on the Adoption of COVID-19 Tracing Apps. J. Med. Internet Res. 2021, 23, e25893.

- Venkatesh, V.; Morris, M.G.; Davis, G.B.; Davis, F.D. User Acceptance of Information Technology: Toward a Unified View. MIS Q. 2003, 27, 425–478.

- Roy, P.; Ramaprasad, B.S.; Chakraborty, M.; Prabhu, N.; Rao, S. Customer Acceptance of Use of Artificial Intelligence in Hospitality Services: An Indian Hospitality Sector Perspective. Glob. Bus. Rev. 2020, 21, 1205–1222.

- Wong, I.K.A.; Zhang, T.; Lin, Z.; Peng, Q. Hotel AI Service: Are Employees Still Needed? J. Hosp. Tour. Manag. 2023, 55, 416–424.

- Chi, O.H.; Gursoy, D.; Chi, C.G. Tourists’ Attitudes toward the Use of Artificially Intelligent (AI) Devices in Tourism Service Delivery: Moderating Role of Service Value Seeking. J. Travel Res. 2022, 61, 170–185.

- Chi, O.H.; Gursoy, D.; Nunkoo, R. Customers’ Acceptance of Artificially Intelligent Service Robots: The Influence of Trust and Culture. Int. J. Inf. Manag. 2023, 70, 102623.

- Yoon, N.; Lee, H.K. AI Recommendation Service Acceptance: Assessing the Effects of Perceived Empathy and Need for Cognition. J. Theor. Appl. Electron. Commer. Res. 2021, 16, 1912–1928.

- Kim, H.C.; McGill, A.L. Minions for the Rich? Financial Status Changes How Consumers See Products with Anthropomorphic Features. J. Consum. Res. 2018, 45, 429–450.

- Blut, M.; Wang, C.; Wünderlich, N.V.; Brock, C. Understanding Anthropomorphism in Service Provision: A Meta-Analysis of Physical Robots, Chatbots, and Other AI. J. Acad. Mark. Sci. 2021, 49, 632–658. [Google Scholar] [CrossRef]

- Tung, V.W.S.; Au, N. Exploring Customer Experiences with Robotics in Hospitality. Int. J. Contemp. Hosp. Manag. 2018, 30, 2680–2697. [Google Scholar] [CrossRef]

- Xu, K. First Encounter with Robot Alpha: How Individual Differences Interact with Vocal and Kinetic Cues in Users’ Social Responses. New Media Soc. 2019, 21, 2522–2547. [Google Scholar] [CrossRef]

- Pande, S.; Gupta, K.P. Indian Customers’ Acceptance of Service Robots in Restaurant Services. Behav. Inf. Technol. 2022, 41, 2315–2330. [Google Scholar] [CrossRef]

- Adapa, S.; Fazal-e Hasan, S.M.; Makam, S.B.; Azeem, M.M.; Mortimer, G. Examining the Antecedents and Consequences of Perceived Shopping Value through Smart Retail Technology. J. Retail. Consum. Serv. 2020, 52, 101901. [Google Scholar] [CrossRef]

- Wells, J.D.; Campbell, D.E.; Valacich, J.S.; Featherman, M. The Effect of Perceived Novelty on the Adoption of Information Technology Innovations: A Risk/Reward Perspective. Decis. Sci. 2010, 41, 813–843. [Google Scholar] [CrossRef]

- Croes, E.A.J.; Antheunis, M.L. Can We Be Friends with Mitsuku? A Longitudinal Study on the Process of Relationship Formation between Humans and a Social Chatbot. J. Soc. Pers. Relationsh. 2021, 38, 279–300. [Google Scholar] [CrossRef]

- Xie, L.; Liu, X.; Li, D. The Mechanism of Value Cocreation in Robotic Services: Customer Inspiration from Robotic Service Novelty. J. Hosp. Mark. Manag. 2022, 31, 962–983. [Google Scholar] [CrossRef]

- Della Corte, V.; Sepe, F.; Gursoy, D.; Prisco, A. Role of Trust in Customer Attitude and Behaviour Formation towards Social Service Robots. Int. J. Hosp. Manag. 2023, 114, 103587. [Google Scholar] [CrossRef]

- Lin, H.; Chi, O.H.; Gursoy, D. Antecedents of Customers’ Acceptance of Artificially Intelligent Robotic Device Use in Hospitality Services. J. Hosp. Mark. Manag. 2020, 29, 530–549.

- Hair, J.F.; Black, W.C.; Babin, B.J.; Anderson, R.E.; Tatham, R.L. Multivariate Data Analysis, 8th ed.; Prentice Hall: Hoboken, NJ, USA, 2019.

- Hair, J.F.; Hult, G.T.M.; Ringle, C.M.; Sarstedt, M. A Primer on Partial Least Squares Structural Equation Modeling (PLS-SEM), 2nd ed.; Sage Publications: Thousand Oaks, CA, USA, 2016.

- Li, D.-H.; Li, H.-Y.; Wei, L.; Guo, J.-J.; Li, E.-Z. Application of Flipped Classroom Based on the Rain Classroom in the Teaching of Computer-Aided Landscape Design. Comput. Appl. Eng. Educ. 2020, 28, 357–366.

- Cai, J.; Wu, J.; Zhang, H.; Cai, Y. Research on the Influence Mechanism of Fashion Brands’ Crossover Alliance on Consumers’ Online Brand Engagement: The Mediating Effect of Hedonic Perception and Novelty Perception. Sustainability 2023, 15, 3953.

- Castelli, M.; Manzoni, L. Generative Models in Artificial Intelligence and Their Applications. Appl. Sci. 2022, 12, 4127.

- Cukurova, M.; Luckin, R.; Kent, C. Impact of an Artificial Intelligence Research Frame on the Perceived Credibility of Educational Research Evidence. Int. J. Artif. Intell. Educ. 2020, 30, 205–235.

- VanDerSchaaf, H.P.; Daim, T.U.; Basoglu, N.A. Factors Influencing Student Information Technology Adoption. IEEE Trans. Eng. Manag. 2021, 70, 631–643.

- Karnouskos, S. Self-Driving Car Acceptance and the Role of Ethics. IEEE Trans. Eng. Manag. 2018, 67, 252–265.

- Kim, W.B.; Hur, H.J. What Makes People Feel Empathy for AI Chatbots? Assessing the Role of Competence and Warmth. Int. J. Hum.-Comput. Interact. 2023, 39, 833–846.

- Tzafilkou, K.; Perifanou, M.; Economides, A.A. Negative Emotions, Cognitive Load, Acceptance, and Self-Perceived Learning Outcome in Emergency Remote Education during COVID-19. Educ. Inf. Technol. 2021, 26, 7497–7521.

- Sarkar, S.; Chauhan, S.; Khare, A. A Meta-Analysis of Antecedents and Consequences of Trust in Mobile Commerce. Int. J. Inf. Manag. 2020, 50, 286–301.

- Van Pinxteren, M.M.E.; Wetzels, R.W.H.; Rüger, J.; Pluymaekers, M.; Wetzels, M. Trust in Humanoid Robots: Implications for Services Marketing. J. Serv. Mark. 2019, 33, 461–473.

- Ni, A.; Cheung, A. Understanding Secondary Students’ Continuance Intention to Adopt AI-Powered Intelligent Tutoring System for English Learning. Educ. Inf. Technol. 2023, 28, 3191–3216.

- Hair, J.F.; Sarstedt, M.; Hopkins, L.; Kuppelwieser, V.G. Partial Least Squares Structural Equation Modeling (PLS-SEM): An Emerging Tool in Business Research. Eur. Bus. Rev. 2014, 26, 106–121.

- Liu, H.; Yan, M. College Students’ Use of Smart Teaching Tools in China: Extending Unified Theory of Acceptance and Use of Technology 2 with Learning Value. In Proceedings of the 2020 International Conference on Modern Education and Information Management (ICMEIM), Dalian, China, 25–27 September 2020; pp. 842–847.

- Qiao, X. Integration Model for Multimedia Education Resource Based on Internet of Things. Int. J. Contin. Eng. Educ. Life Long Learn. 2021, 31, 17–35.

- Rekha, I.S.; Shetty, J.; Basri, S. Students’ Continuance Intention to Use MOOCs: Empirical Evidence from India. Educ. Inf. Technol. 2023, 28, 4265–4286.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).