Abstract

Big data analytics has become a cornerstone of modern industries, driving advancements in business intelligence, competitive intelligence, and data-driven decision-making. This study applies Neural Topic Modeling (NTM) using the BERTopic framework and N-gram-based textual content analysis to examine job postings related to big data analytics in real-world contexts. A structured analytical process was conducted to derive meaningful insights into workforce trends and skill demands in the big data analytics domain. First, expertise roles and tasks were identified by analyzing job titles and responsibilities. Next, key competencies were categorized into analytical, technical, developer, and soft skills and mapped to corresponding roles. Workforce characteristics such as job types, education levels, and experience requirements were examined to understand hiring patterns. In addition, essential tasks, tools, and frameworks in big data analytics were identified, providing insights into critical technical proficiencies. The findings show that big data analytics requires expertise in data engineering, machine learning, cloud computing, and AI-driven automation. They also emphasize the importance of continuous learning and skill development to sustain a future-ready workforce. By connecting academia and industry, this study provides valuable implications for educators, policymakers, and corporate leaders seeking to strengthen workforce sustainability in the era of big data analytics.

1. Introduction

In today’s data-driven world, the ability to collect, analyze, and extract meaningful insights from vast amounts of data has become a critical competency for organizations across various industries. The field of big data analytics has witnessed remarkable growth, driven by the increasing availability of digital information, advancements in computational power, and the rapid evolution of artificial intelligence and machine learning techniques [1,2]. As organizations strive to remain competitive, the demand for professionals with expertise in big data technologies, analytics, and decision-making continues to rise [3,4,5]. In this context, big data analytics plays a crucial role in business intelligence and competitive intelligence, enabling organizations to make data-driven strategic decisions [6,7]. By leveraging advanced analytics, companies can optimize operations, enhance customer insights, and gain a competitive edge in the market [8,9,10]. The integration of big data with business intelligence tools allows businesses to uncover patterns, trends, and correlations that drive innovation and efficiency [11,12,13]. The growing use of machine learning, deep learning, and generative AI techniques across predictive analytics applications further underscores the sophisticated competencies now expected in big data analytics roles [11,14,15].

A sustainable workforce in the era of big data analytics refers to a dynamic and future-oriented labor force that continuously adapts to technological, organizational, and societal transformations [16,17]. The concept extends beyond traditional employability and emphasizes the long-term capacity of individuals and organizations to remain resilient amid automation, digitalization, and evolving data-driven processes [18]. It is closely aligned with the broader principles of sustainability and the ESG (Environmental, Social, and Governance) framework, which advocate inclusive growth, ethical technological practices, and equitable access to opportunities [16,19,20]. Within this perspective, workforce sustainability highlights the importance of lifelong learning, reskilling, and upskilling to ensure that professionals remain competent and responsive to rapidly changing digital ecosystems [16,17,21]. Cultivating such a sustainable workforce not only strengthens innovation and productivity but also supports the transition toward more socially responsible, environmentally conscious, and economically resilient data ecosystems [17,18].

Big data analytics encompasses a broad range of disciplines, including data engineering, data science, machine learning, cloud computing, and business intelligence [1,7,9]. The complexity of these domains necessitates a diverse set of skills and competencies, ranging from technical proficiency in programming languages and data management tools to analytical thinking and problem-solving capabilities [9,22,23,24]. However, identifying the most in-demand skills and competencies within this rapidly evolving field remains a challenge for both job seekers and employers [25,26]. Moreover, the increasing integration of automation, artificial intelligence, and real-time analytics into business operations highlights the necessity for professionals who can adapt to these dynamic changes [27,28,29].

1.1. Research Background and Related Work

In recent years, the rapid expansion of big data analytics in various industries has led to an increasing number of studies focused on understanding workforce trends and skill requirements in this domain [9,12,22,23,25,26,28,29,30,31,32,33]. Many domain-specific studies have explored job market analytics, workforce competency mapping, and data-driven hiring strategies to identify evolving demands in data-centric roles [9,26,32]. Existing research has largely focused on leveraging various topic modeling approaches, such as Latent Dirichlet Allocation (LDA), Non-Negative Matrix Factorization (NMF), and BERTopic, alongside text mining and machine learning techniques [12,23,34,35,36]. These methods have been employed to analyze job postings, identify key competencies, and track emerging trends in workforce requirements [1,5,7,9,12]. These studies have provided valuable insights into the required skills for data professionals, industry-driven curriculum development, and the alignment of workforce training with market needs [7]. While existing studies have significantly contributed to understanding workforce trends and competency mapping in big data analytics, there remain critical gaps that require further investigation [12,13,27,28,32,33]. To cultivate a well-rounded and agile understanding of big data workforce trends, research must expand to include multi-sector analyses, real-time job market fluctuations, and continuously evolving skill taxonomies [3,12,24,33]. Given the dynamic nature of this field, changing skill requirements must be analyzed at regular intervals. To ensure continuous adaptation, periodic studies should be conducted to track and evaluate evolving competencies over time [1,6,7,37].

1.2. Motivation and Contribution of the Study

Although substantial research has been conducted on the skills and competencies required in the field of big data analytics, existing studies exhibit certain limitations regarding datasets, analysis methodologies, and scope. These studies typically focus on specific competencies or utilize limited datasets, occasionally failing to comprehensively present and understand the required skills and tools in big data analytics. Furthermore, detailed analyses correlating the skills and professional roles demanded in big data analytics job listings have not been adequately addressed. Employing text mining and neural topic modeling-based machine learning techniques for extensive analysis of online job postings, which significantly influence the labor market, holds considerable potential in addressing these limitations. Such methodologies can provide clearer and more comprehensive insights into the skills and areas of expertise sought in the industry.

To bridge this knowledge gap, this study aims to identify the competencies, skills, and expert roles required in big data analytics and comprehensively highlight significant industry trends. To achieve this goal, content from job listings on leading job market platforms was analyzed using neural topic models and text mining. Ultimately, the application of neural topic modeling in analyzing big data analytics job contents offers valuable contributions to better understand industry dynamics and inform workforce development. The contributions of this study are as follows:

- Identification of key expertise roles and task definitions in big data analytics.

- Determination of essential skills and competencies required for big data analytics.

- Development of a skill taxonomy based on technical background.

- Taxonomic distribution and correlation analysis of skills according to expertise roles.

- Analysis of job types and requirements for education and experience levels.

- Highlighting key tasks, techniques, and tools utilized in big data analytics workflows.

2. Materials and Methods

2.1. Data Collection

Online web platforms are leading data sources for scientific research due to their vast and diverse information. For this study, we selected Indeed.com [38], a widely recognized job platform with powerful search and filtering capabilities, to source relevant data. With over 250 million monthly visitors, Indeed.com is the most frequented job site globally [38]. However, since most listings on the platform are in English and primarily originate from North America and Western Europe, the dataset may not fully represent all countries and industries. Therefore, the findings provide a general overview of global workforce trends but may not entirely capture regional variations.

In this analysis, job listings with the phrases “big data analytics” or “big data analysis” in their titles were classified as big data analytics positions. We retrieved a total of 3156 job postings from Indeed.com, covering the period from 15 July 2024 to December 2024, via the platform’s API. The postings were stored in a structured database, which formed the foundation for the experimental dataset. For each job entry, information was collected in several sections: job title, job description, company name, location, job type, required qualifications, salary (if available), and job posting date [23,26].

2.2. Data Preprocessing

Once the dataset was established, a series of preprocessing techniques were applied to the raw text data to prepare it for analysis [31,39,40]. Preprocessing is a crucial step in working with unstructured data, particularly for web-based textual analysis. Initially, the text was tokenized into individual words, converting the content into analyzable elements. All text was converted to lowercase to standardize it, and extraneous punctuation, hyperlinks, HTML tags, and special characters were removed. English stop words were filtered out to reduce unnecessary noise. Finally, lemmatization was applied to the words, converting them to their base forms [11,41]. To enhance the quality of the corpus, rare terms occurring fewer than five times were removed, and overly frequent generic terms with little semantic contribution were pruned. The preprocessed corpus was then transformed into a document–term matrix (DTM), a sparse numerical representation in which documents are expressed as rows, terms as columns, and cell values denote weighted term frequencies, thereby providing the structured input required for subsequent topic modeling and clustering algorithms [36,42,43,44].
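The preprocessing steps described above can be illustrated with a minimal, standard-library sketch. It is not the study's pipeline: the stop-word list is a tiny placeholder, lemmatization and the pruning of overly frequent terms are omitted, the cell values are raw counts rather than weighted frequencies, and the function names `preprocess` and `build_dtm` are hypothetical.

```python
import re
from collections import Counter

# Tiny illustrative stop-word list (assumption); a real run would use a full English list.
STOP_WORDS = {"a", "an", "and", "the", "of", "in", "to", "for", "with", "on", "is", "are"}

def preprocess(text):
    """Lowercase, strip HTML tags and hyperlinks, tokenize, and drop stop words."""
    text = text.lower()
    text = re.sub(r"<[^>]+>", " ", text)        # remove HTML tags
    text = re.sub(r"https?://\S+", " ", text)   # remove hyperlinks
    tokens = re.findall(r"[a-z][a-z0-9+#]*", text)  # keeps tokens such as "c++" or "c#"
    return [t for t in tokens if t not in STOP_WORDS]

def build_dtm(docs, min_count=5):
    """Return (vocabulary, sparse rows); terms seen fewer than min_count times are dropped."""
    tokenized = [preprocess(d) for d in docs]
    totals = Counter(t for doc in tokenized for t in doc)
    vocab = sorted(t for t, n in totals.items() if n >= min_count)
    index = {t: j for j, t in enumerate(vocab)}
    rows = []
    for doc in tokenized:
        counts = Counter(t for t in doc if t in index)
        rows.append({index[t]: n for t, n in counts.items()})  # column index -> count
    return vocab, rows
```

Each row is stored as a dictionary of non-zero cells, which mirrors the sparse document–term representation mentioned in the text.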

2.3. Expertise Roles and Job Title Classification

The job postings were analyzed using an N-gram approach, where recurring phrase patterns in job titles were identified, and the most frequent role-related terms were extracted. Each job title was tokenized into unigrams, bigrams, and trigrams, and the most representative role expressions were determined. Based on this analysis, four dominant expertise roles consistently emerged in job postings: “Analyst”, “Engineer”, “Developer”, and “Architect”.

In the classification procedure, a straightforward rule was applied: whenever one of these role terms appeared directly in the job title (e.g., Big Data Analyst, Senior Big Data Engineer, Big Data Developer, Big Data Architect), the posting was assigned to the corresponding role, regardless of the presence of other terms. This approach standardized the classification process, reduced ambiguity, and ensured consistency in role assignment. In cases where more than one role indicator appeared in the same title, the dominant and more frequently occurring role term was prioritized.

The percentage share of each role was calculated by dividing the number of postings assigned to that role by the total number of postings in the dataset. This allowed us to quantitatively demonstrate which roles are more in demand within the labor market. Furthermore, roles with a frequency below 1% (such as Architectural Consultant, Data Strategist, Data Manager) were either merged into the closest major category or excluded from the analysis. This approach prevented data sparsity and maintained a more interpretable classification structure, thereby providing a robust representation of expertise role distributions derived from job titles and establishing a solid framework for subsequent analyses of skills, competencies, and tasks.
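A minimal sketch of the title-based role assignment and percentage calculation might look as follows. It assumes that "the dominant and more frequently occurring role term" means the role term that is more frequent across all titles; that reading, along with the names `assign_role` and `role_shares`, is an assumption rather than a detail confirmed by the text. Merging of sub-1% roles is not shown.

```python
import re
from collections import Counter

ROLE_TERMS = ("analyst", "engineer", "developer", "architect")

def _words(title):
    """Lowercased alphabetic tokens of a job title."""
    return re.findall(r"[a-z]+", title.lower())

def assign_role(title, role_priority):
    """Return the first role in role_priority that appears in the title, else None."""
    words = _words(title)
    for role in role_priority:
        if role in words:
            return role
    return None

def role_shares(titles):
    """Percentage of postings per role, relative to all postings."""
    # Corpus-wide frequency of each role term decides priority when a title
    # contains several role terms (assumed interpretation of "dominant").
    term_counts = Counter(r for t in titles for r in ROLE_TERMS if r in _words(t))
    priority = sorted(ROLE_TERMS, key=lambda r: -term_counts[r])
    roles = [assign_role(t, priority) for t in titles]
    assigned = Counter(r for r in roles if r is not None)
    return {r: 100.0 * n / len(titles) for r, n in assigned.items()}
```

For example, a title containing both "Engineer" and "Developer" is assigned to whichever of the two terms is more common in the full set of titles.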

2.4. Skill and Competency Extraction Using Neural Topic Modeling

This study employs N-gram-based textual content analysis and Neural Topic Modeling (NTM) to extract meaningful themes (skills) from job postings related to big data analytics [31,35,45]. By using NTM, the study aims to provide a deeper understanding of the major themes in the big data analytics job market, highlighting the skills, qualifications, and trends most commonly sought by employers [40,46]. NTM, a sophisticated method leveraging neural networks, allows for the extraction of thematic structures from large text datasets, offering an advanced alternative to traditional probabilistic models like Latent Dirichlet Allocation (LDA) [34,45,47,48,49]. To further justify the methodological choice, BERTopic was preferred over alternative topic-modeling approaches such as LDA, Non-Negative Matrix Factorization (NMF), and Top2Vec. Traditional models like LDA and NMF rely on the bag-of-words assumption, which often limits their performance on short and unstructured texts such as job postings. In contrast, BERTopic integrates transformer-based contextual embeddings, UMAP for dimensionality reduction, and HDBSCAN for density-based clustering, resulting in semantically coherent and context-aware topics even in small text segments [34,45,47,48,50]. Compared to Top2Vec, BERTopic offers more flexible control over topic granularity and improved interpretability through its use of c-TF-IDF term weighting. These advantages make BERTopic particularly suitable for labor-market data, where short textual descriptions require methods capable of capturing subtle semantic distinctions [34,45].

BERTopic benefits from deep learning’s ability to model complex, nonlinear relationships within the data, enabling it to uncover subtle patterns that other methods may overlook [35,47]. This method automatically extracts key features from the data, eliminating the need for manual feature engineering and allowing the model to learn directly from the text [34,40,41]. The use of word embedding techniques further improves the model’s ability to capture semantic relationships, enhancing its capacity to accurately represent the meaning of the text. By learning latent representations of the text, BERTopic generates more generalized and meaningful data models, which are especially useful for analyzing large, unstructured datasets [46,47,50,51].

For this study, the BERTopic approach was employed, combining BERT (Bidirectional Encoder Representations from Transformers) for semantic embeddings, Uniform Manifold Approximation and Projection (UMAP) for dimensionality reduction, Hierarchical Density-Based Spatial Clustering of Applications with Noise (HDBSCAN) for clustering, and c-TF-IDF for term weighting [41,45,51]. These components work together to convert the text into high-dimensional vectors, capture contextual relationships between words, and identify the most relevant topics and keywords [34,45].
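For readers unfamiliar with the term weighting named above, the class-based TF-IDF score is commonly written in the BERTopic documentation as follows. This is the general definition, not a formula quoted from this study; the symbols $t$, $c$, $A$, and $f_t$ are introduced here for illustration.

```latex
W_{t,c} = \mathrm{tf}_{t,c} \cdot \log\left(1 + \frac{A}{f_t}\right)
```

Here $\mathrm{tf}_{t,c}$ is the frequency of term $t$ in the concatenated documents of topic (class) $c$, $f_t$ is the frequency of $t$ across all topics, and $A$ is the average number of words per topic. Terms that are frequent within one topic but rare overall receive the highest weights.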

In the BERTopic implementation, the number of topics and associated word counts were determined by applying specific threshold values within the NTM, which helped identify the optimal number of topics [34,35,52]. These thresholds ensured that the resulting topics were both statistically valid and semantically interpretable [34,45,53]. As a result of parameter optimization, 26 topics were identified as the optimal solution, meeting the criteria of “ideal topic representation” from a statistical perspective while also providing valuable insights into trends in the big data analytics job market.

For each topic, the top 10 most representative keywords were identified using BERTopic. These keywords were arranged in descending order of frequency, where the first keyword represented the term most strongly associated with the topic, and the last keyword represented a less frequent but still distinctive term. This ordering enhanced the transparency of the topic clusters and allowed each skill domain to be interpreted more meaningfully. Topic labels were generated directly from these representative keywords, ensuring that each label accurately reflected the semantic content of its cluster.

The BERTopic implementation used the all-MiniLM-L6-v2 sentence transformer model for embedding generation, as it provides an optimal balance between performance and computational efficiency for short recruitment texts. Dimensionality reduction was conducted using UMAP with the parameters n_neighbors = 15, n_components = 2, min_dist = 0.0, and metric = ‘cosine’. Clustering was performed using HDBSCAN with min_cluster_size = 20, min_samples = 10, and cluster_selection_epsilon = 0.05. Topic reduction was handled automatically with nr_topics = ‘auto’, producing 26 coherent topics after optimization. The topic representation step employed c-TF-IDF weighting with a term frequency smoothing parameter of 0.5, and Maximal Marginal Relevance (MMR) with a diversity parameter of 0.3 to ensure that the selected topic keywords were both highly relevant and semantically diverse [34,35,45,54].
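The reported configuration could be assembled roughly as in the sketch below. The hyperparameter values are taken from the text; everything else is an assumption. In particular, passing `random_state=42` to UMAP is one plausible way to apply the fixed seed, the helper name `build_topic_model` is hypothetical, and the "term frequency smoothing parameter of 0.5" is left out because it is unclear which library argument it corresponds to.

```python
# Hyperparameters as reported in the text; random_state is an assumed use of the fixed seed.
UMAP_PARAMS = dict(n_neighbors=15, n_components=2, min_dist=0.0,
                   metric="cosine", random_state=42)
HDBSCAN_PARAMS = dict(min_cluster_size=20, min_samples=10,
                      cluster_selection_epsilon=0.05)

def build_topic_model():
    """Assemble a BERTopic instance from the parameters above (illustrative sketch)."""
    # Imports are deferred so the parameter dictionaries can be inspected
    # without the heavy dependencies installed.
    from bertopic import BERTopic
    from bertopic.representation import MaximalMarginalRelevance
    from bertopic.vectorizers import ClassTfidfTransformer
    from hdbscan import HDBSCAN
    from sentence_transformers import SentenceTransformer
    from umap import UMAP

    return BERTopic(
        embedding_model=SentenceTransformer("all-MiniLM-L6-v2"),
        umap_model=UMAP(**UMAP_PARAMS),
        hdbscan_model=HDBSCAN(**HDBSCAN_PARAMS),
        ctfidf_model=ClassTfidfTransformer(),  # default c-TF-IDF; smoothing setting not reproduced
        representation_model=MaximalMarginalRelevance(diversity=0.3),
        nr_topics="auto",   # automatic topic reduction
        top_n_words=10,     # ten keywords per topic
    )
```

A typical call would then be `topics, probs = build_topic_model().fit_transform(docs)`, where `docs` is the list of preprocessed posting texts.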

Topic quality was quantitatively evaluated using c-TF-IDF coherence, intra-topic cosine similarity, and topic diversity metrics. The model achieved a mean coherence score of 0.71 and a topic diversity of 0.82, indicating strong internal consistency and minimal redundancy between clusters. For human-in-the-loop validation, two independent reviewers assessed the semantic accuracy of the automatically generated topic labels and their correspondence to the derived skills taxonomy, reaching an inter-annotator agreement of 0.86 (Cohen’s Kappa). Additionally, a compact baseline comparison using Latent Dirichlet Allocation (LDA) and Non-Negative Matrix Factorization (NMF) confirmed that BERTopic achieved higher coherence and better interpretability for short job-posting texts [21,34,45,54].
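Two of the quantities mentioned above can be sketched with the standard library. The text does not define topic diversity, so the sketch assumes the common definition (share of unique words among all topic keywords). Cohen's Kappa follows its textbook formula. The function names are hypothetical.

```python
from collections import Counter

def topic_diversity(topics):
    """Share of unique words among the top words of all topics (1.0 means no overlap)."""
    all_words = [w for topic in topics for w in topic]
    return len(set(all_words)) / len(all_words)

def cohens_kappa(labels_a, labels_b):
    """Cohen's Kappa for two raters labelling the same items."""
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    # Agreement expected by chance, from each rater's label distribution.
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    return (observed - expected) / (1 - expected)  # undefined if expected == 1
```

With this definition, a diversity of 0.82 would mean that 82% of the keyword slots across topics are filled by distinct words.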

To ensure the robustness and reliability of the BERTopic results, a systematic sensitivity and stability analysis was performed. The stability evaluation involved perturbing key model hyperparameters within empirically reasonable ranges: min_cluster_size ∈ {15, 20, 25}, n_neighbors ∈ {12, 15, 18}, and n_components ∈ {2, 3, 5}. For each configuration, the resulting topic–skill distributions were recomputed, and rank-order consistency was measured using the Spearman rank correlation coefficient (ρ) between baseline and perturbed models. The mean ρ value exceeded 0.93, indicating high ranking stability across all configurations [21,34,45,54].
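The rank-consistency check can be illustrated as follows. The sketch computes the Spearman coefficient as the Pearson correlation of average ranks and enumerates the hyperparameter values listed above. Whether the study evaluated the full Cartesian grid or varied one parameter at a time is not stated, so the 27-configuration grid is an assumption, as are the function names.

```python
from itertools import product

def average_ranks(values):
    """Rank values (1 = smallest), giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1          # average of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def spearman_rho(x, y):
    """Spearman rank correlation: Pearson correlation of the rank vectors."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    return cov / (var_x * var_y) ** 0.5

# (min_cluster_size, n_neighbors, n_components) combinations; full grid is an assumption.
GRID = list(product([15, 20, 25], [12, 15, 18], [2, 3, 5]))
```

In use, `x` would hold the baseline skill percentages and `y` the percentages of the same skills under a perturbed configuration.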

To further quantify sampling uncertainty, a nonparametric bootstrap procedure with 1000 replicates was applied to the job-posting corpus. For each bootstrap sample, BERTopic was re-fitted, and the frequency of top-10 skills per topic was re-estimated. The resulting 95% bootstrap confidence intervals for skill frequency proportions were narrow (average ±2.1%), suggesting low variance across resamples. In addition, the topic coherence (c-TF-IDF) and topic diversity metrics were recomputed for each bootstrap iteration, yielding stable mean values of 0.71 ± 0.02 and 0.82 ± 0.01, respectively. These analyses confirm that the BERTopic model exhibits strong stability and robustness to moderate hyperparameter perturbations and corpus resampling noise [21,34,45,54].
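The resampling logic can be sketched for a single skill proportion. This simplified version resamples postings and recomputes the share of postings flagged for a skill. Unlike the procedure described above, it does not re-fit BERTopic on each replicate. The percentile interval and the name `bootstrap_ci` are assumptions.

```python
import random

def bootstrap_ci(flags, n_boot=1000, alpha=0.05, seed=42):
    """Percentile bootstrap CI for the share of postings where flags[i] is True."""
    rng = random.Random(seed)      # fixed seed for repeatable resamples
    n = len(flags)
    shares = []
    for _ in range(n_boot):
        # Resample n postings with replacement and recompute the proportion.
        sample = [flags[rng.randrange(n)] for _ in range(n)]
        shares.append(sum(sample) / n)
    shares.sort()
    lower = shares[int((alpha / 2) * n_boot)]
    upper = shares[int((1 - alpha / 2) * n_boot) - 1]
    return lower, upper
```

The half-width of the returned interval is the quantity that the text summarizes as an average of ±2.1%.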

2.5. Taxonomic Classification of Skills

The skills obtained through BERTopic were organized into a higher-level taxonomy to facilitate interpretation and provide a systematic structure. In this process, all skills extracted from job postings were first subjected to a preliminary classification by considering semantic similarity and contextual usage. Subsequently, frequency analysis, keyword clustering, and literature comparison were applied together. In the first step, skill terms identified within the 26 topics generated by BERTopic were examined, and recurring concepts in similar contexts were grouped [34]. In the second step, the usage frequencies of these terms were evaluated; low-frequency but distinctive concepts (e.g., project management, teamwork) were specifically noted. In the third step, these preliminary groups were compared with classifications in the literature [21,28,32,33] and adjusted to form a coherent higher-level category structure.

As a result, the skills were grouped into four main categories: analytical skills, technical skills, developer skills, and soft skills. Analytical skills cover competencies related to data analysis, interpretation, and problem solving; technical skills include knowledge of infrastructures, platforms, and methods required for big data analytics; developer skills emphasize software engineering and programming processes; while soft skills consist of interpersonal competencies such as communication, teamwork, and project management. This distinction enabled a systematic analysis of the skills demanded in the big data analytics labor market across analytical, technical, developer, and soft dimensions.

2.6. Analysis of the Taxonomic Distribution of Skills Across Expertise Roles

To examine the distribution of skills across the four expertise roles (Analyst, Engineer, Developer, and Architect), the role classification was cross-referenced with the skill taxonomy. This analysis aimed to identify which skills are prioritized for each role. First, the pre-assigned roles of each job posting were matched with the percentage values of the skills extracted from the job descriptions. In this way, a role–skill distribution was obtained for each posting. Subsequently, the overall proportional representation of skills across all postings was calculated in percentage terms for each role. For each role, the ten most frequent skills were then selected and ranked in descending order. The resulting ranking table indicates the relative position of each of the top ten skills, allowing systematic comparison of shared and distinct skills among roles [11,21,27,28,30].

The identified skills were then mapped to four higher-level categories: analytical, technical, developer, and soft skills. Within each role, the percentage share of these categories was calculated by dividing the number of skills in a given role–category combination by the total number of skills assigned to that role. This allowed for a quantitative assessment of the extent to which each role emphasizes analytical, technical, developer, or soft skills. Finally, the results were visualized to present the comparative distribution of skills across roles. This method enabled a structured analysis of role–skill relationships in the big data analytics job market.
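The role–category shares described above reduce to a grouped count followed by a within-role normalization. In the sketch below, the skill-to-category mapping is purely illustrative (only "Teamwork" as a soft skill is stated in the text) and `category_shares` is a hypothetical name.

```python
from collections import Counter, defaultdict

def category_shares(role_skill_pairs, skill_category):
    """For each role, percentage of its assigned skills that fall in each category."""
    counts = defaultdict(Counter)
    for role, skill in role_skill_pairs:
        counts[role][skill_category[skill]] += 1
    return {
        role: {cat: 100.0 * n / sum(c.values()) for cat, n in c.items()}
        for role, c in counts.items()
    }

# Illustrative mapping only; the study's actual assignments are not reproduced here.
EXAMPLE_CATEGORIES = {
    "Cloud Services": "technical",
    "ETL Processes": "technical",
    "Spark Development": "developer",
    "Teamwork": "soft",
}
```

Because the denominator is the number of skills assigned to the role, the category percentages within each role sum to 100.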

2.7. Analysis of Education, Experience, and Job Type Requirements

N-gram-based text mining was applied to the job posting texts for this analysis. In this process, the postings were first tokenized, stop words were removed, and potential unigram, bigram, and trigram candidate phrases were extracted for each posting. Next, N-gram phrases directly related to education level (e.g., Master’s degree, Bachelor’s degree, Ph.D.), experience level (e.g., entry-level, senior-level), and job type (e.g., full-time, remote work) were selected through dictionary-based filtering. Education, experience, and job-type attributes were systematically standardized through a rule-based keyword mapping procedure. Degree-related expressions (e.g., BSc, Bachelor’s degree, MSc, Master’s degree, Ph.D., Doctorate) were normalized and grouped into four hierarchical categories: Bachelor’s, Master’s, Ph.D., and Unspecified. Job postings lacking explicit educational information were retained in the dataset and coded as Unspecified rather than excluded, ensuring full corpus coverage [11,21,31,36,43,55,56].

Experience-related indicators were classified into Entry-level, Mid-level, Senior-level, and Managerial/Executive categories based on lexical patterns such as “junior”, “associate”, “senior”, “lead”, “principal”, and “manager”. Multi-term co-occurrences were resolved by assigning the highest detected seniority level to prevent overlapping classifications. Terms that matched none of these rules were not carried forward, so only terms relevant to the analysis were retained. This rule-based taxonomy enabled consistent and reproducible quantification of education, experience, and job-type attributes across all postings, facilitating accurate frequency and proportion estimates for comparative labor-market analysis [11,21,31,36,43,55,57].
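The "highest detected seniority level" rule can be sketched as a lookup followed by a maximum. The text lists the lexical patterns but not which level each one maps to, so the mapping below is an assumption, as is the name `experience_level`.

```python
import re

SENIORITY_LEVELS = ["Entry-level", "Mid-level", "Senior-level", "Managerial/Executive"]

# Pattern-to-level mapping (index into SENIORITY_LEVELS); assumed, not taken from the study.
PATTERN_LEVEL = {
    "junior": 0, "entry": 0,
    "associate": 1,
    "senior": 2, "lead": 2, "principal": 2,
    "manager": 3,
}

def experience_level(text):
    """Return the highest seniority level whose pattern occurs in the text, else None."""
    tokens = set(re.findall(r"[a-z]+", text.lower()))
    hits = [level for term, level in PATTERN_LEVEL.items() if term in tokens]
    return SENIORITY_LEVELS[max(hits)] if hits else None
```

A title such as "Senior Manager" triggers two patterns and is resolved to the higher of the two levels, which avoids counting the posting twice.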

The frequency count of each N-gram phrase was calculated across the postings. At this stage, lexical normalization was applied to merge variants of the same term (e.g., MSc and Master’s degree). Then, the total frequency count of each category was divided by the total number of postings to obtain normalized percentage values. For example, when the phrase Master’s degree appeared in 426 postings out of a total of 1000, the requirement for a master’s degree was calculated as 42.6%. Similarly, when the phrase entry-level appeared in 281 postings, the proportion for this experience level was determined as 28.1%. Using this same approach, all education, experience, and job type categories were analyzed, quantitatively revealing which requirements dominate in the big data analytics labor market [21,31,36,43,55].
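The normalization and percentage steps can be sketched as below. The regular expressions are illustrative stand-ins for the study's keyword dictionary, each posting is counted at most once per category, and the function names are hypothetical.

```python
import re
from collections import Counter

# Illustrative degree patterns (assumption); variants map to one canonical category.
DEGREE_PATTERNS = {
    "Bachelor's": r"\b(bsc|bachelor'?s)\b",
    "Master's": r"\b(msc|master'?s)\b",
    "Ph.D.": r"\b(ph\.?d|doctorate)\b",
}

def education_categories(text):
    """Set of degree categories mentioned in a posting; 'Unspecified' if none."""
    text = text.lower().replace("\u2019", "'")  # normalize curly apostrophes
    found = {cat for cat, pat in DEGREE_PATTERNS.items() if re.search(pat, text)}
    return found or {"Unspecified"}

def category_percentages(postings):
    """Share of postings (in %) that mention each category, rounded to one decimal."""
    counts = Counter(cat for p in postings for cat in education_categories(p))
    return {cat: round(100.0 * n / len(postings), 1) for cat, n in counts.items()}
```

This reproduces the arithmetic of the worked example in the text: 426 matching postings out of 1000 give `round(100.0 * 426 / 1000, 1)`, that is 42.6.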

2.8. Identification of Tasks and Related Tools in Big Data Analytics

N-gram–based text mining was applied to the job postings to extract task and tool terms. First, single-word (unigram) task terms were extracted (e.g., analytics, processing, modeling, integration, optimization). Among the task candidates, morphological variants were merged under a single canonical form (e.g., classify, classification, classifying → classification). For each task term, the frequency count across postings was calculated and normalized by the total number of postings to obtain percentage values. In this way, the top 15 most frequent tasks were identified. The use case explanations of tasks were derived by analyzing the job posting sentences in which the task terms appeared. For instance, the task analytics was frequently associated with “extracting insights from large datasets”, while processing was linked to “cleaning, transforming, and structuring data” [11,21,31,36,43].
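Merging morphological variants before counting can be sketched with an explicit lookup table. The table below covers only two tasks and is illustrative. The study's actual normalization rules are not given, and `task_frequencies` is a hypothetical name.

```python
import re
from collections import Counter

# Variant -> canonical task term (illustrative subset, not the study's full list).
CANONICAL = {
    "classify": "classification", "classifying": "classification",
    "classification": "classification",
    "model": "modeling", "models": "modeling", "modeling": "modeling",
}

def task_frequencies(postings, top_k=15):
    """Percentage of postings mentioning each canonical task, most frequent first."""
    counts = Counter()
    for text in postings:
        tokens = re.findall(r"[a-z]+", text.lower())
        # A set ensures each posting contributes at most once per task.
        counts.update({CANONICAL[t] for t in tokens if t in CANONICAL})
    total = len(postings)
    return [(task, round(100.0 * n / total, 1)) for task, n in counts.most_common(top_k)]
```

Counting per posting rather than per occurrence keeps the result interpretable as "share of postings that mention the task".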

For tools, multi-word N-grams were extracted from the postings, with a particular focus on trigram structures, since tool combinations are most commonly expressed in sets of three (e.g., Python–Spark–SQL, Java–Kafka–Flink, Python–TensorFlow–SQL). These trigram N-grams represent tool ecosystems (tool stacks) in which different technologies are used together. The frequency of each tool combination was calculated, normalized by the total number of postings, and the top 15 most frequent combinations were identified. The use case explanations of tools were derived by analyzing the typical contexts in which these technologies appeared in job postings. For example, the Python–Spark–SQL combination was associated with “data processing, analytics, and querying”, while Java–Kafka–Flink was linked to “real-time stream processing”.
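One way to approximate the tool-stack counting is sketched below. It treats a stack as any three dictionary tools that co-occur in the same posting, which is a simplification of the contiguous trigram extraction described above. The tool dictionary and the name `tool_stacks` are assumptions.

```python
import re
from collections import Counter
from itertools import combinations

# Illustrative tool dictionary (assumption), using tools named in the text.
TOOLS = {"python", "spark", "sql", "java", "kafka", "flink", "tensorflow"}

def tool_stacks(postings, top_k=15):
    """Top three-tool combinations with the percentage of postings containing them."""
    counts = Counter()
    for text in postings:
        present = sorted(TOOLS & set(re.findall(r"[a-z]+", text.lower())))
        counts.update(combinations(present, 3))  # every 3-tool subset, alphabetical order
    total = len(postings)
    return [("-".join(stack), 100.0 * n / total) for stack, n in counts.most_common(top_k)]
```

Sorting the tools before forming combinations means that "Python, Spark and SQL" and "SQL with Spark and Python" are counted as the same stack.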

2.9. Validity and Reproducibility

To ensure the credibility of the findings, this study systematically examined the validity and reproducibility of the modeling process across multiple dimensions, including internal, construct, and external validity. Internal validity was assessed through sensitivity and stability analyses using the same parameter settings described in Section 2.4. The BERTopic model was tested with small adjustments to its configuration to confirm that the results were not affected by minor parameter changes. The model consistently produced similar topic and skill structures, demonstrating stable and reliable behavior.

Construct validity was supported by aligning the extracted skill categories with internationally recognized frameworks such as ESCO and OECD Skills for Jobs. The identified topics were also reviewed by domain experts to verify their interpretability and coherence. The strong agreement between automated results and expert judgments confirmed that the model effectively captured meaningful patterns among roles, skills, and tools. External validity was examined by comparing findings across different subsets of the dataset. The observed patterns remained consistent, indicating that the results were not dependent on a specific sample or time period.

Reproducibility was ensured through a consistent experimental setup using deterministic computation with a fixed random seed (seed = 42). All analyses were conducted in Python 3.12 with stable library versions (bertopic = 0.16.3, umap-learn = 0.5.5, hdbscan = 0.8.33, sentence-transformers = 2.2.2, and scikit-learn = 1.4.2). These measures ensured consistent outputs across repeated runs and allowed the results to be reliably replicated under identical conditions [21,34,45,54].

3. Results

3.1. Expertise Roles, Job Titles, and Task Definitions

This section presents the results of the analysis of job postings related to big data analytics. Expertise roles and job titles were extracted from the content of job titles in these postings. Based on the distribution of job titles across roles, four primary expertise roles in big data analytics were identified. Table 1 provides an overview of these expertise roles, derived from the content analysis of job postings, along with their respective proportions, the five most common job titles, and a brief definition of each role’s core responsibilities. Our findings indicate that professionals working in big data analytics are generally classified into four main expertise roles: “Analyst” (29.14%), “Engineer” (28.20%), “Developer” (27.25%), and “Architect” (15.41%). The “Analyst” role is responsible for performing analysis, modeling, testing, and visualization on large datasets. The most prevalent job titles in this category are “Big Data Analyst” and “Business Analyst”. The “Engineer” role focuses on designing and managing big data infrastructure while contributing to the development of big data solutions across various industries. The most common job titles within this role include “Big Data Engineer” and “Senior Big Data Engineer”. The “Developer” role is centered on developing big data services and applications, integrating software engineering processes with big data analytics. The most frequently observed job titles in this category are “Big Data Developer” and “Big Data Software Engineer”. Lastly, the “Architect” role is responsible for designing and maintaining the necessary infrastructure and architecture for big data analytics processes, as well as planning data flows and strategies. The most common job titles associated with this role are “Big Data Architect” and “Big Data Solution Architect”.

Table 1. Expertise roles, job titles, and task definitions in big data analytics (% representation).

3.2. Knowledge-Domains, Skills, and Competencies Required for Big Data Analytics

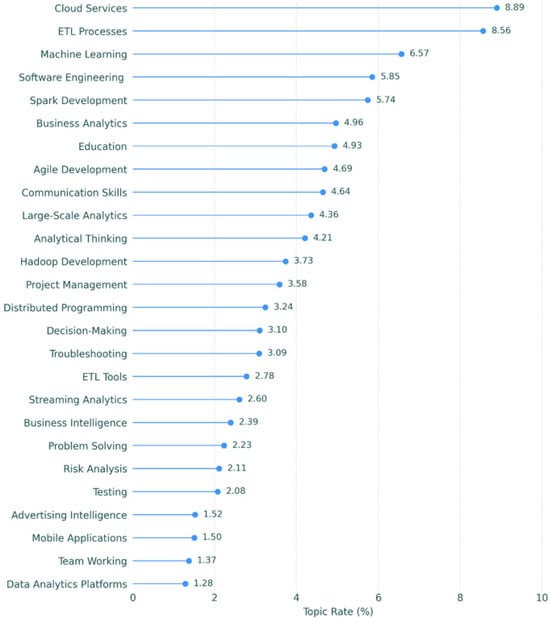

At this stage of the analysis, we identified the competencies, skills, and knowledge domains required in big data analytics. Neural topic modeling yielded 26 distinct topics (skills). As illustrated in Figure 1, these span a wide range of technical, analytical, and soft skills, which highlights the interdisciplinary nature of competencies in the field. According to Figure 1, “Cloud Services” (8.89%) and “ETL Processes” (8.56%) emerge as the most in-demand competencies. This indicates that big data management is closely tied to cloud-based infrastructures and data integration processes. Technical skills such as “Machine Learning” (6.57%), “Software Engineering” (5.85%), and “Spark Development” (5.74%) also play a pivotal role. While “Machine Learning” is crucial for deriving meaningful insights from large datasets, “Software Engineering” and “Spark Development” are essential for enhancing the performance of big data applications.

Figure 1.

The most in-demand skills and competencies for big data analytics (% representation).

Niche areas like “Advertising Intelligence” (1.52%) and “Mobile Applications” (1.50%) provide insights into how big data analytics is applied across different industries. Finally, the presence of skills such as “Teamwork” (1.37%) and “Data Analytics Platforms” (1.28%) demonstrates that, in addition to technical knowledge, effective team coordination and the use of appropriate tools are critical in big data projects. Overall, this analysis underscores the importance of technical expertise alongside analytical thinking, communication, and project management skills in big data analytics. In particular, cloud technologies, data processing, and machine learning stand out as key priorities. In addition, the top descriptive keywords for the 26 discovered topics are provided in Appendix A.
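The percentage attached to each topic can be read as the share of documents assigned to it. The sketch below shows one way such shares could be computed from per-document topic assignments. It assumes that unclustered documents carry the topic id -1 (as in BERTopic's default configuration) and that they are excluded from the denominator; whether the study handled outliers this way is not stated. The ids, labels, and counts are made-up toy data.

```python
from collections import Counter


def topic_shares(assignments, labels):
    """Percentage share of each labeled topic, ignoring outlier documents (-1)."""
    kept = [topic for topic in assignments if topic != -1]
    counts = Counter(kept)
    total = len(kept)
    # most_common() yields topics in descending order of frequency.
    return {
        labels[topic]: round(100 * count / total, 2)
        for topic, count in counts.most_common()
    }


# Toy data: one topic id per posting (hypothetical, not the study's output).
labels = {0: "Cloud Services", 1: "ETL Processes", 2: "Machine Learning"}
assignments = [0, 0, 0, 1, 1, -1, 2, 0, 1, 2, -1, 0]
shares = topic_shares(assignments, labels)
```

Excluding outliers makes the shares sum to 100%. Keeping them in the denominator would lower every topic's percentage by the same factor without changing the ranking.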

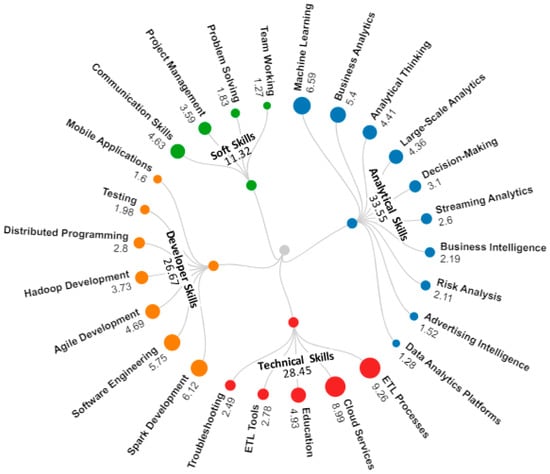

3.3. Taxonomy of the Skills by Technical Knowledge and Background

At this stage of the analysis, the domains of knowledge and skill sets have been categorized and presented in a more systematic manner. The 26 identified knowledge areas and skills have been assigned to four competency domains, providing a structured competency map for big data analytics. The percentages of skills by competency domain are shown in Figure 2. This figure presents the necessary skills for big data analytics under four main categories: “Analytical Skills” (33.55%), “Developer Skills” (26.67%), “Soft Skills” (11.32%), and “Technical Skills” (28.45%).

Figure 2.

Taxonomy of the skills and competencies for big data analytics (% representation).

“Analytical Skills” (33.55%) focus on data processing, modeling, and decision-making. Key skills include “Machine Learning” (6.59%) and “Business Analytics” (5.40%), essential for interpreting large datasets and supporting strategic decisions. “Large-Scale Analytics” (4.36%) and “Analytical Thinking” (4.41%) further reinforce these capabilities. “Technical Skills” (28.45%) cover knowledge essential for building and managing big data infrastructure. “ETL Processes” (9.26%) and “Cloud Services” (8.99%) highlight critical areas in data integration and storage. The presence of “Education” (4.93%) underscores the necessity of continuous learning. Additionally, “ETL Tools” (2.78%) and “Troubleshooting” (2.49%) are vital for maintaining data workflows.

“Developer Skills” (26.67%) pertain to software development in big data environments. “Spark Development” (6.12%) and “Software Engineering” (5.75%) are fundamental, while “Agile Development” (4.69%) and “Hadoop Development” (3.73%) ensure adaptability. “Soft Skills” (11.32%) emphasize teamwork, communication, and project management. “Communication Skills” (4.63%) and “Project Management” (3.59%) enhance collaboration, while “Problem Solving” (1.83%) and “Teamwork” (1.27%) support project success. In summary, big data analytics requires a diverse skill set. Analytical and technical skills are essential for data processing and interpretation. Developer skills enable system construction and operation. Soft skills enhance teamwork and project management, contributing to overall success.
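The roll-up from individual skills to competency domains is plain summation, sketched below using only the skill shares quoted in this subsection. The dictionary layout and function name are illustrative assumptions. The text names only some of the skills in each domain, so a partial sum is expected wherever further skills belong to a domain.

```python
# Skill shares exactly as quoted in the text of this subsection.
DOMAIN_SKILLS = {
    "Analytical Skills": {
        "Machine Learning": 6.59,
        "Business Analytics": 5.40,
        "Large-Scale Analytics": 4.36,
        "Analytical Thinking": 4.41,
    },
    "Technical Skills": {
        "ETL Processes": 9.26,
        "Cloud Services": 8.99,
        "Education": 4.93,
        "ETL Tools": 2.78,
        "Troubleshooting": 2.49,
    },
    "Developer Skills": {
        "Spark Development": 6.12,
        "Software Engineering": 5.75,
        "Agile Development": 4.69,
        "Hadoop Development": 3.73,
    },
    "Soft Skills": {
        "Communication Skills": 4.63,
        "Project Management": 3.59,
        "Problem Solving": 1.83,
        "Teamwork": 1.27,
    },
}


def domain_totals(domain_skills):
    """Sum the shares of the skills listed under each domain."""
    return {
        domain: round(sum(skills.values()), 2)
        for domain, skills in domain_skills.items()
    }


totals = domain_totals(DOMAIN_SKILLS)
```

For “Technical Skills” and “Soft Skills” the named skills add up to the reported domain totals (28.45% and 11.32%). For “Analytical Skills” and “Developer Skills” they cover only part of the totals (20.76 of 33.55 and 20.29 of 26.67), which suggests that the remaining skills in those domains are not named individually in the text.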

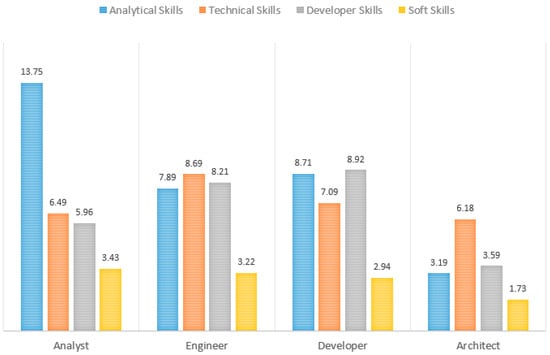

3.4. Taxonomic Distribution of Skills According to Expertise Roles

This analysis examines skill distribution across four expertise roles in big data analytics: “Analyst”, “Engineer”, “Developer”, and “Architect”. Figure 3 presents how each role prioritizes “Analytical Skills”, “Technical Skills”, “Developer Skills”, and “Soft Skills”. The “Analyst” role has the highest share of “Analytical Skills” (13.75%), emphasizing data interpretation, modeling, and decision support. While “Technical” (6.49%) and “Developer Skills” (5.96%) are also relevant, “Soft Skills” (3.43%) play a key role in communication and business collaboration. The “Engineer” role requires a strong technical foundation, with 8.69% in “Technical Skills” and 8.21% in “Developer Skills”, focusing on system setup and data management. Their “Analytical Skills” (7.89%) highlight their contribution to both infrastructure and data processes, while “Soft Skills” (3.22%) support teamwork and communication.

Figure 3.

Distribution of skill sets across expertise roles (% representation).

The “Developer” role leads in “Developer Skills” (8.92%), underscoring expertise in software development and platform integration. Their “Technical Skills” (7.09%) reflect deep involvement in system functionality, while “Analytical Skills” (8.71%) aid in optimizing data workflows. “Soft Skills” (2.94%) are essential for collaboration. The “Architect” role prioritizes “Technical Skills” (6.18%) and “Developer Skills” (3.59%), focusing on system design. Their lower “Analytical Skills” (3.19%) indicate limited direct data analysis involvement, while “Soft Skills” (1.73%) help facilitate communication with teams. Consequently, analysts focus on data insights, engineers and developers balance technical and development skills, and architects concentrate on system design. This distribution highlights the complementary nature of these roles in building effective big data solutions.
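The role-by-domain figures quoted in this subsection form a 4 × 4 matrix whose column sums should recover the domain totals of Figure 2. The sketch below checks this and also normalizes each row to show a role's internal skill profile. The variable and function names are illustrative; the within-role normalization is an added view, not a computation reported in the study.

```python
# Shares quoted in the text (each cell is a percentage of the overall total).
ROLE_DOMAIN = {
    "Analyst": {"Analytical": 13.75, "Technical": 6.49, "Developer": 5.96, "Soft": 3.43},
    "Engineer": {"Analytical": 7.89, "Technical": 8.69, "Developer": 8.21, "Soft": 3.22},
    "Developer": {"Analytical": 8.71, "Technical": 7.09, "Developer": 8.92, "Soft": 2.94},
    "Architect": {"Analytical": 3.19, "Technical": 6.18, "Developer": 3.59, "Soft": 1.73},
}


def domain_totals(matrix):
    """Column sums: total share of each skill domain across all roles."""
    totals = {}
    for shares in matrix.values():
        for domain, value in shares.items():
            totals[domain] = totals.get(domain, 0.0) + value
    return {domain: round(total, 2) for domain, total in totals.items()}


def within_role_profile(matrix, role):
    """Row normalized to 100%: how one role splits its own skill weight."""
    row = matrix[role]
    row_total = sum(row.values())
    return {domain: round(100 * value / row_total, 1) for domain, value in row.items()}


totals = domain_totals(ROLE_DOMAIN)
architect = within_role_profile(ROLE_DOMAIN, "Architect")
analyst = within_role_profile(ROLE_DOMAIN, "Analyst")
```

The column sums agree with the Figure 2 totals to within 0.01 percentage points, a difference attributable to rounding. Normalized within the role, “Technical Skills” make up roughly 42% of the Architect profile, which is consistent with the reading that architects concentrate on system design.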

3.5. Distribution of Skills by Expertise Roles

At this stage of the analysis, skill sets and roles are matched. From this perspective, a systematic taxonomy of roles and skills for big data analytics is provided. The distribution matrix of each skill in the big data field across roles is presented in Table 2. The skills in the table are listed in descending order according to their total percentages in the last column. Each skill has been individually scored for the four primary roles: Analyst, Engineer, Developer, and Architect, and their share in the total percentage has been determined. During the coding process, each skill was matched with the corresponding expertise role to calculate their weights.

Table 2.

Distribution of skills across expertise roles (% representation).

According to the table, the “Analyst” role has the highest total skill percentage at 29.14%, with a particular focus on “Machine Learning” (2.42), “Business Analytics” (1.96), and “Large-Scale Analytics” (1.69). The “Engineer” role follows with a total of 28.20%, excelling in “ETL Processes” (2.79), “Cloud Services” (2.04), and “Software Engineering” (1.82). The “Developer” role, with 27.25%, similarly emphasizes technical and software development skills, with notable areas being “Software Engineering” (2.44), “Spark Development” (1.63), and “ETL Processes” (2.38). The “Architect” role has the lowest total percentage at 15.41%, focusing primarily on infrastructure-related skills such as “Cloud Services” (2.37) and “ETL Processes” (2.17).

When evaluating the table by rows, each row shows the percentage distribution of each skill across the expertise roles in big data analytics. For instance, “Cloud Services” (8.89) holds the highest weight, particularly standing out in the “Analyst” (2.39) and “Architect” (2.37) roles, highlighting the importance of cloud-based data processing and infrastructure design. The second highest, “ETL Processes” (8.56), is most strongly associated with the “Engineer” (2.79) role, indicating the critical nature of managing and transforming data flows for engineers. “Machine Learning” (6.57) is especially prominent in the “Analyst” (2.42) and “Developer” (2.11) roles, underscoring the importance of modeling for analysts and integration for developers.

To enhance the clarity of the analysis results, Table 3 summarizes the top ten skills for each role. Out of the 26 skills, 19 appear among the top ten skills of at least one role. As shown in Table 3, “Cloud Services”, “Education”, and “Communication Skills” are among the top ten skills for all four roles. “ETL Processes” appears in the top ten of three of the four roles. Additional dominant skills include “Machine Learning”, “Business Analytics”, and “Big Data Processing”. “Streaming Analytics”, in the last row, appears in the top ten of only one role, the Analyst role, where it ranks eighth. Read by column, the table lists the top ten skills for each role. For instance, for the Analyst role, the skill in the first position is “Machine Learning”, while the skill in the tenth position is “Education”.
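A skill-by-role matrix of this kind, and the per-role rankings derived from it, can be sketched as a simple cross-tabulation. The example assumes that coding produces one (skill, role) pair per matched posting and that each cell is expressed as a percentage of all pairs. Both the assumption and the toy pairs are illustrative, not the study's data.

```python
from collections import Counter, defaultdict


def skill_role_matrix(pairs):
    """Percentage of all (skill, role) matches that fall in each cell."""
    counts = Counter(pairs)
    total = sum(counts.values())
    matrix = defaultdict(dict)
    for (skill, role), n in counts.items():
        matrix[role][skill] = round(100 * n / total, 2)
    return dict(matrix)


def top_skills(matrix, role, n=10):
    """Skills of one role ranked by share (ties broken alphabetically)."""
    ranked = sorted(matrix[role].items(), key=lambda item: (-item[1], item[0]))
    return [skill for skill, _ in ranked[:n]]


# Hypothetical coded pairs; a real run would use the full coded corpus.
pairs = (
    [("Machine Learning", "Analyst")] * 3
    + [("Cloud Services", "Analyst")] * 2
    + [("ETL Processes", "Engineer")] * 3
    + [("Cloud Services", "Engineer")] * 2
)
matrix = skill_role_matrix(pairs)
analyst_top = top_skills(matrix, "Analyst", n=2)
```

Because every cell shares the same denominator, summing a role's cells gives that role's total share and summing a skill across roles gives the skill's overall share. These correspond to the column-wise and row-wise readings of the table.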

Table 3.

Ranked matching of the ten skills for each expertise role.

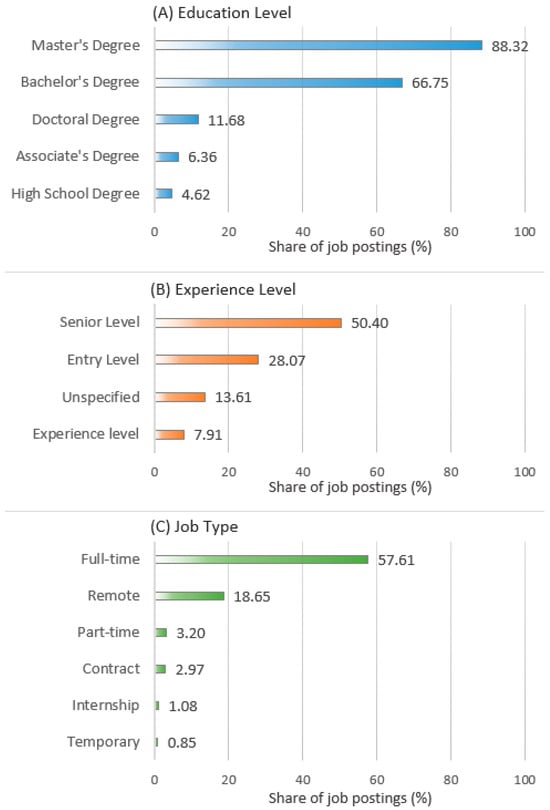

3.6. Requirements for Job Type, Education, and Experience

This section of our analysis includes findings on the education levels, experience levels, and job types needed in big data analytics. Figure 4 presents statistical data related to careers in big data analytics, offering insights into education levels (Figure 4A), experience levels (Figure 4B), and job types (Figure 4C). Regarding education levels, a significant majority of professionals in this field hold a master’s degree (88.32%), underscoring the importance of advanced education for success in big data analytics. Additionally, a substantial portion of individuals possess a bachelor’s degree (66.75%), indicating that undergraduate education serves as a foundational requirement for entering this domain. In contrast, the proportion of professionals with a doctoral degree is relatively low (11.68%), suggesting that a Ph.D. is not a prerequisite for most roles in this field. Associate’s and high school degrees are held by a small fraction of professionals (6.36% and 4.62%, respectively), further emphasizing the preference for higher education in this industry.

Figure 4.

Education level, experience level and job type requirements (% representation).

Regarding experience levels, a majority of professionals are at a senior level (50.40%), reflecting the value placed on experience and expertise in big data analytics. Entry-level professionals constitute a notable portion (28.07%), indicating that the field also offers opportunities for newcomers. A smaller percentage of individuals fall into unspecified or other experience categories (13.61% and 7.91%, respectively), which may include transitional or niche roles.

In terms of job types, full-time positions dominate the field (57.61%), highlighting the prevalence of traditional employment structures in big data analytics. Remote work is also significant (18.65%), reflecting the adaptability of the industry to flexible work arrangements. Part-time, contract, internship, and temporary roles are less common (3.20%, 2.97%, 1.08%, and 0.85%, respectively), suggesting that these types of employment are less prevalent in this sector.

As the findings indicate, big data analytics is a knowledge-intensive field requiring advanced education and extensive professional experience. However, it also offers opportunities for entry-level professionals to gain practical experience and develop their expertise. The prevalence of full-time positions highlights the industry’s demand for skilled professionals, while the availability of remote work options reflects the increasing flexibility in modern employment structures. These trends underscore the dynamic and rapidly expanding nature of career opportunities in big data analytics.

3.7. Key Tasks and Related Tools in Big Data Analytics

This analysis identifies the most needed tasks, highlighting the key technical competencies required for efficient data processing, integration, and decision-making in modern data-driven environments. A task in big data analytics refers to a specific computational or analytical process essential for handling, processing, and extracting insights from large datasets. Table 4 presents the top 15 most needed tasks in big data analytics, highlighting the key areas of expertise required in the field. The highest-ranking task, “Analytics” (7.75%), emphasizes the importance of extracting insights from data and data-driven decision-making processes. The second-ranked task, “Processing” (7.04%), highlights the critical role of preparing raw data for analysis. Ranked third and fourth, “Modeling” (6.37%) and “Integration” (6.06%) indicate the industry’s fundamental needs for developing advanced analytical models and integrating various data sources. “Optimization” (5.51%) and “Prediction” (4.89%), also among the top tasks, underline the significance of efficiently managing resources and forecasting future outcomes. Additionally, tasks such as “Classification” (4.56%) and “Management” (4.18%) in the middle of the list reflect the necessity for effective data categorization and governance. Further down the list, “Forecasting” (3.82%), “Visualization” (3.62%), and “Mining” (3.46%) emphasize the importance of trend analysis, data visualization, and pattern discovery, respectively. Lower-ranked tasks like “Recognition” (3.22%), “Automation” (2.92%), “Deployment” (2.65%), and “Segmentation” (2.45%) remain relevant but less prominent.
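Task frequencies of this kind can be obtained with N-gram counting over the posting text. The following is a minimal sketch, assuming a lowercase alphabetic tokenizer, a small hypothetical task vocabulary, and shares computed over all vocabulary mentions. The study's actual preprocessing and normalization are not specified here, and the postings are toy examples.

```python
import re
from collections import Counter


def ngrams(text, n):
    """Lowercase word n-grams of a text, joined with single spaces."""
    tokens = re.findall(r"[a-z]+", text.lower())
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def term_shares(postings, vocabulary):
    """Share of all vocabulary mentions accounted for by each term (in %)."""
    counts = Counter()
    for text in postings:
        counts.update(term for term in ngrams(text, 1) if term in vocabulary)
    total = sum(counts.values())
    return {term: round(100 * c / total, 2) for term, c in counts.most_common()}


# Hypothetical vocabulary and postings, for illustration only.
task_vocabulary = {"analytics", "processing", "modeling"}
postings = [
    "Analytics and data processing for analytics teams",
    "Predictive modeling and analytics",
]
shares = term_shares(postings, task_vocabulary)
bigrams = ngrams("big data analytics", 2)
```

The same `ngrams` helper with `n=2` or `n=3` would capture multi-word expressions, which is where N-gram analysis adds value over single-keyword counts.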

Table 4.

Top 15 most needed tasks for big data analytics (% representation).

In addition, Table 5 provides an overview of the top 15 tools most needed for big data analytics, highlighting the diverse combinations of technologies employed for different tasks. The findings indicate that these tools are more efficient and functional when used in combination than individually. The listed tools reflect the growing importance of data processing, analytics, machine learning, real-time processing, and cloud solutions. “Python, Spark, SQL” emerges as the most popular set (6.73%), vital for analytics and querying. “Python, TensorFlow, SQL” follows closely (6.04%), highlighting Python’s dominance in machine learning tasks. “Java, Kafka, Flink” (5.62%) underscores the importance of real-time stream processing. Cloud solutions, including “Python, Snowflake, Tableau” (5.38%), reflect a strong preference for scalable data management and visualization. Lastly, the orchestration stack “Python, Airflow, Kubernetes” (4.31%) highlights cloud-native workflows. The overview indicates a clear preference for Python-based technologies and emphasizes the integration of real-time data processing with cloud-native infrastructure. The data underscore the industry’s shift towards flexible, scalable, and integrated analytics solutions.
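Tool combinations such as these can be counted by detecting which tools each posting mentions and tallying every three-tool set that co-occurs. The sketch below assumes a fixed, hypothetical tool vocabulary (`TOOLS`), whole-token matching, and sets of exactly three tools. It is not the extraction procedure used in the study, and the postings are toy examples.

```python
import re
from collections import Counter
from itertools import combinations

# Hypothetical tool vocabulary; a real one would be much larger.
TOOLS = {"python", "spark", "sql", "java", "kafka", "flink"}


def tool_sets(text, size=3):
    """All alphabetically ordered tool combinations of a given size in one posting."""
    tokens = set(re.findall(r"[a-z]+", text.lower()))
    found = sorted(TOOLS & tokens)  # whole-token match, so "javascript" != "java"
    return list(combinations(found, size))


def combination_shares(postings, size=3):
    """Share of all detected combinations accounted for by each tool set (in %)."""
    counts = Counter()
    for text in postings:
        counts.update(tool_sets(text, size))
    total = sum(counts.values())
    return {combo: round(100 * n / total, 2) for combo, n in counts.most_common()}


# Toy postings, for illustration only.
postings = [
    "Python, Spark and SQL pipelines",
    "Experience with SQL, Python, Spark",
    "Java services on Kafka and Flink",
]
shares = combination_shares(postings)
```

Sorting the detected tools before combining them makes the count independent of the order in which a posting lists them, so "SQL, Python, Spark" and "Python, Spark, SQL" are tallied as the same set.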

Table 5.

Top 15 most needed tools for big data analytics (% representation).

4. Discussion

This study systematically examines the skills, tasks, and tools required in the big data analytics labor market and provides valuable insights into the evolving structure of the field. Using N-gram–based text mining and Neural Topic Modeling (NTM) with the BERTopic framework, data extracted from job postings were cross-referenced with role classifications and skill taxonomies. The findings reveal that analytical, technical, developer, and soft skills have different priorities across the Analyst, Engineer, Developer, and Architect roles, while task–tool relationships reflect the functional and technological ecosystems that dominate the market. Furthermore, the results emphasize advancements in data processing workflows, the role of automation, the increasing reliance on cloud-based solutions, and the integration of AI-driven technologies. The big data ecosystem is continuously shaped by evolving tasks, methods, tools, and technologies, requiring professionals to possess a broad spectrum of both technical and soft skills [29,32,58,59].

The study underscores the necessity of real-time and scalable solutions in big data analytics, as managing the increasing volume and velocity of data remains a fundamental challenge. Additionally, cloud-based data services have been widely adopted, offering enhanced flexibility, scalability, and cost efficiency in big data workflows. Snowflake, Databricks, Azure, and AWS have emerged as dominant platforms due to their scalable storage, data processing, and integration capabilities [3,23]. The increasing importance of real-time data analytics is also evident, with technologies such as Apache Spark, Kafka, and Flink playing a crucial role in efficiently managing high-velocity data streams. Furthermore, the transition from traditional databases to modern data warehouses has significantly improved data accessibility, processing speed, and the efficiency of analytical processes. The prominence of the “ETL Processes” topic (8.56%) and the high demand for the “Python, Spark, and SQL” toolset emphasize the critical role of data integration and automation in big data analytics [6,11,37,60,61,62].

Another key finding highlights the importance of selecting the appropriate analytical tasks and tools to enhance data processing, analysis, and visualization efficiency. The transition to intelligent data platforms has also emerged as a crucial trend, with AI-driven data storage and analytics improving automation and operational efficiency [6,11,26,55]. The study further emphasizes the growing role of automation in big data analytics, streamlining data processing, reducing manual effort, and enhancing decision-making accuracy [63,64,65,66]. Artificial intelligence and machine learning have been identified as essential components in predictive analytics and automated decision-making processes [1,4,14]. Additionally, the findings suggest that big data analytics plays a pivotal role in strengthening competitive intelligence, enabling organizations to obtain real-time market insights and make more informed strategic decisions [7,13,60,67].

Beyond technical findings, the study highlights the importance of soft skills in big data analytics. Effective communication, critical thinking, and problem-solving have been identified as crucial for transforming data-driven insights into actionable strategies. The prominence of the “Communication Skills” topic (4.64%) underscores its role as one of the most essential competencies, as it enables professionals to effectively convey analytical findings to both technical and non-technical stakeholders. Given the interdisciplinary nature of big data projects, communication skills are essential for facilitating collaboration among various stakeholders [23,24]. Moreover, teamwork and cross-functional collaboration are necessary for successfully implementing big data projects and aligning them with business objectives. Effective collaboration between functional teams ensures synergy between technical efforts and organizational goals [24,28,32,33,67].

Due to the ever-evolving nature of big data technologies, continuous workforce development is imperative. The study highlights “Education” as a fundamental skill across all roles (4.93%), emphasizing the importance of lifelong learning. A significant proportion of professionals in big data analytics hold a master’s degree (88.32%), reflecting the growing demand for highly qualified individuals [9,20,29,68]. Addressing the skills gap through interdisciplinary education and competency-based IT training programs is crucial for ensuring an adequately skilled workforce. Given the dynamic, competitive, and constantly evolving nature of the big data analytics domain, maintaining continuous skill development is essential for the sustainability of the field [13,15,25,58,69].

Beyond these empirical findings, the study offers both theoretical and practical contributions. From a theoretical perspective, it introduces a methodological framework that integrates N-gram–based text mining, the BERTopic model, and role–skill taxonomy mapping, extending beyond prior studies that relied mainly on keyword frequency analysis. This approach not only identifies the most frequent tasks and tools but also clarifies their contextual use cases, thus offering a more nuanced understanding of labor market dynamics. The taxonomic classification of skills (analytical, technical, developer, and soft skills) and their cross-mapping with expertise roles (Analyst, Engineer, Developer, Architect) provide a structured view of how competencies are distributed across roles, while the recurring associations between tool stacks and tasks (e.g., Python–Spark–SQL for data processing, Java–Kafka–Flink for real-time analytics) illustrate how technological ecosystems evolve in response to domain-specific requirements.

At the same time, the study has important practical implications for employers, educators, and professionals. Employers can refine job descriptions by emphasizing the most critical tool stacks and skill sets, aligning recruitment more closely with organizational needs. Educational institutions and training providers can redesign curricula to balance analytical, technical, and interpersonal skills, ensuring that graduates are prepared for interdisciplinary challenges. For example, the widespread demand for Python–Spark–SQL highlights the need to include these technologies in technical training, while the prominence of “Communication Skills” (4.64%) signals the importance of integrating soft skill development into data science education. For professionals, the results highlight the competencies most valued in the labor market, showing that 88.32% of practitioners hold a master’s degree, which underlines the necessity of advanced education and lifelong learning. Continuous upskilling and reskilling remain essential in an environment shaped by rapid technological change, where interdisciplinary expertise, teamwork, and adaptability are critical to sustaining employability.

5. Conclusions

This study presents a comprehensive analysis of the essential skills and competencies required in big data analytics using Neural Topic Modeling (NTM) with the BERTopic framework applied to real-world job postings. The analysis began by identifying key expertise roles in big data analytics and defining their responsibilities. It then determined the essential technical, analytical, developer, and soft skills for these roles. These skills were categorized based on technical background, forming a skill taxonomy. The study also examined the distribution of skills across roles, their correlations, and the requirements for job types, education, and experience. Lastly, it analyzed core tasks, techniques, and tools in big data workflows, identifying emerging trends and industry needs. This comprehensive analysis serves as a crucial foundation for understanding the structure of the big data analytics ecosystem and the skill requirements necessary to develop long-term data-driven strategies.

Although this study provides valuable insights, it also has limitations concerning its dataset and methodological approach. First, the dataset was derived from publicly available job postings, which may not fully reflect internal hiring criteria or unpublished skill demands. Consequently, validation and expansion of the findings could be achieved through analyses conducted on different real-world labor market datasets. Moreover, as the findings capture trends at a specific point in time, they are subject to change with ongoing technological trends and industrial needs. Therefore, it is recommended that similar analyses be conducted periodically to track evolving skill demands. Second, the accuracy of competencies identified through BERTopic is dependent on the quality and completeness of the textual data analyzed. Future studies could enhance their methodologies by incorporating additional techniques to explore the broader impact of emerging technologies such as artificial intelligence, automation, and real-time analytics on employment trends. In addition, the dataset covers a six-month period (July–December 2024), which reflects short-term patterns in skill demand. Although this timeframe provides a timely snapshot of current trends, future studies should extend the analysis across multiple years to better capture long-term workforce dynamics.

This study also provides guidance on advancing topic modeling techniques by leveraging transformer-based architectures, including Neural Topic Modeling (NTM) and self-supervised learning methods. The continuous evolution of big data analytics necessitates ongoing learning and rapid adaptability among professionals. To remain competitive in an evolving job market, professionals must consistently update their skill sets. By contributing to the development of a future-ready workforce capable of navigating the complexities of big data analytics environments, this research bridges the gap between academia and industry.

Author Contributions

Conceptualization, A.Q.K., A.S. and F.G.; methodology, A.Q.K., A.S. and F.G.; software, A.Q.K., A.S. and F.G.; validation, A.Q.K., A.S. and F.G.; formal analysis, A.Q.K., A.S. and F.G.; investigation, A.Q.K., A.S. and F.G.; resources, A.Q.K., A.S. and F.G.; data curation, A.Q.K., A.S. and F.G.; writing—original draft preparation, A.Q.K., A.S. and F.G.; writing—review and editing, A.Q.K., A.S. and F.G.; visualization, A.Q.K., A.S. and F.G.; supervision, A.Q.K., A.S. and F.G.; project administration, A.Q.K., A.S. and F.G.; funding acquisition, A.Q.K., A.S. and F.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The dataset is publicly available and cited in the article.

Acknowledgments

The authors have reviewed and edited the output and take full responsibility for the content of this publication.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

Table A1.

Topic name, descriptive keywords and their percentages.

| Topic Name | Keywords | % |
|---|---|---|
| Cloud Services | cloud, aws, azure, gcp, serverless, network, security, storage, architecture, devops | 8.89 |
| ETL Processes | data, pipeline, etl, ingestion, transformation, warehouse, integration, extraction, processing, automation | 8.56 |
| Machine Learning | machine, model, feature, learning, algorithm, class, regression, training, dataset, prediction | 6.57 |
| Software Engineering | software, development, coding, testing, debugging, design, version, deployment, integration, scalability | 5.85 |
| Spark Development | spark, rdd, dataframe, dataset, cluster, parallel, execution, optimization, partition, transformation | 5.74 |
| Business Analytics | business, insight, analytic, machine, learning, predict, python, metric, forecast, scenario | 4.96 |
| Education | degree, science, bachelor, master, related, course, faculty, field, equivalent, engineering | 4.93 |
| Agile Development | agile, scrum, kanban, backlog, sprint, iteration, planning, velocity, story, release | 4.69 |
| Communication Skills | communication, speaking, strong, verbal, english, listening, writing, presentation, argument, persuasion | 4.64 |
| Large-Scale Analytics | scale, processing, framework, parallel, distributed, large, optimization, computation, data, modeling | 4.36 |
| Analytical Thinking | reasoning, logic, deduction, induction, evaluation, inference, problem, interpretation, decision, synthesis | 4.21 |
| Hadoop Development | hadoop, hive, spark, hdfs, mapreduce, yarn, hbase, pig, sqoop, tez | 3.73 |
| Project Management | project, planning, scope, timeline, risk, stakeholder, requirement, deliverable, budget, execution | 3.58 |
| Distributed Programming | programming, distributed, parallel, concurrency, communication, develop, process, fault, replication | 3.24 |
| Decision-Making | decision, strategy, insight, risk, evaluation, analysis, choice, priority, tradeoff, impact | 3.10 |
| Troubleshooting | troubleshooting, issue, problem, debugging, diagnosis, resolution, failure, testing, recovery, log | 3.09 |
| ETL Tools | airflow, talend, informatica, pentaho, databricks, fivetran, matillion, ssis, dbt, stitch | 2.78 |
| Streaming Analytics | streaming, event, real-time, ingestion, processing, window, computation, change, aggregation, low-latency | 2.60 |
| Business Intelligence | business, dashboard, intelligence, insight, metric, visualization, kpi, trend, reporting, strategy | 2.39 |
| Problem Solving | problem, solve, solution, logic, reasoning, creativity, innovation, challenge, approach, analysis | 2.23 |
| Risk Analysis | risk, security, threat, mitigation, exposure, assessment, compliance, fraud, vulnerability, impact | 2.11 |
| Testing | test, validation, verification, unit, integration, regression, automation, bug, performance, acceptance | 2.08 |
| Advertising Intelligence | advertising, campaign, targeting, stream, marketing, conversion, optimization, bidding, strategy, message | 1.52 |
| Mobile Applications | mobile, app, interface, usability, performance, optimization, framework, backend, frontend, security | 1.50 |
| Team Working | collaboration, coordination, leadership, team, relation, engagement, interaction, alignment, teamwork | 1.37 |
| Data Analytics Platforms | aws, azure, google, platform, warehouse, lake, analytics, integration, query, scalability, computation | 1.28 |

References

- Rahmani, A.M.; Azhir, E.; Ali, S.; Mohammadi, M.; Ahmed, O.H.; Ghafour, M.Y.; Ahmed, S.H.; Hosseinzadeh, M. Artificial Intelligence Approaches and Mechanisms for Big Data Analytics: A Systematic Study. PeerJ Comput. Sci. 2021, 7, e488. [Google Scholar] [CrossRef]

- Chen, H.; Chiang, R.H.L.; Storey, V.C. Business Intelligence and Analytics: From Big Data to Big Impact. Mis Q. 2012, 36, 1165–1188. [Google Scholar] [CrossRef]

- Hu, H.; Wen, Y.; Chua, T.S.; Li, X. Toward Scalable Systems for Big Data Analytics: A Technology Tutorial. IEEE Access 2014, 2, 652–687. [Google Scholar] [CrossRef]

- Liang, T.P.; Liu, Y.H. Research Landscape of Business Intelligence and Big Data Analytics: A Bibliometrics Study. Expert Syst. Appl. 2018, 111, 2–10. [Google Scholar] [CrossRef]

- Kumar, N.; Hema, K.; Hordiichuk, V.; Menon, R.; Catherene; Aarthy, C.C.J.; Gonesh, C. Harnessing the Power of Big Data: Challenges and Opportunities in Analytics. Tuijin Jishu/J. Propuls. Technol. 2023, 44, 363–371. [Google Scholar] [CrossRef]

- Philip Chen, C.L.; Zhang, C.-Y. Data-Intensive Applications, Challenges, Techniques and Technologies: A Survey on Big Data. Inf. Sci. 2014, 275, 314–347. [Google Scholar] [CrossRef]

- Sun, Z.; Sun, L.; Strang, K. Big Data Analytics Services for Enhancing Business Intelligence. J. Comput. Inf. Syst. 2018, 58, 162–169. [Google Scholar] [CrossRef]

- Zheng, Z.; Cai, Y.; Li, Y. Oversampling Method for Imbalanced Classification. Comput. Inform. 2015, 34, 1017–1037. [Google Scholar]

- Halwani, M.A.; Amirkiaee, S.Y.; Evangelopoulos, N.; Prybutok, V. Job Qualifications Study for Data Science and Big Data Professions. Inf. Technol. People 2022, 35, 510–525.

- Rodríguez-Mazahua, L.; Rodríguez-Enríquez, C.A.; Sánchez-Cervantes, J.L.; Cervantes, J.; García-Alcaraz, J.L.; Alor-Hernández, G. A General Perspective of Big Data: Applications, Tools, Challenges and Trends. J. Supercomput. 2016, 72, 3073–3113.

- Gurcan, F. What Issues Are Data Scientists Talking about? Identification of Current Data Science Issues Using Semantic Content Analysis of Q&A Communities. PeerJ Comput. Sci. 2023, 9, e1361.

- Debortoli, S.; Müller, O.; Vom Brocke, J. Comparing Business Intelligence and Big Data Skills: A Text Mining Study Using Job Advertisements. Bus. Inf. Syst. Eng. 2014, 6, 289–300.

- Rahhal, I.; Kassou, I.; Ghogho, M. Data Science for Job Market Analysis: A Survey on Applications and Techniques. Expert Syst. Appl. 2024, 251, 124101.

- Raschka, S.; Patterson, J.; Nolet, C. Machine Learning in Python: Main Developments and Technology Trends in Data Science, Machine Learning, and Artificial Intelligence. Information 2020, 11, 193.

- Turulja, L.; Vugec, D.S.; Bach, M.P. Big Data and Labour Markets: A Review of Research Topics. Procedia Comput. Sci. 2022, 217, 526–535.

- Jackson, S.E.; Seo, J. The Greening of Strategic HRM Scholarship. Organ. Manag. J. 2010, 7, 278–290.

- Ehnert, I.; Parsa, S.; Roper, I.; Wagner, M.; Muller-Camen, M. Reporting on Sustainability and HRM: A Comparative Study of Sustainability Reporting Practices by the World’s Largest Companies. Int. J. Hum. Resour. Manag. 2016, 27, 88–108.

- World Economic Forum. The Future of Jobs Report. 2023. Available online: https://www.weforum.org/reports/the-future-of-jobs-report-2023 (accessed on 15 October 2025).

- Aljohani, N.R.; Aslam, M.A.; Khadidos, A.O.; Hassan, S.U. A Methodological Framework to Predict Future Market Needs for Sustainable Skills Management Using AI and Big Data Technologies. Appl. Sci. 2022, 12, 6898.

- Han, F.; Ren, J. Analyzing Big Data Professionals: Cultivating Holistic Skills Through University Education and Market Demands. IEEE Access 2024, 12, 23568–23577.

- Gurcan, F.; Gudek, B.; Menekse Dalveren, G.G.; Derawi, M. Future-Ready Skills Across Big Data Ecosystems: Insights from Machine Learning-Driven Human Resource Analytics. Appl. Sci. 2025, 15, 5841.

- Moreno, A.M.; Sanchez-Segura, M.I.; Medina-Dominguez, F.; Carvajal, L. Balancing Software Engineering Education and Industrial Needs. J. Syst. Softw. 2012, 85, 1607–1620.

- Gurcan, F.; Cagiltay, N.E. Big Data Software Engineering: Analysis of Knowledge Domains and Skill Sets Using LDA-Based Topic Modeling. IEEE Access 2019, 7, 82541–82552.

- Montandon, J.E.; Politowski, C.; Silva, L.L.; Valente, M.T.; Petrillo, F.; Guéhéneuc, Y.G. What Skills Do IT Companies Look for in New Developers? A Study with Stack Overflow Jobs. Inf. Softw. Technol. 2021, 129, 106429.

- Terblanche, C.; Wongthongtham, P. Ontology-Based Employer Demand Management. Softw. Pract. Exp. 2015, 46, 469–492.

- Radovilsky, Z.; Hegde, V.; Acharya, A.; Uma, U. Skills Requirements of Business Data Analytics and Data Science Jobs: A Comparative Analysis. J. Supply Chain Oper. Manag. 2018, 16, 82–101.

- De Mauro, A.; Greco, M.; Grimaldi, M.; Ritala, P. Human Resources for Big Data Professions: A Systematic Classification of Job Roles and Required Skill Sets. Inf. Process. Manag. 2018, 54, 807–817.

- Gardiner, A.; Aasheim, C.; Rutner, P.; Williams, S. Skill Requirements in Big Data: A Content Analysis of Job Advertisements. J. Comput. Inf. Syst. 2018, 58, 374–384.

- Debao, D.; Yinxia, M.; Min, Z. Analysis of Big Data Job Requirements Based on K-Means Text Clustering in China. PLoS ONE 2021, 16, e0255419.

- Ozyurt, O.; Gurcan, F.; Dalveren, G.G.M.; Derawi, M. Career in Cloud Computing: Exploratory Analysis of In-Demand Competency Areas and Skill Sets. Appl. Sci. 2022, 12, 9787.

- Ningrum, P.K.; Pansombut, T.; Ueranantasun, A. Text Mining of Online Job Advertisements to Identify Direct Discrimination during Job Hunting Process: A Case Study in Indonesia. PLoS ONE 2020, 15, e0233746.

- Persaud, A. Key Competencies for Big Data Analytics Professions: A Multimethod Study. Inf. Technol. People 2021, 34, 178–203.

- Calanca, F.; Sayfullina, L.; Minkus, L.; Wagner, C.; Malmi, E. Responsible Team Players Wanted: An Analysis of Soft Skill Requirements in Job Advertisements. EPJ Data Sci. 2019, 8, 13.

- Egger, R.; Yu, J. A Topic Modeling Comparison Between LDA, NMF, Top2Vec, and BERTopic to Demystify Twitter Posts. Front. Sociol. 2022, 7, 886498.

- Wang, Z.; Chen, J.; Chen, J.; Chen, H. Identifying Interdisciplinary Topics and Their Evolution Based on BERTopic. Scientometrics 2023, 129, 7359–7384.

- Pejic-Bach, M.; Bertoncel, T.; Meško, M.; Krstić, Ž. Text Mining of Industry 4.0 Job Advertisements. Int. J. Inf. Manag. 2020, 50, 416–431.

- Gurcan, F. Major Research Topics in Big Data: A Literature Analysis from 2013 to 2017 Using Probabilistic Topic Models. In Proceedings of the 2018 International Conference on Artificial Intelligence and Data Processing, IDAP 2018, Malatya, Turkey, 28–30 September 2018; pp. 1–4.

- Indeed Job Search | Indeed. Available online: https://www.indeed.com/ (accessed on 16 January 2024).

- Gurcan, F.; Erdogdu, F.; Cagiltay, N.E.; Cagiltay, K. Student Engagement Research Trends of Past 10 Years: A Machine Learning-Based Analysis of 42,000 Research Articles. Educ. Inf. Technol. 2023, 28, 15067–15091.

- Feng, J.; Zhang, Z.; Ding, C.; Rao, Y.; Xie, H.; Wang, F.L. Context Reinforced Neural Topic Modeling over Short Texts. Inf. Sci. 2022, 607, 79–91.

- Hickman, L.; Thapa, S.; Tay, L.; Cao, M.; Srinivasan, P. Text Preprocessing for Text Mining in Organizational Research: Review and Recommendations. Organ. Res. Methods 2022, 25, 114–146.

- Blei, D.M.; Ng, A.Y.; Jordan, M.I. Latent Dirichlet Allocation. J. Mach. Learn. Res. 2003, 3, 993–1022.

- Gurcan, F. Identification of Mobile Development Issues Using Semantic Topic Modeling of Stack Overflow Posts. PeerJ Comput. Sci. 2023, 9, e1658.

- Boztaş, G.D.; Berigel, M.; Altınay, F. A Bibliometric Analysis of Educational Data Mining Studies in Global Perspective. Educ. Inf. Technol. 2024, 29, 8961–8985.

- Grootendorst, M. BERTopic: Neural Topic Modeling with a Class-Based TF-IDF Procedure. arXiv 2022, arXiv:2203.05794.

- Wu, X.; Nguyen, T.; Luu, A.T. A Survey on Neural Topic Models: Methods, Applications, and Challenges. Artif. Intell. Rev. 2024, 57, 18.

- Xu, K.; Lu, X.; Li, Y.-F.; Wu, T.; Qi, G.; Ye, N.; Wang, D.; Zhou, Z. Neural Topic Modeling with Deep Mutual Information Estimation. Big Data Res. 2022, 30, 100344.

- Gurcan, F.; Dalveren, G.G.M.; Cagiltay, N.E.; Soylu, A. Detecting Latent Topics and Trends in Software Engineering Research Since 1980 Using Probabilistic Topic Modeling. IEEE Access 2022, 10, 74638–74654.

- Blei, D.M. Probabilistic Topic Models. Commun. ACM 2012, 55, 77–84.

- Lukauskas, M.; Šarkauskaitė, V.; Pilinkienė, V.; Stundžienė, A.; Grybauskas, A.; Bruneckienė, J. Enhancing Skills Demand Understanding through Job Ad Segmentation Using NLP and Clustering Techniques. Appl. Sci. 2023, 13, 6119.

- Subakti, A.; Murfi, H.; Hariadi, N. The Performance of BERT as Data Representation of Text Clustering. J. Big Data 2022, 9, 15.

- Murakami, R.; Chakraborty, B. Investigating the Efficient Use of Word Embedding with Neural-Topic Models for Interpretable Topics from Short Texts. Sensors 2022, 22, 852.

- Raschka, S.; Mirjalili, V. Python Machine Learning: Machine Learning and Deep Learning with Python, Scikit-Learn, and TensorFlow 2; Packt Publishing Ltd.: Birmingham, UK, 2019.

- Kilinc, M.; Gurcan, F.; Soylu, A. LLM-Based Generative AI in Medicine: Analysis of Current Research Trends with BERTopic. IEEE Access 2025, 13, 157026–157043.

- Gurcan, F. Extraction of Core Competencies for Big Data: Implications for Competency-Based Engineering Education. Int. J. Eng. Educ. 2019, 35, 1110–1115.

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-Learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

- Scikit-Learn 1. Supervised Learning—Scikit-Learn 1.4.2 Documentation. Available online: https://scikit-learn.org/stable/supervised_learning.html (accessed on 29 April 2024).

- Booker, Q.; Rebman, C.; Wimmer, H.; Levkoff, S.; Powell, L.; Breese, J. Data Analytics Position Description Analysis: Skills Review and Implications for Data Analytics Curricula. Inf. Syst. Educ. J. 2024, 22, 76–87.

- Chang, J.C.; Lu, H.Q. Competency and Training Needs for Net-Zero Sustainability Management Personnel. Sustainability 2025, 17, 3244.

- Boselli, R.; Cesarini, M.; Mercorio, F.; Mezzanzanica, M. Classifying Online Job Advertisements through Machine Learning. Futur. Gener. Comput. Syst. 2018, 86, 319–328.

- Mostafaeipour, A.; Jahangard Rafsanjani, A.; Ahmadi, M.; Arockia Dhanraj, J. Investigating the Performance of Hadoop and Spark Platforms on Machine Learning Algorithms. J. Supercomput. 2021, 77, 1273–1300.

- Smaldone, F.; Ippolito, A.; Lagger, J.; Pellicano, M. Employability Skills: Profiling Data Scientists in the Digital Labour Market. Eur. Manag. J. 2022, 40, 671–684.

- Kantardzic, M. Data Mining: Concepts, Models, Methods, and Algorithms, 2nd ed.; John Wiley & Sons: Hoboken, NJ, USA, 2011; ISBN 9780470890455.

- Al-Azzam, N.; Shatnawi, I. Comparing Supervised and Semi-Supervised Machine Learning Models on Diagnosing Breast Cancer. Ann. Med. Surg. 2021, 62, 53–64.

- Verma, A.; Yurov, K.M.; Lane, P.L.; Yurova, Y.V. An Investigation of Skill Requirements for Business and Data Analytics Positions: A Content Analysis of Job Advertisements. J. Educ. Bus. 2019, 94, 243–250.

- Najafabadi, M.M.; Villanustre, F.; Khoshgoftaar, T.M.; Seliya, N.; Wald, R.; Muharemagic, E. Deep Learning Applications and Challenges in Big Data Analytics. J. Big Data 2015, 2, 1.

- Miller, S. Collaborative Approaches Needed to Close the Big Data Skills Gap. J. Organ. Des. 2014, 3, 26–30.

- Karakolis, E.; Kapsalis, P.; Skalidakis, S.; Kontzinos, C.; Kokkinakos, P.; Markaki, O.; Askounis, D. Bridging the Gap between Technological Education and Job Market Requirements through Data Analytics and Decision Support Services. Appl. Sci. 2022, 12, 7139.

- Alibasic, A.; Upadhyay, H.; Simsekler, M.C.E.; Kurfess, T.; Woon, W.L.; Omar, M.A. Evaluation of the Trends in Jobs and Skill-Sets Using Data Analytics: A Case Study. J. Big Data 2022, 9, 32.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).