1. Introduction

Generative artificial intelligence (AI) technologies, typified by ChatGPT4, Sora, and DeepSeek, are evolving at an unprecedentedly rapid pace. Accompanying this, risks related to data usage, misuse of generated content, and algorithmic abuse [1,2,3,4] are increasingly prominent. The 2025 AI Index Report [5], released by Stanford University’s Stanford Institute for Human-Centered AI, reveals that global AI-related harm incidents surged to 233 in 2024, representing a year-on-year increase of 56.4%.

AI governance has become a key priority for countries worldwide. Since 2023, countries have accelerated their reforms in the field of AI governance [6]. Major economies, represented by the European Union, the United States, the United Kingdom, and China, have diverged distinctly in their policies.

The European Union (EU) has established a horizontal, unified legislative framework with the world’s first AI Act [7] at its core, supplemented by supporting regulations such as the Digital Services Act (DSA) [8] and the Digital Markets Act (DMA) [9]. This forms a clearly tiered “hard” regulatory system and establishes the fundamental principle of “setting differentiated compliance obligations based on risk levels”, requiring ex ante assessment and full-process documentation for high-risk AI systems [10].

The United States has undergone a policy shift. Executive Order 14110, issued during the Biden administration, has been rescinded by the Trump administration. Currently, the country mainly relies on “soft” regulatory approaches such as local autonomy, industry rules, and voluntary initiatives for guidance [11]. Studies have shown that since 2018, more than 397 AI governance-related policy documents have accumulated at the U.S. state level, including 140 policies that have been legislated and implemented and 257 policies that are under proposal and discussion [12]. Overall, the United States focuses on minimum regulatory requirements, aiming to maintain competitive advantages by removing regulatory barriers.

The United Kingdom adheres to regulatory principles of encouraging technological innovation and industrial development. It tends to rely on existing legislation and regulatory authorities to gradually build a flexible and innovation-friendly strategy [13] and explicitly encourages collaboration among the government, regulatory authorities, industries, and civil society.

China has gradually established an AI governance system with Chinese characteristics. By continuously introducing specialized systems, it requires AI technology enterprises to fulfill obligations such as algorithm filing [14] and service filing [15] and to assume management responsibilities for deep synthesis content [16]. However, in practice, there is still room for improvement in this governance system. On the one hand, redundant and complicated filing information poses a severe challenge to the government’s professional review capabilities. According to monitoring data from the China Academy of Information and Communications Technology, the number of core AI technology enterprises in China has exceeded 5100, of which fewer than 10% meet the filing requirements of the country’s regulatory authorities [17]. On the other hand, the proliferation of complex specialized systems keeps government supervision in a state of passive response. With the continuous emergence of new algorithms and new services, this challenge will intensify [18].

In summary, although governments around the world have successively introduced governance frameworks and principles, the “one-size-fits-all” regulatory model, constrained by the rigidity and lag of traditional laws, cannot respond in a refined manner to new issues arising from AI applications, and it is highly prone to the dilemma of “chaos when laissez-faire, stagnation when over-regulated” [19]. It is foreseeable that the tension between AI enterprises’ demand for “specialized and refined supervision” [20] and the government’s shortage of administrative resources and rigid institutional system will drive the transformation of AI governance models from “responsive regulation” [21,22,23] to “agile governance” [24,25]. This transformation is crucial for aligning AI development with sustainability goals, as it ensures that technological innovation advances on the premise of safeguarding long-term social well-being and systemic stability.

From the perspective of the full life cycle of AI technology enterprises, this study explores how the multi-stakeholder collaborative mechanism evolves at the micro level. Furthermore, by rooting the development of AI in enduring social and environmental well-being, it proposes the “Credit Entropy” theory and applies it to the optimization of dynamic reward and punishment strategies. The study provides practical insights for enhancing the agility of AI governance approaches and for fostering the long-term systemic stability required for sustainable development.

Definition of Terms:

Evolutionary game theory is an interdisciplinary theory [26] that integrates biological evolution theory and traditional game theory. It studies how the strategy choices of boundedly rational agents within a population adjust and evolve over time during long-term repeated games, together with the stable equilibrium states that result.

Evolutionarily Stable Strategy (ESS) refers to a “self-sustaining” strategy within a population [27]. Once most individuals in the population adopt it, any small proportion of “mutant strategies” cannot displace it through natural selection and will ultimately be eliminated by the population, thus maintaining the long-term evolutionary stability of the original strategy.

In this study, Credit Entropy describes the extent to which market entities have historically complied with administrative supervision regulations, and it also serves as a variable reflecting the uncertainty of market entities’ credit risks. The higher the entropy value, the greater the uncertainty surrounding an entity’s credit status; conversely, the lower the entropy value, the more stable and predictable this credit status becomes.

2. Related Work

The establishment of an AI governance mechanism that promotes development and controls risks has attracted widespread attention from global stakeholders and is a key research focus in the academic community [28].

Classified by research perspective, the research relevant to this study focuses on two aspects. The first concentrates on the innovation and practice of governance paradigms. Scholars such as S. Sharma [29] and C. Vincent [30] argue that rapid technological iteration requires a shift from static to dynamic governance models. They also emphasize the need to establish multi-stakeholder collaborative mechanisms involving the government, enterprises, and the public. The second centers on credit supervision and collaboration: X. Hu [31] and Y. Wa [32] explore the institutional logic of credit supervision, and Wei Xiaochao [33] and others apply stochastic mutation theory to study the elasticity of credit supervision on e-commerce platforms, revealing the correlation between system mutation and elastic evolution. These studies collectively outline the practical logic of credit mechanisms in cross-departmental collaboration and third-party participation in supervision.

Classified by research method, in the field of theoretical analysis and framework construction, K. Jia [34] and others have constructed risk classification dimensions and policy toolkits through comparative analysis. Building on holistic governance theory and institutional logic, D. Steven [35] and others and W. Allison [36] have designed, respectively, credit supervision frameworks and analytical paths for the legitimacy of credit supervision. In the field of quantitative and modeling methods, Chen [37] and others have adopted spatial econometric methods to empirically test the economic effects of green credit supervision. Chen [38] and others have constructed stochastic evolutionary game models, combined with stochastic differential equations and numerical simulations, to explore the impact mechanism of digital trust supervision on financing credit risks.

In terms of using game theory to balance regulation and innovation, existing studies suggest that adopting the “Regulator-Intermediaries-Enterprise” model, in which third-party technical audit institutions (hereinafter referred to as “third-party institutions”) are integrated into the collaborative governance system, can both meet enterprises’ demand for specialized regulation to a certain extent and alleviate the government’s administrative burden. To illustrate, H. An has applied game theory to the financial sector to explore stable equilibrium strategies between financial institutions and regulatory authorities [39]; others have constructed an evolutionary game model involving pharmaceutical companies, third-party audit institutions, and medical insurance regulators in the healthcare field and established a “profit-sharing-joint liability” linkage mechanism, a measure reported to have raised the compliance rate from 60% to 85% [40,41]. In food safety regulation, X. Dai has proposed introducing a “violation-related reputation decay factor” and establishing “public reporting incentives” to reduce the government’s regulatory costs [42]; meanwhile, in digital platform regulation, Z. Feng has incorporated “public oversight” and designed a combination of “green subsidies + heavy penalties for non-compliance,” which drives the system toward a state of “enterprise emission reduction and moderate government regulation” [43]. However, some scholars have also noted that, driven by factors such as profit-seeking motives and vicious industry competition, third-party institutions may engage in improper practices, which in turn breed irregularities such as corruption and fraud [44]. Against the backdrop of competition in the AI field, regulated entities, supported by algorithmic technologies, will see their information advantages further amplified [45], potentially leading to more frequent illegal activities [46]. Furthermore, to curb the harms caused by non-compliant AI, governments need to strengthen administrative law enforcement; yet issues such as the application of laws and the exercise of discretionary power in this process are likely to trigger new public controversies.

In summary, the relatively static “dualistic” governance theory applicable to traditional industries lacks effective means to break down information barriers and achieve precise and efficient supervision and thus struggles to meet the practical needs of AI governance. Existing research mostly focuses on macro-level governance models or credit supervision in a single field. It lacks tracking of the dynamic coupling of micro-strategies among multi-stakeholders across different stages of AI evolution, thus failing to reveal the trajectory of behavioral collaboration throughout the full life cycle. Furthermore, in quantitative research, the Credit Entropy theory is rarely incorporated. While relevant game models do focus on stakeholder interactions, they do not integrate cross-domain credit data (e.g., the correlation between enterprises’ historical compliance records and multi-scenario behaviors). Additionally, theoretical research inadequately examines the institutional coupling between credit supervision and AI governance.

This study extends the credit theory and proposes a dynamic mechanism based on enterprises’ Credit Entropy, which takes enterprises’ historical compliance performance as a key consideration for the government to implement nonlinear dynamic reward and punishment. The significance of this mechanism for the sustainable development of AI technology is reflected in the following:

It addresses the limitations of short-term regulatory measures and underpins the long-term compliant development of AI technology enterprises. Governments calculate enterprises’ Credit Entropy through daily collection of their historical violation records and establish “Credit Entropy profiles”. This long-term mechanism not only focuses on oversight of current improper behaviors but also prioritizes continuous supervision throughout the entire life cycle. It can identify potential risks in AI technology iteration in advance, preventing the technology from deviating from the sustainable path due to regulatory lag and making it far better adapted to governing rapidly iterating technologies.

It improves the precision of regulatory resources and balances technological innovation and risks. Leveraging enterprises’ “Credit Entropy profiles”, governments can move to further implement classified and hierarchical supervision, ultimately enabling appropriate intervention at different development stages of new tech enterprises. This makes it far better adapted to the fast-booming new technology sector.

It guides the “innovation-responsibility” synergy of AI technology and anchors it in sustainable value. Through linked dynamic rewards and punishments, it urges enterprises to internalize compliance responsibilities into innovation drivers, avoiding the sacrifice of social value (e.g., ethical risks) for short-term interests and ensuring that AI technology achieves sustainable development in the balance between innovative breakthroughs and social responsibilities.

Given this, this study is of significant necessity.

Innovation in research perspective. This study breaks through the analytical boundaries of a single subject or field. From a micro perspective, it dynamically tracks the strategic evolution of all participants under the multi-stakeholder collaborative mechanism throughout the full cycle of technological evolution. It proposes the Credit Entropy theory to characterize enterprises’ historical compliance performance and applies it to optimize the nonlinear fluctuation control strategy, so as to enhance the agility of the collaborative mechanism.

Interpretability of methods. The study adopts evolutionary game theory and credit theory to construct an analytical model, integrates numerical simulation with sensitivity analysis to identify key disturbance factors, and introduces a comparative perspective to verify the adaptability of nonlinear fluctuation control strategies. The methodological chain is complete, with solid data support.

Practical significance. The method addresses the shortcomings of existing research in adapting multi-stakeholder collaborative mechanisms to agile AI governance. It also provides new theoretical and practical references for advancing research on multi-stakeholder collaborative AI governance.

3. Problem Statement

The development of new technologies is heavily shaped by factors such as policy and financing, which is a fact repeatedly validated in the history of sci-tech industry evolution. In the early stage of the mobile internet industry’s rise, the government of a certain country provided targeted support to local enterprises through special fund allocations, paired with lenient market access policies. Within three years, the number of mobile application development enterprises in the country grew by over threefold compared to the pre-policy period. However, as government incentive policies continued to expand, the absence of commensurate oversight mechanisms for such high-level investment easily created opportunities for rent-seeking. Some local enterprises colluded with third-party entities to falsify R&D progress reports and achievement acceptance materials and illegally embezzled government subsidies. Later, when regulatory authorities imposed penalties on non-compliant enterprises, it sparked public debate: lenient penalties were criticized for “enabling fiscal waste,” while severe penalties stirred disputes about “hampering the upgrading of the communications sector.” Ultimately, the actual utilization efficiency of the special funds fell by 40% against expectations.

Now, AI technology innovation is defined by its knowledge intensity and rapid iteration. It demands significant enterprise investment in high-performance computing resources, alongside large-scale platform development and collaboration across operation and maintenance teams. Thus, the full life cycle development of AI enterprises aligns with the contextual logic outlined above.

To address the aforementioned challenges, resolve related contradictions, and realize sustainable development, this study abstracts these issues into the following model. Governments guide the behaviors of stakeholders through reward and punishment strategies and supervise the market through active sampling inspections and public reports. Third-party institutions assist governments in making decisions on the market access and exit of enterprises (positive monitoring results are a prerequisite for enterprises to obtain benefits), conducting timely risk assessments, and proposing response measures. The behaviors of enterprises and third-party institutions are affected by collaborative mechanisms, including government incentives, enterprise rent-seeking, and profit-sharing.

The research framework is shown in Figure 1.

Regarding the selection of research methods, the evolutionary game model breaks through the assumption of traditional game theory that states that “fully rational subjects pursue the maximization of immediate interests” and is more in line with the “bounded rationality” characteristics of real-world subjects caused by information asymmetry and cognitive limitations. Instead of focusing on “the optimal strategy selection of individual subjects”, it emphasizes the “changing trends of strategy frequencies at the population level”. It proves an effective approach to analyzing dynamic strategic changes among boundedly rational multi-stakeholder groups in long-term repeated games. However, traditional evolutionary game models assume different payoff values as fixed, a setup that leads to information asymmetry among stakeholders in these models; further, since each stakeholder prioritizes maximizing its own interests, this pursuit of self-interest results in game imbalance and strategic fluctuations. Consequently, governments struggle to design effective supervision schemes, and issues like non-compliance, rent-seeking, and collusion persist despite repeated efforts to curb them.

To tackle this problem, this study integrates Credit Entropy theory into the model, drawing on an analysis of traditional evolutionary game models. By setting nonlinear payoff parameters, this mechanism effectively mitigates system fluctuations and drives all stakeholders’ strategies toward a relatively stable, predictable, and cooperative positive equilibrium.

The research path of this study is as follows.

First, we adopt the evolutionary game method to establish a stochastic dynamic system. From a micro perspective, we track the trajectories of strategic evolution of governments, enterprises, and third-party institutions across different stages of technological evolution.

In the initial stage of new technologies, government supervision is often guided by the principles of “promoting the innovative development” and “inclusive and prudent”. It entrusts third-party institutions to provide support for enterprise R&D. At this stage, all revenues of third-party institutions come from government appropriations. As new technologies mature, governments reduce appropriations to third-party institutions and gradually shift to market-oriented management. At this stage, in addition to government appropriations, third-party institutions also obtain profit shares from enterprises.

Further, we adopt numerical simulation methods to conduct sensitivity analysis of each parameter. This aims to explore the key factors that cause fluctuations in the collaborative system and clarify their mechanism of action.

Finally, we introduce the Credit Entropy theory. Enterprises’ historical compliance performance is incorporated into dynamic nonlinear control. Through simulation comparison, we verify its performance in the agility optimization of the multi-stakeholder collaborative system.

4. Evolutionary Game Model

AI technology iterates rapidly, and market competition is fierce. To seize opportunities, some enterprises tend to engage in irregularities. In a multi-stakeholder collaborative mechanism, such enterprises will not pass the technical review conducted by third-party institutions. This means they cannot operate to gain profits. But, if such enterprises seek rent from third-party institutions, and if their “collusion” succeeds, they may operate irregularly and obtain excess profits.

Governments provide phased financial support to AI startups and third-party institutions while being subject to public oversight. To address public concerns, governments conduct random inspections on listed technical services and supervise the performance of duties by third-party institutions. For social issues, such as interest infringement caused by enterprises’ illegal listing, governments need to invest resources in compensation.

Third-party institutions conduct technical reviews of enterprises and issue business licenses upon approval. Driven by interests, third-party institutions, in an effort to reduce operating costs, tend to collude with non-compliant enterprises to defraud business licenses.

4.1. Model Variables and Assumptions

Assumption 1.

All three stakeholders are boundedly rational, and their strategy choices gradually evolve toward the optimal strategy over time.

Assumption 2.

The strategy space of enterprises is compliant operation K1 and non-compliant operation K2; that of governments is active supervision M1 and inactive supervision M2; and that of third-party institutions is strict performance of duties N1 and neglect of duties N2. The probabilities of adopting the first strategy are x(t), y(t), and z(t), respectively; all are functions of time and are abbreviated as x, y, and z.

Assumption 3.

Enterprises engaging in compliant operations (compared with traditional operations) need to invest additional R&D costs (such as data procurement, computing power rental, and talent incentives) C1. In this case, they will receive financial support under governments’ active supervision and obtain operating revenue (where, representing the revenue share that the enterprises provide to the third party). Enterprises engaging in non-compliant operations will definitely choose to seek rent.

Assumption 4.

When enterprises choose non-compliant, if the collusion fails, they will not obtain the business licenses; they will have no revenue and incur no government penalties. If the collusion succeeds, enterprises need to transfer benefits B1 to the third-party institutions. At this time, enterprises will obtain excess profit (where). Under governments’ active supervision, they will be penalized (where, indicating that governments impose heavier penalties on collusive behaviors).

Assumption 5.

Governments’ active supervision is reflected in providing financial support to compliant enterprises and third-party institutions (E1 and E2, respectively) and imposing penalties on irregular behaviors. Active supervision will gain social reputation benefits F. For social issues caused by collusive behaviors, governments need to invest resources in compensation, at a cost of R.

Assumption 6.

When governments exercise inactive supervision, they will suffer reputation loss L due to inaction. In this case, for social issues caused by collusive behaviors, the delay in intervention leads to secondary impacts, and the governance cost increases by a factor of k, with the total cost being (where).

Assumption 7.

Third-party institutions’ strict performance of duties means conducting technical reviews in accordance with regulatory requirements and the latest standards, at a cost of C2. Under governments’ active supervision, they will receive financial support E2 and obtain a revenue share when enterprises are listed and operating.

Assumption 8.

When third-party institutions neglect their duties, they incur no actual monitoring cost (cost = 0). Under governments’ active supervision, if the collusion fails, they are penalized P2 and obtain revenue share; if the collusion succeeds, they receive benefit transfer B1 and revenue share and are penalized (where).

4.2. Model Construction

Based on the above assumptions, the mixed-strategy game matrix of enterprises, governments, and third-party institutions is shown in Table 1.

4.3. Model Analysis

4.3.1. Enterprises

The expected revenue v11 for an enterprise choosing Strategy 1, the expected revenue v12 for choosing Strategy 2, and the average expected revenue are, respectively, as follows:

Based on the single-population replicator dynamics theory proposed by Peter D. Taylor and L. B. Jonker in their 1978 paper Evolutionarily Stable Strategies and Game Dynamics, the replicator dynamics equation for enterprises’ strategy selection is constructed as follows:
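The payoff entries behind v11 and v12 come from Table 1, so only the generic single-population form is sketched here. The symbol \bar{v}_1 for the average expected revenue is an assumption introduced for illustration:

```latex
\begin{aligned}
\bar{v}_1 &= x\,v_{11} + (1 - x)\,v_{12},\\
\frac{\mathrm{d}x}{\mathrm{d}t} &= x\,\bigl(v_{11} - \bar{v}_1\bigr) = x\,(1 - x)\,\bigl(v_{11} - v_{12}\bigr).
\end{aligned}
```

The factor x(1 − x) vanishes at x = 0 and x = 1, so both pure strategies are always rest points; between them, the sign of v11 − v12 decides whether the share of compliant enterprises grows or shrinks.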

4.3.2. Government

The expected revenue v21 for governments choosing Strategy 1, the expected revenue v22 for choosing Strategy 2, and the average expected revenue are, respectively, as follows:

The replicator dynamics equation for governments’ strategy selection is constructed as follows:

4.3.3. Third-Party Institutions

The expected revenue v31 for a third-party institution choosing Strategy 1, the expected revenue v32 for choosing Strategy 2, and the average expected revenue are, respectively, as follows:

The replicator dynamics equation for third-party institutions’ strategy selection is constructed as follows:
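Once the payoff gaps are known, the three replicator equations can be integrated numerically. The following is a minimal sketch, assuming forward-Euler integration and caller-supplied payoff-gap functions (payoff of Strategy 1 minus payoff of Strategy 2 for each population). The names `replicator_step` and `simulate` and the constant gaps in the example are hypothetical placeholders, not the payoffs of Table 1.

```python
from typing import Callable, Tuple

State = Tuple[float, float, float]             # (x, y, z) strategy frequencies
Gap = Callable[[float, float, float], float]   # payoff(strategy 1) - payoff(strategy 2)


def _clip(p: float) -> float:
    """Keep a frequency inside [0, 1] despite rounding error."""
    return min(1.0, max(0.0, p))


def replicator_step(state: State, gaps: Tuple[Gap, Gap, Gap], dt: float) -> State:
    """One forward-Euler step of dp/dt = p (1 - p) * gap_p(x, y, z)."""
    x, y, z = state
    gx, gy, gz = (g(x, y, z) for g in gaps)
    return (
        _clip(x + dt * x * (1.0 - x) * gx),
        _clip(y + dt * y * (1.0 - y) * gy),
        _clip(z + dt * z * (1.0 - z) * gz),
    )


def simulate(state: State, gaps: Tuple[Gap, Gap, Gap],
             dt: float = 0.01, steps: int = 5000) -> State:
    """Integrate the three-population system and return the final state."""
    for _ in range(steps):
        state = replicator_step(state, gaps, dt)
    return state


# Hypothetical constant gaps: the first strategy always pays (+1) or never pays (-1).
def always_positive(x: float, y: float, z: float) -> float:
    return 1.0


def always_negative(x: float, y: float, z: float) -> float:
    return -1.0


start = (0.5, 0.5, 0.5)
all_cooperate = simulate(start, (always_positive, always_positive, always_positive))
firms_defect = simulate(start, (always_negative, always_positive, always_positive))
```

With these placeholder gaps, the first run drifts toward the corner (1, 1, 1) and the second toward (0, 1, 1). Realistic gaps would depend on x, y, and z through the payoff matrix, and a higher-order integrator may be preferable when the dynamics oscillate.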

4.4. Stability Analysis of Evolutionary Strategies

According to the properties of dynamic system equilibria, the system equilibrium points must satisfy the condition that the time derivatives of the strategy frequencies are zero: dx/dt = dy/dt = dz/dt = 0. Solving this system of equations simultaneously yields 15 equilibrium points. Among them are eight pure-strategy equilibrium points: E1(0, 0, 0), E2(1, 0, 0), E3(0, 1, 0), E4(0, 0, 1), E5(1, 1, 0), E6(1, 0, 1), E7(0, 1, 1), and E8(1, 1, 1).

Proposition 1:

The game system has four possible ESSs, E3(0, 1, 0), E5(1, 1, 0), E7(0, 1, 1), and E8(1, 1, 1), as follows:

- (1) When , the ESS of the system is E7(0, 1, 1);

- (2) When , the ESS of the system is E8(1, 1, 1);

- (3) When , the ESS of the system is E5(1, 1, 0);

- (4) When , the ESS of the system is E3(0, 1, 0).

Proof. The stability of the replicator dynamics system is determined by Lyapunov’s first (indirect) method. That is, if all eigenvalues of the Jacobian matrix evaluated at an equilibrium point have negative real parts, then this point is an asymptotically stable point of the evolutionary game, which is the ESS mentioned earlier and which corresponds to a strict Nash equilibrium state. □

The Jacobian matrix is described as follows:

where

By substituting the eight equilibrium points into the Jacobian matrix, the eigenvalues of all the equilibrium points can be calculated, as shown in Table 2.

According to the actual situation, the second eigenvalue in E1 is always positive, so E1(0, 0, 0) is unstable. The third eigenvalue in E4 is always positive, so E4(0, 0, 1) is unstable. The third eigenvalue in E6 is always positive, so E6(1, 0, 1) is unstable. In this study, it is assumed that governments value reputation and respond to public expectations; that is, F is a relatively large number. Therefore, the second eigenvalue in E2 is positive, making E2(1, 0, 0) unstable.
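At the eight pure-strategy points the eigenvalue check simplifies. Every off-diagonal Jacobian entry of a replicator system carries a factor p(1 − p), which vanishes at the corners, so the eigenvalues are just the diagonal entries. The sketch below illustrates this, assuming each population follows dp/dt = p(1 − p)·gap. The gap functions and their values are hypothetical stand-ins, not the expressions in Table 2.

```python
from itertools import product
from typing import Callable, List, Tuple

Corner = Tuple[int, int, int]
Gap = Callable[[float, float, float], float]


def corner_eigenvalues(corner: Corner, gaps: Tuple[Gap, Gap, Gap]) -> List[float]:
    """Eigenvalues of the replicator Jacobian at a pure-strategy corner.

    d/dp [p (1 - p) g] = (1 - 2p) g + p (1 - p) dg/dp, and the second term
    is zero when p is 0 or 1, so each eigenvalue is (1 - 2p) * g(corner).
    """
    x, y, z = corner
    return [(1 - 2 * p) * g(x, y, z) for p, g in zip(corner, gaps)]


def is_asymptotically_stable(corner: Corner, gaps: Tuple[Gap, Gap, Gap]) -> bool:
    """True when all three eigenvalues are strictly negative."""
    return all(ev < 0 for ev in corner_eigenvalues(corner, gaps))


# Hypothetical gaps: enterprises gain from compliance only under active
# supervision (y = 1); governments and third-party institutions always gain
# from their first strategy.
example_gaps = (
    lambda x, y, z: 2.0 * y - 1.0,   # enterprises
    lambda x, y, z: 1.0,             # governments
    lambda x, y, z: 0.5,             # third-party institutions
)

stable_corners = [corner for corner in product((0, 1), repeat=3)
                  if is_asymptotically_stable(corner, example_gaps)]
```

Under these illustrative gaps only (1, 1, 1) passes the test. Replacing `example_gaps` with the payoff differences derived from Table 1 would give the sign conditions that separate the four candidate ESSs.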

4.5. Analysis of Strategy Evolution Trajectories from a Life Cycle Perspective

Based on the stability conditions of equilibrium points shown in Table 2 and drawing on the approach to deducing multi-stakeholder collaborative evolution and mechanisms in the literature [47], this study divides the life cycle of AI technology enterprises into three stages and analyzes the evolutionary trajectories of different stages.


4.5.1. Initial Stage: Support and Cultivation

Corollary 1.

When , the ESS of the system is E7(0, 1, 1). Enterprises operate non-compliantly, governments supervise actively, and third-party institutions perform their duties strictly. At this stage, the market is still young, and a stable input–output model has not yet formed. Enterprises bear R&D cost C1, while the short-term operating income is uncertain. Although governments invest financial support E1, it has not yet helped enterprises stabilize expectations. Enterprises face survival difficulties and choose non-compliant operations.

4.5.2. Development Stage: Innovation Breakthrough

Corollary 2.

When , the ESS of the system is E8(1, 1, 1). Enterprises operate compliantly, governments supervise actively, and third-party institutions perform their duties strictly. At this stage, technological research has made significant breakthroughs, and applications have achieved industrialization benefits. means that governments supervise actively, continuously providing financial support to enterprises and third-party institutions. indicates that third-party institutions, under governments’ supervision, perform their duties strictly and obtain a share of enterprise profits.

Corollary 3.

When , the ESS of the system is E5(1, 1, 0). Enterprises operate compliantly, governments supervise actively, and third-party institutions neglect their duties (adopt a “lying flat” approach). At this stage, with the reduction and removal of technological barriers, the number of new market entrants is increasing, and thus the monitoring costs of third-party institutions are rising significantly. To maintain profitability, third-party institutions take the risk of being penalized, choose to slack off, and still participate in the profit share of enterprises .

4.5.3. Crisis Stage: Reaching “Collusion”

Corollary 4.

When , the ESS of the system is E3(0, 1, 0). Enterprises and third-party institutions operate irregularly and reach “collusion”. At this point, the market profit margin is gradually saturated. Some enterprises believe that if they collude with third-party institutions, their remaining revenue will still exceed the profits from compliant operations even after government penalties. means that collusive behaviors bring high governance costs to governments. Government supervision fails, and the market moves toward disorder.

5. Evolutionary Game Model Based on Credit Entropy

In the three-party evolutionary game, the strategy choices are not only affected by the other two parties but may also form a chain reaction through the “third-party indirect effect”. Considering the rapid iteration of AI technology, the dynamics and randomness of the system may be more significant. Under lenient supervision, the “collusion” between enterprises and third-party institutions may trigger herd-like imitation of group strategies. Under strict supervision, a “black swan” event may lead to significant deviations in enterprise strategies (for example, under the influence of public opinion, Didi directly shut down its ride-hailing service after the ride-sharing incident), resulting in cyclical suppression of the strategies of all stakeholders. That is, at this point, the evolutionary game system has no ESS.

System fluctuations will reduce evolutionary efficiency and give rise to social issues, such as waste of administrative resources and fund corruption. To this end, this study introduces “credit” into the multi-stakeholder collaborative mechanism, proposes the Credit Entropy theory, and establishes a credit-based dynamic nonlinear reward and punishment mechanism.

5.1. Model Variables and Assumptions Based on Credit Entropy

Credit Entropy reflects the uncertainty of the credit risk of stakeholders (enterprises or third-party institutions in this study). It describes how consistently a market entity has complied with administrative regulatory provisions in the past. The higher the entropy value, the stronger the uncertainty of the entity’s credit status; the lower the entropy value, the more stable and predictable its credit status.

Assume that a stakeholder has $s$ possible credit states, and the probability of the $i$-th state occurring is $p_i$ (satisfying $0 \le p_i \le 1$ and $\sum_{i=1}^{s} p_i = 1$). The calculation formula for the Credit Entropy $H$ of the market entity is

$$H = -\sum_{i=1}^{s} p_i \log p_i$$

Based on the symmetry of the entropy function, a composite function

is constructed, with a regulatory factor

(satisfying

) as follows:

When x = 0, H(0) = 0 and F(0) = 0; when x = 1, H(1) = 0 and F(1) = 1. As x increases from 0 to 1, the increasing trend of x dominates: the volatility of the entropy term is suppressed by a, so F remains monotonically increasing overall. This function can serve as a feedback regulator that controls system volatility and ultimately achieves system convergence. When system fluctuations are small, the intensity of rewards and punishments is weak, avoiding excessive intervention that would disrupt the system’s spontaneous stability. When system fluctuations increase, the intensity of rewards and punishments automatically strengthens: by increasing the cost of “deviant behaviors” (punishments) or the benefits of “compliant behaviors” (rewards), it suppresses the spread of fluctuations, offsets the impact of disturbances on the system, and keeps fluctuations within an acceptable range.
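The boundary behavior described above (zero entropy at x = 0 and x = 1, with symmetry in between) can be checked numerically. The following is a minimal sketch that assumes Credit Entropy takes the standard Shannon form with a natural logarithm; the function names `credit_entropy` and `binary_entropy` are illustrative and do not come from the study, and the composite function F is deliberately not implemented because its exact form is not reproduced here.

```python
import math


def credit_entropy(probs):
    """Shannon-form entropy of a credit-state distribution (assumed form, natural log)."""
    if any(p < 0 for p in probs) or abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probabilities must be non-negative and sum to 1")
    # States with p == 0 contribute nothing (limit of p * log(1/p) as p -> 0).
    return sum(p * math.log(1.0 / p) for p in probs if p > 0)


def binary_entropy(x):
    """Entropy of a two-state entity that complies with probability x."""
    return credit_entropy([x, 1.0 - x])


if __name__ == "__main__":
    for x in (0.0, 0.25, 0.5, 0.75, 1.0):
        print(f"x = {x:.2f}  H(x) = {binary_entropy(x):.4f}")
```

Under this assumption, an entity whose past behavior is evenly split across states has the highest entropy, while an entity that is always in one state (always compliant or always non-compliant) has zero entropy, which matches the interpretation of entropy as the unpredictability of credit status.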

In this section, we adjust Assumptions 4 and 5 in Section 4.1 as follows.

5.1.1. Linear Reward and Punishment Scheme

When governments choose active supervision, if enterprises operate compliantly, they will receive a financial subsidy of ; if non-compliant, they will be subject to an administrative penalty of . For third-party institutions, if they perform compliantly, they will receive a financial subsidy of ; if non-compliant, they will be subject to an administrative penalty of .

5.1.2. Nonlinear Dynamic Reward and Punishment Scheme

When governments choose active supervision, the reward mechanism remains unchanged. We adjust the administrative penalties for non-compliant operations. Enterprises will be subject to an administrative penalty of ; third-party institutions will be subject to an administrative penalty of .

To simplify the analysis, in this section, the penalty coefficient, governance coefficient, and entropy coefficient are all set as constants (, etc.), with the rest remaining unchanged.

5.2. Model Construction and Analysis

Based on the above assumptions and following the same derivation as before, the eigenvalues of the system’s Jacobian matrix under the nonlinear dynamic reward and punishment mechanism are shown in Table 3. We then use numerical simulation to illustrate how this mechanism controls the system’s volatility.

6. Numerical Simulation

In this section, we use MATLAB R2016b for numerical simulation to explore how key factors, such as government support, innovation costs, multiples of excess profits, and intensity of penalties for violations, drive phase transitions across the full life cycle of AI technology development. On this basis, we further verify the effectiveness of the Credit Entropy-based dynamic nonlinear agile governance mechanism proposed above.

The setting of external parameter variables, such as costs and revenues in this model, refers to Reference [48] and incorporates the opinions from a number of technological innovation enterprises (including China Mobile Jiutian Artificial Intelligence Technology Co., Ltd., Shenzhen, China and China Mobile Information Technology Co., Ltd., Shanghai, China), relevant government departments, and experts and scholars.

The array settings are as follows:

6.1. Parameter Sensitivity Analysis for Static Reward and Punishment Mechanism

6.1.1. Initial Stage

Substitute Array 1 into the evolutionary game model in Section 4.1. The game system satisfies the stability conditions of E7(0, 1, 1).

At this stage, enterprises choose non-compliant operations, governments actively perform to provide financial support, and third-party institutions perform their duties strictly.

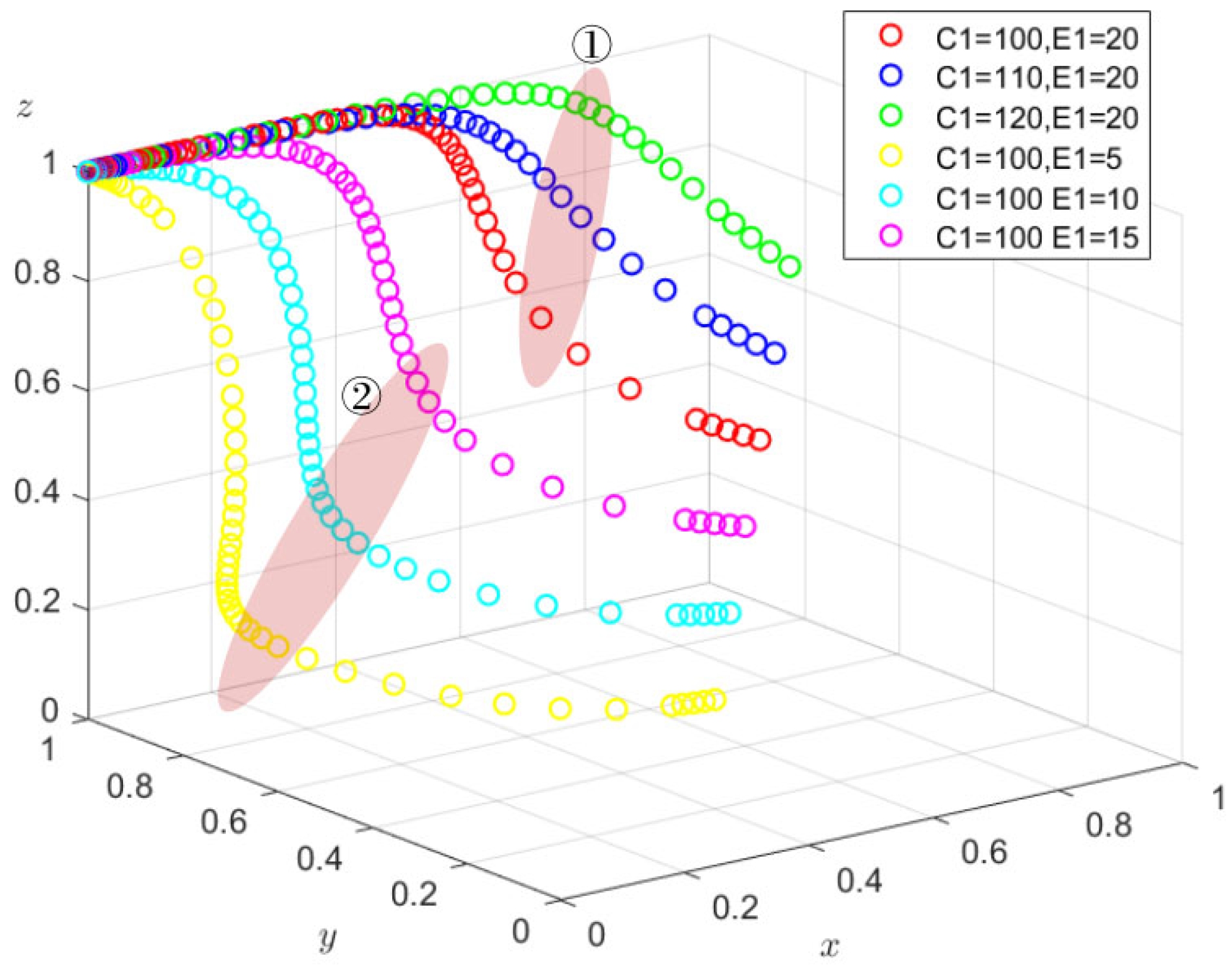

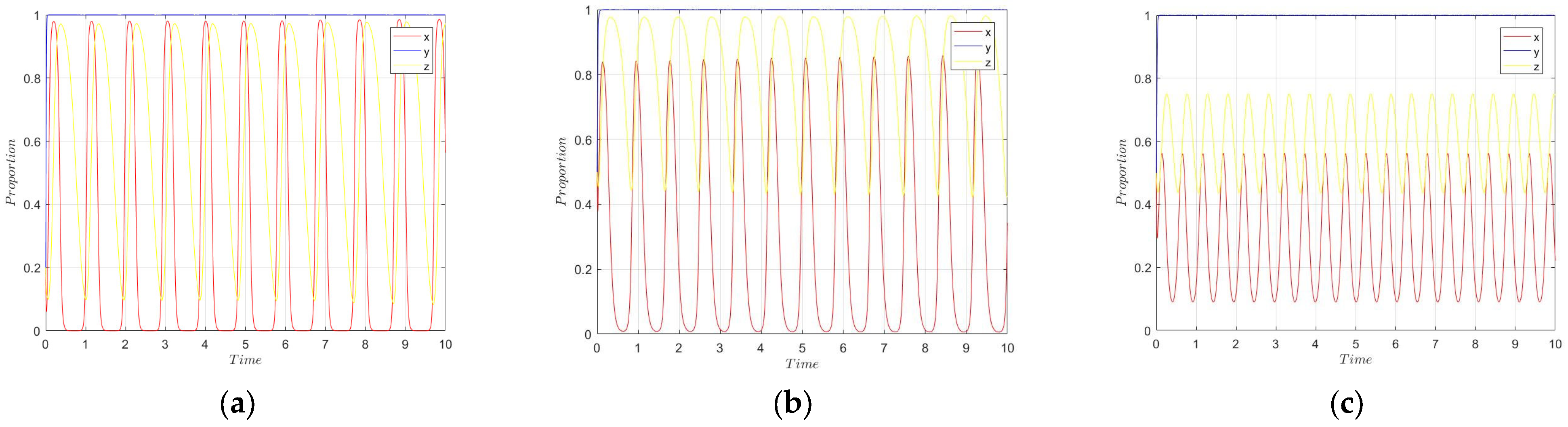

Different combinations of initial values are assigned to x, y, and z. This part explores the impact of parameter changes on the system’s evolutionary path under different initial strategy combinations of (x, y, z). The simulation results are shown in the figures.

Figure 2 indicates that, for different initial values of x, y, and z, the system eventually evolves to the equilibrium point E7(0, 1, 1), which is consistent with the conclusion of Corollary 1. In the initial stage of the evolution, the final state is therefore fixed: regardless of the initial intentions of enterprises, governments, or third-party institutions, enterprises will end up operating non-compliantly, governments will actively perform and provide financial support, and third-party institutions will strictly perform their duties.
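The convergence of different initial strategy combinations to a single corner point can be illustrated with a small replicator-dynamics simulation. The sketch below is not the model of this study: the payoff-gap functions and all coefficients in `payoff_gaps` are hypothetical, chosen only so that the corner (0, 1, 1) is the unique stable point, and forward-Euler integration stands in for whatever solver the original MATLAB simulation used.

```python
import itertools


def payoff_gaps(x, y, z):
    """Hypothetical payoff gaps (first strategy minus second) for each party."""
    fx = -0.5 + 0.2 * y * z        # enterprises: compliance never pays here
    fy = 0.4 - 0.1 * x             # governments: active supervision always pays
    fz = 0.3 + 0.1 * y - 0.1 * x   # third parties: strict performance always pays
    return fx, fy, fz


def simulate(x, y, z, dt=0.01, steps=10_000):
    """Forward-Euler integration of the three replicator equations."""
    for _ in range(steps):
        fx, fy, fz = payoff_gaps(x, y, z)
        x, y, z = (x + dt * x * (1 - x) * fx,
                   y + dt * y * (1 - y) * fy,
                   z + dt * z * (1 - z) * fz)
    return x, y, z


def corner_eigenvalues(x, y, z):
    """At a pure-strategy corner the Jacobian is diagonal; return its diagonal."""
    fx, fy, fz = payoff_gaps(x, y, z)
    return (1 - 2 * x) * fx, (1 - 2 * y) * fy, (1 - 2 * z) * fz


def stable_corners():
    """Corners at which every Jacobian eigenvalue is negative."""
    return [c for c in itertools.product((0, 1), repeat=3)
            if all(e < 0 for e in corner_eigenvalues(*c))]


if __name__ == "__main__":
    for start in [(0.2, 0.5, 0.8), (0.5, 0.5, 0.5), (0.8, 0.2, 0.2)]:
        print(start, "->", tuple(round(v, 4) for v in simulate(*start)))
    print("stable corners:", stable_corners())
```

The eigenvalue check mirrors the stability analysis used for the corollaries: a corner point is asymptotically stable when all eigenvalues of the Jacobian at that point are negative, and the simulation shows trajectories from several starting points ending at that same corner.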

In the initial stage of technological development, enterprises hold that technological innovation is a key factor in gaining market recognition. Thus, they invest substantial funds in research and development as well as testing. This is an inevitable decision made by enterprises based on the laws of market competition and development.

As shown in circled part 1 of the figure, since the market is still in its embryonic stage at this point, its capacity to absorb and transform technological achievements remains limited. Even if enterprises invest substantial resources in striving for technological breakthroughs (with C1 increasing), they can hardly obtain positive feedback in the short term. They therefore fail to generate sufficient economic benefits and may adhere to compliant operations only temporarily; soon after, survival difficulties will force them to produce low-quality products or provide shoddy services, which ultimately leads to non-compliant operations.

As shown in circled part 2 of the figure, even if the government increases financial and institutional support for enterprises (with E1 rising) to promote the healthy development of the industry, the overall trend does not change significantly. This indicates that government financial support fails to play its positive role at this stage. Some enterprises even choose to rely on government support, shifting the testing costs that they should bear themselves to third-party institutions. This further leads to a significant decline in innovation efficiency and traps technological development in a predicament.

6.1.2. Development Stage

Substitute Array 2 into the evolutionary game model in Section 4.1. In the development stage, there are two possible collaborative innovation mechanisms: E8(1, 1, 1) and E5(1, 1, 0). With the initial value (x, y, z) = (0.2, 0.5, 0.8), this section analyzes the impact of parameter changes on the system’s evolutionary path.

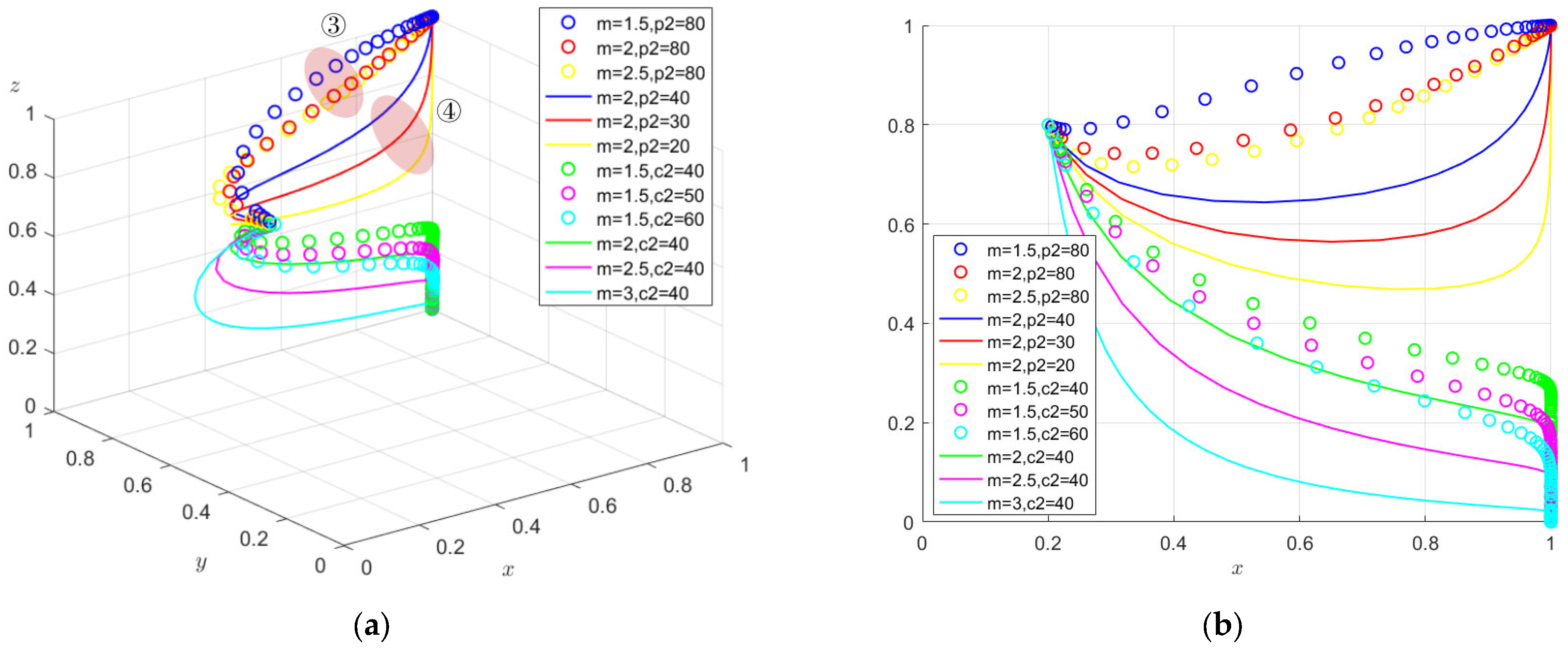

Figure 3 shows the evolution from E7 to E8. As enterprises’ input–output efficiency undergoes a significant reversal, the system’s stable point will evolve from E7(0, 1, 1) to E8(1, 1, 1). At this point, AI technology enters the development stage. This indicates that technological breakthroughs and the transformation of research outcomes are the key drivers for the game system to transition from the initial stage to the development stage.

In the process of system evolution, technological breakthroughs interact with changes in market maturity. The multi-stakeholder collaborative mechanism plays a key role. Adjusting to C1 < Q1 means that technological breakthroughs have brought substantial industrialization benefits for enterprises. At this point, enterprises’ attitudes toward R&D investment will undergo a substantial shift. Meanwhile, such active investment will further drive the growth of enterprise revenue, forming a virtuous cycle.

As shown in circled parts 3 and 4 of the figure, as enterprises’ profits continue to increase (reflected by the rise in Q1), high-risk, high-return illegal profits also increase and become more attractive (reflected by the rise in m). To attract more enterprises and jointly share the dividends brought by technological development, governments may choose to loosen law enforcement, reduce the fines for violations (with P2 decreasing), and foster an innovation-friendly policy environment. As can be seen from the curvature of the curve in the figure, the strategies of third-party institutions are disturbed; their decisions briefly reverse, yet the final stable decisions remain unchanged.

In this stage, the multi-stakeholder collaborative mechanism plays a significant positive role. It guides enterprises to focus on technological R&D and compliant operations by establishing a sound market order and appropriately regulating enterprise behaviors, thus driving the industry into a virtuous cycle of development.

Transition from E8 to E5. As more enterprises engage in open-source R&D, the cumulative monitoring costs of third-party institutions rise significantly. The system’s stable point evolves from E8(1, 1, 1) to E5(1, 1, 0). From the full life cycle perspective, it marks the start of the entire system moving toward the crisis stage.

In the process of system evolution, the operation of third-party institutions is closely linked to factors such as the compliance of AI technology enterprises, the input costs for implementing monitoring, and the intensity of government supervision. Continued increases in C2 signify a rise in the actual monitoring cost input of third-party institutions; that is, an increase in operational pressure. At this point, the strategies of third-party institutions will gradually shift from strict performance of duties to “passive response” or “inaction”.

There is a clear logic behind this shift. In the development stage, enterprises enjoy an innovation-friendly policy environment. Whether or not third-party institutions perform their duties strictly, they are highly likely to obtain profit shares from enterprises. In this scenario, if third-party institutions directly reduce monitoring costs, they can both enjoy government financial support and share in enterprises’ profits. Even though such passive performance carries the risk of punishment, low fines fail to form an effective deterrent and can hardly alter their decisions. Meanwhile, as monitoring costs increase sharply, third-party institutions converge toward passive performance more quickly. This indicates that in the multi-stakeholder collaborative mechanism, third-party institutions are key to ensuring the positive and orderly operation of the system. If third-party institutions perform their duties passively and neglect their responsibilities, potential violations by some enterprises in the market become difficult to detect, posing a serious threat to the sustainable development of the entire industry.

6.1.3. Crisis Stage

Substitute Array 3 into the evolutionary game model in Section 4.1. The system’s stable point will evolve to E3(0, 1, 0).

At this stage, both enterprises and third-party institutions choose non-compliant operations. They engage in “collusion,” triggering a market crisis.

Figure 4 shows the evolution from E5 to E3. The neglect of duties by third-party institutions means that the supervisory role of the multi-stakeholder collaborative mechanism will continue to weaken. This provides opportunities for enterprises to engage in non-compliant operations. A large number of enterprises that fail technical audits gain access to the market through fraud and obtain excess profits by doing so. If m is adjusted to increase (i.e., illegal profits rise), the stable point of the game system will shift from E5(1, 1, 0) to E3(0, 1, 0). This process illustrates that, driven by excess profits, non-compliant enterprises and third-party institutions that perform their duties passively reach a “collusion”.

Once such “collusion” takes shape, the system will stabilize in the crisis stage. At this stage, non-compliant enterprises need the cover of third-party institutions to continuously secure excess profits; meanwhile, third-party institutions that neglect their duties seek to reduce their operational costs and share the benefits from violations through colluding with non-compliant enterprises. This phenomenon continues to accumulate and deteriorate, ultimately leading to the vicious cycle of “bad money driving out good money”. The living space of compliant enterprises is increasingly squeezed, violations prevail in the market, and the fairness and healthy order of the entire market are severely damaged, which will ultimately threaten the sustainable development of the market.

To sum up, these links form a tight causal chain: third-party institutions neglect their duties, non-compliant enterprises seize the opportunity to seek rents for excess profits, the system’s stable point shifts, and “collusion” forms, which ultimately leads to the vicious cycle of “bad money driving out good money.” Together, these links trace the evolution of the market from order to crisis.

6.2. Analysis of Agile Effectiveness for the Dynamic Reward and Punishment Mechanism Based on Credit Entropy

From the above analysis of the full life cycle, it can be seen that changes in key parameters are the driving factors behind changes in system equilibrium points. Between equilibrium states, a small number of individuals will undergo sudden strategy changes due to “disturbances” of key factors, thereby obtaining high returns. This, in turn, triggers learning and imitation by other individuals, ultimately leading to the absence of an ESS (Evolutionarily Stable Strategy) in the system, which then enters a fluctuating state.

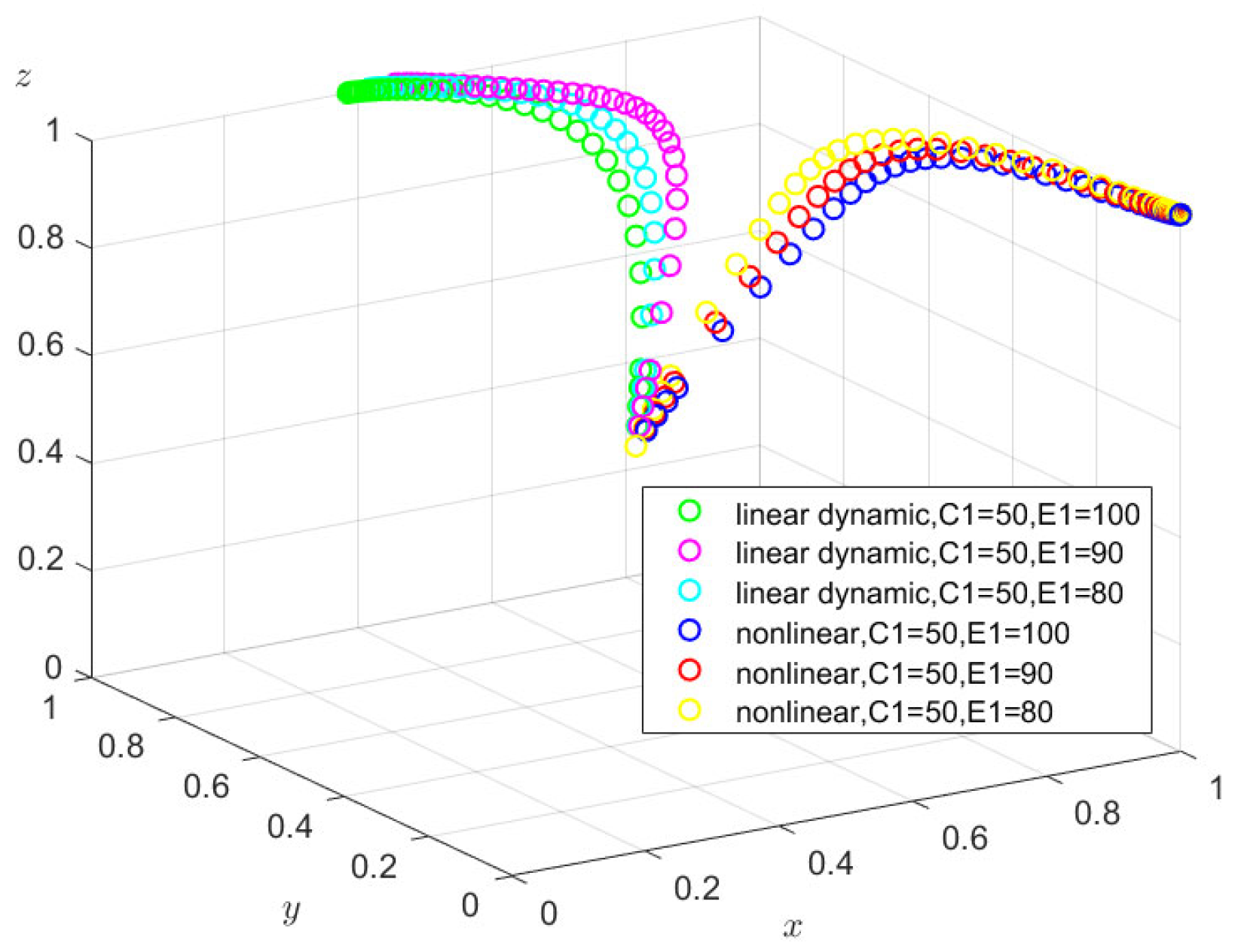

Substitute Array 4 into the Credit Entropy-based dynamic reward and punishment model in Section 5.2, and consider initial strategies of 0.2, 0.5, and 0.8. The simulation results are shown as follows.

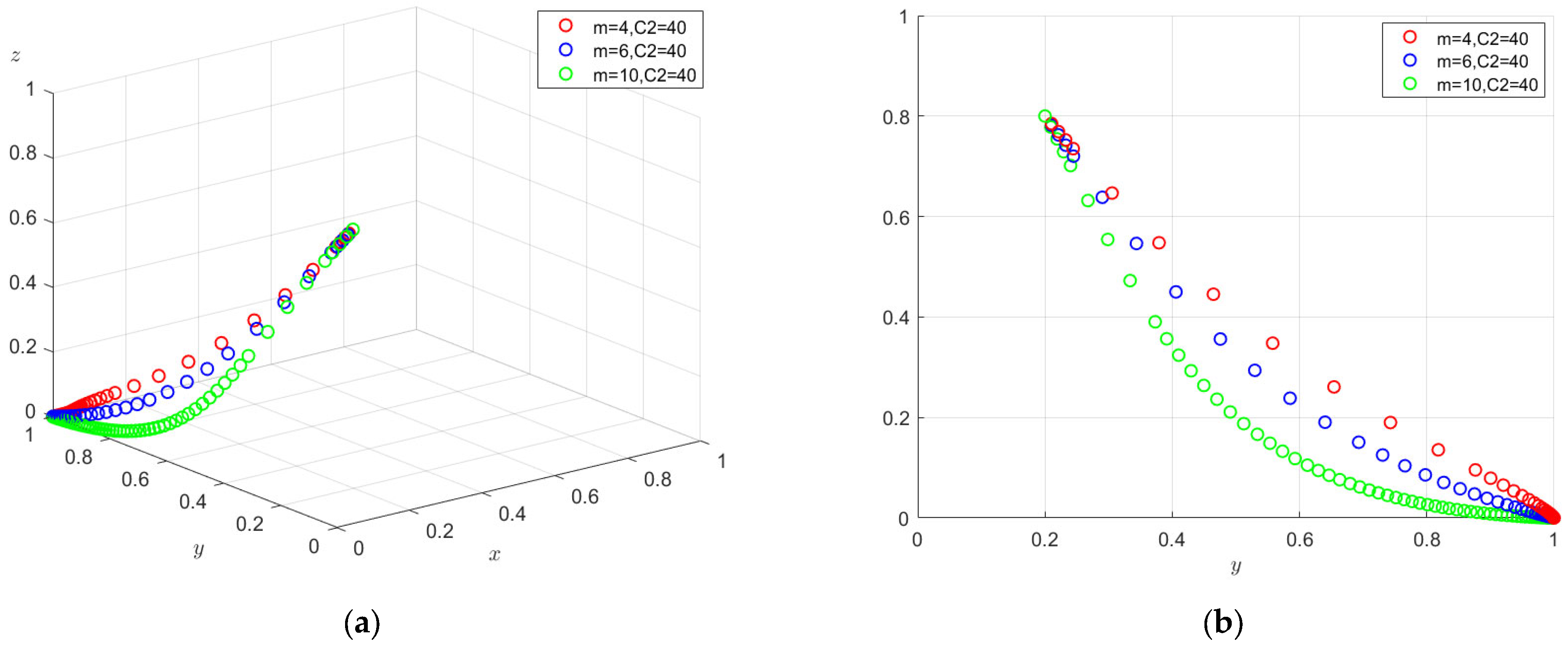

As can be seen from Figure 5 (3D demonstration diagram) and Figure 6 (2D demonstration diagram), the system is in a fluctuating state due to disturbances at this point. While government behavior remains stable at active supervision, the behaviors of enterprises and third-party institutions fluctuate repeatedly. The amplitude of fluctuations varies with the initial strategies. When stakeholders enter the system with a higher willingness to comply, the amplitude of fluctuation deviation is smaller (yellow circle); when they enter with a lower willingness to comply, the amplitude of fluctuation deviation is larger (blue circle).

The complexity of the tripartite evolutionary game inherently gives the system higher volatility. The dynamic reward and punishment mechanism described in Section 5.1 can adjust intervention intensity dynamically by responding to fluctuation intensity in real time. In essence, it is a “closed-loop control” over such volatility, which not only prevents the system from falling into inefficient cycles due to uncontrolled fluctuations but also maintains the flexibility of evolution by minimizing unnecessary interventions, ultimately guiding the system to converge stably to the optimal equilibrium.

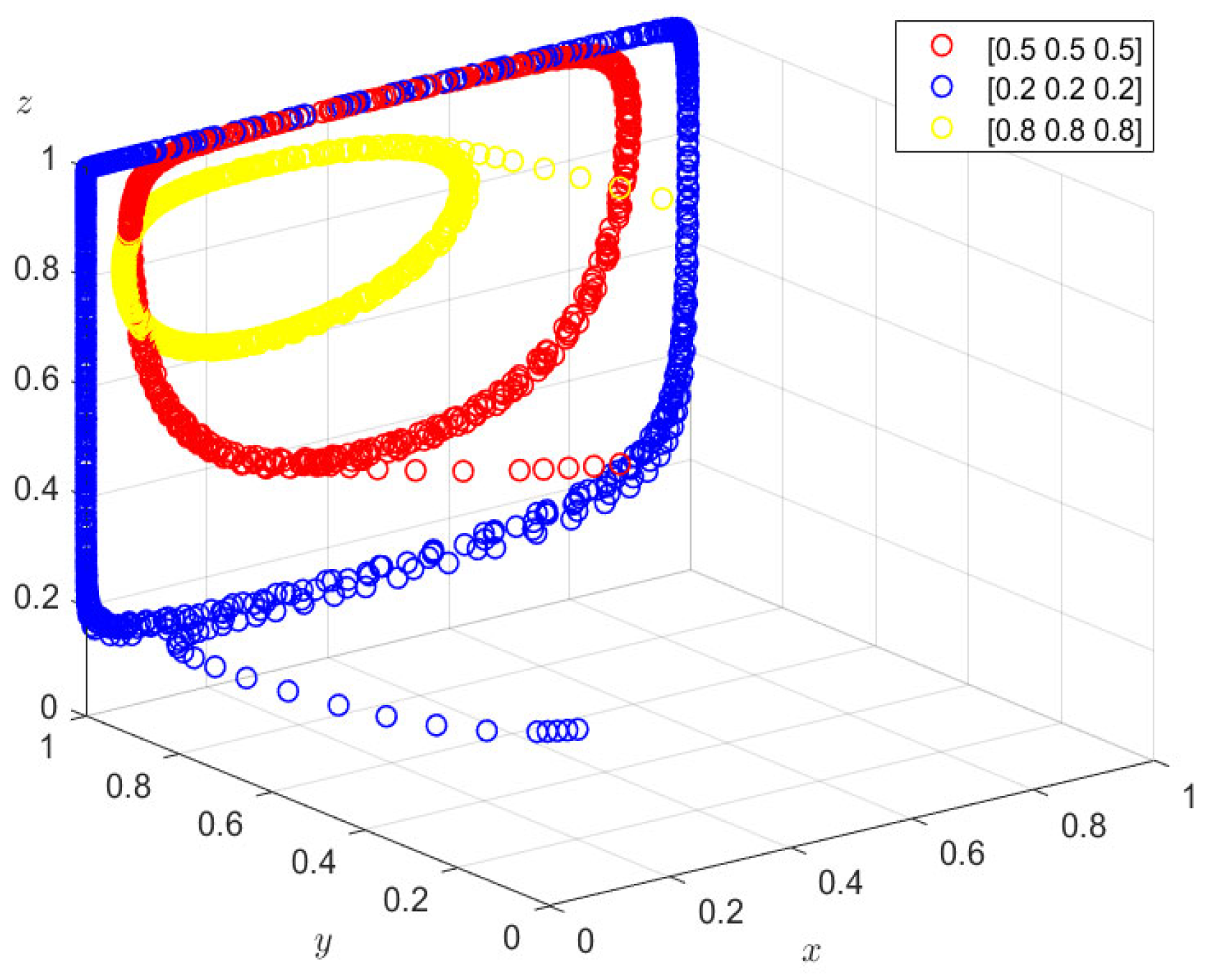

In this section, the linear and nonlinear dynamic reward and punishment strategies proposed above will be applied to the system, respectively.

With the initial strategy set as (0.5, 0.5, 0.5), Array 4 is substituted into both the Credit Entropy-based linear dynamic reward and punishment system proposed in

Section 5.1.1 and the nonlinear system proposed in

Section 5.1.2. The simulation diagrams are shown as

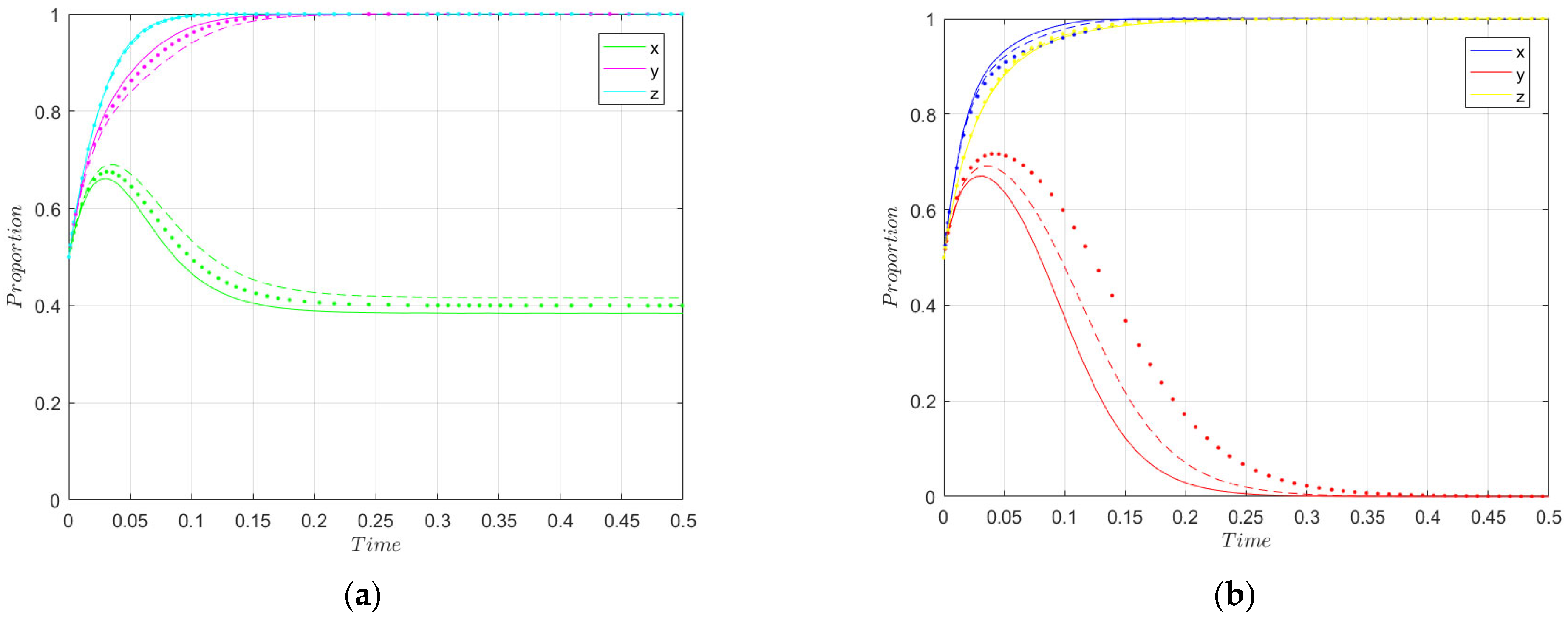

Figure 7.

As indicated in Figure 8a, the fluctuation amplitudes of enterprises and third-party institutions are quickly controlled. This shows that the linear strategy can significantly curb fluctuations, but the convergence result is not ideal. Even if the incentive amount is increased at this point, the stable state of the system remains unchanged.

Figure 8b indicates that the nonlinear strategy further controls the system to a more desirable state. Here, both enterprises and third-party institutions choose to perform their duties in compliance. After a short period of iteration, governments may adopt a relaxed regulatory strategy, enabling the market to move toward the optimal form of agile governance. It can be seen from this that optimizing the dynamic system control strategy can effectively curb potential fluctuations in the tripartite collaborative mechanism, thereby enabling the system game to achieve a healthy, stable, and ideal steady state.

7. Conclusions and Implications

China’s government supervision of internet platforms has evolved from the early approach of “prioritizing development over governance” to the gradual realization of “regular supervision.” Such inclusive and prudent regulatory policies—continuously adjusted in line with the stages of technological development and characterized by encouraging innovation—are regarded as a key driver behind the rapid and successful growth of China’s digital economy.

To meet the needs for agile governance required by the rapid iteration of AI technology, this study adopts an evolutionary game approach to depict the operational laws throughout the full life cycle of AI technology development. It reveals the mechanism of action of key disturbance factors under the multi-stakeholder collaborative mechanism and proposes effective fluctuation control methods. From the initial stage to the development stage, the multi-stakeholder collaborative mechanism plays a positive role, providing a stable framework for the sustainable iteration of AI technology; from the development stage to the crisis stage, shifts in third-party institutions’ attitudes toward performing their duties trigger market fluctuations. To resolve the crisis and escape the predicament, a dynamic nonlinear reward and punishment mechanism based on Credit Entropy is constructed. This mechanism effectively eliminates the deviation of overly lenient or severe law enforcement in “one-size-fits-all” supervision and enhances the agility performance of the multi-stakeholder collaborative regulatory mechanism. When setting the lookback period for Credit Entropy, it is necessary to consider the full life cycle of AI enterprises’ development. For example, for the initial stage, a 1-year lookback period is appropriate, as information concentration is high and Credit Entropy remains low; for the development stage, a 3-year lookback period is needed to meet cohesive regulatory needs, linking the previous and subsequent stages; for the crisis stage, a 5-year lookback period is recommended, which can cover user feedback and incident response, ensuring Credit Entropy plays an effective regulatory role and safeguarding the long-term sustainability of the AI ecosystem.
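The stage-dependent lookback periods suggested above (1, 3, and 5 years) can be expressed as a simple windowing step that precedes the entropy calculation. The following is a minimal sketch under stated assumptions: the `(year, state)` record layout, the state labels, and the use of relative frequencies to estimate credit-state probabilities are all hypothetical choices made for illustration, not procedures described in this study.

```python
from collections import Counter

# Lookback periods in years per life-cycle stage, as suggested in the text.
LOOKBACK_YEARS = {"initial": 1, "development": 3, "crisis": 5}


def state_probabilities(records, stage, current_year):
    """Estimate credit-state probabilities from records inside the lookback window.

    records: iterable of (year, state) pairs (hypothetical record layout).
    Probabilities are relative frequencies, which is an assumed estimator.
    """
    window = LOOKBACK_YEARS[stage]
    recent = [state for year, state in records
              if current_year - window < year <= current_year]
    if not recent:
        raise ValueError("no records inside the lookback window")
    counts = Counter(recent)
    return {state: n / len(recent) for state, n in counts.items()}


if __name__ == "__main__":
    history = [(2020, "violation"), (2021, "compliant"), (2022, "compliant"),
               (2023, "violation"), (2024, "compliant")]
    for stage in LOOKBACK_YEARS:
        print(stage, state_probabilities(history, stage, 2024))
```

A longer window brings older violations back into the distribution, so the same enterprise can look fully predictable over one year and noticeably less so over five, which is why the choice of lookback period matters for how strongly the entropy-based penalty responds.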

The entire process follows a clear logical thread (stage characteristics → core contradictions → solutions), with tight logical connections between each link. This fully demonstrates the innovation and effectiveness of the method proposed in this study in addressing the contradiction between the agility of technological iteration and the finiteness of government resources in AI governance, a balance critical to aligning AI advancement with sustainable development goals.

In the initial market stage, third-party institutions assist the government in making scientific decisions regarding market access, licensing, and exit. They also conduct timely risk assessments and analyses of AI and propose targeted prevention and response measures. This can improve administrative efficiency to a certain extent, alleviate the government’s burden, and thus protect, encourage, and promote enterprise innovation, injecting momentum into the sustainable growth of the digital economy. At this stage, raising the access threshold for third-party institutions and providing them with sufficient financial and resource support will help guide enterprises to exert their innovative efficiency and focus on achieving technological breakthroughs. Drawing on international practices, the core indicators for the access threshold of third-party institutions should include Legal Person and Capital Strength Indicators, Premises and Facilities Indicators, Service Experience and Track Record Indicators, Internal Governance and Contingency Plan Indicators, Team Competence and Configuration Indicators, and Regulatory Compliance and Filing Indicators.

In the market development stage, allowing third-party institutions to operate in a market-oriented manner and participate in profit-sharing from enterprises’ compliant operations will further promote the transformation of research outcomes while alleviating the pressure of government financial support. At this stage, strengthening the independence and professionalism of third-party monitoring institutions will help leverage the regulatory role of the market economy, stimulate the vitality of market entities, and ensure healthy and orderly operation, sustaining the vitality of the digital economy in line with principles of equitable and responsible development.

In the market crisis stage, “collusive” behaviors can trigger corruption, harm public interests, and cause systemic fluctuations. These fluctuations make it difficult for government regulatory rules to be implemented stably, leading to a vicious cycle of “bad money driving out good money.” At this stage, actively constructing nonlinear dynamic reward and punishment strategies based on enterprises’ Credit Entropy and optimizing the multi-stakeholder agile governance mechanism will effectively suppress fluctuations and facilitate adaptive governance of enterprises’ operational behaviors and market competition order, upholding the sustainable order of AI markets and protecting social public welfare as a cornerstone of sustainability.

As mentioned above, major economies worldwide are actively exploring AI governance practices. The multi-stakeholder collaborative governance mechanism proposed in this study is aligned with the regulatory framework of the EU’s AI Act, which explicitly stipulates that “high-risk AI systems must undergo conformity assessment by designated bodies before being placed on the market”. The formal implementation and entry into force of the EU’s conformity assessment mechanism are contingent on member states establishing designated bodies and rolling out unified technical standards—standards that, to date, have not yet been harmonized.

The dynamic reward and punishment mechanism based on corporate Credit Entropy proposed herein is logically consistent with the “classified and hierarchical supervision” and “credit disciplinary system for untrustworthy entities” currently adopted in China’s market supervision system. According to public data, since China’s State Administration for Market Regulation (SAMR) integrated corporate credit into government random inspection processes, the rate of detecting non-compliance issues in inspections has increased by an average of 51.9% compared with the period prior to the implementation of the classification system. Furthermore, as corporate credit has been leveraged to enhance market constraints, it has driven a steady improvement in corporate credit ratings. In October 2024, China’s corporate credit index reached 158.83 points, an increase of 0.47 points month-on-month (from September), and law-abiding, honest operations have become the market norm [49].

Therefore, it can be inferred that the corporate Credit Entropy-based collaborative governance mechanism proposed in this study holds potential for national-level implementation and is also applicable to economies like China.

8. Limitations and Future Work

From the perspective of corporate Credit Entropy, this paper develops an evolutionary game model to examine the dynamic collaborative evolution process of multiple stakeholders in AI governance. It employs numerical simulation to identify the key disturbance factors that trigger imbalance and fluctuation in the game system, while also validating the effectiveness of the nonlinear dynamic reward and punishment mechanism (developed based on corporate Credit Entropy) in mitigating system fluctuations and driving convergence toward a positive equilibrium.

However, there are still limitations in the following aspects:

- (1) There is a lack of empirical evidence. In the future, specific collaborative innovation cases can be used to verify and improve the research framework of this paper.

- (2) It also lacks detailed discussions on the access thresholds and indicators for third-party institutions. As AI technology iterates rapidly, the demand for such professional review bodies to participate in collaborative governance will grow increasingly pressing. Future work could draw on international practices to explore the development of relevant indicator systems.