Performance Comparison of LSTM and ESN Models in Time-Series Prediction of Solar Power Generation

Abstract

1. Introduction

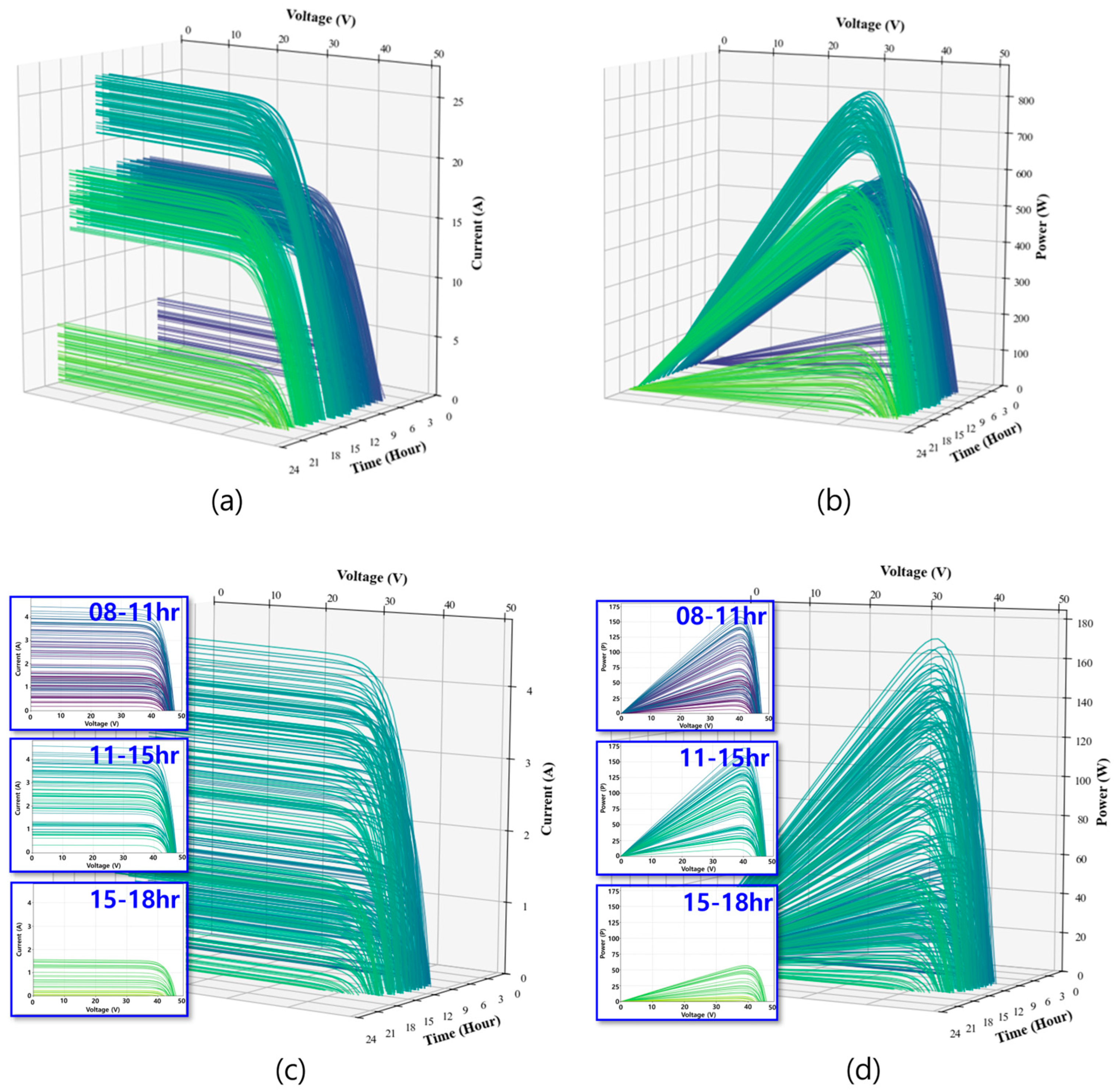

2. I-V and P-V Characteristics

3. Principle of LSTM Networks and Echo State Networks

3.1. Machine Learning in Time-Series Prediction

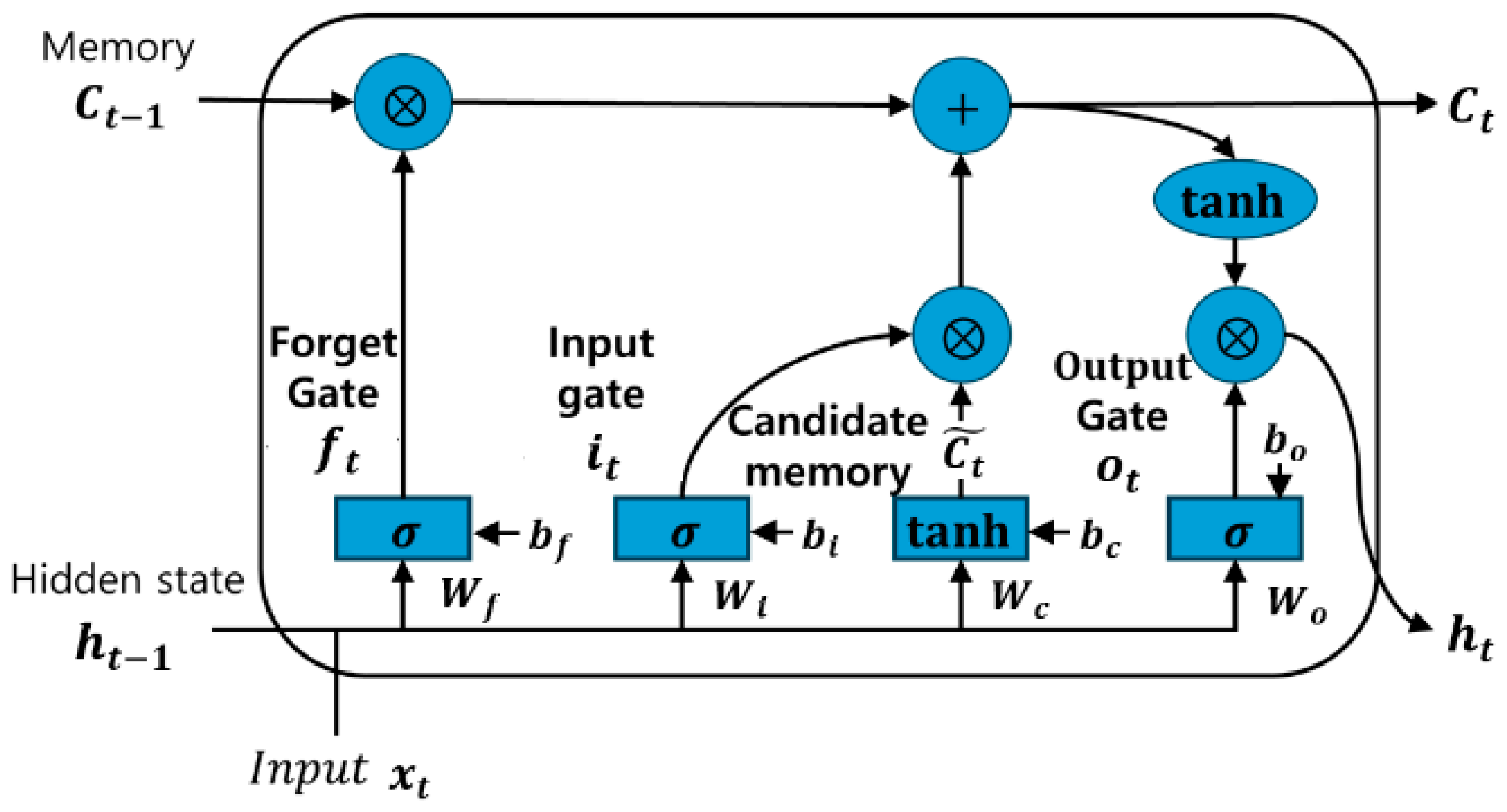

3.2. LSTM Networks

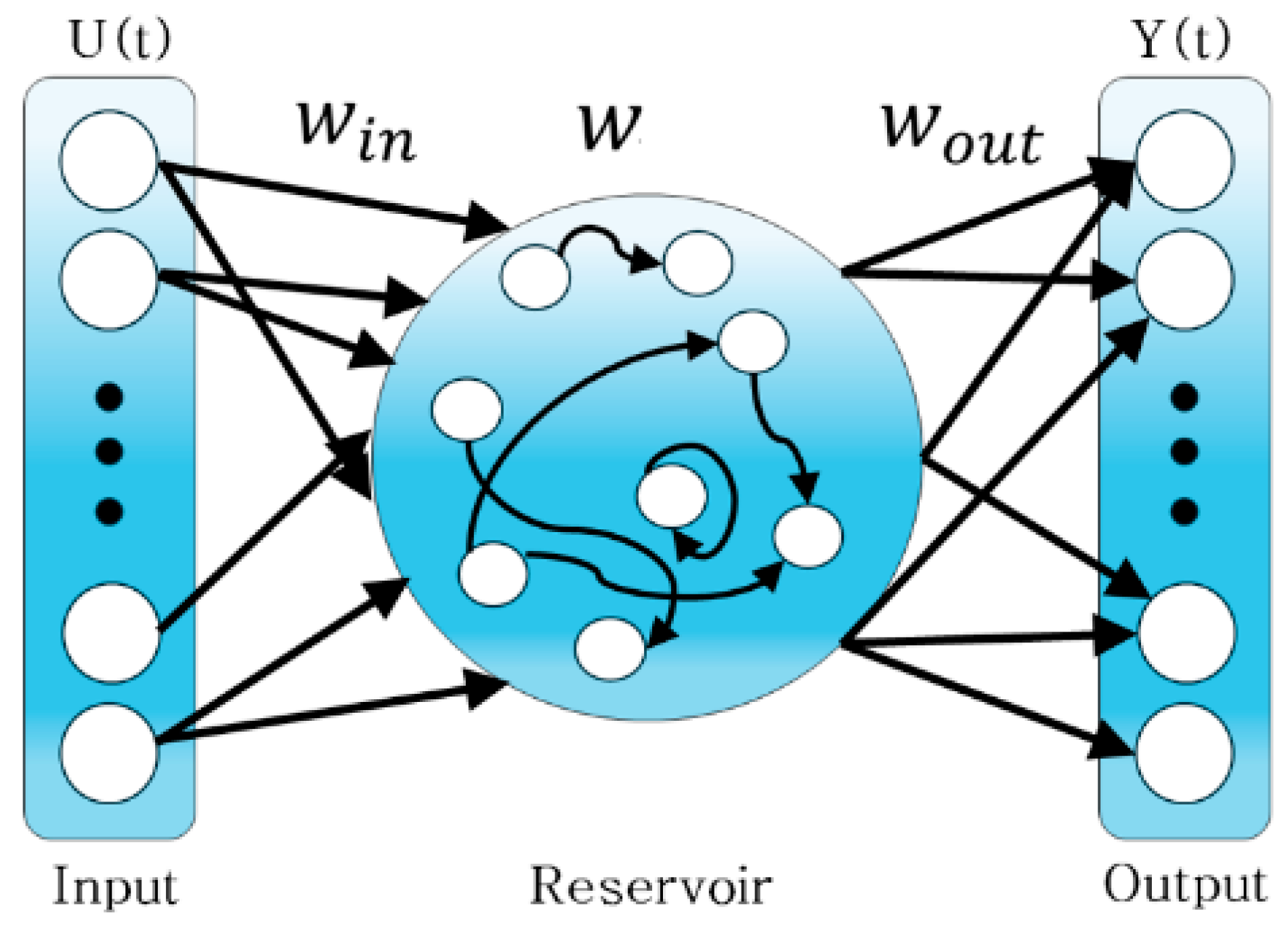

3.3. Echo State Networks

3.4. Performance Evaluation Metrics

3.5. Experimental Design and Validation

4. Simulation Results and Performance Comparison

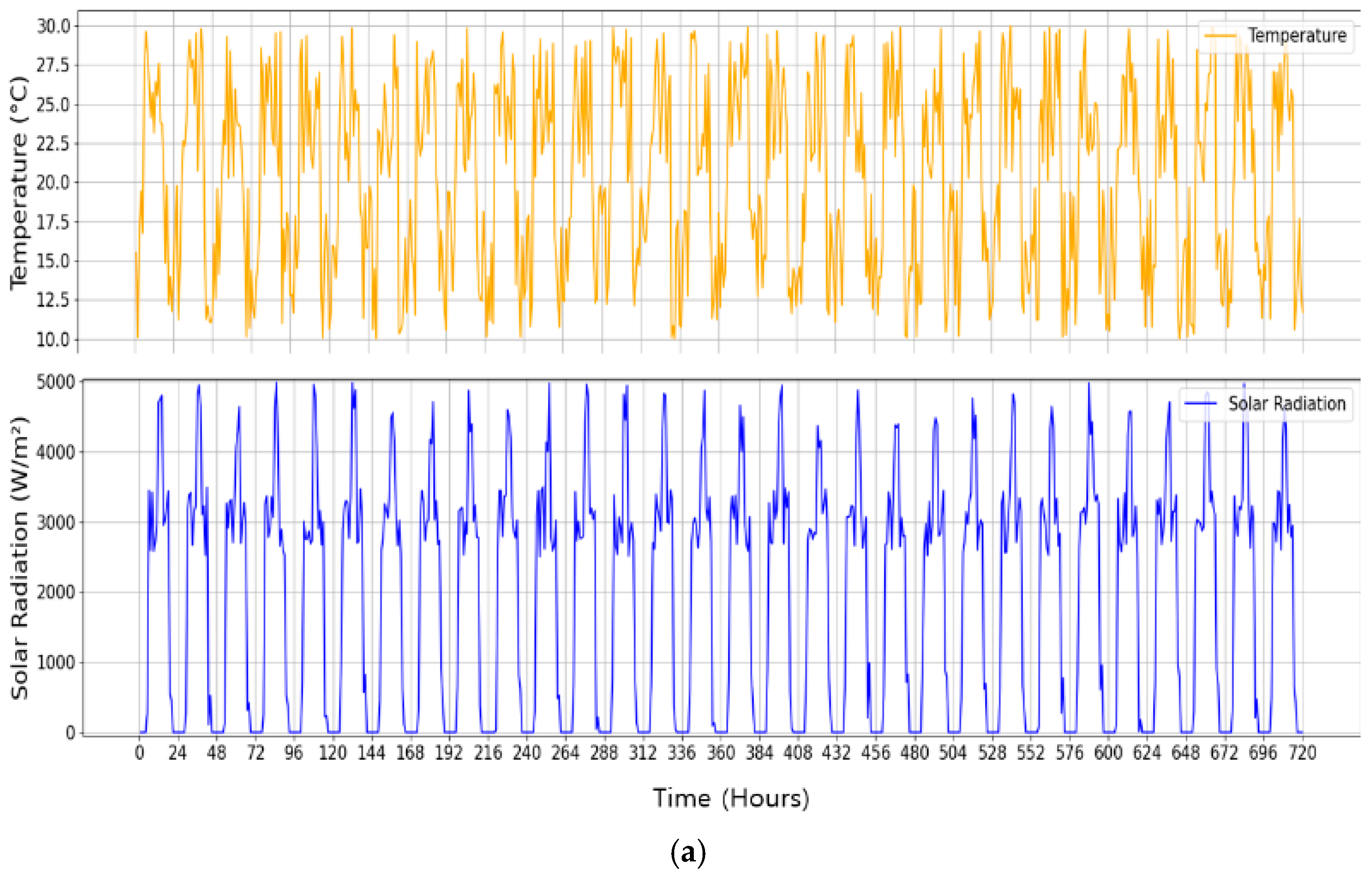

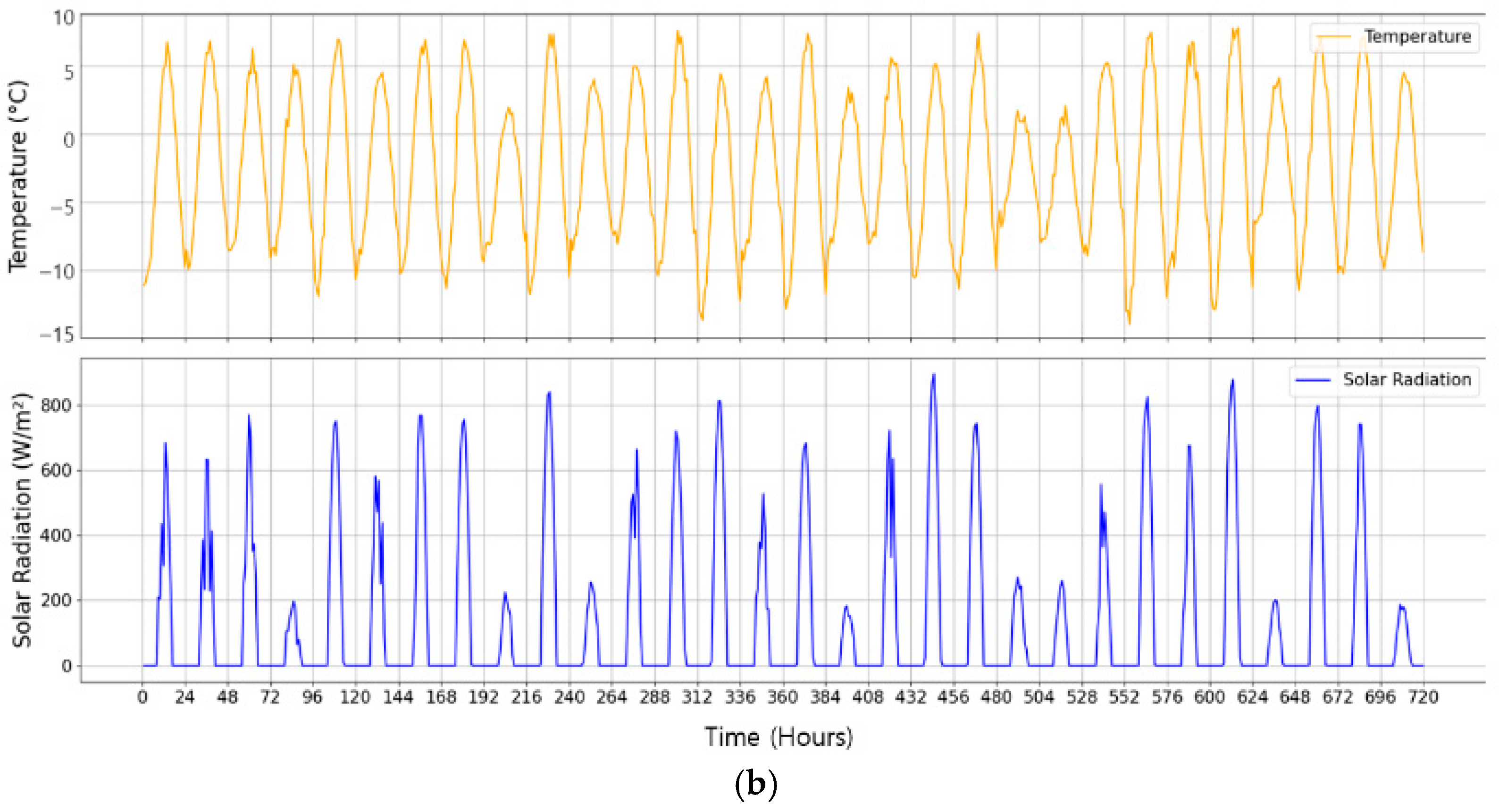

4.1. Dataset and Experimental Setup

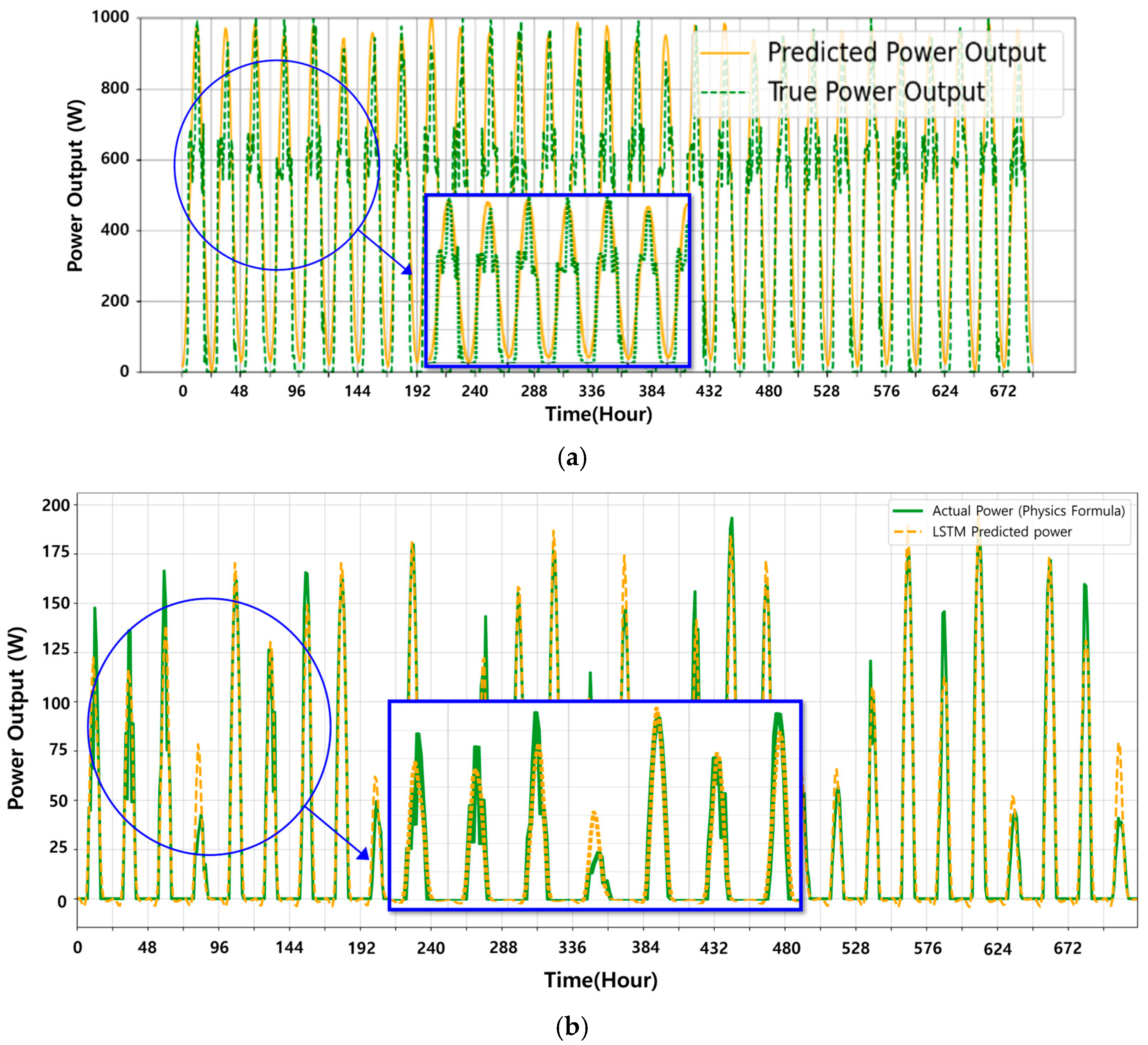

4.2. LSTM Simulation

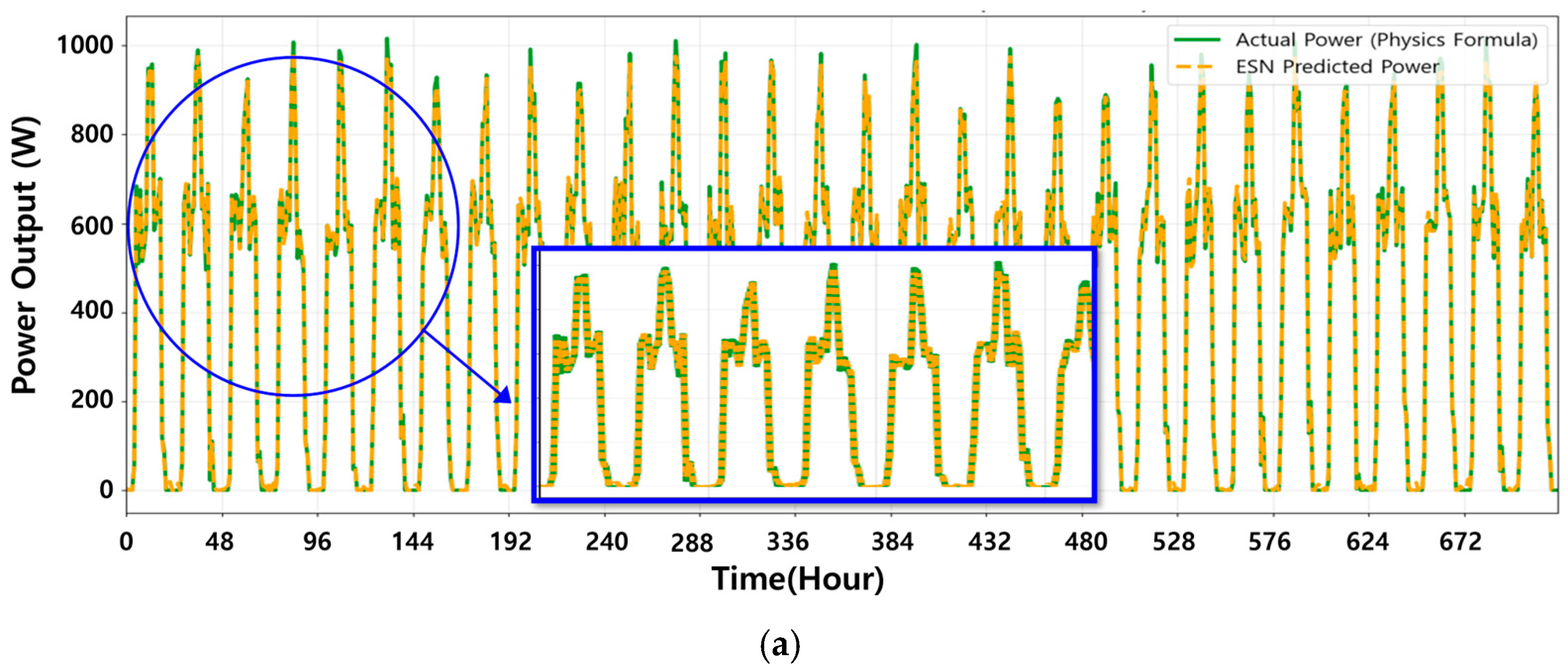

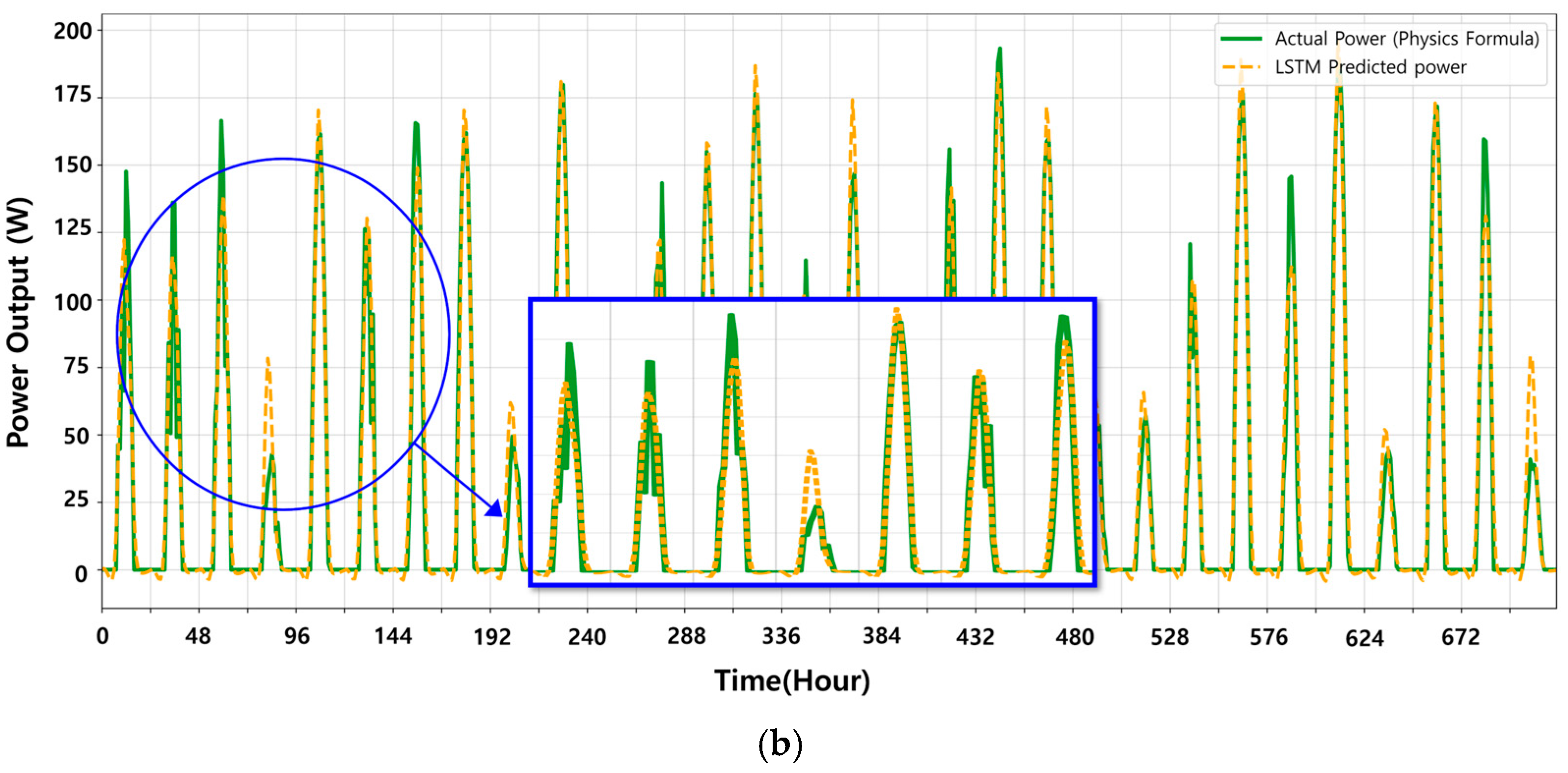

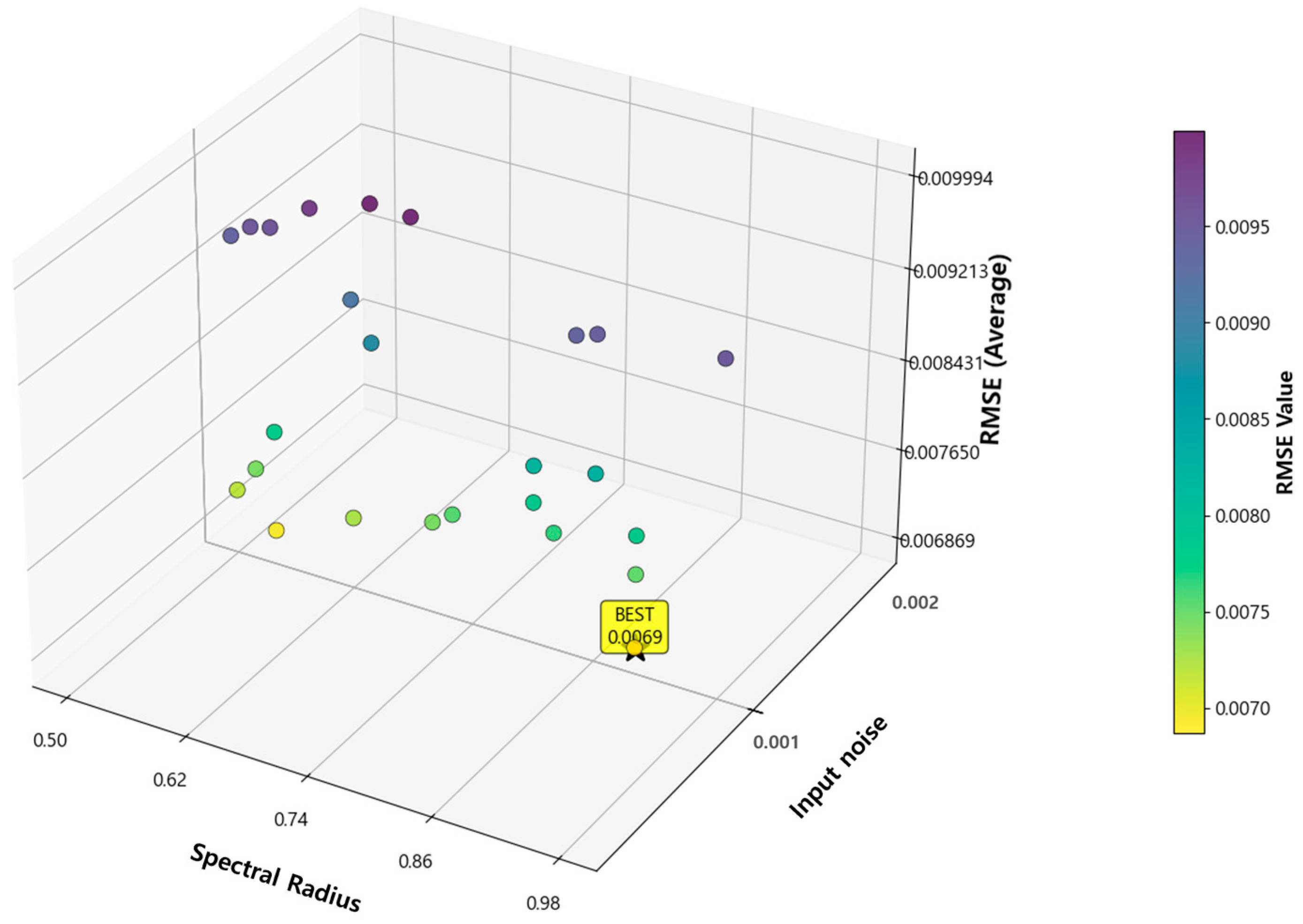

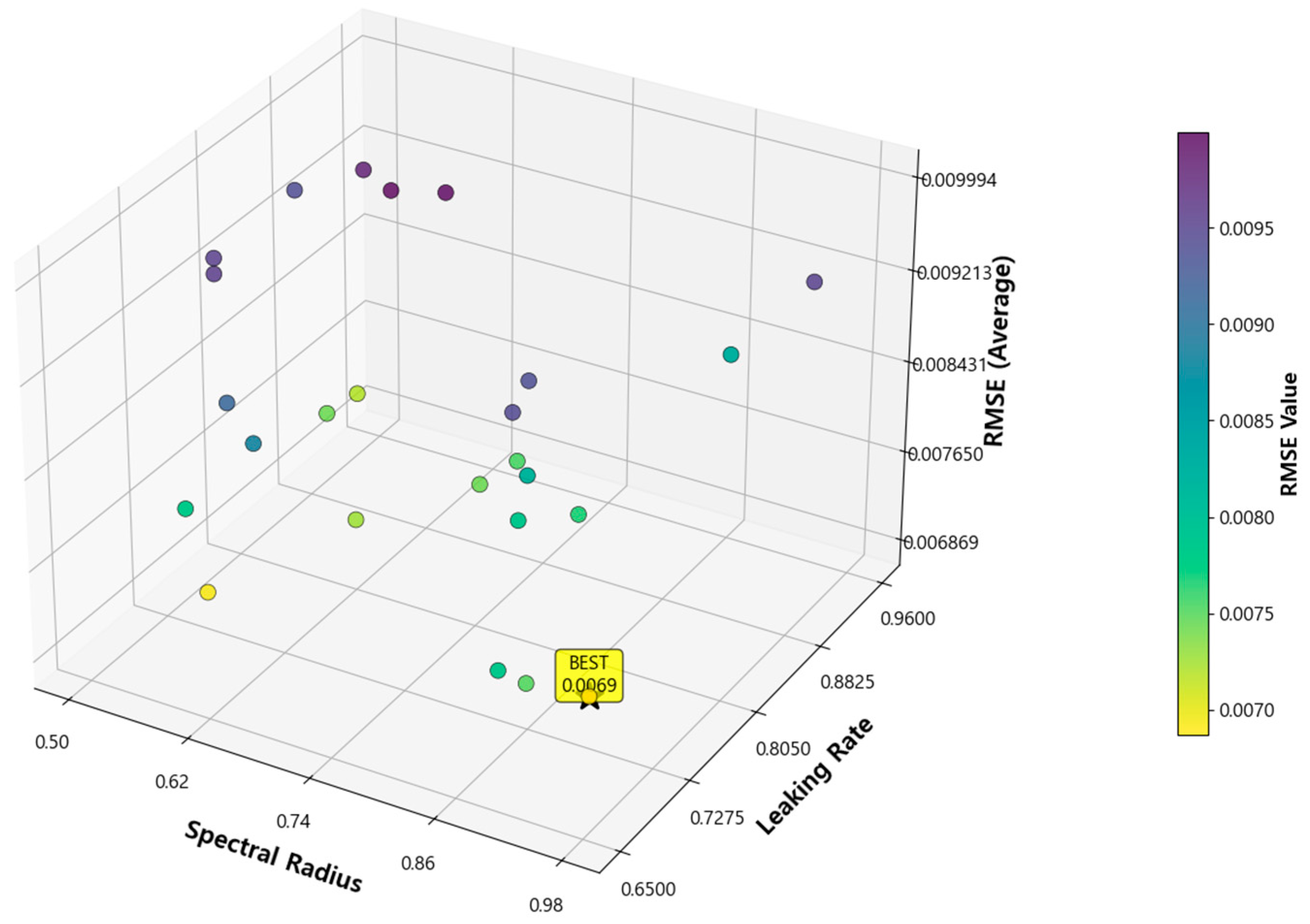

4.3. ESN Simulation

4.4. Comparison with Previous Studies

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- International Energy Agency. Solar PV Global Supply Chains; International Energy Agency: Paris, France, 2022.

- Wang, H.; Li, X.; Zhang, Y.; Chen, Z. A Review of Deep Learning for Renewable Energy Forecasting. Energy Convers. Manag. 2019, 198, 111–121.

- Benti, N.E.; Chaka, M.D.; Semie, A.G. Forecasting Renewable Energy Generation with Machine Learning and Deep Learning: Current Advances and Future Prospects. Sustainability 2023, 15, 7087.

- Liu, X.; Zhao, Q.; Sun, Y. Investigating the Power of LSTM-Based Models in Solar Energy Forecasting. Processes 2023, 11, 425–438.

- Patel, R.; Gupta, S. Solar Radiation Prediction: A Multi-Model Machine Learning and Deep Learning Approach. AIP Adv. 2025, 15, 125101.

- Chen, L.; Wang, M.; Huang, G. Comparison of AI-Based Forecasting Model ANN, CNN, and ESN for Forecasting Solar Power. Int. J. Innov. Sci. Adv. Eng. 2024, 10, 45–57.

- Singh, A.; Kumar, P.; Sharma, T. Hybrid Deep Learning CNN-LSTM Model for Forecasting Direct Normal Irradiance. Nat. Sci. Rep. 2025, 15, 9876.

- Zhao, L.; Chen, H. Solar Power Prediction Using Dual Stream CNN-LSTM Architecture. Sensors 2023, 23, 3287.

- Martínez, J.; Fernández, I. Deep Learning Models for PV Power Forecasting: Review. Energies 2024, 17, 1123.

- Santos, R.; Almeida, F. A LSTM Based Method for Photovoltaic Power Prediction. J. Sustain. Energy Built Environ. Stud. 2020, 5, 215–224.

- Oliveira, M.; Silva, P.; Costa, R. Predicting Photovoltaic Power Output Using LSTM: A Comparative Study. ICPRAM 2025, 4, 300–309.

- Hassan, N.; Rahman, A. Power Output Forecasting of Solar Photovoltaic Plant Using LSTM. Energy Rep. 2023, 9, 1012–1023.

- Nguyen, T.; Tran, H. An Optimized Deep Learning Based Hybrid Model for Prediction of Global Horizontal Irradiance. Nat. Sci. Rep. 2025, 15, 10234.

- Romero, P.; Diaz, G. Deep Learning-Based Solar Power Forecasting Model. Front. Energy Res. 2024, 12, 543.

- Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A Simple Way to Prevent Neural Networks from Overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

- Van Houdt, G.; Mosquera, C.; Nápoles, G. A Review on the Long Short-Term Memory Model. Artif. Intell. Rev. 2020, 53, 5929–5955.

- Lukoševičius, M.; Jaeger, H. Reservoir Computing Approaches to Recurrent Neural Network Training. Comput. Sci. Rev. 2009, 3, 127–149.

- Ahmed, R.; Lee, J. Echo State Networks: Novel Reservoir Selection and Hyperparameter Optimization. Neurocomputing 2023, 480, 234–248.

- Kim, J.; Park, S. Time Encoding Echo State Network for Prediction Based on Irregular Time Series. In Proceedings of the IJCAI, Montreal, QC, Canada, 19–26 August 2021; pp. 4567–4573.

- Banerjee, S.; Roy, P. Prediction of Chaotic Time Series Using Recurrent Neural Networks and Reservoir Computing. Chaos Solitons Fractals 2022, 154, 110619.

- Zhang, X.; Wu, Y. Multi-Reservoir Echo State Computing for Solar Irradiance Prediction. Appl. Soft Comput. 2020, 88, 105956.

- Li, Q.; Xu, M. Research on Short-Term Photovoltaic Power Prediction Based on ESN and KELM. Energy 2023, 256, 124381.

- Jaeger, H. Optimization and Applications of Echo State Networks with Leaky Integrator Neurons. Neural Netw. 2002, 15, 1345–1357.

- Villalva, M.G.; Gazoli, J.R.; Ruppert Filho, E. Comprehensive Approach to Modeling and Simulation of Photovoltaic Arrays. IEEE Trans. Power Electron. 2009, 24, 1198–1208.

- Luque, A.; Hegedus, S. (Eds.) Handbook of Photovoltaic Science and Engineering; Wiley: Hoboken, NJ, USA, 2011.

- Hansen, C.W. Parameter Estimation for Single Diode Models of Photovoltaic Modules; Sandia National Laboratories Report SAND2015-2065; Sandia National Laboratories: Albuquerque, NM, USA, 2015; pp. 1–36.

- De Soto, W.; Klein, S.A.; Beckman, W.A. Improvement and Validation of a Model for Photovoltaic Array Performance. Solar Energy 2006, 80, 78–88.

- Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning representations by back-propagating errors. Nature 1986, 323, 533–536.

- Zeng, A.; Chen, M.; Zhang, L.; Xu, Q. Are Transformers Effective for Time Series Forecasting? Proc. AAAI Conf. Artif. Intell. 2023, 37, 11121–11128.

- Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

- Gers, F.A.; Schmidhuber, J.; Cummins, F. Learning to Forget: Continual Prediction with LSTM. Neural Comput. 2000, 12, 2451–2471.

- Jaeger, H. The ‘Echo State’ Approach to Analysing and Training Recurrent Neural Networks. GMD Report 2001, 148, 1–30.

- Lukoševičius, M. A Practical Guide to Applying Echo State Networks. In Neural Networks: Tricks of the Trade; Springer: Berlin/Heidelberg, Germany, 2012; pp. 659–686.

- Jaeger, H. Echo State Network. Scholarpedia 2007, 2, 2330.

- Estébanez, I.; Fischer, I.; Soriano, M.C. Constructive Role of Noise for High-Quality Replication of Chaotic Attractor Dynamics Using a Hardware-Based Reservoir Computer. Phys. Rev. Appl. 2019, 12, 034058.

- Strauss, T.; Jaeger, H.; Haas, H. Reservoir Computing with Noise. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2004; Volume 17, pp. 1201–1208.

- Yildiz, I.B.; Jaeger, H.; Kiebel, S.J. Re-Visiting the Echo State Property. Neural Netw. 2012, 35, 1–9.

- Chai, T.; Draxler, R.R. Root Mean Square Error (RMSE) or Mean Absolute Error (MAE)?—Arguments Against Avoiding RMSE in the Literature. Geosci. Model Dev. 2014, 7, 1247–1250.

- Bergstra, J.; Bengio, Y. Bayesian Optimization of Hyper-Parameters in Reservoir Computing. Neurocomputing 2016, 194, 12–20.

- Snoek, J.; Larochelle, H.; Adams, R.P. Practical Bayesian Optimization of Machine Learning Algorithms. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2012; Volume 25.

- Akiba, T.; Sano, S.; Yanase, T.; Ohta, T.; Koyama, M. Optuna: A Next-generation Hyperparameter Optimization Framework. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 2623–2631.

- Al-Hajj, R.; Assi, A.; Fouad, M. Short-Term Solar Irradiance Forecasting Using a Hybrid CNN-LSTM Model. Energies 2021, 14, 5893.

- Ullah, F.; Mehmood, A.; Ullah, A. A Short-Term Solar Radiation Forecasting Model Using Recurrent Neural Network. SN Appl. Sci. 2020, 2, 1–8.

- Khan, W.; Walker, S.; Zeiler, W. Improved Solar Radiation Forecasting Using a Hybrid CNN-LSTM Model. Energy 2022, 246, 123322.

| Reference | Model Used | Reported Performance (Normalized RMSE) |
|---|---|---|
| Al-Hajj et al. (2021) [42] | LSTM | 0.082 |
| Khan et al. (2022) [44] | Hybrid CNN-LSTM | 0.075 |
| Ullah et al. (2020) [43] | LSTM | 0.090 |
| This Study | LSTM | 0.072, 0.094 |
| This Study | Optimized ESN | 0.0069, 0.0097 |
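The table compares normalized RMSE (NRMSE) values, but the normalization convention is not stated here, and the cited studies may not all use the same one. As a minimal sketch, assuming NRMSE means RMSE divided by either the range or the mean of the observed series (two common conventions; dividing by installed capacity is another), the metric can be computed as below. The function names `rmse` and `normalized_rmse` and the `method` parameter are illustrative assumptions, not the study's own code.

```python
import math
from typing import Sequence


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root mean square error between observed and predicted values."""
    if len(y_true) == 0 or len(y_true) != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")
    squared = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    return math.sqrt(squared / len(y_true))


def normalized_rmse(y_true: Sequence[float], y_pred: Sequence[float],
                    method: str = "range") -> float:
    """RMSE divided by a scale of the observations (assumed conventions).

    method="range": divide by max(y_true) - min(y_true)
    method="mean":  divide by the arithmetic mean of y_true
    """
    err = rmse(y_true, y_pred)
    if method == "range":
        scale = max(y_true) - min(y_true)
    elif method == "mean":
        scale = sum(y_true) / len(y_true)
    else:
        raise ValueError(f"unknown normalization method: {method}")
    if scale == 0:
        raise ValueError("normalization scale is zero")
    return err / scale


if __name__ == "__main__":
    # Hypothetical hourly PV output (arbitrary units), not data from the study.
    observed = [0.0, 2.0, 4.0, 2.0]
    predicted = [0.5, 2.0, 3.5, 2.0]
    print(normalized_rmse(observed, predicted, "range"))
    print(normalized_rmse(observed, predicted, "mean"))
```

The two conventions can give noticeably different values for the same forecast, so NRMSE figures from different studies are only directly comparable when they are normalized the same way.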

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Joo, Y.; Kim, D.; Noh, Y.; Choi, J.; Lee, J. Performance Comparison of LSTM and ESN Models in Time-Series Prediction of Solar Power Generation. Sustainability 2025, 17, 8538. https://doi.org/10.3390/su17198538


Chicago/Turabian Style: Joo, Yehan, Dogyoon Kim, Youngmin Noh, Jaewon Choi, and Jonghwan Lee. 2025. "Performance Comparison of LSTM and ESN Models in Time-Series Prediction of Solar Power Generation" Sustainability 17, no. 19: 8538. https://doi.org/10.3390/su17198538
