1. Introduction

Laboratories are among the most energy-intensive types of buildings. In particular, high energy consumption is characteristic of university and research complexes; this is due to variable user flows, the diversity of spaces, and the physical wear and tear of buildings [1]. Laboratories require strict microclimate control, continuous operation of specialized equipment, and modern HVAC systems [2]. HVAC systems alone account for up to 50% of a building’s total energy use, especially in medical and educational institutions [3].

In addition to high energy consumption, special attention must be paid to issues of health and safety for laboratory personnel [4]. People spend up to 90% of their time indoors, and indoor air quality (IAQ) directly affects their well-being [5]. The concentration of carbon dioxide (CO2) serves as a key indicator of space occupancy and the risk of pathogen transmission [4]. Since the COVID-19 pandemic, the management of air quality and occupant density has become a critical component in ensuring both safety and energy efficiency [4,6].

Improving the energy performance of laboratory buildings is closely linked to the achievement of the United Nations Sustainable Development Goals (SDGs) [7]. Among the most relevant are the goals related to sustainable cities, clean and affordable energy, responsible consumption, and innovation [8]. The introduction of digital and automated control systems, particularly IoT technologies, makes it possible to monitor microclimate parameters such as temperature, humidity, and CO2 levels in real time and to optimize HVAC operation [9]. These solutions enable energy savings of up to 15% without compromising sanitary requirements [5]. Dynamic approaches have demonstrated significant energy savings by adapting heating and cooling to actual occupancy patterns. Systems based on space utilization analysis improve both indoor environmental quality (IEQ) and energy efficiency by dynamically modulating airflow. This ensures adequate ventilation in active zones while reducing excessive conditioning in unoccupied areas [10].

Smart laboratories operate under conditions where environmental sensor data are often incomplete or noisy due to equipment failures, network issues, or harsh operational settings. Traditional anomaly detection methods based on supervised learning require labeled data, which is expensive and often impractical in dynamic environments. In contrast, unsupervised machine learning approaches do not rely on labels and instead learn normal system behavior to identify deviations. Recent research in industrial systems and indoor air quality confirms the potential of such approaches (including clustering, dimensionality reduction, autoencoders, and one-class classifiers) for detecting anomalies and hidden system states [11,12].

Clustering methods such as K-means, Density-Based Spatial Clustering of Applications with Noise (DBSCAN), and hierarchical clustering help group sensor data and identify outliers that deviate from dense clusters of normal conditions. DBSCAN is particularly effective in detecting sparse anomalies, which are common in environmental data [11,13,14], while cluster analysis not only detects anomalies but also reveals underlying operational modes of the system [12].

Since sensor data typically form time series, Long Short-Term Memory (LSTM) models and other recurrent neural networks are widely used to capture temporal dependencies. LSTM autoencoders are well suited for identifying anomalous temporal patterns, and combining these models with one-class classifiers, such as SVMs in latent space, reduces false positives [15]. In addition, one-class methods like OC-SVM and Isolation Forests are trained on normal data to define a boundary of typical behavior, which is especially relevant in environments where anomalies are rare and unlabeled [14,16].

While the application of unsupervised learning for anomaly detection in building systems is an active area of research, existing studies often exhibit limitations that this work seeks to address. Previous research frequently focuses on a single building type, such as offices or schools [10], or on a limited set of parameters, primarily CO2 and temperature [17], neglecting critical multi-pollutant scenarios common in laboratories. Other approaches, such as those using complex deep learning models like LSTM autoencoders [15], offer powerful detection capabilities but require large, contiguous datasets and significant computational resources, making them less practical for the noisy, incomplete, and resource-constrained environments typical of many research facilities. In contrast, our study specifically targets the high-stakes, multi-parameter context of smart laboratories. We bridge a critical gap by employing a lightweight, computationally efficient ensemble of Isolation Forest and One-Class SVM, methods chosen for their robustness to noise and label-free operation, to simultaneously address energy anomalies and complex IAQ deviations (including total volatile organic compounds (TVOC) and PM2.5) that are often overlooked. Furthermore, unlike studies that focus solely on fault detection [18], we integrate anomaly detection with K-means clustering to uncover and characterize latent operational states (Crowded, Experiment, Empty/Cool). This dual-layer analysis provides not only alerts for acute failures but also an interpretable framework for adaptive, state-dependent HVAC control, moving beyond generic thresholds towards a context-aware management system that jointly optimizes energy efficiency and comprehensive indoor air safety in a way previous works have not achieved.

The relevance of the article stems from the fact that laboratory buildings rank among the most energy-intensive facilities: strict microclimate requirements and the continuous operation of specialized equipment impose heavy loads on HVAC systems. Conventional climate-control schemes rely primarily on CO2 metrics and occupancy schedules, thus tackling energy savings and sanitary safety separately while overlooking critical pollutants such as TVOC and PM2.5; this results either in excessive energy use or in health risks for staff. The present study shows that unsupervised machine-learning methods (Isolation Forest for anomaly detection and K-means for revealing latent operating modes) can automatically identify unfavorable states even with incomplete or noisy IoT data, laying the groundwork for adaptive ventilation management that simultaneously reduces energy consumption and maintains a comprehensive assessment of indoor air quality. Accordingly, the research bridges the gap between fragmented IAQ and energy-efficiency approaches, supports the achievement of sustainable development goals, and offers a practical solution for modernizing scientific infrastructure, particularly valuable in resource-constrained regions.

2. Related Work

Sustained interest in indoor climate management stems from the dual objective of reducing energy consumption while maintaining high IAQ. Modern control practices primarily rely on CO2 concentration and occupancy data as key indicators for regulating ventilation due to their accessibility and low cost [17,19,20]. However, this narrow focus overlooks other critical pollutants such as TVOC, fine particulate matter (PM2.5), and formaldehyde, which are essential for a comprehensive IAQ assessment [19,20,21]. This reliance on a few metrics contributes to a tendency to address energy savings and air-quality objectives in isolation [9]. Existing strategies often focus either on reducing energy costs or on IAQ compliance, rarely integrating both aspects [22,23]. Even when filtration and temperature-control measures reduce pollutants like PM10 and PM2.5, their energy impact often remains unexamined [24]. This divide is exacerbated by HVAC systems that operate in fixed modes, failing to adapt to real-time variations in pollutant load and leading to inefficient climate regulation [25]. To overcome these limitations, contemporary systems require intelligent algorithms capable of adaptive control that responds to multiple environmental variables simultaneously [26].

The need for an integrated approach is especially acute in critical environments like laboratories and healthcare facilities, where HVAC anomalies can have cascading effects on occupant health and facility sustainability. Anomalies such as uncontrolled variations in temperature, humidity, and air contaminant levels can compromise laboratory safety [27]. Design and operational shortcomings, including inconsistent airflow patterns and improper air mixing, significantly diminish a system’s ability to control airborne contaminants and pathogens, increasing the risk of infection transmission [28]. Furthermore, these deficiencies contribute to energy waste and increased operational costs, posing significant challenges to sustainability goals and contradicting policies aimed at reducing carbon footprints [29,30]. The intersection between laboratory safety and sustainability thus lies in the dual imperative of protecting human health while ensuring that operational practices remain economically viable and environmentally responsible [27,29].

Digital monitoring platforms enable the continuous real-time data capture of HVAC performance, offering a means to detect anomalies and prompt timely interventions that maintain safe indoor conditions [31]. Organizations that fail to address HVAC deficiencies expose occupants to preventable health risks while incurring long-term sustainability penalties from increased energy consumption and maintenance demands [30]. Case studies demonstrate that integrating advanced monitoring and machine learning-based fault detection can significantly reduce HVAC anomalies, resulting in enhanced indoor air quality, reduced energy consumption, and minimized downtime [18].

The application of machine learning (ML) is central to developing these intelligent building systems. Modern buildings generate extensive data on energy use, climate parameters, and system performance, which can be leveraged for optimization and fault diagnosis [32]. The choice between supervised and unsupervised learning is shaped by the availability of labeled data [33]. While supervised learning is effective for forecasting when historical data with known outcomes are available [34], such annotated datasets are rare in real-world HVAC operations. Consequently, unsupervised ML methods are particularly valuable, as they can discover anomalous events and identify latent operational states without the need for labeled data [35]. In our study, the absence of annotated anomalies or labeled fault events made supervised approaches impractical. Instead, we relied on unsupervised methods, which are especially valuable for anomaly detection and the discovery of hidden patterns in large-scale information where target outcomes are unknown [12]. Specifically, Isolation Forest and One-Class SVM were employed to detect abnormal operating conditions at the observation level, while K-means clustering was used to identify recurrent operational states. This direct alignment between the data context (unlabeled laboratory logs) and methodology ensures that the pipeline remains both feasible and deployable in real-world monitoring scenarios.

Despite the potential of these methods, significant challenges remain. The lack of comprehensive occupancy data can hinder the effective alignment of resource use with real needs [20]. Moreover, intelligent control systems face obstacles related to speed, reliability, and user integration, which can limit their autonomy [26]. A comparative analysis of existing anomaly detection methods shows that they possess complementary characteristics. Isolation Forest is distinguished by its high computational efficiency and strong scalability when processing large and high-dimensional datasets, yet it lacks sensitivity to local and context-specific anomalies [36,37]. In contrast, One-Class SVM demonstrates high accuracy and can detect even minor deviations in system behavior, but it requires significant computational resources and is sensitive to hyperparameter tuning, which reduces its applicability in dynamically changing environments [38,39]. The K-means algorithm, by comparison, provides a simple and fast segmentation of data and is capable of revealing the hidden structure of system states, yet its results can be unstable because they depend on centroid initialization and on a predefined number of clusters, especially in noisy and high-dimensional data [40]. Thus, Isolation Forest excels in speed, One-Class SVM ensures greater sensitivity, and K-means enables interpretation of the internal data structure. However, each method remains vulnerable when applied in isolation, which justifies the need for their integration into a unified hybrid system where the strengths of one compensate for the limitations of another [40,41]. These gaps underscore the need for a lightweight, label-free framework that can reliably detect anomalies and classify operational states in complex, multi-pollutant environments like laboratories [42].

The novelty of this study lies in the introduction of a lightweight unsupervised framework tailored for laboratory environments. Unlike prior works that either focus on limited pollutants, address only energy efficiency, or employ resource-intensive supervised models, our approach leverages Isolation Forest, One-Class SVM, and K-means clustering to analyze multivariate sensor data without labeled inputs. This methodology is capable of detecting anomalous hours, classifying latent operational states, and producing interpretable outcomes that directly support adaptive HVAC control. A further contribution is the integration of multi-parameter indicators—including CO2, TVOC, PM2.5, temperature, humidity, and noise—together with energy variables, which allows simultaneous consideration of air-quality dynamics and energy consumption. The proposed framework thus provides a practical, scalable, and deployable solution for real-world laboratory monitoring, enhancing both sustainability and safety by enabling context-aware ventilation and energy management.

3. Materials and Methods

3.1. Architecture of the System

In this system, as shown in Figure 1, the chain begins with field devices: CO2 sensors, TVOC detectors, temperature-and-humidity probes, presence sensors, door contacts, an industrial Winsen gas module, and a Zigbee-controlled smart plug. All of them join the same Zigbee 3.0 mesh (indicated by the red “Z” emblem in the diagram, which denotes the Zigbee protocol), which is protected by the standard 128-bit AES network key; the Wi-Fi segment that links the Raspberry Pi Zero to the LAN runs on WPA2/WPA3, and Home Assistant is exposed only over HTTPS with local user authentication and per-device API tokens. The mesh is serviced by two coordinators: an Aqara Hub M2 (which keeps native Aqara features) and an open-firmware USB dongle (which gives full Zigbee2MQTT/ZHA flexibility). Sensors unicast their telemetry to the nearest router, then to one of the coordinators, and finally to Home Assistant on the Pi, where the data are logged and evaluated.

Control rules combine fixed thresholds with adaptive logic: CO2 and TVOC limits are user-configurable in the Home Assistant user interface (HA UI), but they can also auto-shift according to diurnal patterns and occupancy; optional add-ons employ a simple regression/PID loop that “learns” how quickly the room responds to ventilation and adjusts set-points over time. When a rule fires (CO2 > 1000 ppm or presence detected after 20 min of stale air), Home Assistant issues a Zigbee or Wi-Fi command: turn on the smart plug that powers a humidifier or air purifier, or send an IR code to start the air-conditioner or ventilation fan.
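As an illustration only, the trigger condition described above can be sketched as a small predicate. The names `RoomReading` and `should_ventilate`, and the reading of "20 min of stale air" as minutes elapsed since the last ventilation run, are assumptions made for this sketch; they are not taken from the deployed Home Assistant configuration.

```python
from dataclasses import dataclass

# Thresholds quoted in the text; in the described system they are
# user-configurable, so these defaults are placeholders.
CO2_LIMIT_PPM = 1000.0
STALE_AIR_MINUTES = 20.0


@dataclass
class RoomReading:
    co2_ppm: float
    presence: bool
    minutes_since_ventilation: float  # hypothetical field name


def should_ventilate(r: RoomReading) -> bool:
    """Return True when either trigger condition from the text holds."""
    co2_high = r.co2_ppm > CO2_LIMIT_PPM
    stale_and_occupied = r.presence and r.minutes_since_ventilation >= STALE_AIR_MINUTES
    return co2_high or stale_and_occupied
```

The adaptive part of the logic (diurnal shifting of limits, the regression/PID add-on) is deliberately left out of this sketch.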

Device states are mirrored to Yandex Smart Home and Mi Home (Xiaomi’s global IoT platform). That gives the user voice control, push notifications, and remote dashboards, yet every cloud command is routed back to Home Assistant and executed locally so the system keeps running if the internet drops. Power resilience is handled by a small UPS that feeds the Raspberry Pi, the Zigbee hubs, and the Wi-Fi AP; if the Pi still goes down, a handful of critical automations are duplicated as direct Zigbee bindings inside the Aqara Hub, ensuring that ventilation and safety alerts continue until main control is restored. The dual-coordinator layout thus blends Aqara’s ease of use with open-source freedom, while the IR blaster lets legacy HVAC gear remain in service without costly replacements.

The foundation of the system is a carefully selected array of hardware components, as shown in Table 1, designed to capture a comprehensive dataset of environmental and operational parameters. The data collection infrastructure is intentionally heterogeneous, integrating devices across multiple communication standards to ensure flexibility and control over both modern and legacy equipment.

All environmental sensors were configured to transmit measurements at fixed one-hour intervals, yielding a total of 1877 hourly records during a continuous four-month monitoring campaign. After data cleaning and alignment, 1602 high-quality observations were retained for analysis. This ensured full 24 h coverage of both occupied and unoccupied laboratory states, providing a representative dataset for anomaly detection and latent state identification.

The system operates as an integrated and responsive ecosystem, with the Home Assistant platform serving as the central hub for data aggregation and control. The process begins at the sensing layer, where a diverse array of hardware captures comprehensive data, including not only environmental metrics but also key operational parameters such as occupancy status, door contacts, and energy consumption. Each device transmits its readings via its respective protocol, such as Zigbee or Wi-Fi, to the central Home Assistant hub. Upon receipt, Home Assistant immediately logs each value with a high-precision timestamp into a MariaDB database for historical record-keeping and analysis. The data are simultaneously displayed on a user interface (a Redmi Pad SE tablet), allowing for real-time monitoring and manual override of any device.

To investigate unsupervised learning techniques in a real-world setting, we designed and implemented a closed-loop system architecture for intelligent and adaptive environmental management. This architecture provides the necessary foundation for data collection, analysis, and, crucially, practical integration with a diverse range of HVAC infrastructure. The system operates in a continuous four-stage cycle.

A diverse array of environmental sensors continuously collects data on parameters such as temperature, humidity, CO2, and TVOC levels. These readings are transmitted via standard protocols like Zigbee 3.0 and Wi-Fi to a central Home Assistant (HA) hub. In this hub, each data point is recorded with a high-precision timestamp in a MariaDB database, yielding a granular, unlabeled dataset well suited for unsupervised analysis.

A Machine Learning (ML) Agent periodically queries the database, analyzing both recent inputs and long-term historical records. Using unsupervised models (e.g., clustering, anomaly detection), the agent identifies deviations from established patterns and uncovers latent operational states that emerge from recurring sensor correlations. Based on this analysis, the ML Agent generates optimal, high-level control actions—such as “reduce humidity” or “decrease PM2.5”—and communicates them to the Home Assistant hub.

This is the core of the system’s practical applicability. Home Assistant acts as a critical middleware and abstraction layer, translating the ML agent’s high-level decisions into specific, device-level commands. This approach ensures interoperability across heterogeneous and proprietary systems by leveraging multiple integration pathways. For modern smart devices, HA issues commands directly via local protocols like Zigbee. For Wi-Fi-enabled devices, it communicates through manufacturer cloud platforms like the one for the Xiaomi Mi Air Purifier 2H. To interface with older or closed-off units, the system uses a universal infrared (IR) blaster, which replicates manufacturer-specific remote codes to control non-smart equipment like the Gree-09 Bora air conditioner or the VAKIO Base Smart ventilator. This multi-faceted approach allows our single ML framework to interface with any device supported by the Home Assistant ecosystem, exposing all control actions through consistent MQTT messages and REST APIs.
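The translation step can be pictured with a minimal sketch that maps a high-level action to a Home Assistant REST service call of the form `POST /api/services/<domain>/<service>`. The action-to-service mapping, the entity IDs, and the helper name `build_request` are hypothetical and chosen only for illustration; the request is built but not sent.

```python
import json
import urllib.request

# Hypothetical mapping from the ML agent's high-level actions to
# Home Assistant service calls; the entity IDs are invented.
ACTION_MAP = {
    "decrease PM2.5": ("fan", "turn_on", {"entity_id": "fan.air_purifier"}),
    "reduce humidity": ("switch", "turn_off", {"entity_id": "switch.humidifier_plug"}),
}


def build_request(base_url: str, token: str, action: str) -> urllib.request.Request:
    """Build (but do not send) the REST request for one high-level action."""
    domain, service, payload = ACTION_MAP[action]
    return urllib.request.Request(
        url=f"{base_url}/api/services/{domain}/{service}",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",  # long-lived access token
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Passing the returned object to `urllib.request.urlopen` would dispatch the command; keeping construction and dispatch separate makes the mapping easy to test offline.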

The entire operational loop is designed for resilience. Because command execution for Zigbee and IR devices is handled locally, core functionality is maintained even if the internet connection is lost. This architecture is also extensible; the use of Home Assistant as a middleware bridge means the system can be adapted to control industrial HVAC systems that use standard protocols like Modbus or BACnet by simply adding the appropriate integration within HA. This robust and flexible design provides a practical, real-world framework for deploying and evaluating advanced, data-driven control strategies in diverse laboratory environments.

3.2. Data Preprocessing

Before the application of advanced analytical methods, the entire dataset underwent meticulous preprocessing to ensure its consistency, reliability, and suitability for time-series modeling. A total of 1877 hourly records were initially collected over a continuous four-month observation campaign. However, after removing incomplete and corrupted entries, 1602 high-quality observations remained, forming the foundation for all subsequent analyses.

A crucial step was temporal alignment, which involved merging separate measurement streams into unified, evenly spaced hourly intervals. This was essential for minimizing potential distortions caused by irregular sampling rates or asynchronous recordings. Data cleaning included type casting, interpolation of missing values using context-aware techniques, and the smoothing of outliers through localized statistical filtering. Special care was taken to preserve the temporal structure of the data while minimizing the risk of introducing artificial trends.

One of the specific challenges stemmed from the fragmented structure of the raw dataset, in which each parameter was recorded with its own individual timestamp. Humidity, noise, temperature, CO2, PM2.5, and TVOC levels were each associated with separate time columns, despite being captured simultaneously. Addressing this required precise merging to reconstruct a coherent multivariate time series. As a result, a unified and synchronized matrix was created, aligning all features to a shared temporal axis. Although the system is equipped with additional sensors—including smoke detectors, eTVOC sensors, contact sensors, power and energy meters, motion detectors, and water leak sensors—these were excluded from the analysis due to insufficient or missing data during the observation period.
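The merging step can be illustrated with a minimal sketch, assuming each parameter arrives as its own list of `(timestamp, value)` pairs. The function name `align_hourly`, the averaging of readings within an hour, and the choice to drop hours with a missing feature are assumptions for this example; the study additionally interpolated some missing values, which is not reproduced here.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean


def align_hourly(streams):
    """Merge {feature: [(timestamp, value), ...]} into hourly rows.

    Readings are bucketed by the hour they fall in and averaged; only
    hours in which every feature has at least one reading are kept.
    """
    buckets = defaultdict(lambda: defaultdict(list))
    for feature, readings in streams.items():
        for ts, value in readings:
            hour = ts.replace(minute=0, second=0, microsecond=0)
            buckets[hour][feature].append(value)

    features = sorted(streams)
    rows = []
    for hour in sorted(buckets):
        if all(f in buckets[hour] for f in features):
            rows.append((hour, [mean(buckets[hour][f]) for f in features]))
    return features, rows
```

Each returned row shares one timestamp across all features, which is the shape needed for the multivariate analysis that follows.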

All features were subsequently standardized to remove scale-related distortions and ensure fair comparison across variables. This normalization process also facilitated the use of multivariate machine learning algorithms, which require features to be on comparable scales. The resulting matrix preserved the natural temporal variability of the system while eliminating noise and redundancy.
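Standardization is assumed here to mean the usual z-score transform, in which each feature column is shifted to zero mean and scaled to unit variance. A minimal sketch follows; the function name `standardize` and the use of the population standard deviation are choices made for this example.

```python
from statistics import mean, pstdev


def standardize(rows):
    """Z-score each column: (x - column mean) / column std (population)."""
    columns = list(zip(*rows))
    mus = [mean(c) for c in columns]
    sigmas = [pstdev(c) or 1.0 for c in columns]  # guard constant columns
    return [[(x - m) / s for x, m, s in zip(row, mus, sigmas)] for row in rows]
```

After this transform a 100 ppm change in CO2 and a 1 °C change in temperature are both expressed in units of their own standard deviation, so neither dominates distance-based methods purely because of its unit.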

3.3. Anomaly Detection Through Unsupervised Learning

Unsupervised anomaly detection was implemented using two complementary algorithms: Isolation Forest (IF) and One-Class SVM (OC-SVM). Both were selected for their ability to identify rare, irregular patterns in high-dimensional data without the need for labeled observations. Isolation Forest isolates anomalies by recursively partitioning data through random feature selection and split values, constructing an ensemble of binary trees known as isolation trees [43], whereas One-Class SVM learns a decision boundary that encloses the majority of the data in feature space, flagging any observation that falls outside as anomalous [44].
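For reference, the standard formulations of the two detectors can be written as follows. The notation is introduced here for illustration and does not appear elsewhere in the article: h(x) is the path length of observation x in an isolation tree, n is the sub-sample size, c(n) is the average path length of an unsuccessful binary-search-tree lookup, and the α_i, ρ, and γ are the learned coefficients, offset, and RBF kernel width of the One-Class SVM.

```latex
% Isolation Forest anomaly score (values near 1 indicate anomalies)
s(x, n) = 2^{-\frac{\mathbb{E}[h(x)]}{c(n)}}, \qquad
c(n) = 2H(n-1) - \frac{2(n-1)}{n}, \qquad
H(i) \approx \ln i + 0.5772

% One-Class SVM decision function with an RBF kernel
f(x) = \operatorname{sgn}\!\left(\sum_{i} \alpha_i \, k(x_i, x) - \rho\right), \qquad
k(x, x') = \exp\!\left(-\gamma \lVert x - x' \rVert^{2}\right)
```

Short average path lengths push s(x, n) towards 1, which is why points that are easy to isolate are treated as anomalous. Software implementations often report a shifted, sign-flipped version of this score in which negative values denote anomalies.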

We adopt IF and OC-SVM because our data are hourly, multivariate, and span limited continuous windows, conditions under which label-free, data-efficient one-class detectors are reliable and simple to calibrate for deployment [45,46]. Both methods output interpretable anomaly scores/flags that align with building operations (alerts, purge, set-point tweaks), a key requirement emphasized in building analytics and the FDD literature [45,46]. Used together, IF tends to capture sharp, localized spikes, while OC-SVM better reflects gradual distribution shifts, providing complementary coverage [47].

Density-based clustering such as DBSCAN hinges on choosing ε and minPts. In heterogeneous, standardized multi-feature spaces this sensitivity often yields over-fragmentation (many “noise” points) or merging of clusters. Recent work explicitly targets reducing DBSCAN’s parameter sensitivity via topological/embedding pre-processing, which suggests that the baseline method can be brittle without careful tuning; we therefore reserve it for future ablations [48].

Deep sequence models excel on long, contiguous windows but typically require larger datasets and heavier training/inference budgets; surveys also note their design complexity and focus on subsequence patterns, which is less aligned with our hour-level, deployment-oriented constraints. We therefore prioritize simpler one-class detectors now and plan deep ablations once longer traces and compute budgets are available [47,49].

Isolation Forest differs fundamentally from distance-based or density-based techniques by relying on random sub-sampling and axis-aligned splitting, rather than assumptions of local continuity or spatial proximity. Similarly, One-Class SVM does not assume any prior class distributions but instead uses a kernel-based approach (in this case, the radial basis function kernel) to capture nonlinear relationships and define a hypersurface around “normal” data points. Both methods are inherently robust to irrelevant features and noise, making them well suited for environmental datasets where sensor readings are prone to fluctuations due to both systematic and random factors.

The entire dataset was used for training in both models to preserve the diversity of environmental states—ranging from routine operational conditions to infrequent but valid outliers. This comprehensive training ensured that each algorithm could learn the full spectrum of typical behaviors without imposing narrow assumptions about normalcy. All hourly observations were standardized prior to model fitting to neutralize scale disparities among features such as CO2 concentration (ppm), TVOC (ppb), PM2.5 (µg/m3), ambient temperature (°C), relative humidity (%), noise levels (dB), and energy usage.

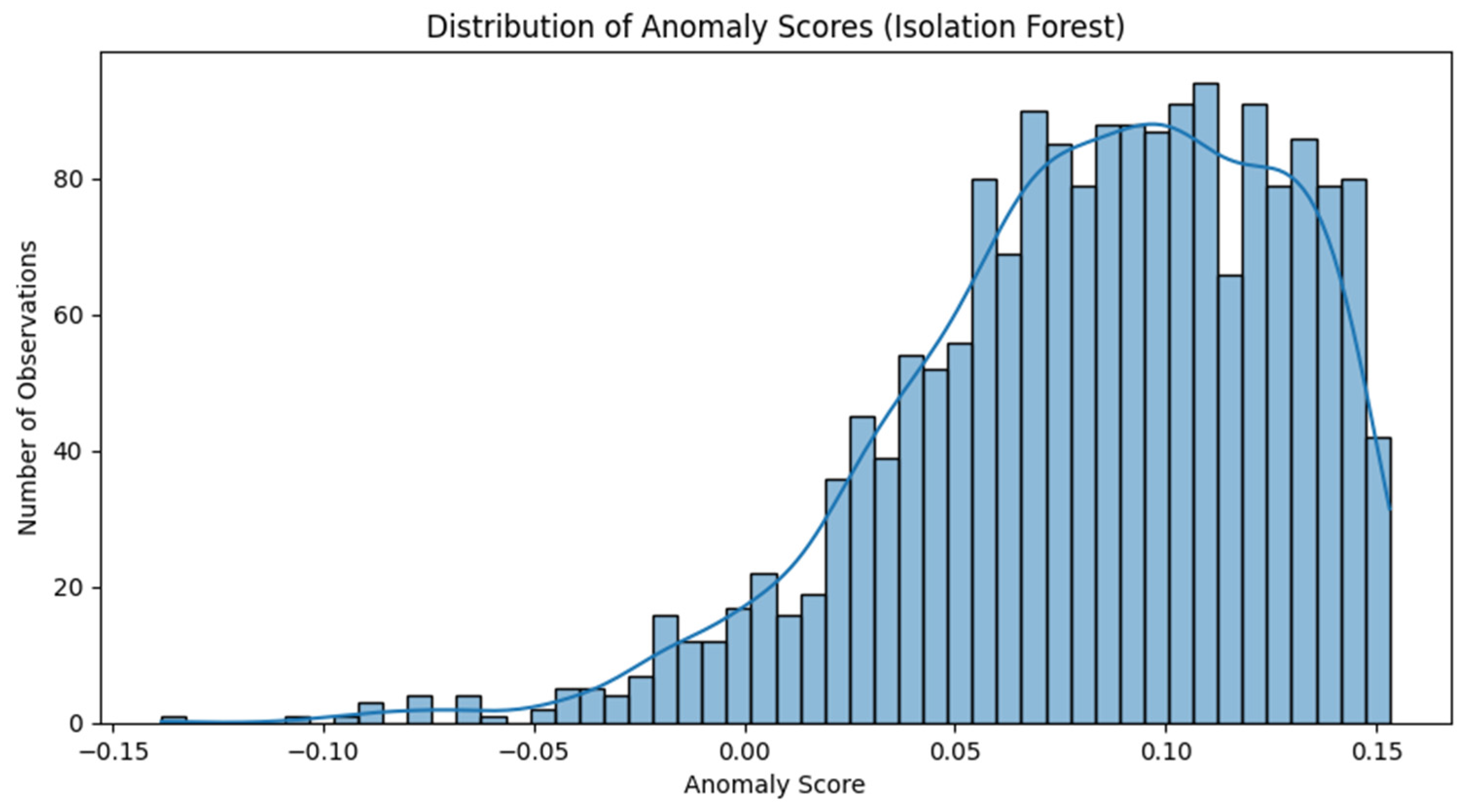

Figure 2. Sensitivity analysis for the n_estimators parameter. The Jaccard stability index was used to assess the consistency of anomaly detection across multiple runs. The chosen value of 300 estimators represents a point of high stability before performance plateaus.

A sensitivity analysis was performed to determine the optimal number of estimators (n_estimators) for the Isolation Forest model (Figure 2). The model’s stability was evaluated using the Jaccard index across multiple runs with varying numbers of trees. The analysis indicated that stability increases significantly up to 300 estimators, after which it plateaus. Consequently, n_estimators was set to 300 to ensure a robust and stable model without unnecessary computational overhead.
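One way to compute such a stability measure is sketched below, assuming each run yields the set of hour indices flagged as anomalous and that stability is the mean pairwise Jaccard index across runs. The aggregation rule and the function names are assumptions; the text does not specify how the runs were combined.

```python
from itertools import combinations


def jaccard(a: set, b: set) -> float:
    """|A ∩ B| / |A ∪ B|; two empty sets count as identical."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def stability(runs) -> float:
    """Mean pairwise Jaccard index over the anomaly sets of several runs."""
    pairs = list(combinations(runs, 2))
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)
```

A value close to 1 means that different random seeds flag nearly the same hours, which is the behavior the plateau in the sensitivity curve is meant to capture.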

Figure 3. The histogram shows a clear separation between the dense cluster of normal scores (positive) and a long tail of anomalous scores (negative). The anomaly threshold (dashed line at score ≈ 0) corresponds to the contamination parameter setting of 0.05.

The contamination parameter was subsequently set to 0.05, a value informed by the distribution of anomaly scores generated by the model (Figure 3). This distribution exhibited a distinct separation between a high-density cluster of normal instances with positive scores and a sparse tail of abnormal instances with negative scores. The 0.05 contamination level effectively establishes a threshold at the point where this tail begins, which aligns with the expected frequency of unusual operational events in the laboratory setting. This data-driven approach provides a quantifiable justification for the model’s sensitivity settings and serves as a form of surrogate evaluation.
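The link between the contamination level and a threshold at score ≈ 0 can be illustrated with a minimal sketch. It assumes a common convention in library implementations: the raw scores are shifted by their quantile at the contamination level, so that roughly that fraction of training points ends up below zero. The function names are hypothetical.

```python
def contamination_offset(raw_scores, contamination=0.05):
    """Linearly interpolated quantile of the raw scores at `contamination`."""
    s = sorted(raw_scores)
    pos = contamination * (len(s) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(s) - 1)
    return s[lo] + (pos - lo) * (s[hi] - s[lo])


def flag_anomalies(raw_scores, contamination=0.05):
    """Shift scores so the threshold sits at 0; negative means anomalous."""
    offset = contamination_offset(raw_scores, contamination)
    shifted = [x - offset for x in raw_scores]
    return shifted, [x < 0 for x in shifted]
```

Under this convention the contamination value fixes the share of flagged hours in advance, so the visual check of the histogram serves to confirm that the chosen share coincides with the start of the sparse tail.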

For Isolation Forest, a total of 300 trees were generated with a contamination parameter set to 0.05 to calibrate anomaly sensitivity. In the case of One-Class SVM, the ν parameter was likewise set to 0.05, and gamma was auto-scaled based on feature count. In both cases, each training instance represented one hour of multivariate environmental data encoded as an eight-dimensional standardized vector, enabling the detection of both abrupt, sparsely distributed anomalies and more subtle, structured deviations. During training, Isolation Forest trees were built in parallel using random feature splits, while One-Class SVM formed a nonlinear boundary around the majority of the data points using kernel transformations. The process for each model culminated in the assignment of anomaly scores or binary flags, providing two independent perspectives on abnormal environmental behavior.
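A configuration along these lines could look as follows in scikit-learn. This is a sketch under stated assumptions rather than the study's actual code: the text does not name the library, the feature matrix is replaced by synthetic data, `random_state` is added for repeatability, and "auto-scaled" gamma is interpreted as `gamma="scale"` (which is close to `gamma="auto"` on standardized data).

```python
import numpy as np
from sklearn.ensemble import IsolationForest
from sklearn.preprocessing import StandardScaler
from sklearn.svm import OneClassSVM

# Synthetic stand-in for the hourly feature matrix (rows = hours,
# columns = 8 features); the laboratory data are not reproduced here.
rng = np.random.default_rng(0)
X = StandardScaler().fit_transform(rng.normal(size=(400, 8)))

# Hyperparameters as reported in the text; random_state is an assumption.
iso = IsolationForest(n_estimators=300, contamination=0.05, random_state=0)
ocsvm = OneClassSVM(kernel="rbf", nu=0.05, gamma="scale")

# fit_predict returns +1 for inliers and -1 for anomalies.
if_flags = iso.fit_predict(X) == -1
svm_flags = ocsvm.fit_predict(X) == -1

print(f"IF flagged {if_flags.mean():.1%}, OC-SVM flagged {svm_flags.mean():.1%}")
```

Keeping the two flag vectors separate preserves the two independent perspectives described above; any rule for combining them would be an additional design decision.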

3.4. Characterization of Hidden States Using Clustering

Latent operational modes in the monitored environment were investigated using unsupervised clustering applied to standardized time-series data. K-means clustering was selected as a scalable and interpretable method for segmenting hourly observations into discrete environmental states. Prior to clustering, each hourly segment was transformed into an eight-dimensional standardized feature vector comprising CO2 concentration, TVOC, PM2.5, temperature, relative humidity, noise level, and energy-related metrics. Standardization was applied to equalize the influence of variables expressed on different scales.

The optimal number of clusters, k = 3, was determined using the elbow method, which identifies the point of diminishing returns in intra-cluster inertia reduction. This selection was further validated through silhouette scores, ensuring adequate separation and internal consistency of the resulting groups. The clustering process aimed to capture recurring patterns in the environmental data and assign each hour to one of the emergent operational modes.
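The elbow criterion can be illustrated with a small, dependency-free sketch of Lloyd's algorithm that reports the within-cluster sum of squares (inertia) for a given k. The deterministic initialization from evenly spaced rows and the toy data are choices made for this example only and are not claimed to match the study's setup.

```python
def kmeans_inertia(points, k, iters=50):
    """Plain Lloyd's algorithm; returns the final within-cluster sum of squares."""
    n = len(points)
    # Deterministic initialization: k evenly spaced rows (illustrative choice).
    centroids = [list(points[i * n // k]) for i in range(k)]

    def sq_dist(p, c):
        return sum((a - b) ** 2 for a, b in zip(p, c))

    def assign():
        return [min(range(k), key=lambda j: sq_dist(p, centroids[j])) for p in points]

    for _ in range(iters):
        labels = assign()  # assignment step: nearest centroid
        for j in range(k):  # update step: centroid = mean of its members
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                centroids[j] = [sum(col) / len(members) for col in zip(*members)]
    labels = assign()
    return sum(sq_dist(p, centroids[lab]) for p, lab in zip(points, labels))


# Toy data: three compact groups of four points each.
points = [
    (0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0),
    (10.0, 0.0), (10.0, 1.0), (11.0, 0.0), (11.0, 1.0),
    (0.0, 10.0), (0.0, 11.0), (1.0, 10.0), (1.0, 11.0),
]
inertias = {k: kmeans_inertia(points, k) for k in (1, 2, 3, 4)}
```

On such data the inertia falls steeply up to k = 3 and only marginally afterwards; that bend is what the elbow method looks for.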

The reliability of the proposed framework was assessed using a two-part validation strategy appropriate for unsupervised learning environments where labeled ground-truth data are unavailable. First, internal validation was used to confirm the quality and stability of the identified operational states. The silhouette score was calculated to determine the optimal number of clusters (k). The k = 3 configuration yielded the highest average silhouette score (0.396), outperforming k = 2 (0.31) and k = 4 (0.35). This result indicates that the identified states—“Crowded”, “Experiment”, and “Empty/Cool”—are statistically well-separated and internally dense. Establishing these stable operational baselines is a critical prerequisite for the subsequent anomaly detection, which defines anomalies as significant deviations from these normal states.
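For readers unfamiliar with the metric, the silhouette score compares, for each point, the mean distance a to the other members of its own cluster with the smallest mean distance b to any other cluster, giving (b − a) / max(a, b) in the range [−1, 1]. A minimal sketch follows, assuming Euclidean distance; the function name mirrors common library usage but the implementation is written out here for illustration.

```python
from math import dist
from statistics import mean


def silhouette_score(points, labels):
    """Mean silhouette coefficient over all points (Euclidean distance)."""
    clusters = {}
    for p, lab in zip(points, labels):
        clusters.setdefault(lab, []).append(p)

    scores = []
    for p, lab in zip(points, labels):
        own = clusters[lab]
        if len(own) == 1:
            scores.append(0.0)  # convention for singleton clusters
            continue
        # a: mean distance to the other members of the point's own cluster
        a = sum(dist(p, q) for q in own) / (len(own) - 1)
        # b: smallest mean distance to the members of any other cluster
        b = min(
            mean([dist(p, q) for q in other])
            for other_lab, other in clusters.items()
            if other_lab != lab
        )
        scores.append((b - a) / (max(a, b) or 1.0))
    return mean(scores)
```

Comparing this value across candidate k, as done above for k = 2, 3, and 4, selects the partition whose clusters are most compact relative to their separation.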

Second, since a direct calculation of metrics like precision and recall was not feasible without a labeled dataset, the performance of the anomaly detection model was assessed through qualitative validation. This involved an expert-driven analysis of high-scoring anomalies identified by the Isolation Forest model to determine if they represented practically significant events. For instance, our model successfully flagged a potential safety risk where one detected anomaly showed a sharp spike in TVOC concentrations during late-night hours when CO2 levels were at a minimum. This pattern, which is inconsistent with any normal state, strongly suggests a chemical leak or equipment off-gassing in an unoccupied room. The model also identified an operational inefficiency where another anomaly was characterized by normal CO2 and TVOC levels but co-occurred with unusually high noise and temperature readings in an “Empty/Cool” state. This pointed to a malfunctioning HVAC component or unattended equipment left running, representing both a safety concern and energy waste. The model’s ability to consistently identify such actionable events provides strong qualitative evidence of its effectiveness and validates its utility as an early warning system in a real-world laboratory environment.

Following clustering, the entire timeline was labeled according to the assigned clusters, enabling reconstruction of state transitions over time. This segmentation was designed not only to characterize environmental variability but also to inform downstream analyses such as anomaly contextualization, time-of-day trends, or building system response modeling. The identified clusters further provide a framework for defining state-dependent control logic. By associating multivariate environmental conditions with operational modes, the methodology supports potential integration with building automation systems, enabling adaptive ventilation, filtration, or energy optimization strategies that adjust dynamically based on the detected environmental state.
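One possible way to reconstruct state transitions from the labeled timeline is sketched below. The function names and the short label sequence are hypothetical; the sketch only assumes that each hour carries one cluster label.

```python
from itertools import groupby


def segment_states(hourly_labels):
    """Collapse an hourly label sequence into (state, start_index, length) runs."""
    segments = []
    start = 0
    for state, run in groupby(hourly_labels):
        length = len(list(run))
        segments.append((state, start, length))
        start += length
    return segments


def count_transitions(segments):
    """Count how often each ordered pair of consecutive states occurs."""
    counts = {}
    for (prev, _, _), (nxt, _, _) in zip(segments, segments[1:]):
        counts[(prev, nxt)] = counts.get((prev, nxt), 0) + 1
    return counts


# Hypothetical ten-hour excerpt of cluster labels.
labels = ["Empty/Cool"] * 3 + ["Crowded"] * 2 + ["Experiment"] * 4 + ["Empty/Cool"]
segments = segment_states(labels)
transitions = count_transitions(segments)
print(segments)
print(transitions)
```

The run lengths give the duration of each state episode, and the transition counts indicate which state changes a state-dependent controller would need to handle most often.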

The resulting segmentation divided the timeline into three interpretable environmental states. The first, labeled Crowded, encompassed 19.8% of hourly records and was defined by elevated CO2 concentrations near 790 ppm, moderately high TVOC levels around 860 ppb, noise averaging 50 dB, and temperatures near 30 °C. These features indicated periods of dense human presence and were confirmed by badge-entry logs matching work hours with high occupancy.

The second state, termed Experiment, included 37.6% of the data. It was characterized by moderate CO2 levels around 550 ppm but significantly elevated TVOC readings near 2500 ppb. With noise near 48 dB, temperatures around 24 °C, and humidity close to 42%, this cluster aligned with active experimental activity involving solvents or emissions, typically conducted by a limited number of personnel or automated equipment.

The third cluster, Empty/Cool, spanned 42.6% of the timeline. It showed low CO2 and TVOC values near 520 ppm and 710 ppb, respectively, minimal noise around 43 dB, and lower ambient temperatures close to 14 °C. These patterns reflected inactive periods, such as nights and weekends, when the space was unoccupied and systems operated in standby mode.

4. Results

4.1. Analysis of Preprocessed Time-Series Data

Following the completion of data cleaning and temporal alignment, the dataset was structured into a continuous multivariate time series comprising 1808 complete hourly observations, reliably capturing the microclimatic conditions of the laboratory. CO2 concentrations ranged from 403 to 2305 ppm, with a median of approximately 492 ppm. TVOC levels fluctuated between 50 and 4925 ppb, with a median near 1219 ppb. Temperature varied broadly but clustered around 27 °C during working hours. Noise levels spanned from 36 to 65 dB, with a median of 47 dB, while relative humidity ranged between 19 and 55 percent. A sample fragment of the cleaned and standardized data array used in model development is presented in Table 2.

K-means clustering applied to the standardized feature space consistently revealed three dominant operational modes. The Crowded state encompasses approximately one-third of the timeline and is marked by the highest CO2 levels, mean near 790 ± 250 ppm, and elevated background noise close to 50 dB, indicative of dense occupancy and active equipment use. The Experiment mode covers slightly more than one-third of the dataset and is characterized by peak TVOC emissions, mean around 2500 ± 830 ppb, alongside moderate CO2 concentrations, reflecting chemically intensive procedures carried out with minimal personnel presence. The remaining portion of the timeline is described by the Empty/Cool cluster, which reflects periods of low anthropogenic activity, cooler air temperatures near 14 °C, and the quietest ambient conditions.

Isolation Forest, configured with a contamination rate of 5 percent, identified 94 anomalous hours, two-thirds of which occurred during Crowded periods. These anomalies frequently coincided with CO2 surges above 1800 ppm, TVOC levels exceeding 4000 ppb, or concurrent increases in both, occasionally accompanied by transient noise bursts above 60 dB.

The diurnal profile exhibits clear peaks in CO2 and noise aligned with standard working hours, while TVOC spikes remain irregular and show strong alignment with laboratory-specific processes rather than occupancy patterns. Taken together, the resulting operational mode map accounts for approximately 95 percent of routine environmental behavior, while the remaining 5 percent corresponds to manageable deviations—instances that may warrant targeted interventions in ventilation strategy or process design.

4.2. Identification of Anomalous Events

The model identified 94 h as anomalous, as reflected in the iso_flag column. Manual inspection confirmed that these anomalies corresponded to real environmental events—such as sudden spikes in CO2 or TVOC levels, abnormal drops in temperature, or prolonged periods of elevated noise—rather than sensor noise.

This validation was further supported by quantitative profiling of the operational states. For example, within the “Crowded” state, anomalous hours were characterized by a mean CO2 concentration of 1347.3 ppm, significantly higher than the mean of 916.6 ppm during normal “Crowded” hours. More notably, these anomalies often involved extreme spikes in particulate matter, with mean PM2.5 concentrations reaching 50.8 µg/m3—a value nearly six times higher than the baseline for that operational state. Similarly, a detailed review of the top 10 most anomalous events identified by the model (those with the lowest iso_score) consistently revealed concurrent, multi-variable deviations, such as simultaneous surges in CO2 (above 2200 ppm) and noise (above 60 dB), confirming their operational significance.

To validate the operational significance of these flagged hours beyond statistical measures, the detected anomalies were cross-referenced with the laboratory’s internal activity logs. This process confirmed that the model’s findings corresponded to tangible operational events rather than random sensor noise. For instance, a dense cluster of anomalies identified by the model on 3 April and 26 May directly coincided with logged experimental sessions involving prolonged solvent use under conditions of temporarily reduced ventilation. This alignment with ground-truth records provides strong evidence of the model’s reliability in detecting operationally significant deviations. These detections illustrate the model’s sensitivity to operational irregularities within the monitored space.

To further assess the robustness of the findings, a benchmark comparison was conducted using the Density-Based Spatial Clustering of Applications with Noise (DBSCAN) algorithm, selected for its effectiveness in handling arbitrarily shaped clusters and noise. Of the 94 anomalies identified by Isolation Forest, DBSCAN independently flagged 81 of the same hourly intervals, resulting in a high concordance rate of 86%. This strong agreement between two methodologically different unsupervised algorithms further strengthens the confidence in the identified anomalous events.
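The concordance figure follows directly from the reported counts (81 of 94). The sketch below shows one way such a rate could be computed from two Boolean flag arrays, together with a toy DBSCAN run in which noise points (label -1) are treated as anomalies. The helper `concordance_rate`, the synthetic points, and the `eps` and `min_samples` values are assumptions for illustration; the study's DBSCAN settings are not stated here.

```python
import numpy as np
from sklearn.cluster import DBSCAN


def concordance_rate(reference_flags, other_flags):
    """Share of reference anomalies that the other detector also flags."""
    reference_flags = np.asarray(reference_flags, dtype=bool)
    other_flags = np.asarray(other_flags, dtype=bool)
    shared = np.count_nonzero(reference_flags & other_flags)
    return shared / np.count_nonzero(reference_flags)


# Counts reported in the text: 81 of the 94 Isolation Forest anomalies.
reported_rate = 81 / 94

# Synthetic illustration: DBSCAN labels points outside any dense region as -1.
rng = np.random.default_rng(1)
dense = rng.normal(scale=0.3, size=(200, 2))
isolated = np.array([[5.0, 5.0], [-5.0, 5.0], [5.0, -5.0]])
points = np.vstack([dense, isolated])
dbscan_flag = DBSCAN(eps=0.5, min_samples=5).fit_predict(points) == -1

# Pretend a reference detector flagged exactly the three isolated points.
reference_flag = np.zeros(len(points), dtype=bool)
reference_flag[-3:] = True
rate = concordance_rate(reference_flag, dbscan_flag)
print(round(reported_rate, 2), rate)
```

Because the rate is normalized by the reference detector's count, it measures how many Isolation Forest anomalies DBSCAN confirms, not how many additional points DBSCAN flags on its own.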

Figure 4 illustrates the temporal dynamics of hourly CO2 concentrations and the corresponding anomaly intervals. Covering the period from 16 March to 9 June 2025, the figure displays CO2 levels as a continuous blue line, with red markers indicating anomalous intervals as identified by the Isolation Forest algorithm. The model operates in an unsupervised manner, detecting statistically rare deviations from the learned environmental baseline without reliance on labeled data.

Distinct clusters of anomalies emerge in early April and early June, where CO2 concentrations exceed 2000 ppm. These periods correspond to increased laboratory activity and restricted ventilation, as confirmed by internal operational logs. In addition to clustered anomalies, isolated spikes are intermittently observed throughout the timeline, potentially reflecting brief disruptions in airflow or transient increases in occupancy.

Conversely, extended periods of environmental stability—particularly in mid-May—exhibit minimal or no anomalies. These intervals are characterized by low baseline levels of CO2 and TVOC and coincide with reduced personnel presence, as indicated by badge-entry records.

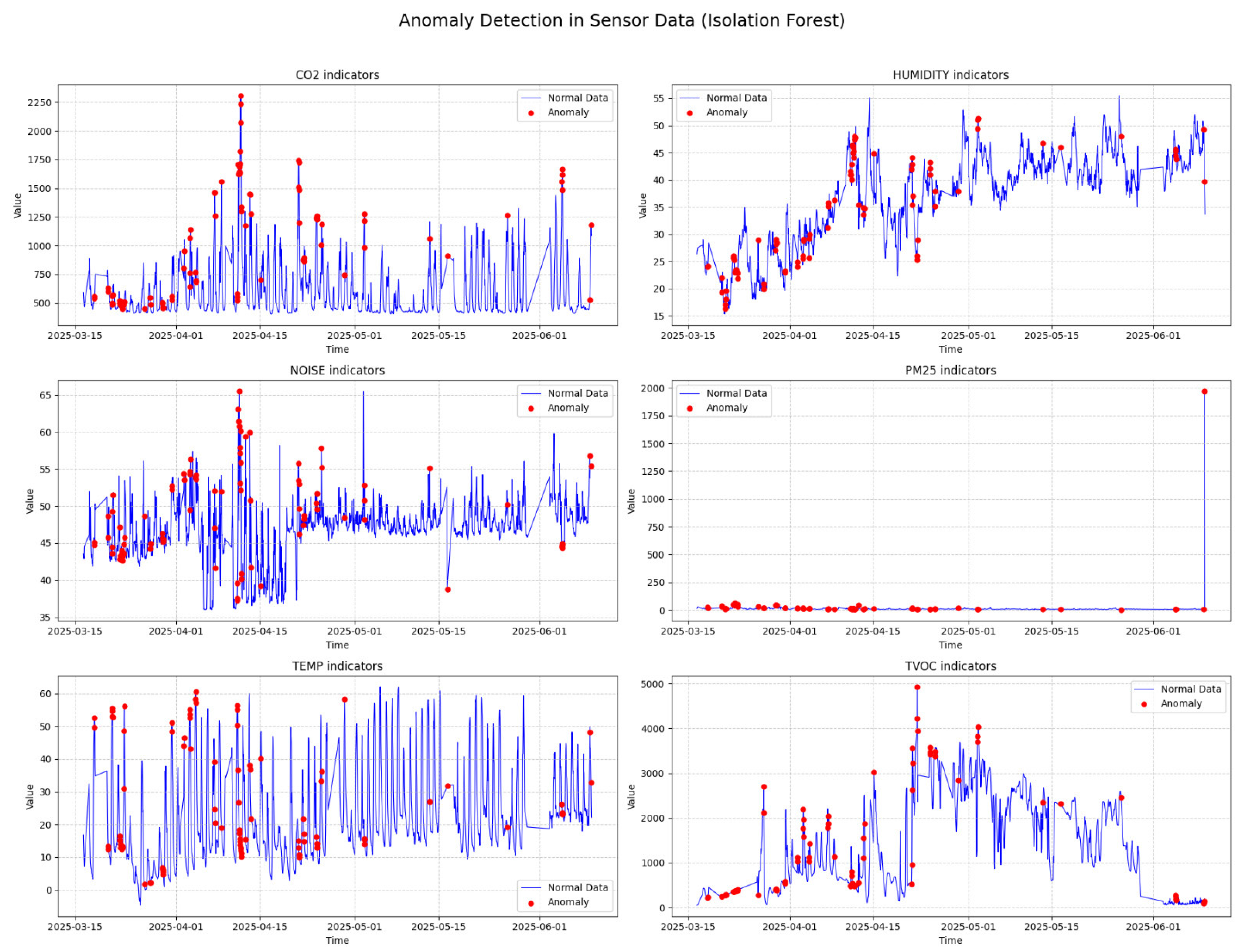

Figure 5 extends the anomaly detection analysis beyond CO2 to all monitored environmental indicators: CO2, humidity, noise, PM2.5, temperature, and TVOC. Each subplot illustrates the temporal progression of sensor values in blue, while anomalies identified by the Isolation Forest model are highlighted in red. Presenting all modalities together demonstrates that anomalies are not limited to a single pollutant dimension but can also appear in diverse operational states, for instance elevated noise or temperature even when CO2 levels remain normal. This integrated representation highlights the importance of multivariate monitoring in uncovering complex and context-aware anomalies that would remain undetected if CO2 were considered in isolation.

The model’s capacity to detect both isolated peaks and temporally aggregated deviations demonstrates its robustness in capturing structurally diverse anomaly patterns. Aligning the detected anomalies with contextual data—such as personnel scheduling and operational routines—enhances the interpretability and diagnostic utility of the detection process. Ultimately, this approach strengthens the environmental monitoring framework by improving its relevance and responsiveness to real-world laboratory conditions.

To enhance the reliability of anomaly detection, an additional model—One-Class Support Vector Machine (One-Class SVM) with an RBF kernel—was trained. Similarly to Isolation Forest, this algorithm operates in an unsupervised manner, learning a boundary that encompasses the normal behavior of the data.

The One-Class SVM model identified 75 anomalous hours, a substantial portion of which overlapped with the anomalies detected by Isolation Forest, as shown in Figure 6. However, differences between the two approaches were also observed. Isolation Forest demonstrated greater sensitivity to sharp, short-term spikes in CO2 concentrations, whereas One-Class SVM tended to highlight longer-lasting deviations that were not always captured by the former.

This divergence stems from the fundamental differences in their mechanisms: Isolation Forest isolates anomalies via recursive random partitioning, while One-Class SVM constructs a soft boundary around the main data cluster. As a result, the former is more effective at detecting localized outliers, whereas the latter is better suited to global or structural shifts in the data.

A combined analysis of both models’ outputs, as shown in Figure 6, provides a more complete and reliable view of the anomaly landscape, capturing both abrupt and gradual deviation patterns.
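A combined view of the two detectors could be assembled along the following lines. The sketch assumes scikit-learn and synthetic data; the `nu` and `gamma` settings of the One-Class SVM are illustrative assumptions, since the study's hyperparameters are not given in this section.

```python
import numpy as np
from sklearn.ensemble import IsolationForest
from sklearn.preprocessing import StandardScaler
from sklearn.svm import OneClassSVM

rng = np.random.default_rng(7)
# Synthetic stand-in for the standardized hourly feature matrix (not study data).
baseline = rng.normal(size=(480, 6))
outliers = rng.normal(loc=6.0, size=(20, 6))
X = StandardScaler().fit_transform(np.vstack([baseline, outliers]))

# Both estimators return -1 for outliers and +1 for inliers.
iso_flag = IsolationForest(contamination=0.05, random_state=0).fit_predict(X) == -1
svm_flag = OneClassSVM(kernel="rbf", gamma="scale", nu=0.05).fit_predict(X) == -1

summary = {
    "both": int(np.sum(iso_flag & svm_flag)),
    "isolation_forest_only": int(np.sum(iso_flag & ~svm_flag)),
    "one_class_svm_only": int(np.sum(~iso_flag & svm_flag)),
}
summary["either"] = int(np.sum(iso_flag | svm_flag))
print(summary)
```

Hours flagged by both models are the strongest candidates for follow-up, while the model-specific sets can be inspected separately for abrupt or gradual deviations.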

4.3. Discovered Laboratory Operational States

Clustering analysis was employed to reveal latent operational modes in the monitored room based on hourly aggregated environmental data. The K-means algorithm, with the number of clusters set to three, was applied to the standardized feature space, and Principal Component Analysis was used to visualize the resulting clusters. This approach allowed for the differentiation of distinct laboratory states that occurred throughout the observation period.
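The clustering and visualization pipeline described above can be sketched as follows, assuming scikit-learn. The synthetic six-feature data, the group means, and the seeds are illustrative assumptions; clustering is performed in the full standardized space and PCA is used only to obtain two plotting coordinates.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(3)
# Synthetic six-feature hourly data with three loose groups (illustrative only).
group_means = np.array([[0.0] * 6, [4.0] * 6, [-4.0, 4.0, -4.0, 4.0, -4.0, 4.0]])
hourly = np.vstack([m + rng.normal(size=(120, 6)) for m in group_means])

# Standardize, then cluster in the full feature space.
scaled = StandardScaler().fit_transform(hourly)
mode_cluster = KMeans(n_clusters=3, n_init=10, random_state=0).fit_predict(scaled)

# Project to two principal components for visualization only.
pca = PCA(n_components=2)
projection = pca.fit_transform(scaled)  # columns are PC1 and PC2
explained = pca.explained_variance_ratio_
print(projection.shape, explained)
```

Reporting the explained variance ratio alongside the scatter plot helps readers judge how much of the cluster separation is preserved in the two-dimensional view.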

The first cluster, labeled Crowded, encompassed 42.6% of the total data, equivalent to 770 h of monitoring. This cluster was distinguished by elevated levels of total volatile organic compounds (TVOC), averaging 2483.1 ppb, moderate carbon dioxide concentrations of approximately 528.7 ppm, and consistent acoustic activity around 47.5 dB(A). These environmental patterns suggest active occupancy, likely corresponding to regular working hours characterized by the presence of multiple individuals and frequent use of chemical agents.

The second cluster, referred to as Experiment, comprised 37.6% of the observations (680 h). It was characterized by lower TVOC concentrations, averaging 1241.9 ppb, a higher CO2 level of 974.7 ppm, and increased noise levels near 50.6 dB(A). This configuration is indicative of periods dominated by equipment-intensive processes—such as automated experimental protocols—where human involvement is minimal but instrument operation is continuous. The elevated CO2 in the absence of significant human activity may point to sealed environments with restricted ventilation during extended unattended procedures.

The third “Empty/Cool” cluster accounted for 19.8% of the dataset (358 h) and demonstrates that all monitored environmental variables remained at low levels. With no occupants—and hence no internal sources of heat or moisture—temperature and relative humidity stayed comparatively low and stable; the sound-pressure level did not exceed an average of 50 dB(A); TVOC concentrations remained minimal; and CO2 stabilized at approximately 498 ppm, confirming the absence of respiration-driven emissions. Persistently limited air exchange further corroborates that the room had remained unused for an extended period.

As shown in Figure 7, the distribution of hourly observations in the reduced PCA space reveals clear spatial separation among the three operational modes identified via K-means clustering. The figure presents a Principal Component Analysis (PCA) projection of the hourly environmental data, clustered using K-means (k = 3) into three distinct operational modes. Each point represents one hour of aggregated sensor readings, reduced to two principal components (PC1 and PC2) for visualization. The clusters are color-coded as follows: “Empty” (blue), “Crowded” (orange), and “Experiment” (green). The spatial separation of the clusters indicates distinct environmental patterns tied to different laboratory conditions. The “Crowded” cluster is associated with elevated TVOC levels and moderate CO2, reflecting active human presence and chemical procedures. The “Experiment” mode is characterized by low noise, low TVOC, and lower temperatures, suggesting automated instrument operation with minimal personnel. The “Empty” cluster corresponds to periods when the workspace was unoccupied, which is clearly reflected in the sensor readings: all key parameters (CO2, TVOC, temperature, and noise) remain at consistently low levels. This environmental profile indirectly confirms the absence of activity and personnel, making the interpretation of this cluster both clear and reliable.

The clarity and consistency of this clustering structure not only provide insight into typical operational modes but also create a meaningful backdrop against which anomalous conditions can be interpreted.

Table 3 presents representative examples of hourly environmental readings identified as anomalies by the Isolation Forest model. These hours exhibit distinctive deviations across key parameters, aligning with the broader patterns uncovered through PCA and clustering.

The sample presented in Table 3 includes only those hourly intervals that were flagged as anomalous by the Isolation Forest algorithm, as indicated by the iso_flag column where each entry is marked as TRUE. This designation reflects that the sensor profiles during these hours deviated significantly from the normal environmental patterns typical of the laboratory setting. The reasons for such classifications are clearly traceable within the data itself.

In nearly every listed interval, at least one key parameter reaches extreme values. For instance, CO2 concentrations rise to 900 ppm and beyond, whereas under standard conditions they rarely exceed 500 ppm. Similarly, TVOC levels reach between 2000 and 3600 ppb—several times higher than the typical background levels. In some cases, elevated temperatures and increased noise levels are also recorded simultaneously. These co-occurring anomalies across multiple channels are highly uncommon under routine laboratory operations and are therefore accurately identified as multifactor deviations.

It is particularly noteworthy that the model consistently separates these instances from the main data distribution. This indicates that the algorithm was successfully calibrated on a “healthy” segment of the time series, where sensors operated under stable conditions. Due to its sensitivity to complex patterns, Isolation Forest avoids reacting to random fluctuations and instead reliably detects disruptions to the typical data structure—such as ventilation failures, experiments, or other nonstandard operational scenarios.

The interpretability of the detection results is further enhanced by the inclusion of the iso_score and mode_cluster attributes. The iso_score quantifies the degree of deviation from the baseline data distribution, with lower (more negative) values indicating stronger anomalies. This scoring framework offers a transparent means of ranking anomalous instances by severity, effectively reflecting the model’s internal certainty regarding each classification. Notably, intervals with the most extreme scores often coincide with multidimensional deviations, underscoring the algorithm’s sensitivity to complex, compound disruptions rather than isolated fluctuations. The primary metric for scoring is the path length, h(x), which is defined as the number of edges a data point x traverses from the root of an isolation tree to a terminal node [50]. Since anomalies are easier to isolate, they are expected to have a statistically shorter average path length, E(h(x)), across the ensemble of trees [51]. This average path length, E(h(x)), is calculated by averaging the path length for a given point x across all T trees in the forest [52]:

$$E(h(x)) = \frac{1}{T}\sum_{t=1}^{T} h_t(x),$$

where h_t(x) is the path length of x in the t-th tree. To transform this path length into a standardized anomaly score, it is normalized by a factor, c(n), which represents the average path length of an unsuccessful search in a Binary Search Tree given n data points (the number of samples in the dataset). The normalization factor c(n) is calculated as follows [53]:

$$c(n) = 2H(n-1) - \frac{2(n-1)}{n},$$

where H(i) is the harmonic number, which can be estimated as ln(i) + 0.5772 (Euler’s constant). The scikit-learn library’s decision_function method, which was used in our research, then calculates an anomaly score for each observation x. This score is a shifted version of the original isolation forest paper’s formulation [54], where lower scores indicate a higher degree of abnormality. After computing the anomaly scores, the Isolation Forest assigns a binary label to each observation through the predict function. In this step, data points with scores below the contamination-adjusted decision threshold are assigned a value of −1 (indicating an outlier), while those above the threshold are assigned a value of +1 (indicating an inlier). For analytical convenience, we further transformed these outputs into a Boolean variable (iso_flag), where True denotes an anomalous observation and False denotes a normal one. This procedure provided a clear categorical indicator of anomaly presence, complementing the continuous scoring mechanism.
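The scoring and flagging steps can be illustrated with a short sketch. It assumes scikit-learn and synthetic data; the helpers `c_factor` and `paper_score` are hypothetical names that implement the normalization factor and the original score 2^(−E(h(x))/c(n)), and the `contamination=0.05` setting mirrors the rate reported in the Results.

```python
import numpy as np
from sklearn.ensemble import IsolationForest

EULER_GAMMA = 0.5772156649


def c_factor(n):
    """Average path length of an unsuccessful BST search over n points."""
    if n <= 1:
        return 0.0
    if n == 2:
        return 1.0
    harmonic = np.log(n - 1) + EULER_GAMMA  # estimate of H(n - 1)
    return 2.0 * harmonic - 2.0 * (n - 1) / n


def paper_score(mean_path_length, n):
    """Original score 2^(-E(h(x)) / c(n)); values near 1 are anomalous."""
    return 2.0 ** (-mean_path_length / c_factor(n))


# Synthetic stand-in for the standardized feature matrix (not study data).
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(size=(285, 6)), rng.normal(loc=7.0, size=(15, 6))])

model = IsolationForest(contamination=0.05, random_state=0).fit(X)
iso_score = model.decision_function(X)  # lower means more anomalous
iso_flag = model.predict(X) == -1       # True marks an outlier
print(int(iso_flag.sum()), float(iso_score.min()))
```

In scikit-learn, `decision_function` equals `score_samples` minus the fitted `offset_`, and `score_samples` is the negative of the original paper's score, which is why lower values of iso_score correspond to stronger anomalies.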

In parallel, the mode_cluster attribute facilitates the post hoc characterization of anomaly types by assigning each detected instance to a distinct cluster. These clusters are derived from shared structural features within the sensor profiles and reflect recurring patterns within the anomalous data. Such clustering enables the differentiation of anomaly subtypes—ranging from ventilation system failures to overcrowding events or thermal irregularities—thereby providing a structured basis for downstream analysis, operational diagnostics, and the development of context-specific mitigation strategies.

Taken together, the table validates the model’s ability to filter out meaningful anomalies rather than random noise. This capability is essential for environmental monitoring in laboratory settings, where early detection of abnormal events is directly tied to diagnostic accuracy, personnel safety, and the integrity of experimental procedures.

5. Discussion and Limitations

The modern laboratory environment is characterized by exceptionally high energy consumption compared to other building types. Unlike offices or libraries, laboratories demand tight environmental control, continuous use of specialized equipment, and high-efficiency ventilation systems required for the safe handling of chemical and biological materials. These factors result in significant energy expenditures, making laboratories one of the most resource-intensive categories of buildings. Against this backdrop, ensuring the safety of personnel working in energy-intensive conditions becomes critically important. In this context, energy efficiency and health protection are closely intertwined and directly aligned with the United Nations Sustainable Development Goals (SDGs) [55]. Particular attention is being paid to the concept of “smart” laboratories, which involves implementing digital and automated energy management strategies. These approaches are especially relevant in the context of Kazakhstan’s sustainable energy development [29,56].

Despite the clear benefits of intelligent systems, their widespread implementation in laboratory settings faces several substantial challenges. The need for significant initial investments, large-scale infrastructure modernization, and staff training makes the adaptation process rather complex. In Kazakhstan, these challenges are especially acute due to limited financial and technical resources. However, these very difficulties create opportunities for innovation: by demonstrating the long-term economic and environmental benefits of smart laboratories, organizations can build a compelling case for investments that promote sustainable development and enhance safety [29,57].

To overcome these challenges, a comprehensive and systematic approach is required. This includes equipment upgrades, the development of adaptive operational protocols, and widespread adoption of intelligent technologies. Such an approach not only conserves resources but also contributes to environmental protection and improves working conditions in laboratories. Its successful implementation can serve as a model for sustainable development in other energy-intensive sectors. With sustainability now a universal priority, modernization of Kazakhstan’s scientific infrastructure based on smart laboratory principles is a crucial step toward achieving national and international sustainability goals [29,58,59]. Thus, smart laboratories represent more than just the adoption of modern technologies; they signify a fundamental transformation of the research environment. They integrate energy efficiency, environmental sustainability, and employee well-being into a unified strategy. For Kazakhstan and other developing countries, this transition is of vital importance, offering a balanced approach to scientific progress and responsible management of natural and human resources [58,59].

Our study presents a methodologically rigorous and contextually grounded approach to the application of unsupervised machine learning for anomaly detection and latent state characterization, without relying on costly and impractical labeled data in smart laboratory environments. The research addresses a clearly defined and timely problem, focusing on the challenges of managing indoor environmental quality and energy efficiency in laboratories—settings known for high energy demand, complex operational patterns, and safety-critical conditions. The alignment of this work with global sustainability objectives, particularly those outlined in the Sustainable Development Goals (SDGs), underscores its broader significance. The methodological framework employs Isolation Forest to detect anomalous environmental conditions and K-means clustering to identify latent operational states within multivariate time-series data. These two unsupervised techniques serve distinct yet complementary functions: the former isolates irregular data points, while the latter uncovers recurring operational patterns. By utilizing environmental sensor data—often incomplete or noisy—our method demonstrates that laboratories can still achieve accurate monitoring, thereby reducing operational costs, improving indoor air quality, and enhancing safety. Moreover, our approach provides actionable environmental insights that can inform adaptive HVAC control, further promoting sustainability and scalability in constrained environments.

The identification of three distinct operational states—“Crowded” (19.8%), “Experiment” (37.6%), and “Empty/Cool” (42.6%)—provides a granular, data-driven profile of laboratory usage that transcends simple occupancy schedules. This state awareness is directly translatable into an intelligent HVAC control strategy. During the “Crowded” state, characterized by high CO2 levels (mean near 790 ppm), the HVAC system should prioritize high-volume fresh air exchange to maintain indoor air quality and mitigate pathogen transmission risk. Conversely, during the “Experiment” state, which is defined by peak TVOC emissions (mean around 2500 ppb) but moderate CO2, the control logic should shift to maximizing chemical fume extraction and filtration, even if occupancy is low. By comparison, the “Empty/Cool” state provides a clear signal for the HVAC system to enter a deep energy-saving setback mode, reducing ventilation to minimal levels and adjusting temperature setpoints without compromising safety. Beyond defining normal operation, the unsupervised anomaly detection models serve as an automated early warning system for laboratory management. The detection of 94 anomalous hours, including CO2 surges above 1800 ppm and TVOC concentrations exceeding 4000 ppb, provides actionable alerts that signify potential risks. For a lab manager, such an alert is not just a data point; it is an immediate flag for a potential ventilation failure, an unauthorized experiment, or a procedural breach in handling volatile materials. This allows for rapid intervention, enhancing safety and ensuring compliance. This function is critical because it moves beyond static, single-parameter thresholds (such as a fixed CO2 alarm) to identify complex, multifactor deviations that would be missed by conventional building management systems. 
The true practical value of this methodology lies in integrating these two layers of insight—state characterization and anomaly detection—into a dynamic, context-aware control framework for HVAC systems. Instead of relying on a fixed schedule or a single sensor trigger, the system can adopt state-dependent control logic. A moderate TVOC reading acceptable during an “Experiment” state could instead be flagged as a critical anomaly in an “Empty/Cool” state, suggesting a chemical spill or equipment malfunction. This intelligent approach allows for the precise allocation of energy resources, applying intensive ventilation only when and where it is needed, thereby balancing the often-competing goals of occupant safety and energy efficiency.
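A state-dependent control rule of the kind described above might look like the following sketch. The ventilation mode names, the `decide` function, and the rule that an anomaly during the “Empty/Cool” state overrides the setback are all hypothetical assumptions for illustration; they do not describe a deployed controller or a specific building automation interface.

```python
# Hypothetical mapping from detected state to ventilation mode.
STATE_TO_VENTILATION = {
    "Crowded": "high_fresh_air_exchange",
    "Experiment": "fume_extraction_and_filtration",
    "Empty/Cool": "energy_saving_setback",
}


def decide(state, is_anomaly):
    """Return a ventilation mode and an alert flag for one hourly observation."""
    if state not in STATE_TO_VENTILATION:
        raise ValueError(f"unknown state: {state!r}")
    ventilation = STATE_TO_VENTILATION[state]
    if is_anomaly and state == "Empty/Cool":
        # Assumed rule: an anomaly in a nominally empty room cancels the setback.
        ventilation = "high_fresh_air_exchange"
    return {"ventilation": ventilation, "alert": bool(is_anomaly)}


print(decide("Experiment", False))
print(decide("Empty/Cool", True))
```

Keeping the state-to-mode mapping in a single table makes the policy easy to audit and to adjust once live measurements of energy use and air quality become available.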

The detection of anomalies during periods of low CO2 concentration highlights a key strength of the multivariate approach employed in this study. The unsupervised models, Isolation Forest and One-Class SVM, operate on a standardized, six-dimensional feature vector comprising CO2, TVOC, PM2.5, temperature, humidity, and noise, rather than monitoring individual parameters in isolation. Consequently, an anomaly is defined not by a univariate extreme but as a data point that deviates from the learned baseline of normal operational correlations between these variables. For instance, an observation characterized by a low CO2 concentration, which is typical of the “Empty/Cool” state, may be flagged as anomalous if it co-occurs with elevated noise levels and temperatures. Such a combination is inconsistent with the established environmental profile for an unoccupied laboratory and could signify a potential equipment malfunction or an HVAC system fault. To illustrate the practical utility of the proposed multivariate anomaly detection framework, consider a compound anomaly scenario. The feature vector in this case is characterized by normal pollutant concentrations (CO2 and TVOC) alongside elevated temperature and noise levels, representing a departure from the typical “Empty/Cool” operational mode observed during late-night hours. A traditional threshold-based monitoring approach would not flag such conditions since the air quality indicators remain within acceptable limits. However, the Isolation Forest model, which evaluates the joint distribution of all features, identifies the observation as a significant anomaly. This outcome occurs because the co-occurrence of low pollutant levels with high temperature and noise is exceedingly rare in the historical dataset, placing the data point in a sparse region of the feature space. As a result, the algorithm requires very few partitions to isolate it, which yields a short average path length and therefore a strongly anomalous score (a low iso_score under the scikit-learn convention used here).
Such a condition is most plausibly attributable to unattended equipment operating outside scheduled hours, which leads to unnecessary energy consumption, increased operational costs, and potential safety risks. This outcome provides a powerful, validated indicator for both dimensions simultaneously: a safety assessment, as the condition could signify unattended equipment posing a risk, and a sustainability assessment, as such an event represents clear energy waste. This highlights the framework’s core contribution: it assesses the operational state holistically, providing context-aware insights that enable more intelligent and sustainable building management while enhancing operational safety.

Some limitations should be acknowledged in the current research. The temporal scope of the dataset, which spans a four-month observation period, may not capture long-term seasonal variations or rare operational scenarios that could influence model generalizability. The dataset contains only 1808 records, which may limit the statistical power of the results. The generalizability of the identified operational states—“Crowded,” “Experiment,” and “Empty/Cool”—could be influenced by the specific seasonal context of the observation period. HVAC performance and baseline energy consumption can vary significantly between heating and cooling seasons, which could alter the definitions of these clusters. Consequently, the current models might not generalize perfectly to data from different times of the year without recalibration. To address this limitation and enhance the robustness of our models, data collection is ongoing. We plan to extend the observation period to encompass at least one full calendar year. This expanded, year-long dataset will allow for the capture of seasonal variations and provide a more comprehensive baseline of the laboratory’s operational profile. Future work will focus on retraining the Isolation Forest and K-means models on this longitudinal data to develop a more adaptive and seasonally aware system. This will not only improve the accuracy of anomaly detection but also enable the identification of more nuanced operational states. In addition, the experimental setup is tailored to a specific laboratory configuration with a customized hardware and software architecture. As such, the direct applicability of the results to other environments or institutions may require additional adaptation. While the system architecture supports real-time control and automation, the present study does not evaluate the performance of the proposed models in live control scenarios. 
A primary limitation is that while the system architecture supports real-time control, the analysis was conducted entirely offline. Consequently, although the potential integration with HVAC systems is a core motivation, this study does not provide empirical data on real-world energy savings or improvements in indoor air quality. A pilot deployment is required to quantitatively validate these practical outcomes.

The methodology shows promise for identifying anomalies and operational states from historical data, but it has not yet been tested in a real-time deployment. A full evaluation of its practical performance, including its computational efficiency and its ability to integrate with and dynamically inform a live building management system, remains a crucial next step, since only a live control loop can show its true impact on energy consumption and indoor air quality. As with any model trained on a finite dataset, there is also a risk of overfitting: the algorithms may have learned patterns and noise idiosyncratic to this specific dataset, and their performance might degrade when applied to data from a different laboratory, or even from the same laboratory under significantly different conditions. Future work should therefore prioritize validating the framework across a diverse portfolio of laboratories over more extended timeframes to build more robust and generalizable models. Rigorous cross-validation and testing on independent datasets will be critical to mitigate the risk of overfitting and ensure the models’ reliability.
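One way such a cross-validation could be organized for time-ordered sensor records is an expanding-window split, in which each fold trains only on records that precede its test block. The sketch below is an illustrative assumption rather than a procedure used in this study; the function name `expanding_window_splits` and the choice of four folds are hypothetical, and only the record count (1808) comes from the text.

```python
def expanding_window_splits(n_records, n_folds):
    """Yield (train_indices, test_indices) pairs for time-ordered data.

    Training indices always precede test indices, so no fold is
    evaluated on records older than those it was trained on.
    """
    fold_size = n_records // (n_folds + 1)
    for k in range(1, n_folds + 1):
        train_end = k * fold_size
        # The last fold absorbs any remainder so every record is used.
        test_end = train_end + fold_size if k < n_folds else n_records
        yield range(0, train_end), range(train_end, test_end)


# 1808 is the dataset size reported above; 4 folds is an arbitrary choice.
splits = list(expanding_window_splits(1808, 4))
for train_idx, test_idx in splits:
    print(f"train: 0..{train_idx[-1]}  test: {test_idx[0]}..{test_idx[-1]}")
```

With a year-long dataset, the same idea could be applied with folds aligned to seasons, so that a model trained on one season is tested on the next.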

6. Conclusions

This study’s primary contribution is the development and validation of a dual-layer, context-aware monitoring framework for complex indoor environments such as laboratories. The framework offers a methodological foundation for assessing and balancing the often-conflicting demands of occupant safety and energy sustainability. Its novelty lies in first identifying distinct, data-driven operational states (“Crowded”, “Experiment”, and “Empty/Cool”) and then detecting anomalies within the context of each state. This approach moves away from conventional static-threshold alarms (e.g., a fixed CO₂ limit) toward a dynamic system that interprets sensor data in light of the laboratory’s current activity. Because the framework learns what is “normal” for each situation, it can flag subtle deviations that a fixed threshold would miss, providing a more nuanced assessment of environmental safety and performance.
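The difference between a static threshold and a state-aware check can be illustrated with a minimal sketch. It is not the study's implementation: a simple per-state z-score stands in for the Isolation Forest layer, the state labels are assumed to be already assigned (by K-means in the study), and all readings, the 1200 ppm limit, and the z-score cutoff are hypothetical values chosen for illustration.

```python
from statistics import mean, stdev

# Hypothetical CO2 readings (ppm), already labelled with an operational state.
# These numbers are illustrative and are NOT taken from the study's dataset.
HISTORY = {
    "Crowded": [900, 950, 1000, 980, 940, 1010],
    "Empty/Cool": [420, 430, 410, 425, 415, 435],
}

STATIC_LIMIT_PPM = 1200  # assumed fixed alarm threshold


def static_alarm(co2_ppm):
    """Conventional alarm: fires only above one fixed limit."""
    return co2_ppm > STATIC_LIMIT_PPM


def contextual_alarm(state, co2_ppm, z_limit=3.0):
    """State-aware alarm: fires when a reading is unusual for its state.

    A z-score is used here as a simple stand-in for the per-state
    anomaly detector.
    """
    values = HISTORY[state]
    z = abs(co2_ppm - mean(values)) / stdev(values)
    return z > z_limit


# 700 ppm is below the fixed limit but far from normal for an empty room.
print(static_alarm(700))                    # False
print(contextual_alarm("Empty/Cool", 700))  # True
print(contextual_alarm("Crowded", 1000))    # False
```

The 700 ppm reading passes the fixed limit yet is flagged in the “Empty/Cool” state, which is the kind of subtle deviation the paragraph above describes.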

The practical value of this framework is twofold. For laboratory managers, it serves as an automated early warning system that turns raw data into actionable information. An anomaly alert is no longer just a high reading; it is a contextual flag pointing to a potential ventilation failure during a crowded session, an unauthorized experiment, or a chemical spill in a supposedly empty room. This is how the system supports the assessment and improvement of safety. For building control, the methodology provides a foundation for adaptive HVAC operation. Instead of relying on rigid schedules, building automation could apply state-dependent logic: maximizing energy savings during “Empty/Cool” states, prioritizing fresh air exchange when “Crowded”, and enhancing fume extraction during “Experiment” states. The approach thus goes beyond a simple trade-off, offering a way to validate operational decisions and balance the critical demands of laboratory safety against the strategic goals of energy sustainability.
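The state-dependent logic described above could be expressed as a simple lookup from operational state to HVAC mode. The sketch below is a hypothetical illustration only: the `HvacMode` fields, the air-change rates, and the conservative fallback are assumptions that do not come from the study, and any real setpoints would have to follow the applicable laboratory ventilation standards.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HvacMode:
    air_changes_per_hour: float   # hypothetical ventilation rate
    fume_extraction_boost: bool   # extra extraction during experiments
    temperature_setback: bool     # relaxed setpoint to save energy


# Illustrative values only; not derived from the study or from any standard.
MODES = {
    "Empty/Cool": HvacMode(2.0, False, True),   # maximize energy savings
    "Crowded": HvacMode(8.0, False, False),     # prioritize fresh air
    "Experiment": HvacMode(6.0, True, False),   # enhance fume extraction
}

# Conservative fallback for unknown states or flagged anomalies.
SAFE_DEFAULT = HvacMode(8.0, True, False)


def select_mode(state, anomaly=False):
    """Return the HVAC mode for a detected state.

    Any anomaly, or a state the table does not know, falls back to the
    conservative mode so that energy savings never override safety.
    """
    if anomaly:
        return SAFE_DEFAULT
    return MODES.get(state, SAFE_DEFAULT)


print(select_mode("Empty/Cool"))
print(select_mode("Empty/Cool", anomaly=True))
```

Routing anomalies and unrecognized states to the most conservative mode is one possible design choice; it keeps the energy-saving setback from being applied when the room's condition is uncertain.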

Looking ahead, the practical extension of this work centers on real-world implementation and scalability. First, the immediate priority is to transition the offline model into a live, closed-loop control system in order to quantitatively validate its direct impact on energy consumption (sustainability) and air quality (safety). The pilot deployment is expected to quantify the achievable reduction in HVAC energy consumption and to verify that safety-critical air change standards remain satisfied. Second, validating the framework across a diverse portfolio of laboratories and building types is essential to develop more robust and generalizable models. Finally, future iterations could enrich the model by integrating additional data streams, such as equipment energy logs or occupancy sensors, to refine the precision of state detection and create a comprehensive “digital twin” for proactive laboratory management.