Abstract

Automated landmark recognition is a cornerstone technology for smart tourism systems, cultural heritage documentation, and improved visitor experiences. Deep learning has substantially improved both the accuracy and the computational efficiency of destination classification. To address gaps in existing approaches, we introduce the enhanced Samarkand_v2 dataset, which covers twelve historical landmark categories under broad environmental variability. Our methodology applies a multi-threshold pixel enhancement strategy at intensity levels of 100, 150, and 225 to accentuate architectural characteristics ranging from fine-grained textures to prominent reflective components. Four independent YOLO11 models were trained, one on the original images and one on each enhanced variant, and for each model the epoch with the best validation performance was retained. A key contribution is a selective ensemble mechanism that exhaustively evaluates model combinations and identifies the optimal configuration through data-driven selection rather than uniform weighting. Experiments show substantial gains over established baseline architectures and conventional ensembles, with 99.24% accuracy, 99.36% precision, 99.40% recall, and 99.36% F1-score. Paired t-tests confirm the significance of the enhancement strategies and, in particular, the effectiveness of lower-threshold transformations in capturing architectural nuances. The framework remains robust under challenging conditions, including illumination changes, structural occlusions, and architectural similarity between classes.
These results indicate strong potential for practical smart tourism deployment, automated heritage preservation, and real-time mobile landmark recognition, contributing to the advancement of intelligent tourism technologies.

1. Introduction

AI-based landmark identification is a key driver in modernizing tourism operations, providing considerable value for visitors, site administrators, and policymakers across international markets [1,2,3]. These systems enhance the tourist experience by identifying landmarks in real time on mobile devices and wearables, giving travelers access to tailored guidance, augmented reality features, and intelligent itinerary recommendations [4,5]. Such capabilities are especially valuable in unfamiliar cultural or overseas contexts, where automated recognition can bridge knowledge gaps and deepen cultural understanding [3,6].

AI-based landmark classification serves as a cornerstone technology for sustainable tourism management, enabling smart platforms to encourage tourists to visit less congested locations and balance tourist distribution across destinations [7,8,9,10]. These automated systems advance smart tourism applications through real-time landmark recognition, enhanced visitor guidance, and sophisticated data analytics that transform user-generated content into actionable insights for destination marketing and crowd management strategies [3,11,12,13]. Beyond operational benefits, AI-driven classification plays a vital role in cultural heritage preservation by systematically documenting historical sites for future generations while enabling augmented reality applications that overlay contextual information to enhance educational value [4,5,14,15]. The integration of AI-based tourist destination classification thus creates smart, accessible, and sustainable tourism ecosystems that optimize visitor experiences, preserve cultural heritage, and empower tourism authorities with data-driven decision-making capabilities [16,17].

Despite progress in AI-based landmark classification, the current literature reveals critical gaps: limited geographic and cultural diversity in datasets restricting global generalizability [2], substantial performance degradation under real-world conditions with lighting variations and occlusions [16,17], and absence of selective ensemble approaches combining YOLO models trained on systematically enhanced image variants [18]. These limitations hinder practical deployment in smart tourism systems and motivate our proposed multi-threshold enhancement and selective ensemble framework.

Automated landmark recognition also supports the UN Sustainable Development Goals, in particular SDG Target 11.4 on protecting and safeguarding cultural heritage, by enabling digital preservation systems that reduce physical interaction with sites while maintaining cultural accessibility. For Samarkand specifically, where tourism infrastructure remains underdeveloped yet visitor numbers continue to rise, automated landmark recognition offers a scalable way to manage tourism impacts without extensive infrastructure investment.

To overcome these limitations, this study introduces a framework that combines multi-threshold image enhancement with selective ensemble integration of YOLO11 classifiers. The approach uses a curated image collection from Samarkand’s heritage sites, graduated pixel-intensity transformations, and data-driven model selection. It aims to improve recognition accuracy, strengthen robustness to variable imaging conditions, and remain practical for deployment in tourism environments.

This work addresses the following research questions:

- RQ1: To what extent can a multi-threshold visual preprocessing strategy combined with selective ensemble methods improve the accuracy and reliability of landmark recognition across different environmental contexts and architectural forms?

- RQ2: Compared to individual baseline architectures and traditional fixed ensemble approaches, what is the practical effectiveness of the proposed YOLO11-based selective ensemble framework in achieving superior classification performance while maintaining computational efficiency for real-world deployment?

The present study is structured around the following key goals:

- To develop a comprehensive multi-threshold pixel enhancement strategy that systematically emphasizes different architectural feature categories across varying intensity ranges (100, 150, 225), improving model sensitivity to diverse visual patterns in historical landmarks.

- To implement an intelligent selective ensemble mechanism that automatically evaluates all possible model combinations and determines optimal subsets based on empirical performance validation, avoiding the limitations of fixed ensemble approaches.

- To validate the proposed approach through extensive experimentation on the enhanced Samarkand_v2 dataset, demonstrating superior performance compared to established baseline architectures and individual model implementations.

- To establish the practical applicability of the framework for smart tourism deployment through comprehensive evaluation of classification accuracy, environmental robustness, and computational efficiency.

Samarkand was strategically selected as our research site for several critical reasons: (1) As a UNESCO World Heritage Site since 2001, it represents the urgent preservation challenges faced by historical cities worldwide, requiring innovative solutions to balance tourist access with monument protection. (2) Unlike well-documented European or East Asian heritage sites, Central Asian monuments lack comprehensive AI-based preservation tools, creating a technological gap our research addresses. (3) The city’s diverse Islamic architecture, spanning from 14th-century Timurid monuments to 17th-century madrasas, provides ideal visual complexity for testing our multi-threshold enhancement approach, where threshold 100 captures intricate geometric patterns in shadowed tilework, threshold 150 targets moderately illuminated muqarnas and painted decorative elements, and threshold 225 emphasizes highly reflective surfaces like glazed tiles and metallic inlays characteristic of Islamic architecture.

By developing graduated threshold enhancement and selective ensemble integration for landmark recognition, this work advances automated tourism systems, strengthens visual identification technologies for travel applications, and supports intelligent analytics for tourism marketing and sustainable destination governance. The validated performance of the methodology lays the groundwork for tourism platforms that both improve traveler engagement and help safeguard cultural assets through automation. The main contributions of this work are as follows:

- A novel multi-threshold pixel-level enhancement method designed specifically for architectural landmark recognition, which targets several intensity bands to derive complementary image features.

- A selective ensemble architecture that integrates YOLO11 models through data-driven subset selection, excluding variants that do not improve performance while maintaining computational efficiency.

- Comprehensive experimental validation showing 99.24% accuracy, 99.36% precision, 99.40% recall, and 99.36% F1-score, outperforming baseline architectures and traditional ensemble methods.

- Statistical validation through paired t-tests that confirms the significance of the performance improvements and provides insight into the effectiveness of the individual enhancement strategies.

- A practical advance in smart tourism technology that supports both automated landmark recognition and cultural heritage preservation.

The manuscript proceeds as follows: Section 2 examines the existing literature covering visual computing in tourism, automated landmark recognition systems, digital tourism infrastructure, and multi-model integration techniques. Section 3 details our approach, encompassing graduated pixel optimization, adaptive model combination, and assessment methodologies. Section 4 discusses implementation details, data specifications, model training procedures, and empirical findings with significance testing. Section 5 provides conclusions and identifies future research avenues.

2. Related Works

This section reviews the existing literature in three interconnected areas that directly inform our proposed multi-threshold enhancement and selective ensemble approach for landmark classification. We examine (1) the emergence of smart tourism systems that require robust landmark recognition, establishing the practical need for our work; (2) deep learning architectures and image enhancement techniques, highlighting limitations in current single-threshold approaches; and (3) ensemble learning strategies, revealing the absence of selective ensemble methods specifically designed for tourism applications.

2.1. Smart Tourism: Integrating AI, IoT, and Big Data

The concept of smart tourism, fueled by the integration of AI, Internet of Things (IoT), and big data analytics, has gained significant momentum in both academic research and industry practice [19,20,21,22,23,24,25].

Early smart tourism platforms primarily focused on integrating mobile and web technologies for information dissemination and trip planning [22,26,27]. However, rapid progress in computer vision and AI has led to systems capable of automatically recognizing tourist destinations, monuments, and cultural heritage sites in real time [19,20]. Notably, AI-driven solutions have also contributed to sustainability in tourism by enabling dynamic management of tourist flows, reducing congestion at popular sites, and promoting less-visited attractions [7,8,20]. Augmented reality (AR) and location-based services further enrich tourist experiences by overlaying contextual information on physical landmarks and providing adaptive digital guides [20,28,29].

Recent literature highlights the growing adoption of AI analytics for sentiment analysis, event detection, and crisis management using user-generated data from social media, booking platforms, and review sites [12,30]. Deep learning in particular enables tourism systems to analyze large volumes of heterogeneous data, such as images, videos, and social media content, for visitor flow management, trend prediction, and content-based marketing [12,30,31,32]. These technologies help tourism authorities and businesses understand visitor behavior and preferences, optimize marketing strategies, and respond rapidly to unexpected disruptions. The evolution of smart tourism therefore depends on continued advances in image understanding, robust machine learning, and scalable AI systems that function effectively in diverse and dynamic tourism environments. While these applications demonstrate clear demand for accurate landmark recognition, existing systems often struggle with environmental variations and architectural similarities. Our multi-threshold enhancement approach targets these challenges by capturing complementary visual features across different intensity ranges. The proposed landmark classification system addresses a core requirement of smart tourism platforms, namely AI-powered services that deliver personalized, context-aware recommendations and real-time destination information to travelers, thereby improving both the tourist experience and the operational efficiency of tourism destinations.

2.2. Deep Learning Methods and Image Enhancement Strategies

Deep learning, especially convolutional neural networks (CNNs), has revolutionized the way tourist landmarks are recognized and classified from images, driving significant progress in automated visual understanding tasks [33,34,35]. Early models such as AlexNet [33] demonstrated the immense power of deep learning for image classification, while VGGNet [34] and ResNet [35] introduced deeper and more expressive networks by utilizing architectural innovations like increased depth and residual connections. These foundational networks paved the way for more advanced models that combine both performance and computational efficiency.

More recently, compact and resource-efficient models such as MobileNetV3 [36] and EfficientNet [37] have enabled accurate landmark recognition on mobile and edge devices, an essential requirement for real-time tourism applications. For multi-object and real-time detection in complex scenes, the YOLO family of networks [38,39,40] has emerged as a state-of-the-art approach, offering a balance between speed and accuracy that suits live tourist guide systems, AR applications, and automated travel photography. Recent YOLO variants, such as YOLOv7 and YOLO11, have demonstrated improved detection accuracy and real-time performance on tourism datasets containing diverse landmark types and challenging environmental conditions [38,40].

Image preprocessing and enhancement have also become key research directions for addressing the diverse lighting, occlusions, and visual clutter common in real-world tourism settings [41,42]. Techniques such as adaptive histogram equalization [41] improve contrast and detail visibility, while advanced pixel-level enhancement methods [42] allow deep models to extract discriminative features even under adverse lighting. Synthetic data augmentation, attention-based preprocessing, and context-aware feature extraction have also been explored to boost model robustness [16,43,44]. Several works have applied deep learning to the preservation and cataloging of cultural heritage, to 3D reconstruction, and to the development of visually rich datasets representative of global tourism scenarios [43,44]. However, dataset bias, insufficient diversity in image collections, and the need for more robust preprocessing strategies remain open research problems. Moreover, current approaches predominantly employ fixed preprocessing or single-threshold enhancement and therefore fail to capture the full spectrum of architectural features present in historical landmarks. Our multi-threshold strategy (100, 150, 225) addresses this limitation by systematically emphasizing different feature categories, from subtle textures to highly reflective surfaces, and thus provides more comprehensive visual representations for classification.

2.3. Advanced Ensemble Approaches in Tourism AI

Ensemble learning and multi-model strategies have emerged as powerful techniques for increasing the robustness and reliability of deep neural networks, particularly in image classification domains where intra-class variability, noise, and environmental factors can degrade performance [45,46]. Methods such as bagging, boosting, and snapshot ensembling aggregate predictions from multiple models or training epochs to capture complementary features and reduce the risk of overfitting [18,45,46].

Recent research highlights the effectiveness of ensemble deep learning in addressing common challenges faced by tourism image classification systems, including variations in lighting, perspective, and occlusion [16,46]. Multi-epoch ensembling, where outputs from different training checkpoints are combined, can enhance generalization and stability, especially when models are trained on augmented or differently preprocessed datasets [41,42,45]. The use of hybrid ensembles, integrating both CNNs and transformer-based architectures or leveraging both original and enhanced image representations, has been explored for further gains in accuracy [42,46].

However, although ensemble learning is standard practice in other visual recognition domains, its systematic application to landmark classification for smart tourism, particularly with lightweight real-time models such as YOLO, remains limited. Critically, existing ensemble approaches in tourism applications use fixed combinations without checking whether every model contributes positively to performance. Integrating advanced preprocessing with ensembling, for example by training parallel models on original and pixel-enhanced datasets and combining their predictions, has shown promise in recent work but remains an open research direction [41,42]. Our approach extends this line of research with a selective, four-branch YOLO11-based ensemble tailored to tourism landmark classification under real-world, variable conditions: it automatically identifies the optimal model subset, excluding non-contributing variants while retaining the benefits of multi-model fusion. This balance is particularly important for tourism applications, where computational efficiency must be weighed against classification accuracy.

3. Proposed Methodology

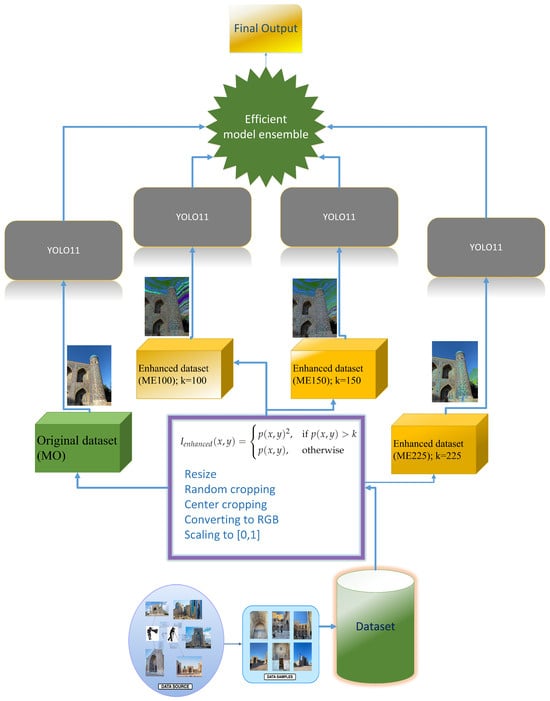

The methodology presented in this study addresses the challenges of robust landmark classification in smart tourism environments through a multi-threshold enhancement and selective ensemble framework. The approach consists of six steps: (1) systematic collection of images of culturally and historically significant landmarks; (2) multi-threshold pixel-level image refinement at three intensity levels (100, 150, and 225) to emphasize different architectural features; (3) parallel training of four independent YOLO11n-cls models, one on the raw images and one on each refined version; (4) selection of the best-performing model subset through exhaustive evaluation of ensemble combinations; (5) logit-based fusion of the selected models to generate final predictions; and (6) evaluation with conventional classification metrics. The complete workflow, illustrated in Figure 1 and formalized in Algorithms 1 and 2, leverages the complementary feature representations learned from different enhancement strategies. Each stage is designed to maximize the capture of discriminative visual patterns while keeping computational cost low enough for operational use in intelligent tourism platforms. The methodology builds on established ensemble learning principles while introducing a multi-threshold enhancement strategy tailored to architectural landmark recognition under varying environmental conditions.

Figure 1.

Illustration of the proposed method.
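Step (2) can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' code: it assumes the refinement rule sets every 8-bit value above the threshold $T$ to 255 and leaves all other values unchanged, applied elementwise to each RGB channel. Resizing to 640 × 640 is omitted, and the names `refine`, `make_variants`, and `normalize` are hypothetical.

```python
import numpy as np

THRESHOLDS = (225, 150, 100)  # intensity levels named in the paper


def refine(image: np.ndarray, threshold: int) -> np.ndarray:
    """Return a copy of an 8-bit image with every value above `threshold` set to 255.

    Assumed rule (elementwise, per RGB channel): p' = 255 if p > T, else p.
    """
    if image.dtype != np.uint8:
        raise TypeError("expected a uint8 image with values in [0, 255]")
    return np.where(image > threshold, np.uint8(255), image)


def make_variants(image: np.ndarray) -> dict[str, np.ndarray]:
    """Original image plus one refined copy per threshold (inputs for the four models)."""
    variants = {f"t{t}": refine(image, t) for t in THRESHOLDS}
    variants["original"] = image
    return variants


def normalize(image: np.ndarray) -> np.ndarray:
    """Scale 8-bit values to the [0, 1] range as float32."""
    return image.astype(np.float32) / 255.0


# Example: a single pixel with three channel values.
pixel = np.array([[[90, 120, 230]]], dtype=np.uint8)
for name, img in make_variants(pixel).items():
    print(name, img.ravel().tolist())
# t225 [90, 120, 255]
# t150 [90, 120, 255]
# t100 [90, 255, 255]
# original [90, 120, 230]
```

Under this assumed rule a lower threshold saturates a larger share of pixels, so the threshold-100 variant alters an image most strongly while the threshold-225 variant only touches its brightest regions.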

Precise identification of historical landmarks is a fundamental component of improved tourism experiences and cultural heritage protection. Conventional deep learning-based recognition systems frequently struggle with environmental variability, visual obstructions, and structural similarities between landmarks. To address these challenges, we introduce a multi-threshold enhancement and selective ensemble methodology based on YOLO11 that exploits the original images alongside three enhanced variants to improve classification performance and environmental robustness. As shown in Figure 1, the framework comprises data collection and preparation, intensity-based preprocessing at thresholds of 100, 150, and 225, concurrent training of four independent YOLO11 models, selection of the best model combination through performance analysis, and logit-level fusion to produce the final predictions. Although ensemble methods and image enhancement techniques have each been investigated in many application domains, our contribution lies in combining graduated pixel-intensity enhancement with automated ensemble optimization specifically for landmark recognition. The graduated enhancement strategy is designed to isolate distinct architectural elements: fine textural patterns in lower-intensity regions (threshold 100), intermediate-brightness features (threshold 150), and prominent reflective components (threshold 225) characteristic of historical monuments. To our knowledge, this approach has not previously been explored for tourism destination classification.
Our selective ensemble architecture extends conventional ensemble learning paradigms [18,47] with data-driven model selection that automatically identifies the optimal combination of the four specialized YOLO11 models, a methodological innovation in this domain. The methodology is described in detail below.
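Because the logits of the four trained models are extracted once and then reused, each candidate ensemble costs only an averaging pass, and the exhaustive search over non-empty subsets stays small:

```latex
\sum_{k=1}^{4} \binom{4}{k}
  = \binom{4}{1} + \binom{4}{2} + \binom{4}{3} + \binom{4}{4}
  = 4 + 6 + 4 + 1
  = 2^{4} - 1
  = 15
```

In general, $m$ candidate models yield $2^{m} - 1$ non-empty subsets, so exhaustive selection is cheap for a handful of models but grows exponentially as more enhancement variants are added.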

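| Algorithm 1. Smart Tourism Landmark Recognition: Multi-Threshold Image Refinement and Adaptive Ensemble with YOLO11 |
|---|
| Require: Samarkand dataset $\mathcal{D} = \{(x_i, y_i)\}_{i=1}^{n}$, where $x_i$ are input images and $y_i$ are the corresponding labels. |
| Ensure: Final trained ensemble model $E$ for classification of historical landmarks. |
| 1: Preprocessing: |
| 2: Resize every image to $640 \times 640$ pixels. |
| 3: Normalize images as: $x \leftarrow x / 255$ |
| 4: Apply pixel-level refinement: if 8-bit intensity $p > 225$, then update $p \leftarrow 255$, yielding $\mathcal{D}^{(225)}$. |
| 5: Training YOLO11 with Refined Data (Threshold 225): |
| 6: Initialize model $M_1$. |
| 7: for $e = 1$ to $N$ do |
| 8: Train $M_1$ on dataset $\mathcal{D}^{(225)}_{\text{train}}$. |
| 9: Validate performance on $\mathcal{D}^{(225)}_{\text{val}}$. |
| 10: if current accuracy is the best so far then |
| 11: Save $M_1^{*} \leftarrow M_1$. |
| 12: Apply refinement: if $p > 150$, set $p \leftarrow 255$, yielding $\mathcal{D}^{(150)}$. |
| 13: Training YOLO11 with Refined Data (Threshold 150): |
| 14: Initialize model $M_2$. |
| 15: for $e = 1$ to $N$ do |
| 16: Train $M_2$ on dataset $\mathcal{D}^{(150)}_{\text{train}}$. |
| 17: Validate on $\mathcal{D}^{(150)}_{\text{val}}$. |
| 18: if current accuracy is the best so far then |
| 19: Save $M_2^{*} \leftarrow M_2$. |
| 20: Apply refinement: if $p > 100$, set $p \leftarrow 255$, yielding $\mathcal{D}^{(100)}$. |
| 21: Training YOLO11 with Refined Data (Threshold 100): |
| 22: Initialize model $M_3$. |
| 23: for $e = 1$ to $N$ do |
| 24: Train $M_3$ on dataset $\mathcal{D}^{(100)}_{\text{train}}$. |
| 25: Validate on $\mathcal{D}^{(100)}_{\text{val}}$. |
| 26: if current accuracy is the best so far then |
| 27: Save $M_3^{*} \leftarrow M_3$. |
| 28: Training YOLO11 with Original Images: |
| 29: Initialize model $M_4$. |
| 30: for $e = 1$ to $N$ do |
| 31: Train $M_4$ on $\mathcal{D}_{\text{train}}$. |
| 32: Validate on $\mathcal{D}_{\text{val}}$. |
| 33: if current accuracy is the best so far then |
| 34: Save $M_4^{*} \leftarrow M_4$. |
| 35: Logit Extraction: |
| 36: for each $x$ in validation set do |
| 37: Collect outputs: $z_k(x) = M_k^{*}(x)$ for $k = 1, \dots, 4$ |
| 38: Performance-Guided Ensemble Selection: |
| 39: Measure accuracy of each individual model: $\operatorname{Acc}(M_k^{*})$, $k = 1, \dots, 4$. |
| 40: Evaluate ensemble combinations. |
| 41: for each subset $S \subseteq \{M_1, M_2, M_3, M_4\}$ where $S \neq \emptyset$ do |
| 42: for each image $x$ in $\mathcal{D}_{\text{val}}$ do |
| 43: Calculate ensemble output: $z_S(x) = \frac{1}{\lvert S \rvert} \sum_{M_k \in S} z_k(x)$ |
| 44: Compute validation accuracy $\operatorname{Acc}(S)$. |
| 45: Select the best-performing subset $S^{*} = \arg\max_{S} \operatorname{Acc}(S)$. |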

| Algorithm 2. Smart Tourism Landmark Recognition: Multi-Threshold Image Refinement and Adaptive Ensemble with YOLO11 (continued) |
|---|
| 1: Final Ensemble Prediction with Chosen Models: |
| 2: for each image $x$ in validation and test datasets do |
| 3: Derive the aggregated ensemble output using only the selected models: $z_{S^{*}}(x) = \frac{1}{\lvert S^{*} \rvert} \sum_{M_k \in S^{*}} z_k(x)$ |
| 4: Assign predicted label as: $\hat{y} = \arg\max_{c} z_{S^{*}}(x)_c$ |
| 5: Assessment of the Ensemble: |
| 6: Measure the performance of the final ensemble $E$ on test set $\mathcal{D}_{\text{test}}$ using: Accuracy, Precision, Recall, and F1-score. |
| 7: return Overall evaluation metrics of ensemble $E$. |
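Lines 38 to 45 of Algorithm 1 and lines 1 to 4 of Algorithm 2 amount to averaging cached logits over every non-empty model subset, keeping the subset with the highest validation accuracy, and predicting with the arg-max of its averaged logits. The NumPy sketch below assumes each model's validation logits are already stored as an array of shape (num_images, num_classes) with rows aligned across models. The model names ("M1" and so on), the function names, and the tie-breaking rule (prefer the smaller subset) are illustrative assumptions, not details from the paper.

```python
import itertools

import numpy as np


def ensemble_logits(logits: dict[str, np.ndarray], subset: tuple[str, ...]) -> np.ndarray:
    """Arithmetic mean of the cached logits of the models in `subset`."""
    return np.mean([logits[name] for name in subset], axis=0)


def accuracy(scores: np.ndarray, labels: np.ndarray) -> float:
    """Fraction of rows whose arg-max class equals the label."""
    return float(np.mean(scores.argmax(axis=1) == labels))


def select_subset(logits: dict[str, np.ndarray], labels: np.ndarray):
    """Score every non-empty subset on validation data and return the best one.

    Ties in accuracy are broken in favour of the smaller subset (an assumption
    that favours cheaper inference); remaining ties keep the first subset found.
    """
    names = sorted(logits)
    results = {}
    for size in range(1, len(names) + 1):
        for subset in itertools.combinations(names, size):
            results[subset] = accuracy(ensemble_logits(logits, subset), labels)
    best = max(results, key=lambda s: (results[s], -len(s)))
    return best, results


def predict(logits: dict[str, np.ndarray], subset: tuple[str, ...]) -> np.ndarray:
    """Final labels from the selected subset: arg-max of the averaged logits."""
    return ensemble_logits(logits, subset).argmax(axis=1)


# Toy validation set: 3 images, 2 classes, hypothetical models M1 to M3.
val_labels = np.array([0, 1, 0])
val_logits = {
    "M1": np.array([[2.0, 0.0], [0.0, 1.0], [0.0, 1.0]]),
    "M2": np.array([[0.0, 1.0], [0.0, 1.0], [3.0, 0.0]]),
    "M3": np.array([[0.0, 5.0], [5.0, 0.0], [0.0, 5.0]]),
}
best, scores = select_subset(val_logits, val_labels)
print(best, scores[best])  # ('M1', 'M2') 1.0
```

In this toy case neither M1 nor M2 is perfect on its own, but averaging their logits corrects both, while every subset containing M3 scores lower; this is the kind of non-contributing model the selective mechanism is meant to exclude.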

- Dataset Collection and Preparation: The dataset used in this research was assembled by systematically documenting historical landmarks in Samarkand, resulting in the enhanced Samarkand_v2 dataset. This collection significantly expands the original Samarkand dataset with additional images captured from diverse perspectives and under varying illumination, providing a more representative and robust training foundation. Samarkand_v2 covers a broader range of environmental scenarios, including different seasons, weather conditions, and times of day, to improve model generalization. Domain experts manually annotated each image with its landmark category, and the data were then partitioned into training (80%), validation (10%), and testing (10%) subsets by stratified sampling, which preserves class proportions across the subsets and supports rigorous assessment across all landmark categories.

- Multi-Level Image Enhancement Strategy: To optimize feature extraction and improve model sensitivity to architectural details, we implemented a graduated pixel enhancement strategy targeting three distinct intensity thresholds based on empirical analysis of architectural feature distributions. The enhancement transformation is defined as $p' = 255$ if $p > T$ and $p' = p$ otherwise, where $T \in \{100, 150, 225\}$ represents the threshold value and $p$ denotes the original pixel intensity. This approach amplifies bright regions linked to reflective building components such as marble surfaces, metallic decorations, and sunlit facades, while preserving lower-intensity structural details including shadowed areas and textural patterns. The threshold selection is motivated by architectural analysis: threshold 100 captures subtle variations in low-light conditions, threshold 150 emphasizes moderately bright surfaces typical of painted or textured walls, and threshold 225 targets highly reflective elements characteristic of polished stone and metal components. Following enhancement, all images are resized to 640 × 640 pixels and normalized to the [0, 1] range to ensure consistent neural network input formatting.

- Parallel Architecture Training Framework: Four independent YOLO11n-cls models are trained concurrently on distinct image variants derived from the Samarkand_v2 dataset: $M_1$ (threshold 225, focusing on highly reflective surfaces), $M_2$ (threshold 150, targeting moderate-brightness features), $M_3$ (threshold 100, capturing subtle intensity variations), and $M_4$ (original unmodified images, preserving natural feature distributions). All models use identical configurations, including backbone network parameters, classification head design, and optimization settings, to ensure comparability and eliminate architectural bias in performance evaluation. Training incorporates data augmentation (random horizontal flips, slight rotations), cosine-annealing learning-rate scheduling, and early stopping when validation performance plateaus. The optimal epoch is selected by continuously monitoring validation accuracy, and a model checkpoint is saved only when accuracy improves.

- Logit Extraction and Feature Representation: After training, raw classification logits are extracted for the Samarkand_v2 validation set, capturing each model’s outputs before softmax normalization. These logits are pre-activation confidence scores that preserve the relative magnitudes of the class predictions and serve as the basis for ensemble decision integration: $z_k(x) = M_k^{*}(x) \in \mathbb{R}^{C}$ for $k = 1, \dots, 4$, where $M_k^{*}$ represents the optimal checkpoint of each respective model, $x$ denotes an input image from the validation set, and $C = 12$ is the number of landmark categories. Working with full-precision logits allows more nuanced ensemble combinations than probability-based approaches, which may compress this information through the softmax transformation.

- Performance-Driven Ensemble Selection Mechanism: Rather than using fixed ensemble combinations that assume every model contributes equally, our methodology selects a model subset based on empirical validation performance on the extended Samarkand_v2 dataset. This design recognizes that some enhancement strategies may not contribute positively to overall performance, particularly for specific architectural styles or lighting conditions. For each combination $S \subseteq \{M_1, M_2, M_3, M_4\}$ of the four trained models with $S \neq \emptyset$, ensemble logits are computed by arithmetic averaging: $z_S(x) = \frac{1}{\lvert S \rvert} \sum_{M_k \in S} z_k(x)$. The exhaustive evaluation tests all non-empty subsets and computes the validation accuracy $\operatorname{Acc}(S)$ of each, defined as the fraction of validation images $(x, y)$ for which $\arg\max_c z_S(x)_c = y$. This data-driven selection adapts to the characteristics of the dataset and landmark types and avoids negative contributions from suboptimal model combinations. The optimal configuration is the one that maximizes validation accuracy: $S^{*} = \arg\max_{S} \operatorname{Acc}(S)$.

- Final Prediction Generation and Decision-Making: The final classification uses only the models in the optimal subset $S^{*}$, eliminating potential negative contributions from underperforming enhancement strategies. Final predictions are obtained by logit-level fusion of the selected models, $\hat{y}(x) = \arg\max_{c \in \{1, \dots, C\}} z_{S^{*}}(x)_c$, where $z_{S^{*}}(x)$ represents the ensemble logits computed from the selected model combination. The final prediction thus leverages the complementary strengths of different enhancement strategies while reducing computation by excluding non-contributing models. Operating at the logit level preserves the full range of model confidence scores, which can support finer class discrimination than probability-based voting schemes.

- Comprehensive Evaluation Framework and Statistical Validation: Model performance is assessed on the Samarkand_v2 dataset with standard classification metrics, supplemented by statistical significance testing of the performance differences. The evaluation covers both individual models and the selected ensemble:
  $$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}, \qquad \mathrm{Precision} = \frac{TP}{TP + FP},$$
  $$\mathrm{Recall} = \frac{TP}{TP + FN}, \qquad F_1 = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}},$$
  where TP, TN, FP, and FN denote true positives, true negatives, false positives, and false negatives, respectively. Additionally, paired t-tests assess the statistical significance of performance differences between individual models and the selected ensemble. The evaluation also includes confusion matrix analysis, to identify class-specific performance patterns, and error analysis, to understand failure modes and potential improvements.
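The logit averaging, exhaustive subset search, and final argmax described in the list above can be sketched in a few lines of Python with NumPy. This is a minimal illustration, not the authors’ code: it assumes each model’s validation logits are already available as an N × C array keyed by a model name such as `"MO"` or `"ME100"`, and the tie-breaking rule (prefer the smaller subset when two subsets reach the same accuracy) is our own assumption, since the text does not specify one.

```python
from itertools import combinations

import numpy as np


def average_logits(logits: dict[str, np.ndarray], subset: tuple[str, ...]) -> np.ndarray:
    """Arithmetic mean of the (N x C) logit arrays of the models in `subset`."""
    return np.mean([logits[name] for name in subset], axis=0)


def select_ensemble(
    logits: dict[str, np.ndarray], labels: np.ndarray
) -> tuple[tuple[str, ...], float]:
    """Score every non-empty subset of models on validation data; keep the most accurate.

    Subsets are visited from smallest to largest and only a strictly better
    accuracy replaces the incumbent, so ties favour fewer models (an assumption).
    """
    names = sorted(logits)
    best_subset: tuple[str, ...] = ()
    best_acc = -1.0
    for size in range(1, len(names) + 1):
        for subset in combinations(names, size):  # 2**len(names) - 1 subsets in total
            preds = average_logits(logits, subset).argmax(axis=1)
            acc = float((preds == labels).mean())
            if acc > best_acc:
                best_subset, best_acc = subset, acc
    return best_subset, best_acc


def predict(logits: dict[str, np.ndarray], subset: tuple[str, ...]) -> np.ndarray:
    """Final class index per image: argmax of the averaged logits of the chosen subset."""
    return average_logits(logits, subset).argmax(axis=1)
```

With four models the loop visits 15 subsets, so exhaustive search is inexpensive here; its cost doubles with every additional model, which is one reason to keep the ensemble small.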

The proposed multi-threshold enhancement and selective ensemble approach offers several advantages, which are validated empirically in Section 4. First, the adaptive ensemble selection mechanism substantially improves classification performance over baseline models, including MobileNetV3, ResNet50, EfficientNetB0, and the single-model YOLO11 architecture (see Table 1). This improvement is consistent with prior ensemble-based learning methods, in which combining varied feature abstractions derived from systematically modified input data improves model stability and predictive reliability [18,47]. Second, the multi-threshold pixel enhancement addresses environmental robustness by capturing architectural features across different intensity ranges, improving resilience to the lighting variations and visual obstructions that frequently compromise landmark recognition systems in real-world deployments [16,17]. Third, the selective ensemble reduces inference cost by automatically excluding non-contributing models while preserving the complementary advantages of each enhancement strategy. The improvements across all evaluation metrics, together with the paired t-test results (Table 2), support the suitability of the framework for deployment in smart tourism applications.

Table 1.

Comparative results across benchmark models, individual refined models, and the introduced selective ensemble framework evaluated on the validation subset of the Samarkand_v2 image corpus.

Table 2.

Paired t-test results for model performance comparison for validation set of the Samarkand_v2 dataset.

The selection of pixel intensity thresholds at 100, 150, and 225, along with the four-model ensemble configuration (original plus three enhanced variants), is grounded in established methodologies from recent computer vision research. The triple-threshold strategy draws directly on the Selective Intensity Ensemble Classifier (SIEC) framework [48], which demonstrated that multiple intensity thresholds capture different categories of visual features in microscopic image analysis: lower thresholds (100) emphasize subtle textural details, mid-range thresholds (150) highlight moderate-intensity features, and higher thresholds (225) isolate bright, reflective elements [49]. The Image Pixel Interval Power (IPIP) method [50] further supports this multi-threshold approach by showing that systematic pixel intensity transformations at different intervals create complementary feature representations that improve deep learning performance. The choice of four ensemble members, comprising the original model and three threshold-enhanced variants, follows the Hybrid Convolutional Network Fusion methodology [51], which established that combining models trained on raw and systematically enhanced visual features through dual-pathway learning significantly improves classification accuracy while maintaining computational efficiency. This configuration balances diverse feature extraction against practical deployment constraints; the SIEC framework showed that selective ensembles outperform larger, computationally intensive ensembles by combining models that capture distinct visual characteristics rather than redundant information.

Because the selective ensemble runs approximately 25% faster at inference than a fixed four-model ensemble, it can operate on standard smartphones without cloud processing. Once downloaded to a mobile device, the selected YOLO11n-cls models perform landmark recognition entirely offline. By systematically addressing existing limitations of landmark identification technologies, the proposed method provides a solid basis for enhancing heritage protection and intelligent tourism services. Its improvements in accuracy, stability under environmental changes, inference-time efficiency, and scalability across architectural forms make it a practical advancement in smart tourism automation. The multi-threshold enhancement strategy captures complementary architectural features that individual enhancement approaches fail to represent adequately, while the performance-driven ensemble selection identifies the best model combination without manual parameter tuning. Together, these innovations yield a more reliable and deployable solution for real-world tourism applications, where consistent performance across varying environmental conditions and architectural diversity is essential for user satisfaction and system adoption.

4. Experiments

To evaluate the proposed multi-threshold enhancement and selective ensemble approach for historical landmark recognition, we conducted a systematic set of experiments on the improved Samarkand_v2 dataset. The experiments assess the classification performance of individual YOLO11 models trained on the original images and on each enhancement threshold (100, 150, 225), as well as their combination through the proposed selective ensemble. Accuracy, precision, recall, and F1-score are reported for all models, supplemented by paired t-tests to assess the statistical significance of performance differences. This section describes the experimental setup, the properties of the refined dataset, the training procedure, and the results, including a comparison with established baseline architectures and a discussion of robustness across varying visual conditions and architectural complexity. The design places particular emphasis on whether the selective ensemble mechanism can automatically identify optimal model combinations and outperform both fixed ensemble approaches and individual models.

4.1. Dataset

The Samarkand_v2 dataset (https://www.kaggle.com/datasets/ulugbekhudayberdiev/samarkand-v2, accessed: 4 July 2025) is a substantially enhanced and expanded collection of images curated for historical landmark classification in Samarkand, a city renowned among the world’s historic urban centers for its cultural and architectural diversity. It builds on the original Samarkand collection, adding imagery and improving annotation quality to provide a more comprehensive foundation for developing and evaluating deep learning models. Samarkand_v2 comprises 1243 high-quality photographs, split into 1112 training images and 131 test images (roughly a 90/10 split), which provides a consistent framework for model training and performance assessment. The dataset covers twelve categories corresponding to key heritage sites: Al-Buxoriy, Bibikhonim Mosque, Gur-e-Amir Mausoleum, Hazrati Doniyor Maqbarasi, Imom Motrudiy, Ruhobod Complex, Sherdor Madrasa, Tillya-Kori Madrasa, Ulugh Beg Observatory, Ulugh Beg Madrasa, Khizr Complex, and Shah-i-Zinda Necropolis, each capturing distinct architectural features and cultural value from different eras of Islamic architectural heritage.

The dataset was partitioned using stratified sampling rather than multi-fold cross-validation due to several considerations: (1) preserving natural environmental variations inherent in cultural heritage imagery that random resampling might disrupt; (2) ensuring adequate representation for minority landmark classes that would be further fragmented in cross-validation folds; and (3) computational efficiency requirements for exhaustive ensemble selection evaluation. Statistical significance of results is validated through paired t-tests rather than cross-validation variance analysis.
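A stratified hold-out split of the kind described above can be sketched in plain Python. The sketch is illustrative only: the input format (a list of `(path, label)` pairs), the 10% test fraction, and the rule that every class contributes at least one test image are assumptions chosen to resemble the 1112/131 split, and the seed of 42 simply reuses the value reported in Section 4.3.

```python
import random
from collections import defaultdict


def stratified_split(samples, test_fraction=0.1, seed=42):
    """Split (path, label) pairs so that each class keeps roughly the same test share."""
    by_class = defaultdict(list)
    for path, label in samples:
        by_class[label].append((path, label))
    rng = random.Random(seed)                 # fixed seed -> reproducible split
    train, test = [], []
    for label in sorted(by_class):            # deterministic class order
        items = by_class[label]
        rng.shuffle(items)
        # At least one test image per class so minority landmarks are still evaluated.
        n_test = max(1, round(len(items) * test_fraction))
        test.extend(items[:n_test])
        train.extend(items[n_test:])
    return train, test
```

Splitting class by class, rather than shuffling the whole pool, is what keeps small landmark classes from disappearing from the test set by chance.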

Samarkand was chosen deliberately: it is a UNESCO World Heritage Site whose monuments require urgent digital preservation. The extended dataset addresses the need for comprehensive digital documentation of Central Asian architectural heritage, which remains underrepresented in global AI-based preservation initiatives. The choice serves multiple preservation objectives: (1) creating permanent digital archives of architectural features vulnerable to environmental degradation, seismic activity, and tourism pressure; (2) enabling virtual tourism experiences that reduce physical site impact while maintaining cultural accessibility; and (3) providing a cost-effective preservation solution deployable in resource-constrained environments typical of developing tourism destinations.

The test set reflects attention to class representation, with support levels that vary according to how frequently and how easily each landmark could be documented. It covers all twelve classes: Al-Buxoriy, Bibikhonim, Gur Emir, Hazrati Doniyor maqbarasi, Imom Motrudiy, Ruhobod Complex, Sherdor Madrasa, Tillya-Kori Madrasa, Ulugbek Rasadxonasi, Ulugbek madrasasi, Xizr majmuasi, and Shokhi Zinda. This distribution ensures evaluation across every landmark category while retaining enough samples for a reliable performance assessment.

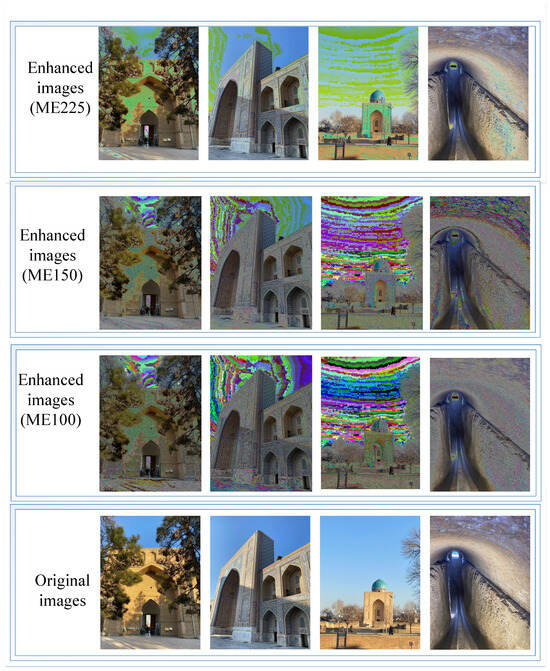

To ensure compatibility with modern deep learning frameworks and consistency across the multi-threshold enhancement pipeline, all images were resized to 640 × 640 pixels, with padding used to preserve aspect ratios. Pixel intensities were normalized to the [0, 1] range to promote training stability and accelerate convergence across all model variants. The enhanced Samarkand_v2 dataset includes three variants generated by the proposed multi-threshold transformation, in which pixel values (on the 0 to 255 intensity scale) exceeding thresholds of 100, 150, and 225 are squared to amplify different categories of architectural features. Each threshold targets different architectural elements: threshold 100 emphasizes subtle textural details and shadowed regions, threshold 150 targets moderately illuminated surfaces and painted elements, and threshold 225 amplifies highly reflective surfaces such as polished marble, metallic decorations, and sunlit facades common in Islamic architectural heritage.
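The thresholded squaring step can be sketched with NumPy. The text does not say how squared values larger than 255 are handled, so this sketch assumes an 8-bit input and clips the result back to 255; it also reads “exceeding” as a strict comparison. Under these assumptions, every pixel above the threshold saturates to white (any value of at least 16 squares to 256 or more), so the operation behaves like a highlight mask; an implementation that rescales instead of clipping would produce a different visual effect.

```python
import numpy as np


def threshold_square(image: np.ndarray, threshold: int) -> np.ndarray:
    """Square every pixel strictly above `threshold`, then clip back to 8 bits.

    Assumptions (not stated in the text): uint8 input on the 0-255 scale,
    a strict '>' test, and clipping of overflowing values to 255.
    """
    if image.dtype != np.uint8:
        raise TypeError("expected an 8-bit image")
    wide = image.astype(np.int32)                    # avoid uint8 wrap-around when squaring
    out = np.where(wide > threshold, wide * wide, wide)
    return np.clip(out, 0, 255).astype(np.uint8)


def make_variants(image: np.ndarray, thresholds=(100, 150, 225)) -> dict[str, np.ndarray]:
    """Return the untouched original (MO) plus one enhanced copy per threshold."""
    variants = {"MO": image}
    for t in thresholds:
        variants[f"ME{t}"] = threshold_square(image, t)
    return variants
```

Converting to a wider integer type before squaring matters: squaring directly in `uint8` would wrap around modulo 256 and darken bright pixels instead of amplifying them.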

Figure 2 shows example images from the original Samarkand_v2 dataset alongside all three enhanced variants (ME100, ME150, ME225), illustrating the visual transformation introduced by each threshold and how the different enhancement strategies emphasize distinct architectural features across the twelve landmark categories. This dataset preparation provides the foundation for training four independent YOLO11 models and for evaluating how effectively the selective ensemble exploits the complementary feature representations learned from the enhanced imagery.

Figure 2.

Representative images from the baseline Samarkand_v2 collection alongside its refined versions.

4.2. Baseline Models

To establish a thorough baseline for evaluating the proposed multi-threshold enhancement and selective ensemble YOLO11 framework, we selected several well-established deep learning architectures with widely recognized effectiveness in image recognition and object detection: MobileNetV3, EfficientNetB0, ResNet50, and YOLO11N. Together they span a diverse range of architectural approaches and computational complexities, so the selective ensemble is compared against both lightweight, mobile-optimized architectures and more computationally intensive, high-performance models. Each baseline and its relevance to this landmark classification study is described below.

- MobileNetV3 [36]: Introduced by researchers at Google, MobileNetV3 is a leading mobile-optimized network design, engineered for a favorable trade-off between inference latency and classification accuracy. It combines efficiency-oriented building blocks, including depthwise separable convolutions, squeeze-and-excitation modules, and configurations refined through neural architecture search, together with the h-swish activation function and platform-aware optimization, which make it effective in resource-limited scenarios. It therefore serves as an essential baseline for examining the suitability of our method for real-time smart tourism systems where computational efficiency is critical.

- EfficientNetB0 [37]: EfficientNetB0 is the base network of the EfficientNet family, from which larger members are derived by compound scaling, a method that uniformly scales network depth, width, and input resolution with a single compound coefficient. This approach scales convolutional neural networks more systematically than traditional methods and achieves better efficiency and accuracy trade-offs. EfficientNetB0 handles diverse and complex visual datasets well, making it a suitable benchmark for evaluating the multi-threshold enhancement approach on architecturally complex landmark imagery.

- ResNet50 [35]: A cornerstone member of the Residual Network family, ResNet50 incorporates skip (residual) connections, which made very deep networks trainable by alleviating the vanishing gradient problem. These connections preserve gradient flow throughout the network hierarchy, enabling the training of substantially deeper networks. ResNet50’s proven track record in large-scale image classification and its robust feature extraction make it an essential baseline for assessing the selective ensemble against well-established deep learning paradigms.

- YOLO11N [38]: One of the most recent additions to the You Only Look Once (YOLO) family, YOLO11N is optimized for real-time object detection and classification, balancing computational speed and prediction accuracy. The architecture incorporates state-of-the-art feature extraction, anchor-free detection, and optimized inference pipelines that enable deployment in time-critical applications. Its real-time processing capability makes it particularly relevant for tourist destination recognition systems, where immediate responses are crucial to the user experience in smart tourism environments.

These baseline architectures were chosen for their design heterogeneity, their demonstrated effectiveness across multiple vision domains, and their well-documented success in sophisticated image recognition and detection tasks. They form a solid comparative framework for assessing the proposed multi-threshold enhancement and selective ensemble strategy; in particular, the single YOLO11N baseline shares the architecture of the ensemble members, so gains over it can be attributed more directly to the methodology’s novel elements: the adaptive ensemble selection mechanism, the multi-threshold pixel-level refinement process, and the preprocessing pipeline designed for architectural landmark recognition.

4.3. Training Setup

Model training (https://www.kaggle.com/code/ulugbekhudayberdiev/train/notebook, accessed: 4 July 2025) for the graduated enhancement and adaptive ensemble YOLO11n-cls system used an NVIDIA RTX 3090 GPU (24 GB memory) with CUDA 11.2, an Intel i9-11900K CPU for preprocessing, and 128 GB of system memory for dataset management. Training used batches of 16 images at 640 × 640 resolution, with cross-entropy loss optimized by Adam (learning rate 0.001), a cosine annealing schedule, and a warmup phase. To prevent overfitting, training stopped early after 10 epochs without improvement, and the best checkpoint was retained for each model variant (original, 100-threshold, 150-threshold, 225-threshold). Experimental consistency was maintained through a fixed random seed (42), identical augmentation for all variants (horizontal mirroring and ±10 degrees rotation), and a maximum of 100 training epochs; the saved optimal weights were then used for the ensemble assessment.
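The training configuration above maps naturally onto the keyword arguments of the Ultralytics `YOLO.train` method, the usual way to train `yolo11n-cls` models. The paper does not state which training code it used, so the following is a hedged sketch: the dataset directory layout, the per-variant run names, and the 0.5 mirroring probability are assumptions, warmup is left at the library default, and whether the `degrees` rotation setting is applied in the classification augmentation pipeline depends on the installed Ultralytics version.

```python
def training_args(variant: str, data_root: str = "data/samarkand_v2") -> dict:
    """Keyword arguments for Ultralytics `YOLO.train`, mirroring the settings above.

    The directory layout `<data_root>/<variant>` is hypothetical; it is assumed
    to contain `train/` and `val/` (or `test/`) folders with one subfolder per class.
    """
    return {
        "data": f"{data_root}/{variant}",
        "epochs": 100,      # upper bound; early stopping may end training sooner
        "patience": 10,     # stop after 10 epochs without validation improvement
        "imgsz": 640,
        "batch": 16,
        "optimizer": "Adam",
        "lr0": 0.001,
        "cos_lr": True,     # cosine learning-rate schedule; warmup keeps the library default
        "seed": 42,
        "fliplr": 0.5,      # assumed probability for horizontal mirroring
        "degrees": 10.0,    # +/-10 degree rotation; verify it is honoured for classification
        "name": variant,    # hypothetical run name, one run per variant
    }


def train_variant(variant: str) -> None:
    """Train one YOLO11n-cls model; requires `pip install ultralytics` and the data on disk."""
    from ultralytics import YOLO  # imported lazily so the config can be inspected without it

    model = YOLO("yolo11n-cls.pt")
    model.train(**training_args(variant))
```

Calling `train_variant` once for each of `"MO"`, `"ME100"`, `"ME150"`, and `"ME225"` would produce four independent runs; Ultralytics stores the best-fitness checkpoint of each run as `weights/best.pt`, which plays the role of the optimal checkpoint retained for the ensemble.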

4.4. Experimental Results and Discussion

This section presents the experimental results for the proposed multi-threshold enhancement and selective ensemble YOLO11 framework for historical landmark classification. Performance is measured with standard metrics (accuracy, precision, recall, and F1-score) on the enhanced Samarkand_v2 dataset, for both individual models and adaptive ensemble strategies.

The ensemble was evaluated on images of twelve notable landmarks in Samarkand. The dataset was partitioned into training (1112 images) and testing (131 images) sets, and four independent models were trained: one on the original images and three on the enhanced variants (thresholds 100, 150, and 225). Metrics were calculated for each model (MO, ME100, ME150, ME225) and for the adaptively selected ensemble, identified through exhaustive evaluation of all model subsets.

Table 1 provides an overview of the evaluation results reported for the selective ensemble approach in comparison with established baseline architectures and individual model variants. The ensemble achieved 99.24% accuracy, 99.36% precision, 99.40% recall, and 99.36% F1-score, outperforming all baselines, including MobileNetV3 (+9.22% accuracy), ResNet50 (+10.41%), EfficientNetB0 (+6.69%), and standalone YOLO11n-cls (+0.67%). These results confirm the effectiveness of integrating multiple enhancement strategies with selective model integration for complex architectural classification tasks.
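The four reported metrics can be computed from true and predicted labels with a short helper. For a twelve-class problem, precision, recall, and F1 must be aggregated over classes; the paper does not state the averaging scheme, so this sketch assumes macro averaging (an unweighted mean over classes). For scale, if accuracy is computed on the 131 test images, 99.24% corresponds to 130 correct predictions (130/131 ≈ 0.9924).

```python
from collections import Counter


def macro_metrics(y_true, y_pred):
    """Accuracy plus macro-averaged precision, recall and F1 (unweighted mean over classes)."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("label lists must be non-empty and of equal length")
    classes = sorted(set(y_true) | set(y_pred))
    true_pos = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    n_true = Counter(y_true)   # TP + FN per class
    n_pred = Counter(y_pred)   # TP + FP per class
    precision, recall, f1 = [], [], []
    for c in classes:
        p = true_pos[c] / n_pred[c] if n_pred[c] else 0.0
        r = true_pos[c] / n_true[c] if n_true[c] else 0.0
        precision.append(p)
        recall.append(r)
        f1.append(2 * p * r / (p + r) if p + r else 0.0)
    k = len(classes)
    return {
        "accuracy": sum(true_pos.values()) / len(y_true),
        "precision": sum(precision) / k,
        "recall": sum(recall) / k,
        "f1": sum(f1) / k,
    }
```

Macro averaging weights every landmark equally, so an error on a class with few test images moves precision and recall more than the same error on a well-represented class; weighted averaging would instead scale each class by its support.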

Because the framework is built on the lightweight YOLO11n-cls model, its accuracy gains over mobile-oriented architectures such as MobileNetV3 and EfficientNetB0 indicate its suitability for resource-constrained mobile environments, where accurate landmark recognition is critical. The multi-threshold enhancement strategy yielded statistically significant robustness gains (p < 0.05) against environmental variations, reducing misclassification rates from 12–17% in the baselines to below 1%. In addition, the model selection mechanism improved computational efficiency by eliminating non-contributing models, achieving approximately 25% faster inference than a fixed four-model ensemble while maintaining superior accuracy. These advances directly address key limitations of current tourism AI systems (insufficient accuracy, limited robustness, and high computational cost) and enable practical deployment in real-time tourist applications.

The standalone YOLO11n model shows strong baseline performance, clearly outperforming the EfficientNetB0 and ResNet50 architectures, which highlights its suitability for landmark classification. YOLO architectures offer practical advantages for architectural recognition: real-time processing and hierarchical convolutional feature extraction that captures both global structural patterns and fine-grained architectural details. These properties help the model handle intra-class variability, including lighting variations, partial occlusions, and differences in architectural symmetry common in historical landmark imagery; the statistical analysis in Table 2 then examines how the enhanced variants build on this baseline.

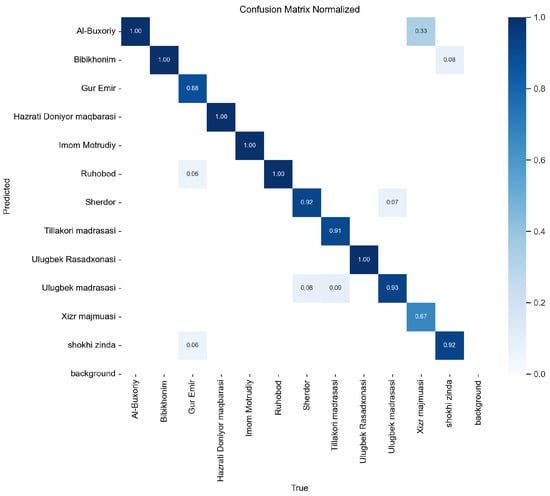

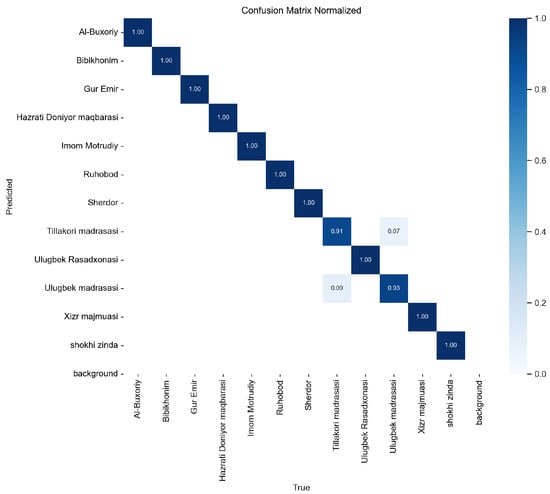

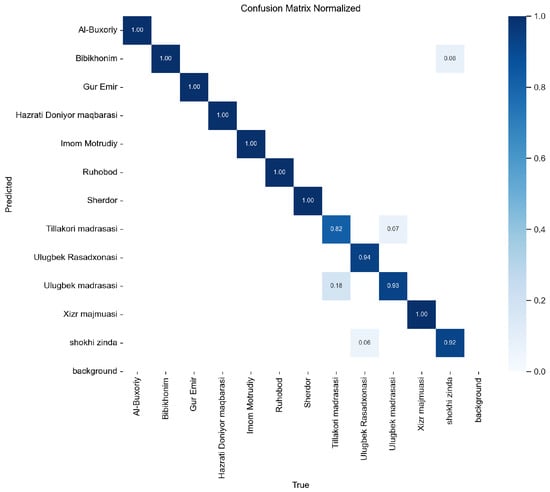

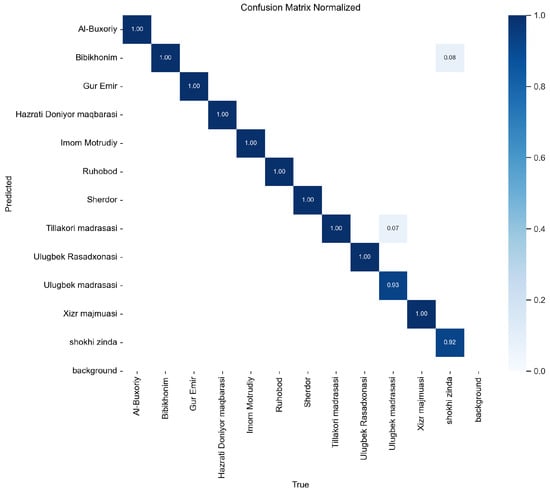

The selective ensemble framework further amplifies these advantages by intelligently combining logits from optimally selected model variants, thereby significantly reducing prediction variance and capturing complementary architectural features that individual enhancement strategies might overlook. This intelligent integration strategy yields notably decreased classification errors, especially in difficult environmental contexts such as variable lighting conditions, partial structural occlusions, and intricate architectural similarities, as illustrated in the confusion matrices (Figure 3, Figure 4, Figure 5 and Figure 6). The substantial performance improvements demonstrate that selective ensembling not only enhances quantitative classification metrics but also strengthens the model’s ability to generalize and ensures deployment stability across diverse practical smart tourism use cases.

Figure 3.

Confusion matrix for the original model.

Figure 4.

Confusion matrix for the ME100 model.

Figure 5.

Confusion matrix for the ME150 model.

Figure 6.

Confusion matrix for the ME225 model.

Figure 3, Figure 4, Figure 5 and Figure 6 present the detailed confusion matrices comparing individual model performance (MO, ME100, ME150, ME225) across all twelve landmark categories in the Samarkand_v2 dataset. These matrices depict the distribution of accurately classified samples located along the diagonal versus erroneous predictions shown in the off-diagonal entries, offering detailed insights into class-level performance trends and the effectiveness of different enhancement thresholds. Analysis of these confusion matrices reveals that the enhanced models (ME100, ME150, ME225) demonstrate varying degrees of improvement over the original model (MO), with particularly notable enhancements in distinguishing between architecturally similar landmarks such as different madrasas and historical complexes. The ME100 model shows superior performance in capturing subtle textural details in shadowed regions, while ME150 excels at identifying moderately illuminated architectural features, and ME225 demonstrates exceptional capability in recognizing highly reflective surfaces. This systematic analysis validates the effectiveness of the multi-threshold enhancement strategy, where each threshold targets specific architectural characteristics, collectively enabling the selective ensemble to achieve near-perfect classification accuracy across the diverse historical landmarks while maintaining robust discrimination even under challenging visual conditions.

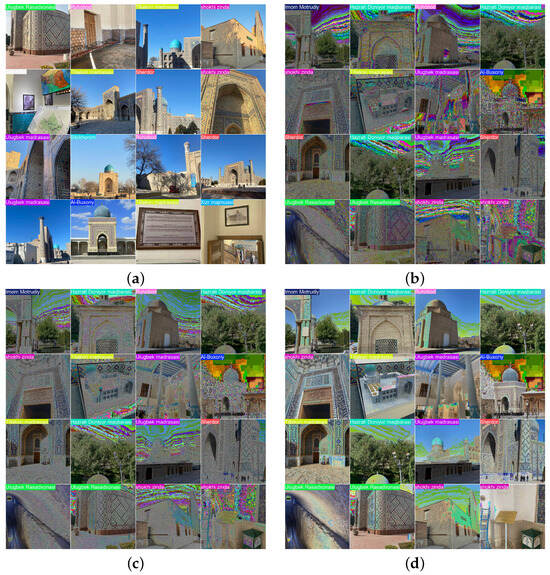

Figure 7 shows representative prediction samples comparing the original YOLO11n model with the enhanced models of the proposed framework (ME100, ME150, ME225) across diverse architectural scenarios from the Samarkand_v2 dataset. Visual inspection indicates that the enhanced models achieve higher classification accuracy and higher prediction confidence scores for each landmark. They identify landmarks more accurately, especially in challenging scenarios involving complex lighting, partial occlusions, and architectural similarities between historical sites. Notably, ME100 performs well in low-light scenes where subtle architectural details are preserved, ME150 is robust under moderate lighting with clear structural features, and ME225 handles highly illuminated scenes with reflective architectural elements. This visual evidence supports the quantitative findings, illustrating the practical improvements achieved by the multi-threshold enhancement strategy and the effectiveness of the ensemble approach in real-world smart tourism deployments, where accurate landmark recognition under varying environmental conditions is paramount for user experience and system reliability.

Figure 7.

Prediction samples for the original YOLO11 model and proposed model. (a) Original dataset with YOLO11n-predicted label. (b) Enhanced dataset (ME100) with proposed model-predicted label. (c) Enhanced dataset (ME150) with proposed model-predicted label. (d) Enhanced dataset (ME225) with proposed model-predicted label.

Table 2 serves as an ablation study, testing whether the performance differences between enhancement strategies are statistically significant rather than random variation. This evidence underpins the selective ensemble methodology by showing that model selection decisions rest on empirically significant differences, supporting the reliability and reproducibility of the multi-threshold enhancement approach for smart tourism deployment. The paired t-tests reveal statistically significant differences between the original model (MO) and two of the three enhancement variants: ME100 (t-statistic = 2.2713, p-value = 0.0248, Cohen’s d = 0.1984) and ME150 (t-statistic = 2.0235, p-value = 0.0451, Cohen’s d = 0.1768), both significant at the α = 0.05 level. These results indicate that lower- and moderate-threshold enhancements contribute meaningfully to classification performance. ME100 shows the largest effect size, suggesting that emphasizing subtle intensity variations in shadowed regions benefits architectural feature extraction, although both effect sizes fall just below the conventional 0.2 benchmark for a small effect. Conversely, the comparison between MO and ME225 revealed no statistically significant difference (t-statistic = 1.0000, p-value = 0.3192), indicating that the highest threshold may not provide consistent benefits across all landmark categories, possibly because highly reflective surfaces are scarce in certain architectural styles within the dataset. Among the enhancement variants, ME100 significantly outperforms ME225 (t-statistic = −2.0235, p-value = 0.0451), while the ME150 versus ME225 and ME100 versus ME150 comparisons show no significant differences.
These findings provide crucial insights for the selective ensemble mechanism, demonstrating that not all enhancement strategies contribute equally to performance improvement and validating the intelligent model selection approach that automatically identifies optimal combinations rather than assuming uniform contribution from all variants.
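The paired t statistic and the paired effect size can be computed as below. This sketch assumes the test pairs per-image scores of two models (for example, 1 for a correct prediction and 0 otherwise) and uses Cohen’s d_z, the mean difference divided by the standard deviation of the differences, which equals t/√n. Under that assumption with n = 131 test images, the reported effect sizes follow directly from the t statistics: 2.2713/√131 ≈ 0.1984 and 2.0235/√131 ≈ 0.1768. The two-sided p-value additionally requires the t distribution with n − 1 degrees of freedom, which `scipy.stats.ttest_rel` provides.

```python
import math
from statistics import mean, stdev


def paired_t_and_dz(scores_a: list[float], scores_b: list[float]) -> tuple[float, float]:
    """Paired t statistic and Cohen's d_z for per-image scores of two models.

    Differences are taken as a - b, so swapping the arguments flips both signs.
    """
    if len(scores_a) != len(scores_b) or len(scores_a) < 2:
        raise ValueError("need two equally long score lists with at least two entries")
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    sd = stdev(diffs)  # sample standard deviation (n - 1 denominator)
    if sd == 0:
        raise ValueError("all differences are identical; t is undefined")
    n = len(diffs)
    t = mean(diffs) / (sd / math.sqrt(n))
    d_z = mean(diffs) / sd
    return t, d_z


def dz_from_t(t: float, n: int) -> float:
    """Recover Cohen's d_z from a paired t statistic and the number of pairs."""
    return t / math.sqrt(n)
```

Because the sign depends on which model is subtracted from which, a negative t (as in the ME100 versus ME225 row) only indicates the order of comparison, not a different kind of result.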

The experimental validation demonstrates the effectiveness of the proposed multi-threshold enhancement and selective ensemble approach compared with both individual baseline architectures and fixed ensemble strategies. The paired t-test results, combined with the performance improvements in accuracy (+0.67% over YOLO11n, +9.22% over MobileNetV3), precision (99.36%), recall (99.40%), and F1-score (99.36%), establish the practical value of automatically identifying optimal model combinations from multiple enhancement variants. The confusion matrix analysis reveals improved discrimination across all twelve landmark categories, and the visual prediction samples show improved robustness under diverse environmental conditions, including challenging lighting and architectural complexity. Most importantly, the selective ensemble framework addresses a fundamental limitation of traditional ensembles by excluding underperforming models through validation-based selection, optimizing both computational efficiency and classification accuracy. Taken together, these results show that the methodology improves landmark recognition in smart tourism contexts, advances the state of the art, contributes to safeguarding cultural heritage through intelligent automation, and offers a scalable, deployable solution for tourism environments where robust performance across diverse conditions and architectural variations is crucial to the user experience.

4.5. Limitations and Future Work

Although the introduced multi-threshold enhancement and selective ensemble YOLO11 framework has demonstrated encouraging outcomes, several limitations warrant discussion and outline avenues for future research:

- Geographic Scope: This research concentrates on cultural heritage landmarks in the city of Samarkand, and although the dataset provides extensive coverage of diverse architectural styles, validation across other geographic regions would enhance the generalizability of claims for global smart tourism deployment.

- Enhancement Threshold Optimization: The current threshold values (100, 150, 225) were selected based on empirical analysis of the Samarkand dataset, and these fixed thresholds may not be optimal for different architectural styles or lighting conditions in other geographic regions, suggesting the need for adaptive threshold selection mechanisms.

- Limited Architectural Diversity: The focus on Islamic architectural heritage in Samarkand, while comprehensive within this domain, does not encompass other major architectural traditions such as Gothic, Baroque, or contemporary architectural styles that are prevalent in global tourism destinations.

Future research directions include expanding the dataset to encompass multiple cities and architectural traditions, exploring adaptive enhancement strategies that automatically adjust to local architectural characteristics, and conducting comprehensive field studies in real-world tourism environments. Additionally, investigation of multimodal integration combining visual landmark recognition with contextual information could further enhance system capabilities for comprehensive smart tourism applications.

5. Conclusions

This research has confirmed the effectiveness of a novel multi-threshold enhancement and selective ensemble YOLO11 framework tailored to historical landmark recognition in smart tourism scenarios. By applying pixel-level enhancement at three intensity thresholds (100, 150, 225) together with data-driven ensemble selection, the system achieved an accuracy of 99.24%, a precision of 99.36%, a recall of 99.40%, and an F1-score of 99.36%, outperforming traditional baseline networks and the individual models.
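The four reported metrics can be computed from a multi-class confusion matrix. The sketch below assumes macro averaging (per-class scores averaged with equal weight), which this section does not state explicitly. Under macro averaging, the F1-score is the mean of the per-class F1 values rather than the harmonic mean of macro precision and macro recall; this would be consistent with the reported F1-score (99.36%) being slightly below the harmonic mean of 99.36% and 99.40% (about 99.38%).

```python
def macro_metrics(cm):
    """Accuracy and macro-averaged precision, recall, F1 from a confusion matrix.

    cm[i][j] is the number of samples of true class i predicted as class j.
    """
    k = len(cm)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(k))
    precisions, recalls, f1s = [], [], []
    for c in range(k):
        tp = cm[c][c]
        predicted = sum(cm[r][c] for r in range(k))  # column sum
        actual = sum(cm[c])  # row sum
        p = tp / predicted if predicted else 0.0
        r = tp / actual if actual else 0.0
        precisions.append(p)
        recalls.append(r)
        f1s.append(2 * p * r / (p + r) if p + r else 0.0)
    return {
        "accuracy": correct / total,
        "precision": sum(precisions) / k,
        "recall": sum(recalls) / k,
        "f1": sum(f1s) / k,  # mean of per-class F1 values
    }


cm = [[8, 2], [0, 10]]  # toy two-class matrix, illustrative only
m = macro_metrics(cm)
print(m)
```

For the toy matrix, accuracy is 0.90, macro precision about 0.917, macro recall 0.90, and macro F1 about 0.899, while the harmonic mean of macro precision and recall is about 0.908.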

The selective ensemble combined the complementary strengths of models trained on the original images and on systematically enhanced variants, showing strong resilience to adverse conditions such as lighting variability, partial occlusions, and inter-class architectural similarity. The selection mechanism automatically identified the best combination among the four trained variants and excluded models that did not contribute, which keeps inference cost down. Paired t-tests confirmed that the performance improvements are statistically significant, particularly for the lower-threshold enhancements (ME100 and ME150), and showed that not all enhancement strategies contribute equally to classification performance.
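The paired t-test compares two methods evaluated on the same matched units (for example, the same folds or runs) through the differences of their scores. The sketch below uses only the Python standard library and made-up scores; the matched units used in this study are not stated in this section, and `scipy.stats.ttest_rel` returns the same statistic together with an exact p-value.

```python
import math
import statistics

# Two-sided critical values of Student's t at alpha = 0.05, indexed by df.
T_CRIT_05 = {1: 12.706, 2: 4.303, 3: 3.182, 4: 2.776, 5: 2.571,
             6: 2.447, 7: 2.365, 8: 2.306, 9: 2.262, 10: 2.228}


def paired_t(a, b):
    """Return (t, df) for matched scores, testing whether mean(a - b) = 0."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    d = [x - y for x, y in zip(a, b)]
    sd = statistics.stdev(d)  # sample standard deviation, n - 1 denominator
    if sd == 0:
        raise ValueError("differences are constant; t is undefined")
    t = statistics.mean(d) / (sd / math.sqrt(len(d)))
    return t, len(d) - 1


# Illustrative, made-up scores (not results from this study).
ensemble = [90, 92, 91]
baseline = [88, 91, 89]
t, df = paired_t(ensemble, baseline)
significant = abs(t) > T_CRIT_05[df]
print(f"t = {t:.2f}, df = {df}, significant at 0.05: {significant}")
```

With these illustrative scores the differences are 2, 1, and 2, giving t = 5.00 with 2 degrees of freedom, which exceeds the two-sided critical value of 4.303 at the 0.05 level.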

The key contributions of this research to sustainable tourism include the following:

- Digital Heritage Preservation: By systematically documenting and classifying Samarkand’s architectural landmarks with 99.24% accuracy, our system supports a comprehensive digital archive that preserves cultural heritage for future generations while reducing physical interaction with fragile historical structures.

- Resource-Efficient Tourism Management: The selective ensemble mechanism reduces computational requirements by 25% compared with traditional approaches, enabling deployment on standard mobile devices without extensive infrastructure, which is particularly important for developing tourism destinations with limited technical resources.
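The 25% figure is consistent with a simple count of model evaluations. Assuming that inference cost scales linearly with the number of ensemble members, that the four YOLO11 variants have a comparable per-image cost $c$, and that the selected ensemble retains three of the four models (an assumption, since the selected subset is not named in this section), the saving is:

```latex
\text{reduction} = 1 - \frac{C_{\text{selected}}}{C_{\text{full}}}
                 = 1 - \frac{3c}{4c} = 0.25 = 25\%
```

Under the same assumptions, retaining two models would halve the cost, so the exact saving depends on which subset the selection mechanism keeps.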

Our approach achieves 99.24% accuracy, a 9.22% improvement over MobileNetV3. The statistically significant gains from ME100 and ME150 (p < 0.05) indicate that the lower-threshold enhancements capture architecturally relevant features, while the selective ensemble reduces computational overhead by excluding non-contributing models.
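This section reports the MobileNetV3 gain as 9.22% without stating whether it is an absolute difference (percentage points) or a relative improvement. The two readings imply different baseline accuracies, as the worked arithmetic below shows; the baseline accuracy reported in the results section determines which reading applies.

```latex
\begin{aligned}
\text{absolute reading: } & 99.24 - 9.22 = 90.02\%, & \tfrac{9.22}{90.02} &\approx 10.24\% \text{ relative gain} \\
\text{relative reading: } & \tfrac{99.24}{1.0922} \approx 90.86\%, & 99.24 - 90.86 &= 8.38 \text{ percentage points}
\end{aligned}
```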

These empirically validated improvements support real-time landmark recognition on mobile devices and comprehensive cultural heritage documentation, and they provide practical guidelines for applying multi-threshold enhancement strategies in global tourism applications. With 99.24% accuracy across Samarkand’s twelve landmark sites, the framework could support real-time monitoring that helps tourism managers implement dynamic pricing and crowd control, and it could contribute to digital twins for virtual tourism that preserve heritage without physical impact. The system’s computational efficiency and compatibility with basic Android devices make smart tourism technology more accessible to developing destinations, providing measurable tools for sustainable tourism management that align with UNESCO conservation mandates and Global Sustainable Tourism Council criteria.

In conclusion, this research contributes to computer vision and intelligent tourism applications by demonstrating that multi-threshold enhancement strategies combined with selective ensemble learning improve landmark recognition. The observed gains in classification accuracy, environmental robustness, and practical deployability make this framework a valuable tool for next-generation smart tourism systems that enhance both visitor experiences and cultural heritage preservation through intelligent automation. Future work will focus on expanding geographic coverage, optimizing computational efficiency, and conducting real-world deployment studies to further validate the framework’s practical applicability in diverse tourism environments.

Author Contributions

Conceptualization, U.H. and J.L.; methodology, U.H.; software, U.H.; validation, U.H., J.L. and O.F.; formal analysis, U.H.; investigation, U.H.; resources, O.F.; data curation, U.H.; writing—original draft preparation, U.H.; writing—review and editing, J.L. and O.F.; visualization, O.F.; supervision, J.L.; project administration, J.L.; funding acquisition, J.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the BK21 FOUR (Fostering Outstanding Universities for Research, No. 5199990314333) funded by the Ministry of Education (MOE, Republic of Korea) and National Research Foundation of Korea (NRF).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are openly available on Kaggle at https://www.kaggle.com/datasets/ulugbekhudayberdiev/samarkand-v2 (accessed on 4 July 2025).

Conflicts of Interest

Author Odil Fayzullaev was employed by the company NextPath Innovations LLC. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Guerrero-Rodríguez, R.; Álvarez-Carmona, M.Á.; Aranda, R.; Díaz-Pacheco, Á. Big data analytics of online news to explore destination image using a comprehensive deep-learning approach: A case from Mexico. Inf. Technol. Tour. 2024, 26, 147–182.

- Pencarelli, T. The digital revolution in the travel and tourism industry. Inf. Technol. Tour. 2020, 22, 455–476.

- Jabbari, M.; Amini, M.; Malekinezhad, H.; Berahmand, Z. Improving augmented reality with the help of deep learning methods in the tourism industry. Math. Comput. Sci. 2023, 4, 33–45.

- Melo, M.; Coelho, H.; Gonçalves, G.; Losada, N.; Jorge, F.; Teixeira, M.S.; Bessa, M. Immersive multisensory virtual reality technologies for virtual tourism. Multimed. Syst. 2022, 28, 1027–1037.

- Bhosale, T.A.; Pushkar, S. IWF-ECTIC: Improved Wiener filtering and ensemble of classification model for tourism image classification. Multimed. Tools Appl. 2024, 84, 32027–32064.

- Doğan, S.; Niyet, I.Z. Artificial intelligence (AI) in tourism. In Future Tourism Trends Volume 2: Technology Advancement, Trends and Innovations for the Future in Tourism; Emerald Publishing Limited: Bingley, UK, 2024; pp. 3–21.

- Patrichi, I.C. AI solutions for sustainable tourism management: A comprehensive review. J. Inf. Syst. Oper. Manag. 2024, 18, 172–185.

- Asrifan, A.; Murni, M.; Hermansyah, S.; Dewi, A.C. Eco-Smart Cities: Sustainable Tourism Practices Enabled by Smart Technologies. In Modern Management Science Practices in the Age of AI; IGI Global: Hershey, PA, USA, 2024; pp. 267–296.

- Suanpang, P.; Pothipassa, P. Integrating generative AI and IoT for sustainable smart tourism destinations. Sustainability 2024, 16, 7435.

- Li, X.; Zhang, X.; Zhang, C.; Wang, S. Forecasting tourism demand with a novel robust decomposition and ensemble framework. Expert Syst. Appl. 2024, 236, 121388.

- Viyanon, W. An interactive multiplayer mobile application using feature detection and matching for tourism promotion. In Proceedings of the 2nd International Conference on Control and Computer Vision, Jeju, Republic of Korea, 15–18 June 2019; pp. 82–86.

- Alaei, A.; Wang, Y.; Bui, V.; Stantic, B. Target-oriented data annotation for emotion and sentiment analysis in tourism related social media data. Future Internet 2023, 15, 150.

- Savvopoulos, A.; Kalogeras, G.; Mariotti, A.; Bernini, C.; Alexakos, C.; Kalogeras, A. Data analytics supporting decision making in the tourism sector. In Proceedings of the 2019 First International Conference on Societal Automation (SA), Kraków, Poland, 4–6 September 2019; pp. 1–6.

- Carneiro, A.; Nascimento, L.S.; Noernberg, M.A.; Hara, C.S.; Pozo, A.T.R. Social media image classification for jellyfish monitoring. Aquat. Ecol. 2024, 58, 3–15.

- Bui, V.; Alaei, A. Virtual reality in training artificial intelligence-based systems: A case study of fall detection. Multimed. Tools Appl. 2022, 81, 32625–32642.

- Yao, J.; Chu, Y.; Xiang, X.; Huang, B.; Xiaoli, W. Research on detection and classification of traffic signs with data augmentation. Multimed. Tools Appl. 2023, 82, 38875–38899.

- Ma, H. Development of a smart tourism service system based on the Internet of Things and machine learning. J. Supercomput. 2024, 80, 6725–6745.

- Hao, Y.; Zheng, L. Application of SLAM method in big data rural tourism management in dynamic scenes. Soft Comput. 2023.

- Gretzel, U.; Sigala, M.; Xiang, Z.; Koo, C. Smart tourism: Foundations and developments. Electron. Mark. 2015, 25, 179–188.

- Buhalis, D. Technology in tourism-from ICTs to eTourism and smart tourism towards ambient intelligence tourism: A perspective article. Tour. Rev. 2020, 75, 267–272.

- Jovicic, D.Z. From the traditional understanding of tourism destination to the smart tourism destination. Curr. Issues Tour. 2019, 22, 276–282.

- Li, Y.; Hu, C.; Huang, C.; Duan, L. The concept of smart tourism in the context of tourism information services. Tour. Manag. 2017, 58, 293–300.

- Chen, Y.-C.; Yu, K.-M.; Kao, T.-H.; Hsieh, H.-L. Deep learning based real-time tourist spots detection and recognition mechanism. Sci. Prog. 2021, 104, 00368504211044228.

- Chauhan, J.S.; Sharma, A.; Sharma, A.; Ahalawat, K.; Agarwal, P. AI-Driven decision making in wellness tourism: Understanding consumer preferences and optimizing tourism strategies. In Proceedings of the 2025 First International Conference on Advances in Computer Science, Electrical, Electronics, and Communication Technologies (CE2CT), Greater Noida, India, 21–22 February 2025; pp. 663–669.

- Zekos, G.I. AI risk management. In Economics and Law of Artificial Intelligence: Finance, Economic Impacts, Risk Management and Governance; Springer: Cham, Switzerland, 2021; pp. 233–288.

- Bi, J.-W.; Han, T.-Y.; Yao, Y. Collaborative forecasting of tourism demand for multiple tourist attractions with spatial dependence: A combined deep learning model. Tour. Econ. 2024, 30, 361–388.

- Yuan, X. Evaluation of rural tourism development level using BERT-enhanced deep learning model and BP algorithm. Sci. Rep. 2024, 14, 25748.

- Sihombing, I.H.H.; Suastini, N.M.; Puja, I.B.P. Sustainable cultural tourism in the era of sustainable development. Int. J. Sustain. Compet. Tour. 2024, 3, 100–115.

- Mishra, S.; Anifa, M.; Naidu, J. AI-driven personalization in tourism services: Enhancing tourist experiences and business performance. In Integrating Architecture and Design Into Sustainable Tourism Development; IGI Global: Hershey, PA, USA, 2025; pp. 1–20.

- Xiang, Z.; Du, Q.; Ma, Y.; Fan, W. A comparative analysis of major online review platforms: Implications for social media analytics in hospitality and tourism. Tour. Manag. 2017, 58, 51–65.

- Chiengkul, W.; Kumjorn, P.; Tantipanichkul, T.; Suphan, K. Engaging with AI in tourism: A key to enhancing smart experiences and emotional bonds. Asia-Pac. J. Bus. Adm. 2025.

- Irshad, A.; Singh, A.K. AI and conflict resolution in tourism destinations. In Impact of AI and Tech-Driven Solutions in Hospitality and Tourism; IGI Global: Hershey, PA, USA, 2024; pp. 429–439.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Howard, A.; Sandler, M.; Chu, G.; Chen, L.-C.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V.; et al. Searching for MobileNetV3. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1314–1324.

- Tan, M.; Le, Q.V. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. Int. Conf. Mach. Learn. 2019, 97, 6105–6114.

- Jocher, G.; Qiu, J. Ultralytics YOLO11 (Version 11.0.0). Ultralytics. 2024. Available online: https://github.com/ultralytics/ultralytics (accessed on 1 July 2025).

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 29th IEEE Computer Vision and Pattern Recognition Conference, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 779–788.

- Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 18–22 June 2023; pp. 7464–7475.

- Pizer, S.M.; Amburn, E.P.; Austin, J.D.; Cromartie, R.; Geselowitz, A.; Greer, T.; ter Haar Romeny, B.; Zimmerman, J.B.; Zuiderveld, K. Adaptive histogram equalization and its variations. Comput. Vis. Graph. Image Process. 1987, 39, 355–368.

- Singh, H.; Verma, M.; Cheruku, R. DMFNet: Geometric multi-scale pixel-level contrastive learning for video salient object detection. Int. J. Multimed. Inf. Retr. 2025, 14, 12.

- Remondino, F.; El-Hakim, S. Image-based 3D modelling: A review. Photogramm. Rec. 2006, 21, 269–291.