Application of Deep Learning Technology in Monitoring Plant Attribute Changes

Abstract

1. Introduction

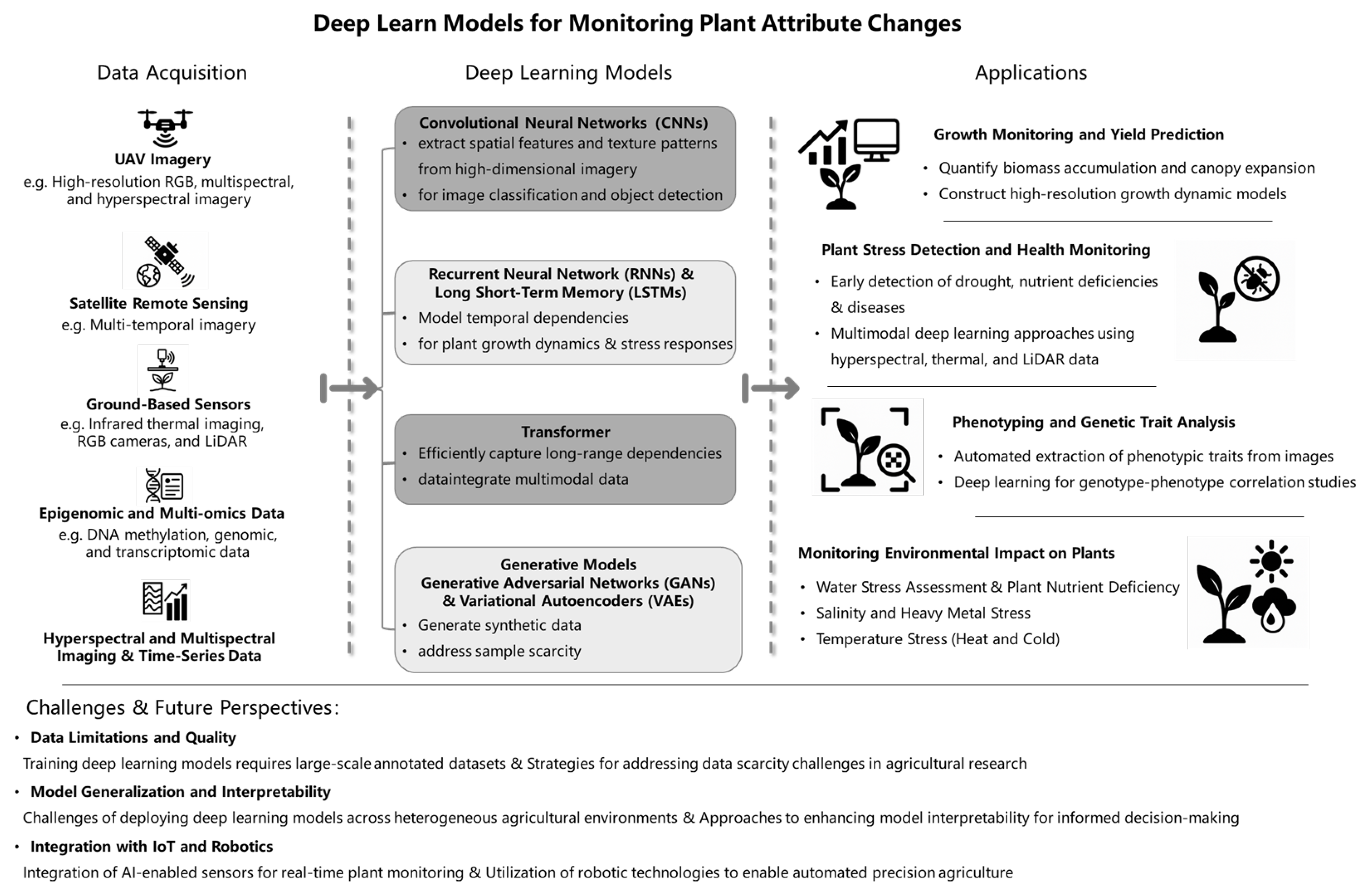

2. Deep Learning Models for Plant Attribute Monitoring

2.1. Convolutional Neural Networks (CNNs)

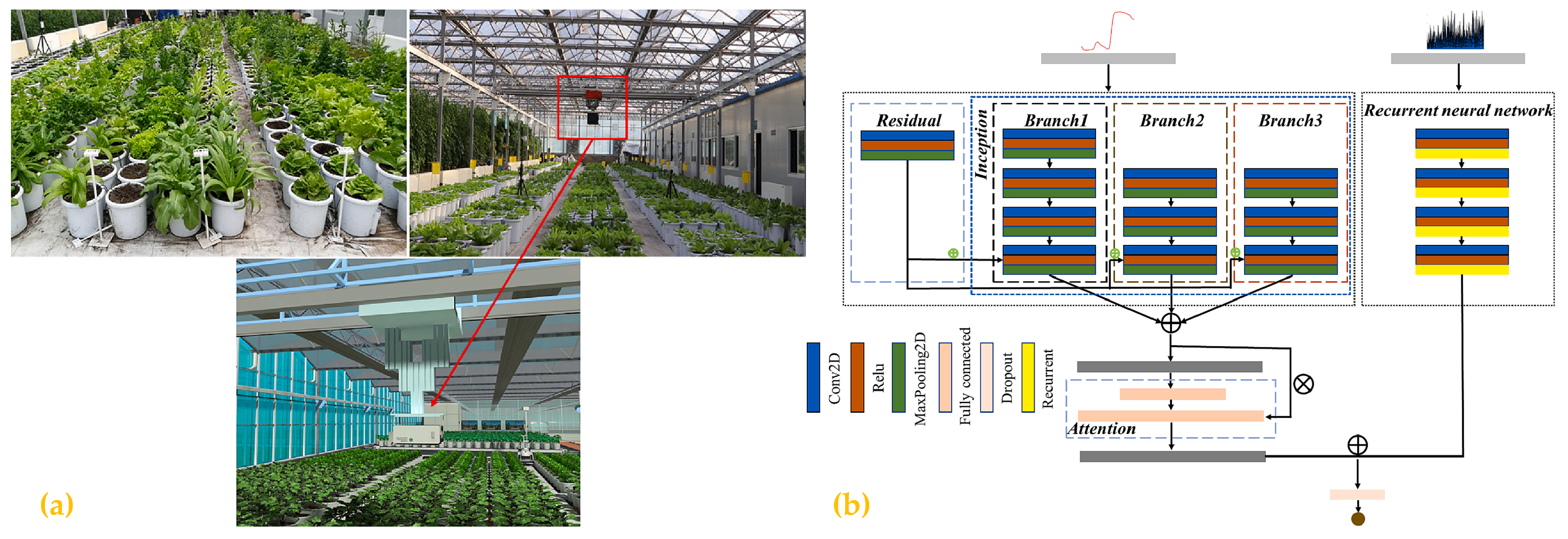

2.2. Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM)

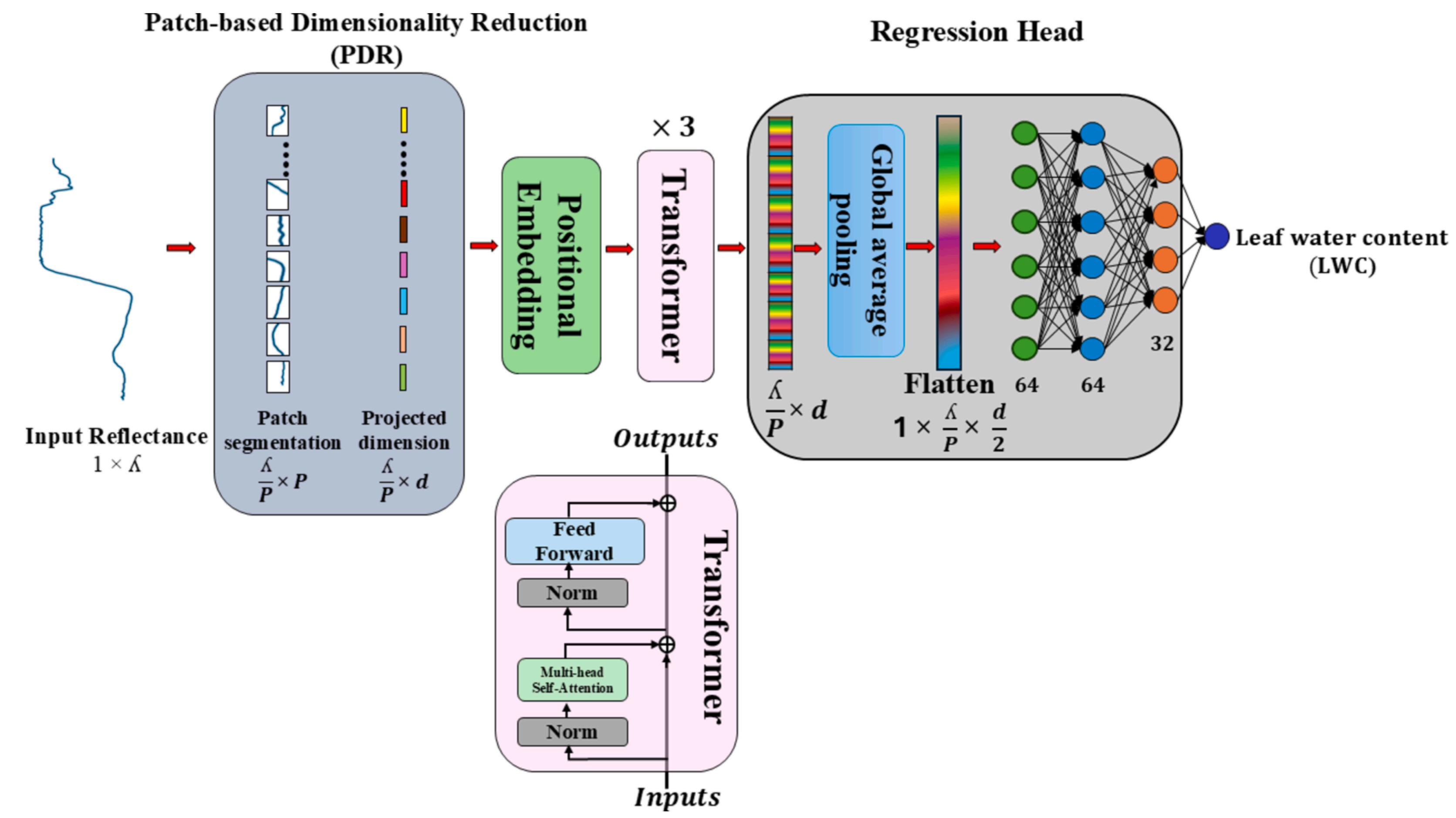

2.3. Transformer

2.4. Generative Models

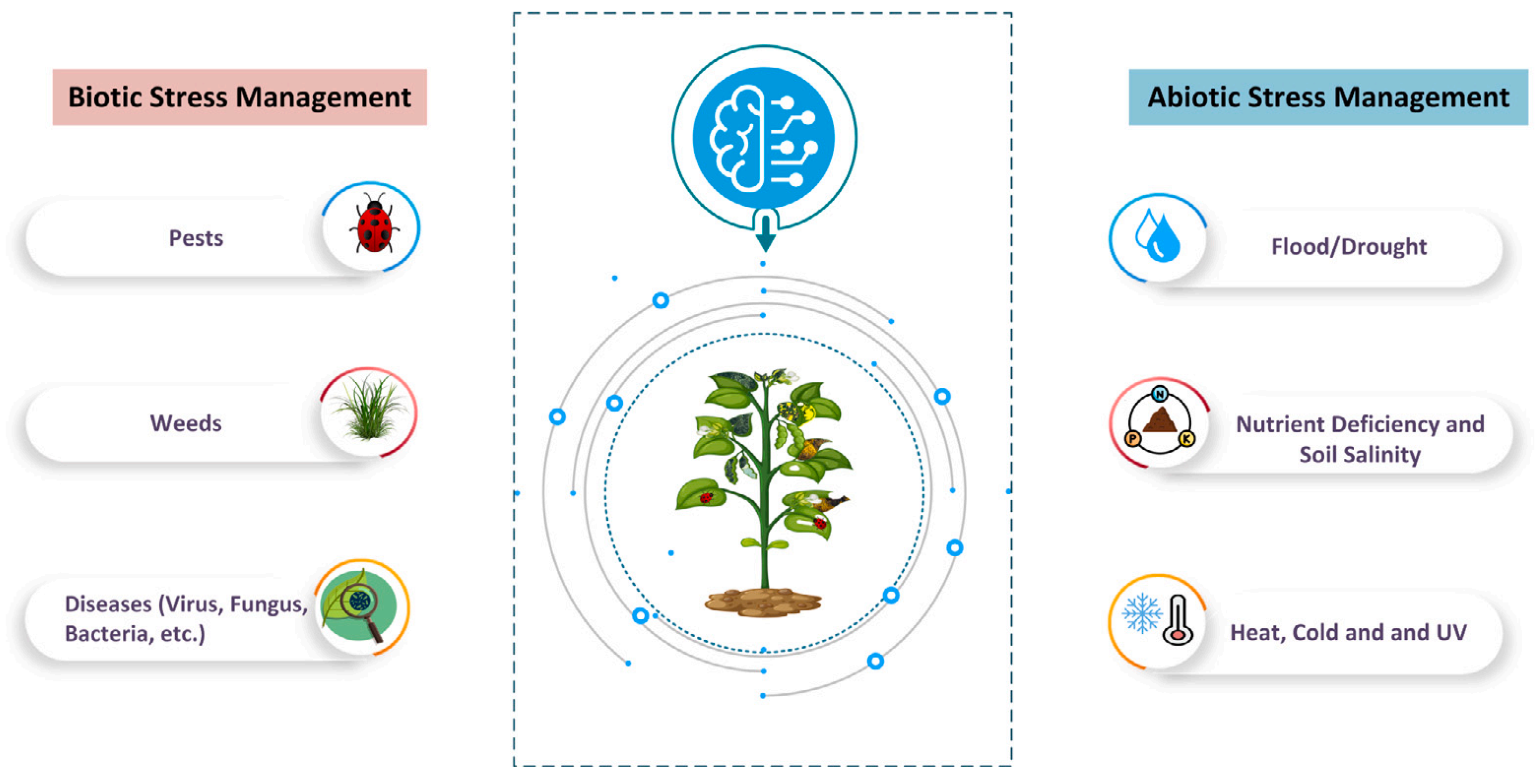

3. Applications of Deep Learning in Monitoring Plant Attributes

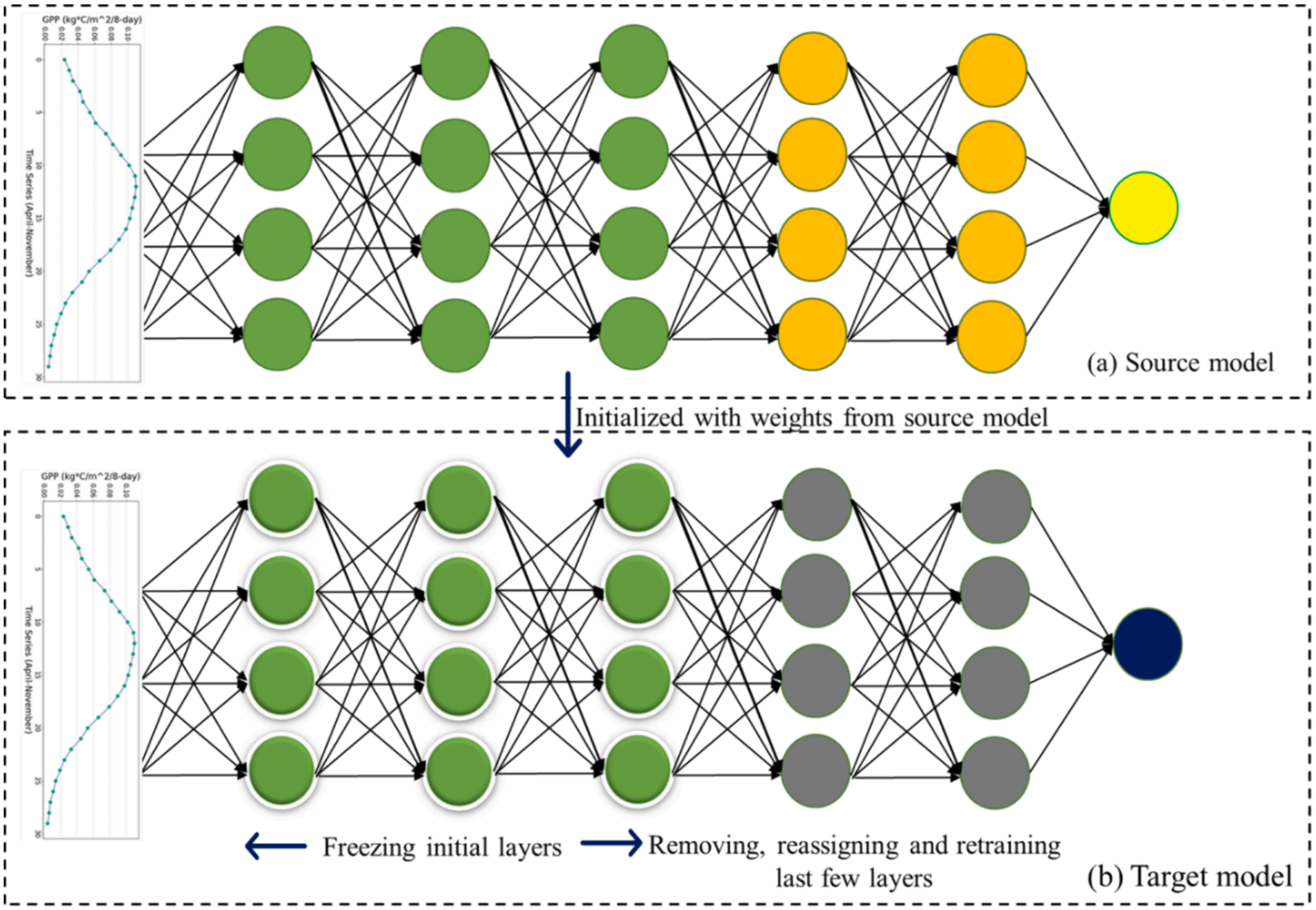

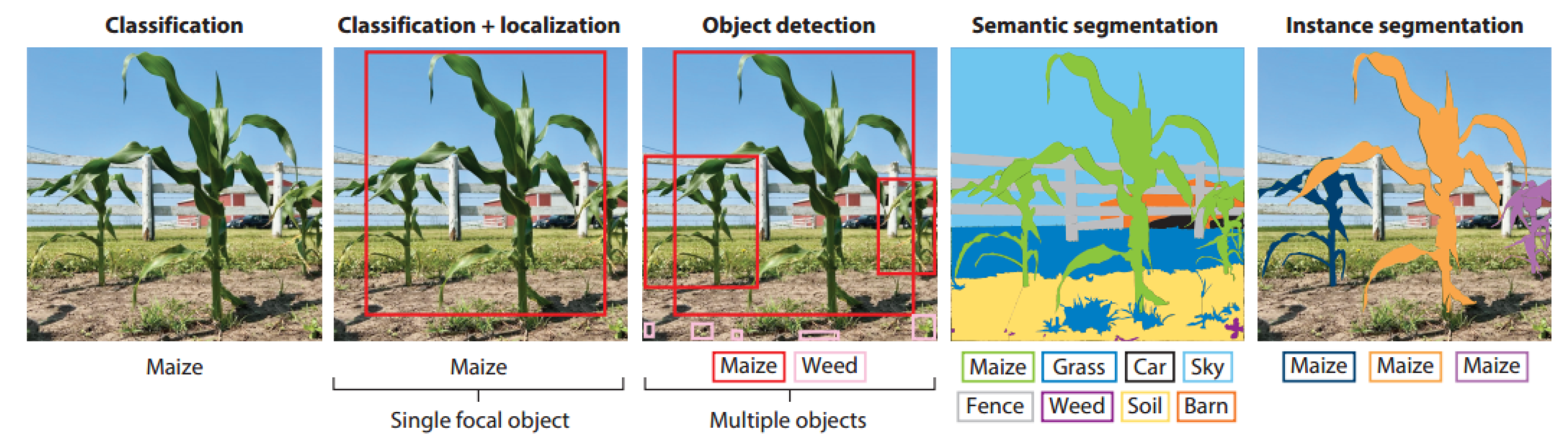

3.1. Growth Monitoring and Yield Prediction

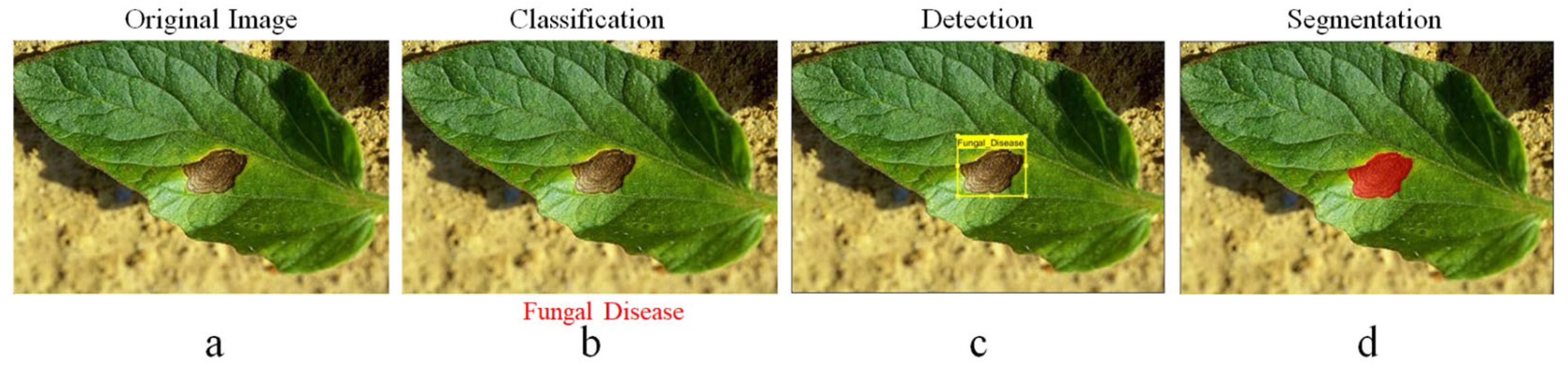

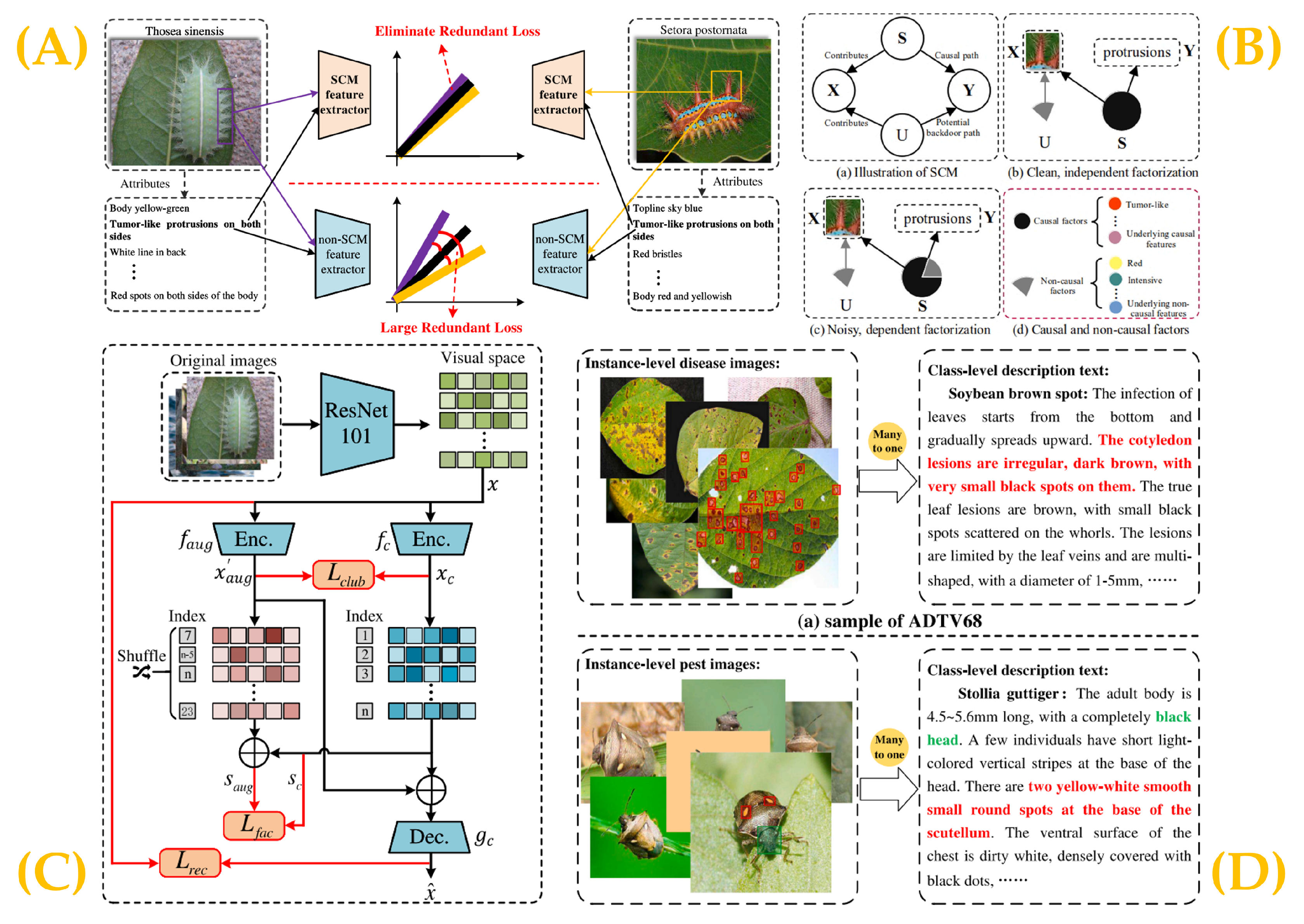

3.2. Disease and Pest Diagnosis

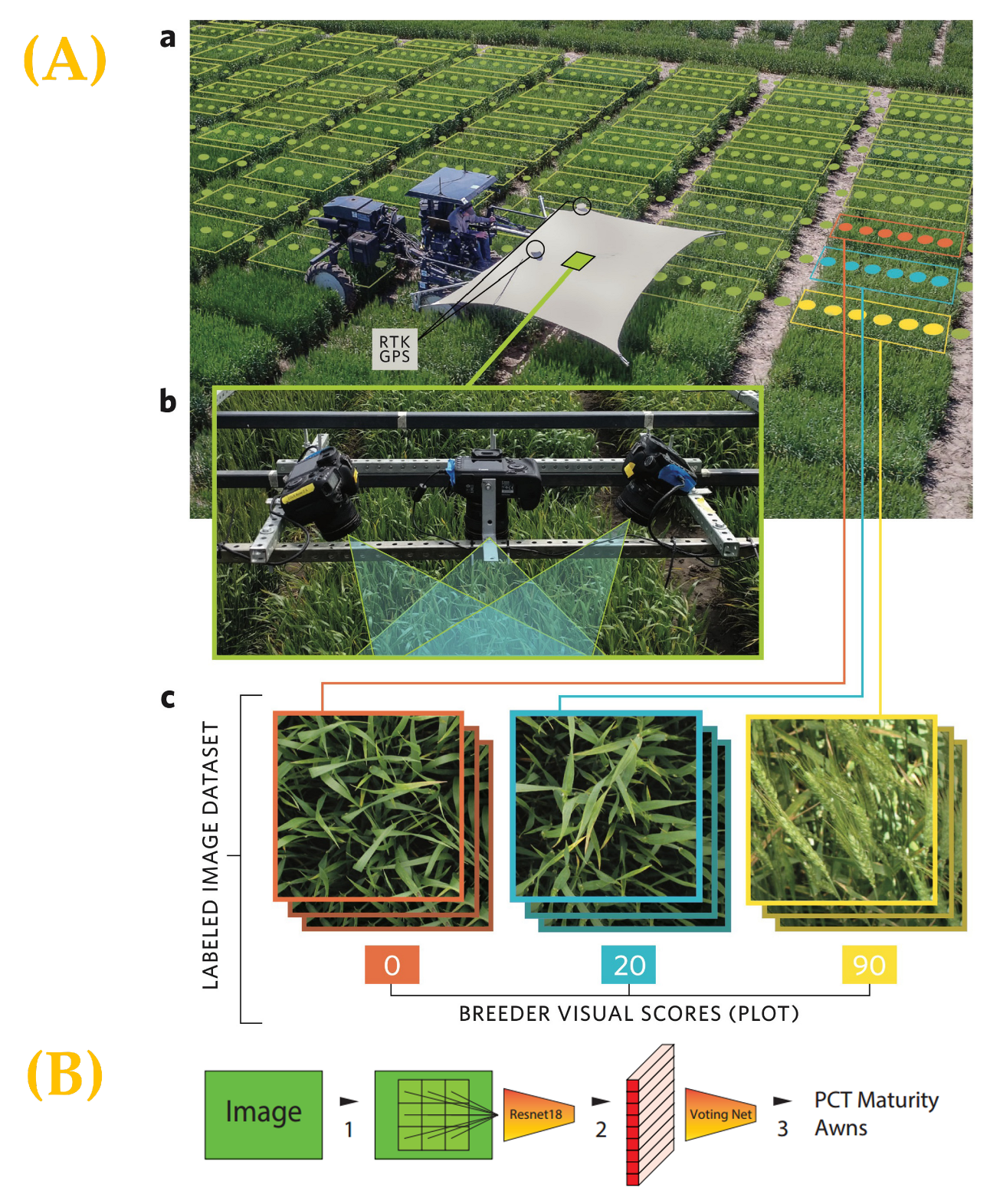

3.3. Phenotyping and Genetic Trait Analysis

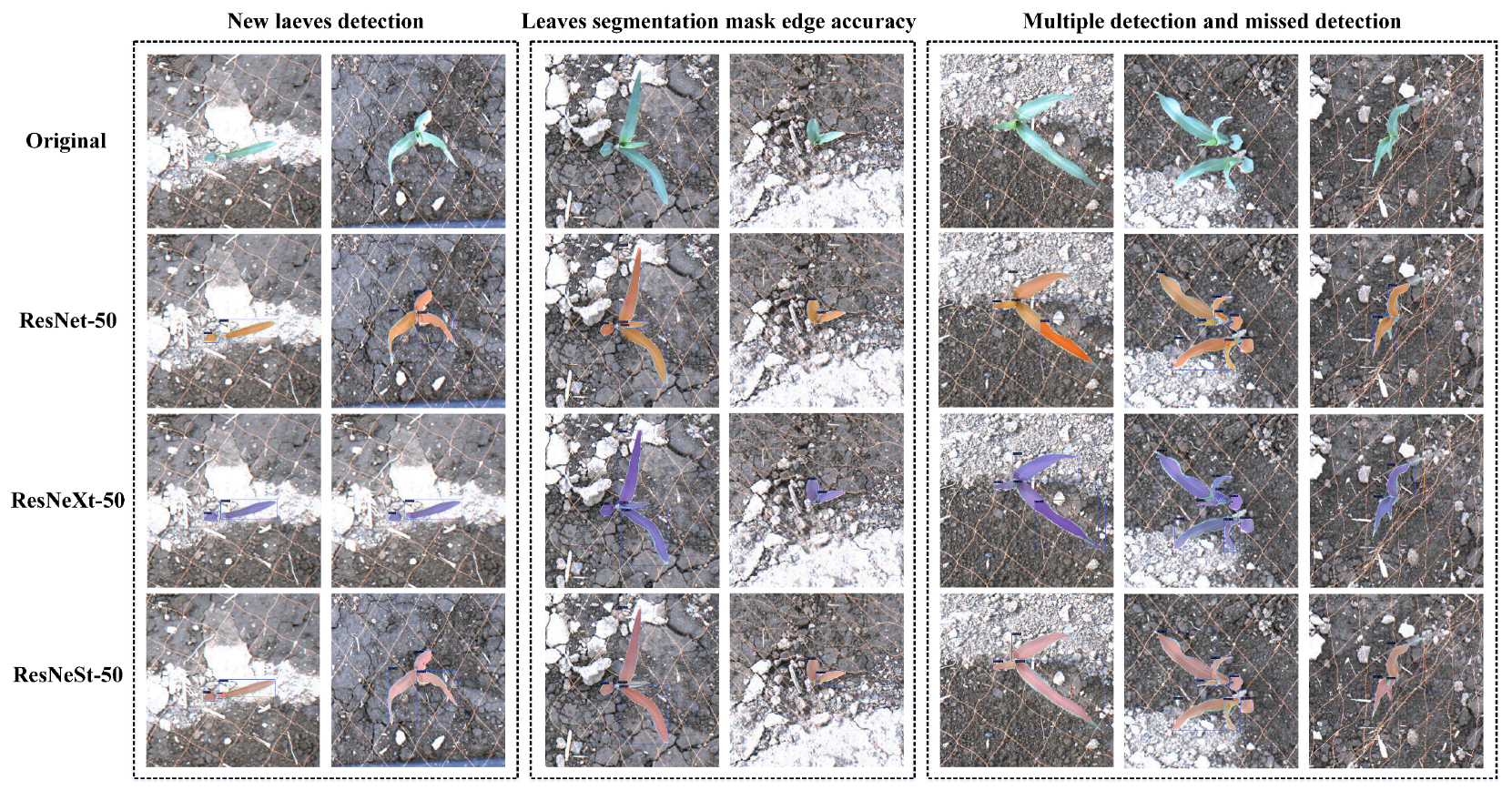

3.3.1. Automatic Extraction of Phenotypic Traits from Images

3.3.2. Applications of Deep Learning in Genotype–Phenotype Association

3.4. Monitoring the Environmental Impact on Plants

3.4.1. Water Stress Assessment

3.4.2. Nutrient Deficiency

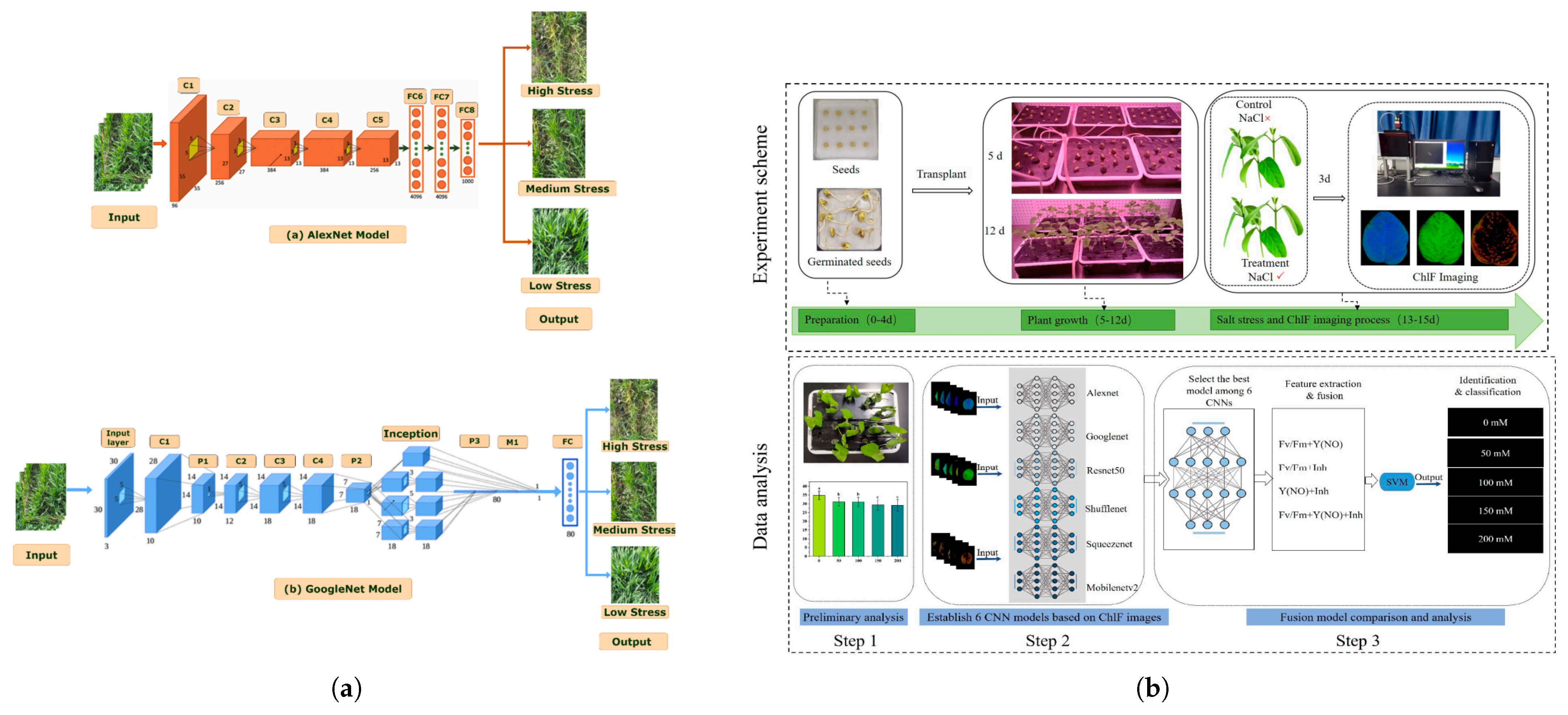

3.4.3. Salinity and Heavy Metal Stress

3.4.4. Temperature Stress

4. Discussion

4.1. Advantages

4.2. Challenges

4.3. Future Perspectives

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- He, L.; Shan, Y.q.; Liu, C.; Cao, H.; Liu, X.n.; Guo, Y. Prediction of bedload transport inside vegetation canopies with natural morphology. J. Hydrodyn. 2024, 36, 556–569. [Google Scholar] [CrossRef]

- Wang, R.F.; Qu, H.R.; Su, W.H. From Sensors to Insights: Technological Trends in Image-Based High-Throughput Plant Phenotyping. Smart Agric. Technol. 2025, 12, 101257. [Google Scholar] [CrossRef]

- He, Q.; Zhan, J.; Liu, X.; Dong, C.; Tian, D.; Fu, Q. Multispectral polarimetric bidirectional reflectance research of plant canopy. Opt. Lasers Eng. 2025, 184, 108688. [Google Scholar] [CrossRef]

- Pieruschka, R.; Schurr, U. Plant phenotyping: Past, present, and future. Plant Phenomics 2019, 2019, 7507131. [Google Scholar] [CrossRef] [PubMed]

- Yang, Z.Y.; Xia, W.K.; Chu, H.Q.; Su, W.H.; Wang, R.F.; Wang, H. A comprehensive review of deep learning applications in cotton industry: From field monitoring to smart processing. Plants 2025, 14, 1481. [Google Scholar] [CrossRef] [PubMed]

- Liao, Q.; Ding, R.; Du, T.; Kang, S.; Tong, L.; Li, S. Salinity-specific stomatal conductance model parameters are reduced by stomatal saturation conductance and area via leaf nitrogen. Sci. Total Environ. 2023, 876, 162584. [Google Scholar] [CrossRef]

- Scafaro, A.P.; Posch, B.C.; Evans, J.R.; Farquhar, G.D.; Atkin, O.K. Rubisco deactivation and chloroplast electron transport rates co-limit photosynthesis above optimal leaf temperature in terrestrial plants. Nat. Commun. 2023, 14, 2820. [Google Scholar] [CrossRef]

- Zavafer, A.; Bates, H.; Mancilla, C.; Ralph, P.J. Phenomics: Conceptualization and importance for plant physiology. Trends Plant Sci. 2023, 28, 1004–1013. [Google Scholar] [CrossRef]

- Hwang, Y.; Kim, J.; Ryu, Y. Canopy structural changes explain reductions in canopy-level solar induced chlorophyll fluorescence in Prunus yedoensis seedlings under a drought stress condition. Remote Sens. Environ. 2023, 296, 113733. [Google Scholar] [CrossRef]

- Meerdink, S.K.; Roberts, D.A.; King, J.Y.; Roth, K.L.; Gader, P.D.; Caylor, K.K. Using hyperspectral and thermal imagery to monitor stress of Southern California plant species during the 2013–2015 drought. ISPRS J. Photogramm. Remote Sens. 2025, 220, 580–592. [Google Scholar] [CrossRef]

- Polk, S.L.; Cui, K.; Plemmons, R.J.; Murphy, J.M. Active diffusion and VCA-assisted image segmentation of hyperspectral images. In Proceedings of the IGARSS 2022-2022 IEEE International Geoscience and Remote Sensing Symposium, Kuala Lumpur, Malaysia, 17–22 July 2022; IEEE: New York, NY, USA, 2022; pp. 1364–1367. [Google Scholar]

- Polk, S.L.; Cui, K.; Chan, A.H.; Coomes, D.A.; Plemmons, R.J.; Murphy, J.M. Unsupervised diffusion and volume maximization-based clustering of hyperspectral images. Remote Sens. 2023, 15, 1053. [Google Scholar] [CrossRef]

- Li, L.; Li, J.; Wang, H.; Georgieva, T.; Ferentinos, K.; Arvanitis, K.; Sygrimis, N. Sustainable energy management of solar greenhouses using open weather data on MACQU platform. Int. J. Agric. Biol. Eng. 2018, 11, 74–82. [Google Scholar] [CrossRef]

- Wang, H.; Mei, S.L. Shannon wavelet precision integration method for pathologic onion image segmentation based on homotopy perturbation technology. Math. Probl. Eng. 2014, 2014, 601841. [Google Scholar] [CrossRef]

- Yang, K.; Li, Y. Effects of water stress and fertilizer stress on maize growth and spectral identification of different stresses. Spectrochim. Acta Part A Mol. Biomol. Spectrosc. 2023, 297, 122703. [Google Scholar] [CrossRef] [PubMed]

- Ali, S.; Hassan, M.; Kim, J.Y.; Farid, M.I.; Sanaullah, M.; Mufti, H. FF-PCA-LDA: Intelligent feature fusion based PCA-LDA classification system for plant leaf diseases. Appl. Sci. 2022, 12, 3514. [Google Scholar] [CrossRef]

- Meerdink, S.; Roberts, D.; Hulley, G.; Gader, P.; Pisek, J.; Adamson, K.; King, J.; Hook, S.J. Plant species’ spectral emissivity and temperature using the hyperspectral thermal emission spectrometer (HyTES) sensor. Remote Sens. Environ. 2019, 224, 421–435. [Google Scholar] [CrossRef]

- Zhang, S. Challenges in KNN classification. IEEE Trans. Knowl. Data Eng. 2021, 34, 4663–4675. [Google Scholar] [CrossRef]

- Sai, K.; Sood, N.; Saini, I. Abiotic stress classification through spectral analysis of enhanced electrophysiological signals of plants. Biosyst. Eng. 2022, 219, 189–204. [Google Scholar] [CrossRef]

- Wang, Y.; Zhang, Q.; Yu, F.; Zhang, N.; Zhang, X.; Li, Y.; Wang, M.; Zhang, J. Progress in Research on Deep Learning-Based Crop Yield Prediction. Agronomy 2024, 14, 2264. [Google Scholar] [CrossRef]

- Zhou, G.; Wang, R.F. The Heterogeneous Network Community Detection Model Based on Self-Attention. Symmetry 2025, 17, 432. [Google Scholar] [CrossRef]

- Yao, M.; Huo, Y.; Ran, Y.; Tian, Q.; Wang, R.; Wang, H. Neural radiance field-based visual rendering: A comprehensive review. arXiv 2024, arXiv:2404.00714. [Google Scholar] [CrossRef]

- Kamilaris, A.; Prenafeta-Boldú, F.X. Deep learning in agriculture: A survey. Comput. Electron. Agric. 2018, 147, 70–90. [Google Scholar] [CrossRef]

- Tu, Y.-H.; Wang, R.-F.; Su, W.-H. Active Disturbance Rejection Control—New Trends in Agricultural Cybernetics in the Future: A Comprehensive Review. Machines 2025, 13, 111. [Google Scholar] [CrossRef]

- Sun, H.; Chu, H.Q.; Qin, Y.M.; Hu, P.; Wang, R.F. Empowering Smart Soybean Farming with Deep Learning: Progress, Challenges, and Future Perspectives. Agronomy 2025, 15, 1831. [Google Scholar] [CrossRef]

- Zhou, L.; Cai, J.; Ding, S. The identification of ice floes and calculation of sea ice concentration based on a deep learning method. Remote Sens. 2023, 15, 2663. [Google Scholar] [CrossRef]

- Wang, R.F.; Tu, Y.H.; Li, X.C.; Chen, Z.Q.; Zhao, C.T.; Yang, C.; Su, W.H. An Intelligent Robot Based on Optimized YOLOv11l for Weed Control in Lettuce. In Proceedings of the 2025 ASABE Annual International Meeting. American Society of Agricultural and Biological Engineers, Toronto, ON, Canada, 13–16 July 2025; p. 1. [Google Scholar]

- Wang, R.F.; Su, W.H. The application of deep learning in the whole potato production Chain: A Comprehensive review. Agriculture 2024, 14, 1225. [Google Scholar] [CrossRef]

- Zhao, C.T.; Wang, R.F.; Tu, Y.H.; Pang, X.X.; Su, W.H. Automatic lettuce weed detection and classification based on optimized convolutional neural networks for robotic weed control. Agronomy 2024, 14, 2838. [Google Scholar] [CrossRef]

- Wang, R.F.; Tu, Y.H.; Chen, Z.Q.; Zhao, C.T.; Su, W.H. A Lettpoint-Yolov11l Based Intelligent Robot for Precision Intra-Row Weeds Control in Lettuce. SSRN 2025. [Google Scholar] [CrossRef]

- Dong, S.; Wang, P.; Abbas, K. A survey on deep learning and its applications. Comput. Sci. Rev. 2021, 40, 100379. [Google Scholar] [CrossRef]

- Zhou, G.; Wang, R.F.; Cui, K. A Local Perspective-based Model for Overlapping Community Detection. arXiv 2025, arXiv:2503.21558. [Google Scholar] [CrossRef]

- Dargan, S.; Kumar, M.; Ayyagari, M.R.; Kumar, G. A survey of deep learning and its applications: A new paradigm to machine learning. Arch. Comput. Methods Eng. 2020, 27, 1071–1092. [Google Scholar] [CrossRef]

- Li, Z.; Sun, C.; Wang, H.; Wang, R.F. Hybrid optimization of phase masks: Integrating Non-Iterative methods with simulated annealing and validation via tomographic measurements. Symmetry 2025, 17, 530. [Google Scholar] [CrossRef]

- Li, Y.; Pan, L.; Peng, Y.; Li, X.; Wang, X.; Qu, L.; Song, Q.; Liang, Q.; Peng, S. Application of deep learning-based multimodal fusion technology in cancer diagnosis: A survey. Eng. Appl. Artif. Intell. 2025, 143, 109972. [Google Scholar] [CrossRef]

- de Souza Rodrigues, L.; Caixeta Filho, E.; Sakiyama, K.; Santos, M.F.; Jank, L.; Carromeu, C.; Silveira, E.; Matsubara, E.T.; Junior, J.M.; Goncalves, W.N. Deep4Fusion: A Deep FORage Fusion framework for high-throughput phenotyping for green and dry matter yield traits. Comput. Electron. Agric. 2023, 211, 107957. [Google Scholar] [CrossRef]

- Elavarasan, D.; Durai Raj Vincent, P. Fuzzy deep learning-based crop yield prediction model for sustainable agronomical frameworks. Neural Comput. Appl. 2021, 33, 13205–13224. [Google Scholar] [CrossRef]

- Singh, A.; Jones, S.; Ganapathysubramanian, B.; Sarkar, S.; Mueller, D.; Sandhu, K.; Nagasubramanian, K. Challenges and opportunities in machine-augmented plant stress phenotyping. Trends Plant Sci. 2021, 26, 53–69. [Google Scholar] [CrossRef]

- Wang, K.; Abid, M.A.; Rasheed, A.; Crossa, J.; Hearne, S.; Li, H. DNNGP, a deep neural network-based method for genomic prediction using multi-omics data in plants. Mol. Plant 2023, 16, 279–293. [Google Scholar] [CrossRef]

- Hossen, M.I.; Awrangjeb, M.; Pan, S.; Mamun, A.A. Transfer learning in agriculture: A review. Artif. Intell. Rev. 2025, 58, 97. [Google Scholar] [CrossRef]

- Cui, K.; Shao, Z.; Larsen, G.; Pauca, V.; Alqahtani, S.; Segurado, D.; Pinheiro, J.; Wang, M.; Lutz, D.; Plemmons, R.; et al. PalmProbNet: A Probabilistic Approach to Understanding Palm Distributions in Ecuadorian Tropical Forest via Transfer Learning. In Proceedings of the 2024 ACM Southeast Conference, Marietta, GA, USA, 18–20 April 2024; pp. 272–277. [Google Scholar]

- Khaki, S.; Wang, L.; Archontoulis, S.V. A CNN-RNN framework for crop yield prediction. Front. Plant Sci. 2020, 10, 1750. [Google Scholar] [CrossRef]

- Lu, W.; Du, R.; Niu, P.; Xing, G.; Luo, H.; Deng, Y.; Shu, L. Soybean yield preharvest prediction based on bean pods and leaves image recognition using deep learning neural network combined with GRNN. Front. Plant Sci. 2022, 12, 791256. [Google Scholar] [CrossRef]

- Zhuang, L.; Wang, C.; Hao, H.; Li, J.; Xu, L.; Liu, S.; Guo, X. Maize emergence rate and leaf emergence speed estimation via image detection under field rail-based phenotyping platform. Comput. Electron. Agric. 2024, 220, 108838. [Google Scholar] [CrossRef]

- El Sakka, M.; Ivanovici, M.; Chaari, L.; Mothe, J. A review of CNN applications in smart agriculture using multimodal data. Sensors 2025, 25, 472. [Google Scholar] [CrossRef] [PubMed]

- Huo, Y.; Yao, M.; Tian, Q.; Wang, T.; Wang, R.; Wang, H. FA-YOLO: Research On Efficient Feature Selection YOLO Improved Algorithm Based On FMDS and AGMF Modules. arXiv 2024, arXiv:2408.16313. [Google Scholar] [CrossRef]

- Peyal, H.I.; Nahiduzzaman, M.; Pramanik, M.A.H.; Syfullah, M.K.; Shahriar, S.M.; Sultana, A.; Ahsan, M.; Haider, J.; Khandakar, A.; Chowdhury, M.E. Plant disease classifier: Detection of dual-crop diseases using lightweight 2d cnn architecture. IEEE Access 2023, 11, 110627–110643. [Google Scholar] [CrossRef]

- Huo, Y.; Wang, R.F.; Zhao, C.T.; Hu, P.; Wang, H. Research on Obtaining Pepper Phenotypic Parameters Based on Improved YOLOX Algorithm. AgriEngineering 2025, 7, 209. [Google Scholar] [CrossRef]

- Turkoglu, M.O.; D’Aronco, S.; Wegner, J.D.; Schindler, K. Gating revisited: Deep multi-layer RNNs that can be trained. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 4081–4092. [Google Scholar] [CrossRef]

- Vural, N.M.; Ilhan, F.; Yilmaz, S.F.; Ergüt, S.; Kozat, S.S. Achieving online regression performance of LSTMs with simple RNNs. IEEE Trans. Neural Netw. Learn. Syst. 2021, 33, 7632–7643. [Google Scholar] [CrossRef]

- Basiri, M.E.; Nemati, S.; Abdar, M.; Cambria, E.; Acharya, U.R. ABCDM: An attention-based bidirectional CNN-RNN deep model for sentiment analysis. Future Gener. Comput. Syst. 2021, 115, 279–294. [Google Scholar] [CrossRef]

- Chen, J.; Zhuge, H. Extractive summarization of documents with images based on multi-modal RNN. Future Gener. Comput. Syst. 2019, 99, 186–196. [Google Scholar] [CrossRef]

- Dera, D.; Ahmed, S.; Bouaynaya, N.C.; Rasool, G. Trustworthy uncertainty propagation for sequential time-series analysis in rnns. IEEE Trans. Knowl. Data Eng. 2023, 36, 882–896. [Google Scholar] [CrossRef]

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780. [Google Scholar] [CrossRef]

- Tian, H.; Wang, P.; Tansey, K.; Han, D.; Zhang, J.; Zhang, S.; Li, H. A deep learning framework under attention mechanism for wheat yield estimation using remotely sensed indices in the Guanzhong Plain, PR China. Int. J. Appl. Earth Obs. Geoinf. 2021, 102, 102375. [Google Scholar] [CrossRef]

- Cheng, E.; Wang, F.; Peng, D.; Zhang, B.; Zhao, B.; Zhang, W.; Hu, J.; Lou, Z.; Yang, S.; Zhang, H.; et al. A GT-LSTM spatio-temporal approach for winter wheat yield prediction: From the field scale to county scale. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4409318. [Google Scholar] [CrossRef]

- Wang, L.; Zhang, H.; Bian, L.; Zhou, L.; Wang, S.; Ge, Y. Poplar seedling varieties and drought stress classification based on multi-source, time-series data and deep learning. Ind. Crops Prod. 2024, 218, 118905. [Google Scholar] [CrossRef]

- Chandel, N.S.; Rajwade, Y.A.; Dubey, K.; Chandel, A.K.; Subeesh, A.; Tiwari, M.K. Water stress identification of winter wheat crop with state-of-the-art AI techniques and high-resolution thermal-RGB imagery. Plants 2022, 11, 3344. [Google Scholar] [CrossRef] [PubMed]

- Ali, T.; Rehman, S.U.; Ali, S.; Mahmood, K.; Obregon, S.A.; Iglesias, R.C.; Khurshaid, T.; Ashraf, I. Smart agriculture: Utilizing machine learning and deep learning for drought stress identification in crops. Sci. Rep. 2024, 14, 30062. [Google Scholar] [CrossRef] [PubMed]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Advances in Neural Information Processing Systems, Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Curran Associates Inc.: Red Hook, NY, USA, 2017; Volume 30. [Google Scholar]

- Wang, Z.; Wang, R.; Wang, M.; Lai, T.; Zhang, M. Self-supervised transformer-based pre-training method with General Plant Infection dataset. In Proceedings of the Chinese Conference on Pattern Recognition and Computer Vision (PRCV), Urumqi, China, 18–20 October 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 189–202. [Google Scholar]

- Zhang, W.T.; Bai, Y.; Zheng, S.D.; Cui, J.; Huang, Z.z. Tensor Transformer for hyperspectral image classification. Pattern Recognit. 2025, 163, 111470. [Google Scholar] [CrossRef]

- Guo, Y.; Lan, Y.; Chen, X. CST: Convolutional Swin Transformer for detecting the degree and types of plant diseases. Comput. Electron. Agric. 2022, 202, 107407. [Google Scholar] [CrossRef]

- Rahman, M.H.; Busby, S.; Ru, S.; Hanif, S.; Sanz-Saez, A.; Zheng, J.; Rehman, T.U. Transformer-Based hyperspectral image analysis for phenotyping drought tolerance in blueberries. Comput. Electron. Agric. 2025, 228, 109684. [Google Scholar] [CrossRef]

- Yao, M.; Huo, Y.; Tian, Q.; Zhao, J.; Liu, X.; Wang, R.; Xue, L.; Wang, H. FMRFT: Fusion mamba and DETR for query time sequence intersection fish tracking. Comput. Electron. Agric. 2025, 237, 110742. [Google Scholar] [CrossRef]

- Wu, A.Q.; Li, K.L.; Song, Z.Y.; Lou, X.; Hu, P.; Yang, W.; Wang, R.F. Deep Learning for Sustainable Aquaculture: Opportunities and Challenges. Sustainability 2025, 17, 5084. [Google Scholar] [CrossRef]

- Akkem, Y.; Biswas, S.K.; Varanasi, A. A comprehensive review of synthetic data generation in smart farming by using variational autoencoder and generative adversarial network. Eng. Appl. Artif. Intell. 2024, 131, 107881. [Google Scholar] [CrossRef]

- Wang, K.; Gou, C.; Duan, Y.; Lin, Y.; Zheng, X.; Wang, F.Y. Generative adversarial networks: Introduction and outlook. IEEE/CAA J. Autom. Sin. 2017, 4, 588–598. [Google Scholar] [CrossRef]

- Pu, Y.; Gan, Z.; Henao, R.; Yuan, X.; Li, C.; Stevens, A.; Carin, L. Variational autoencoder for deep learning of images, labels and captions. In Advances in Neural Information Processing Systems, Proceedings of the 30th International Conference on Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; Curran Associates Inc.: Red Hook, NY, USA, 2016; Volume 29. [Google Scholar]

- Miranda, M.; Zabawa, L.; Kicherer, A.; Strothmann, L.; Rascher, U.; Roscher, R. Detection of anomalous grapevine berries using variational autoencoders. Front. Plant Sci. 2022, 13, 729097. [Google Scholar] [CrossRef] [PubMed]

- Creswell, A.; White, T.; Dumoulin, V.; Arulkumaran, K.; Sengupta, B.; Bharath, A.A. Generative adversarial networks: An overview. IEEE Signal Process. Mag. 2018, 35, 53–65. [Google Scholar] [CrossRef]

- Gui, J.; Sun, Z.; Wen, Y.; Tao, D.; Ye, J. A review on generative adversarial networks: Algorithms, theory, and applications. IEEE Trans. Knowl. Data Eng. 2021, 35, 3313–3332. [Google Scholar] [CrossRef]

- Yu, H.; Welch, J.D. MichiGAN: Sampling from disentangled representations of single-cell data using generative adversarial networks. Genome Biol. 2021, 22, 158. [Google Scholar] [CrossRef]

- Sun, R.; Huang, C.; Zhu, H.; Ma, L. Mask-aware photorealistic facial attribute manipulation. Comput. Vis. Media 2021, 7, 363–374. [Google Scholar] [CrossRef]

- Qin, C.; Liu, J.; Ma, S.; Du, J.; Jiang, G.; Zhao, L. Inverse design of semiconductor materials with deep generative models. J. Mater. Chem. A 2024, 12, 22689–22702. [Google Scholar] [CrossRef]

- Debnath, S.; Preetham, A.; Vuppu, S.; Kumar, S.N.P. Optimal weighted GAN and U-Net based segmentation for phenotypic trait estimation of crops using Taylor Coot algorithm. Appl. Soft Comput. 2023, 144, 110396. [Google Scholar] [CrossRef]

- Li, J.; Wang, Y.; Zheng, L.; Zhang, M.; Wang, M. Towards end-to-end deep RNN based networks to precisely regress of the lettuce plant height by single perspective sparse 3D point cloud. Expert Syst. Appl. 2023, 229, 120497. [Google Scholar] [CrossRef]

- Singh, A.K.; Rao, A.; Chattopadhyay, P.; Maurya, R.; Singh, L. Effective plant disease diagnosis using Vision Transformer trained with leafy-generative adversarial network-generated images. Expert Syst. Appl. 2024, 254, 124387. [Google Scholar] [CrossRef]

- Murphy, K.M.; Ludwig, E.; Gutierrez, J.; Gehan, M.A. Deep learning in image-based plant phenotyping. Annu. Rev. Plant Biol. 2024, 75, 771–795. [Google Scholar] [CrossRef]

- Chawla, T.; Mittal, S.; Azad, H.K. MobileNet-GRU fusion for optimizing diagnosis of yellow vein mosaic virus. Ecol. Inform. 2024, 81, 102548. [Google Scholar] [CrossRef]

- Taghavi Namin, S.; Esmaeilzadeh, M.; Najafi, M.; Brown, T.B.; Borevitz, J.O. Deep phenotyping: Deep learning for temporal phenotype/genotype classification. Plant Methods 2018, 14, 66. [Google Scholar] [CrossRef]

- Sagar, B.; Cauvery, N. Agriculture data analytics in crop yield estimation: A critical review. Indones. J. Electr. Eng. Comput. Sci. 2018, 12, 1087–1093. [Google Scholar] [CrossRef]

- Godfray, H.C.J.; Beddington, J.R.; Crute, I.R.; Haddad, L.; Lawrence, D.; Muir, J.F.; Pretty, J.; Robinson, S.; Thomas, S.M.; Toulmin, C. Food security: The challenge of feeding 9 billion people. Science 2010, 327, 812–818. [Google Scholar] [CrossRef] [PubMed]

- Nelson, W.J.; Lee, B.C.; Gasperini, F.A.; Hair, D.M. Meeting the challenge of feeding 9 billion people safely and securely. J. Agromed. 2012, 17, 347–350. [Google Scholar] [CrossRef] [PubMed]

- Muruganantham, P.; Wibowo, S.; Grandhi, S.; Samrat, N.H.; Islam, N. A systematic literature review on crop yield prediction with deep learning and remote sensing. Remote Sens. 2022, 14, 1990. [Google Scholar] [CrossRef]

- Khan, S.N.; Li, D.; Maimaitijiang, M. Using gross primary production data and deep transfer learning for crop yield prediction in the US Corn Belt. Int. J. Appl. Earth Obs. Geoinf. 2024, 131, 103965. [Google Scholar] [CrossRef]

- Yang, F.; Zhang, D.; Zhang, Y.; Zhang, Y.; Han, Y.; Zhang, Q.; Zhang, Q.; Zhang, C.; Liu, Z.; Wang, K. Prediction of corn variety yield with attribute-missing data via graph neural network. Comput. Electron. Agric. 2023, 211, 108046. [Google Scholar] [CrossRef]

- Maimaitijiang, M.; Sagan, V.; Sidike, P.; Hartling, S.; Esposito, F.; Fritschi, F.B. Soybean yield prediction from UAV using multimodal data fusion and deep learning. Remote Sens. Environ. 2020, 237, 111599. [Google Scholar] [CrossRef]

- Chu, Z.; Yu, J. An end-to-end model for rice yield prediction using deep learning fusion. Comput. Electron. Agric. 2020, 174, 105471. [Google Scholar] [CrossRef]

- Wei, J.; Tian, X.; Ren, W.; Gao, R.; Ji, Z.; Kong, Q.; Su, Z. A precise plot-level rice yield prediction method based on panicle detection. Agronomy 2024, 14, 1618. [Google Scholar] [CrossRef]

- Liu, Y.; Wang, S.; Wang, X.; Chen, B.; Chen, J.; Wang, J.; Huang, M.; Wang, Z.; Ma, L.; Wang, P.; et al. Exploring the superiority of solar-induced chlorophyll fluorescence data in predicting wheat yield using machine learning and deep learning methods. Comput. Electron. Agric. 2022, 192, 106612. [Google Scholar] [CrossRef]

- Jeong, S.; Ko, J.; Yeom, J.M. Predicting rice yield at pixel scale through synthetic use of crop and deep learning models with satellite data in South and North Korea. Sci. Total Environ. 2022, 802, 149726. [Google Scholar] [CrossRef] [PubMed]

- Cho, W.; Kim, S.; Na, M.; Na, I. Forecasting of tomato yields using attention-based LSTM network and ARMA model. Electronics 2021, 10, 1576. [Google Scholar] [CrossRef]

- Pisharody, S.N.; Duraisamy, P.; Rangarajan, A.K.; Whetton, R.L.; Herrero-Langreo, A. Precise Tomato Ripeness Estimation and Yield Prediction using Transformer Based Segmentation-SegLoRA. Comput. Electron. Agric. 2025, 233, 110172. [Google Scholar] [CrossRef]

- Zhang, Y.; Wu, M.; Li, J.; Yang, S.; Zheng, L.; Liu, X.; Wang, M. Automatic non-destructive multiple lettuce traits prediction based on DeepLabV3+. J. Food Meas. Charact. 2023, 17, 636–652. [Google Scholar] [CrossRef]

- Qin, Y.M.; Tu, Y.H.; Li, T.; Ni, Y.; Wang, R.F.; Wang, H. Deep Learning for sustainable agriculture: A systematic review on applications in lettuce cultivation. Sustainability 2025, 17, 3190. [Google Scholar] [CrossRef]

- Chakraborty, M.; Pourreza, A.; Zhang, X.; Jafarbiglu, H.; Shackel, K.A.; DeJong, T. Early almond yield forecasting by bloom mapping using aerial imagery and deep learning. Comput. Electron. Agric. 2023, 212, 108063. [Google Scholar] [CrossRef]

- Deguine, J.P.; Aubertot, J.N.; Flor, R.J.; Lescourret, F.; Wyckhuys, K.A.; Ratnadass, A. Integrated pest management: Good intentions, hard realities. A review. Agron. Sustain. Dev. 2021, 41, 38. [Google Scholar] [CrossRef]

- Das, A.; Pathan, F.; Jim, J.R.; Kabir, M.M.; Mridha, M. Deep learning-based classification, detection, and segmentation of tomato leaf diseases: A state-of-the-art review. Artif. Intell. Agric. 2025, 15, 192–220. [Google Scholar] [CrossRef]

- Polk, S.L.; Chan, A.H.; Cui, K.; Plemmons, R.J.; Coomes, D.A.; Murphy, J.M. Unsupervised detection of ash dieback disease (Hymenoscyphus fraxineus) using diffusion-based hyperspectral image clustering. In Proceedings of the IGARSS 2022-2022 IEEE International Geoscience and Remote Sensing Symposium, Kuala Lumpur, Malaysia, 17–22 July 2022; IEEE: New York, NY, USA, 2022; pp. 2287–2290. [Google Scholar]

- Wilke, C. Remote Sensing for Crops Spots Pests and Pathogens. ACS Cent. Sci. 2023, 9, 339–342. [Google Scholar] [CrossRef] [PubMed]

- Wang, Z.; Zhang, H.W.; Dai, Y.Q.; Cui, K.; Wang, H.; Chee, P.W.; Wang, R.F. Resource-Efficient Cotton Network: A Lightweight Deep Learning Framework for Cotton Disease and Pest Classification. Plants 2025, 14, 2082. [Google Scholar] [CrossRef] [PubMed]

- Shoaib, M.; Sadeghi-Niaraki, A.; Ali, F.; Hussain, I.; Khalid, S. Leveraging deep learning for plant disease and pest detection: A comprehensive review and future directions. Front. Plant Sci. 2025, 16, 1538163. [Google Scholar] [CrossRef]

- Wyckhuys, K.; Sanchez-Bayo, F.; Aebi, A.; van Lexmond, M.B.; Bonmatin, J.M.; Goulson, D.; Mitchell, E. Stay true to integrated pest management. Science 2021, 371, 133. [Google Scholar] [CrossRef]

- Chen, P.; Wang, R.; Yang, P. Deep learning in crop diseases and insect pests. Front. Plant Sci. 2023, 14, 1145458. [Google Scholar] [CrossRef]

- Liu, J.; Wang, X. Plant diseases and pests detection based on deep learning: A review. Plant Methods 2021, 17, 22. [Google Scholar] [CrossRef]

- Gao, Y.; Alyokhin, A.; Prager, S.M.; Reitz, S.; Huseth, A. Complexities in the Implementation and Maintenance of Integrated Pest Management in Potato. Annu. Rev. Entomol. 2024, 70, 45–63. [Google Scholar] [CrossRef]

- Tassis, L.M.; de Souza, J.E.T.; Krohling, R.A. A deep learning approach combining instance and semantic segmentation to identify diseases and pests of coffee leaves from in-field images. Comput. Electron. Agric. 2021, 186, 106191. [Google Scholar] [CrossRef]

- Xiong, Y.; Liang, L.; Wang, L.; She, J.; Wu, M. Identification of cash crop diseases using automatic image segmentation algorithm and deep learning with expanded dataset. Comput. Electron. Agric. 2020, 177, 105712. [Google Scholar] [CrossRef]

- Wang, C.; Du, P.; Wu, H.; Li, J.; Zhao, C.; Zhu, H. A cucumber leaf disease severity classification method based on the fusion of DeepLabV3+ and U-Net. Comput. Electron. Agric. 2021, 189, 106373. [Google Scholar] [CrossRef]

- Rehman, M.U.; Eesaar, H.; Abbas, Z.; Seneviratne, L.; Hussain, I.; Chong, K.T. Advanced drone-based weed detection using feature-enriched deep learning approach. Knowl.-Based Syst. 2024, 305, 112655. [Google Scholar] [CrossRef]

- Zhang, J.; Huang, Y.; Pu, R.; Gonzalez-Moreno, P.; Yuan, L.; Wu, K.; Huang, W. Monitoring plant diseases and pests through remote sensing technology: A review. Comput. Electron. Agric. 2019, 165, 104943. [Google Scholar] [CrossRef]

- Xu, W.; Li, W.; Wang, L.; Pompelli, M.F. Enhancing corn pest and disease recognition through deep learning: A comprehensive analysis. Agronomy 2023, 13, 2242. [Google Scholar] [CrossRef]

- Di, X.; Cui, K.; Wang, R.F. Toward Efficient UAV-Based Small Object Detection: A Lightweight Network with Enhanced Feature Fusion. Remote Sens. 2025, 17, 2235. [Google Scholar] [CrossRef]

- Zhu, H.; Lin, C.; Liu, G.; Wang, D.; Qin, S.; Li, A.; Xu, J.L.; He, Y. Intelligent agriculture: Deep learning in UAV-based remote sensing imagery for crop diseases and pests detection. Front. Plant Sci. 2024, 15, 1435016. [Google Scholar] [CrossRef]

- Muhammed, D.; Ahvar, E.; Ahvar, S.; Trocan, M.; Montpetit, M.J.; Ehsani, R. Artificial Intelligence of Things (AIoT) for smart agriculture: A review of architectures, technologies and solutions. J. Netw. Comput. Appl. 2024, 228, 103905. [Google Scholar] [CrossRef]

- Sharma, R.P.; Ramesh, D.; Pal, P.; Tripathi, S.; Kumar, C. IoT-enabled IEEE 802.15. 4 WSN monitoring infrastructure-driven fuzzy-logic-based crop pest prediction. IEEE Internet Things J. 2021, 9, 3037–3045. [Google Scholar] [CrossRef]

- Cai, J.; Xiao, D.; Lv, L.; Ye, Y. An early warning model for vegetable pests based on multidimensional data. Comput. Electron. Agric. 2019, 156, 217–226. [Google Scholar] [CrossRef]

- Hari, P.; Singh, M.P. Adaptive knowledge transfer using federated deep learning for plant disease detection. Comput. Electron. Agric. 2025, 229, 109720. [Google Scholar] [CrossRef]

- Mallick, M.T.; Murty, D.O.; Pal, R.; Mandal, S.; Saha, H.N.; Chakrabarti, A. High-speed system-on-chip-based platform for real-time crop disease and pest detection using deep learning techniques. Comput. Electr. Eng. 2025, 123, 110182. [Google Scholar] [CrossRef]

- Wang, S.; Zeng, Q.; Yuan, G.; Ni, W.; Li, C.; Duan, H.; Xie, N.; Xiao, F.; Yang, X. Generalized zero-shot pest and disease image classification based on causal gating model. Comput. Electron. Agric. 2025, 230, 109827. [Google Scholar] [CrossRef]

- Chen, X.; Yang, B.; Liu, Y.; Feng, Z.; Lyu, J.; Luo, J.; Wu, J.; Yao, Q.; Liu, S. Intelligent survey method of rice diseases and pests using AR glasses and image-text multimodal fusion model. Comput. Electron. Agric. 2025, 237, 110574. [Google Scholar] [CrossRef]

- Jiang, Y.; Li, C. Convolutional neural networks for image-based high-throughput plant phenotyping: A review. Plant Phenomics 2020, 2020, 4152816. [Google Scholar] [CrossRef]

- Zarboubi, M.; Bellout, A.; Chabaa, S.; Dliou, A. CustomBottleneck-VGGNet: Advanced tomato leaf disease identification for sustainable agriculture. Comput. Electron. Agric. 2025, 232, 110066. [Google Scholar] [CrossRef]

- Weihs, B.J.; Tang, Z.; Tian, Z.; Heuschele, D.J.; Siddique, A.; Terrill, T.H.; Zhang, Z.; York, L.M.; Zhang, Z.; Xu, Z. Phenotyping alfalfa (Medicago sativa L.) root structure architecture via integrating confident machine learning with ResNet-18. Plant Phenomics 2024, 6, 0251. [Google Scholar] [CrossRef]

- Bheemanahalli, R.; Wang, C.; Bashir, E.; Chiluwal, A.; Pokharel, M.; Perumal, R.; Moghimi, N.; Ostmeyer, T.; Caragea, D.; Jagadish, S.K. Classical phenotyping and deep learning concur on genetic control of stomatal density and area in sorghum. Plant Physiol. 2021, 186, 1562–1579. [Google Scholar] [CrossRef]

- Atkins, K.; Garzón-Martínez, G.A.; Lloyd, A.; Doonan, J.H.; Lu, C. Unlocking the power of AI for phenotyping fruit morphology in Arabidopsis. GigaScience 2025, 14, giae123. [Google Scholar] [CrossRef]

- Zhou, S.; Chai, X.; Yang, Z.; Wang, H.; Yang, C.; Sun, T. Maize-IAS: A maize image analysis software using deep learning for high-throughput plant phenotyping. Plant Methods 2021, 17, 48. [Google Scholar] [CrossRef]

- Li, L.; Chang, H.; Zhao, S.; Liu, R.; Yan, M.; Li, F.; El-Sheery, N.I.; Feng, Z.; Yu, S. Combining high-throughput deep learning phenotyping and GWAS to reveal genetic variants of fruit branch angle in upland cotton. Ind. Crops Prod. 2024, 220, 119180. [Google Scholar] [CrossRef]

- Yu, H.; Dong, M.; Zhao, R.; Zhang, L.; Sui, Y. Research on precise phenotype identification and growth prediction of lettuce based on deep learning. Environ. Res. 2024, 252, 118845. [Google Scholar] [CrossRef]

- Uffelmann, E.; Huang, Q.Q.; Munung, N.S.; De Vries, J.; Okada, Y.; Martin, A.R.; Martin, H.C.; Lappalainen, T.; Posthuma, D. Genome-wide association studies. Nat. Rev. Methods Prim. 2021, 1, 59. [Google Scholar] [CrossRef]

- Arya, S.; Sandhu, K.S.; Singh, J.; Kumar, S. Deep learning: As the new frontier in high-throughput plant phenotyping. Euphytica 2022, 218, 47. [Google Scholar] [CrossRef]

- Champigny, M.J.; Unda, F.; Skyba, O.; Soolanayakanahally, R.Y.; Mansfield, S.D.; Campbell, M.M. Learning from methylomes: Epigenomic correlates of Populus balsamifera traits based on deep learning models of natural DNA methylation. Plant Biotechnol. J. 2020, 18, 1361–1375. [Google Scholar] [CrossRef] [PubMed]

- Wang, X.; Xuan, H.; Evers, B.; Shrestha, S.; Pless, R.; Poland, J. High-throughput phenotyping with deep learning gives insight into the genetic architecture of flowering time in wheat. GigaScience 2019, 8, giz120. [Google Scholar] [CrossRef] [PubMed]

- Peleke, F.F.; Zumkeller, S.M.; Gültas, M.; Schmitt, A.; Szymański, J. Deep learning the cis-regulatory code for gene expression in selected model plants. Nat. Commun. 2024, 15, 3488. [Google Scholar] [CrossRef] [PubMed]

- Rairdin, A.; Fotouhi, F.; Zhang, J.; Mueller, D.S.; Ganapathysubramanian, B.; Singh, A.K.; Dutta, S.; Sarkar, S.; Singh, A. Deep learning-based phenotyping for genome wide association studies of sudden death syndrome in soybean. Front. Plant Sci. 2022, 13, 966244. [Google Scholar] [CrossRef]

- Wang, H.; Yan, S.; Wang, W.; Chen, Y.; Hong, J.; He, Q.; Diao, X.; Lin, Y.; Chen, Y.; Cao, Y.; et al. Cropformer: An interpretable deep learning framework for crop genomic prediction. Plant Commun. 2025, 6, 101223. [Google Scholar] [CrossRef]

- Waadt, R.; Seller, C.A.; Hsu, P.K.; Takahashi, Y.; Munemasa, S.; Schroeder, J.I. Plant hormone regulation of abiotic stress responses. Nat. Rev. Mol. Cell Biol. 2022, 23, 680–694. [Google Scholar] [CrossRef]

- Zhang, H.; Zhu, J.; Gong, Z.; Zhu, J.K. Abiotic stress responses in plants. Nat. Rev. Genet. 2022, 23, 104–119. [Google Scholar] [CrossRef] [PubMed]

- Subeesh, A.; Chauhan, N. Deep learning based abiotic crop stress assessment for precision agriculture: A comprehensive review. J. Environ. Manag. 2025, 381, 125158. [Google Scholar] [CrossRef]

- Kidokoro, S.; Shinozaki, K.; Yamaguchi-Shinozaki, K. Transcriptional regulatory network of plant cold-stress responses. Trends Plant Sci. 2022, 27, 922–935. [Google Scholar] [CrossRef] [PubMed]

- Yu, Z.; Duan, X.; Luo, L.; Dai, S.; Ding, Z.; Xia, G. How plant hormones mediate salt stress responses. Trends Plant Sci. 2020, 25, 1117–1130. [Google Scholar] [CrossRef] [PubMed]

- Mittler, R.; Zandalinas, S.I.; Fichman, Y.; Van Breusegem, F. Reactive oxygen species signalling in plant stress responses. Nat. Rev. Mol. Cell Biol. 2022, 23, 663–679. [Google Scholar] [CrossRef]

- Cai, J.; Shen, L.; Kang, H.; Xu, T. RNA modifications in plant adaptation to abiotic stresses. Plant Commun. 2025, 6, 101229. [Google Scholar] [CrossRef]

- Zhu, J.K. Abiotic stress signaling and responses in plants. Cell 2016, 167, 313–324. [Google Scholar] [CrossRef]

- Zhou, Z.; Majeed, Y.; Naranjo, G.D.; Gambacorta, E.M. Assessment for crop water stress with infrared thermal imagery in precision agriculture: A review and future prospects for deep learning applications. Comput. Electron. Agric. 2021, 182, 106019. [Google Scholar] [CrossRef]

- Chandel, N.S.; Chakraborty, S.K.; Rajwade, Y.A.; Dubey, K.; Tiwari, M.K.; Jat, D. Identifying crop water stress using deep learning models. Neural Comput. Appl. 2021, 33, 5353–5367. [Google Scholar] [CrossRef]

- Yu, S.; Fan, J.; Lu, X.; Wen, W.; Shao, S.; Liang, D.; Yang, X.; Guo, X.; Zhao, C. Deep learning models based on hyperspectral data and time-series phenotypes for predicting quality attributes in lettuces under water stress. Comput. Electron. Agric. 2023, 211, 108034. [Google Scholar] [CrossRef]

- Buitrago, M.F.; Groen, T.A.; Hecker, C.A.; Skidmore, A.K. Changes in thermal infrared spectra of plants caused by temperature and water stress. ISPRS J. Photogramm. Remote Sens. 2016, 111, 22–31. [Google Scholar] [CrossRef]

- Bouain, N.; Krouk, G.; Lacombe, B.; Rouached, H. Getting to the root of plant mineral nutrition: Combinatorial nutrient stresses reveal emergent properties. Trends Plant Sci. 2019, 24, 542–552. [Google Scholar] [CrossRef] [PubMed]

- Theodore Armand, T.P.; Nfor, K.A.; Kim, J.I.; Kim, H.C. Applications of artificial intelligence, machine learning, and deep learning in nutrition: A systematic review. Nutrients 2024, 16, 1073. [Google Scholar] [CrossRef]

- Chandel, N.S.; Jat, D.; Chakraborty, S.K.; Upadhyay, A.; Subeesh, A.; Chouhan, P.; Manjhi, M.; Dubey, K. Deep learning assisted real-time nitrogen stress detection for variable rate fertilizer applicator in wheat crop. Comput. Electron. Agric. 2025, 237, 110545. [Google Scholar] [CrossRef]

- Deng, Y.; Xin, N.; Zhao, L.; Shi, H.; Deng, L.; Han, Z.; Wu, G. Precision Detection of Salt Stress in Soybean Seedlings Based on Deep Learning and Chlorophyll Fluorescence Imaging. Plants 2024, 13, 2089. [Google Scholar] [CrossRef]

- Miura, G. Surviving salt stress. Nat. Chem. Biol. 2023, 19, 1291. [Google Scholar] [CrossRef]

- Ma, X.; Zeng, X.; Huang, Y.; Liu, S.H.; Yin, J.; Yang, G.F. Visualizing plant salt stress with a NaCl-responsive fluorescent probe. Nat. Protoc. 2024, 20, 902–933. [Google Scholar] [CrossRef]

- Yao, J.P.; Wang, Z.Y.; de Oliveira, R.F.; Wang, Z.Y.; Huang, L. A deep learning method for the long-term prediction of plant electrical signals under salt stress to identify salt tolerance. Comput. Electron. Agric. 2021, 190, 106435. [Google Scholar] [CrossRef]

- Feng, X.; Zhan, Y.; Wang, Q.; Yang, X.; Yu, C.; Wang, H.; Tang, Z.; Jiang, D.; Peng, C.; He, Y. Hyperspectral imaging combined with machine learning as a tool to obtain high-throughput plant salt-stress phenotyping. Plant J. 2020, 101, 1448–1461. [Google Scholar] [CrossRef]

- Khotimah, W.N.; Boussaid, F.; Sohel, F.; Xu, L.; Edwards, D.; Jin, X.; Bennamoun, M. SC-CAN: Spectral convolution and Channel Attention network for wheat stress classification. Remote Sens. 2022, 14, 4288. [Google Scholar] [CrossRef]

- Jin, X.; Lin, Q.; Zhang, X.; Zhang, S.; Ma, W.; Sheng, P.; Zhang, L.; Wu, M.; Zhu, X.; Li, Z.; et al. OsPRMT6b balances plant growth and high temperature stress by feedback inhibition of abscisic acid signaling. Nat. Commun. 2025, 16, 5173. [Google Scholar] [CrossRef] [PubMed]

- Kan, Y.; Mu, X.R.; Gao, J.; Lin, H.X.; Lin, Y. The molecular basis of heat stress responses in plants. Mol. Plant 2023, 16, 1612–1634. [Google Scholar] [CrossRef] [PubMed]

- Lantzouni, O.; Alkofer, A.; Falter-Braun, P.; Schwechheimer, C. GROWTH-REGULATING FACTORS interact with DELLAs and regulate growth in cold stress. Plant Cell 2020, 32, 1018–1034. [Google Scholar] [CrossRef]

- Yang, W.; Yang, C.; Hao, Z.; Xie, C.; Li, M. Diagnosis of plant cold damage based on hyperspectral imaging and convolutional neural network. IEEE Access 2019, 7, 118239–118248. [Google Scholar] [CrossRef]

- Ryu, J.; Wi, S.; Lee, H. Snapshot-Based Multispectral Imaging for Heat Stress Detection in Southern-Type Garlic. Appl. Sci. 2023, 13, 8133. [Google Scholar] [CrossRef]

- Xing, D.; Wang, Y.; Sun, P.; Huang, H.; Lin, E. A CNN-LSTM-att hybrid model for classification and evaluation of growth status under drought and heat stress in chinese fir (Cunninghamia lanceolata). Plant Methods 2023, 19, 66. [Google Scholar] [CrossRef]

- Tan, Z.; Shi, J.; Lv, R.; Li, Q.; Yang, J.; Ma, Y.; Li, Y.; Wu, Y.; Zhang, R.; Ma, H.; et al. Fast anther dehiscence status recognition system established by deep learning to screen heat tolerant cotton. Plant Methods 2022, 18, 53. [Google Scholar] [CrossRef]

- Mao, Y.; Li, H.; Wang, Y.; Wang, H.; Shen, J.; Xu, Y.; Ding, S.; Wang, H.; Ding, Z.; Fan, K. Rapid monitoring of tea plants under cold stress based on UAV multi-sensor data. Comput. Electron. Agric. 2023, 213, 108176. [Google Scholar] [CrossRef]

- Wang, J.; Liu, N.; Liu, G.; Liu, Y.; Zhuang, X.; Zhang, L.; Wang, Y.; Yu, G. RSD-YOLO: A Defect Detection Model for Wind Turbine Blade Images. In Proceedings of the International Conference on Computer Engineering and Networks, Kashi, China, 18–21 October 2024; Springer: Berlin/Heidelberg, Germany, 2025; pp. 423–435. [Google Scholar]

- Yang, Z.X.; Li, Y.; Wang, R.F.; Hu, P.; Su, W.H. Deep Learning in Multimodal Fusion for Sustainable Plant Care: A Comprehensive Review. Sustainability 2025, 17, 5255. [Google Scholar] [CrossRef]

- Jiang, D.; Wang, H.; Li, T.; Gouda, M.A.; Zhou, B. Real-time tracker of chicken for poultry based on attention mechanism-enhanced YOLO-Chicken algorithm. Comput. Electron. Agric. 2025, 237, 110640. [Google Scholar] [CrossRef]

- Teklegiorgis, S.; Belayneh, A.; Gebermeskel, K.; Akomolafe, G.F.; Dejene, S.W. Modelling the current and future agro-ecological distribution potential of Mexican prickly poppy (Argemone mexicana L.) invasive alien plant species in South Wollo, Ethiopia. Geol. Ecol. Landscapes 2024, 1–15. [Google Scholar] [CrossRef]

- Sekar, K.C.; Thapliyal, N.; Pandey, A.; Joshi, B.; Mukherjee, S.; Bhojak, P.; Bisht, M.; Bhatt, D.; Singh, S.; Bahukhandi, A. Plant species diversity and density patterns along altitude gradient covering high-altitude alpine regions of west Himalaya, India. Geol. Ecol. Landscapes 2024, 8, 559–573. [Google Scholar] [CrossRef]

| Model Type | Main Characteristics | Advantages | Disadvantages | Algorithms and Applications | Potential Scenarios |

|---|---|---|---|---|---|

| CNN | Multi-layer convolution and pooling structures that automatically extract local spatial features | Excellent performance in image classification and object detection; strong feature extraction capacity | Limited in capturing long-range dependencies; less effective for temporal modeling | ResNet, DenseNet, and VGG; maize emergence rate and leaf emergence speed monitoring [44] | Plant pest and disease identification, leaf phenotyping, and crop distribution classification |

| RNN | Processes sequential data through recurrent connections | Strong sequence modeling capabilities; suited for temporal dependencies | Prone to vanishing/exploding gradients; limited in capturing long-term dependencies | Basic RNN; accurate regression of lettuce plant height from single-view sparse 3D point clouds [77] | Crop growth modeling and yield prediction |

| LSTM | Adds gating mechanisms to RNNs to enhance long-term memory | Effectively learns complex dynamics in long sequences | Computationally intensive; sensitive to parameters | Stacked LSTM; Poplar seedling variety and drought stress classification [57] | Multitemporal yield prediction, phenological monitoring, and stress analysis |

| Transformer | Self-attention mechanism capturing global dependencies | Highly efficient parallel computation; supports multimodal data fusion | Large model size; requires substantial training data | Vision Transformer (ViT); Hyperspectral imagery for blueberry drought phenotyping [64] | Hyperspectral feature extraction and multimodal phenotypic prediction |

| GAN | Generates high-quality synthetic data via adversarial training | Mitigates sample scarcity; enhances model robustness | Training instability; difficult generator–discriminator balance | Pix2Pix GAN; Novel leaf disease augmentation model for plant disease diagnosis [78] | Pest and disease sample expansion, data augmentation, and rare phenotype simulation |

| VAE | Probabilistic generative model learning latent representations | Generates diverse samples; interpretable latent variables | Lower sample clarity compared to GANs | -VAE; dimensionality reduction and generation of hyperspectral plant phenotyping data [79] | Phenotypic representation, anomaly detection, and phenomics data generation |

| GRU | Simplified LSTM variant | Fewer parameters; efficient training | Slightly lower expressiveness than LSTM | GRU network; optimized diagnosis of yellow vein mosaic virus [80] | Pest and disease monitoring and continuous growth dynamics prediction |

| Ensemble DL | Integrates multiple models to improve robustness | High accuracy; good generalization | High computational resource consumption | Ensemble CNN + LSTM; automated genotype classification and dynamic phenotyping recognition during plant growth [81] | Cross-scale yield prediction and stress adaptation modeling |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Han, S.; Wang, H. Application of Deep Learning Technology in Monitoring Plant Attribute Changes. Sustainability 2025, 17, 7602. https://doi.org/10.3390/su17177602

Han S, Wang H. Application of Deep Learning Technology in Monitoring Plant Attribute Changes. Sustainability. 2025; 17(17):7602. https://doi.org/10.3390/su17177602

Chicago/Turabian StyleHan, Shuwei, and Haihua Wang. 2025. "Application of Deep Learning Technology in Monitoring Plant Attribute Changes" Sustainability 17, no. 17: 7602. https://doi.org/10.3390/su17177602

APA StyleHan, S., & Wang, H. (2025). Application of Deep Learning Technology in Monitoring Plant Attribute Changes. Sustainability, 17(17), 7602. https://doi.org/10.3390/su17177602