1. Introduction

Dams serve as critical infrastructure for water resource management, hydropower generation, and ecological balance. Ensuring their long-term operational safety has therefore become a global priority and a cornerstone of sustainable, resilient infrastructure. Dam displacement is a primary indicator of structural health, but its prediction is complicated by the interplay of hydrostatic pressure, thermal effects, and irreversible material aging. Consequently, developing accurate displacement prediction models that fuse physical mechanisms with data-driven insights remains a key research frontier in dam engineering, directly supporting the sustainable stewardship of vital water resources and the communities they serve.

Displacement prediction methodologies have evolved from physically interpretable statistical models to sophisticated machine learning frameworks. The cornerstone of the statistical approach is the Hydrostatic–Temperature–Time (HTT) model, which leverages regression analysis to quantify deformation components [1]. This framework has been progressively enhanced to handle complex geometries in high dams [2], to incorporate cold-climate effects [3], and to couple spatial and seepage dynamics [4]. The recent paradigm shift towards machine learning [5] has unlocked new capabilities. For instance, Su et al. [6] pioneered hybrid frameworks combining support vector machines (SVM) with finite element simulations, which Wang et al. [7] later complemented by incorporating Gaussian process regression. Tree-based ensembles have also been prominent; Alazar et al. [8] leveraged gradient-boosted regression trees for displacement and seepage modeling, Yang et al. [9] proposed a hybrid XGBoost–ANN model for residual prediction, and Su et al. [10] advanced random forest (RF) models with sliding time windows.

Within the domain of neural networks, significant progress has been made. Huang et al. [11] proposed a dual-attention long short-term memory (DALSTM) model to effectively capture thermal effects. To enhance generalizability, Xu et al. [12] synergized LSTM with wavelet decomposition, while to improve predictive robustness, Li et al. [13] developed a one-dimensional residual network and LSTM (DRLSTM) architecture. Further innovations in neural architecture have also been explored. Peng et al. [14] integrated graph convolutional networks (GCN) with attention mechanisms for distributed sensor feature extraction, and Kang et al. [15] applied extreme learning machines (ELM) with modified activation functions to gravity dam health monitoring.

Among these methods, the Gated Recurrent Unit (GRU) is particularly adept at modeling long-term dependencies while remaining computationally efficient. Its efficacy in dam engineering has been validated in various specialized models. Yuan et al. [16] proposed a VMD–TSVR–GRU model to account for non-stationarity in displacement data. Lu et al. [17] validated an Inception–ResNet–GRU model on the Ertan Dam, achieving significant RMSE reduction. Concurrently, Xu et al. [18] utilized a CNN–GRU model with spatial pooling to predict zonal displacement clusters. Despite these advancements, challenges in mitigating overfitting and ensuring comprehensive feature extraction from limited data remain [19]. Furthermore, most recurrent models are unidirectional, struggling to fully capture the complex hysteresis phenomena where structural responses lag behind load variations [20,21].

To address the limitations of unidirectional models, especially in capturing the pronounced posteriority (time-lag effect) of temperature on dam deformation, this paper proposes an enhanced predictive model based on a Bidirectional GRU (BiGRU) network. This posteriority signifies that a dam’s structural response is a function of the temperature history over an extended preceding period, rather than just the instantaneous temperature. Our framework explicitly tackles this by incorporating a sliding window mechanism for feature engineering [13,22]. This technique provides the model not with a single snapshot in time, but with a sequence of recent historical data, thereby embedding the necessary temporal context to model the lag. The BiGRU architecture then processes these entire sequences in both forward and backward directions, allowing it to effectively learn the complex dependencies and time-lag patterns inherent in the data. The primary contribution of this work is the development and validation of this integrated framework on a 130 m concrete gravity dam. By providing more reliable foresight into structural behavior, this work offers a critical tool for proactive maintenance and risk management, thereby enhancing the long-term sustainability and resilience of critical water infrastructure.

The remainder of this paper is structured as follows: Section 2 details the methodology. Section 3 introduces the case study and evaluation metrics, and details the optimization of key parameters, including the sliding window size and the model’s hyperparameters. Section 4 presents the experimental results and model comparisons. Finally, Section 5 discusses the findings and outlines future research directions.

2. Methodology

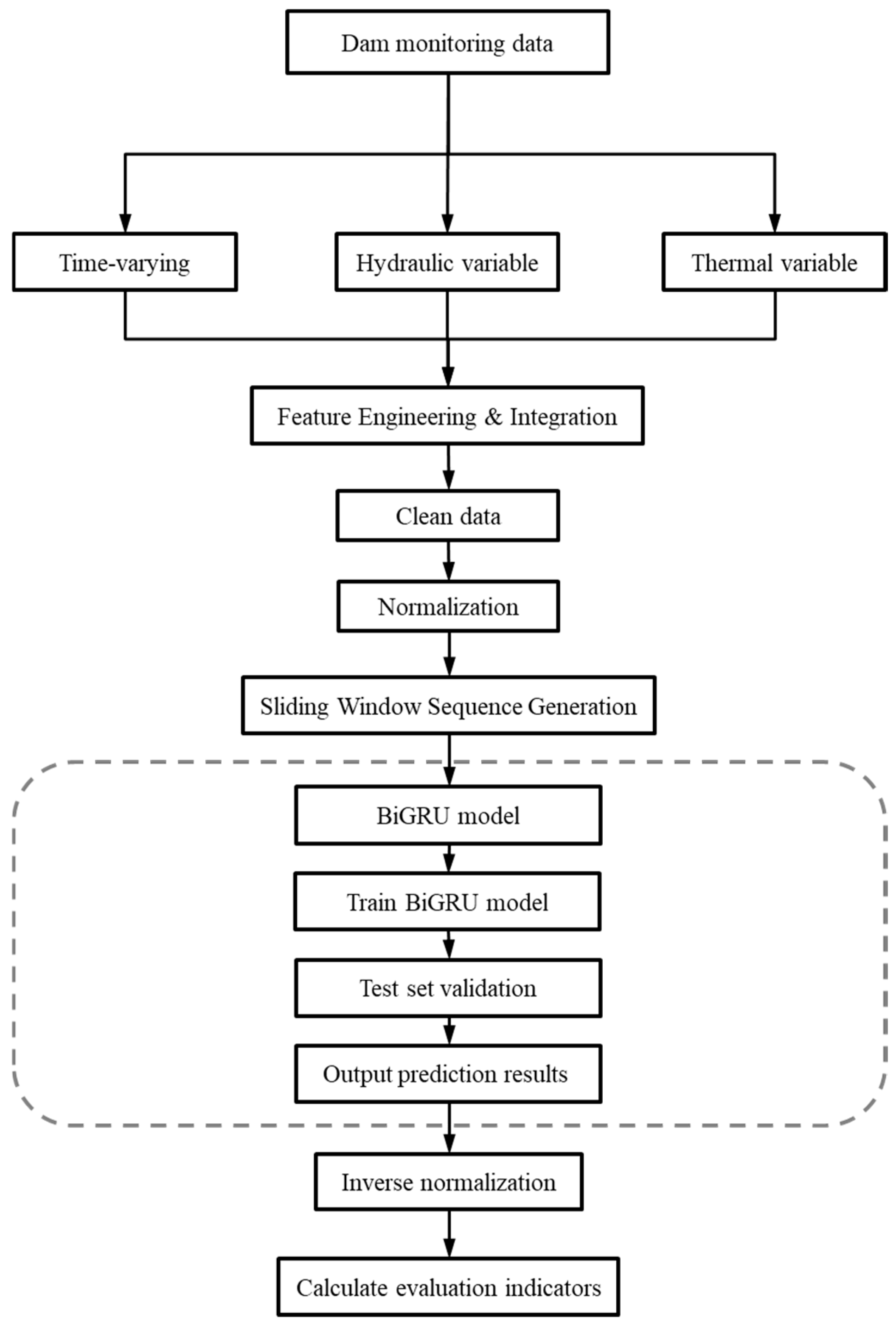

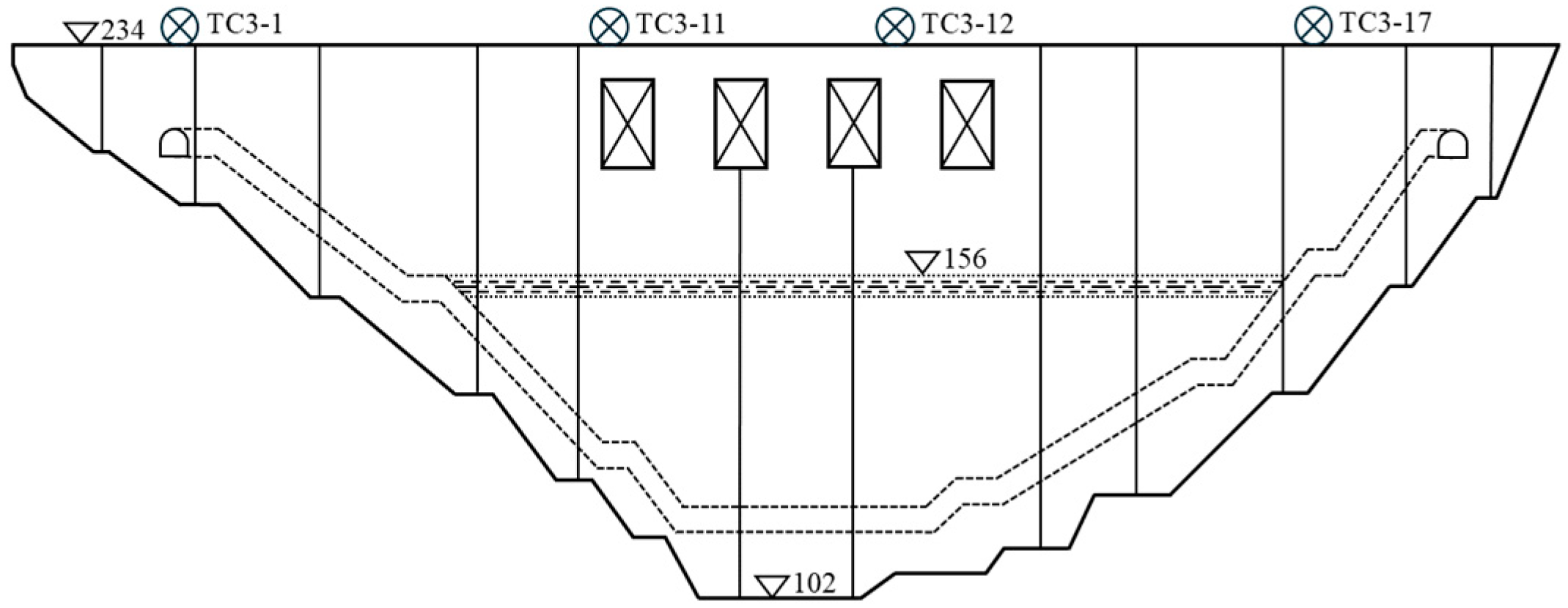

As illustrated in Figure 1, this framework is conceptually guided by the principles of the classical hydrostatic–temperature–time (HTT) model and implemented using a bidirectional gated recurrent unit (BiGRU) neural network. The implementation comprises three sequential phases: (1) Feature engineering guided by the HTT framework: instead of performing a statistical decomposition, this phase focuses on selecting and engineering a comprehensive feature set. We utilize the raw monitoring variables that correspond to the HTT components (e.g., water levels for the hydrostatic effect, temperatures for the thermal effect). These are then enhanced with additional time-series features (e.g., lagged values and rolling statistics of the displacement) to capture time-dependent effects such as material creep and seasonal patterns. (2) Sequence construction via sliding window: the resulting multi-feature time series is partitioned using a sliding window technique. This creates discrete yet overlapping intervals, thereby capturing the sequential dependencies and time-lag characteristics intrinsic to dam deformation mechanisms. (3) Predictive modeling with a BiGRU: finally, the preprocessed sequences are fed into the BiGRU architecture. The network processes each sequence in both the forward and backward directions to model non-linear interactions between historical and future states, ultimately generating long-term deformation forecasts alongside the corresponding statistical evaluation metrics (RMSE, MAE, $R^2$). To ensure operational validity, the framework is validated through an engineering case study of a 130 m-high concrete gravity dam with over 18 years of operational history. Four strategically positioned monitoring points are utilized to evaluate spatiotemporal prediction accuracy across distinct dam zones.
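The sequence-construction phase can be illustrated with a minimal NumPy sketch. It assumes a one-step-ahead target and a feature matrix already ordered in time; the function name `make_windows` and the toy values are illustrative and do not come from the study.

```python
import numpy as np

def make_windows(features, target, window):
    """Build overlapping input windows and one-step-ahead targets.

    features: array of shape (n_steps, n_features), ordered in time
    target:   array of shape (n_steps,), e.g. measured displacement
    window:   number of consecutive time steps per sample
    """
    features = np.asarray(features, dtype=float)
    target = np.asarray(target, dtype=float)
    if window < 1 or window >= len(features):
        raise ValueError("window must satisfy 1 <= window < n_steps")
    n_samples = len(features) - window
    # Sample k covers time steps k .. k+window-1 and predicts step k+window.
    X = np.stack([features[k:k + window] for k in range(n_samples)])
    y = target[window:]
    return X, y

# Toy series: 6 time steps, 2 features (e.g. water level and temperature).
toy_features = np.arange(12, dtype=float).reshape(6, 2)
toy_target = np.arange(6, dtype=float) * 10.0
X, y = make_windows(toy_features, toy_target, window=3)
```

Each sample shares all but one time step with its neighbour, which is what makes the intervals "discrete yet overlapping". The resulting array has the (samples, time steps, features) layout that recurrent layers typically expect.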

2.1. Hydrostatic–Temperature–Time (HTT) Statistical Model

The Hydrostatic–Season–Time (HST) model utilizes harmonic functions to represent thermal variations, yet it fails to adequately explain thermally induced displacements arising from both short-term ambient temperature fluctuations and multi-year climatic variations. Conversely, the HTT model derives its thermal component directly from in situ temperature measurements, achieving superior accuracy in modeling real-world dam monitoring data. The HTT framework decomposes concrete dam displacements ($\delta$) into three constitutive terms: the hydrostatic pressure component ($\delta_H$), the thermal effects component ($\delta_T$), and the time-dependent creep component ($\delta_\theta$), expressed as:

$$\delta = \delta_H + \delta_T + \delta_\theta$$

The hydrostatic component ($\delta_H$) is governed by the reservoir elevation, expressed in its standard polynomial form as:

$$\delta_H = \sum_{i=1}^{n} a_i H^i$$

where $a_i$ are the regression coefficients and $H$ denotes the reservoir elevation, with the exponent $n = 3$ for gravity dams and $n = 4$ for arch dams.

The thermal component $\delta_T$ characterizes thermally induced displacements from concrete and foundation temperature fluctuations, calculated using measured temperatures:

$$\delta_T = \sum_{i=1}^{m} b_i T_i$$

where $b_i$ are the thermal regression coefficients, $m$ indicates the number of temperature sensors, and $T_i$ represents the measured temperature values.

The time-dependent component represents concrete creep deformation:

$$\delta_\theta = c_1 t + c_2 \ln t$$

where $c_1$ and $c_2$ are the time-effect coefficients, and $t$ denotes the cumulative operational days post-construction.

The aforementioned equations represent the classical statistical formulation of the HTT model, which provides a robust theoretical basis for understanding the primary factors of dam displacement. However, this traditional approach often assumes linear relationships and may struggle to capture the full spectrum of complex, non-linear dynamics inherent in dam behavior.

Therefore, in our proposed framework, we pivot from this statistical decomposition. Instead of using the calculated components (δH, δT, δθ) as inputs, we leverage the fundamental principles of the HTT model to guide our feature engineering. The raw physical variables that constitute these components (i.e., water levels, temperatures, and time-related markers) are fed directly into a deep learning network. This data-driven methodology allows the BiGRU model to learn the underlying non-linear relationships directly, offering the potential for a more accurate and comprehensive prediction.
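For contrast with the data-driven route, the classical HTT regression can be sketched as an ordinary least-squares fit. This is a minimal illustration, not the authors' implementation. It assumes the standard regressor set (powers of the water level, one term per temperature sensor, a linear and a logarithmic time term); the intercept column, the function names, and the synthetic data are assumptions introduced here.

```python
import numpy as np

def htt_design_matrix(H, T, t, n=3):
    """Stack the HTT regressors column-wise: 1, H^1..H^n, T_1..T_m, t, ln(t)."""
    H = np.asarray(H, dtype=float)
    T = np.asarray(T, dtype=float).reshape(len(H), -1)  # (n_obs, m) sensor readings
    t = np.asarray(t, dtype=float)                      # elapsed days, must be > 0
    intercept = np.ones((len(H), 1))                    # assumption: constant offset term
    hydro = np.column_stack([H ** i for i in range(1, n + 1)])
    aging = np.column_stack([t, np.log(t)])
    return np.hstack([intercept, hydro, T, aging])

def fit_htt(H, T, t, delta, n=3):
    """Ordinary least-squares estimate of the HTT regression coefficients."""
    A = htt_design_matrix(H, T, t, n)
    coef, *_ = np.linalg.lstsq(A, np.asarray(delta, dtype=float), rcond=None)
    return coef

# Synthetic, noise-free data (not dam measurements) used only to check the fit.
rng = np.random.default_rng(0)
n_obs = 300
H = rng.uniform(0.0, 1.0, n_obs)           # normalised water level
T = rng.normal(15.0, 5.0, (n_obs, 2))      # two hypothetical thermometers
t = np.arange(1, n_obs + 1, dtype=float)   # days since the reference date
true_coef = np.array([0.5, 1.0, -2.0, 0.8, 0.3, -0.1, 0.002, 0.4])
delta = htt_design_matrix(H, T, t) @ true_coef
coef = fit_htt(H, T, t, delta)
```

Because every regressor enters linearly, the fitted model cannot represent interactions such as a temperature effect that depends on the water level. That limitation is the motivation for passing the same raw variables to a recurrent network instead.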

2.2. BiGRU Model

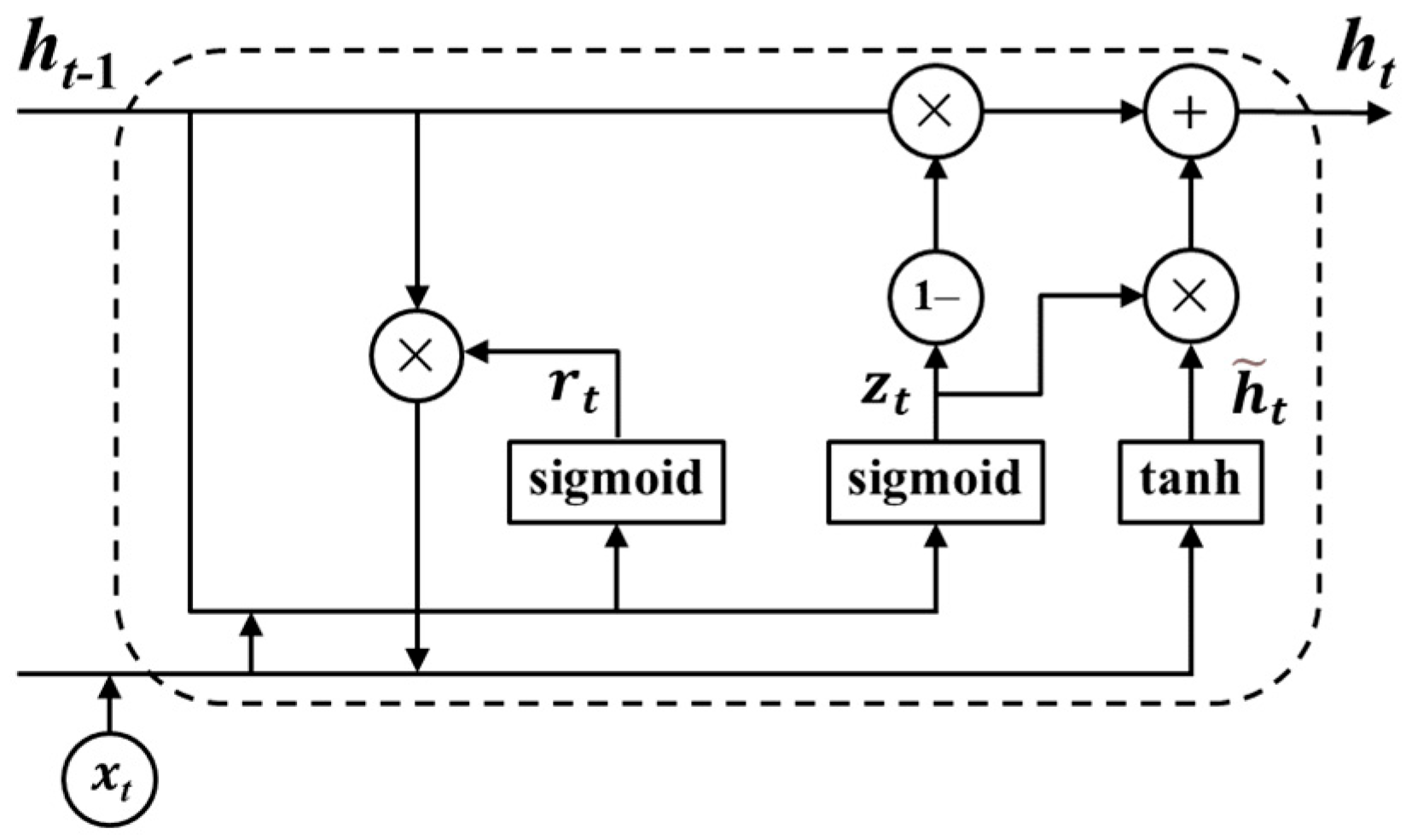

Traditional Recurrent Neural Networks (RNNs) are constrained by vanishing gradient and exploding gradient phenomena, which impair their performance on long-sequence modeling. The GRU addresses these limitations through gating mechanisms: a reset gate ($r_t$) for short-term pattern extraction and an update gate ($z_t$) for long-term state retention [23]. Figure 2 illustrates its computational graph at a single time step: $x_t$ denotes the input vector at time step $t$, $z_t$ denotes the update gate, $r_t$ denotes the reset gate, $h_{t-1}$ denotes the previous hidden state, $\tilde{h}_t$ denotes the candidate hidden state, and $h_t$ denotes the updated hidden state. The sigmoid function ($\sigma$) generates gate activations (0–1), controlling information flow:

$$z_t = \sigma\left(W_z x_t + U_z h_{t-1} + b_z\right)$$

$$r_t = \sigma\left(W_r x_t + U_r h_{t-1} + b_r\right)$$

$$\tilde{h}_t = \tanh\left(W_h x_t + U_h \left(r_t \odot h_{t-1}\right) + b_h\right)$$

$$h_t = \left(1 - z_t\right) \odot h_{t-1} + z_t \odot \tilde{h}_t$$

where $W$, $U$, and $b$ denote the learnable input weights, recurrent weights, and biases of the standard GRU formulation, and $\odot$ denotes element-wise multiplication. The update gate interpolates between prior state retention ($z_t \to 0$) and new state adoption ($z_t \to 1$), while the reset gate $r_t$ modulates historical information integration.
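The gating behaviour described above can be sketched as a single GRU time step in NumPy. The sketch follows the standard GRU convention in which the update gate weights the candidate state, matching the limits $z_t \to 0$ (retain) and $z_t \to 1$ (adopt). The parameter names (`Wz`, `Uz`, `bz`, and so on), the layer sizes, and the random initialisation are illustrative assumptions, not values from the study.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def init_params(n_in, n_hidden, seed=0):
    """Random GRU parameters for the update (z), reset (r) and candidate (h) paths."""
    rng = np.random.default_rng(seed)
    p = {}
    for gate in "zrh":
        p["W" + gate] = rng.normal(scale=0.5, size=(n_hidden, n_in))      # input weights
        p["U" + gate] = rng.normal(scale=0.5, size=(n_hidden, n_hidden))  # recurrent weights
        p["b" + gate] = np.zeros(n_hidden)                                # biases
    return p

def gru_step(x_t, h_prev, p):
    """One GRU update: returns h_t given the input x_t and previous state h_prev."""
    z = sigmoid(p["Wz"] @ x_t + p["Uz"] @ h_prev + p["bz"])   # update gate
    r = sigmoid(p["Wr"] @ x_t + p["Ur"] @ h_prev + p["br"])   # reset gate
    # The reset gate scales how much history enters the candidate state.
    h_cand = np.tanh(p["Wh"] @ x_t + p["Uh"] @ (r * h_prev) + p["bh"])
    # z -> 0 keeps the previous state, z -> 1 adopts the candidate.
    return (1.0 - z) * h_prev + z * h_cand

params = init_params(n_in=3, n_hidden=4)
x_t = np.array([0.2, -0.1, 0.5])
h_prev = np.array([0.1, 0.0, -0.3, 0.4])
h_t = gru_step(x_t, h_prev, params)
```

Forcing the update-gate bias to a large negative value drives $z_t$ towards 0 and the step returns the previous state almost unchanged, which is a quick way to check that the interpolation is wired as described.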

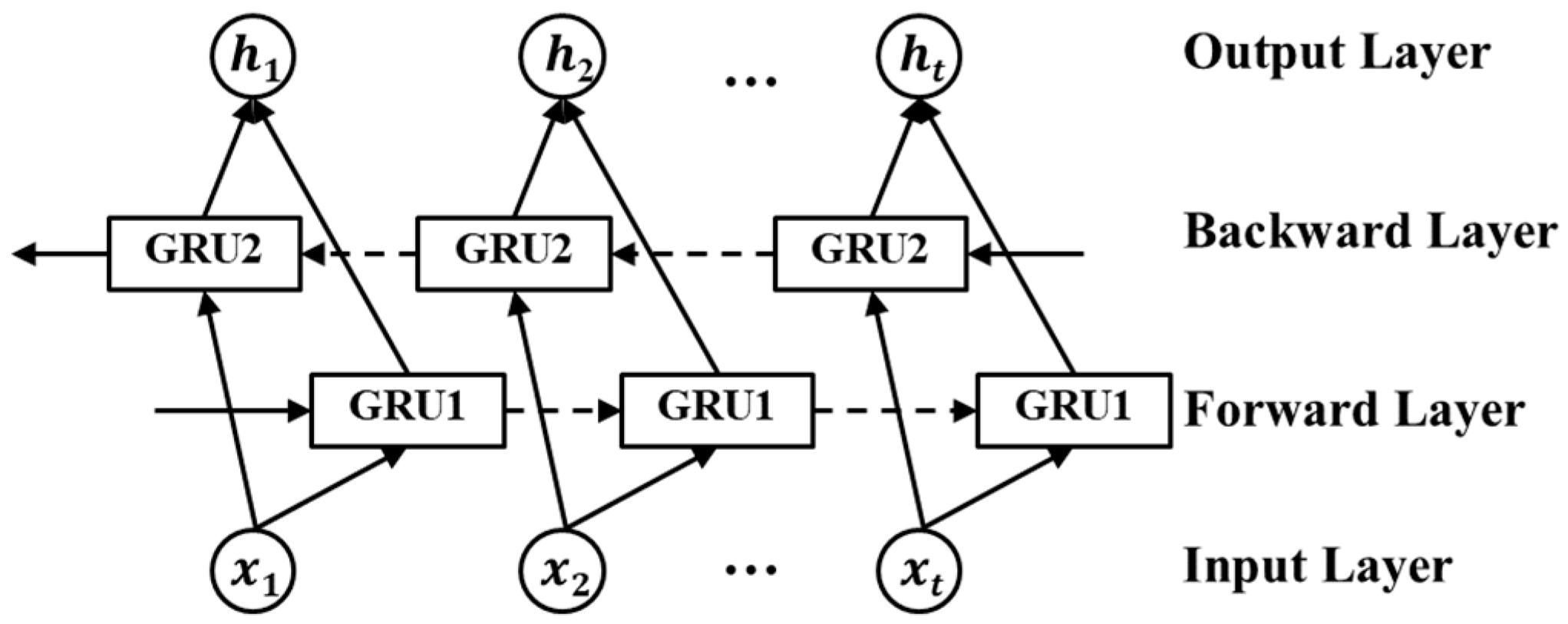

The BiGRU extends the GRU’s representational power through dual-directional sequence processing. By jointly analyzing forward (past-to-future) and reverse (future-to-past) contexts, the BiGRU captures comprehensive temporal patterns, demonstrating superior performance in sequential modeling tasks. As shown in Figure 3, the BiGRU framework integrates two GRU layers. The architecture implements bidirectional temporal processing through two complementary units: the forward GRU extracts features by propagating hidden states chronologically from timestep $t = 1$ to $t = T$, capturing historical dependencies, while the backward GRU operates inversely, propagating from $t = T$ to $t = 1$ to model future-influenced patterns. Hidden state outputs from both directions are concatenated, enabling a synergistic integration of past and future contextual information for enhanced sequential representation learning.

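At each time step $t$, the forward hidden state $\overrightarrow{h}_t$ and backward hidden state $\overleftarrow{h}_t$ are computed independently as follows:

$$\overrightarrow{h}_t = \mathrm{GRU}\left(x_t, \overrightarrow{h}_{t-1}\right), \qquad \overleftarrow{h}_t = \mathrm{GRU}\left(x_t, \overleftarrow{h}_{t+1}\right)$$

The final output $y_t$ concatenates both states as:

$$y_t = \left[\overrightarrow{h}_t ; \overleftarrow{h}_t\right]$$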

This bidirectional architecture enables the synergistic learning of historical influences and future trends, providing a holistic representation of time-series dynamics.
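The bidirectional pass can be sketched by running one GRU chronologically, a second GRU in reverse, and concatenating the two hidden states at every time step. The sketch below is self-contained and uses the standard GRU update. All parameter names, sizes, and random inputs are illustrative assumptions rather than the configuration used in the study.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def init_params(n_in, n_hidden, seed):
    rng = np.random.default_rng(seed)
    p = {}
    for gate in "zrh":
        p["W" + gate] = rng.normal(scale=0.5, size=(n_hidden, n_in))
        p["U" + gate] = rng.normal(scale=0.5, size=(n_hidden, n_hidden))
        p["b" + gate] = np.zeros(n_hidden)
    return p

def gru_step(x_t, h_prev, p):
    z = sigmoid(p["Wz"] @ x_t + p["Uz"] @ h_prev + p["bz"])
    r = sigmoid(p["Wr"] @ x_t + p["Ur"] @ h_prev + p["br"])
    h_cand = np.tanh(p["Wh"] @ x_t + p["Uh"] @ (r * h_prev) + p["bh"])
    return (1.0 - z) * h_prev + z * h_cand

def run_gru(xs, p, reverse=False):
    """Return the hidden state at every time step, stored in chronological order."""
    n_steps, n_hidden = len(xs), p["bz"].shape[0]
    order = range(n_steps - 1, -1, -1) if reverse else range(n_steps)
    h = np.zeros(n_hidden)
    states = np.zeros((n_steps, n_hidden))
    for t in order:
        h = gru_step(xs[t], h, p)
        states[t] = h
    return states

def bigru(xs, p_fwd, p_bwd):
    """Concatenate forward and backward hidden states at each time step."""
    forward = run_gru(xs, p_fwd)                  # t = 1 .. T
    backward = run_gru(xs, p_bwd, reverse=True)   # t = T .. 1
    return np.concatenate([forward, backward], axis=1)

rng = np.random.default_rng(1)
xs = rng.normal(size=(5, 3))                      # one window: 5 steps, 3 features
p_fwd = init_params(3, 4, seed=10)
p_bwd = init_params(3, 4, seed=20)
out = bigru(xs, p_fwd, p_bwd)
```

When the input is a sliding window of past observations, the "future" seen by the backward pass consists of later steps inside that window, not measurements after the forecast origin. In the sketch, perturbing the last step of `xs` changes the backward half of the first output row but leaves its forward half untouched.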

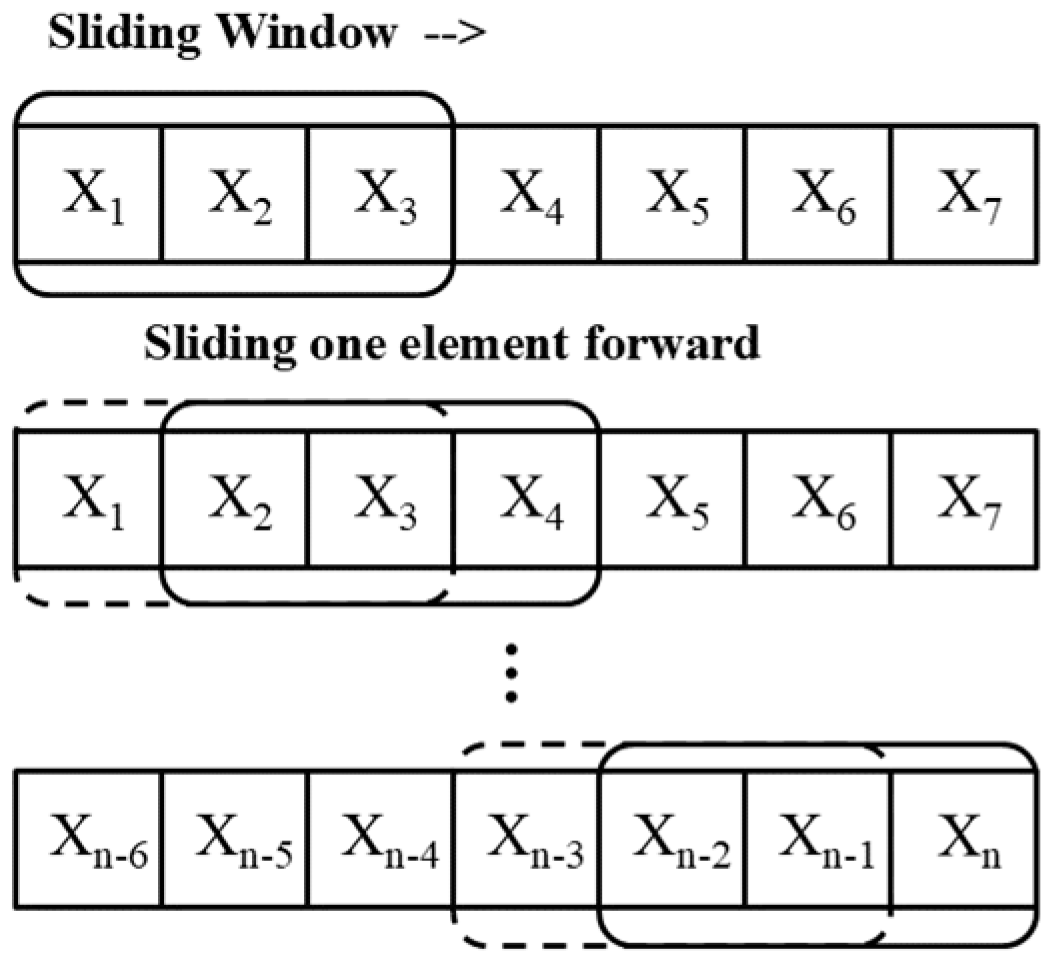

2.3. Rolling Window Feature Engineering

To address delayed hydrological effects in dam monitoring, we employ rolling window aggregation to inject temporally shifted context into the HTT framework.

$W_\tau = [t - \tau, t - 1]$ defines a backward-looking window of $\tau$ days. The method computes six statistical descriptors over $W_\tau$ as:

$$s_t = \left[\mu(W_\tau),\ \operatorname{med}(W_\tau),\ \sigma(W_\tau),\ \max(W_\tau),\ \min(W_\tau),\ \mathrm{IQR}(W_\tau)\right]$$

where $\mu$ denotes the mean, $\operatorname{med}$ denotes the median, $\sigma$ denotes the standard deviation, $\max$ denotes the maximum, $\min$ denotes the minimum, and $\mathrm{IQR}$ denotes the interquartile range. As diagrammed in Figure 4, for $\tau = 3$, these features derive from the interval $[t - 3, t - 1]$, creating a 72 h contextual prior. The engineered features $s_t$ are concatenated with raw inputs $x_t$ as:

$$\tilde{x}_t = x_t \oplus s_t$$

where $\oplus$ denotes the feature concatenation. The enriched input $\tilde{x}_t$ feeds into the BiGRU layer, enabling a simultaneous learning of instantaneous signals and historical trends. Explicit feature engineering enhances interpretability by quantifying how statistical properties of past observations influence current predictions.
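The rolling-window descriptors can be sketched in NumPy as follows. The sketch assumes daily sampling, a window that excludes the current day, the population standard deviation (`ddof=0`), and NumPy's default linear interpolation for the quartiles. The source does not specify these conventions, and the function names are illustrative.

```python
import numpy as np

def rolling_descriptors(series, tau):
    """Six statistics over the look-back window [t - tau, t - 1] for every t.

    Returns an array of shape (n_steps, 6) ordered as
    mean, median, std, max, min, IQR. Rows with t < tau are NaN
    because no complete window exists yet.
    """
    series = np.asarray(series, dtype=float)
    out = np.full((len(series), 6), np.nan)
    for t in range(tau, len(series)):
        w = series[t - tau:t]                      # excludes the value at t itself
        q75, q25 = np.percentile(w, [75, 25])
        out[t] = [w.mean(), np.median(w), w.std(ddof=0),
                  w.max(), w.min(), q75 - q25]
    return out

def enrich_inputs(raw_inputs, series, tau):
    """Concatenate raw inputs x_t with the window descriptors s_t."""
    raw_inputs = np.asarray(raw_inputs, dtype=float)
    stats = rolling_descriptors(series, tau)
    # Drop the first tau rows, which have no complete look-back window.
    return np.hstack([raw_inputs[tau:], stats[tau:]])

displacement = np.array([1.0, 2.0, 4.0, 8.0, 16.0])     # toy values
raw = np.arange(10, dtype=float).reshape(5, 2)          # 2 raw features per day
stats = rolling_descriptors(displacement, tau=3)
enriched = enrich_inputs(raw, displacement, tau=3)
```

Excluding the current day from the window keeps the descriptors strictly historical, so a lagged displacement statistic never leaks the value being predicted.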

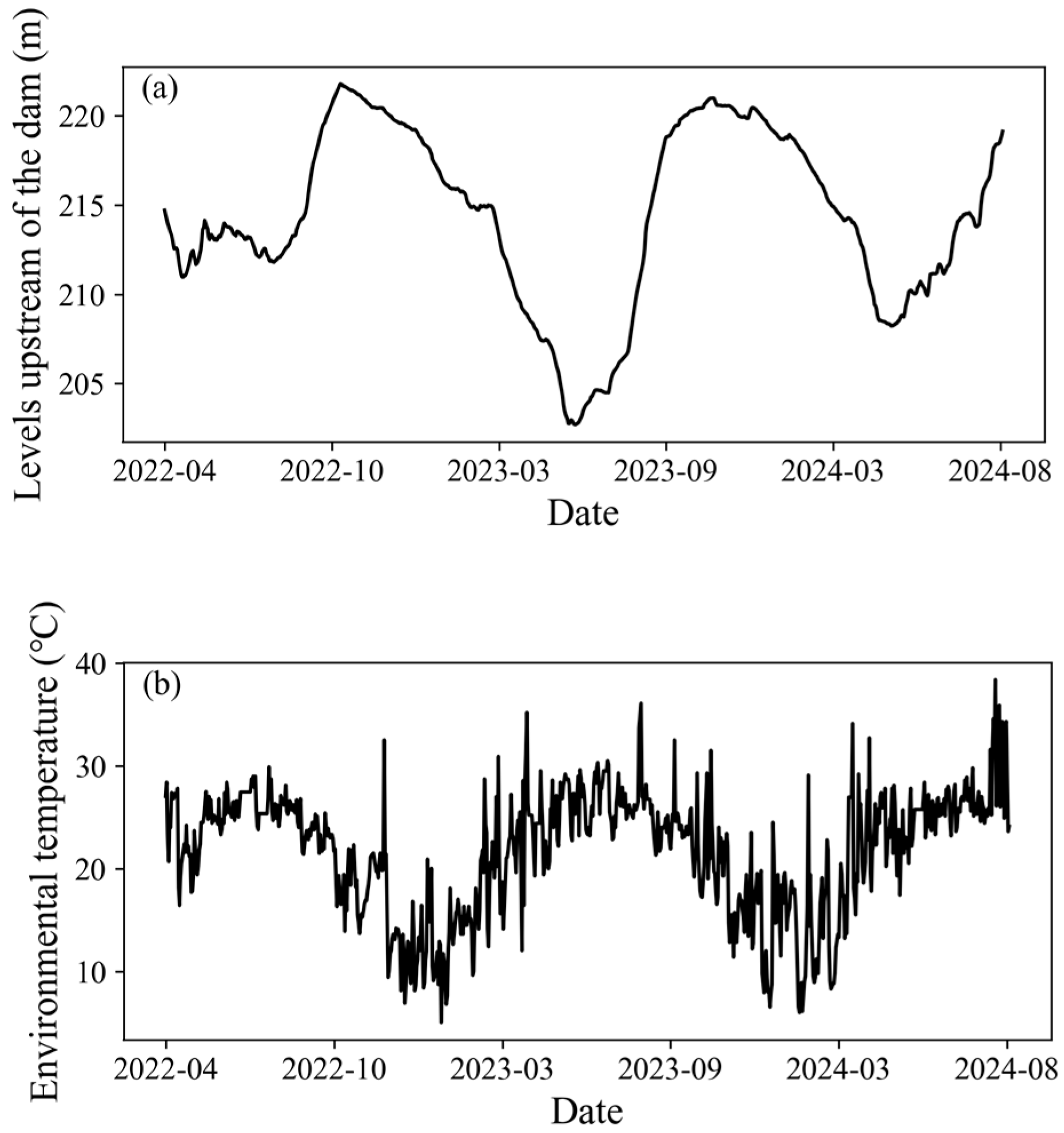

4. Results

4.1. Experimental Setup

To ensure the transparency and reproducibility of our research, it is essential to document the precise configuration of the experimental environment. Accordingly, all pertinent details regarding the hardware platform, software dependencies, and the final model hyperparameters employed in this study are systematically summarized in Table 4. This information includes the specifications of the computing hardware, the versions of key libraries, and the optimal parameter values determined through the tuning processes described in the preceding sections.

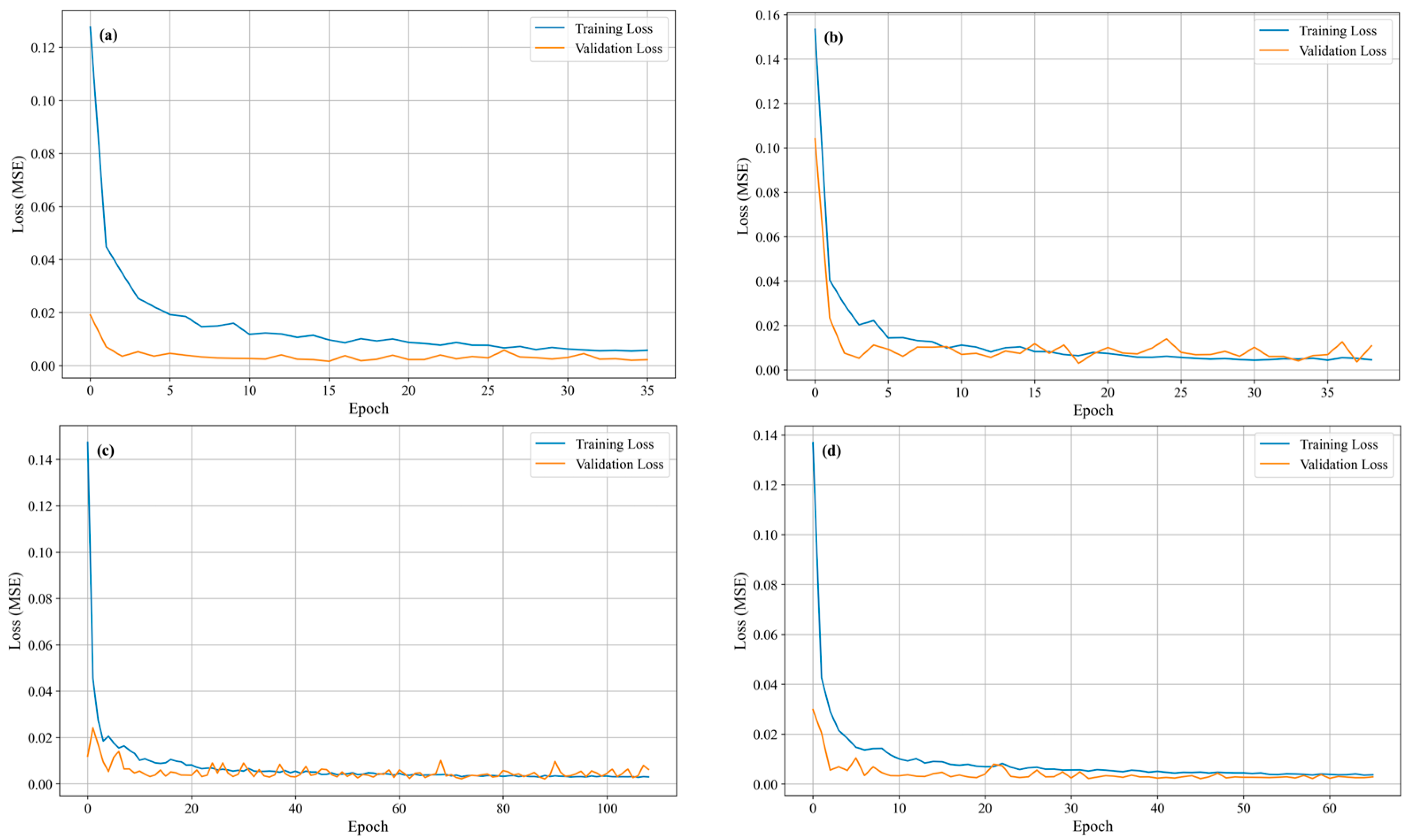

4.2. Model Training and Convergence Analysis

To analyze the convergence and stability of the model during the training phase, the learning curves for the optimized BiGRU model at each of the four monitoring points are presented in Figure 9. A consistent and desirable training pattern is observed across all four subplots (a–d).

In each case, both the training loss and validation loss decrease sharply during the initial epoch, indicating that the model learns the primary data patterns rapidly. Subsequently, the curves flatten and converge to a stable, low value. Crucially, the validation loss curve closely tracks the training loss curve throughout the entire process, without any significant divergence. This proximity between the two curves provides strong evidence that the models achieved effective generalization without overfitting the training data. Furthermore, the varying number of total epochs in each subplot demonstrates the effective functioning of the Early Stopping mechanism, which terminated the training process optimally for each case once the validation loss ceased to improve.
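The early-stopping behaviour described above can be sketched as a patience rule applied to a validation-loss history. The patience and tolerance values, the function name, and the loss sequence below are illustrative assumptions; the configuration actually used in the study is not given in this section.

```python
def early_stopping_epoch(val_losses, patience=10, min_delta=0.0):
    """Return (stop_epoch, best_epoch) for a validation-loss history.

    Training stops once the loss has failed to improve on the best value
    by more than min_delta for `patience` consecutive epochs.
    Epoch indices are zero-based.
    """
    best_loss = float("inf")
    best_epoch = -1
    waited = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch, waited = loss, epoch, 0   # new best: reset counter
        else:
            waited += 1
            if waited >= patience:
                return epoch, best_epoch                     # patience exhausted
    return len(val_losses) - 1, best_epoch                   # ran to completion

# Toy history: the loss stalls after epoch 2, so training stops at epoch 5.
history = [1.0, 0.5, 0.4, 0.41, 0.42, 0.43, 0.3]
stop_epoch, best_epoch = early_stopping_epoch(history, patience=3)
```

Because the stopping epoch depends on when each validation curve stalls, the rule naturally yields a different number of epochs for each monitoring point. In practice the weights from `best_epoch` are usually restored rather than those from the final epoch.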

4.3. Comparative Analysis of Model Performance

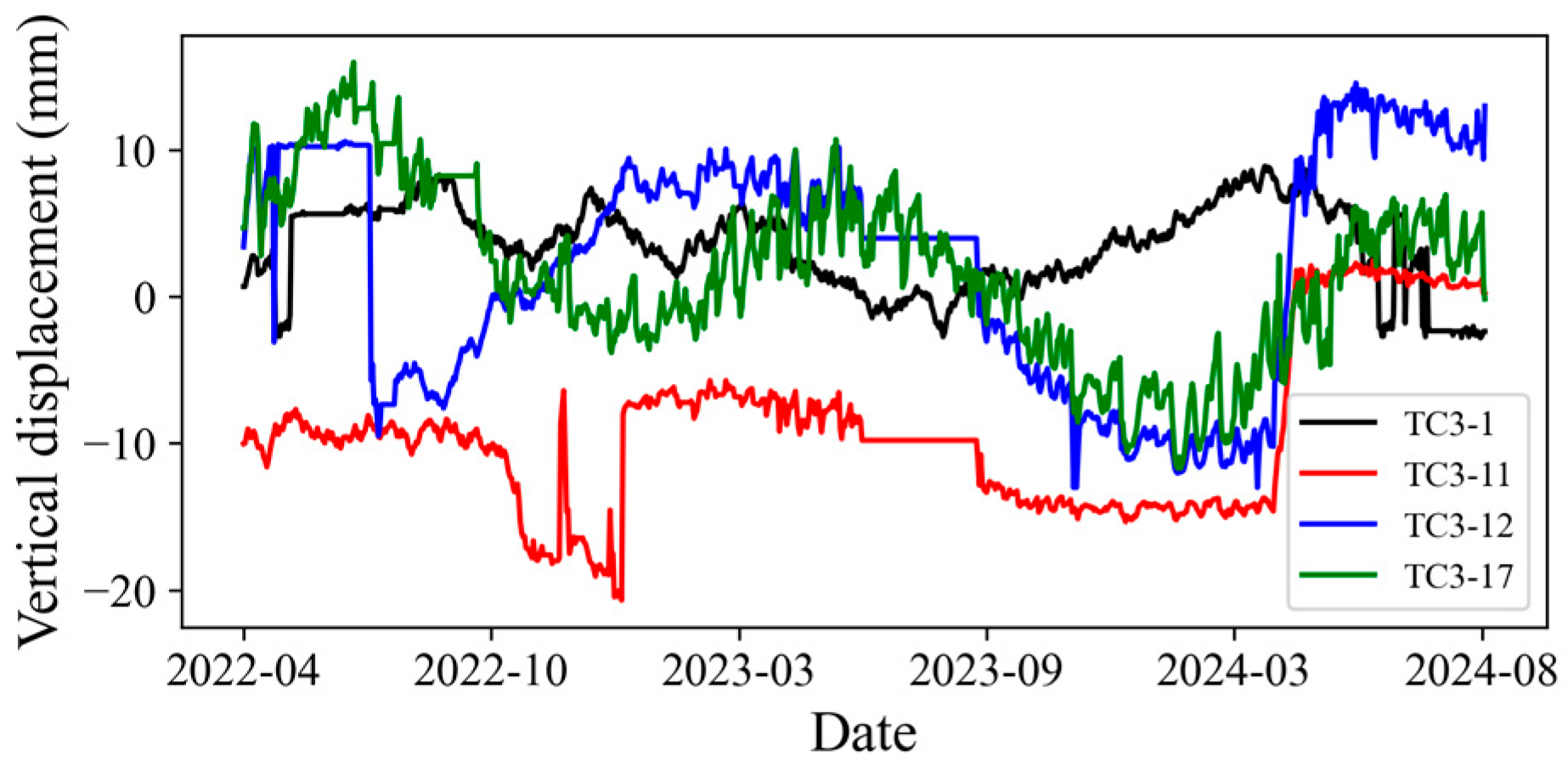

To benchmark baseline performance, four time-series forecasting architectures (LSTM, BiLSTM, GRU, and BiGRU) were trained on raw measured displacement data using a three-step sliding window with default hyperparameters. Each model’s generalization capability was evaluated through long-term prediction accuracy and standard evaluation metrics. As the dam’s service duration extends, the operational monitoring datasets grow progressively longer.

The quantitative evaluation of the proposed method and baseline models for long-term dam displacement prediction is summarized in Table 5. The BiGRU algorithm consistently outperformed other architectures across all four monitoring points, demonstrating an enhanced generalization capacity and robust stability. This superiority stems from its dual-directional memory propagation mechanism, which adaptively weights historical context and future-inferred patterns, a critical advantage when processing sparse or asynchronous monitoring data. When using the correlation coefficients as the evaluation metric, the BiGRU exhibited statistically significant improvements over LSTM, BiLSTM, and GRU, with performance gains closely aligned with the complexity of each baseline’s temporal dependency modeling capability. These results demonstrate that the BiGRU is capable of extracting meaningful patterns from four distinct and weakly correlated datasets, validating its effectiveness in disentangling heterogeneous spatiotemporal couplings within dam systems.

4.4. Visual Analysis of Prediction Results

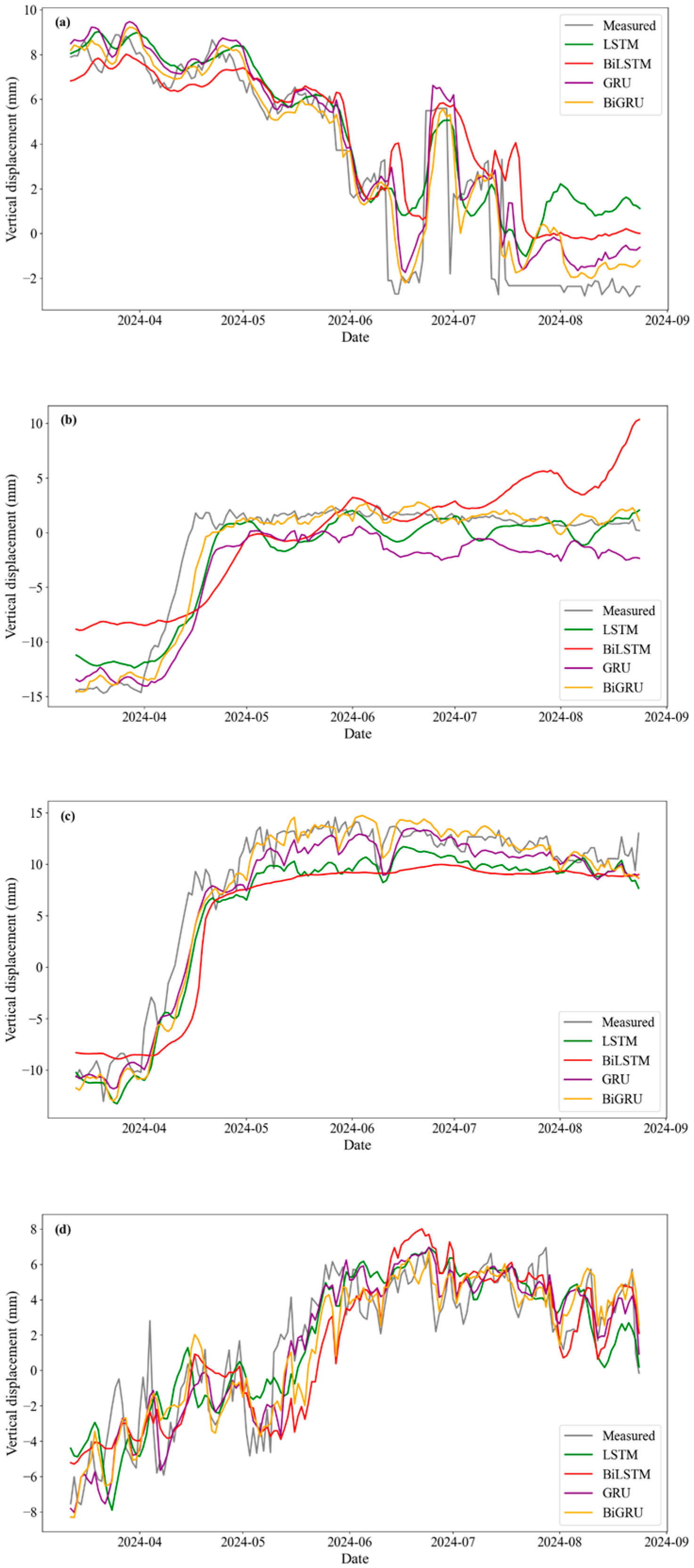

Figure 10 provides a comparative visualization of the long-term prediction performance between the proposed method and baseline models, validated against monitoring data collected from April to August 2024. The BiGRU model shows the strongest agreement with observational trends during the local temporal interval (April–July), achieving the lowest overall prediction error among all models. Its trajectory closely follows the phase-shifted deformation pulses induced by delayed reservoir seepage pressures—an effect that unidirectional models fail to capture effectively. All methods exhibit residual inaccuracies during the terminal prediction phase (August 2024), with errors systematically increasing compared with earlier stages. This divergence becomes more pronounced near hydrological transition periods, where conventional models struggle to reconcile rapid water level fluctuations with slow-moving creep deformations. The consistent performance degradation across models highlights the inherent challenges in maintaining predictive stability over extended horizons, underscoring the necessity of memory-gated architectures for effective error attenuation.

The observed difference in the magnitude of displacement fluctuations between the monitoring points can be attributed to the distinct structural characteristics and boundary conditions at their respective locations on the dam.

Points TC3-1 and TC3-17 are situated at the dam abutments, where the concrete structure is anchored into the bedrock of the valley sides. As shown in the profile diagram, these sections are thinner and have a smaller volume compared to the central monoliths. This lower thermal mass makes them more susceptible to ambient temperature variations, leading to more pronounced and frequent cycles of expansion and contraction. This heightened sensitivity to thermal effects is the primary reason for the larger displacement fluctuations observed at the dam’s flanks.

In contrast, points TC3-11 and TC3-12 are located on the crest of the massive central dam monoliths, directly above the powerhouse and spillway block. Due to their enormous volume, these sections possess significant thermal inertia, meaning their temperature changes much more slowly and is less influenced by short-term air temperature swings. Consequently, their displacement behavior is more stable and is predominantly governed by the more slowly varying hydrostatic pressure from the reservoir. Therefore, while their absolute displacement may be substantial, the fluctuations around their mean positions are less significant than those at the abutments.

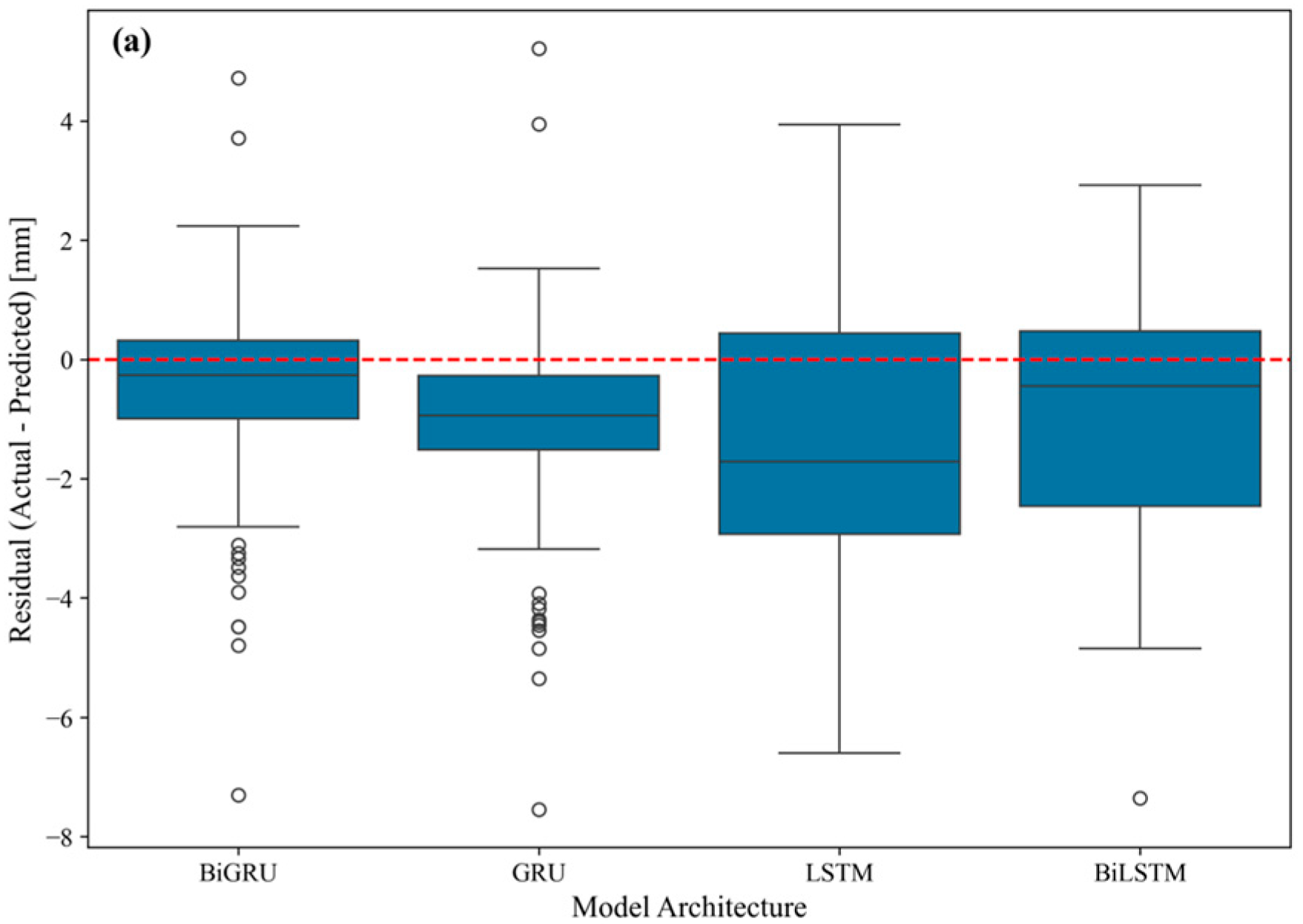

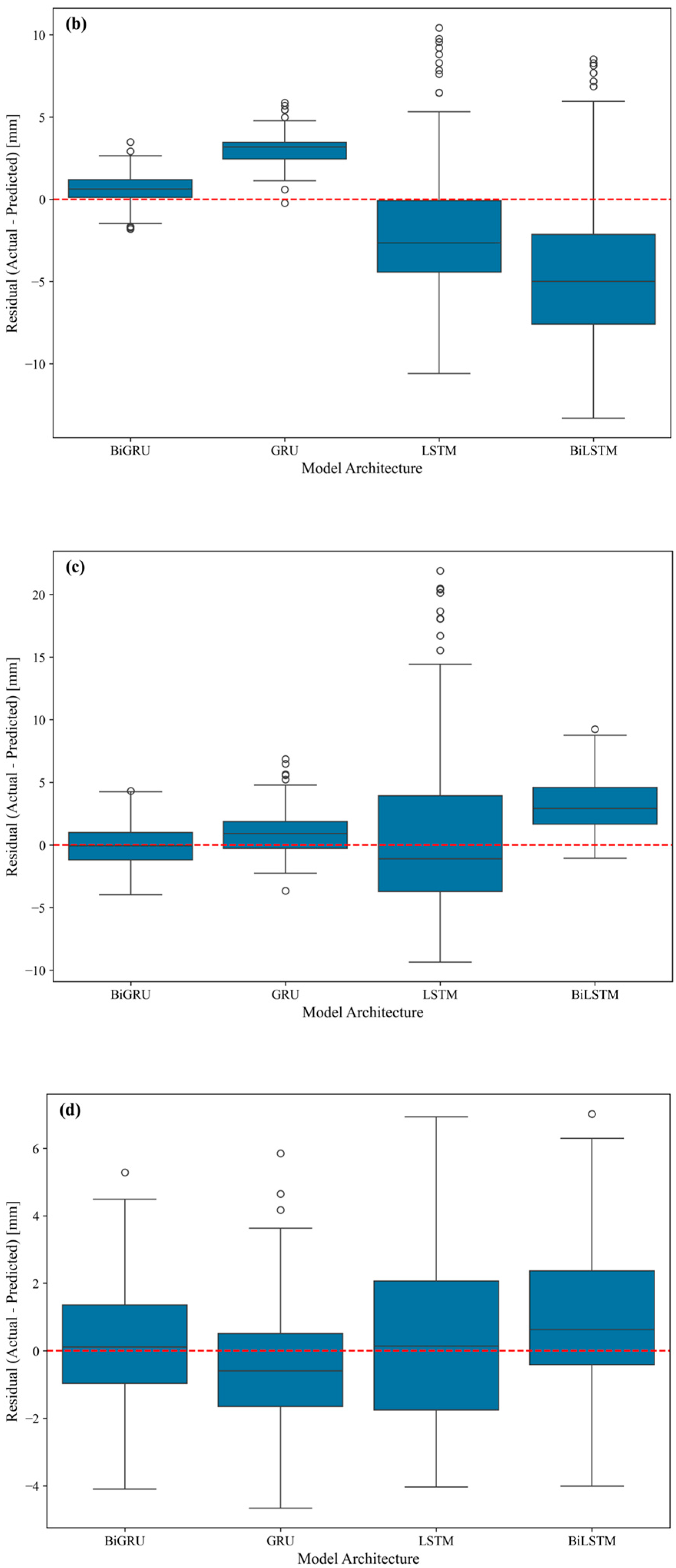

The proposed BiGRU-based predictive framework demonstrates an exceptional generalization capability and interpretability, achieving a superior average performance across all four monitoring points with an $R^2$ of 0.89, an MAE of 1.17 mm, and an RMSE of 1.70 mm. This performance represents a significant leap over conventional architectures; for instance, compared to the standard GRU model, the strongest of the benchmarks, our proposed model delivered a 20.2% reduction in RMSE and a 30.4% reduction in MAE. This quantitative superiority is visually substantiated by the analysis presented in Figure 11, where the BiGRU model’s residual box plots consistently exhibit a more compact and zero-centered error distribution. While the standard GRU showed competitive performance at a single point, the BiGRU model’s consistent high accuracy across all locations underscores its superior stability and robustness. These findings indicate that the synergistic integration of the bidirectional architecture and sliding window optimization enables the model to effectively extract latent spatiotemporal patterns, making it a promising solution for long-term displacement forecasting in concrete gravity dams.
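For reference, the three reported metrics and the relative-reduction figures can be computed as in the following sketch. The metric definitions are the standard ones, with $R^2$ taken as the coefficient of determination. The toy arrays are illustrative, and the back-calculated GRU baseline is only an implied value, valid under the assumption that the reported percentages were computed on the four-point averages.

```python
import numpy as np

def rmse(y_true, y_pred):
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))

def mae(y_true, y_pred):
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.mean(np.abs(y_true - y_pred)))

def r2(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return float(1.0 - ss_res / ss_tot)

def relative_reduction(baseline, proposed):
    """Percentage by which `proposed` improves on `baseline` (lower is better)."""
    return 100.0 * (baseline - proposed) / baseline

def implied_baseline(proposed, reduction_pct):
    """Baseline error implied by a reported percentage reduction."""
    return proposed / (1.0 - reduction_pct / 100.0)

# Toy check of the metric definitions (values are not from the study).
y_obs = np.array([1.0, 2.0, 3.0, 4.0])
y_hat = np.array([1.1, 1.9, 3.2, 3.8])
toy_scores = (rmse(y_obs, y_hat), mae(y_obs, y_hat), r2(y_obs, y_hat))

# Implied GRU averages, ASSUMING the reductions refer to the averaged metrics.
gru_rmse_implied = implied_baseline(1.70, 20.2)   # mm
gru_mae_implied = implied_baseline(1.17, 30.4)    # mm
```

If the percentages were instead averaged point by point, the implied baselines would differ, so the per-point values in Table 5 remain the authoritative reference.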

5. Conclusions

In this study, we developed and validated an enhanced predictive framework for dam displacement by integrating a Bidirectional GRU (BiGRU) with optimized time-series feature engineering. The primary contributions and findings of this work are summarized as follows:

- (1) Novel hysteresis-aware framework: A predictive model was established that explicitly accounts for the hydraulic hysteresis effects in dam behavior. This was achieved by synergizing a BiGRU architecture, which captures the temporal dependencies from both past and future contexts, with a sliding window mechanism for dynamic feature extraction.

- (2) Systematic model optimization: The model’s robustness and configuration were not arbitrary but were determined through a rigorous, two-stage optimization process. A preliminary comparative experiment identified the optimal rolling window size ($\tau = 3$) for feature engineering, followed by a systematic hyperparameter tuning process to ascertain the ideal network architecture, learning rate, and regularization parameters.

- (3) Superior quantitative performance: The optimized BiGRU model demonstrated superior predictive accuracy and generalization ability. Across all four monitoring points, it achieved an average $R^2$ of 0.89 and an RMSE of 1.70 mm. This represents a substantial improvement over the strongest benchmark model (standard GRU), with a 20.2% reduction in RMSE and a 30.4% reduction in MAE, providing concrete evidence of the framework’s value.

- (4) Enhanced temporal feature representation: The success of the model is attributed to the BiGRU’s dual-path processing (forward and backward), which distills a more comprehensive feature representation from complex multivariate time-series data compared to unidirectional models. This establishes a new paradigm for enhancing dam safety assessments through bidirectional temporal learning.

Despite the model’s strong performance, certain limitations highlight avenues for future research. First, the framework’s sensitivity to displacement anomalies induced by operational fluctuations or sensor drifts warrants the development of integrated data-cleaning and anomaly detection protocols. Second, as a dam deforms as an integrated system, future work should prioritize multi-point data fusion strategies. Investigating cross-correlation analysis and employing advanced methodologies, like graph neural networks, could better quantify the spatiotemporal interdependencies among monitoring points, which is essential for modeling the holistic behavior of the entire structure. Finally, the validation in this study was limited to monitoring points on the dam crest due to limited data availability; future work should aim to verify the model’s performance across the entire dam profile by incorporating data from its middle and base sections. Successfully addressing these limitations will lead to more robust predictive systems, providing operators with critical tools for proactive maintenance and asset management, and thereby ensuring the long-term sustainability and resilience of vital water infrastructure.