Improved DeepLabV3+ for UAV-Based Highway Lane Line Segmentation

Abstract

1. Introduction

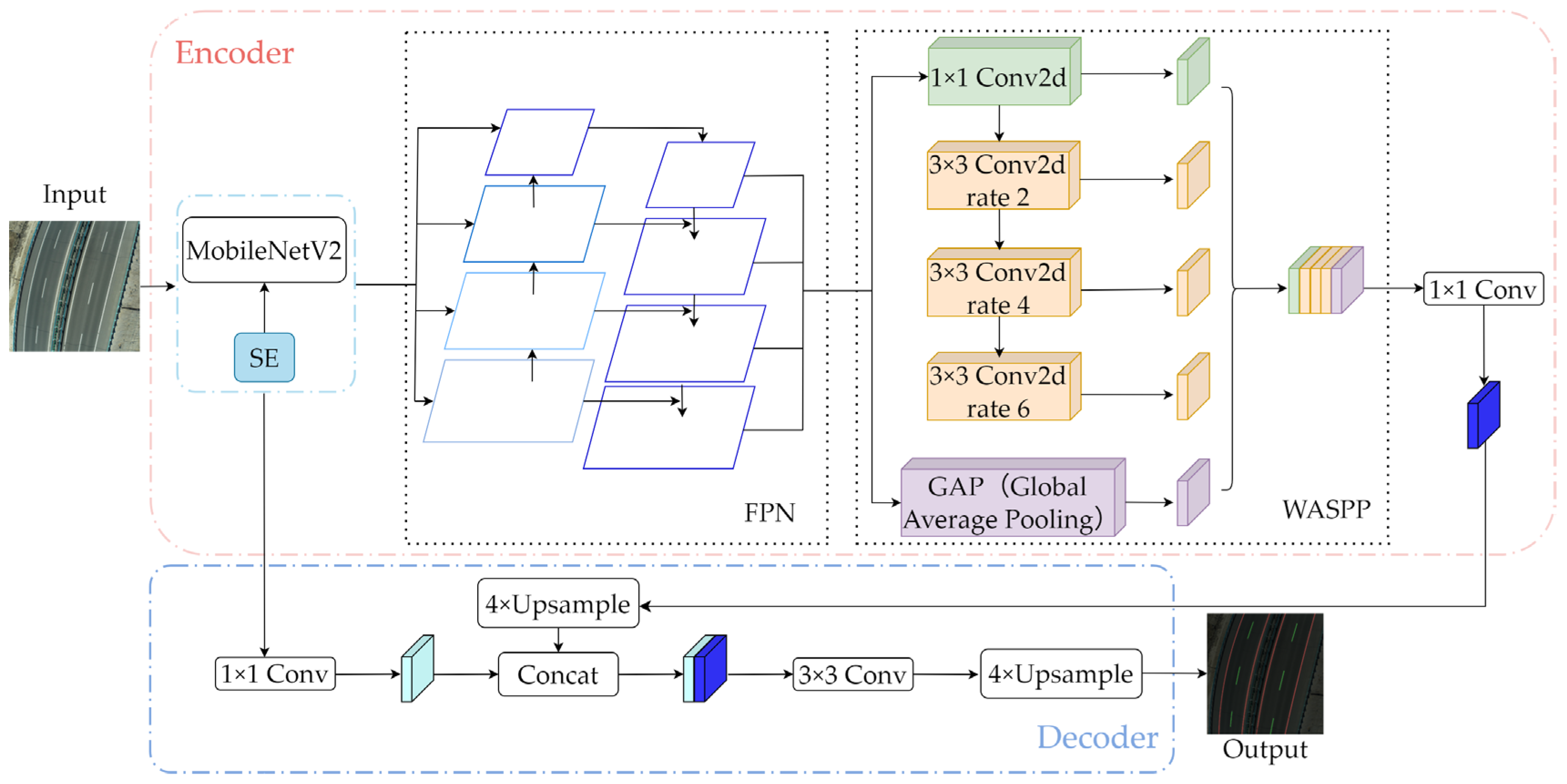

- Lightweight Backbone: MobileNetV2 replaces the original Xception-65 backbone, cutting the model size from 54.71 M to 5.81 M parameters while largely preserving feature extraction ability (MIoU 80.26% vs. 80.11%);

- Edge-Aware Attention: SE (Squeeze-and-Excitation) modules enhance channel-wise feature recalibration, prioritizing lane line edges;

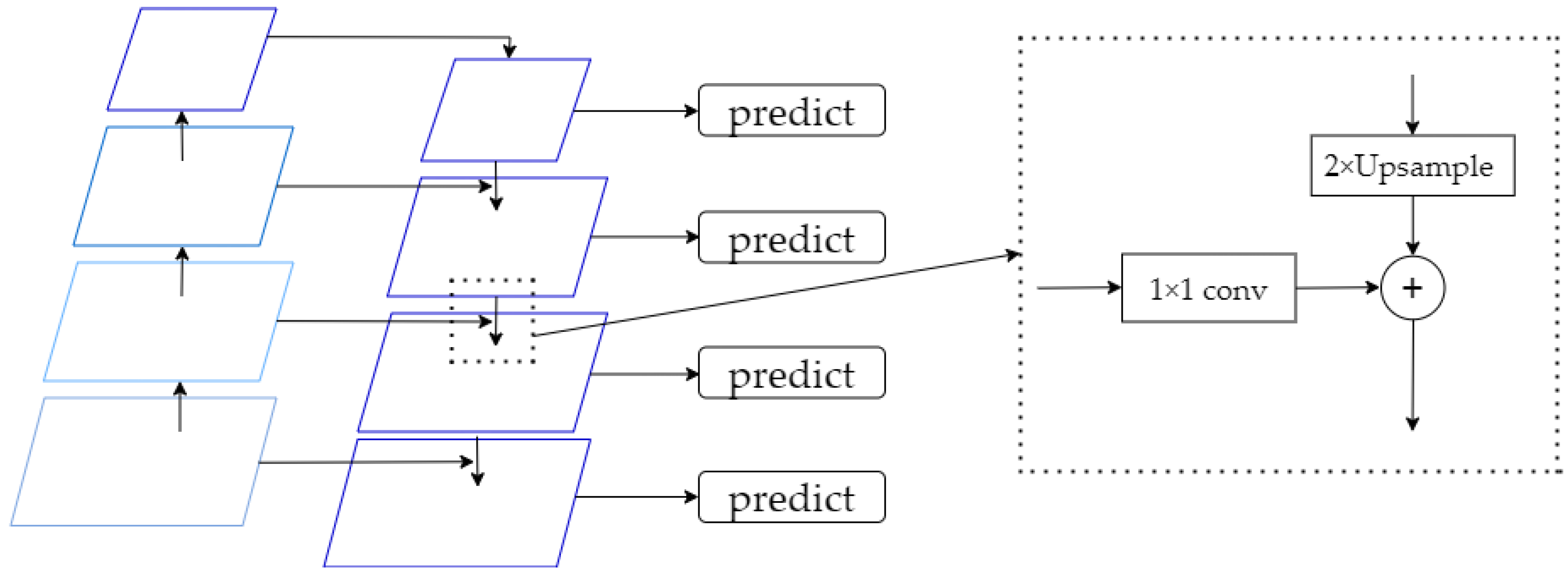

- Multi-Scale Fusion: FPN (Feature Pyramid Network) integrates shallow texture details with deep semantics to improve the detection of dashed lines and occluded regions;

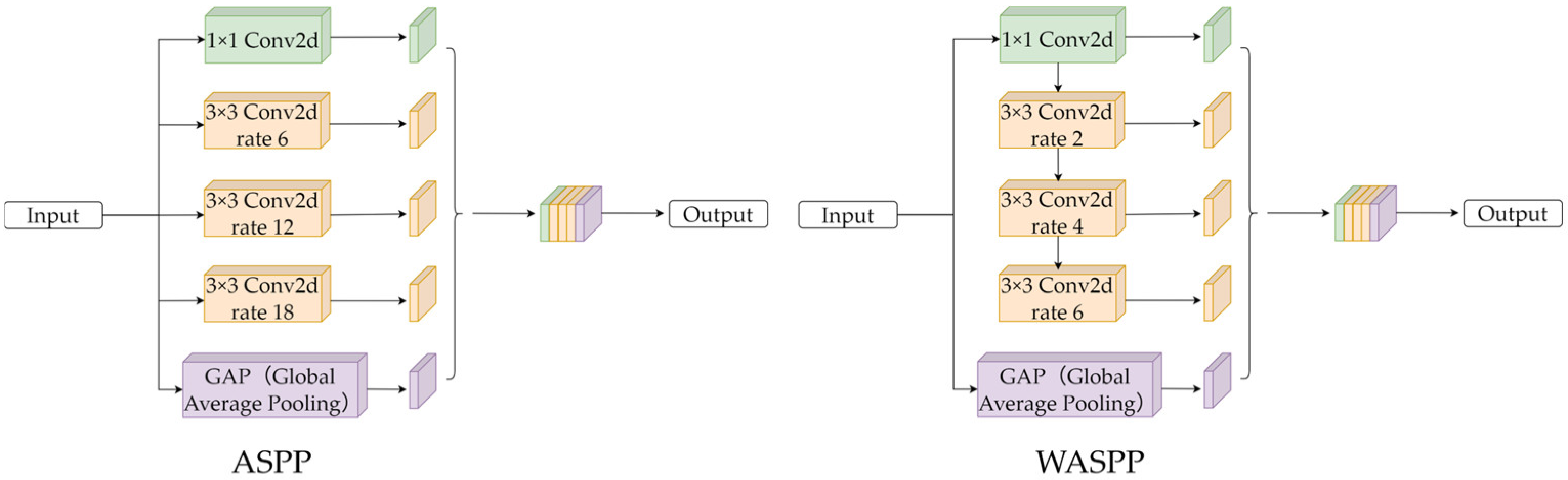

- Adaptive Receptive Fields: The WASPP (Waterfall Atrous Spatial Pyramid Pooling) module cascades atrous convolutions with dilation rates (2, 4, 6) to progressively expand receptive fields, capturing fine-grained lane structures.
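The channel-wise recalibration named in the Edge-Aware Attention item can be illustrated with a minimal, framework-free sketch of a squeeze-and-excitation step. The function name `se_recalibrate`, the toy feature map, and the weight matrices are hypothetical; biases are omitted for brevity, and this is not the authors' implementation.

```python
import math

def se_recalibrate(feature_map, w_reduce, w_expand):
    """Apply a squeeze-and-excitation step to a small feature map.

    feature_map: list of C channels, each an H x W list of floats.
    w_reduce:    (C/r) x C weights of the first fully connected layer.
    w_expand:    C x (C/r) weights of the second fully connected layer.
    All weights here are illustrative; biases are left out.
    """
    # Squeeze: global average pooling gives one scalar per channel.
    squeezed = [
        sum(sum(row) for row in ch) / (len(ch) * len(ch[0]))
        for ch in feature_map
    ]
    # Excitation: FC -> ReLU -> FC -> sigmoid gives one gate per channel.
    hidden = [
        max(0.0, sum(w * s for w, s in zip(row, squeezed)))
        for row in w_reduce
    ]
    gates = [
        1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
        for row in w_expand
    ]
    # Scale: every value in a channel is multiplied by that channel's gate.
    scaled = [
        [[v * g for v in row] for row in ch]
        for ch, g in zip(feature_map, gates)
    ]
    return scaled, gates

# Toy input: two 2 x 2 channels with means 1.0 and 2.0.
fmap = [
    [[1.0, 1.0], [1.0, 1.0]],
    [[0.0, 2.0], [2.0, 4.0]],
]
w_reduce = [[0.5, 0.5]]       # 2 channels -> 1 hidden unit
w_expand = [[1.0], [-1.0]]    # 1 hidden unit -> 2 gates
scaled, gates = se_recalibrate(fmap, w_reduce, w_expand)
print(gates)
```

With these toy weights the first channel is amplified relative to the second. In a trained network the gates are learned, so which channels are emphasized depends on the data.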

2. Materials and Methods

2.1. Data Sources

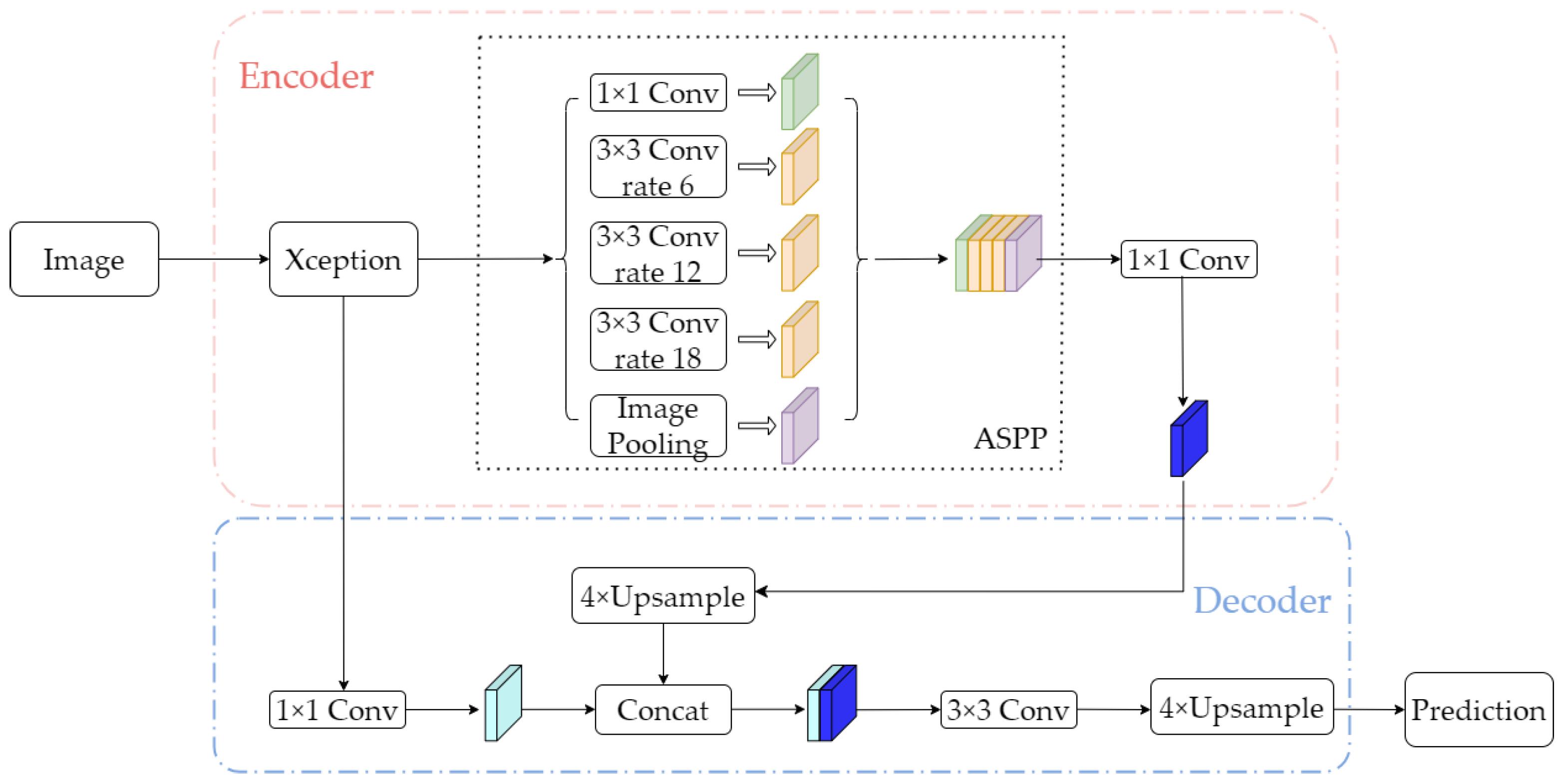

2.2. Traditional DeepLabV3+ Network Model

2.3. Improved DeepLabV3+ Model

2.3.1. Replacement of the Backbone Network

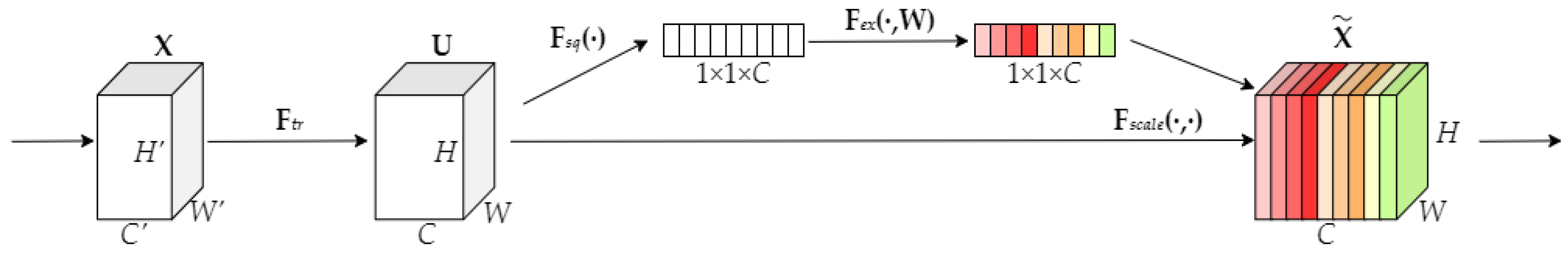

2.3.2. Introduction of the SE Attention Mechanism

2.3.3. Introduction of the FPN

2.3.4. WASPP Module

3. Experimental Results and Analysis

3.1. Experimental Environment

3.2. Model Evaluation Metrics
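The result tables report IoU, MIoU, and F1-score. A minimal sketch of the standard definitions, computed from a pixel-level confusion matrix, is given here. The function names and the toy three-class matrix are illustrative assumptions, and the averaging convention actually used in the paper (for example, whether background is included) is not stated in this excerpt.

```python
def per_class_metrics(confusion):
    """Return IoU and F1 for each class.

    confusion[i][j] is the number of pixels whose true class is i
    and whose predicted class is j.
    """
    n = len(confusion)
    results = []
    for k in range(n):
        tp = confusion[k][k]
        fn = sum(confusion[k]) - tp                         # missed pixels of class k
        fp = sum(confusion[i][k] for i in range(n)) - tp    # pixels wrongly labeled k
        iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        results.append({"iou": iou, "f1": f1})
    return results

def mean_metric(results, key):
    """Unweighted (macro) mean of one metric over all classes."""
    return sum(r[key] for r in results) / len(results)

# Toy confusion matrix for three classes (hypothetical pixel counts).
confusion = [
    [90, 5, 5],
    [10, 80, 10],
    [0, 20, 80],
]
results = per_class_metrics(confusion)
miou = mean_metric(results, "iou")
mean_f1 = mean_metric(results, "f1")
print(f"MIoU = {miou:.4f}, mean F1 = {mean_f1:.4f}")
```

For a single class the two metrics are linked by F1 = 2·IoU / (1 + IoU), so F1 is always at least as large as IoU. This is consistent with the F1-score column sitting above the MIoU column in every results table.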

3.3. Comparative Analysis of Experimental Results

3.3.1. Comparison Experiments of Backbone Network

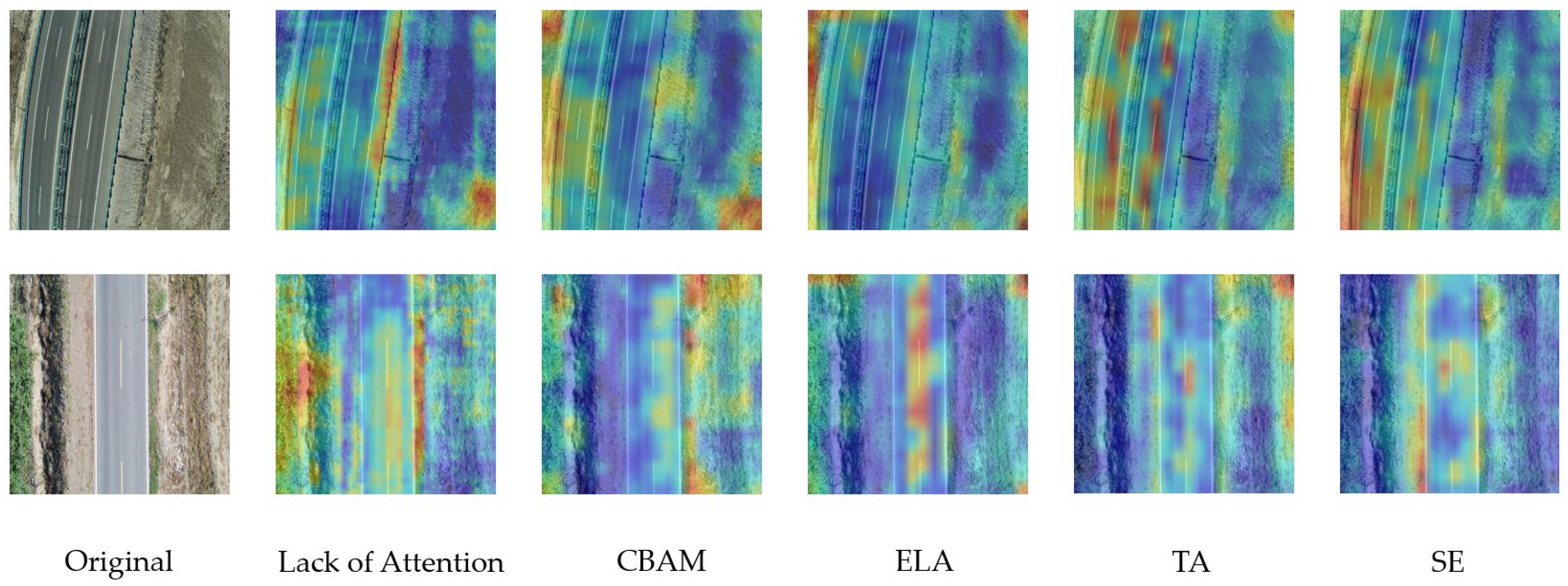

3.3.2. Comparative Experiments on Attentional Mechanisms

3.3.3. Comparative Experiments Between WASPP Module and Other Improved ASPP Modules

3.3.4. Comparative Ablation Experiments of WASPP Modules with Different Dilation Rates for Atrous Convolution

3.3.5. Ablation Experiments with Different Modules

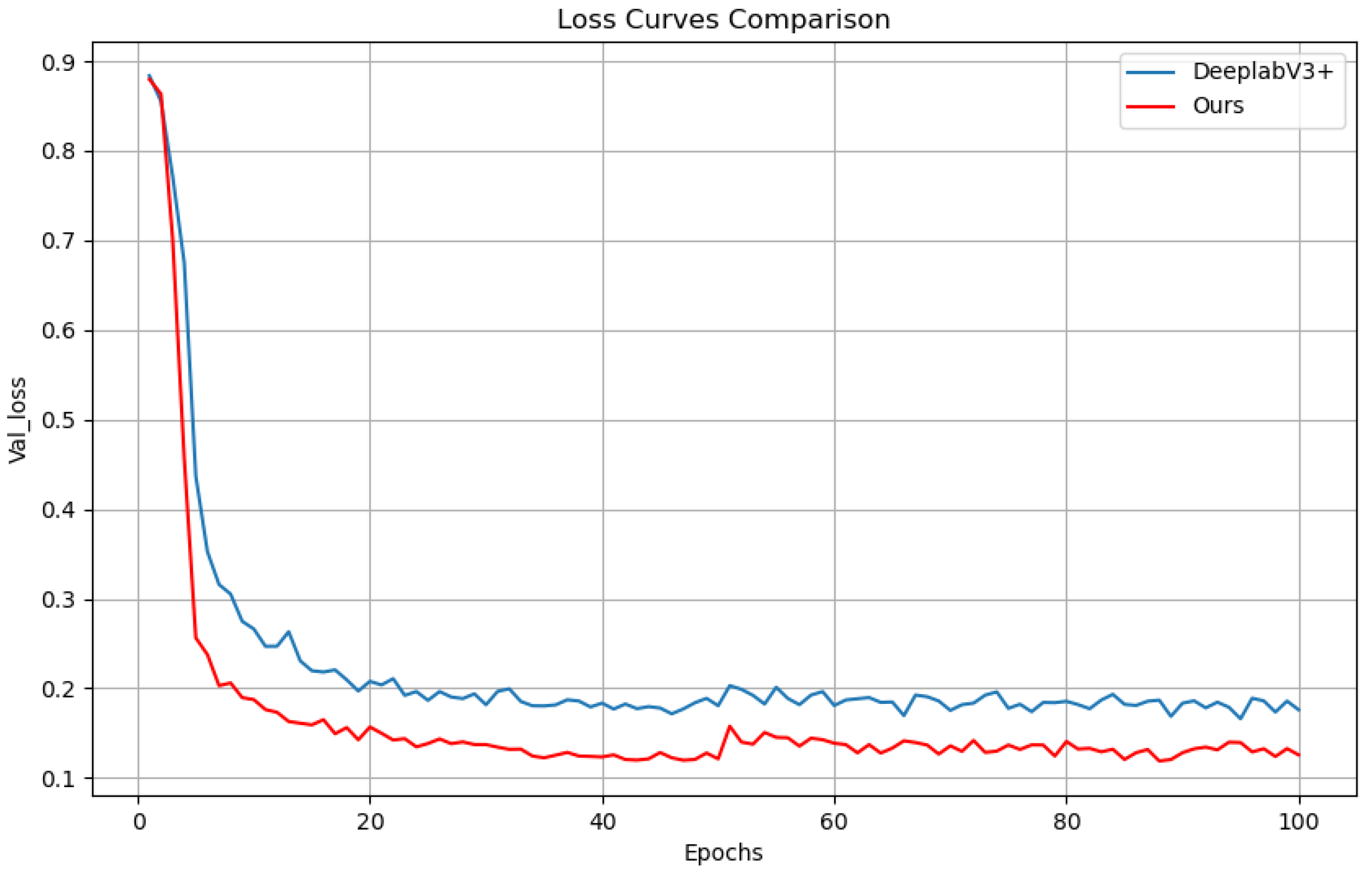

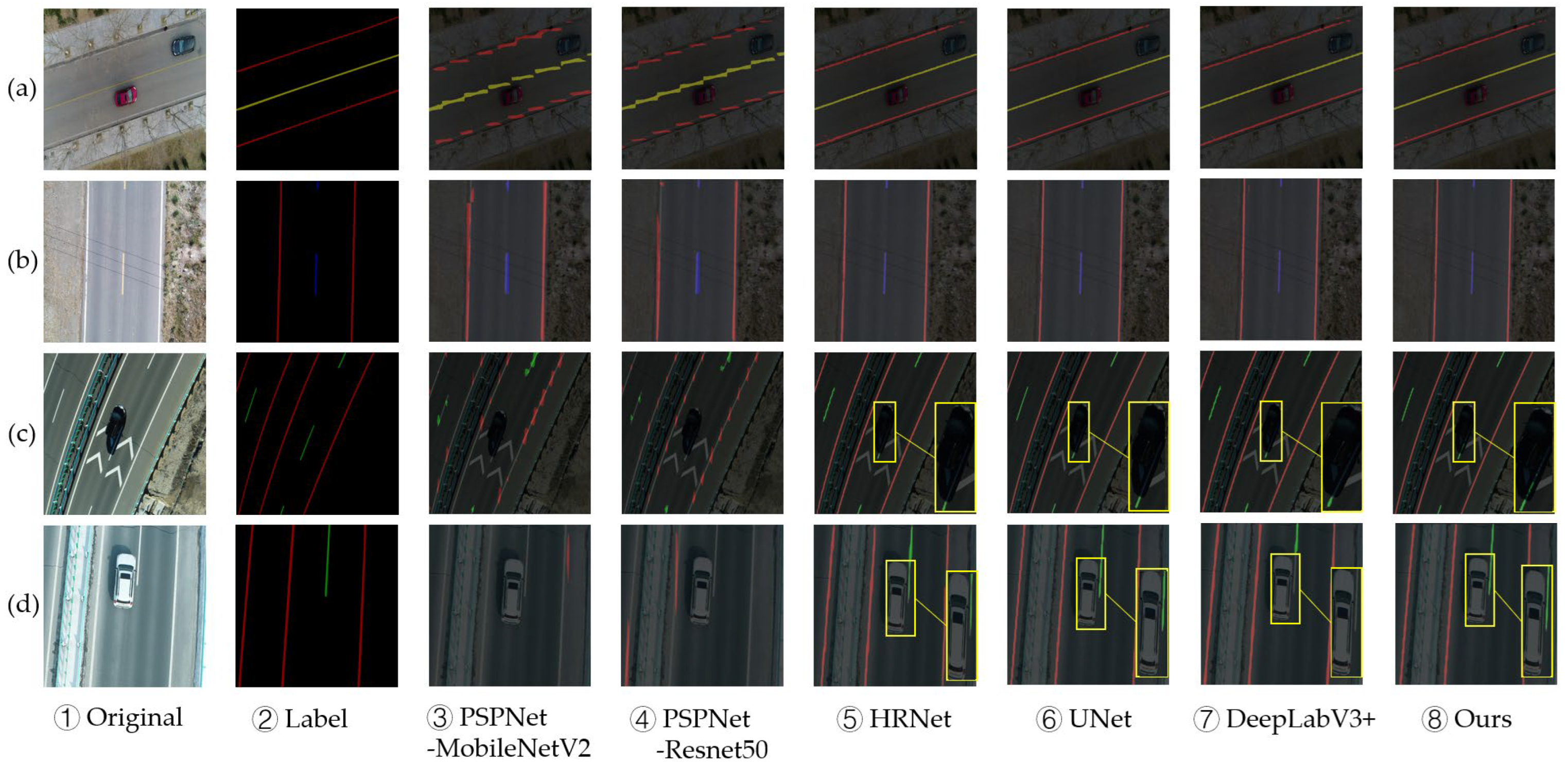

3.3.6. Comparative Experiments on Segmentation Network Models

4. Conclusions and Future Works

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| UAV | Unmanned Aerial Vehicle |
| FPN | Feature Pyramid Network |
| WASPP | Waterfall Atrous Spatial Pyramid Pooling |
| SE | Squeeze-and-Excitation |
| IoU | Intersection over Union |
| MIoU | Mean Intersection over Union |
| Params | Parameters |

References

- Babić, D.; Fiolić, M.; Ferko, M. Road Markings and Signs in Road Safety. Encyclopedia 2022, 2, 1738–1752.

- Narote, S.P.; Bhujbal, P.N.; Narote, A.S.; Dhane, D.M. A review of recent advances in lane detection and departure warning system. Pattern Recognit. 2018, 73, 216–234.

- Guan, Y.; Hu, J.; Wang, R.; Cao, Q.; Xie, F. Research on the Nighttime Visibility of White Pavement Markings. Heliyon 2024, 10, e36533.

- Wang, H.; Liu, Q.; Liu, Z. Vehicle-Based LIDAR for Lane Line Detection. J. Phys. Conf. Ser. 2023, 2617, 012012.

- Wu, J.; Xu, H.; Zheng, J. Automatic background filtering and lane identification with roadside LiDAR data. In Proceedings of the 2017 IEEE 20th International Conference on Intelligent Transportation Systems (ITSC), Yokohama, Japan, 16–19 October 2017; IEEE: Yokohama, Japan, 2017; pp. 1–6.

- Jie, H.; Zuo, X.; Gao, J.; Liu, W.; Hu, J.; Cheng, S. LLFormer: An Efficient and Real-time LiDAR Lane Detection Method Based on Transformer. In Proceedings of the 2023 5th International Conference on Pattern Recognition and Intelligent Systems (PRIS’23), New York, NY, USA, 18–23 July 2023.

- Cheng, Y.-T.; Lin, Y.-C.; Habib, A. Generalized LiDAR Intensity Normalization and Its Positive Impact on Geometric and Learning-Based Lane Marking Detection. Remote Sens. 2022, 14, 4393.

- Zhao, R.; Heng, Y.; Wang, H.; Gao, Y.; Liu, S.; Yao, C.; Chen, J.; Cai, W. Advancements in 3D Lane Detection Using LiDAR Point Clouds: From Data Collection to Model Development. arXiv 2023.

- Rui, R. Lane line detection technology based on machine vision. In Proceedings of the 2022 4th International Conference on Artificial Intelligence and Advanced Manufacturing (AIAM), Hamburg, Germany, 7–9 October 2022; pp. 562–566.

- Wei, Y.; Xu, M. Detection of Lane Line Based on Robert Operator. J. Meas. Eng. 2021, 9, 156–166.

- Duan, J.; Zhang, Y.; Zheng, B. Lane Line Recognition Algorithm Based on Threshold Segmentation and Continuity of Lane Line. In Proceedings of the 2016 2nd IEEE International Conference on Computer and Communications (ICCC), Chengdu, China, 14–17 October 2016; IEEE: Chengdu, China, 2016; pp. 680–684.

- Chen, J.; Ruan, Y.; Chen, Q. A Precise Information Extraction Algorithm for Lane Lines. China Commun. 2018, 15, 210–219.

- Li, D.; Yang, Z.; Nai, W.; Xing, Y.; Chen, Z. A Road Lane Detection Approach Based on Reformer Model. Egypt. Inform. J. 2025, 29, 100625.

- Hao, W. Review on lane detection and related methods. Cogn. Robot. 2023, 3, 135–141.

- Lee, Y.; Kim, J. Robustness of Deep Learning Models for Vision Tasks. Appl. Sci. 2023, 13, 4422.

- Lu, X.; Lv, X.; Jiang, J.; Li, S. An Improved YOLOv5s for Lane Line Detection. In Proceedings of the 2022 5th International Conference on Robotics, Control and Automation Engineering (RCAE), Changchun, China, 28 October 2022; IEEE: Changchun, China, 2022; pp. 326–330.

- Neven, D.; Brabandere, B.D.; Georgoulis, S.; Proesmans, M.; Gool, L.V. Towards End-to-End Lane Detection: An Instance Segmentation Approach. In Proceedings of the 2018 IEEE Intelligent Vehicles Symposium (IV), Changshu, China, 26–30 June 2018; IEEE: Changshu, China, 2018; pp. 286–291.

- Liu, L.; Chen, X.; Zhu, S.; Tan, P. CondLaneNet: A Top-to-down Lane Detection Framework Based on Conditional Convolution. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; IEEE: Montreal, QC, Canada, 2021; pp. 3753–3762.

- Liu, B.; Liu, H.; Yuan, J. Lane Line Detection Based on Mask R-CNN. In Proceedings of the 3rd International Conference on Mechatronics Engineering and Information Technology (ICMEIT 2019), Dalian, China, 29–30 March 2019; Atlantis Press: Dalian, China, 2019.

- Long, J.; Shelhamer, E.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; IEEE: Boston, MA, USA, 2015; pp. 3431–3440.

- Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid Scene Parsing Network. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; IEEE: Honolulu, HI, USA, 2017; pp. 6230–6239.

- Chen, L.-C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. Semantic Image Segmentation with Deep Convolutional Nets and Fully Connected CRFs. arXiv 2016, arXiv:1412.7062.

- Chen, L.-C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848.

- Chen, L.-C.; Papandreou, G.; Schroff, F.; Adam, H. Rethinking Atrous Convolution for Semantic Image Segmentation. arXiv 2017, arXiv:1706.05587.

- Chen, L.-C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation. In Computer Vision—ECCV 2018; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2018; Volume 11211, pp. 833–851. ISBN 978-3-030-01233-5.

- Zakaria, N.J.; Shapiai, M.I.; Abdul Rahman, M.A.; Yahya, W.J. Lane Line Detection via Deep Learning Based- Approach Applying Two Types of Input into Network Model. J. Soc. Automot. Eng. Malays. 2020, 4, 208–220.

- Chen, L.; Xu, X.; Pan, L.; Cao, J.; Li, X. Real-Time Lane Detection Model Based on Non Bottleneck Skip Residual Connections and Attention Pyramids. PLoS ONE 2021, 16, e0252755.

- Li, J.; Jiang, F.; Yang, J.; Kong, B.; Gogate, M.; Dashtipour, K.; Hussain, A. Lane-DeepLab: Lane Semantic Segmentation in Automatic Driving Scenarios for High-Definition Maps. Neurocomputing 2021, 465, 15–25.

- Wang, Z.; Zhao, Y.; Tian, Y.; Zhang, Y.; Gao, L. The Improved Deeplabv3plus Based Fast Lane Detection Method. Actuators 2022, 11, 197.

- Zhang, Y.; Zuo, Z.; Xu, X.; Wu, J.; Zhu, J.; Zhang, H.; Wang, J.; Tian, Y. Road Damage Detection Using UAV Images Based on Multi-Level Attention Mechanism. Autom. Constr. 2022, 144, 104613.

- Burghardt, T.E.; Popp, R.; Helmreich, B.; Reiter, T.; Böhm, G.; Pitterle, G.; Artmann, M. Visibility of Various Road Markings for Machine Vision. Case Stud. Constr. Mater. 2021, 15, e00579.

- Kiss, B.; Ballagi, Á.; Kuczmann, M. Overview Study of the Applications of Unmanned Aerial Vehicles in the Transportation Sector. Eng. Proc. 2024, 79, 11.

- Butilă, E.V.; Boboc, R.G. Urban Traffic Monitoring and Analysis Using Unmanned Aerial Vehicles (UAVs): A Systematic Literature Review. Remote Sens. 2022, 14, 620.

- Hayal, M.R.; Elsayed, E.E.; Kakati, D.; Singh, M.; Elfikky, A.; Boghdady, A.I.; Grover, A.; Mehta, S.; Mohsan, S.A.H.; Nurhidayat, I. Modeling and Investigation on the Performance Enhancement of Hovering UAV-Based FSO Relay Optical Wireless Communication Systems under Pointing Errors and Atmospheric Turbulence Effects. Opt. Quantum Electron. 2023, 55, 625.

- Munir, A.; Siddiqui, A.J.; Anwar, S.; El-Maleh, A.; Khan, A.H.; Rehman, A. Impact of Adverse Weather and Image Distortions on Vision-Based UAV Detection: A Performance Evaluation of Deep Learning Models. Drones 2024, 8, 638.

- Feng, H.; Zhang, L.; Zhang, S.; Wang, D.; Yang, X.; Liu, Z. RTDOD: A Large-Scale RGB-Thermal Domain-Incremental Object Detection Dataset for UAVs. Image Vis. Comput. 2023, 140, 104856.

- Yang, Y. A Review of Lane Detection in Autonomous Vehicles. J. Adv. Eng. Technol. 2024, 1, 30–36.

- Du, D.; Qi, Y.; Yu, H.; Yang, Y.; Duan, K.; Li, G.; Zhang, W.; Huang, Q.; Tian, Q. The Unmanned Aerial Vehicle Benchmark: Object Detection and Tracking. In Proceedings of the European Conference on Computer Vision (ECCV 2018), Munich, Germany, 8–14 September 2018; Part IV. Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Lecture Notes in Computer Science. Springer: Cham, Switzerland, 2018; Volume 11210, pp. 370–386.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015, Munich, Germany, 5–9 October 2015; Proceedings, Part III. Navab, N., Hornegger, J., Wells, W.M., Frangi, A.F., Eds.; Lecture Notes in Computer Science. Springer International Publishing: Cham, Switzerland, 2015; Volume 9351, pp. 234–241, ISBN 978-3-319-24573-7.

- Chollet, F. Xception: Deep Learning with Depthwise Separable Convolutions. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; IEEE: Honolulu, HI, USA, 2017; pp. 1800–1807.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.-C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; IEEE: Salt Lake City, UT, USA, 2018; pp. 4510–4520.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; IEEE: Salt Lake City, UT, USA, 2018; pp. 7132–7141.

- Lin, T.-Y.; Dollar, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; IEEE: Honolulu, HI, USA, 2017; pp. 936–944.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Artacho, B.; Savakis, A. Waterfall Atrous Spatial Pooling Architecture for Efficient Semantic Segmentation. Sensors 2019, 19, 5361.

- Yu, F.; Koltun, V. Multi-Scale Context Aggregation by Dilated Convolutions. In Proceedings of the International Conference on Learning Representations (ICLR 2016), San Juan, Puerto Rico, 2–4 May 2016; pp. 1–13.

- Jaccard, P. Étude Comparative de la Distribution Florale dans Une Portion des Alpes et du Jura. Bull. Soc. Vaudoise Sci. Nat. 1901, 37, 547–579.

- Everingham, M.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes (VOC) Challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

- Van Rijsbergen, C.J. A non-classical logic for information retrieval. Comput. J. 1986, 29, 481–485.

- Sokolova, M.; Lapalme, G. A systematic analysis of performance measures for classification tasks. Inf. Process. Manag. 2009, 45, 427–437.

- Howard, A.; Sandler, M.; Chu, G.; Chen, L.-C.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V.; et al. Searching for MobileNetV3. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019.

- Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Proceedings of the Computer Vision—ECCV 2018: 15th European Conference, Munich, Germany, 8–14 September 2018; Proceedings, Part VII. Springer: Berlin/Heidelberg, Germany, 2018; pp. 3–19.

- Xu, W.; Wan, Y. ELA: Efficient local attention for deep convolutional neural networks. arXiv 2024, arXiv:2403.01123.

- Gao, S.; Qin, Y.; Zhu, R.; Zhao, Z.; Zhou, H.; Zhu, Z. SGSAFormer: Spike Gated Self-Attention Transformer and Temporal Attention. Electronics 2024, 14, 43.

- Yang, M.; Yu, K.; Zhang, C.; Li, Z.; Yang, K. DenseASPP for Semantic Segmentation in Street Scenes. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; IEEE: Salt Lake City, UT, USA, 2018; pp. 3684–3692.

- Wang, J.; Sun, K.; Cheng, T.; Jiang, B.; Deng, C.; Zhao, Y.; Liu, D.; Mu, Y.; Tan, M.; Wang, X.; et al. Deep High-Resolution Representation Learning for Visual Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 3349–3364.

- Buslaev, A.; Parinov, A.; Khvedchenya, E.; Iglovikov, V.I.; Kalinin, A.A. Albumentations: Fast and Flexible Image Augmentations. Information 2020, 11, 125.

- IPCC. Climate Change 2022-Mitigation of Climate Change: Working Group III Contribution to the Sixth Assessment Report of the Intergovernmental Panel on Climate Change, 1st ed.; Intergovernmental Panel on Climate Change (IPCC); Cambridge University Press: Cambridge, UK, 2022; ISBN 978-1-009-15792-6.

| Type of Lane Line | Number of Labels | Label Color |
|---|---|---|
| white-solid-lane-line | 2522 | red |
| white-dashed-lane-line | 2930 | green |
| yellow-solid-lane-line | 238 | yellow |
| yellow-dashed-lane-line | 685 | blue |

| Operator | t | c | n | s | Output |
|---|---|---|---|---|---|
| Conv2d 3 × 3 | - | 32 | 1 | 2 | 320² × 32 |
| Bottleneck | 1 | 16 | 1 | 1 | 320² × 16 |
| Bottleneck | 6 | 24 | 2 | 2 | 160² × 24 |
| Bottleneck | 6 | 32 | 3 | 2 | 80² × 32 |
| Bottleneck | 6 | 64 | 4 | 2 | 40² × 64 |
| Bottleneck | 6 | 96 | 3 | 1 | 40² × 96 |
| Bottleneck | 6 | 160 | 3 | 2 | 20² × 160 |
| Bottleneck | 6 | 320 | 1 | 1 | 20² × 320 |
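The Output column of the backbone table follows from the stride column alone. The sketch below traces the spatial size stage by stage. The 640 × 640 input is an assumption inferred from the 320 × 320 output of the first stride-2 convolution, and `trace_shapes` is a hypothetical helper, not part of any library.

```python
# (operator, expansion t, output channels c, repeats n, stride s),
# copied from the backbone table.
LAYERS = [
    ("Conv2d 3x3", None, 32, 1, 2),
    ("Bottleneck", 1, 16, 1, 1),
    ("Bottleneck", 6, 24, 2, 2),
    ("Bottleneck", 6, 32, 3, 2),
    ("Bottleneck", 6, 64, 4, 2),
    ("Bottleneck", 6, 96, 3, 1),
    ("Bottleneck", 6, 160, 3, 2),
    ("Bottleneck", 6, 320, 1, 1),
]

def trace_shapes(input_size, layers=LAYERS):
    """Return (operator, spatial size, channels) after each stage.

    Only the first block of a stage applies stride s; the remaining
    n - 1 repeats use stride 1, so each stage divides the size once.
    Ceiling division models a 3x3 convolution with padding 1.
    """
    size = input_size
    shapes = []
    for name, _t, channels, _n, stride in layers:
        size = (size + stride - 1) // stride
        shapes.append((name, size, channels))
    return shapes

shapes = trace_shapes(640)  # assumed input resolution
for name, size, channels in shapes:
    print(f"{name:<12} {size} x {size} x {channels}")
```

Under this assumption the final feature map is 20 × 20 × 320, an overall downsampling factor of 32, which matches the last row of the table.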

| MODULE WASPP (pseudocode) |
|---|
| INPUT: |
| x: input feature map [batch, channels, height, width] |
| base_dilation_rate (rate): integer dilation rate |
| // Cascaded branches |
| branch1 = Conv1 × 1 (x) → BN → ReLU |
| branch2 = Conv3 × 3 (branch1, dilation = 2 × rate) → BN → ReLU |
| branch3 = Conv3 × 3 (branch2, dilation = 4 × rate) → BN → ReLU |
| branch4 = Conv3 × 3 (branch3, dilation = 6 × rate) → BN → ReLU |
| // branch5: Global context |
| global_feature = GlobalAveragePooling (x) → [batch, channels, 1, 1] |
| global_feature = Conv1 × 1 (global_feature) → BN → ReLU |
| global_feature = BilinearUpsample (global_feature, size = (height, width)) |
| // Feature aggregation |
| concatenated = ChannelwiseConcat (branch1, branch2, branch3, branch4, global_feature) |
| // Feature fusion |
| output = Conv1 × 1 (concatenated) → BN → ReLU |
| RETURN output |
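Because each 3 × 3 atrous branch in the pseudocode consumes the output of the previous branch, the receptive field grows cumulatively rather than branch by branch in isolation. The sketch below computes it for stride-1 convolutions, using the standard rule that a k × k convolution with dilation d adds (k − 1)·d to the receptive field. The helper names are hypothetical, and mapping a base rate of 1 to rates 2/4/6 and a base rate of 3 to rates 6/12/18 is an inference from the pseudocode, not something the excerpt states.

```python
def cascaded_receptive_fields(dilations, kernel=3):
    """Receptive field after each conv when the convs are chained."""
    fields = []
    rf = 1  # the leading 1x1 convolution does not enlarge the field
    for d in dilations:
        rf += (kernel - 1) * d
        fields.append(rf)
    return fields

def parallel_receptive_fields(dilations, kernel=3):
    """Receptive field of each conv when all convs read the same input."""
    return [1 + (kernel - 1) * d for d in dilations]

for base_rate in (1, 3):  # assumed base rates
    dilations = [2 * base_rate, 4 * base_rate, 6 * base_rate]
    print(dilations,
          "cascaded:", cascaded_receptive_fields(dilations),
          "parallel:", parallel_receptive_fields(dilations))
```

With rates 2/4/6 the cascade covers 5, 13, and 25 pixels per side of the module's input feature map, whereas the same rates applied in parallel would reach only 5, 9, and 13. This is one way to read the "progressively expand receptive fields" description of the waterfall design.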

| Backbone Network | Yellow-Dashed IoU (%) | Yellow-Solid IoU (%) | White-Dashed IoU (%) | White-Solid IoU (%) | MIoU (%) | F1-Score (%) | Params (M) |
|---|---|---|---|---|---|---|---|
| Xception | 84.42 | 77.87 | 64.11 | 75.40 | 80.26 | 88.39 | 54.71 |
| MobileNetV2 | 84.12 | 76.77 | 64.23 | 75.93 | 80.11 | 88.05 | 5.81 |
| MobileNetV3-Large | 82.63 | 70.75 | 69.03 | 78.16 | 80.02 | 88.11 | 11.73 |
| MobileNetV3-Small | 83.20 | 70.67 | 67.82 | 77.55 | 79.75 | 88.01 | 6.83 |
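In every row of the backbone comparison, the reported MIoU is higher than the plain average of the four lane-line IoUs. One reading, offered here as an assumption because the excerpt does not define the averaging, is that MIoU also includes a background class. The sketch below back-computes the IoU that such a fifth class would need; `implied_extra_class_iou` is a hypothetical helper.

```python
# Per-class lane-line IoUs and reported MIoU, copied from the backbone table.
ROWS = {
    "Xception":          ([84.42, 77.87, 64.11, 75.40], 80.26),
    "MobileNetV2":       ([84.12, 76.77, 64.23, 75.93], 80.11),
    "MobileNetV3-Large": ([82.63, 70.75, 69.03, 78.16], 80.02),
    "MobileNetV3-Small": ([83.20, 70.67, 67.82, 77.55], 79.75),
}

def implied_extra_class_iou(class_ious, miou):
    """IoU a single additional class must have for the mean to equal miou."""
    n_classes = len(class_ious) + 1
    return miou * n_classes - sum(class_ious)

implied = {}
for name, (ious, miou) in ROWS.items():
    implied[name] = implied_extra_class_iou(ious, miou)
    lane_mean = sum(ious) / len(ious)
    print(f"{name:<18} lane-only mean = {lane_mean:.2f}, "
          f"implied fifth-class IoU = {implied[name]:.2f}")
```

The implied value is close to 99.5% for all four backbones, which is plausible for a background class that dominates aerial road images. If this reading is right, differences in MIoU understate differences in lane-line IoU by roughly a factor of 5/4.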

| Method | Yellow-Dashed IoU (%) | Yellow-Solid IoU (%) | White-Dashed IoU (%) | White-Solid IoU (%) | MIoU (%) | F1-Score (%) | Params (M) |
|---|---|---|---|---|---|---|---|
| MobileNetV2 | 84.12 | 76.77 | 64.23 | 75.93 | 80.11 | 88.05 | 5.81 |
| +CBAM | 82.90 | 75.42 | 63.23 | 74.62 | 78.74 | 87.86 | 5.95 |
| +ELA | 84.14 | 76.04 | 63.41 | 75.19 | 79.65 | 87.84 | 5.93 |
| +TA | 84.26 | 77.98 | 64.44 | 75.93 | 80.42 | 88.07 | 5.95 |
| +SE | 84.56 | 78.92 | 65.19 | 76.84 | 81.00 | 88.48 | 6.03 |

| Method | Yellow-Dashed IoU (%) | Yellow-Solid IoU (%) | White-Dashed IoU (%) | White-Solid IoU (%) | MIoU (%) | F1-Score (%) | Params (M) |
|---|---|---|---|---|---|---|---|
| MobileNetV2 | 84.12 | 76.77 | 64.23 | 75.93 | 80.11 | 88.05 | 5.81 |
| +WASP (rates = 3/6/12/18) | 83.62 | 75.68 | 63.34 | 74.41 | 79.30 | 87.41 | 3.53 |
| +DenseASPP (rates = 3/6/12/18) | 84.55 | 78.60 | 65.15 | 76.82 | 80.92 | 88.66 | 11.55 |
| +WASPP (rates = 6/12/18) | 84.68 | 78.62 | 65.39 | 76.90 | 81.02 | 88.74 | 6.74 |

| Group | MobileNetV2 | FPN | WASPP (Rates = 2/4/6) | WASPP (Rates = 6/12/18) | MIoU (%) | F1-Score (%) | Params (M) |
|---|---|---|---|---|---|---|---|
| ① | √ | √ | — | — | 84.37 | 91.43 | 7.81 |
| ② | √ | — | √ | — | 79.70 | 87.46 | 6.74 |
| ③ | √ | — | — | √ | 81.02 | 88.74 | 6.74 |
| ④ | √ | √ | — | √ | 84.29 | 91.44 | 7.92 |
| ⑤ | √ | √ | √ | — | 84.57 | 91.40 | 7.92 |

| Group | MobileNetV2 | SE | FPN | WASPP | Yellow-Dashed IoU (%) | Yellow-Solid IoU (%) | White-Dashed IoU (%) | White-Solid IoU (%) | MIoU (%) | F1-Score (%) | Params (M) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| ① | — | — | — | — | 84.42 | 77.87 | 64.11 | 75.40 | 80.26 | 88.39 | 54.71 |
| ② | √ | — | — | — | 84.12 | 76.77 | 64.23 | 75.93 | 80.11 | 88.05 | 5.81 |
| ③ | √ | √ | — | — | 84.56 | 78.92 | 65.19 | 76.84 | 81.00 | 88.48 | 6.03 |
| ④ | √ | — | √ | — | 85.67 | 77.80 | 75.75 | 82.94 | 84.37 | 91.43 | 7.81 |
| ⑤ | √ | — | — | √ | 83.69 | 76.27 | 63.98 | 75.08 | 79.70 | 87.46 | 6.74 |
| ⑥ | √ | √ | √ | — | 84.96 | 76.85 | 74.78 | 82.42 | 83.74 | 90.69 | 8.03 |
| ⑦ | √ | — | √ | √ | 85.42 | 78.67 | 76.03 | 83.04 | 84.57 | 91.40 | 7.92 |
| ⑧ | √ | √ | — | √ | 84.55 | 78.57 | 65.33 | 76.56 | 80.91 | 88.83 | 6.96 |
| ⑨ | √ | √ | √ | √ | 85.68 | 80.33 | 77.04 | 83.76 | 85.30 | 91.74 | 8.03 |

| Network Model | Backbone Network | MIoU (%) | F1-Score (%) | Training Time | Params (M) |
|---|---|---|---|---|---|
| PSPNet [21] | MobileNetV2 | 44.24 | 58.74 | 14 h 15 min | 2.38 |
| PSPNet [21] | ResNet50 | 46.73 | 59.84 | 14 h 25 min | 46.71 |
| HRNet [57] | — | 80.72 | 88.92 | 13 h 9 min | 9.64 |
| UNet [39] | VGG16 | 84.74 | 91.47 | 15 h 11 min | 24.89 |
| DeepLabV3+ | Xception-65 | 80.26 | 88.39 | 17 h 43 min | 54.71 |
| Ours | MobileNetV2 | 85.30 | 91.74 | 14 h 53 min | 8.03 |
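The gap between the proposed model and the DeepLabV3+ baseline can be summarized with a few lines of arithmetic on the two relevant table rows. The dictionary keys and variable names below are illustrative only; all figures are copied from the table.

```python
# Rows copied from the segmentation-model comparison table.
baseline = {"miou": 80.26, "f1": 88.39, "params_m": 54.71,
            "train_min": 17 * 60 + 43}   # DeepLabV3+ with Xception-65
ours = {"miou": 85.30, "f1": 91.74, "params_m": 8.03,
        "train_min": 14 * 60 + 53}       # proposed model with MobileNetV2

# Absolute gains in percentage points.
miou_gain = ours["miou"] - baseline["miou"]
f1_gain = ours["f1"] - baseline["f1"]

# Relative reductions in model size and training time, in percent.
param_reduction = (1 - ours["params_m"] / baseline["params_m"]) * 100
time_reduction = (1 - ours["train_min"] / baseline["train_min"]) * 100

print(f"MIoU gain: {miou_gain:.2f} points, F1 gain: {f1_gain:.2f} points")
print(f"Parameters reduced by {param_reduction:.1f}%, "
      f"training time reduced by {time_reduction:.1f}%")
```

On these figures the proposed model gains about 5 MIoU points over the baseline while using roughly one seventh of its parameters.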


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, Y.; Guo, D.; Wang, Y.; Shuai, H.; Li, Z.; Ran, J. Improved DeepLabV3+ for UAV-Based Highway Lane Line Segmentation. Sustainability 2025, 17, 7317. https://doi.org/10.3390/su17167317
